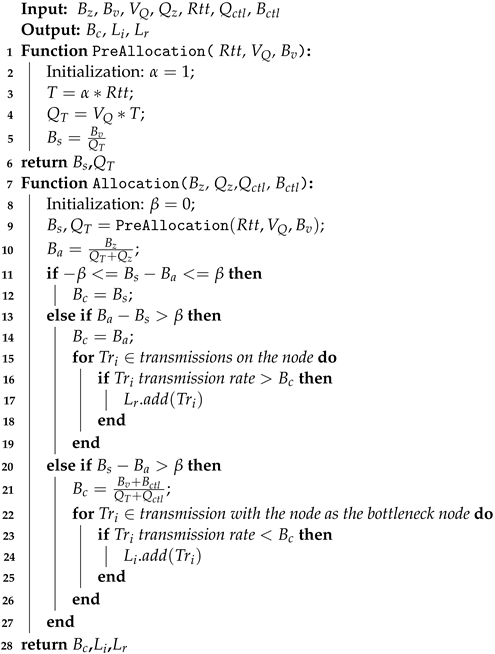

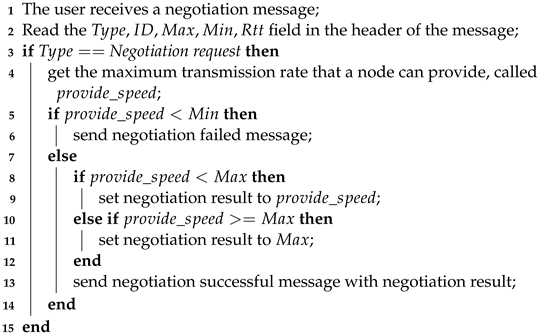

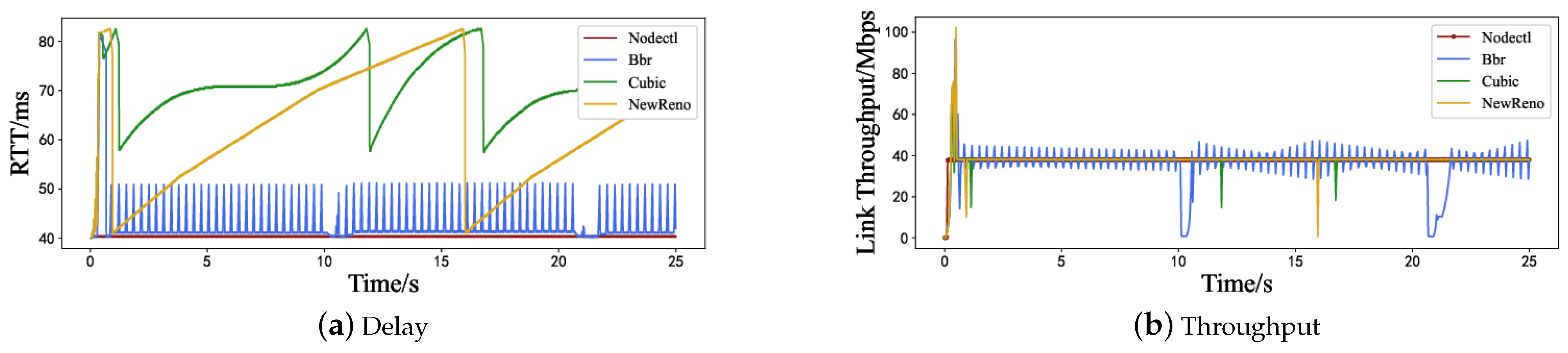

The bandwidth allocation algorithm is based on the above analysis and runs port by port: each port on a network node allocates bandwidth for its transmissions. The detailed steps are described in Algorithm 1. The inputs are the total bandwidth and the free bandwidth of the current node port, the rate at which new transmissions arrive, and the total number of transmissions serviced by the current port. These values are obtained from the statistics of the network node. Two further inputs come from the rate negotiation message: the number of transmissions for which the current network node is the bottleneck and the bandwidth they occupy. The following section details the rate negotiation message and the method for determining whether a network node is the bottleneck node for a transmission. The outputs are the bandwidth allocation result for the current transmission, the list of transmissions that need to increase their transmission rate, and the list of transmissions that need to decrease it.

The algorithm consists of two parts. The first part (lines 1–6 of Algorithm 1) calculates the bandwidth that the current network node can provide for new transmissions without adjusting existing transmissions. There is a delay of at least one round-trip time (RTT) between the completion of bandwidth allocation by the network node and the arrival of data at the network node. The allocation result must still be valid when the data actually arrive; otherwise, congestion may occur. Therefore, we first calculate the control delay T (line 3) using a constant coefficient, which should be greater than or equal to one so that the allocation result remains effective for at least one RTT. The larger the coefficient, the further ahead network changes must be predicted, and the lower the prediction accuracy. By default, we set the coefficient to one (line 2). Then, based on the current arrival rate of new transmissions, we predict the number of transmissions that will arrive within the control delay T (line 4) and evenly allocate the remaining bandwidth among them. This gives the bandwidth that should be assigned to the current transmission without adjusting other existing transmissions (line 5).
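The first part can be sketched in a few lines of Python. This is a minimal illustration of the prose above, not a reproduction of Algorithm 1: the function and parameter names are hypothetical, and the sketch assumes that the control delay is the coefficient multiplied by the RTT, that the predicted arrival count is rounded up, and that at least the current transmission is counted.

```python
import math


def allocate_without_adjustment(free_bw, arrival_rate, rtt, alpha=1.0):
    """Bandwidth for the current transmission, leaving existing ones untouched.

    All names are illustrative. free_bw is the port's free bandwidth,
    arrival_rate is the number of new transmissions per second, rtt is in seconds.
    """
    if alpha < 1.0:
        raise ValueError("the coefficient should be >= 1")
    # Control delay T (assumed here to be alpha * RTT).
    control_delay = alpha * rtt
    # Predicted number of transmissions arriving within T.
    # Assumption: round up and always count the current transmission.
    expected = max(1, math.ceil(arrival_rate * control_delay))
    # Evenly split the remaining bandwidth among the expected arrivals.
    return free_bw / expected
```

For example, with 100 units of free bandwidth, 8 arrivals per second, and an RTT of 0.5 s, four transmissions are expected within T, so each would receive 25 units under these assumptions.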

The second part of the algorithm (lines 7–28 of Algorithm 1) calculates the bandwidth that the current network node can provide for new transmissions while adjusting other existing transmissions, together with the list of transmissions that need to be changed and the corresponding adjustment results. The number of new transmissions within the future time T is predicted from previous statistics, but the network situation is constantly changing. If the actual number turns out to be larger, the bandwidth allocated to subsequent nodes decreases; if it is smaller, the bandwidth allocated to subsequent nodes increases. The allocation results therefore need to be adjusted to keep bandwidth allocation fair. First, we calculate the bandwidth allocated for the current transmission (line 9), followed by the average bandwidth of all transmissions, including those expected within the future time T (line 10), and then compare the two. To prevent minor changes from causing oscillating adjustments, we set an unfairness tolerance coefficient. A larger coefficient makes the transmission rate more stable, while the adjustment frequency and the fairness decrease as it grows. This article considers a relatively ideal state when choosing its value (line 8). When the difference between the newly allocated bandwidth and the average bandwidth is within the tolerance range (line 11), no adjustment is needed, and the newly allocated bandwidth is used as the result (line 12).

Otherwise, adjustments are required. Because the bottleneck node determines the transmission rate, we adopt the principle of cautious rate increase and active rate decrease: any network node can force transmissions to slow down, whereas only the bottleneck node can recommend that transmissions increase their rate. When the average bandwidth exceeds the newly allocated bandwidth by more than the tolerance (line 13), the average bandwidth is taken as the result (line 14), and all existing transmissions that exceed the average bandwidth are added to the rate-decrease adjustment list (lines 15–19), iterating over every transmission that passes through the node. When the newly allocated bandwidth exceeds the average bandwidth by more than the tolerance (line 20), an average bandwidth allocation result is calculated (line 21) over the transmissions expected within the future time T and all transmissions that have this node as their bottleneck, and all existing transmissions below this result are added to the rate-increase adjustment list (lines 22–26). Note that if this node is not the bottleneck node for any transmission, both the number of such transmissions and the bandwidth they occupy are zero, so the average degenerates and no adjustment is needed. In other words, a node that is not a bottleneck node cannot initiate an adjustment that increases the transmission rate.
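The three cases of the second part can be sketched as follows. Because the listing of Algorithm 1 is not reproduced in the text, every formula here is an assumption made for illustration: the newly allocated bandwidth is taken as the free bandwidth divided by the predicted arrivals, the average as the total bandwidth divided by existing plus predicted transmissions, the tolerance as a fraction of the average, and the rate-increase share as the free plus bottleneck-occupied bandwidth divided by the predicted plus bottlenecked transmissions. The `Flow` type and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    flow_id: str
    rate: float            # current transmission rate
    bottleneck_here: bool  # True if this node is the flow's bottleneck


def allocate_with_adjustment(total_bw, free_bw, n_existing, n_future,
                             n_bottleneck, bw_bottleneck, flows, tolerance):
    """Return (allocated bandwidth, increase list, decrease list)."""
    n_future = max(1, n_future)                  # count the current transmission
    new_bw = free_bw / n_future                  # assumed form of line 9
    avg_bw = total_bw / (n_existing + n_future)  # assumed form of line 10

    # Case 1: difference within tolerance, keep the new allocation.
    if abs(new_bw - avg_bw) <= tolerance * avg_bw:
        return new_bw, [], []

    # Case 2: new allocation too small; any node may force a slowdown.
    if avg_bw > new_bw:
        decrease = [f.flow_id for f in flows if f.rate > avg_bw]
        return avg_bw, [], decrease

    # Case 3: new allocation too large; only flows bottlenecked at this
    # node may be recommended to speed up (assumed form of line 21).
    share = (free_bw + bw_bottleneck) / (n_future + n_bottleneck)
    increase = [f.flow_id for f in flows if f.bottleneck_here and f.rate < share]
    return share, increase, []
```

Under these assumptions, a node that is not the bottleneck for any flow always returns an empty increase list, which matches the cautious-increase principle described above.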

Algorithm 1: Bandwidth allocation algorithm.

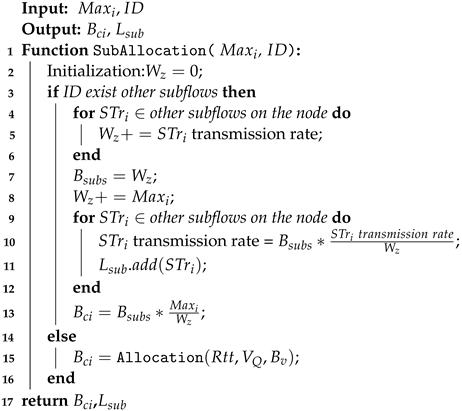

Multipath transmission involves multiple flows, which should be considered as a whole. Using the transmission ID, we determine the transmission to which a subflow belongs and can also distinguish different subflows of the same transmission. Flows with the same transmission content ID and the same transmission destination ID are different subflows of the same transmission. To maintain fairness in bandwidth allocation and prevent multiple subflows from preempting bandwidth, we propose a bandwidth allocation algorithm for subflows that builds on the bandwidth allocation algorithm of the network nodes described above. The specific steps are shown in Algorithm 2. The inputs are the transmission rate negotiation values of the subflow, taken from the corresponding fields of the transmission negotiation message, which is detailed in the next section. The outputs are the bandwidth allocation result and the adjustment list for the subflow.
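The subflow identification rule can be illustrated with a small grouping sketch. The dictionary keys `content_id`, `dest_id`, and `subflow_id` are hypothetical field names chosen for this example; the actual message fields are defined in the next section.

```python
from collections import defaultdict


def group_subflows(flows):
    """Group flows into transmissions keyed by (content ID, destination ID)."""
    groups = defaultdict(list)
    for flow in flows:
        # Flows sharing both IDs are subflows of the same transmission.
        key = (flow["content_id"], flow["dest_id"])
        groups[key].append(flow["subflow_id"])
    return dict(groups)
```

A port could use such a grouping to check whether a newly arriving subflow belongs to a transmission that already has subflows on that port.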

First, we determine whether subflows of the same transmission have already been allocated on this port (line 3), which is the case when multiple subflows are forwarded through the same port. If such subflows exist, bandwidth has already been allocated for the transmission. In that case, the total bandwidth and the total weight of all subflows are calculated from the transmission rate of each subflow (lines 4–8), iterating over every subflow of the same transmission that passes through the node. The bandwidth is then allocated according to the weights, and the other subflows are adjusted accordingly (lines 9–13). If no such subflow exists, the new subflow obtains all the newly allocated bandwidth (line 15).
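A minimal sketch of this subflow step is given below. Since the listing of Algorithm 2 is not reproduced in the text, the sketch makes two assumptions: each subflow carries an explicit weight, and the total bandwidth being redistributed is the sum of the rates already held by the transmission's subflows on this port. All names are hypothetical.

```python
def allocate_subflow(new_weight, new_bw, existing):
    """Allocate bandwidth to a new subflow of a transmission on one port.

    existing maps subflow id -> (current rate, weight) for subflows of the
    same transmission already allocated on this port.
    Returns (bandwidth for the new subflow, adjusted rates for the others).
    """
    if not existing:
        # No sibling subflow on this port: take the full new allocation.
        return new_bw, {}

    # Total bandwidth already held by the transmission on this port.
    total_bw = sum(rate for rate, _ in existing.values())
    # Total weight, including the new subflow.
    total_weight = new_weight + sum(weight for _, weight in existing.values())

    # Redistribute the transmission's bandwidth in proportion to weight.
    adjustments = {
        sid: total_bw * weight / total_weight
        for sid, (_, weight) in existing.items()
    }
    return total_bw * new_weight / total_weight, adjustments
```

With two existing subflows at rates 40 and 20 (weight 1 each) and a new subflow of weight 2, the transmission keeps its 60 units on the port: the new subflow would receive 30 and the existing ones would be adjusted to 15 each.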

Algorithm 2: Subflow bandwidth allocation algorithm.