Designing an Interactive Communication Assistance System for Hearing-Impaired College Students Based on Gesture Recognition and Representation

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

3.1. Nonparticipatory Classroom Observations

- When did the interaction between students and teachers occur in class?

- What was the context when the interaction occurred?

- What was the behavior of the observed subjects during the interaction?

- How many times did that behavior occur during the interaction?

- How effective was the communication between students and teachers?

- Was anyone other than the hearing-impaired college students involved?

3.2. Interviews and Qualitative Analysis

3.3. Participants

3.4. Procedure

4. Results

4.1. Findings

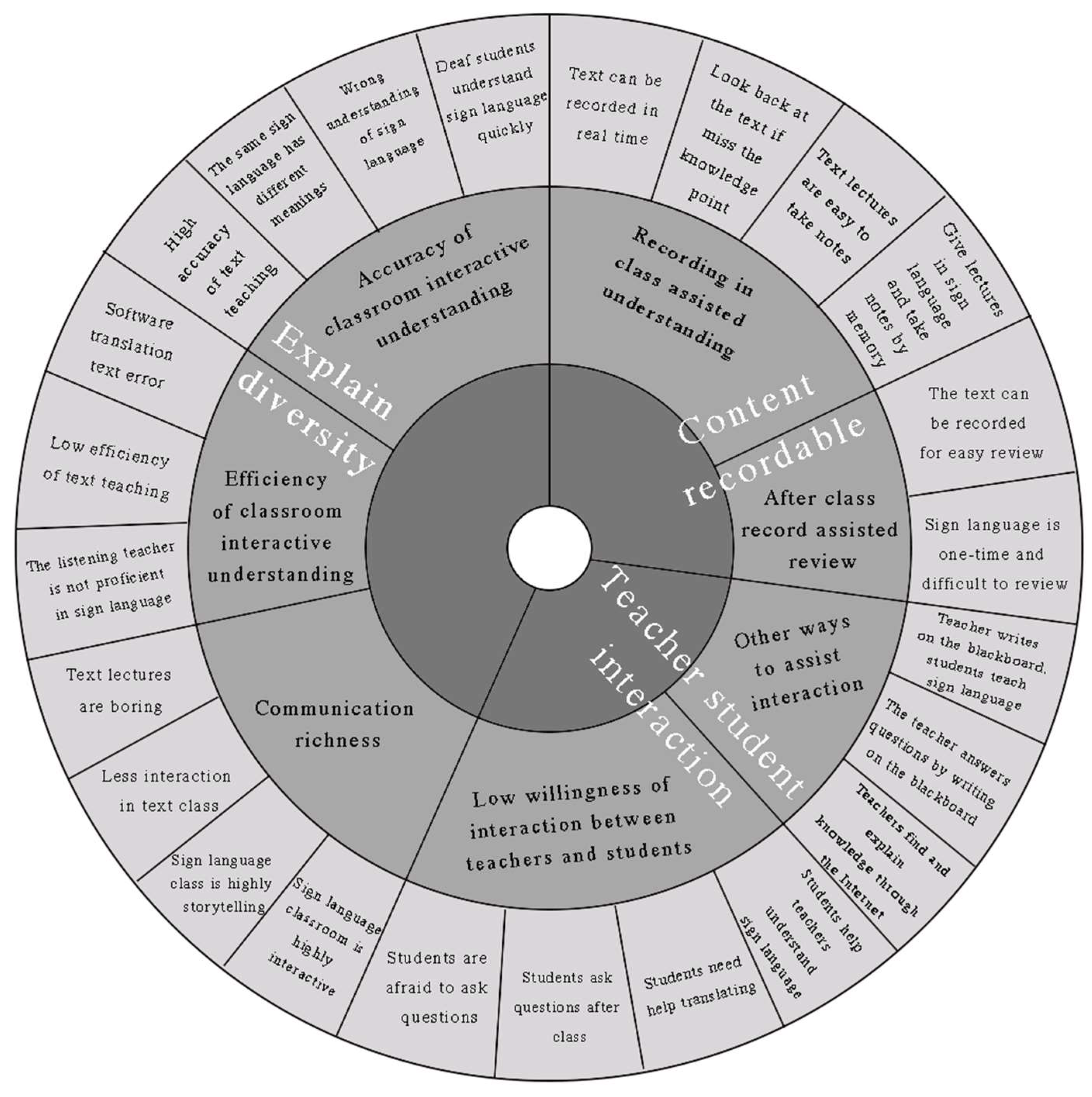

- Regarding the efficiency of teacher–student interaction, different teaching methods lead to different levels of classroom efficiency. In classrooms that do not use sign language, students learn less efficiently. Either they are unfamiliar with the written words, or the text is presented too slowly, for example when the teacher types on the spot or writes on the blackboard. Teaching based on sign language is easier for students to understand and access, which makes the class more efficient. Therefore, sign language should be the primary means of improving classroom interaction efficiency.

- Regarding the recording of classroom interactions, sign language is transient and therefore cannot be recorded well. As a result, students may understand a knowledge point in class and then forget it just as easily. Written text, by contrast, can be recorded and saved in real time, which solves this problem. Text records also let students review the material repeatedly and understand knowledge points better. This helps them keep up with the pace of the lecture and makes review after class easier. Therefore, written text plays an essential role in the classroom.

- Regarding the accuracy of understanding during interaction, there is no unified standard for sign language. Normal-hearing teachers and hearing-impaired college students may use different sign languages. Signs can therefore be transmitted inaccurately, or a single gesture can carry different meanings, which leads to mutual incomprehension or even misunderstanding between teachers and students. Compared with sign language, text conveys information more accurately. In addition, some interviewees mentioned that communicating through text improves their ability to communicate efficiently with hearing teachers in the future and helps them adapt to society. Therefore, the design places particular emphasis on how to combine sign language with text and how to convert sign language into text accurately.

- Regarding classroom interaction, teacher–student communication directly affects classroom efficiency. Communication between hearing-impaired college students and normal-hearing teachers is often difficult. As a result, students are reluctant to ask or answer questions for fear of misunderstanding, incomprehension, or embarrassment, which lowers their willingness to interact. During interactions, they often repeat their signs or sign more slowly to confirm that they have been understood correctly, which further reduces class efficiency. Furthermore, most interactions take place only between the teacher and one student, while the other students remain passive receivers. They have no idea what the interacting student answered, which indicates that the classroom lacks engagement. Therefore, the classroom assistance system should improve students' interactive participation. Building the assistance system and its terminal equipment is also an important part of the design.

4.2. Design Transformation

5. Assistant System Design

5.1. Overall Design

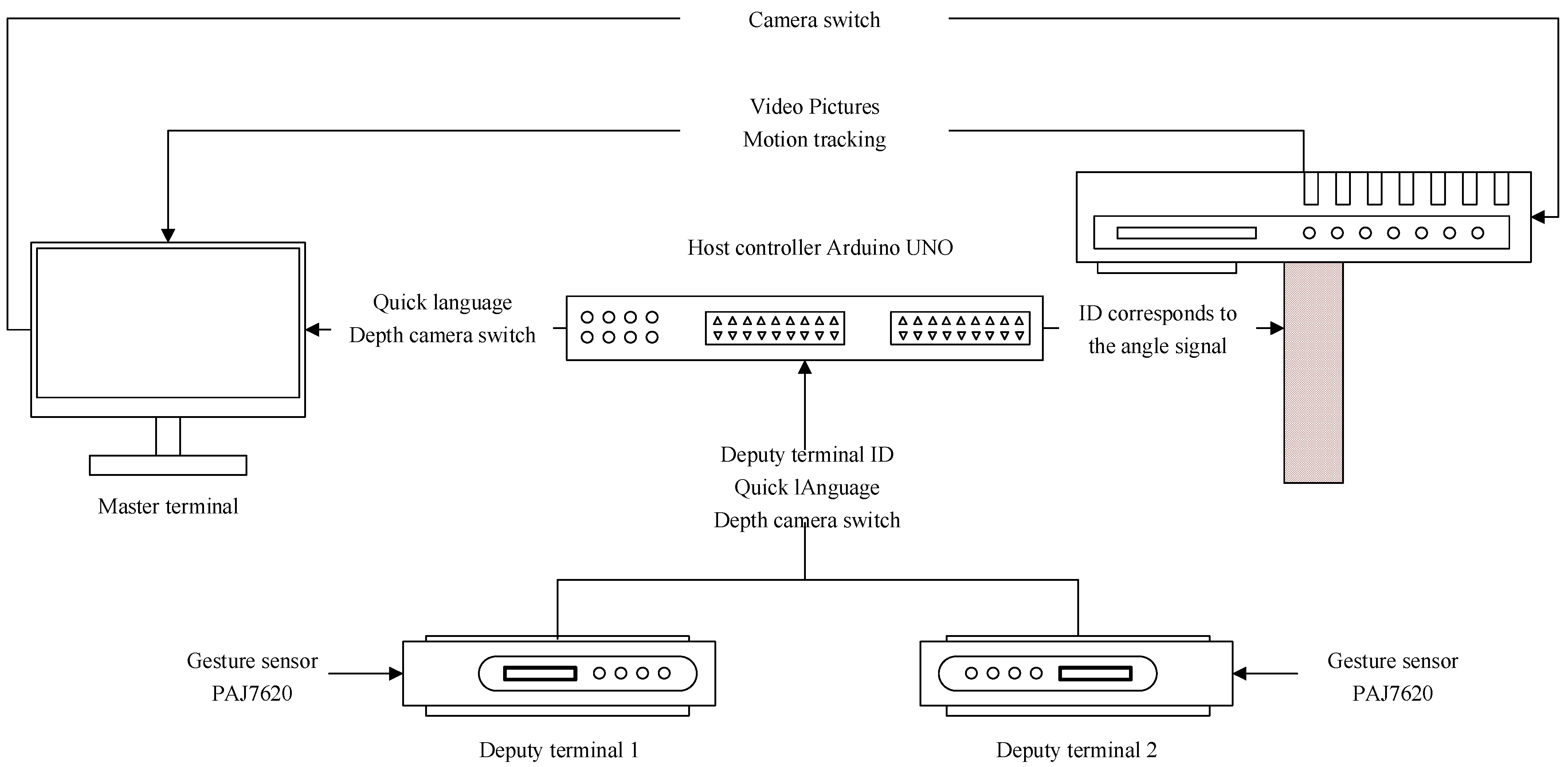

- The main (master) terminal is the teacher’s computer, which serves as the output unit. It includes a sign-language recognition module, a display module, and a voice module. It tracks joint positions and recognizes sign language from the students’ movements and the video captured by the depth camera. It also displays the text converted from the movements of hearing-impaired students and converts text or movements into speech.

- The subterminal (deputy terminal) mainly transmits the student’s ID number, which identifies the student by name, and the angle between the student’s seat and the podium. This information is used to adjust the camera direction and to display the student’s details on the teacher’s computer. The control unit of the subterminal uses an AT89C52 single-chip microcomputer as its processor. The ID unit is allocated on the AT89C52 and is connected to it through the LC2S wireless serial port. The subterminal also includes a lighting module that indicates whether the student is actively participating. The module’s indicator can convey common information and intuitively attracts the attention of teachers and students.

- A PAJ7620 gesture sensor fixed on the student’s desk is connected to the deputy terminal to capture and identify movement information. The student performs a basic gesture over the sensor, and the deputy terminal identifies the corresponding message. It then decides whether to activate the sensor signal, trigger a quick message, or activate the gesture-recognition system. Combined with sign-language recognition, the gesture sensor can improve communication efficiency between hearing-impaired students and teachers.

- The depth camera is connected to the master terminal, with its lens facing the deputy terminal. A Kinect is used as the depth camera; it records 1080p HD video and can recognize human body movements. The angle between the depth camera and the deputy terminal should not exceed 10 degrees. This ensures that the master terminal can acquire the gestures captured by the depth camera, interpret them as sign language, or trigger a quick message. Students can turn the depth camera on or off by performing a specific gesture over the gesture sensor.

- The tripod head is a rotatable mount on which the depth camera is installed. It rotates about a single axis and is controlled by the master controller.

- The master controller connects the master terminal, the deputy terminal, and the tripod head. It uses an Arduino UNO development board, based on the ATmega328P, as its core processor.
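The subterminal (deputy terminal) transmits a student ID and a seat-to-podium angle, and the camera must face the deputy terminal to within 10 degrees. However, the paper does not specify a wire format or a control rule for the tripod head. The Python sketch below illustrates one possible design under hypothetical assumptions:

- a 5-byte frame made up of start byte `0xAA`, a 16-bit student ID, a signed 8-bit seat angle, and an additive checksum;
- a single-axis pan position from 0 to 180 degrees, with 90 degrees facing straight ahead.

None of these values come from the paper. The real system runs on the AT89C52 and the Arduino UNO, so this only shows the logic.

```python
import struct
from dataclasses import dataclass

START_BYTE = 0xAA           # hypothetical frame marker
ALIGN_TOLERANCE_DEG = 10.0  # from the text: camera-to-terminal angle <= 10 degrees

@dataclass
class SeatReport:
    student_id: int      # 0..65535 in this hypothetical format
    seat_angle_deg: int  # signed seat-to-podium angle, -128..127

def checksum(data: bytes) -> int:
    # Hypothetical additive checksum: sum of bytes modulo 256.
    return sum(data) & 0xFF

def encode_report(report: SeatReport) -> bytes:
    # ">BHb": big-endian, 1-byte start, 2-byte ID, signed 1-byte angle.
    body = struct.pack(">BHb", START_BYTE, report.student_id, report.seat_angle_deg)
    return body + bytes([checksum(body)])

def decode_report(frame: bytes) -> SeatReport:
    if len(frame) != 5:
        raise ValueError("frame must be 5 bytes")
    body, received = frame[:4], frame[4]
    if checksum(body) != received:
        raise ValueError("checksum mismatch")
    start, student_id, angle = struct.unpack(">BHb", body)
    if start != START_BYTE:
        raise ValueError("bad start byte")
    return SeatReport(student_id, angle)

def pan_target(seat_angle_deg: float, center_deg: float = 90.0) -> float:
    # Hypothetical mapping from seat angle to a 0-180 degree single-axis pan position.
    return max(0.0, min(180.0, center_deg + seat_angle_deg))

def needs_rotation(current_pan_deg: float, seat_angle_deg: float) -> bool:
    # Rotate only when the camera is more than 10 degrees off the student's seat.
    return abs(current_pan_deg - pan_target(seat_angle_deg)) > ALIGN_TOLERANCE_DEG
```

For example, a report for student 1023 seated at -35 degrees produces a pan target of 55 degrees. A camera resting at 90 degrees would therefore be rotated, while one already at 60 degrees would stay put. Using a tolerance band avoids constant small corrections to the tripod head.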

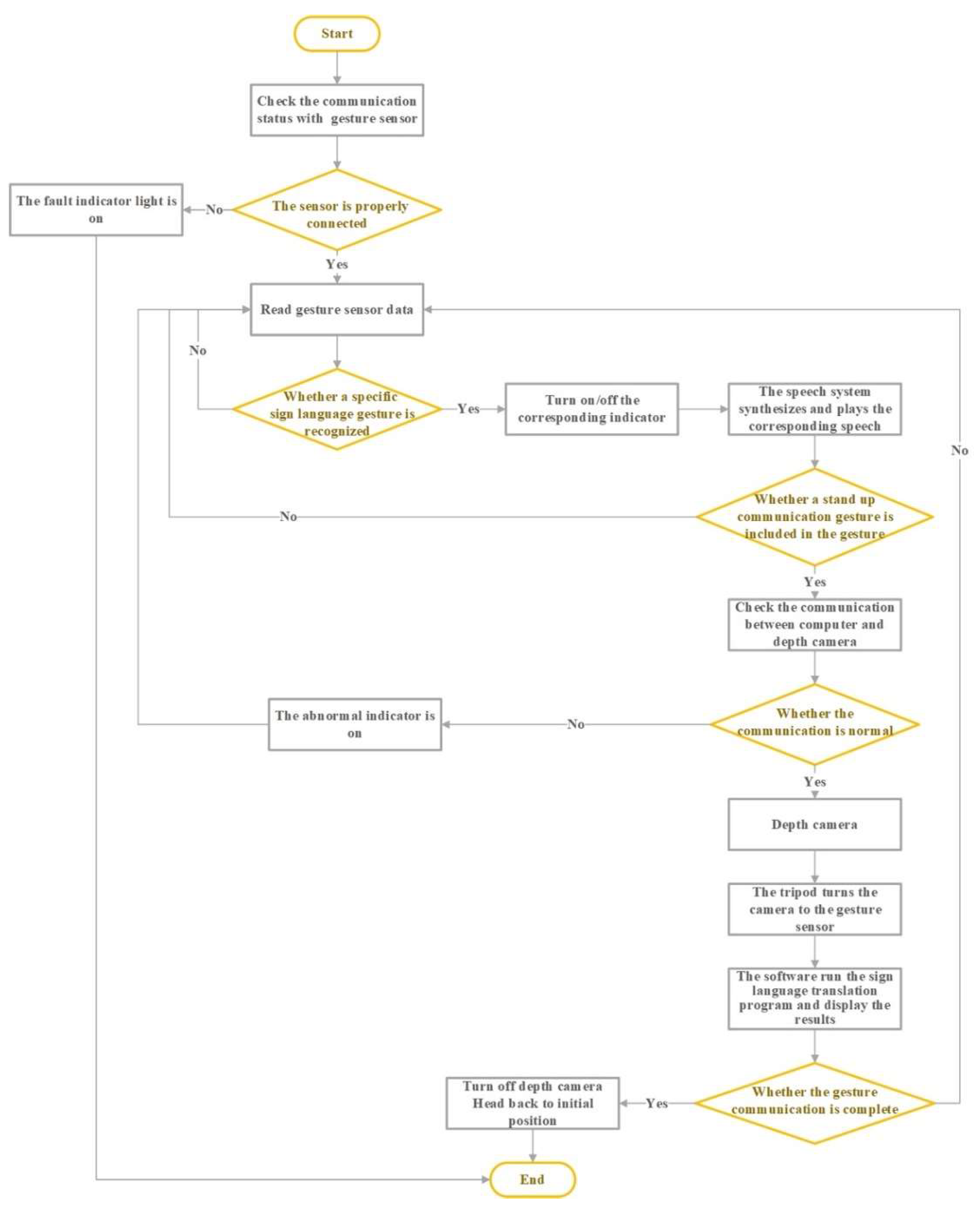
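The PAJ7620 reports a small fixed set of built-in gestures: up, down, left, right, forward, backward, clockwise, counterclockwise, and wave. The paper does not say which gesture triggers which function, so the mapping in this Python sketch is hypothetical:

- a wave toggles the depth camera;
- an upward swipe turns on the participation light;
- three swipes send quick messages;
- a clockwise circle starts sign-language recognition, but only while the camera is on.

The quick-message texts are also invented for illustration. The sketch shows the dispatch logic only and is not sensor firmware.

```python
from dataclasses import dataclass, field
from enum import Enum, auto

class Gesture(Enum):
    # The nine gestures the PAJ7620 can report.
    UP = auto()
    DOWN = auto()
    LEFT = auto()
    RIGHT = auto()
    FORWARD = auto()
    BACKWARD = auto()
    CLOCKWISE = auto()
    COUNTERCLOCKWISE = auto()
    WAVE = auto()

# Hypothetical quick messages; the paper does not list the actual set.
QUICK_MESSAGES = {
    Gesture.LEFT: "Please repeat that.",
    Gesture.RIGHT: "I understand.",
    Gesture.DOWN: "Please slow down.",
}

@dataclass
class DeputyTerminal:
    camera_on: bool = False
    participation_light: bool = False
    outbox: list[str] = field(default_factory=list)  # messages bound for the teacher

    def handle(self, gesture: Gesture) -> str:
        if gesture is Gesture.WAVE:
            # Hypothetical: a wave turns the depth camera on or off.
            self.camera_on = not self.camera_on
            return "camera_on" if self.camera_on else "camera_off"
        if gesture is Gesture.UP:
            # Hypothetical: an upward swipe signals active participation.
            self.participation_light = True
            return "light_on"
        if gesture in QUICK_MESSAGES:
            self.outbox.append(QUICK_MESSAGES[gesture])
            return "quick_message"
        if gesture is Gesture.CLOCKWISE and self.camera_on:
            # Sign-language recognition needs the depth camera to be running.
            return "start_sign_recognition"
        return "ignored"
```

Gating sign-language recognition on the camera state means an accidental circle over the sensor does nothing while the camera is off.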

5.2. Operation Principle

6. Gesture Recognition and Representation

6.1. Basic Gesture Model

6.2. Gesture Recognition and Tracking

7. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Selective Coding | Axial Coding | Reference Points | Share (%) |
|---|---|---|---|
| Variety of interactive lectures | Different ways of interaction in class have different understanding accuracy | 84 | 43.3 |
| | Different ways of interaction in class have different understanding efficiency | | |
| | There are differences in communication richness | | |
| Class interactions can be recorded | You need to take notes in class to help you understand | 72 | 37.1 |
| | You need to take notes after class to help you review | | |
| Teacher–student interaction | The willingness of teacher–student interaction is low | 38 | 19.6 |
| | Interaction needs to be assisted in other ways | | |
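The percentage column in the coding table matches each selective code's share of all reference points, which total 194 (84 + 72 + 38). The short Python check below recomputes the shares as a sketch; the function name is illustrative.

```python
# Reference-point counts per selective code, taken from the coding table.
counts = {
    "Variety of interactive lectures": 84,
    "Class interactions can be recorded": 72,
    "Teacher–student interaction": 38,
}

def shares(counts: dict[str, int]) -> dict[str, float]:
    # Each code's percentage of all reference points, rounded to one decimal place.
    total = sum(counts.values())
    return {code: round(100 * n / total, 1) for code, n in counts.items()}

print(shares(counts))
```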

| Requirements | Transformation | Function Design |
|---|---|---|
| Variety of interactive lectures | Interactive content presentation | Live captioning |
| | | Multiple definitions of words |
| | | Sign language is translated into written text |
| Class interactions can be recorded | Interactive content recording | Classroom implementation records |
| | | Interactive content is saved in the cloud |
| | | Text interaction facilitates note-taking |
| Teacher–student interaction | Interactions | Reminders when answering questions |
| | | Sight and hearing draw attention to each other |
| | | Choose answers |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, Y.; Zhang, J.; Zhang, Z.; Clepper, G.; Jia, J.; Liu, W. Designing an Interactive Communication Assistance System for Hearing-Impaired College Students Based on Gesture Recognition and Representation. Future Internet 2022, 14, 198. https://doi.org/10.3390/fi14070198
