1. Introduction

Internet technology is being transformed by pervasive, always-connected devices, which open opportunities for novel applications and processes. Real-time and critical systems require high reliability and low response times in application fields such as Industry 4.0 [1], autonomous vehicles [2], disaster management, and emergency response. Such systems often run over low-power and lossy networks that rely on link-layer protocols such as LoRaWAN and NB-IoT [3]. These protocols do not inherently provide quality of service (QoS), so the burden falls on the network layer to achieve the desired reliability for data transmission.

The Named Data Network (NDN) [4] is based on the information-centric networking (ICN) paradigm and was introduced as an additional protocol that complements the Internet Protocol (IP). NDN uses two types of packets, namely, “interest” and “data”. Three components support stateful forwarding in NDN, namely, content stores (CS) [4], forwarding information bases (FIBs) [4], and Pending Interest Tables (PITs) [4]. A consumer node issues an interest packet. The next NDN node, upon receiving the consumer's interest packet, looks up the requested content in its CS and the content name in its PIT. A CS is a memory of variable size that temporarily stores data flowing through the node. If the consumer request matches data available in the CS, then the requested data is served; otherwise, the content name, along with its associated incoming interface (identifying the neighboring node from which the interest packet was received), is recorded in the PIT. If the content name entry already exists in the PIT, indicating that other consumers are waiting for the same content, then the incoming interface of the new interest is added to the existing PIT entry. When a new PIT entry is created, the unsatisfied interest packet is forwarded to the next node according to the FIB, which maintains the interfaces of the neighboring nodes. Content names remain in the PIT until the request is either satisfied or dropped due to time expiry, pre-emption, etc. Interest forwarding repeats until the interest reaches either the source of the content or an intermediate node whose CS holds valid content. Once the content is found in an NDN node, the data packet (carrying the requested content) traverses the reverse path, following the PIT entries and their associated interfaces, to reach the consumers. The CS of each NDN node along the path may decide to cache the traversing content, depending upon its admission-control strategy. A distinguishing feature of NDN is therefore that a consumer request need not be satisfied by the producer of the data: intermediate nodes may have cached the data in their CS and can serve it directly. Content names are organized hierarchically and are addressed as depicted in Figure 1 [4].
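The interest and data processing just described can be sketched as a small Python model of a single NDN node. This is a simplified illustration, not ndnSIM or NFD code: the class and method names (`NdnNode`, `on_interest`, `on_data`) are hypothetical, the FIB is reduced to one outgoing face per prefix, and forwarding strategies, nonces, and PIT timeouts are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class NdnNode:
    """Toy model of one NDN node's stateful forwarding (illustrative only)."""
    cs: dict = field(default_factory=dict)   # content name -> cached data
    pit: dict = field(default_factory=dict)  # content name -> set of incoming faces
    fib: dict = field(default_factory=dict)  # name prefix -> single outgoing face

    def on_interest(self, name, in_face):
        """Process an interest; returns (action, value)."""
        if name in self.cs:                  # CS hit: serve the cached data
            return "data", self.cs[name]
        if name in self.pit:                 # other consumers already waiting
            self.pit[name].add(in_face)      # aggregate, do not forward again
            return "aggregated", None
        self.pit[name] = {in_face}           # new PIT entry, remember the face
        return "forward", self.longest_prefix_match(name)

    def longest_prefix_match(self, name):
        """Return the FIB face for the longest matching prefix, or None."""
        parts = name.strip("/").split("/")
        for i in range(len(parts), 0, -1):
            prefix = "/" + "/".join(parts[:i])
            if prefix in self.fib:
                return self.fib[prefix]
        return None

    def on_data(self, name, data, cache=True):
        """Consume the PIT entry; return the faces the data is sent back on.

        Unsolicited data (no PIT entry) is neither cached nor forwarded.
        """
        faces = self.pit.pop(name, set())
        if cache and faces:                  # a CS admission policy would decide this
            self.cs[name] = data
        return faces
```

For example, a second interest for the same name is aggregated in the PIT, the returning data is delivered to both faces, and a later interest is then served from the CS.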

There is increasing research on employing NDNs for communication network applications [5,6,7]. Research studies [8,9] conclude that NDNs can serve as reliable network-layer protocols with acceptable network throughput and latency. A survey of the existing literature shows that there has been little exploration of the QoS properties of NDNs in the context of content caching for networks with a low-power architecture, such as the Internet of Things (IoT). In an IP network, QoS involves managing network resources such as bandwidth, buffer allocation, and transmission priority [10]. An NDN-based network adds further dimensions of resource management, namely, CS management and PIT management.

Implementing QoS in a network involves assuring resource priority to the qualified content stream over the regular content stream. Flow-based QoS guarantees priority for a content stream based on its application of origin, and class-based QoS guarantees priority for a content stream based on traffic classes such as telephony, network control packets, etc. [11]. In NDNs, content may originate from multiple sources and may be consumed by multiple destinations. Content-forwarding resources are therefore distributed, and these NDN properties make QoS enforcement difficult. The request–response sequence in an NDN proceeds hop by hop, which may trigger an unforeseen amount of data packets and quickly exhaust the resources. Pre-empting pending interests, dropping data packets, and prioritizing QoS interests over regular traffic without a proper strategy may lead to a burst in interest packet volume, and resources may become underutilized [12].

For NDNs, QoS strategies can leverage the inherent advantages of a CS. A CS improves turnaround time, reduces traffic congestion by making the content in demand available through multiple NDN nodes, and acts as a retransmission buffer. QoS strategies should also consider managing PITs, which record the pending interests to be satisfied. As priority interests are satisfied by data packets, regular interests recorded in a PIT may be starved. In another situation, a CS and a PIT may become saturated with QoS traffic, leaving the CS unable to cache further content and the PIT unable to record further pending QoS interests. In such situations, heuristic mechanisms are needed to manage the CS and the PIT with a proper service balance between regular and competing QoS content. Managing distributed resources such as PITs and CSs requires coordination between NDN nodes. Caching identical content across a cluster of NDN nodes reduces the effective caching capacity of the network [13], so the overall CS capacity of the NDN can be used more efficiently if a cooperative caching scheme is implemented. In the context of PITs, a saturated PIT may pre-emptively remove entries and thereby terminate the path for the content flow. This wastes the content-forwarding resources of the preceding NDN nodes, which is unacceptable when the traffic is QoS-guaranteed. In this light, this proposal explores ways to manage the PITs and CSs of NDNs to implement QoS.

The Internet Engineering Task Force (IETF) has published guidelines on tackling QoS issues in information-centric networks using the techniques of flow classification, PIT management, and content store management from a resource-management point of view [

14,

15,

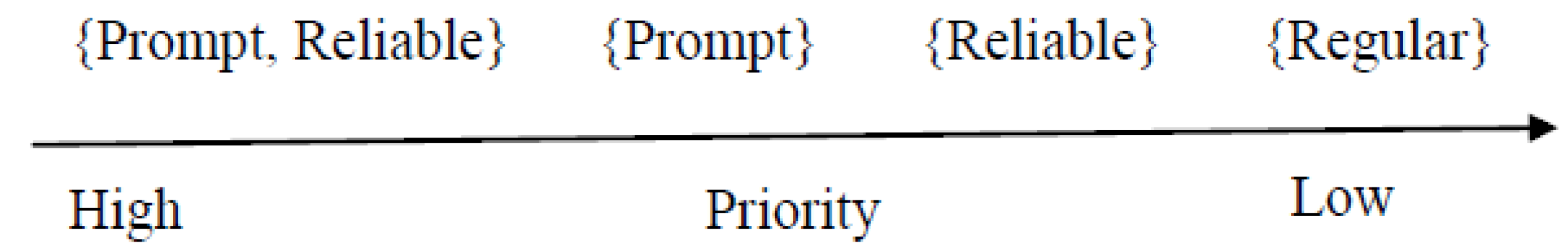

16]. The traffic flows are identified as, and in the order of

, for “prompt” QoS, which aims to minimize the delay for QoS traffic by providing a content-forwarding priority. For “reliable” services, the priority order is

, with the intention of guaranteeing the reliable delivery of data packets to consumers. The nomenclature “prompt”, “reliable”, and their priority orders are preserved in QLPCC, as proposed by the IETF publication. The flow classification introduces the concept of embedding name components to establish equivalence among different traffic flow priorities [

14,

15,

16]. The proposed QoS-linked privileged content-caching (QLPCC) mechanism employs this concept to facilitate the assignment of priorities for different traffic flows indicated by the Flow ID. A Flow ID is a name component which uniquely identifies a priority traffic flow for the implementation of flow differentiation. QLPCC proposes the calculation of an eviction score for a given priority content entry, for eviction from a saturated PIT. Content store management consists of an admission-control phase, where heuristics decide whether or not to cache the content. Each content belongs to a traffic flow, and each QoS node adopts a Flow ID. If the content belongs to the adopted Flow ID, then it is cached by the CS; otherwise, the content is forwarded without caching. The admission-control algorithm defines the steps to adopt new Flow IDs and to drop the old ones. The second phase of CS management is content eviction for the better utilization of limited available memory. QLPCC proposes a novel heuristic-based content-eviction algorithm by considering their QoS priorities, usage frequency, and content freshness. Time-expired content is evicted first, followed by prompt-priority content which was overlapped by Least Frequently Used (LFU) and Least Fresh First (LFF) algorithms. If such an overlapping was not found, then LFU prompt-priority content is evicted. If a memory requirement still persists, then the content-eviction strategy applies LFU and LFF algorithms on reliable priority content. Content that is overlapped by LFU and LFF is evicted first, followed by LFU reliable-priority content.

The proposed QLPCC designates dedicated NDN nodes as QoS nodes for implementing the proposed strategies. QoS nodes are resourceful NDN nodes, including routers, that handle QoS traffic flows according to the QLPCC strategy. The unique feature of QLPCC is that it supports privileged content through both PIT management and CS management. The distinguishing features of QLPCC compared with other schemes are provided in Table 1.

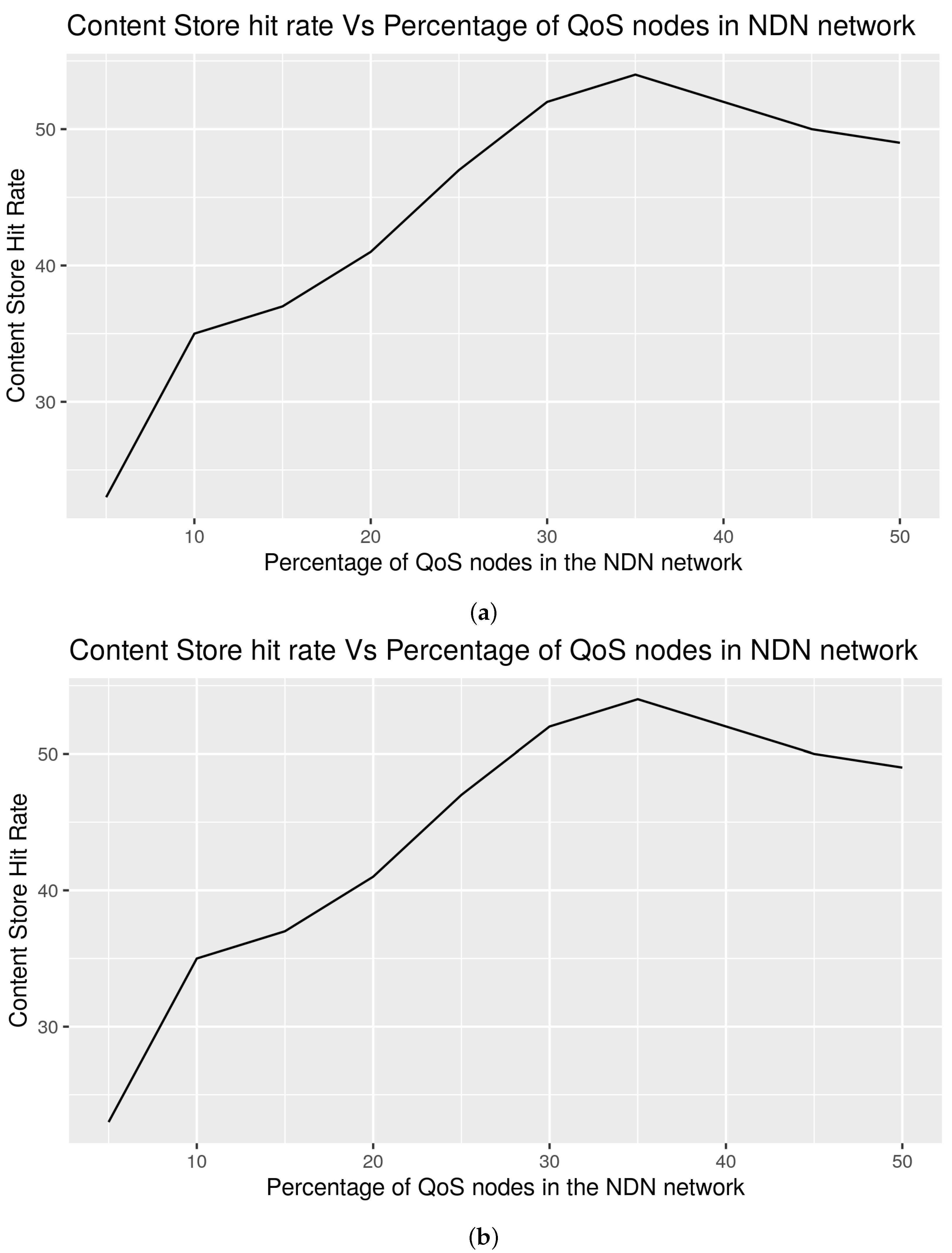

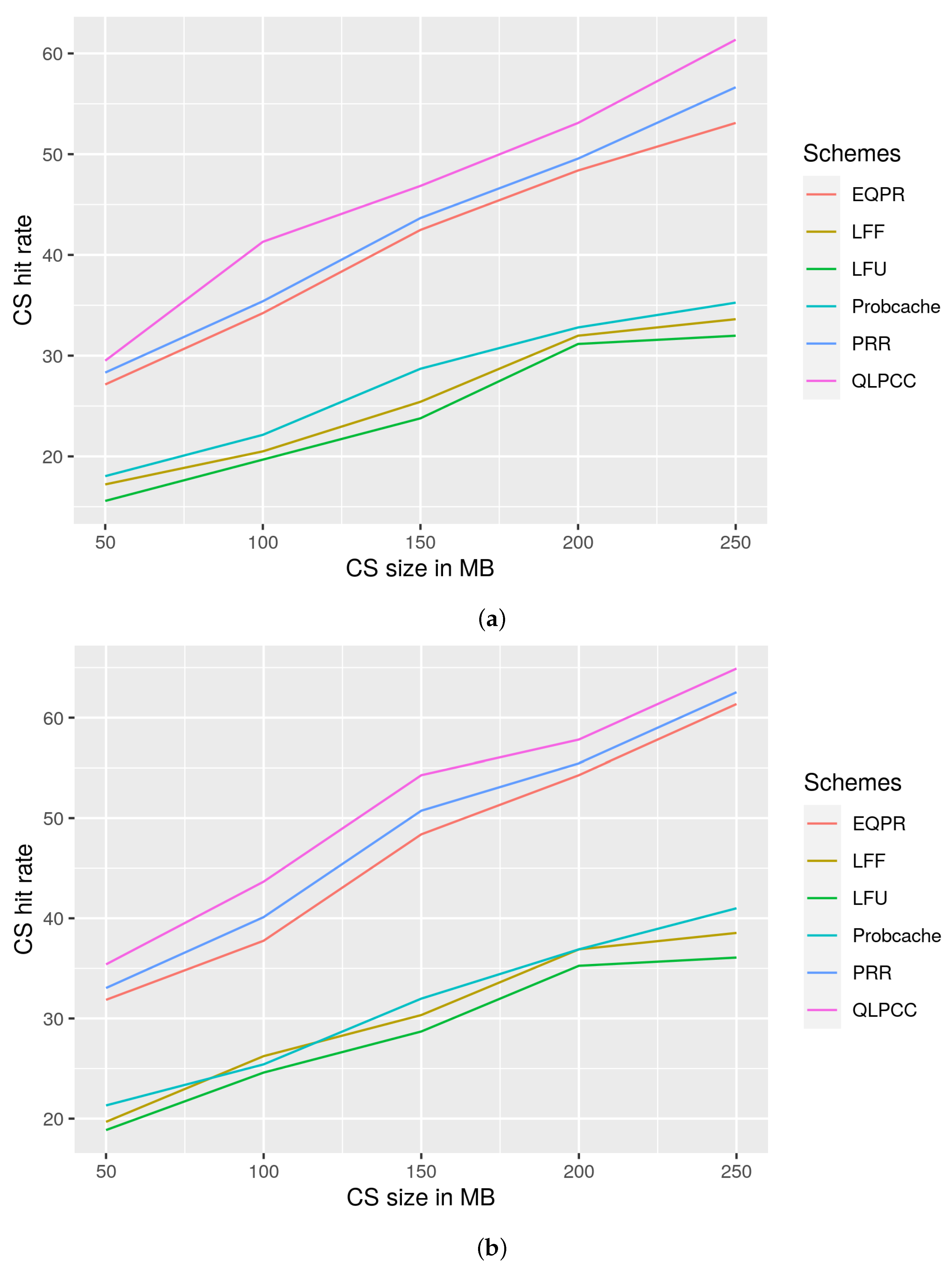

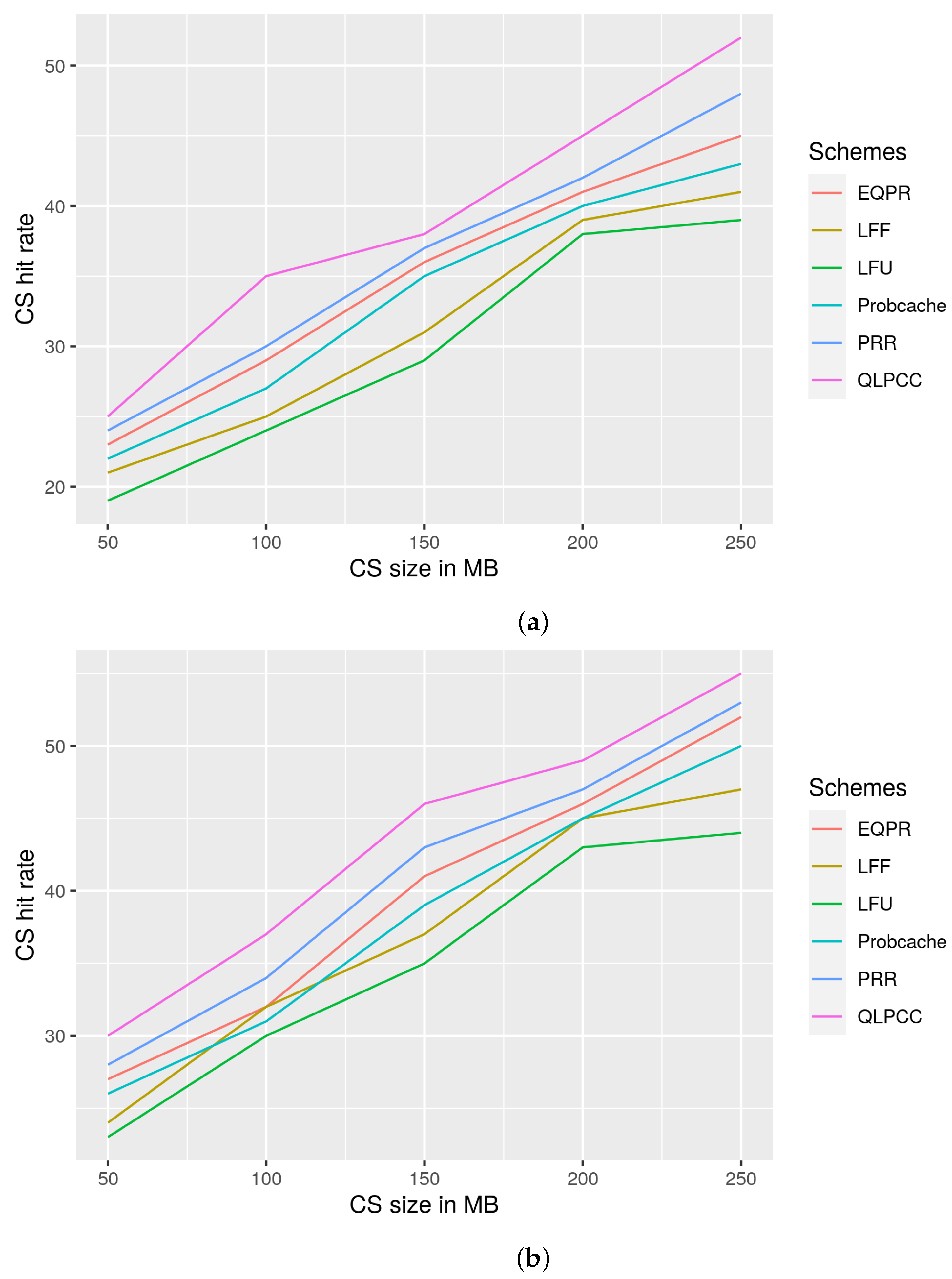

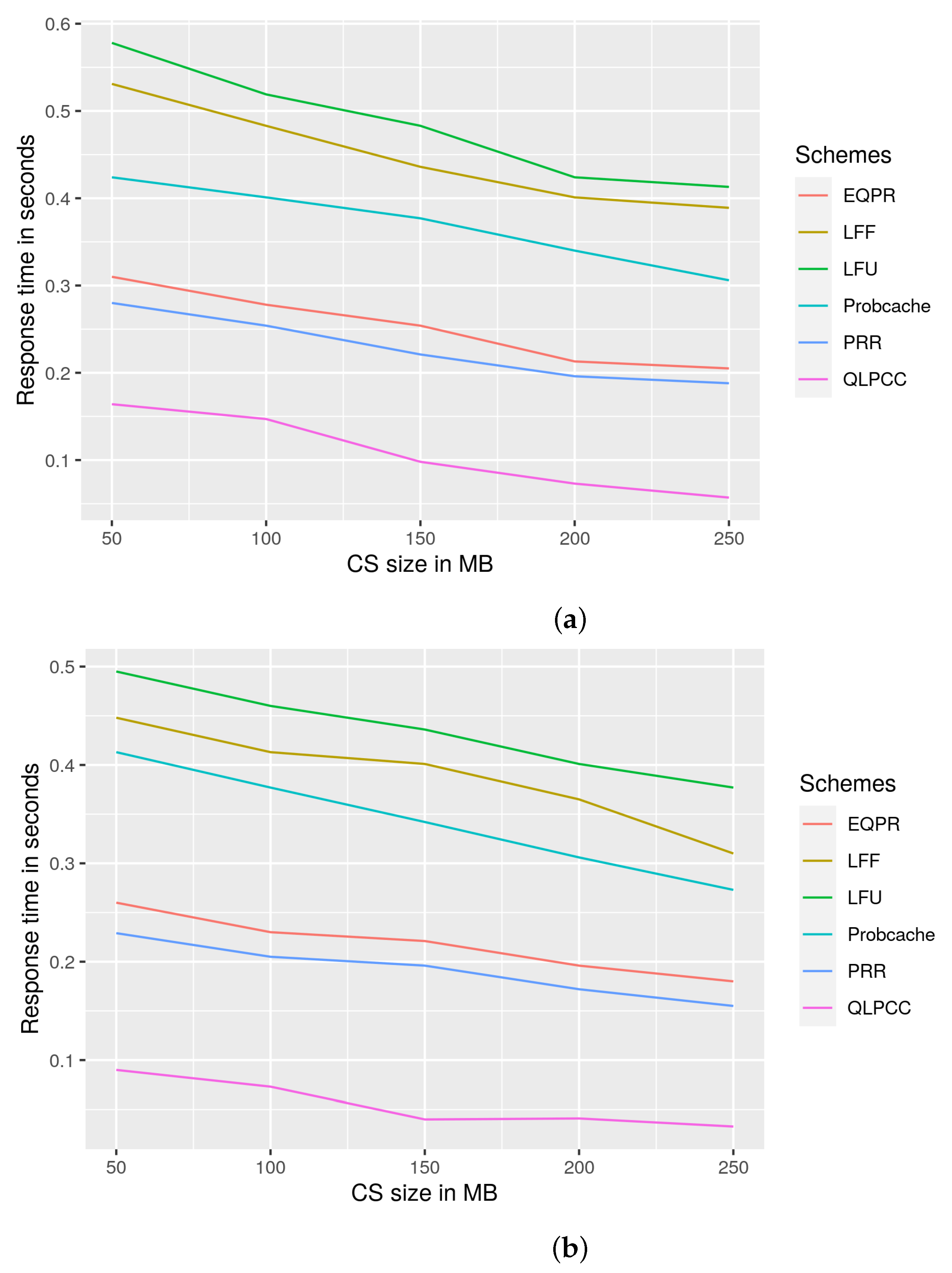

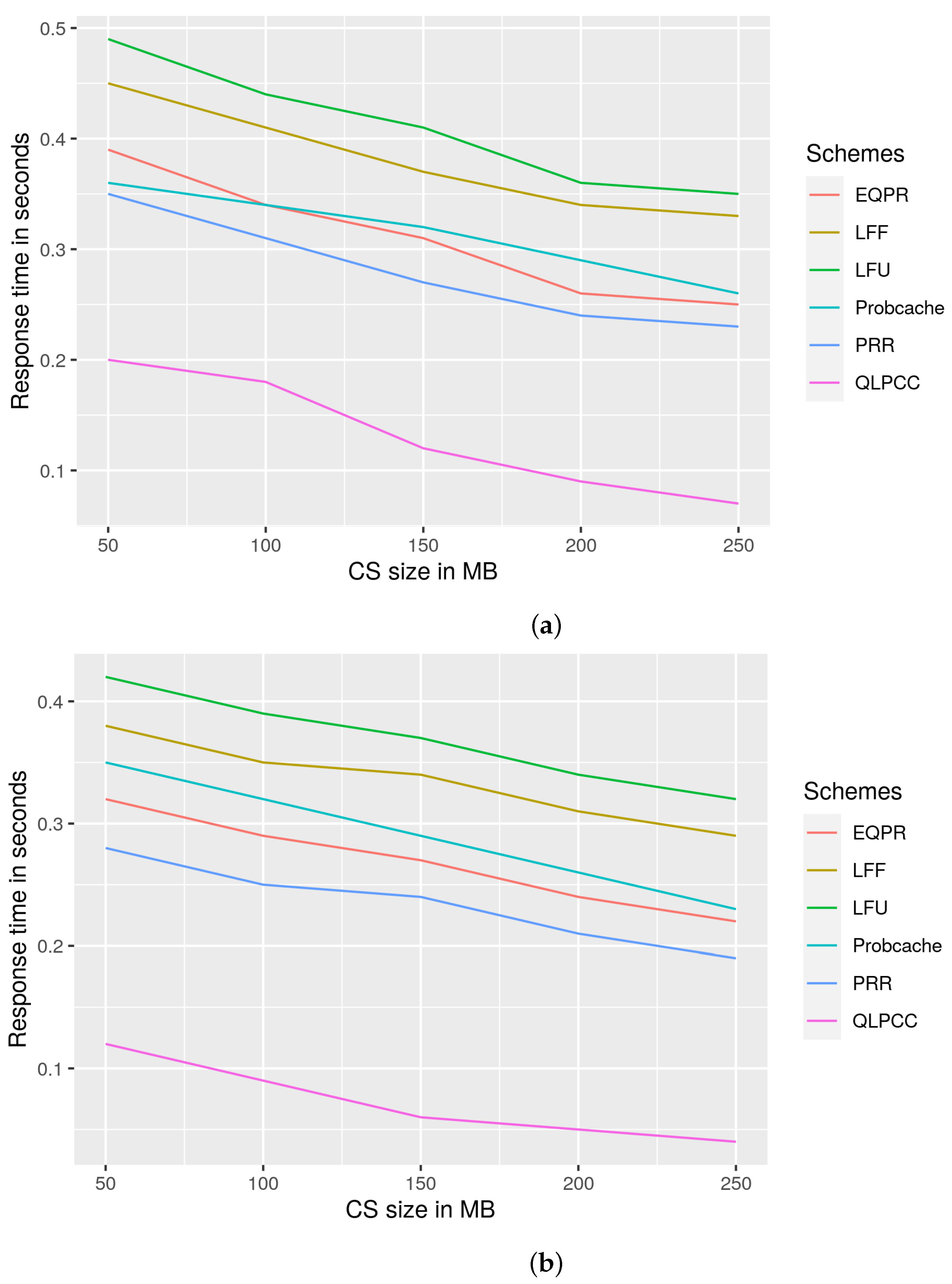

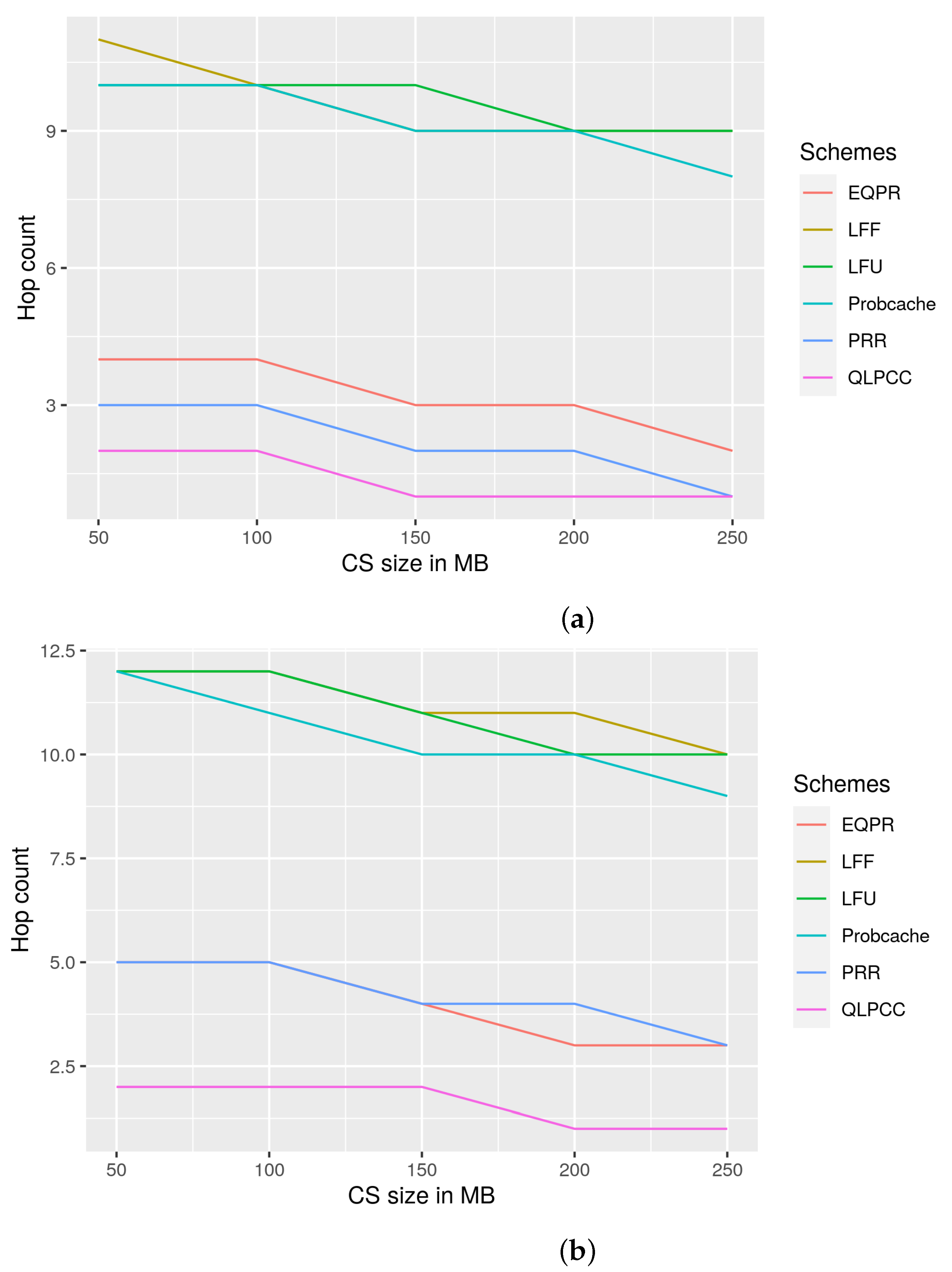

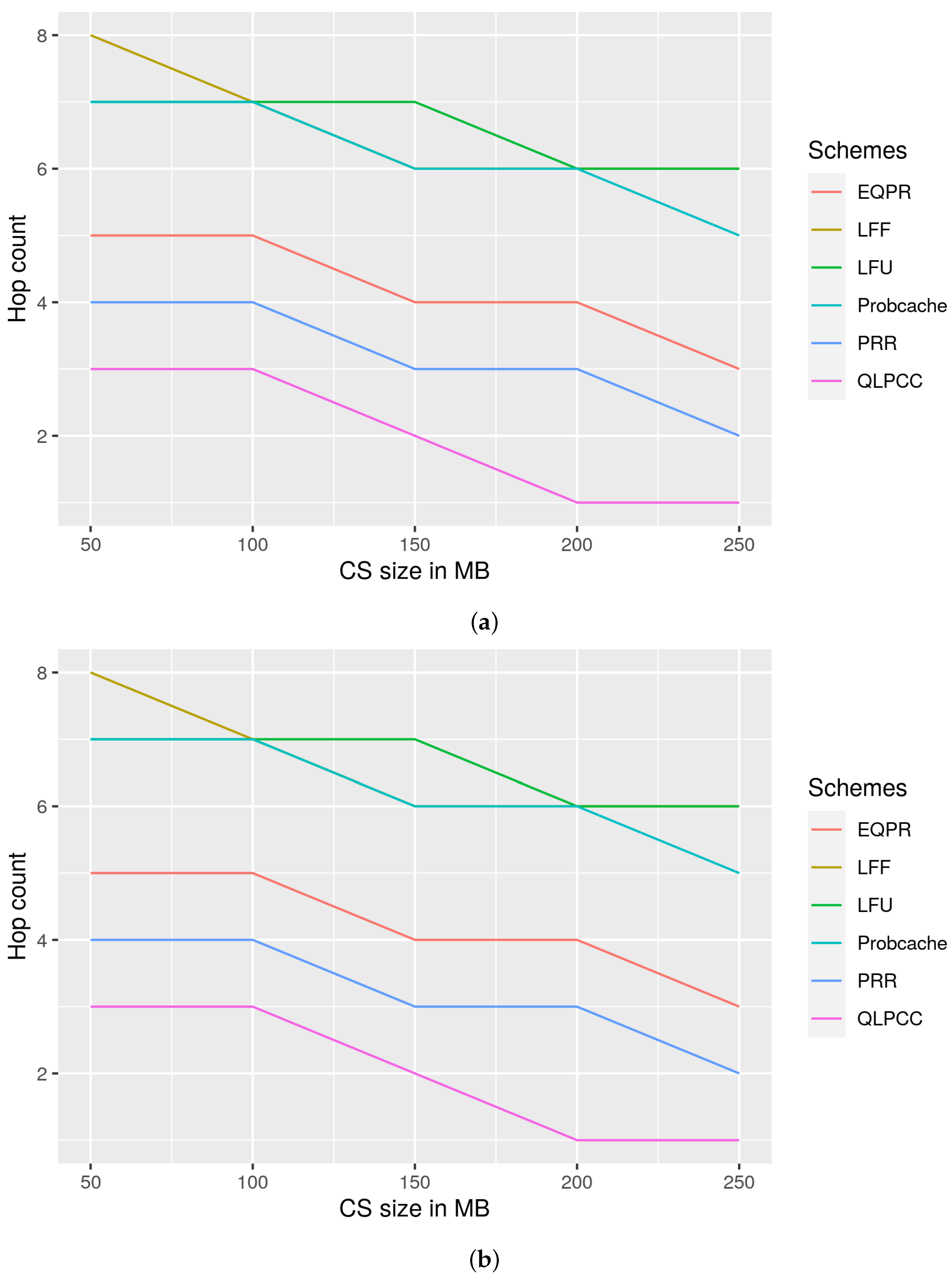

QLPCC is simulated on the ndnSIM [30] platform, and the results are compared with EQPR [28], PRR [29], probability cache, and the LFU and LFF schemes. QLPCC outperformed these schemes in terms of content store hit rate, response time, and hop count reduction, for both priority traffic and overall traffic. QLPCC is also evaluated in terms of content store hit rate vs. the percentage of QoS nodes in the network.

The contributions of the proposed QLPCC strategy are listed as follows:

The QLPCC strategy to manage QoS content in NDN-based networks;

A QoS-based PIT management scheme through a novel Flow Table involving the calculation of eviction scores;

A QoS-based content store management scheme, which proposes the content store admission-control and content-eviction heuristics;

A priority flow adoption method for caching QoS-linked privileged content among QoS nodes;

A reduction in hop count, an increase in content store hit rate, and an improvement in response time.

The paper is organized as follows. Section 1 has introduced the work. Section 2 briefly discusses the QoS guidelines published by the IETF with respect to ICN. Section 3 reviews related work on NDN traffic classification, content store management policies, and PIT management policies. Section 4 describes the QLPCC strategy in terms of the Flow Table, the QoS Table, eviction score calculation, PIT management, and content store management, and closes with an illustrative case example. Results and discussions are provided in Section 5, followed by the conclusions in Section 6.

4. QoS-Linked Privileged Content Caching

QLPCC manages QoS traffic through dedicated QoS nodes. QoS nodes are embedded as intermediate NDN nodes between a consumer and a producer, specifically to handle QoS content in terms of content caching and PIT management, as proposed in the QLPCC strategy. Non-QoS NDN nodes do not differentiate between regular and privileged traffic, and handle both according to standard PIT and CS policies. This benefits regular traffic, allowing it to obtain its fair share of network bandwidth. This proposal introduces Flow Tables for PIT management and QoS Tables for the content store policies of content eviction and content-admission control, as novel mechanisms to be implemented in QoS nodes. The Flow Table identifies the content name, extracts the Flow ID, determines the equivalence class, and calculates the eviction score. For each traffic flow, a Flow ID is assigned to identify the content streams and to provide a continuous streaming path through the QoS node. The eviction score ranks a PIT entry among entries of the same QoS privilege, with a higher score marking a better eviction candidate. This helps the PIT eviction strategy choose among overlapping candidates with the same degree of privilege. The PIT entry-eviction strategy is presented in Algorithm 1. A detailed description of the working of the Flow Table, along with the PIT entry-eviction algorithm, is provided in Section 4.1.

Algorithm 1 PIT entry-eviction algorithm.

Evict all TTL-expired interest entries
for Regular content names in PIT do
  Evict the content name entry with the highest eviction score
  return evicted content name
end for
for Reliable content names in PIT do
  Evict the content name entry with the highest eviction score
  return evicted content name
end for
for Prompt content names in PIT do
  Evict the content name entry with the highest eviction score
  return evicted content name
end for

The QoS Table identifies the content name, extracts the Flow ID, monitors the content time stamp and expiry time, and records the path to the QoS nodes where a given Flow ID is adopted. Content names are used to extract the Flow ID and QoS privilege. The content-eviction algorithm examines the expiry time to evict obsolete content and free up memory. QoS privilege determines the order in which content is considered for eviction. The content-eviction strategy, presented in Algorithm 2, is called when the CS memory is exhausted and the limited available memory must be freed. The content-admission-control strategy is presented in Algorithm 3. A detailed description of the working of the QoS Table, along with the content-eviction and admission-control algorithms, is provided in Section 4.2.

Algorithm 2 Content-eviction algorithm.

Evict all time-expired obsolete content
for Prompt content in content store do
  Apply LFU
  Apply LFF
  Evict the content overlapped by LFU and LFF, followed by LFU content
  return evicted content name
end for
for Reliable content in content store do
  Apply LFU
  Apply LFF
  Evict the content overlapped by LFU and LFF, followed by LFU content
  return evicted content name
end for

Algorithm 3 Content-admission-control algorithm.

if Content Store memory full then
  Call content-eviction procedure
end if
if Flow ID adopted by content store then
  Cache the content
else
  Issue interest packet for QoS Table update
  Look up the QoS Table for the QoS node which has adopted the given Flow ID
  if Flow ID adopted by another QoS node then
    Forward the content
  end if
  if QoS Table is full then
    Forward the content to be adopted in future QoS nodes
  else if Flow ID has not been adopted then
    Adopt the Flow ID
    Set Flow ID expiry time to the longest TTL value among the content belonging to Flow ID
    return Flow ID
  end if
end if

4.1. Flow Table and PIT Management

The purpose of the Flow Table is to maintain a record of the equivalence classes of the PIT entries that are currently active at the given QoS node. Table 3 provides the format of the Flow Table attributes. The Flow ID and equivalence class ID are extracted from the content name hierarchy, at the level where the granularity of QoS is implemented. The eviction score is a function that generates a value used to evaluate candidate content for PIT eviction in cases of PIT saturation. The novelty of eviction-score assignment is its ability to arbitrate priority among entries with the same level of QoS privilege. Although the IETF documents [14,15,16] abstractly order the privileges as (prompt, reliable), prompt, reliable, and regular services, the eviction score acts as an additional tool for arbitrating among entries within the prompt class and within the reliable class, which the IETF publications do not address.

The ECNCT [15] name component for QoS is embedded in the name hierarchy at the granularity level of QoS enforcement and is identified using the naming convention “name—Equivalence Class ID”. The application searches the content name for the name—Equivalence Class ID string pattern and extracts the Flow ID and equivalence class ID to populate the Flow Table. Each content name is associated with an eviction score value, as per Equation (2).

where TTL represents the time to live of the given PIT entry, and

represents the number of request interfaces (indicates the number of consumers waiting to be satisfied) associated with the given PIT entry. A higher eviction score represents a higher probability of PIT eviction for a given equivalence class. The PIT eviction strategy selects an entry with a higher eviction score to evict among the same equivalence class, when there is a requirement for more memory after the time-expired entries are removed from the PIT. The eviction score mechanism acts as an arbitrator between contesting QoS-privileged interests in a resource-constrained PIT. The steps to evict PIT entries by referring to the Flow Table and using the eviction score are provided in Algorithm 1.
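The name-parsing step that populates the Flow Table can be sketched in Python. Because the exact ECNCT string encoding is not reproduced here, this sketch assumes a hypothetical component format `<FlowID>--<class>` (for example `/plant/line1/f17--prompt/temp`); the separator, the class labels, and the function names `parse_qos_name` and `flow_table_entry` are illustrative assumptions. The eviction score is not computed because Equation (2) is not reproduced in this text; the entry only stores the two inputs the text names, TTL and the number of request interfaces.

```python
import re

# Hypothetical QoS component "<FlowID>--<class>"; the real ECNCT encoding may differ.
QOS_COMPONENT = re.compile(
    r"^(?P<flow>[A-Za-z0-9]+)--(?P<cls>prompt\+reliable|prompt|reliable)$"
)


def parse_qos_name(name):
    """Return (flow_id, equivalence_class); names without a QoS component are regular."""
    for component in name.strip("/").split("/"):
        match = QOS_COMPONENT.match(component)
        if match:
            return match.group("flow"), match.group("cls")
    return None, "regular"


def flow_table_entry(name, ttl, interfaces):
    """Build one hypothetical Flow Table row from a content name and PIT state."""
    flow_id, eq_class = parse_qos_name(name)
    return {
        "content_name": name,
        "flow_id": flow_id,
        "eq_class": eq_class,
        "ttl": ttl,                 # time to live of the PIT entry
        "interfaces": interfaces,   # number of consumers waiting on this entry
    }
```

Scanning every component, rather than a fixed position, reflects the statement that the QoS component sits at whatever level of the hierarchy QoS is enforced.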

Algorithm 1 first searches for TTL-expired PIT entries and evicts them, followed by the eviction of PIT entries for regular content in descending order of eviction score. Further memory requirements are satisfied by evicting PIT entries of the reliable equivalence class in descending order of eviction score, followed by PIT entries of the prompt equivalence class, also in descending order of eviction score. Content names of the (prompt, reliable) equivalence class, which carry both prompt and reliable privileges, are evicted only on TTL expiry.
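A minimal Python sketch of Algorithm 1 follows, assuming each PIT entry already carries its equivalence class, an absolute expiry time, and a precomputed eviction score (Equation (2) is taken as given, not reimplemented). The dictionary layout, the `capacity` parameter, the function name, and the class label `prompt+reliable` are hypothetical. In line with the text, expired entries are removed first and further entries are pre-empted only while the PIT is still full.

```python
# Pre-emption order of Algorithm 1. Entries of the (prompt, reliable) class are
# never pre-empted here; they leave the PIT only when their TTL expires.
PREEMPTION_ORDER = ("regular", "reliable", "prompt")


def make_room_in_pit(pit, capacity, now):
    """Free PIT space for one new entry, following Algorithm 1 (sketch).

    pit: hypothetical layout, content name -> {"eq_class", "expiry", "score"},
    where "score" is a precomputed eviction score (higher = evict first).
    Returns the evicted names in eviction order.
    """
    # First pass: remove every TTL-expired entry, whatever its class.
    evicted = [name for name, entry in pit.items() if entry["expiry"] <= now]
    for name in evicted:
        del pit[name]
    # Then pre-empt entries while the PIT is still full.
    while len(pit) >= capacity:
        victim = None
        for eq_class in PREEMPTION_ORDER:
            candidates = [n for n, e in pit.items() if e["eq_class"] == eq_class]
            if candidates:
                victim = max(candidates, key=lambda n: pit[n]["score"])
                break
        if victim is None:          # only (prompt, reliable) entries remain
            break
        del pit[victim]
        evicted.append(victim)
    return evicted
```

Returning without evicting when only (prompt, reliable) entries remain is a design choice of this sketch: the caller must then reject the new interest rather than pre-empt a doubly privileged entry.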

4.2. QoS Table and Content Store Management

Content store management deals with admission-control techniques for content caching and with cached-content-replacement strategies. Admission control is the process of determining whether or not to cache the content. Replacement strategies dictate which content has to be replaced when CS memory is exhausted. Admission control for the content follows the priority order (prompt, reliable) > reliable > prompt > regular [14,16]. CS management follows a federated approach to caching, with the aim of eliminating redundancy and saving scarce memory space. QoS nodes implement the QoS Table, which is used to arbitrate admission-control and content-replacement decisions. The CS of each QoS node federates with those of other QoS nodes based on traffic flows, which are distinguished by Flow ID. If a given flow has already been adopted by a QoS node, then content belonging to that Flow ID is cached by that QoS node. The format of the QoS Table is shown in Table 4.

CS memory is limited and may become exhausted over time. To free up memory, a content-eviction strategy is proposed in Algorithm 2. The content-eviction algorithm considers content freshness, usage frequency, and content privilege as criteria to evaluate suitability for eviction. Expired content is evicted in the first pass, irrespective of its QoS privilege. If more memory is still required, the strategy applies LFU and LFF to “prompt” content: content selected by both algorithms is evicted first, followed by LFU content. If a further memory requirement persists, then “reliable” content is evicted in the same way, first the content selected by both LFU and LFF, followed by LFU content. Content with (prompt, reliable) status is evicted only upon expiry.
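A hedged Python sketch of Algorithm 2 is given below. The text does not define how the LFU and LFF candidate sets are sized or how freshness is measured, so this sketch assumes, hypothetically, that LFU ranks by a per-item hit counter, that freshness is the remaining lifetime `expiry - now`, and that each algorithm nominates its `window` lowest-ranked items; an item nominated by both counts as "overlapped". It also assumes a QoS node's CS holds only prompt, reliable, and (prompt, reliable) content. All names and the store layout are illustrative.

```python
# Hypothetical CS layout: name -> {"eq_class": str, "expiry": float, "hits": int}.
# (prompt, reliable) content is absent from this order: it leaves only on expiry.
EVICTION_CLASS_ORDER = ("prompt", "reliable")


def choose_victim(store, now, window=2):
    """Pick one content name to evict, or None if nothing is evictable."""
    for eq_class in EVICTION_CLASS_ORDER:
        names = [n for n, c in store.items() if c["eq_class"] == eq_class]
        if not names:
            continue
        # LFU nominates the least-hit items; LFF nominates the least fresh ones.
        lfu = sorted(names, key=lambda n: store[n]["hits"])[:window]
        lff = sorted(names, key=lambda n: store[n]["expiry"] - now)[:window]
        overlapped = [n for n in lfu if n in lff]
        # Prefer content nominated by both algorithms, then fall back to LFU.
        return overlapped[0] if overlapped else lfu[0]
    return None


def make_room_in_cs(store, capacity, now, window=2):
    """Sketch of Algorithm 2; returns the evicted names in eviction order."""
    # First pass: obsolete (time-expired) content, regardless of privilege.
    evicted = [n for n, c in store.items() if c["expiry"] <= now]
    for name in evicted:
        del store[name]
    # Then evict prompt content before reliable content while still full.
    while len(store) >= capacity:
        victim = choose_victim(store, now, window)
        if victim is None:              # only (prompt, reliable) content left
            break
        del store[victim]
        evicted.append(victim)
    return evicted
```

The `window` size controls how often an overlap is found: with `window=1` the two algorithms must agree on the single worst item, while larger windows make overlap-based eviction more frequent.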

An admission-control strategy is proposed in Algorithm 3. Admission-control algorithms evaluate the suitability of content to be cached in the CS of a QoS node. If the CS memory is exhausted, the algorithm calls the content-eviction procedure. Content streamed through the NDN is part of a traffic flow identified by a Flow ID. The Flow ID is extracted from the content name, as illustrated by the QoS Table in Table 4. Each QoS node caches content in its CS depending upon whether the content belongs to an adopted Flow ID. If the Flow ID is adopted by the QoS node, then the content is cached. If the Flow ID is not adopted by the QoS node, then an interest packet is issued to the NDN for a QoS Table update, which indicates the latest status of Flow ID adoption. The QoS Table is searched to identify the QoS node where the Flow ID is adopted, and the given content is forwarded. If the Flow ID is not adopted by any node, and if the QoS Table is not yet full, the current QoS node adopts the Flow ID and sets an expiry time for the adoption, which reflects the longest TTL value of the content belonging to the Flow ID. If the QoS node cannot adopt the Flow ID due to memory limitations, it forwards the traffic towards further QoS nodes for adoption.
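Algorithm 3 for a single arriving QoS data packet can be sketched as follows. The `QosNode` fields, the `network_view` dictionary (a stand-in for the QoS Table update obtained through the interest/data exchange), the `evict` callable (standing in for the content-eviction procedure), and the returned decision strings are all hypothetical. As in Algorithm 3, the packet that triggers an adoption is not itself cached; the adoption applies to later content of that flow.

```python
from dataclasses import dataclass, field


@dataclass
class QosNode:
    name: str
    cs_capacity: int
    table_capacity: int                              # max Flow IDs this node may adopt
    adopted: dict = field(default_factory=dict)      # Flow ID -> adoption expiry time
    cs: dict = field(default_factory=dict)           # content name -> data
    qos_table: dict = field(default_factory=dict)    # Flow ID -> adopting node name


def admission_control(node, name, data, flow_id, max_flow_ttl, now,
                      network_view, evict):
    """Sketch of Algorithm 3; returns a string describing the decision."""
    # Drop adoptions whose expiry time has passed.
    for fid in [f for f, exp in node.adopted.items() if exp <= now]:
        del node.adopted[fid]
    if len(node.cs) >= node.cs_capacity:
        evict(node.cs)                               # content-eviction procedure
    if flow_id in node.adopted:
        node.cs[name] = data
        return "cached"
    node.qos_table.update(network_view)              # QoS Table update
    owner = node.qos_table.get(flow_id)
    if owner is not None and owner != node.name:
        return f"forward (adopted by {owner})"
    if len(node.adopted) >= node.table_capacity:
        return "forward for adoption by a further QoS node"
    node.adopted[flow_id] = now + max_flow_ttl       # expiry = longest TTL in the flow
    node.qos_table[flow_id] = node.name
    return f"adopted {flow_id}"
```

Loosely mirroring a two-QoS-node path: the first node adopts the flow, the second learns of the adoption and only forwards, and subsequent content of the flow is cached at the first node.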

4.3. An Illustration

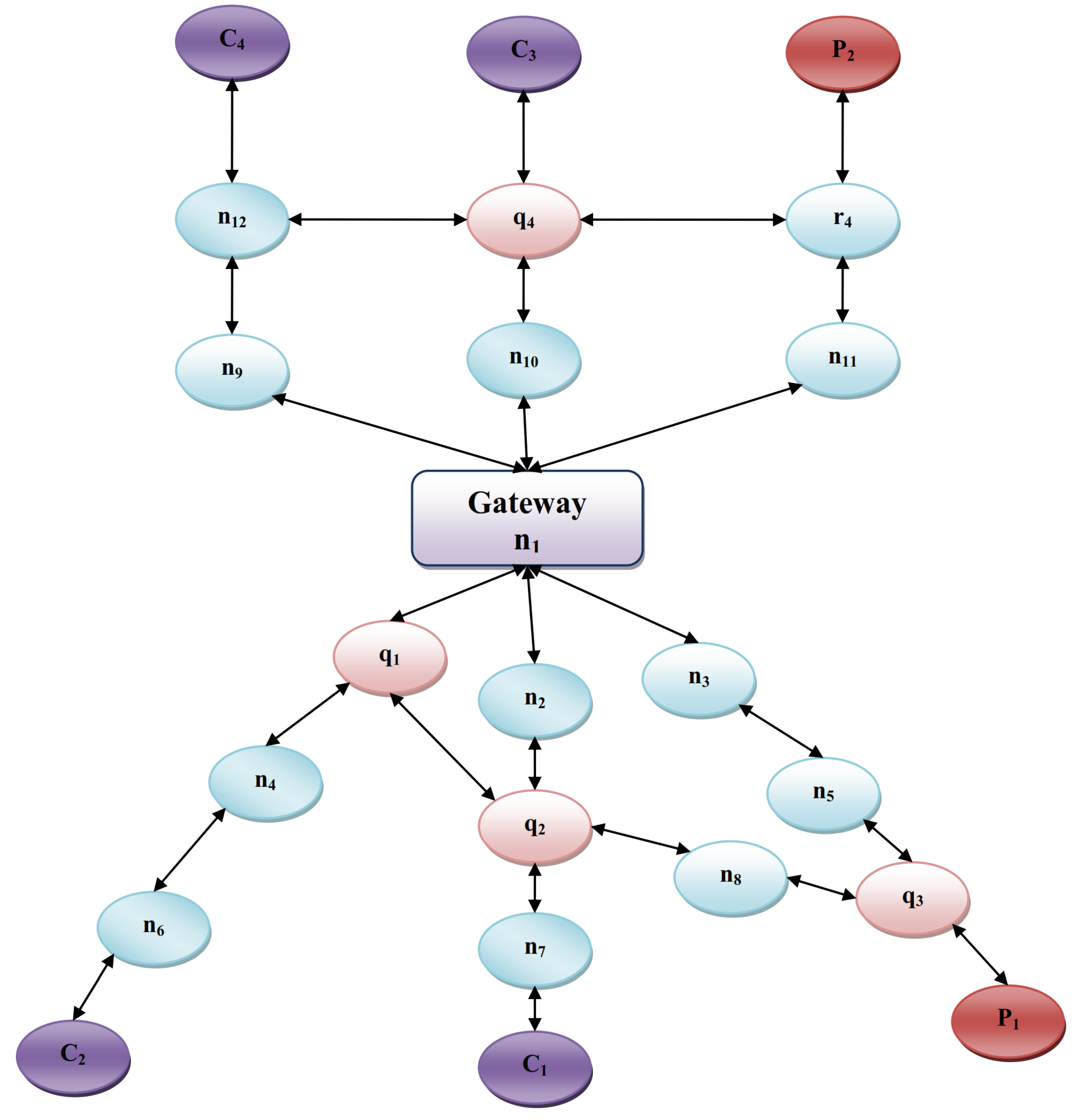

A computer network comprising IoT sensors, actuators, and resourceful NDN nodes (routers/gateways) interconnected by a wired or wireless medium is considered as the experimental scenario. All nodes of the experimental network form the set M; the content producers P, the content consumers, and the QoS nodes are all subsets of M. The proposal caches the QoS content from the producers in QoS nodes. The producers generate both QoS and non-QoS data. The QoS data are cached by QoS nodes, and non-QoS data are cached in regular intermediate nodes (without privileged treatment). The model considers the QoS content produced by the producer nodes P and the amount of QoS content cached in the content store of a given QoS node. Content consumers issue and propagate interest packets towards content producers. For an NDN node at time t, the model considers the set of interest packets that reach the node, the content held in its content store, and the number of those interest packets that are served from the content store. The cached-content hit rate is the ratio of the number of interest packets served by the content store to the total number of interest packets received, as given by Equation (3):

$$\text{Hit rate} = \frac{\text{number of interest packets served by the content store}}{\text{total number of interest packets received}} \qquad (3)$$
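Equation (3) can be computed from simple per-interval counters. The sketch below assumes, hypothetically, that a node records the number of interests received and the number served from its CS in each time interval, and aggregates over intervals by taking the ratio of the totals; the function name is illustrative.

```python
def cs_hit_rate(received, served):
    """Equation (3) aggregated over intervals: interests served by the CS
    divided by all interests received. Both lists hold per-interval counts."""
    if len(received) != len(served):
        raise ValueError("received and served must cover the same intervals")
    if any(s > r for r, s in zip(received, served)):
        raise ValueError("a CS cannot serve more interests than it received")
    total = sum(received)
    return sum(served) / total if total else 0.0
```

For example, `cs_hit_rate([10, 20, 10], [2, 5, 3])` returns 0.25, since 10 of 40 interests were served from the content store.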

An illustration of a case example of the proposed QoS-linked collaborative content caching is presented in this section, using the network scenario represented in Figure 3. Suppose that QoS content produced by a producer is requested by a consumer, which issues an interest packet and forwards it towards the next NDN node on the path. If that node has cached the content in its content store, the content is served; otherwise, an entry is made in its PIT and Flow Table, and the interest packet is forwarded to the next node. The same check is repeated at the following node, and the interest packet is eventually forwarded to the producer. The producer responds to the interest packet by issuing the corresponding content towards the consumer along the reverse path. The first QoS node that the QoS content encounters invokes the admission-control algorithm for its content store and adopts the Flow ID of that content, so that all future content belonging to that Flow ID is cached in that QoS node. The next QoS node on the path learns, through the interest/data packet exchange triggered by the admission-control algorithm, that the Flow ID has already been adopted by the first QoS node, so the content is streamed through it without adoption or caching. The format of the QoS Table for this second QoS node is shown in Table 4.

6. Conclusions

QoS-linked privileged content caching (QLPCC) is a Pending Interest Table (PIT) and content store (CS) management strategy for NDN-based networks in Quality-of-Service (QoS) scenarios. QLPCC proposes the QoS node, an intermediate resourceful NDN node that handles only QoS traffic in the network. QoS traffic receives regular treatment in other NDN nodes, which ensures that regular traffic is not starved. QLPCC proposes a Flow Table-based PIT management scheme, which categorizes QoS traffic into (prompt, reliable), prompt, reliable, and regular service categories [14,15,16], and calculates an eviction score for the content of each traffic flow identified by a Flow ID. The PIT entry with the highest eviction score is pre-empted when the need arises. QLPCC also proposes a QoS Table-based content store management scheme for content-admission-control and content-eviction operations. The QoS Table maintains a record of content expiry times, Flow IDs, and the paths to the QoS nodes that have adopted other Flow IDs. Using the QoS Table, the QLPCC strategy decides whether to cache content or to forward it towards other QoS nodes. QLPCC proposes a content store eviction algorithm to free the limited memory; it evicts content in the order of obsolete content, prompt-privileged content, and reliable-privileged content. The proposed QLPCC strategy is simulated on the ndnSIM [30] platform with 500 and 1000 nodes to demonstrate scalability. The results are compared with EQPR [28], PRR [29], probability cache, and the Least Frequently Used (LFU) and Least Fresh First (LFF) schemes, and are analyzed in terms of hit rate vs. content store size, response time vs. QoS-node content store size, and hop count vs. content store size. QLPCC outperformed all of the previously mentioned schemes on these measures.

For NDN-based ad hoc networks, NDN nodes should be designated as QoS nodes dynamically. Future work will explore this challenge and the heuristics required to solve it.