An Active Path-Associated Cache Scheme for Mobile Scenes

Abstract

1. Introduction

- Reactive: nodes on the old path continue to cache the content requested by the user until the user attaches to the new access point, which then requests the previously cached content.

- Persistent subscription: an intermediate agent caches the items to which mobile users have subscribed.

- Active: content is cached at the new access point in advance, so the user can retrieve it directly upon connecting to that access point.

- Enable: dynamically adjust the cache policy to improve the hit rate of the local cache.

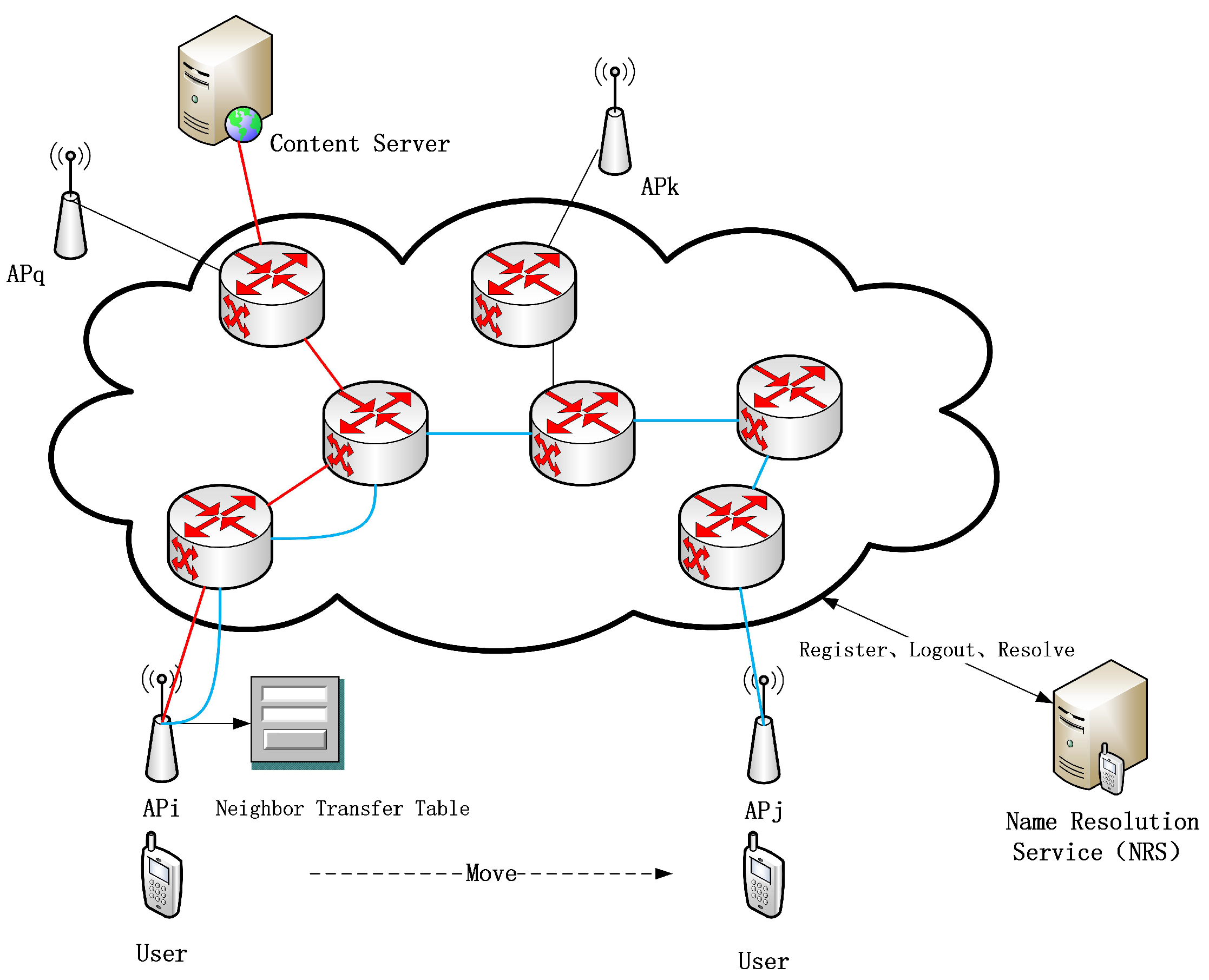

- We propose a neighbor message propagation caching mechanism based on user behavior. The mechanism selects the set of target APs according to the source AP's transition probability table and transition probability threshold, and triggers active caching when the user moves.

- Unlike other active caching methods, which push content directly to the target AP, our approach makes full use of the forwarding path from the source AP to the destination AP and caches content with high freshness and popularity at high-value nodes along that path. This preserves the cache hit rate while making efficient use of the limited storage of network devices, and reduces network delay.

- We evaluate the proposed caching scheme on real network topologies (GARR, WIDE, and GEANT). Experiments compare our active caching strategy with other caching schemes, and the results show that our scheme performs best across the tested topologies.
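The target-AP selection in the first contribution can be sketched in a few lines of Python. This is a minimal sketch under two stated assumptions: a neighbor's transition probability is its share of all moves observed out of the source AP, and the threshold is a fixed value that a neighbor's probability must reach. The paper's own threshold rule (Section 3.1.2) may differ, and the function names are hypothetical.

```python
from typing import Dict, List


def transition_probabilities(move_counts: Dict[str, int]) -> Dict[str, float]:
    """Estimate P(source AP -> neighbor AP) as the neighbor's share of observed moves.

    Assumption: probabilities are plain relative frequencies of past moves.
    """
    total = sum(move_counts.values())
    if total == 0:
        # No history yet: every neighbor gets probability 0.
        return {ap: 0.0 for ap in move_counts}
    return {ap: count / total for ap, count in move_counts.items()}


def select_target_aps(move_counts: Dict[str, int], threshold: float) -> List[str]:
    """Return neighbor APs whose transition probability reaches the threshold.

    Assumption: a fixed threshold; results are ordered from most to least likely.
    """
    probs = transition_probabilities(move_counts)
    chosen = [ap for ap, p in probs.items() if p >= threshold]
    return sorted(chosen, key=lambda ap: probs[ap], reverse=True)
```

For example, with the move counts from the transition probability table (12,000, 2500, and 500 moves) and a threshold of 0.1, `select_target_aps` returns only the `...:4B` and `...:4E` neighbors, so active caching would be triggered toward those two APs and not toward `...:4A`.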

2. Related Work

2.1. Mobility of the ICN Network

2.2. Mobility Caching in ICN Networks

3. The Proposed Approach

3.1. Neighbor Selection Mechanism

3.1.1. Acquisition of Transition Probability

3.1.2. Threshold of Transition Probability

3.2. Neighbor Selection Mechanism over ICN

4. Cooperative Caching Mechanism

4.1. ICN Architecture Supporting IP Network

4.2. Caching Mechanism Based on Node Cache Value

4.2.1. Node Cache Value

4.2.2. The Process of Cache Decision

Algorithm 1: Mark Cache Node.

4.2.3. The Process of Cache Decision

Algorithm 2: Confirm Cache Node.

5. Simulation Results and Analysis

- EDGE (edge caching): content is cached only at the edge, i.e., at the node closest to the requesting user [47].

- LCE (Leave Copy Everywhere): a copy of the content is stored at every cache node along the delivery path [11].

- LCD (Leave Copy Down): the content is cached only at the node one hop downstream of the node that served the request, i.e., one hop closer to the user [48].

- CL4M (Cache Less for More): content is cached only once along the delivery path, at the node with the highest betweenness centrality [49].

- ProbCache: content is cached probabilistically along the delivery path. The caching probability depends mainly on the distance between the source and the requester and on the cache space available along the path [50].

- Random: a single node on the delivery path is chosen at random to cache the content [47].
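To make the differences between these baselines concrete, the Python sketch below expresses each placement rule, except ProbCache, as a function from the delivery path to the nodes that keep a copy. It assumes `path` lists the cache nodes between the serving node and the user, ordered from the serving node toward the user with both endpoints excluded, and that betweenness centrality values are precomputed. The function names are hypothetical and this is not the Icarus implementation; ProbCache is omitted because its probability formula needs more path state than fits a short example.

```python
import random
from typing import Dict, List, Optional, Sequence


def lce(path: Sequence[str]) -> List[str]:
    """Leave Copy Everywhere: every node on the delivery path keeps a copy."""
    return list(path)


def lcd(path: Sequence[str]) -> List[str]:
    """Leave Copy Down: only the node one hop below the serving node keeps a copy."""
    return list(path[:1])


def edge(path: Sequence[str]) -> List[str]:
    """EDGE: only the node adjacent to the user keeps a copy."""
    return list(path[-1:])


def cl4m(path: Sequence[str], betweenness: Dict[str, float]) -> List[str]:
    """CL4M: cache once, at the node with the highest betweenness centrality.

    Ties go to the node nearest the serving node (first maximum in path order).
    """
    if not path:
        return []
    return [max(path, key=lambda node: betweenness.get(node, 0.0))]


def random_choice(path: Sequence[str], rng: Optional[random.Random] = None) -> List[str]:
    """Random: one node, chosen uniformly at random, keeps a copy."""
    if not path:
        return []
    rng = rng or random.Random()
    return [rng.choice(list(path))]
```

For a path `["r1", "r2", "r3"]`, LCE returns all three nodes, LCD returns `["r1"]`, EDGE returns `["r3"]`, and CL4M returns whichever node has the largest betweenness value. Keeping each rule a pure function of the path makes it easy to swap strategies in a simulation loop.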

5.1. Setting of Experimental Environment

5.2. Cache Hit Ratio

5.3. Latency

5.4. Link Load

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Balakrishnan, H.; Kaashoek, M.F.; Karger, D.; Morris, R.; Stoica, I. Looking up data in P2P systems. Commun. ACM 2003, 46, 43–48.

- Peng, G. CDN: Content distribution network. arXiv 2004, arXiv:cs/0411069.

- Koponen, T.; Chawla, M.; Chun, B.G.; Ermolinskiy, A.; Kim, K.H.; Shenker, S.; Stoica, I. A data-oriented (and beyond) network architecture. In Proceedings of the 2007 Conference on Applications, Technologies, Architectures, and Protocols for Computer Communications, Kyoto, Japan, 27–31 August 2007; pp. 181–192.

- Jacobson, V.; Smetters, D.K.; Thornton, J.D.; Plass, M.F.; Briggs, N.H.; Braynard, R.L. Networking named content. In Proceedings of the 5th International Conference on Emerging Networking Experiments and Technologies, Rome, Italy, 1–4 December 2009; pp. 1–12.

- Dannewitz, C.; Kutscher, D.; Ohlman, B.; Farrell, S.; Ahlgren, B.; Karl, H. Network of information (NetInf)—An information-centric networking architecture. Comput. Commun. 2013, 36, 721–735.

- Ain, M.; Trossen, D.; Nikander, P.; Tarkoma, S.; Visala, K.; Rimey, K.; Burbridge, T.; Rajahalme, J.; Tuononen, J.; Jokela, P.; et al. Deliverable D2.3—Architecture Definition, Component Descriptions, and Requirements. PSIRP 7th FP EU-Funded Project. 2009. Volume 11. Available online: http://www.psirp.org/files/Deliverables/FP7-INFSO-ICT-216173-PSIRP-D2.3_ArchitectureDefinition.pdf (accessed on 12 December 2021).

- Ahlgren, B.; Dannewitz, C.; Imbrenda, C.; Kutscher, D.; Ohlman, B. A survey of information-centric networking. IEEE Commun. Mag. 2012, 50, 26–36.

- Fang, C.; Yao, H.; Wang, Z.; Wu, W.; Jin, X.; Yu, F.R. A survey of mobile information-centric networking: Research issues and challenges. IEEE Commun. Surv. Tutor. 2018, 20, 2353–2371.

- Rosenberg, J.; Schulzrinne, H.; Camarillo, G.; Johnston, A.; Peterson, J.; Sparks, R.; Handley, M.; Schooler, E. SIP: Session Initiation Protocol; Association for Computing Machinery: New York, NY, USA, 2002.

- Perkins, C.E. Mobile IP. IEEE Commun. Mag. 1997, 35, 84–99.

- Zhang, L.; Estrin, D.; Burke, J.; Jacobson, V.; Thornton, J.D.; Smetters, D.K.; Zhang, B.; Tsudik, G.; Massey, D.; Papadopoulos, C.; et al. Named Data Networking (NDN) Project; Technical Report NDN-0001; Xerox Palo Alto Research Center-PARC: Palo Alto, CA, USA, 2010.

- Fotiou, N.; Nikander, P.; Trossen, D.; Polyzos, G.C. Developing information networking further: From PSIRP to PURSUIT. In Proceedings of the International Conference on Broadband Communications, Networks and Systems, Athens, Greece, 25–27 October 2010; pp. 1–13.

- Seskar, I.; Nagaraja, K.; Nelson, S.; Raychaudhuri, D. MobilityFirst future internet architecture project. In Proceedings of the IEEE 7th Asian Internet Engineering Conference, Bangkok, Thailand, 9–11 November 2011; pp. 1–3.

- Kent, S.; Lynn, C.; Seo, K. Secure border gateway protocol (S-BGP). IEEE J. Sel. Areas Commun. 2000, 18, 582–592.

- Vasilakos, X.; Siris, V.A.; Polyzos, G.C.; Pomonis, M. Proactive selective neighbor caching for enhancing mobility support in information-centric networks. In Proceedings of the IEEE Second Edition of the ICN Workshop on Information-Centric Networking, Helsinki, Finland, 17 August 2012; pp. 61–66.

- Caporuscio, M.; Carzaniga, A.; Wolf, A.L. Design and evaluation of a support service for mobile, wireless publish/subscribe applications. IEEE Trans. Softw. Eng. 2003, 29, 1059–1071.

- Fiege, L.; Gartner, F.C.; Kasten, O.; Zeidler, A. Supporting mobility in content-based publish/subscribe middleware. In Proceedings of the IEEE ACM/IFIP/USENIX International Conference on Distributed Systems Platforms and Open Distributed Processing, Beijing, China, 9–13 December 2003; pp. 103–122.

- Sourlas, V.; Paschos, G.S.; Flegkas, P.; Tassiulas, L. Mobility support through caching in content-based publish/subscribe networks. In Proceedings of the IEEE 2010 10th IEEE/ACM International Conference on Cluster, Cloud and Grid Computing, Melbourne, Australia, 17–20 May 2010; pp. 715–720.

- Wang, J.; Cao, J.; Li, J.; Wu, J. MHH: A novel protocol for mobility management in publish/subscribe systems. In Proceedings of the IEEE 2007 International Conference on Parallel Processing (ICPP 2007), Xi’an, China, 10–14 September 2007; p. 54.

- Farooq, U.; Parsons, E.W.; Majumdar, S. Performance of publish/subscribe middleware in mobile wireless networks. ACM SIGSOFT Softw. Eng. Notes 2004, 29, 278–289.

- Burcea, I.; Jacobsen, H.A.; De Lara, E.; Muthusamy, V.; Petrovic, M. Disconnected operation in publish/subscribe middleware. In Proceedings of the IEEE International Conference on Mobile Data Management, Berkeley, CA, USA, 19–22 January 2004; pp. 39–50.

- Gaddah, A.; Kunz, T. Extending mobility to publish/subscribe systems using a pro-active caching approach. Mob. Inf. Syst. 2010, 6, 293–324.

- Siris, V.A.; Vasilakos, X.; Polyzos, G.C. Efficient proactive caching for supporting seamless mobility. In Proceedings of the IEEE International Symposium on a World of Wireless, Mobile and Multimedia Networks 2014, Sydney, Australia, 19 June 2014; pp. 1–6.

- Siris, V.A.; Vasilakos, X.; Polyzos, G.C. A Selective Neighbor Caching Approach for Supporting Mobility in Publish/Subscribe Networks. In Proceedings of the Fifth ERCIM Workshop on Emobility, Catalonia, Spain, 14 June 2011; p. 63.

- Jiang, P.; Jin, Y.; Yang, T.; Geurts, J.; Liu, Y.; Point, J.C. Handoff prediction for data caching in mobile content centric network. In Proceedings of the IEEE 2013 15th IEEE International Conference on Communication Technology, Guilin, China, 17–19 November 2013; pp. 691–696.

- Li, C.; Song, M.; Yu, C.; Luo, Y. Mobility and marginal gain based content caching and placement for cooperative edge-cloud computing. Inf. Sci. 2021, 548, 153–176.

- Yu, Z.; Hu, J.; Min, G.; Zhao, Z.; Miao, W.; Hossain, M.S. Mobility-aware proactive edge caching for connected vehicles using federated learning. IEEE Trans. Intell. Transp. Syst. 2020, 22, 5341–5351.

- Raychaudhuri, D.; Nagaraja, K.; Venkataramani, A. MobilityFirst: A robust and trustworthy mobility-centric architecture for the future internet. ACM SIGMOBILE Mob. Comput. Commun. Rev. 2012, 16, 2–13.

- Mishra, A.; Shin, M.; Arbaugh, W. Context caching using neighbor graphs for fast handoffs in a wireless network. In Proceedings of the IEEE INFOCOM 2004, Hong Kong, China, 7–11 March 2004; Volume 1.

- Pack, S.; Jung, H.; Kwon, T.; Choi, Y. SNC: A selective neighbor caching scheme for fast handoff in IEEE 802.11 wireless networks. ACM SIGMOBILE Mob. Comput. Commun. Rev. 2005, 9, 39–49.

- Davidson, J.; Liebald, B.; Liu, J.; Nandy, P.; Van Vleet, T.; Gargi, U.; Gupta, S.; He, Y.; Lambert, M.; Livingston, B.; et al. The YouTube video recommendation system. In Proceedings of the Fourth ACM Conference on Recommender Systems, Barcelona, Spain, 26–30 September 2010; pp. 293–296.

- Balachandran, A.; Voelker, G.M.; Bahl, P.; Rangan, P.V. Characterizing user behavior and network performance in a public wireless LAN. In Proceedings of the 2002 ACM SIGMETRICS International Conference on Measurement and Modeling of Computer Systems, Marina Del Rey, CA, USA, 15–19 June 2002; pp. 195–205.

- Schwab, D.; Bunt, R. Characterising the use of a campus wireless network. In Proceedings of the IEEE INFOCOM 2004, Hong Kong, China, 7–11 March 2004; Volume 2, pp. 862–870.

- Lika, B.; Kolomvatsos, K.; Hadjiefthymiades, S. Facing the cold start problem in recommender systems. Expert Syst. Appl. 2014, 41, 2065–2073.

- Schein, A.I.; Popescul, A.; Ungar, L.H.; Pennock, D.M. Methods and metrics for cold-start recommendations. In Proceedings of the 25th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Tampere, Finland, 11–15 August 2002; pp. 253–260.

- Wei, J.; He, J.; Chen, K.; Zhou, Y.; Tang, Z. Collaborative filtering and deep learning based recommendation system for cold start items. Expert Syst. Appl. 2017, 69, 29–39.

- Prados-Garzon, J.; Adamuz-Hinojosa, O.; Ameigeiras, P.; Ramos-Munoz, J.J.; Andres-Maldonado, P.; Lopez-Soler, J.M. Handover implementation in a 5G SDN-based mobile network architecture. In Proceedings of the 2016 IEEE 27th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Valencia, Spain, 4–8 September 2016; pp. 1–6.

- Natale, D. Dynamic end-to-end guarantees in distributed real time systems. In Proceedings of the 1994 Real-Time Systems Symposium, San Juan, PR, USA, 7–9 December 1994; pp. 216–227.

- Mankiw, N.G. Principles of Economics, 8th ed.; Cengage Learning: Boston, MA, USA, 2018; Available online: https://voltariano.files.wordpress.com/2020/03/mankiw_principles_of_economic.pdf (accessed on 12 December 2021).

- Bather, J.A. Mathematical Induction. 1994. Available online: https://sms.math.nus.edu.sg/smsmedley/Vol-16-1/Mathematical%20induction(John%20A%20Bather).pdf (accessed on 12 December 2021).

- Wang, J.; Chen, G.; You, J.; Sun, P. SEANet: Architecture and Technologies of an On-site, Elastic, Autonomous Network. J. Netw. New Media 2020, 9, 1–8.

- Man, D.; Wang, Y.; Wang, H.; Guo, J.; Lv, J.; Xuan, S.; Yang, W. Information-Centric Networking Cache Placement Method Based on Cache Node Status and Location. Wirel. Commun. Mob. Comput. 2021, 2021.

- Li, S.; Xu, J.; Van Der Schaar, M.; Li, W. Popularity-driven content caching. In Proceedings of the IEEE INFOCOM 2016—The 35th Annual IEEE International Conference on Computer Communications, San Francisco, CA, USA, 10–14 April 2016; pp. 1–9.

- Suksomboon, K.; Tarnoi, S.; Ji, Y.; Koibuchi, M.; Fukuda, K.; Abe, S.; Motonori, N.; Aoki, M.; Urushidani, S.; Yamada, S. PopCache: Cache more or less based on content popularity for information-centric networking. In Proceedings of the 38th Annual IEEE Conference on Local Computer Networks, Sydney, Australia, 21–24 October 2013; pp. 236–243.

- Wang, W.; Sun, Y.; Guo, Y.; Kaafar, D.; Jin, J.; Li, J.; Li, Z. CRCache: Exploiting the correlation between content popularity and network topology information for ICN caching. In Proceedings of the 2014 IEEE International Conference on Communications (ICC), Sydney, Australia, 10–14 June 2014; pp. 3191–3196.

- Patro, S.; Sahu, K.K. Normalization: A preprocessing stage. arXiv 2015, arXiv:1503.06462.

- Saino, L.; Psaras, I.; Pavlou, G. Icarus: A caching simulator for information centric networking (ICN). In Proceedings of the SIMUTools 2014: 7th International ICST Conference on Simulation Tools and Techniques, Lisbon, Portugal, 17–19 March 2014; Volume 7, pp. 66–75.

- Laoutaris, N.; Che, H.; Stavrakakis, I. The LCD interconnection of LRU caches and its analysis. Perform. Eval. 2006, 63, 609–634.

- Chai, W.K.; He, D.; Psaras, I.; Pavlou, G. Cache “less for more” in information-centric networks. In Proceedings of the International Conference on Research in Networking, Prague, Czech Republic, 21–25 May 2012; pp. 27–40.

- Psaras, I.; Chai, W.K.; Pavlou, G. Probabilistic in-network caching for information-centric networks. In Proceedings of the Second Edition of the ICN Workshop on Information-Centric Networking, Helsinki, Finland, 17 August 2012; pp. 55–60.

| Neighbor AP (BSSID) | Number of Moves from Source AP | Transition Probability |
|---|---|---|
| a8:82:38:3f:40:4B | 12,000 | 0.80 |
| a8:82:38:3f:40:4E | 2500 | 0.17 |
| a8:82:38:3f:40:4A | 500 | 0.03 |
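The probabilities in this table are consistent with normalizing each neighbor's move count by the total number of moves out of the source AP. This reading is inferred from the numbers rather than stated in the text; below, $s$ denotes the source AP, $n_i$ the number of moves from $s$ to neighbor $i$, and the subscripts 4B, 4E, and 4A abbreviate the last byte of each BSSID.

$$
P(s \to i) = \frac{n_i}{\sum_j n_j}, \qquad \sum_j n_j = 12000 + 2500 + 500 = 15000
$$

$$
P_{\text{4B}} = \frac{12000}{15000} = 0.80, \qquad P_{\text{4E}} = \frac{2500}{15000} \approx 0.17, \qquad P_{\text{4A}} = \frac{500}{15000} \approx 0.03
$$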

| Parameter | Value |
|---|---|
| Topologies | GARR, WIDE, and GEANT |
| Replacement policy | LRU |
| Number of contents | 3 × 10 |
| Number of warm-up requests | 10 |
| Total mobile user requests | 6 × 10 |
| Number of mobile users per second | 10/s |
| Cache size ratio | [0.004, 0.002, 0.01–0.05] |
| Skewness | [0.6, 0.7, 0.8, 0.9, 1.0, 1.1, 1.2] |
| Experiment run time for each scenario | 5 |
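The replacement-policy and skewness settings can be illustrated with a single-node Python sketch: requests are drawn from a Zipf distribution whose exponent plays the role of the skewness parameter, they are served by an LRU cache, and a warm-up phase is excluded from the hit ratio. Treating the skewness as a Zipf exponent, sizing the cache as a count of items, and simulating one cache instead of a whole topology are simplifying assumptions; the class and function names are hypothetical, and the sketch does not reproduce the paper's experiments.

```python
import random
from collections import OrderedDict
from typing import List


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used item."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._items = OrderedDict()  # keys ordered from least to most recently used

    def __contains__(self, key) -> bool:
        return key in self._items

    def request(self, key) -> bool:
        """Serve one request; return True on a hit. A miss inserts the item."""
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            return True
        self._items[key] = None
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used item
        return False


def zipf_pmf(n_contents: int, alpha: float) -> List[float]:
    """Request probability of the k-th most popular item, p_k proportional to k^(-alpha)."""
    weights = [k ** -alpha for k in range(1, n_contents + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def lru_hit_ratio(n_contents: int, alpha: float, capacity: int,
                  n_warmup: int, n_measured: int, seed: int = 0) -> float:
    """Hit ratio of a single LRU cache fed with Zipf(alpha) requests."""
    if n_measured <= 0:
        raise ValueError("n_measured must be positive")
    rng = random.Random(seed)
    pmf = zipf_pmf(n_contents, alpha)
    requests = rng.choices(range(n_contents), weights=pmf, k=n_warmup + n_measured)
    cache = LRUCache(capacity)
    for key in requests[:n_warmup]:  # warm-up: fills the cache, not counted
        cache.request(key)
    hits = sum(cache.request(key) for key in requests[n_warmup:])
    return hits / n_measured
```

For example, `lru_hit_ratio(1000, 0.8, capacity=50, n_warmup=10_000, n_measured=50_000)` estimates the hit ratio of a cache that holds 5% of a 1000-item catalog. Raising `alpha` concentrates requests on the most popular items, which raises the hit ratio for the same cache size.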


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, T.; Sun, P.; Han, R. An Active Path-Associated Cache Scheme for Mobile Scenes. Future Internet 2022, 14, 33. https://doi.org/10.3390/fi14020033
