Abstract

The pervasiveness of online social networks has reshaped the way people access information. Online social networks make it common for users to inform themselves online and share news among their peers, but they also favor the spreading of reliable and fake news alike. Because fake news may have a profound impact on society at large, realistically simulating its spreading process helps evaluate the most effective countermeasures to adopt. It is customary to model the spreading of fake news via the same epidemic models used for common diseases; however, these models often miss concepts and dynamics that are peculiar to fake news spreading. In this paper, we fill this gap by enriching typical epidemic models for fake news spreading with network topologies and dynamics that are typical of realistic social networks. Specifically, we introduce agents with the role of influencers and bots in the model and consider the effects of dynamical network access patterns, time-varying engagement, and different degrees of trust in the sources of circulating information. These factors contribute to making the simulations more realistic. Among other results, we show that influencers that share fake news help the spreading process reach nodes that would otherwise remain unaffected. Moreover, we show that bots dramatically speed up the spreading process and that time-varying engagement and network access change the effectiveness of fake news spreading.

1. Introduction

The pervasiveness of online social networks (OSNs) has given people unprecedented power to share information and reach a huge number of peers, both in a short amount of time and at no cost. This has shaped the way people interact and access information: it has become more common to access news on social media than via traditional sources [1]. A drawback of this trend is that OSNs are very fertile grounds to spread false information [2]. To avoid being gulled, users therefore need to continuously check facts, and possibly establish reliable news access channels.

Countering unverified information has become an important topic of discussion in our society. In this hugely interconnected world, we constantly witness attempts at targeting and persuading users for both commercial [3] and political purposes [4]. A single piece of false or misinterpreted information can not only severely damage the reputation of an individual, but also influence public opinion and affect the lives of millions of people [4]. In particular, social networking is a fundamental interaction channel for people, communities, and interest groups. Not only does social networking offer the chance to exchange opinions, information, discussions, and multimedia content: it is also an invaluable source of data [3]. Unhealthy online social behaviors can damage this source by turning OSNs into echo chambers of toxic content, and may reduce the trust of users in social media by raising concerns about privacy breaches [5,6].

The very structure of OSNs, i.e., the underlying topology induced by the connections among the users, is key to amplifying the impact of fake news. The presence of “influencers” on social networks, such as celebrities or public figures with an uncommonly high number of followers, drastically speeds up information spreading [7]. In this context, another issue is the presence of bots, i.e., autonomous agents that aim to flood communities with malicious messages, in order to manipulate consensus or to influence user behavior [8].

Epidemic models [9] are viable and common tools to study the spreading of fake news on social networks. They were first introduced to model the spreading of diseases among interacting agents [10]. In these models, people are considered as the nodes of a social network graph, whose edges convey the social relationship between the corresponding nodes. We consider a variant of the classical compartmental susceptible, infected, recovered (SIR) model: the susceptible, believer, fact-checker (SBFC) model [9]. Here, a node is initially susceptible to being gulled by a fake news item; it may then become a believer (i.e., it believes in the fake news item) when it comes into contact with another believer; it may also become a fact-checker (i.e., it believes that the news item is fake) if it verifies the news item, or if it comes into contact with another fact-checker node.

The key question driving our work is the following: Can we perform an agent-based simulation of an online social network that reproduces key aspects of fake news injection and spreading and at the same time is realistic enough to enable a reliable analysis of multiple OSN scenarios? In fact, the spreading of fake news has several peculiar characteristics that differentiate it from the spreading of common diseases. These aspects include the different connectedness of agents in an OSN (e.g., bots, influencers, and common agents), the presence of fact-checkers, the spreading of debunking information that reduces the engagement of fake news, and the time-varying aspects of OSN user activity. Such peculiarities need to be taken into account in order to accurately characterize fake news spreading.

To answer the above research question, in this study we build on the SBFC model and introduce several realistic extensions. In particular, we address the fundamental role of influencers and bots in the spreading of fake news online: this helps understand how fake news spreads over a social network and provides insight into how to limit its impact. Moreover, we consider the effects of dynamical network access patterns, time-varying engagement, and different degrees of trust in the sources of circulating information. These factors contribute to making agent-based simulations more realistic, with the prospect that such simulations become a key tool to study the most effective fake news spreading strategies, and thus inform the most appropriate corrective actions.

In more detail, the contributions of this paper are the following:

- We propose an agent-based simulation model, where different agents act independently to share news over a social network. We consider two competing spreading processes, one for the fake news and a second one for debunking;

- We differentiate among agents’ roles and characteristics, by distinguishing among common users, influencers, and bots. Every agent is characterized by its own set of parameters, chosen according to statistical distributions in order to create heterogeneity;

- We propose a network construction algorithm that favors the emergence both of geographical user clusters and of clusters of users with common interests; this improves the degree of realism of the final simulated social network connections; moreover, we account for the presence of influencers and bots and for unequal trust among agents;

- We propose ways to add time dynamical effects in fake news epidemic models, by modeling the connection time of each agent to the social network and the decay of the population’s interest in a news item over time.

The remainder of this paper is organized as follows. In Section 2, we survey related work. In Section 3, we introduce the model of the agents and the dynamics of our epidemic model, as well as our network construction algorithm. In Section 4, we present the results of our simulations. In Section 5, we discuss our findings and contextualize them in light of the current literature, before drawing concluding remarks, discussing the limitations of our work, and presenting future directions in Section 6.

2. Related Work

Detecting fake news and bots on social networks and limiting their impact are active research fields. Numerous approaches tackle this issue by applying techniques from fields as different as network science, data science, machine learning, evolutionary algorithms, or simulation and performance evaluation.

For example, the comprehensive survey in [3] showed that, among other uses, data science can be exploited to identify and prevent fake news in digital marketing, thus ruling out false content about companies and products and helping customers avoid predatory commercial campaigns. Given the large impact that data and genuine user behavior have on many processes, including data-driven innovation [5], preserving a healthy online interaction environment is extremely important. Among other consequences, this may reduce concerns about the use of hyper-connected technologies, increasing trust and enabling the widespread deployment of high value-added services, as recently seen in contact tracing applications for pandemic tracking and prevention [6]. This is especially important because not only did fake news contribute to spreading widespread uncertainty and confusion about COVID-19, but it was also shown [11] that the majority of authoritative news regarding scientific studies on COVID-19 tends not to reach and influence people. This signals a lack of correct communication from health authorities and politicians, and a tendency to spread misleading content that can only be fought through an increased presence of health authorities in social channels.

In the following, we survey key contributions to fake news and bot detection (Section 2.1), as well as modeling fake news spreading as an epidemic (Section 2.2). As a systematic literature review falls outside the scope of this paper, we put special focus on those contributions that tackle fake news spreading from a simulation point of view, which forms the main foundation of our work. We refer the reader to the surveys in [12,13,14] and the references therein, for an extensive survey of the research in the field of fake news, as well as of general research directions.

2.1. Fake News and Bot Detection

In recent years, there has been a growing interest in analyzing and classifying online news, as well as in telling apart human-edited content from content produced by bots.

Machine learning techniques have been heavily applied to the problem of classifying news pieces as fake or genuine. In particular, a recent trend is to exploit deep learning approaches [15,16,17,18]. A breakthrough in fake news detection comes from geometric deep learning techniques [19,20], which exploit heterogeneous data (i.e., content, user profile and activity, social graph) to classify online news.

In the same vein, the work in [21] employed supervised learning techniques to detect fake news at an early stage, when no information exists about the spreading of the news on social media. Thus, the approach relies on the lexicon, syntax, semantics, and argument organization of a news item. This aspect is particularly important: As noted in [2], acting on sentiment and feelings is key to pushing a group of news consumers to also produce and share news pieces. As feelings appear fast and lead to quick action, the spreading of fake news develops almost immediately after a fake news item is sown into a community. The process then tends to escape traditional fact-based narratives from authoritative sources.

A common way to extract features from textual content is to perform sentiment analysis, a task that has been made popular by the growing availability of textual data from Twitter posts [22,23,24]. Sentiment analysis is particularly useful in the context of fake news classification, since real news is associated with different sentiments than fake news [25]. For a more in-depth comparison among different content-based supervised machine learning models to classify fake news from Twitter, we refer the interested reader to [26].

When analyzing fake news spreading processes, it must be taken into account that OSNs host both real human users and software-controlled agents. The latter, better known as bots, are programmed to send automatic messages so as to influence the behavior of real users and polarize communities [27]. Machine learning techniques also find significant applications in this field. Machine learning has been used to classify whether a piece of content was created by a human or a bot, or to detect bots using clustering approaches [27,28,29,30,31,32].

Other works propose genetic algorithms to study the problem of bot detection: In [9], the authors designed an evolutionary algorithm that makes bots able to evade detection by mimicking human behavior. The authors proposed an analysis of such evolved bots to understand their characteristics, and thus make bot detectors more robust.

Recently, some services of practical utility have been deployed. For example, in [33], the authors presented BotOrNot, a tool that evaluates to what extent a Twitter account exhibits similarities to known characteristics of social media bots. For a more in-depth analysis of bot detection approaches and studies about the impact of bots in social networks, we refer the interested reader to [8,34,35] and the references therein.

2.2. Epidemic Modeling

Historically, the spreading of misinformation has been modeled using the same epidemic models employed for common viruses [10].

In [36], the authors proposed a fake news spreading model based on differential equations and studied the parameters of the model that keep a fake news item circulating in a social network after its onset. Separately, the authors suggested which parameters would make the spreading die out over time. In [37], the authors modeled the engagement onset and decrease for fake news items on Twitter as two subsequent cascade events affecting a Poisson point process. After training the parameters of the model through fake news spreading data from Twitter, the authors showed that the model predicts the evolution of fake news engagement better than linear regression, or than models based on reinforced Poisson processes.

In [9], the authors simulated the spreading of a hoax and its debunking at the same time. They built upon a model for the competitive spreading of two rumors, in order to describe the competition between believers and fact-checkers. Users become fact-checkers through the spreading process, if they already know that the news is not true, or if they decide to verify the news by themselves. The authors also took forgetfulness into account by making a user lose interest in the fake news item with a given probability. They studied the existence of thresholds for the fact-checking probability that guarantee the complete removal of the fake news from the network and proved that such a threshold does not depend on the spreading rate, but only on the gullibility and forgetting probability of the users. The same authors extended their previous study by assessing the role of network segregation in misinformation spreading [38] and by comparing different fact-checking strategies on different network topologies to limit the spreading of fake news [39].

In [40], the authors proposed a mixed-method study: they captured the personality of users on a social network through a questionnaire and then modeled the agents in their simulations according to the questionnaire, in order to understand how the different personalities of the users affect the epidemic spreading. In [41], the authors studied the influence of online bots on a network through simulations, in an opinion dynamics setting. In [42], the authors focused on the clustering of opinions in networks and observed the emergence of echo chambers that amplify the influence of a seeded opinion, using a simplified agent interaction model that does not include time-dynamical settings. In [43], the authors proposed an agent-based model of two separate but interacting spreading processes: one for the physical disease and a second one for low-quality information about the disease. The spreading of false information was shown to worsen the spreading of the disease itself. In [44], the authors studied how the presence of heterogeneous agents affects the competitive spreading of low- and high-quality information. They also proposed methods to mitigate the spreading of false information without affecting a system’s information diversity.

In Table 1, we summarize the contributions in the literature that are most related to our approach based on the realistic agent-based simulation of fake news and debunking spreading over OSNs. The references appear in order of citation within the manuscript. We observe that while several contributions exist, most of them consider analytical models of the spreading process or simplified agent interactions that may not convey the behavior of a true OSN user. This prompted us to propose an improved simulation model including different types of agents, time dynamical events affecting the agent interactions, and non-uniform node trust. Moreover, some of the works in Table 1 focus just on the spreading of the fake news itself, and neglect debunking. Instead, we explicitly model both competing processes.

Table 1.

Summary of the contributions and missing aspects of the works in the literature that are most related to our agent-based fake news spreading simulation approach.

3. Model

We now characterize the agents participating in the simulations (i.e., the users of the social network) by discussing their roles, parameters, and dynamics. We also introduce the problem of constructing a synthetic network that resembles a real OSN and state our assumptions about the time dynamics involved in our fake news epidemic model.

3.1. Agent Modeling

In this study, we consider three types of agents: (i) commons, (ii) influencers, and (iii) bots. The set of commons contains all “average” users of the social network. When we collect statistics to characterize the impact of the fake news epidemic, our target population is the set of common nodes.

Observing real OSNs and their node degree distributions reveals that some nodes exhibit an anomalously high out-degree: these nodes are commonly called influencers [45]. When an influencer shares content, that content can usually reach a large fraction of the population. Bots [46] also play an important role in OSNs. Bots are automated accounts with a specific role. In our case, they help the conspirator who created the fake news item in two ways: by echoing the fake news during the initial phase of the spreading and by keeping the spreading process alive. Being automated accounts, bots never change their mind.

Agents of type common and influencer can enter any of the possible states: susceptible, believer, and fact-checker. However, we further make our simulation more realistic by considering the presence of special influencers called eternal fact-checkers [39]. These influencers constantly participate in the debunking of any fake news item.

Unlike other simulation-based approaches, we model the attributes of each agent via Gaussian distributions having different means and standard deviations. We use Gaussian distributions to model the fact that the population follows an average behavior with high probability, whereas extremely polarized behaviors (e.g., very high or very low vulnerability to fake news) occur with low probability. The values drawn from the distributions are then clipped between 0 and 1, except for the interest attributes, which are clipped to a different interval whose upper bound is also 1 (see Table 2 for the notation and the exact bounds). This simplifies the incorporation of the parameters in the computation of probabilities (e.g., to spread a fake news item or debunking information). As a result, attribute values differ across OSN agents. We provide a summary of the parameters and of their statistical distributions in Table 2. We remark that agents of type bot remain in the believer state throughout all simulations, have a fixed sharing rate equal to 1 (meaning they keep sharing fake news at every network access), and have no other attributes.
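The clipped-Gaussian attribute sampling described above can be sketched as follows. This is a minimal illustration under stated assumptions, not code from the authors' simulator: the (mean, standard deviation) pairs and the lower clipping bound of the interest attributes are hypothetical placeholders (the actual values are listed in Table 2), and `clipped_gauss`, `Agent`, and `sample_agent` are illustrative names.

```python
import random
from dataclasses import dataclass, field

# Hypothetical (mean, std) pairs: placeholders, NOT the values of Table 2.
HYPOTHETICAL_PARAMS = {
    "vulnerability": (0.5, 0.2),
    "sharing_rate": (0.6, 0.2),
    "recovery_rate": (0.2, 0.1),
    "interest": (0.0, 0.4),
}
INTEREST_LO = -1.0  # hypothetical lower clipping bound for interest attributes
N_INTERESTS = 5     # five interest attributes per node, as in the text


def clipped_gauss(rng: random.Random, mu: float, sigma: float,
                  lo: float = 0.0, hi: float = 1.0) -> float:
    """Draw one sample from N(mu, sigma) and clip it to [lo, hi]."""
    return min(hi, max(lo, rng.gauss(mu, sigma)))


@dataclass
class Agent:
    vulnerability: float
    sharing_rate: float
    recovery_rate: float
    interests: list = field(default_factory=list)


def sample_agent(rng: random.Random) -> Agent:
    """Create a common or influencer agent with heterogeneous attributes."""
    p = HYPOTHETICAL_PARAMS
    return Agent(
        vulnerability=clipped_gauss(rng, *p["vulnerability"]),
        sharing_rate=clipped_gauss(rng, *p["sharing_rate"]),
        recovery_rate=clipped_gauss(rng, *p["recovery_rate"]),
        interests=[clipped_gauss(rng, *p["interest"], lo=INTEREST_LO)
                   for _ in range(N_INTERESTS)],
    )


rng = random.Random(42)
agents = [sample_agent(rng) for _ in range(2000)]
```

Note that clipping, as stated in the text, differs from resampling-based truncation: clipped values accumulate exactly at the bounds, so a small fraction of agents ends up with, e.g., vulnerability exactly 0 or 1.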

Table 2.

Attributes of common and influencer nodes.

3.2. Network Construction

We model our social network as a graph. Every node of the graph represents an agent, and edges convey a relationship between their two endpoints. A key problem in this scenario is to generate a synthetic network that resembles real OSNs like Facebook or Twitter. Generating graphs with given properties is a well-known problem: network models are fundamental tools for studying complex networks [47,48,49]. However, these mathematical models are solely driven by the topology of a network and do not take into account features and similarities among nodes, which instead have a key role in shaping the connections in an OSN. In this work, we build our network model via an approach similar to [50]. We generate our networks according to the following properties:

- Geographical proximity: A user is likely to be connected with another user if they live nearby, e.g., in the same city. The coordinates of a node are assigned by sampling from a uniform distribution over a square region. This procedure follows the random geometric graph formalism [51] and ensures that geographical clusters appear. We evaluate the distance between two nodes using the Euclidean distance formula, normalized such that the maximum possible distance is 1. In our simulations, we created an edge between two nodes if their geographical distance was less than a fixed threshold.

- Attributes’ proximity: Each node has a set of five “interest” attributes, distributed according to a truncated Gaussian distribution; we employ these attributes to model connections between agents based on their interests. This helps create connections and clusters in the attribute domain, rather than limiting connections to geographical proximity criteria. The distance between two sets of interest attributes is evaluated using the Euclidean distance formula, normalized such that the maximum possible distance is 1. In our simulations, an edge between two nodes is created if their distance is less than a fixed threshold.

- Randomness: To introduce some randomness in the network generation process, an edge satisfying the above geographical and attribute proximity criteria is removed from the graph with a given probability.

The above thresholds reproduce the higher likelihood of connections among OSN agents that are geographically close or have similar interests.
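A minimal sketch of these edge rules for common nodes is shown below. It assumes node positions in the unit square (so the geographical distance is normalized by √2), interest vectors in a hypothetical range [-1, 1], and that an edge is created when either proximity criterion holds, which is our reading of the text since both geographical and interest-based clusters emerge. Thresholds and the removal probability are free parameters here, not the paper's values, and the function names are illustrative. Edges are stored as ordered pairs (u, v), meaning that content posted by u reaches v.

```python
import math
import random


def normalized_distance(a, b, max_dist: float) -> float:
    """Euclidean distance between two points, scaled so the maximum is 1."""
    return math.dist(a, b) / max_dist


def build_common_network(positions, interests, geo_thr, int_thr, p_remove,
                         interest_range=(-1.0, 1.0), rng=None):
    """Return directed edges (u, v) among common nodes.

    An edge is created when two nodes are geographically close OR have
    similar interests, and is then dropped with probability p_remove.
    """
    if rng is None:
        rng = random.Random()
    geo_max = math.sqrt(2.0)  # diagonal of the unit square
    lo, hi = interest_range
    int_max = math.sqrt(len(interests[0])) * (hi - lo)  # largest interest distance
    edges = set()
    n = len(positions)
    for i in range(n):
        for j in range(i + 1, n):
            close = normalized_distance(positions[i], positions[j], geo_max) < geo_thr
            similar = normalized_distance(interests[i], interests[j], int_max) < int_thr
            if (close or similar) and rng.random() >= p_remove:
                # Symmetric relationship between common users.
                edges.add((i, j))
                edges.add((j, i))
    return edges
```

The double loop is quadratic in the number of nodes, which remains manageable for networks of a few thousand nodes; a spatial index would be preferable for much larger graphs.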

In addition to connectivity properties, every node has three parameters that affect the behavior of the corresponding user in the simulation: the vulnerability (defined as the tendency to follow opinions: if higher, it makes it easier for a user to change its state when receiving fake news or its debunking); the sharing rate (defined as the probability to share a fake news item or a debunking piece); and the recovery rate (defined as the probability to do fact-checking: if higher, it makes the node more inclined to verify a fake news item before re-sharing it). We assigned these parameters at random to each node, according to a truncated Gaussian distribution with the parameters reported in Table 2. The distributions were truncated between 0 and 1.

We build the network starting from common nodes and creating edges according to the above rules. After this process, we enrich the network with influencers, which exhibit a much higher ratio of out-connections to in-connections than common nodes. To model this, when creating an out-edge from an influencer we use geographical and attribute proximity thresholds twice as high as those of common agents, whereas when creating an in-edge we use thresholds halved with respect to those of common agents. As a result, influencers have many more out-edges than in-edges.
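The asymmetric thresholds for influencers can be sketched as below. The doubling and halving factors come from the text; applying either proximity criterion in each direction, the edge convention (u, v) meaning "content posted by u reaches v", and the function name `influencer_edges` are assumptions of this sketch.

```python
import math


def influencer_edges(inf_id, inf_pos, inf_int, positions, interests,
                     geo_thr, int_thr, geo_max, int_max):
    """Directed edges between one influencer and the existing common nodes.

    (u, v) means that content posted by u reaches v. Out-edges use thresholds
    doubled with respect to common agents, in-edges use halved thresholds.
    """
    edges = set()
    for j, (pos, ints) in enumerate(zip(positions, interests)):
        d_geo = math.dist(inf_pos, pos) / geo_max
        d_int = math.dist(inf_int, ints) / int_max
        if d_geo < 2 * geo_thr or d_int < 2 * int_thr:
            edges.add((inf_id, j))   # influencer -> follower
        if d_geo < geo_thr / 2 or d_int < int_thr / 2:
            edges.add((j, inf_id))   # common node -> influencer
    return edges
```

Because the out-edge region is four times wider (in each distance) than the in-edge region, an influencer ends up with many more followers than followees, reproducing the degree asymmetry described in the text.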

Finally, we deploy bot nodes. Unlike other agents, bots do not form edges based on geographical or attribute proximity. Rather, we assume that bots have a target population coverage rate, and randomly connect bots to other nodes in order to attain this coverage. For example, for a realistic target coverage rate of 2%, we connect bots to other nodes with a correspondingly chosen probability.
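A sketch of bot deployment follows, assuming that each bot links to each target node independently with probability equal to the target coverage rate, and that bot edges point outward only (bot to target). Both choices, as well as the function name `connect_bots`, are our assumptions; the text does not specify the exact probability or edge direction.

```python
import random


def connect_bots(bot_ids, target_ids, coverage, rng):
    """Link each bot to each target node independently with probability `coverage`.

    Edges are directed bot -> target, i.e., the bot's posts reach the target.
    """
    edges = set()
    for b in bot_ids:
        for t in target_ids:
            if rng.random() < coverage:
                edges.add((b, t))
    return edges


rng = random.Random(3)
bot_edges = connect_bots(["bot0", "bot1"], range(2000), 0.02, rng)
```

Under these assumptions, with a 2% coverage and 2000 common nodes, each bot reaches about 40 nodes on average, regardless of where those nodes are located.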

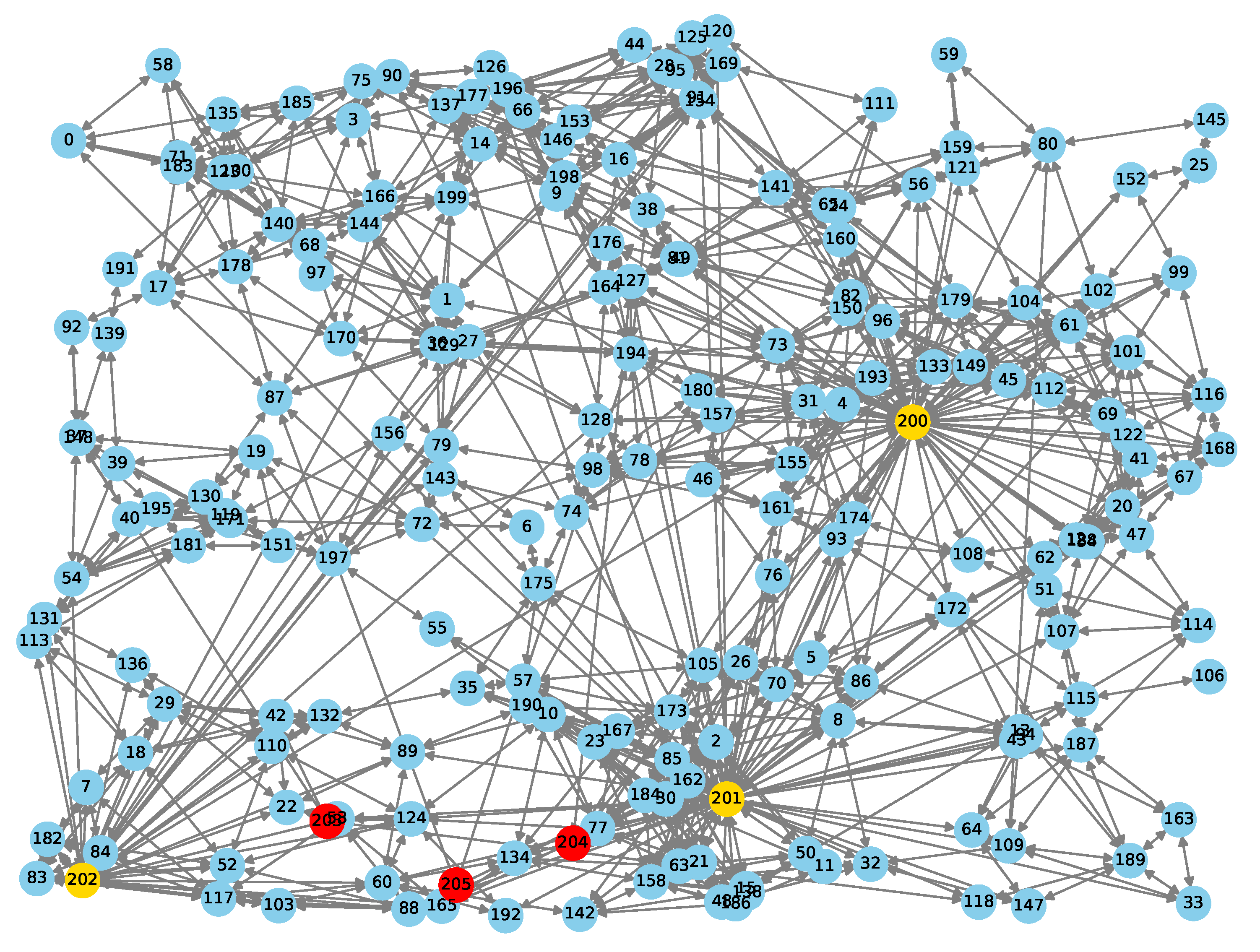

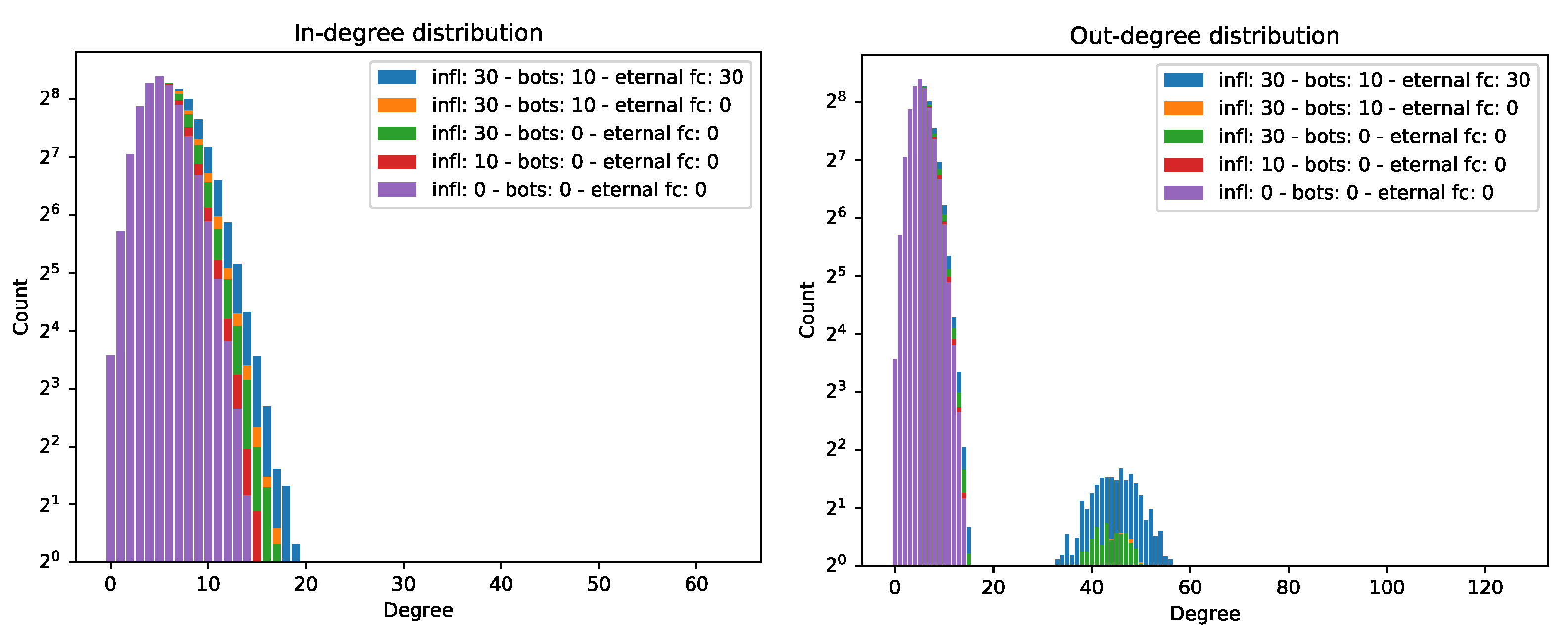

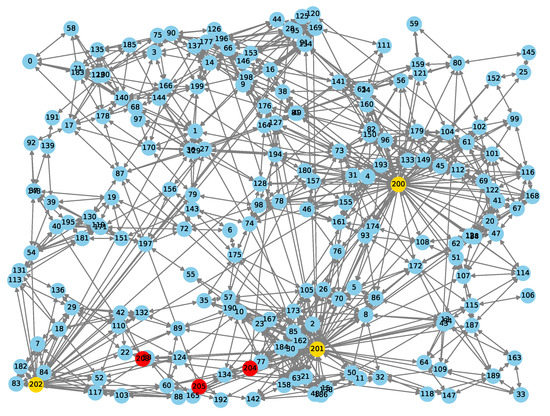

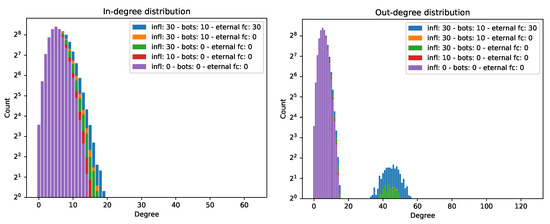

Figure 1 shows a sample network generated through our algorithm, including common nodes (blue), influencers (yellow), and bots (red). Our network generation procedure yields realistic degree distributions, and therefore a realistic web of social connections. Figure 2 shows the in-degree and out-degree distributions of the nodes, averaged over 50 networks having different configurations. In each network, we deploy 2000 common nodes. The figure legends report the numbers of additional influencers, bots, and eternal fact-checkers.

Figure 1.

Example of a synthetic network generated through our algorithm. We depict common agents in blue, influencers in yellow, and bots in red. We observe the appearance both of geographical clusters involving nearby nodes and of interest-based clusters, joining groups of nodes located far from one another.

Figure 2.

In-degree (left) and out-degree (right) distributions for the networks considered in the experiments. The presence of bots and influencers with a large out-degree yields the second out-degree peak between degree values of 30 and 60.

Adding agents to the networks causes slight shifts of the in-degree distributions to the right and the appearance of a few nodes with a high in-degree. In the out-degree distributions, instead, larger shifts to the right appear, in particular when adding agents with many followers, such as influencers and eternal fact-checkers. The larger the number of such nodes, the higher the second peak between out-degree values of 35 and 60. Hence, the most connected nodes can directly reach up to 3% of the entire population (about 60 out of 2000 common nodes).

3.3. Trust among Agents

A particular trait of human behavior that arises in the spreading of fake news is that people do not trust one another in the same way; for instance, social network accounts belonging to bots tend to replicate and insistently spread content in a way that is uncommon for typical social network users. As a result, common people tend to place less trust in accounts that are known to be bots.

To model this fact, we assigned a weight to every edge [52]: this weight represents how much a node trusts another. Given a pair of connected nodes, we computed the weight as the arithmetic mean of the geographical distance and of the attribute distance between the two nodes. The resulting network graph thus becomes weighted and directed (we assumed symmetry for common users, but not for influencers). The weight of an edge influences the probability of infection of a node: if a node sends news to another node, but the weight of the linking edge is low (meaning that the recipient puts little trust in the message sender), the probability that the news will change the state of the recipient correspondingly decreases. When considering weighted networks, we set the weights of the edges having a bot on one end to a fixed low value. This allows us to evaluate the effects of placing a low (albeit non-zero) trust on bot-like OSN accounts.
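The weighting rule can be sketched as follows. The weight follows the text literally (the arithmetic mean of the two normalized distances), while the fixed bot weight `BOT_WEIGHT` and the multiplicative way in which the weight scales the success probability of a message are hypothetical choices for illustration; the function names are also illustrative.

```python
BOT_WEIGHT = 0.1  # hypothetical low, non-zero trust placed in bot accounts


def edge_weight(d_geo: float, d_int: float, involves_bot: bool = False) -> float:
    """Trust weight of an edge: mean of the normalized geographical and
    interest distances (as described in the text), or a fixed low value
    if a bot is on either end."""
    if involves_bot:
        return BOT_WEIGHT
    return (d_geo + d_int) / 2.0


def weighted_success_prob(base_prob: float, weight: float) -> float:
    """Hypothetical rule: the trust weight scales the probability that a
    received message changes the recipient's state."""
    return base_prob * weight
```

With a multiplicative rule, a low bot weight directly reduces the chance that a single bot message converts a recipient, while bots can still have an effect through repeated exposure.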

3.4. Time Dynamics

We considered two time dynamical aspects in our simulation: the connection of an agent to the network and the engagement of an agent.

Several simulation models assume that agents connect to the network simultaneously at fixed time intervals or “slots”. While this may be a reasonable model as a first approximation, it does not convey realistic OSN interaction patterns.

Conversely, in our model, we let each agent decide independently when to connect to the network. Therefore, agents are not always available to spread content and do not change state instantly upon the transmission of fake news or debunking messages. We modeled agent inter-access times as exponentially distributed, with an average value that depends on the agent. This makes it possible to differentiate between common users and bots. In particular, we set the average time between subsequent OSN accesses for bots to be four times that of common users, whose access rate is expressed in minutes$^{-1}$. Conversely, in the baseline case without time dynamics, all nodes access the network at the same fixed rate of once every 16 minutes.
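Asynchronous access can be illustrated by drawing exponential inter-access times for each agent, which makes each agent's access instants a Poisson process. The 16-minute mean used in the usage example is borrowed from the baseline rate purely for illustration; the function names are hypothetical, and a bot-specific mean would be passed in the same way.

```python
import random


def access_times(mean_interval: float, horizon: float, rng: random.Random):
    """Access instants in [0, horizon) with i.i.d. exponential inter-access
    times of the given mean (a Poisson process of rate 1 / mean_interval)."""
    times = []
    t = rng.expovariate(1.0 / mean_interval)
    while t < horizon:
        times.append(t)
        t += rng.expovariate(1.0 / mean_interval)
    return times


def baseline_access_times(interval: int, horizon: int):
    """Baseline: every agent connects synchronously at a fixed interval."""
    return list(range(interval, horizon, interval))
```

For instance, `access_times(16.0, 24 * 60, random.Random(7))` yields about 90 randomly spaced access instants over a simulated day, whereas `baseline_access_times(16, 24 * 60)` returns the 89 synchronized instants 16, 32, ..., 1424 shared by all agents.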

When accessing the OSN, we assume that every agent reads its own feed, or “wall”. In this feed, the agent finds the news received while it was offline. The feed may contain messages that induce a state change in the agent (e.g., from susceptible to believer, or from believer to fact-checker). We also assumed that the first node was infected at time $t = 0$.

To model the typical loss of interest in a news item over time, as well as the different spreading effectiveness (or “virality”) of different types of news, we introduced an engagement coefficient $E(t)$, whose variation over time obeys the differential equation

$$\frac{dE(t)}{dt} = -\alpha \, E(t), \tag{2}$$

where $\alpha > 0$ is the decay constant. The solution to (2) is

$$E(t) = E_0 \, e^{-\alpha t}, \tag{3}$$

which models an exponential interest decay: the engagement at time $t = 0$, $E(0) = E_0$, controls the initial virality of the news, and the constant engagement decay factor $\alpha$ controls how fast the population loses interest in a news item. The factor $E_0$ allows us to model the different levels of initial engagement expected for different types of messages. For example, in our simulations, we set $E_0$ for debunking messages to one tenth of the value used for fake news. This gives debunking messages a 10-fold lower probability to change the state of a user from believer or susceptible to fact-checker, with respect to the probability of transitioning from susceptible to believer.

In our setting, this means that a user believing in a fake news item will likely be attracted by similar pieces confirming the fake news, rather than by debunking, a behavior known as confirmation bias [53]. In the same way, a susceptible agent will tend to pay more attention to fake news than to common news items. This models the fact that the majority of news regarding scientific studies tends not to reach and influence people [11].

In addition, we set $\alpha$ as a function of the simulation time $T$, so that at the end of the simulation fake news still induces some small, non-zero engagement. By setting this value, we can simulate the lifetime of the fake news, from its maximum engagement power to the lowest one. For a given time $t$, $E(t)$ is a multiplicative factor that affects the probability of successfully infecting an individual at time $t$.
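The exponential engagement decay can be checked numerically, assuming the decay law dE/dt = -alpha * E with E(0) = E0. In this sketch, the rule that fixes alpha from the simulation time T (leaving a residual engagement of 1% at t = T) is a hypothetical choice, as are the initial values 1.0 and 0.1; only their 10:1 ratio comes from the text.

```python
import math


def engagement(t: float, e0: float, alpha: float) -> float:
    """E(t) = e0 * exp(-alpha * t), the solution of dE/dt = -alpha * E."""
    return e0 * math.exp(-alpha * t)


def decay_constant(sim_time: float, residual: float = 0.01) -> float:
    """Hypothetical rule: choose alpha so that E(sim_time) = residual * E(0)."""
    return -math.log(residual) / sim_time


T = 1000.0
alpha = decay_constant(T)
E0_FAKE, E0_DEBUNK = 1.0, 0.1  # hypothetical values with the 10:1 ratio from the text
```

With these numbers, fake news retains 10% of its initial engagement halfway through the simulation and 1% at its end, and the 10:1 ratio between fake news and debunking engagement is preserved at every instant because both share the same decay constant.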

3.5. Agent-Based Spreading Simulation

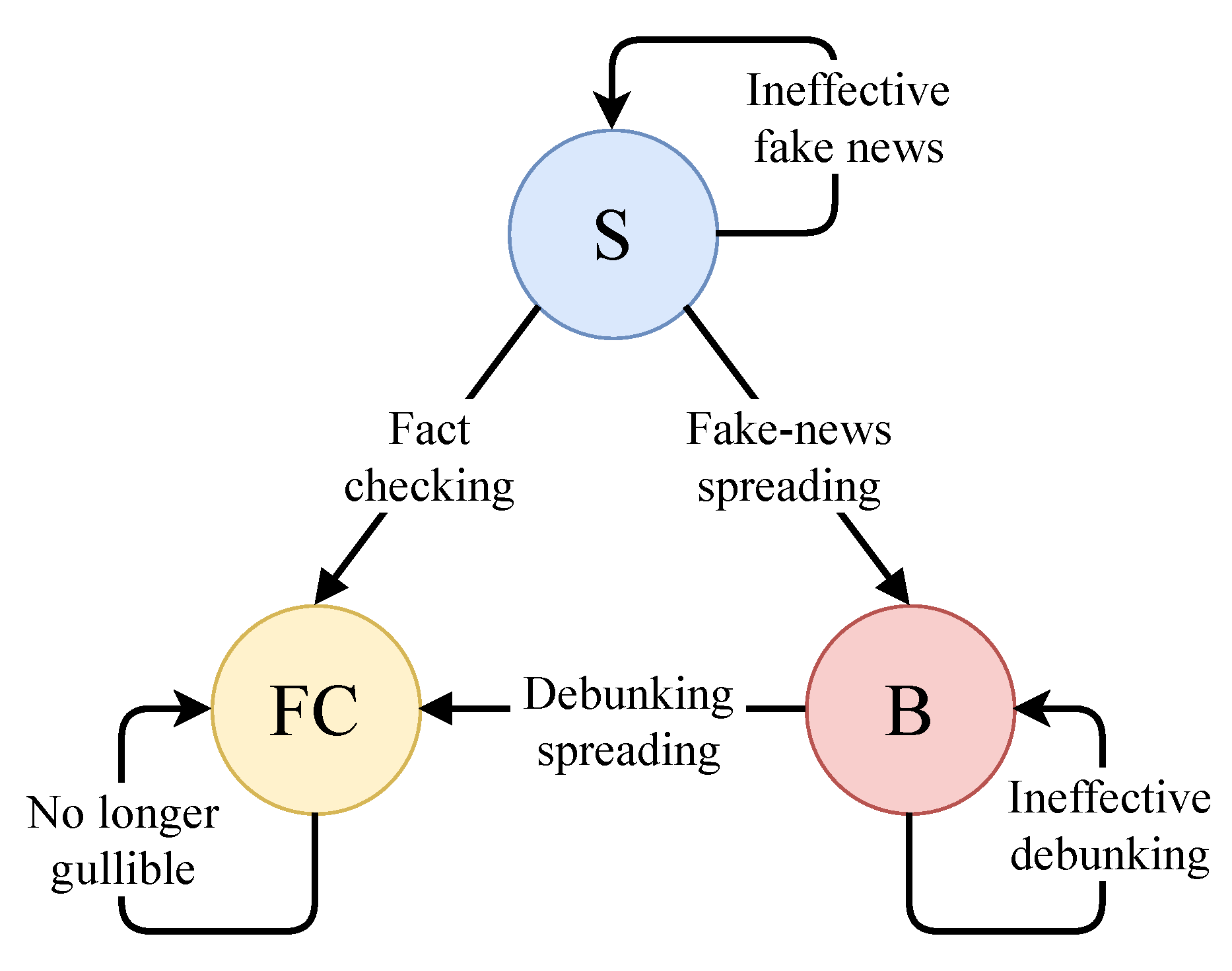

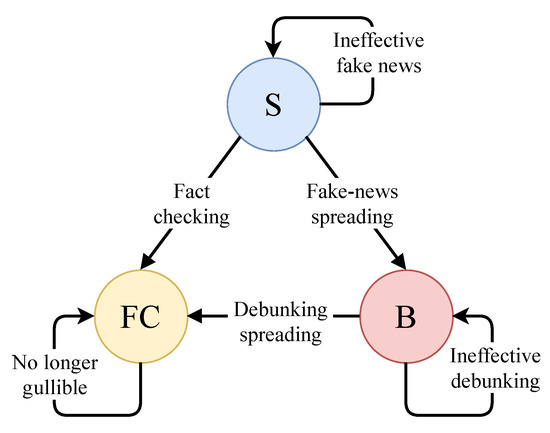

With reference to the SBFC model in Figure 3, two parallel spreading processes take place in our simulations:

Figure 3.

Fake news epidemic model. S, susceptible; FC, fact-checker; B, believer.

- Fake news spreading process: a node creates a fake news item and shares it over the network for the first time. The fake news starts to spread within a population of susceptible (S) agents. When a susceptible node gets “infected” in this process, it becomes a believer (B);

- Debunking process: a node does fact-checking. It becomes “immune” to the fake news item and rather starts spreading debunking messages within the population. When a node gets “infected” in this process, it becomes a fact-checker (FC).
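The state space and the transitions named in the text (S to B, S to FC, B to FC) can be encoded as a small transition table. Treating FC as absorbing reflects the description of fact-checkers as “immune”; this, together with the names `State`, `ALLOWED`, and `transition`, is an assumption of this sketch.

```python
from enum import Enum


class State(Enum):
    S = "susceptible"
    B = "believer"
    FC = "fact-checker"


# Transitions named in the text; FC is treated as absorbing (assumption).
ALLOWED = {
    State.S: {State.B, State.FC},
    State.B: {State.FC},
    State.FC: set(),
}


def transition(current: State, target: State, is_bot: bool = False) -> State:
    """Return the new state, ignoring transitions the model does not allow."""
    if is_bot:
        return State.B  # bots remain believers throughout the simulation
    return target if target in ALLOWED[current] else current
```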

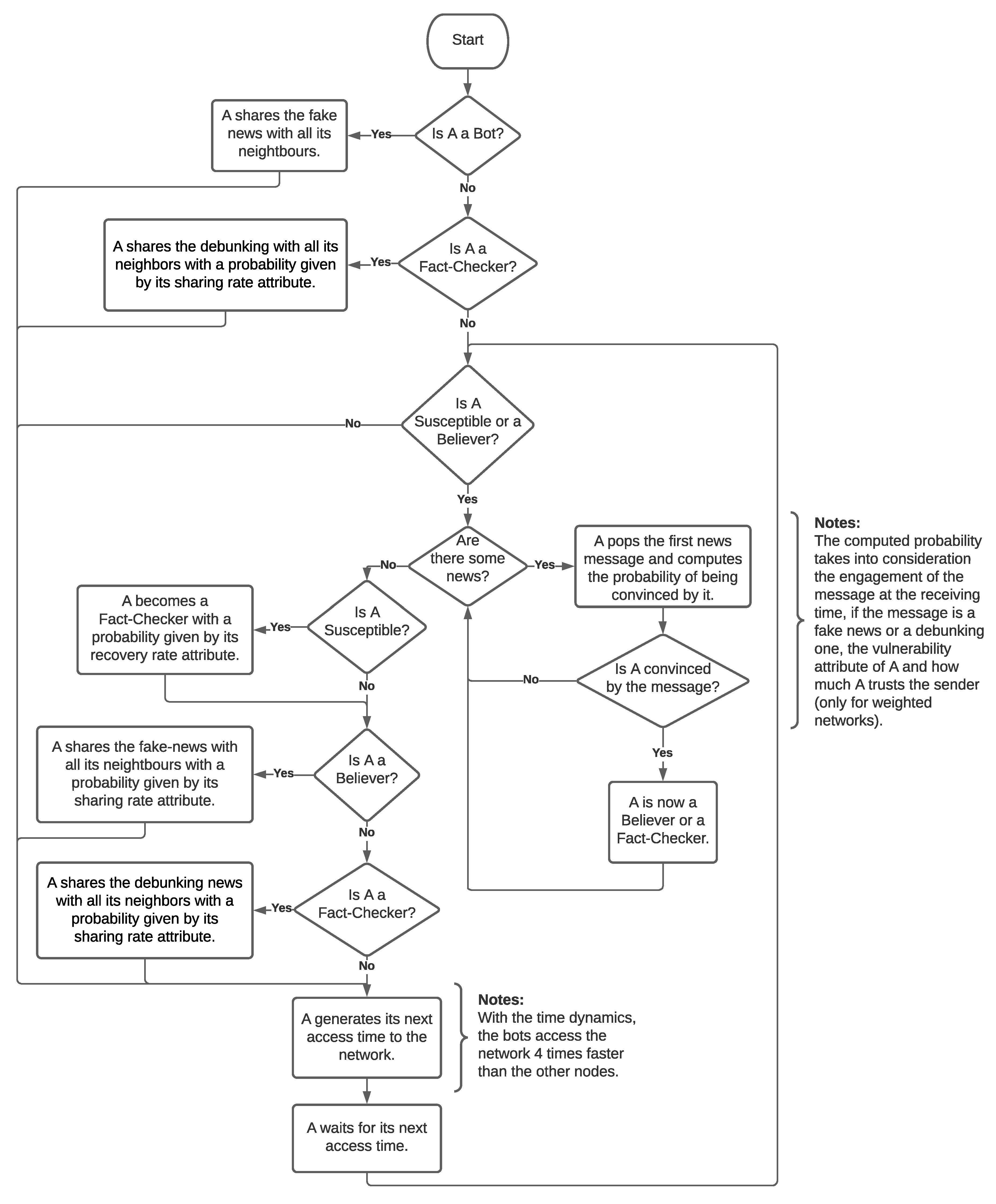

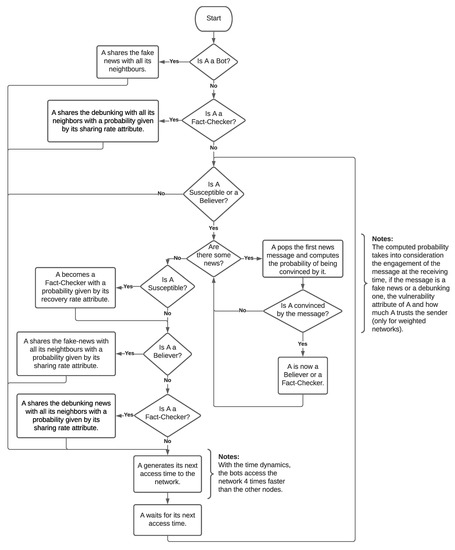

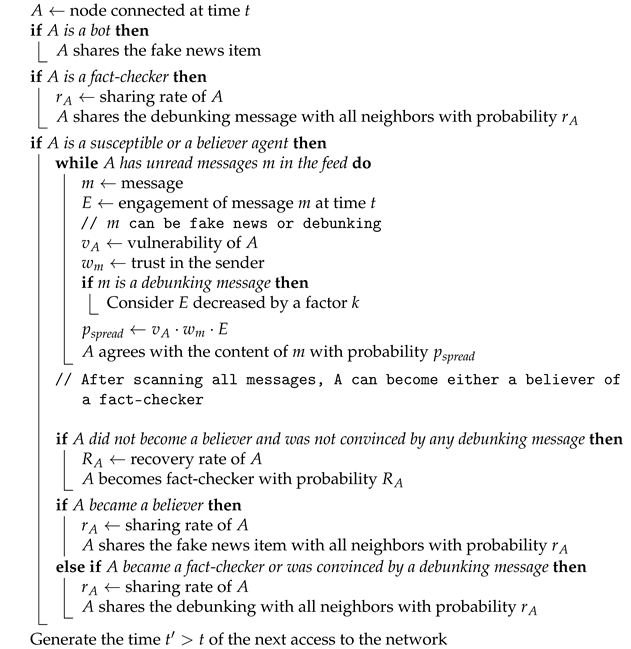

Based on the content of the messages found in its own feed upon accessing the OSN, an agent has a probability of being infected (i.e., either gulled by fake news from other believers or convinced by debunking from other fact-checkers) and a probability of becoming a fact-checker itself. If the agent changes its state, it has a probability of sharing its opinion with its neighbors. If the agent is a bot, it is limited to sharing the news piece. The algorithmic description of the node infection and OSN interaction process is provided in more detail in Algorithm 1. For better clarity, we also provide a diagrammatic representation of our agent-based fake news epidemic simulation process in Figure 4. The diagram reproduces the operations of each agent and helps differentiate between the baseline behavior and the improvements we introduce in this work. In the figure, margin notes explain these improvements, showing where enhancements (such as time-varying news engagement, weighted networks, and non-synchronous social network access) act to make the behavior of the nodes more realistic.
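A possible per-access update for a single agent is sketched below. Since the detailed steps of Algorithm 1 are not reproduced here, the way the probabilities are combined (vulnerability × engagement × trust weight for infection, the recovery rate as a per-access fact-checking probability for believers, and the sharing rate applied after a state change) is our assumption, and all names are hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Msg:
    kind: str            # "fake" or "debunk"
    weight: float = 1.0  # recipient's trust in the sender (1.0 if unweighted)


@dataclass
class Node:
    state: str = "S"     # "S", "B", or "FC"
    vulnerability: float = 0.5
    recovery_rate: float = 0.1
    sharing_rate: float = 0.5
    is_bot: bool = False
    feed: List[Msg] = field(default_factory=list)


def on_access(node: Node, eng_fake: float, eng_debunk: float,
              rng: random.Random) -> Optional[str]:
    """Read the feed, possibly change state, and return the kind of message
    to share with neighbors (or None)."""
    if node.is_bot:
        node.feed.clear()
        return "fake"                # bots share at every access
    old = node.state
    for msg in node.feed:
        if node.state == "FC":
            break                    # fact-checkers are no longer affected
        eng = eng_fake if msg.kind == "fake" else eng_debunk
        if rng.random() < node.vulnerability * eng * msg.weight:
            if msg.kind == "debunk":
                node.state = "FC"
            elif node.state == "S":
                node.state = "B"
    node.feed.clear()
    # Independent fact-checking by believers.
    if node.state == "B" and rng.random() < node.recovery_rate:
        node.state = "FC"
    if node.state != old and rng.random() < node.sharing_rate:
        return "fake" if node.state == "B" else "debunk"
    return None
```

Returning the message kind, rather than pushing it directly to neighbors, keeps the agent update independent of how the caller stores the graph and schedules future accesses.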

Figure 4.

Diagrammatic representation of our fake news epidemic simulation process.

Algorithm 1: Spreading of fake news and debunking.

4. Results

4.1. Roadmap

In this section, we present the results of the fake news epidemic from a number of simulations with different starting conditions. We carried out all simulations using custom software written in Python 3.7 and publicly available on GitHub in order to enable the reproducibility of our results (https://github.com/FraLotito/fakenews_simulator, accessed on 16 March 2021). The section is organized as follows. In Section 4.2, we describe the baseline configuration of our simulations, which we contrast with the realistic settings we propose in order to emphasize the contribution of our simulation model. Section 4.3 shows the impact of influencers and bots on the propagation of a fake news item. Section 4.4 describes the effects of time-dynamic network access, and, finally, Section 4.5 shows how weighted network links (expressing non-uniform trust levels) change the speed of fake news spreading over the social network.

4.2. Baseline Configuration

As a baseline scenario, we consider a network including no bots and no influencers, with unweighted edges and no time dynamics. The latter means that all agents access the network at the same time and with the same frequency, and the engagement coefficient of a fake news item does not change over time.
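To make the differences between scenarios explicit, the baseline and the richer configurations can be written down as a small configuration object. The Python sketch below is hypothetical: the field names are illustrative, and it assumes that the baseline uses the same 2000 common nodes as the scenarios in Section 4.3. The counts of influencers and bots and the fourfold bot access rate are taken from the text.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SimConfig:
    """Hypothetical scenario description; field names are illustrative only."""
    n_common: int = 2000
    n_influencers: int = 0
    n_bots: int = 0
    n_eternal_fact_checkers: int = 0
    weighted_edges: bool = False       # False: every link carries the same trust
    time_dynamics: bool = False        # False: synchronous access, constant engagement
    bot_access_ratio: float = 1.0      # bot access rate relative to a common user


BASELINE = SimConfig()
INFLUENCERS_AND_BOTS = replace(BASELINE, n_influencers=30, n_bots=10)
WITH_TIME_DYNAMICS = replace(INFLUENCERS_AND_BOTS, time_dynamics=True, bot_access_ratio=4.0)

for name, cfg in [("baseline", BASELINE), ("time dynamics", WITH_TIME_DYNAMICS)]:
    print(name, cfg.n_bots, cfg.time_dynamics, cfg.bot_access_ratio)
# baseline 0 False 1.0
# time dynamics 10 True 4.0
```

Deriving each scenario from the previous one with `dataclasses.replace` keeps every other parameter fixed, which mirrors the incremental way the results below introduce one realistic factor at a time.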

As discussed in Section 3, this is the simplest configuration for an agent-based simulation, but also the least informative. The network is flat, as all nodes are similar in terms of connectivity, vulnerability, and capability to recover from fake news. Fake news always has the same probability of deceiving a susceptible OSN user, and OSN activities are synchronized across all nodes. While these aspects are clearly unrealistic, using this baseline configuration helps emphasize the effect of our improvements on fake news propagation. In the results below, we gradually introduce influencers, bots, and eternal fact-checkers, and comment on their effect on the fake news epidemic. Afterwards, we illustrate the impact of time dynamics and non-uniform node trust, which further increase the degree of realism of agent-based OSN simulations.

4.3. Impact of Influencers and Bots

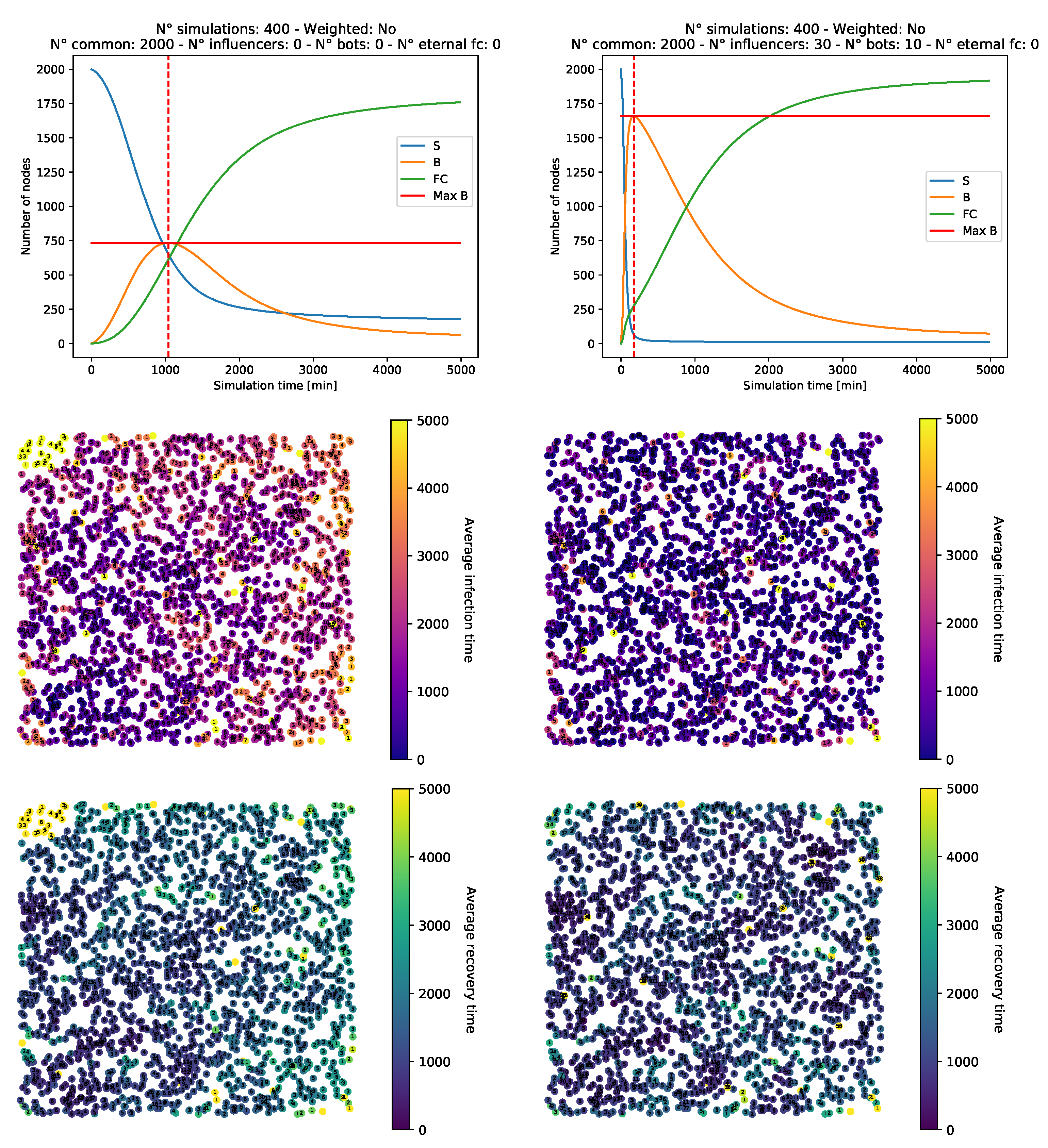

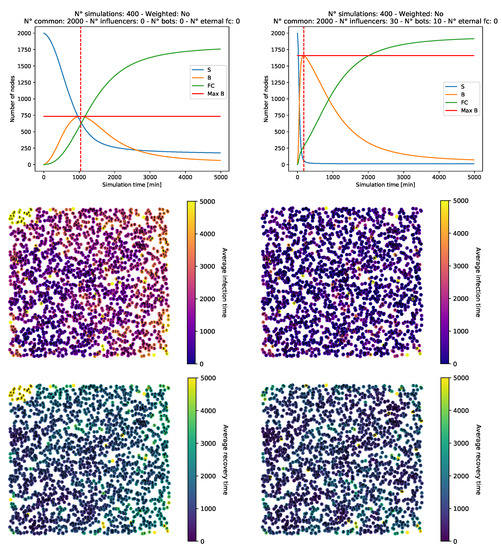

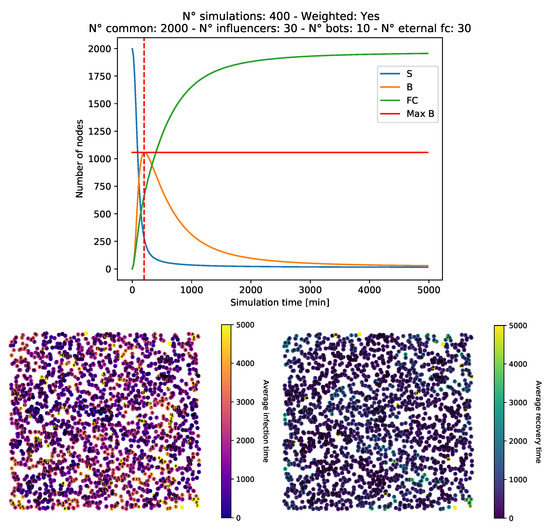

We start with Figure 5, which shows the results of simulations in two different scenarios through three rows of plots. In the top row, the SBFC plots show the number of susceptible (blue), believer (orange), and fact-checker (green) nodes throughout the duration of the simulation, expressed in seconds.

Figure 5.

SBFC plots (top row), network infection graphs (middle row), and network recovery graphs (bottom row) for fake news spread in simulated online social networks (OSNs). (Left) Baseline network. (Right) Baseline network plus 30 influencers and 10 bots. In the middle row, the darker a node’s color is, the earlier that node starts believing in the fake news item. In the bottom row, the darker a node’s color is, the earlier that node performs fact-checking.

In the middle row, we show the spreading of the fake news over the network (drawn without edges for better readability). The color of each node denotes its average infection time over multiple runs in which the fake news always starts spreading from the same node over the same network (i.e., with always the same first believer). A node depicted with a dark color is typically infected shortly after the start of the simulation, whereas a light-colored one is typically infected much later. In the bottom row, the graphs similarly show the average time of recovery: the darker the color, the earlier the recovery.
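The per-node averages behind the infection graphs can be sketched as follows. This Python example is hypothetical: it assumes that each run records, for every node, the time at which it became a believer, and that runs in which a node was never reached are simply excluded from that node's average. How the authors treated such runs is not stated here. The same function applies to recovery times.

```python
from statistics import mean
from typing import Dict, List, Optional

# runs[k][node] is the time at which `node` became a believer in run k,
# or None if the fake news never reached it in that run.
Run = Dict[int, Optional[float]]


def average_infection_times(runs: List[Run]) -> Dict[int, Optional[float]]:
    """Average each node's infection time over the runs in which it was infected."""
    nodes = set().union(*runs)
    averages: Dict[int, Optional[float]] = {}
    for node in sorted(nodes):
        times = [run[node] for run in runs if run.get(node) is not None]
        averages[node] = mean(times) if times else None  # None: never infected
    return averages


runs = [{0: 0.0, 1: 10.0, 2: None}, {0: 0.0, 1: 20.0, 2: 30.0}]
print(average_infection_times(runs))  # {0: 0.0, 1: 15.0, 2: 30.0}
```

A plotting step would then map smaller averages to darker colors, as in the middle row of Figure 5.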

For this first comparison, we considered both our baseline network composed only of common nodes (left column in Figure 5) and a network that additionally has 30 influencers and 10 bots (right column in Figure 5). We constructed the SBFC plots by averaging the results of 400 simulations. In particular, we generated 50 different network topologies at random using the same set of parameters; to obtain comparable results, we used the same networks for both sets of simulations. Then, we executed eight simulations for each topology. For the infection and recovery time graphs, instead, we computed the corresponding average times over a set of 100 simulations.
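The averaging scheme for the SBFC plots (50 random topologies, eight runs each, 400 runs in total) can be sketched in Python as below. The `simulate` callable, which is assumed to return one count per time step on a common time grid, is hypothetical. Passing the same topology seeds to every scenario is one way to guarantee that the scenarios are compared on the same randomly generated networks.

```python
from itertools import product
from typing import Callable, List, Sequence

# simulate(topology_seed, run_seed) -> number of believers (or S, or FC) per time step
Simulator = Callable[[int, int], Sequence[float]]


def averaged_curve(simulate: Simulator, n_topologies: int = 50, runs_per_topology: int = 8) -> List[float]:
    """Element-wise mean over n_topologies x runs_per_topology simulation runs."""
    curves = [list(simulate(topology_seed, run_seed))
              for topology_seed, run_seed in product(range(n_topologies), range(runs_per_topology))]
    length = len(curves[0])
    if any(len(curve) != length for curve in curves):
        raise ValueError("all runs must be sampled on the same time grid")
    return [sum(curve[i] for curve in curves) / len(curves) for i in range(length)]


calls = []


def toy_simulate(topology_seed: int, run_seed: int) -> List[float]:
    # Stand-in for a real simulation: records the call and echoes its seeds.
    calls.append((topology_seed, run_seed))
    return [topology_seed, run_seed]


print(averaged_curve(toy_simulate), len(calls))  # [24.5, 3.5] 400
```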

In the baseline network, the spreading is slow and critically affects only part of the network. The B curve (orange) reaches a peak of about 750 believer nodes simultaneously present in the network and then decreases, quenched by the debunking and fact-checking processes (FC, green curve). Adding influencers and bots accelerates the infection and helps both the fake news and the debunking spread out to more nodes.

In particular, with 2000 common nodes and 30 influencers, fake news spreading infects (on average) almost half of the common nodes. When 10 bots are further added to the network (right column in Figure 5), the spreading is faster, and after a short amount of time, the majority of the common nodes believe in the fake news. The SBFC plot in the top-right panel shows that the fake news reaches more than 80% of the network nodes, whereas the middle panel confirms that infections occur typically early in the process, with the exception of a few groups of nodes that get infected later on. Similarly, it takes more time to recover from the fake news and perform fact-checking than in the baseline case (bottom-right graph).

The above simulations already confirm the strong impact influencers and bots may have on fake news spreading: influencers make it possible to convey fake news through their many out-connections and to reach otherwise secluded portions of the population. Bots, by repeatedly sharing fake news, increase the infection probability early on in the fake news spreading process. As a result, the fake news is more effective, and more agents become believers before the debunking process starts to create fact-checkers.

4.4. Impact of Time Dynamics

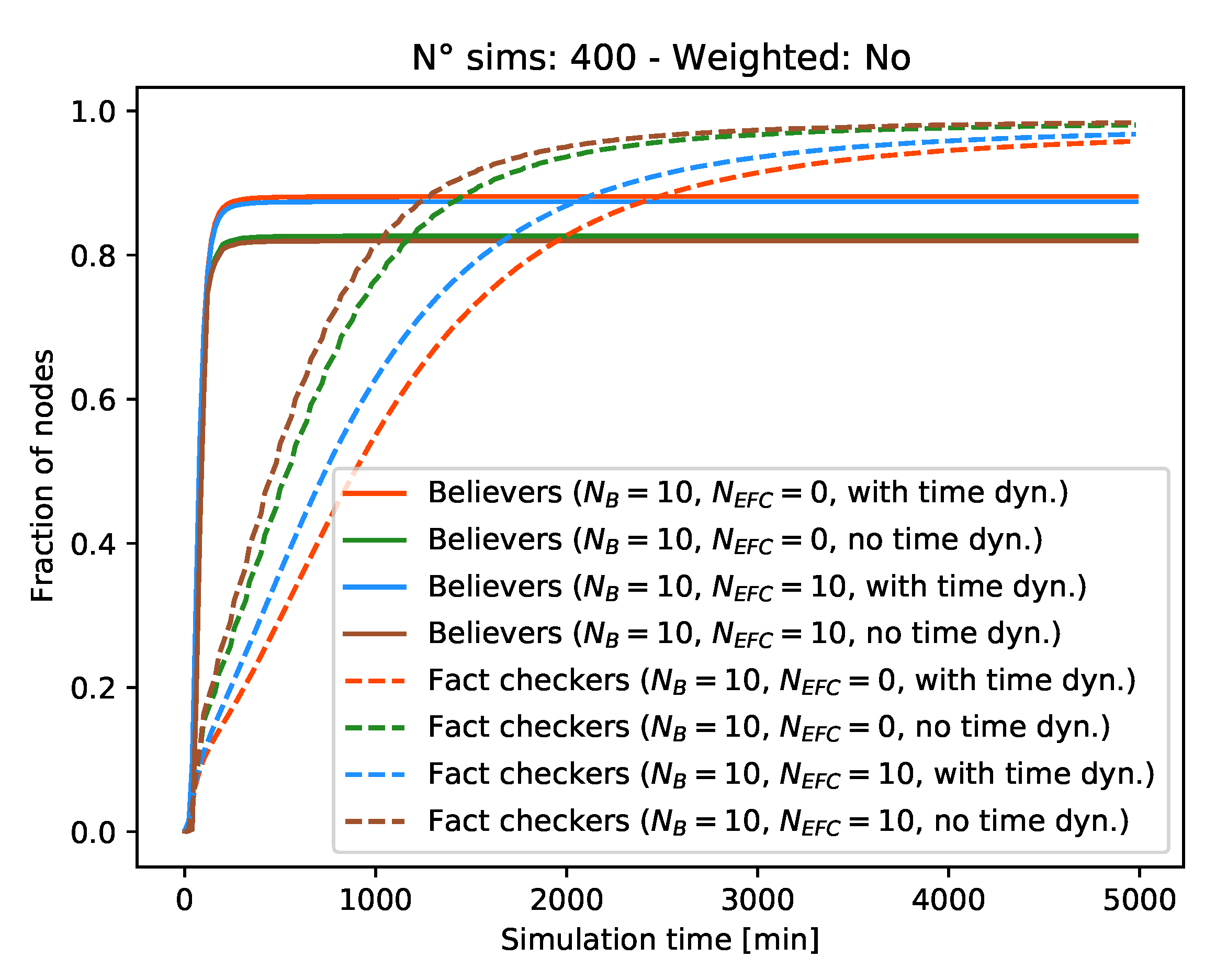

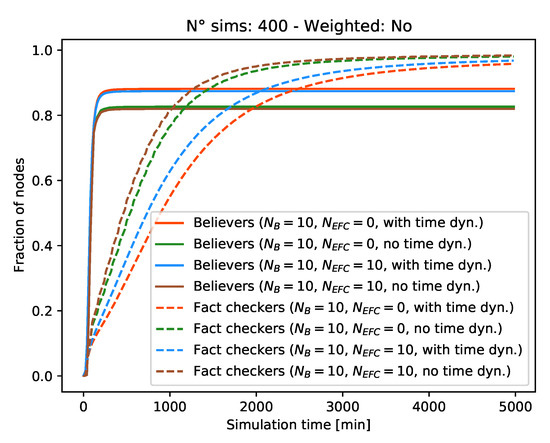

We now analyze the impact of time dynamics on the simulation results. In particular, we show that including time dynamics is key to obtaining realistic OSN interactions. We focus on Figure 6, where we show the all-time maximum fraction of believers and fact-checkers over time for different network configurations. We present results both with and without bots and eternal fact-checkers, as well as with and without time dynamics.

Figure 6.

All-time maximum number of believers and fact-checkers over time with and without time dynamics.

In the absence of time dynamics, all types of agents access the network synchronously. Such synchronous access patterns make simulated OSN models inaccurate as, e.g., we expect that bots access social media much more often than common users, in order to increase and maintain the momentum of the fake news spreading. Conversely, enabling time dynamics allows us to model more realistic patterns. Specifically, we recall that we allow bots to access the network four times more often than a common user.
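Asynchronous access with a higher rate for bots can be illustrated with a simple event queue. The Python sketch below assumes that each agent accesses the OSN as an independent Poisson process, so the gaps between its accesses are exponentially distributed. This is an illustrative choice, not necessarily the access model used in our simulator. The one-minute mean gap for common users is hypothetical; only the fourfold bot rate comes from the text.

```python
import heapq
import random
from typing import Dict, List, Tuple


def access_schedule(rates: Dict[str, float], sim_time: float, seed: int = 0) -> List[Tuple[float, str]]:
    """Time-ordered OSN accesses of all agents up to sim_time.

    Assumption: every agent accesses the network as an independent Poisson process
    with its own (positive) rate, so gaps between its accesses are exponential.
    """
    rng = random.Random(seed)
    heap = [(rng.expovariate(rate), agent) for agent, rate in rates.items()]
    heapq.heapify(heap)
    schedule: List[Tuple[float, str]] = []
    while heap and heap[0][0] <= sim_time:
        t, agent = heapq.heappop(heap)
        schedule.append((t, agent))
        # Schedule this agent's next access after an exponential gap.
        heapq.heappush(heap, (t + rng.expovariate(rates[agent]), agent))
    return schedule


common_rate = 1 / 60.0  # hypothetical: one access per minute on average
rates = {"user": common_rate, "bot": 4 * common_rate}
events = access_schedule(rates, sim_time=3600.0, seed=1)
counts = {a: sum(1 for _, who in events if who == a) for a in rates}
print(counts)  # on average, the bot appears about four times as often as the user
```

Because each agent's next access is pushed onto a shared heap, the merged schedule stays time-ordered however many agents with different rates are added.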

In the absence of time dynamics, a larger fraction of the network remains unaffected by the fake news. For example, in the case that the network contains 10 bots, 30 influencers, and no eternal fact-checker, the maximum fraction of believers is 0.81 in the absence of time dynamics (light blue solid curve) and increases to 0.87 (+7%) with time dynamics (brown solid curve), thanks to the higher access rate of the bots. Because time dynamics enable the fake news to spread faster, the number of fact-checkers increases more slowly. In Figure 6, we observe this by comparing the orange and green dashed lines.

Figure 6 also shows the effect of eternal fact-checkers: their presence does not significantly slow down the spreading process, as only negligibly fewer network users become believers. Instead, eternal fact-checkers accelerate the recovery of believers, as seen by comparing, e.g., the red and blue lines (for the case without time dynamics) and the green and brown lines (for the case with time dynamics). In any event, eternal fact-checkers access the network as often as common nodes; hence, their impact is limited. This is in line with the observation that time-dynamic network access patterns slow down epidemics in OSNs [54].

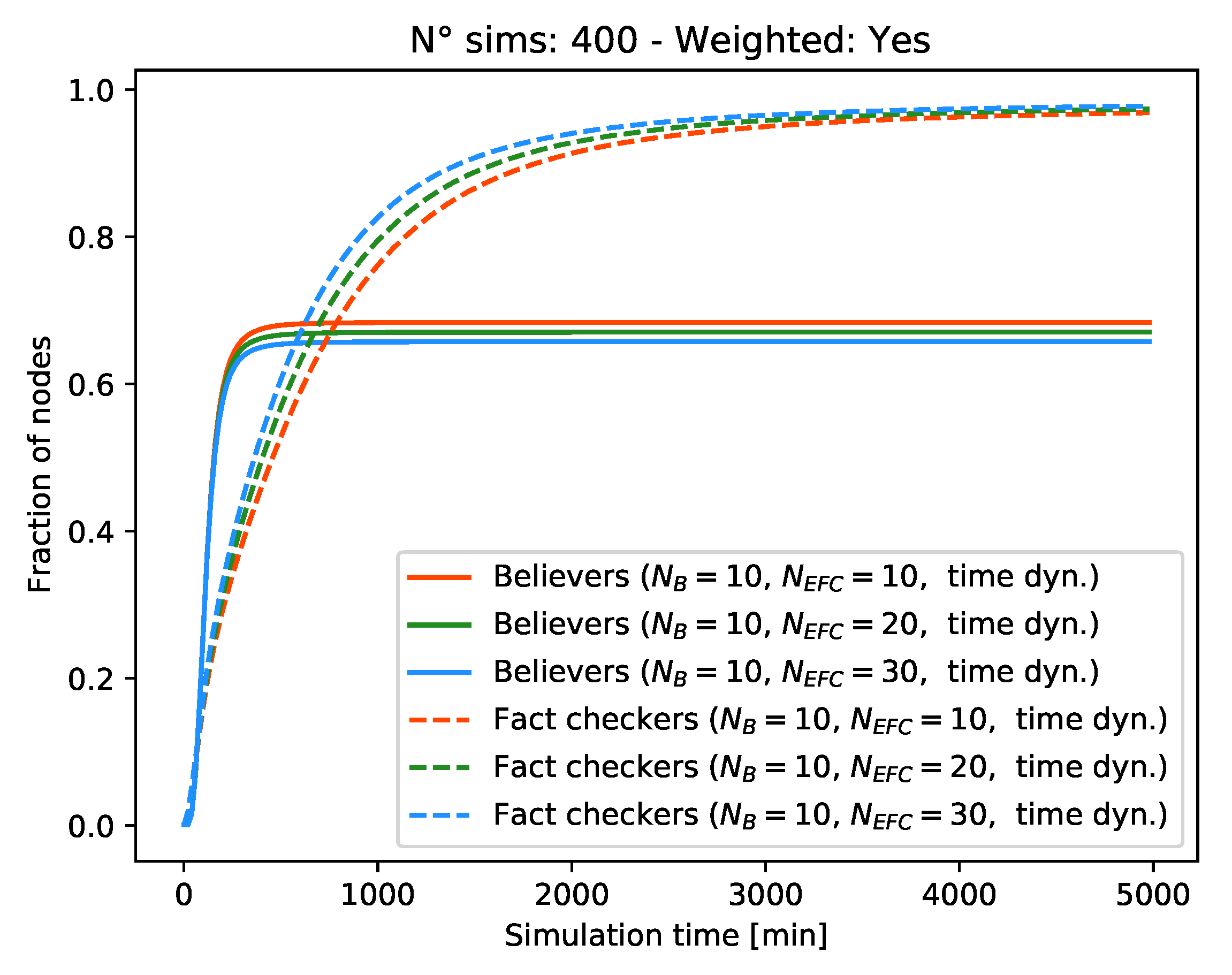

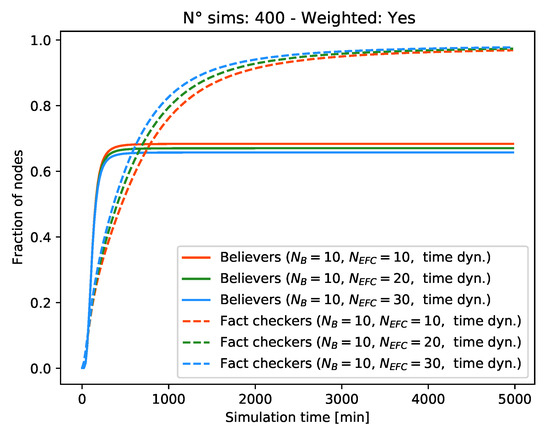

4.5. Impact of Weighted Networks Expressing Non-Uniform Node Trust

In Figure 7, we show the result of the same experiments just described, but introduce weights on the network edges, as explained in Section 3.2. We recall that weights affect the trust that a node puts in the information passed on by another connected node. Therefore, a lower weight on an edge directly maps to lower trust in the corresponding node and to a lower probability of changing state when receiving a message from that node. As the weight of any link connecting a bot to any other node is now , the infection slows down due to the reduced trust in bots that results. We observe that, by increasing the number of eternal fact-checkers while keeping the number of bots constant, the fraction of the network affected by the fake news spreading decreases slightly. However, the speed of the fake news onset does not change significantly, as it takes time to successfully spread the debunking. This is in line with the results in the previous plots.
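The effect of edge weights can be illustrated by letting the weight of the link a message travels on scale the probability that the message changes the receiver's state. The Python sketch below assumes a purely multiplicative combination of base probability, edge weight, and engagement. The bot-edge weight of 0.2 in the example is an illustrative value, not the one used in our experiments.

```python
def message_success_probability(base_prob: float, edge_weight: float, engagement: float = 1.0) -> float:
    """Probability that a single message over one edge changes the receiver's state.

    Assumption: trust enters multiplicatively, i.e., the weight of the edge
    (in [0, 1]) scales the receiver's base probability, as does engagement.
    """
    for name, value in (("base_prob", base_prob), ("edge_weight", edge_weight), ("engagement", engagement)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    return base_prob * edge_weight * engagement


# Illustrative weights only: a fully trusted friend vs. a low-trust bot link.
print(message_success_probability(0.5, edge_weight=1.0))  # 0.5
print(round(message_success_probability(0.5, edge_weight=0.2), 3))  # 0.1
```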

Figure 7.

All-time maximum number of believers and fact-checkers over time in a weighted network.

Figure 7 also confirms that eternal fact-checkers increase the recovery rate of believers: a few hundred seconds into the process, the number of fact-checkers increases by about 1.5% for every 10 eternal fact-checkers added to the network, before saturating towards the end of the simulation, where all nodes (or almost all) have recovered.

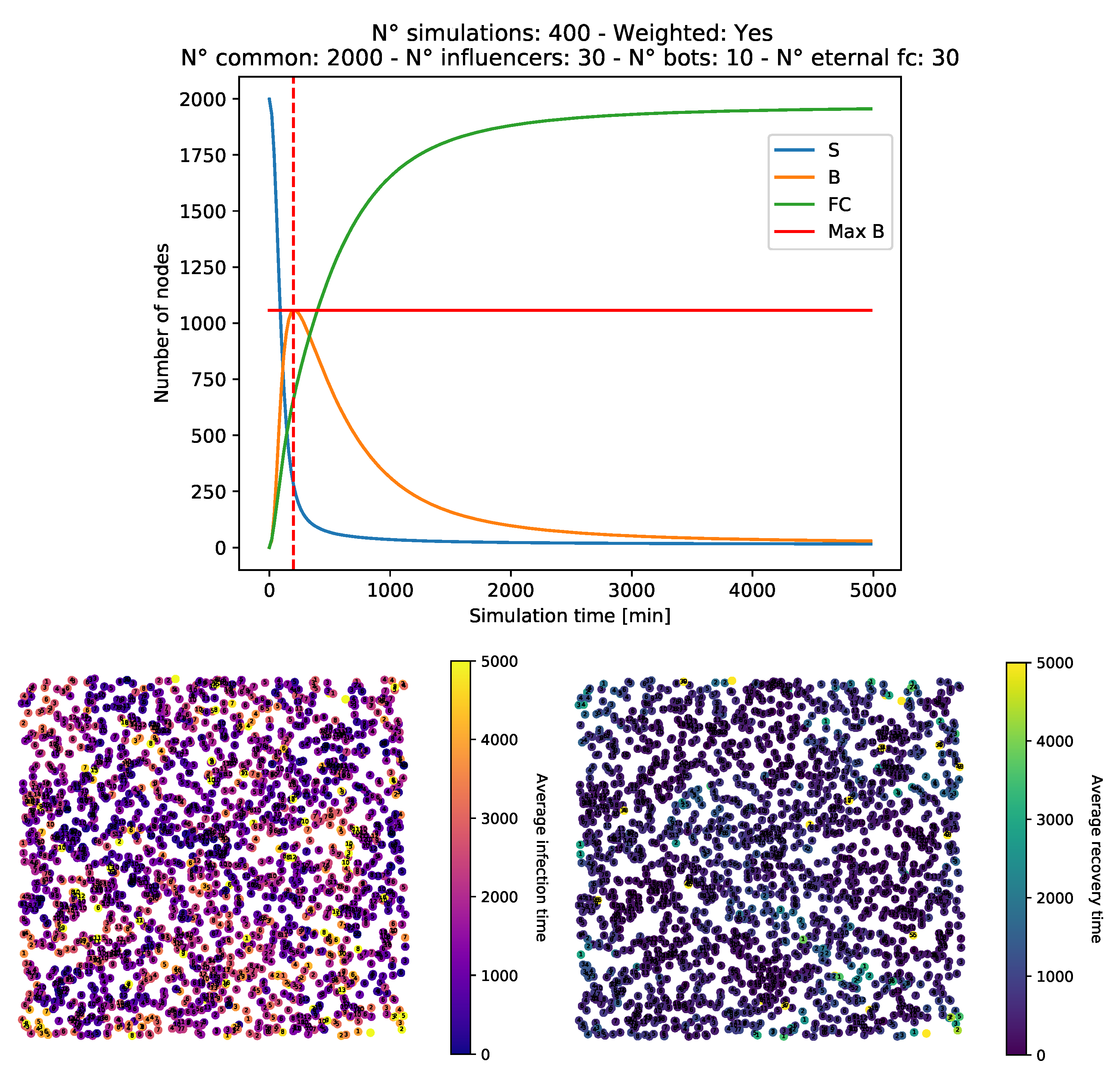

We conclude with Figure 8, where we consider the joint effect of eternal fact-checkers operating on a weighted network graph. The peak of the believers is markedly lower than in Figure 5, because the weights on the connections tend to scale down the spreading curve. The impact of the eternal fact-checkers can be noticed from the fact-checker curve (green), which increases significantly faster than in Figure 5. We observe a similar effect from the believer curve (orange), which instead decreases more rapidly after reaching a lower peak. In this case, the maximum number of believers is about one half of the network nodes. Correspondingly, a noticeable portion of the nodes in the infection graph (bottom-left panel in Figure 8) is yellow, showing that the fake news reaches these nodes very late in the simulation. Instead, most of the nodes in the recovery graph (bottom-right panel in Figure 8) are dark blue, showing that they stop believing in the fake news and become fact-checkers early on. These results confirm the importance of fact-checking efforts in the debunking of a fake news item, and the need to properly model different classes of fact-checking agents.

Figure 8.

SBFC plot, network infection, and recovery graphs for the same networks of Figure 5, but with the addition of eternal fact-checkers and weights on the connections.

5. Discussion

As fake news circulation is characteristic of all OSNs, several studies have attempted to model and simulate the spreading of fake news, both independently of and jointly with the spreading of debunking messages belying the fake news. The simulation and modeling approaches most closely related to our contribution are listed in Table 1.

We identify a number of shortcomings in these works, such as exceedingly synchronous dynamics, constant fake news engagement, and uniform trust. We improve on these works by proposing an agent-based simulation system where each agent accesses the network independently and reacts to the messages shared by its contacts. Another improvement concerns the fact that the interest of the population in a fake news item decays over time, which realistically models the loss of engagement of fake news pieces. By considering a directed weighted network model, we capture realistic information flows better than undirected, unweighted models do. A consequence of this network model is that we can explicitly consider influencers and bots [9].

Several previous studies assumed the emergence of influencers in the network as a result of the preferential attachment process [48], but did not explicitly model their role, so that the impact of these agents remains largely unexplored. An exception is [41], where the authors explicitly cited the role of influencers on a network and proposed the characterization and modeling of the role of bots. However, unlike our work, the authors of [41] operated in an opinion dynamics setting and focused on binary opinions, which evolve throughout an initially evenly distributed population. Instead, we integrate influencers as agents that participate in the news spreading process: they may become believers (thus spreading fake news) and later recover to become fact-checkers (thus promoting debunking).

The effects of heterogeneous agents were studied in [40]. In our case, to model the personalities of the agents and properly assign their attributes, we sampled from statistical distributions instead of relying on questionnaires, in order to provide a simple, but realistic source of variability for the parameters that characterize OSN agents.

We believe that the main advantage of our model is to introduce realistic OSN agent behaviors. As a result, our agent-based simulation model can help assess the impact of fake news over an OSN population in different scenarios. This also enables authorities and interested stakeholders alike to evaluate effective strategies and countermeasures that limit or counter the impact of fake news.

6. Conclusions

In this work, we argue that most modeling efforts for fake news epidemics over social networks rely on analytical models that capture the general trends of the epidemics (with some necessary approximations) or on simple simulation approaches that often lack sufficiently realistic settings. We fill this gap by extending the simple susceptible believer fact-checker (SBFC) model to make it more realistic. We propose adding time dynamical features to the simulations (including variable access times to the network and news engagement decay), node-dependent attributes, and a richer context for mutual node trust through weighted network edges. Moreover, we analyze the impact of influencers, bots, and eternal fact-checkers on the spreading of a fake news item.

Our simulations confirm that the above realistic factors are very impactful, and neglecting them (e.g., via a model that considers synchronous OSN access for all agents, or a network where all agents trust one another equally) masks several key outcomes. The importance of a realistic simulation tool lies in the reliability of the analyses it enables. For example, the designers of marketing campaigns could assess how much fake news would damage a company or product by spreading into a population of interest. Similarly, managers and policy-makers may assess opinion spreading and polarization (which follow mechanisms similar to those of fake news spreading) by means of realistic simulations.

In practical terms, our results suggest that reducing the impact of bots and increasing the presence and impact of authoritative personalities [11] are key to limiting fake news spreading. This may entail educating OSN users to recognize bot behavior, or implementing forms of automatic recognition that flag suspicious activity patterns as bot-like. The impact of influencers is also very relevant, as they significantly speed up the spreading of news online and amplify the “small-world effect” [49]. It is therefore important to prevent influencers from falling prey to fake news and, rather, to increase their involvement in stopping fake news and/or promoting its debunking. The above methods would further help improve the health of interactions and opinion exchanges over social networks, reducing the concerns of online users.

The main limitations of this study concern the test scenarios, which should be extended to comprise a greater number of nodes and to include agents with multiple levels of popularity, besides common users and influencers. We leave this investigation for a future extension of our work. Similarly, our agent-based simulation model recreates network access patterns (hence, the storyboard of social interactions) according to a mathematical model. While this is still better than having all agents interact synchronously, we could further improve the level of realism by testing our model on social network datasets that include temporal annotations. Moreover, we could consider additional dynamics in the network model. For example, we plan to extend our interaction model through an unfollowing functionality (i.e., social connection removal) in order to amplify the circulation of some news pieces in specific communities.

Author Contributions

Conceptualization, Q.F.L. and D.Z.; methodology, Q.F.L., D.Z. and P.C.; software, Q.F.L. and D.Z.; writing—original draft preparation, Q.F.L., D.Z. and P.C.; writing—review and editing, Q.F.L. and P.C. All authors read and agreed to the present version of the manuscript.

Funding

This research was supported in part by the Italian Ministry for Education (MIUR) under the initiative Departments of Excellence (Law 232/2016).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All results in this paper can be derived from our simulation software, publicly available on GitHub (https://github.com/FraLotito/fakenews_simulator, accessed on 16 March 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Perrin, A. Social Media Usage: 2005–2015. Pew Res. Cent. Int. Technol. 2015, 125, 52–68.

- Albright, J. Welcome to the Era of Fake News. Media Commun. 2017, 5, 87–89.

- Shrivastava, G.; Kumar, P.; Ojha, R.P.; Srivastava, P.K.; Mohan, S.; Srivastava, G. Using Data Sciences in Digital Marketing: Framework, methods, and performance metrics. J. Innov. Knowl. 2020, in press.

- Bovet, A.; Makse, H.A. Influence of fake news in Twitter during the 2016 US presidential election. Nat. Commun. 2019, 10, 7.

- Saura, J.R.; Ribeiro-Soriano, D.; Palacios-Marqués, D. From user-generated data to data-driven innovation: A research agenda to understand user privacy in digital markets. Int. J. Inf. Manag. 2021, in press.

- Ribeiro-Navarrete, S.; Saura, J.R.; Palacios-Marqués, D. Towards a new era of mass data collection: Assessing pandemic surveillance technologies to preserve user privacy. Technol. Forecast. Soc. Chang. 2021, 167, in press.

- Lewandowsky, S.; Ecker, U.K.; Cook, J. Beyond Misinformation: Understanding and Coping with the “Post-Truth” Era. J. Appl. Res. Mem. Cogn. 2017, 6, 353–369.

- Aiello, L.M.; Deplano, M.; Schifanella, R.; Ruffo, G. People are Strange when you’re a Stranger: Impact and Influence of Bots on Social Networks. arXiv 2014, arXiv:1407.8134.

- Tambuscio, M.; Ruffo, G.; Flammini, A.; Menczer, F. Fact-Checking Effect on Viral Hoaxes: A Model of Misinformation Spread in Social Networks. In Proceedings of the 24th International Conference on World Wide Web; Association for Computing Machinery: New York, NY, USA, 2015.

- Newman, M.E.J. Spread of epidemic disease on networks. Phys. Rev. E 2002, 66, 016128.

- Furini, M.; Mirri, S.; Montangero, M.; Prandi, C. Untangling between fake-news and truth in social media to understand the COVID-19 Coronavirus. In Proceedings of the 2020 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 7–10 July 2020; pp. 1–6.

- Fernandez, M.; Alani, H. Online Misinformation: Challenges and Future Directions. In Proceedings of the ACM WWW’18 Companion, Lyon, France, 23–27 April 2018.

- Bondielli, A.; Marcelloni, F. A Survey on Fake News and Rumour Detection Techniques. Inf. Sci. 2019, 497, 38–55.

- Zhou, X.; Zafarani, R. Fake News: A Survey of Research, Detection Methods, and Opportunities. arXiv 2018, arXiv:1812.00315.

- Liu, Y.; Wu, Y.B. Early Detection of Fake News on Social Media Through Propagation Path Classification with Recurrent and Convolutional Networks. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Ma, J.; Gao, W.; Mitra, P.; Kwon, S.; Jansen, B.J.; Wong, K.F.; Cha, M. Detecting Rumors from Microblogs with Recurrent Neural Networks. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence; AAAI Press: Palo Alto, CA, USA, 2016; pp. 3818–3824.

- Socher, R.; Lin, C.C.Y.; Ng, A.Y.; Manning, C.D. Parsing Natural Scenes and Natural Language with Recursive Neural Networks. In Proceedings of the 28th International Conference on International Conference on Machine Learning, Bellevue, WA, USA, 28 June–2 July 2011.

- Kaliyar, R.K.; Goswami, A.; Narang, P.; Sinha, S. FNDNet – A deep convolutional neural network for fake news detection. Cogn. Syst. Res. 2020, 61, 32–44.

- Bronstein, M.M.; Bruna, J.; LeCun, Y.; Szlam, A.; Vandergheynst, P. Geometric Deep Learning: Going beyond Euclidean data. IEEE Signal Process. Mag. 2017, 34, 18–42.

- Monti, F.; Frasca, F.; Eynard, D.; Mannion, D.; Bronstein, M.M. Fake News Detection on Social Media using Geometric Deep Learning. arXiv 2019, arXiv:1902.06673.

- Zhou, X.; Jain, A.; Phoha, V.V.; Zafarani, R. Fake News Early Detection: A Theory-driven Model. Digit. Threat. Res. Pract. 2020, 1, 1–25.

- Krouska, A.; Troussas, C.; Virvou, M. Comparative Evaluation of Algorithms for Sentiment Analysis over Social Networking Services. J. Univers. Comput. Sci. 2017, 23, 755–768.

- Troussas, C.; Krouska, A.; Virvou, M. Evaluation of ensemble-based sentiment classifiers for Twitter data. In Proceedings of the 2016 7th International Conference on Information, Intelligence, Systems & Applications (IISA), Chalkidiki, Greece, 13–15 July 2016; pp. 1–6.

- Krouska, A.; Troussas, C.; Virvou, M. The effect of preprocessing techniques on Twitter sentiment analysis. In Proceedings of the IISA, Chalkidiki, Greece, 13–15 July 2016; pp. 1–5.

- Zuckerman, M.; DePaulo, B.M.; Rosenthal, R. Verbal and Nonverbal Communication of Deception. Adv. Exp. Soc. Psychol. 1981, 14, 1–59.

- Abdullah-All-Tanvir; Mahir, E.M.; Akhter, S.; Huq, M.R. Detecting Fake News using Machine Learning and Deep Learning Algorithms. In Proceedings of the 2019 7th International Conference on Smart Computing Communications (ICSCC), Sarawak, Malaysia, 28–30 June 2019; pp. 1–5.

- Stella, M.; Ferrara, E.; De Domenico, M. Bots increase exposure to negative and inflammatory content in online social systems. Proc. Natl. Acad. Sci. USA 2018, 115, 12435–12440.

- Varol, O.; Ferrara, E.; Davis, C.A.; Menczer, F.; Flammini, A. The Online Human-Bot Interactions: Detection, Estimation, and Characterization. In Proceedings of the International AAAI Conference on Web and Social Media, Palo Alto, CA, USA, 25–28 June 2018.

- Yang, K.; Varol, O.; Davis, C.A.; Ferrara, E.; Flammini, A.; Menczer, F. Arming the public with artificial intelligence to counter social bots. Hum. Behav. Emerg. Technol. 2019, 1, 48–61.

- Gilani, Z.; Kochmar, E.; Crowcroft, J. Classification of Twitter Accounts into Automated Agents and Human Users. In Proceedings of the 2017 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 489–496.

- Yang, K.C.; Varol, O.; Hui, P.M.; Menczer, F. Scalable and Generalizable Social Bot Detection through Data Selection. Proc. AAAI Conf. Artif. Intell. 2020, 34, 1096–1103.

- Kudugunta, S.; Ferrara, E. Deep neural networks for bot detection. Inf. Sci. 2018, 467, 312–322.

- Davis, C.A.; Varol, O.; Ferrara, E.; Flammini, A.; Menczer, F. BotOrNot. In Proceedings of the 25th International Conference Companion on World Wide Web-WWW ’16 Companion, Cambridge, UK, 11–14 December 2016.

- Ferrara, E.; Varol, O.; Davis, C.; Menczer, F.; Flammini, A. The Rise of Social Bots. Commun. ACM 2016, 59, 96–104.

- des Mesnards, N.G.; Hunter, D.S.; El Hjouji, Z.; Zaman, T. Detecting Bots and Assessing Their Impact in Social Networks. arXiv 2018, arXiv:1810.12398.

- Shrivastava, G.; Kumar, P.; Ojha, R.P.; Srivastava, P.K.; Mohan, S.; Srivastava, G. Defensive Modeling of Fake News through Online Social Networks. IEEE Trans. Comput. Social Syst. 2020, 7, 1159–1167.

- Murayama, T.; Wakamiya, S.; Aramaki, E.; Kobayashi, R. Modeling and Predicting Fake News Spreading on Twitter. arXiv 2020, arXiv:2007.14059.

- Tambuscio, M.; Oliveira, D.F.M.; Ciampaglia, G.L.; Ruffo, G. Network segregation in a model of misinformation and fact-checking. J. Comput. Soc. Sci. 2018, 1, 261–275.

- Tambuscio, M.; Ruffo, G. Fact-checking strategies to limit urban legends spreading in a segregated society. Appl. Netw. Sci. 2019, 4, 1–9.

- Burbach, L.; Halbach, P.; Ziefle, M.; Calero Valdez, A. Who Shares Fake News in Online Social Networks? In Proceedings of the ACM UMAP, Larnaca, Cyprus, 9–12 June 2019.

- Ross, B.; Pilz, L.; Cabrera, B.; Brachten, F.; Neubaum, G.; Stieglitz, S. Are social bots a real threat? An agent-based model of the spiral of silence to analyse the impact of manipulative actors in social networks. Eur. J. Inf. Syst. 2019, 28, 394–412.

- Törnberg, P. Echo chambers and viral misinformation: Modeling fake news as complex contagion. PLoS ONE 2018, 13, 1–21.

- Brainard, J.; Hunter, P.; Hall, I. An agent-based model about the effects of fake news on a norovirus outbreak. Rev. D’épidémiologie Santé Publique 2020, 68, 99–107.

- Cisneros-Velarde, P.; Oliveira, D.F.M.; Chan, K.S. Spread and Control of Misinformation with Heterogeneous Agents. In Proceedings of the Complex Networks, Lisbon, Portugal, 10–12 December 2019.

- Norman, B.; Ann, M.J. Mapping and leveraging influencers in social media to shape corporate brand perceptions. Corp. Commun. Int. J. 2011, 16, 184–191.

- Caldarelli, G.; Nicola, R.D.; Vigna, F.D.; Petrocchi, M.; Saracco, F. The role of bot squads in the political propaganda on Twitter. CoRR 2019, 3, 1–5.

- Erdös, P.; Rényi, A. On Random Graphs I. Publ. Math. Debr. 1959, 6, 290.

- Barabási, A.L.; Albert, R. Emergence of Scaling in Random Networks. Science 1999, 286, 509–512.

- Watts, D.J.; Strogatz, S.H. Collective dynamics of ‘small-world’ networks. Nature 1998, 393, 440–442.

- Wahid-Ul-Ashraf, A.; Budka, M.; Musial, K. Simulation and Augmentation of Social Networks for Building Deep Learning Models. arXiv 2019, arXiv:1905.09087.

- Dall, J.; Christensen, M. Random geometric graphs. Phys. Rev. E 2002, 66, 016121.

- Spricer, K.; Britton, T. An SIR epidemic on a weighted network. Netw. Sci. 2019, 7, 556–580.

- Zhou, X.; Zafarani, R. A Survey of Fake News: Fundamental Theories, Detection Methods, and Opportunities. ACM Comput. Surv. 2020, 53, 1–40.

- Liu, S.Y.; Baronchelli, A.; Perra, N. Contagion dynamics in time-varying metapopulation networks. Phys. Rev. E 2013, 87, 032805.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).