Abstract

Education 4.0 demands a flexible combination of digital literacy, critical thinking, and problem-solving in educational settings linked to real-world scenarios. Haptic technology incorporates the sense of touch into a visual simulator to enrich the user’s sensory experience, thus supporting a meaningful learning process. After developing several visuo-haptic simulators, our team identified serious difficulties and important challenges in achieving successful learning environments within the framework of Education 4.0. This paper presents the VIS-HAPT methodology for developing realistic visuo-haptic scenarios to promote the learning of science and physics concepts among engineering students. This methodology consists of four stages that integrate different aspects and processes leading to meaningful learning experiences for students. The processes that must be carried out in each stage, the difficulties to overcome, and recommendations on how to face them are all described herein. The results are encouraging, since a significant decrease (of approximately 40%) in development and implementation times was obtained compared with previous efforts. The quality of the visuo-haptic environments was also enhanced. Student perceptions of the benefits of using visuo-haptic simulators to enhance their understanding of physics concepts also improved after using the proposed methodology. The incorporation of haptic technologies in higher education settings will certainly foster better student performance in subsequent real environments related to Industry 4.0.

1. Introduction

Education 4.0 has been associated with the period conceptualized as the Fourth Industrial Revolution, where innovative pedagogical technologies, procedures, and best practices are essential for future professionals to successfully perform [1,2,3]. For this reason, the Education 4.0 approach in higher education seeks to train new highly competitive professionals capable of applying appropriate physical and digital resources to provide innovative solutions to current and future social challenges.

Therefore, in Education 4.0, traditional learning methods must be adapted to include strategies, technologies, and activities that allow students to access appropriate learning and training programs. Since Education 4.0 seeks to provide more efficient, accessible, and flexible educational programs, new teaching–learning methods emerge that use technologies and proven pedagogical strategies in higher education. Advances in multi-modal interaction have thus enriched teaching–learning models.

Embodied cognition theory is based on the paradigm that adding senses enhances the learning experience and enables the student to acquire knowledge at a more profound and longer-term level [4,5,6]. Haptic feedback (from the Greek haptesthai, meaning “contact” or “touch”) incorporates the tactile sense, which is the most sophisticated of all our senses, into a virtual world, allowing users to perceive different sensations such as pressure, texture, hardness, weight, and the form of virtual objects in a visuo-haptic simulator [7]. In this sense, the use of visuo-haptic environments, in which haptic technology is combined with virtual learning environments (e.g., [6]), allows the student’s brain to receive stimuli from different channels, thus enhancing the acquisition of concepts during the learning process [8] and promoting the development of critical competencies that will prepare students to respond more efficiently in future scenarios.

The use of low-cost devices for visuo-haptic environments in educational settings has allowed the development of innovative interactive applications. However, some of the most important difficulties faced when developing successful visuo-haptic environments are: (a) difficulties in designing the learning experience to optimize the use of haptic devices; (b) inadequate visualization of the variables and elements of the simulator; (c) poor and unrealistic matching between the real physical phenomenon and the experience in the visuo-haptic environment; (d) lag between the haptic feedback force and the corresponding digital visuals; (e) overreaction and/or limits of the feedback forces; (f) very long development and implementation times for the visuo-haptic environments to be ready; and (g) redundancy and duplication of efforts throughout the implementation processes. In particular, during the implementation and evaluation of the learning experience, there are also several difficulties such as: (a) an insufficient number of groups and students for an adequate selection of experimental and control groups; (b) compatibility of group schedules and laboratory training times; (c) inadequate student guidelines during the development of the activity; (d) not enough time for the students to carry out the session and freely experiment with the haptic device; and (e) the design of appropriate evaluation instruments. One of our first efforts to optimize development times and reduce programming difficulty was to establish a generic architecture [9], which helped in some aspects but was not enough to tackle other problems in the processes involved.

In this work, we propose a general methodology (called VIS-HAPT) aimed at developing, implementing, and evaluating visuo-haptic environments within the framework of Education 4.0. Related work is presented in Section 2. Previous work by the authors is presented in Section 3, while the VIS-HAPT methodology is presented in Section 4. A comparison and discussion of methodologies for different visuo-haptic simulators is presented in Section 5. Finally, Section 6 presents our main conclusions and future work.

2. Related Work

Recent studies in psychological science suggest that detailed lasting memories are promoted by introducing the sense of touch [10], and this finding can benefit both training and educational settings. Therefore, haptic technology has been introduced to promote expertise and learning in myriad areas such as industry, navigation, e-commerce, gaming, arts, medicine, and education [11]. In Industry 4.0, for example, pilots are often trained in flight simulators where mid-air haptic devices are installed to control the plane. Only after perfect command is achieved on the simulator can the pilot oversee a real plane [12]. In the medical field, haptic training environments have also been used to perform diverse procedures in surgery such as suturing [13]. Dental training with artificial teeth has also used haptic tools, and oral implant therapy has used haptic technologies as a relevant part of the dental practice [14,15].

Modeling a physical situation with a visuo-haptic environment has the advantage that the related physical parameters may be controlled and adjusted within a large range of values, in contrast to a real-lab setting in which only a limited number of options may be given to the student [16]. The use of haptic environments for educational purposes has notably increased within the last two decades, especially for teaching abstract or complex concepts, from scenarios aimed at mastering basic science concepts [17] to secondary schools combining haptic interfaces and virtual reality applications [18]. Overall, experience shows that haptic technology improves the level of human perception due to the deeper immersion it provides [19,20].

There are several examples in the literature about the design, implementation, and evaluation of the impact of using visuo-haptic environments with educational purposes, such as frictional forces [21,22,23], the Coriolis effect [8], precession [23], fluids concepts [7], chemical bonding [24], and electric forces [6,25,26]. These studies suggest that embodied learning significantly contributes to a better understanding of abstract concepts dealing with forces. Similarly, some of these studies have administered perception and attitude surveys (e.g., [23]) and found that the use of visuo-haptic simulators stimulates multiple users’ senses, meeting the needs of kinesthetic learners as well as enhancing existing e-learning systems, user engagement, and concept retention [27].

After developing several visuo-haptic simulators for the teaching of physics in OpenGL and Unity environments [6,22,28,29,30], the main contribution of this work is the identification of a methodology to facilitate the conceptualization, design, implementation, and evaluation of visuo-haptic environments aiming to foster the understanding and applications of physics and science concepts by engineering students in order to develop skills within the framework of Education 4.0.

From a search of the related literature, we failed to identify a paper explicitly dealing with the definition of such a methodology. Instead, authors usually start by identifying a possible physics situation related to a given physics curriculum for undergraduate engineering students and then, according to the available haptic devices, school environment, and student populations, identify a programming language and engine to design the visuo-haptic simulator, implement its use, and administer an assessment tool to measure the impact of the haptic simulators on the learning process (e.g., [7,8,21,25,29,31]). To our knowledge, there has not been a general methodological scheme to approach this problem.

3. Authors’ Previous Work

The authors of this work have contributed important efforts in the development of visuo-haptic applications focused on education. In this section, the main results of these studies are summarized, including the scenarios that will be discussed in Section 5 below.

In Neri et al. (2015) [28], three visuo-haptic simulators designed to let students feel the strength and nature of electric forces were presented: point charge, line charge, and plane charge. The purpose of this preliminary study was to compare the dependence of the electric forces on distance for these charge distributions. These simulators were developed with Chai3D and OpenGL. In a perception survey, most undergraduate engineering students expressed a very positive opinion of the visuo-haptic simulators and stated that these facilitated their understanding of the nature and origin of electric forces. Preliminary learning gain studies with experimental and control groups suggested that students from the experimental group achieved a better understanding than those from the control group.

Neri et al. (2018) [22] developed four visuo-haptic environments for understanding classical mechanics concepts: (i) block on a rough incline; (ii) rotating door; (iii) double Atwood’s machine; and (iv) rolling reel. The visuo-haptic simulators were displayed in two dimensions. An architecture for developing the scenarios was presented. The visuo-haptic environments were developed with Chai3D and OpenGL, and similar student perception and learning gain studies were performed. Student perceptions were very positive, and statistically significant learning gains were achieved by experimental group students for the block on a rough incline and the double Atwood’s machine scenarios.

Escobar-Castillejos et al. (2020) [9] proposed a novel architecture for the fast development of interactive visuo-haptic applications in game engines. They validated the architecture by developing and implementing a plugin for coupling the haptic device with Unity, named the Haptic Device Integration for Unity (HaDIU). Their approach achieved remarkably better visualizations than those obtained in existing single-purpose applications. Additionally, their results suggested that interactive visuo-haptic simulators could be developed faster with this approach than with traditional techniques, especially in terms of reducing the time spent on developing the technological model and on the calibration and testing phase of the methodology.

In a recent work, Neri et al. (2020) [6] presented a new version of the visuo-haptic simulators for the electric forces of the preliminary work by [28], which included the new architecture and the HaDIU plugin proposed by [9]. Moreover, several design elements were incorporated to improve the 3D perception of the simulators. A pre-test and post-test were applied to a student sample before and after working with the simulators, respectively, and statistically significant average learning gains were obtained for the comprehension of the force dependence in the case of the line charge and plane charge visuo-haptic environments. These results suggested that the use of the visuo-haptic simulators may help students to better identify the dependence of electric forces on distance. It was also observed that the potential effect of improving the understanding of electric interactions was higher among students with lower previous familiarity with these topics as compared to more advanced students. Additionally, through perception surveys, it was found that the students very much liked the haptic activity.

The aforementioned visuo-haptic developments used diverse architectures and technological approaches requiring diverse amounts of effort and development time. Therefore, considering the experience acquired through designing, developing, implementing, and assessing visuo-haptic environments, the authors decided to propose an integrated, structured, and comprehensive methodology, named VIS-HAPT, covering all the development and implementation phases, in order to help future developers of visuo-haptic environments reduce the time and effort required by the design and implementation of their environments. This is the aim of the present research.

4. The VIS-HAPT Methodology

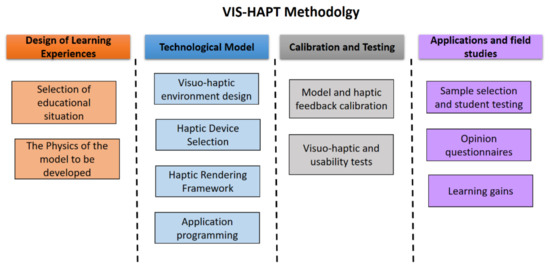

In this section, the VIS-HAPT methodology is described. This methodology was divided into four major phases: (a) design of the learning experience; (b) definition of the technological model; (c) environment calibration and tests; and (d) recommendations for application in a student environment and field studies (Figure 1).

Figure 1. VIS-HAPT methodology. The diagram shows the four major phases of the development of visuo-haptic environments in Education 4.0: (a) design of the learning experience; (b) technological model; (c) calibration and testing; and (d) application and field studies.

4.1. Design of Learning Experiences

The design of virtual learning environments should consider an innovative learning experience that attracts students’ attention. In this phase, the goal is the conceptualization of the visuo-haptic learning environment.

4.1.1. Selection of Educational Situation

Visuo-haptic applications have been successfully applied in various simulators in both physics instruction [6,22,30] and medical training [14,15]. In our case, the educational scenario is selected by first highlighting the main physics concepts in which forces are relevant. It is important to choose ideas that are usually hard to grasp and make them accessible through the visuo-haptic environment. Second, the physical phenomenon to be simulated must be carefully contextualized in a problem. Then, the main variables must be identified, and realistic ranges must be defined. Once all this is set, the student can freely choose the set of input numerical values for the corresponding variables in a particular exercise. Dynamic visualizations of the applied forces lead to a dynamic output that must be analyzed. The following drawbacks were identified in this process in previous developments: (a) lengthy physics scenarios; (b) complex scenarios leading to code that is hard to program; (c) input and output variables that are not properly visualized; and (d) an inadequate deployment of the graphic design.

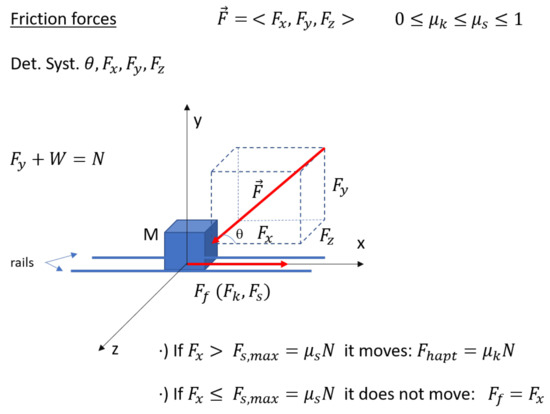

In order to construct a high-quality educational scenario, we suggested the following: (a) add the sense of touch to enrich the learning experience; (b) sketch a draft of the physical phenomena with the corresponding equations (see Figure 2); (c) carefully choose the input and output variables; (d) properly time the length of the simulation; and (e) ensure that the variables are clearly visualized so that the phenomena can be easily and better understood.

Figure 2. Sketch of the displacement of a box in a track when a force is applied with a haptic device. The mass of the box as well as the static and dynamic frictional coefficients can be modified at will.

4.1.2. The Physics of the Model to Be Developed

Once the learning experience is selected, the next step is to model the characteristics and behavior of the bodies that will appear in the environment. Realistic ranges for variables such as the size, mass, and density of the objects, as well as gravity, must all be incorporated. The applied forces, such as weight and friction, must be included in the environment. Another key element is the correct use of the related physical equations.
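As an illustration of this modeling step, the following minimal Python sketch covers the box-on-a-track scenario of Figure 2, assuming a level track, Coulomb friction with separate static and kinetic coefficients, and a semi-implicit Euler integrator. The function names, the value of `G`, and the time step are illustrative assumptions, not the implementation used in the authors' simulators.

```python
G = 9.81  # gravitational acceleration in m/s^2 (assumed value)


def friction_force(applied_force, velocity, mass, mu_static, mu_kinetic, eps=1e-9):
    """Friction force (N) on a box on a level track; positive is along +x."""
    normal = mass * G  # normal force on a horizontal surface
    if abs(velocity) < eps:
        # Box at rest: static friction cancels the push up to mu_static * N.
        if abs(applied_force) <= mu_static * normal:
            return -applied_force
        # The push exceeds the static limit, so kinetic friction takes over.
        direction = 1.0 if applied_force > 0 else -1.0
    else:
        # Box sliding: kinetic friction opposes the direction of motion.
        direction = 1.0 if velocity > 0 else -1.0
    return -mu_kinetic * normal * direction


def step(position, velocity, applied_force, mass, mu_static, mu_kinetic, dt=0.001):
    """Advance the box by one semi-implicit Euler step of dt seconds."""
    friction = friction_force(applied_force, velocity, mass, mu_static, mu_kinetic)
    new_velocity = velocity + (applied_force + friction) / mass * dt
    if velocity * new_velocity < 0:
        # Sign change within one step: stop the box here and let the next
        # step decide whether the applied force restarts the motion.
        new_velocity = 0.0
    return position + new_velocity * dt, new_velocity
```

In a simulator of this kind, `applied_force` would come from the haptic device at every update, and the mass and friction coefficients would be the student-adjustable inputs.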

The identified difficulties include: (a) trivializing the model and thereby taking the challenging part out of the experience; (b) not taking all the possible variable combinations into account in the programmed equations; (c) oversimplifying the realistic scenario to model it more easily in a haptic environment; (d) defining inadequate variable ranges; (e) lengthy simulation times; and (f) not including a wide enough variety of conditions in the model, which gives rise to non-realistic scenarios that may even confuse the students. The worst that can happen is that students leave the learning experience with distorted concepts and misunderstandings.

To overcome the aforementioned difficulties, physics experts must design the learning model. This way, the laws of physics will be carefully incorporated, and the possible realistic alternatives will be provided to the students within the correct variable ranges. The duration must be such that the simulation can be enjoyed and appreciated. The visualization of the physical concepts must be clear, so that the learning experience can go beyond end-of-book problems.

Another good idea is to use physics engines to decrease the modeling efforts required by both the physics and programming. As explained in Section 4.2, it is possible to use physics engines that are already available in development frameworks. For example, the Nvidia PhysX engine can simulate the behavior of an object on the GPU and handle interactions between objects. However, in some versions, the information on the collisions between solid and deformable objects is not available. Therefore, in some environments, it is necessary to program additional libraries to achieve proper collisions between objects [13]. Currently, there are game engines, such as Unity and Unreal, that integrate physics engines to model physical behavior and which can add an attractive visual modeling feature to the environment [9].

4.2. The Definition of the Technological Model

The next design phase should consider the development of the application, the appropriate use of the screen space for interaction, and the integration of haptic devices in the simulation.

4.2.1. Visuo-Haptic Environment Design

Once the model is conceptualized, application developers should design the visuo-haptic environment. In this process, the developers visualize the type of aid that would enhance students’ interactions (a variety of views in the simulation, visual panels where interactions parameters will be displayed, guiding lines to help students locate in the virtual space, etc.). Developers should consider user experience practices to motivate students and engage them in understanding a complex concept or activity.

The identified drawbacks in this process include: (a) user manuals or guides are not provided, so students cannot take full advantage of the simulator; (b) some of the visualization spaces are either too small or too big; (c) a lack of auxiliary elements that can enrich the representation of the phenomena; and (d) too many significant figures in the output variables, which blurs the physical meaning of the solution.

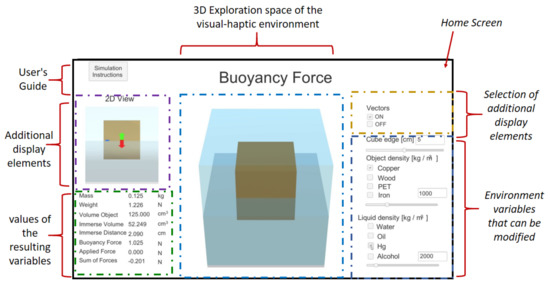

The authors strongly recommend carefully considering which simulation parameters can be controlled by the students (size, mass, gravity, density) and where to place them on the screen. Allowing students to modify environmental variables can increase interactivity. Consequently, student engagement will last much longer, leading to improved learning outcomes. Figure 3 shows an example of the interface design for a visuo-haptic simulator intended to teach the buoyancy phenomenon.

Figure 3. Example of an interface design in a visuo-haptic environment focused on teaching the buoyancy concept.
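To make the role of the student-controlled parameters concrete, the following Python sketch computes the net vertical force on an upright cube in a fluid using Archimedes' principle. The cube geometry, the function names, and the value of `G` are assumptions chosen for illustration; the source does not specify the model behind the buoyancy simulator.

```python
G = 9.81  # gravitational acceleration in m/s^2 (assumed value)


def submerged_volume(side, depth_of_bottom):
    """Volume (m^3) of an upright cube that lies below the fluid surface.

    depth_of_bottom is how far the bottom face is below the surface (m);
    a negative value means the cube is entirely above the fluid.
    """
    submerged_height = min(max(depth_of_bottom, 0.0), side)
    return side * side * submerged_height


def net_vertical_force(side, body_density, fluid_density, depth_of_bottom):
    """Buoyant force minus weight (N); positive values point upward."""
    weight = body_density * side ** 3 * G
    buoyancy = fluid_density * G * submerged_volume(side, depth_of_bottom)
    return buoyancy - weight
```

A force of this kind could be sent to the haptic device as the vertical feedback while the student changes the size, density, or gravity values from the interface panel.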

4.2.2. Haptic Device Selection

Once the visual design and the selection of the interaction are established, the developers and physics experts should decide which haptic device is best suited as the interaction medium between the simulator and the user. Haptic devices are electromechanical tools used to recreate the sense of touch in virtual environments (Figure 4). These can be either impedance-based or admittance-based [32]. In the first case, the device sends the user's movements to the simulator, and the application is responsible for calculating the feedback force. In admittance-based haptic devices, on the other hand, the user applies a force on the device, which reacts by moving and sends a proportional displacement to the application to update the visuals in the simulation. In general, impedance-based haptic devices are preferred due to their lower cost and smaller size. However, it must be noted that admittance-based haptic devices provide freedom in the mechanical design and deliver greater force and stiffness feedback.

Figure 4. Novint Falcon haptic device used in interactive visuo-haptic environments.
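The difference between the two device types described above can be sketched in a few lines of Python. This is a one-dimensional illustration under stated assumptions: the virtual wall, the penalty-spring stiffness, and the compliance constant are hypothetical values, and no real device API is used.

```python
def impedance_step(device_position, wall_position=0.0, stiffness=800.0):
    """Impedance scheme: position in, force out.

    The application reads the device position (m) and returns the feedback
    force (N). Here a virtual wall fills the region x < wall_position and
    pushes back like a spring (a common penalty method, assumed here).
    """
    penetration = wall_position - device_position
    return stiffness * penetration if penetration > 0 else 0.0


def admittance_step(measured_force, position, compliance=0.001):
    """Admittance scheme: force in, displacement out.

    The device measures the force applied by the user (N) and moves by a
    displacement proportional to it; the new position (m) is what the
    application uses to update the visuals.
    """
    return position + compliance * measured_force
```

In the impedance case the simulator owns the force computation, which is why the physics model of the previous phase maps directly onto the haptic loop.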

Haptic devices can also be classified by the number of degrees of freedom and degrees of force feedback they provide. Commercial haptic devices usually offer three or six degrees of freedom; however, low-cost devices typically provide only three degrees of force feedback. A haptic device with six degrees of force feedback is required if torque is also to be modeled. This specification is principally required in visuo-haptic simulations used in the medical field [14].

Several obstacles must be overcome when selecting appropriate haptic devices. For example, the devices may need to be imported in some countries, where additional customs fees apply and the entire design process is considerably delayed. Maintenance is not provided by dealers or manufacturers in all countries. Additionally, if a certain educational scenario requires more than three degrees of freedom, it is difficult to modify the experimental setting to include them.

A careful selection of the brand and model is strongly suggested to avoid unnecessary setbacks in meeting the simulator requirements. One should first look into academic agreements to reduce costs. It is advisable to avoid customs fees where possible, and it is strongly recommended to ensure that maintenance services are available.

The use of haptic devices has considerably increased in the last decade in education, gaming, and training (automotive, aviation, medicine, robotics, etc.). The Novint Falcon devices have the benefit of being low-cost devices with good expansion capabilities. Their design is based on the high-end OMEGA haptic device, developed by Force Dimension. However, Novint Technologies has been out of business since 2010; since then, Force Dimension has continued to provide software support for these devices, and stores, such as haptic houses, have continued the commercialization of these types of devices [33].

4.2.3. Haptic Rendering Framework

Haptic rendering is the process by which the user can feel, touch, and manipulate virtual objects through a haptic interface [32]. In haptic-based simulators, the user interacts with the environment using a joystick. To enable haptic rendering, a rendering framework is needed so that applications can communicate with haptic devices. Currently, there are four solutions for haptic rendering:

- H3DAPI, previously known as HAPI, is an open source, cross-platform haptics rendering engine entirely written in C++. It is device-independent and supports multiple currently available commercial haptics devices;

- OpenHaptics is a proprietary haptics library developed by SensAble Technologies, currently known as Geomagic. However, it only works with haptics that are manufactured by them;

- Haptic Lab, previously known as Immersion Studio, is an application aimed at software design, where users can add multi-layer, high-fidelity haptic feedback with ease using TouchSense Force technology;

- HDAL is a software development kit for connecting Novint products. It enables users to add the haptic capability to their application and display high-fidelity force rendering.

Implementing the library that provides correct connectivity between the application and the haptic device is a pitfall that must be overcome. Generally, haptic device manufacturers provide an SDK to allow haptic representation in virtual environments. However, game engines do not have native functions that can easily accommodate them. Third-party solutions are therefore required, and these heavily depend on the CPU architecture and do not consider the operability of multiple devices. Moreover, creating a visuo-haptic solution usually takes a long time due to the complexity of graphics engines that can deliver state-of-the-art visualizations.

To solve some of the aforementioned difficulties, the HaDIU architecture was designed [9]. It allows the successful development of multiple-device connectivity in game engines, without the performance of the application being affected by the multiple connections. The goal is a functional blending of different environments that accounts for the connectivity of each device and optimizes the development times of visuo-haptic applications.

4.2.4. Application Programming

Once these requirements are considered, developers need to decide how the visuo-haptic environment can be best programmed. There are diverse programming languages that can be used to program 2D and 3D applications (C++, C#, Java, Python, etc.). Work on incorporating haptic devices in simulation frameworks can be found in the research of Conti et al. [34]. They created Chai3D, a C++ cross-platform graphic simulation framework. It is open source and supports a variety of commercially available haptic devices. Moreover, efforts focused on incorporating haptic devices in game engines can be found in the work of Escobar-Castillejos et al., where the authors added the connectivity of haptic devices (Novint Falcon, Omega, and Geomagic Touch) with the Unity game engine [9]. The latter is an example of how current approaches are considering the use of game engines for the development of visuo-haptic simulators. Game engines are software applications that facilitate the implementation of graphics rendering, collision detection, and physics simulation, which allows the developer to focus on the game logic and interactions.

Before selecting the haptic rendering module, researchers should select which technology they will use in the visual rendering and visuo-haptic environment modules. As stated above, game engines are great tools for the development of high-end applications such as games and simulations. In the case of Unity, it is a cross-platform game engine developed by Unity Technologies. It can be used for 2D or 3D graphics applications, as well as interactive virtual environments. This game engine is recommended for its ease of use and scripting language, which facilitates the definition of the simulated elements’ behaviors.

Finally, the HaDIU Unity plugin is recommended as the haptic rendering module in the pipeline [9]. This plugin enables the proper connection between a haptic device and the Unity game engine. A challenge that researchers face during the development of visuo-haptic applications is the proper addition of haptic devices in the simulation (see Section 4.2.2). Developers should consider that, for haptic devices to work properly, they need to be updated at a minimum rate of 1000 Hz to avoid anomalies in their performance. This plugin has been tested and used to develop electromagnetism simulators, in which it achieved proper and stable interactions between the user and the visuo-haptic virtual environment [6].
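The 1000 Hz requirement implies that force computation must run much more often than the visual refresh. The following Python sketch simulates the schedule of such a decoupled loop with integer microsecond ticks instead of wall-clock time. The 60 Hz visual rate and the callback names are assumptions for illustration; a real application would typically run the haptic loop in a dedicated high-priority thread provided by the device SDK or plugin.

```python
HAPTIC_RATE_HZ = 1000  # minimum haptic update rate stated in the text
VISUAL_RATE_HZ = 60    # assumed display refresh rate


def run_loops(duration_s, compute_force, update_visuals):
    """Simulate a 1 kHz haptic loop with a slower, decoupled visual update.

    compute_force(t) is called on every haptic tick; update_visuals(t) is
    called only when a visual frame is due. Returns the number of haptic
    and visual updates performed.
    """
    haptic_period_us = 1_000_000 // HAPTIC_RATE_HZ  # 1000 microseconds
    visual_period_us = 1_000_000 // VISUAL_RATE_HZ  # 16666 microseconds
    haptic_updates = 0
    visual_updates = 0
    next_visual_us = 0
    for t_us in range(0, int(duration_s * 1_000_000), haptic_period_us):
        compute_force(t_us / 1e6)  # must be cheap: it runs every millisecond
        haptic_updates += 1
        if t_us >= next_visual_us:
            update_visuals(t_us / 1e6)
            visual_updates += 1
            next_visual_us += visual_period_us
    return haptic_updates, visual_updates
```

The design point is that any physics evaluated inside `compute_force` has roughly one millisecond of budget, which is one reason oversized scenes or heavy collision queries can destabilize the force feedback.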

4.3. The Calibration of the Environment and Tests

In phase three of the methodology, developers and physics experts need to test the preliminary version of the visuo-haptic simulator.

4.3.1. Model and Haptic Feedback Calibration

Physics experts need to test the simulator to validate that the values calculated by the simulator are close or equal to the force feedback that the student will feel. Moreover, in this step, developers and physics experts need to test how the haptic device reacts according to the proposed physics model.

If the feedback forces’ response is not correctly calibrated in the haptic device, instability in the simulation may occur. If the defined variables are within an unrealistic range, the entire learning activity will be counterproductive. It is strongly recommended to perform several tests, and let developers establish the limits in the simulation (removing interaction variables, setting fixed values to physics parameters, or setting a range of values to physics variables) to avoid instabilities during the simulation.
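One simple way to apply the recommended limits is to clamp both the student-set parameters and the force sent to the device. The Python sketch below is a minimal illustration; the function names are hypothetical, and the maximum force must be taken from the specifications of the device actually used.

```python
import math


def clamp_parameter(value, lower, upper):
    """Restrict a student-set physics parameter to its calibrated range."""
    return min(max(value, lower), upper)


def clamp_force(force, max_magnitude):
    """Scale a 3D force vector so its magnitude never exceeds max_magnitude (N).

    The direction of the force is preserved; only its size is reduced.
    """
    magnitude = math.sqrt(sum(c * c for c in force))
    if magnitude <= max_magnitude:
        return tuple(force)
    scale = max_magnitude / magnitude
    return tuple(c * scale for c in force)
```

Scaling the vector rather than clamping each component separately keeps the direction of the rendered force consistent with the physics model.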

4.3.2. Visuo-Haptic and Usability Tests

Usability tests measure the ease of use of the set of objects or elements in the interface design. They consist of selecting a group of users (average students) and asking them to use the visuo-haptic environment to carry out the tasks for which it is designed. In addition to calibrating the behavior of the bodies according to the physical model designed by the experts, it is important to carry out usability tests with these representative users [35]. In this step, the design and development team takes note of the interactions, errors, and difficulties users may encounter.

The usability metrics successfully used by the authors’ team in visuo-haptic environments include: (a) the accuracy in identifying the mistakes made by the test students and whether these were recoverable when using the appropriate data or procedures; (b) the time required to complete the activity; and (c) the student memory, to track how much the student remembers during a given period without using the environment.

4.4. Recommendations for Application in a Student Environment and Field Studies

Once the visuo-haptic environment is mature—that is, it behaves without errors, complying with the laws of physics according to the models designed by the experts and with the appropriate usability metrics—its application in real learning environments must be carefully implemented to identify the benefits for the students.

The authors propose the following aspects for the assessment: (a) sample selection considering the control and experimental groups for student testing; (b) administration of opinion questionnaires; and (c) measurement of student learning gains.

4.4.1. Sample Selection and Student Testing

Student control and experimental groups are needed to measure the benefits of using the visuo-haptic environments for students to acquire knowledge and develop reasoning skills. The experimental group uses the visuo-haptic environment while the control group does not. One of the difficulties faced is that, in general, the processes for registering groups and assigning teachers do not depend on researchers. If this is the case, the allocation of participants is carried out using quasi-experimental designs [36]. It is recommended to deliberately manipulate at least one independent variable in order to observe its effect on, and its relationship with, one or more dependent variables. Additionally, when defining the number of experimental and control groups, it is important to consider the equivalence of the didactic resources given to each group, such as the content, audiovisual materials, labs, and lecture time.

4.4.2. Opinion Questionnaires

Opinion questionnaires are instruments used to solicit personal comments and points of view; in this case, they are applied to students to gather their views on the design and use of the visuo-haptic environments. They should be administered after the students have used the environment and should ask about its different cognitive and functional aspects. The questionnaires usually contain closed (multiple-choice) questions on a Likert scale, which facilitates their processing, and open questions to elicit the students’ points of view about specific aspects of the environment and their interaction with it. They typically include questions related to the general usability of the system, its simplicity, and how faithfully the system reproduces the real world. Usually, the results of opinion tests can be qualitatively evaluated. Once the opinion questionnaires have been designed and applied, we recommend computing Cronbach’s alpha to check the internal consistency (reliability) of the instrument.
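As an illustration of the reliability check recommended above, the following minimal sketch computes Cronbach's alpha with the Python standard library. The function name `cronbach_alpha` and the response matrix are hypothetical and are not taken from the authors' instruments; a statistics package could be used instead.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("at least two items are required")
    # Variance of each item (column) across respondents.
    item_vars = [pvariance([row[i] for row in responses]) for i in range(n_items)]
    # Variance of the total score obtained by each respondent.
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (n_items / (n_items - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical three-point Likert responses (rows: students, columns: items).
sample = [
    [3, 3, 2, 3],
    [2, 2, 2, 2],
    [3, 3, 3, 3],
    [1, 2, 1, 2],
    [3, 2, 3, 3],
]
alpha = cronbach_alpha(sample)
print(f"Cronbach's alpha = {alpha:.3f}")
```

Values of alpha close to 1 indicate that the items vary together across respondents; the threshold regarded as acceptable depends on the field and is not prescribed here.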

4.4.3. Learning Gains

Learning gains are used to test whether the use of the visuo-haptic environment has a positive effect on student outcomes. First, a pre-test is administered to both the experimental and control groups. The pre-test is an indicator of the students’ prior knowledge about a given subject. The experimental group then interacts with the visuo-haptic environment, while the control group is given traditional content. Finally, the post-test is administered to the whole student sample. To measure the effectiveness of the applied methodology in the student learning process, it is useful to define an absolute learning gain, $G_{abs} = \langle Post \rangle - \langle Pre \rangle$, where $\langle Post \rangle$ and $\langle Pre \rangle$ are the average post-test and average pre-test scores for either the experimental or control groups. Additionally, a relative learning gain may be calculated [37], such that $G_{rel} = (\langle Post \rangle - \langle Pre \rangle)/(100 - \langle Pre \rangle)$, with scores expressed on a 0 to 100 scale. For a given student, the relative learning gain is the actual student learning gain relative to the maximum student learning gain they may attain.
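The two gains described above can be sketched in a few lines of Python. This is only an illustration: the function names and the scores are hypothetical, and a maximum score of 100 is assumed.

```python
def absolute_gain(pre, post):
    """Absolute learning gain: post-test score minus pre-test score."""
    return post - pre


def relative_gain(pre, post, max_score=100.0):
    """Relative (normalized) gain: actual gain over the maximum attainable gain."""
    if pre >= max_score:
        raise ValueError("pre-test score leaves no room for improvement")
    return (post - pre) / (max_score - pre)


# Hypothetical group averages on a 0-100 scale.
pre_avg, post_avg = 40.0, 70.0
g_abs = absolute_gain(pre_avg, post_avg)   # 30 points gained
g_rel = relative_gain(pre_avg, post_avg)   # half of the possible 60 points
print(g_abs, g_rel)
```

Because the relative gain is normalized by the room left for improvement, it allows groups with different pre-test averages to be compared on a common scale.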

For the results to be meaningful, we recommend that during the process (a) the sample is as large as possible, for both the experimental and control groups; (b) the same professor teaches both the experimental and control groups, to mitigate as much as possible the “teacher effect”; and (c) the pre-test and post-test are administered under similar and controlled conditions to both groups.

We also suggest validating the significance of the results by means of t-test and p-value analyses, having defined null ($H_0$) and alternative ($H_1$) hypotheses, or by the effect size, considering the average gains and their standard deviations [38,39].
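A minimal sketch of the two quantities mentioned above, using only the Python standard library, is given next. It computes Cohen's d with a pooled standard deviation and a Welch-type t statistic for two independent groups; the gain values are hypothetical, and obtaining a p-value from the t statistic would additionally require the t distribution from a statistics package.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(experimental, control):
    """Effect size: difference of means divided by the pooled standard deviation."""
    n1, n2 = len(experimental), len(control)
    s1, s2 = stdev(experimental), stdev(control)
    pooled = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(experimental) - mean(control)) / pooled


def welch_t(experimental, control):
    """t statistic for two independent samples with possibly unequal variances."""
    n1, n2 = len(experimental), len(control)
    standard_error = sqrt(stdev(experimental) ** 2 / n1 + stdev(control) ** 2 / n2)
    return (mean(experimental) - mean(control)) / standard_error


# Hypothetical learning gains per student (illustration only).
gains_experimental = [30.0, 40.0, 50.0]
gains_control = [10.0, 20.0, 30.0]
d = cohens_d(gains_experimental, gains_control)
t = welch_t(gains_experimental, gains_control)
print(f"d = {d:.2f}, t = {t:.3f}")
```

Reporting the effect size alongside the p-value is useful because the former does not shrink or grow with the sample size in the way the latter does.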

5. VIS-HAPT Methodology, Results and Discussion

To determine the usefulness of the VIS-HAPT methodology, various development studies of visuo-haptic environments were carried out with and without the proposed methodology.

5.1. Description of the Selected Visuo-Haptic Environments

The four selected visuo-haptic environments common to both cases—with and without methodology—are described as follows:

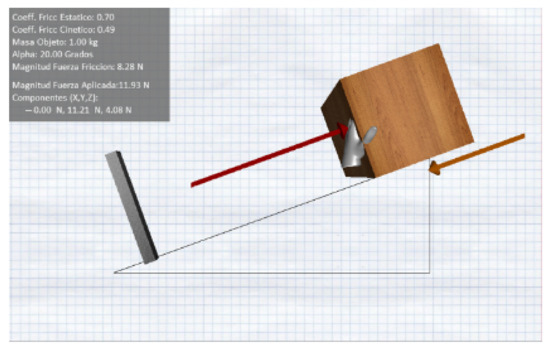

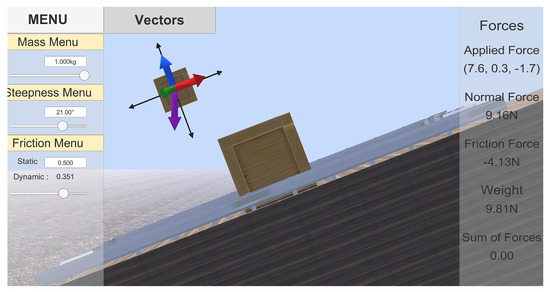

- Block on a rough incline. The main purpose of this scenario is for students to understand the nature of the friction force that a rough incline exerts on a block located on it (Figure 5 and Figure 6). The user manipulates a virtual hand or a haptic sphere to push the block up or down the incline with an applied force, so as to feel the actual force that the block exerts on the virtual hand or the haptic sphere. The simulator reproduces the block’s resulting motion. The user can select the values of the static and kinetic friction coefficients, the block’s mass, and the angle of the incline.

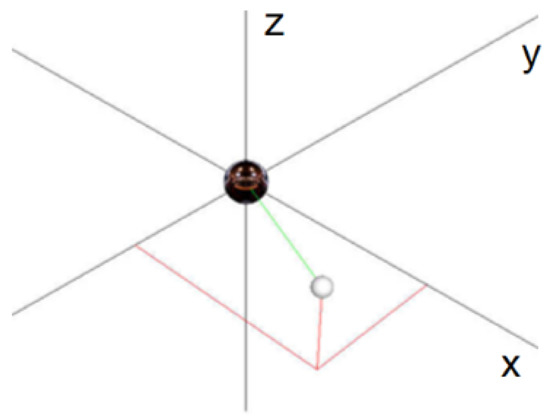

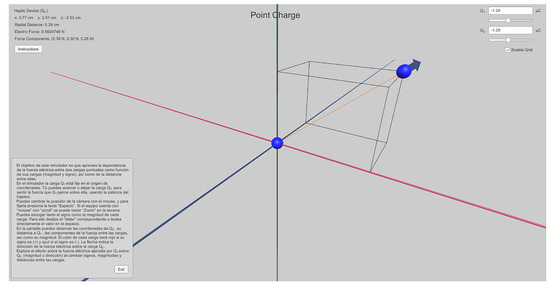

- Point charge. This simulator allows exploring the electric force exerted between two point charges (Figure 7 and Figure 8). A point charge is fixed at the origin of the reference frame and another point charge can be displaced around the former by the user, changing the distance between the charges. The magnitudes and signs of the charges can be changed by the user. The purpose of the simulator is for the user to feel the strength of the attractive or repulsive force that the charge at the origin exerts on the other one at different distances and for different charge magnitudes.

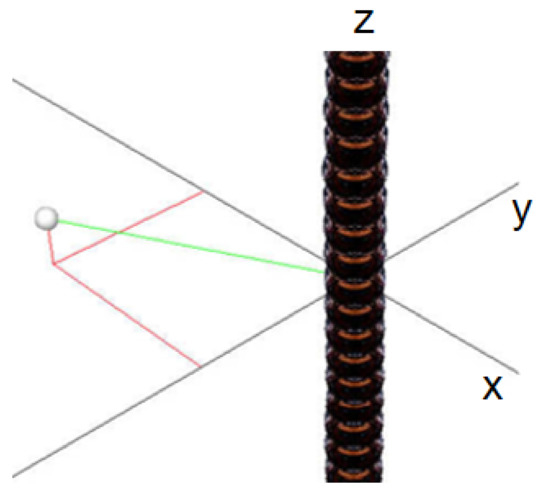

- Line charge. This simulator is aimed at assessing the direction and strength of the electric force exerted by a long straight-line charge on a point charge (Figure 9 and Figure 10). The line charge is fixed along the z axis and the point charge can be moved to any desired position around the line charge. Users can select the values and signs of both the linear charge density and the point charge, so as to feel the force that the line charge exerts on the point charge at different distances and for different charge magnitudes.

- Plane charge. In this simulator, the force exerted by a very large plane charge on a test point charge is explored (Figure 11 and Figure 12). The plane charge is fixed in the YZ plane, and the users can select the values and signs of the plane charge density and the point charge. By moving the point charge to any desired position around the plane in the environment, the users can feel the strength and direction of the electric force exerted by the plane charge on the point charge at different distances and for different charge magnitudes.
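The three electric-force scenarios listed above can be illustrated with the textbook expressions for the force on a point charge. The sketch below is not the authors' simulator code: it assumes ideal geometries (an infinitely long line along the z axis and an infinite plane at x = 0), SI units, and hypothetical function names.

```python
from math import pi, sqrt

EPS0 = 8.8541878128e-12        # vacuum permittivity, F/m
K = 1.0 / (4.0 * pi * EPS0)    # Coulomb constant, N m^2 / C^2


def force_point_charge(q_fixed, q_mobile, pos):
    """Coulomb force (N) on q_mobile at pos = (x, y, z) due to q_fixed at the origin."""
    x, y, z = pos
    r = sqrt(x * x + y * y + z * z)
    if r == 0:
        raise ValueError("charges cannot overlap")
    scale = K * q_fixed * q_mobile / r ** 3
    return (scale * x, scale * y, scale * z)


def force_line_charge(lam, q, pos):
    """Force (N) on q due to an infinite line along z with linear density lam (C/m)."""
    x, y, _ = pos
    rho_sq = x * x + y * y          # squared distance to the z axis
    if rho_sq == 0:
        raise ValueError("point charge lies on the line")
    scale = lam * q / (2.0 * pi * EPS0 * rho_sq)
    return (scale * x, scale * y, 0.0)


def force_plane_charge(sigma, q, pos):
    """Force (N) on q due to an infinite plane at x = 0 with surface density sigma (C/m^2)."""
    x = pos[0]
    if x == 0:
        raise ValueError("point charge lies on the plane")
    side = 1.0 if x > 0 else -1.0   # the force points away from the plane for like signs
    return (side * sigma * q / (2.0 * EPS0), 0.0, 0.0)


# Two 1 microcoulomb charges separated by 1 m along x.
print(force_point_charge(1e-6, 1e-6, (1.0, 0.0, 0.0)))
```

In these idealized models the force decays as the inverse square of the distance for the point charge, as the inverse of the distance for the line charge, and does not depend on the distance for the plane charge, which is the contrast the three environments let students feel.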

5.2. Technological Model Developments

A comparison of the technological model developments with and without VIS-HAPT methodology is presented to emphasize the affordances and the improvements introduced with the methodology. To make this comparison more visual and direct, the main characteristics of the two versions of the four selected visuo-haptic simulators are described below.

5.2.1. Block on a Rough Incline

Without VIS-HAPT methodology: The development of this environment using Chai 3D—OpenGL, without applying the proposed methodology, has been reported along with other developments by [22]. Figure 5 shows the application interface. A 2D visualization was displayed with a virtual hand used to apply the external force (red arrow). The corresponding friction force is shown by the brown arrow. A box was included to enter the physical data: block mass; friction coefficient (static or kinetic); and incline angle. The box also displays the values for the applied force and the corresponding friction force.

Figure 5.

Interface elements of the “block on a rough incline” visuo-haptic environment developed with Chai 3D—OpenGL.

With VIS-HAPT Methodology + HaDIU: The graphical interface was improved with a 3D display. Additionally, several elements were integrated into the visualization, such as the option to show or hide the block’s free-body diagram. The interaction scheme was also improved with sliding bars and input fields for directly entering the values of the physical variables: block mass; friction coefficient (static or kinetic); and incline angle (shown in the left panel). The applied force, the friction force, and the normal force are displayed on the right panel.

Figure 6.

Interface elements for the “block on a rough incline” visuo-haptic environment developed with VIS-HAPT and HaDIU.
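The forces displayed in this environment can be related through the standard model of a block on a rough incline. The following sketch is an assumption-based illustration rather than the simulator's implementation: the block is taken to start at rest, the applied force acts along the incline (positive up the slope), and the function name and dictionary keys are hypothetical.

```python
from math import cos, radians, sin

G = 9.81  # gravitational acceleration, m/s^2


def incline_state(mass, angle_deg, mu_s, mu_k, applied):
    """Normal force, friction force, and acceleration of a block initially at rest.

    Forces along the incline are positive when they point up the slope.
    """
    theta = radians(angle_deg)
    normal = mass * G * cos(theta)
    # Net force along the incline before friction is taken into account.
    driving = applied - mass * G * sin(theta)
    if abs(driving) <= mu_s * normal:
        # Static friction balances the driving force and the block stays at rest.
        return {"normal": normal, "friction": -driving,
                "acceleration": 0.0, "moving": False}
    # Kinetic friction opposes the direction in which the block starts to move.
    direction = 1.0 if driving > 0 else -1.0
    friction = -direction * mu_k * normal
    return {"normal": normal, "friction": friction,
            "acceleration": (driving + friction) / mass, "moving": True}


# Hypothetical values: 2 kg block, 30 degree incline, mu_s = 0.6, mu_k = 0.4.
at_rest = incline_state(2.0, 30.0, 0.6, 0.4, applied=0.0)
pushed = incline_state(2.0, 30.0, 0.6, 0.4, applied=30.0)
print(at_rest["moving"], pushed["moving"])
```

The sketch makes the distinction between the two coefficients explicit: the static coefficient only sets the threshold for motion, whereas the kinetic coefficient sets the friction force once the block slides.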

5.2.2. Point Charge

Without VIS-HAPT methodology: The development of this environment using Chai 3D—OpenGL, without applying the proposed methodology, was reported by [28]. Guiding lines and the line connecting the charge at the origin of the coordinate system and the movable point charge are included to provide a 3D visualization. Figure 7 shows the corresponding application interface.

Figure 7.

Interface elements of the “point charge” visuo-haptic environment developed with Chai 3D—OpenGL.

With VIS-HAPT Methodology + HaDIU: This visuo-haptic simulator was previously presented in [6]. For the electric force scenarios, the graphical interface was improved with a more realistic 3D visualization, including guiding lines, the line connecting the two charges, and the electric force vector on the mobile point charge, whose magnitude changes according to the strength of the force between the charges. The students’ learning experience was improved by including on-screen user guides available to them when required. Additionally, the possibility of changing the reference axes and fixing them at different positions was included to help users choose a better visual perspective. Input fields to directly enter the values of the physical variables and sliding bars were also added. The values for the distance between charges and the magnitude and components of the electric force exerted on the mobile charge are also displayed. Figure 8 shows the corresponding application interface.

Figure 8.

Interface elements of the point charge visuo-haptic environment developed with VIS-HAPT and HaDIU architecture.

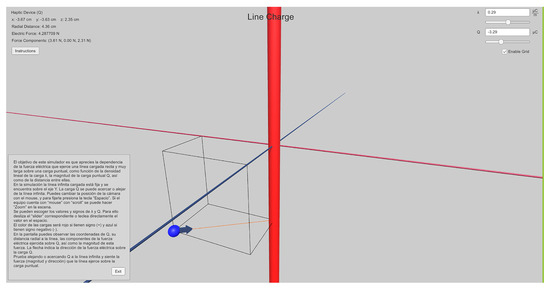

5.2.3. Line Charge

Without VIS-HAPT methodology: The development of this environment using Chai 3D—OpenGL, without applying the proposed methodology, was reported by [28]. In a similar way to the point charge visuo-haptic simulator, guiding lines and the line connecting the line charge and the movable point charge are included to provide a 3D visualization. Figure 9 shows the corresponding application interface.

Figure 9.

Interface elements of the “line charge” visuo-haptic environment developed with Chai 3D—OpenGL.

With VIS-HAPT Methodology + HaDIU: This visuo-haptic simulator was previously presented in [6]. As in the case of the point charge environment above, the graphical interface was also improved with a more realistic 3D visualization, including guiding lines, the line connecting the line charge and the mobile point charge, and the electric force vector on the point charge, with changing magnitude according to the distance between the line and point charges. The students’ learning experience was improved by including on-screen user guides available to them when required. Additionally, the possibility of changing the reference axes and fixing them at different positions was included to help users choose a better visual perspective. Spaces to directly enter the values for physical variables and sliding bars were also added. The values for the distance between line and point charges, and the magnitude and components of the electric force exerted on the mobile charge, are displayed on the screen. Figure 10 shows the corresponding application interface.

Figure 10.

Interface elements of the line charge visuo-haptic environment developed with VIS-HAPT and HaDIU architecture.

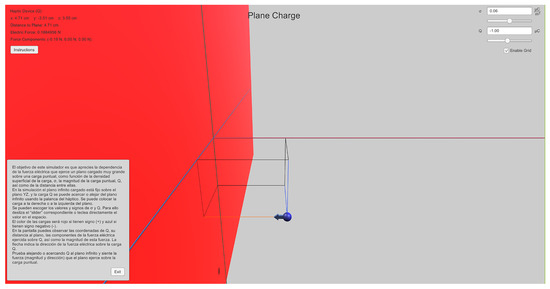

5.2.4. Plane Charge

Without VIS-HAPT methodology: The development of this environment using Chai 3D and OpenGL, without applying the proposed methodology, was reported by [28]. The plane charge is seen edge-on, shown as a line on the XZ plane, thus providing a 2D visualization of the scenario. Guiding lines show the distance of the point charge to the plane charge and to the XY plane. Figure 11 shows the corresponding application interface.

Figure 11.

Interface elements of the “plane charge” visuo-haptic environment developed with Chai 3D—OpenGL.

With VIS-HAPT Methodology + HaDIU: This visuo-haptic simulator was also previously presented in [6]. The graphical interface was improved with a more realistic 3D visualization. The plane charge is presented in perspective, guiding lines are included, and the electric force vector on the mobile point charge is shown, with its magnitude changing according to the distance between the plane and point charges. The students’ learning experience was improved by including on-screen user guides available to them when required. Input fields to directly enter the values of the physical variables and sliding bars were also added. The values for the distance between the plane and point charges, and the magnitude and components of the electric force exerted on the mobile charge, are displayed on the screen. Figure 12 shows the corresponding application interface.

Figure 12.

Interface elements of the plane charge visuo-haptic environment developed with VIS-HAPT and HaDIU architecture.

5.3. Comparative Study of the Times Used for Each Phase with and without VIS-HAPT Methodology

5.3.1. Reported Times without and with Methodology

The times recorded (in hours) for the different phases—with and without applying the proposed methodology—were estimated from a weekly log in which the times employed in each stage by the developers and researchers were carefully recorded, as required by our institution. The recorded times for the different phases considered the following aspects:

- Learning experience design. The time dedicated in this phase includes: (a) the number of sessions and hours spent on selecting each educational scenario; (b) the time needed to design the contextualized physical phenomenon; (c) the time spent on the design of the visualization elements; and (d) the time invested in deriving the physical model needed to build the visuo-haptic environment;

- Definition of the technological model. In this phase, we consider (a) the time taken to prepare the visuo-haptic design; (b) the time of the sessions dedicated to defining the visuo-haptic device and programming its connections to the environment; (c) the time invested to enable the haptic rendering; and (d) the time of the application programming.

- Calibration and testing. The time recorded for this phase includes: (a) the time for planning and executing the tests with model students; (b) the time needed to recalibrate the models; and (c) the time spent in the planning, design, and application of the usability tests.

- Applications and field studies. This phase includes (a) the recorded planning times for the implementation of the field studies with experimental and control groups; (b) the time needed to design the activity guides given to the students during the learning experience; (c) the time needed to design and administer the pre-test and post-test; (d) the time needed to design and administer the opinion surveys; and (e) the time taken to analyze the data and results to measure learning gains and effect sizes.

The reported times for phases 1 and 4 were estimated from the hours registered in our session logs, considering the number of working sessions held by our team during the learning experience design, as well as the sessions needed to carry out the field studies of the visuo-haptic applications with the students.

On the other hand, the times reported in phases 2 and 3 were taken from [9] and were used here to compare the Chai3D + OpenGL framework with the HaDIU plugin for each visuo-haptic simulator (as can be seen in Table 1 below), along with phases 1 and 4 included in the present work.

Table 1.

Times used for the development of the different phases of the visuo-haptic environments using the VIS-HAPT and HaDIU architecture. Data marked with * were published by [9].

Table 1 and Table 2 show the recorded times (in hours) for the different phases with and without applying the proposed methodology, respectively.

Table 2.

Times used for the development of the different phases of the visuo-haptic environments without VIS-HAPT methodology. Data marked with * were reported in [9].

Comparing the times in Table 1 and Table 2, the methodology helped to substantially reduce these times in all four phases. The average times dedicated to developing and implementing the visuo-haptic environments for these phases were reduced by 42%, 69%, 26% and 27%, respectively, with an average reduction of 44% compared to the cases without methodology. Therefore, the obtained times with the VIS-HAPT methodology are significantly smaller than those obtained without following the phases of the methodology.

It is important to recognize that this remarkable time reduction may also be due in part to the knowledge acquired about the technological models of the visuo-haptic environments, as well as to the experience gained by the authors in the application and field studies phase.

Our previous developments and publications (Section 3) were mainly focused on educational field studies to determine learning gains and student perception. Nevertheless, we do not include a comparative learning-gain study in the present work since we have not had the appropriate conditions during the application and field study processes, such as: (a) appropriate student samples; (b) comparable student conditions; and (c) comparable haptic device conditions.

5.3.2. Significance Test for Time Differences with and without Methodology

A paired-samples hypothesis test was carried out to explore whether there is a statistically significant difference in the times used in each phase with and without the methodology, with a null hypothesis ($H_0$) and an alternative hypothesis ($H_1$). The results are shown in Table 3.

Table 3.

Hypothesis test of the paired samples of reported times with and without VIS-HAPT methodology.

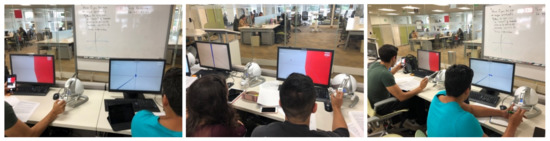
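To illustrate how a paired-samples test of this kind works, the sketch below computes the paired t statistic for one phase across four environments. The hours are hypothetical placeholders, not the values reported in Table 1 and Table 2, and the comparison against a two-sided critical value at the 0.05 level is an assumption about the test setup.

```python
from math import sqrt
from statistics import mean, stdev

# Two-sided critical value of Student's t for 3 degrees of freedom at alpha = 0.05.
CRITICAL_T_DF3 = 3.182


def paired_t(without_method, with_method):
    """Paired t statistic and degrees of freedom for matched measurements."""
    diffs = [a - b for a, b in zip(without_method, with_method)]
    n = len(diffs)
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("all paired differences are identical")
    return mean(diffs) / (sd / sqrt(n)), n - 1


# Hypothetical hours for one phase in four environments (illustration only).
hours_without = [40.0, 36.0, 44.0, 40.0]
hours_with = [24.0, 22.0, 25.0, 25.0]
t_stat, dof = paired_t(hours_without, hours_with)
print(f"t = {t_stat:.2f}, df = {dof}, significant = {abs(t_stat) > CRITICAL_T_DF3}")
```

Pairing by environment removes the variability between scenarios from the comparison, which matters when only four pairs are available.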

5.3.3. Student Perception Surveys

In addition to recording times for the design, development, calibration and testing, as well as application and field studies, usability studies for the visuo-haptic environments were also performed based on students’ perception questionnaires after experimenting with the visuo-haptic simulators. Figure 13 shows some images of students working with the visuo-haptic simulators of electrical forces in the laboratory.

Figure 13.

Students interacting with the visuo-haptic environments of electrical forces.

The questions of the instrument used to study students’ perceptions are presented in Table 4. A three-point Likert scale was used, where 1 = disagreement or total disagreement; 2 = neutral; and 3 = agreement or total agreement.

Table 4.

Questions on the exit perception survey for electric force simulators, adapted from [28] without methodology and [6] with the proposed methodology.

From Table 4, students’ perceptions of the use of the electric force visuo-haptic simulators were very positive for all questions and for the two approaches, without methodology or with methodology. Furthermore, a closer look at the values reveals that the students’ perceptions were even better for the case with the proposed methodology. In this latter case, no student rated any question with a score of “1”, and more than 90% of them assigned “3” to all items.

A hypothesis test for the comparison of proportions, using Fisher’s exact method, was carried out with Minitab for the results of Table 4. The results are presented in Table 5, with null ($H_0$) and alternative ($H_1$) hypotheses. In all cases, the proportion of students who answered “agreement or total agreement” was higher for the environments developed with the methodology than for those developed without it. However, the difference was only significant at the level of $\alpha$ = 0.05 in item PQ3 (appropriate and attractive visualization).

Table 5.

Hypothesis test of the paired samples of the perception survey with and without methodology.
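The comparison of proportions with Fisher's exact method can be sketched as follows for a single survey item. This is an illustration under stated assumptions: the test is taken to be one-sided (a higher proportion of agreement with the methodology), the counts are hypothetical rather than taken from Table 4, and the function name is not from any library.

```python
from math import comb


def fisher_exact_greater(a, b, c, d):
    """One-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Returns the probability of observing a count of at least `a` in the
    top-left cell when all row and column totals are held fixed.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = comb(row1 + row2, col1)
    upper = min(row1, col1)
    favorable = sum(comb(row1, k) * comb(row2, col1 - k) for k in range(a, upper + 1))
    return favorable / total


# Hypothetical counts for one item: rows are with / without methodology,
# columns are agreement / no agreement.
p_value = fisher_exact_greater(19, 1, 14, 6)
print(f"one-sided p = {p_value:.4f}")
```

An exact test of this kind is a reasonable choice when some cells contain very few responses, as happens when almost all students agree with an item.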

6. Conclusions

A well-structured methodology, named VIS-HAPT, was proposed to carry out the process of designing, implementing, and assessing learning experiences incorporating visuo-haptic devices for engineering students. The methodology is organized in four phases: (a) learning experience design; (b) development of the technological model; (c) environment calibration and testing; and (d) application and field studies. With the VIS-HAPT methodology, it was possible to: (i) create better scenarios to enrich the learning experiences by adding the sense of touch; (ii) streamline the processes used to build the physical models of the selected scenarios; (iii) standardize the interface design processes to provide better quality visualizations; (iv) establish a process to select suitable haptic devices to optimize programming efforts; (v) integrate the HaDIU generic architecture into the Unity game engine in order to facilitate the operation of haptic devices from different brands and models; (vi) calibrate the physical model used in the simulator coupled to the haptic device; (vii) standardize the performance of usability tests with physics experts and students; and (viii) improve the selection process of student samples and the design of perception questionnaires.

A reduction of approximately 44% in time and effort, averaged over the four phases of the proposed methodology, was attained as compared to the cases without methodology. Although the tests were only carried out with four visuo-haptic environments, significant time reductions were obtained in almost all phases, and it can be concluded that the times were shorter when the methodology was used. The results of this work also suggest a slight additional improvement in students’ perceptions when applying the VIS-HAPT methodology, making students feel comfortable with the improvements introduced to their learning activities.

In terms of future work, the authors envisage the design of new visuo-haptic environments with the VIS-HAPT methodology for physical scenarios that are typically difficult for students and that involve the use of forces. Additionally, it will be necessary to perform further learning gains studies, comparing the results of experimental and control groups to better assess the impact of the use of visuo-haptic environments on student outcomes. When transferring these benefits to the Education 4.0 framework, near-reality learning experiences should be added, with multiple applications in professional performance, for students to acquire knowledge and develop skills to face the challenges of Industry 4.0.

Author Contributions

Conceptualization, J.N. and L.N.; Data curation, A.G.-N.; formal analysis, V.R.-R. and A.G.-N.; funding acquisition, A.M.; investigation, V.R.-R. and R.M.G.G.-C.; methodology, J.N. and D.E.-C.; project administration, J.N.; resources, A.M.; software, D.E.-C.; supervision, A.M.; validation, L.N.; visualization, J.N., A.G.-N. and D.E.-C.; writing—original draft, L.N., J.N. and D.E.-C.; writing—review and editing, L.N., V.R.-R., R.M.G.G.-C. and A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the NOVUS Grant (PEP No. PHHT032-17CX00004), the Writing Lab, the TecLabs, and the Institute for the Future of the Education of the Tecnologico de Monterrey.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to thank the Vicerrectoría de Investigación y Posgrado, the Research Group of Product Innovation, the Cyber Learning and Data Science Laboratory, and the Science Department of Tecnologico de Monterrey, Mexico City Campus. Finally, we would like to thank Roberto Alejandro Cardenas Ovando for their help during the development of the visuo-haptic simulations.

Conflicts of Interest

The authors declare that they have no conflict of interest.

References

- Domínguez Osuna, P.M.; Oliveros Ruiz, M.A.; Coronado Ortega, M.A.; Valdez Salas, B. Retos de ingeniería: Enfoque educativo STEM+A en la revolución industrial 4.0. Innov. Educ. 2019, 19, 15–32.

- Miranda, J.; Navarrete, C.; Noguez, J.; Molina-Espinosa, J.M.; Ramírez-Montoya, M.S.; Navarro-Tuch, S.A.; Bustamante-Bello, M.R.; Rosas-Fernández, J.B.; Molina, A. The core components of education 4.0 in higher education: Three case studies in engineering education. Comput. Electron. Eng. 2021, 93, 107278.

- Ramírez-Montoya, M.S.; Loaiza-Aguirre, M.I.; Zúñiga-Ojeda, A.; Portuguez-Castro, M. Characterization of the Teaching Profile within the Framework of Education 4.0. Future Internet 2021, 13, 91.

- Barsalou, L.W. Grounded Cognition. Annu. Rev. Psychol. 2008, 59, 617–645.

- Shapiro, L. Embodied Cognition, 2nd ed.; Routledge: Milton, UK, 2019.

- Neri, L.; Robledo-Rella, V.; García-Castelán, R.M.G.; Gonzalez-Nucamendi, A.; Escobar-Castillejos, D.; Noguez, J. Visuo-Haptic Simulations to Understand the Dependence of Electric Forces on Distance. Appl. Sci. 2020, 10, 7190.

- Hamza-Lup, F.G.; Sopin, I. Web-Based 3D and Haptic Interactive Environments for e-Learning, Simulation, and Training. In Web Information Systems and Technologies; Cordeiro, J., Hammoudi, S., Filipe, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; Volume 18, pp. 349–360.

- Hamza-Lup, F.G. Kinesthetic Learning—Haptic User Interfaces for Gyroscopic Precession Simulation. Rev. Romana Interactiune Om-Calc. 2019, 11, 185–204.

- Escobar-Castillejos, D.; Noguez, J.; Cárdenas-Ovando, R.A.; Neri, L.; Gonzalez-Nucamendi, A.; Robledo-Rella, V. Using Game Engines for Visuo-Haptic Learning Simulations. Appl. Sci. 2020, 10, 4553.

- Hutmacher, F.; Kuhbandner, C. Long-Term Memory for Haptically Explored Objects: Fidelity, Durability, Incidental Encoding, and Cross-Modal Transfer. Psychol. Sci. 2018, 29, 2031–2038.

- Saddik, A.E. The Potential of Haptics Technologies. IEEE Instrum. Meas. Mag. 2007, 10, 10–17.

- Girdler, A.; Georgiou, O. Mid-Air Haptics in Aviation—Creating the sensation of touch where there is nothing but thin air. arXiv 2020, arXiv:cs.HC/2001.01445.

- Ricardez, E.; Noguez, J.; Neri, L.; Escobar-Castillejos, D.; Munoz, L. SutureHap: Use of a physics engine to enable force feedback generation on deformable surfaces simulations. Int. J. Adv. Robot. Syst. 2018, 15, 1729881417753928.

- Escobar-Castillejos, D.; Noguez, J.; Neri, L.; Magana, A.; Benes, B. A Review of Simulators with Haptic Devices for Medical Training. J. Med. Syst. 2016, 40, 1–22.

- Escobar-Castillejos, D.; Noguez, J.; Bello, F.; Neri, L.; Magana, A.J.; Benes, B. A Review of Training and Guidance Systems in Medical Surgery. Appl. Sci. 2020, 10, 5752.

- Han, I.; Black, J.B. Incorporating haptic feedback in simulation for learning physics. Comput. Educ. 2011, 57, 2281–2290.

- Pantelios, M.; Tsiknas, L.; Christodoulou, S.; Papatheodorou, T. Haptics technology in Educational Applications, a Case Study. J. Digit. Inf. Manag. 2004, 2, 171–178.

- Kamińska, D.; Sapiński, T.; Wiak, S.; Tikk, T.; Haamer, R.E.; Avots, E.; Helmi, A.; Ozcinar, C.; Anbarjafari, G. Virtual Reality and Its Applications in Education: Survey. Information 2019, 10, 318.

- Fernández, C.; Esteban, G.; Conde-González, M.; García-Peñalvo, F. Improving Motivation in a Haptic Teaching/Learning Framework. Int. J. Eng. Educ. 2016, 32, 553–562.

- Coe, P.; Evreinov, G.; Ziat, M.; Raisamo, R. Generating Localized Haptic Feedback over a Spherical Surface. In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Vienna, Austria, 8–10 February 2021; pp. 15–24.

- Hamza-Lup, F.G.; Baird, W.H. Feel the Static and Kinetic Friction. In Haptics: Perception, Devices, Mobility, and Communication; Isokoski, P., Springare, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 181–192.

- Neri, L.; Noguez, J.; Robledo-Rella, V.; Escobar-Castillejos, D.; Gonzalez-Nucamendi, A. Teaching Classical Mechanics Concepts using Visuo-haptic Simulators. Educ. Technol. Soc. 2018, 21, 85–97.

- Hamza-Lup, F.G.; Kocadag, F.A.L. Simulating Forces—Learning Through Touch, Virtual Laboratories. arXiv 2019, arXiv:cs.HC/1902.07807.

- Zohar, A.R.; Levy, S.T. From feeling forces to understanding forces: The impact of bodily engagement on learning in science. J. Res. Sci. Teach. 2021, 58, 1–35.

- Hamza-Lup, F.; Goldbach, I. Multimodal, visuo-haptic games for abstract theory instruction: Grabbing charged particles. J. Multimodal User Interfaces 2020, 15, 1–10.

- Magana, A.; Sanchez, K.; Shaikh, U.; Jones, M.; Tan, H.; Guayaquil, A.; Benes, B. Exploring multimedia principles for supporting conceptual learning of electricity and magnetism with visuohaptic simulations. Comput. Educ. J. 2017, 8, 8–23.

- Shams, L.; Seitz, A.R. Benefits of multisensory learning. Trends Cogn. Sci. 2008, 12, 411–417.

- Neri, L.; Shaikh, U.A.; Escobar-Castillejos, D.; Magana, A.J.; Noguez, J.; Benes, B. Improving the learning of physics concepts by using haptic devices. In Proceedings of the Frontiers in Education Conference (FIE), El Paso, TX, USA, 21–24 October 2015; pp. 1–7.

- Shaikh, U.A.S.; Magana, A.J.; Neri, L.; Escobar-Castillejos, D.; Noguez, J.; Benes, B. Undergraduate students’ conceptual interpretation and perceptions of haptic-enabled learning experiences. Int. J. Educ. Technol. High. Educ. 2017, 14, 1–21.

- Neri, L.; Magana, A.J.; Noguez, J.; Walsh, Y.; Gonzalez-Nucamendi, A.; Robledo-Rella, V.; Benes, B. Visuo-haptic Simulations to Improve Students’ Understanding of Friction Concepts. In Proceedings of the 2018 IEEE Frontiers in Education Conference (FIE), San Jose, CA, USA, 3–6 October 2018; pp. 1–6.

- Walsh, Y.; Magana, A.; Feng, S. Investigating Students’ Explanations about Friction Concepts after Interacting with a Visuohaptic Simulation with Two Different Sequenced Approaches. J. Sci. Educ. Technol. 2020, 29, 443–458.

- Coles, T.R.; Meglan, D.; John, N.W. The Role of Haptics in Medical Training Simulators: A Survey of the State of the Art. IEEE Trans. Haptics 2011, 4, 51–66.

- HapticsHouse. HapticsHouse.com—Selling Falcon Haptic Controllers. Available online: https://hapticshouse.com/ (accessed on 17 June 2021).

- Conti, F.; Barbagli, F.; Balaniuk, R.; Halg, M.; Lu, C.; Morris, D.; Sentis, L.; Warren, J.; Khatib, O.; Salisbury, K. The CHAI libraries. In Proceedings of the Eurohaptics, Dublin, Ireland, 6–9 July 2003; pp. 496–500.

- Srinivasan, K.; Thirupathi, D. Software Metrics Validation Methodologies in Software Engineering. Int. J. Softw. Eng. Appl. 2014, 5, 87–102.

- Sampieri, H.; Mendoza Torres, C.P. Metodología de la Investigación, 1st ed.; McGraw-Hill: Mexico City, Mexico, 2018.

- Hake, R.R. Interactive-engagement versus traditional methods: A six-thousand-student survey of mechanics test data for introductory physics courses. Am. J. Phys. 1998, 66, 64–74.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Routledge: New York, NY, USA, 1988.

- McLeod, S.A. What Does Effect Size Tell You? Simply Psychology. Available online: https://www.simplypsychology.org/effect-size.html/ (accessed on 17 June 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).