Isolated Sandbox Environment Architecture for Running Cognitive Psychological Experiments in Web Platforms

Abstract

1. Introduction

- Is it possible to reduce the influence of a certain category of devices on the reaction time?

- Which sandbox environment architecture should be used to conduct research in psychology using web platforms?

2. Background

3. Evaluation of Reaction Time Bias

4. Proposed Sandbox Environment Architecture

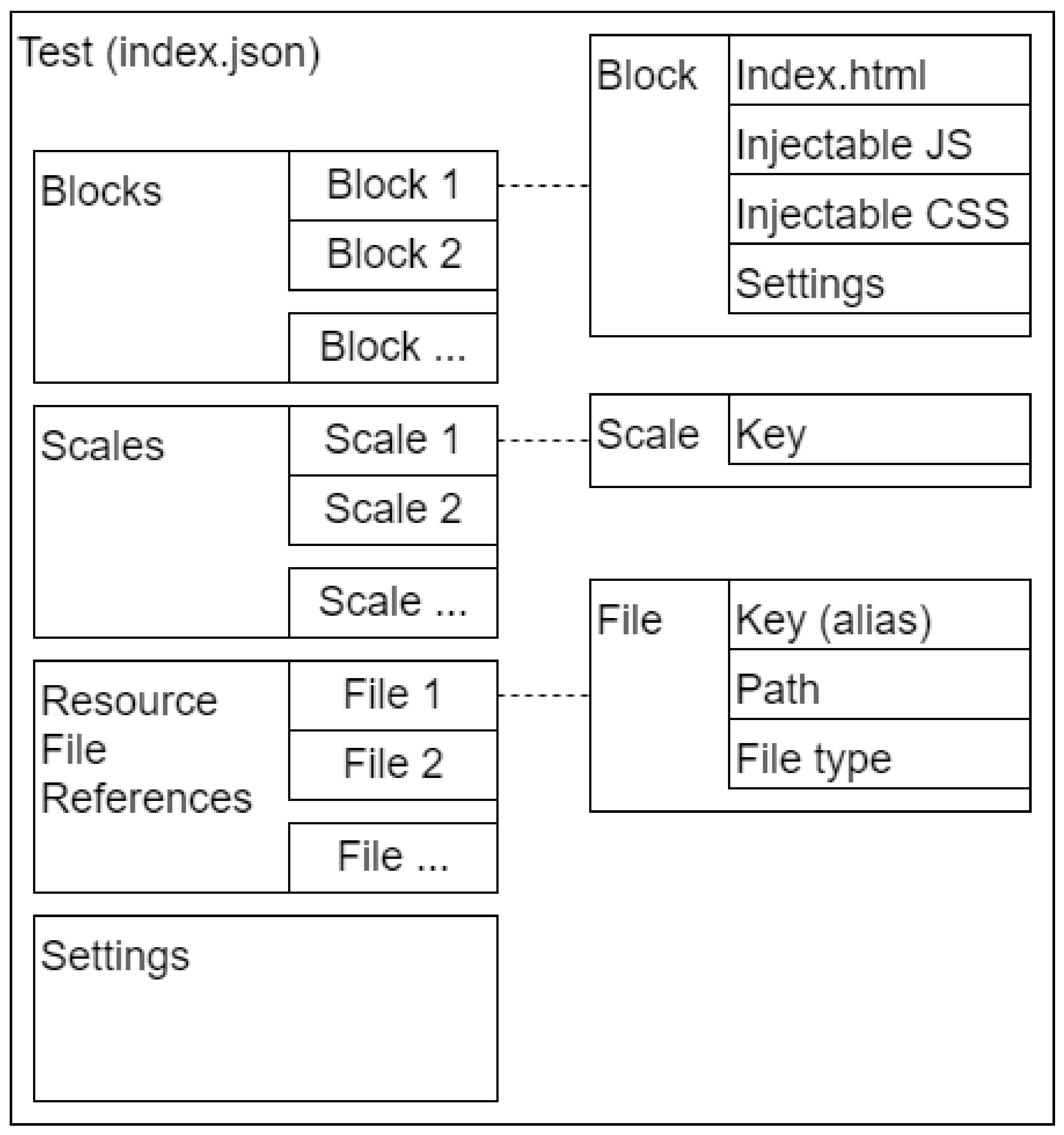

4.1. Programmable Psychological Test Structure

3. Blocks: one or more test blocks, each of which is initialized by the platform. Each block declares which HTML file is used as its basis and which JS and CSS files must be injected for the block to work. In addition, each block has its own settings, such as an interrupt condition or a time limit.

4. Scales: a list of scales whose values are calculated during testing. All the logic for calculating the values is implemented in the test itself; only the names of the scales are declared in index.json.

5. Resources: a list of resource files that can be requested during the test. For each file, the entry gives the path to the file in the archive, the file type (Image/HTML/JS/CSS), and the alias by which the file is accessed.

6. Settings: settings for the entire test (for example, limits on device requirements).
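The sections described above can be sketched as TypeScript types. This is a minimal illustration only: the section roles (Blocks, Scales, Resources, Settings) and the four resource types come from the text, while every field name (`html`, `js`, `css`, `interruptCondition`, `timeLimitSec`, `minScreenWidth`), the idea that blocks refer to files by alias, and the helper `findUndeclaredAliases` are assumptions rather than the platform's actual schema.

```typescript
// Hypothetical shape of index.json; all field names are illustrative assumptions.
type ResourceType = "Image" | "HTML" | "JS" | "CSS";

interface TestResource {
  path: string;       // path to the file inside the test archive
  type: ResourceType; // one of the four types named in the text
  alias: string;      // key by which the test requests the file
}

interface TestBlock {
  html: string;                // alias of the HTML file used as the basis (assumed)
  js: string[];                // aliases of the JS files to inject (assumed)
  css: string[];               // aliases of the CSS files to inject (assumed)
  interruptCondition?: string; // assumed per-block setting
  timeLimitSec?: number;       // assumed per-block setting
}

interface TestDescriptor {
  blocks: TestBlock[];
  scales: string[];                      // scale names only; values are computed by the test
  resources: TestResource[];
  settings: { minScreenWidth?: number }; // assumed test-wide device limit
}

// Returns aliases that blocks refer to but that are not declared in resources.
function findUndeclaredAliases(d: TestDescriptor): string[] {
  const declared = d.resources.map((r) => r.alias);
  const missing: string[] = [];
  for (const block of d.blocks) {
    for (const alias of [block.html].concat(block.js, block.css)) {
      if (declared.indexOf(alias) === -1 && missing.indexOf(alias) === -1) {
        missing.push(alias);
      }
    }
  }
  return missing;
}

// Example descriptor with one block that refers to an undeclared stylesheet.
const exampleDescriptor: TestDescriptor = {
  blocks: [{ html: "page", js: ["logic"], css: ["theme"], timeLimitSec: 120 }],
  scales: ["accuracy", "meanReactionTime"],
  resources: [
    { path: "blocks/main.html", type: "HTML", alias: "page" },
    { path: "js/main.js", type: "JS", alias: "logic" },
  ],
  settings: { minScreenWidth: 1024 },
};

const undeclared = findUndeclaredAliases(exampleDescriptor);
```

A check of this kind could run when a test archive is uploaded, so that a missing alias is reported before any participant sees the test.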

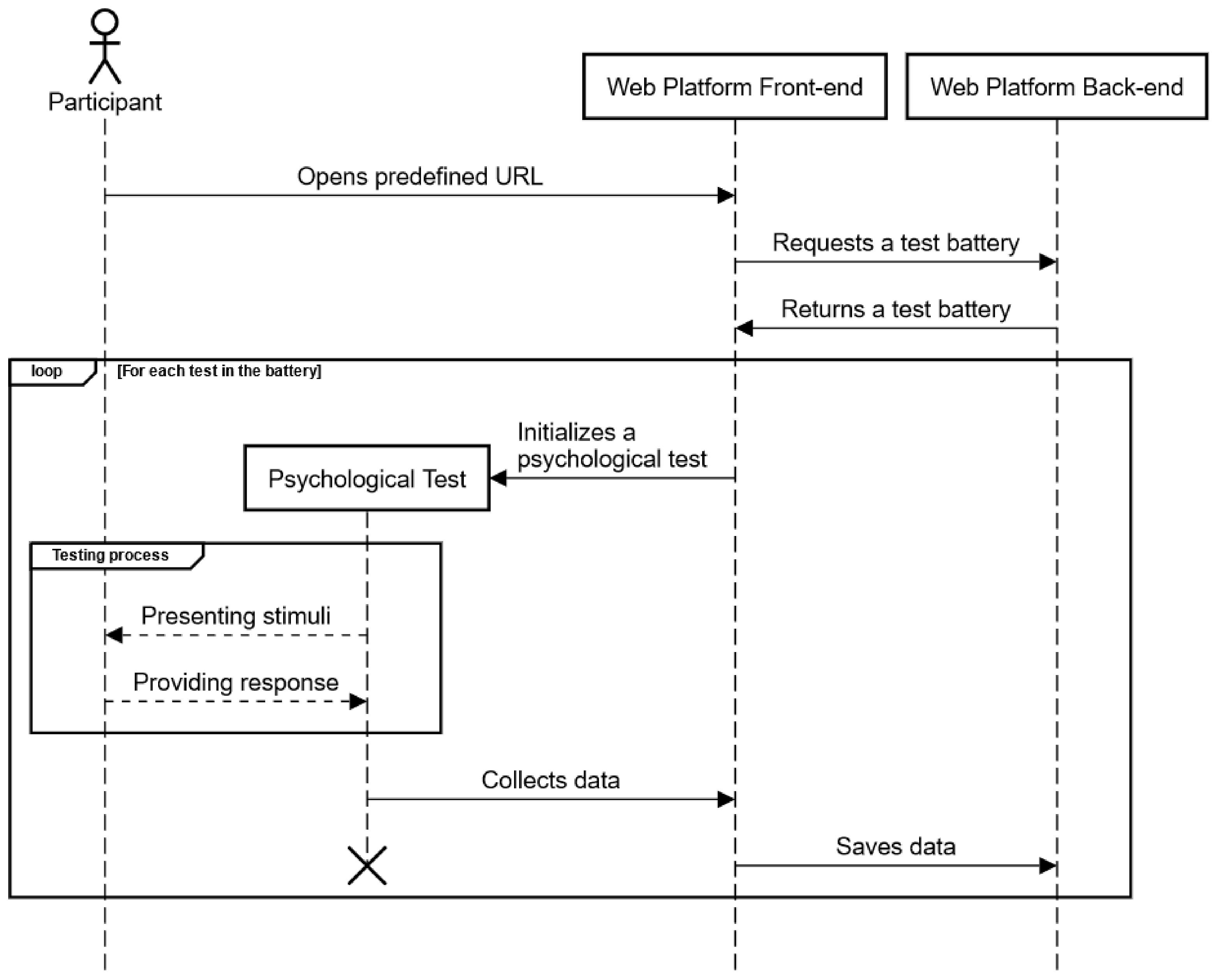

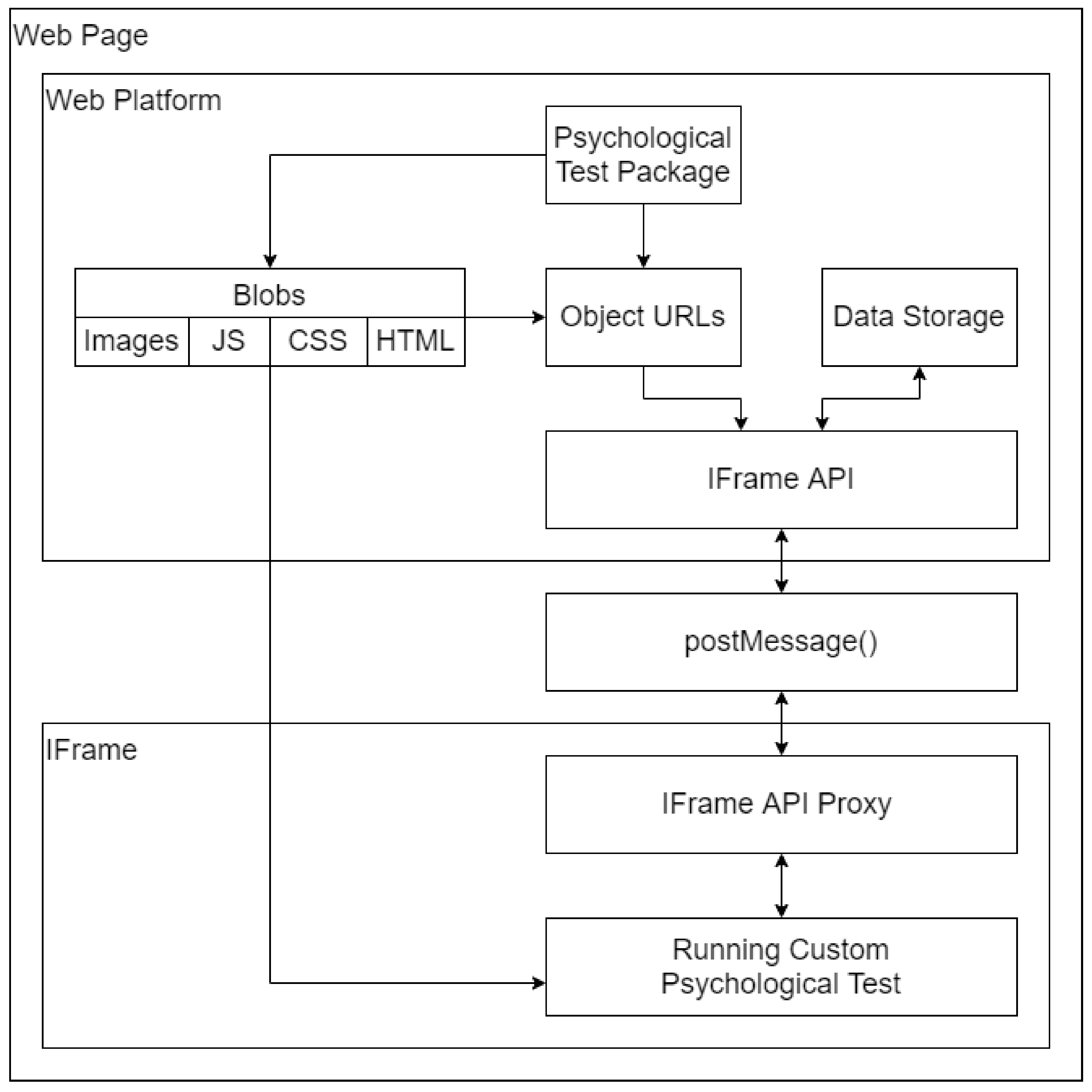

4.2. Interaction of a Psychological Test with Web Platform

- The player selects the next test from the battery to present to the participant (tests are simply taken in order).

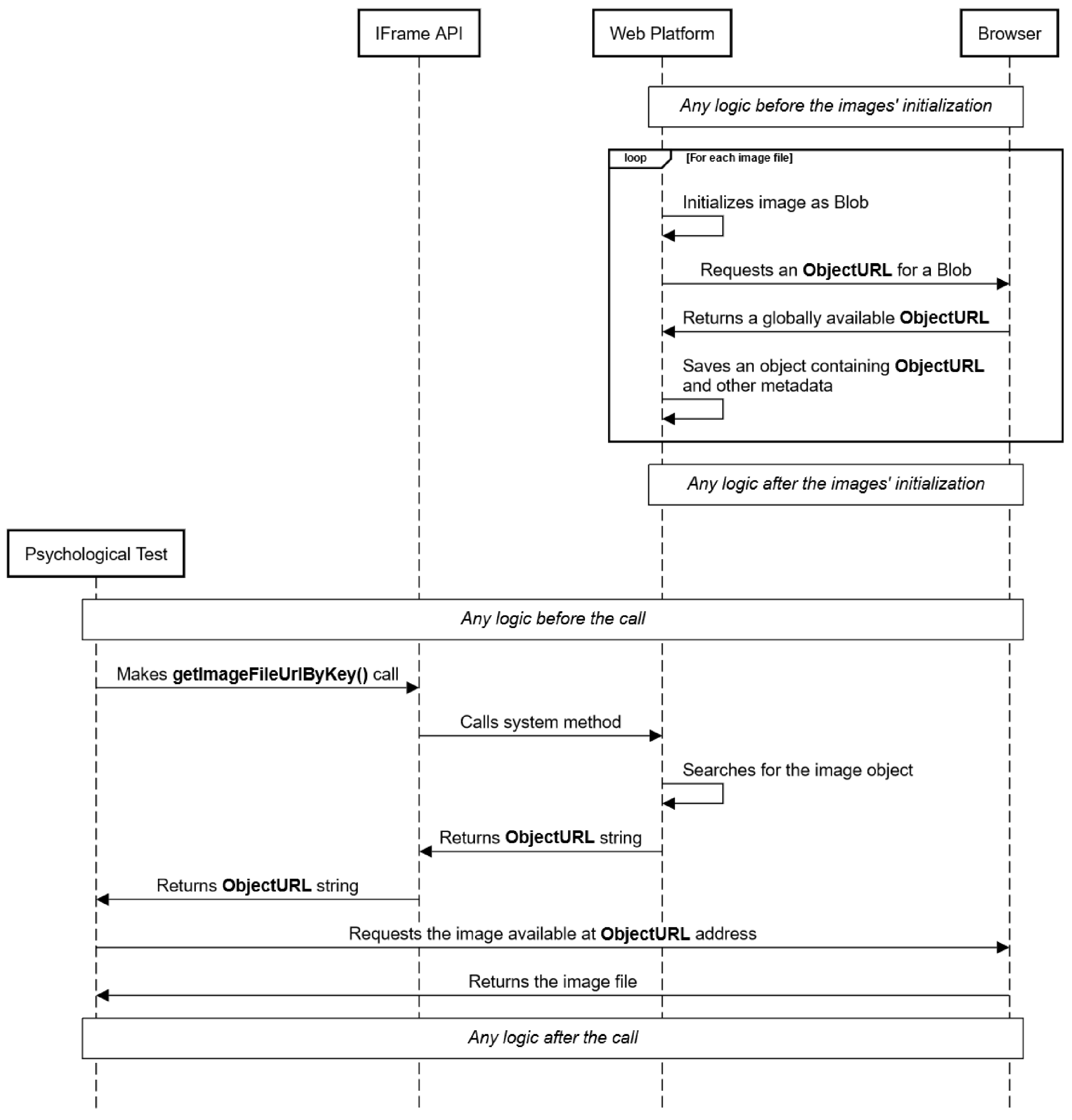

- All resource files of the test (Image, HTML, JS, CSS) are located by their extensions, and an ObjectURL is created for each.

- The content of the index.json file is read.

- The test type (programmable or questionnaire) is determined from the content of index.json.

- The player checks whether the test may be launched on the research participant’s device, based on the screen size and the test settings.

- A separate initialization procedure is then performed, depending on the test type.

- Once the test has started, the first block of the programmable test is selected.
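The launch steps above can be sketched as pure functions. This is a hedged illustration, not the platform's code: the extension list, the `DeviceLimits` fields, and the names `resourceKindOf`, `isLaunchAllowed`, and `planLaunch` are all assumptions. In a browser, each located file would additionally be turned into an ObjectURL with the standard `URL.createObjectURL(blob)` call, which is left out here so the sketch runs without a DOM.

```typescript
type ResourceKind = "Image" | "HTML" | "JS" | "CSS";
type TestKind = "programmable" | "questionnaire";

interface ViewportSize { width: number; height: number }
// Hypothetical test settings that restrict the participant's device.
interface DeviceLimits { minWidth?: number; minHeight?: number }

// Classifies a file by its extension; the extension list is an assumption.
function resourceKindOf(path: string): ResourceKind | null {
  const dot = path.lastIndexOf(".");
  const ext = dot < 0 ? "" : path.slice(dot + 1).toLowerCase();
  switch (ext) {
    case "png": case "jpg": case "jpeg": case "gif": case "svg":
      return "Image";
    case "html": return "HTML";
    case "js": return "JS";
    case "css": return "CSS";
    default: return null;
  }
}

// Device check based on screen size and test settings, as described in the text.
function isLaunchAllowed(size: ViewportSize, limits: DeviceLimits): boolean {
  const minWidth = limits.minWidth === undefined ? 0 : limits.minWidth;
  const minHeight = limits.minHeight === undefined ? 0 : limits.minHeight;
  return size.width >= minWidth && size.height >= minHeight;
}

interface LaunchPlan {
  allowed: boolean;
  init: TestKind | null; // which initialization procedure to run
  resources: { path: string; kind: ResourceKind }[];
}

// Combines the steps: collect resources, check the device, pick the init procedure.
function planLaunch(
  files: string[],
  kind: TestKind,
  size: ViewportSize,
  limits: DeviceLimits
): LaunchPlan {
  const resources: { path: string; kind: ResourceKind }[] = [];
  for (const path of files) {
    const resourceKind = resourceKindOf(path);
    if (resourceKind !== null) resources.push({ path, kind: resourceKind });
  }
  const allowed = isLaunchAllowed(size, limits);
  return { allowed, init: allowed ? kind : null, resources };
}

const desktopPlan = planLaunch(
  ["img/cue.png", "js/main.JS", "readme.txt"],
  "programmable",
  { width: 1280, height: 720 },
  { minWidth: 1024 }
);
```

Keeping the device check separate from resource discovery makes it easy to refuse a launch early, before any ObjectURLs are allocated.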

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix B

| Method Name | Parameters | Returned Values | Description |
|---|---|---|---|
| setIframeSize | Size: an object with two fields (width, height). Width: working area width. Height: working area height. Both can be specified in any units allowed by the browser. | - | Sets the size of the work area for the psychological test. |
| setBackgroundColor | Color: any valid value of the background-color CSS property | - | Sets the background color of the page. |
| getWindowSize | - | Size: an object with two fields (width, height) | Returns an object with the current size of the working area of the browser window. |
| getJsFileUrlByKey | Key: key (alias) to access the file | ObjectURL: file link | Finds the JS file specified in the Resources section and returns a link to it. |
| getCssFileUrlByKey | Key: key (alias) to access the file | ObjectURL: file link | Finds the CSS file specified in the Resources section and returns a link to it. |
| getImageFileUrlByKey | Key: key (alias) to access the file | ObjectURL: file link | Finds the image file specified in the Resources section and returns a link to it. |
| getJsFileUrls | - | `Array<ObjectURL>`: links to the JS files specified in index.json for the current block | Returns a list of links to the JS files related to the current block. |
| getCssFileUrls | - | `Array<ObjectURL>`: links to the CSS files specified in index.json for the current block | Returns a list of links to the CSS files related to the current block. |
| injectJS | - | - | Uses the getJsFileUrls method to find links to JS files and creates a script tag for each of them inside the body tag, connecting them to the current page inside the iframe. |
| injectCSS | - | - | Uses the getCssFileUrls method to find links to CSS files and creates a link tag for each of them inside the head tag, connecting them to the current page inside the iframe. |
| nextBlock | - | - | Ends the current block of the test and starts the next block. If the next block fails, the test ends. |
| interrupt | - | - | Interrupts the current block (or the test, depending on the interrupt settings). The user is shown a message with a “Next” button. |
| isInterrupted | - | Interrupted: flag indicating whether the interrupt condition has been reached; true if reached, false otherwise | Returns a flag signaling that an interrupt condition has been reached. |
| saveEvent | Tags: array of tags. Event: data object | - | Saves the event object with its data and attaches the tags to it for later retrieval. |
| getEventsByTags | Tags: array of tags | Events: an array of events, each event has the form: | Returns all saved events that match the given set of tags. |
| getEventsByTag | Tag: a single tag | Events: an array of events, each event has the form: | Returns all saved events that match the given tag. |
| setScaleValue | Key: scale key. Value: scale value | - | Stores the given value under the given scale key. |
| getScaleValue | Key: scale key | Value: scale value | Returns the value previously saved under the given scale key. |
| getMetadata | - | Metadata: metadata object | Returns an object with service information (for example, test start time, browser version). |
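The event and scale methods in the table can be illustrated with a small in-memory store. This is a sketch under stated assumptions, not the platform's implementation: the class name `TestStore`, the `SavedEvent` shape, numeric scale values, and the rule that `getEventsByTags` returns events carrying every requested tag are all hypothetical, since the table does not specify them.

```typescript
// Hypothetical stored form of an event; the source does not give the real structure.
interface SavedEvent {
  tags: string[];
  data: unknown;
}

class TestStore {
  private events: SavedEvent[] = [];
  private scales: { [key: string]: number } = {};

  // Saves a data object together with the tags used to find it later.
  saveEvent(tags: string[], data: unknown): void {
    this.events.push({ tags: tags.slice(), data });
  }

  // Assumption: an event matches when it carries every requested tag.
  getEventsByTags(tags: string[]): SavedEvent[] {
    return this.events.filter((event) =>
      tags.every((tag) => event.tags.indexOf(tag) !== -1)
    );
  }

  // Single-tag lookup expressed through the multi-tag one.
  getEventsByTag(tag: string): SavedEvent[] {
    return this.getEventsByTags([tag]);
  }

  // Assumption: scale values are numbers.
  setScaleValue(key: string, value: number): void {
    this.scales[key] = value;
  }

  getScaleValue(key: string): number | undefined {
    return Object.prototype.hasOwnProperty.call(this.scales, key)
      ? this.scales[key]
      : undefined;
  }
}

// Usage: record two trials, then derive a scale value from the correct ones.
const store = new TestStore();
store.saveEvent(["trial", "correct"], { reactionTimeMs: 512 });
store.saveEvent(["trial", "error"], { reactionTimeMs: 734 });
const correctTrials = store.getEventsByTags(["trial", "correct"]);
store.setScaleValue("correctCount", correctTrials.length);
```

Tagging events rather than storing them in fixed columns lets each test decide at scoring time which events feed which scale.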


| Group | Criteria |
|---|---|
| Mobile Device | “android” OR “ios” |
| Legacy PC | “windows” AND “xp” |
| Modern PC | “windows” AND (“7” OR “10”) |
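The grouping criteria in the table can be expressed as a keyword match. The sketch below assumes the keywords are matched against a parsed operating system description such as "Windows XP" or "Android 10"; the source does not say which string the criteria are applied to, and the function name `classifyDevice` is hypothetical. Matching is done on whole tokens so that, for example, "10" is not found inside another number.

```typescript
type DeviceGroup = "Mobile Device" | "Legacy PC" | "Modern PC";

// Assigns a device group from an OS description, or null if no criterion matches.
function classifyDevice(osDescription: string): DeviceGroup | null {
  // Split into lowercase alphanumeric tokens, e.g. "Windows 10" -> ["windows", "10"].
  const tokens = osDescription
    .toLowerCase()
    .split(/[^a-z0-9]+/)
    .filter((token) => token.length > 0);
  const has = (keyword: string): boolean => tokens.indexOf(keyword) !== -1;

  if (has("android") || has("ios")) return "Mobile Device";
  if (has("windows") && has("xp")) return "Legacy PC";
  if (has("windows") && (has("7") || has("10"))) return "Modern PC";
  return null; // device falls outside the three groups in the table
}

const groups = ["Android 10", "Windows XP", "Windows 10", "Windows 8.1"].map(classifyDevice);
```

Devices that match none of the criteria return `null` here, so they can be excluded from the comparison instead of being forced into a group.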

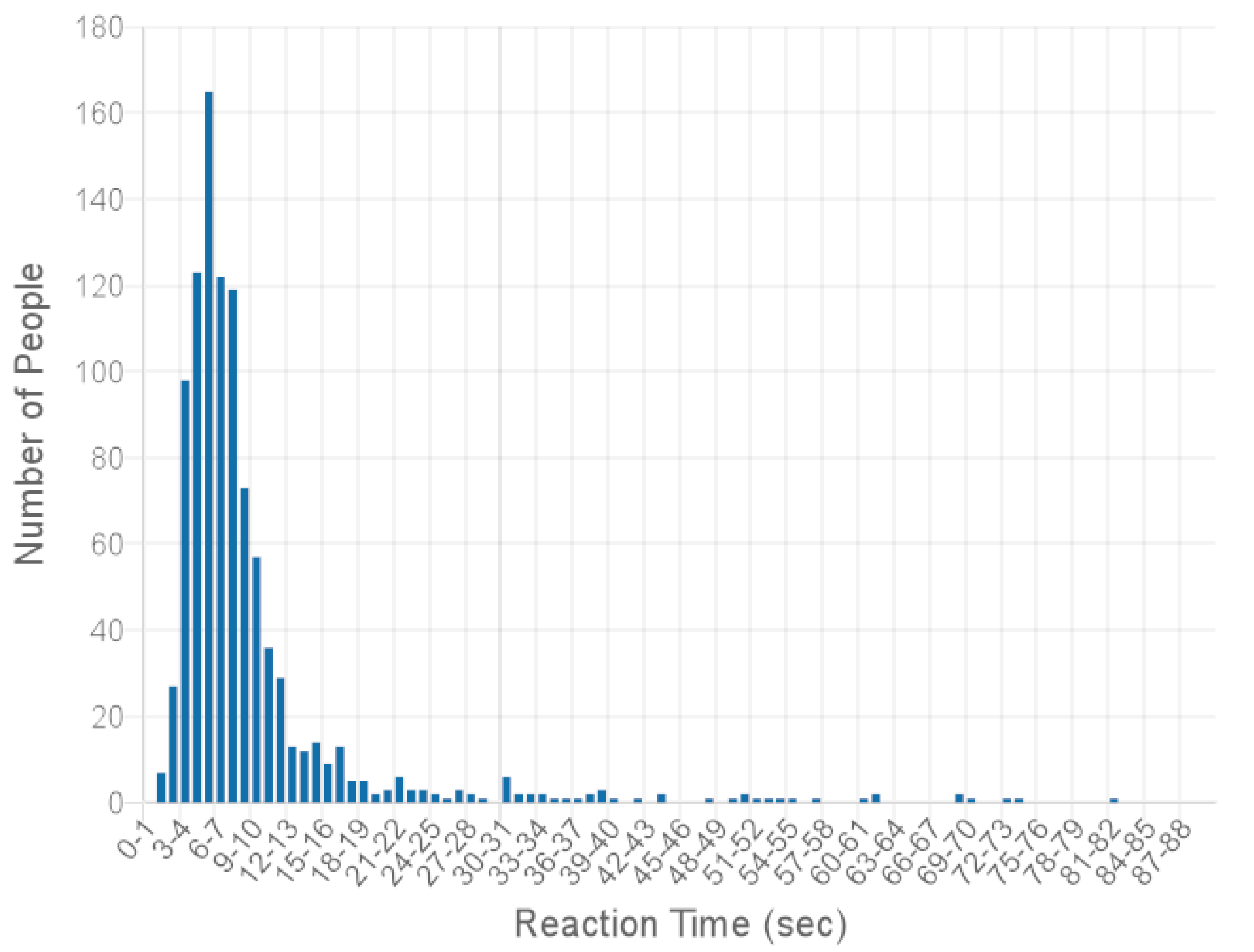

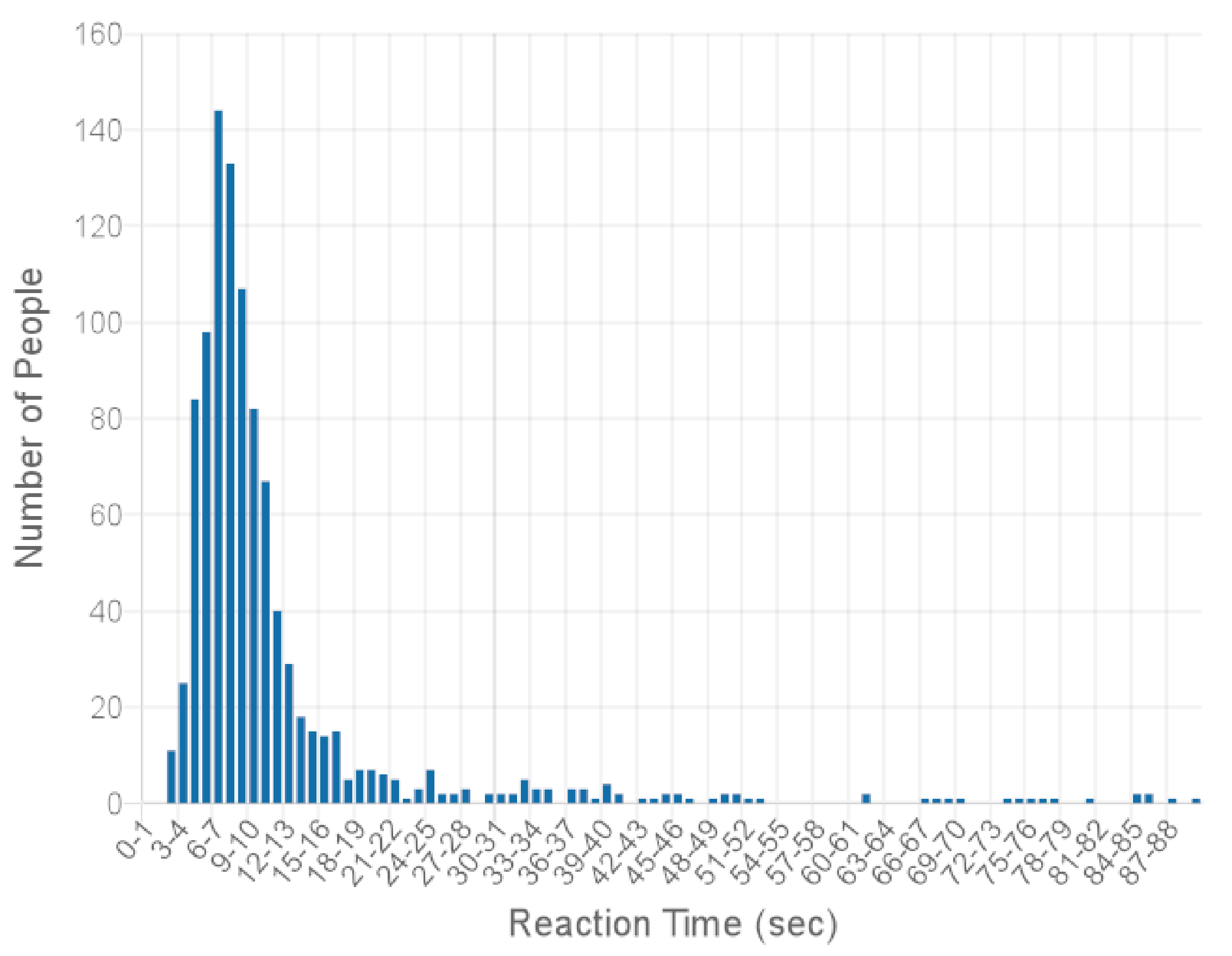

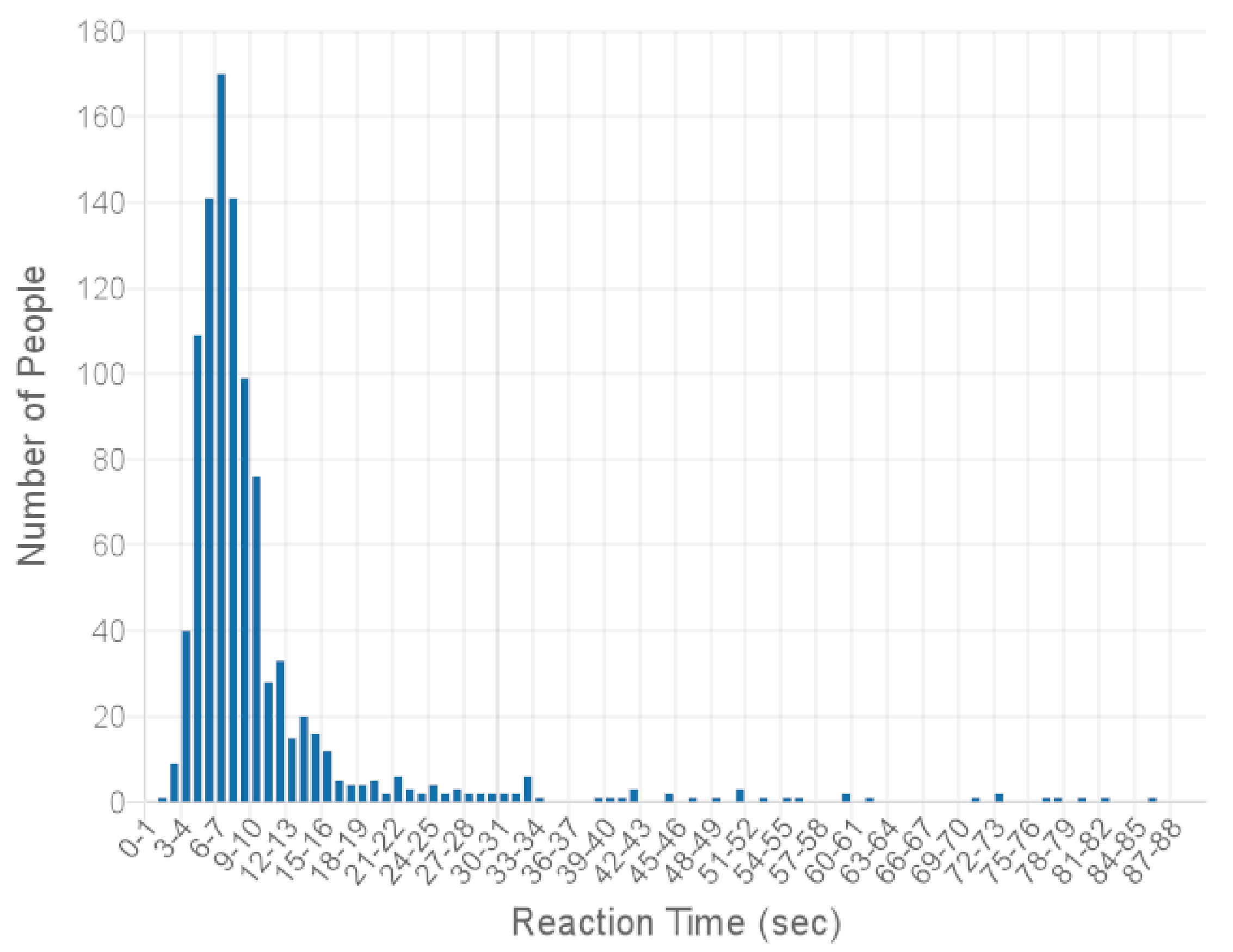

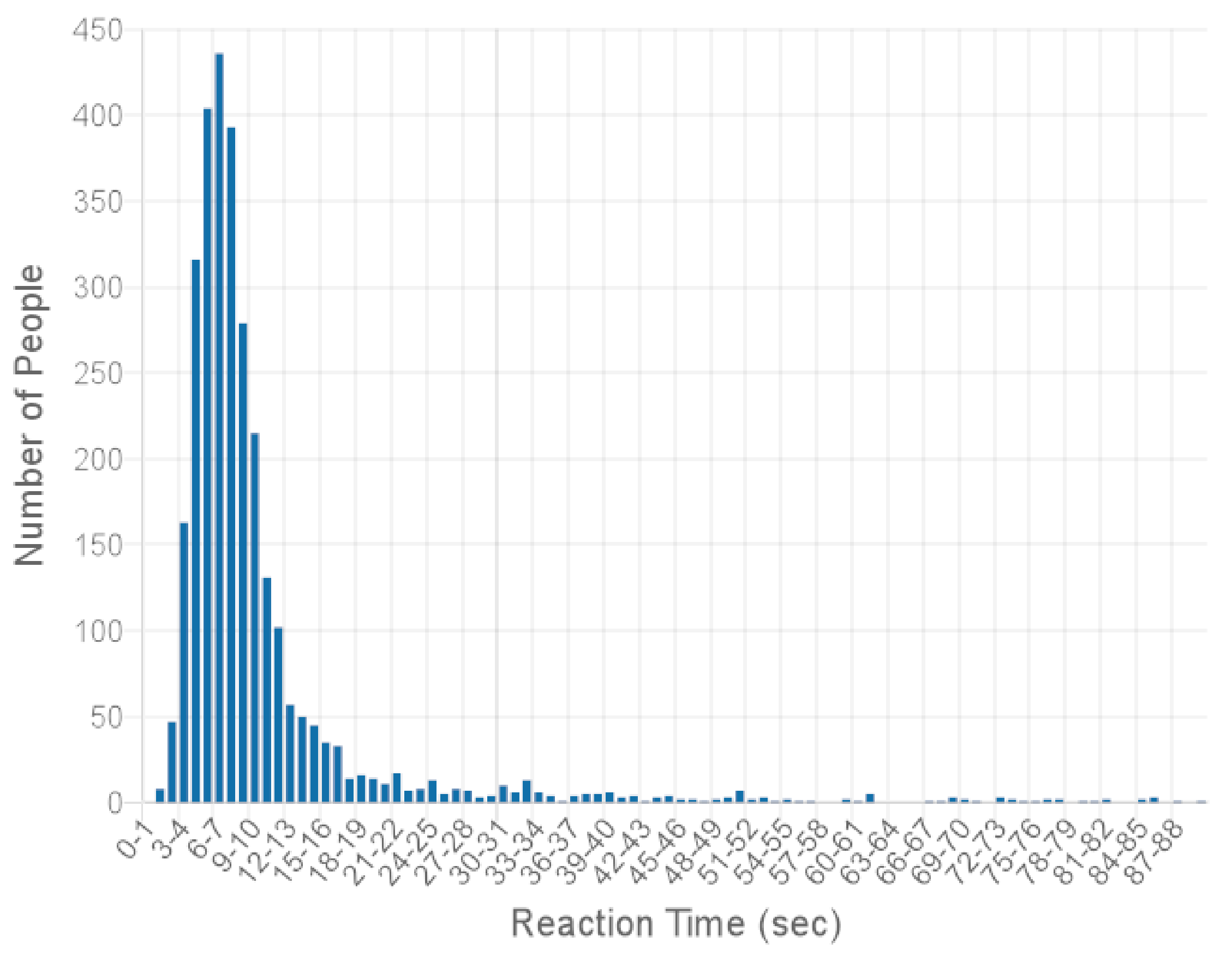

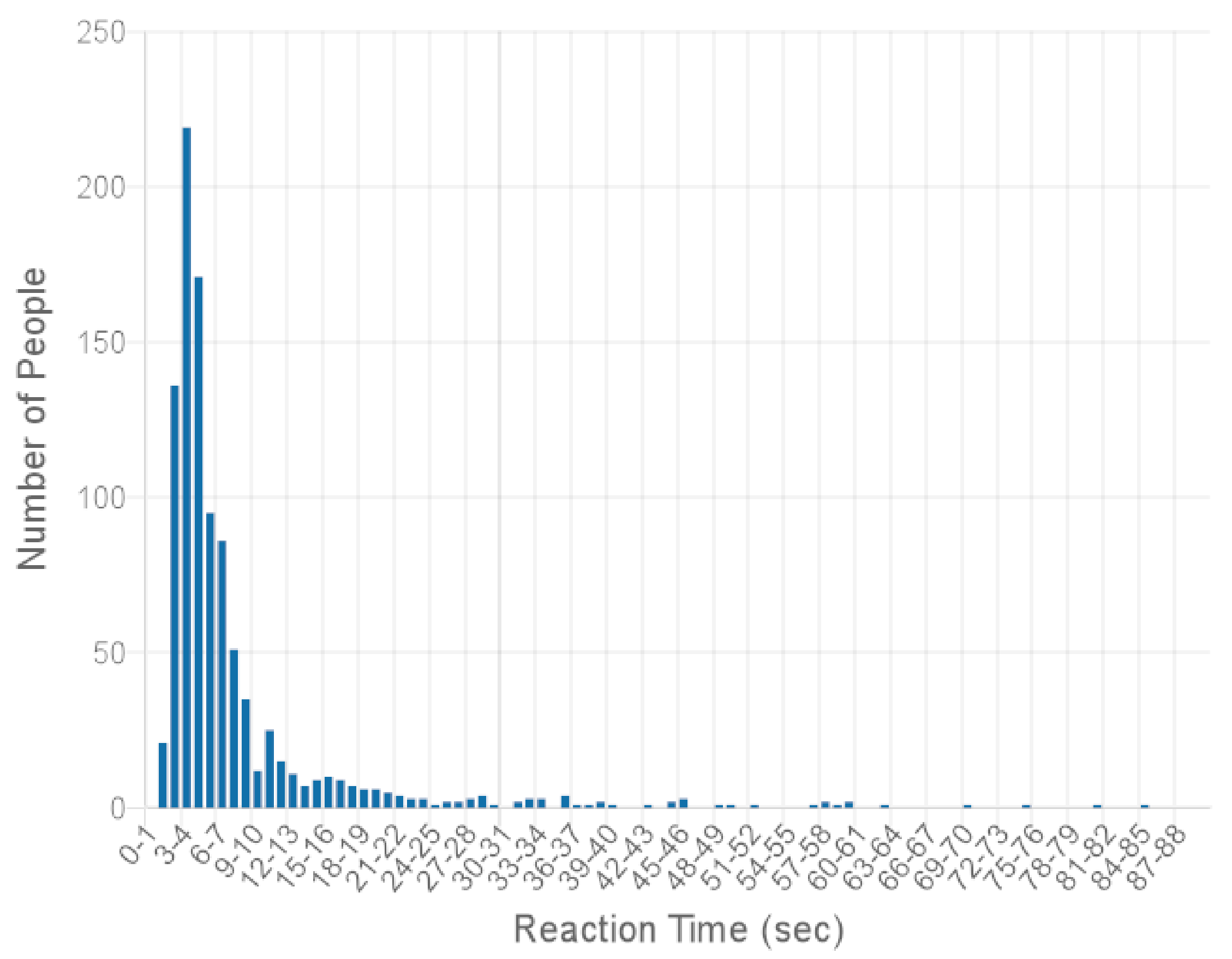

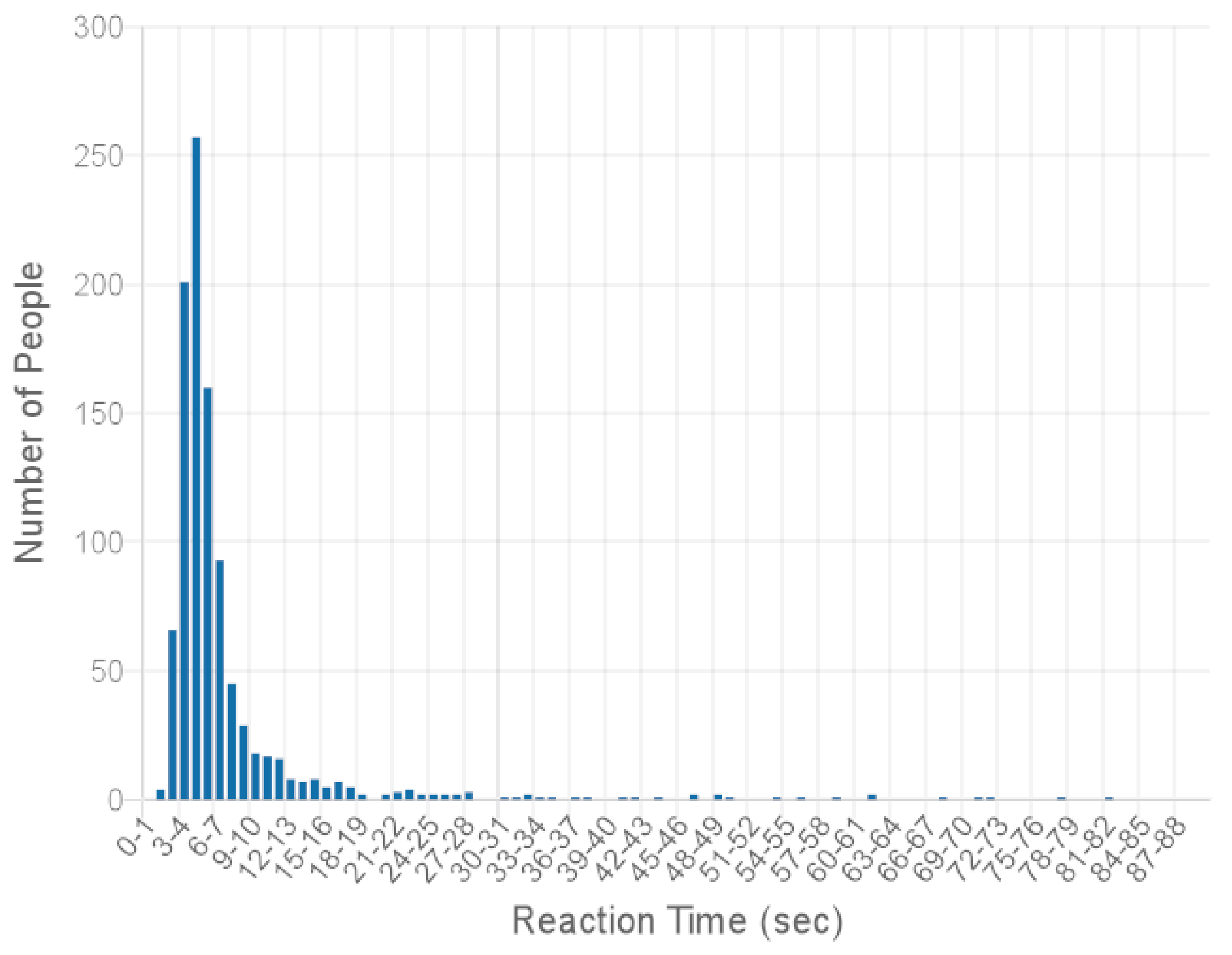

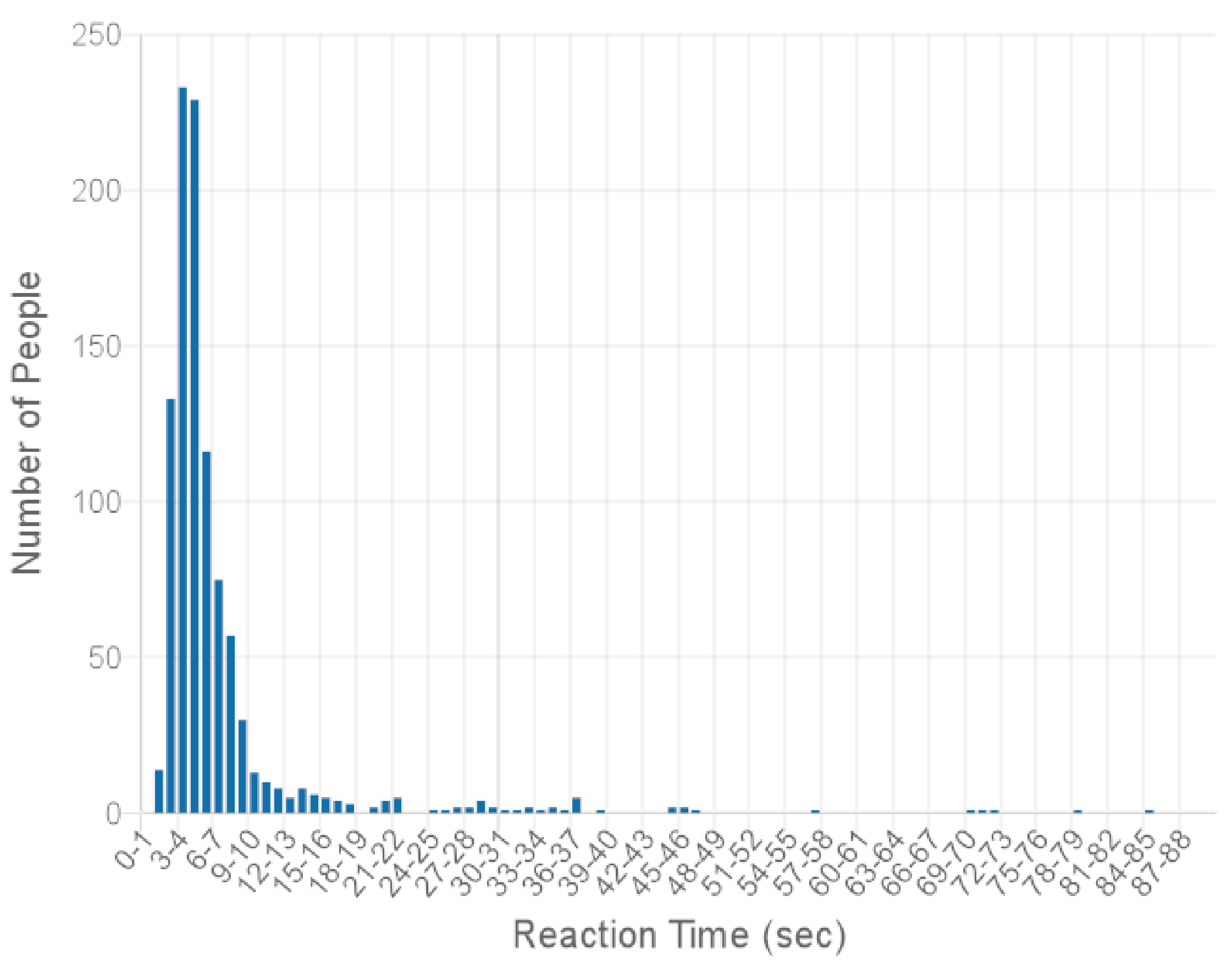

| | Mobile Device | Legacy PC | Modern PC | All Devices |
|---|---|---|---|---|
| Sample Size | 1000 | 1000 | 1000 | 3000 |
| Mean | 9.596 | 12.217 | 10.251 | 10.688 |
| First Quartile | 4.963 | 6.232 | 5.607 | 5.512 |
| Median | 6.633 | 8.043 | 7.150 | 7.283 |
| Third Quartile | 9.211 | 10.974 | 9.441 | 9.939 |
| Standard Deviation | 11.718 | 15.456 | 12.265 | 13.296 |

| | Mobile Device | Legacy PC | Modern PC | All Devices |
|---|---|---|---|---|
| Sample Size | 1000 | 1000 | 1000 | 3000 |
| Mean | 8.115 | 7.732 | 6.877 | 7.575 |
| First Quartile | 3.414 | 3.899 | 3.423 | 3.540 |
| Median | 4.680 | 4.893 | 4.531 | 4.687 |
| Third Quartile | 7.387 | 6.644 | 6.283 | 6.730 |
| Standard Deviation | 11.670 | 12.008 | 10.480 | 11.416 |
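As a consistency check on the two summary tables above, the "All Devices" mean should equal the size-weighted average of the three group means. The sketch below recomputes it from the tabulated sample sizes and means; the names `GroupSummary` and `pooledMean` are illustrative. Quartiles, medians, and standard deviations cannot be recombined this way from summary values alone, so only the means are checked.

```typescript
interface GroupSummary {
  n: number;    // sample size of the group
  mean: number; // group mean as reported in the table
}

// Size-weighted mean of several groups: sum(n_i * mean_i) / sum(n_i).
function pooledMean(groups: GroupSummary[]): number {
  let weightedSum = 0;
  let totalCount = 0;
  for (const group of groups) {
    weightedSum += group.n * group.mean;
    totalCount += group.n;
  }
  return weightedSum / totalCount;
}

// First table: Mobile Device, Legacy PC, Modern PC.
const firstTableMean = pooledMean([
  { n: 1000, mean: 9.596 },
  { n: 1000, mean: 12.217 },
  { n: 1000, mean: 10.251 },
]); // rounds to 10.688, the reported "All Devices" mean

// Second table: same groups.
const secondTableMean = pooledMean([
  { n: 1000, mean: 8.115 },
  { n: 1000, mean: 7.732 },
  { n: 1000, mean: 6.877 },
]); // rounds to 7.575, the reported "All Devices" mean
```

Both recomputed values agree with the tables to three decimal places, which is what equal group sizes of 1000 would predict.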

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nikulchev, E.; Ilin, D.; Kolyasnikov, P.; Magomedov, S.; Alexeenko, A.; Kosenkov, A.N.; Sokolov, A.; Malykh, A.; Ismatullina, V.; Malykh, S. Isolated Sandbox Environment Architecture for Running Cognitive Psychological Experiments in Web Platforms. Future Internet 2021, 13, 245. https://doi.org/10.3390/fi13100245
