Abstract

Web surveys are an integral part of feedback collection for Internet services and a research tool for studying respondents, including in health and psychology. Web technologies make it possible to conduct research on large samples. In mental health research, an important metric is reaction time in cognitive tests and in answering questions. The use of mobile devices such as smartphones and tablets in web surveys has increased markedly, so the impact of device types and operating systems needs to be investigated. This article proposes an architectural solution aimed at reducing the effect of device variability on the results of cognitive psychological experiments. An experiment was carried out to formulate the software and hardware requirements. Three groups of 1000 respondents each were considered, corresponding to three categories of computers and operating systems: Mobile Device, Legacy PC, and Modern PC. The results showed a slight bias in the estimates for each group, and the error for a given group of devices was upward for some tasks of the psychological experiment and downward for others. Therefore, for cognitive tests in which reaction time is critical, an architectural solution was synthesized for conducting psychological research in a web browser. The proposed solution takes into account the characteristics of the device each participant uses on the web platform and makes it possible to restrict access from devices that do not meet the specified criteria.

Keywords:

cognitive tests; psychological tests; web; sandboxing; software architecture; web platform

1. Introduction

Internet technologies are actively used to conduct surveys and web studies in psychology because such studies are usually cheaper, faster, and easier to conduct than those in other modes. Surveys are an integral part of feedback from Internet services [1] and are used for questionnaires in the education system [2] and in health care [3,4]. Web technologies make it possible to conduct psychological studies on large samples [5,6,7], recruiting respondents through crowdsourcing [5,6]. Web surveys also allow researchers to capture psychomotor parameters such as reaction time [8,9]. Response times reflect individual-dependent cognitive and affective factors as well as individual-independent contextual factors of the questions themselves. However, determining appropriate thresholds for identifying outliers is challenging. Sources of bias include the uncertainty of the experimental conditions [10,11], the increased risk of dropouts [11,12], differences in the configurations of the devices on which a psychological experiment is carried out [6,13,14], the coding of web pages [15], and the uncontrollable behavior of the respondent. The bias can be both upward and downward. Some users may choose an answer in a survey without thinking [16], so both abnormally slow and abnormally fast responses must be taken into account.

Web technologies used for psychological testing [17,18,19,20,21] include Flash, Java, and JavaScript with HTML5. These technologies introduce delays in data entry and in the display of stimuli, but for most studies the delay is acceptable. Several studies [13,22] have shown that the differences between the data obtained with these technologies are insignificant, and other studies show that the accuracy of web-based tools is comparable to that of laboratory tools [23,24]. Since browsers no longer support Flash and Java [25], there is no point in considering them for new developments. Beyond the choice of technology, the hardware also has an impact: as shown in [13], the differences can be nontrivial. Input and output devices also vary [6,26,27], for example, touchscreens versus a keyboard and mouse, as well as display size.

This paper addresses two issues:

- Is it possible to reduce the influence of a certain category of devices on the reaction time?

- Which sandbox environment architecture should be used to conduct research in psychology using web platforms?

Section 2 (Background) reviews current research on web surveys. Section 3 (Evaluation of Reaction Time Bias) presents the web survey data that served as the basis for formulating the technical requirements for the architectural solution; the survey results also illustrate that the influence of the device on reaction time can be nontrivial, which is consistent with existing findings. Section 4 (Proposed Sandbox Environment Architecture) presents the physical and logical structure of a psychological experiment implemented for web platforms, together with a diagram of the interaction between the web platform and a psychological experiment executed in an isolated sandbox, including the proposed API. Section 5 (Discussion) highlights the key features of the architectural solution and its limitations. Section 6 (Conclusions) summarizes the results.

2. Background

Reaction time can be an essential measure for assessing memory [28], in cognitive tests [29], for confirming the user’s identity [8], and in other tasks; organizational and methodological measures for conducting such experiments [23] are also important.

The use of mobile devices such as smartphones and tablets in web surveys has increased markedly, especially smartphones [30], since they allow respondents to take part in surveys wherever they are. This is due to the widespread use of both the devices themselves and high-speed networks, to the habit of using mobile services, including in medicine, for example, for monitoring the health of the elderly [31], and to the widespread introduction of online education during the pandemic [32]. Several studies [30,33] show that user movement contributes to the variability of responses; therefore, additional smartphone capabilities, such as the accelerometer [34], are used to detect movement. In addition to these findings, distractions (background music, conversation, etc.) are typical of web surveys in general: while their reaction speed is being tested, users may bend down to pick up a fallen object, adjust their clothes, and so on. Multitasking and the diversion of device resources to other processes [35,36] can be registered passively by software.

For studies in which the bias in the mean estimates of the measured indicators may be insignificant, laboratory studies [37] found that talking in the room and background music had a negligible effect on test results. At the same time, the magnitude of the bias is difficult to estimate. Screen size and web page optimization [38] are also only minor distractions. Thus, external distractions of the respondent are less likely to affect experimental results than delays caused by application responses [39]. In some laboratory tests, response times change significantly [40]; therefore, the choice of questions is important for assessing test quality, as is the choice of equipment [41] required to achieve a given level of bias in response time estimates.

3. Evaluation of Reaction Time Bias

The hypothesis considered here is that the bias in the mean reaction time when web interfaces are used on various devices is a systematic discrepancy that can be corrected programmatically, for example, by determining the type of operating system before the experiment.

The initial data were the results of a mass survey of students in the education system, conducted using a web platform [42,43]. The study involved 3786 students in grades 7–9 (45.8% boys) from secondary schools of the Russian Federation, aged 12.8–17.6 years.

To assess reaction time, two questions were chosen: the first was placed at the beginning of the psychological method, and the second was its last question.

Both questions require choosing one answer from several options. The key criterion for selecting questions for reaction time analysis was maximum ease of answering: the respondent is assumed to answer without deliberation. The selected questions are formulated as follows.

The first question, “I enjoy spending time with my parents”, offers the following answers: “never”, “sometimes”, “often”, “almost always”, “always”.

The last question, “My friends will help me if necessary”, offers the same answer options: “never”, “sometimes”, “often”, “almost always”, “always”.

Next, the total reaction time was calculated for each record, and records in the upper percentile of total reaction time were discarded, since an excessively long total time indicates a long pause while the questionnaire was being filled out.
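The trimming step can be sketched as follows. This is a minimal sketch, assuming that "the upper percentile" means records above the 99th percentile of total reaction time under the nearest-rank definition; the record shape and the names `percentile` and `dropUpperPercentile` are hypothetical and do not come from the paper.

```typescript
// Hypothetical record shape: one completed questionnaire and its total time in ms.
interface SurveyRecord {
  id: string;
  totalReactionMs: number;
}

// Nearest-rank percentile: the smallest value such that at least p% of the
// values are less than or equal to it. Integer arithmetic avoids rounding issues.
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("percentile of an empty list");
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
  return sorted[rank - 1];
}

// Discards records whose total reaction time exceeds the p-th percentile
// (p = 99 by default, i.e. roughly the slowest 1% of records is dropped).
function dropUpperPercentile(records: SurveyRecord[], p = 99): SurveyRecord[] {
  const cutoff = percentile(records.map(r => r.totalReactionMs), p);
  return records.filter(r => r.totalReactionMs <= cutoff);
}
```

Records tied at the cutoff are all kept, so slightly more than 99% of the records may remain.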

The participant’s reaction time for each question was determined by the formula:

$$RT = t_{\text{last}} - t_{\text{first}},$$

where $t_{\text{last}}$ is the time when the user last changed their answer and $t_{\text{first}}$ is the time when the question first appeared on the screen.
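The reaction time definition above (time of the last answer change minus time of the first appearance of the question) can be applied to a per-question event log, as in the sketch below. The event names `shown` and `answer` and the millisecond timestamps are assumptions for illustration; in a browser, such timestamps could come from `performance.now()`.

```typescript
// Hypothetical event log entries recorded by the questionnaire page.
type QuestionEvent =
  | { kind: "shown"; questionId: string; t: number }                 // question rendered
  | { kind: "answer"; questionId: string; t: number; value: string }; // answer (re)selected

// Reaction time = time of the LAST answer change minus the time the question
// FIRST appeared. Returns undefined if the question was never shown or answered.
function reactionTime(events: QuestionEvent[], questionId: string): number | undefined {
  let firstShown: number | undefined;
  let lastAnswer: number | undefined;
  for (const e of events) {
    if (e.questionId !== questionId) continue;
    if (e.kind === "shown") {
      firstShown = firstShown === undefined ? e.t : Math.min(firstShown, e.t);
    } else {
      lastAnswer = lastAnswer === undefined ? e.t : Math.max(lastAnswer, e.t);
    }
  }
  if (firstShown === undefined || lastAnswer === undefined) return undefined;
  return lastAnswer - firstShown;
}
```

Because only the first appearance and the last change count, revisiting a question and changing the answer extends its reaction time rather than starting a new measurement.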

The questionnaires were filled out on various devices. The operating system and its version were recorded using the Platform.js library v1.3.5 (https://www.npmjs.com/package/platform, accessed on 22 September 2021), and its OS field was used to determine the operating system.

Based on the operating system, three groups were formed: Mobile Device, Legacy PC, and Modern PC. A record was assigned to a group if its OS field contained one of the text fragments listed in Table 1, and each group was limited to 1000 records: the initial data were sorted chronologically, and the first 1000 records matching each criterion were selected. An All Devices group combining the three groups, 3000 records in total, was also formed.
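The group-forming procedure can be sketched as follows. The actual text fragments are given in Table 1 and are not reproduced here: the fragments in `GROUP_RULES` ("Android", "iOS", "Windows XP", "Windows 7", "Windows 10") are hypothetical placeholders, and the `os` field of each record is assumed to hold the string form of the operating system reported by Platform.js.

```typescript
type Group = "Mobile Device" | "Legacy PC" | "Modern PC";

interface OsRecord {
  timestamp: number; // submission time, used for chronological ordering
  os: string;        // OS string as reported by Platform.js
}

// HYPOTHETICAL rules standing in for Table 1: a record joins a group if its
// OS string contains any of the group's fragments.
const GROUP_RULES: Array<{ group: Group; fragments: string[] }> = [
  { group: "Mobile Device", fragments: ["Android", "iOS"] },
  { group: "Legacy PC", fragments: ["Windows XP", "Windows 7"] },
  { group: "Modern PC", fragments: ["Windows 10"] },
];

// Sorts records chronologically and keeps the first `limit` matches per group.
function formGroups(records: OsRecord[], limit = 1000): Map<Group, OsRecord[]> {
  const groups = new Map<Group, OsRecord[]>();
  for (const rule of GROUP_RULES) groups.set(rule.group, []);
  const sorted = [...records].sort((a, b) => a.timestamp - b.timestamp);
  for (const rec of sorted) {
    // The first matching rule wins, so a record lands in at most one group.
    const rule = GROUP_RULES.find(r => r.fragments.some(f => rec.os.indexOf(f) !== -1));
    if (rule === undefined) continue; // OS not covered by any rule
    const bucket = groups.get(rule.group)!;
    if (bucket.length < limit) bucket.push(rec);
  }
  return groups;
}
```

The All Devices group is then simply the concatenation of the three buckets.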

Table 1.

Group-forming rules.

For each of the groups, a statistical analysis was performed to determine the relationship between the response time when answering the questionnaire and the category of devices used.

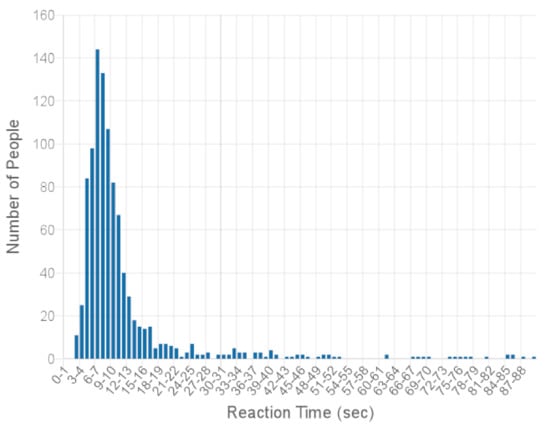
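The group comparisons below refer to both mean and median response times. The helper sketched here computes both for one group of reaction times; it is an illustration only and does not reproduce the paper's full statistical analysis. The median is worth reporting alongside the mean because reaction-time distributions are typically right-skewed, so a few slow answers shift the mean far more than the median.

```typescript
interface Summary {
  n: number;
  mean: number;
  median: number;
}

// Descriptive statistics for one group of reaction times (ms).
function summarize(times: number[]): Summary {
  if (times.length === 0) throw new Error("empty group");
  const sorted = [...times].sort((a, b) => a - b);
  const n = sorted.length;
  const mean = sorted.reduce((sum, x) => sum + x, 0) / n;
  const mid = Math.floor(n / 2);
  // For an even-sized group the median is the average of the two middle values.
  const median = n % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
  return { n, mean, median };
}
```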

For question PQ106-01, the statistical evaluation showed that respondents answering from mobile devices took less time than Modern PC users, while Legacy PC users typically took even longer to choose an answer. The data are given in Table 2.

Table 2.

Estimation of reaction time when answering question PQ106-01.

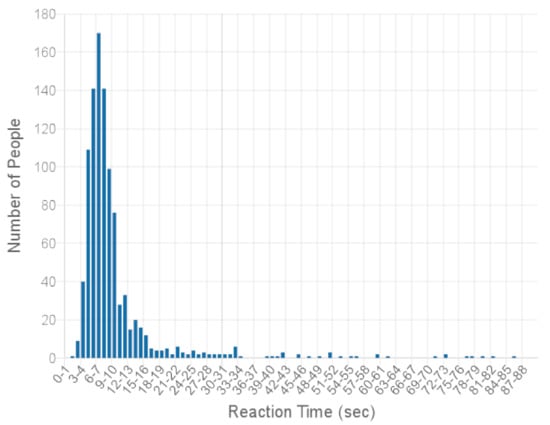

For question PQ610, the results (Table 3) show that respondents took longer to answer from mobile devices than Modern PC users did. The mean response time of Legacy PC users is longer than that of Modern PC users, while their median response time is longer than those of the other two categories.

Table 3.

Estimating the response time when answering question PQ610.

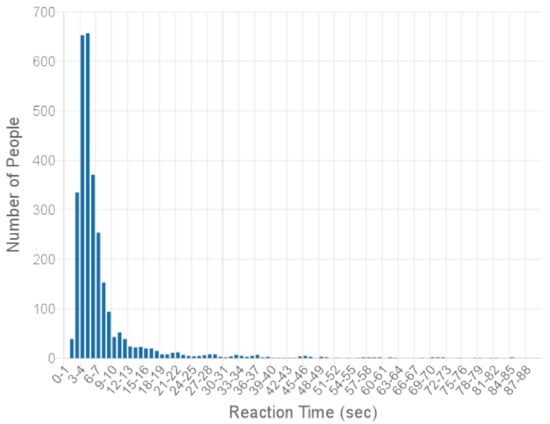

Distribution charts are presented in Appendix A, in Figure A1, Figure A2, Figure A3 and Figure A4 for question PQ106-01 and in Figure A5, Figure A6, Figure A7 and Figure A8 for question PQ610, respectively.

Thus, the experiment did not confirm the hypothesis.

From these results, it can be concluded that introducing correction coefficients for a particular category of devices is difficult, since the reaction time can deviate both upward and downward for different tasks within the same psychological method. It is therefore advisable to develop a sandbox environment architecture that makes it possible to specify which categories of devices are acceptable for research with a specific psychological method.

4. Proposed Sandbox Environment Architecture

Based on the evaluation of reaction time bias and an analysis of the technical requirements for programmable cognitive tests, an architectural solution was synthesized for conducting cognitive research in a web browser.

4.1. Programmable Psychological Test Structure

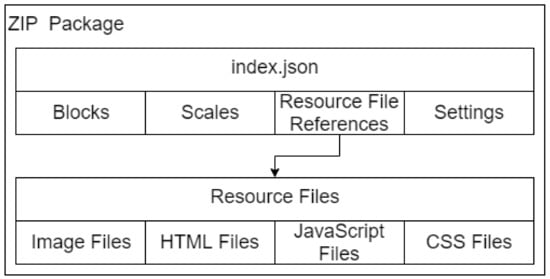

It is advisable to store the test as a packed ZIP archive (Figure 1) [44], which guarantees reliable delivery of the complete set of source files required for testing. The root of the archive must contain an index.json file that describes the structure and settings of the test and declares the full list of resource files that can be used in the psychological test. Resource files can be placed in the archive in any way convenient for the developer; for each resource file, index.json specifies its path relative to the root of the archive.
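The packaging rules above (index.json at the root, resource paths relative to the root) can be checked before a test is accepted. The sketch below assumes the archive has already been listed as entry names relative to its root, for example by a ZIP library; how that listing is obtained is outside the sketch, and `validateArchive` and `ResourceEntry` are hypothetical names.

```typescript
// Hypothetical minimal view of index.json: only the resource paths matter here.
interface ResourceEntry {
  alias: string;
  path: string;
}

// Checks an archive listing against the resources declared in index.json.
// Returns a list of problems; an empty list means the package is consistent.
function validateArchive(entryNames: string[], resources: ResourceEntry[]): string[] {
  const entries = new Set(entryNames);
  const problems: string[] = [];
  if (!entries.has("index.json")) problems.push("index.json is missing from the archive root");
  for (const r of resources) {
    // Paths must be relative to the root: reject absolute paths and ".." segments.
    if (r.path.startsWith("/") || r.path.split("/").indexOf("..") !== -1) {
      problems.push(`resource "${r.alias}" has a non-relative path: ${r.path}`);
    } else if (!entries.has(r.path)) {
      problems.push(`resource "${r.alias}" not found in the archive: ${r.path}`);
    }
  }
  return problems;
}
```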

Figure 1.

Structure of ZIP-archive containing psychological tests.

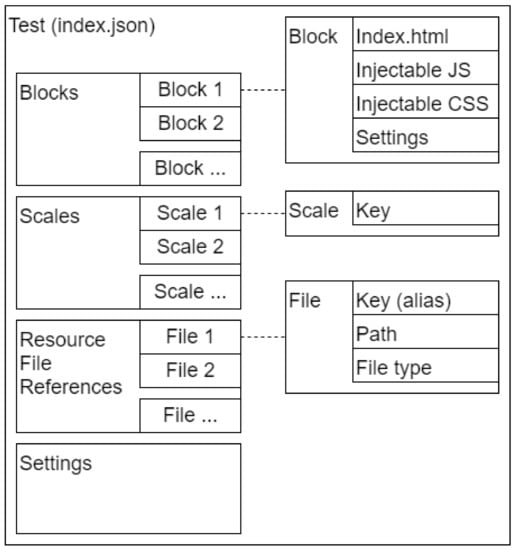

The test description is proposed to consist of four sections (Figure 2):

- Blocks: one or more test blocks, each of which is initialized by the platform. Each block declares which HTML file serves as its base page and which JS and CSS files must be injected for the block to work. Each block also has its own settings, such as an interrupt condition or a time limit.

- Scales: a list of scales whose values are calculated during testing. All the logic for calculating the values is implemented in the test itself; index.json declares only the names of the scales.

- Resources: a list of resource files that can be requested during the test. For each file, the section specifies its path in the archive, its type (Image/HTML/JS/CSS), and the alias by which it is accessed.

- Settings: settings for the entire test (for example, device requirements).

Figure 2.

File structure of index.json describing programmable psychological test.
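The four sections can be expressed as TypeScript types, which also makes simple consistency checks possible. The field names below (`html`, `js`, `css`, `timeLimitMs`, `minScreenWidth`, and so on) are hypothetical, since Figure 2 is not reproduced as text; the sketch only illustrates how blocks refer to resources by alias.

```typescript
// HYPOTHETICAL shape of index.json; field names are illustrative, not the paper's schema.
type ResourceType = "Image" | "HTML" | "JS" | "CSS";

interface BlockDescription {
  html: string;                // alias of the block's base HTML page
  js: string[];                // aliases of JS files injected into the block
  css: string[];               // aliases of CSS files injected into the block
  timeLimitMs?: number;        // optional per-block time limit
  interruptCondition?: string; // optional interrupt rule (format left open)
}

interface TestIndex {
  blocks: BlockDescription[];
  scales: string[]; // scale names only; values are computed by the test itself
  resources: Array<{ alias: string; path: string; type: ResourceType }>;
  settings: { minScreenWidth?: number; minScreenHeight?: number };
}

// Returns aliases used by blocks but not declared in the Resources section.
function undeclaredAliases(index: TestIndex): string[] {
  const declared = new Set(index.resources.map(r => r.alias));
  const missing: string[] = [];
  for (const block of index.blocks) {
    for (const alias of [block.html, ...block.js, ...block.css]) {
      if (!declared.has(alias) && missing.indexOf(alias) !== -1 === false) missing.push(alias);
    }
  }
  return missing;
}
```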

Currently, resource files are assumed to be of four types: Image, HTML, JS, or CSS. This list may be expanded in the future, for example, to support data or audio files. In the current solution, data of other types are assumed to be pre-converted into JS files so that they can be accessed programmatically.

The following extensions are supported for Image files: gif, jpg, jpeg, tiff, png.

The following extensions are supported for HTML files: html, htm, html5, xhtml.

The following extensions are supported for CSS files: css, css3.

Only one extension is supported for JS files: js.
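The extension lists above translate directly into a lookup that the platform could use when classifying archive entries. This is a minimal sketch; the function name `resourceTypeOf` is hypothetical, and case-insensitive matching is an assumption, since the text does not specify it.

```typescript
type ResourceType = "Image" | "HTML" | "JS" | "CSS";

// Supported extensions, as listed in the text.
const EXTENSIONS: Record<ResourceType, string[]> = {
  Image: ["gif", "jpg", "jpeg", "tiff", "png"],
  HTML: ["html", "htm", "html5", "xhtml"],
  CSS: ["css", "css3"],
  JS: ["js"],
};

// Maps a file path to its resource type, or undefined if the extension is unsupported.
// Case-insensitive matching is an assumption; the text does not specify it.
function resourceTypeOf(path: string): ResourceType | undefined {
  const fileName = path.slice(path.lastIndexOf("/") + 1); // ignore dots in directory names
  const dot = fileName.lastIndexOf(".");
  if (dot <= 0) return undefined; // no extension, or a dot-file such as ".htaccess"
  const ext = fileName.slice(dot + 1).toLowerCase();
  for (const type of Object.keys(EXTENSIONS) as ResourceType[]) {
    if (EXTENSIONS[type].indexOf(ext) !== -1) return type;
  }
  return undefined;
}
```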

4.2. Interaction of a Psychological Test with Web Platform

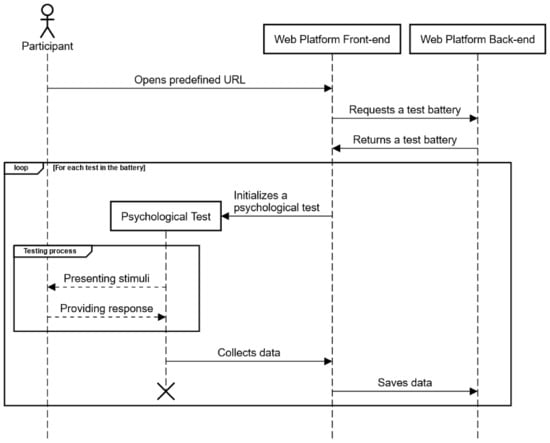

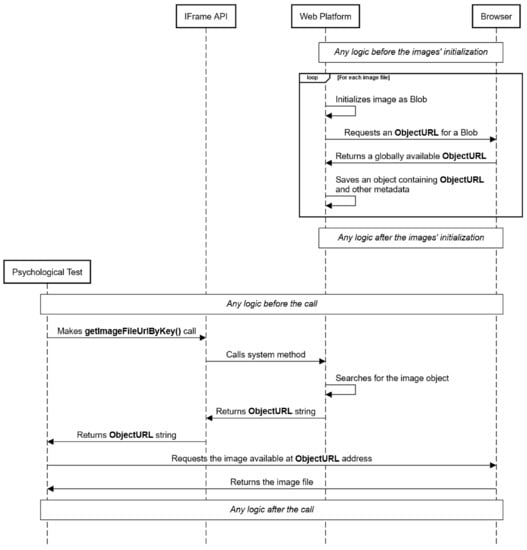

Psychological tests are typically presented in a series called a test battery [44,45]. After the web platform has loaded the entire battery, and before the research participant sees a test on the screen, the test is initialized programmatically (Figure 3).

Figure 3.

Sequence diagram of the test procedure in a web platform.

A test run consists of the following steps:

- The player selects the next test from the battery to present to the participant (tests are simply taken in order).

- All resource files of the test (Image, HTML, JS, CSS) are located by their extensions, and an ObjectURL is created for each of them.

- The content of the index.json file is read.

- Based on the content of index.json, the type of the test (programmable or questionnaire) is determined.

- The platform checks whether the test is allowed to run on the research participant’s device (based on the screen size and the test settings).

- A type-specific initialization procedure is then performed.

- Once the test has started, the first block of the programmable test is selected.
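The device check in the fifth step can be sketched as below, assuming that the Settings section declares minimum screen dimensions. The field names `minScreenWidth` and `minScreenHeight` are hypothetical, since the paper does not give the schema; in a browser, the screen size could be read from `window.screen.width` and `window.screen.height`.

```typescript
// HYPOTHETICAL device requirements taken from the Settings section of index.json.
interface DeviceRequirements {
  minScreenWidth?: number;  // CSS pixels
  minScreenHeight?: number; // CSS pixels
}

interface ScreenInfo {
  width: number;
  height: number;
}

// Returns true if the participant's screen satisfies every declared requirement.
// A limit that is not declared imposes no restriction.
function isLaunchAllowed(screenInfo: ScreenInfo, req: DeviceRequirements): boolean {
  if (req.minScreenWidth !== undefined && screenInfo.width < req.minScreenWidth) return false;
  if (req.minScreenHeight !== undefined && screenInfo.height < req.minScreenHeight) return false;
  return true;
}
```

Keeping the check a pure function of the declared settings means the same rule can be evaluated both before launch and later, when filtering collected data by device configuration.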

For this block, an iframe element must be created with the sandbox attribute set to “allow-scripts allow-same-origin”. Its src attribute must point to the HTML file specified in the block settings, and the iframe is then inserted into the container element on the web page. As an exception, to support Internet Explorer, src can be set to “about:blank”, and the content of the HTML file can instead be written into contentWindow.document using the open, write, and close methods.

Note that before the HTML file can be used, either directly or via its ObjectURL, it must be modified: a script tag containing the program code for accessing the platform API must be embedded inside its head tag. If the file has no head tag, it is considered invalid, since it would have no means of accessing the platform API. This applies only to files used as the web page inside an iframe; other HTML files are not modified.
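The head-tag rule can be sketched as a string transformation. This is a simplified, regular-expression-based sketch with the hypothetical name `injectApiScript`; a browser implementation could parse the page with `DOMParser` instead. Inlining the code assumes that it does not contain the sequence `</script>`.

```typescript
// Inserts an inline <script> with the API access code right after the opening
// <head> tag. Returns null when no <head> tag is found: such a page is treated
// as invalid, because the test inside it would have no access to the platform API.
function injectApiScript(html: string, apiCode: string): string | null {
  // Matches <head> or <head ...attributes...>, case-insensitively; not <header>.
  const headOpen = /<head(\s[^>]*)?>/i;
  const match = headOpen.exec(html);
  if (match === null) return null;
  const insertAt = match.index + match[0].length;
  return html.slice(0, insertAt) + `<script>${apiCode}</script>` + html.slice(insertAt);
}
```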

After the iframe is created, the API is initialized on the web page side, which allows the page to process calls coming from the iframe. When the test actually connects to the API depends on its implementation; as a rule, this happens at the very beginning of the test, since each test needs to set the size of its work area, which is done through the provided API. When the API is initialized on the psychological test side, the test in the iframe also selects the API version it will use. This provides backward compatibility for previously developed tests if the platform API changes significantly.
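Version selection at initialization can be sketched as a lookup in a table of supported API versions. The version numbers and the assignment of Appendix B methods to versions below are hypothetical; the point is that the platform keeps serving the version a test was written against, so older tests keep working after the API evolves.

```typescript
// HYPOTHETICAL version table: which Appendix B methods each API version exposes.
interface ApiVersion {
  version: number;
  methods: string[];
}

const SUPPORTED_VERSIONS: ApiVersion[] = [
  { version: 1, methods: ["setIframeSize", "getWindowSize", "saveEvent", "nextBlock"] },
  {
    version: 2,
    methods: ["setIframeSize", "setBackgroundColor", "getWindowSize", "saveEvent", "getEventsByTags", "nextBlock"],
  },
];

// Called when the test initializes the API with the version it was written for.
function selectApiVersion(requested: number): ApiVersion {
  const found = SUPPORTED_VERSIONS.find(v => v.version === requested);
  if (found === undefined) throw new Error(`unsupported API version: ${requested}`);
  return found;
}

// A call is allowed only if the method exists in the version the test selected.
function isMethodAvailable(selected: ApiVersion, method: string): boolean {
  return selected.methods.indexOf(method) !== -1;
}
```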

If the test consists of several blocks, the same procedure is repeated when moving to the next block; the iframe of the previous block is removed from the page beforehand.

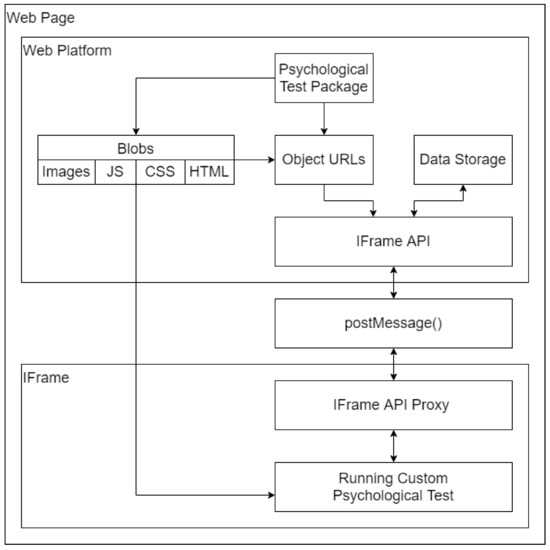

Data exchange between the API components (the platform web page on one side and the psychological test in the iframe on the other) is implemented through the postMessage method, as shown in Figure 4. The exchange is asynchronous, so rendering in the browser is not blocked and events generated by the research participant can still be processed.
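On the platform side, postMessage-based calls can be handled by a small dispatcher that matches each request to a handler and returns a response carrying the same id, so the test can pair responses with its pending requests. The message envelope (`type`, `id`, `method`, `params`) is a hypothetical format, since the paper does not publish its wire format; the sketch is kept free of browser globals so that it can run anywhere.

```typescript
// HYPOTHETICAL message envelopes; the paper does not publish its wire format.
interface ApiRequest {
  type: "api-request";
  id: number;     // chosen by the test to match responses to requests
  method: string; // an Appendix B method name, e.g. "getWindowSize"
  params: unknown[];
}

interface ApiResponse {
  type: "api-response";
  id: number;
  result?: unknown;
  error?: string;
}

type Handler = (...params: unknown[]) => unknown;

// Platform-side handling of one message received from the test's iframe.
// Returns null for messages that are not API requests, so they can be ignored.
function dispatch(handlers: Record<string, Handler>, data: unknown): ApiResponse | null {
  if (typeof data !== "object" || data === null) return null;
  const msg = data as Partial<ApiRequest>;
  if (msg.type !== "api-request" || typeof msg.id !== "number" || typeof msg.method !== "string") {
    return null;
  }
  const id = msg.id;
  const method = msg.method;
  // hasOwnProperty guards against inherited names such as "toString".
  if (!Object.prototype.hasOwnProperty.call(handlers, method)) {
    return { type: "api-response", id, error: `unknown method: ${method}` };
  }
  try {
    const params = Array.isArray(msg.params) ? msg.params : [];
    return { type: "api-response", id, result: handlers[method](...params) };
  } catch (e) {
    return { type: "api-response", id, error: e instanceof Error ? e.message : String(e) };
  }
}
```

In the browser, the platform page would call `dispatch` from a `message` event listener, first checking that `event.source` is the iframe's `contentWindow`, and send the returned object back with `postMessage`.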

Figure 4.

Scheme of interaction and data exchange between the platform web page and the programmable psychological test in the iframe.

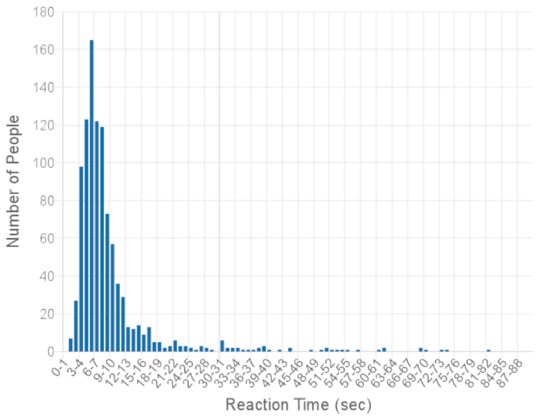

Links to resource files (ObjectURLs) are passed through the API, while the files themselves are transferred by the browser (Figure 5): files available through an ObjectURL are accessible not only to the web page itself but also to the iframes inside the platform page.
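The split between passing links and passing files can be sketched as a registry that creates one URL per resource up front and answers lookups by alias, in the spirit of the getImageFileUrlByKey, getJsFileUrlByKey, and getCssFileUrlByKey methods of Appendix B. `ResourceRegistry` is a hypothetical name; the URL factory is injected so the sketch does not depend on browser globals. In a browser, it would wrap `URL.createObjectURL(new Blob([bytes]))`, and `URL.revokeObjectURL` would release the URLs.

```typescript
type ResourceType = "Image" | "HTML" | "JS" | "CSS";

interface Resource {
  alias: string;
  type: ResourceType;
  bytes: Uint8Array; // file content extracted from the test archive
}

class ResourceRegistry {
  private readonly urls = new Map<string, { type: ResourceType; url: string }>();

  // Creates one URL per resource when the test is loaded.
  constructor(resources: Resource[], createUrl: (r: Resource) => string) {
    for (const r of resources) this.urls.set(r.alias, { type: r.type, url: createUrl(r) });
  }

  // Only the URL string crosses the postMessage boundary, never the file content.
  getUrlByKey(alias: string, expected: ResourceType): string | undefined {
    const entry = this.urls.get(alias);
    return entry !== undefined && entry.type === expected ? entry.url : undefined;
  }

  // Releases every URL, e.g. when the test finishes.
  dispose(revoke: (url: string) => void): void {
    this.urls.forEach(entry => revoke(entry.url));
    this.urls.clear();
  }
}
```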

Figure 5.

Sequence diagram of the procedure for accessing an image resource file.

The API proposed for the interaction between the psychological test inside the iframe and the web platform is presented in Appendix B.

5. Discussion

As the assessment of reaction time to trivial questions and a number of other studies [46] show, many factors affect the accuracy of the results of cognitive research. In addition to the latency of input devices, response time can be influenced by the type of device, screen size, and operating system features.

The estimates obtained do not show that any particular group of devices introduces a predictable error in response time. The error probably depends on the specific psychological test and on the form in which tasks are presented.

This paper proposes an architecture that allows requirements to be imposed on the research participant’s device. The requirements are specified in the test itself, namely in its index.json file, and are checked before the test is run. The size of the work area and all the logic of the experiment are also defined in the test itself. This prevents a psychological test from being run in conditions that differ from those intended by the developer. It also makes it possible to separate the participants of interest from participants with an unsuitable device configuration already at the data collection stage.

The proposed architecture is limited by the capabilities of the client’s web browser. Specialized tools can spoof the User-Agent string, which may allow a participant to take a test on a device that does not meet the specified requirements. For this reason, it is advisable to conduct research on small groups of participants in laboratory conditions using identical equipment.

6. Conclusions

Existing studies, discussed above, note differences in response times when different devices are used for psychological research on web platforms [10,11,12,13,14,15,16,23,28,29,30,31,32,33,47]. In this regard, the paper considered whether the influence of a certain category of devices on reaction time can be reduced, as well as the development of an architectural solution that reduces the impact of device differences when conducting psychological research on web platforms.

To examine this, a survey was conducted using a web platform, in which 3786 students in grades 7–9 took part. The results were divided into three device categories (mobile devices, legacy PC, and modern PC), and the data on two questions from the questionnaire were analyzed statistically. The analysis showed differences between the device groups. Importantly, for different questions, the difference relative to the other groups could be either upward or downward. It was therefore concluded that restricting the permitted device characteristics is more expedient than trying to correct the error caused by device differences.

Therefore, this paper proposes an architectural solution aimed at reducing the effect of device variability on the results of cognitive psychological experiments. It involves isolating the context of a psychological experiment from the web platform. The architectural solution considers the characteristics of the device used by participants to undergo psychological research in the web platform and makes it possible to restrict access to those whose devices do not meet the specified criteria in the psychological methodology. The limitations of the architectural solution include the fact that its capabilities are dictated by the functionality of web browsers.

Future studies will use a wider range of statistical tools.

Author Contributions

Conceptualization, E.N. and S.M. (Sergey Malykh); methodology, E.N. and D.I.; software, D.I. and P.K.; validation, A.N.K., A.S., A.A., S.M. (Shamil Magomedov), A.M., V.I. and S.M. (Sergey Malykh); formal analysis, E.N., D.I., A.N.K., A.S., A.A., S.M. (Shamil Magomedov), A.M., V.I. and S.M. (Sergey Malykh); resources, D.I.; data curation, E.N., D.I., A.N.K., A.S., A.A., S.M. (Shamil Magomedov), A.M., V.I. and S.M. (Sergey Malykh); writing—original draft preparation, E.N. and D.I.; writing—review and editing, P.K., A.N.K., A.S., A.A., S.M. (Shamil Magomedov), A.M., V.I. and S.M. (Sergey Malykh); supervision, E.N.; project administration, E.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Russian Science Foundation, grant number 17-78-30028.

Data Availability Statement

Not applicable, the study does not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

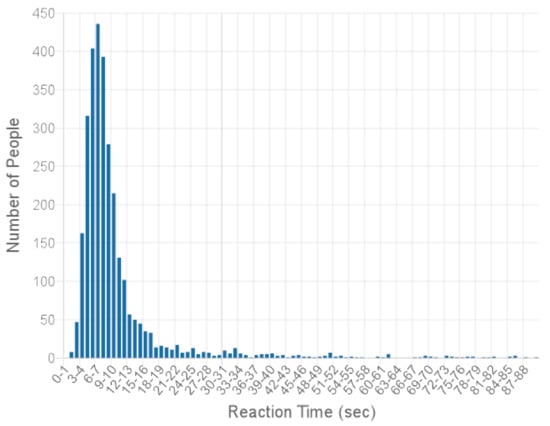

Figure A1.

Reaction time distribution for Mobile Device category, question PQ106-01. Missing values in the histogram: 5.

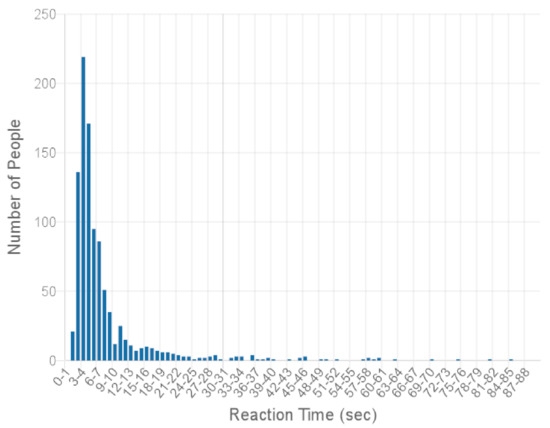

Figure A2.

Reaction time distribution for Legacy PC category, question PQ106-01. Missing values in the histogram: 8.

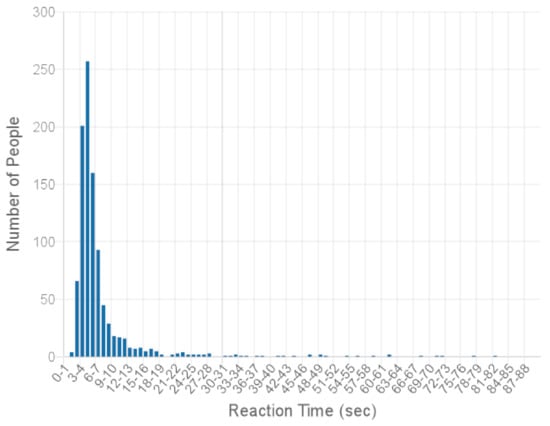

Figure A3.

Reaction time distribution for Modern PC category, question PQ106-01. Missing values in the histogram: 6.

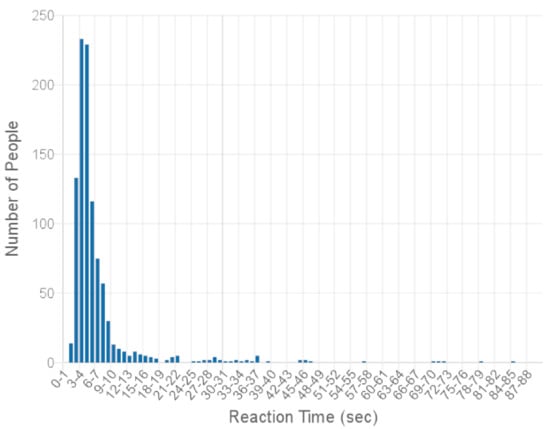

Figure A4.

Reaction time distribution for All Devices category, question PQ106-01. Missing values in the histogram: 19.

Figure A5.

Reaction time distribution for Mobile Device category, question PQ610. Missing values in the histogram: 4.

Figure A6.

Reaction time distribution for Legacy PC category, question PQ610. Missing values in the histogram: 6.

Figure A7.

Reaction time distribution for Modern PC category, question PQ610. Missing values in the histogram: 3.

Figure A8.

Reaction time distribution for All Devices category, question PQ610. Missing values in the histogram: 13.

Appendix B

Table A1.

Table of API methods for interaction between web platform and programmable test.


| Method Name | Parameters | Returned Values | Description |
|---|---|---|---|
| setIframeSize | Size: an object with two fields (width, height). Width: working area width. Height: working area height. Both can be specified in any units allowed by the browser. | - | Sets the size of the work area for the psychological test. |
| setBackgroundColor | Color: any valid value of the background-color CSS property | - | Sets the background color of the page. |
| getWindowSize | - | Size: an object with two fields (width, height) | Returns an object with the current size of the working area of the browser window. |
| getJsFileUrlByKey | Key: key (alias) used to access the file | ObjectURL: file link | Finds the file specified in the Resources section and returns a link to it. |
| getCssFileUrlByKey | Key: key (alias) used to access the file | ObjectURL: file link | Finds the file specified in the Resources section and returns a link to it. |
| getImageFileUrlByKey | Key: key (alias) used to access the file | ObjectURL: file link | Finds the file specified in the Resources section and returns a link to it. |
| getJsFileUrls | - | Array<ObjectURL>: links to the JS files specified in index.json for the current block | Returns a list of links to the JS files of the current block. |
| getCssFileUrls | - | Array<ObjectURL>: links to the CSS files specified in index.json for the current block | Returns a list of links to the CSS files of the current block. |
| injectJS | - | - | Uses the getJsFileUrls method to find links to the JS files and, for each of them, creates a script tag inside the body tag to load it on the current page inside the iframe. |
| injectCSS | - | - | Uses the getCssFileUrls method to find links to the CSS files and, for each of them, creates a link tag inside the head tag to load it on the current page inside the iframe. |
| nextBlock | - | - | Ends the current block of the test and starts the next block. If there is no next block, the test ends. |
| interrupt | - | - | Interrupts the current block (or the whole test, depending on the interrupt settings). The user is shown a message with a “Next” button. |
| isInterrupted | - | Interrupted: flag indicating whether the interrupt condition has been reached (True if reached, False otherwise) | Returns a flag signaling that an interrupt condition has been reached. |
| saveEvent | Tags: array of tags. Event: data object | - | Saves the event object with its data and attaches the tags to it for later retrieval. |
| getEventsByTags | Tags: array of tags | Events: an array of events, each event has the form: | Returns all saved events that match the given set of tags. |
| getEventsByTag | Tag: tag | Events: an array of events, each event has the form: | Returns all saved events matching the passed tag. |
| setScaleValue | Key: scale key. Value: scale value | - | Stores the specified value under the specified scale key. |
| getScaleValue | Key: scale key | Value: scale value | Returns the value previously saved under the specified scale key. |
| getMetadata | - | Metadata: metadata object | Returns an object with service information (for example, test start time, browser version). |
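The event and scale methods of Table A1 can be backed by a simple in-memory store, sketched below. `TestState` and `StoredEvent` are hypothetical names. The sketch assumes that getEventsByTags returns events carrying all of the given tags (the table does not say whether all or any tag must match), that scale values are numbers, and, as an added safeguard, that only scales declared in index.json can be written.

```typescript
interface StoredEvent {
  tags: string[];
  data: unknown;
}

class TestState {
  private readonly events: StoredEvent[] = [];
  private readonly scales = new Map<string, number>();
  private readonly declaredScales: string[];

  constructor(declaredScales: string[]) {
    this.declaredScales = [...declaredScales];
  }

  saveEvent(tags: string[], data: unknown): void {
    this.events.push({ tags: [...tags], data });
  }

  // Assumption: an event matches only if it carries ALL of the requested tags.
  getEventsByTags(tags: string[]): StoredEvent[] {
    return this.events.filter(e => tags.every(t => e.tags.indexOf(t) !== -1));
  }

  getEventsByTag(tag: string): StoredEvent[] {
    return this.getEventsByTags([tag]);
  }

  // Assumed safeguard: only scales declared in index.json can be written.
  setScaleValue(key: string, value: number): void {
    if (this.declaredScales.indexOf(key) === -1) throw new Error(`undeclared scale: ${key}`);
    this.scales.set(key, value);
  }

  getScaleValue(key: string): number | undefined {
    return this.scales.get(key);
  }
}
```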

References

- Callegaro, M.; Yang, Y. The role of surveys in the era of “big data”. In The Palgrave Handbook of Survey Research; Palgrave Macmillan: Cham, Switzerland, 2018; pp. 175–192.

- Nadile, E.M.; Williams, K.D.; Wiesenthal, N.J.; Cooper, K.M. Gender Differences in Student Comfort Voluntarily Asking and Answering Questions in Large-Enrollment College Science Courses. J. Microbiol. Biol. Educ. 2021, 22, e00100-21.

- Butler, C.C.; Connor, J.T.; Lewis, R.J.; Verheij, T. Answering patient-centred questions efficiently: Response-adaptive platform trials in primary care. Br. J. Gen. Pract. 2018, 68, 294–295.

- Mutabazi, E.; Ni, J.; Tang, G.; Cao, W. A Review on Medical Textual Question Answering Systems Based on Deep Learning Approaches. Appl. Sci. 2021, 11, 5456.

- Stewart, N.; Chandler, J.; Paolacci, G. Crowdsourcing samples in cognitive science. Trends Cogn. Sci. 2017, 21, 736–748.

- Gureckis, T.M.; Martin, J.; McDonnell, J.; Chan, P. psiTurk: An open-source framework for conducting replicable behavioral experiments online. Behav. Res. Methods 2016, 48, 829–842.

- Reinecke, K.; Gajos, K.Z. LabintheWild: Conducting Large-Scale Online Experiments with Uncompensated Samples. In Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing, New York, NY, USA, 14–18 March 2015; pp. 1364–1378.

- Magomedov, S.; Gusev, A.; Ilin, D.; Nikulchev, E. Users’ Reaction Time for Improvement of Security and Access Control in Web Services. Appl. Sci. 2021, 11, 2561.

- Magomedov, S.G.; Kolyasnikov, P.V.; Nikulchev, E.V. Development of technology for controlling access to digital portals and platforms based on estimates of user reaction time built into the interface. Russ. Technol. J. 2020, 8, 34–46.

- Hilbig, B.E. Reaction time effects in lab- versus Web-based research: Experimental evidence. Behav. Res. Methods 2016, 48, 1718–1724.

- Reips, U.-D. Standards for Internet-based experimenting. Exp. Psychol. 2002, 49, 243–256.

- Zhou, H.; Fishbach, A. The pitfall of experimenting on the web: How unattended selective attrition leads to surprising (yet false) research conclusions. J. Personal. Soc. Psychol. 2016, 111, 493–504.

- Reimers, S.; Stewart, N. Presentation and response timing accuracy in Adobe Flash and HTML5/JavaScript Web experiments. Behav. Res. Methods 2015, 47, 309–327.

- Antoun, C.; Couper, M.P.; Conrad, F.G. Effects of Mobile versus PC Web on Survey Response Quality: A Crossover Experiment in a Probability Web Panel. Public Opin. Q. 2017, 81, 280–306.

- Trübner, M. Effects of Header Images on Different Devices in Web Surveys. Surv. Res. Methods 2020, 14, 43–53.

- Conrad, F.G.; Couper, M.P.; Tourangeau, R.; Zhang, C. Reducing speeding in web surveys by providing immediate feedback. Surv. Res. Methods 2017, 11, 45–61.

- Von Bastian, C.C.; Locher, A.; Ruflin, M. Tatool: A Java-based open-source programming framework for psychological studies. Behav. Res. Methods 2013, 45, 108–115.

- De Leeuw, J.R.; Motz, B.A. jsPsych: A JavaScript library for creating behavioral experiments in a Web browser. Behav. Res. Methods 2015, 47, 1–12.

- Basok, B.M.; Frenkel, S.L. Formalized approaches to assessing the usability of the user interface of web applications. Russ. Technol. J. 2021, 9, 7–21.

- Tomic, S.T.; Janata, P. Ensemble: A Web-based system for psychology survey and experiment management. Behav. Res. Methods 2007, 39, 635–650.

- Stoet, G. PsyToolkit: A Novel Web-Based Method for Running Online Questionnaires and Reaction-Time Experiments. Teach. Psychol. 2017, 44, 24–31. [Google Scholar] [CrossRef]

- Schubert, T.W.; Murteira, C.; Collins, E.C.; Lopes, D. Scripting RT: A Software Library for Collecting Response Latencies in Online Studies of Cognition. PLoS ONE 2013, 8, e67769.

- McGraw, K.O.; Tew, M.D.; Williams, J.E. The Integrity of Web-Delivered Experiments: Can You Trust the Data? Psychol. Sci. 2000, 11, 502–506.

- van Steenbergen, H.; Bocanegra, B.R. Promises and pitfalls of Web-based experimentation in the advance of replicable psychological science: A reply to Plant (2015). Behav. Res. Methods 2016, 48, 1713–1717.

- Theisen, K.J. Programming languages in chemistry: A review of HTML5/JavaScript. J. Cheminform. 2019, 11, 11.

- Robinson, S.J.; Brewer, G. Performance on the traditional and the touch screen, tablet versions of the Corsi Block and the Tower of Hanoi tasks. Comput. Hum. Behav. 2016, 60, 29–34.

- Frank, M.C.; Sugarman, E.; Horowitz, A.C.; Lewis, M.L.; Yurovsky, D. Using Tablets to Collect Data From Young Children. J. Cogn. Dev. 2016, 17, 1–17.

- Ackerman, R.; Koriat, A. Response latency as a predictor of the accuracy of children’s reports. J. Exp. Psychol. Appl. 2011, 17, 406–417.

- Kochari, A.R. Conducting Web-Based Experiments for Numerical Cognition Research. J. Cogn. 2019, 2, 39.

- Toninelli, D.; Revilla, M. Smartphones vs. PCs: Does the device affect the web survey experience and the measurement error for sensitive topics? A replication of the Mavletova & Couper’s 2013 experiment. Surv. Res. Methods 2016, 10, 153–169.

- Chen, C.; Johnson, J.G.; Charles, A.; Weibel, N. Understanding Barriers and Design Opportunities to Improve Healthcare and QOL for Older Adults through Voice Assistants. In Proceedings of the 23rd International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’21), Virtual Event, USA; New York, NY, USA, 23–25 October 2021.

- Singh, R.; Timbadia, D.; Kapoor, V.; Reddy, R.; Churi, P.; Pimple, O. Question paper generation through progressive model and difficulty calculation on the Promexa Mobile Application. Educ. Inf. Technol. 2021, 26, 4151–4179.

- Höhne, J.K.; Schlosser, S. Survey Motion: What can we learn from sensor data about respondents’ completion and response behavior in mobile web surveys? Int. J. Soc. Res. Methodol. 2019, 22, 379–391.

- Osipov, I.V.; Nikulchev, E. Wawcube puzzle, transreality object of mixed reality. Adv. Intell. Syst. Comput. 2019, 881, 22–33.

- Höhne, J.K.; Schlosser, S.; Couper, M.P.; Blom, A.G. Switching away: Exploring on-device media multitasking in web surveys. Comput. Hum. Behav. 2020, 111, 106417.

- Schwarz, H.; Revilla, M.; Weber, W. Memory Effects in Repeated Survey Questions: Reviving the Empirical Investigation of the Independent Measurements Assumption. Surv. Res. Methods 2020, 14, 325–344.

- Wenz, A. Do distractions during web survey completion affect data quality? Findings from a laboratory experiment. Soc. Sci. Comput. Rev. 2021, 39, 148–161.

- Toninelli, D.; Revilla, M. How mobile device screen size affects data collected in web surveys. In Advances in Questionnaire Design, Development, Evaluation and Testing; 2020; pp. 349–373. Available online: https://onlinelibrary.wiley.com/doi/10.1002/9781119263685.ch14 (accessed on 22 September 2021).

- Erradi, A.; Waheed Iqbal, A.M.; Bouguettaya, A. Web Application Resource Requirements Estimation based on the Workload Latent. Memory 2020, 22, 27–35.

- Kim, J.; Francisco, E.; Holden, J.; Lensch, R.; Kirsch, B.; Dennis, R.; Tommerdahl, M. Visual vs. Tactile Reaction Testing Demonstrates Problems with Online Cognitive Testing. J. Sci. Med. 2020, 2, 1–10.

- Holden, J.; Francisco, E.; Tommerdahl, A.; Tommerdahl, A.; Lensch, R.; Kirsch, B.; Zai, L.; Tommerdahl, M. Methodological problems with online concussion testing. Front. Hum. Neurosci. 2020, 14, 394.

- Nikulchev, E.; Ilin, D.; Silaeva, A.; Kolyasnikov, P.; Belov, V.; Runtov, A.; Malykh, S. Digital Psychological Platform for Mass Web-Surveys. Data 2020, 5, 95.

- Gusev, A.; Ilin, D.; Kolyasnikov, P.; Nikulchev, E. Effective Selection of Software Components Based on Experimental Evaluations of Quality of Operation. Eng. Lett. 2020, 28, 420–427.

- Nikulchev, E.; Ilin, D.; Belov, B.; Kolyasnikov, P.; Kosenkov, A. E-learning Tools on the Healthcare Professional Social Networks. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 29–34.

- Faust, M.E.; Balota, D.A.; Spieler, D.H.; Ferraro, F.R. Individual differences in information-processing rate and amount: Implications for group differences in response latency. Psychol. Bull. 1999, 125, 777–799.

- Magomedov, S.; Ilin, D.; Silaeva, A.; Nikulchev, E. Dataset of user reactions when filling out web questionnaires. Data 2020, 5, 108.

- Ozkok, O.; Zyphur, M.J.; Barsky, A.P.; Theilacker, M.; Donnellan, M.B.; Oswald, F.L. Modeling measurement as a sequential process: Autoregressive confirmatory factor analysis (AR-CFA). Front. Psychol. 2019, 10, 2108.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).