Aggregated Indices in Website Quality Assessment

Abstract

1. Introduction

2. Website Quality

2.1. Design Standards and Guidelines

- de jure standards: ISO standards and other standards approved by an authorised organisational unit; documents laying down, inter alia, the principles, requirements, characteristics, parameters, methods or rules ensuring the quality of a particular component, or other normative documents of a legally binding nature;

- de facto standards: customary solutions which do not result from formal arrangements but are nevertheless often regarded as standard, for example the use of a particular graphic or colour to mark objects intended for a specific purpose.

2.2. High-Quality Website Specification

2.3. Research Into Website Quality

3. Materials and Methods

4. Results

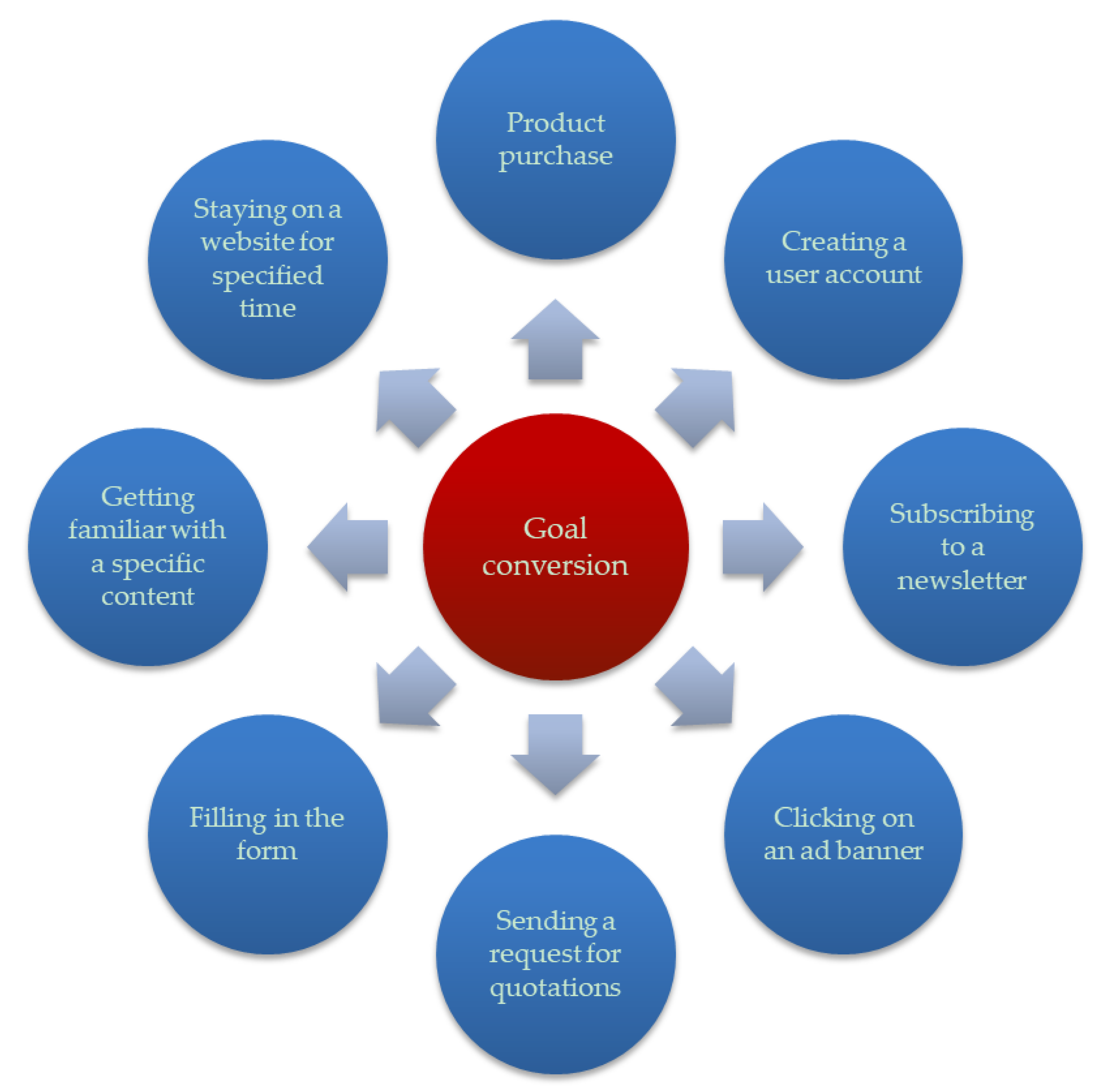

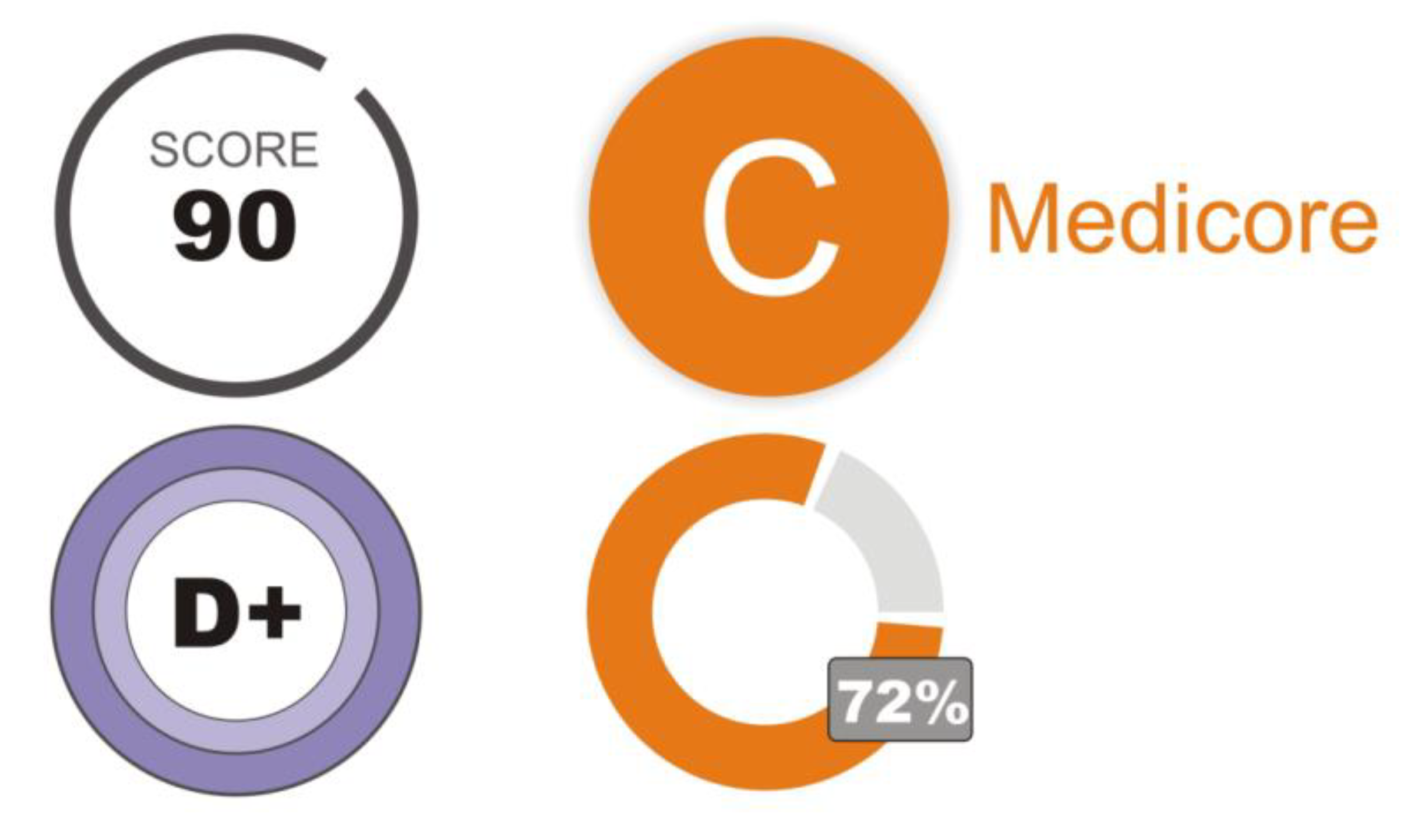

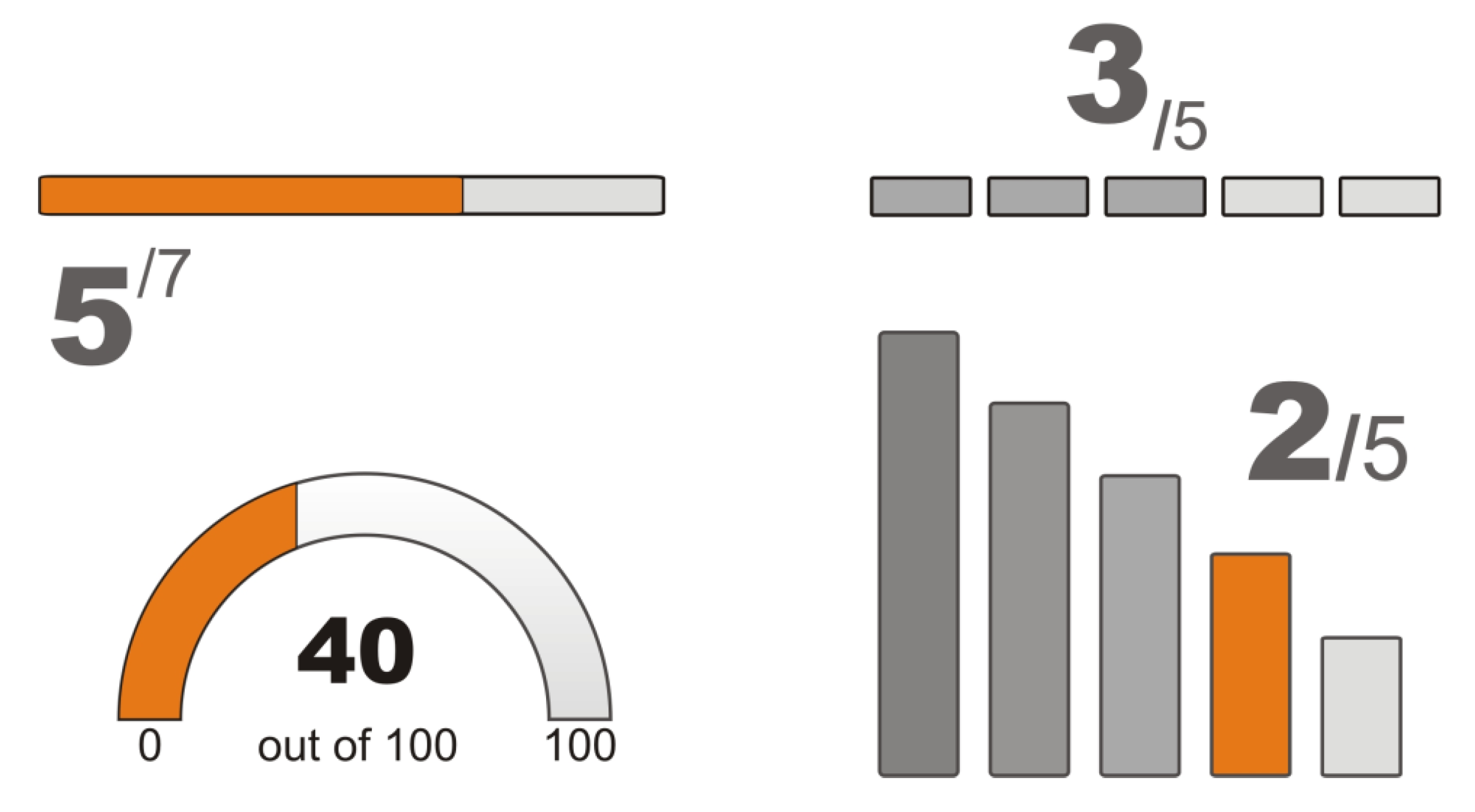

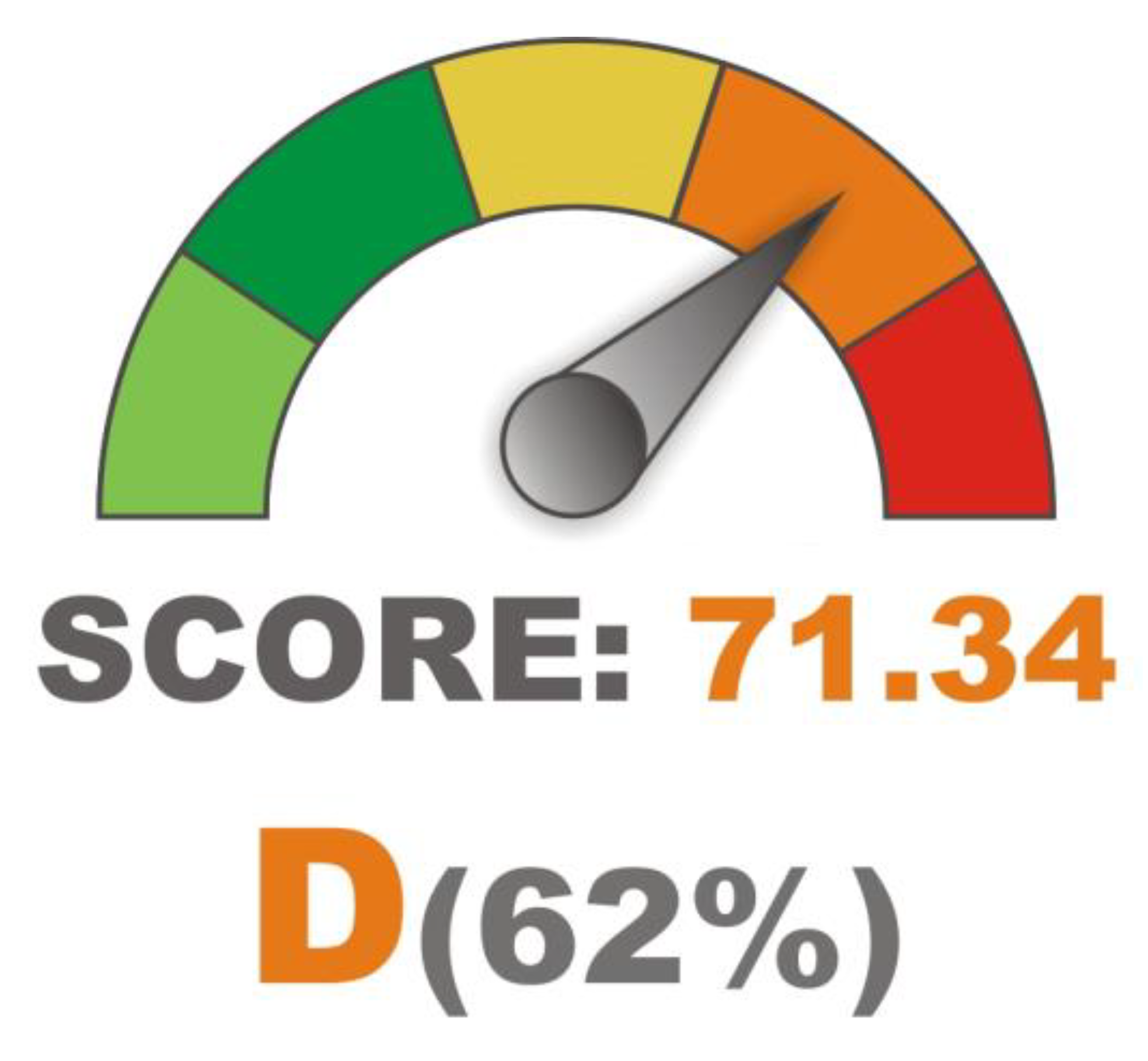

4.1. The Form of Presentation Matters

4.2. SEO Indices

4.3. Aggregated Performance Indices

4.4. Content Quality Indices

4.5. Link Quality

4.6. Website Accessibility for the Disabled

4.7. Measurement of the Global Website Potential

4.8. Aggregated Indices

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Wells, J.D.; Parboteeah, V.; Valacich, J.S. Online impulse buying: Understanding the interplay between consumer impulsiveness and website quality. J. Assoc. Inf. Syst. 2011, 12, 3.

- Kincl, T.; Štrach, P. Measuring website quality: Asymmetric effect of user satisfaction. Behav. Inf. Technol. 2012, 31, 647–657.

- Juran, J.; Godfrey, A.B. Quality Handbook; Republished McGraw-Hill: New York, NY, USA, 1999.

- Chung, L.; do Prado Leite, J.C.S. On non-functional requirements in software engineering. In Conceptual Modeling: Foundations and Applications; Springer: Berlin/Heidelberg, Germany, 2009; pp. 363–379.

- Sam, M.; Fazli, M.; Tahir, M.N.H. Website quality and consumer online purchase intention of air ticket. Int. J. Basic Appl. Sci. 2009, 9. Available online: https://ssrn.com/abstract=2255286 (accessed on 31 March 2020).

- Kim, S.; Stoel, L. Dimensional hierarchy of retail website quality. Inf. Manag. 2004, 41, 619–633.

- Wells, J.D.; Valacich, J.S.; Hess, T.J. What Signal Are You Sending? How Website Quality Influences Perceptions of Product Quality and Purchase Intentions. MIS Q. 2011, 35, 373.

- Lin, H.-F. The Impact of Website Quality Dimensions on Customer Satisfaction in the B2C E-commerce Context. Total Qual. Manag. Bus. Excel. 2007, 18, 363–378.

- User Expectations and Rankings of Quality Factors in Different Web Site Domains. Int. J. Electron. Commer. 2001, 6, 9–33.

- Loiacono, E.T. WebQual™: A website quality instrument. Ph.D. Thesis, University of Georgia, Athens, GA, USA, 2000.

- Giannakoulopoulos, A.; Konstantinou, N.; Koutsompolis, D.; Pergantis, M.; Varlamis, I. Academic Excellence, Website Quality, SEO Performance: Is there a Correlation? Futur. Internet 2019, 11, 242.

- Sikorski, M. Usługi On-Line: Jakość, Interakcje, Satysfakcja Klienta [Online Services: Quality, Interactions, Customer Satisfaction]; Wyd. PJWSTK: Warsaw, Poland, 2012.

- Bai, B.; Law, R.; Wen, I. The impact of website quality on customer satisfaction and purchase intentions: Evidence from Chinese online visitors. Int. J. Hosp. Manag. 2008, 27, 391–402.

- Schlosser, A.E.; White, T.B.; Lloyd, S.M. Converting Web Site Visitors into Buyers: How Web Site Investment Increases Consumer Trusting Beliefs and Online Purchase Intentions. J. Mark. 2006, 70, 133–148.

- Helms, M.M. Encyclopedia of Management; Thomson Gale: Detroit, MI, USA, 2006.

- Dickinger, A.; Stangl, B. Website performance and behavioral consequences: A formative measurement approach. J. Bus. Res. 2013, 66, 771–777.

- Belanche, D.; Casalo, L.V.; Guinaliu, M. Website usability, consumer satisfaction and the intention to use a website: The moderating effect of perceived risk. J. Retail. Consum. Serv. 2012, 19, 124–132.

- Lee, Y.; Kozar, K.A. Understanding of website usability: Specifying and measuring constructs and their relationships. Decis. Support Syst. 2012, 52, 450–463.

- Schubert, D. Influence of Mobile-friendly Design to Search Results on Google Search. Procedia - Soc. Behav. Sci. 2016, 220, 424–433.

- Król, K. Comparative Analysis of the Performance of Selected Raster Map Viewers. Geomat. Landmanag. Landsc. 2018, 2, 23–32.

- Luna-Nevarez, C.; Hyman, M.R. Common practices in destination website design. J. Destin. Mark. Manag. 2012, 1, 94–106.

- Hasan, L.; Abuelrub, E. Assessing the quality of web sites. Appl. Comput. Inform. 2011, 9, 11–29.

- Lowry, P.B.; Wilson, D.W.; Haig, W.L. A Picture is Worth a Thousand Words: Source Credibility Theory Applied to Logo and Website Design for Heightened Credibility and Consumer Trust. Int. J. Hum.-Comput. Interact. 2013, 30, 63–93.

- Federici, S.; Bracalenti, M.; Meloni, F.; Luciano, J.V. World Health Organization disability assessment schedule 2.0: An international systematic review. Disabil. Rehab. 2016, 39, 2347–2380.

- Baye, M.R.; Santos, B.D.L.; Wildenbeest, M.R. Search Engine Optimization: What Drives Organic Traffic to Retail Sites? J. Econ. Manag. Strat. 2015, 25, 6–31.

- Djonov, E. Website hierarchy and the interaction between content organization, webpage and navigation design: A systemic functional hypermedia discourse analysis perspective. Inf. Des. J. 2007, 15, 144–162.

- Griffiths, K.M.; Christensen, H. Quality of web based information on treatment of depression: Cross sectional survey. BMJ 2000, 321, 1511–1515.

- Holliman, G.; Rowley, J. Business to business digital content marketing: Marketers’ perceptions of best practice. J. Res. Interact. Mark. 2014, 8, 269–293.

- Rahimnia, F.; Hassanzadeh, J.F. The impact of website content dimension and e-trust on e-marketing effectiveness: The case of Iranian commercial saffron corporations. Inf. Manag. 2013, 50, 240–247.

- Hudson, S.; Thal, K. The Impact of Social Media on the Consumer Decision Process: Implications for Tourism Marketing. J. Travel Tour. Mark. 2013, 30, 156–160.

- Kenekayoro, P.; Buckley, K.; Thelwall, M. Hyperlinks as inter-university collaboration indicators. J. Inf. Sci. 2014, 40, 514–522.

- Plaza, B. Google Analytics for measuring website performance. Tour. Manag. 2011, 32, 477–481.

- Cui, M.; Hu, S. Search Engine Optimization Research for Website Promotion. In Proceedings of the 2011 International Conference of Information Technology, Computer Engineering and Management Sciences, Nanjing, China, 24–25 September 2011; Institute of Electrical and Electronics Engineers (IEEE): New York, NY, USA, 2011; Volume 4, pp. 100–103.

- Król, K. Forgotten agritourism: Abandoned websites in the promotion of rural tourism in Poland. J. Hosp. Tour. Technol. 2019, 10, 431–442.

- Gao, L.; Bai, X. Online consumer behaviour and its relationship to website atmospheric induced flow: Insights into online travel agencies in China. J. Retail. Consum. Serv. 2014, 21, 653–665.

- Chi, T. Understanding Chinese consumer adoption of apparel mobile commerce: An extended TAM approach. J. Retail. Consum. Serv. 2018, 44, 274–284.

- Liu, C.; Arnett, K.P.; Litecky, C. Design Quality of Websites for Electronic Commerce: Fortune 1000 Webmasters’ Evaluations. Electron. Mark. 2000, 10, 120–129.

- Joury, A.; Alshathri, M.; Alkhunaizi, M.; Jaleesah, N.; Pines, J.M. Internet Websites for Chest Pain Symptoms Demonstrate Highly Variable Content and Quality. Acad. Emerg. Med. 2016, 23, 1146–1152.

- Charnock, D.; Shepperd, S.; Needham, G.; Gann, R. DISCERN: An instrument for judging the quality of written consumer health information on treatment choices. J. Epidemiol. Community Heal. 1999, 53, 105–111.

- Griffiths, K.M.; Christensen, H.; Witteman, H.; Shepperd, S. Website Quality Indicators for Consumers. J. Med. Internet Res. 2005, 7, 55.

- Silberg, W.M.; Lundberg, G.D.; Musacchio, R.A. Assessing, Controlling, and Assuring the Quality of Medical Information on the Internet. JAMA 1997, 277, 1244.

- Dueppen, A.J.; Bellon-Harn, M.L.; Radhakrishnan, N.; Manchaiah, V. Quality and Readability of English-Language Internet Information for Voice Disorders. J. Voice 2019, 33, 290–296.

- Galati, A.; Crescimanno, M.; Tinervia, S.; Siggia, D. Website quality and internal business factors. Int. J. Wine Bus. Res. 2016, 28, 308–326.

- Mateos, M.B.; Chamorro-Mera, A.; González, F.J.M.; López, O.G. A new Web assessment index: Spanish universities analysis. Internet Res. 2001, 11, 226–234.

- Miranda, F.J.; Cortés, R.; Barriuso, C. Quantitative evaluation of e-banking web sites: An empirical study of Spanish banks. Electron. J. Inf. Syst. Eval. 2006, 9, 73–82.

- Miranda-Gonzalez, F.J.; Sanguino, R.; Bañegil, T.M. Quantitative assessment of European municipal web sites. Internet Res. 2009, 19, 425–441.

- Sanders, J.; Galloway, L. Rural small firms’ website quality in transition and market economies. J. Small Bus. Enterp. Dev. 2013, 20, 788–806.

- Miranda-Gonzalez, F.J.; Rubio, S.; Chamorro, A.; Chamorro-Mera, A. The Web as a Marketing Tool in the Spanish Foodservice Industry: Evaluating the Websites of Spain’s Top Restaurants. J. Foodserv. Bus. Res. 2015, 18, 146–162.

- Ecer, F. A Hybrid Banking Websites Quality Evaluation Model Using AHP and COPRAS-G: A Turkey Case. Technol. Econ. Dev. Econ. 2014, 20, 757–782.

- Saaty, T.L. Decision making with the analytic hierarchy process. Int. J. Serv. Sci. 2008, 1, 83.

- Zavadskas, E.K.; Kaklauskas, A.; Turskis, Z.; Tamošaitienė, J. Multi-attribute decision-making model by applying grey numbers. Informatica 2009, 20, 305–320.

- Gregg, D.; Walczak, S. The relationship between website quality, trust and price premiums at online auctions. Electron. Commer. Res. 2010, 10, 1–25.

- Rolland, S.; Freeman, I. A new measure of e-service quality in France. Int. J. Retail. Distrib. Manag. 2010, 38, 497–517.

- Hur, Y.; Ko, Y.J.; Valacich, J. A Structural Model of the Relationships Between Sport Website Quality, E-Satisfaction, and E-Loyalty. J. Sport Manag. 2011, 25, 458–473.

- Islam, A.; Tsuji, K. Evaluation of Usage of University Websites in Bangladesh. DESIDOC J. Libr. Inf. Technol. 2011, 31, 469–479.

- Chou, W.-C.; Cheng, Y.-P. A hybrid fuzzy MCDM approach for evaluating website quality of professional accounting firms. Expert Syst. Appl. 2012, 39, 2783–2793.

- Zech, C.; Wagner, W.; West, R. The Effective Design of Church Web Sites: Extending the Consumer Evaluation of Web Sites to the Non-Profit Sector. Inf. Syst. Manag. 2013, 30, 92–99.

- Al-Debei, M.; Akroush, M.N.; Ashouri, M.I. Consumer attitudes towards online shopping. Internet Res. 2015, 25, 707–733.

- Wang, L.; Law, R.; Guillet, B.D.; Hung, K.; Fong, D.K.C. Impact of hotel website quality on online booking intentions: eTrust as a mediator. Int. J. Hosp. Manag. 2015, 47, 108–115.

- Ali, F. Hotel website quality, perceived flow, customer satisfaction and purchase intention. J. Hosp. Tour. Technol. 2016, 7, 213–228.

- Chi, T. Mobile Commerce Website Success: Antecedents of Consumer Satisfaction and Purchase Intention. J. Internet Commer. 2018, 17, 1–26.

- Martínez, A.B.; López, O.G.; Sanguino, R.; Buenadicha-Mateos, M. Analysis and Evaluation of the Largest 500 Family Firms’ Websites through PLS-SEM Technique. Sustainability 2018, 10, 557.

- Liang, D.; Zhang, Y.; Xu, Z.; Jamaldeen, A. Pythagorean fuzzy VIKOR approaches based on TODIM for evaluating internet banking website quality of Ghanaian banking industry. Appl. Soft Comput. 2019, 78, 583–594.

- Hsu, Y.C.; Chen, T.-J.; Chu, F.Y.; Liu, H.-Y.; Chou, L.-F.; Hwang, S.-J. Official Websites of Local Health Centers in Taiwan: A Nationwide Study. Int. J. Environ. Res. Public Heal. 2019, 16, 399.

- Saverimoutou, A.; Mathieu, B.; Vaton, S. Web View: Measuring & Monitoring Representative Information on Websites. In Proceedings of the 2019 22nd Conference on Innovation in Clouds, Internet and Networks and Workshops (ICIN), Paris, France, 19–21 February 2019; pp. 133–138.

- Garcia-Madariaga, J.; Virto, N.R.; López, M.F.B.; Manzano, J.A. Optimizing website quality: The case of two superstar museum websites. Int. J. Cult. Tour. Hosp. Res. 2019, 13, 16–36.

- Law, R. Evaluation of hotel websites: Progress and future developments (invited paper for ‘luminaries’ special issue of International Journal of Hospitality Management). Int. J. Hosp. Manag. 2019, 76, 2–9.

- Windhager, F.; Salisu, S.; Mayr, E. Exhibiting Uncertainty: Visualizing Data Quality Indicators for Cultural Collections. Informatics 2019, 6, 29.

- Ismailova, R.; Inal, Y. Web site accessibility and quality in use: A comparative study of government Web sites in Kyrgyzstan, Azerbaijan, Kazakhstan and Turkey. Univers. Access Inf. Soc. 2016, 16, 987–996.

- Sinha, P. Web Accessibility Analysis of Government Tourism Websites in India. SSRN Electron. J. 2018, 26–27.

- Król, K. Stopień optymalizacji witryn internetowych obiektów turystyki wiejskiej dla wyszukiwarek internetowych [The degree of search engine optimisation of rural tourism facility websites]. Roczniki Naukowe Ekonomii Rolnictwa i Rozwoju Obszarów Wiejskich 2018, 105, 110–121.

- Nielsen, J. Website Response Times. Nielsen Norman Group. Available online: https://goo.gl/MymMco (accessed on 31 March 2020).

- Rasmusson, M.; Eklund, M. “It’s easier to read on the Internet—You just click on what you want to read…”. Educ. Inf. Technol. 2012, 18, 401–419.

- Broda, B.; Ogrodniczuk, M.; Nitoń, B.; Gruszczynski, W. Measuring Readability of Polish Texts: Baseline Experiments. In Proceedings of the 9th International Conference on Language Resources and Evaluation, Reykjavik, Iceland, 26–31 May 2014.

- Lo, K.; Ramos, F.; Rogo, R. Earnings management and annual report readability. J. Account. Econ. 2017, 63, 1–25.

- Loughran, T.; McDonald, B. Measuring Readability in Financial Disclosures. J. Finance 2014, 69, 1643–1671.

- Sehra, S.S.; Singh, J.; Rai, H. Assessing OpenStreetMap Data Using Intrinsic Quality Indicators: An Extension to the QGIS Processing Toolbox. Futur. Internet 2017, 9, 15.

- Król, K. Wirtualizacja oferty agroturystycznej [Virtualisation of the agritourism offer]. Handel Wewnętrzny 2018, 1, 274–283.

- Benbow, M. File not found: The problems of changing URLs for the World Wide Web. Internet Res. 1998, 8, 247–250.

- Król, K.; Zdonek, D. Peculiarity of the bit rot and link rot phenomena. Glob. Knowl. Mem. Commun. 2019, 69, 20–37.

- Vollenwyder, B.; Iten, G.; Brühlmann, F.; Opwis, K.; Mekler, E. Salient beliefs influencing the intention to consider Web Accessibility. Comput. Hum. Behav. 2019, 92, 352–360.

- Yoon, K.; Dols, R.; Hulscher, L.; Newberry, T. An exploratory study of library website accessibility for visually impaired users. Libr. Inf. Sci. Res. 2016, 38, 250–258.

- Król, K. Marketing Potential of Websites of Rural Tourism Facilities in Poland. Econ. Reg. Stud./Stud. Èkon. i Reg. 2019, 12, 158–172.

- Thakur, A.; Sangal, A.L.; Bindra, H. Quantitative Measurement and Comparison of Effects of Various Search Engine Optimization Parameters on Alexa Traffic Rank. Int. J. Comput. Appl. 2011, 26, 15–23.

- Król, K. Jakość witryn internetowych w zarządzaniu marketingowym na przykładzie obiektów turystyki wiejskiej w Polsce [Website quality in marketing management, exemplified by rural tourism facilities in Poland]. Infrastruktura i Ekologia Terenów Wiejskich; Komisja Technicznej Infrastruktury Wsi Oddziału Polskiej Akademii Nauk: Kraków, Poland, 2018; Volume III, p. 181.

- Król, K.; Bitner, A. Impact of raster compression on the performance of a map application. Geomat. Landmanag. Landsc. 2019, 3, 41–51.

- Król, K.; Ziernicka-Wojtaszek, A.; Zdonek, D. Polish agritourism farm website quality and the nature of services provided. Organ. Manag. Sci. Q. 2019, 3, 73–93.

- YSlow. Available online: http://yslow.org/ (accessed on 31 March 2020).

- Król, K.; Strzelecki, A.; Zdonek, D. Credibility of automatic appraisal of domain names. Sci. Pap. Sil. Univ. Technol. Organ. Manag. Ser. 2018, 127, 107–115.

| Source | Dimensions | Area |
|---|---|---|
| [52] | Information quality, web design | Auction website |
| [53] | Ease of use, information content, security/privacy, fulfilment reliability, post-purchase customer service | French “e-tail” market |
| [54] | Information, interaction, design, system, fulfilment | Sport website |
| [55] | Content, organisation, readability, navigation and links, user interface design, performance and effectiveness, educational information | University websites in Bangladesh |
| [56] | System quality (accessibility, navigability, usability, privacy); information quality (relevance, understandability, richness, currency); service quality (responsiveness, reliability, assurance, empathy) | The top four CPA (certified public accountant) firms in Taiwan |
| [57] | Technical adequacy (ease of navigation, interactivity, search engine list accuracy, valid links); web content presentation (usefulness, clarity, currency, conciseness, accuracy); web appearance (attractiveness, organisation, effective use of fonts, effective use of colours, innovative use of multimedia) | Church websites |
| [58] | Functionality (ease of navigation, responsiveness, interactivity, ease of accessing the site); search facilities (simplicity, speed and effectiveness of the process of collecting data and information about prices, performance, attributes and other aspects of products) | Online shopping website in Jordan |
| [59] | Usability; functionality; security and privacy | Hotel website |
| [60] | Usability (clear language, easily understandable information, user-friendly layout, well-organised information, graphics matched with texts, simple website navigation); functionality (hotel reservation information, hotel facilities information, information on promotions/special offers, price information on hotel rooms, information on the destination where the hotel is located); security and privacy (privacy policy relating to customers’ personal data, information on the secure online payment system, information on third-party recognition) | Hotel website |
| [61] | System quality, information quality, service quality | Chinese e-commerce website |
| [62] | Content (about us, blog, newsletter, copyright, legal disclaimer, FAQ, news, privacy policy, trust mark, terms of use); form (animation, colour background, pictures, colour text, video); function (search, e-mail, fax, postal address, telephone, last update, forums, languages, site map, navigation menu, register, RSS); social network (Facebook, Flickr, Instagram, LinkedIn, Pinterest, Tumblr, Twitter, Weibo, Xing, YouTube) | Corporate website |
| [63] | Product quality; ease of use; security; responsiveness; privacy | Banking website in Ghana |

| Item | Quality Index | Testing Application | Result Range | Unit |
|---|---|---|---|---|
| 1. | On-page SEO Score | Neil Patel SEO Analyzer | 0–100 | points |
| 2. | SEO Score | Positioning | 0–5 | points |
| 3. | Score | ZadroWeb | 0–100 | % |
| 4. | SEO Score | Website Grader | 0–100 | points |
| 5. | SEO Results | SEOptimer | A–F | letter |
| 6. | SEO Score | SEO Tester Online | 0–100 | points |
| 7. | SEO Site Checkup score | SEO Site Checkup | 0–100 | points |
| 8. | SEO Score | Geekflare | 0–100 | points |
| 9. | SEO Score | Semtec | 0–100 | points |
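
The SEO tools listed above report on different scales (0–100 points, 0–5 points, percentages, A–F letter grades), so their raw results cannot be compared directly. A minimal sketch of rescaling them to a common 0–100 range is given below. It assumes linear min-max rescaling for numeric scores and a hypothetical letter-to-points mapping (`LETTER_TO_POINTS`) that none of the listed tools is known to publish; the function name `normalise` is likewise illustrative.

```python
# Hypothetical mapping of letter grades to points. The tools in the table
# do not publish such a conversion, so these values are an assumption.
LETTER_TO_POINTS = {"A": 100.0, "B": 80.0, "C": 60.0, "D": 40.0, "E": 20.0, "F": 0.0}


def normalise(value, lo=None, hi=None):
    """Rescale a score to the 0-100 range.

    Numeric scores are min-max rescaled from [lo, hi]; letter grades
    (A-F) are looked up in the assumed LETTER_TO_POINTS mapping.
    """
    if isinstance(value, str):
        grade = value.strip().upper()
        if grade not in LETTER_TO_POINTS:
            raise ValueError(f"unknown letter grade: {value!r}")
        return LETTER_TO_POINTS[grade]
    if lo is None or hi is None or hi <= lo:
        raise ValueError("numeric scores need a valid [lo, hi] range")
    if not lo <= value <= hi:
        raise ValueError(f"{value} lies outside [{lo}, {hi}]")
    return 100.0 * (value - lo) / (hi - lo)


if __name__ == "__main__":
    print(normalise(3.5, 0, 5))   # Positioning, 0-5 scale -> 70.0
    print(normalise(74, 0, 100))  # Website Grader, 0-100 scale -> 74.0
    print(normalise("B"))         # SEOptimer letter grade -> 80.0 (assumed mapping)
```

Linear rescaling preserves the ordering within each tool but says nothing about whether, say, 3.5 out of 5 in one tool means the same as 70 out of 100 in another; that equivalence is itself an assumption.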

| Item | Quality Index | Testing Application | Result Range | Unit |
|---|---|---|---|---|
| 1. | YSlow Score, PageSpeed Score | GTMetrix | 0–100 | % |
| 2. | Performance grade | Pingdom Website Speed Test | 0–100 | points |
| 3. | SCORE | Dareboost | 0–100 | % |
| 4. | Speed Index | Dareboost | >0 | points |
| 5. | Optimum Score | GiftOfSpeed | 0–100 | points |
| 6. | Performance Score | Geekflare | 0–100 | points |
| 7. | PageSpeed Insights | Google PageSpeed Insights | 0–100 | points |
| 8. | Google PageSpeed score | Uptrends Website Speed Test | 0–100 | points |

| Item | Quality Index | Subject under Assessment | Testing Application | Result Range | Unit |
|---|---|---|---|---|---|
| 1. | Fully Loaded Time, Load Time, First Byte, Start Render | Website loading time | GTMetrix, Dareboost, Pingdom Website Speed Test | >0 | seconds |
| 2. | Time spent on the website | Estimation of the time spent by users on the website | Positioning | >0 | seconds/minutes |
| 3. | Backlinks | Hyperlinks | Neil Patel SEO Analyzer | >0 | pcs |
| 4. | Total Page Size | Website volume in bytes | Pingdom Website Speed Test | >0 | KB/MB |
| 5. | Requests | Number of website components | Pingdom Website Speed Test | >0 | pcs |
| 6. | Organic Keywords | Number of keywords | Neil Patel SEO Analyzer | >0 | pcs |
| 7. | Organic monthly traffic | Traffic estimation | Neil Patel SEO Analyzer | >0 | pcs |
| 8. | HTTP transmission time; Average latency time (ping) | Server parameters | Webspeed Intensys | >0 | seconds |
| 9. | Fully Loaded and others | Web Page Performance Test | WebPageTest | A–F; >0 | letters; seconds |

| Item | Quality Index | Subject under Assessment | Testing Application | Result Range | Unit |
|---|---|---|---|---|---|
| 1. | Semantic result | Text semantics audit (qualitative metric); assessment of marketing usefulness | Blink Audit Tool | 0–100 | % |
| 2. | Perceptual accessibility of a text | Measurement of the comprehensibility of Polish non-literary texts | Jasnopis | 0–7 | points |
| 3. | Text to HTML Ratio (THR) | Measurement of the content-to-code ratio (a quantitative measure) | Siteliner | 0–100 | % |
| 4. | Perceptual accessibility of a text | Text comprehensibility measurement | Logios | 0–22 | points |
| 5. | Code to Text Ratio | Code-to-text ratio | Webanaliza | 0–100 | % |
| 6. | Perceptual accessibility of a text | Readability | Readable | A–F | letters |
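
The Text to HTML Ratio reported by Siteliner, and the related code-to-text ratio reported by Webanaliza, relate the amount of visible text to the size of the page source. The sketch below shows one way to compute such a ratio with Python's standard `html.parser` module. It assumes that text inside `<script>` and `<style>` elements does not count as content and that runs of whitespace collapse to single spaces; the tools' exact counting rules are not documented here, so their figures may differ. The names `text_to_html_ratio` and `_TextCollector` are hypothetical.

```python
from html.parser import HTMLParser


class _TextCollector(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def text_to_html_ratio(html):
    """Return the visible-text length as a percentage of the total HTML length."""
    if not html:
        return 0.0
    collector = _TextCollector()
    collector.feed(html)
    collector.close()
    # Collapse whitespace so that indentation is not counted as content.
    text = " ".join("".join(collector.parts).split())
    return 100.0 * len(text) / len(html)
```

For `"<p>Hello world</p>"` the 11 characters of text against 18 characters of source give a ratio of about 61%; a page padded with inline scripts and styles scores lower even when its visible text is unchanged.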

| Item | Index | Subject under Assessment | Testing Application |
|---|---|---|---|
| 1. | Broken links | Number of broken links (‘link rot’ phenomenon assessment) | Broken Link Checker |
| 2. | Backlinks | Number of backlinks | Neil Patel SEO Analyzer |
| 3. | Total links, link types, Dofollow/Nofollow, other | Assessment of internal and external link profile | Dr. Link Check |
| 4. | Site Checker: Free Broken Link Tool | Number of broken links | Dead link checker |
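
Link-profile tools such as Dr. Link Check split the hyperlinks on a page into internal and external ones and report how many are marked `rel="nofollow"`. A rough sketch of that classification, using only the Python standard library, is shown below. The helper `link_profile` is hypothetical; it treats a link as internal only when its host matches the page's host exactly (so `www.example.com` and `example.com` count as different hosts) and ignores non-HTTP schemes such as `mailto:`. Detecting broken links would additionally require an HTTP request per link, which this sketch does not make.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class _LinkCollector(HTMLParser):
    """Collects (href, rel) pairs from <a> elements."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            attributes = dict(attrs)
            href = attributes.get("href")
            if href:
                # rel may be missing or valueless (None), so default to "".
                self.links.append((href, (attributes.get("rel") or "").lower()))


def link_profile(html, page_url):
    """Count internal, external and nofollow links on one page (hypothetical helper)."""
    collector = _LinkCollector()
    collector.feed(html)
    collector.close()
    host = urlparse(page_url).netloc.lower()
    profile = {"internal": 0, "external": 0, "nofollow": 0}
    for href, rel in collector.links:
        parsed = urlparse(urljoin(page_url, href))  # resolve relative links
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar
        if parsed.netloc.lower() == host:
            profile["internal"] += 1
        else:
            profile["external"] += 1
        if "nofollow" in rel.split():
            profile["nofollow"] += 1
    return profile
```

For example, a page at `https://example.com/` linking to `/about`, to `https://example.org/x` with `rel="nofollow"` and to a `mailto:` address yields one internal link, one external link and one nofollow link.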

| Item | Index | Subject under Assessment | Testing Application | Result Range | Unit |
|---|---|---|---|---|---|
| 1. | Accessibility | Website accessibility for the disabled | Utilitia | 0.00–10.00 | points |
| 2. | Errors, Contrast Errors | Web content accessibility | WAVE Web Accessibility Evaluation Tool | >0 | points |
| 3. | Number of Rules: Violations, Warnings, Manual Checks, Passed | Ruleset: HTML5 and ARIA Techniques | Functional Accessibility Evaluator (FAE) | 0–100 | Score, Status |
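
Contrast errors of the kind reported by WAVE refer to the colour-contrast requirement of the Web Content Accessibility Guidelines (WCAG). The sketch below implements the WCAG 2.x relative-luminance and contrast-ratio formulas, with the level AA thresholds of 4.5:1 for normal text and 3:1 for large text. It illustrates the criterion only and is not WAVE's own code; the function names are hypothetical and colours are assumed to be given as six-digit hex strings.

```python
def _channel(c8):
    """Linearise one sRGB channel given as 0-255 (WCAG 2.x definition)."""
    c = c8 / 255.0
    # WCAG 2.0/2.1 state the threshold as 0.03928.
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_colour):
    """Relative luminance of a colour such as '#1a2b3c'."""
    hex_colour = hex_colour.lstrip("#")
    r, g, b = (int(hex_colour[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg, bg):
    """(L1 + 0.05) / (L2 + 0.05), with L1 the lighter of the two colours."""
    l1, l2 = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (l1 + 0.05) / (l2 + 0.05)


def passes_aa(fg, bg, large_text=False):
    """Level AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

Black on white gives the maximum ratio of 21:1. Grey `#767676` on white just passes AA for normal text (about 4.54:1), whereas the slightly lighter `#777777` falls short (about 4.48:1) and would only pass for large text.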

| Item | Index | Testing Tool or Measurement Method |
|---|---|---|
| 1. | Serpstat Visibility (SV), Serpstat SE Traffic | Serpstat |
| 2. | Alexa Global Rank, Alexa Rank in Country (Poland) | Alexa Tool |
| 3. | SimilarWeb Global Rank, SimilarWeb Traffic Overview (Total Visits) | SimilarWeb |
| 4. | Open PageRank | Open PageRank Online Tool |

| Item | Quality Index | Subject under Assessment | Testing Application | Result Range | Unit |
|---|---|---|---|---|---|
| 1. | Woorank Score | Website quality | Woorank Website Review Tool & SEO Checker | 0–100 | points |
| 2. | Overall | Website quality | Nibbler | 0–10 | points |
| 3. | Global score | Website quality | Yellow Lab Tools | A–F; 0–100 | letters and points |
| 4. | Best Practices Score | Website quality | Geekflare | 0–100 | points |
| 5. | Page Authority | Score developed by Moz | Page Authority Checker | 0–100 | points |
| 6. | Domain Authority | Search engine ranking score developed by Moz | Moz Free Domain SEO Analysis Tool | 0–100 | points |
| 7. | Domain Rating | Website authority metric based on the backlink profile | Ahrefs | 0–100 | points |
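
Aggregated indices such as the Woorank Score or the Nibbler Overall score condense many partial measurements into a single number. How these tools weight their components is not described here, so the sketch below only illustrates the general idea under stated assumptions: all sub-scores have already been rescaled to 0–100 and are combined as a weighted arithmetic mean. The function `aggregated_index`, the category names, the scores and the weights in the example are hypothetical, not results of this study.

```python
def aggregated_index(scores, weights=None):
    """Weighted arithmetic mean of sub-scores already on a common 0-100 scale.

    scores:  mapping of category name -> score (0-100)
    weights: mapping of category name -> non-negative weight; equal weights
             are used when omitted.
    """
    if not scores:
        raise ValueError("no scores to aggregate")
    if weights is None:
        weights = {name: 1.0 for name in scores}
    missing = set(scores) - set(weights)
    if missing:
        raise ValueError(f"no weight for: {sorted(missing)}")
    total = sum(weights[name] for name in scores)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[name] * weights[name] for name in scores) / total
```

With hypothetical sub-scores of 80 (SEO), 60 (performance) and 70 (accessibility), equal weights give 70.0, while doubling the SEO weight gives 72.5. The example shows how strongly the choice of weights, usually invisible to the user, can shift an aggregated result.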

| Testing Application | SEO Measurement Result * | Testing Application | Performance * |
|---|---|---|---|
| Neil Patel SEO Analyzer | 85 | PageSpeed Insights | 64 |
| ZadroWeb | 71.03 | GTMetrix PageSpeed Score | 79 |
| Website Grader | 74 | GTMetrix YSlow | 74 |
| Blink Audit Tool | 77 | Pingdom Performance grade | 82 |
| SEO Tester Online | 57.3 | GiftOfSpeed | 53 |
| Positioning | 3+ (3.5) | Geekflare | 100 |
| Semtec | 65 | Uptrends Website Speed Test (Google PageSpeed score) | 94 |
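
A few descriptive statistics make it easier to see how far the tools disagree about the same website. The sketch below copies the values from the table above and, as an assumption of this sketch, rescales the Positioning result of 3.5 on its 0–5 scale to 70 on a 0–100 scale so that it can be pooled with the other SEO scores. The `summarise` helper is illustrative, and averaging scores that measure different things is only a descriptive device, not a validated index.

```python
from statistics import mean, median

# SEO results from the table; Positioning (3.5 on a 0-5 scale) is rescaled
# to 0-100 here, which is an assumption of this sketch.
seo = {
    "Neil Patel SEO Analyzer": 85,
    "ZadroWeb": 71.03,
    "Website Grader": 74,
    "Blink Audit Tool": 77,
    "SEO Tester Online": 57.3,
    "Positioning": 100 * 3.5 / 5,
    "Semtec": 65,
}

# Performance results from the table.
performance = {
    "PageSpeed Insights": 64,
    "GTMetrix PageSpeed Score": 79,
    "GTMetrix YSlow": 74,
    "Pingdom Performance grade": 82,
    "GiftOfSpeed": 53,
    "Geekflare": 100,
    "Uptrends Website Speed Test": 94,
}


def summarise(results):
    """Mean, median, minimum, maximum and range of one column of results."""
    values = list(results.values())
    return {
        "mean": round(mean(values), 2),
        "median": median(values),
        "min": min(values),
        "max": max(values),
        "range": round(max(values) - min(values), 2),
    }
```

With these inputs the SEO results average 71.33 with a range of 27.7 points, while the performance results average 78 with a range of 47 points (from 53 in GiftOfSpeed to 100 in Geekflare).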

| Testing Application | Fully Loaded Time (s) * |
|---|---|
| GTMetrix | 2.9 |
| Pingdom Website Speed Test | 1.0 |
| GiftOfSpeed | 1.8 |
| Geekflare | 1.3 |
| Dareboost | 1.96 |
| WebPageTest | 4.617 |
| Uptrends Website Speed Test | 1.6 |
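
The Fully Loaded Time values above were obtained for the same website, yet the slowest reading is more than four times the fastest. The short script below reproduces that comparison from the table values. The differences may stem, for example, from test-server location, connection settings and how each tool defines a fully loaded page, although these settings are not listed in the table.

```python
from statistics import mean, median

# Fully Loaded Time (s) reported by each tool for the same website.
fully_loaded = {
    "GTMetrix": 2.9,
    "Pingdom Website Speed Test": 1.0,
    "GiftOfSpeed": 1.8,
    "Geekflare": 1.3,
    "Dareboost": 1.96,
    "WebPageTest": 4.617,
    "Uptrends Website Speed Test": 1.6,
}

times = list(fully_loaded.values())
fastest = min(fully_loaded, key=fully_loaded.get)
slowest = max(fully_loaded, key=fully_loaded.get)

print(f"mean {mean(times):.2f} s, median {median(times):.2f} s")
# mean 2.17 s, median 1.80 s
print(f"{fastest} {fully_loaded[fastest]} s vs {slowest} {fully_loaded[slowest]} s "
      f"(ratio {fully_loaded[slowest] / fully_loaded[fastest]:.1f}x)")
# Pingdom Website Speed Test 1.0 s vs WebPageTest 4.617 s (ratio 4.6x)
```

The median (1.8 s) is a more robust single summary than the mean here, because one tool (WebPageTest) reports a much longer time than the rest.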

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Król, K.; Zdonek, D. Aggregated Indices in Website Quality Assessment. Future Internet 2020, 12, 72. https://doi.org/10.3390/fi12040072
