Multi-formalism Models for Performance Engineering

Abstract

1. Introduction

2. Related Work

3. Queueing Networks Techniques

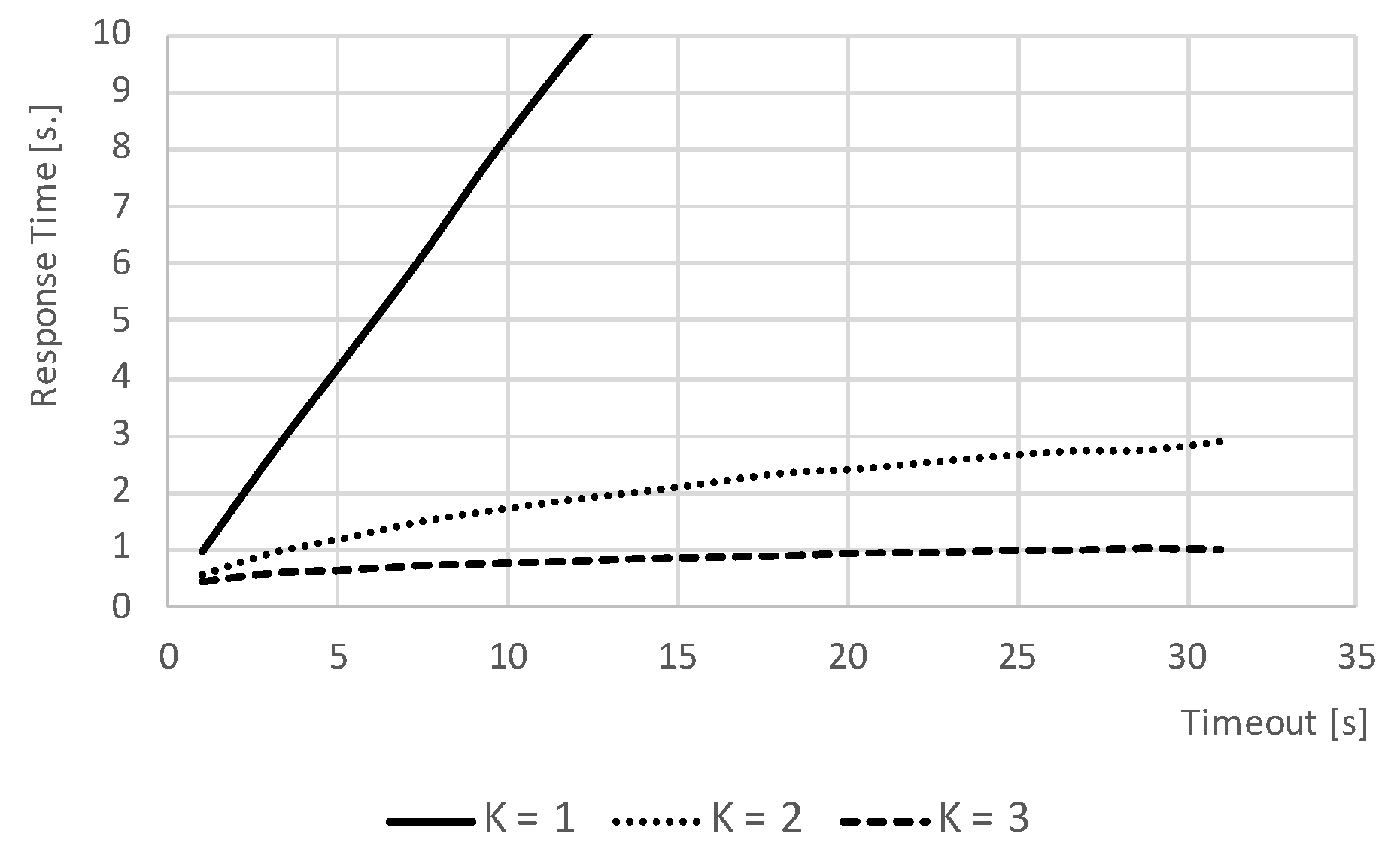

3.1. Timeout with Quorum Based Join

3.1.1. Description of the Problem

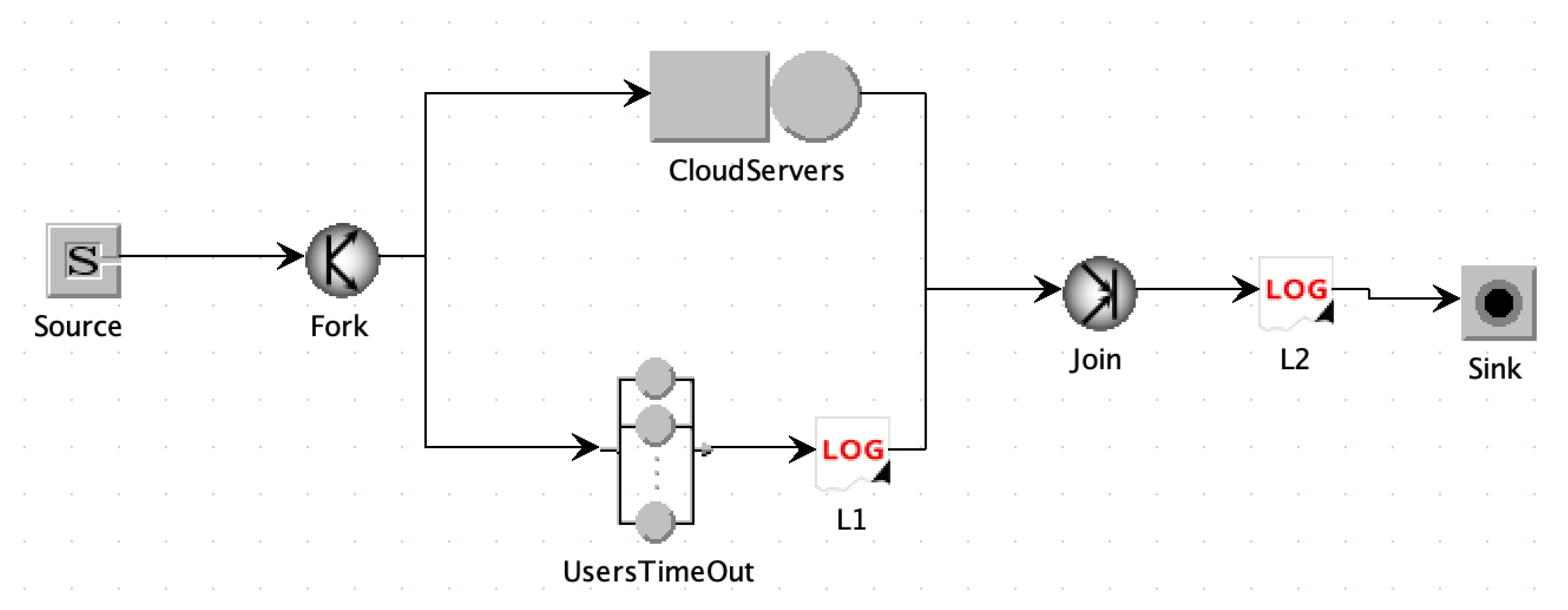

3.1.2. Fork/Join Paradigm

3.1.3. Model Description

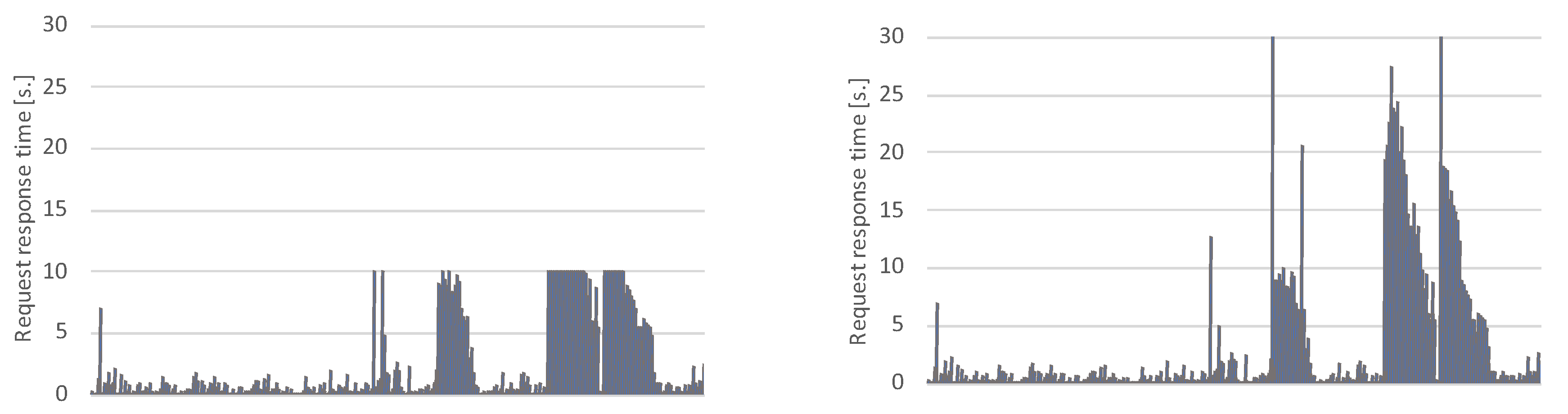

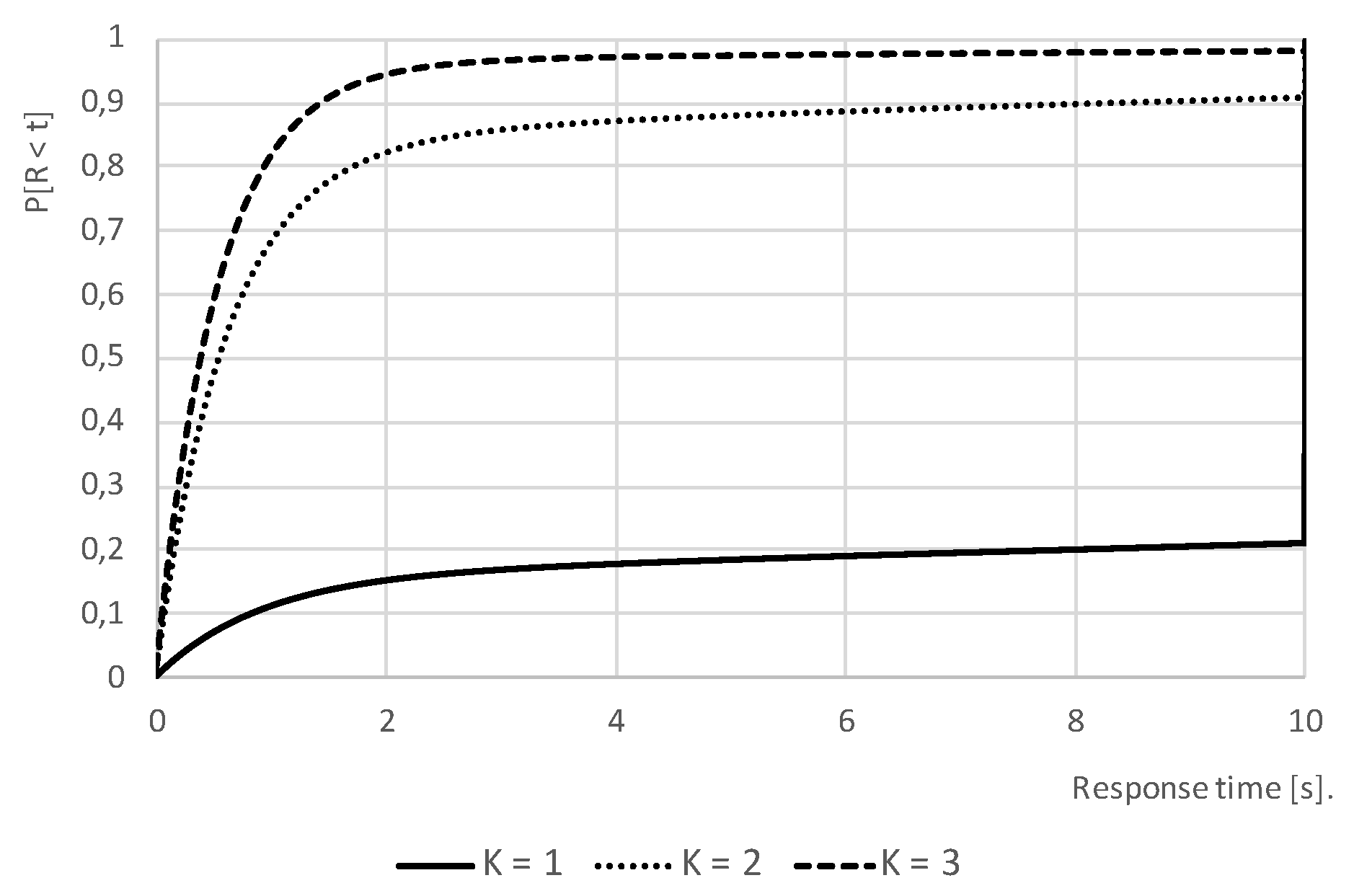

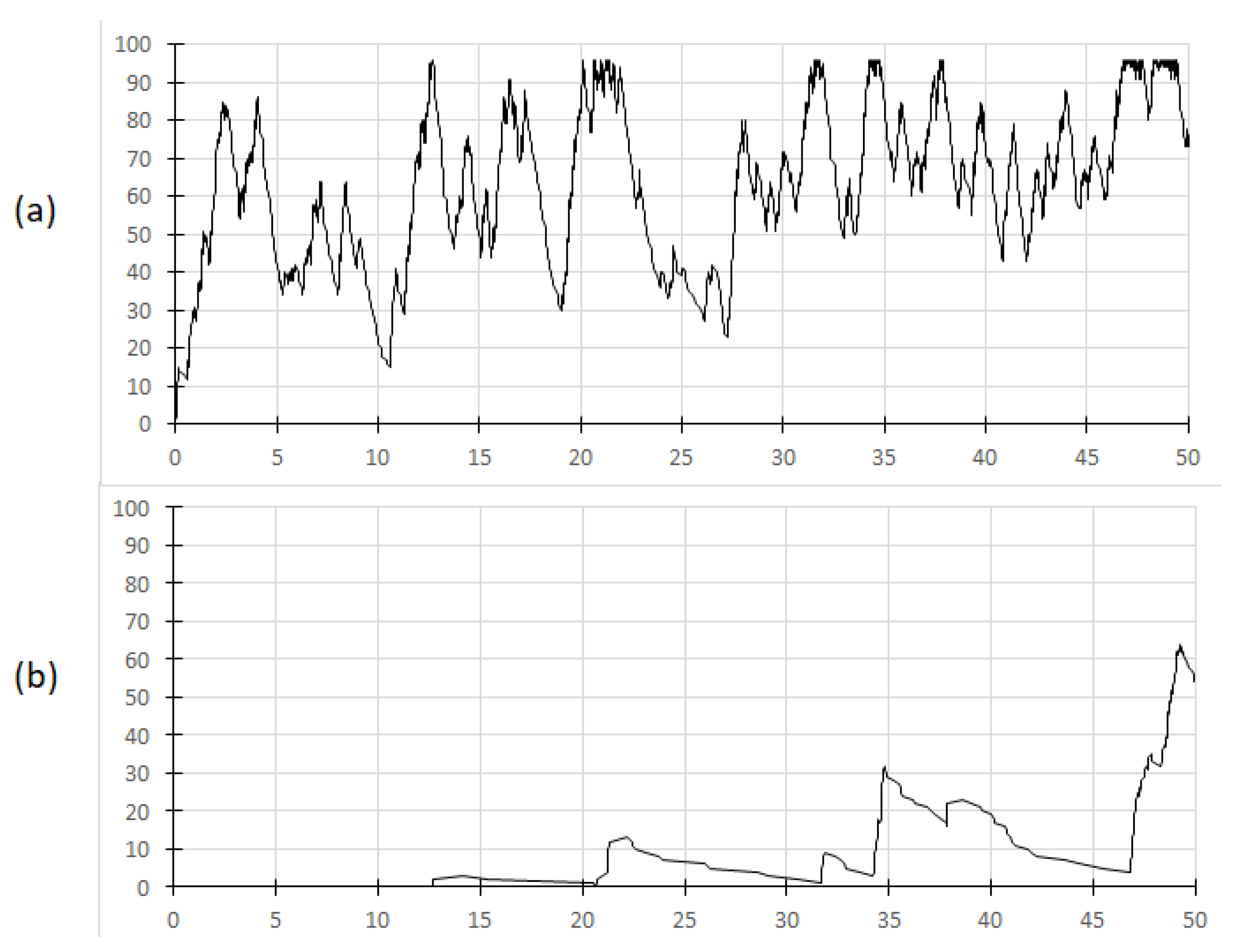

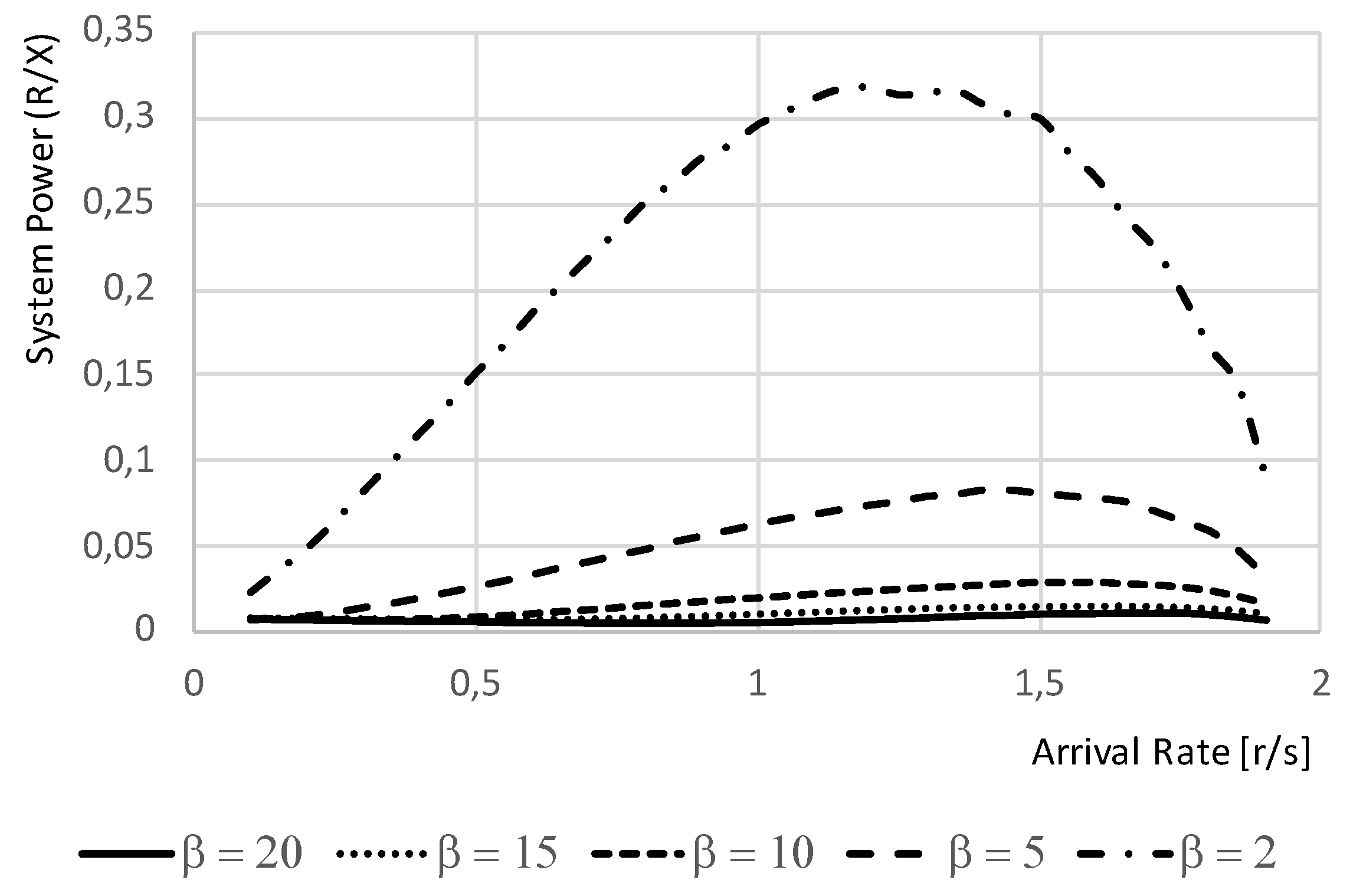

3.1.4. Model Results

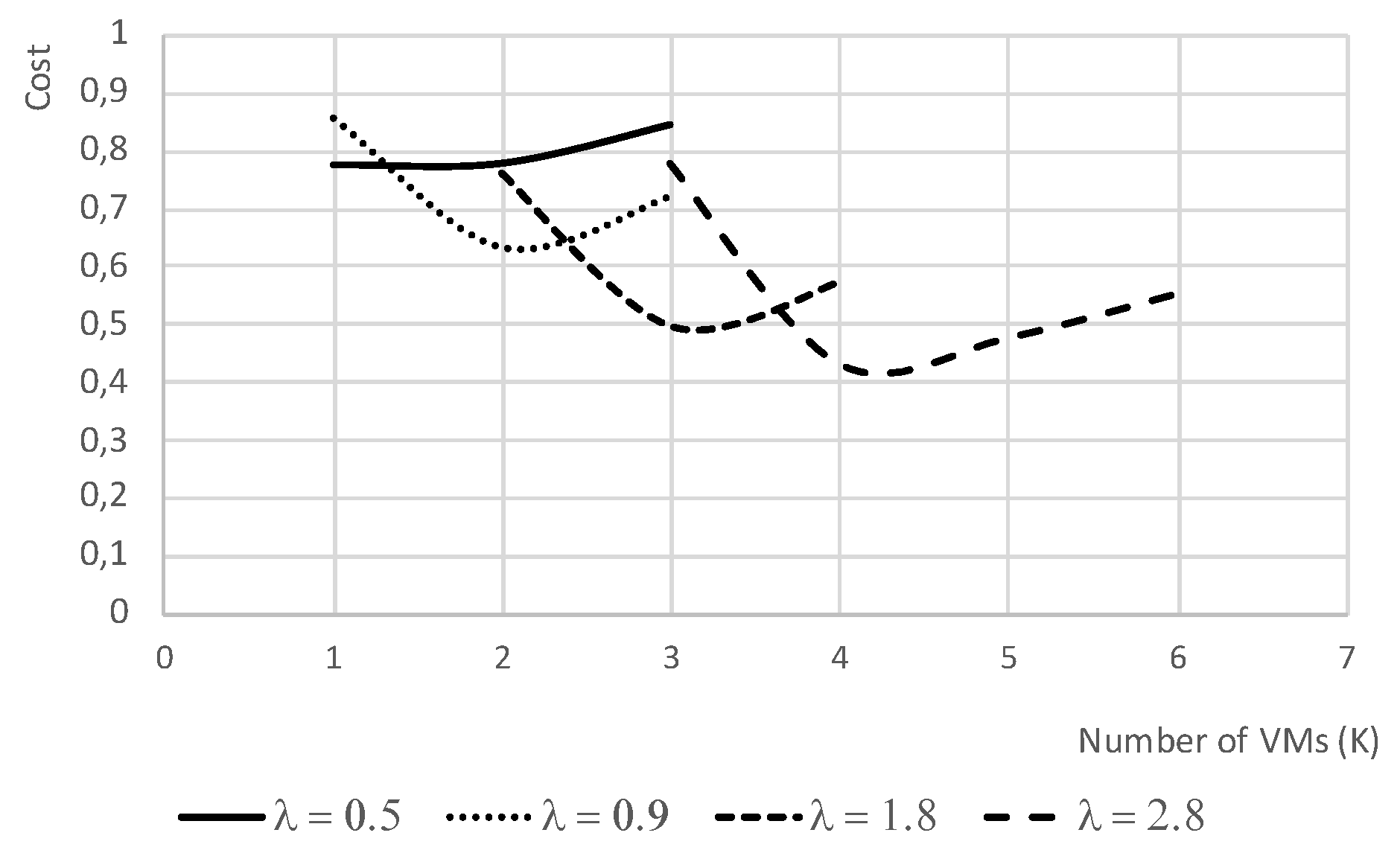

3.2. Approximate Computing with Finite Capacity Region

3.2.1. Description of the Problem

3.2.2. Finite Capacity Regions

3.2.3. Model Description

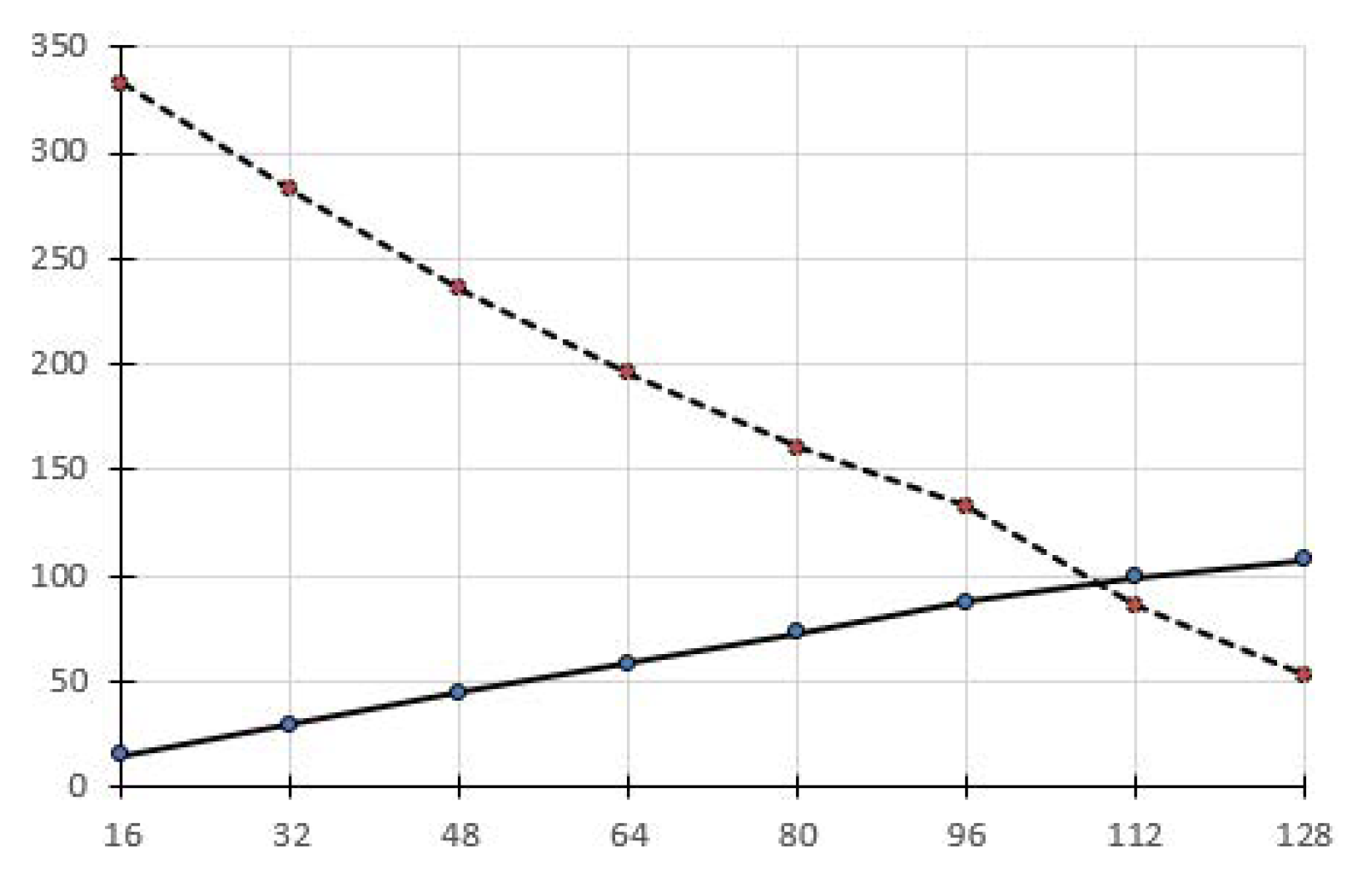

3.2.4. Model Results

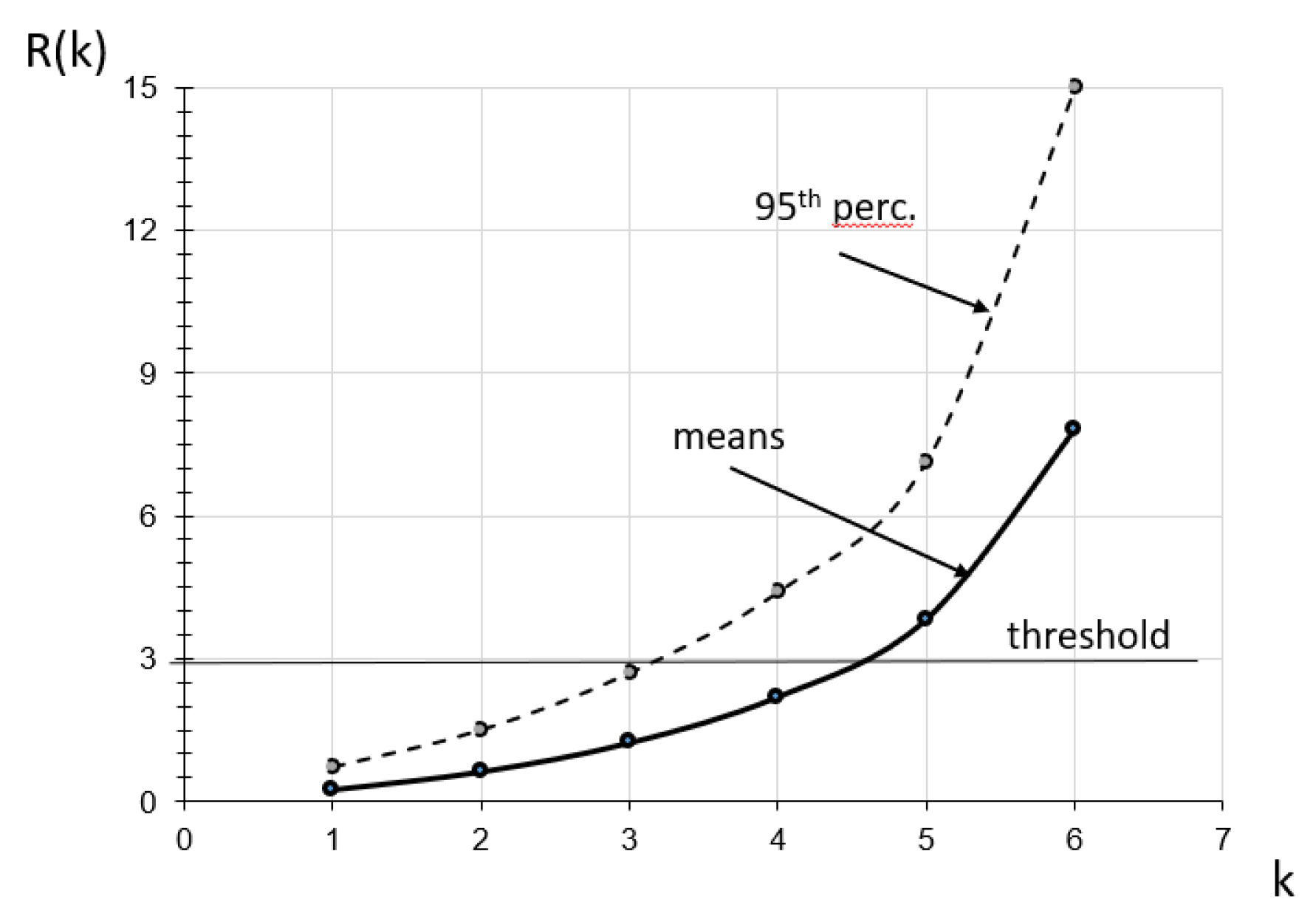

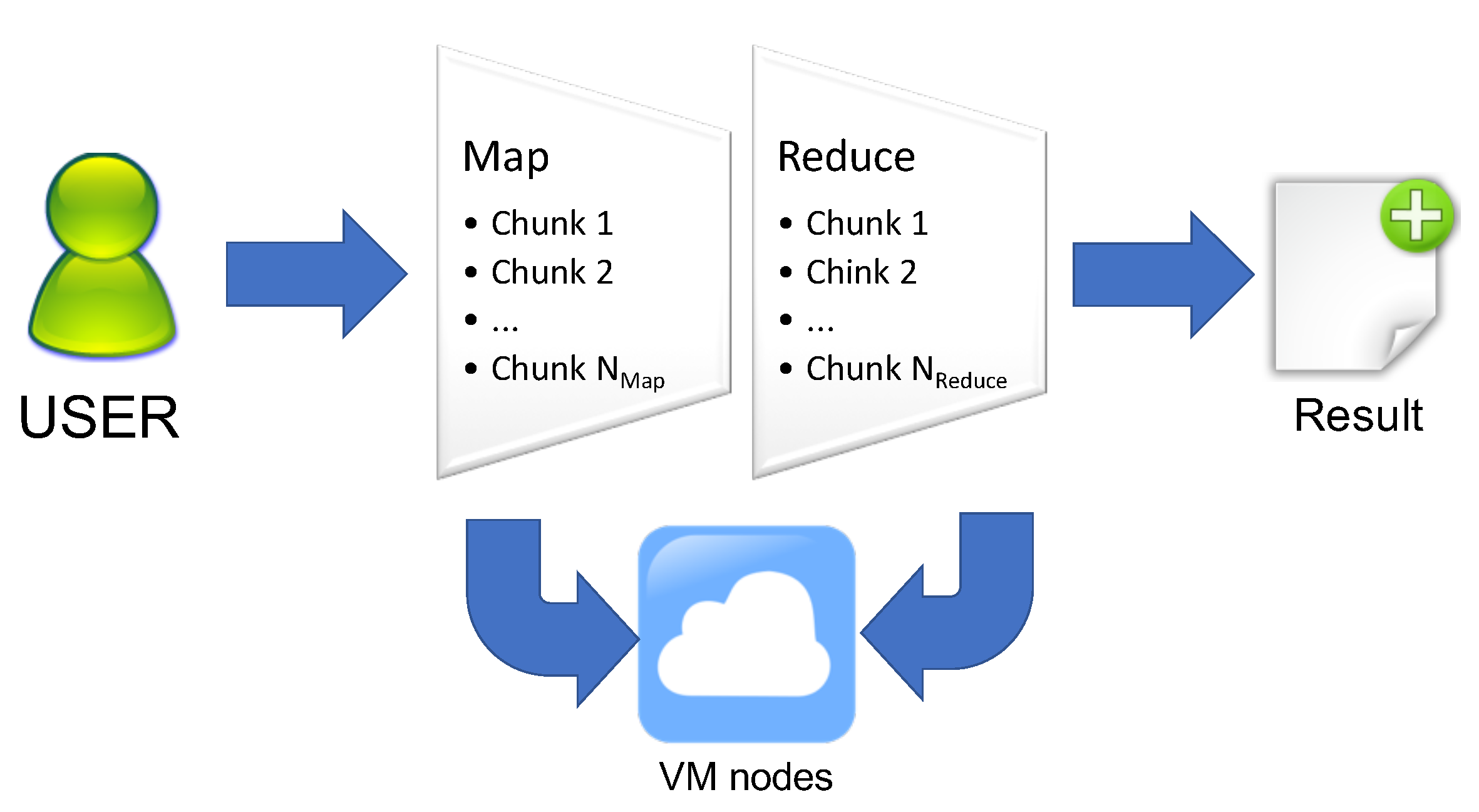

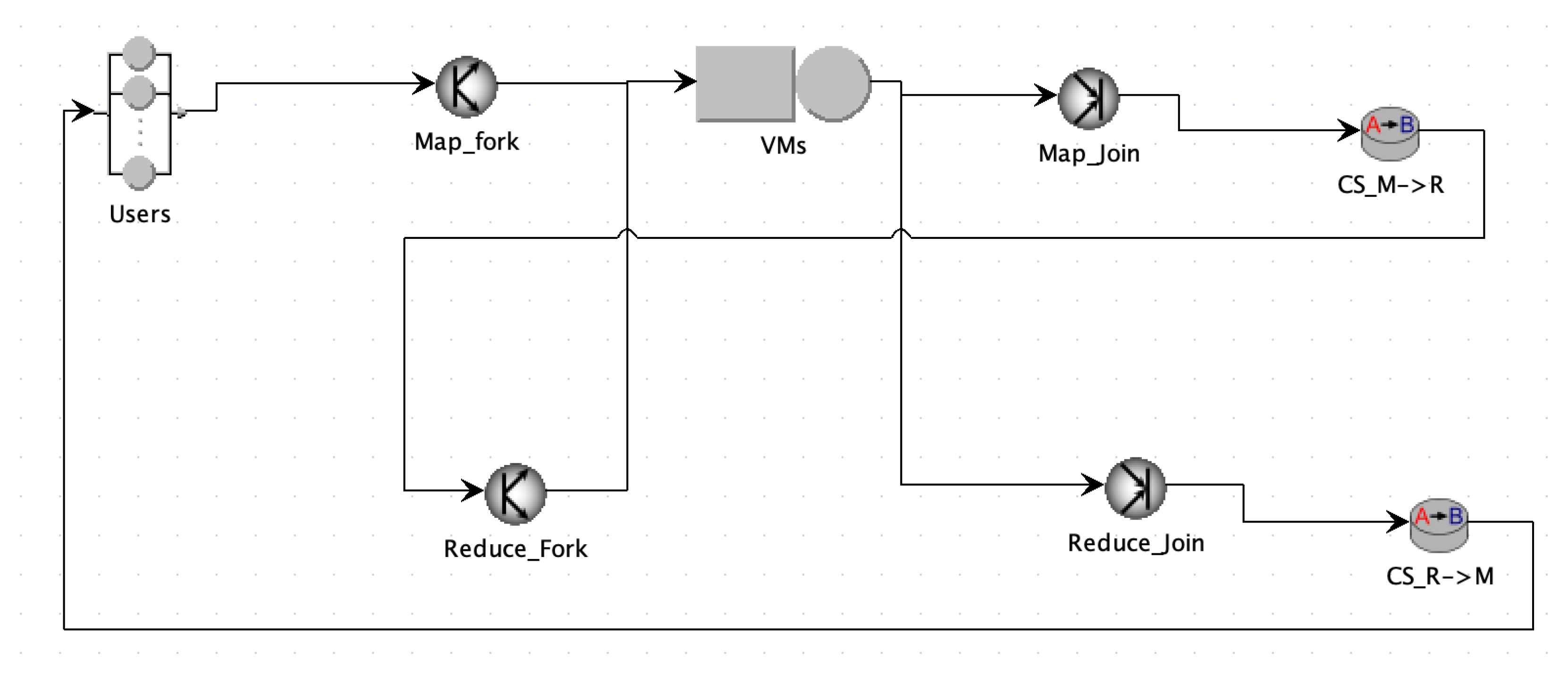

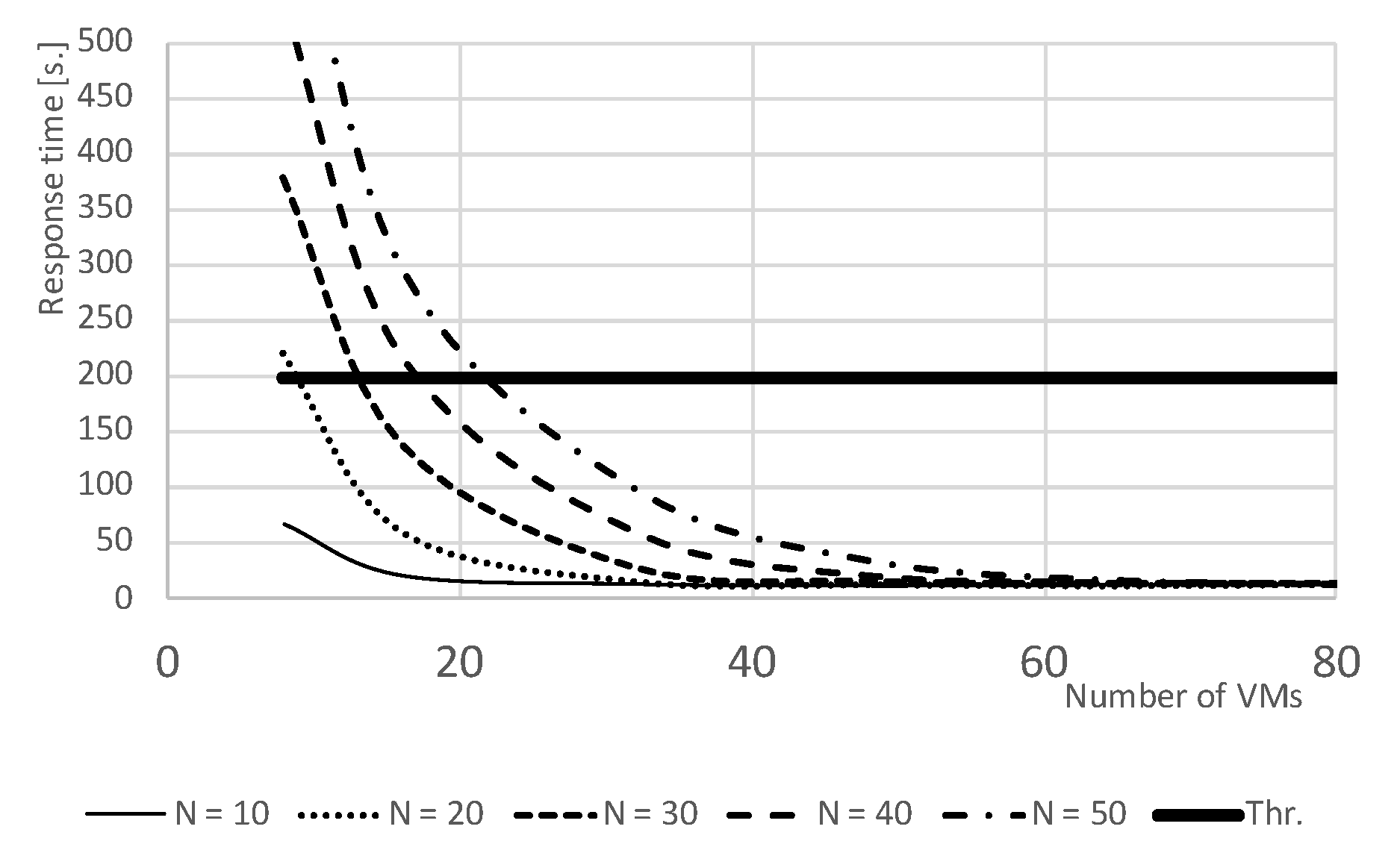

3.3. MapReduce with Class Switch

3.3.1. Description of the Problem

3.3.2. Class Switch

3.3.3. Model Description

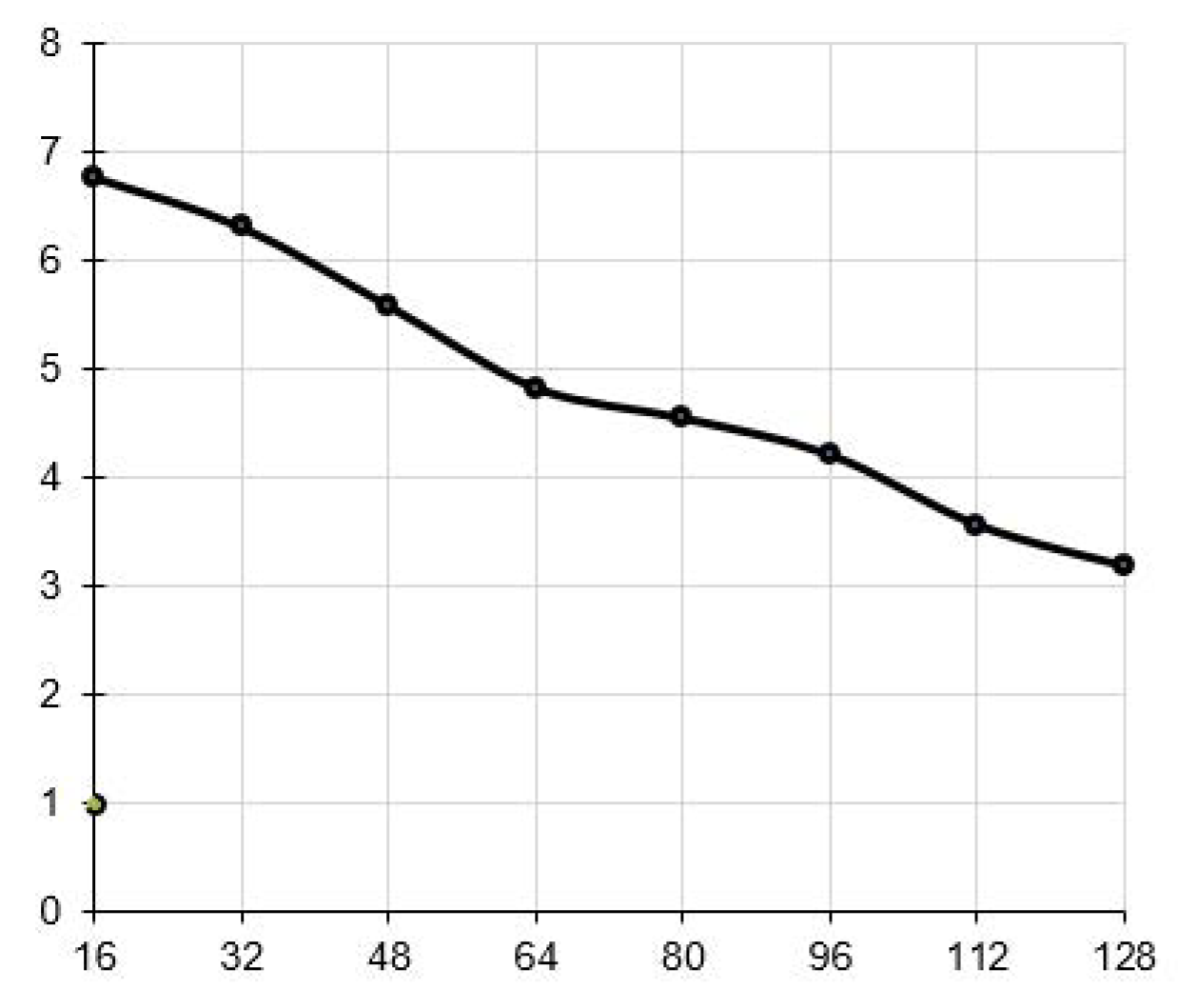

3.3.4. Model Results

4. Multi-Formalism QN/PN Techniques

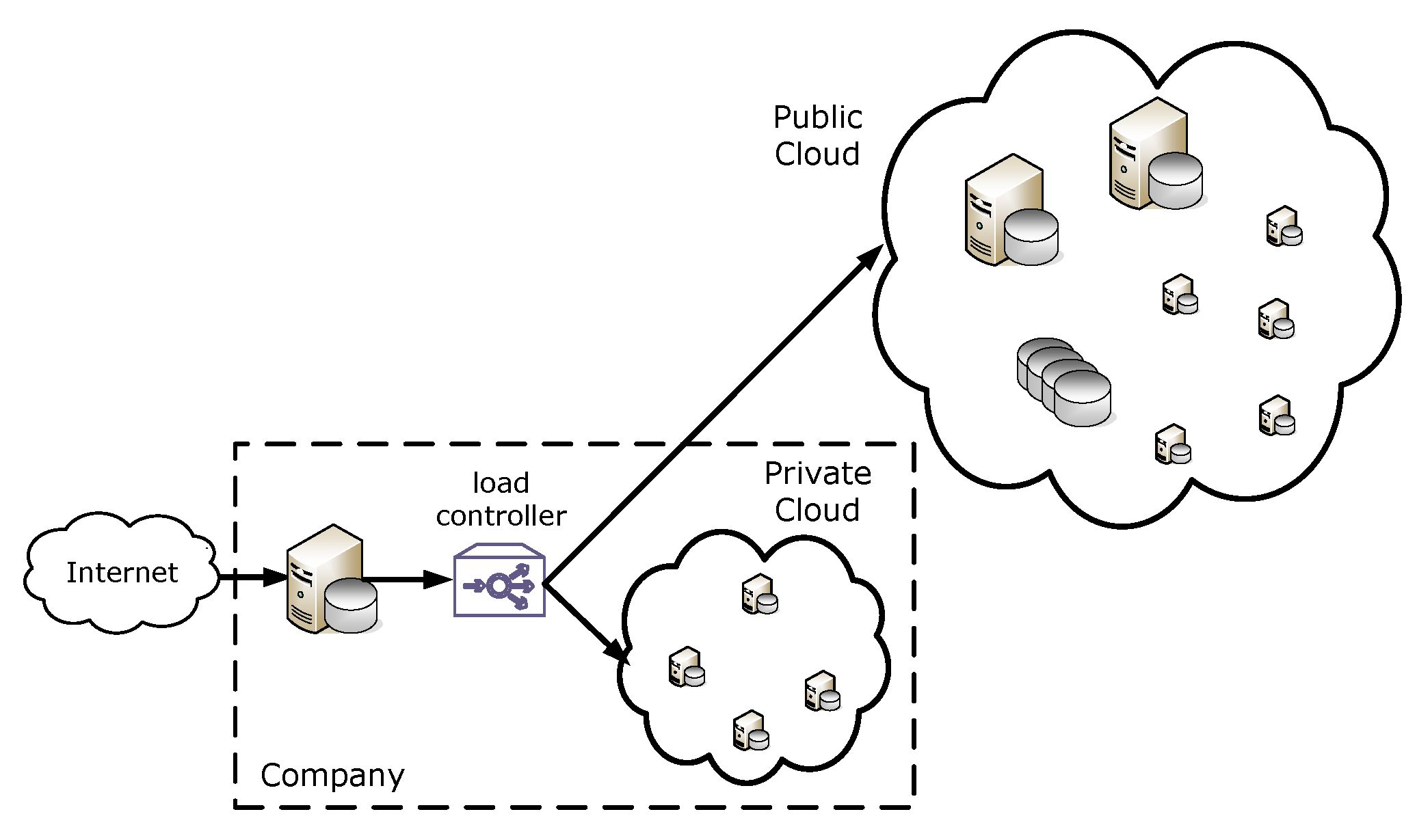

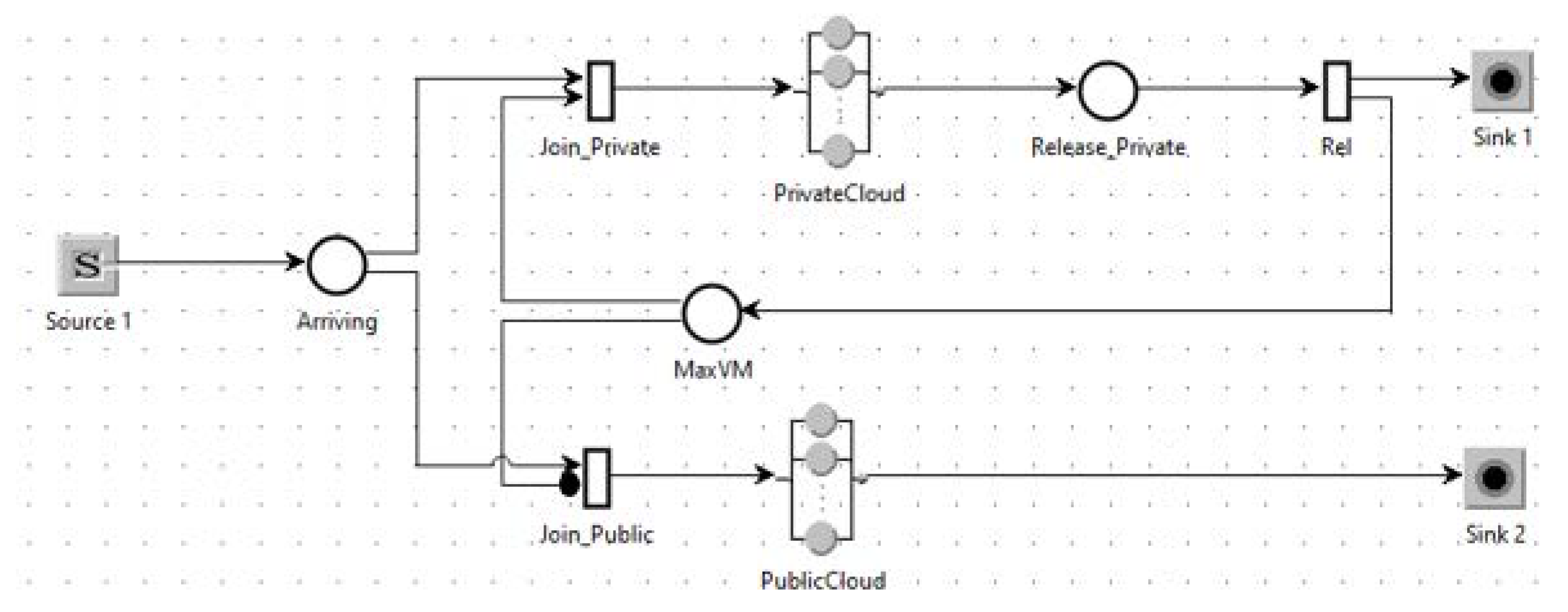

4.1. Hybrid Cloud

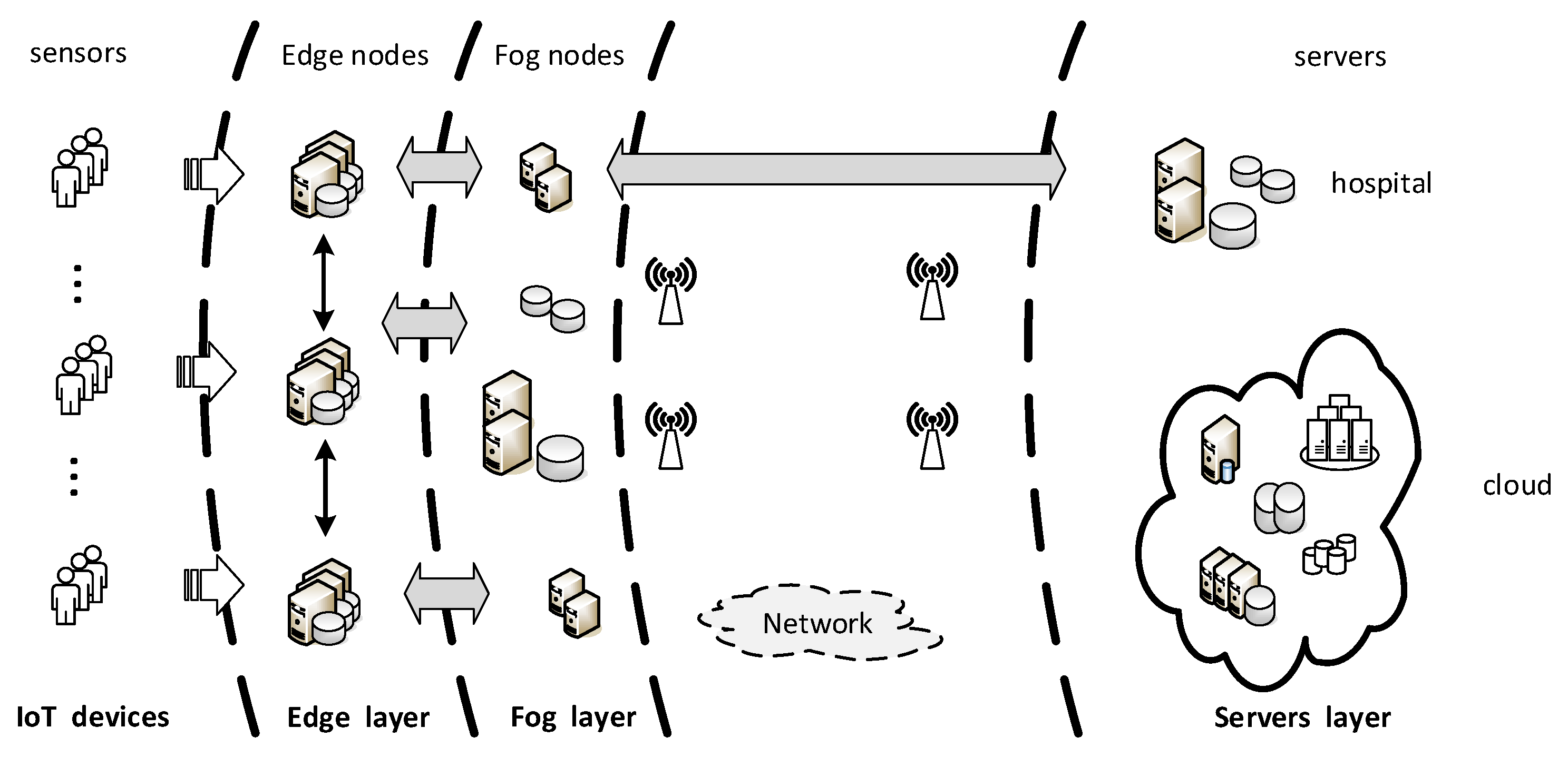

4.1.1. Description of the Problem

4.1.2. Model Description

4.1.3. Model Results

4.2. Batching in IoT-Based Healthcare

4.2.1. Description of the Problem

- identification of the amount of data that must be included in each transmission to the hospital servers in order to satisfy the performance requirement in terms of end-to-end data delivery time and to minimize the energy consumption of the operations; and

- identification of potential critical health conditions of patients that need urgent investigation, i.e., fast response time.

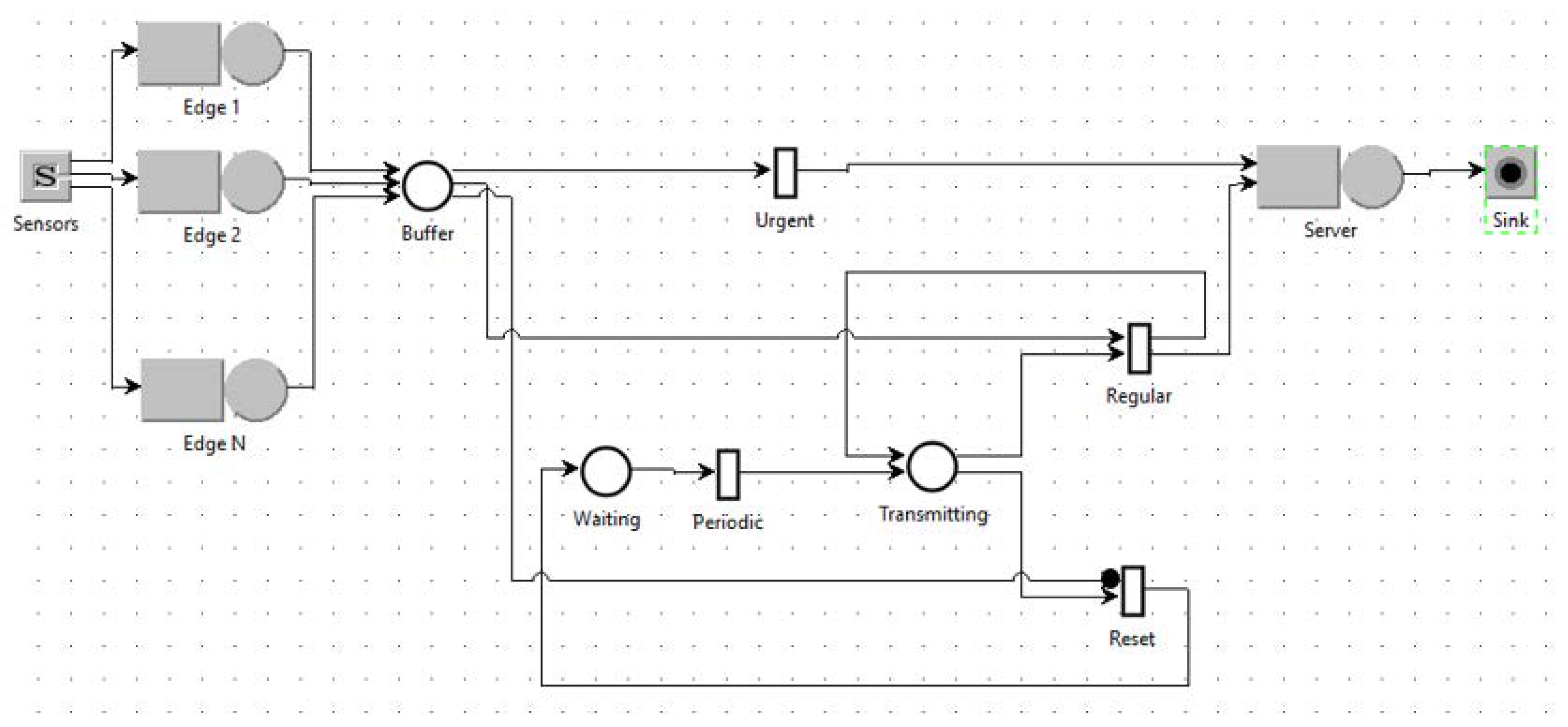

4.2.2. The Model

- The buffer is emptied (i.e., the requests that are in the buffer are transmitted) periodically with a period defined according to the number and type of signals detected by all sensors. Requests are assumed to belong to patients under Regular conditions and are sent at the end of the period.

- The buffer is emptied when the number of requests in the buffer reaches a threshold value, i.e., the maximum batch size. In this case, the high arrival rate of requests is assumed to indicate a critical condition for one or more patients. Therefore, the requests in the buffer are considered Urgent and must be sent immediately, without waiting for the end of the emptying period.
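The two emptying rules above can be illustrated with a small deterministic replay of arrival times. This is only a sketch under stated assumptions, not the QN/PN model of the paper: the function name `simulate_batching`, its parameters, the fixed period (the text says the period depends on the number and type of signals), and the choice not to restart the timer after an Urgent transmission are all hypothetical simplifications.

```python
from typing import List, Tuple

# (transmission time, "Regular" or "Urgent", number of requests sent)
Batch = Tuple[float, str, int]


def simulate_batching(arrivals: List[float], period: float, threshold: int) -> List[Batch]:
    """Replay arrival times against the periodic and threshold emptying rules.

    Assumptions (not taken from the paper): the period is constant, periodic
    flushes happen at multiples of `period`, and an Urgent flush does not
    restart the periodic timer.
    """
    if period <= 0 or threshold < 1:
        raise ValueError("period must be positive and threshold at least 1")

    batches: List[Batch] = []
    buffered = 0          # requests currently waiting in the buffer
    next_tick = period    # time of the next periodic flush

    for t in sorted(arrivals):
        # Fire every periodic flush that expires before (or at) this arrival.
        while next_tick <= t:
            if buffered > 0:
                batches.append((next_tick, "Regular", buffered))
                buffered = 0
            next_tick += period

        buffered += 1
        # Threshold rule: a full buffer is sent immediately as Urgent.
        if buffered >= threshold:
            batches.append((t, "Urgent", buffered))
            buffered = 0

    # Requests still waiting leave with the next periodic flush.
    if buffered > 0:
        batches.append((next_tick, "Regular", buffered))
    return batches


if __name__ == "__main__":
    trace = [1.0, 2.0, 12.0, 12.1, 12.2, 12.3, 25.0]
    for batch in simulate_batching(trace, period=10.0, threshold=4):
        print(batch)
```

In this sketch, a burst of closely spaced arrivals triggers an Urgent batch, while sparse arrivals wait for the end of the period, which mirrors the trade-off between delivery time and number of transmissions described in the problem statement.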

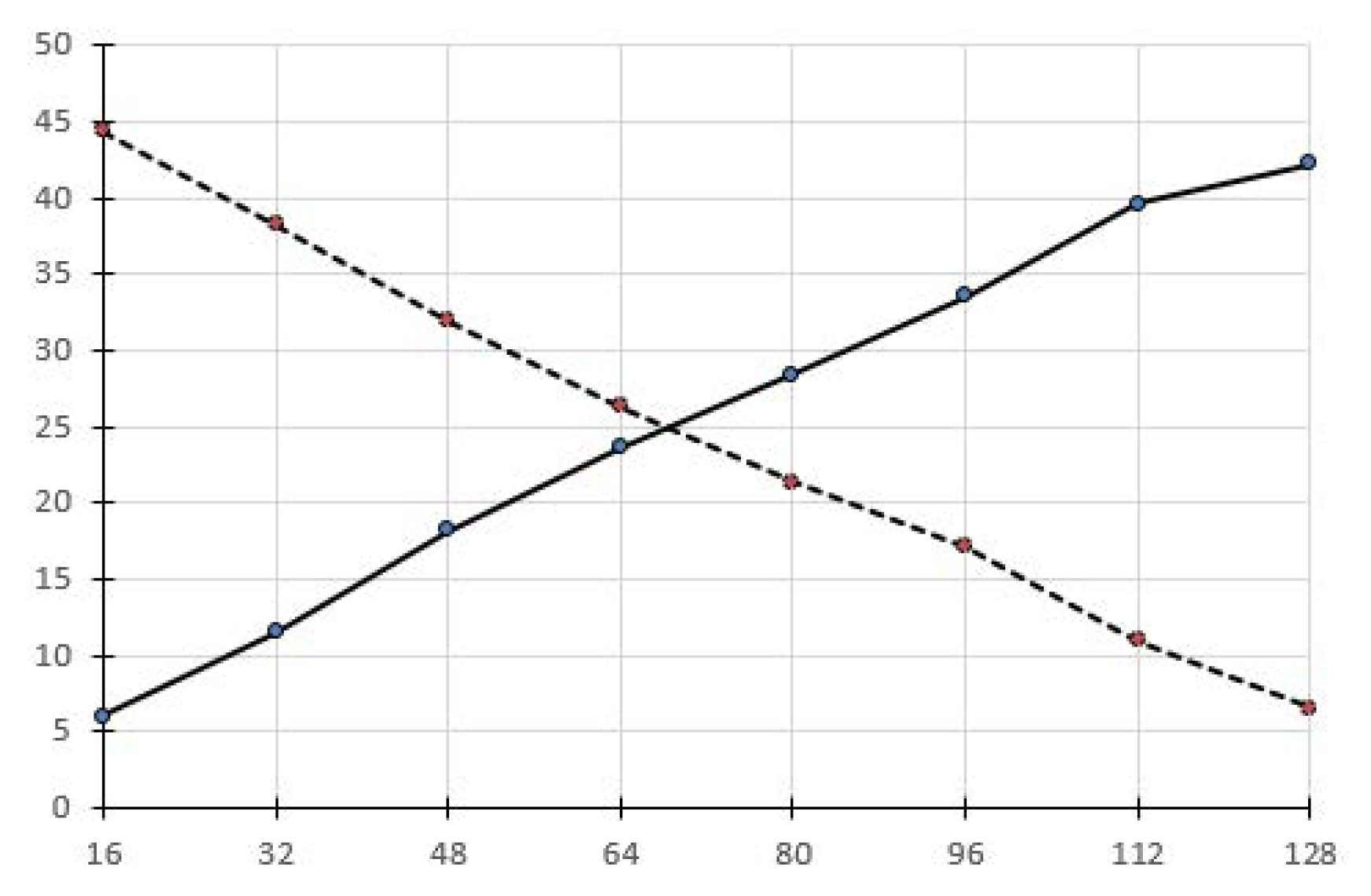

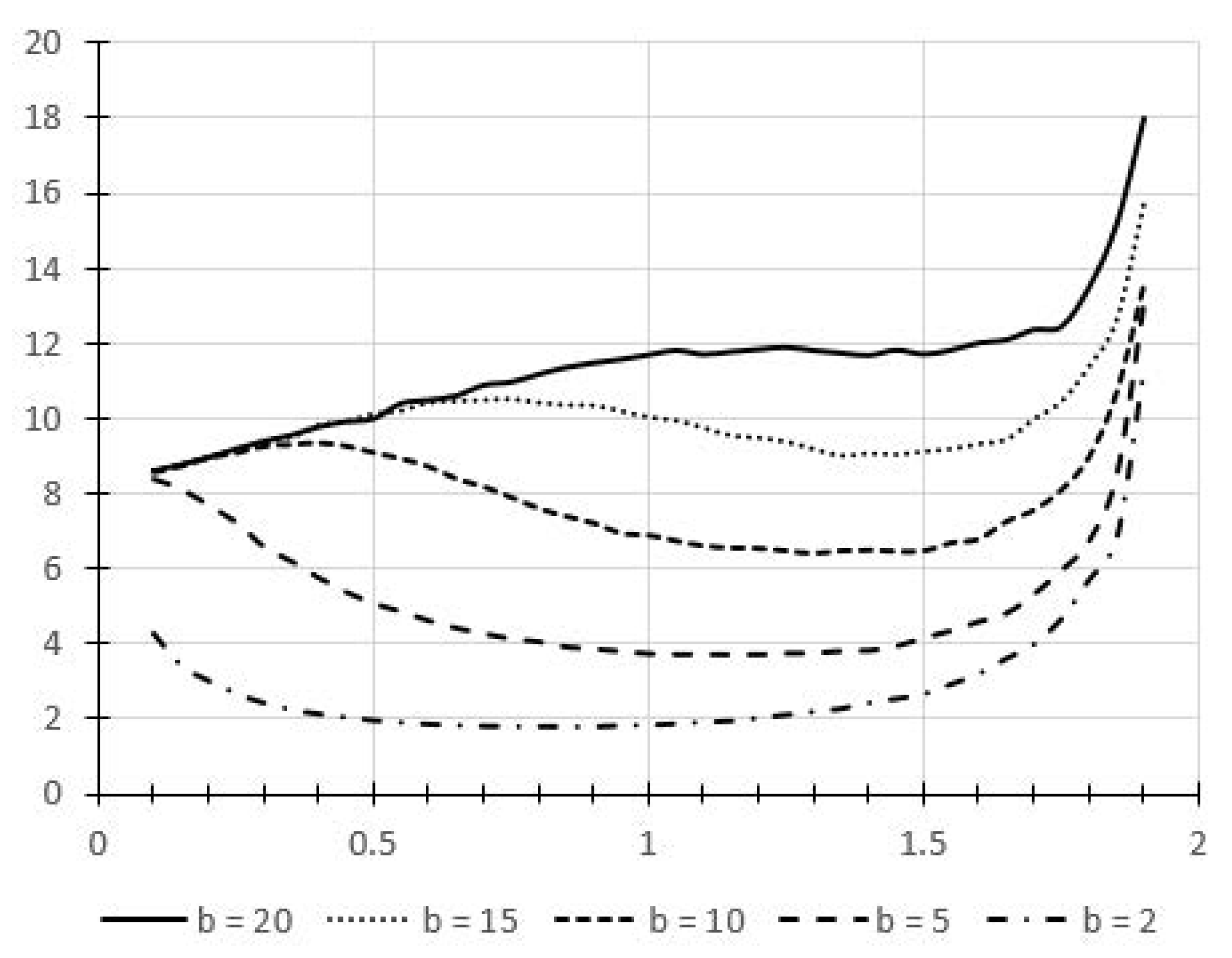

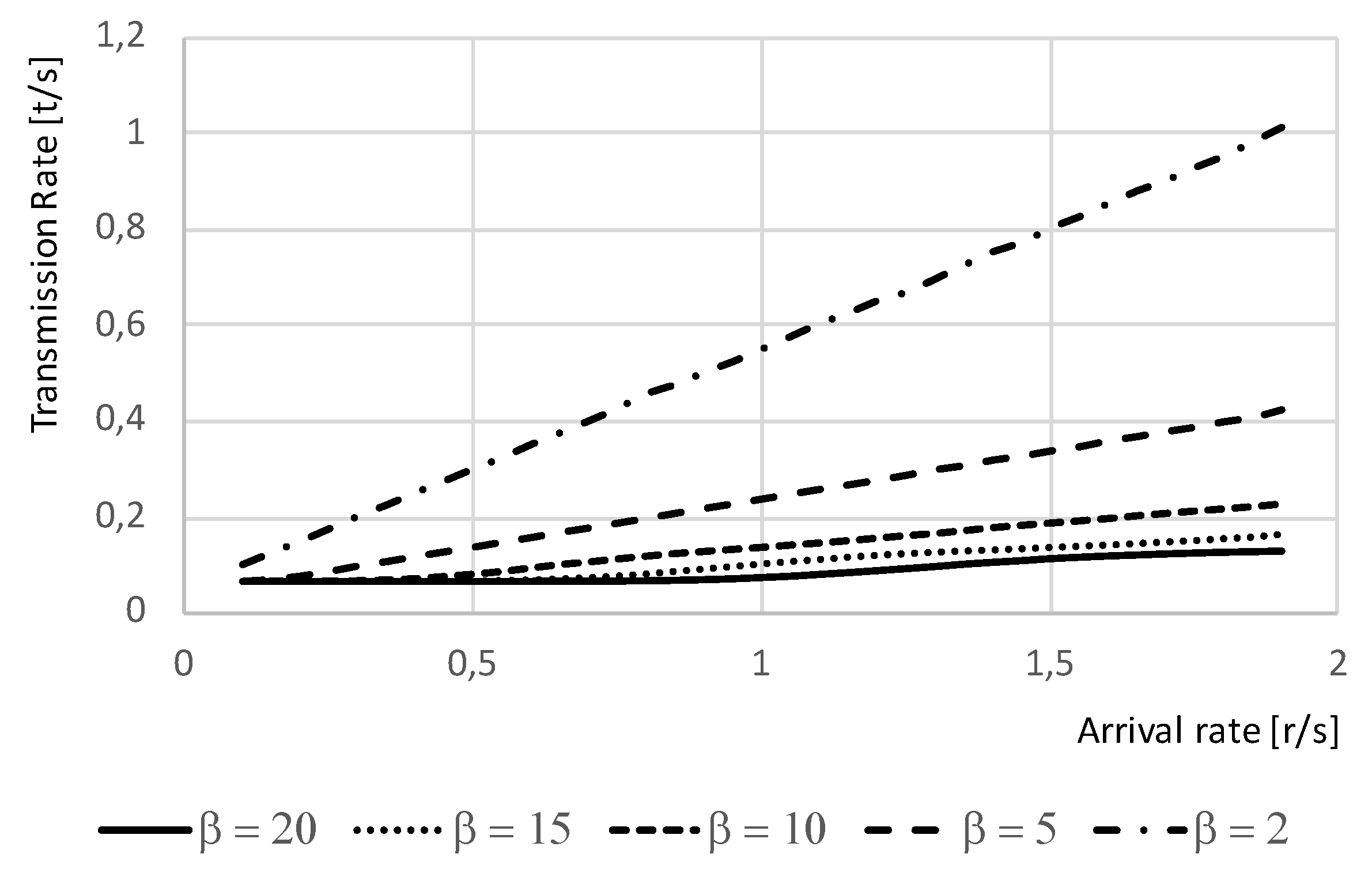

4.2.3. Model Results

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

| Scenario | Solution | Section |
|---|---|---|
| Timeout with Quorum Based Join | Fork/Join paradigm | 3.1 |
| Approximate Computing with Finite Capacity Region | Finite capacity regions | 3.2 |
| MapReduce with Class Switch | Class Switch | 3.3 |
| Dynamic provisioning in Hybrid Clouds | Multi-formalism | 4.1 |
| Batching of requests in e-Health applications | Multi-formalism | 4.2 |

| Component | Mean | Coeff. of Var. | Distribution |
|---|---|---|---|
| Algorithm 1 | 1 | cv = 5 | hyperexp |
| Algorithm 2 | 3 | cv = 3 | hyperexp |
| Algorithm 3 | 1 | cv = 1 | exp |
| Algorithm 4 | 2 | cv = 1 | exp |
| Algorithm 5 | 4 | cv = 0.7 | Erlang |
| Algorithm 6 | 5 | cv = 0.5 | Erlang |
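The table above gives each service time as a mean, a coefficient of variation, and a distribution family. As an illustration of how such a pair can be turned into concrete distribution parameters, the sketch below uses standard two-moment fits: an Erlang with roughly 1/cv² stages when cv < 1, an exponential when cv = 1, and a two-phase hyperexponential with balanced means when cv > 1. The function names `fit_service_time` and `moments` are hypothetical, and the parameterization actually used by the authors' tool may differ.

```python
import math
from typing import Dict, Tuple


def fit_service_time(mean: float, cv: float) -> Dict[str, object]:
    """Two-moment fit of a service-time distribution (illustrative only)."""
    if mean <= 0 or cv <= 0:
        raise ValueError("mean and cv must be positive")

    if math.isclose(cv, 1.0):
        return {"family": "exp", "rate": 1.0 / mean}

    if cv < 1.0:
        # Erlang-k has cv = 1/sqrt(k); k must be an integer, so the
        # requested cv is only matched approximately.
        k = max(1, round(1.0 / cv ** 2))
        return {"family": "erlang", "stages": k, "stage_rate": k / mean}

    # Two-phase hyperexponential with balanced means (p1/rate1 == p2/rate2).
    c2 = cv ** 2
    p1 = 0.5 * (1.0 + math.sqrt((c2 - 1.0) / (c2 + 1.0)))
    p2 = 1.0 - p1
    return {"family": "hyperexp", "p1": p1,
            "rate1": 2.0 * p1 / mean, "rate2": 2.0 * p2 / mean}


def moments(params: Dict[str, object]) -> Tuple[float, float]:
    """Return the (mean, cv) actually achieved by the fitted parameters."""
    family = params["family"]
    if family == "exp":
        return 1.0 / params["rate"], 1.0
    if family == "erlang":
        k = params["stages"]
        return k / params["stage_rate"], 1.0 / math.sqrt(k)
    p1, r1, r2 = params["p1"], params["rate1"], params["rate2"]
    p2 = 1.0 - p1
    m1 = p1 / r1 + p2 / r2                      # first moment
    m2 = 2.0 * (p1 / r1 ** 2 + p2 / r2 ** 2)    # second moment
    return m1, math.sqrt(m2 - m1 ** 2) / m1


if __name__ == "__main__":
    # (mean, cv) pairs copied from the table above.
    table = {"Algorithm 1": (1, 5), "Algorithm 2": (3, 3), "Algorithm 3": (1, 1),
             "Algorithm 4": (2, 1), "Algorithm 5": (4, 0.7), "Algorithm 6": (5, 0.5)}
    for name, (mean, cv) in table.items():
        fitted = fit_service_time(mean, cv)
        print(name, fitted, moments(fitted))
```

Because the number of Erlang stages is an integer, a target such as cv = 0.7 can only be approximated (two stages give a cv of about 0.707), whereas cv = 0.5 is matched exactly with four stages.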

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Barbierato, E.; Gribaudo, M.; Serazzi, G. Multi-formalism Models for Performance Engineering. Future Internet 2020, 12, 50. https://doi.org/10.3390/fi12030050
