Proposal for a System Model for Offline Seismic Event Detection in Colombia

Abstract

1. Introduction

2. Problem Statement

3. Earthquake Detection Methodologies

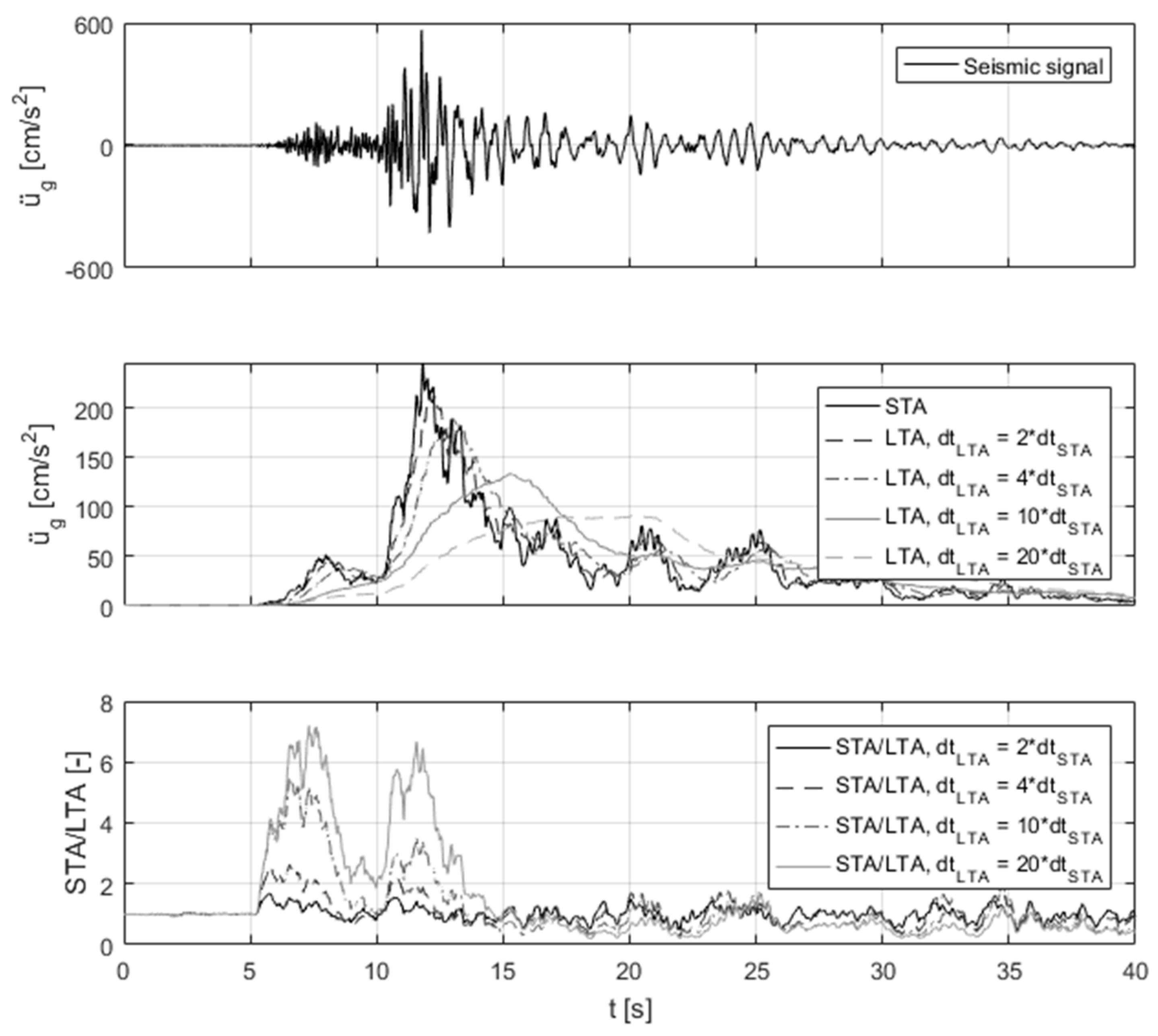

3.1. Traditional Approaches

3.2. Current Approaches

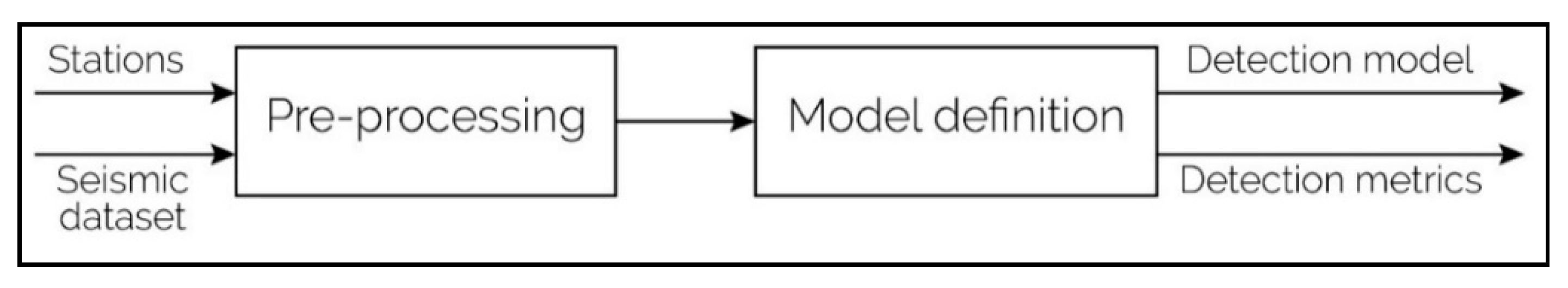

4. Seismic Detection Model Proposal

4.1. Seismic Data Seeking and Gathering

- Trace files (Waveforms), which contain the seismic samples recorded by all the seismological stations available in the region of interest.

- Parameter files (Sfiles), which provide detailed information about each seismic event, such as the latitude and longitude of the epicenter and the P-wave and S-wave arrival times, among others.
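The two file types complement each other: the waveform holds the samples, while the parameter file holds the picks that give them meaning. The hypothetical `EventParameters` class below is a minimal sketch that models some of the parameter-file fields listed above and derives the S-P interval from the P-wave and S-wave arrival times. It also estimates the source-to-station distance with the textbook relation d ≈ (tS - tP) · Vp · Vs / (Vp - Vs), which assumes a uniform medium. The velocities Vp = 6.0 km/s and Vs = 3.5 km/s are illustrative crustal values and are not taken from the Sfile format or from this work.

```python
from dataclasses import dataclass
from datetime import datetime

# Illustrative average crustal velocities in km/s (assumptions, not Sfile data).
VP_KM_S = 6.0
VS_KM_S = 3.5


@dataclass
class EventParameters:
    """Hypothetical container for some of the fields provided by a parameter file (Sfile)."""
    latitude: float       # epicenter latitude, degrees
    longitude: float      # epicenter longitude, degrees
    p_arrival: datetime   # P-wave arrival time at the station
    s_arrival: datetime   # S-wave arrival time at the station

    def s_minus_p(self) -> float:
        """S-P interval in seconds."""
        return (self.s_arrival - self.p_arrival).total_seconds()

    def estimated_distance_km(self, vp: float = VP_KM_S, vs: float = VS_KM_S) -> float:
        """Source-to-station distance, d = (tS - tP) * vp * vs / (vp - vs),
        assuming straight rays in a uniform medium."""
        dt = self.s_minus_p()
        if dt <= 0:
            raise ValueError("the S-wave arrival must follow the P-wave arrival")
        return dt * vp * vs / (vp - vs)


if __name__ == "__main__":
    # Illustrative values only, not taken from any catalog.
    event = EventParameters(
        latitude=6.8,
        longitude=-73.1,
        p_arrival=datetime(2020, 1, 1, 12, 0, 0),
        s_arrival=datetime(2020, 1, 1, 12, 0, 10),
    )
    print(event.s_minus_p())              # 10.0
    print(event.estimated_distance_km())  # 84.0
```

With a 10 s S-P interval and the assumed velocities, the estimate is 84 km; a real implementation would replace the constant velocities with a regional velocity model.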

4.2. Reading and Interpretation of the Seismic Records

4.3. Analysis of Seismological Stations

- The hypocentral and epicentral distances, i.e., the distances from the hypocenter and the epicenter to the geographic position of the station, determine how much the seismic wave is attenuated by the time it reaches the station.

- The geomorphology that the seismic waves traverse on their way to the station determines their propagation pattern and attenuation.

- Natural and artificial noise sources degrade the seismic records: they lower the quality of the seismic content and add signals from events that are not seismic in nature.

- The technical parameters of each station, such as its measurement channels, signal-to-noise ratio, analog-to-digital conversion, sampling rate, sensitivity, and dynamic range, determine how the seismic event is captured as the analog ground motion is converted into a digital record.
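To make the first factor concrete, the sketch below computes the epicentral distance with the haversine formula, combines it with the focal depth to obtain the hypocentral distance, and applies a simple attenuation model, A(r)/A(r0) = (r0/r) · exp(-π f (r - r0) / (Q v)), i.e., 1/r geometric spreading of body waves plus anelastic decay. This model and its parameters (frequency f, quality factor Q, wave velocity v, reference distance r0) are illustrative assumptions, and the function names are hypothetical; actual attenuation depends on the regional geology described in the second factor and would require calibrated values.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def epicentral_distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between epicenter and station (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp to guard against rounding pushing the argument slightly above 1.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def hypocentral_distance_km(epicentral_km: float, depth_km: float) -> float:
    """Straight-line hypocenter-station distance (flat-Earth approximation)."""
    return math.hypot(epicentral_km, depth_km)


def relative_amplitude(r_km: float, freq_hz: float = 5.0, q: float = 300.0,
                       v_km_s: float = 3.5, r0_km: float = 1.0) -> float:
    """A(r)/A(r0): 1/r geometric spreading times anelastic decay.
    freq_hz, q, v_km_s and r0_km are illustrative assumptions."""
    spreading = r0_km / r_km
    anelastic = math.exp(-math.pi * freq_hz * (r_km - r0_km) / (q * v_km_s))
    return spreading * anelastic


if __name__ == "__main__":
    # Hypothetical epicenter and station coordinates and focal depth.
    epi = epicentral_distance_km(6.8, -73.1, 7.1, -73.1)
    hypo = hypocentral_distance_km(epi, 150.0)
    print(f"epicentral {epi:.1f} km, hypocentral {hypo:.1f} km, "
          f"relative amplitude {relative_amplitude(hypo):.2e}")
```

The example shows why depth matters: for a deep event, the hypocentral distance, and therefore the attenuation, can be much larger than the epicentral distance alone suggests.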

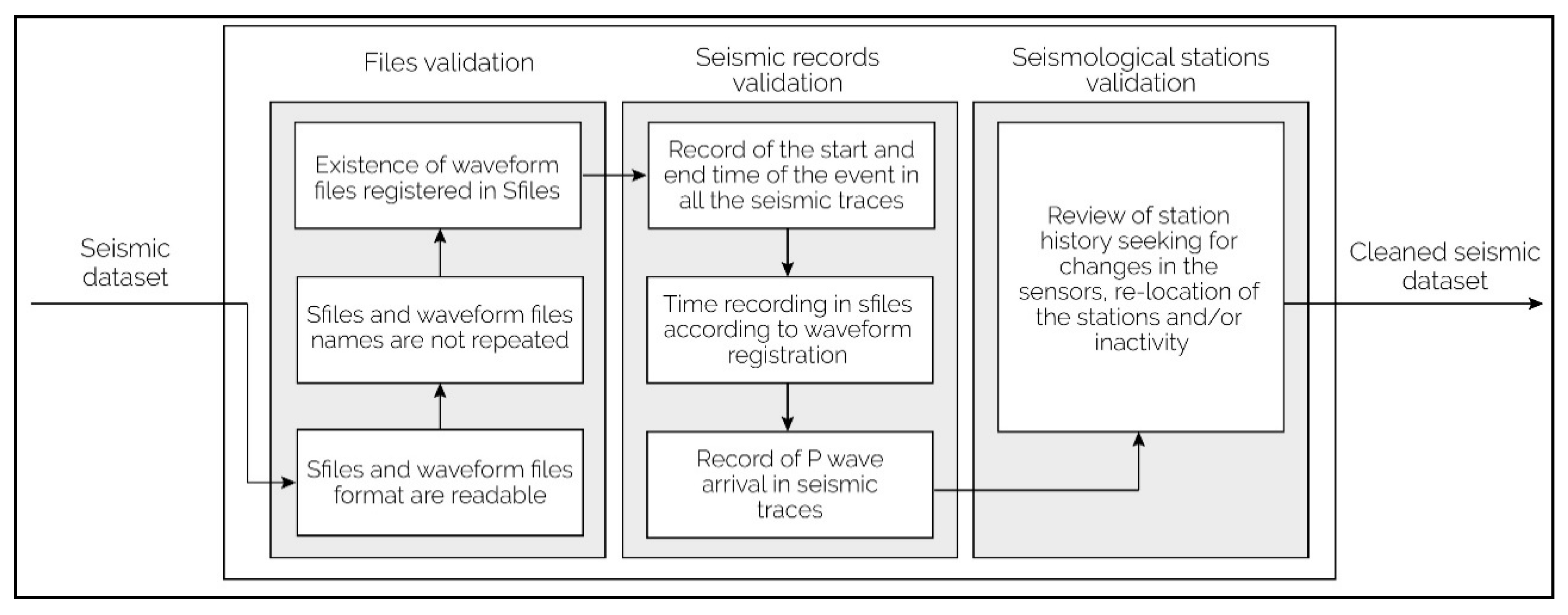

4.4. Sample Selection

- Inconsistencies in the file formats: a seismic file can be structured in different formats, such as SEED and miniSEED. Because the data come from multiple sources, they can be modified or lost during the processing and storage stages, and these modifications sometimes alter the file formats and make them inconsistent. Files whose format is inconsistent and cannot be read correctly must be discarded.

- Absence of trace files (e.g., in SEED or SAC format) corresponding to existing parameter files: as part of the data storage process, the information extracted from the seismic events (Sfiles) and the seismic samples (Waveforms) are recorded in separate files, as described in Section 4.1. Some of these files are stored in the dataset without being associated with their counterpart, so there are cases in which the event information was recorded but the samples were lost, and vice versa. Files whose descriptive data do not correspond to the seismic traces must be discarded.

- Lack of start and end times and/or absence of P-wave and S-wave arrival times in the recorded events: when a seismic event is recorded, several variables are measured, including the start and end times of the event and the P-wave and S-wave arrival times. These values are very important for training classification algorithms, because knowing when the earthquake began and ended makes it possible to extract specific samples from the seismograms. Unfortunately, some files may be stored correctly yet lack one or more of these four key parameters. In this case, it should be analyzed whether the start or end time of the event can be determined by processing the seismic traces. If this is not possible, the files must be discarded.
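The three discard rules can be combined into a single filtering pass. The sketch below is a minimal illustration under several assumptions: each candidate is a hypothetical `Candidate` record pairing an Sfile with its waveform, the format check has already been reduced to a boolean `format_ok` (in practice it could wrap a reader such as ObsPy's `obspy.read` and catch its exceptions), and a missing P-wave or S-wave arrival leads directly to discarding. For a missing start or end time, it tries to recover the event window from the trace with a classic STA/LTA trigger, i.e., the ratio of a short-term to a long-term average of the signal energy. This is one possible way of processing the traces, not necessarily the procedure used in this work, and the names `sta_lta_window` and `select_samples` are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import List, Optional, Sequence, Tuple


@dataclass
class Candidate:
    """Hypothetical pairing of a parameter file (Sfile) with its waveform.
    Times are in seconds relative to the first waveform sample."""
    name: str
    format_ok: bool                     # outcome of a format/readability check
    samples: Optional[Sequence[float]]  # None when the trace file is missing
    has_sfile: bool                     # False when the parameter file is missing
    sampling_rate: float = 100.0        # Hz
    start: Optional[float] = None
    end: Optional[float] = None
    p_arrival: Optional[float] = None
    s_arrival: Optional[float] = None


def sta_lta_window(samples: Sequence[float], fs: float, sta_s: float = 0.5,
                   lta_s: float = 5.0, on: float = 3.0,
                   off: float = 1.5) -> Optional[Tuple[float, float]]:
    """Classic STA/LTA trigger on squared amplitudes (illustrative parameters).
    Returns (start, end) in seconds, or None if no complete event is found."""
    n_sta = max(1, int(sta_s * fs))
    n_lta = max(n_sta + 1, int(lta_s * fs))
    energy = [x * x for x in samples]
    start = None
    for i in range(n_lta, len(energy) + 1):
        sta = sum(energy[i - n_sta:i]) / n_sta
        lta = sum(energy[i - n_lta:i]) / n_lta
        ratio = sta / lta if lta > 0 else 0.0
        t = (i - 1) / fs  # time of the newest sample in both windows
        if start is None and ratio >= on:
            start = t
        elif start is not None and ratio <= off:
            return start, t
    return None


def select_samples(candidates: List[Candidate]):
    """Apply the three discard rules; returns (kept, [(name, reason), ...])."""
    kept, discarded = [], []
    for c in candidates:
        if not c.format_ok:
            discarded.append((c.name, "inconsistent file format"))
        elif c.samples is None or not c.has_sfile:
            discarded.append((c.name, "unpaired Sfile/waveform"))
        elif c.p_arrival is None or c.s_arrival is None:
            # Assumption: only start/end times are recovered from the traces.
            discarded.append((c.name, "missing P or S arrival"))
        elif c.start is not None and c.end is not None:
            kept.append(c)
        else:
            window = sta_lta_window(c.samples, c.sampling_rate)
            if window is None:
                discarded.append((c.name, "start/end not recoverable"))
            else:
                kept.append(replace(
                    c,
                    start=c.start if c.start is not None else window[0],
                    end=c.end if c.end is not None else window[1],
                ))
    return kept, discarded
```

The window lengths and trigger thresholds are illustrative, not tuned values. For real data, ObsPy's `obspy.signal.trigger` module provides `classic_sta_lta` and `trigger_onset`, which compute the same characteristic function and on/off triggering more efficiently than this pure-Python loop.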

4.5. Classification Process

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Miranda, J.; Flórez, A.; Ospina, G.; Gamboa, C.; Flórez, C.; Altuve, M. Proposal for a System Model for Offline Seismic Event Detection in Colombia. Future Internet 2020, 12, 231. https://doi.org/10.3390/fi12120231