Abstract

In an era of accelerating digitization and advanced big data analytics, harnessing quality data and insights will enable innovative research methods and management approaches. Among others, Artificial Intelligence Imagery Analysis has recently emerged as a new method for analyzing the content of large amounts of pictorial data. In this paper, we provide background information and outline the application of this method. We suggest that Artificial Intelligence Imagery Analysis constitutes a profound improvement over previous methods that have mostly relied on manual work by humans. We also discuss the applications of Artificial Intelligence Imagery Analysis for research and practice and provide an example of its use for research. In the case study, we employed Artificial Intelligence Imagery Analysis for decomposing and assessing thumbnail images in the context of marketing and media research and show how properly assessed and designed thumbnail images promote the consumption of online videos. We conclude the paper with a discussion on the potential of Artificial Intelligence Imagery Analysis for research and practice across disciplines.

1. Introduction

Innovative technologies have triggered the current omnipresence of visual data. These large amounts of visual data are the raw material for obtaining rich insights for research and practice. A number of approaches to big data analytics have evolved that increasingly offer a set of tools for analyzing large amounts of data. One area of big data analytics that has recently come to maturity is computer vision, which in itself involves an array of technologies and methodologies.

Artificial Intelligence Imagery Analysis is one approach that is relevant to computer vision, and it is starting to gain attention among researchers and practitioners. Using machine learning and neural networks, Artificial Intelligence Imagery Analysis detects various types of imagery features in pictorial data. It provides insights on those data and thereby helps to cluster, filter, or otherwise analyze large amounts of images.

Until a few years ago, pictorial data largely constituted a black box for research and practice, even though such data drive important and tangible outcomes such as the adoption and use of systems or the consumption and sales of digital content. Recently, information systems researchers have called for the increased use of images as a data source, since the field is “overwhelmingly visual in nature” [1].

So far, information systems research has relied mostly on traditional methods in responding to the call for the increased use of pictorial data. The dominant quantitative approach for analyzing imagery data is the experimental approach, which can be further differentiated into traditional, neuroimaging, and psychophysiological approaches. In traditional experiment-based designs, participants typically view imagery stimuli on a screen and answer questions or make choices to determine attitudinal or behavioral outcomes [2]. Neuroimaging studies use data obtained via electroencephalography (EEG) [3] or functional magnetic resonance imaging (fMRI) [4]. Functional near-infrared spectroscopy (fNIR) provides a third neuroimaging approach [5]. The main psychophysiological approach is eye tracking [6,7]. Surveys are often used to complement quantitative, experimental approaches [6].

However, so far it has hardly been possible to dig deeper into the thematic and semantic levels of large amounts of pictorial data and systematically analyze them and the responses they trigger. Recent advancements in Artificial Intelligence (AI) have made it possible, for the first time, to analyze the features of a large number of images with a precision that has recently exceeded the rating and classification precision of human raters [8]. We distinguish (1) basic features such as dominant colors, shapes, or symmetry, (2) textual or conceptual representations of important topics, and (3) human features (e.g., faces and emotion). Hence, Artificial Intelligence Imagery Analysis seems to be a promising method for information systems research as it allows for comprehensive pre-testing, simplifies longitudinal research designs that require collecting data over weeks or months, and reduces the cost and time for data collection by a factor of 100 to 1000. Further, it overcomes typical human-related biases that are common in research using human raters.

Below, we provide background information and outline the application of Artificial Intelligence Imagery Analysis for analyzing the content of large amounts of pictorial data. We suggest that Artificial Intelligence Imagery Analysis constitutes a profound improvement over previous methods that mostly rely on manual work by humans. Further, we discuss applications of Artificial Intelligence Imagery Analysis for research and in practice and provide a case study for its use in research. In the case study, we employed Artificial Intelligence Imagery Analysis for decomposing and assessing thumbnail images in the context of marketing and media research and show how properly assessed and designed thumbnail images promote the consumption of online videos. We conclude with a discussion on the potential of Artificial Intelligence Imagery Analysis for research and in practice across disciplines.

2. Artificial Intelligence Imagery Analysis: Background, Evolution, and Illustration

2.1. Background

Artificial Intelligence Imagery Analysis belongs to the field of computer vision and is a sub-discipline of AI. It deals with the segmentation, analysis, and understanding of the content of stationary and moving images by computers [9]. Using machine learning and AI methods, models are trained on “training datasets”, for which images and digital formats are given. The output on which the machine learning model is to be trained is known, for instance, through prior rating by humans.

Scientific research has developed and improved Artificial Intelligence Imagery Analysis methods and the application of these methods. Work on Artificial Intelligence Imagery Analysis methods originates from various fields including computer science, applied mathematics, neuroscience, and fundamental psychology research [10] and recent research has mainly been concerned with the ongoing refinement of these methods and their extension to new applications. Areas for the groundwork on Artificial Intelligence Imagery Analysis are diverse and include video tracking or taking, object recognition, for instance, for the driverless car, image database indexing, scene reconstruction, and event detection [9].

Research on concrete applications related to Artificial Intelligence Imagery Analysis spreads across many application sectors. We find early research and applications in medicine, in relation to driverless cars and in (industrial) robotics. For instance, in medicine, research has targeted the detection of skin cancer [11] and the detection of cut marks in bones [12]. Other works have focused on analyzing images of the human eye to detect the state and progression of certain eye diseases [13]. In the context of the driverless car, research has been concerned with road boundary detection [14], traffic sign detection [15], and visual localization [16].

Finally, in (industrial) robotics, researchers have employed Artificial Intelligence Imagery Analysis for object detection that supports indoor robot navigation [17], for detecting forest trails for a mobile robot travelling through the forest [18], and for spotting and identifying apples in an automated apple harvesting system [19].

2.2. Evolution

The evolution of Artificial Intelligence Imagery Analysis started in the 1940s. Back then, research gained initial insights on the working mechanisms of the human brain and the initial concepts of artificial neural networks were developed. In the 1960s, universities around the globe built on this fundamental research and began to experiment with Artificial Intelligence Imagery Analysis on a larger scale with the goal to understand the content of scenic situations [20].

However, it quickly became obvious that the computing power available at the time was a major bottleneck. The tasks to be accomplished were more complex than initially thought. In particular, conducting computer vision in our natural environment—a three-dimensional space—was challenging because identical objects can look much different depending on the angle, proximity, lighting and other conditions [21].

Methods for Artificial Intelligence Imagery Analysis improved through the 1990s. Research made significant progress in addressing some of the complexity of natural images, for instance, in the area of 3D reconstruction and especially with regard to statistical learning techniques, for instance, for recognizing faces in pictures.

Recent work has seen the renaissance of feature-based methods used in conjunction with machine learning techniques and complex optimization frameworks [22].

2.3. Azure Cognitive Services from Microsoft

The applications within Azure Cognitive Services from Microsoft are among the leaders in the industry; they comprise numerous applications around decision-making, speech, language, vision and search [23]. For vision, the applications comprise image classification, scene and activity recognition, optical character recognition, face detection, emotion recognition, video indexing, and form recognition [24]. The literature provides detailed technical descriptions of three major services for Artificial Intelligence Imagery Analysis—see [25] on the computer vision Application Program Interface (API), [26,27] on the face API, and [28] on the emotion API.

The cloud-based Azure Cognitive Services enable developers to harness the power of Artificial Intelligence Imagery Analysis without requiring superior computing power or in-depth skills and knowledge in the area of machine learning. Because the services are provided via an API, developers can integrate them into applications that enable us to “see”, “hear”, “speak”, “understand”, and even “begin to reason” [29].

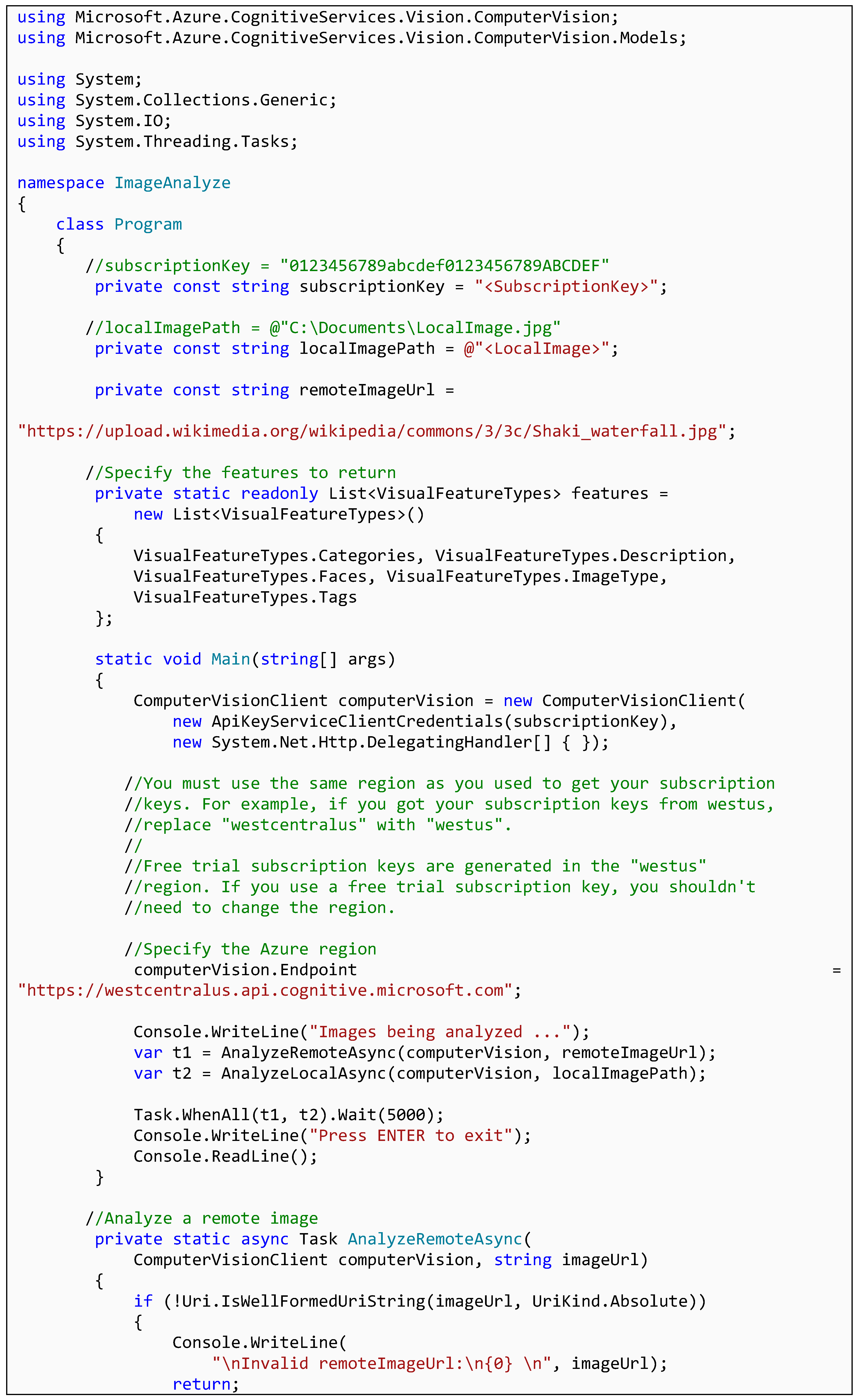

The API then provides the result as JSON output, which application developers can parse. The actual procedure depends on their needs and on the information required to integrate the basic analysis results into higher-level insights. Microsoft provides C# sample code (docs.microsoft.com/de-de/azure/cognitive-services/computer-vision/quickstarts-sdk/csharp-analyze-sdk) that can be used as a template to access and obtain information from the Computer Vision API within the Azure Cognitive Services.

It is organized around the following logic:

- Provide the subscription key for Azure Cognitive Services for the purpose of invoicing

- Provide the URL of the input image

- Specify for what features the API should analyze the image

- Display the results of the analysis
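The four steps above can be sketched in a few lines of Python. This is a minimal sketch and not Microsoft's template: it assumes the REST form of the Computer Vision "analyze" operation, and the `v3.2` path segment, the function names, and all endpoint, key, and image values are placeholders or assumptions to be adapted to the actual Azure resource.

```python
import json
import urllib.parse
import urllib.request


def build_analyze_request(endpoint, subscription_key, image_url, features):
    """Build (but do not send) a POST request for the image analysis operation."""
    # Step 3: name the visual features the service should analyze.
    query = urllib.parse.urlencode({"visualFeatures": ",".join(features)})
    # Assumption: the v3.2 REST path; adjust to the API version of your resource.
    url = f"{endpoint.rstrip('/')}/vision/v3.2/analyze?{query}"
    # Step 2: the input image is referenced by its URL in a small JSON body.
    body = json.dumps({"url": image_url}).encode("utf-8")
    headers = {
        # Step 1: the subscription key identifies the caller for invoicing.
        "Ocp-Apim-Subscription-Key": subscription_key,
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def analyze_image(request):
    """Send the request and return the parsed JSON result (needs network access)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Step 4 (not executed here, since it needs a valid key and network access):
#   request = build_analyze_request(
#       "https://<your-resource>.cognitiveservices.azure.com",
#       "<your-subscription-key>",
#       "https://example.com/thumbnail.jpg",
#       ["Categories", "Tags", "Description", "Faces", "Color"],
#   )
#   print(json.dumps(analyze_image(request), indent=2))
```

Separating request construction from sending keeps the sketch inspectable without a subscription; only `analyze_image` touches the network.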

The actual image analysis is completed in the cloud. Due to the proprietary nature of the cloud applications, Microsoft does not disclose the source code or any documentation on the application itself (apart from how to use it). Still, developers can build on the generally known mechanisms of computer vision and Artificial Intelligence Imagery Analysis. Every image analysis starts with some pre-processing of the input image. This includes filtering, segmentation, edge detection, and the analysis of colors. More advanced applications, for instance for the recognition of objects, rely on convolutional neural networks, the state of the art for image analysis. Those networks mimic the biological and mental processes of visual perception (see [30] for further technical details).
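To make the pre-processing step more concrete, the following sketch applies a Sobel filter, a textbook edge detector, to a tiny grayscale image. It illustrates the generally known mechanism only and makes no claim about how the proprietary service works; the function name and the toy image are our own. The sliding-window operation shown is also the basic building block of a convolutional layer.

```python
# Horizontal Sobel kernel: responds strongly to vertical edges.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def cross_correlate_valid(image, kernel):
    """Slide the kernel over the image without padding ("valid" mode).

    As in most neural network libraries, the kernel is not flipped, so this
    is strictly a cross-correlation rather than a mathematical convolution.
    """
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy 3 x 4 grayscale image with a dark left half and a bright right half.
step_image = [
    [0, 0, 10, 10],
    [0, 0, 10, 10],
    [0, 0, 10, 10],
]
edge_response = cross_correlate_valid(step_image, SOBEL_X)
```

Large absolute values in `edge_response` mark positions where brightness changes sharply from left to right; a uniformly colored image yields zeros everywhere.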

Within the large array of different available Artificial Intelligence Imagery Analysis applications, data requirements and the process of data preparation differ depending on the Artificial Intelligence Imagery Analysis service used and the specific task at hand. However, the prerequisites no longer create any impediments for conducting research based on Artificial Intelligence Imagery Analysis services. For instance, analyzing images with Microsoft Azure Cognitive Services only requires [31]:

- Image URL or path to locally stored image

- Supported input methods: raw image binary in the form of an application/octet stream or image URL

- Supported image formats: JPEG, PNG, GIF, BMP

- Image file size: less than 4 MB

- Image dimension: greater than 50 × 50 pixels
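Before uploading a large batch of images, these prerequisites can be checked locally. The sketch below is one possible pre-flight check; the function name is hypothetical, "4 MB" is read as 4 × 1024 × 1024 bytes, and "greater than 50 × 50 pixels" is read as both sides exceeding 50 pixels. Both readings are assumptions.

```python
SUPPORTED_FORMATS = {"JPEG", "PNG", "GIF", "BMP"}
MAX_FILE_SIZE_BYTES = 4 * 1024 * 1024  # assumption: "4 MB" read as 4 MiB
MIN_SIDE_PIXELS = 50  # assumption: both width and height must exceed this


def check_image_prerequisites(image_format, size_bytes, width, height):
    """Return a list of violated prerequisites (empty if the image qualifies)."""
    problems = []
    if image_format.upper() not in SUPPORTED_FORMATS:
        problems.append(f"unsupported format: {image_format}")
    if size_bytes >= MAX_FILE_SIZE_BYTES:
        problems.append("file size must be less than 4 MB")
    if width <= MIN_SIDE_PIXELS or height <= MIN_SIDE_PIXELS:
        problems.append("image must be larger than 50 x 50 pixels")
    return problems
```

Returning all violations at once, rather than failing on the first, makes it easier to log why individual images in a large dataset were skipped.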

3. Potential Applications

Artificial Intelligence Imagery Analysis allows researchers and practitioners to systematically generate insights from unstructured pictorial data that no human could ever analyze in a timely manner. To this end, existing Artificial Intelligence Imagery Analysis applications help research and practice not only to cope with large amounts of pictorial data, but also to continuously extend and improve the arsenal of new applications.

In some cases in research or practice, the first round of Artificial Intelligence Imagery Analysis may not work properly for non-standard issues. The underlying systems first need to “learn” how to accomplish a complex imagery analysis task based on known data relations before they can work on unknown datasets.

3.1. Supporting Research Designs and Processes

We envision that Artificial Intelligence Imagery Analysis has applicability in a wide variety of research areas. Four exemplary settings include: (1) design science studies on human-computer interaction, (2) studies on the adoption and usage of information systems and technology, particularly with regard to the visual components, (3) studies on visual-content-driven social media, and (4) studies on systems for content recommendation, segmentation and filtering. Many of these research areas have experienced a large growth of pictorial input data. New touch-based devices such as smartphones and tablets come with simplified interfaces that favor pictorial over text-based information. Digitization lowers the barrier to producing pictorial data in any context, for instance around user-generated content in social media.

In all research areas, Artificial Intelligence Imagery Analysis allows for the large sample sizes required to draw robust statistical inferences from real-world contexts. Thus, it constitutes a valuable extension of the methodological pool available to researchers, particularly as it overcomes the sample size drawbacks related to research designs that rely on manual human rating and classification of pictorial data.

The common denominator for applying Artificial Intelligence Imagery Analysis services is any research question that asks about the relation between antecedents (for instance, human behavior or pictorial data) and their outcomes.

Overall, Artificial Intelligence Imagery Analysis supports the research process in several respects.

Reduction of biases. Traditional image rating and classification tasks, as employed for experimental research designs that involve humans, are subject to numerous biases, such as anchoring, attentional biases, contrast effects, courtesy biases, distinction biases, framing effects, negativity biases, salience biases, selective perception, or stereotyping. In addition, human performance on large series of “dull” image rating and classification tasks tends to decline over time. Artificial Intelligence Imagery Analysis significantly reduces these biases. The “system” that performs a task is not subject to the biases listed above, and its rating performance does not decline with an increasing number of tasks.

Reduction of costs. Compared to traditional, experimental research designs for the rating and classification of images by humans, Artificial Intelligence Imagery Analysis reduces the costs of empirical studies by a factor of 100 to 1000. This is because human time is a comparatively costly resource. Also, compared to computers, humans are significantly slower when it comes to image rating and classification tasks. When using Artificial Intelligence Imagery Analysis, however, costs arise only for the initial development and training of the system. This is usually not done on the basis of the individual research project, but only once by an external service provider. After the system is set up, marginal costs for analyzing additional images converge to zero.

Reduction of time. Artificial Intelligence Imagery Analysis can quickly analyze millions of images and thereby provide empirical results “by the end of the day”, as opposed to studies involving human participants that often require months for planning, execution, data preparation, and analysis.

Simplification of data management. Experiments involving traditional image rating and classification tasks often come with a significant overhead of data management requirements. Researchers need to set up questionnaires or rating tools, administer these to study participants and collect their responses, and often also need to collect demographic data for the different human participants. When using Artificial Intelligence Imagery Analysis, one computer replaces many human raters, and thus significantly reduces the complexity of data management.

Facilitation of longitudinal research designs. Research designs that involve human participants for analyzing images are often cross-sectional because it is challenging and costly for research practice to have the same human participant available at numerous points in time (as is necessary for longitudinal research designs). Due to its low costs, high availability, and computer-based execution, Artificial Intelligence Imagery Analysis makes it easier to conduct longitudinal research.

Reduction of study complexity and researcher cognitive overload. Conducting empirical studies that involve human participants often ties up significant person power in researcher teams or even requires additional team members just to administer and execute the empirical study. Artificial Intelligence Imagery Analysis, in contrast, frees up human researchers from many details around the gathering of the empirical data, and thus leaves more room for the research design and interpretation of the data.

Increase in rating precision. During the last two decades, systems for Artificial Intelligence Imagery Analysis have continuously improved and now provide a high rate of precision that outperforms human raters [8]. The system makes fewer objective errors, such as missing objects that should be classified or wrongly classifying objects due to inattention.

Support for “serendipity” and better exploratory data analysis in the early stages of research projects. Artificial Intelligence Imagery Analysis supports typical data science research efforts as it allows for quickly testing datasets for certain patterns, and thereby makes it easier to find something “interesting” or unexpected in a dataset, which might ultimately stimulate a research project that would not otherwise have existed.

However, there are also challenges arising from applying Artificial Intelligence Imagery Analysis in research.

Making machine learning models interpretable. While systems for Artificial Intelligence Imagery Analysis can perform sophisticated rating and classification tasks, the underlying model obtained from machine learning is usually highly complex and not interpretable. Therefore, when researchers want not only to perform certain rating or classification tasks, but also to gain insights into how the rating process came to its conclusions, Artificial Intelligence Imagery Analysis alone is not sufficient. At least up to now, Artificial Intelligence Imagery Analysis itself does not offer any insights on how it solves a problem.

Coping with researcher skill reduction. When insights are just “one click away” and the tools around systems for Artificial Intelligence Imagery Analysis become more sophisticated, this may lead to an overall reduction in researcher skills. Here, researchers have to balance, on the one hand, the benefits of using a technology that frees them from some complexity in their work and, on the other hand, the potential drawbacks of “outsourcing” an important part of their intellectual and cognitive skills to a machine and accepting the “responses” from the black box as given.

Machine encoding of knowledge/information. The machine executing the Artificial Intelligence Imagery Analysis takes certain ontologies for granted when performing the rating tasks. Examples of such ontologies are the categories used to categorize certain image cues. As the input drives the output, deploying Artificial Intelligence Imagery Analysis requires questioning the underlying ontologies, ideally before running any analysis and not just upon interpreting results.

Coping with empiricism. Artificial Intelligence Imagery Analysis speeds up and eases the process of obtaining large empirical datasets at low cost, compared to, for instance, running experiments with human participants. However, the ease of obtaining new data and having the corresponding tools for data analysis at hand may result in an overemphasis on empirical work as a means of research. Staying focused on well-grounded science could become more challenging. Seemingly impressive insights and research “findings” based on large data sets that lead to statistically significant “results” can come to life rather quickly and cheaply, often hidden from detailed inspection.

3.2. Applications in Practice

We anticipate an array of applications of Artificial Intelligence Imagery Analysis in practice. They include, but are not limited to the following.

Aggregating and organizing content, especially user-generated content. Customers and users enjoy a large assortment of barely distinguishable online content. This also applies to user-generated videos, which are often created for non-monetary reasons such as positive feedback, reputation, status, or “warm glow”. To support users’ searches across content offerings, platforms favor imagery cues that users can process quickly and subconsciously. Organizing and filtering content at the thumbnail level reduces the decision complexity and decreases the depth of search for users. Thus, it may help platforms to foster sales or to capture monetary value from their users.

Targeting and mass-customization of content. Platforms may want to algorithmically align thumbnails to content, target individual users according to their preferences and taste, and harvest the additional monetary value from them. Artificial Intelligence Imagery Analysis can help build models that algorithmically decide on thumbnail composition against a desired outcome. This allows providers to economically optimize a thumbnail for a piece of content and its prospective consumers at near-zero marginal costs and, if necessary, in real time.

Targeting advertising. Similar to the advertising market [32], platforms can charge publishers for targeting their content to users. As not all users react equally to different kinds of imagery cues along the dimensions of complexity, emotional strength, concreteness, and uniqueness in thumbnails, platforms can customize thumbnails to the individual user in a way that either promotes or inhibits consumption.

Enhancing business processes. Managers can implement Artificial Intelligence Imagery Analysis into their processes or for their end-user platforms. Regarding internal processes, deploying Artificial Intelligence Imagery Analysis can lead to recommendations for the design of content “packaging” and give real-time feedback to design drafts. When producers upload their content onto platforms, Artificial Intelligence Imagery Analysis allows for building toolboxes and checklists for stronger embedding of the content into the platform via its “packaging”.

4. Research Illustration

To demonstrate the value that can be obtained from Artificial Intelligence Imagery Analysis, this section summarizes an actual research case.

To facilitate choice among growing media assortments, media platforms use recommendation systems such as thumbnail images (in brief: thumbnails) as information cues to promote their media goods.

Typically, every thumbnail shows a scene with objects that possess characteristics [33]. We refer to those objects as visual information cues. By distinguishing human information processing into lower-level processing based on perception and higher-level processing based on cognition [34], we differentiate between low-level cues (contrast, color, shapes) and high-level cues. We further differentiate the latter into conceptual and social cues, as humans process lifeless objects and living beings, particularly humans, differently [34]. Conceptual cues encompass imagery and textual cues [2,35]. For social cues [6,36], we distinguish social presence and emotional presence. Social presence refers to the emotionally neutral presence of living beings (animals or humans). In this case, emotions cannot be detected, for instance because a body is only visible from a distance. Emotional presence refers to facially communicating emotional states, such as anger, contempt, disgust, fear, happiness, sadness, or surprise.

The advancement of innovative technologies has increased the potential of thumbnails. They are particularly significant in fostering online video consumption. New touch-based devices such as smartphones and tablets come with simplified interfaces that favor pictorial over text-based information.

Using Artificial Intelligence Imagery Analysis, we analyzed about 400,000 thumbnails and videos from YouTube (2005–2015). We used the Microsoft Cognitive Services APIs to detect imagery concepts, faces, and emotions in faces within pictures, and Tesseract to obtain data on text within the thumbnails [37], thereby quantifying the visual content of the thumbnails.

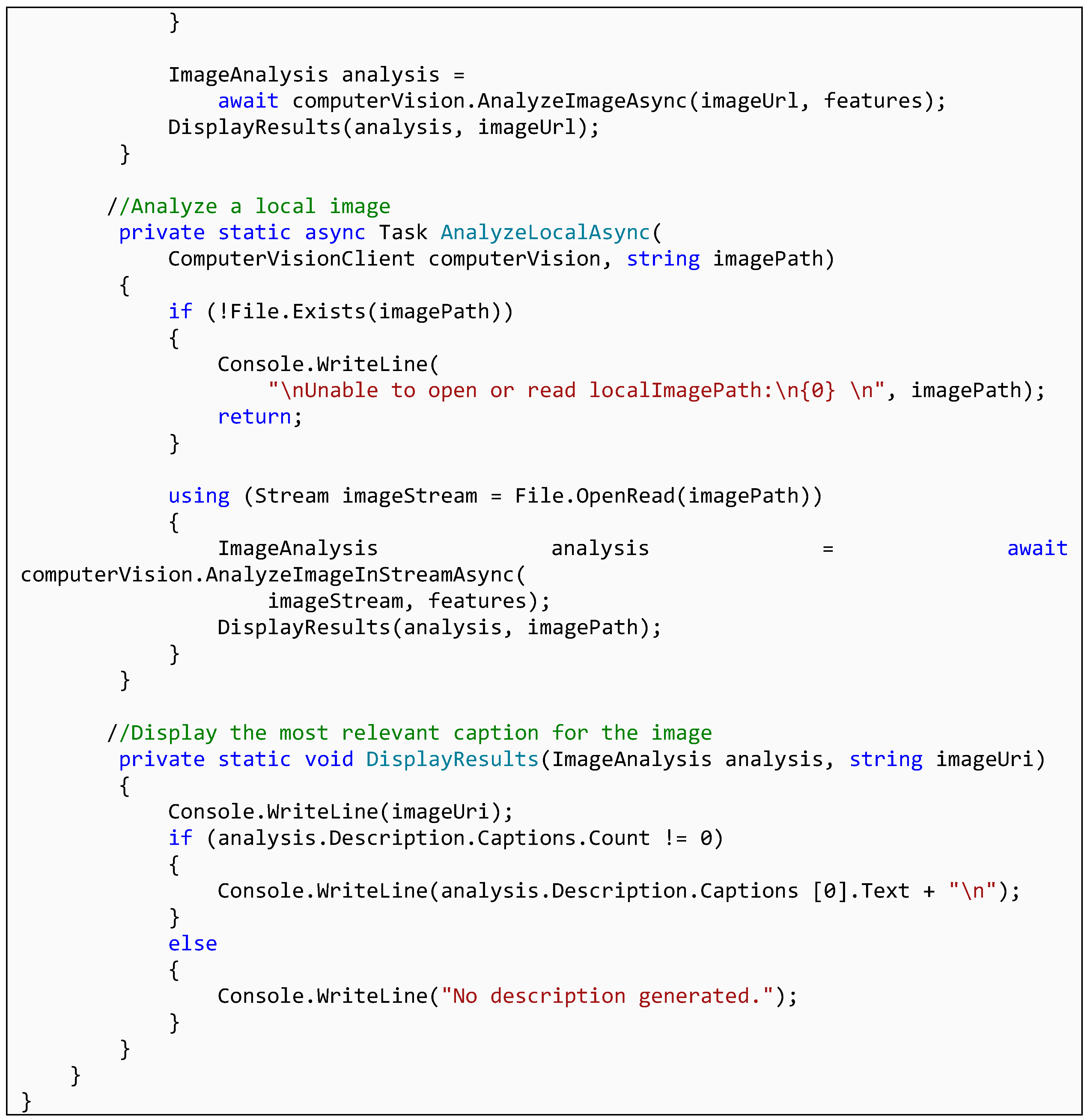

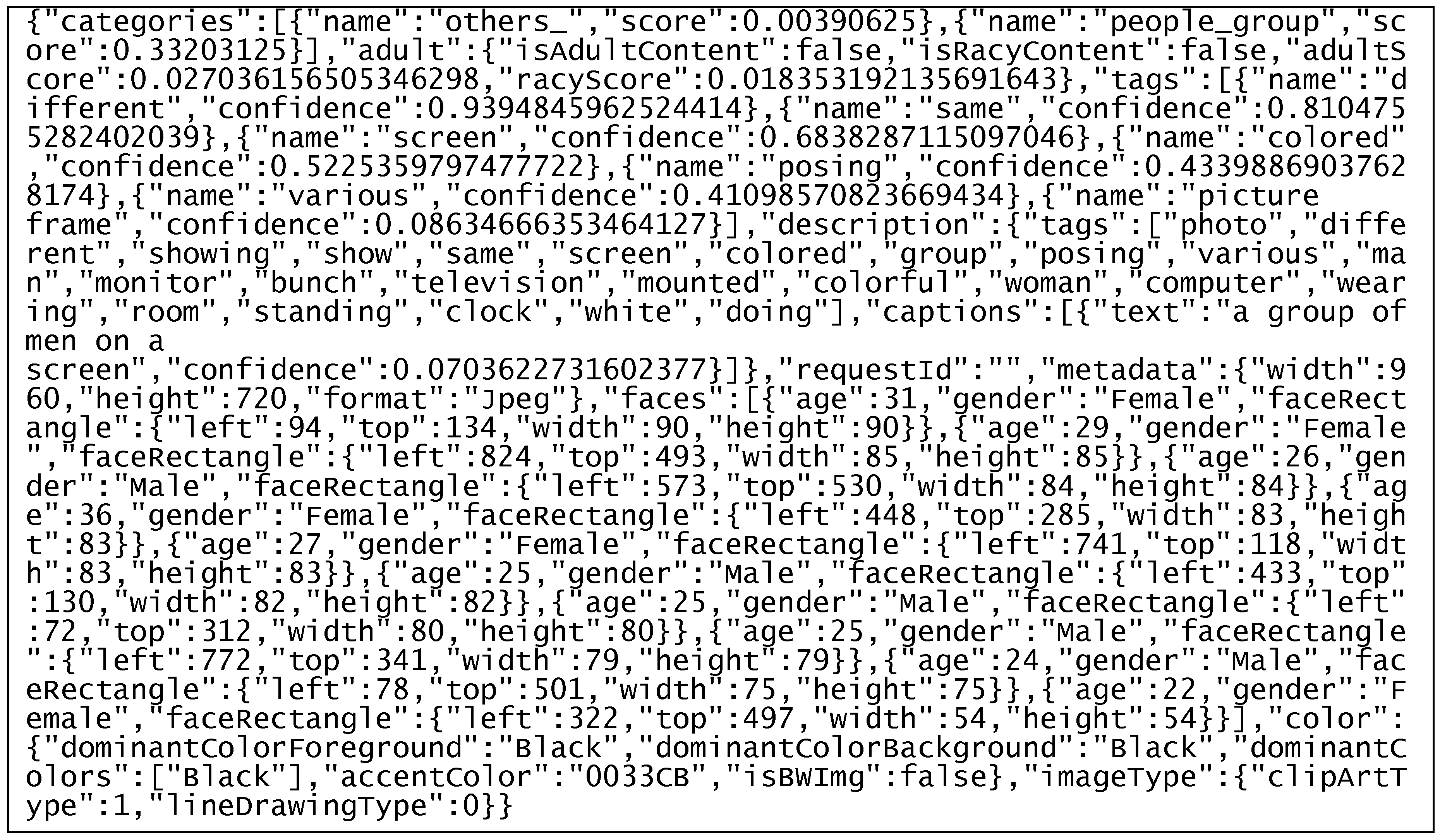

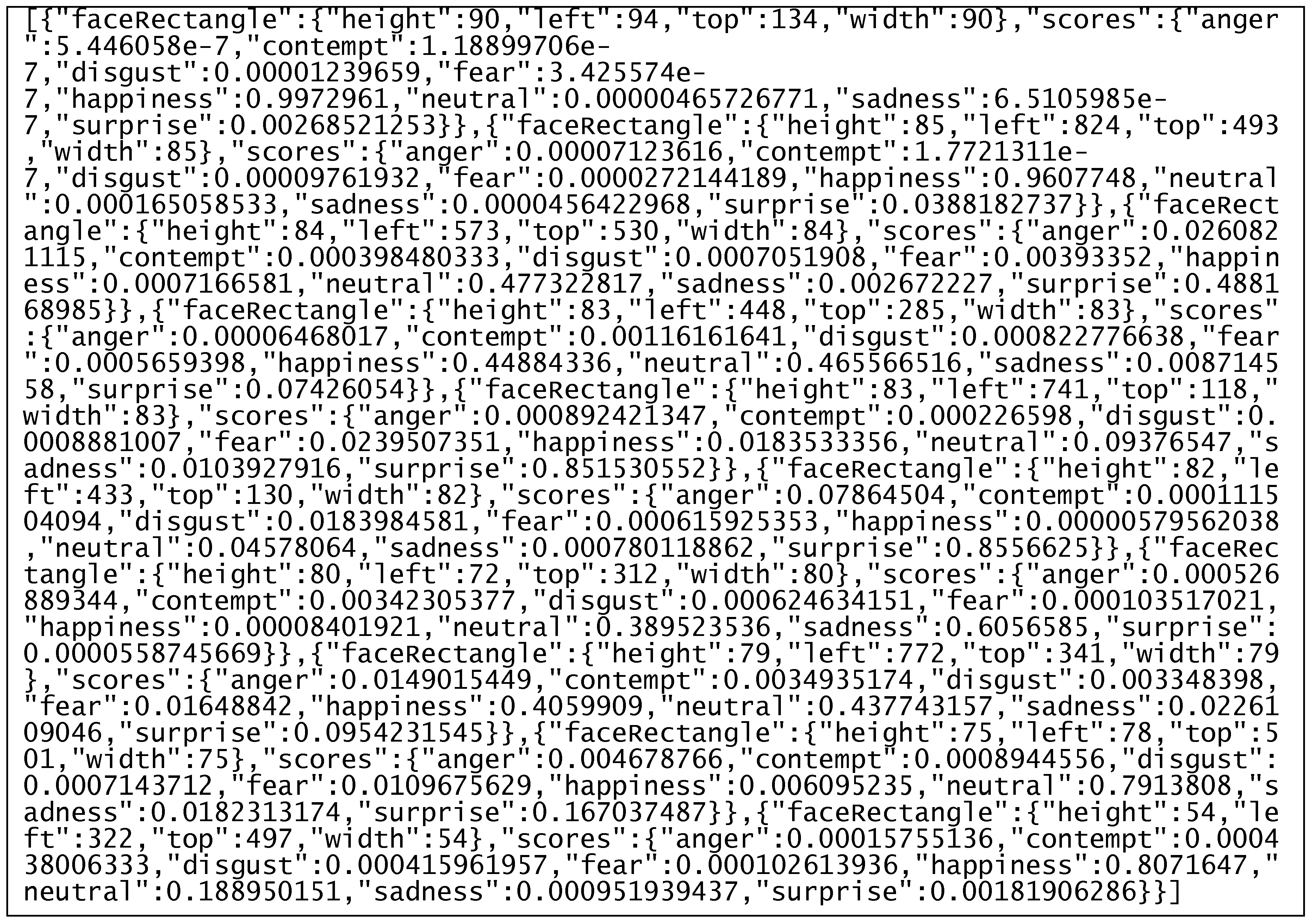

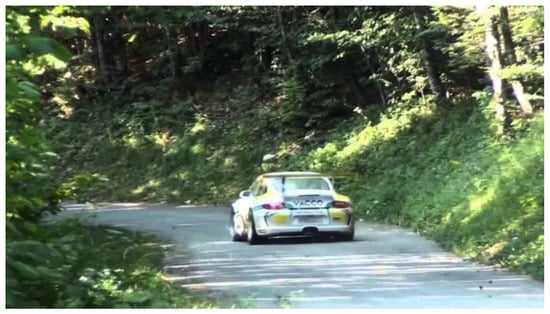

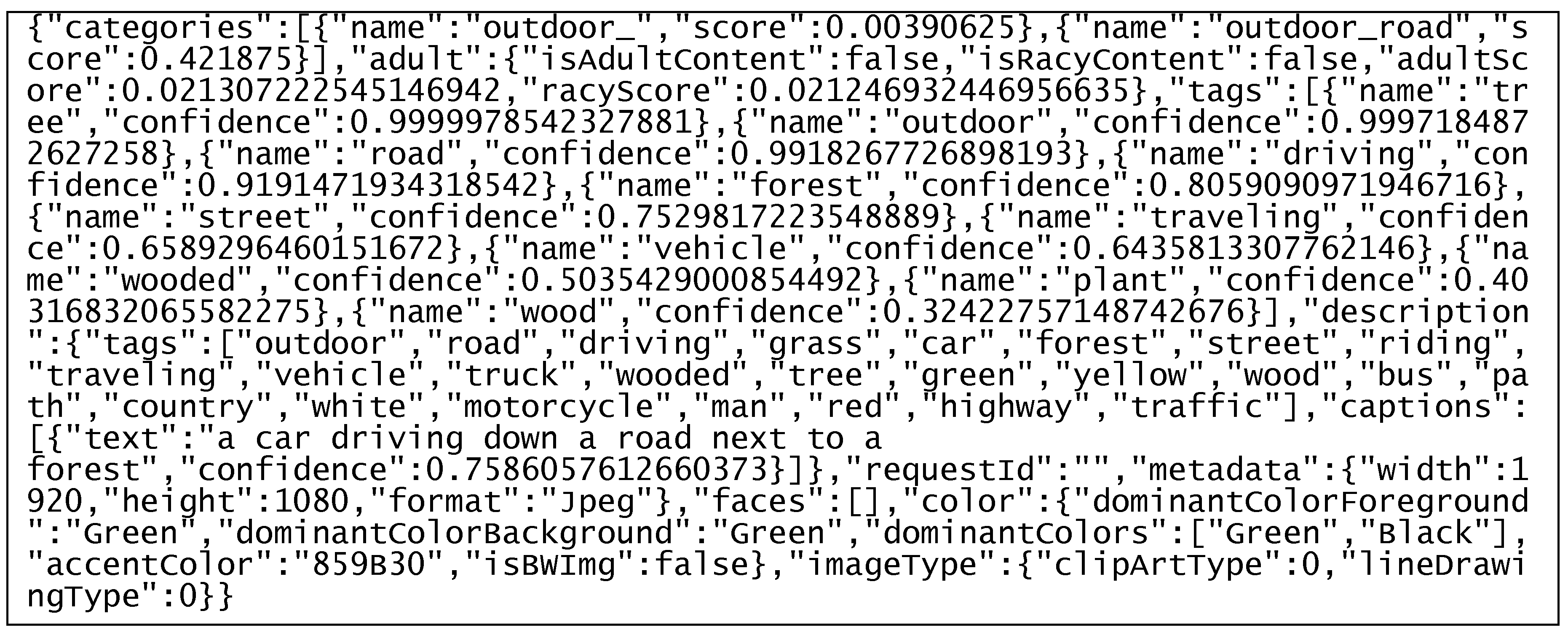

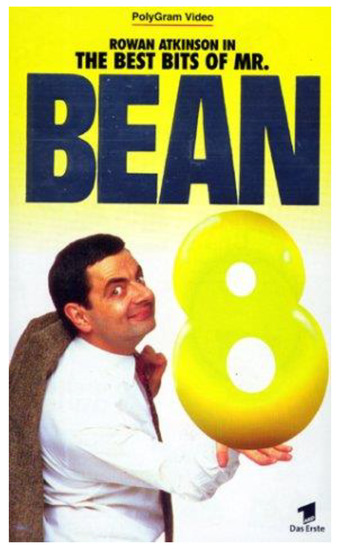

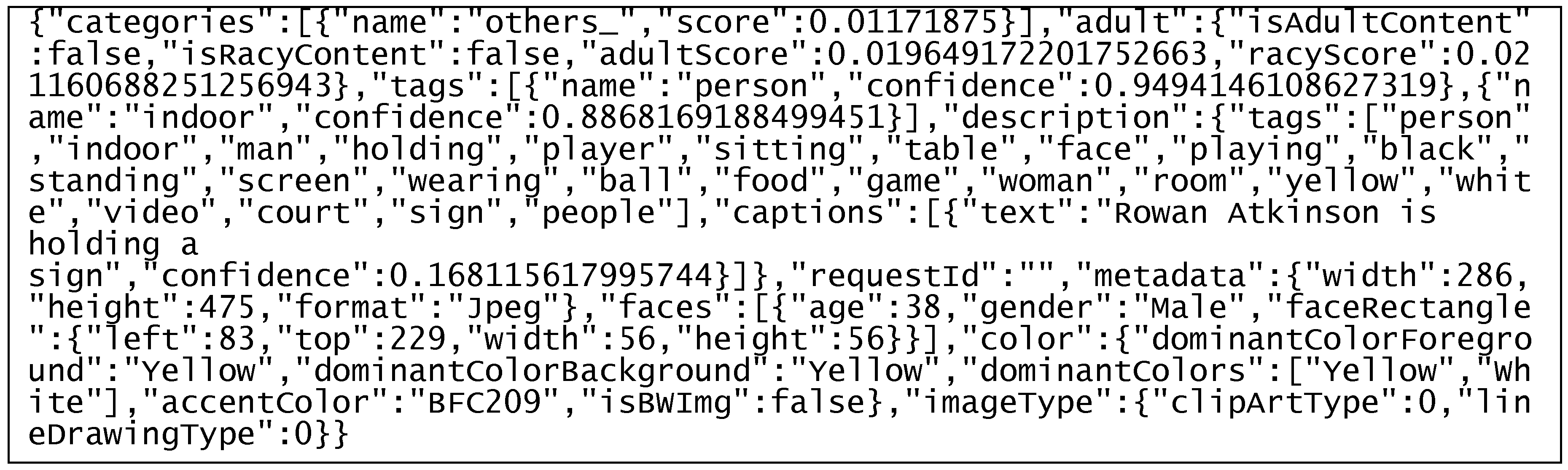

For illustration purposes, we provide the raw analysis output for three out of the 400,000 thumbnails in JSON format (see Figure 1, Figure 2 and Figure 3). For each example, we provide the following:

- The input image uploaded to the API: Every image is a thumbnail shown on YouTube. By clicking on the thumbnail, the user can access the YouTube video behind it.

- The computer vision API output follows the general format of first stating an assessment with regard to the input image and then, where applicable, a confidence score (indicating how “confident” the API was in making this assessment, with 1 as the highest level of confidence). The output starts with a general categorization of the image and then indicates to what extent the image shows content that is adult or racy. Then, it lists tags assigned either to the image as a whole or to certain objects in the image. What follows is a summarized text description of the image as a whole, for instance “a group of men on a screen”, and some metadata of the image. The output then lists the faces identified in the image, including coordinates and dimensions, age, and gender. Finally, the API output identifies dominant colors, accent colors, whether the image is black and white, and what type the image is, for instance clipart or a natural photograph.

- The emotion API output is organized around the faces identified in the input image. For every face, the output first states the coordinates and dimensions. It then provides scores that indicate to what degree the face shows anger, contempt, disgust, fear, happiness, sadness, surprise, or is totally neutral. For each emotion, there is a score between 0 and 1, with 1 indicating that the face expresses the emotion as strongly as possible. The API does not only assign a single dominant emotion to a face, but recognizes when different types of emotions are present in different degrees. The categorization for negative emotions (5 categories) is more fine-grained than for positive emotions (1 category), because human emotions are more complex in the negative range than in the positive range.

- The Optical Character Recognition (OCR) output originates from the Tesseract open-source software and first states the two-letter language code for the primary language the OCR engine has looked for in the thumbnail. It further identifies whether all text in the image is rotated by a certain angle and whether the text orientation is “up” or “down”. Afterwards, the output is organized by regions of text, and then by bounding boxes that contain a piece of text with characters that are spatially close, such as a word. For every bounding box, the output identifies coordinates and dimensions and the characters recognized within the box.
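The output structure described above can be condensed into a few variables per thumbnail. The following sketch shows one way to do so for the computer vision and emotion outputs. The JSON field names (`tags`, `description.captions`, `faces`, `color.isBWImg`, `scores`) follow our recollection of the service's response layout and should be treated as assumptions, as should the function names and the confidence threshold of 0.5.

```python
NEGATIVE_EMOTIONS = ("anger", "contempt", "disgust", "fear", "sadness")


def summarize_vision(vision, min_confidence=0.5):
    """Condense a computer vision result into a flat record for later analysis."""
    # Keep only tags the service is reasonably confident about.
    tags = [
        tag["name"]
        for tag in vision.get("tags", [])
        if tag.get("confidence", 0.0) >= min_confidence
    ]
    captions = vision.get("description", {}).get("captions", [])
    return {
        "tags": tags,
        "caption": captions[0]["text"] if captions else None,
        "num_faces": len(vision.get("faces", [])),
        "is_bw": vision.get("color", {}).get("isBWImg"),
    }


def summarize_emotions(faces):
    """Return one record per detected face with its dominant emotion."""
    records = []
    for face in faces:
        scores = face["scores"]  # one score between 0 and 1 per emotion
        records.append(
            {
                "dominant": max(scores, key=scores.get),
                # Sum of the five negative emotion scores for this face.
                "negative": round(
                    sum(scores.get(name, 0.0) for name in NEGATIVE_EMOTIONS), 3
                ),
                "happiness": scores.get("happiness", 0.0),
            }
        )
    return records
```

Because the API reports a score for every emotion rather than a single label, the sketch retains the aggregated negative score alongside the dominant emotion.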

Figure 1. Sample image 1 and related API output (panels: Computer Vision API Output, Emotion API Output, OCR Output).

Figure 2. Sample image 2 and related API output (panels: Computer Vision API Output, Emotion API Output, OCR Output).

Figure 3. Sample image 3 and related API output (panels: Computer Vision API Output, Emotion API Output, OCR Output).

Once the thumbnail content is quantified, several multiple linear regression analyses are run to investigate how different imagery features affect consumption, measured here as the views of the respective videos.
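As an illustration of this step, the sketch below fits a multiple linear regression by ordinary least squares in plain Python. It is not the model specification used in the study: the two features (number of faces, presence of text) and the outcome values are synthetic toy data chosen for illustration, and in practice a statistics package would be used to obtain standard errors and significance tests as well.

```python
def solve_linear_system(matrix, rhs):
    """Solve matrix * x = rhs by Gauss-Jordan elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system (collinear features?)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def fit_ols(features, outcome):
    """Return [intercept, b1, b2, ...] from the normal equations (X'X) b = X'y."""
    x = [[1.0] + list(row) for row in features]  # prepend the intercept column
    k = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(x, outcome)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Synthetic toy data (not from the study): (number of faces, thumbnail has text).
toy_features = [(0, 0), (1, 0), (2, 1), (3, 1), (1, 1), (0, 1)]
# Toy outcome constructed as 5 + 2 * faces - 1 * has_text, so the fit is exact.
toy_outcome = [5, 7, 8, 10, 6, 4]
coefficients = fit_ols(toy_features, toy_outcome)
```

Each coefficient after the intercept estimates how the outcome changes with one additional unit of the corresponding imagery feature, holding the other features constant.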

Overall, Artificial Intelligence Imagery Analysis enables regression analyses that offer numerous insights for research and practice regarding the design and the impact of thumbnails in selling digital hedonic goods [37]. First of all, the uniqueness of a pictured concept within a dataset decreases the consumption of online videos. However, the uniqueness of a color scheme within a thumbnail, relative to all color schemes in the dataset, increases consumption. Interpreting unique color schemes as novelty, this suggests that novel information cues have an impact on attention and behavior as they require more initial processing. Also, adding imagery and text to thumbnails lowers the consumption of online videos. In contrast, adding several faces and “loading them with emotion” increases it. Further, positive and especially negative emotions pictured in thumbnails promote the consumption of hedonic media goods. Finally, higher image resolution and stronger confidence in identifying the pictured concept lower the consumption of hedonic media goods, suggesting images in thumbnails should be vague rather than too concrete.

5. Outlook

The growth of digitization, big data analytics, and AI cannot be slowed down, let alone stopped or reversed; data continues to increase in volume and importance. In this context, Artificial Intelligence Imagery Analysis has the potential to fundamentally change and inspire research and practice by enriching traditional approaches. However, it is not only suitable for addressing existing topics in various research areas, it may also challenge research design and outcomes.

When applying Artificial Intelligence Imagery Analysis, researchers have to be careful to make their models for machine learning interpretable. They also have to cope with the potential loss of human skills. The applications for Artificial Intelligence Imagery Analysis build on certain ontologies that researchers often take for granted—even if they may not be appropriate for their specific research context. Lastly, researchers will have to find balance between taking any pattern they find in the data and building a theory around it versus developing a theory and searching for suitable data to test it.

Overall, we envision that Artificial Intelligence Imagery Analysis will be utilized in a wide variety of application scenarios and research contexts. Examples include design science in human-computer interaction, the study of adoption and usage in IS, particularly with regard to the visual components, and the study of visual-content-driven social media. Artificial Intelligence Imagery Analysis is a promising alternative or supplement to traditional research methods when it comes to rating precision [8], the reduction of common biases, comprehensive pre-testing, new longitudinal research designs, and, last but by no means least, the reduction in cost and time for data collection.

Author Contributions

Both authors contributed equally to the article.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Diaz Andrade, A.; Urquhart, C.; Arthanari, T. Seeing for understanding: Unlocking the potential of visual research in Information Systems. J. Assoc. Inf. Syst. 2015, 16, 646–673.

- Jiang, Z.; Benbasat, I. The effects of presentation formats and task complexity on online consumers’ product understanding. Manag. Inf. Syst. Q. 2007, 31, 475–500.

- Gregor, S.; Lin, A.; Gedeon, T.; Riaz, A.; Zhu, D. Neuroscience and a nomological network for the understanding and assessment of emotions in Information Systems research. J. Manag. Inf. Syst. 2014, 30, 13–48.

- Benbasat, I.; Dimoka, A.; Pavlou, P.; Qiu, L. Incorporating social presence in the design of the anthropomorphic interface of recommendation agents: Insights from an fMRI study. In Proceedings of the 2010 International Conference on Information Systems, Saint Louis, MO, USA, 6–8 June 2010.

- Gefen, D.; Ayaz, H.; Onaral, B. Applying Functional Near Infrared (fNIR) spectroscopy to enhance MIS research. Trans. Hum. Comput. Interact. 2014, 6, 55–73.

- Cyr, D.; Head, M.; Larios, H.; Pan, B. Exploring human images in website design: A multi-method approach. Manag. Inf. Syst. Q. 2009, 33, 539–566.

- Djamasbi, S.; Siegel, M.; Tullis, T. Generation Y, web design, and eye tracking. Int. J. Hum. Comput. Stud. 2010, 68, 307–323.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. 2015. Available online: arxiv.org/pdf/1502.01852v1 (accessed on 25 July 2019).

- Morris, T. Computer Vision and Image Processing; Palgrave Macmillan: London, UK, 2004.

- Brown, C. Computer vision and natural constraints. Science 1984, 224, 1299–1305.

- Jianu, S.; Ichim, L.; Popescu, D. Automatic Diagnosis of Skin Cancer Using Neural Networks. In Proceedings of the 2019 11th International Symposium on Advanced Topics in Electrical Engineering (ATEE), Bucharest, Romania, 28–30 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–4.

- Byeon, W.; Domínguez-Rodrigo, M.; Arampatzis, G.; Baquedano, E.; Yravedra, J.; Maté-González, M.; Koumoutsakos, P. Automated identification and deep classification of cut marks on bones and its paleoanthropological implications. J. Comput. Sci. 2019, 32, 36–43.

- De Fauw, J.; Keane, P.; Tomasev, N.; Visentin, D.; van den Driessche, G.; Johnson, M.; Hughes, C.; Chu, C.; Ledsam, J.; Back, T.; et al. Automated analysis of retinal imaging using machine learning techniques for computer vision. F1000Research 2016, 5, 1573.

- Zhu, X.; Gao, M.; Li, S. A real-time Road Boundary Detection Algorithm Based on Driverless Cars. In Proceedings of the 2015 4th National Conference on Electrical, Electronics and Computer Engineering, Xi’an, China, 12–13 December 2015; Atlantis Press: Paris, France.

- Houben, S.; Stallkamp, J.; Salmen, J.; Schlipsing, M.; Igel, C. Detection of traffic signs in real-world images: The German traffic sign detection benchmark. In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN 2013), Dallas, TX, USA, 4–9 August 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–8.

- Wolcott, R.; Eustice, R. Visual localization within LIDAR maps for automated urban driving. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2014), Chicago, IL, USA, 14–18 September 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 176–183.

- Takacs, M.; Bencze, T.; Szabo-Resch, M.; Vamossy, Z. Object recognition to support indoor robot navigation. In Proceedings of the CINTI 2015, 16th IEEE International Symposium on Computational Intelligence and Informatics, Budapest, Hungary, 19–21 November 2015; Szakál, A., Ed.; IEEE: Piscataway, NJ, USA, 2015; pp. 239–242.

- Giusti, A.; Guzzi, J.; Ciresan, D.; He, F.; Rodriguez, J.; Fontana, F.; Faessler, M.; Forster, C.; Schmidhuber, J.; Di Caro, G.; et al. A Machine Learning Approach to Visual Perception of Forest Trails for Mobile Robots. IEEE Robot. Autom. Lett. 2016, 1, 661–667.

- Silwal, A.; Gongal, A.; Karkee, M. Apple identification in field environment with over the row machine vision system. Agric. Eng. Int. CIGR J. 2014, 16, 66–75.

- Hornberg, A. Handbook of Machine Vision; Wiley-VCH: Hoboken, NJ, USA, 2007.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer: London, UK, 2011.

- Dechow, D. Explore the Fundamentals of Machine Vision: Part 1. Vision Systems Design. Available online: https://www.vision-systems.com/cameras-accessories/article/16736053/explore-the-fundamentals-of-machine-vision-part-i (accessed on 24 July 2019).

- Microsoft 2019a. Cognitive Services | Microsoft Azure. Available online: azure.microsoft.com/en-us/services/cognitive-services/ (accessed on 25 July 2019).

- Microsoft 2019b. Cognitive Services Directory | Microsoft Azure. Available online: azure.microsoft.com/en-us/services/cognitive-services/directory/vision/ (accessed on 25 July 2019).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. In Proceedings of the Computer Vision – ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Ed.; Springer: Cham, Switzerland, 2014; pp. 346–361.

- Chen, D.; Cao, X.; Wen, F.; Sun, J. Blessing of dimensionality: High-dimensional feature and its Efficient Compression for Face Verification. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 3025–3032.

- Chen, D.; Ren, S.; Wei, Y.; Cao, X.; Sun, J. Joint Cascade Face Detection and Alignment. In Proceedings of the Computer Vision – ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Ed.; Springer: Cham, Switzerland, 2014; pp. 109–122.

- Yu, Z.; Zhang, C. Image based static facial expression recognition with multiple deep network learning. In Proceedings of the 2015 International Conference on Multimodal Interaction, Seattle, WA, USA, 9–13 November 2015; Zhang, Z., Cohen, P., Bohus, D., Horaud, R., Meng, H., Eds.; Association for Computing Machinery: New York, NY, USA, 2015; pp. 435–442.

- Microsoft 2019c. What are Azure Cognitive Services? Available online: docs.microsoft.com/en-us/azure/cognitive-services/welcome (accessed on 25 July 2019).

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Microsoft 2019d. Example: Call the Analyze Image API - Computer Vision. Available online: docs.microsoft.com/en-us/azure/cognitive-services/computer-vision/vision-api-how-to-topics/howtocallvisionapi (accessed on 25 July 2019).

- Aslam, B.; Karjaluoto, H. Digital advertising around paid spaces, e-advertising industry’s revenue engine: A review and research agenda. Telemat. Inform. 2017, 34, 1650–1662.

- Henderson, J.; Hollingworth, A. High-level scene perception. Annu. Rev. Psychol. 1999, 50, 243–271.

- Snowden, R.; Thompson, P.; Troscianko, T. Basic Vision: An Introduction to Visual Perception; Oxford University Press: Oxford, UK, 2012.

- Speier, C. The influence of information presentation formats on complex task decision-making performance. Int. J. Hum. Comput. Stud. 2006, 64, 1115–1131.

- Djamasbi, S.; Siegel, M.; Tullis, T. Faces and viewing behavior: An exploratory investigation. Trans. Hum. Comput. Interact. 2012, 4, 190–211.

- Cremer, S.; Ma, A. Predicting e-commerce sales of hedonic information goods via artificial intelligence imagery analysis of thumbnails. In Proceedings of the 2017 International Conference on Electronic Commerce, Pangyo, Korea, 17–18 August 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1–8.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).