Joint Location-Dependent Pricing and Request Mapping in ICN-Based Telco CDNs For 5G

Abstract

1. Introduction

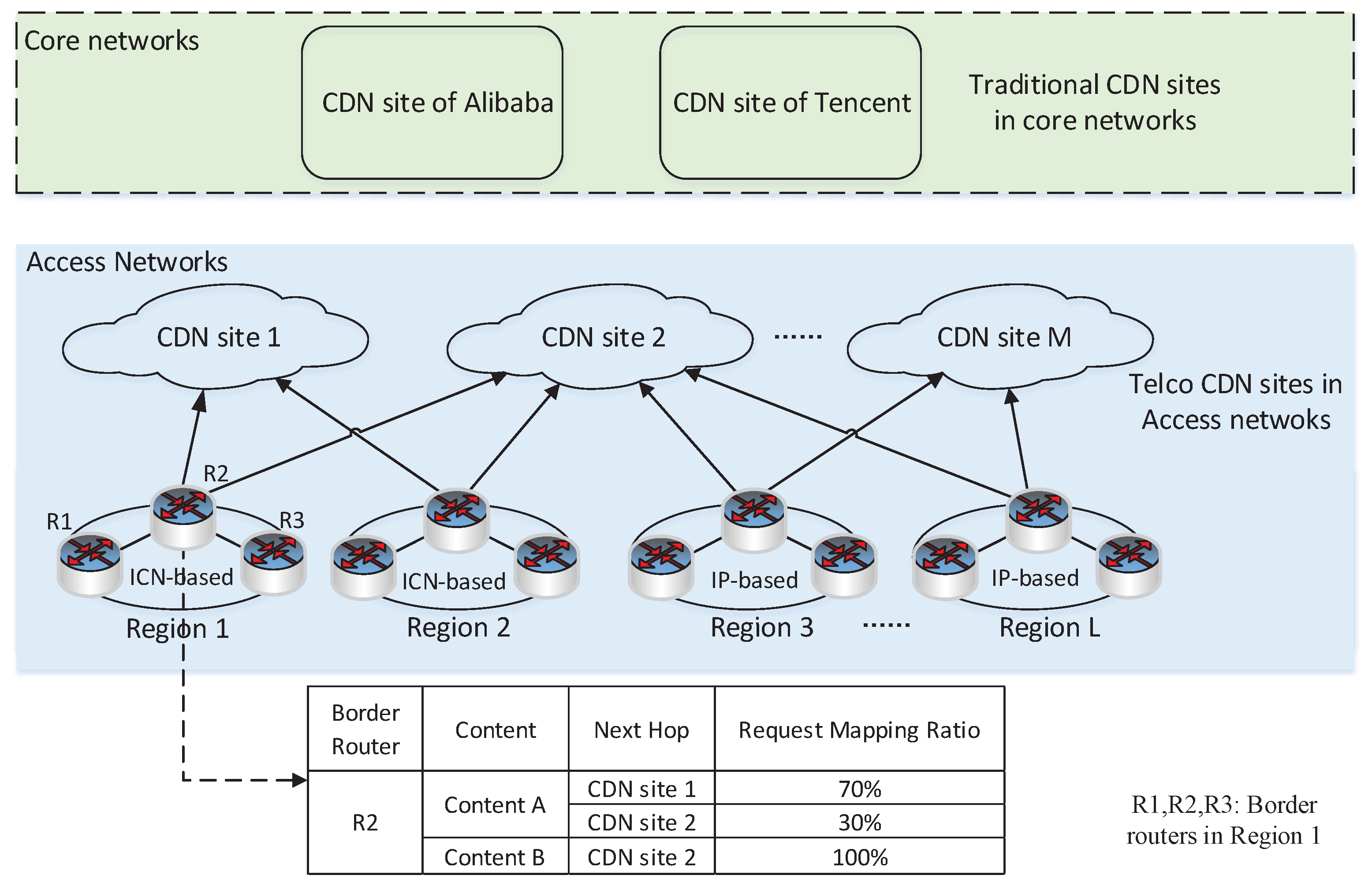

- First, we propose an integrated framework for the cost-efficient and effective deployment of ICN-based telco CDNs. Specifically, we use the powerful off-path caching provided by telco CDNs to complement on-path caching in ICN. In our design, once a cache miss occurs at an ICN router, the border router of the access network directs the content request to the most suitable telco CDN site. The integrated design therefore combines content-centric forwarding with powerful off-path caching, offering a cost-efficient and realistic option for deploying ICN-based telco CDNs in the 5G era;

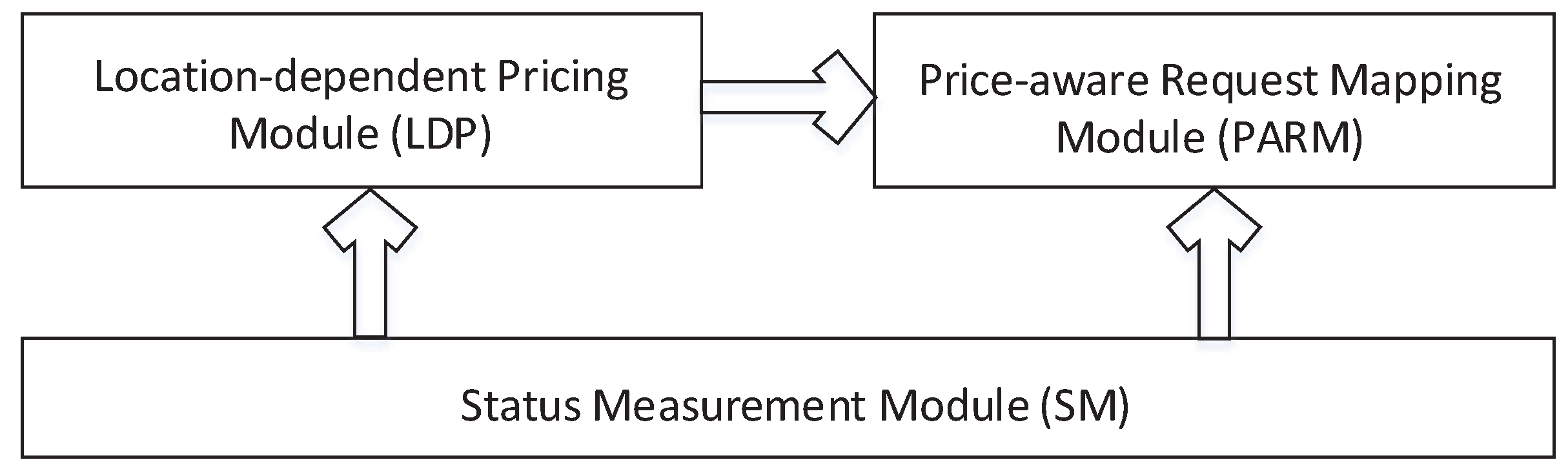

- Second, we propose a framework that consists of a location-dependent pricing (LDP) module, a price-aware request mapping (PARM) module, and status measurement (SM) modules. The LDP module dynamically sets the prices of different CDN sites according to the sites’ bandwidth usage measured by the SM modules. The PARM module calculates the request mapping rules according to these prices and the application access statistics measured by the SM modules;

- Third, we carefully design the LDP and PARM modules. Specifically, we present an algorithm that describes the LDP procedure, taking into account the spatial and temporal heterogeneity of different telco CDN sites. We then formulate an optimization problem that minimizes user-perceived latency and total payment to make the PARM decisions;

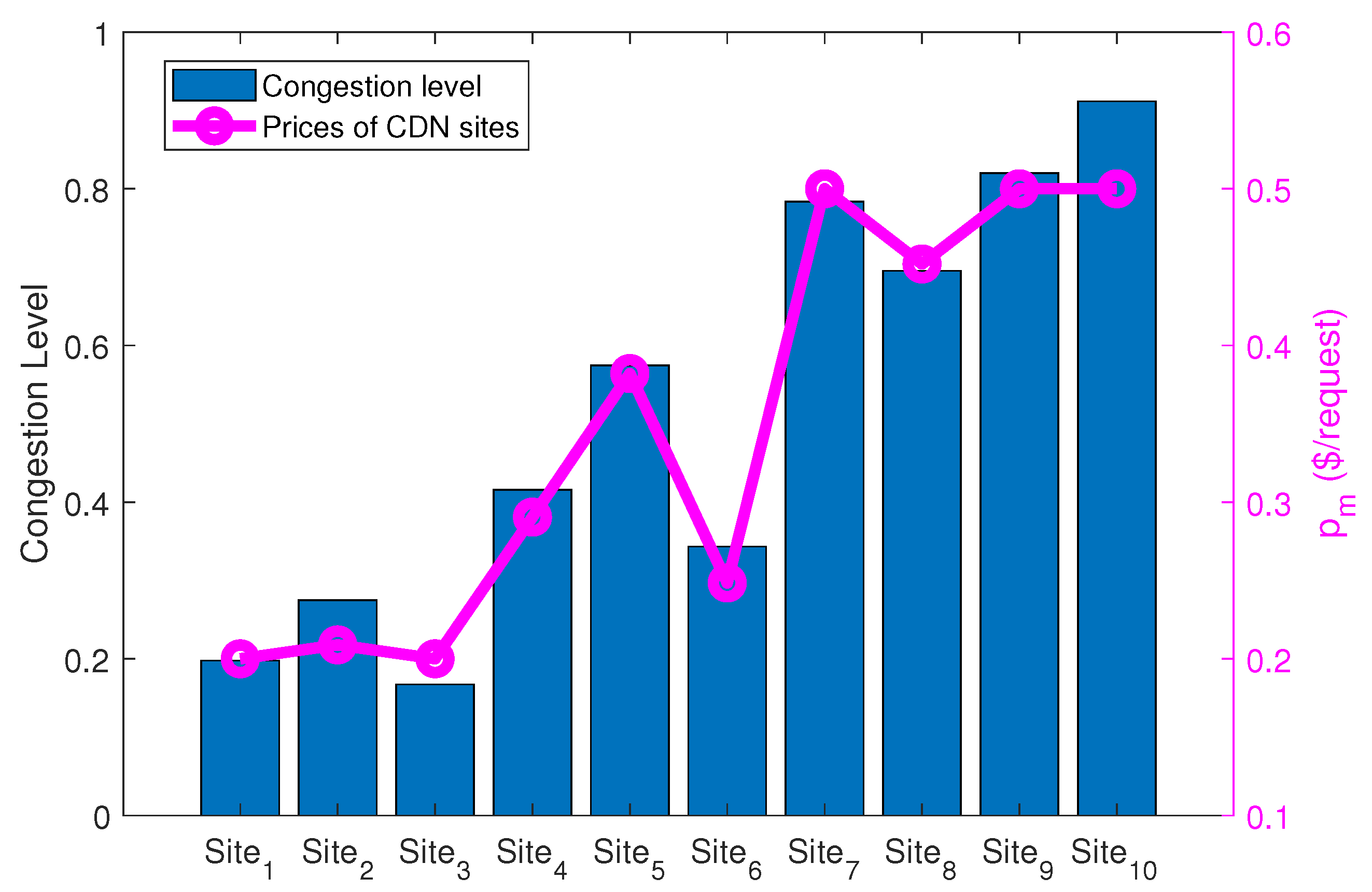

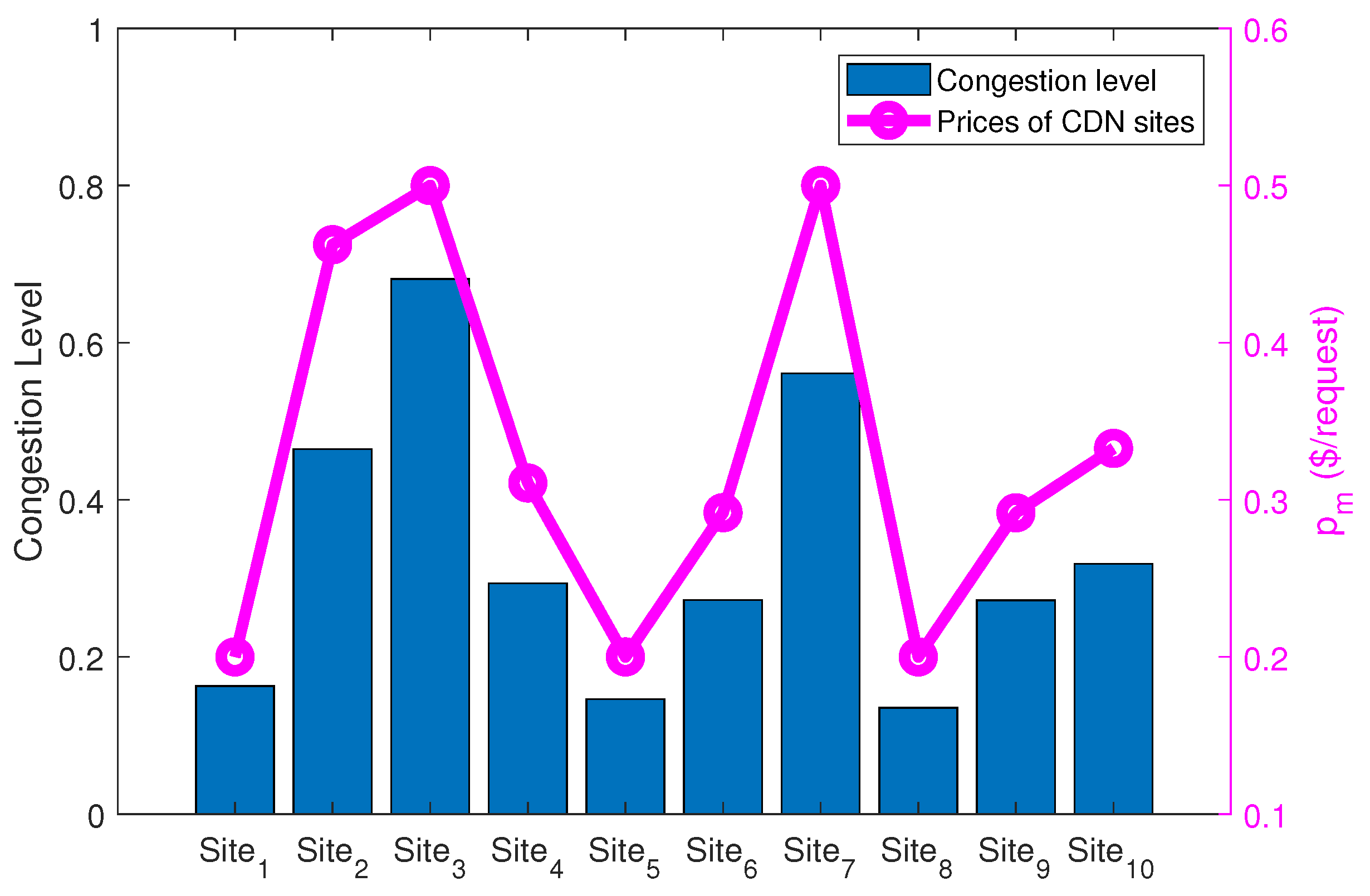

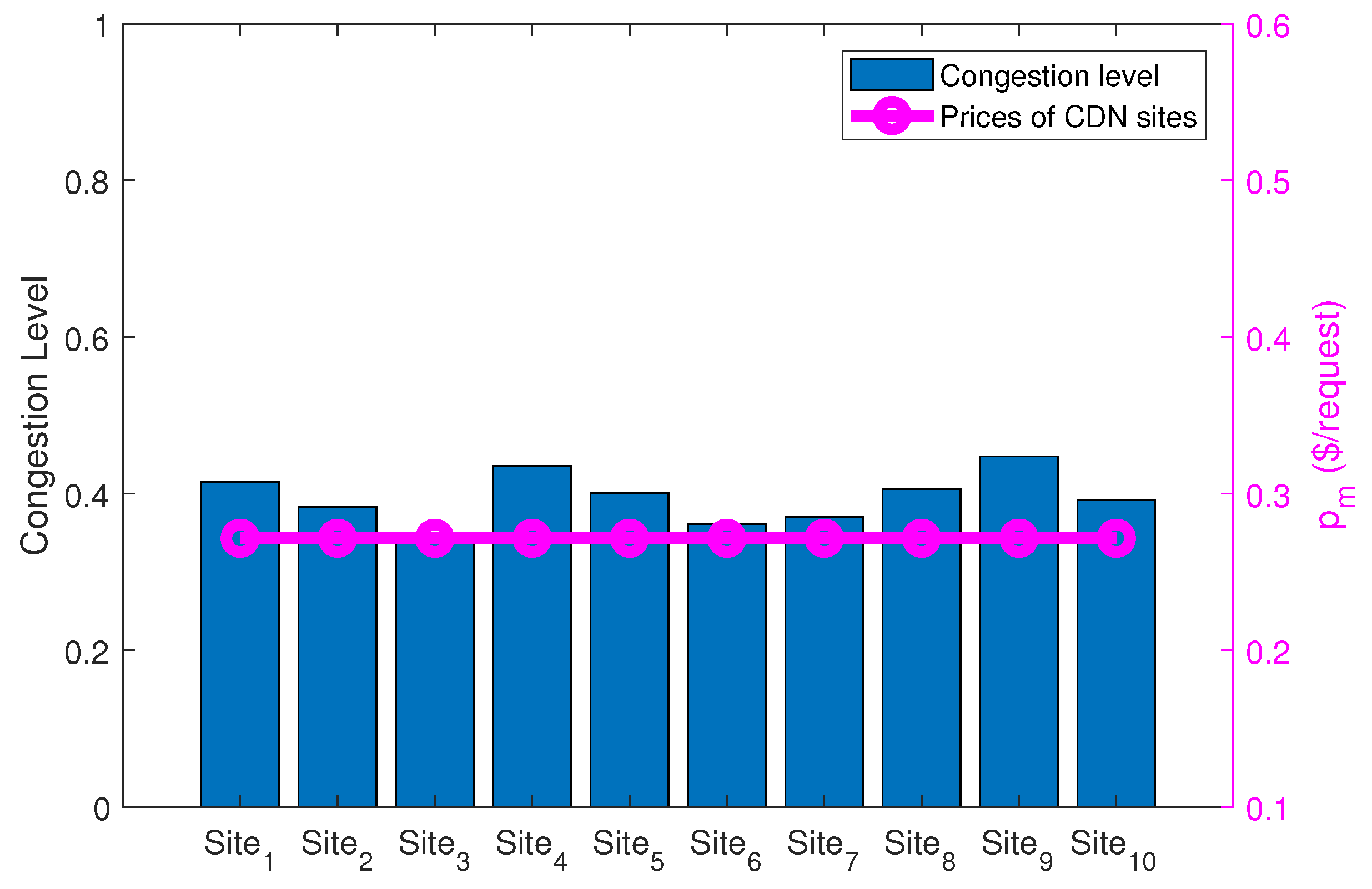

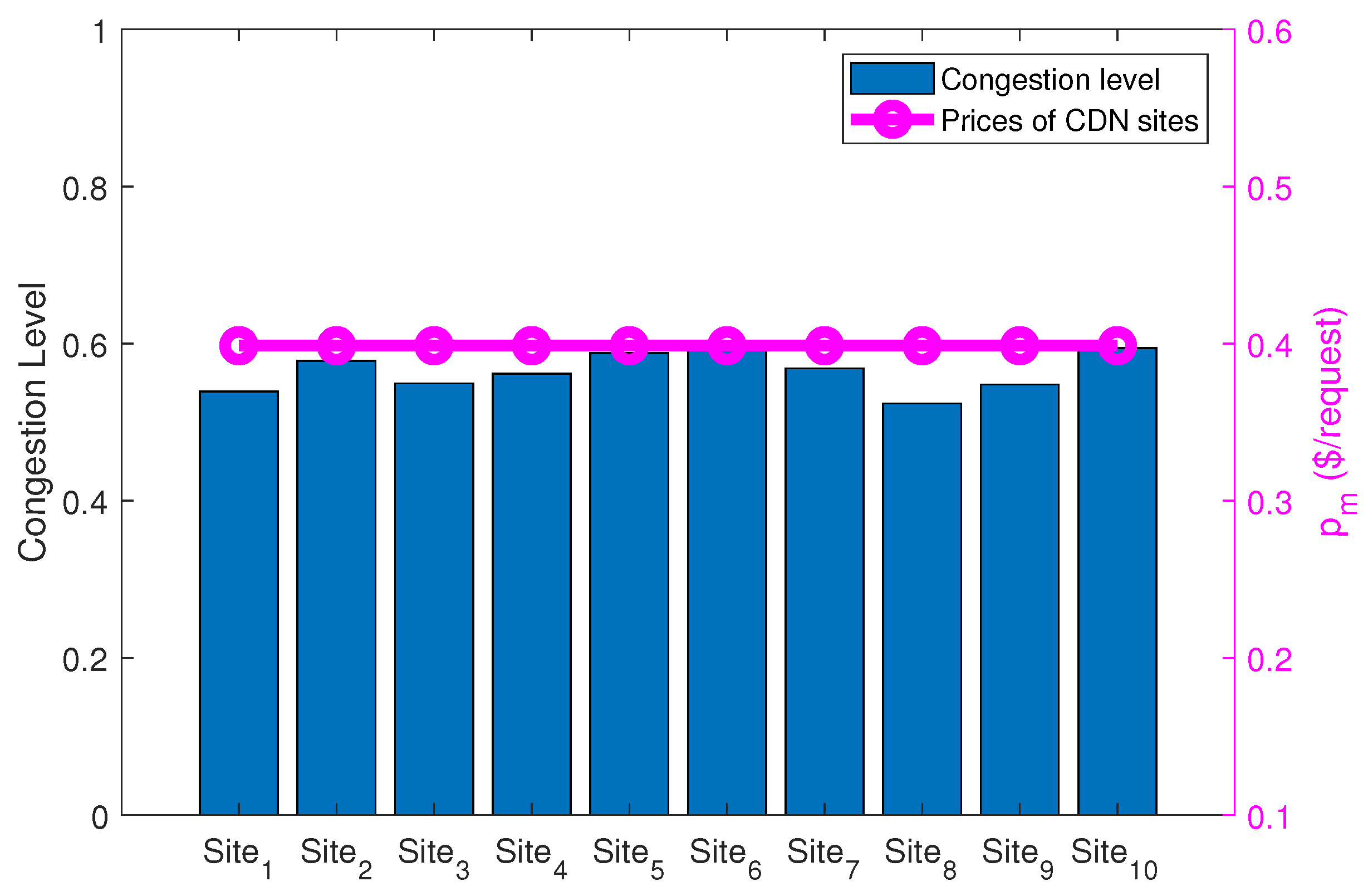

- Fourth, we conduct extensive simulations to evaluate the proposed design. The simulation results show that our design helps ICN-based telco CDNs flexibly price different CDN sites according to their congestion levels. When the congestion level of a CDN site rises to a certain level, LDP sets a higher price, helping the telco keep pace with its increasing bandwidth cost. Moreover, we examine the impact of key parameter settings on the telco’s revenue and cost, which helps a telco choose proper parameters when adopting our design.
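To make the congestion-to-price idea in the contributions above concrete, the following is a minimal sketch and not the paper's Algorithm 1. It assumes that a site's congestion level is its bandwidth usage divided by its capacity, and that the price rises linearly from a lowest price to a highest price between two congestion thresholds. The names `congestion_level`, `site_price`, `price_all_sites`, `low_thr`, `high_thr`, `p_min`, and `p_max` are hypothetical.

```python
from typing import List


def congestion_level(usage: float, capacity: float) -> float:
    """Assumed definition: fraction of a site's bandwidth capacity in use."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    return usage / capacity


def site_price(level: float, low_thr: float, high_thr: float,
               p_min: float, p_max: float) -> float:
    """Illustrative piecewise-linear rule (an assumption, not Algorithm 1).

    At or below low_thr the lowest price applies, at or above high_thr the
    highest price applies, and in between the price is interpolated linearly.
    """
    if not low_thr < high_thr:
        raise ValueError("low_thr must be smaller than high_thr")
    if level <= low_thr:
        return p_min
    if level >= high_thr:
        return p_max
    frac = (level - low_thr) / (high_thr - low_thr)
    return p_min + frac * (p_max - p_min)


def price_all_sites(usages: List[float], capacities: List[float],
                    low_thr: float, high_thr: float,
                    p_min: float, p_max: float) -> List[float]:
    """Price every site independently from its own congestion level."""
    return [
        site_price(congestion_level(u, c), low_thr, high_thr, p_min, p_max)
        for u, c in zip(usages, capacities)
    ]


# Three sites with equal capacity and increasing usage (made-up numbers).
prices = price_all_sites([10, 50, 95], [100, 100, 100], 0.2, 0.8, 1.0, 2.0)
```

Under these assumptions a lightly loaded site is priced at `p_min`, which acts as a discount, while a heavily loaded site is priced at `p_max`.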

2. Related Work

3. System Model

4. Joint Pricing and Request Mapping

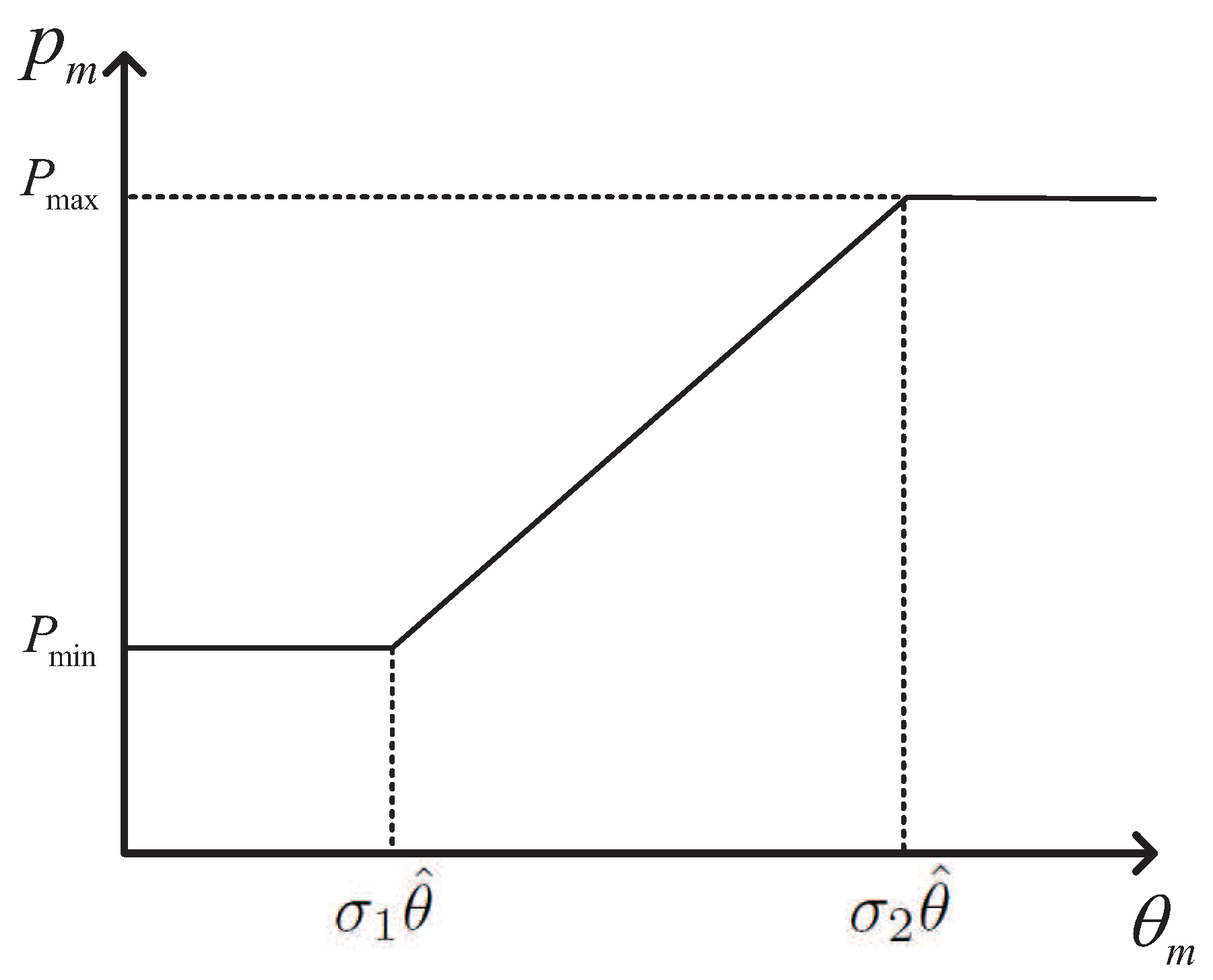

4.1. The Design of LDP Module

| Algorithm 1 The congestion-aware bandwidth pricing strategy (CABP) algorithm |

| Input:, , , , , |

Output:

|

| Algorithm 2 The LDP Algorithm |

| Input:, |

Output:P

|

4.2. The Design of PARM Module
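The introduction describes PARM as an optimization that trades off user-perceived latency against total payment. The sketch below is a simplified greedy stand-in for that optimization, not the paper's formulation: it handles a single application, ranks sites by a weighted sum of latency and unit price, and fills sites in that order subject to capacity and a latency bound. The function name `greedy_mapping` and the trade-off parameter `weight` are hypothetical, and latency and price are assumed to be pre-scaled to comparable ranges.

```python
from typing import List


def greedy_mapping(demand: List[float], latency: List[List[float]],
                   price: List[float], capacity: List[float],
                   max_latency: float, weight: float) -> List[List[float]]:
    """Return x[l][m], the portion of region l's requests sent to site m.

    demand[l]     bandwidth demand of region l
    latency[l][m] latency between region l and site m
    price[m]      unit bandwidth price of site m
    capacity[m]   bandwidth capacity of site m
    max_latency   latency bound a site must meet to be eligible
    weight        hypothetical knob in [0, 1]; 0 means latency only
    """
    n_regions, n_sites = len(demand), len(price)
    remaining = list(capacity)
    x = [[0.0] * n_sites for _ in range(n_regions)]
    for l in range(n_regions):
        if demand[l] == 0:
            continue  # nothing to map for this region
        # Eligible sites, best (lowest) combined score first.
        candidates = sorted(
            (m for m in range(n_sites) if latency[l][m] <= max_latency),
            key=lambda m: (1 - weight) * latency[l][m] + weight * price[m],
        )
        left = demand[l]
        for m in candidates:
            if left <= 0:
                break
            served = min(left, remaining[m])
            if served > 0:
                x[l][m] = served / demand[l]
                remaining[m] -= served
                left -= served
        if left > 1e-9:
            raise ValueError(f"region {l} cannot be served within the constraints")
    return x


# Made-up example: two regions, two sites, site 1 is cheaper.
lat = [[10, 30], [30, 10]]
latency_only = greedy_mapping([10, 10], lat, [5.0, 1.0], [100, 100], 50, 0.0)
price_only = greedy_mapping([10, 10], lat, [5.0, 1.0], [100, 100], 50, 1.0)
```

With `weight = 0` each region is mapped to its lowest-latency eligible site, while with `weight = 1` both regions are drawn to the cheaper site as long as its capacity allows. A greedy pass like this does not guarantee an optimal mapping; it only illustrates how prices can steer requests.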

5. Evaluation

5.1. Experiment Setup

5.1.1. Traffic Model

5.1.2. Performance Metrics and the Compared Cases

- The variation of the sites’ congestion level;

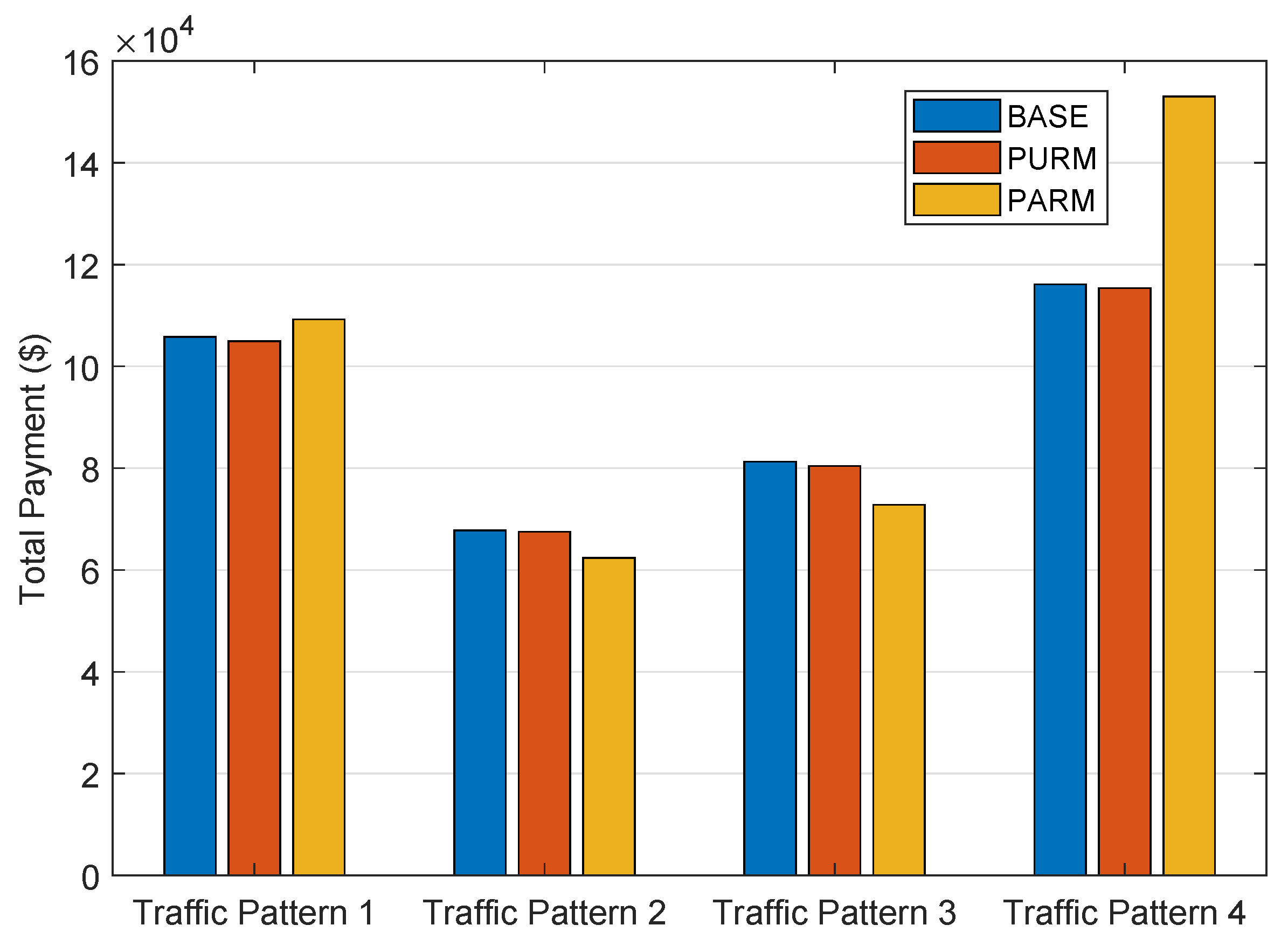

- Total bandwidth payment of the application providers;

- Average latency perceived by end users.
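As an illustration of how the metrics listed above could be computed from a request mapping, here is a minimal sketch. It assumes that congestion level is load divided by capacity, that "variation" is measured as the population standard deviation of the sites' congestion levels, and that payment is unit price times carried load. The paper's exact definitions may differ, and the function names `site_loads` and `evaluate` are hypothetical.

```python
from statistics import pstdev
from typing import Dict, List


def site_loads(x: List[List[float]], demand: List[float]) -> List[float]:
    """Load on each site given x[l][m], the portion of region l sent to site m."""
    n_sites = len(x[0])
    return [
        sum(demand[l] * x[l][m] for l in range(len(demand)))
        for m in range(n_sites)
    ]


def evaluate(x: List[List[float]], demand: List[float],
             latency: List[List[float]], price: List[float],
             capacity: List[float]) -> Dict[str, float]:
    """Compute the three metrics under the assumptions stated in the lead-in."""
    total_demand = sum(demand)
    if total_demand <= 0:
        raise ValueError("total demand must be positive")
    loads = site_loads(x, demand)
    levels = [load / cap for load, cap in zip(loads, capacity)]
    weighted_latency = sum(
        demand[l] * x[l][m] * latency[l][m]
        for l in range(len(demand))
        for m in range(len(price))
    )
    return {
        "congestion_variation": pstdev(levels),
        "total_payment": sum(p * load for p, load in zip(price, loads)),
        "average_latency": weighted_latency / total_demand,
    }


# Made-up example: each region is served entirely by its own site.
metrics = evaluate(
    x=[[1.0, 0.0], [0.0, 1.0]],
    demand=[10, 30],
    latency=[[10, 30], [30, 20]],
    price=[2.0, 1.0],
    capacity=[100, 100],
)
```

The average latency here is weighted by demand, so a region with more traffic contributes proportionally more to the result.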

5.2. Experiment Results

5.2.1. Results of LDP

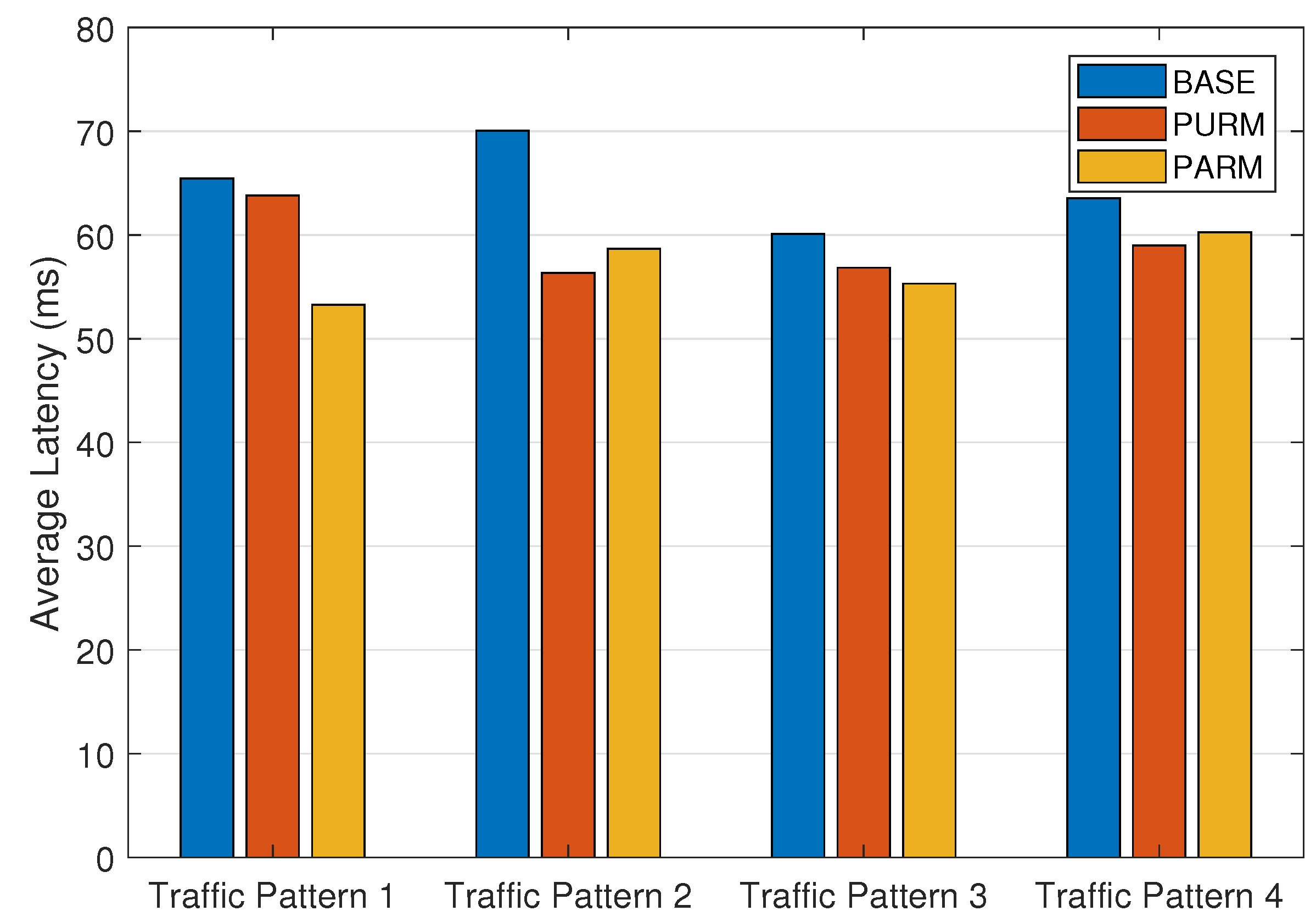

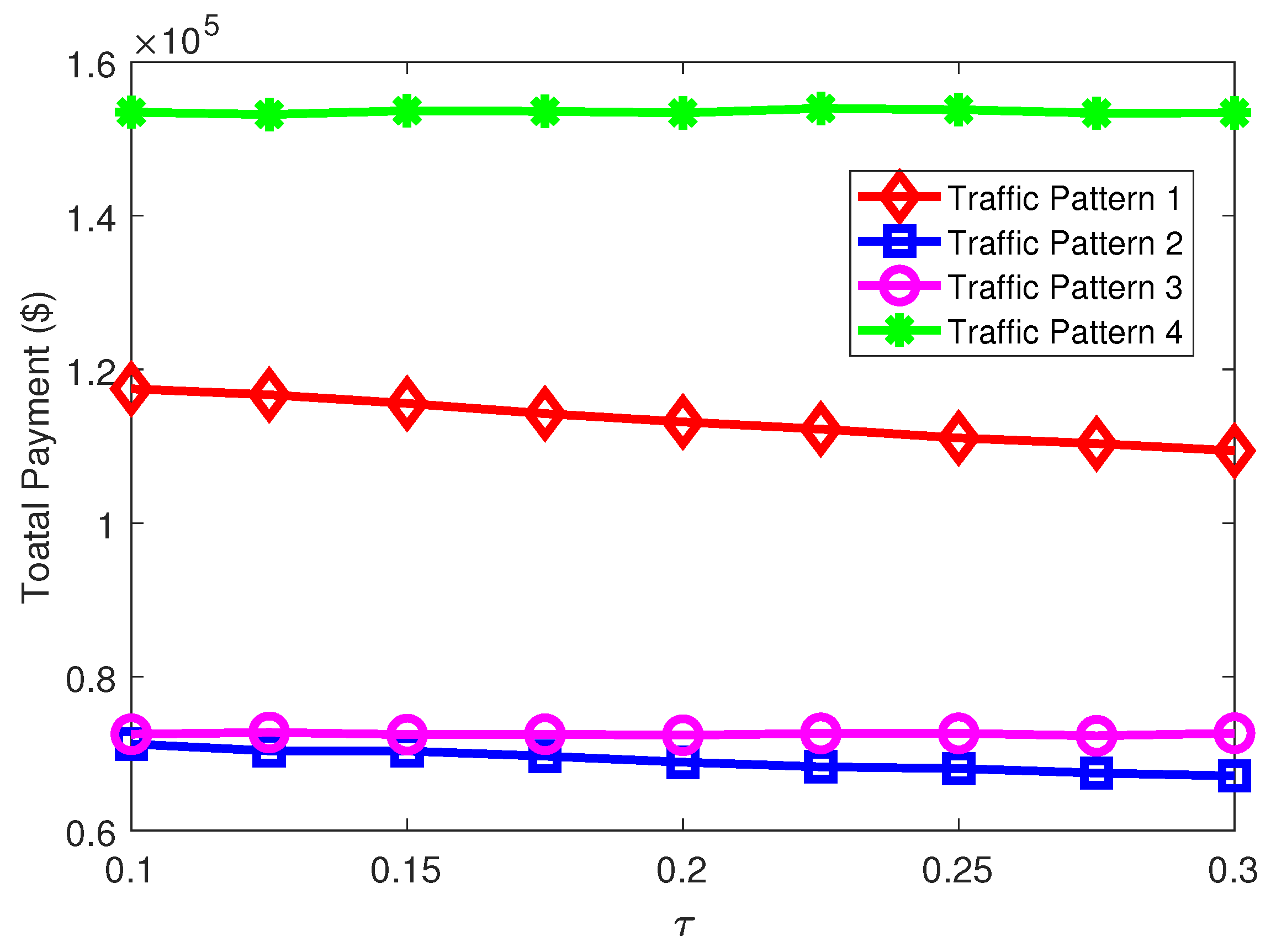

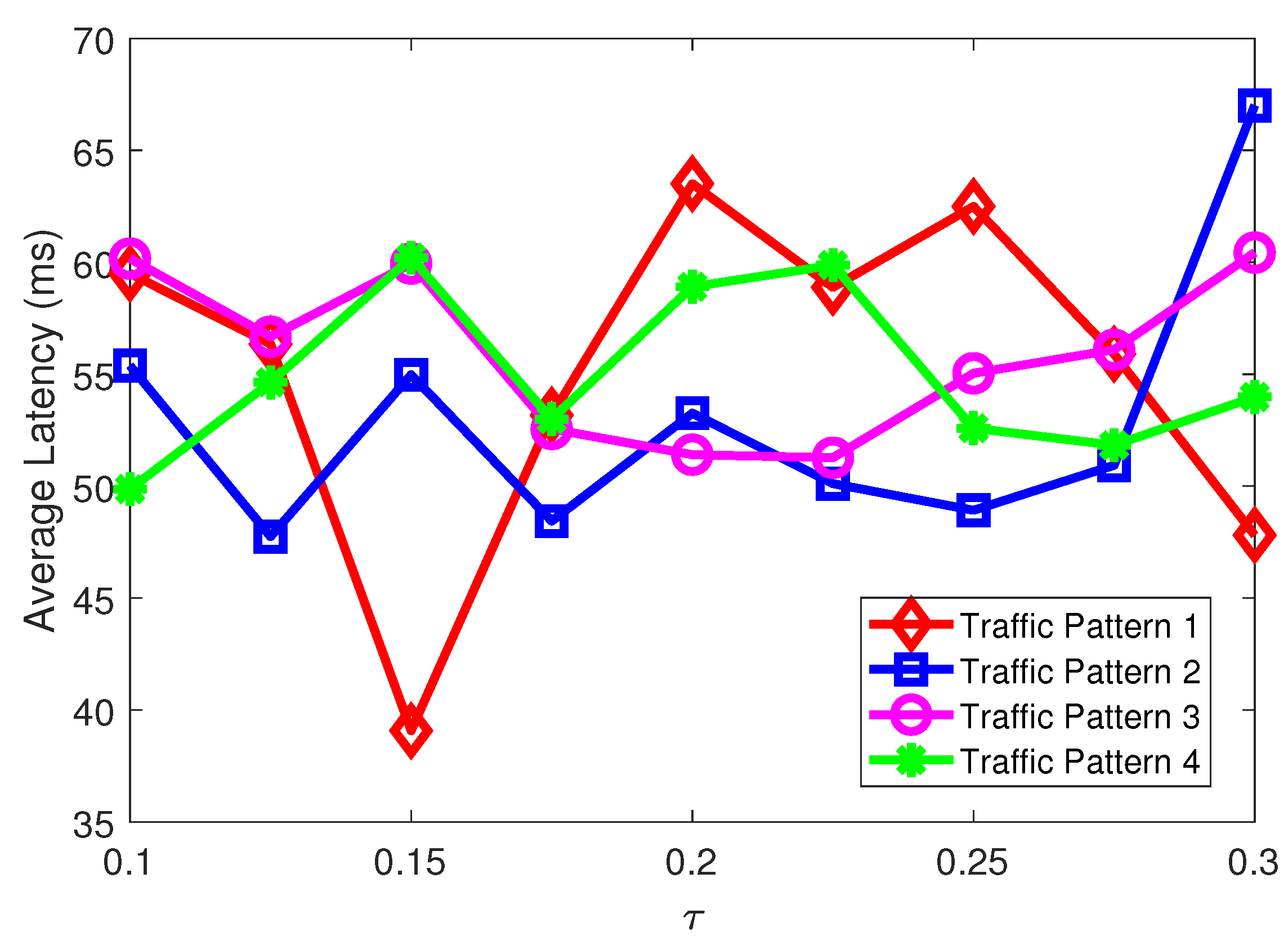

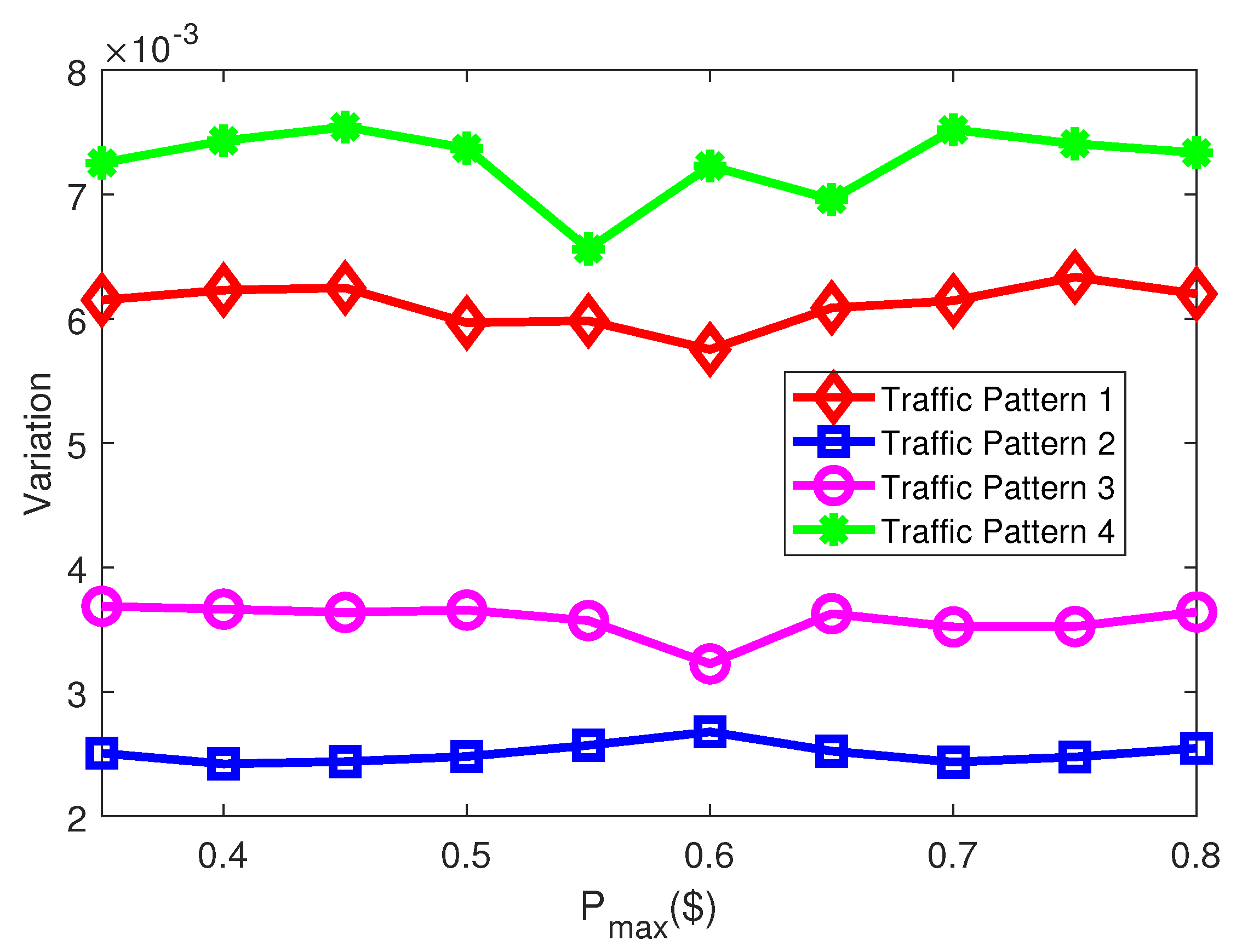

5.2.2. Results of PARM

- For traffic patterns with high variation, our design effectively reduces the variation and thus the telco CDN’s total bandwidth cost;

- For any traffic pattern, our design helps a telco CDN better match its revenue to its cost. Specifically, if the overall congestion level is relatively low, a discount is provided (compared with the fixed price of the traditional pricing strategy) to encourage more usage. Otherwise, the prices of the congested sites are set higher than the fixed price, so that the total revenue keeps up with the increasing bandwidth cost;

- The latency performance provided by PARM is similar to that provided by PURM, which minimizes latency only.

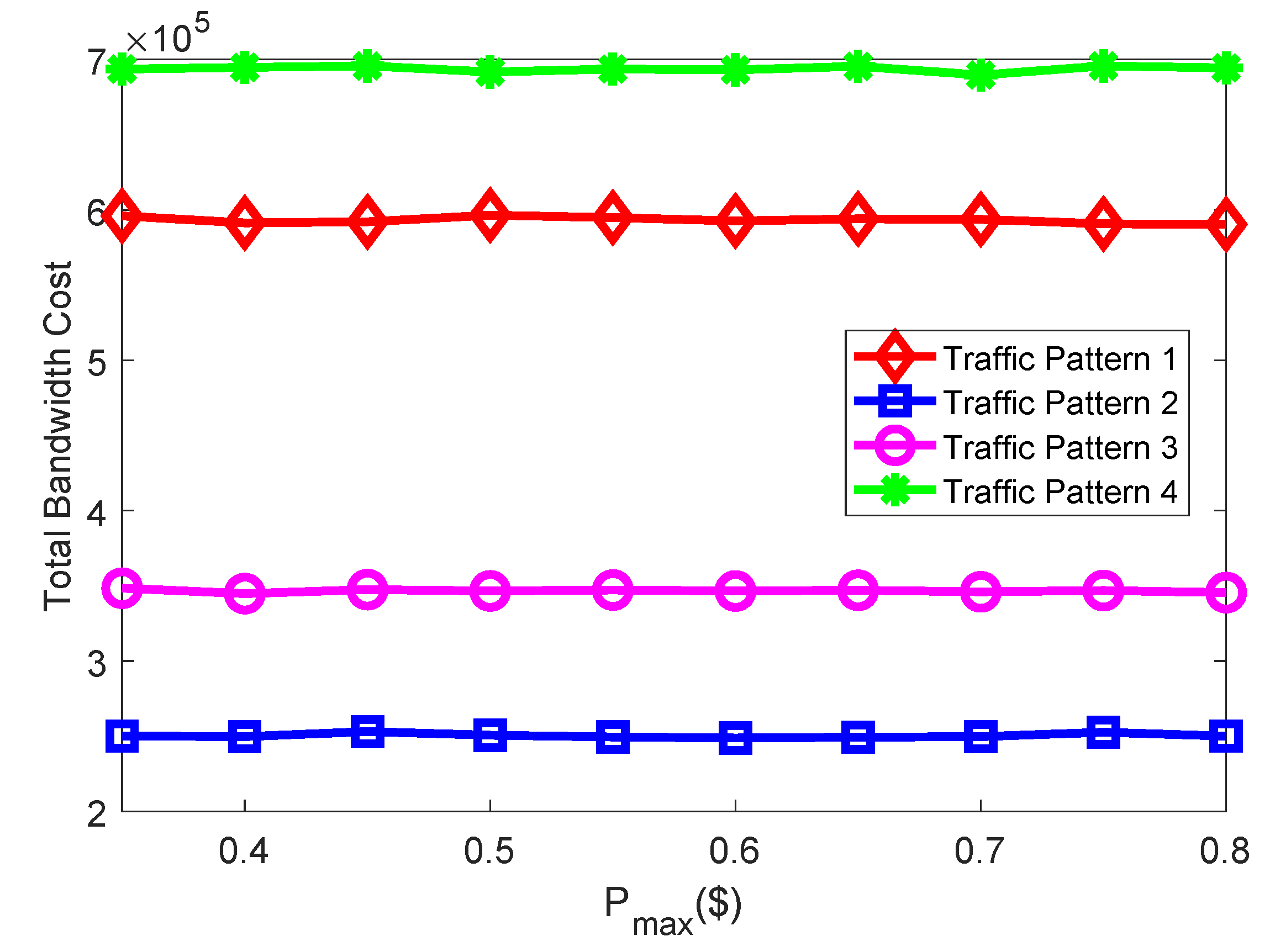

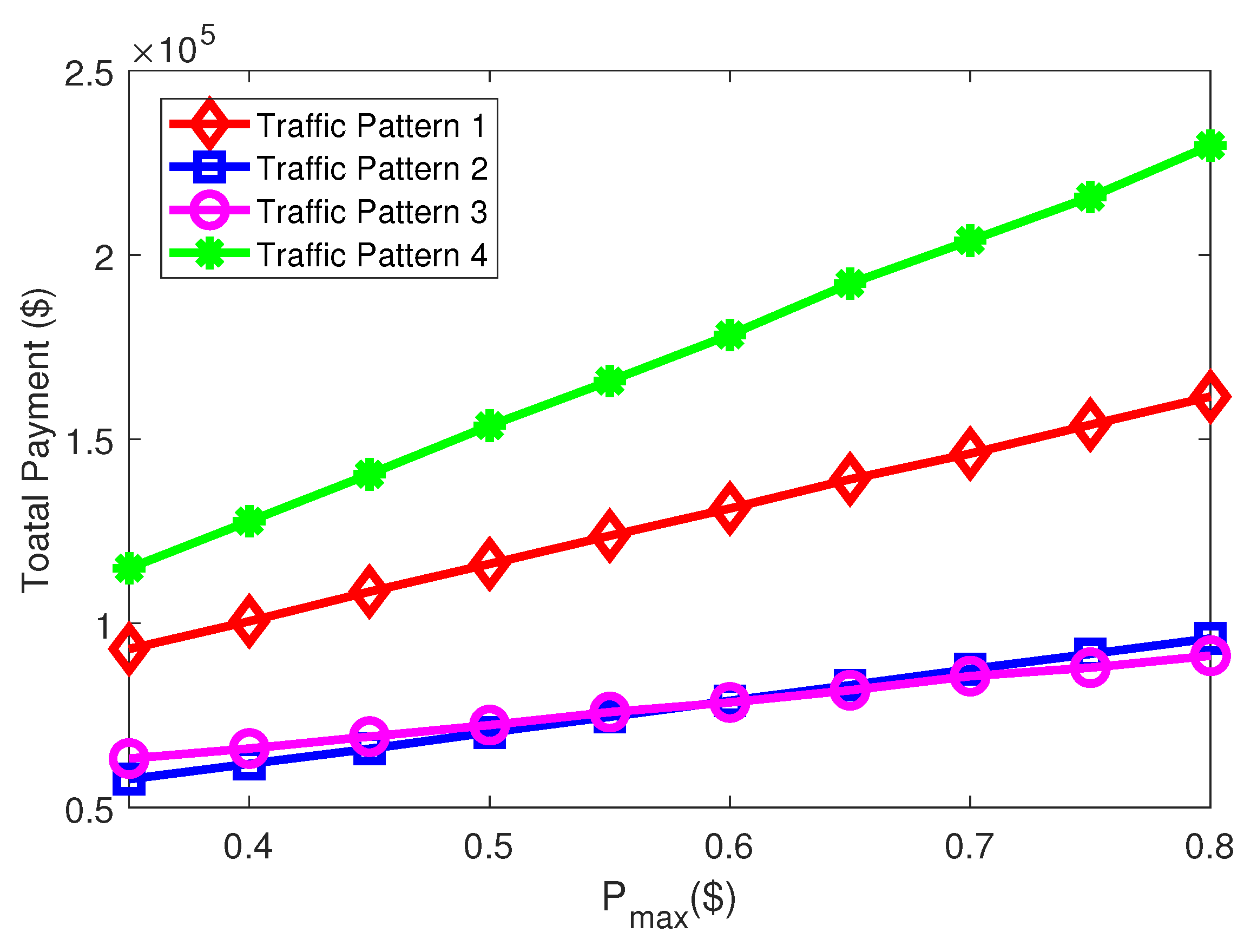

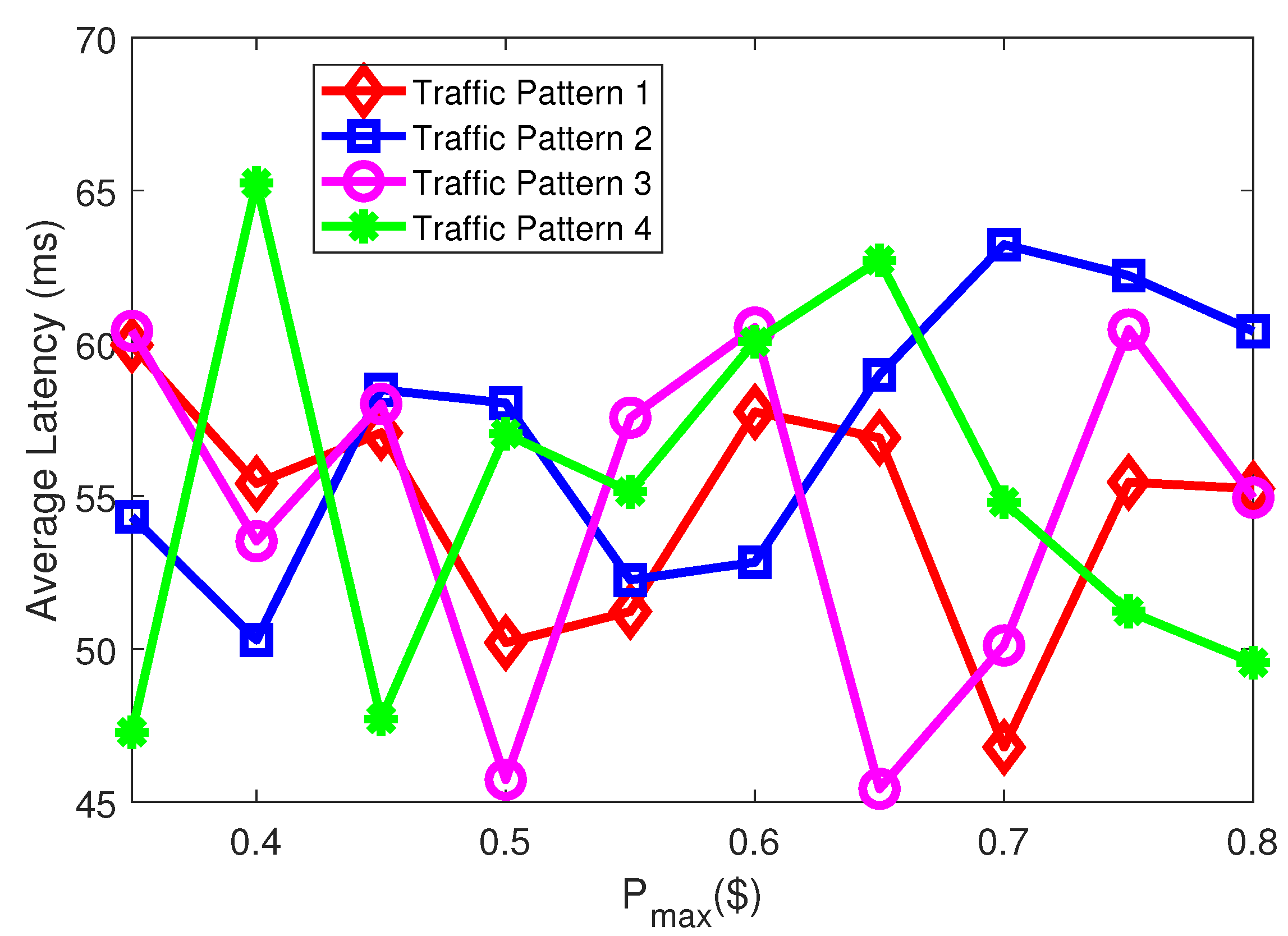

5.2.3. Impact of and on Performance of PARM

- is effective in affecting the sites’ variation and the total bandwidth cost. Note that if is too big, the total bandwidth cost will grow rapidly when sites become too congested (as Traffic Pattern 4 in our simulation). Therefore, the overall congestion level should be taken into account when setting ;

- is effective in affecting the application providers’ total payment. If the overall congestion level is high, a bigger will give more economic incentives to help a telco CDN match its increasing bandwidth cost. Certainly, the tolerance of CDN users (i.e., application providers) should be taken into account when a telco CDN is making the decision of raising ;

- Average latency does not have a direct relationship with or .

6. Discussions of Parameter Setting in Implementation Aspect

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Parameters or Variables | Meaning |
|---|---|
| M | Number of telco CDN sites |
| L | Number of user access regions |
| N | Set of applications that adopt the telco CDN service |
| | Bandwidth capacity of site m |
| | Historical traffic usage of site m |
| | Latency between region l and site m |
| | Expected response time of application n |
| | Request arrival rate of application n in region l |
| | Congestion level of site m |
| | Congestion level set of all CDN sites |
| | Reference congestion level to evaluate relative congestion level of a certain site |
| , | Congestion level thresholds in Algorithm 1 |
| | The lowest price of a site in LDP design |
| | The highest price of a site in LDP design |
| | Fixed price of a site in traditional pricing strategy |
| | Unit bandwidth price of site m |
| | Portion of application n requests from region l directed to site m |

| Index | User Numbers in Every Region |
|---|---|
| Traffic Pattern 1 | [110, 150, 90, 230, 320, 190, 430, 380, 450, 500] |
| Traffic Pattern 2 | [90, 255, 375, 160, 80, 150, 310, 75, 150, 175] |
| Traffic Pattern 3 | [230, 210, 190, 240, 220, 200, 205, 225, 245, 215] |
| Traffic Pattern 4 | [300, 320, 305, 310, 325, 330, 315, 290, 305, 325] |

| Parameter | Value |
|---|---|
| M | 10 |
| L | 10 |
| N | 3 |
| | [90 ms, 70 ms, 60 ms] |
| | [61.92, 39.84, 22.08] |
| | 0.3 |
| | 0.2 |
| | 0.5 |
| | 0.5 |
| , | |

| Case Name | Bandwidth Pricing Strategy | Request Mapping Approach |
|---|---|---|
| BASE | Fixed pricing as | Direct requests to the nearest site |
| PURM | Fixed pricing as | PARM with |
| PARM | Location-dependent pricing as | PARM with |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jin, M.; Luo, H.; Gao, S.; Feng, B. Joint Location-Dependent Pricing and Request Mapping in ICN-Based Telco CDNs For 5G. Future Internet 2019, 11, 125. https://doi.org/10.3390/fi11060125
