1. Introduction

Global societal development trends have brought an unprecedented change in the way mobile and wireless communication systems have been used in recent years [1,2]. The Mobile and wireless communications Enablers for Twenty-twenty (2020) Information Society (METIS) project predicted that, by 2020, global mobile traffic alone would be 33 times the 2010 traffic [1]. In addition to the huge number of human mobile devices, the Internet of Things (IoT) will dominate the ever-growing population of wireless devices. To transmit this flood of data traffic with a high level of mobility, such devices will require Radio Access Technologies that are not only more efficient but also pervasive [1,3]. Hence, future wireless communication systems need to implement the vision of fifth generation (5G) Radio Access Network (RAN) systems.

Undoubtedly, the 5G targets [4,5,6] are hugely challenging to fulfil. Hence, several key enabling technologies will be needed to meet the 5G requirements [7,8,9,10,11,12,13]. However, because 5G RANs combine this set of distinct technologies [14], the conventional Radio Interference and Resource Management (RIRM) schemes for 4G RANs, such as spectrum management, resource allocation, packet scheduling, handover control, user offloading, power control, and cell association, are not expected to be efficient and effective enough for a 5G RAN [15,16].

Moreover, recent studies estimate that approximately 4 billion people still lack access to the internet [17]. Providing global coverage with terrestrial infrastructure alone remains economically and technically infeasible, not only in rural and remote areas but also for passengers on high-speed trains, speed boats, or aircraft. Hence, the need for a satellite segment that complements the terrestrial network infrastructure in the envisaged 5G communication systems cannot be overemphasized. The satellite component will also be able to support new applications based on machine-type communications, and the new 5G system is expected to allow diverse RANs, including satellites, to operate together [18]. It is also envisaged that satellite systems can support all the use-case scenarios that their terrestrial counterparts will provide [19]. It is well recognized that one of the directions in the evolution of 5G is towards the IoT, and it is the concept of smart objects that drives the implementation of this new paradigm [20]. Satellite communication systems clearly have the potential to play a crucial role in this 5G framework, for several reasons.

First, smart objects are often scattered over a wide geographical area or located in remote or inaccessible places. This IoT scenario is referred to as the Internet of Remote Things (IoRT), and in it satellite communication systems provide a more cost-effective solution than terrestrial technologies. Second, several IoT and Machine-to-Machine (M2M) applications use group-based communications, in which smart objects are grouped according to the information they receive and the tasks they perform. When the same message must be delivered to many IoT/M2M devices, the volume of data transmitted in the network can be optimized, and the broadcast, multicast, and geocast capabilities of satellite systems support this kind of application naturally. Finally, critical applications such as smart grids and utilities, where network availability is crucial at a reasonable cost, require an alternative or redundant connection. Satellite systems remain a viable solution in this regard, since terrestrial redundant connections are prone to severe disruptions on the ground. In fact, IoT/M2M communication over a satellite air interface is already a reality and represents a great opportunity for the satellite industry [21].

Despite the prospects of the satellite component providing IoRT services in 5G networks, reservations remain, such as long propagation delay, power consumption, interoperation of the terrestrial and satellite segments, and channel modelling. However, recent advances in satellite communication technology have dispelled most of these misconceptions about the use of satellite systems, and most of these issues have been addressed with new techniques and/or architectural solutions. Satellite networks are nowadays capable of providing 0.9999 (99.99%) availability [21,22]. Nevertheless, as acknowledged in [21], achieving effective and reliable IoRT communications via satellite, just as for terrestrial networks, still requires solutions to several open issues. As identified in [21], these include, but are not limited to, RIRM functions such as spectrum management for IoRT devices in future 5G satellite networks.

An overview of the challenges of spectrum management for wireless IoT networks is presented, and a regulatory framework is recommended, in [23]. The Narrowband IoT (NB-IoT) system, a narrowband system based on LTE, is introduced for M2M communications in [24], while [25] investigates the effect of NB-IoT on an existing LTE cellular network. Although there are notable works on spectrum management in 4G and emerging 5G terrestrial networks, to the best of the authors' knowledge, no spectrum management investigation or framework has been proposed in the literature for IoRT in 5G satellite networks. The peculiar nature of satellite networks and IoRT, compared with terrestrial networks and general IoT devices, calls for a dedicated study of spectrum management in this scenario. On this premise, this study focuses on spectrum management for IoRT devices in 5G satellite networks. A new scheduling scheme is also introduced in order to investigate the considered spectrum management schemes.

The remainder of this paper is organized as follows. Section 2 presents the system description. The spectrum management schemes under investigation are presented in Section 3. Section 4 presents a new scheduling scheme and an existing one that are used for the investigation. The simulation model and numerical results are presented in Section 5 and Section 6, respectively. Section 7 concludes this paper.

2. System Description

5G satellite RANs are envisaged to use Orthogonal Frequency Division Multiple Access (OFDMA) and to support multiple-antenna transmission schemes. Satellite technology can adopt OFDMA because it allows flexible bandwidth operation with low-complexity receivers and easily exploits frequency selectivity [26]. The Multi-User Multiple-Input Multiple-Output (MU-MIMO) mode, which allows simultaneous transmission to multiple users, is considered. The 5G New Radio (NR) base station, termed gNB, is expected to be suitable for the satellite network scenario as well and is assumed to be located at the earth station. Both the satellite gNB and the User Equipment (UE) are considered to have multiple antennas, with a baseline 2 × 2 MIMO configuration. The 5G NR modulation technique and frame structure are designed to be compatible with LTE, and the 5G NR duplex frequency configuration allows alignment among the 5G NR, NB-IoT, and LTE-M subcarrier grids [27]. Hence, 5G NR UEs are expected not only to operate in 5G satellite networks but also to coexist with satellite LTE networks.

2.1. Satellite Air Interface

Several types of satellite systems could be adopted for this work, including Low Earth Orbit (LEO), Medium Earth Orbit (MEO), and Geostationary Equatorial Orbit (GEO) satellites, each with its own challenges. A GEO satellite can maintain a constant transmission delay across multiple sites, and a single GEO satellite can provide coverage for dense spotbeams [28]. However, GEO satellites suffer from long propagation delay, although new technologies such as edge caching can be used to mitigate it. A LEO satellite offers a lower propagation delay, but it cannot achieve a constant delay because of its constant movement and smaller coverage area. Thus, while LEO satellites may be preferred for their low propagation delay, GEO satellites are preferred for multimedia communications because of their high bandwidth and constant delay. GEO satellites have also been used to implement 5G satellite networks [28]. The scenario considered in this paper investigates coexistence, in terms of spectrum management, of broadband and IoRT use cases. On this premise, a transparent GEO satellite has been adopted for this work.

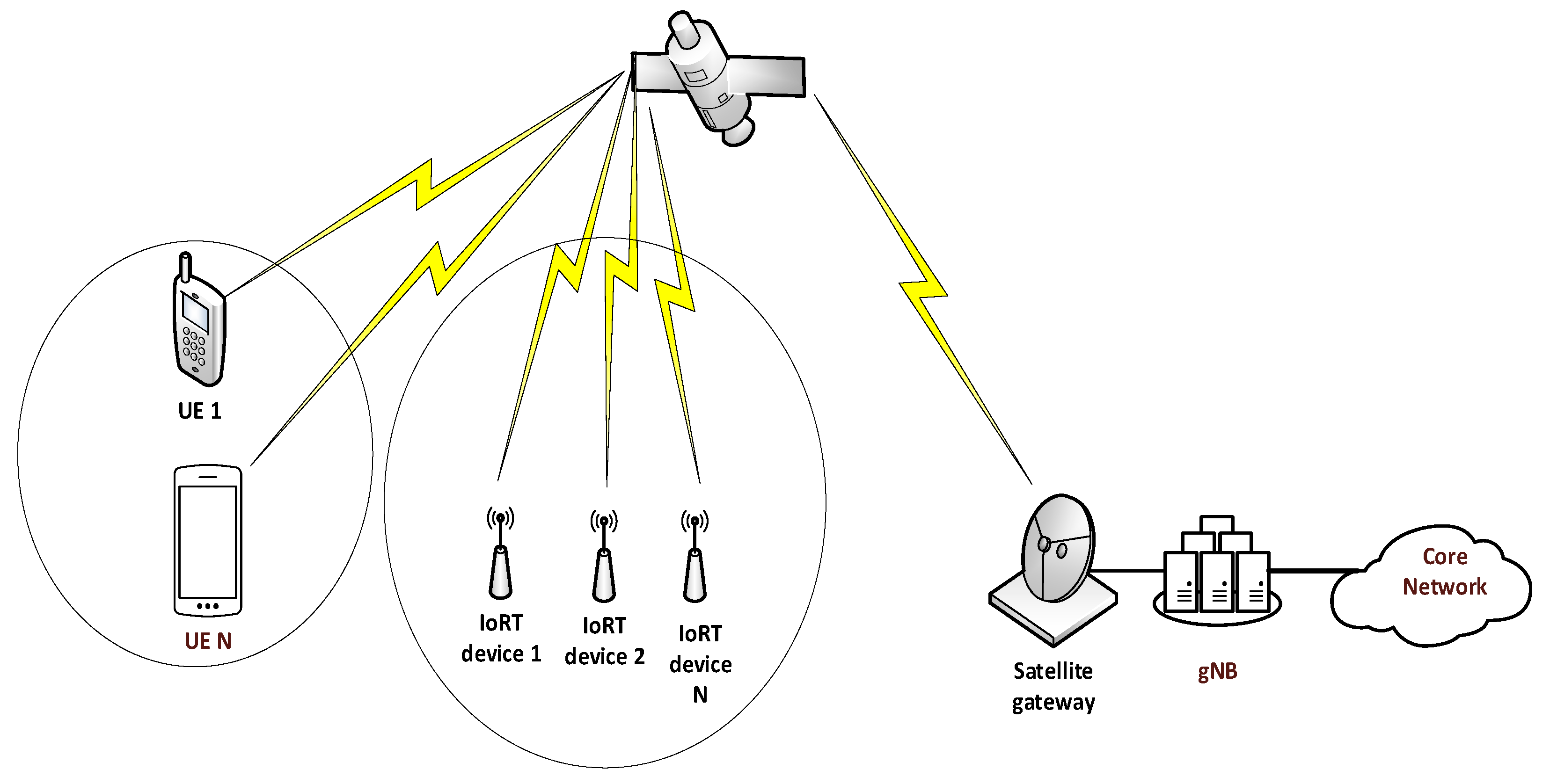

As shown in Figure 1, the satellite gNodeB located on the earth connects to UEs via the transparent GEO satellite. This setup supports simultaneous transmissions from the satellite gNodeB, via the transparent GEO satellite, to different UEs [29]. The transmission setup is envisaged to include feedback from the UE to the satellite gNodeB, not only for link adaptation but also to determine the transmission rate [30]. Hence, each UE is assumed to send Channel Quality Indicator (CQI) reports at certain intervals via the transparent GEO satellite to the gNodeB at the earth station. The CQI reported at the satellite gNodeB can then be used for transmission and scheduling.
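To give a sense of how old a CQI report is when the gNodeB acts on it, the sketch below computes the one-way propagation time of the UE to GEO satellite to earth-station path from standard slant-range geometry. It ignores processing, queuing, and reporting-interval delays, and the elevation angles are illustrative inputs rather than parameters of this study. At the sub-satellite point each hop is about 35,786 km (roughly 119 ms), so the feedback path alone adds close to 240 ms.

```python
import math

EARTH_RADIUS_KM = 6378.137        # equatorial Earth radius
GEO_ALTITUDE_KM = 35786.0         # nominal GEO altitude above the equator
SPEED_OF_LIGHT_KM_S = 299792.458


def slant_range_km(elevation_deg: float) -> float:
    """Distance from a ground terminal to a GEO satellite seen at the given elevation angle."""
    e = math.radians(elevation_deg)
    r_orbit = EARTH_RADIUS_KM + GEO_ALTITUDE_KM
    return math.sqrt(r_orbit**2 - (EARTH_RADIUS_KM * math.cos(e))**2) - EARTH_RADIUS_KM * math.sin(e)


def cqi_feedback_delay_s(ue_elev_deg: float, gw_elev_deg: float) -> float:
    """Propagation time of one CQI report: UE -> transparent GEO satellite -> gNodeB earth station."""
    return (slant_range_km(ue_elev_deg) + slant_range_km(gw_elev_deg)) / SPEED_OF_LIGHT_KM_S


# Illustrative elevation angles (assumed equal for the UE and the earth station).
for elev in (90, 45, 20):
    print(f"elevation {elev:>2} deg: CQI is at least {1e3 * cqi_feedback_delay_s(elev, elev):.1f} ms old")
```

Lower elevation angles lengthen both hops, so UEs at the edge of coverage report even staler channel information.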

At the MAC layer of the satellite gNodeB, the scheduling module schedules UEs and IoRT devices, and the scheduled UEs and IoRT devices are mapped to the available resource blocks at every Transmission Time Interval (TTI). The number of available resource blocks depends on the available spectrum, which in turn depends on how the spectrum is managed or shared: the size of the spectrum used and the number of antennas deployed determine the number of resource blocks available at every TTI.
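The dependence of the per-TTI resource budget on bandwidth and antennas can be sketched as follows. This assumes LTE-style numerology (180 kHz resource blocks, with the channel-bandwidth-to-RB mapping of 3GPP TS 36.101), which the text suggests through NR/LTE compatibility but does not state, and it assumes that each of the min(n_tx, n_rx) spatial layers reuses every RB. The function name is hypothetical.

```python
# Resource blocks per channel bandwidth for LTE-style numerology (3GPP TS 36.101).
RBS_PER_BANDWIDTH_MHZ = {1.4: 6, 3: 15, 5: 25, 10: 50, 15: 75, 20: 100}


def schedulable_rbs_per_tti(bandwidth_mhz: float, n_tx: int = 2, n_rx: int = 2) -> int:
    """RBs the scheduler can hand out in one TTI.

    Assumption (illustrative only): every one of the min(n_tx, n_rx) spatial
    layers of the MIMO link reuses all RBs, so the budget scales with the layers.
    """
    if bandwidth_mhz not in RBS_PER_BANDWIDTH_MHZ:
        raise ValueError(f"unsupported bandwidth: {bandwidth_mhz} MHz")
    layers = min(n_tx, n_rx)
    return RBS_PER_BANDWIDTH_MHZ[bandwidth_mhz] * layers


print(schedulable_rbs_per_tti(15))   # 15 MHz with the baseline 2 x 2 configuration
```

A real MU-MIMO scheduler would also have to find spatially compatible users before reusing an RB, so this count is an upper bound.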

2.2. Channel Model

The channel model that is considered in this paper is an empirical-stochastic model for Land Mobile Satellite MIMO (LMS-MIMO) used in [

31,

32]. The stochastic properties of this channel model are obtained from the S-band tree-lined road campaign’s measurement done in a suburban area using dual circular polarizations. The Markov model in

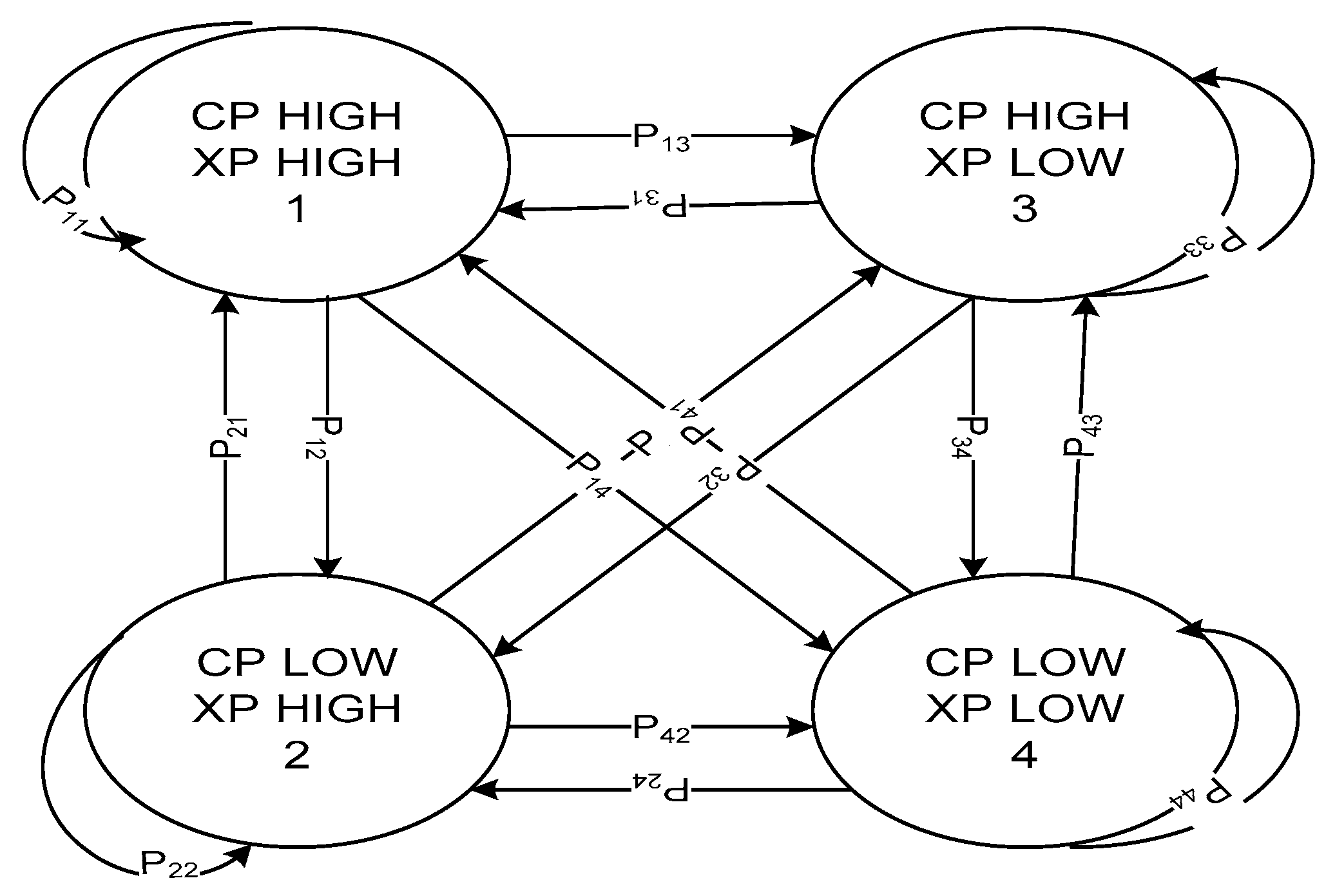

Figure 2 is used to determine the large-scale fading (shadowing) states, which varies between low and high shadowing values for both co-polar and cross-polar channels. The four possible states in the Markov model are due to the high or low shadowing state of both the cross-polar (XP) and co-polar (CP) channels. The 4 × 4 transition matrix, P, below obtained from the measurement in [

33] is used to determine the next possible channel state. State 1 is CP High XP High, State 2 is CP Low XP High, State 3 is CP High XP Low and State 4 is CP Low XP Low. The top right corner value of 0.1037 is the probability of “CP High, XP High” to “CP Low, XP Low”.
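Drawing the next shadowing state from a row of P is a plain discrete Markov-chain step, sketched below. Only the entry 0.1037 (state 1 to state 4) comes from the text; every other entry of the matrix is an invented placeholder chosen so that each row sums to 1, and must be replaced by the measured matrix of [33] before use.

```python
import random
from typing import List, Sequence

STATES = ("CP High, XP High", "CP Low, XP High", "CP High, XP Low", "CP Low, XP Low")

# PLACEHOLDER matrix: only P[0][3] = 0.1037 is taken from the text.
P = [
    [0.7000, 0.1000, 0.0963, 0.1037],
    [0.1000, 0.7000, 0.1000, 0.1000],
    [0.1000, 0.1000, 0.7000, 0.1000],
    [0.1000, 0.1000, 0.1000, 0.7000],
]


def next_state(current: int, P: Sequence[Sequence[float]], rng: random.Random) -> int:
    """Draw the next shadowing state (0-based index) using row `current` of P as weights."""
    return rng.choices(range(len(P)), weights=P[current], k=1)[0]


def simulate(P: Sequence[Sequence[float]], steps: int, start: int = 0, seed: int = 1) -> List[int]:
    """Return a state path of length steps + 1, starting from `start`."""
    for row in P:
        if abs(sum(row) - 1.0) > 1e-9:
            raise ValueError("each row of P must sum to 1")
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        path.append(next_state(path[-1], P, rng))
    return path


print([STATES[s] for s in simulate(P, steps=10)])
```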

The small-scale fading is modelled using a Ricean distribution, and the Ricean fading of each MIMO sub-channel is generated using Ricean factors. Details of how the large-scale and small-scale fading are obtained are given in [33]. The path loss (in dB) at 2 GHz in the GEO satellite channel can be computed as follows:
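As a rough illustration of two of the loss terms in this paragraph, the sketch below assumes the standard free-space form for the path loss (92.45 dB is 20·log10(4π/c) with frequency in GHz and distance in km) and a unit-power Ricean model for the small-scale fading. The K-factor and the 35,786 km sub-satellite distance are illustrative values, not the parameters of [33].

```python
import cmath
import math
import random


def ricean_coefficient(k_factor_db: float, rng: random.Random) -> complex:
    """One unit-power Ricean coefficient: line-of-sight phasor plus complex Gaussian scatter."""
    k = 10 ** (k_factor_db / 10)                                   # dB -> linear Ricean factor
    los = math.sqrt(k / (k + 1)) * cmath.exp(1j * rng.uniform(0, 2 * math.pi))
    nlos = math.sqrt(1 / (k + 1)) * complex(rng.gauss(0, 1), rng.gauss(0, 1)) / math.sqrt(2)
    return los + nlos


def free_space_path_loss_db(freq_ghz: float, distance_km: float) -> float:
    """Free-space path loss in dB (f in GHz, d in km)."""
    return 92.45 + 20 * math.log10(freq_ghz) + 20 * math.log10(distance_km)


rng = random.Random(7)
H = [[ricean_coefficient(10.0, rng) for _ in range(2)] for _ in range(2)]   # hypothetical K = 10 dB, 2 x 2
print([[round(abs(h), 3) for h in row] for row in H])
print(f"L_FS at 2 GHz over 35786 km: {free_space_path_loss_db(2.0, 35786.0):.1f} dB")
```

The LOS and scattered parts are weighted by K/(K+1) and 1/(K+1) in power, so the average channel gain stays at 1 and all mean attenuation is carried by the other loss terms.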

The total loss considered in this channel model includes large-scale fading, small-scale fading, the path loss ($L_{FS}$), and polarization loss. The inter-spotbeam interference, $I$, is also considered in this channel model; it results from the power received from satellite gNodeBs sharing the same frequency. Details of how the interference is computed, together with the channel parameters considered, are provided in [31]. The Signal-to-Interference-plus-Noise Ratio (SNIR) for each subchannel can be expressed in dB as follows:
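A common pitfall when working in dB is adding noise and interference directly in dB. The sketch below, which is an illustration rather than the exact expression of [31], subtracts the loss terms named above from a hypothetical EIRP and then sums thermal noise and inter-spotbeam interference in linear units before converting back. All link-budget numbers and function names are assumptions.

```python
import math
from typing import Sequence

BOLTZMANN_DBW_PER_K_HZ = -228.6   # 10*log10(Boltzmann constant)


def db_to_lin(x_db: float) -> float:
    return 10 ** (x_db / 10)


def noise_power_dbw(noise_temp_k: float, bandwidth_hz: float) -> float:
    """Thermal noise power N = k*T*B, in dBW."""
    return BOLTZMANN_DBW_PER_K_HZ + 10 * math.log10(noise_temp_k) + 10 * math.log10(bandwidth_hz)


def received_power_dbw(eirp_dbw: float, rx_gain_dbi: float, path_loss_db: float,
                       shadowing_db: float, small_scale_db: float, polarization_db: float) -> float:
    """Received power after subtracting the loss terms (all in dB; a negative loss is a gain)."""
    return eirp_dbw + rx_gain_dbi - (path_loss_db + shadowing_db + small_scale_db + polarization_db)


def snir_db(rx_power_dbw: float, noise_dbw: float, interference_dbw: Sequence[float]) -> float:
    """SNIR in dB: noise and interference powers are added in linear units, then converted back."""
    denom = db_to_lin(noise_dbw) + sum(db_to_lin(i) for i in interference_dbw)
    return rx_power_dbw - 10 * math.log10(denom)


# HYPOTHETICAL link budget for one 180 kHz subchannel at 290 K, with one interferer 3 dB below noise.
p_rx = received_power_dbw(60.0, 0.0, 189.5, 3.0, 1.0, 0.5)
n = noise_power_dbw(290.0, 180e3)
print(f"SNIR = {snir_db(p_rx, n, [n - 3.0]):.2f} dB")
```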

The CQI is determined from the computed SNIR in (3) using the SNIR-CQI mapping used in [31] for a Block Error Rate (BLER) of $10^{-3}$. This SNIR-CQI mapping can be expressed as follows:
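An SNIR-to-CQI mapping of this kind is usually a threshold table: the reported CQI is the highest index whose SNIR threshold is met. The sketch below uses the usual 4-bit CQI range (0 meaning out of range, 1 to 15 usable), but the threshold values themselves are hypothetical placeholders, not the BLER = 10^-3 mapping of [31].

```python
import bisect
from typing import Sequence

# HYPOTHETICAL SNIR thresholds (dB) for CQI 1..15, in increasing order.
CQI_THRESHOLDS_DB = [-6.0, -4.0, -2.0, 0.0, 2.0, 4.0, 6.0, 8.0,
                     10.0, 11.5, 13.0, 14.5, 16.0, 17.5, 19.0]


def snir_to_cqi(snir_db: float, thresholds: Sequence[float] = CQI_THRESHOLDS_DB) -> int:
    """Highest CQI whose threshold is <= snir_db; 0 means no transmission is possible."""
    return bisect.bisect_right(thresholds, snir_db)


for s in (-10.0, 0.5, 12.0, 25.0):
    print(s, snir_to_cqi(s))
```

Using `bisect_right` keeps the lookup logarithmic in the table size and treats an SNIR exactly on a threshold as meeting it.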

2.3. Traffic Model

IoRT and video streaming traffic are considered in this paper. In the mixed-traffic setup, 10 video streaming users are considered for every 50 IoRT devices, and this ratio is kept as the number of IoRT devices increases (e.g., 100 IoRT devices with 20 video streaming users).

The IoRT traffic is modelled using a Constant Bit Rate (CBR) traffic model with a packet size of 400 bytes and an inter-packet interval of 40 ms [34], while the realistic video trace files provided in [35] are used to model the video streaming traffic. The video sequence, at 25 frames per second, has been compressed using the H.264 standard [34], and the mean bit rate of the video source is 242 kbps.
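The traffic parameters above fix the offered load: one 400-byte packet every 40 ms is 80 kbps per IoRT device, and each video user adds 242 kbps on average. The sketch below tabulates the aggregate load for the 10:50 video-to-IoRT ratio; rounding down for IoRT counts that are not multiples of 50 is an assumption.

```python
from typing import Tuple

IORT_PACKET_BYTES = 400       # CBR packet size
IORT_INTERVAL_S = 0.040       # one packet every 40 ms
VIDEO_MEAN_KBPS = 242.0       # mean bit rate of the H.264 video trace


def iort_rate_kbps() -> float:
    """Per-device CBR rate: 400 bytes * 8 bits / 40 ms."""
    return IORT_PACKET_BYTES * 8 / IORT_INTERVAL_S / 1e3


def offered_load_mbps(n_iort: int) -> Tuple[float, float]:
    """(IoRT load, video load) in Mbps, with 10 video users per 50 IoRT devices."""
    n_video = n_iort * 10 // 50   # rounded down for non-multiples of 50 (assumption)
    return n_iort * iort_rate_kbps() / 1e3, n_video * VIDEO_MEAN_KBPS / 1e3


for n in range(50, 301, 50):
    iort, video = offered_load_mbps(n)
    print(f"{n:3d} IoRT devices: {iort:5.2f} + {video:5.2f} = {iort + video:5.2f} Mbps offered")
```

For example, 50 IoRT devices offer 4 Mbps of IoRT traffic plus 2.42 Mbps of video, and 300 devices offer 24 Mbps plus 14.52 Mbps.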

3. Spectrum Management

Massive numbers of IoRT devices will form part of the 5G network ecosystem despite the limited spectrum, and in a satellite network it remains unclear which spectrum management solution is best for this scenario. In this paper, two spectrum management schemes are investigated in order to determine which one ensures good network performance for IoRT devices without compromising human-to-human communications.

3.1. Dedicated IoRT Spectrum

This spectrum management scheme dedicates two separate frequency bands to machine-type communications (IoRT devices) and human-to-human communications (video streaming, web browsing, etc.), respectively. A relatively small portion of the bandwidth is dedicated to IoRT devices and the remaining bandwidth to human-type communications. Hence, machine-type communications are always guaranteed this dedicated but relatively small frequency band, i.e., a smaller proportion of the Resource Blocks (RBs).

| Dedicated IoRT spectrum management |
| --- |
| 1. Initialize $B_{IoRT} = B_T - B_H$ |
| 2. Initialize $S_{IoRT}$ = … and $S_n$ = … for all selected IoRT $n$ |
| 3. While … |
| 4. For IoRT $i = 1$ to $I$: compute the scheduling metric for each RB $j$ |
| 5. … |
| 6. … |
| 7. … |
| end |

where $B_{IoRT}$ is the bandwidth dedicated to IoRT, $B_T$ is the total bandwidth, $B_H$ is the bandwidth dedicated to human-type communications, $S_{IoRT}$ is the set of resource blocks available to IoRT devices, $S_n$ is the set of resource blocks assigned to the scheduled (selected) IoRT device $n$, $I$ is the number of IoRT devices, and the scheduling metric is computed for each IoRT device $i$ and RB $j$.
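A minimal sketch of the dedicated scheme, assuming a per-RB greedy rule in which each RB goes to the device with the largest scheduling metric (a common way to complete this kind of loop, not necessarily the authors' exact steps). The split B_IoRT = B_T − B_H is modelled by reserving the last RBs for IoRT devices. The device names and `toy_metric` are hypothetical; in the paper the metric would come from MLWDF or CAQDB.

```python
from typing import Callable, Dict, List, Sequence

Metric = Callable[[str, int], float]


def greedy_allocate(rbs: Sequence[int], devices: Sequence[str], metric: Metric) -> Dict[str, List[int]]:
    """Give each RB to the device with the largest metric on it (ties go to the first device)."""
    allocation: Dict[str, List[int]] = {d: [] for d in devices}
    if not devices:
        return allocation
    for rb in rbs:
        best = max(devices, key=lambda d: metric(d, rb))
        allocation[best].append(rb)
    return allocation


def dedicated_allocation(total_rbs: int, human_rbs: int, iort_devices: Sequence[str],
                         human_devices: Sequence[str], metric: Metric) -> Dict[str, List[int]]:
    """Dedicated scheme: RBs [human_rbs, total_rbs) form the IoRT band, the rest the human band."""
    alloc = greedy_allocate(range(human_rbs, total_rbs), iort_devices, metric)   # IoRT band only
    alloc.update(greedy_allocate(range(human_rbs), human_devices, metric))       # human band only
    return alloc


def toy_metric(device: str, rb: int) -> float:
    """HYPOTHETICAL metric: video always looks far more urgent than IoRT."""
    if device.startswith("video"):
        return 100.0
    return 1.0 + (rb + int(device[-1])) % 2   # IoRT devices alternate their preferred RBs


alloc = dedicated_allocation(25, 20, ["iort0", "iort1"], ["video0"], toy_metric)
print({d: rbs for d, rbs in alloc.items()})
```

Even though the video metric dominates, the IoRT devices still receive all five RBs of their band, which is the guarantee this scheme provides.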

3.2. Shared Spectrum for Mixed Use Case or Traffic

This spectrum management scheme allows the total available bandwidth to be shared by human-type and machine-type communications. Human mobile devices and IoRT devices compete for the same pool of resource blocks, and, depending on the scheduling metric, each available resource block can be assigned to either an IoRT device or a human mobile device. The scheme therefore does not guarantee resource blocks to IoRT devices, so the scheduling algorithm plays a vital role in ensuring that IoRT devices are treated fairly in resource allocation. On the other hand, spectrum left unused by human-type communications can be used by machine-type communications.

| Shared spectrum management |
| --- |
| 1. Initialize $B_T$ |
| 2. Initialize $S_T$ = … and $S_n$ = … for all selected UEs (including IoRT devices) $n$ |
| 3. While … |
| 4. For UE $i = 1$ to $I$: compute the scheduling metric for each RB $j$ |
| 5. … |
| 6. … |
| 7. … |
| end |

where $B_T$ is the total bandwidth, $S_T$ is the set of resource blocks available to UEs, including IoRT devices, $S_n$ is the set of resource blocks assigned to the scheduled (selected) UE $n$, $I$ is the number of UEs, and the scheduling metric is computed for each UE $i$ and RB $j$.
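The two properties of the shared scheme described above, no guaranteed RBs for IoRT and reuse of spectrum that video leaves idle, can be seen in a minimal greedy sketch over a single RB pool. It assumes that each RB goes to the device with the largest metric and that a metric of 0 means the device has nothing to send; `make_metric` and the device names are hypothetical.

```python
from typing import Callable, Dict, List, Sequence

Metric = Callable[[str, int], float]


def shared_allocation(total_rbs: int, devices: Sequence[str], metric: Metric) -> Dict[str, List[int]]:
    """Shared scheme: every device (UE or IoRT) competes for every RB of B_T."""
    allocation: Dict[str, List[int]] = {d: [] for d in devices}
    for rb in range(total_rbs):
        # A metric of 0 is taken to mean "nothing to send"; such devices are skipped.
        candidates = [d for d in devices if metric(d, rb) > 0]
        if candidates:
            best = max(candidates, key=lambda d: metric(d, rb))
            allocation[best].append(rb)
    return allocation


def make_metric(video_backlogged: bool) -> Metric:
    """HYPOTHETICAL metric: a backlogged video flow always outranks IoRT."""
    def metric(device: str, rb: int) -> float:
        if device.startswith("video"):
            return 100.0 if video_backlogged else 0.0
        return 1.0
    return metric


devices = ["iort0", "iort1", "video0"]
busy = shared_allocation(25, devices, make_metric(video_backlogged=True))
idle = shared_allocation(25, devices, make_metric(video_backlogged=False))
print({d: len(r) for d, r in busy.items()}, {d: len(r) for d, r in idle.items()})
```

When video is backlogged, IoRT devices receive nothing; when it is idle, IoRT devices take the whole pool. Which outcome prevails in practice depends entirely on the scheduling metric.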

6. Simulation Results

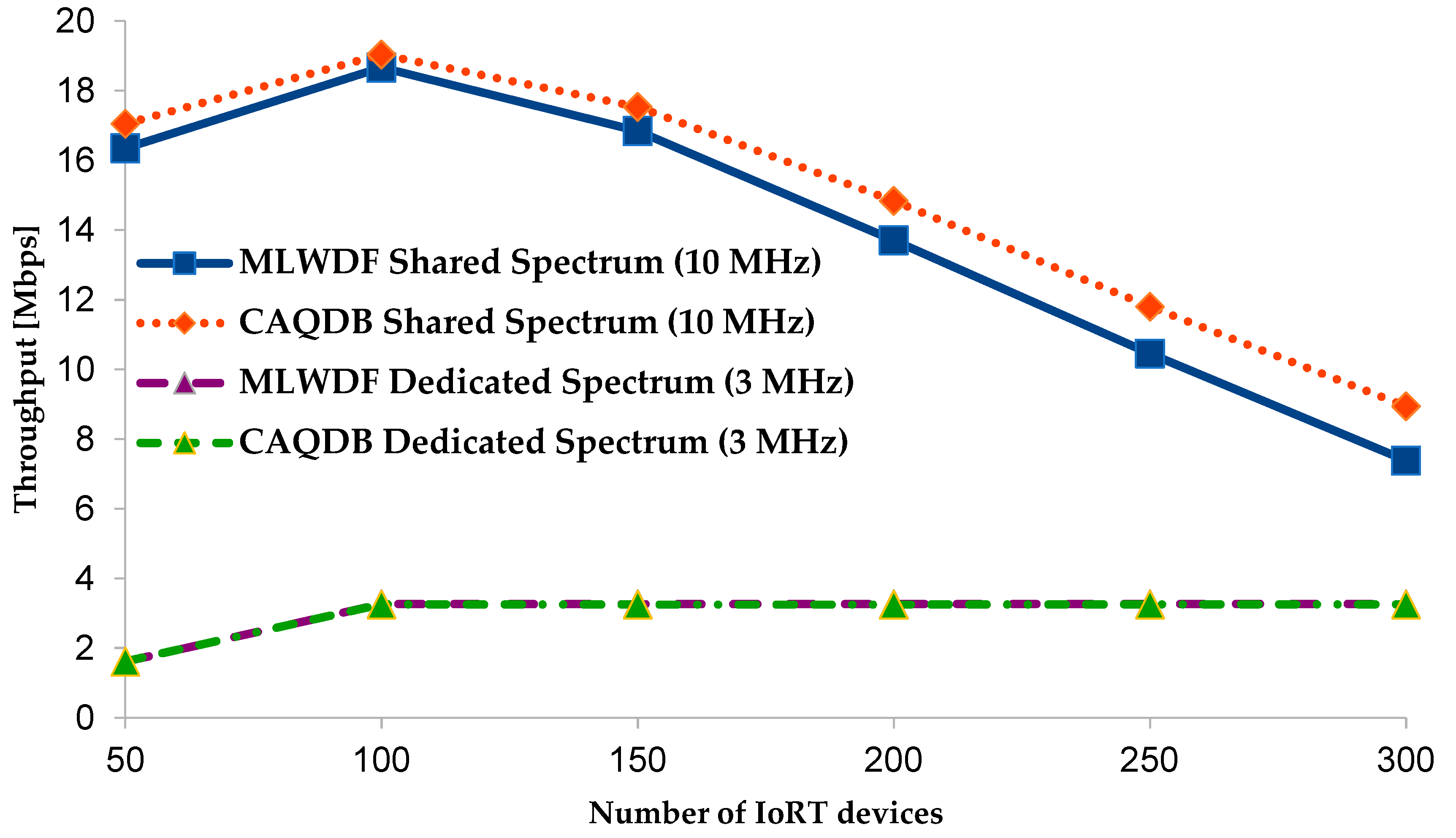

The results obtained from the simulations show the performances of the two spectrum management schemes using two different packet scheduling algorithms (MLWDF and CAQDB). As shown in

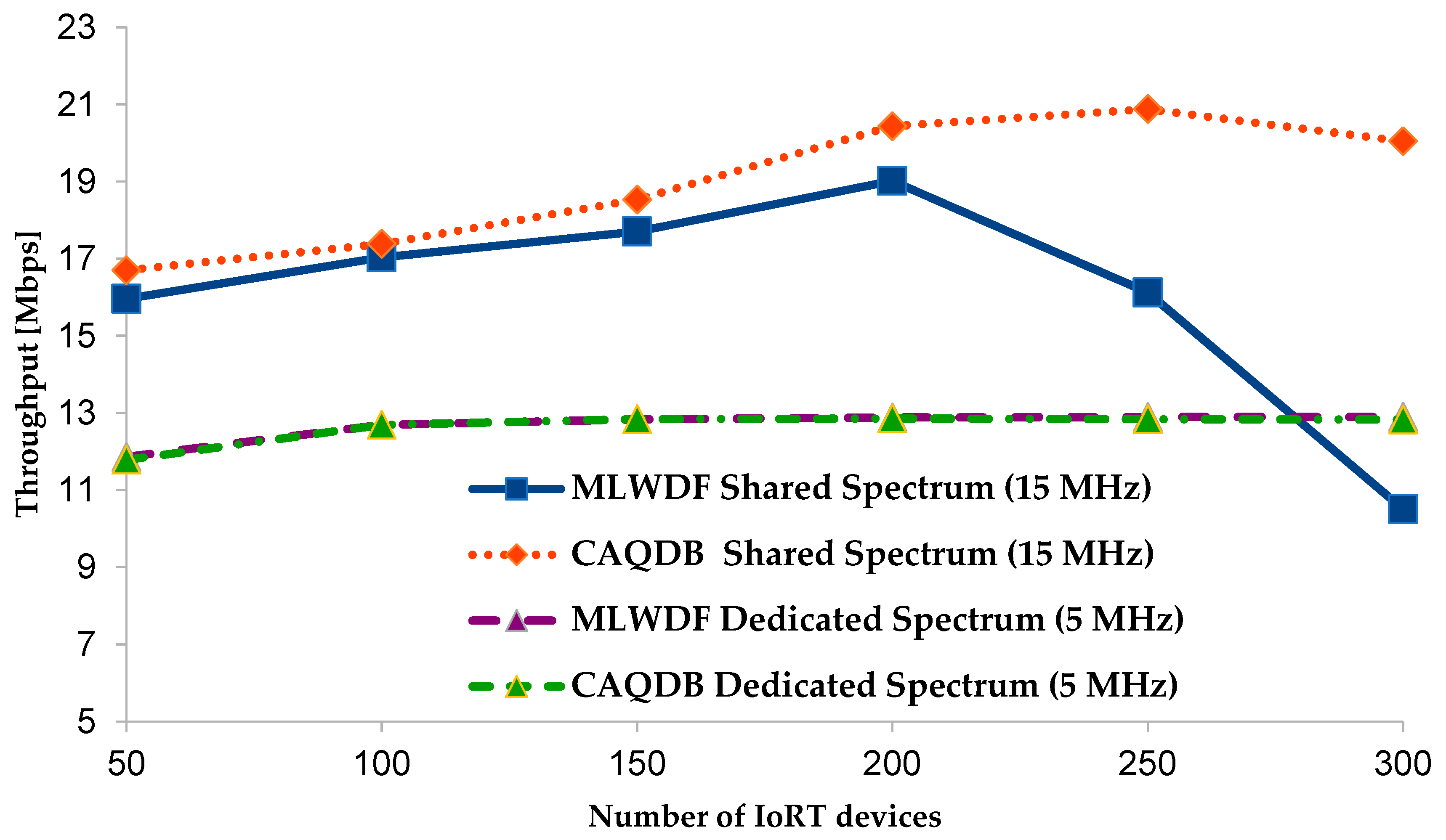

Figure 3, the throughput of IoRT devices traffic is much better using a shared spectrum management scheme as compared to dedicated spectrum management. Though, as the number of IoRT devices increases, the throughput performance of the shared spectrum management drops but the performance of the shared spectrum management is still at least approximately 4 Mbps better than that of the dedicated spectrum management. The two schedulers (MLWDF and CAQDB) produces a similar performance for both dedicated and shared spectrum management schemes, though, the proposed CAQDB scheduler edges the MLWDF when using shared spectrum management scheme. The drop in throughput performance using shared spectrum management scheme as the number of IoRT devices increase is a result of more demand from the video streaming traffic and limited spectrum. However, with more spectrum of 15 MHz in scenario B, as shown in

Figure 4, using the proposed CAQDB scheduler, the throughput performance of the shared spectrum management scheme is consistently above 6 Mbps even as the number of IoRT devices increase. This cannot be said of the MLWDF scheduler due to drop in throughput performance from IoRT devices of 200 and above. The CAQDB scheduler can be said to produce this performance because unlike MLWDF it does not only considers closeness of the packet’s delay to the delay deadline, but also the state of the queue. By only considering the delay disadvantages the IoRT traffic, as they are mostly less delay sensitive traffic.
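Why a purely delay-driven metric penalizes IoRT can be seen from the commonly used form of the M-LWDF (Modified Largest Weighted Delay First) metric, a_i · W_i · r_ij / R_i with a_i = −log(δ_i)/τ_i, sketched below. The delay targets and other inputs are hypothetical, and CAQDB's own queue-aware metric (defined in Section 4) is not reproduced here.

```python
import math


def mlwdf_metric(hol_delay_s: float, deadline_s: float, drop_prob: float,
                 inst_rate: float, avg_rate: float) -> float:
    """M-LWDF metric a_i * W_i * r_ij / R_i, with a_i = -log(delta_i) / tau_i.

    hol_delay_s: head-of-line packet delay W_i
    deadline_s:  delay target tau_i
    drop_prob:   tolerated probability delta_i of exceeding tau_i
    inst_rate:   achievable rate r_ij of flow i on RB j
    avg_rate:    past average throughput R_i of flow i
    """
    a = -math.log(drop_prob) / deadline_s
    return a * hol_delay_s * inst_rate / avg_rate


# HYPOTHETICAL flows with identical HOL delay and channel; only the delay target differs.
video = mlwdf_metric(0.05, deadline_s=0.1, drop_prob=0.05, inst_rate=1.0, avg_rate=1.0)
iort = mlwdf_metric(0.05, deadline_s=0.5, drop_prob=0.05, inst_rate=1.0, avg_rate=1.0)
print(video / iort)
```

With everything else equal, a five times looser delay target makes the IoRT flow's metric five times smaller, so it keeps losing RBs to video regardless of how full its queue is.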

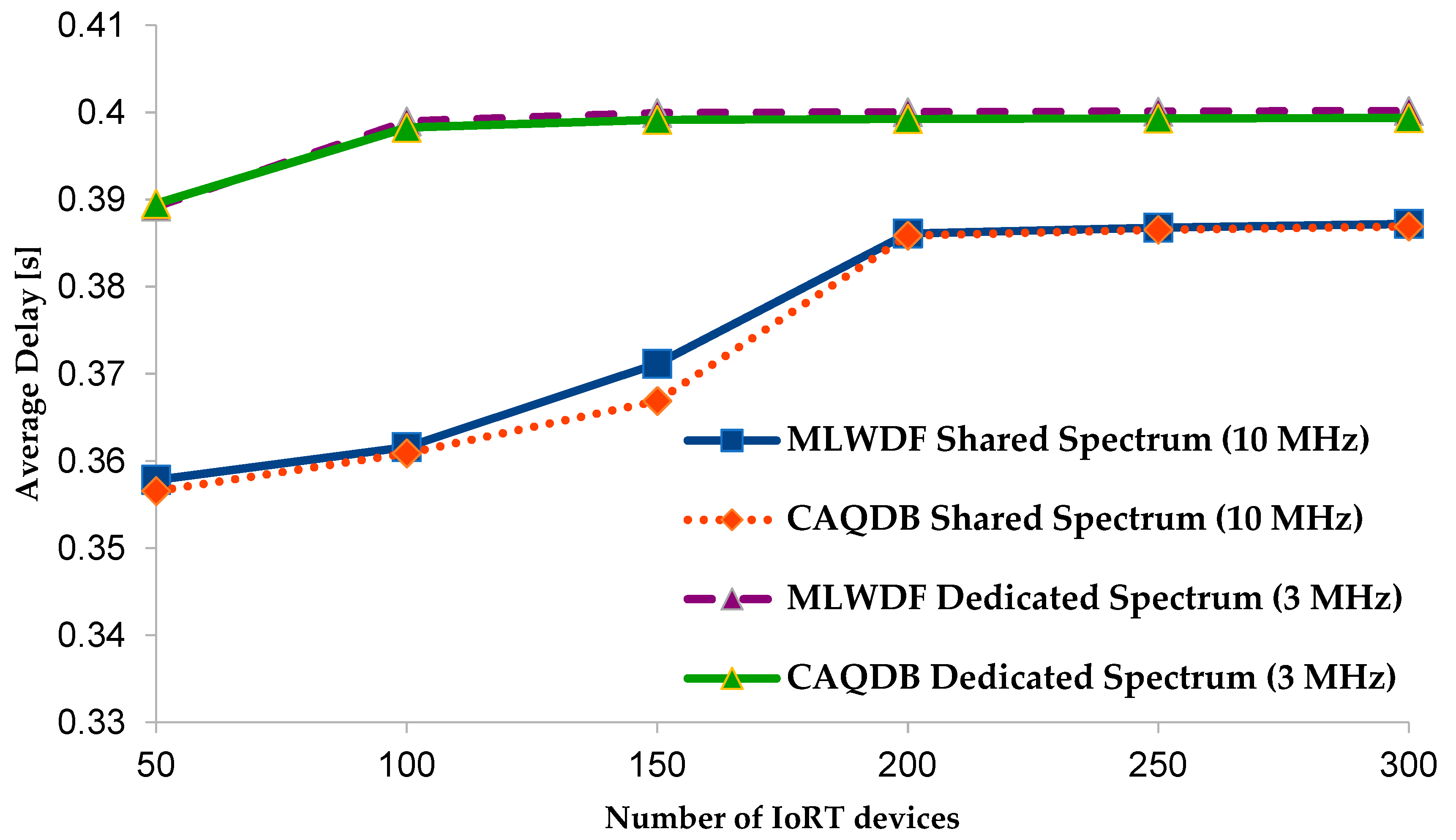

As shown in Figure 5, the average delay of the shared spectrum management scheme is better (lower) than that of the dedicated scheme for both schedulers across the varying number of IoRT devices. In scenario A, both schedulers have similar delay performance under both spectrum management schemes.

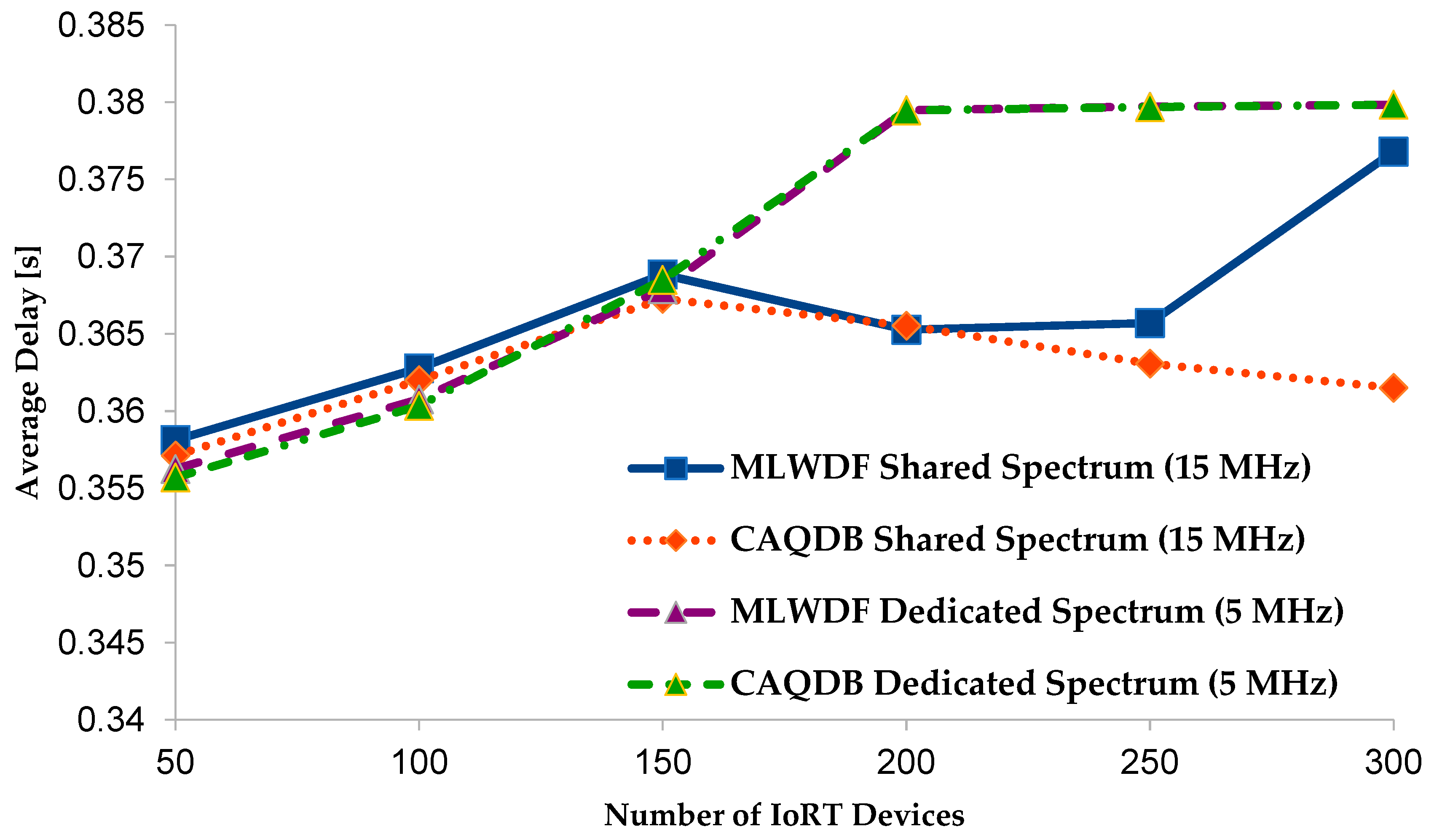

However, as shown in Figure 6, in scenario B both spectrum management schemes produce very close average delay performance for both schedulers up to 150 IoRT devices. Beyond 150 IoRT devices, the shared scheme produces better average delay performance for both schedulers, up to 250 IoRT devices. From 250 to 300 IoRT devices, the average delay of the shared scheme with MLWDF deteriorates until it reaches the same level as the dedicated scheme, whereas the proposed CAQDB scheduler maintains a better average delay. These results also confirm the impact of the packet scheduler on the performance of spectrum management schemes.

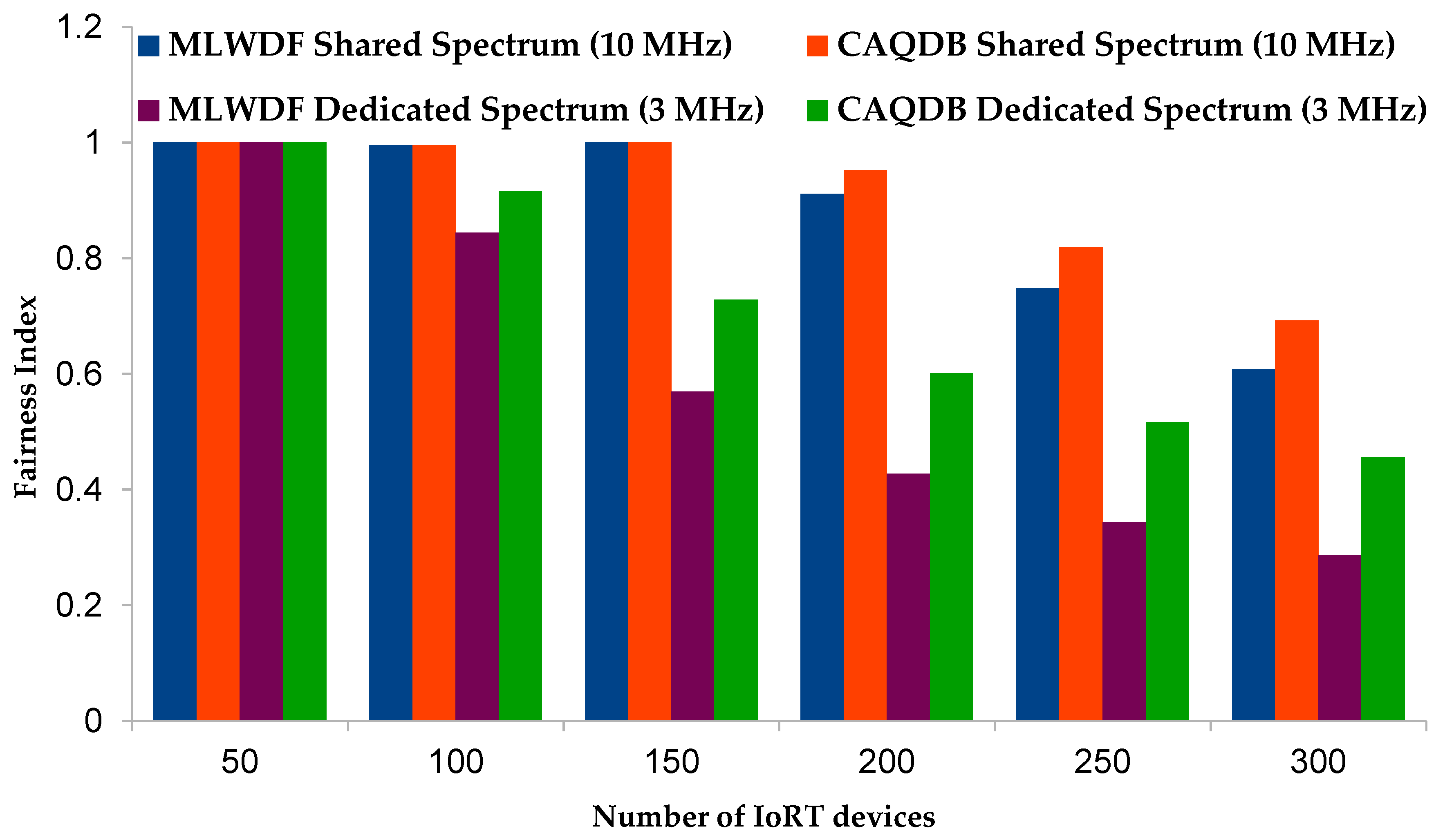

The fairness index results for scenarios A and B are presented in Figure 7 and Figure 8, respectively. In scenario A, as shown in Figure 7, all the schemes have the same fairness index for 50 IoRT devices, but as the number of IoRT devices increases, the shared scheme performs better than the dedicated scheme for both schedulers. In addition, the CAQDB scheduler performs better than the MLWDF scheduler under both the shared and dedicated schemes. In scenario B, as depicted in Figure 8, the dedicated scheme slightly outperforms the shared scheme for both schedulers, and the CAQDB scheduler slightly outperforms MLWDF in fairness index under the dedicated scheme. Even though the fairness trends differ between the two scenarios, these results further establish the impact of the scheduler on the performance of spectrum management schemes.
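The text does not name the fairness index; assuming it is Jain's index, a common choice for per-user throughput fairness, it can be computed as (Σx)² / (n·Σx²), which equals 1 when all users get the same throughput and 1/n when one user gets everything.

```python
from typing import Sequence


def jain_index(throughputs: Sequence[float]) -> float:
    """Jain's fairness index: (sum x)^2 / (n * sum x^2), between 1/n and 1."""
    n = len(throughputs)
    squares = sum(x * x for x in throughputs)
    if n == 0 or squares == 0:
        raise ValueError("need at least one non-zero throughput")
    total = sum(throughputs)
    return total * total / (n * squares)


print(jain_index([1.0, 1.0, 1.0, 1.0]), jain_index([2.0, 2.0, 0.0, 0.0]), jain_index([1.0, 0.0, 0.0, 0.0]))
```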

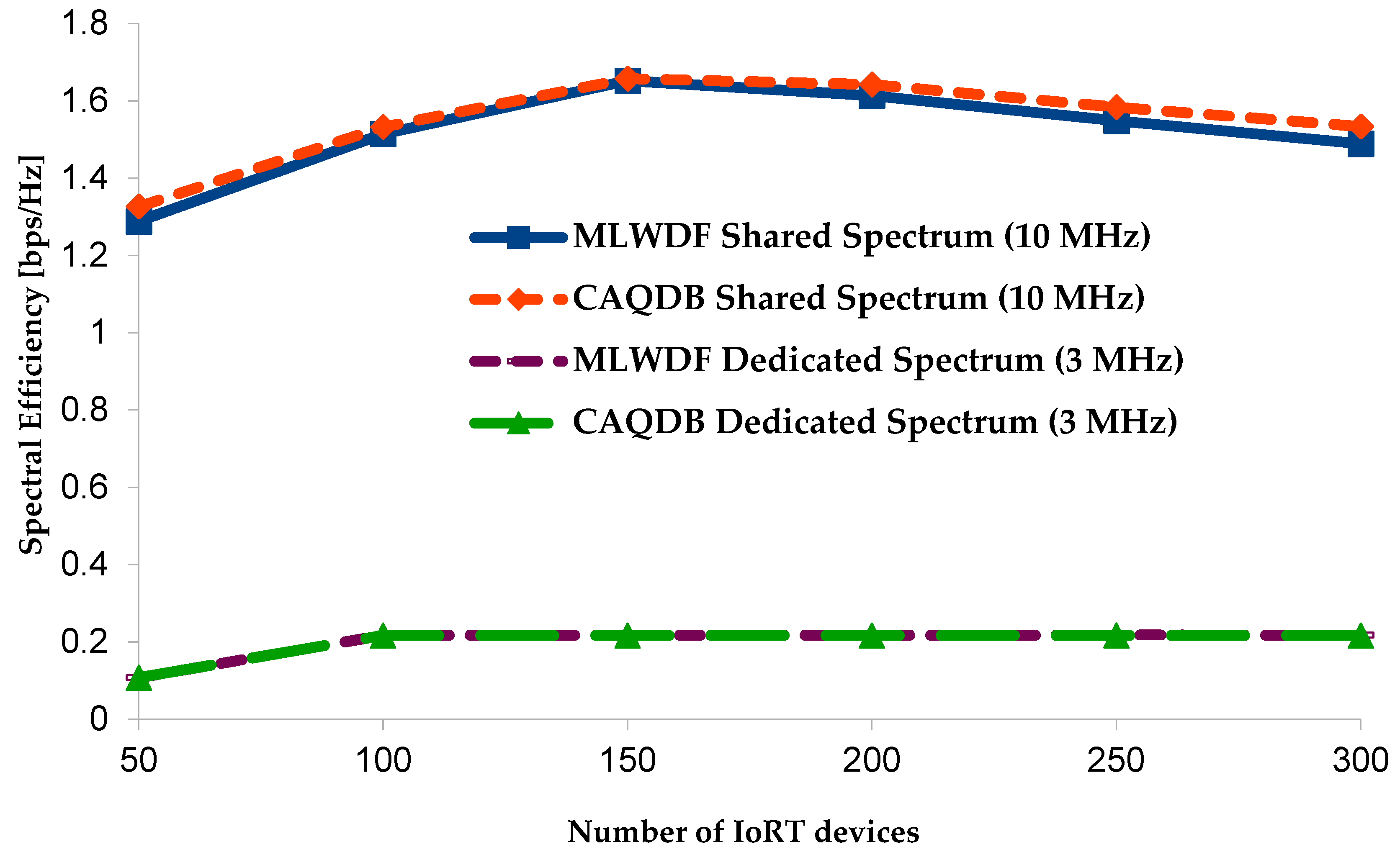

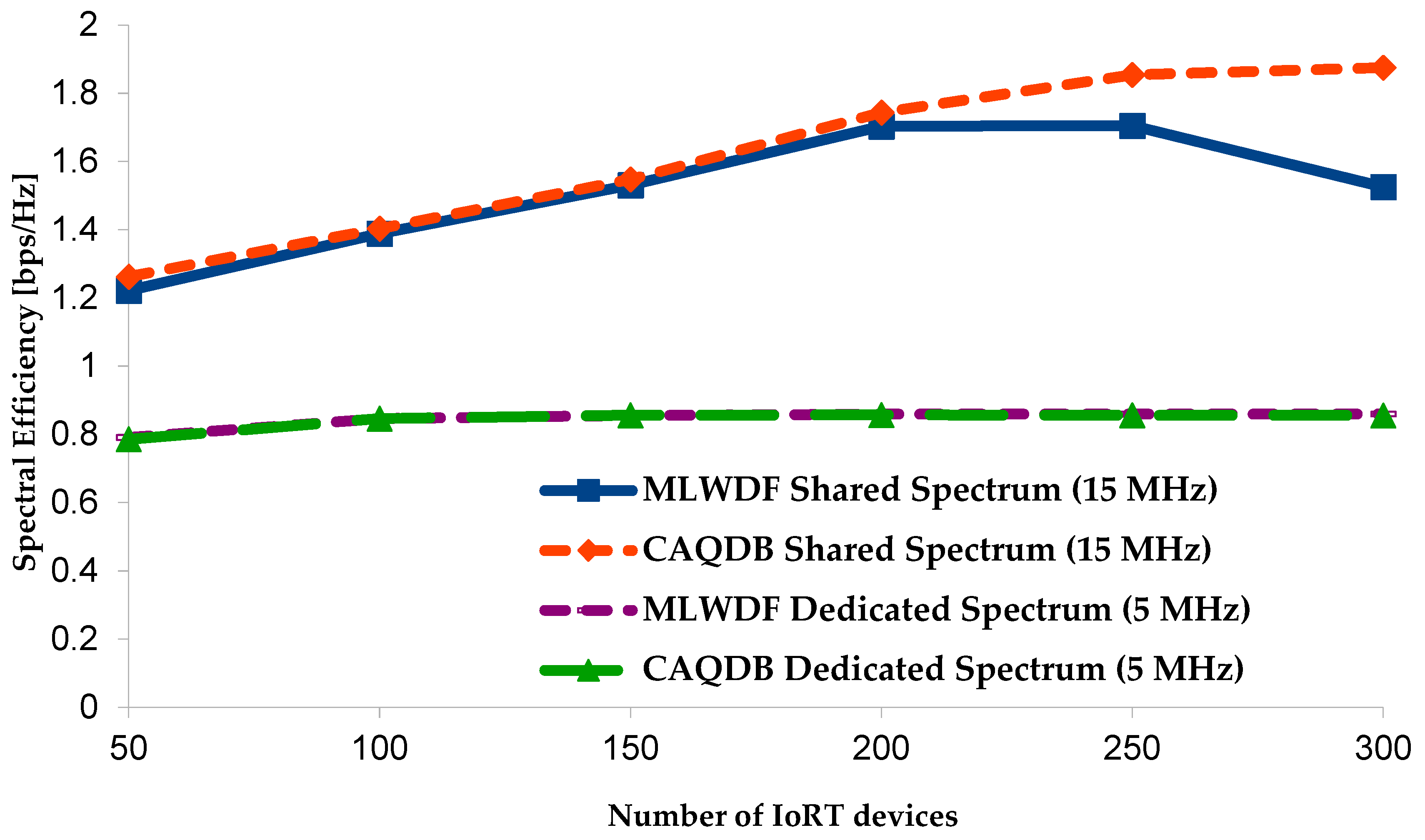

The overall spectral efficiency results of the two spectrum management schemes are presented in Figure 9 and Figure 10. As shown in both figures, the spectral efficiency of the shared scheme is much higher than that of the dedicated scheme for both schedulers across the varying number of IoRT devices, in both scenarios A and B. Hence, it can be deduced that the shared scheme allows the spectrum to be fully utilized in a mixed use-case scenario: whenever the spectrum is not being used by IoRT devices, the video streaming users utilize it, and vice versa. The two schedulers perform very closely under the dedicated scheme in both scenarios, but under the shared scheme this holds only in scenario A. In scenario B, the proposed CAQDB scheduler produces better spectral efficiency from 200 IoRT devices upwards and similar performance below 200 IoRT devices. This further shows that the scheduler has an impact on the performance of a shared spectrum management scheme, especially as the number of IoRT devices increases.
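The utilization argument can be illustrated with simple RB accounting. In the sketch below, the demands per TTI and the 10/40 split of 50 RBs are hypothetical; it only counts occupied RBs and ignores the modulation and coding used on each RB, which also affects spectral efficiency.

```python
from typing import Iterable, Tuple


def used_rbs_dedicated(iort_demand: int, video_demand: int, iort_rbs: int, video_rbs: int) -> int:
    """Each class can only use its own band, so idle RBs in one band are wasted."""
    return min(iort_demand, iort_rbs) + min(video_demand, video_rbs)


def used_rbs_shared(iort_demand: int, video_demand: int, total_rbs: int) -> int:
    """Both classes draw from one pool, so one class can fill RBs the other leaves idle."""
    return min(iort_demand + video_demand, total_rbs)


def utilization(demands: Iterable[Tuple[int, int]], iort_rbs: int, video_rbs: int) -> Tuple[float, float]:
    """Fraction of RBs occupied over all TTIs: (dedicated, shared)."""
    demands = list(demands)
    total = iort_rbs + video_rbs
    capacity = total * len(demands)
    ded = sum(used_rbs_dedicated(i, v, iort_rbs, video_rbs) for i, v in demands)
    sha = sum(used_rbs_shared(i, v, total) for i, v in demands)
    return ded / capacity, sha / capacity


# HYPOTHETICAL per-TTI demands in RBs: (IoRT, video).
demands = [(2, 60), (15, 10), (12, 35)]
print(utilization(demands, iort_rbs=10, video_rbs=40))
```

In every TTI the shared count is at least the dedicated count, since min(a + b, x + y) ≥ min(a, x) + min(b, y); here the shared pool fills 122 of 150 RB slots against 107 for the dedicated split.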

7. Conclusions

This paper presents an investigation of two spectrum management schemes for IoRT device communications in 5G satellite networks. In addition, a newly proposed scheduler, CAQDB, is presented and compared with the existing MLWDF scheduler in order to study the impact of scheduling algorithms on the performance of spectrum management schemes, especially shared schemes, in which different traffic types or use cases are expected. The results show that the shared spectrum management scheme performs better in almost all the performance metrics for the two scenarios considered. Furthermore, the packet scheduler affects the performance of spectrum management schemes, especially the shared scheme, as the proposed CAQDB scheduler outperforms MLWDF mainly when shared spectrum management is used. In conclusion, the shared spectrum management scheme is recommended, provided that an appropriate scheduling algorithm, such as the proposed CAQDB, which considers not only delay as a QoS factor but also the queue state, is used. In future work, a more dynamic spectrum management scheme and more diverse use cases will be considered.