Edge Computing Simulators for IoT System Design: An Analysis of Qualities and Metrics

Abstract

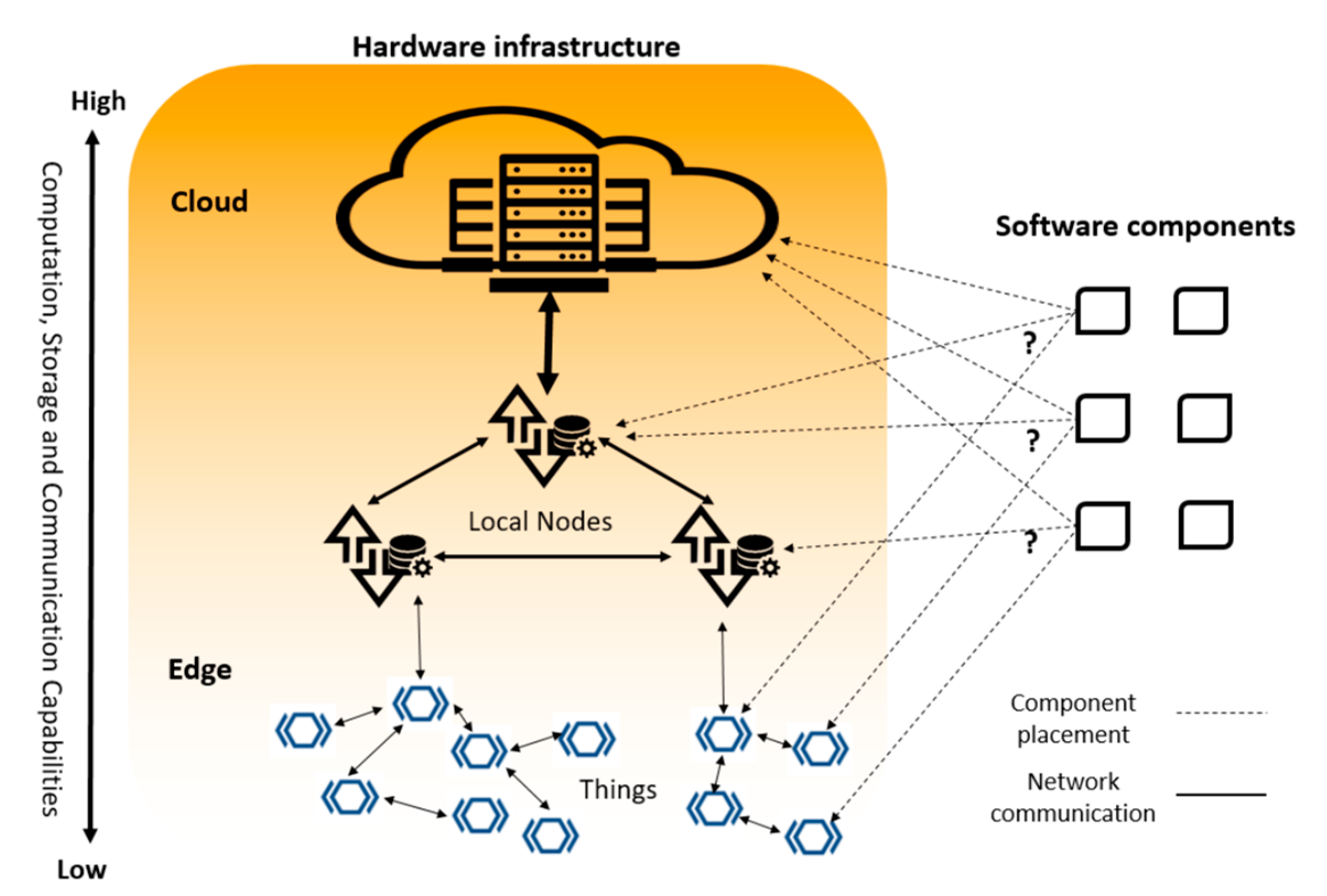

1. Introduction

- A set of the relevant qualities for edge computing simulation based on a systematic review of the literature;

- An analysis of edge computing simulators in terms of which of the identified qualities and related metrics they support.

2. Related Work

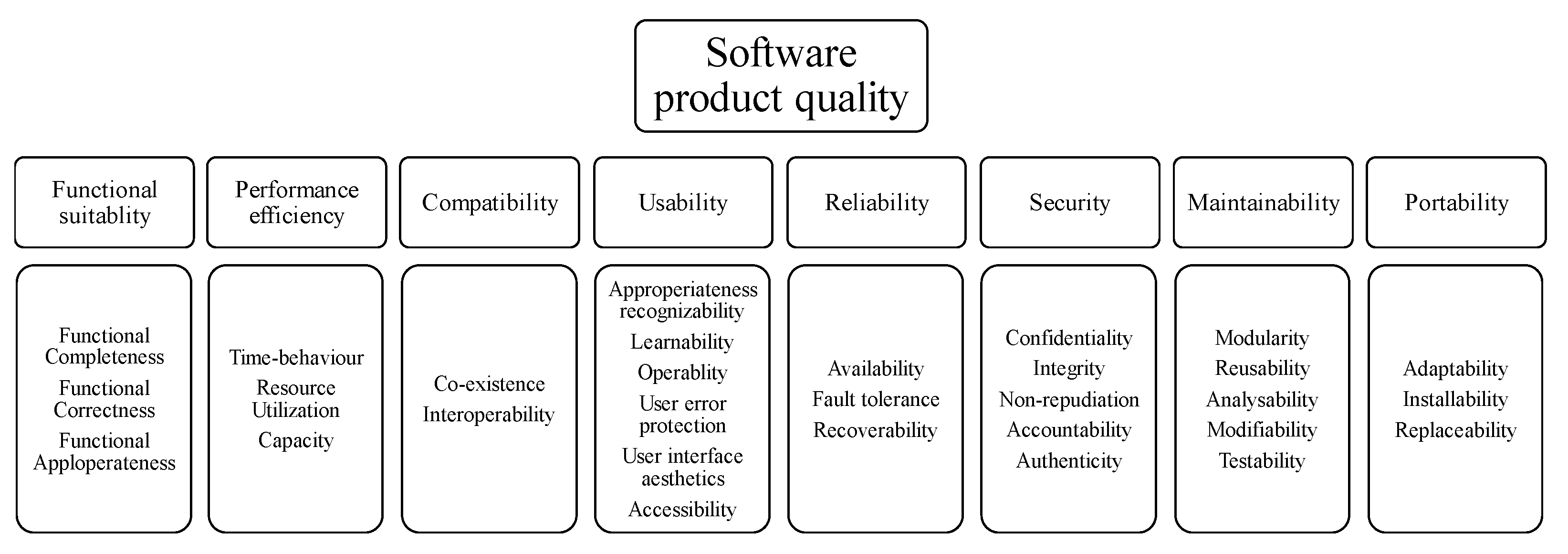

3. Identification of Relevant Qualities

3.1. Literature Study and Metric Extraction

- Functional suitability: The degree to which a product or system provides functions that meet stated and implied needs when used under specified conditions.

- Performance efficiency: The performance relative to the amount of resources used under stated conditions.

- Compatibility: The degree to which a product, system, or component can exchange information with other products, systems, or components, and/or perform its required functions while sharing the same hardware or software environment.

- Usability: The degree to which a product or system can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use.

- Reliability: The degree to which a system, product, or component performs specified functions under specified conditions for a specified period of time.

- Security: The degree to which a product or system protects information and data so that persons or other products or systems have the degree of data access appropriate to their types and levels of authorization.

- Maintainability: The degree of effectiveness and efficiency with which a product or system can be modified to improve it, correct it or adapt it to changes in the environment, and in requirements.

- Portability: The degree of effectiveness and efficiency with which a system, product, or component can be transferred from one hardware, software, or other operational or usage environment to another.

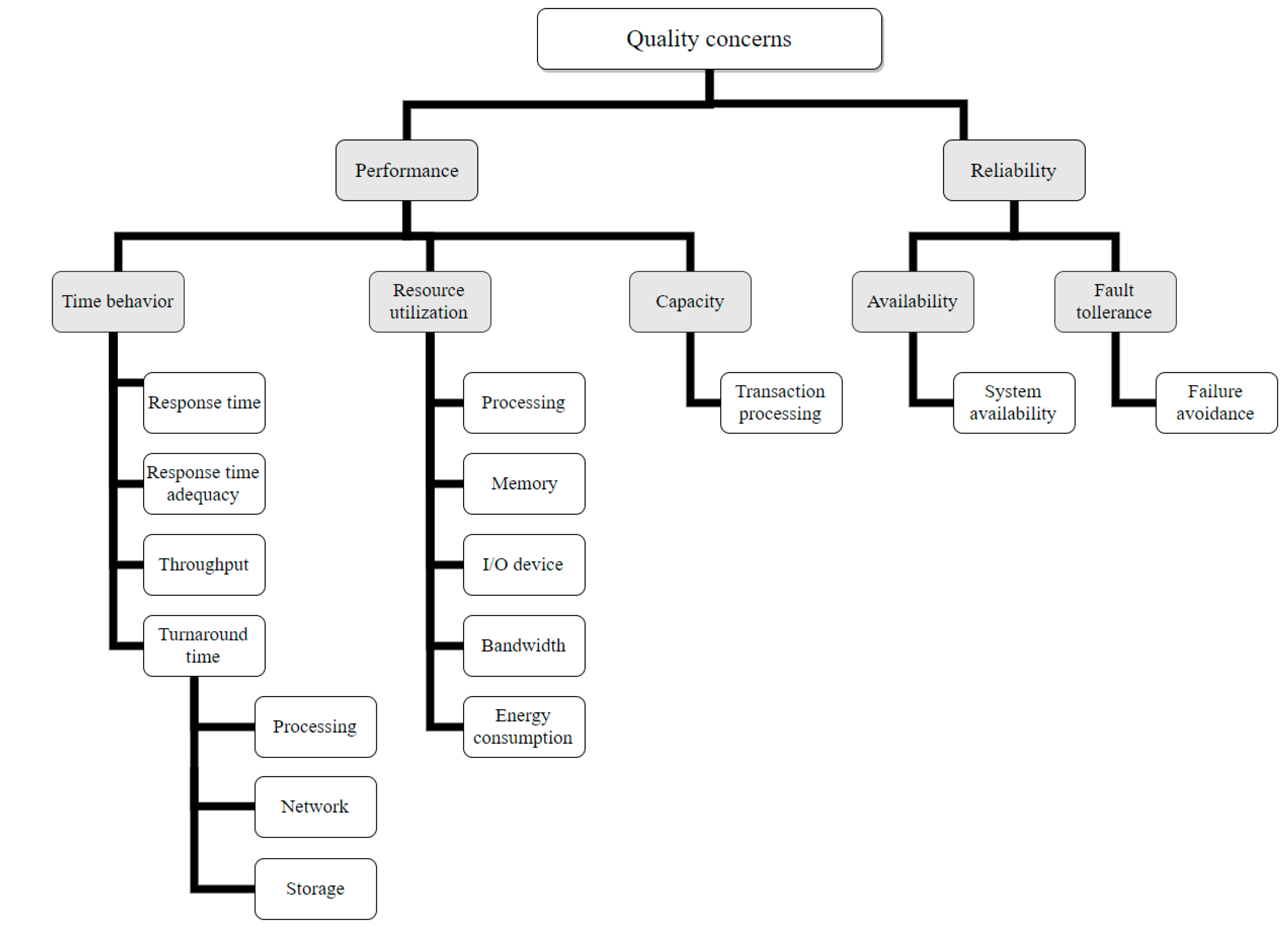

3.2. Deriving Qualities and Metrics

- Application-specific metrics, such as decision time of an application, ECG waveform, or wireless signal level;

- Metrics that cannot be measured by simulation, for example, user experience, which can instead be measured by a survey;

- Metrics that can be calculated from other metrics, such as the average slowdown of an application, that is, its average system waiting time divided by its service time;

- Abstract metrics without detailed information on how to measure them, such as degree of trust;

- Metrics that are input for the simulation, such as processing capacity of a device.
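As a small illustration of a derived metric, the average slowdown named in the list above can be written as a ratio of two other metrics. The symbols $\bar{W}$ (average system waiting time) and $S$ (service time) are notation assumed here for the sketch; they are not taken from the reviewed papers.

$$
\text{average slowdown} = \frac{\bar{W}}{S}
$$

For instance, with assumed values $\bar{W} = 30\,\text{ms}$ and $S = 10\,\text{ms}$, the average slowdown is $30 / 10 = 3$. No separate measurement is required once the two underlying metrics are available.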

- Mean response time: The mean time taken by the system to respond to a user or system task.

- Mean turnaround time: The mean time taken for completion of a job or an asynchronous process.

  - Processing delay: The amount of time needed for processing a job.

  - Network delay: The amount of time needed for transmission of a unit of data between the devices.

  - Storage read and write delay: The amount of time needed to read/write a unit of data from disk or long-term memories.

- Mean throughput: The mean number of jobs completed per unit time.

- Bandwidth utilization: The proportion of the available bandwidth utilized to perform a given set of tasks.

- Mean processor utilization: The amount of processor time used to execute a given set of tasks as compared to the operation time.

- Mean memory utilization: The amount of memory used to execute a given set of tasks as compared to the available memory.

- Mean I/O devices utilization: The amount of time an I/O device is busy performing a given set of tasks as compared to the I/O operation time.

- Energy consumption: The amount of energy used to perform a specific operation like data processing, storage or transfer.

- Transaction processing capacity: The number of transactions that can be processed per unit time.

- System availability: The proportion of the scheduled system operational time that the system is actually available.

- Failure avoidance: The proportion of fault patterns that have been brought under control to avoid critical and serious failures.
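To make the definitions above concrete, the following is a minimal sketch of how some of these metrics could be computed from a simulation trace. The `JobRecord` format, the `summarize` function, and all sample values are hypothetical and are not the output format of any of the simulators discussed. The sketch also assumes a single processor, so that utilization is busy time divided by the observed operation time.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class JobRecord:
    """One simulated job; all times in seconds (hypothetical trace format)."""
    submitted: float       # time the job enters the system
    first_response: float  # time the system first responds to the job
    completed: float       # time the job finishes
    cpu_busy: float        # processor time spent executing the job


def summarize(jobs, observed_time, scheduled_time, downtime):
    """Compute several of the metrics defined above from a list of jobs.

    Assumes one processor; observed_time is the operation time of the run.
    """
    return {
        "mean_response_time": mean(j.first_response - j.submitted for j in jobs),
        "mean_turnaround_time": mean(j.completed - j.submitted for j in jobs),
        "mean_throughput": len(jobs) / observed_time,
        "mean_processor_utilization": sum(j.cpu_busy for j in jobs) / observed_time,
        "system_availability": (scheduled_time - downtime) / scheduled_time,
    }


# Made-up sample trace with two jobs observed over 10 seconds.
jobs = [
    JobRecord(submitted=0.0, first_response=1.0, completed=4.0, cpu_busy=2.0),
    JobRecord(submitted=2.0, first_response=3.0, completed=8.0, cpu_busy=3.0),
]
metrics = summarize(jobs, observed_time=10.0, scheduled_time=10.0, downtime=1.0)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Keeping the raw timestamps per job, rather than only aggregated values, lets derived quantities be recomputed later without rerunning the simulation.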

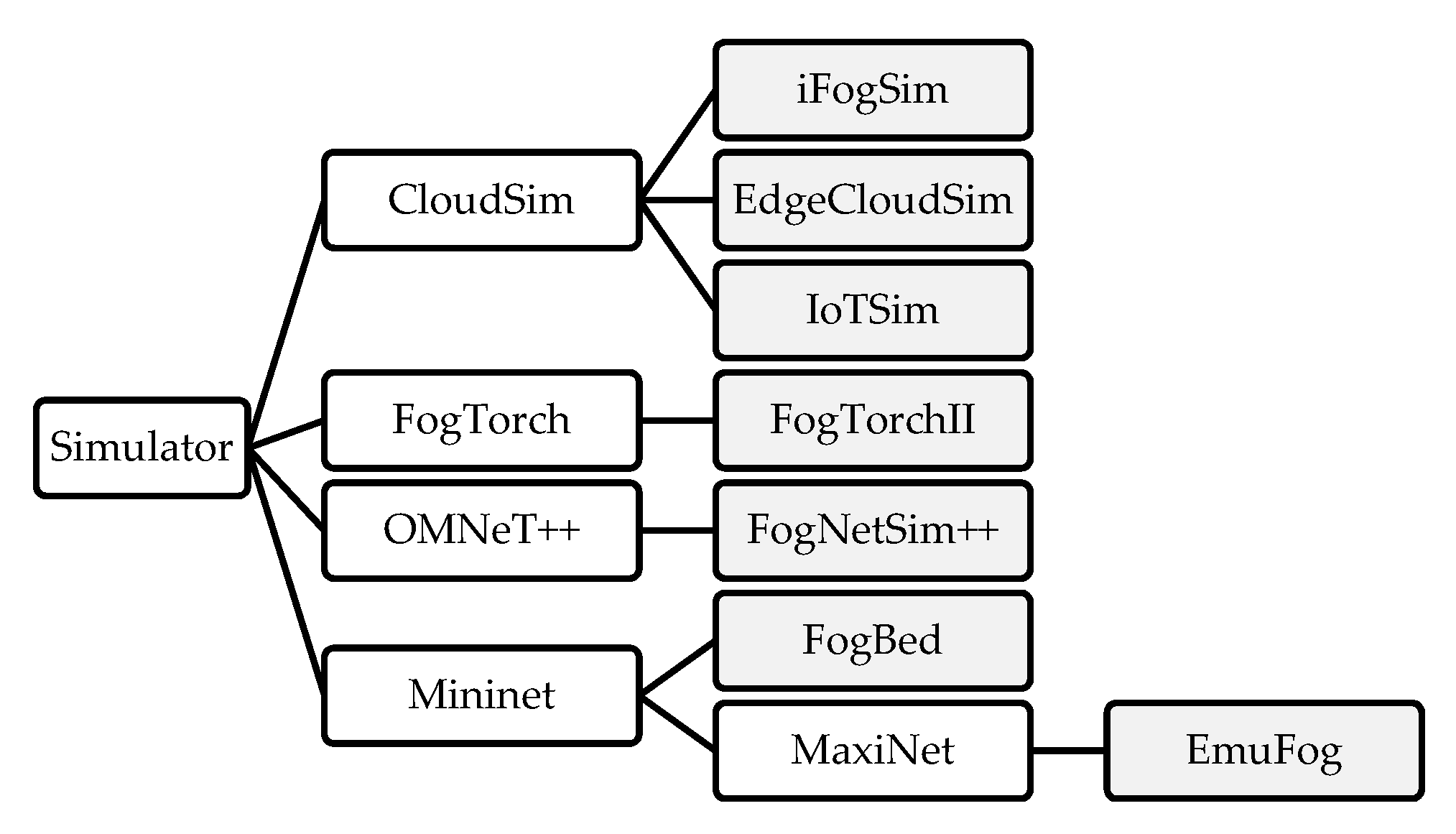

4. Supported Qualities by Edge Computing Simulators

5. Discussion and Research Gaps

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A: Extracted Metrics

| ISO/IEC 25010 Qualities | ISO/IEC 25023 Measures | Related Metrics Used in the Literature |
|---|---|---|
| Time behavior | Mean response time | Service allocation delay, first response time of processing a dataset, service latency (used in 3 papers), system response time (3), service execution delay |
| | Mean turnaround time (processing, network, storage delay) | CPU time, round-trip time (2), processing delay, latency of application, communications latency, latency ratio, delay of transferring datasets, completion time, time to write or read (2), time taken to create container for new images, time taken to create container for existing images, time taken to transfer the application file, time taken to transfer the log file, transmission delay, loading time, delay jitter, execution time (2), delivery latency, response times of computation tasks, activation times, running time, accessing cost of data chunks, handover latency, latency of data synchronization, workload completion time, waiting time, end to end delay, sync time, delay, queuing delay (2), execution time, container activation time |
| | Throughput | Number of messages processed, throughput of request serviced, successful executed job throughput, queue length in time, bandwidth throughput, disk throughput |
| Resource utilization | Memory utilization | Queue utilization, allocated slots |
| | Processor utilization | Processing power, processing cost for each application, CPU utilization (3), computing load, CPU consumption, max system load, fairness by Gini coefficient, computation cost, system load |
| | I/O devices utilization | Storage overhead |
| | Bandwidth utilization | Communication cost, number of retransmissions (2), bandwidth consumptions, required bandwidth, traffic load, amount of data sent between fog sites, volume of data transmitted, amount of network traffic sent, communication overhead |
| | Energy consumption* | Energy consumption (7), transmission energy, power consumption (3), allocated power, residual energy, energy efficiency |
| Capacity | Transaction processing capacity | Number of satisfied requests, system loss rate, cloud requests fulfilled, number of existing jobs in the CPU queues, rate of targets dropped over arrival, cumulative delivery ratio, provisioned capacity, service drop rate, number of finalized jobs, number of executed packets |
| Availability | System availability | Uptime |
| Fault tolerance | Failure avoidance | Scheduled requests with crash |

| Quality | Metric | iFogSim | FogNetSim++ | EdgeCloudSim | IoTSim | FogTorch II | EmuFog | FogBed |
|---|---|---|---|---|---|---|---|---|
| Time behavior | Response time | Y | - | Y | - | Y | Y | Y |
| | Processing delay | Y | Y | Y | Y | - | - | - |
| | Network delay | - | Y | Y | - | Y | Y | - |
| | Storage read/write delay | - | - | - | - | - | - | - |
| | Throughput | - | - | - | - | - | - | - |
| Resource Utilization | Bandwidth utilization | Y | - | Y | Y | Y | - | - |
| | Processing utilization | Y | - | - | Y | - | - | - |
| | Memory utilization | - | - | - | - | Y | - | - |
| | I/O devices utilization | - | - | - | - | Y | - | - |
| | Energy consumption | Y | Y | - | - | - | - | - |
| Capacity | Transaction processing capacity | - | Y | Y | - | - | - | - |
| Availability | System availability | - | - | - | - | - | - | - |
| Fault tolerance | Failure avoidance | - | - | - | - | - | - | - |
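The support table above can also be queried programmatically, for example when shortlisting a simulator for a given set of required metrics. The following is a minimal sketch that transcribes the Y/- entries of the table; the names `SUPPORT`, `supported_count`, and `unsupported_everywhere` are hypothetical helpers introduced for illustration only.

```python
SIMULATORS = ["iFogSim", "FogNetSim++", "EdgeCloudSim", "IoTSim",
              "FogTorch II", "EmuFog", "FogBed"]

# One string per metric; character i is "Y" if SIMULATORS[i] supports it.
# Entries are transcribed from the support table in the text.
SUPPORT = {
    "Response time":                   "Y-Y-YYY",
    "Processing delay":                "YYYY---",
    "Network delay":                   "-YY-YY-",
    "Storage read/write delay":        "-------",
    "Throughput":                      "-------",
    "Bandwidth utilization":           "Y-YYY--",
    "Processing utilization":          "Y--Y---",
    "Memory utilization":              "----Y--",
    "I/O devices utilization":         "----Y--",
    "Energy consumption":              "YY-----",
    "Transaction processing capacity": "-YY----",
    "System availability":             "-------",
    "Failure avoidance":               "-------",
}


def supported_count():
    """Return the number of supported metrics per simulator."""
    return {
        sim: sum(row[i] == "Y" for row in SUPPORT.values())
        for i, sim in enumerate(SIMULATORS)
    }


def unsupported_everywhere():
    """Return the metrics that no listed simulator supports."""
    return [metric for metric, row in SUPPORT.items() if "Y" not in row]


counts = supported_count()
gaps = unsupported_everywhere()
print(counts)
print(gaps)
```

A plain count treats all metrics as equally important; in practice one would likely filter by the metrics a specific IoT system design actually requires.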

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ashouri, M.; Lorig, F.; Davidsson, P.; Spalazzese, R. Edge Computing Simulators for IoT System Design: An Analysis of Qualities and Metrics. Future Internet 2019, 11, 235. https://doi.org/10.3390/fi11110235