Predicting Rogue Content and Arabic Spammers on Twitter

Abstract

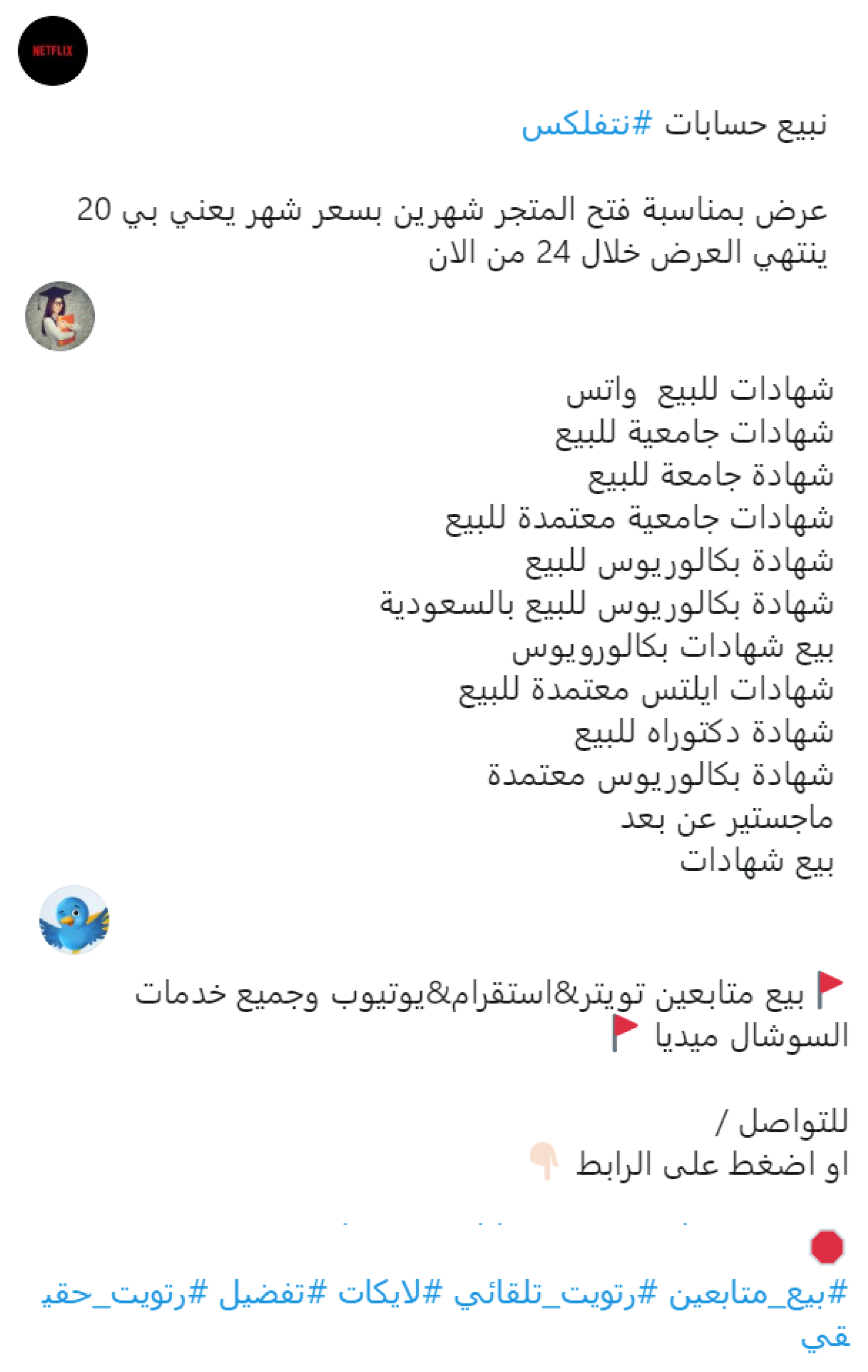

1. Introduction

- We obtained one of the largest data sets for rogue and spam accounts with Arabic tweets by directly collecting the tweets from such accounts.

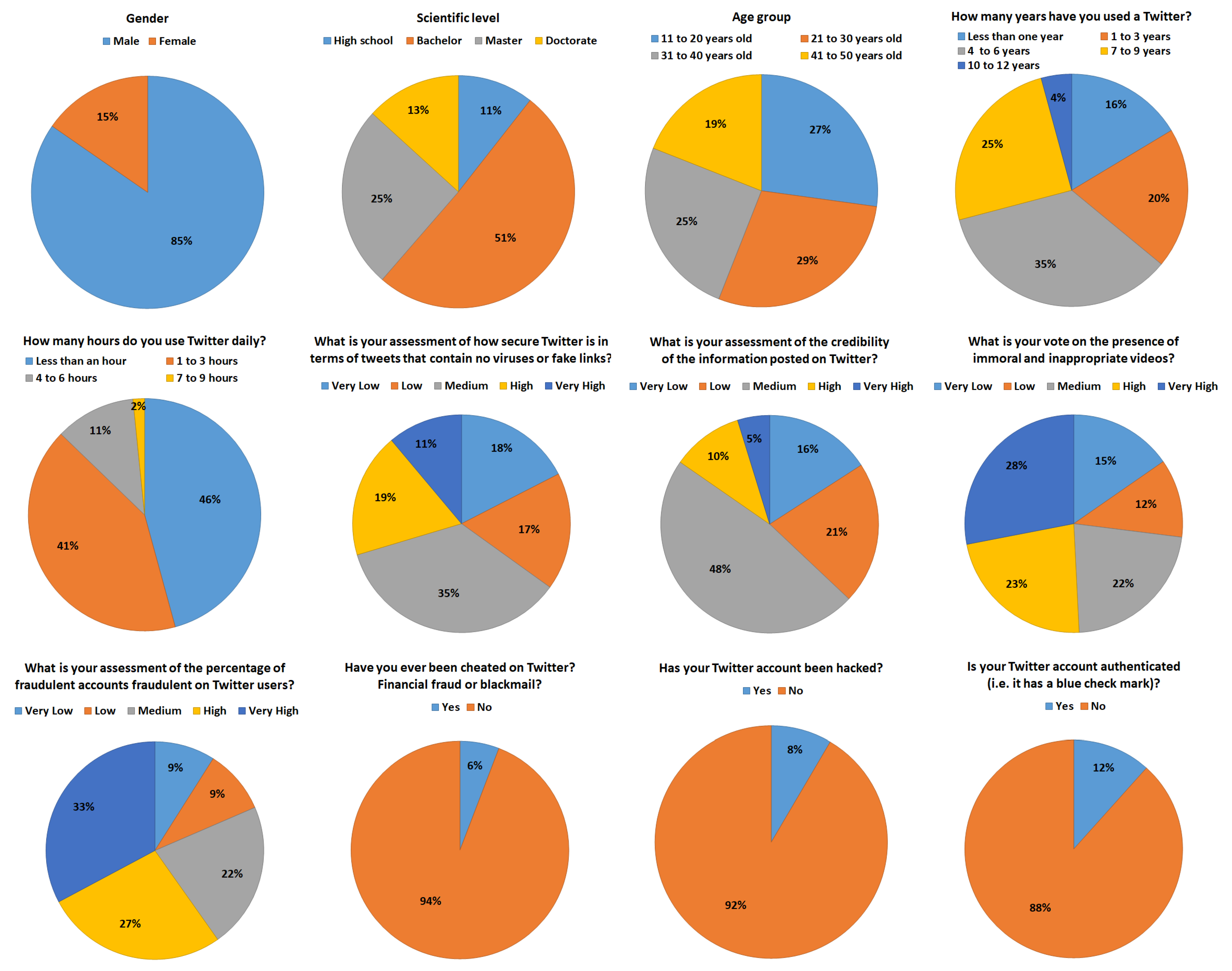

- We surveyed more than 180 Arab participants using Twitter security questionnaires to identify the area of security that concerns Twitter users the most.

- We engineered and enhanced 47 simple yet effective features based on tweets, profile content, and social graphs.

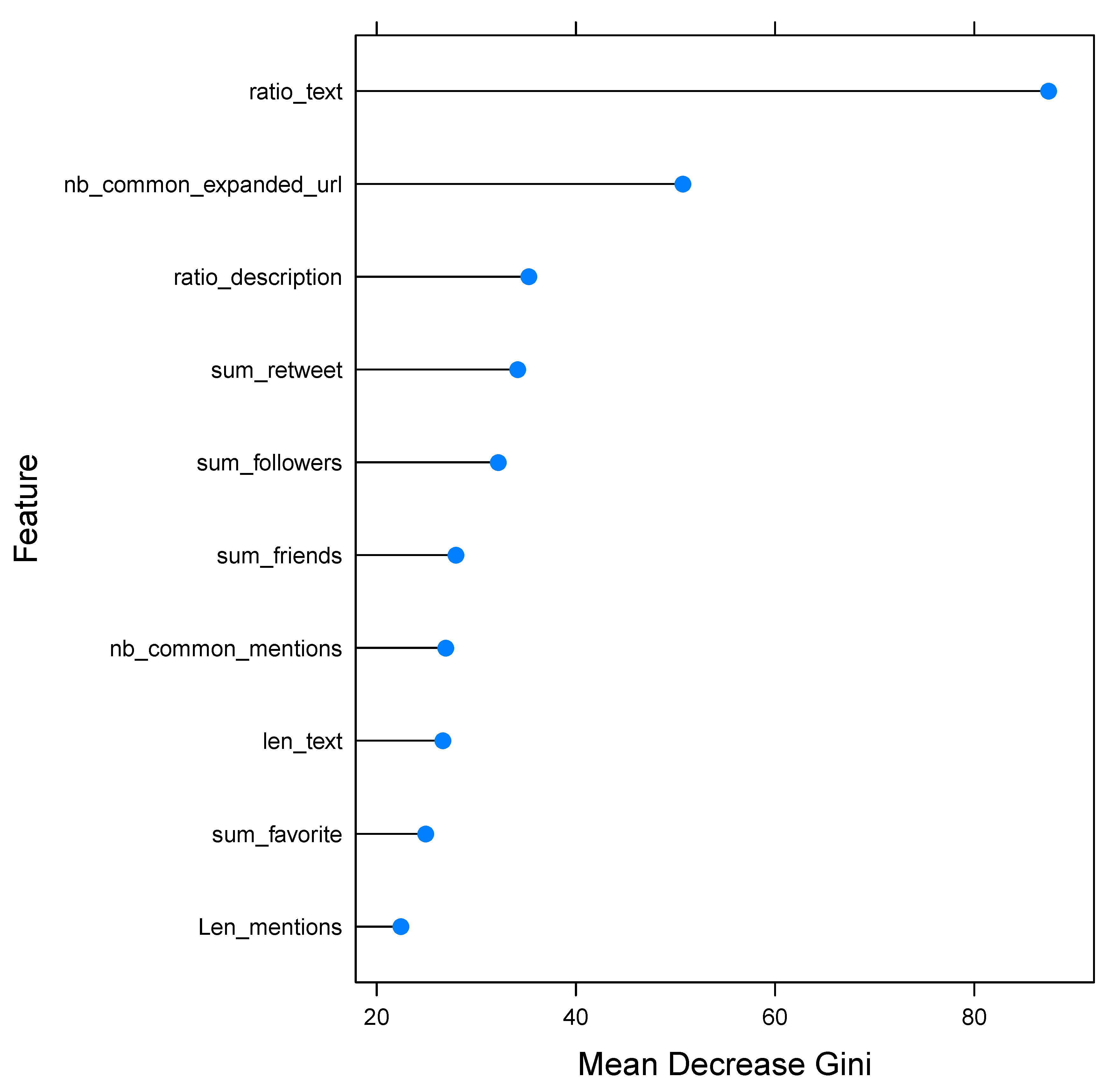

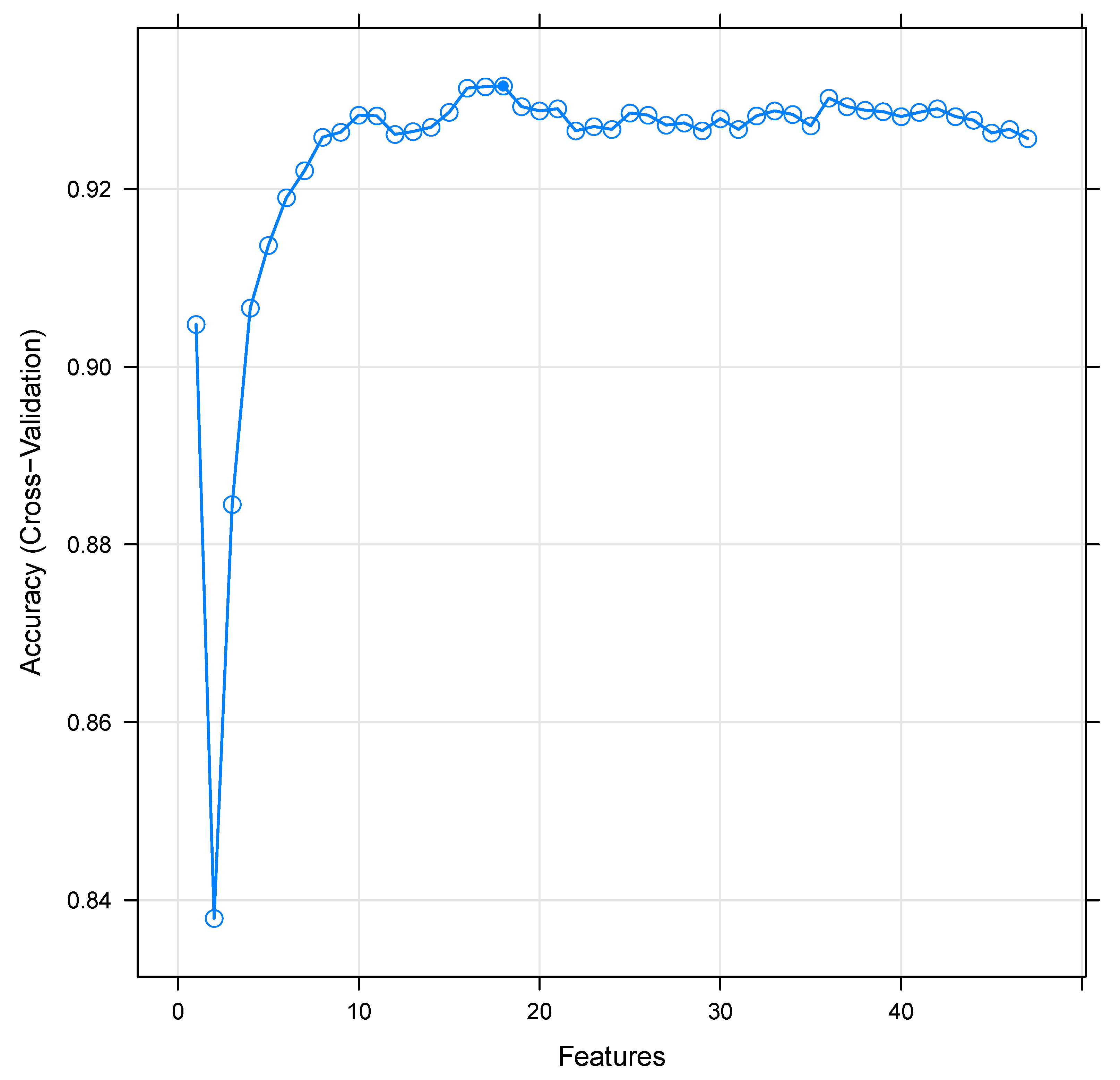

- Using random forest-based feature selection, we evaluated and compared different numbers of features and found that the model with 16 features performed best.

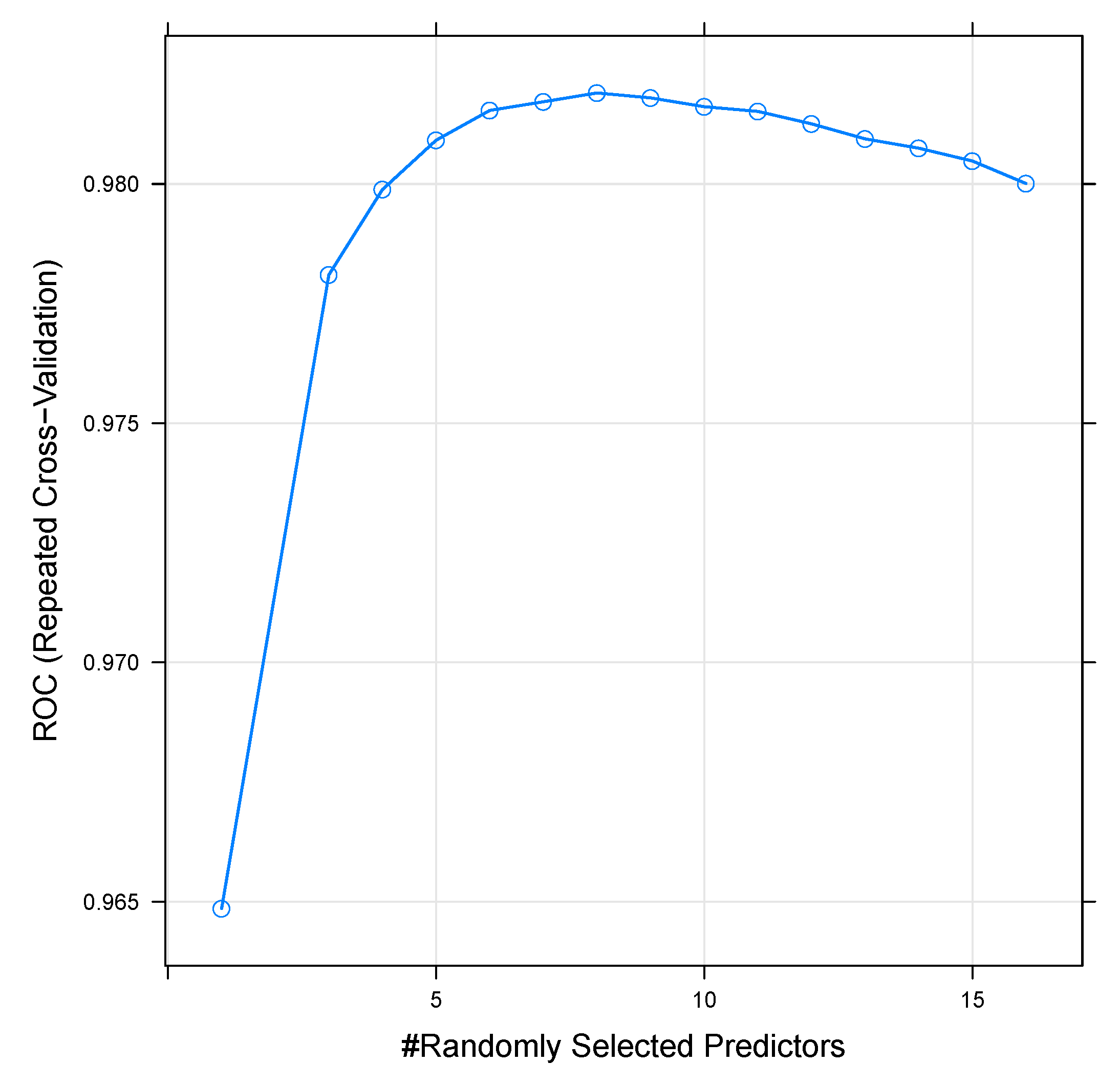

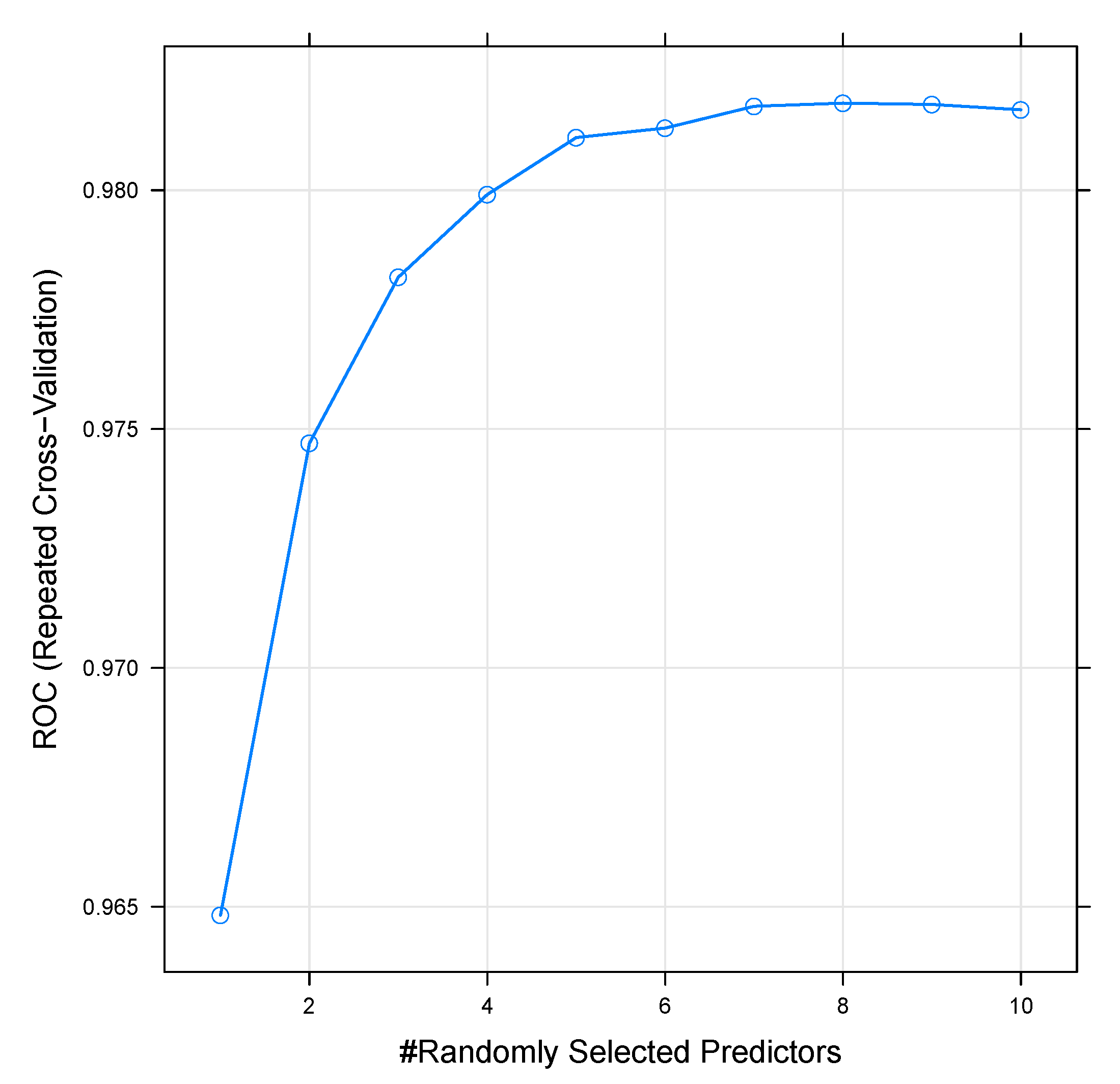

- Using the random forest classifier, we evaluated and compared different numbers of variables randomly sampled as split candidates at each node and found that sampling 8 variables performed best.

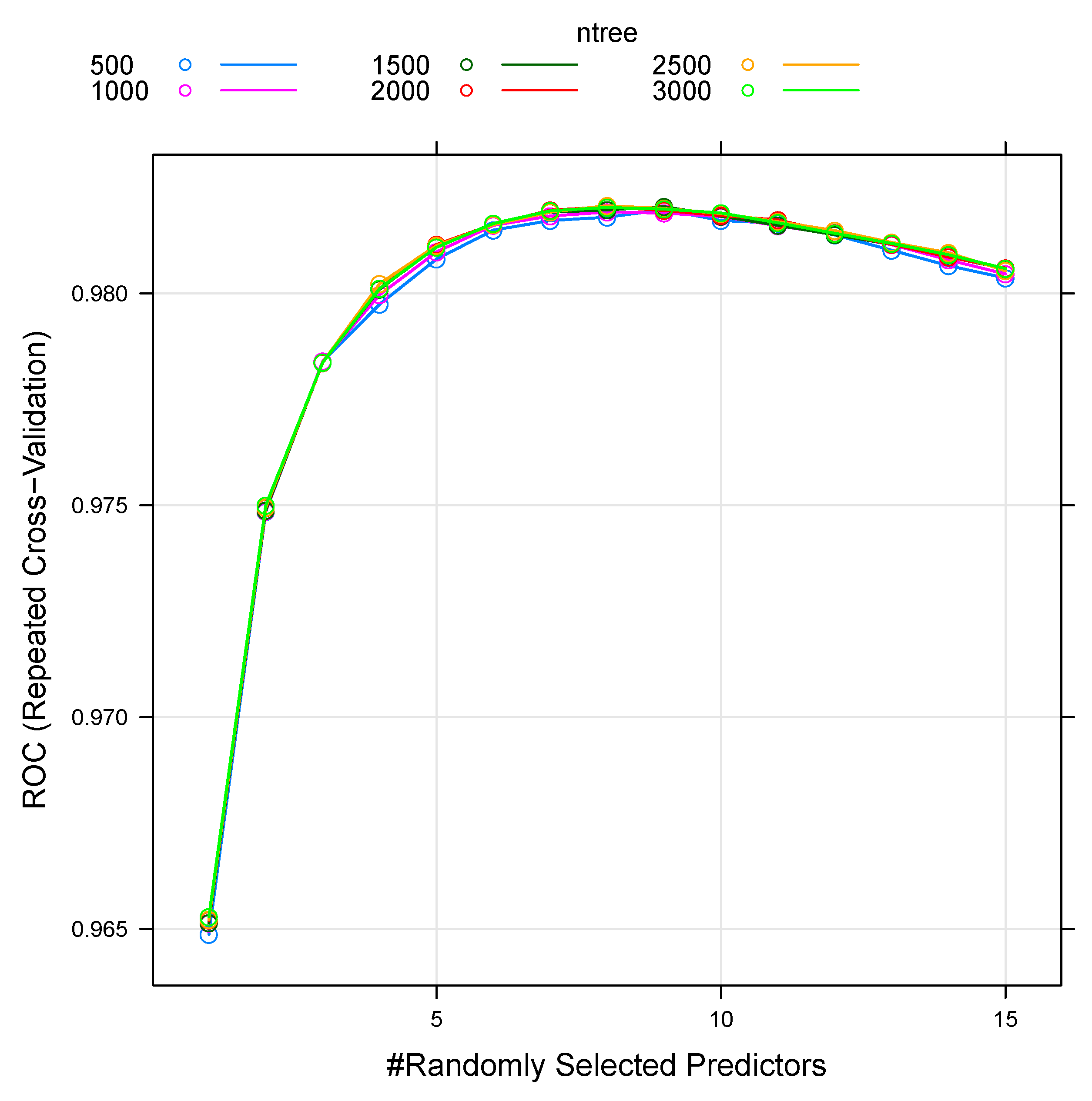

- Using the random forest classifier, we evaluated and compared different numbers of trees and found that 2500 trees performed best.
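The last two contributions concern two random forest hyperparameters: the number of variables randomly sampled as split candidates at each node, and the number of trees. To make them concrete, the following is a small from-scratch random forest with a holdout-based grid search over both. It is a stdlib-only teaching sketch, not the authors' implementation: the names `mtry` and `ntree` borrow the argument names of R's randomForest package, and the functions, the depth limit, and the validation scheme are our own assumptions.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity 1 - sum(p_k^2) of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def best_split(X, y, candidates):
    """Return the (feature, threshold) minimising size-weighted child Gini, or None."""
    best, best_score = None, float("inf")
    for f in candidates:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(X, y, mtry, rng, depth=0, max_depth=10):
    """Grow one tree; at every node only `mtry` randomly chosen features are candidates."""
    if len(set(y)) == 1 or depth == max_depth:
        return majority(y)
    split = best_split(X, y, rng.sample(range(len(X[0])), mtry))
    if split is None:
        return majority(y)
    f, t = split
    left = [i for i, row in enumerate(X) if row[f] <= t]
    right = [i for i, row in enumerate(X) if row[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([X[i] for i in left], [y[i] for i in left], mtry, rng, depth + 1, max_depth),
        "right": build_tree([X[i] for i in right], [y[i] for i in right], mtry, rng, depth + 1, max_depth),
    }


def predict_tree(node, row):
    """Walk from the root to a leaf; leaves are plain class labels."""
    while isinstance(node, dict):
        node = node["left"] if row[node["feature"]] <= node["threshold"] else node["right"]
    return node


def fit_forest(X, y, ntree, mtry, seed=0):
    """Train `ntree` trees, each on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    forest = []
    for _ in range(ntree):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], mtry, rng))
    return forest


def predict_forest(forest, row):
    """Majority vote over all trees."""
    return majority([predict_tree(tree, row) for tree in forest])


def accuracy(forest, X, y):
    return sum(predict_forest(forest, row) == lab for row, lab in zip(X, y)) / len(y)


def grid_search(X_tr, y_tr, X_val, y_val, mtry_grid, ntree_grid):
    """Return (best_validation_accuracy, mtry, ntree) over the given grids."""
    best = None
    for mtry in mtry_grid:
        for ntree in ntree_grid:
            acc = accuracy(fit_forest(X_tr, y_tr, ntree, mtry), X_val, y_val)
            if best is None or acc > best[0]:
                best = (acc, mtry, ntree)
    return best
```

For example, with toy data `X = [[float(i), float(i % 3)] for i in range(20)]` and `y = [int(i >= 10) for i in range(20)]`, calling `grid_search(X[::2], y[::2], X[1::2], y[1::2], [1, 2], [5, 25])` returns the best validation accuracy together with the `mtry` and `ntree` that achieved it. A single holdout split keeps the sketch short; k-fold cross-validation would give a less noisy estimate.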

2. Motivation

3. Literature Review

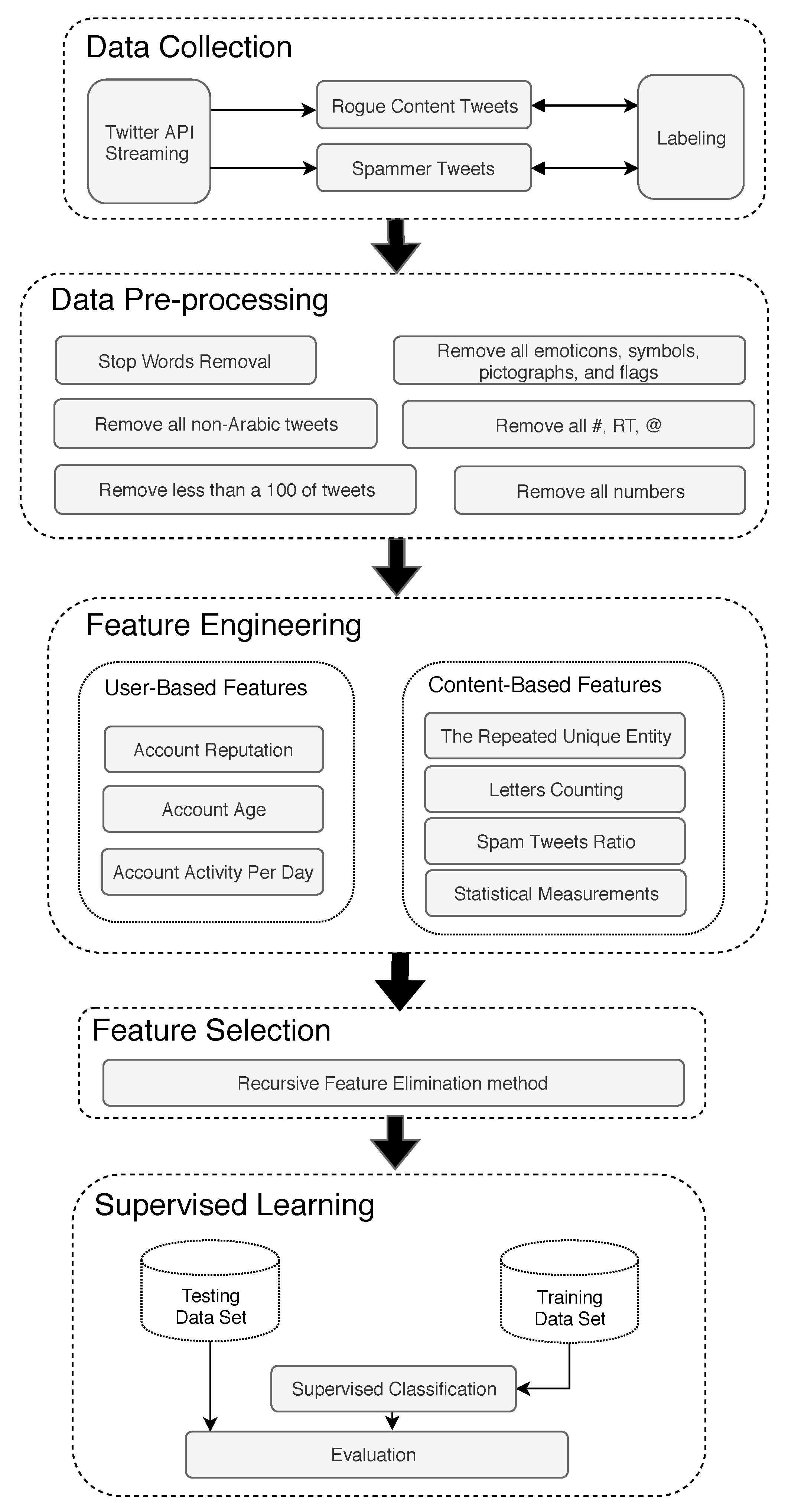

4. Proposed Solution

5. Data Collection

5.1. Constructing the Data Set

5.2. Labeling

6. Data Pre-processing

- All Twitter-specific markers, such as “#” (hashtag symbol), “RT” (retweet indicator), and “@” (mention symbol), as well as double white spaces, were removed from users' tweets in order to work with clean text.

- All the numbers were removed [1].

- We removed all stop words, i.e., frequent words that carry little meaning, such as “a”, “an”, and “the”; this is a common preprocessing step in text classification.

- All emoticons, symbols, pictographs, and flags in the text were removed.

- All tweets containing non-Arabic characters were removed. Some users tweet or retweet in other languages (e.g., English), whereas our detection system focuses only on Arabic tweets. This filter removed 62.09% of the tweets.

- Accounts with fewer than 100 tweets were removed because, for these accounts, our data collection process captured fewer tweets than the targeted number, and fewer tweets provide less information for building the classification model. There were 52,788 such accounts; removing them discarded around 46.40% of the total tweets.
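The cleaning and filtering steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: the stop-word list, the digit and Unicode ranges, the function names (`clean_tweet`, `is_arabic_only`, `preprocess_accounts`), and the choice to apply the 100-tweet threshold after the language filter are all our own. Only the standard library (`re`, `unicodedata`) is used.

```python
import re
import unicodedata

# Hypothetical stop-word list for illustration: the English examples from the
# text plus a few common Arabic function words. A real list would be far larger.
STOP_WORDS = {"a", "an", "the", "في", "من", "على"}
MIN_TWEETS = 100  # accounts with fewer tweets are dropped (threshold from the text)

TWITTER_MARKERS = re.compile(r"\bRT\b|[#@]")             # retweet flag, hashtag and mention symbols
DIGITS = re.compile(r"[0-9\u0660-\u0669\u06F0-\u06F9]")  # Western and Arabic-Indic digits (assumed ranges)
VARIATION_SELECTORS = {"\ufe0e", "\ufe0f"}               # invisible emoji presentation modifiers


def clean_tweet(text):
    """Apply the text-cleaning steps to a single tweet."""
    text = TWITTER_MARKERS.sub(" ", text)
    text = DIGITS.sub(" ", text)
    # Drop emoji, pictographs, flags and other symbols (Unicode categories S*),
    # plus control/format characters such as zero-width joiners (C*), keeping whitespace.
    text = "".join(
        ch for ch in text
        if ch.isspace()
        or not (unicodedata.category(ch)[0] in "SC" or ch in VARIATION_SELECTORS)
    )
    # Splitting and re-joining also collapses repeated white space.
    return " ".join(tok for tok in text.split() if tok.lower() not in STOP_WORDS)


def is_arabic_only(text):
    """True if the text has at least one letter and every letter lies in the
    basic Arabic block U+0600-U+06FF (block choice is our assumption)."""
    letters = [ch for ch in text if ch.isalpha()]
    return bool(letters) and all("\u0600" <= ch <= "\u06ff" for ch in letters)


def preprocess_accounts(tweets_by_account):
    """Clean every tweet, keep Arabic-only tweets, then drop small accounts.

    Assumes the 100-tweet threshold is applied after the language filter.
    """
    kept = {}
    for account, tweets in tweets_by_account.items():
        cleaned = [clean_tweet(t) for t in tweets]
        arabic = [t for t in cleaned if is_arabic_only(t)]
        if len(arabic) >= MIN_TWEETS:
            kept[account] = arabic
    return kept
```

For instance, `clean_tweet("RT #مرحبا 2019 في العالم 😀")` yields `"مرحبا العالم"`. The order of the steps matters: Latin markers such as “RT” are stripped before the language check, otherwise every retweet would be discarded as non-Arabic.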

7. Feature Engineering

7.1. User-Based Features

7.1.1. Account Reputation

7.1.2. Account Age

7.1.3. Account Activity Per Day

7.2. Content-Based Features

7.2.1. The Repeated Unique Entity

7.2.2. Letter Counting

7.2.3. Spam Tweet Ratio

7.2.4. Statistical Measurements

8. Feature Analysis

8.1. Feature Ranking

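- Each time a feature is used to split the data at a node, the Gini index is calculated at the parent node and at both child nodes. The Gini index measures impurity: it is 0 when all samples at a node belong to a single class and rises toward its maximum of 1 - 1/K for K classes (0.5 for two classes) as the classes become evenly mixed.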

- The difference between the Gini index of the parent node and the size-weighted Gini index of its child nodes is computed and normalized for that feature.

- A split that yields “purer” child nodes, i.e., nodes dominated by a single class, separates the data more easily and efficiently. The higher the purity of the resulting nodes, the larger the mean reduction in the Gini index.

- Therefore, the mean decrease in the Gini index is the highest for the most important feature.

- Such features are useful for classifying data: when used at a node, they are likely to split the data into pure, single-class nodes, so they tend to be chosen first during splitting.

- Finally, each feature's overall mean decrease in Gini importance is obtained by summing the Gini decreases of all splits on that feature across all trees, weighting each split by the proportion of samples it divides, and averaging over the trees.
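The ranking procedure above can be sketched in a few lines. For a node t with class proportions p_k, the Gini index is G(t) = 1 - Σ p_k²; a split of t into children t_L and t_R reduces impurity by ΔG = G(t) - (n_L/n_t)·G(t_L) - (n_R/n_t)·G(t_R); a feature's mean decrease in Gini sums (n_t/N)·ΔG over all splits on that feature and averages over trees. The Python below is an illustrative sketch of that standard definition, not the authors' code: it assumes each tree's splits have already been recorded as (feature, parent counts, left counts, right counts) tuples, and the feature names in the example are hypothetical. Some libraries (e.g., scikit-learn) additionally normalize the scores so they sum to 1.

```python
from collections import defaultdict


def gini(counts):
    """Gini impurity 1 - sum(p_k^2) of a node, given its per-class sample counts."""
    n = sum(counts)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in counts)


def gini_decrease(parent, left, right):
    """Parent Gini minus the size-weighted Gini of the two child nodes."""
    n = sum(parent)
    weighted_children = (sum(left) / n) * gini(left) + (sum(right) / n) * gini(right)
    return gini(parent) - weighted_children


def mean_decrease_gini(forest, n_samples):
    """Mean decrease in Gini per feature.

    `forest` is a list of trees; each tree is a list of recorded splits
    (feature, parent_counts, left_counts, right_counts). Each split's decrease
    is weighted by the fraction of the n_samples training samples reaching the
    node, summed per feature, and averaged over the trees.
    """
    totals = defaultdict(float)
    for tree in forest:
        for feature, parent, left, right in tree:
            totals[feature] += (sum(parent) / n_samples) * gini_decrease(parent, left, right)
    return {feature: total / len(forest) for feature, total in totals.items()}


def rank_features(importances):
    """Feature names sorted from most to least important."""
    return sorted(importances, key=importances.get, reverse=True)
```

As a usage example, a two-tree forest over 10 samples where one tree splits [5, 5] into [5, 0] and [0, 5] on a hypothetical "reputation" feature, and the other splits [5, 5] into [4, 1] and [1, 4] on a hypothetical "age" feature, gives reputation a score of 0.25 and age about 0.09, so reputation ranks first: the perfectly pure split earns the larger decrease.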

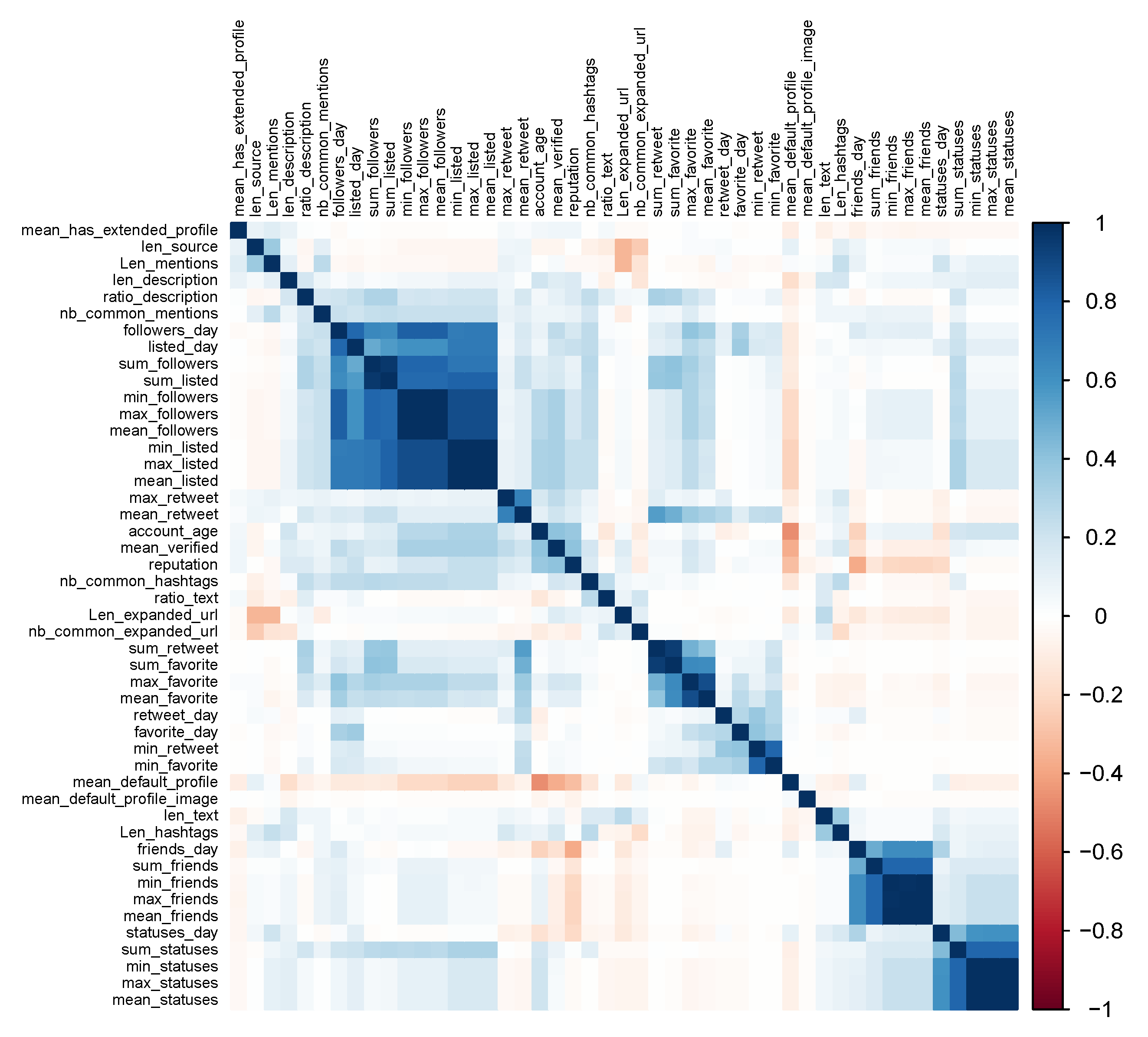

8.2. Feature Relationship

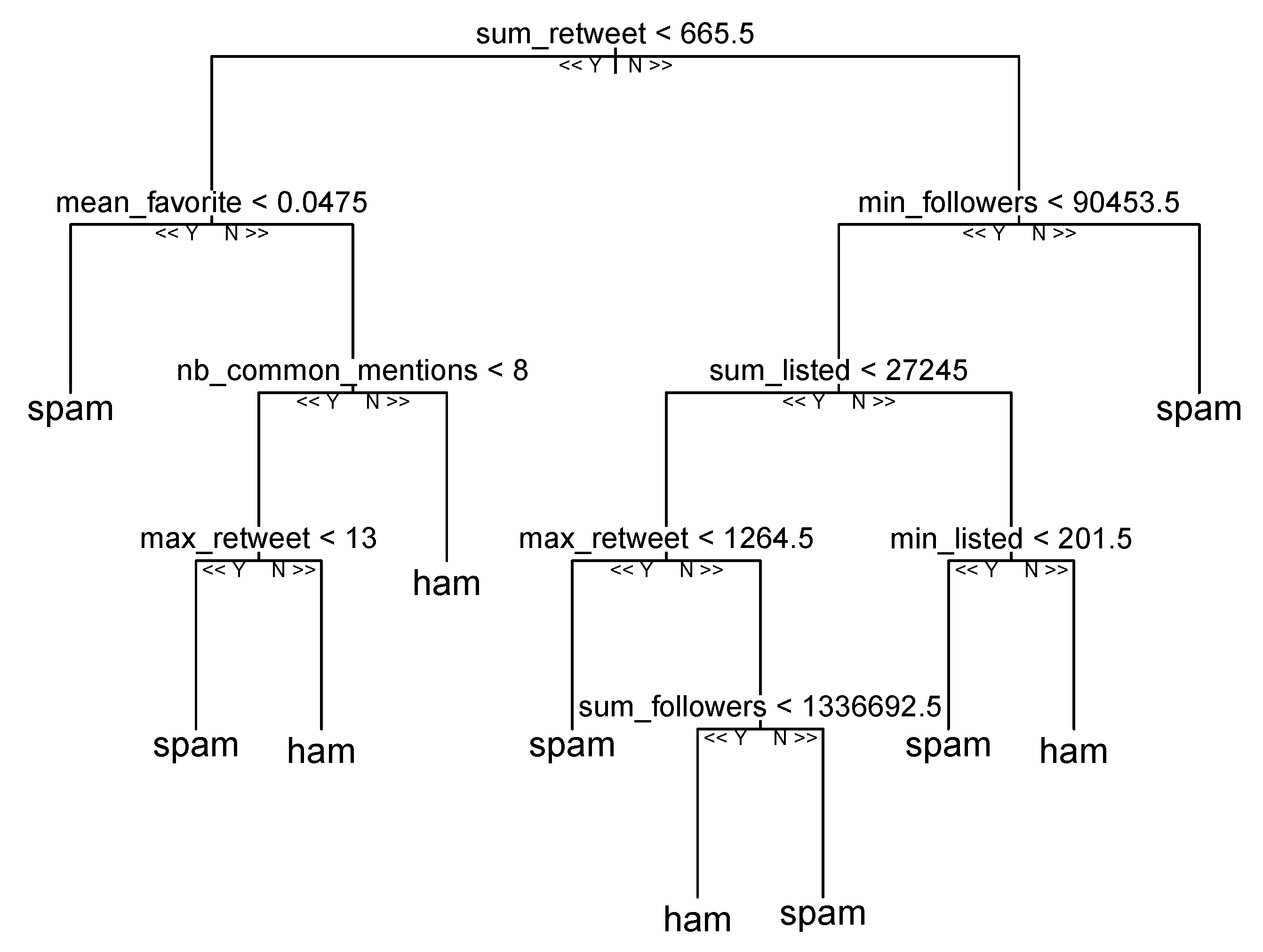

9. Supervised Classification

10. Experimental Results

10.1. Feature Selection Results

10.2. Tuning Classification Algorithm Parameters

10.2.1. Random Search

10.2.2. Grid Search

10.2.3. Search by the Number of Trees

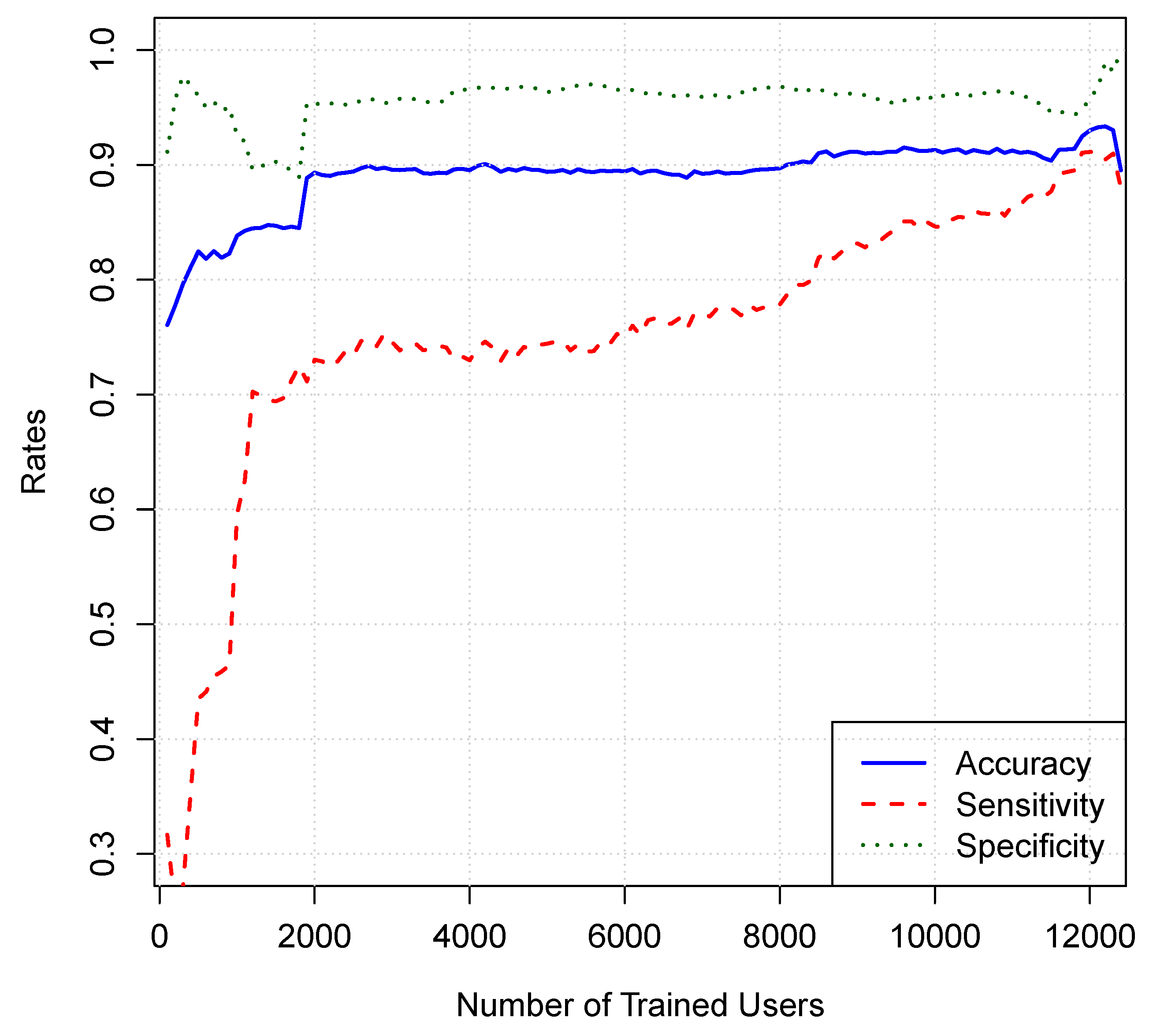

10.3. Performance Results for Different Numbers of Users

11. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Abozinadah, E.A.; Mbaziira, A.V.; Jones, J. Detection of abusive accounts with Arabic tweets. Int. J. Knowl. Eng.-IACSIT 2015, 1, 113–119.

- El-Mawass, N.; Alaboodi, S. Detecting Arabic spammers and content polluters on Twitter. In Proceedings of the 2016 Sixth International Conference on Digital Information Processing and Communications (ICDIPC), Beirut, Lebanon, 21–23 April 2016; pp. 53–58.

- Abdurabb, K. Saudi Arabia has highest number of active Twitter users in the Arab world. Arab News, 27 June 2014.

- Mari, M. Twitter usage is booming in Saudi Arabia. GlobalWebIndex (Blog) 2013, 20. Available online: https://blog.globalwebindex.com/chart-of-the-day/twitter-usage-is-booming-in-saudi-arabia/ (accessed on 29 October 2019).

- Benevenuto, F.; Magno, G.; Rodrigues, T.; Almeida, V. Detecting spammers on twitter. In Collaboration, Electronic Messaging, Anti-Abuse and Spam Conference (CEAS); CiteSeerX: Princeton, NJ, USA, 2010; Volume 6, p. 12.

- McCord, M.; Chuah, M. Spam detection on twitter using traditional classifiers. In International Conference on Autonomic and Trusted Computing; Springer: Berlin, Germany, 2011; pp. 175–186.

- Wang, A.H. Don’t follow me: Spam detection in twitter. In Proceedings of the 2010 International Conference on Security and Cryptography (SECRYPT), Athens, Greece, 26–28 July 2010; pp. 1–10.

- Alhumoud, S.O.; Altuwaijri, M.I.; Albuhairi, T.M.; Alohaideb, W.M. Survey on arabic sentiment analysis in twitter. Int. Sci. Index 2015, 9, 364–368.

- Chaabane, A.; Chen, T.; Cunche, M.; De Cristofaro, E.; Friedman, A.; Kaafar, M.A. Censorship in the wild: Analyzing Internet filtering in Syria. In Proceedings of the 2014 Conference on Internet Measurement Conference, Vancouver, BC, Canada, 5–7 November 2014; ACM: New York, NY, USA, 2014; pp. 285–298.

- El-Mawass, N.; Alaboodi, S. Data Quality Challenges in Social Spam Research. J. Data Inf. Qual. 2017, 9, 4.

- Najafabadi, M.M.; Domanski, R.J. Hacktivism and distributed hashtag spoiling on Twitter: Tales of the IranTalks. First Monday 2018, 23.

- Ameen, A.K.; Kaya, B. Detecting spammers in twitter network. Int. J. Appl. Math. Electron. Comput. 2017, 5, 71–75.

- Al-Rubaiee, H.; Qiu, R.; Alomar, K.; Li, D. Sentiment analysis of Arabic tweets in e-learning. J. Comput. Sci. 2016, 11, 553–563.

- Sriram, B.; Fuhry, D.; Demir, E.; Ferhatosmanoglu, H.; Demirbas, M. Short text classification in twitter to improve information filtering. In Proceedings of the 33rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Geneva, Switzerland, 19–23 July 2010; ACM: New York, NY, USA, 2010; pp. 841–842.

- Mubarak, H.; Darwish, K.; Magdy, W. Abusive language detection on Arabic social media. In Proceedings of the First Workshop on Abusive Language Online, Vancouver, BC, Canada, 4–7 August 2017; pp. 52–56.

- Alshehri, A.; Nagoudi, A.; Hassan, A.; Abdul-Mageed, M. Think before your click: Data and models for adult content in arabic twitter. In Proceedings of the 2nd Text Analytics for Cybersecurity and Online Safety (TA-COS-2018); 2018. Available online: https://pdfs.semanticscholar.org/0515/b46e219b2ea6e7f843e42e79ed2cf5591b61.pdf (accessed on 29 October 2019).

- Al-Eidan, R.M.B.; Al-Khalifa, H.S.; Al-Salman, A.S. Measuring the credibility of Arabic text content in Twitter. In Proceedings of the 2010 Fifth International Conference on Digital Information Management (ICDIM), Thunder Bay, ON, Canada, 5–8 July 2010; pp. 285–291.

- Rsheed, N.A.; Khan, M.B. Predicting the popularity of trending arabic news on twitter. In Proceedings of the 6th International Conference on Management of Emergent Digital EcoSystems, Buraidah, Al Qassim, Saudi Arabia, 15–17 September 2014; pp. 15–19.

- Vijayarani, S.; Janani, R. Text mining: Open source tokenization tools—An analysis. Adv. Comput. Intell. 2016, 3, 37–47.

- Perera, R.D.; Anand, S.; Subbalakshmi, K.; Chandramouli, R. Twitter analytics: Architecture, tools and analysis. In Proceedings of the 2010-MILCOM 2010 Military Communications Conference, San Jose, CA, USA, 31 October–3 November 2010; pp. 2186–2191.

- Haidar, B.; Chamoun, M.; Serhrouchni, A. A multilingual system for cyberbullying detection: Arabic content detection using machine learning. Adv. Sci. Technol. Eng. Syst. J. 2017, 2, 275–284.

- Abbasi, A.; Chen, H. Applying authorship analysis to Arabic web content. In International Conference on Intelligence and Security Informatics; Springer: Berlin, Germany, 2005; pp. 183–197.

- Al-Shammari, E.T.; Lin, J. Towards an error-free Arabic stemming. In Proceedings of the 2nd ACM Workshop on Improving Non English Web Searching, Napa Valley, CA, USA, 30 October 2008; pp. 9–16.

- Xie, X.; Ho, J.W.; Murphy, C.; Kaiser, G.; Xu, B.; Chen, T.Y. Testing and validating machine learning classifiers by metamorphic testing. J. Syst. Softw. 2011, 84, 544–558.

- Sandri, M.; Zuccolotto, P. A bias correction algorithm for the Gini variable importance measure in classification trees. J. Comput. Graph. Stat. 2008, 17, 611–628.

- Louppe, G.; Wehenkel, L.; Sutera, A.; Geurts, P. Understanding variable importances in forests of randomized trees. In Proceedings of the 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; pp. 431–439.

- Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In IJCAI 1995, 14, 1137–1145.

- Sedgwick, P. Pearson’s correlation coefficient. BMJ 2012, 345, e4483.

- Chen, P.Y.; Smithson, M.; Popovich, P.M. Correlation: Parametric and Nonparametric Measures; No. 139; Sage: New York, NY, USA, 2002.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Wainberg, M.; Alipanahi, B.; Frey, B.J. Are random forests truly the best classifiers? J. Mach. Learn. Res. 2016, 17, 3837–3841.

- Brownlee, J. Machine Learning Mastery. Available online: http://machinelearningmastery.com/discover-feature-engineering-howtoengineer-features-and-how-to-getgood-at-it (accessed on 29 October 2019).

- Kuhn, M. Building predictive models in R using the caret package. J. Stat. Softw. 2008, 28, 1–26.

- Sokolova, M.; Japkowicz, N.; Szpakowicz, S. Beyond accuracy, F-score and ROC: A family of discriminant measures for performance evaluation. In Australasian Joint Conference on Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1015–1021.

- Granitto, P.M.; Furlanello, C.; Biasioli, F.; Gasperi, F. Recursive feature elimination with random forest for PTR-MS analysis of agroindustrial products. Chemom. Intell. Lab. Syst. 2006, 83, 83–90.

- Robnik-Šikonja, M. Improving random forests. In European Conference on Machine Learning; Springer: Berlin/Heidelberg, Germany, 2004; pp. 359–370.

- Sonobe, R.; Tani, H.; Wang, X.; Kobayashi, N.; Shimamura, H. Parameter tuning in the support vector machine and random forest and their performances in cross-and same-year crop classification using TerraSAR-X. Int. J. Remote. Sens. 2014, 35, 7898–7909.

- Jamal, N.; Xianqiao, C.; Aldabbas, H. Deep Learning-Based Sentimental Analysis for Large-Scale Imbalanced Twitter Data. Future Internet 2019, 11, 190.

- Imam, N.; Issac, B.; Jacob, S.M. A Semi-Supervised Learning Approach for Tackling Twitter Spam Drift. Int. J. Comput. Intell. Appl. 2019, 18, 1950010.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Alharbi, A.R.; Aljaedi, A. Predicting Rogue Content and Arabic Spammers on Twitter. Future Internet 2019, 11, 229. https://doi.org/10.3390/fi11110229
