Simple and Efficient Computational Intelligence Strategies for Effective Collaborative Decisions

Abstract

1. Introduction

- (i) A novel tensor factorization strategy that adapts a Gaussian radial basis function to address the cold-start problem is proposed.

- (ii) A hybrid collaborative system is proposed that combines the speed of the multilayer perceptron with the simplicity and accuracy of tensor factorization, yielding a fast and accurate model that supports scalable processing of big data.

- (iii) The novelty lies in the joint training of the proposed models, MLP and MTF, which promotes feature engineering and memorization that can serve as a basis for future learning through our tweaking strategy. We draw the reader's attention to the fact that our model is not an ensemble, in which each model is trained separately and the predictions are combined only at the final stage. Instead, we train a deep neural network over the user information together with the latent factors obtained from the user matrix via tensor factorization, which we regard as the innovative step.
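As a rough illustration of contribution (iii), the sketch below shows a forward pass in which a Gaussian radial basis branch and a multilayer perceptron branch read the same latent factors and are fused into a single output, rather than being combined as separately trained predictors. It is a minimal sketch under stated assumptions, not the authors' implementation: the matrix sizes, the names `P`, `Q`, `alpha`, `sigma`, the single hidden layer, and the sigmoid fusion are all hypothetical choices made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 5 users, 7 items, 4 latent factors, 8 hidden units.
n_users, n_items, k, hidden = 5, 7, 4, 8

# Latent factors shared by both branches (assumed to come from a factorization step).
P = rng.normal(scale=0.1, size=(n_users, k))  # user factors
Q = rng.normal(scale=0.1, size=(n_items, k))  # item factors

# Hypothetical parameters of the perceptron branch and the fusion weight.
params = {
    "W1": rng.normal(scale=0.1, size=(hidden, 2 * k)),
    "b1": np.zeros(hidden),
    "w2": rng.normal(scale=0.1, size=hidden),
    "b2": 0.0,
    "alpha": 1.0,
}


def gaussian_rbf_score(p, q, sigma=1.0):
    """Gaussian radial basis similarity between two latent vectors."""
    return float(np.exp(-np.sum((p - q) ** 2) / (2.0 * sigma ** 2)))


def mlp_score(p, q, prm):
    """One-hidden-layer perceptron over the concatenated latent vectors."""
    h = np.maximum(0.0, prm["W1"] @ np.concatenate([p, q]) + prm["b1"])  # ReLU
    return float(prm["w2"] @ h + prm["b2"])


def joint_prediction(u, i, prm, sigma=1.0):
    """Fuse both branches into one logit, then squash to (0, 1)."""
    p, q = P[u], Q[i]
    logit = prm["alpha"] * gaussian_rbf_score(p, q, sigma) + mlp_score(p, q, prm)
    return 1.0 / (1.0 + np.exp(-logit))


score = joint_prediction(0, 1, params)
```

Because both branches depend on the same `P` and `Q`, a single loss on `joint_prediction` would update the shared factors through both paths at once, which is the property that distinguishes joint training from an ensemble of independently trained models.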

2. Review of Related Works

3. Materials and Methods

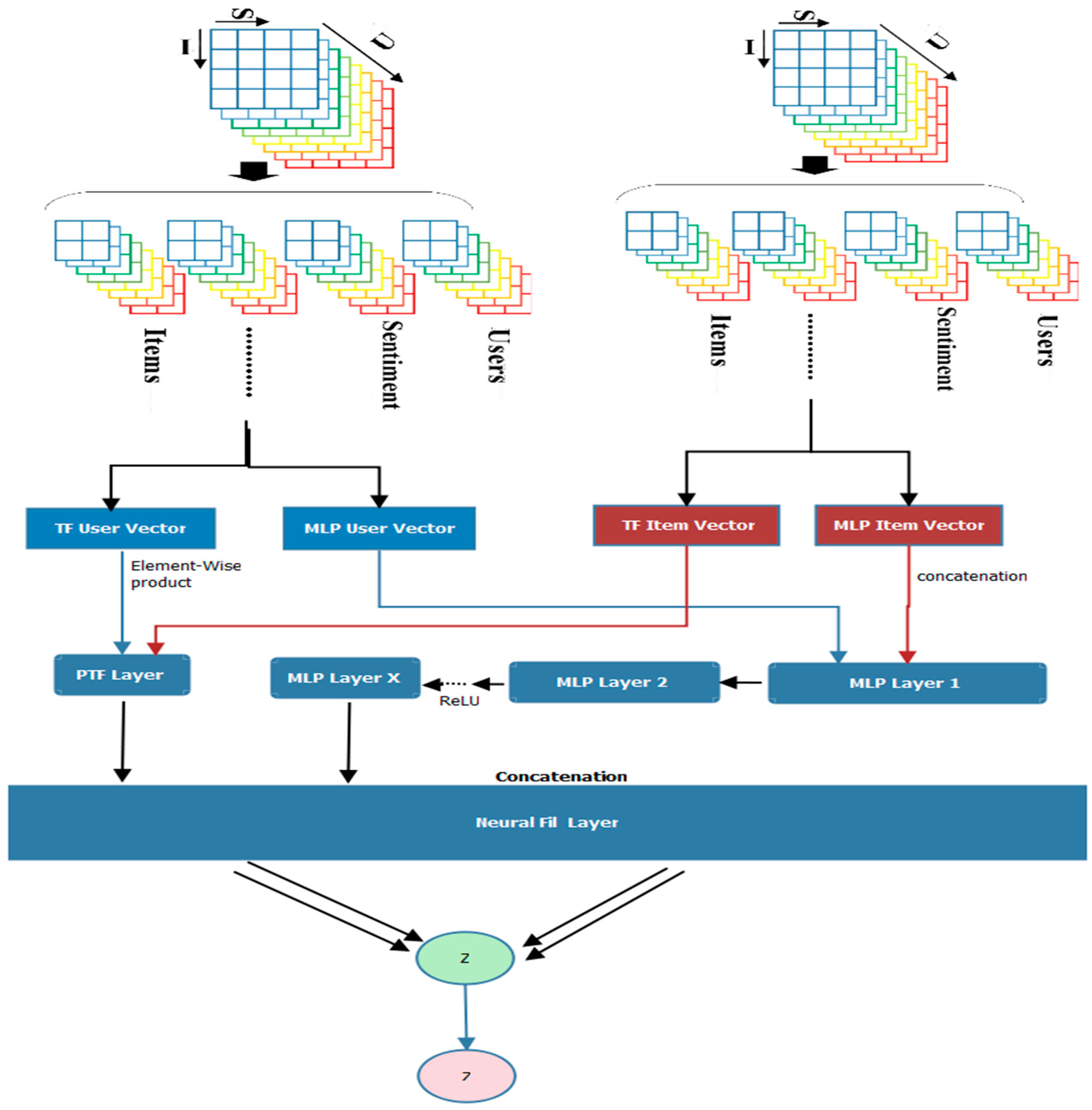

3.1. General Framework

3.2. Multi-Task Tensor Factorization

3.3. Proposed Deep Neural Filtering Model

3.4. Learning the Joint Model of Non-Linear Tensor Factorization and Multilayer Perceptron

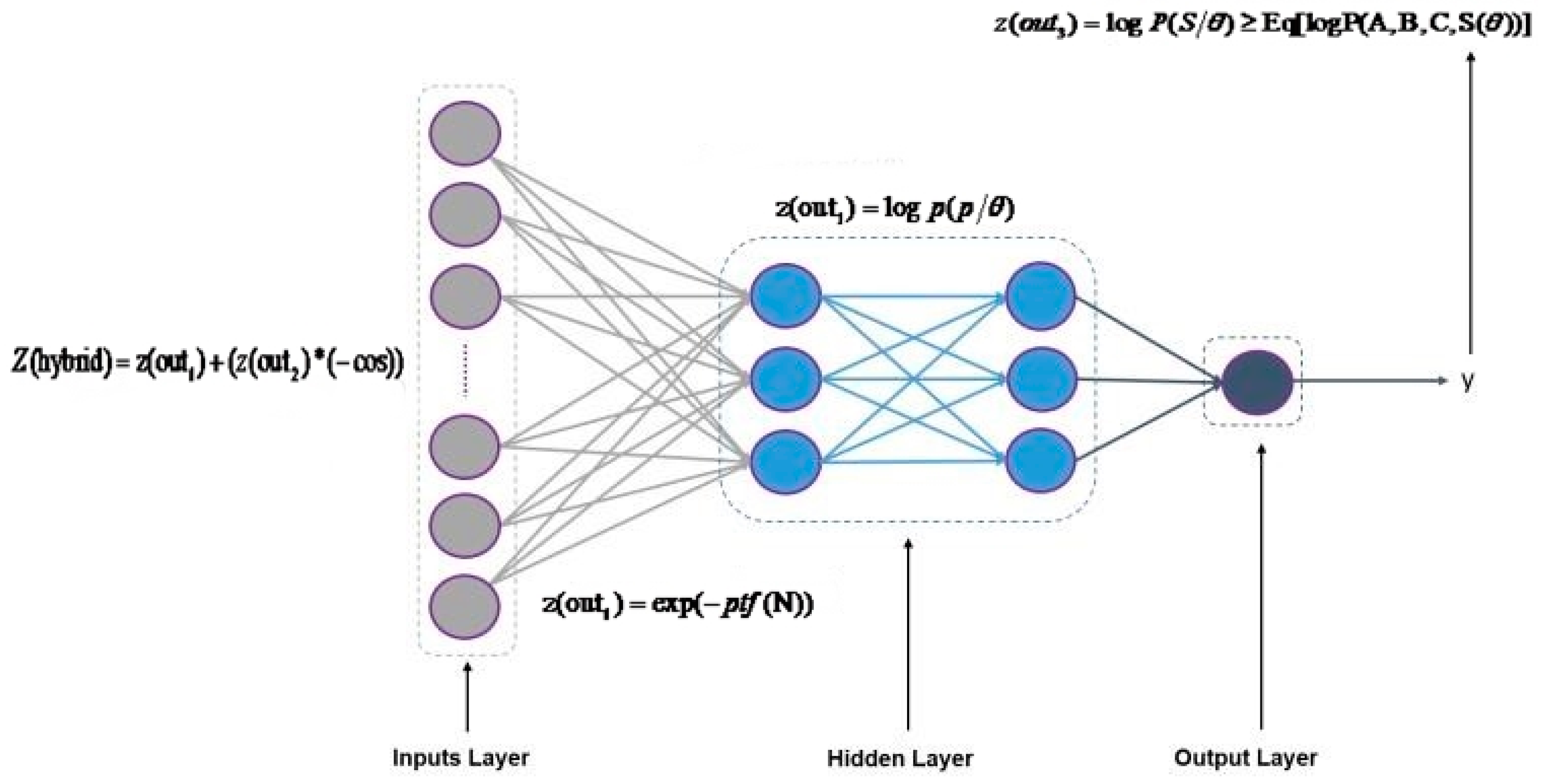

3.5. Proposed Deep Neural Prediction Model

4. Model Integration

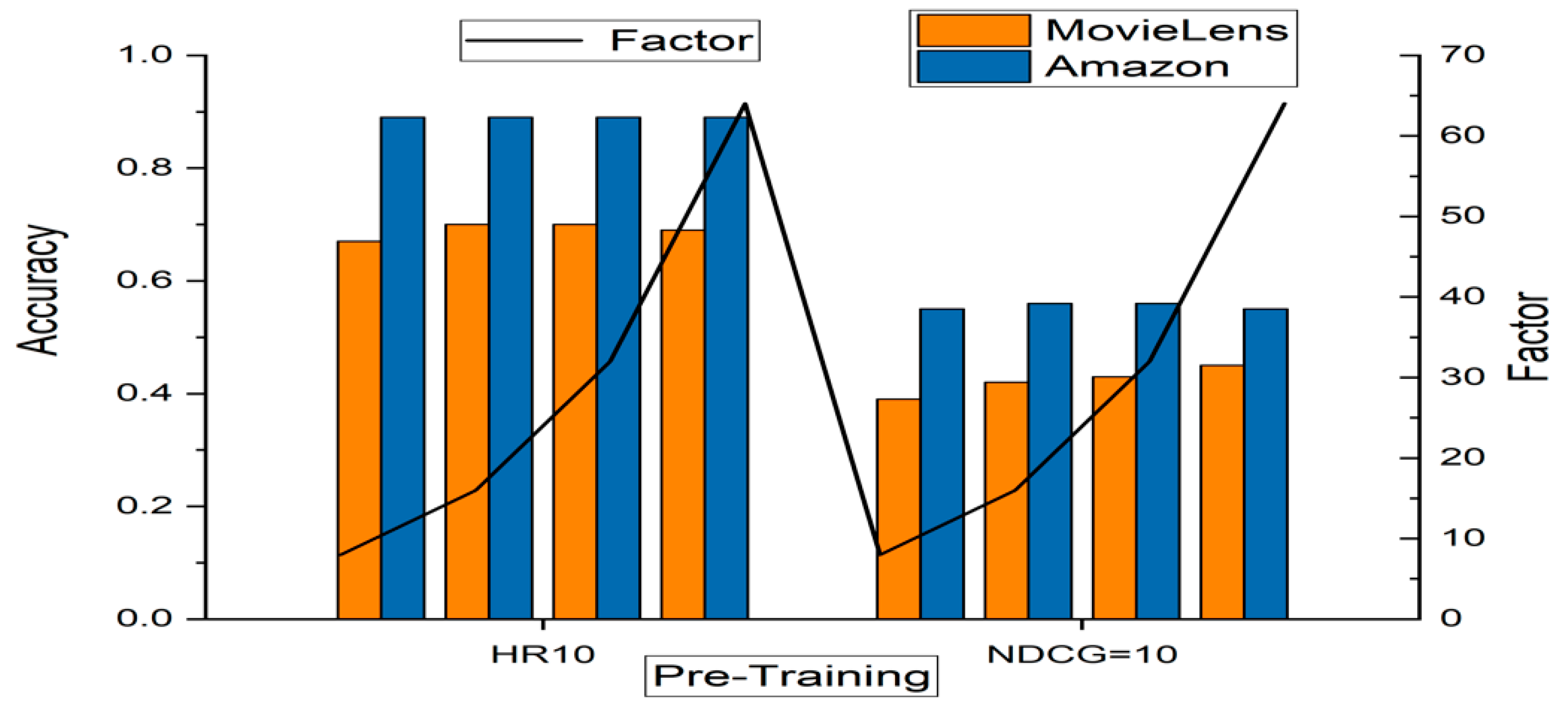

Pre-Training

5. Results

5.1. Data Preparation

5.2. Evaluation

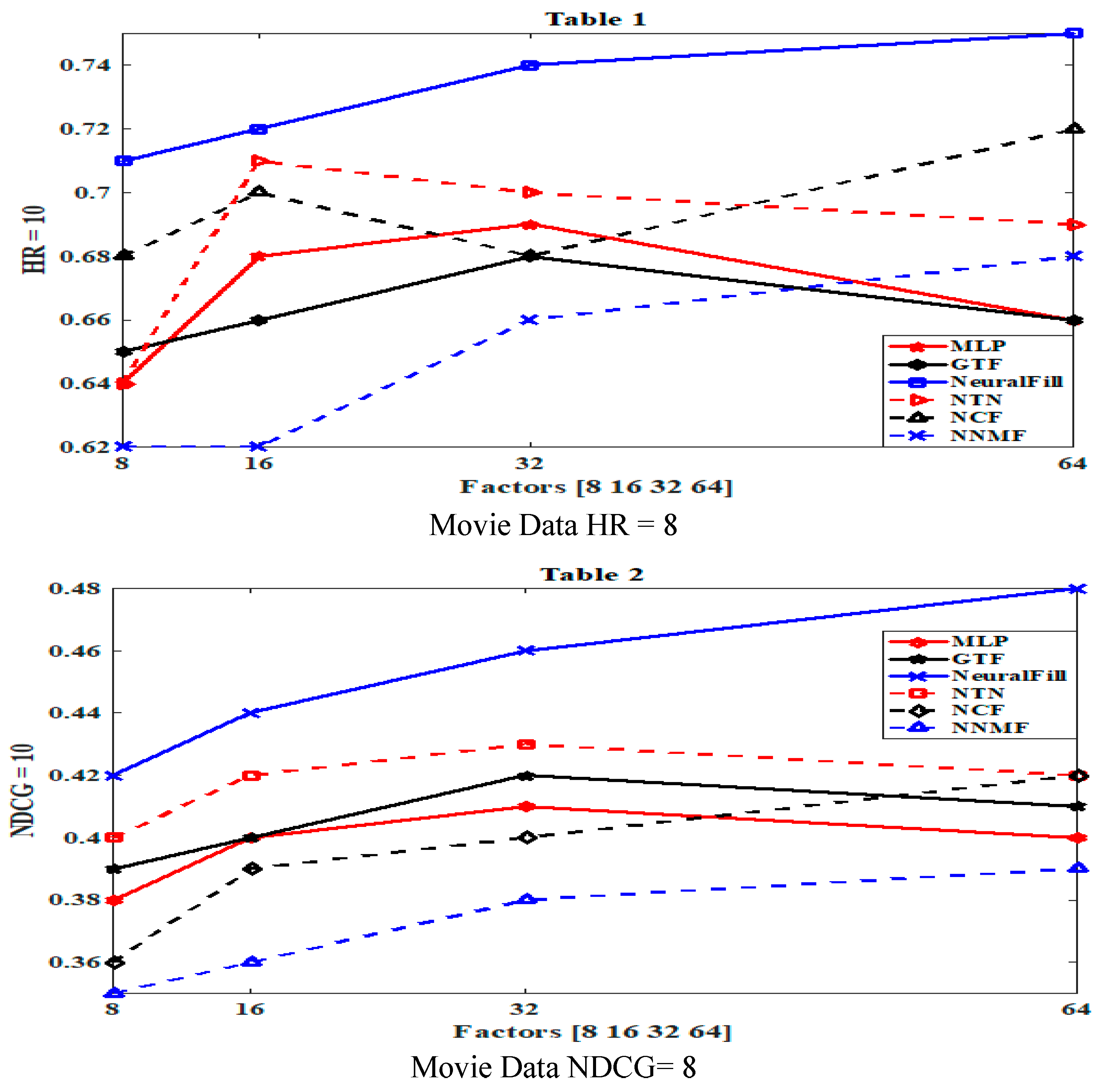

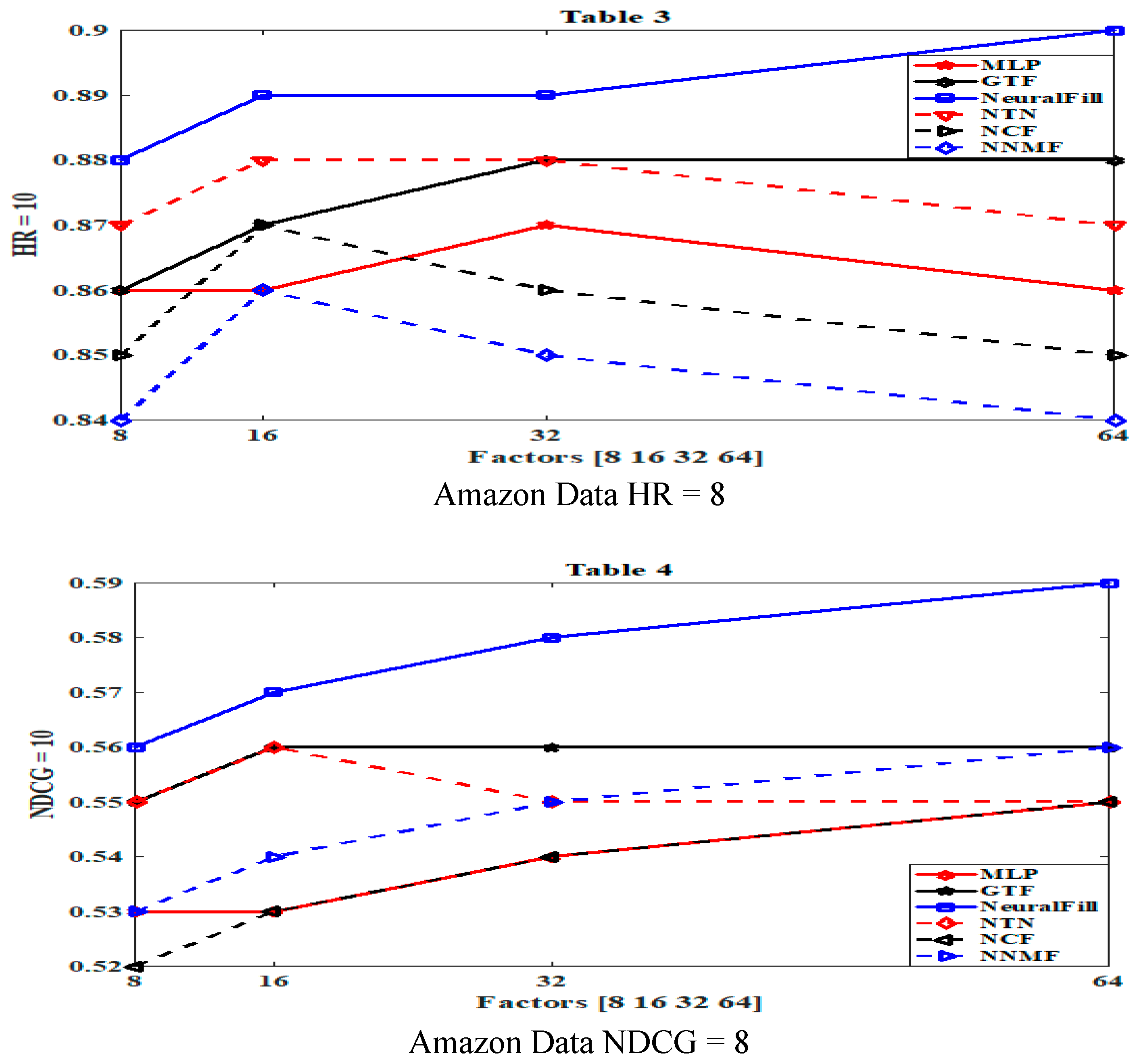

5.3. Efficiency of NeuralFil

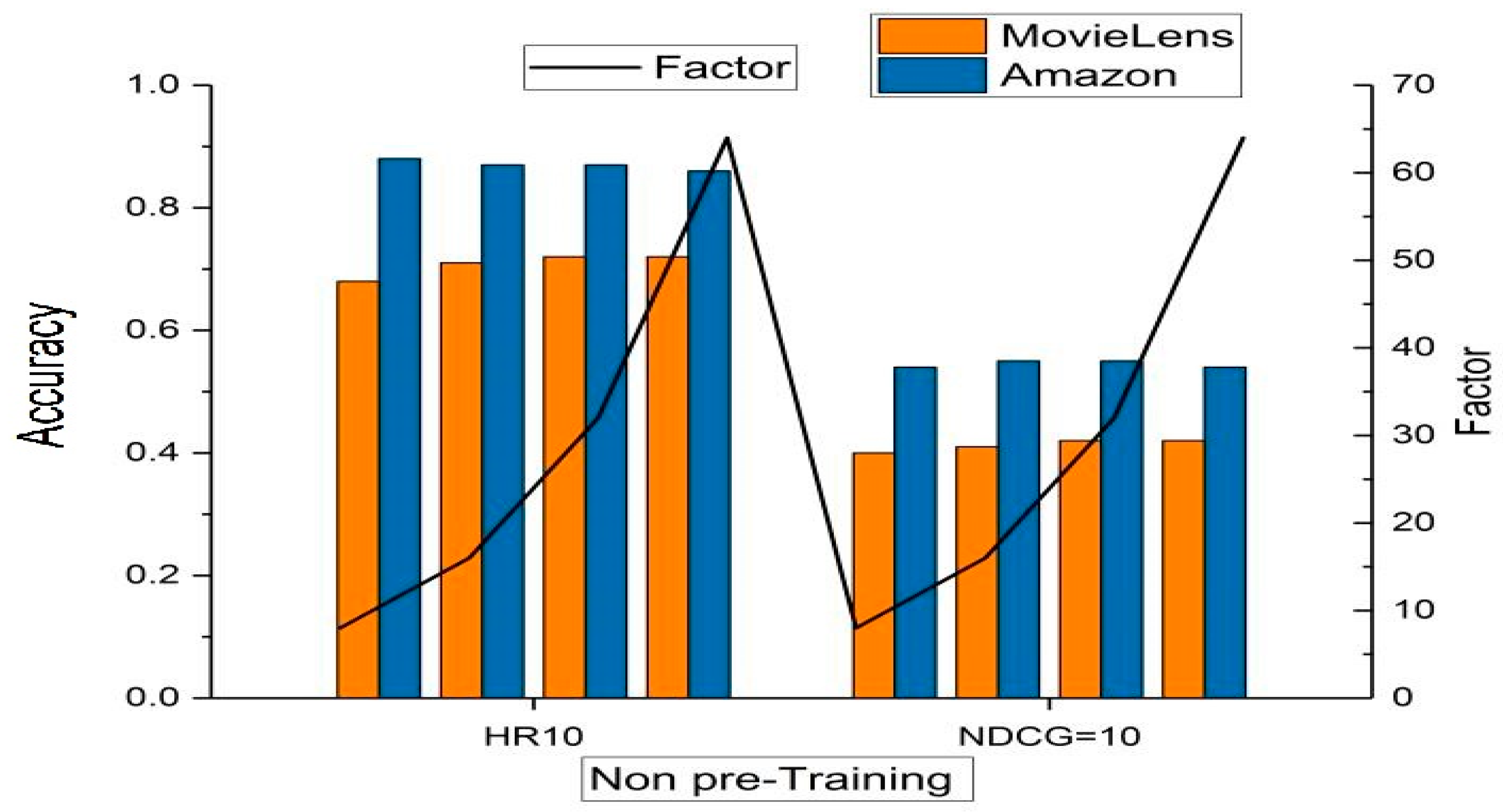

5.4. Pre-Training Strategy

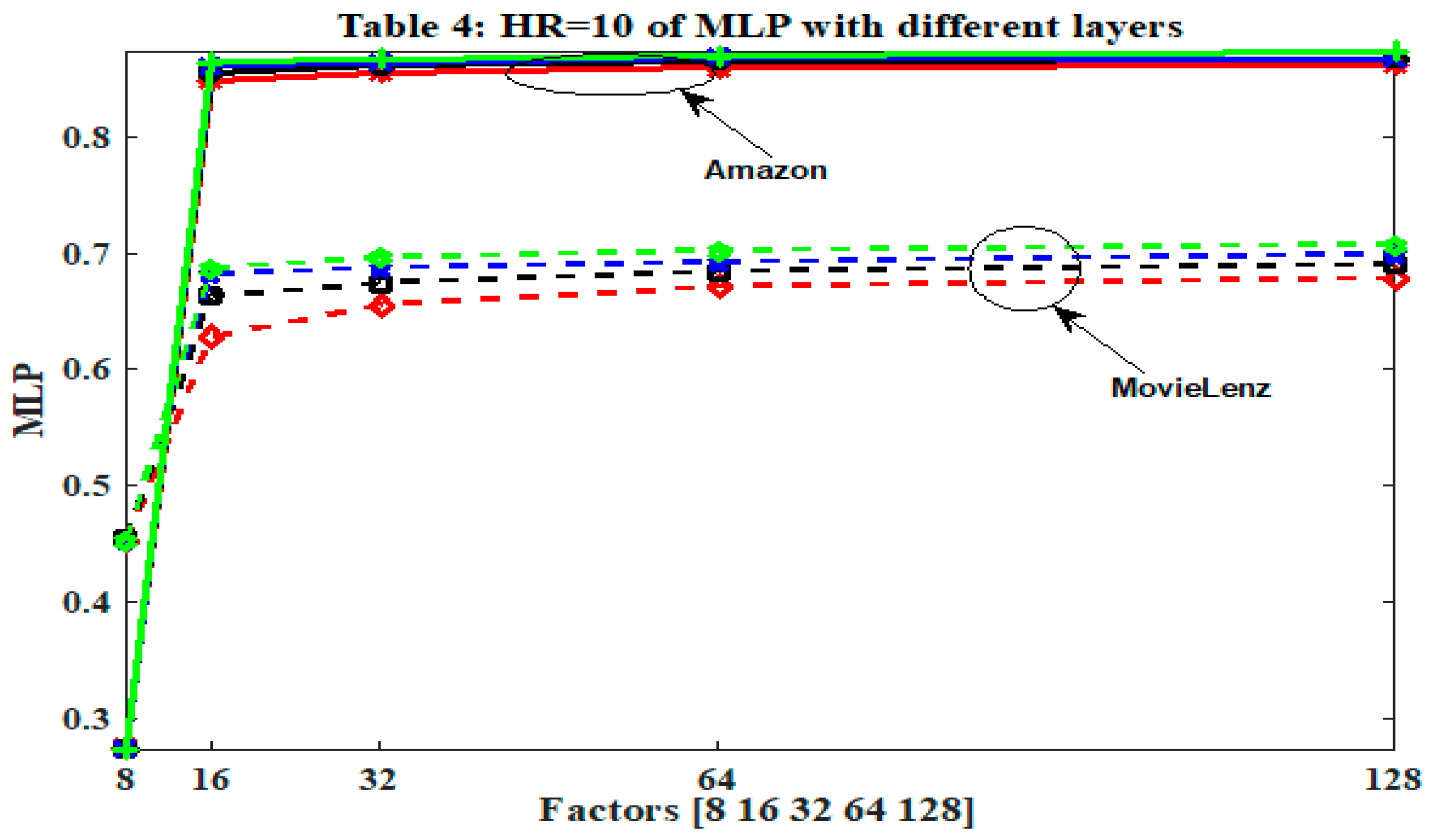

5.5. The Efficacy of DNN for Modelling User-Item Interaction

5.6. Computational Complexity Analysis

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Symbol | Description |
|---|---|
| U | User-tag |
| I | Item-tag matrix |
| R | Rating sentiments expressed |
| A | Tensor |
| R | Number of features in XYZ |
| C | Core tensor |
| | Rating history |
| PS | User-item pairs |
| X | Dimension layer |
| | Set of negativities |
| | Mapping of output layer |
| Z | Observed relationship in Z |
| | Training parameter |
| S | Tensor |
| | c-th neural filtering layer |
| | First input dimensional vector |
| | Second input dimensional vector |
| | Interaction function for the final phase |
| | Interaction function for deep neural filtering phase |
| | k-th characteristic of item i |

| Dataset | Interactions | Items | Users | Sparsity |
|---|---|---|---|---|
| MovieLens | 1,000,209 | 3706 | 6040 | 95.53% |
| Amazon | 1,500,809 | 9916 | 55,187 | 79.90% |
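The sparsity column can be related to the other columns with a short calculation. The sketch below assumes the common definition, sparsity = 1 − interactions / (users × items); the table itself does not state which definition was used, and the function name `sparsity` is introduced here only for illustration.

```python
def sparsity(interactions: int, users: int, items: int) -> float:
    """Fraction of empty cells in a users-by-items interaction matrix."""
    return 1.0 - interactions / (users * items)


# MovieLens row of the table above.
movielens = sparsity(1_000_209, users=6040, items=3706)
print(f"MovieLens sparsity: {movielens:.2%}")  # prints "MovieLens sparsity: 95.53%"
```

Under this assumed definition the MovieLens counts reproduce the reported 95.53%. The Amazon counts give roughly 99.73% rather than the reported 79.90%, so that entry may rest on a different convention or preprocessing step that the table does not specify.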

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Opoku Aboagye, E.; Kumar, R. Simple and Efficient Computational Intelligence Strategies for Effective Collaborative Decisions. Future Internet 2019, 11, 24. https://doi.org/10.3390/fi11010024
