1. Introduction

With the arrival of the Big Data era, the amount of video data in modern society is growing at an unprecedented speed; in video surveillance in particular, the total amount of video data has exploded [1]. Data has become an essential resource, and how to better manage and use it is crucial. The scale effect of Big Data brings extraordinary challenges to data storage and data analysis.

Massive video data processing is a common problem in Big Data technology. As urban construction deepens, video surveillance technology in particular is receiving more and more attention, so studying how to store and retrieve video data effectively is of great theoretical and practical significance. Storage technology [2,3,4] is a vital issue in video surveillance systems, and cloud storage for video surveillance is a research hotspot. Researchers have done a lot of work and achieved some results, but challenges remain. In distributed systems, the number of replicas of a video file is defined manually [5,6]. The Hadoop Distributed File System (HDFS) has a default number of copies, and saving data according to this default requires massive storage space. This mechanism can effectively guarantee the security of video data, but in many application environments it cannot accurately reflect the security level of a video file, and the redundant data wastes storage resources. In addition, deduplication checks (i.e., the corresponding message exchange) create a side channel that exposes to an attacker whether a file exists; the random response (RARE) approach achieves stronger privacy and preserves both the deduplication benefit and privacy [7]. Moreover, when users play back video data to track a target's action path, predicting that path and adding the corresponding video data to the cache in advance is a significant question.

For this reason, this paper aims to optimize the replica strategy and the data cache strategy in cloud-based video surveillance systems. First, we propose a dynamic redundant replicas mechanism based on security levels, which dynamically adjusts the number of redundant replicas. Second, we design a more realistic cache mechanism based on the position and time correlation of front-end cameras. The following sections describe the two improvements in detail and validate them in actual applications.

The rest of this paper is organized as follows:

Section 2 describes several studies closely related to our research.

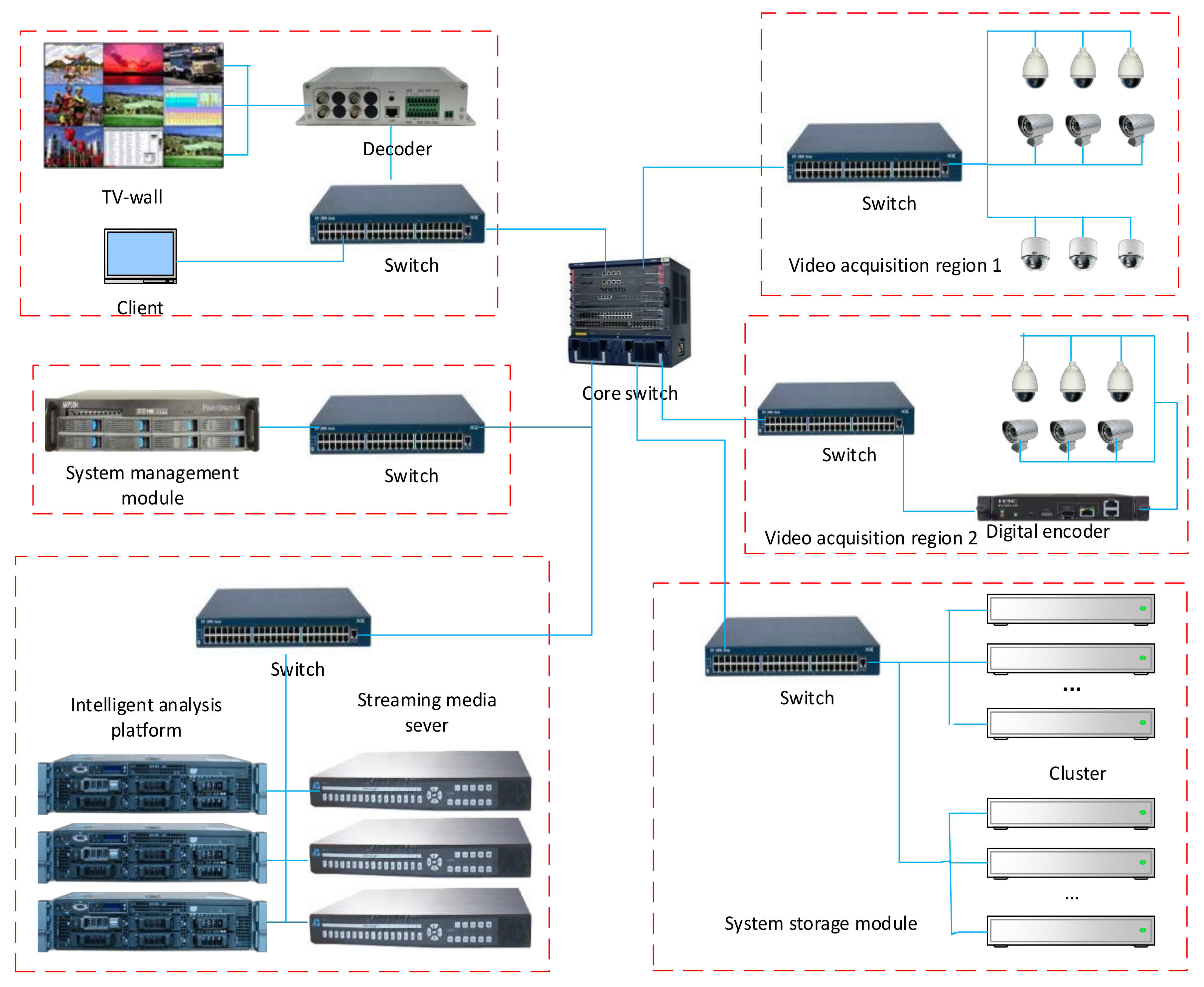

Section 3 introduces the architecture of the cloud-based video surveillance system.

Section 4 and

Section 5 describe the dynamic redundant replicas mechanism based on security levels and data caching strategy based on location correlation in details. Experimental verification and analysis can be found in

Section 6. Finally,

Section 7 concludes this paper and states future research options.

2. Related Work

With the development of cloud computing, researchers have proposed new system architectures based on cloud computing to meet the growing demands on video surveillance systems. Neal et al. [8] showed that cloud computing could serve as a new deployment solution for video surveillance systems. Karimaa [9] investigated the reliability of cloud-based video surveillance technology, but made no real breakthrough in data management. In [10], Lin et al. proposed a video surveillance system under the IaaS abstraction layer; the system is based on the Hadoop Distributed File System and provides scalable video recording and backup capabilities. M. Anwar Hossain analyzed the suitability of cloud-based multimedia surveillance systems and proposed a cloud-based multimedia surveillance system framework [11,12,13]. The system can efficiently handle system overload, meet the storage requirements of large-scale monitoring systems, and provide data access to users. Bao et al. proposed the Racki selection algorithm and the DNik selection algorithm to guarantee cluster load balance [14]. Both algorithms take cluster load balance fully into account when placing the replicas of data to be written into HDFS on suitable DataNodes. However, a great deal of unimportant data still occupies considerable storage space. Big data stream mobile computing (BDSMC) faces challenges in performing real-time, energy-efficient management of the distributed resources available at both mobile devices and Internet-connected data centers; a fundamental problem in coping with the variable volume of data generated by emerging BDSMC applications is the design of integrated computing-networking technological platforms [15]. Xie et al. [16] proposed a replica mechanism based on the computing capacity of each node in HDFS; however, inactive data in the system still consumes storage resources. Mauro Conti et al. [17] proposed a distributed Fog-supported IoE-based framework. Compared with existing methods, this approach substantially reduces energy consumption in the Fog Data Center and could be of practical interest in the emerging Fog of Everything (FoE) realm. Xiong et al. [18] implemented a hotness-proportional replication strategy (HP) to improve the efficiency of storage space. Wei et al. [19] presented a cost-effective, dynamic replication management scheme referred to as CDRM, which maintains the minimal replica number for a given availability requirement; however, they did not address the replica replacement issue. Najme [20] presented an adaptive data replication strategy (ADRS), including a new replica placement and replacement strategy, and their evaluation shows that ADRS can improve the performance of cloud storage. However, in a massive video surveillance storage system, the retention time of a video file also affects the replication factor, so this factor should be considered as well.

Niu et al. [21] proposed a multi-agent-based cache mechanism that automatically manages related video streams. They also designed a caching framework to implement cache interaction, cache activity control, and video stream migration; however, the framework is complex and costly. Rejaie and Kangasharju [22] used a prefetching mechanism to support higher-quality cached streaming during subsequent playback of hierarchically encoded video streams. However, when users play back video, they care more about the speed of data querying. Zhang L. et al. [23] presented a cost-effective cloud storage caching strategy referred to as CloudCache. They first used nearly free desktop machines in a local area network to build a local distributed file system, deployed as a data cache for a remote cloud storage service, and then presented a cache replacement algorithm and a file reading/writing algorithm. They built the experimental environment with Amazon Simple Storage Service (S3) and desktop PCs in the laboratory, and their evaluation shows that the strategy reduces cost and greatly improves file response speed. However, they did not address the cache size issue: users must define the cache size according to their needs.

4. Dynamic Redundant Replicas Mechanism Based on Security Levels

To ensure the security and storage time of video data segments, several replicas of each segment must be stored on different nodes. However, the security requirements for video files differ across situations, and even within the same scene they may differ between monitoring locations; for example, a checkout counter needs a high security level. In existing distributed surveillance systems [5,6], the replica mechanism is defined by users, who set the number of redundant replicas in advance. This mechanism can effectively guarantee the security of video data, but such a coarse-grained redundant replicas mechanism does not accurately reflect the security level of individual video files [26]. Keeping meaningless video data wastes storage resources, and a manually defined replica count may also lose critical data.

Based on the above analysis, we propose a dynamic redundant replicas mechanism based on video file security levels (SL-DRM). It keeps different numbers of redundant replicas for different security levels, and the security level is adjusted dynamically according to several influencing factors. In the current surveillance field, the main factors affecting the number of replicas of video data are the retention time, the user-defined setting, the access hit of the video file, and the intelligent video analysis result. Intelligent video analysis directly indicates whether a video file is worth saving, and when the video data is saved for the first time, the user-defined security level shows the importance of the data to the user. As the retention time grows, the security level must also take the retention time and the access hit into account. The access hit measures the importance of a video file to users, while storing redundant copies for a long time may consume a significant amount of storage space; hence, the retention time also has a considerable influence on the security level. The factors are not directly correlated with one another, but changes in each of them affect the security level of the video file.

Table 1 shows the notations that we will use.

Replica management strategies can be divided into static and dynamic strategies according to whether the number of copies and the placement of the replicas are fixed. Current business systems adopt the static replica strategy; for example, HDFS creates three copies of each file by default. The cost of data management increases with the number of replicas: too many copies may significantly enhance availability, but they also bring unnecessary consumption [20]. Hence, we take the default copy number of HDFS as the maximum number of copies and define four security levels for video files. As shown in Table 2, level 0 is the lowest security level and retains no redundant replica of the video file; level 1 saves only one redundant replica; levels 2 and 3 increase the number of redundant replicas in turn. Then, according to the factors that affect the security level of video files, the security level of each file is downgraded or upgraded through a set of formulas.

When calculating the security levels of video files, we mainly consider the following four factors:

Ix represents the user-specified security level. It is divided into three levels: lower, normal, and important. As shown in Table 3, 0 represents lower, 1 represents normal, and 2 represents important.

Tx denotes the effect of retention days on the video file's security level. As the number of days the video data has been stored increases, its security level gradually decreases; this factor is not considered when the video file is stored for the first time.

Hx denotes the access hit of the video file. The more frequently a video file is accessed, the more critical it is and the higher its security level.

Ax denotes the result of analyzing video file x using the method introduced in [27].

The intelligent video analysis algorithm used in this paper is the Edge Frame Difference and Gaussian Mixture Model algorithm (EFD-GMM) [27], an improved algorithm based on edge frame difference and the Gaussian Mixture Model. It models and detects moving objects in video data and helps to deal with noise, illumination changes, and false targets. For the result of the intelligent video analysis, if a target is detected in video file x, Ax = 1; otherwise, Ax = 0.

When a video is saved for the first time, there is no retention time, access hit, or other usage information. Hence, the initial security level of the video file is determined by the user-specified security level and the intelligent video analysis result, and we propose Formula (1) to calculate it. Here, Ax takes one of two values: 0 means the file has no value and 1 means it is valuable.

As the retention time of a video file grows and the number of accesses increases, its security level changes accordingly. First, we give Formula (2) to calculate the access hit of the video file. As shown in Formula (2), Hx increases as the number of visits increases; also, … .

Keeping multiple redundant replicas for a long time consumes a lot of storage. As the retention time increases, its effect on the security level grows, and the security level of the video file is downgraded. Hence, the retention time t and Tx are positively related. The effect of retention time on the security level is defined in Formula (3). We use a logarithmic relationship because the logarithm does not change the nature of the data or their correlation, but compresses the scale of the variable: for example, 800/200 = 4, but log 800/log 200 ≈ 1.2616. The data become more stable, and the collinearity and heteroscedasticity of the model are also weakened. The logarithmic relationship therefore reflects the impact of the factor on the variable well.
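The compression in the 800/200 example can be checked with a few lines of Python. This sketch only reproduces that arithmetic, not Formula (3) itself; the base of the logarithm does not matter for the ratio because of the change-of-base rule.

```python
import math

raw_ratio = 800 / 200                          # ratio of the raw values
log_ratio = math.log10(800) / math.log10(200)  # ratio after taking base-10 logs
ln_ratio = math.log(800) / math.log(200)       # same ratio with natural logs

print(raw_ratio)           # 4.0
print(f"{log_ratio:.4f}")  # 1.2616
print(f"{ln_ratio:.4f}")   # 1.2616
```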

In summary, we have considered all the factors that affect the security level of the video file, and we obtain Formula (4) to calculate the video file security level. The magnitudes of the weights depend on the application scenario, and users can define them according to their own needs: for example, in a system with more cold data, the weight of Tx should be given more significance, whereas in a high-access system the weight of Hx should be larger. Users can set a higher value on the factor they care about most, which makes the proposed strategy adaptable. Because Tx lowers the security level as the retention time increases, its weight is negative.

A linear relationship directly reflects the weights of the influencing factors in different scenarios.

Assume there are w video streams writing data into the storage system, and each stream writes a collection of block files. From the intelligent video analysis results, we obtain Ax for each file and determine its initial number of redundant replicas. Then, as the retention time and access hit change, the number of redundant replicas is recalculated. The security levels algorithm is given as Algorithm 1.

| Algorithm 1 Security levels algorithm. |
| --- |
| 1 Information: … |
| 2 Input: … |
| 3 Output: number of redundant replicas |
| 4 for each video file x in the file collection do |
| 5 get retention time t from storage module |
| 6 calculate Tx by Formula (3) |
| 7 get number of accesses from storage module |
| 8 calculate Hx by Formula (2) |
| 9 get Ix and Ax from … |
| 10 calculate Sx by Formula (4) |
| 11 compare Sx with Table 2 |
| 12 put the number of redundant replicas into … |
| 13 end for |
| 14 return … |
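Because Formulas (1) to (4) are not reproduced here, the following Python sketch of Algorithm 1 uses hypothetical stand-in formulas that only respect the properties stated in the text: Hx grows with the number of accesses, Tx grows logarithmically with the retention time t, and the security level Sx is a linear combination of Ix, Tx, Hx, and Ax in which the weight of Tx is negative. The weights, the stand-in formulas, the rounding rule, and the assumption that level k keeps k redundant replicas are all illustrative choices, not the paper's definitions.

```python
import math
from dataclasses import dataclass

# Hypothetical weights: the paper lets users choose them per scenario.
# The retention-time weight is negative so that older files lose security level.
W_I, W_T, W_H, W_A = 1.0, -1.0, 2.0, 1.0
MAX_LEVEL = 3  # four security levels: 0, 1, 2, 3


@dataclass
class VideoFile:
    name: str
    user_level: int      # Ix: 0 = lower, 1 = normal, 2 = important
    analysis: int        # Ax: 1 if a target was detected, else 0
    retention_days: int  # t, read from the storage module
    accesses: int        # number of accesses, read from the storage module


def access_hit(accesses: int) -> float:
    """Hypothetical stand-in for Formula (2): grows with accesses, stays below 1."""
    return accesses / (accesses + 1)


def retention_effect(days: int) -> float:
    """Hypothetical stand-in for Formula (3): logarithmic in retention time t."""
    return math.log10(1 + days)


def security_level(f: VideoFile) -> int:
    """Hypothetical stand-in for Formula (4): weighted sum, rounded and clamped."""
    s = (W_I * f.user_level
         + W_T * retention_effect(f.retention_days)
         + W_H * access_hit(f.accesses)
         + W_A * f.analysis)
    return max(0, min(MAX_LEVEL, round(s)))


def redundant_replicas(files):
    """Algorithm 1: compute the number of redundant replicas for each file."""
    result = {}
    for f in files:
        # Assumed Table 2 mapping: security level k keeps k redundant replicas.
        result[f.name] = security_level(f)
    return result


files = [VideoFile("cam1", 0, 1, 14, 0), VideoFile("cam3", 2, 1, 2, 4)]
print(redundant_replicas(files))  # {'cam1': 0, 'cam3': 3}
```

In this sketch an unaccessed, two-week-old file drops to level 0 even though a target was detected, while a recent, frequently accessed "important" file is clamped at the top level; changing the weights shifts that balance, which is the adaptability the text describes.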

5. Data Cache Strategy Based on Location Correlation

Distributed cache technology is mainly used to improve the data-reading response speed of a storage system and is a significant way to improve the performance of distributed systems. In a distributed storage system, a suitable data cache mechanism can predict users' data reading behavior based on how the system runs and read the data into the buffer in advance, which increases the speed of data reading.

When a surveillance client plays back historical video from a particular period and tracks related targets, it usually begins at a specific location and then follows the target's path. It needs to visit video data from multiple regions, and these regions are correlated by location. Hence, according to the location correlation of the front-end cameras, when a video file of one camera is accessed, the video data segments of location-correlated cameras for the same period are added to the cache.

To implement the cache mechanism based on the location correlation of front-end cameras, we add a cache pool with eight caches to the streaming media server. The eight caches are allocated in circular order, and when the cache pool is full, the highest cache has priority to be replaced. The size of each cache is 2 MByte; when the video rate is 2 Mb/s, the frame rate is 25 fps, and the I-frame interval is 12, each cache can store at least two Group of Pictures (GOP) data segments.
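The cache sizing can be checked with a short calculation. This Python sketch uses only the average bit rate, so it ignores the fact that I-frames make some GOPs larger than average, and it assumes 1 MByte = 2^20 bytes; under these assumptions one 2 MByte cache holds about 17 average-size GOPs, comfortably more than the two GOPs required, and the eight caches add up to a 16 MByte pool.

```python
# Parameters stated in the text.
BITRATE_BIT_S = 2_000_000   # video rate: 2 Mb/s
FPS = 25                    # frame rate
GOP_FRAMES = 12             # I-frame interval (frames per GOP)
CACHE_BYTES = 2 * 2**20     # one 2 MByte cache, assuming 1 MByte = 2**20 bytes
NUM_CACHES = 8              # caches in the pool

bytes_per_second = BITRATE_BIT_S // 8                 # 250,000 B/s
avg_gop_bytes = bytes_per_second * GOP_FRAMES // FPS  # 0.48 s of video per GOP
gops_per_cache = CACHE_BYTES // avg_gop_bytes         # whole average GOPs per cache
pool_mb = NUM_CACHES * 2                              # total pool size in MByte

print(avg_gop_bytes)   # 120000
print(gops_per_cache)  # 17
print(pool_mb)         # 16
```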

When a user accesses a video file and its first two GOP data segments are already in the cache pool, the data can be obtained from the cache directly while the rest of the file is read from the storage system according to the index information. Otherwise, the video file is located in the storage system through the index, and then its first two GOP data segments and those of location-correlated cameras for the same period are added to the cache pool.
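The lookup-then-prefetch logic can be sketched in Python as follows. The class name `LCCache`, the `read_first_gops` callback standing in for the index lookup, and the oldest-first (FIFO) replacement used as one reading of the circular allocation are assumptions for illustration, not the paper's implementation; the parallel read of the rest of the file from storage is not modeled.

```python
from collections import OrderedDict


class LCCache:
    """Sketch of a location-correlated cache pool (hypothetical API).

    Each slot holds the first two GOP segments of one camera for one period.
    When the pool is full, the oldest slot is reused (FIFO), an assumed
    reading of the circular allocation described in the text.
    """

    def __init__(self, correlation, read_first_gops, slots=8):
        self.correlation = correlation          # camera -> set of associated cameras
        self.read_first_gops = read_first_gops  # (camera, period) -> bytes via the index
        self.slots = slots
        self.pool = OrderedDict()               # (camera, period) -> data, oldest first

    def _put(self, key):
        """Load a segment into the pool, evicting the oldest slot if needed."""
        if key in self.pool:
            return
        if len(self.pool) >= self.slots:
            self.pool.popitem(last=False)
        self.pool[key] = self.read_first_gops(*key)

    def play(self, camera, period):
        """Return (hit, first GOP data) and prefetch correlated cameras."""
        key = (camera, period)
        hit = key in self.pool
        if not hit:
            # Miss: locate the file through the index and cache its first GOPs.
            self._put(key)
        data = self.pool[key]
        # Prefetch the same period from every location-correlated camera.
        for other in sorted(self.correlation.get(camera, ())):
            self._put((other, period))
        return hit, data


corr = {"3-1": {"3-2"}, "3-2": {"3-1", "3-3"}}
cache = LCCache(corr, lambda cam, period: f"{cam}@{period}".encode())
print(cache.play("3-1", "t0"))  # (False, b'3-1@t0')
print(cache.play("3-2", "t0"))  # (True, b'3-2@t0')
```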

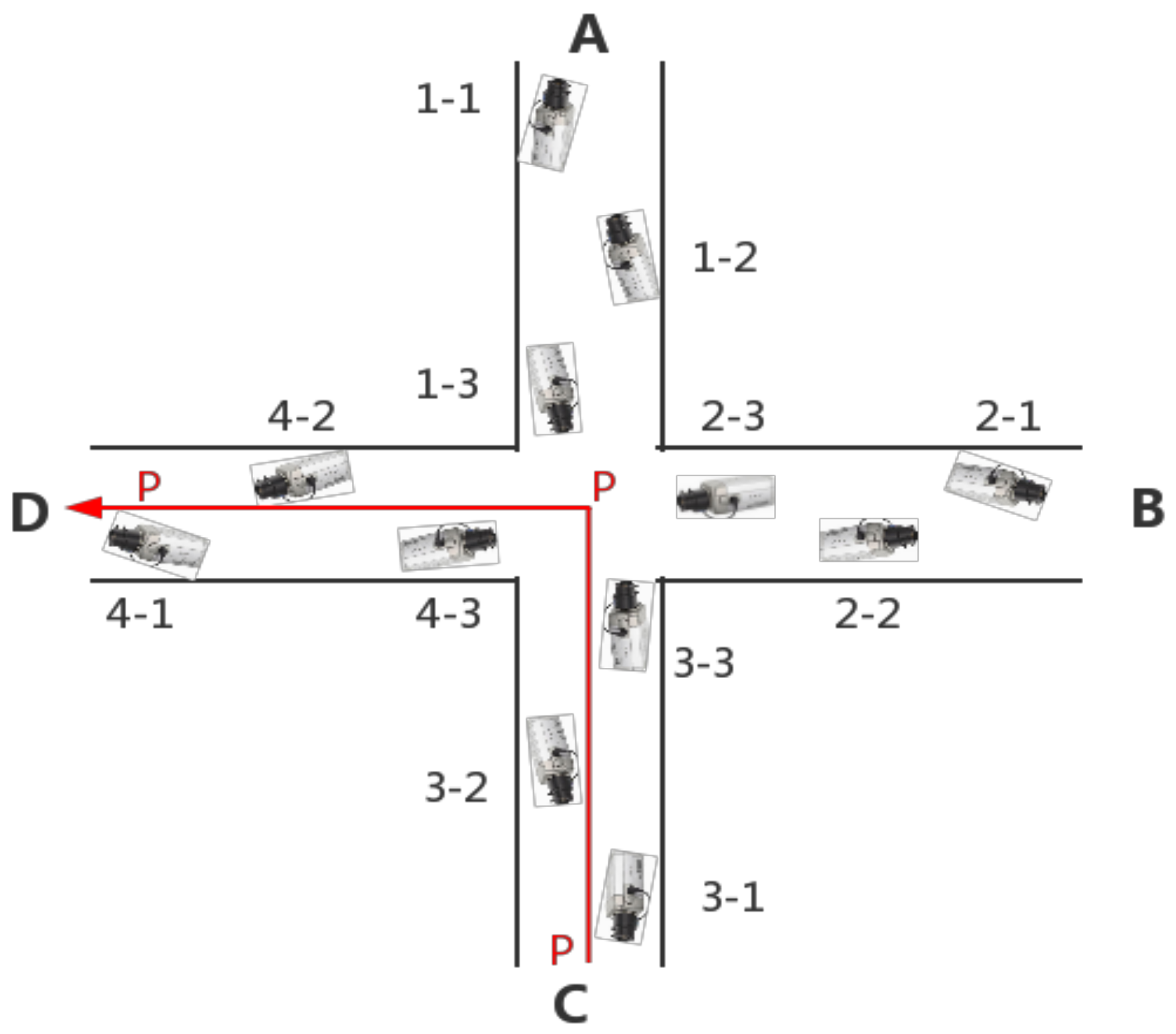

To describe the data cache strategy based on location correlation (LC-cache), we take a surveillance area as an example. As shown in Figure 2, there are four exits and twelve cameras. The camera parameters are listed in Table 4.

The cache strategy must be built on the location correlation between cameras. Because many cameras are installed in crowded places such as bank counters or shops, their coverage areas may overlap, or they may be correlated by front and back position. Therefore, the correlation among cameras is considered in two ways: overlapping coverage areas and front-back position correlation.

Table 5 records the correlation between the cameras in Figure 2. The coverage areas of cameras 1-1 and 1-2 overlap, so they are associated. Cameras 1-1 and 1-3 neither overlap nor have a front-back position correlation, so they are not associated. If someone appears in one camera, he or she may appear in the associated cameras. We simulate a path: pedestrian P enters from exit C, goes through the crossroads, and leaves from exit D. Hence, pedestrian P will appear in cameras 3-1, 3-2, 1-3, 3-3, 4-3, 2-3, 4-1, and 4-2.

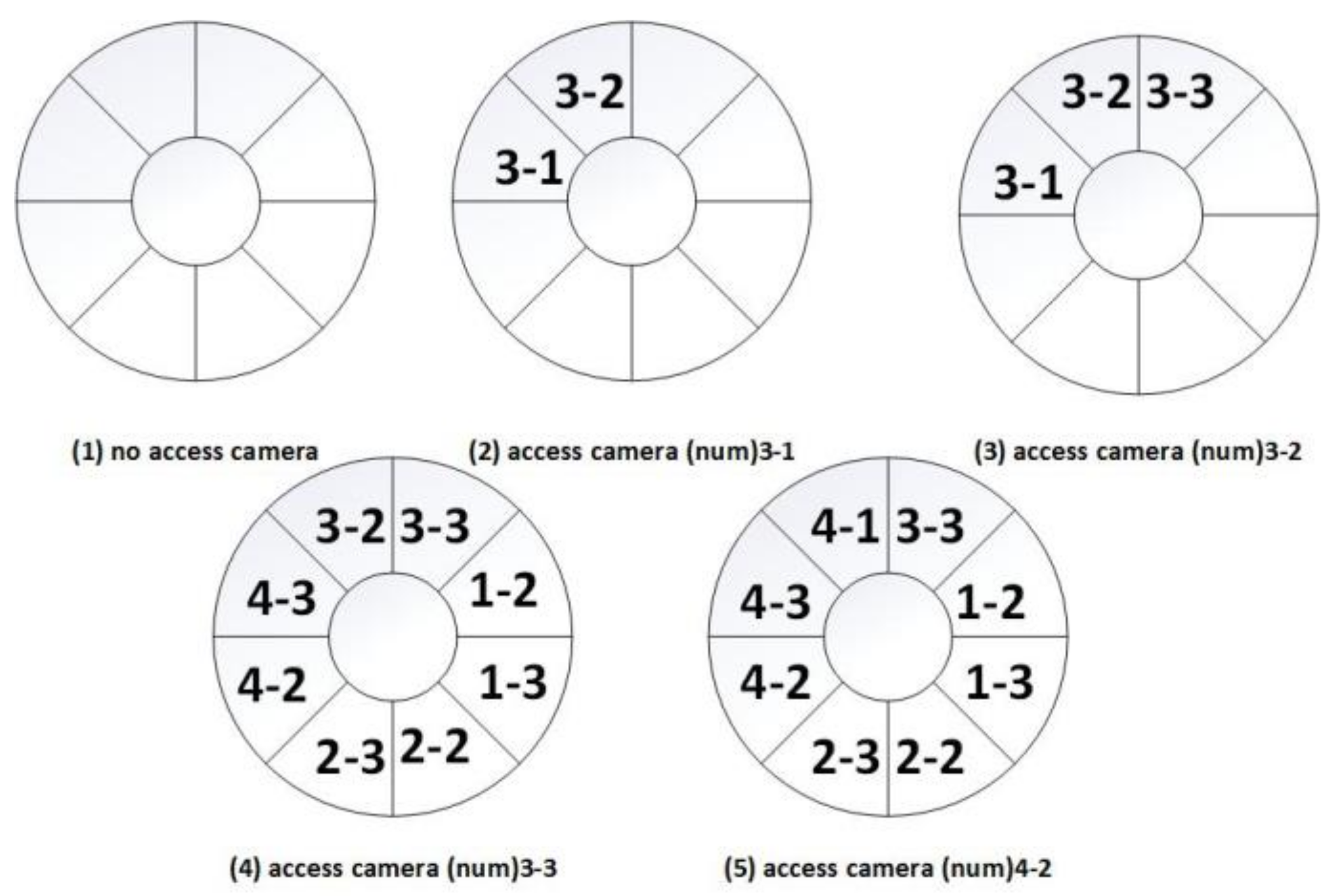

As shown in Figure 3, the cache pool is initially empty. When the user plays back camera 3-1's video data for the first time, the data is sent to the user and its first two GOP data segments are added to the cache. In addition, camera 3-2's first two GOP data segments for the same period are added to the cache. The state of the cache pool is shown in Figure 3(2). If the user sees pedestrian P moving into camera 3-2's monitoring area and plays back camera 3-2's video, the cache pool hits, so there is no need to locate the video file through the indexing system. At the same time, cameras 3-1 and 3-3 are associated with camera 3-2; since camera 3-1's GOP data segments are already in the cache pool, only camera 3-3's segments need to be added, and the state of the cache pool is shown in Figure 3(3). Then pedestrian P moves towards camera 3-3's monitoring area and the above process repeats, so the first two GOP data segments of cameras 1-2, 1-3, 2-2, 2-3, 4-2, and 4-3 are added to the cache pool, as shown in Figure 3(4). Then pedestrian P moves towards camera 4-2's monitoring area, so camera 4-1's first two GOP data segments are added to the cache pool, as shown in Figure 3(5). Finally, P leaves through exit D, and the cache pool also hits.
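The walkthrough can be replayed with a few lines of Python. The correlation map is a partial, hypothetical reconstruction inferred from the Figure 3 narrative (Table 5 itself is not reproduced), each entry stands for the first two GOP segments of one camera for the same period, and the eight-cache capacity limit is deliberately ignored.

```python
# Partial camera correlation inferred from the Figure 3 walkthrough
# (an assumption: the full Table 5 is not reproduced here).
CORRELATED = {
    "3-1": {"3-2"},
    "3-2": {"3-1", "3-3"},
    "3-3": {"3-2", "1-2", "1-3", "2-2", "2-3", "4-2", "4-3"},
    "4-2": {"3-3", "4-1"},
}


def trace(path):
    """Replay a playback path; report hits and newly cached cameras.

    The capacity limit of the cache pool is ignored to keep the trace readable.
    """
    pool = set()
    steps = []
    for camera in path:
        hit = camera in pool
        before = set(pool)
        pool.add(camera)                         # the played camera itself
        pool |= CORRELATED.get(camera, set())    # prefetch correlated cameras
        steps.append((camera, hit, sorted(pool - before)))
    return steps


for camera, hit, added in trace(["3-1", "3-2", "3-3", "4-2"]):
    print(f"play {camera}: {'hit' if hit else 'miss'}, cached {added}")
# play 3-1: miss, cached ['3-1', '3-2']
# play 3-2: hit, cached ['3-3']
# play 3-3: hit, cached ['1-2', '1-3', '2-2', '2-3', '4-2', '4-3']
# play 4-2: hit, cached ['4-1']
```

Without a capacity limit, the pool ends this path with ten entries; if each of the eight caches holds one camera's segments, some earlier entries would therefore be replaced along the way.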

6. Experiments and Analysis

In this section, basic environment and discussion of the simulation results are presented.

6.1. Basic Environment

Our experimental platform includes a client, a management server, a streaming media server, 5 cluster hosts, and 12 cameras. The client has an i5-4590 CPU, 8 GB RAM, a 1 TB disk, and a 1 Gbps port. The management server has two E5-2620 CPUs, 32 GB RAM, a 2 TB disk, and two 1 Gbps ports. The streaming media server is configured with an i7-4790 CPU, 8 GB RAM, a 1 TB disk, and two 1 Gbps ports. The cluster consists of five physical servers, each with an E3-1225 CPU, 4 GB RAM, a 2 TB disk, and two 1 Gbps ports. The cameras used in our experiments are VC-SDI-B3500WE. The network uses a 24-port switch with 52 Gbps bandwidth.

6.2. Dynamic Redundant Replicas Mechanism Based on Security Levels

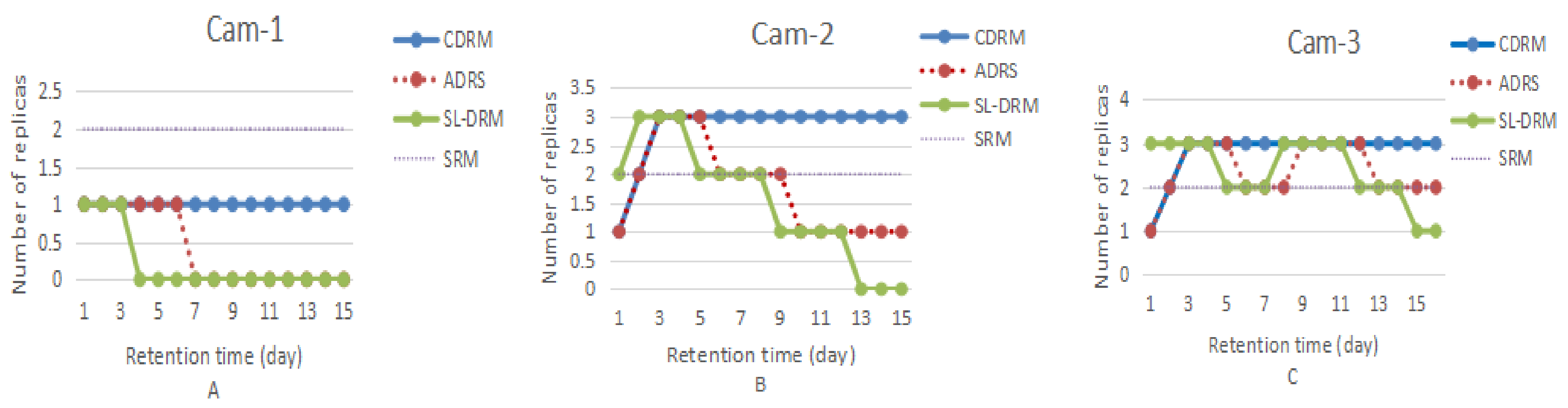

In this experiment, we evaluate the dynamic redundant replicas mechanism based on security levels (SL-DRM), comparing it with the static replicas mechanism (SRM) and two dynamic replica strategies (CDRM and ADRS).

We use three cameras: camera-1 has Ix = 0 and its video file is never accessed; camera-2 has Ix = 1; and camera-3 has Ix = 2. Unlike camera-1, the video file of camera-2 was accessed on days 2 and 3, and that of camera-3 on days 2, 3, 8, and 9. The cameras are used to test the number of redundant replicas of video files. We select a video file for the same period from each camera; the size of each video file is 2 GB. To ensure the safety of the video files, each file initially holds two redundant replicas under SRM and one replica under CDRM and ADRS. The experiment was repeated five times, and the results are shown in Figure 4.

Under the SL-DRM strategy, the surveillance system initially detected something passing through the monitoring area, so Ax was 1 and the security level increased. Afterwards, because camera-1's video file was never accessed, its Sx decreased and camera-1 kept no redundant replicas. We accessed the video files of camera-2 and camera-3 on days 2 and 3, so their security levels rose and their numbers of redundant replicas increased. After that, camera-2's video file was no longer accessed, and its security level was finally reduced to 0 as well. Because camera-3 was accessed on days 8 and 9, its number of redundant replicas under SL-DRM changed from two to three. As the retention time grew, camera-3 had one redundant replica on day 15. Whenever the security level rises, the number of replicas increases again; otherwise, redundant replicas are deleted.

CDRM maintains the minimal replica number for a given availability requirement, which is defined by users [19]. The CDRM strategy can increase the number of replicas but does not address the replica replacement issue. The ADRS strategy can change the number of replicas, but it is not as flexible as SL-DRM. As a result, SL-DRM adapts to changes in the scenario: it guarantees the security of important data while reducing unnecessary replication.

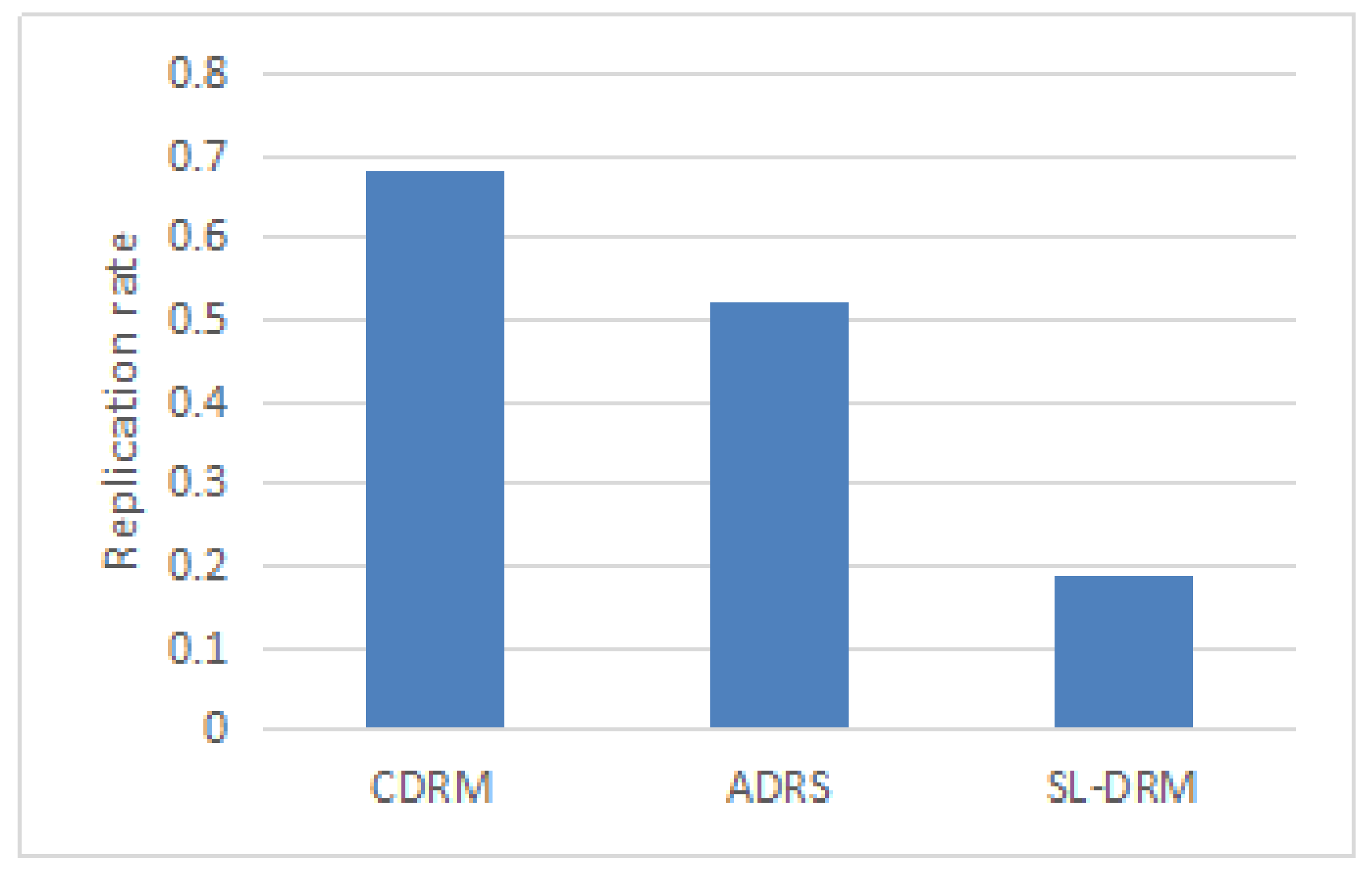

In the next experiment, we investigate the replication rate, defined as the ratio of replicas to total files (original files plus replicas). A lower value indicates that a strategy saves more storage. The 50 video files (about 15 GB in total) all come from the same camera and are of equal size. Over 15 days, we randomly select seven days on which to access all the files, and on day 15 we count the number of replicas. We repeat this experiment five times.

The replication rate results are shown in Figure 5. The replication rate of CDRM and ADRS is above 0.5, because under the CDRM strategy [19] and the ADRS strategy [20] each file has at least one replica. The SL-DRM strategy considers all the factors affecting the number of replicas of a video file, so its replication rate is below 0.2. SL-DRM therefore makes better use of storage than the other two strategies (CDRM and ADRS).
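The two thresholds follow directly from the definition of the replication rate. This short Python sketch derives them for the 50-file setup; the numbers are arithmetic implied by the definition, not measured results.

```python
def replication_rate(originals: int, replicas: int) -> float:
    """Replicas divided by all stored files (originals plus replicas)."""
    return replicas / (originals + replicas)


FILES = 50

# One replica per file, the minimum under CDRM and ADRS, already gives 0.5.
print(replication_rate(FILES, FILES))  # 0.5

# Largest replica count keeping the rate below 0.2 for 50 files:
# r / (50 + r) < 0.2  <=>  0.8 r < 10  <=>  r < 12.5
max_r = max(r for r in range(3 * FILES + 1) if replication_rate(FILES, r) < 0.2)
print(max_r)  # 12
```

So a replication rate below 0.2 means that, across the 50 files, at most 12 replicas remain on day 15.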

6.3. Data Cache Strategy Based on Location Correlation

We also evaluate the data cache strategy based on location correlation (LC-cache) with two groups of experiments: the first verifies that LC-cache effectively reduces the response time of reading data, and the second verifies the hit rate of LC-cache. LC-cache is compared with two other strategies (CloudCache and LRU [28]). The design and results of the two groups of experiments are presented separately.

In the CloudCache strategy, the average reading speed increases significantly and the hit rate increases gradually as the cache size grows [23]. We configure the LC-cache cache pool as described in Section 5. To compare response time and hit rate fairly, the strategies are given the same cache size (16 MByte).

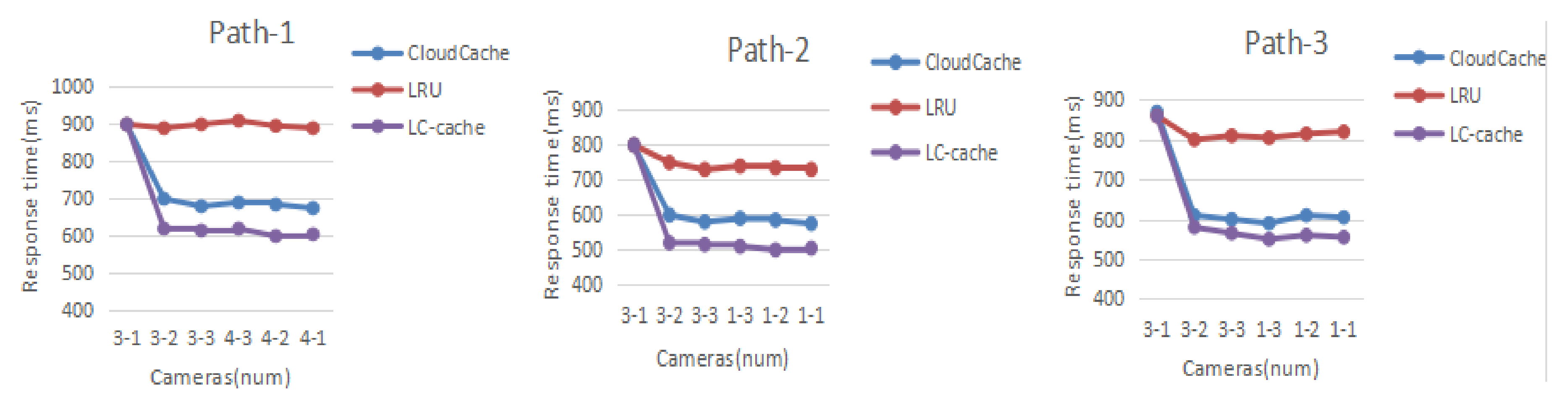

In the first group of experiments, we implemented the surveillance environment of Figure 2 using twelve cameras, named as in Figure 2. First, we set the location correlation between the cameras according to Table 5. We then designed three paths, Path-1: exit C→exit D, Path-2: exit C→exit A, and Path-3: exit C→exit B, and recorded and compared the data-read response time under the different cache strategies. Figure 6 shows the first playback response time under different cache strategies.

As the response times recorded in Figure 6 show, at the beginning no cache strategy has pre-cached data, so all strategies have almost the same response time when camera 3-1 is accessed. After that, the LC-cache strategy improves the data-read response time significantly: according to the location correlation between the cameras, the first two GOP data segments of the video file may already have been added to the cache pool, so data is read much faster. The time saved is the time needed to query the location of the video file in the storage system and open the file to read the data. The LRU cache holds no data at the beginning of the experiment, and no data is read repeatedly during the experiment, so the LRU cache mechanism has almost no effect in this case; there is nearly no difference between the LRU cache mechanism and no cache at all.

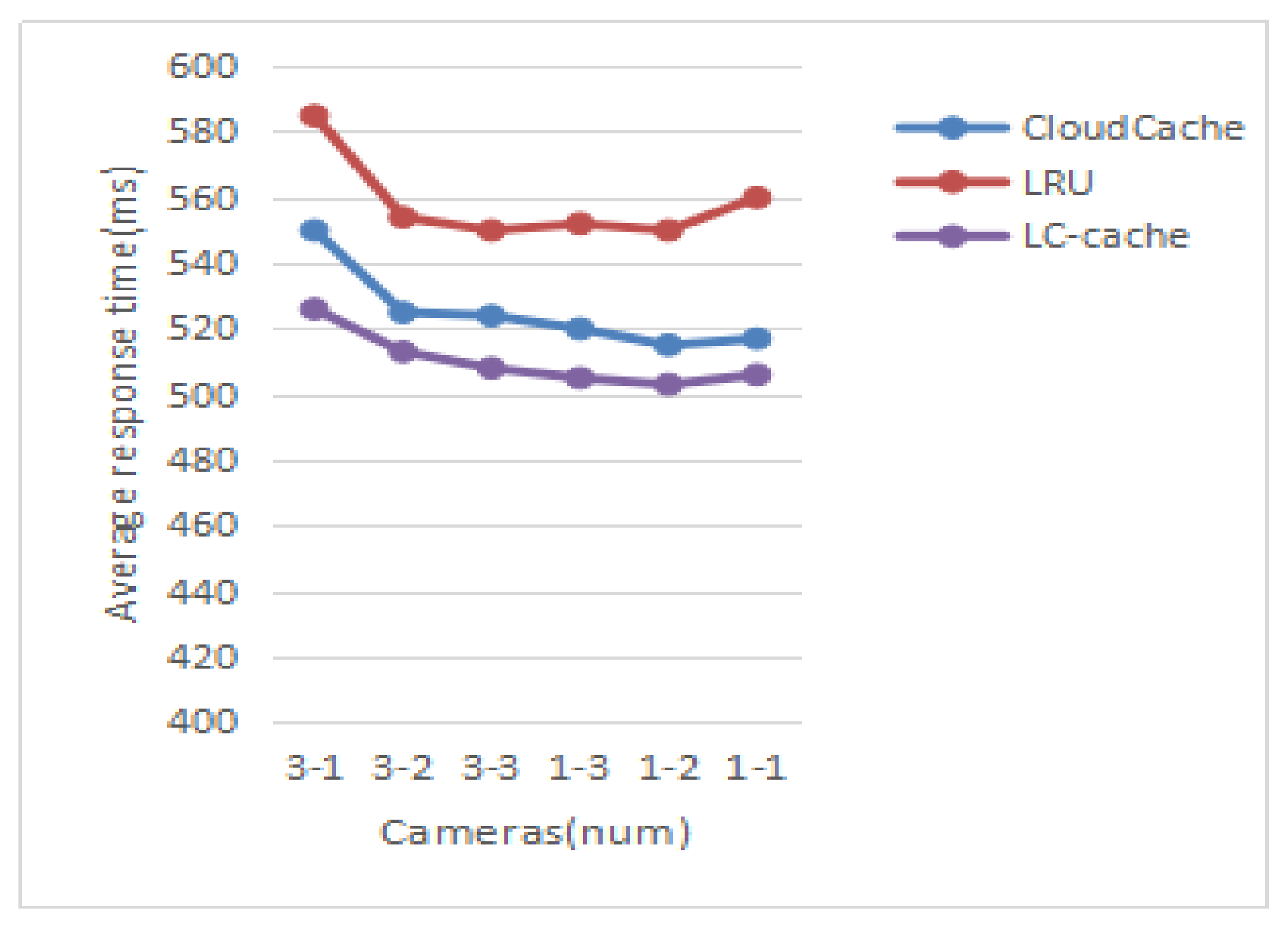

After the first experiment, each strategy has cached some data. We repeat the experiment 100 times with Path-2 and record the average response time for each camera under the different cache strategies, as shown in Figure 7. The LC-cache strategy accesses data more efficiently than the other two strategies (CloudCache and LRU).

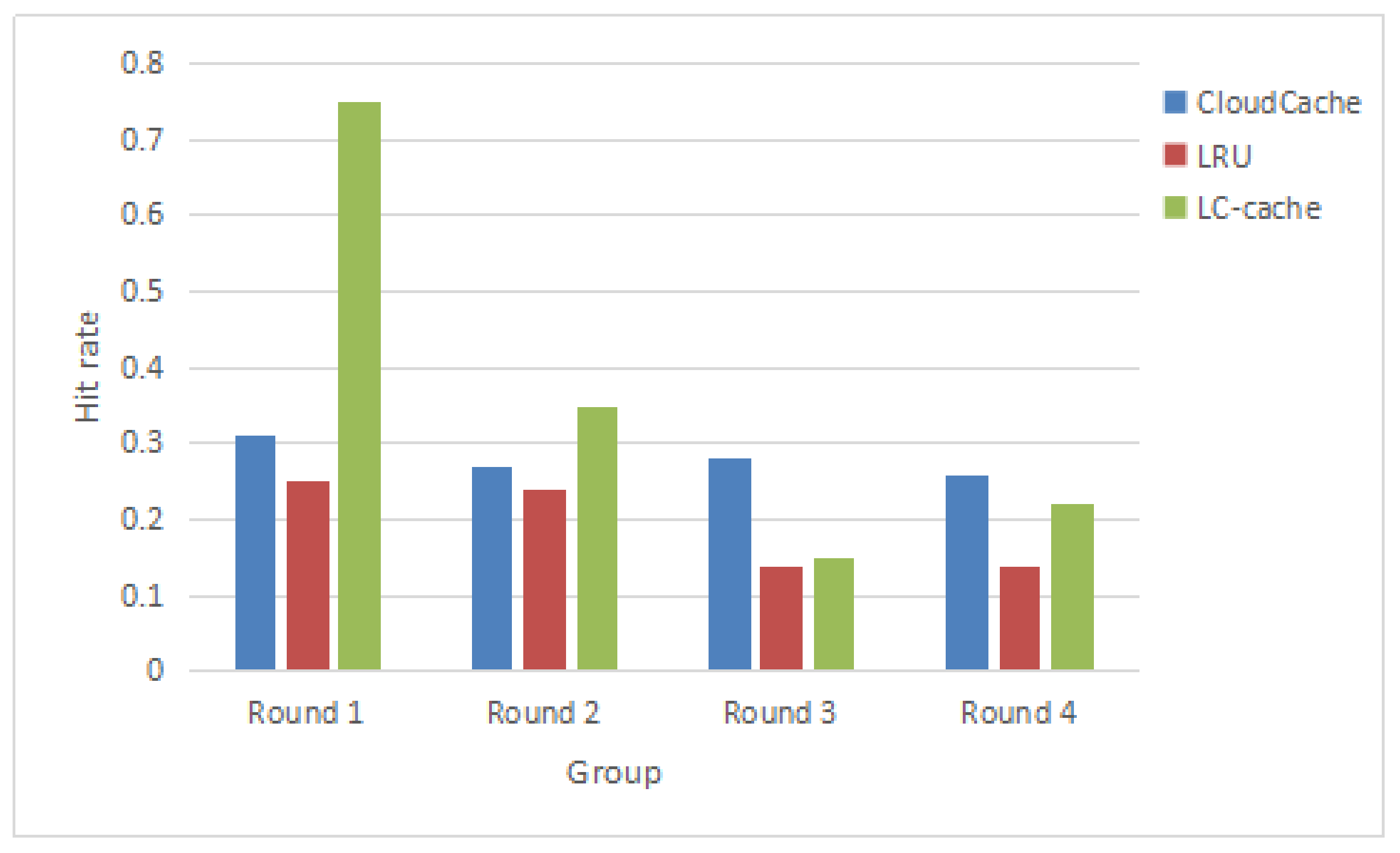

In the second group of experiments, we mainly evaluate the hit rate of the cache pool. We designed four groups of video-on-demand sequences, as shown in Table 6. The first group follows a tracked pedestrian and the second is in random order, both within the same period. The other two groups use the same camera orders as the first two groups, respectively, but the playback time is also random. We repeat this experiment 100 times. Figure 8 shows the hit rate in each case.

According to the experimental data in Figure 8, the LC-cache strategy has an obvious advantage only in the first group; in the other three groups its cache hit rate shows no advantage. This is because the strategy relies on the location and time correlation of the cameras' video files to predict the user's playback behavior in advance, so a lower hit rate in the other three groups is expected. However, in real surveillance systems, users' playback behavior often has location and time relevance, so the proposed location-correlation data cache strategy can both reduce the data-read response time and achieve a higher cache hit rate in a real distributed video surveillance system. Because the CloudCache strategy cannot predict the user's playback behavior in advance, its hit rate is almost unchanged under a fixed cache size.