Deep Learning-Based Urban Tree Species Mapping with High-Resolution Pléiades Imagery in Nanjing, China

Abstract

1. Introduction

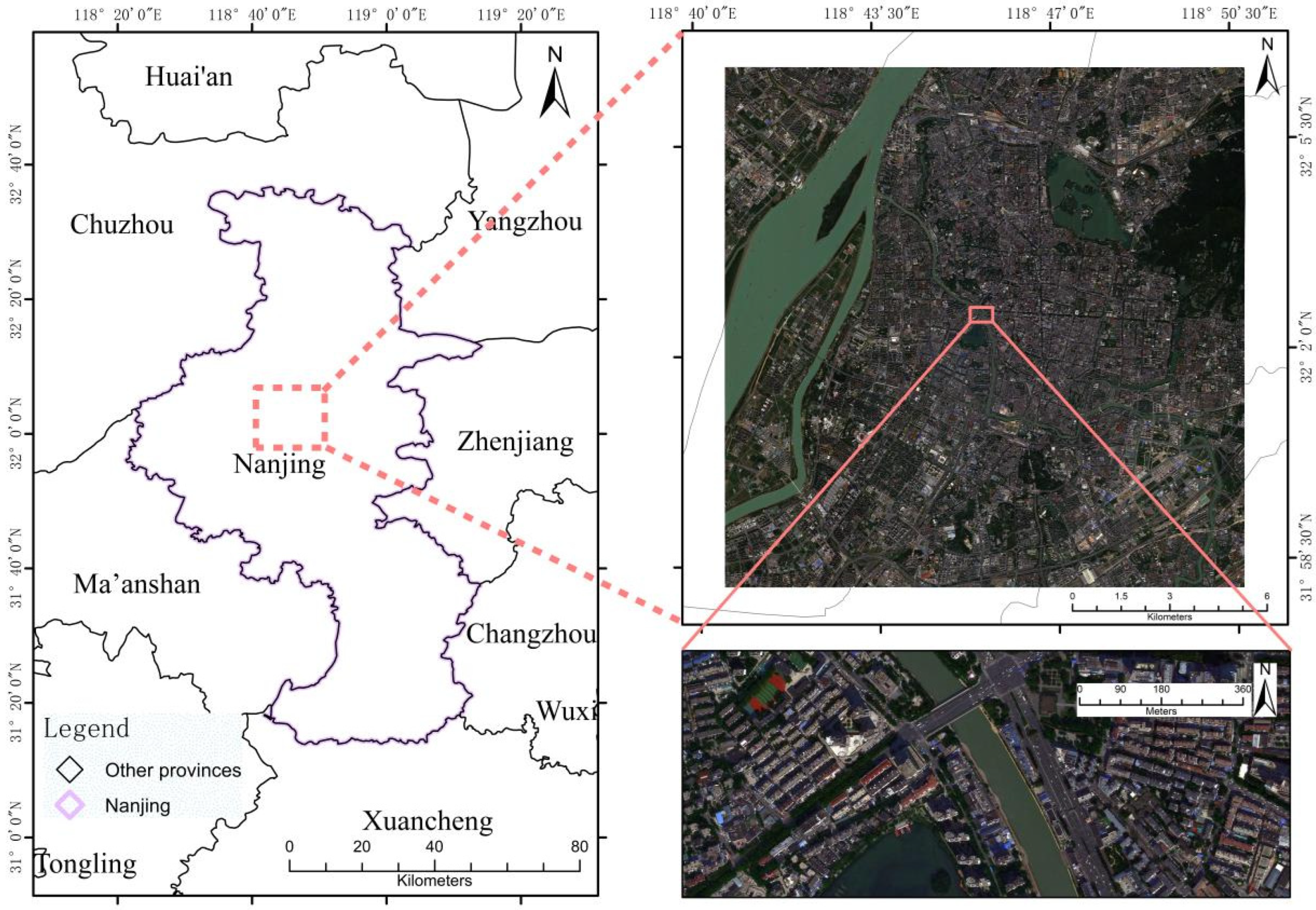

2. Study Area and Dataset

2.1. Study Area

2.2. Remote Sensing Data and Preprocessing

2.2.1. Pléiades Images

2.2.2. Remote Sensing Image Preprocessing

2.2.3. Extraction of Shadow Areas from Imagery
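The text here does not describe how shadow areas were extracted. One common and simple approach, assumed here and not necessarily the authors' procedure, is to threshold the HSV value (V) channel of an 8-bit RGB composite with Otsu's method. Shadowed pixels are dark in every band, so their V values (the maximum of R, G and B) fall below the threshold that best separates the dark and bright modes of the histogram. The helper names `hsv_value`, `otsu_threshold` and `shadow_mask` are hypothetical.

```python
from typing import List, Sequence, Tuple

def hsv_value(pixel: Tuple[int, int, int]) -> int:
    """HSV 'value' (V) channel of an 8-bit RGB pixel: the largest of R, G, B."""
    return max(pixel)

def otsu_threshold(values: Sequence[int], levels: int = 256) -> int:
    """Otsu's method: return the t that maximises between-class variance.

    Values must be integers in [0, levels). Pixels with value <= t form the dark class.
    """
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * count for i, count in enumerate(hist))
    best_t, best_score = 0, -1.0
    w_dark, sum_dark = 0, 0
    for t in range(levels):
        w_dark += hist[t]
        sum_dark += t * hist[t]
        w_bright = total - w_dark
        if w_dark == 0:
            continue  # no pixels in the dark class yet
        if w_bright == 0:
            break  # every pixel is already in the dark class
        mean_dark = sum_dark / w_dark
        mean_bright = (sum_all - sum_dark) / w_bright
        # Between-class variance scaled by total**2; the scaling does not change the argmax.
        score = w_dark * w_bright * (mean_dark - mean_bright) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t

def shadow_mask(pixels: Sequence[Tuple[int, int, int]]) -> List[bool]:
    """Flag pixels whose V value is at or below the Otsu threshold as candidate shadow."""
    values = [hsv_value(p) for p in pixels]
    t = otsu_threshold(values)
    return [v <= t for v in values]
```

A global V threshold also flags dark non-shadow surfaces such as water or fresh asphalt, so in practice such a mask is usually refined (for example with morphological filtering or additional band rules) before use.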

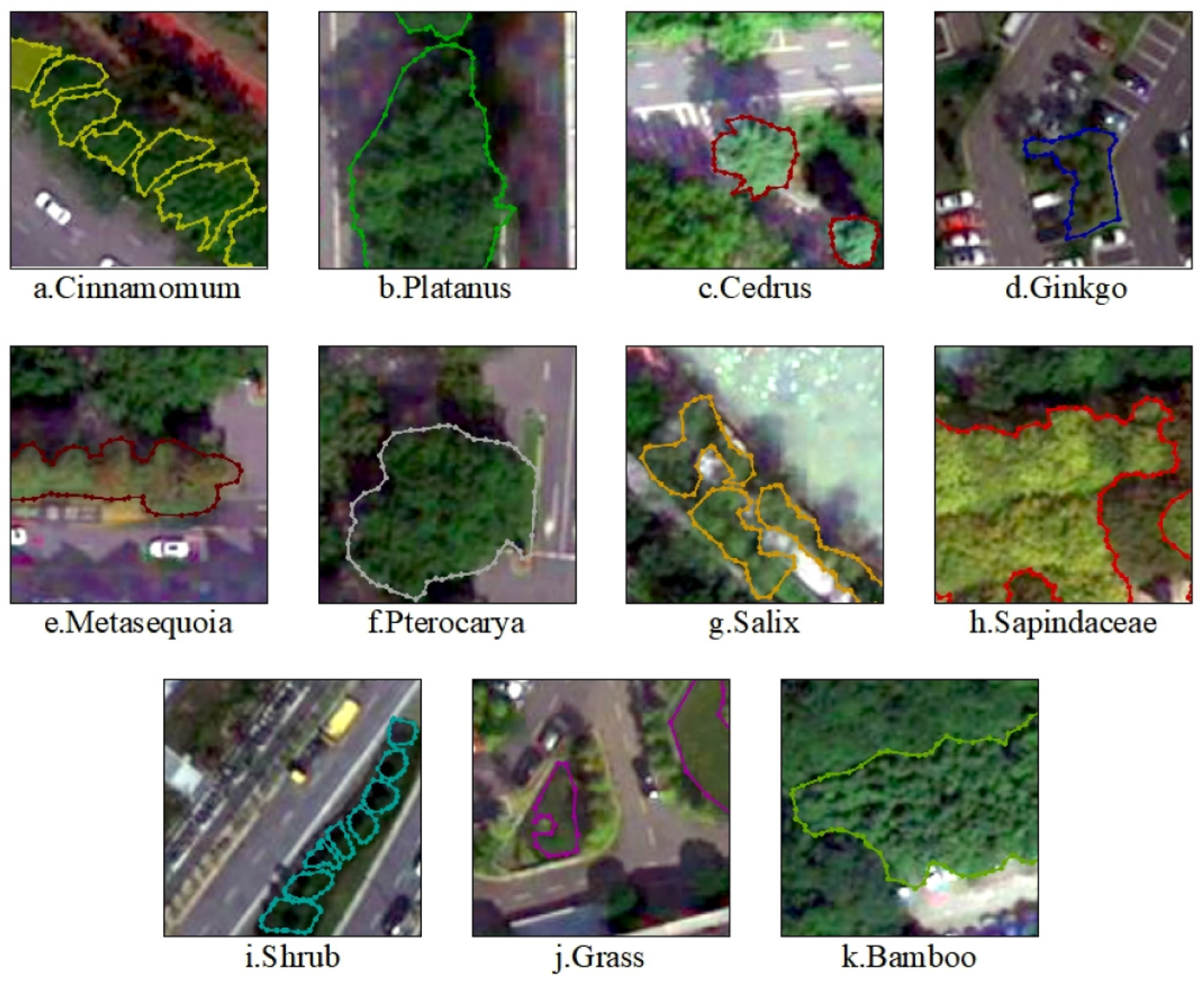

2.3. Field Survey Data

3. Methods

3.1. Deep Transfer Learning

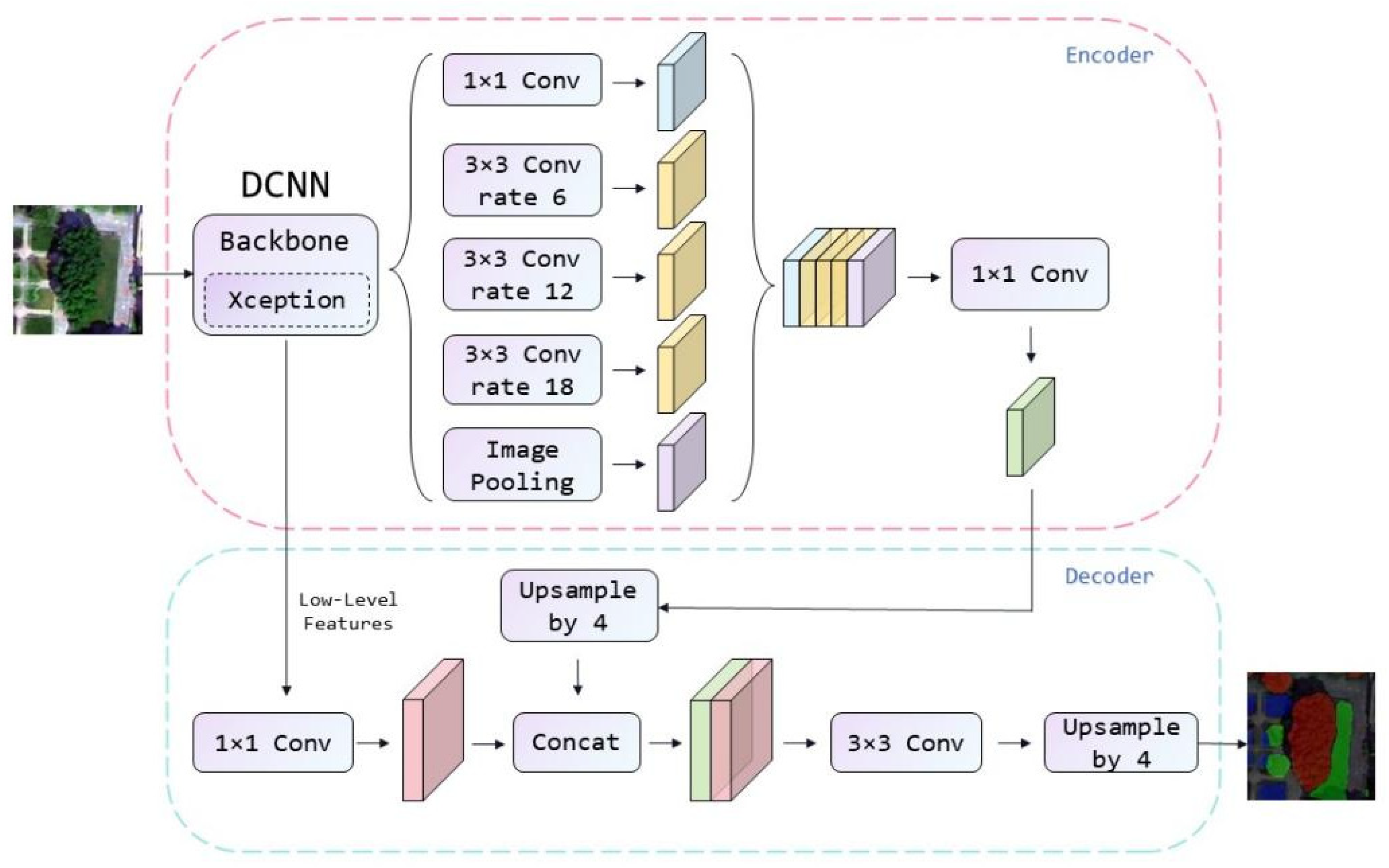

3.2. Network Architecture

3.3. Evaluation Methods

3.3.1. Basic Evaluation Indicators

3.3.2. 10-Fold Cross-Validation
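A minimal sketch of 10-fold cross-validation in plain Python follows. It assumes the samples (for example, labelled image tiles) can be split at random; the split unit, the shuffling and the seed are assumptions rather than details from the study, and `k_fold_splits` and `summarize` are hypothetical helper names. Every sample is used for validation exactly once, and the per-fold scores of a metric are then summarised by their mean and sample standard deviation.

```python
import random
import statistics
from typing import List, Sequence, Tuple

def k_fold_splits(n_samples: int, k: int = 10, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, val_indices) pairs; every index is validated exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    base, extra = divmod(n_samples, k)    # the first `extra` folds get one extra sample
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(indices[start:start + size])
        start += size
    splits = []
    for i, val in enumerate(folds):
        # Training set = all folds except the current validation fold.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, val))
    return splits

def summarize(fold_scores: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of a metric (e.g. mIoU) across folds."""
    return statistics.mean(fold_scores), statistics.stdev(fold_scores)
```

Each `(train_idx, val_idx)` pair would drive one training and evaluation run; with 25 samples and k = 10, five folds hold three samples and five hold two.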

3.4. Implementation Details and Metrics

4. Experimental Process and Comparison Results

4.1. Comparison Setting

4.1.1. UNet

4.1.2. HRNet

4.1.3. PSPNet

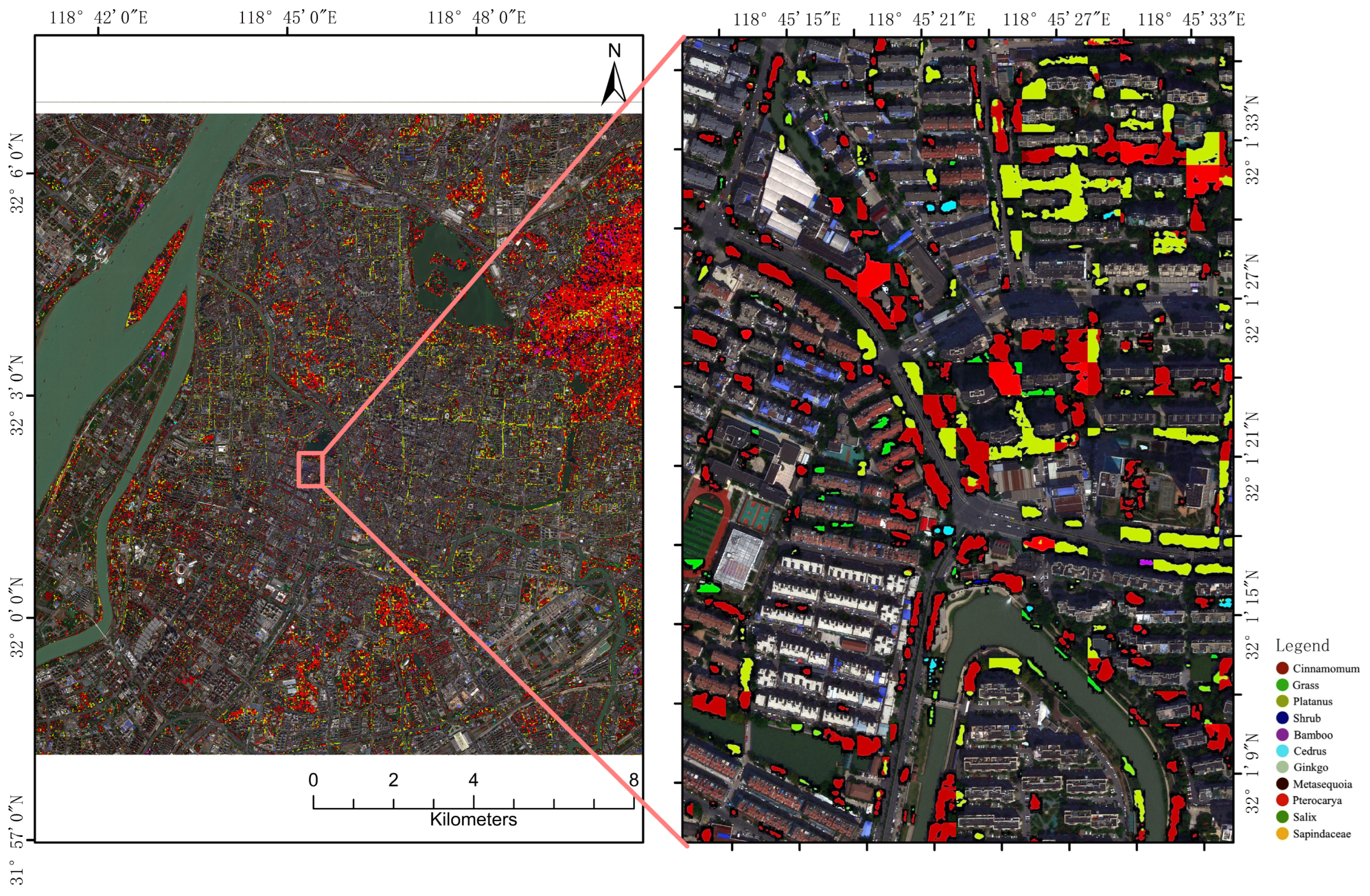

4.2. Results

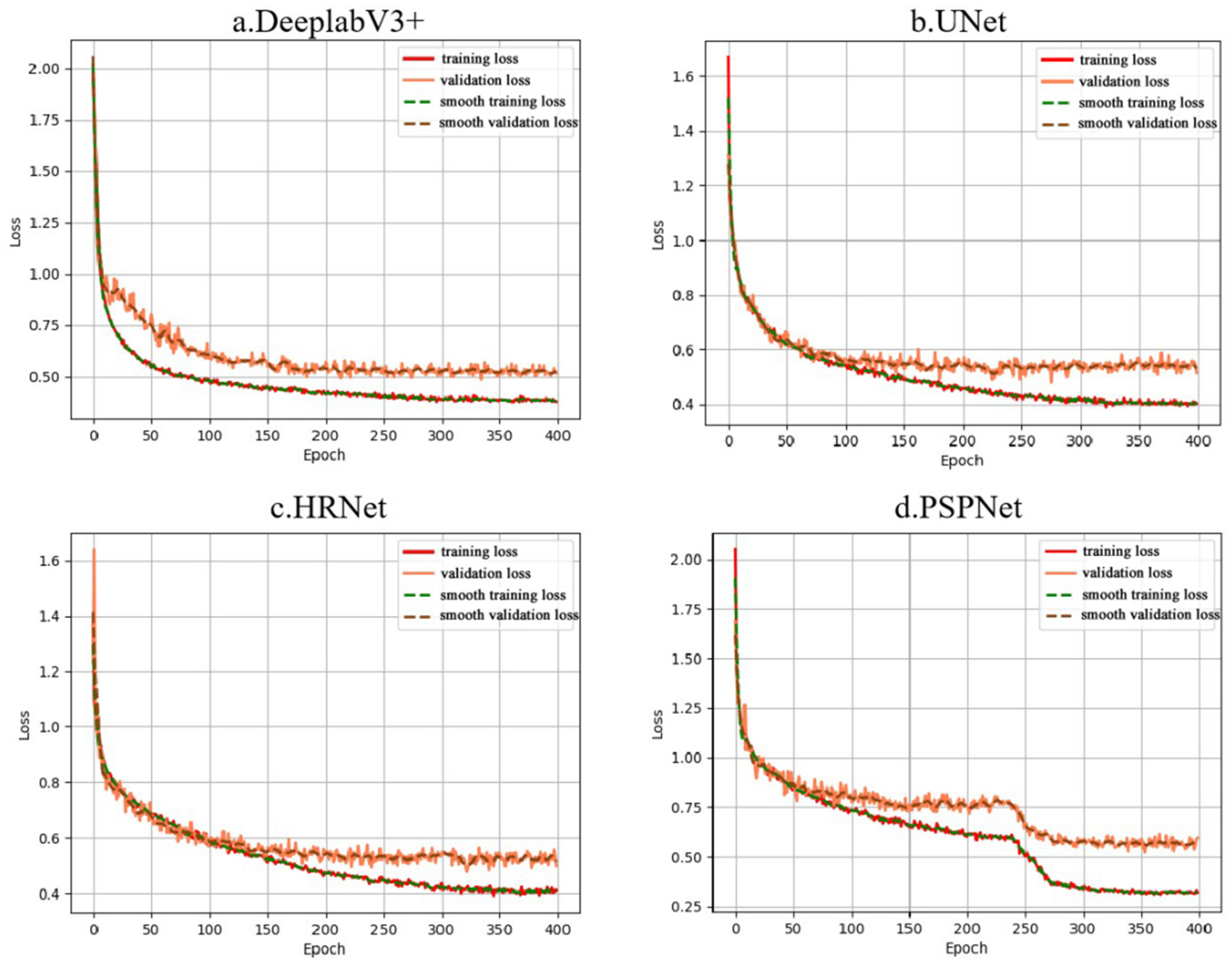

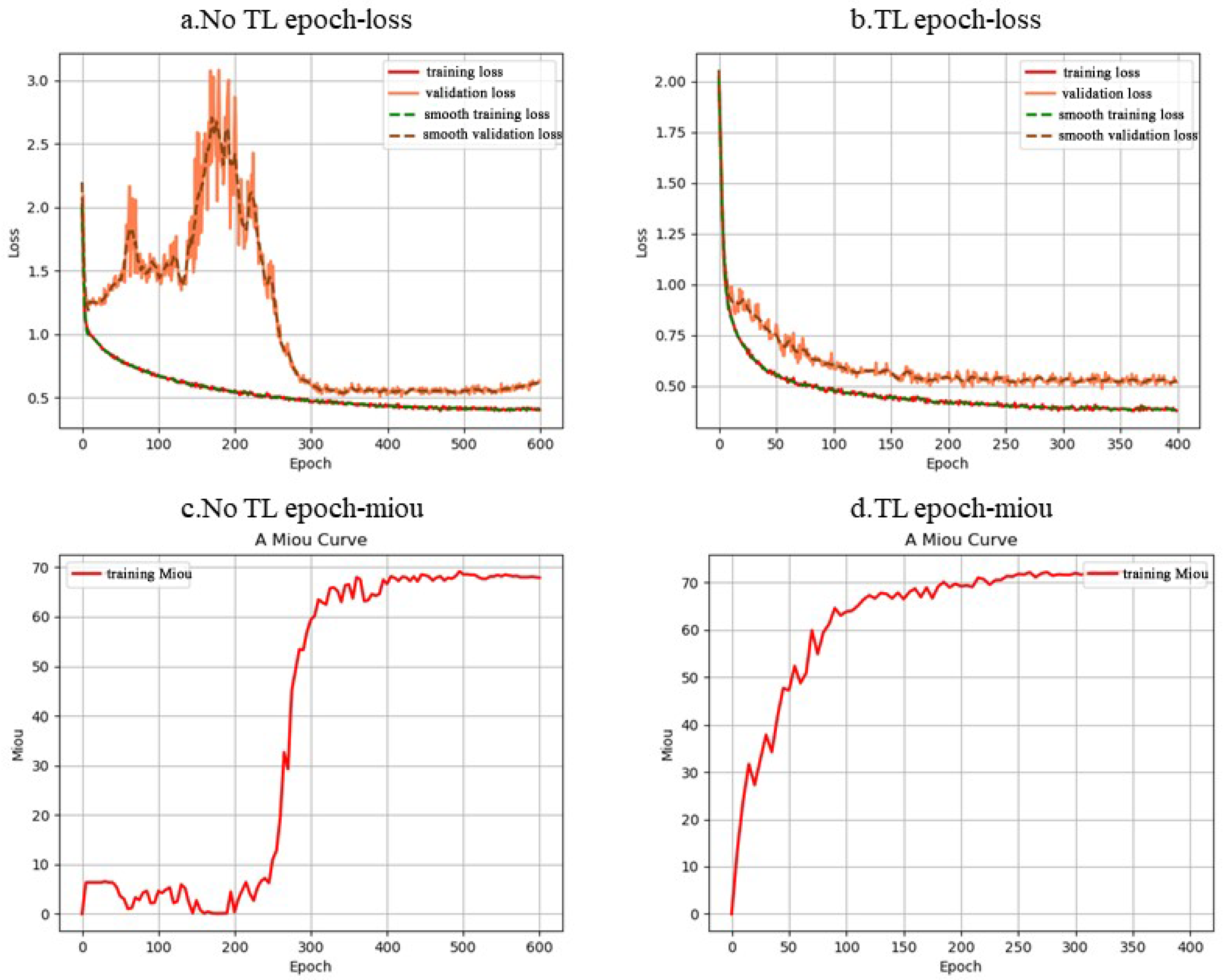

4.2.1. Comparison of Loss Curves of Deep Learning Models

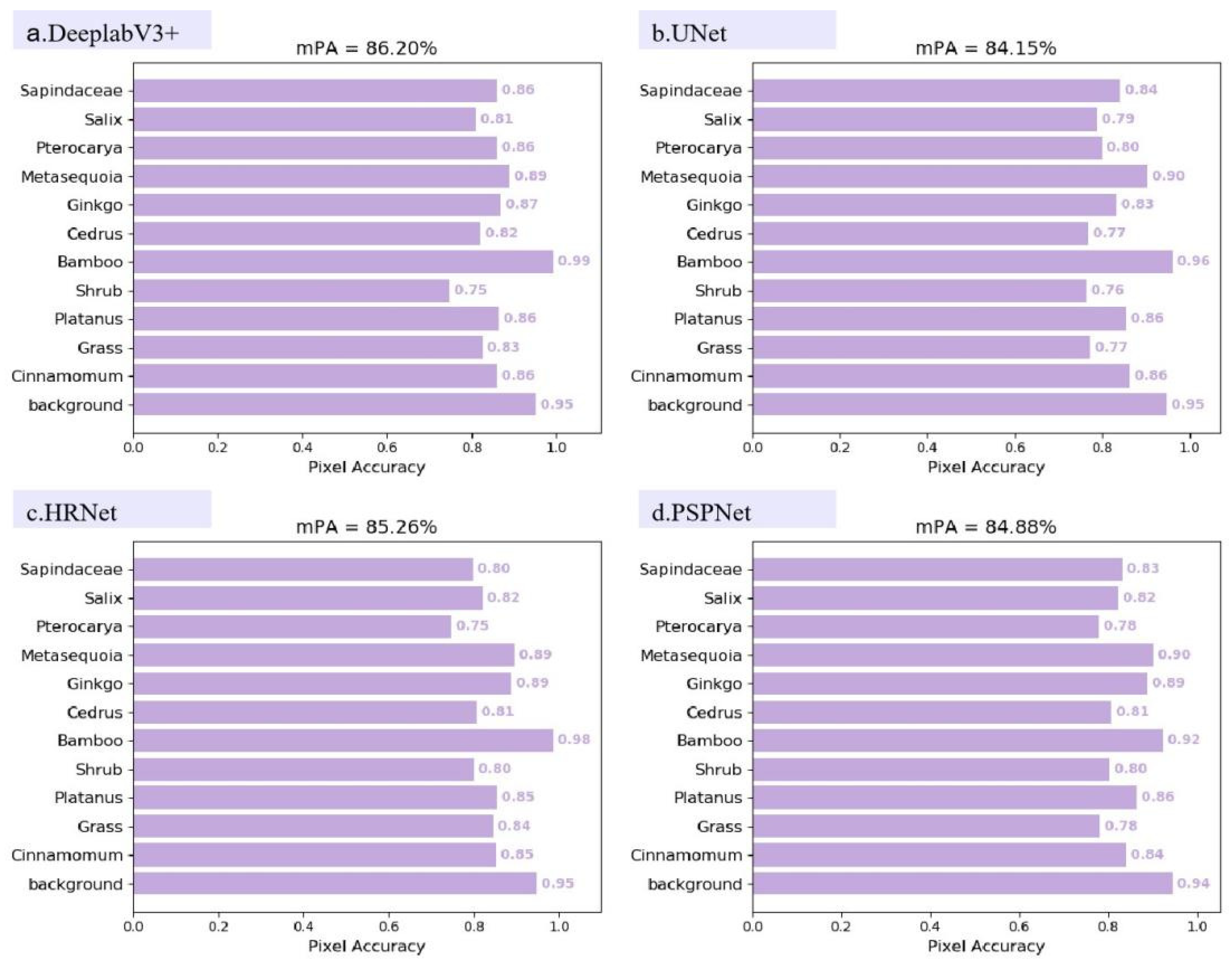

4.2.2. Performance Comparison of Deep Learning Models in Urban Tree Species Classification

4.2.3. Comparison Between Transfer Learning and Non-Transfer Learning

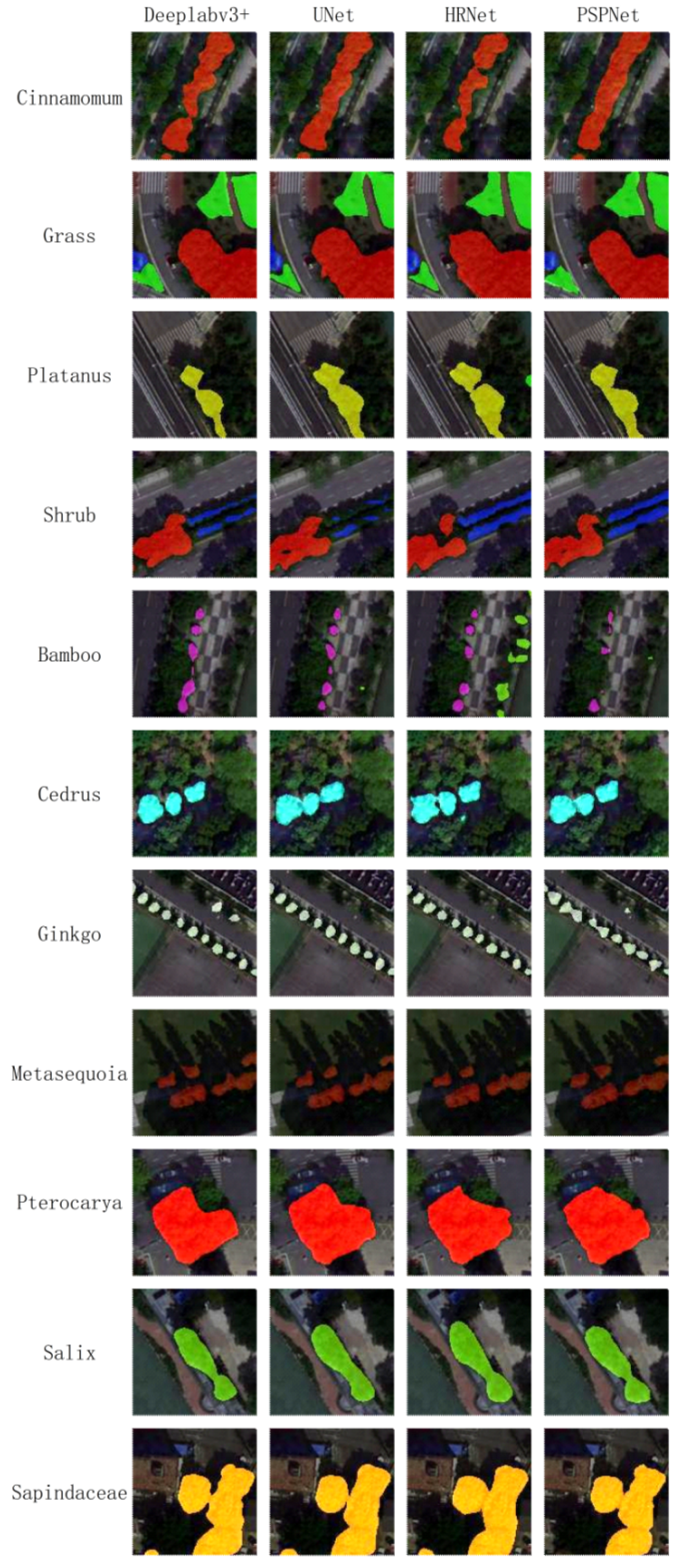

4.2.4. Comparison Results of Models Based on Classification Effects of Major Tree Species

5. Discussion

5.1. Applicability of Various Deep Learning Models in Urban Tree Species Classification

5.2. The Impact of Transfer Learning and Non-Transfer Learning in Tree Species Classification

5.3. The Influence of Urban Tree Species Dataset on Classification Model

5.4. Urban Shadow Problem and Its Influence

5.5. Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Band Number | Spectral Bands | Wavelength (nm) | Resolution (m) |
|---|---|---|---|
| Band 1 | Deep Blue | 400–450 | 1.2 |
| Band 2 | Blue | 450–520 | 1.2 |
| Band 3 | Green | 530–590 | 1.2 |
| Band 4 | Red | 620–690 | 1.2 |
| Band 5 | Red Edge | 700–750 | 1.2 |
| Band 6 | NIR | 770–880 | 1.2 |
| Band 7 | Panchromatic | 450–800 | 0.3 |
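The multispectral bands (1.2 m) and the panchromatic band (0.3 m) differ by a factor of four in ground sampling distance, so each multispectral pixel covers 4 × 4 = 16 panchromatic pixels. Fusing the two (for example by pansharpening) first requires putting them on a common grid. The sketch below shows that arithmetic and a nearest-neighbour upsampling step in plain Python; the helper names `scale_factor` and `upsample_nearest` are hypothetical, and this is an illustration, not the preprocessing chain used in the study.

```python
from typing import List

MS_RESOLUTION_M = 1.2   # bands 1-6, from the band table
PAN_RESOLUTION_M = 0.3  # band 7 (panchromatic), from the band table

def scale_factor(coarse_m: float, fine_m: float) -> int:
    """Integer ratio between two ground sampling distances, e.g. 1.2 m / 0.3 m -> 4."""
    ratio = coarse_m / fine_m
    factor = round(ratio)
    if abs(ratio - factor) > 1e-6:
        raise ValueError(f"non-integer resolution ratio: {ratio}")
    return factor

def upsample_nearest(band: List[List[float]], factor: int) -> List[List[float]]:
    """Nearest-neighbour resampling: replicate each pixel into a factor x factor block."""
    out = []
    for row in band:
        wide = [value for value in row for _ in range(factor)]  # repeat along columns
        out.extend(list(wide) for _ in range(factor))           # repeat along rows
    return out
```

Nearest-neighbour replication keeps the original radiometric values unchanged; smoother kernels (bilinear, cubic) are common alternatives when the upsampled bands feed a fusion step.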

| Model | mIoU (%) | mPA (%) | mPrecision (%) | mRecall (%) | Kappa |
|---|---|---|---|---|---|
| DeepLabV3+ | 72.33 | 86.20 | 81.15 | 86.20 | 0.82 |
| UNet | 70.81 | 84.15 | 80.93 | 84.15 | 0.81 |
| HRNet | 70.32 | 85.26 | 79.65 | 85.26 | 0.81 |
| PSPNet | 70.06 | 84.88 | 79.46 | 84.88 | 0.80 |
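The indicators in this table can all be derived from a pixel-level confusion matrix. The sketch below assumes rows index the true class and columns the predicted class, and that mPA is the class-averaged pixel accuracy (diagonal entry over row sum). Under that definition mPA and mRecall are the same quantity, which is consistent with the identical mPA and mRecall columns above. Kappa is computed as Cohen's kappa. The function names are hypothetical.

```python
from typing import Dict, List, Sequence

def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> List[List[int]]:
    """cm[i][j] counts pixels whose true class is i and predicted class is j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm

def _ratio(num: float, den: float) -> float:
    # A class with an empty denominator contributes 0 to the class mean.
    return num / den if den else 0.0

def segmentation_metrics(cm: List[List[int]]) -> Dict[str, float]:
    n = len(cm)
    total = sum(map(sum, cm))
    tp = [cm[i][i] for i in range(n)]
    true_count = [sum(cm[i]) for i in range(n)]                       # row sums
    pred_count = [sum(cm[r][i] for r in range(n)) for i in range(n)]  # column sums
    iou = [_ratio(tp[i], true_count[i] + pred_count[i] - tp[i]) for i in range(n)]
    recall = [_ratio(tp[i], true_count[i]) for i in range(n)]  # also per-class pixel accuracy
    precision = [_ratio(tp[i], pred_count[i]) for i in range(n)]
    p_o = sum(tp) / total                                                   # observed agreement
    p_e = sum(true_count[i] * pred_count[i] for i in range(n)) / total ** 2  # chance agreement
    return {
        "mIoU": sum(iou) / n,
        "mPA": sum(recall) / n,
        "mPrecision": sum(precision) / n,
        "mRecall": sum(recall) / n,
        "Kappa": (p_o - p_e) / (1 - p_e),
    }
```

Multiplying the first four values by 100 gives percentages in the form reported in the table; Kappa is reported as a fraction.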

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cui, X.; Sun, M.; Chen, Z.; Li, M.; Zhang, X. Deep Learning-Based Urban Tree Species Mapping with High-Resolution Pléiades Imagery in Nanjing, China. Forests 2025, 16, 783. https://doi.org/10.3390/f16050783
