Automatic Extraction of Discolored Tree Crowns Based on an Improved Faster-RCNN Algorithm

Abstract

1. Introduction

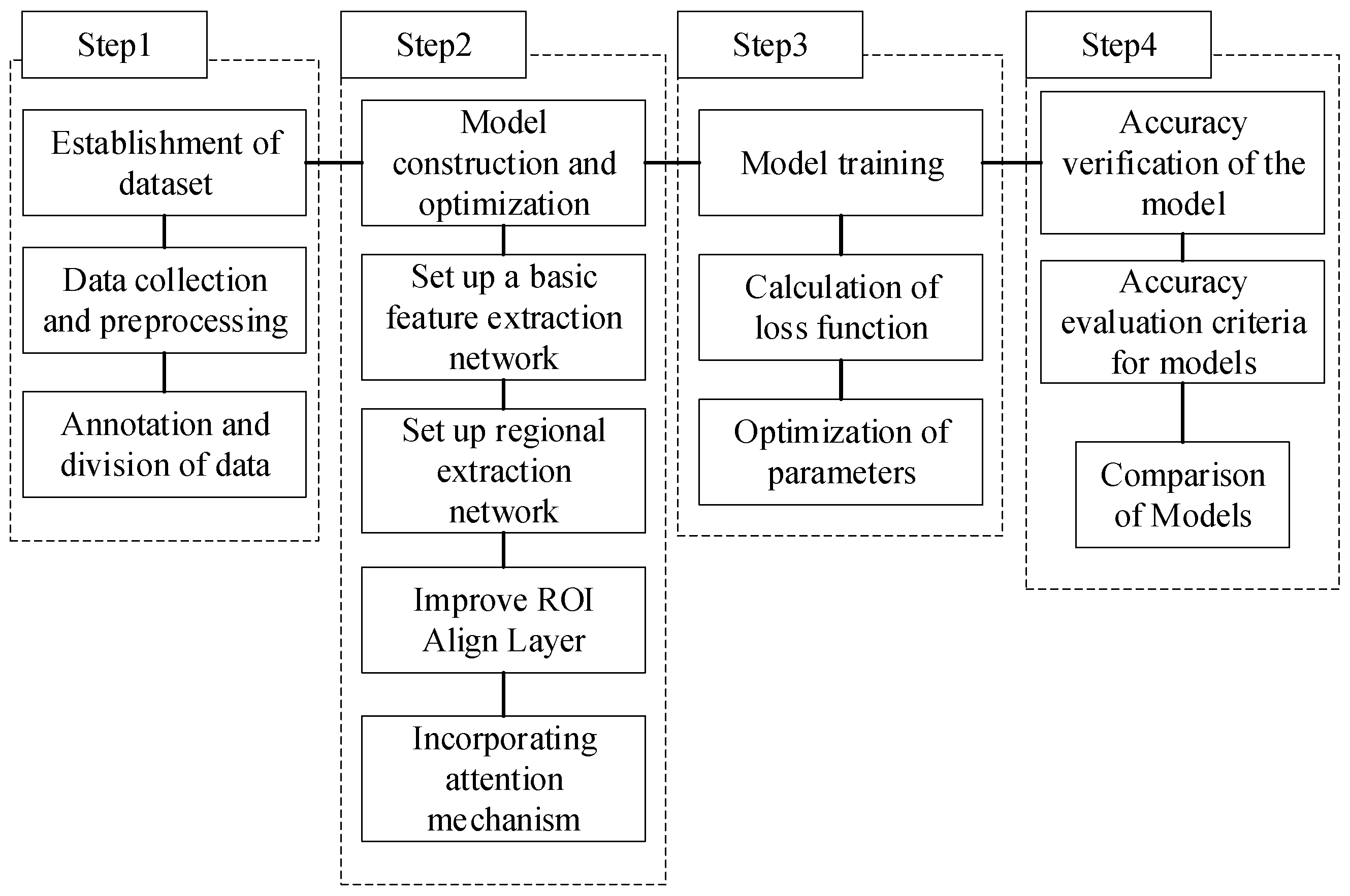

2. Materials and Methods

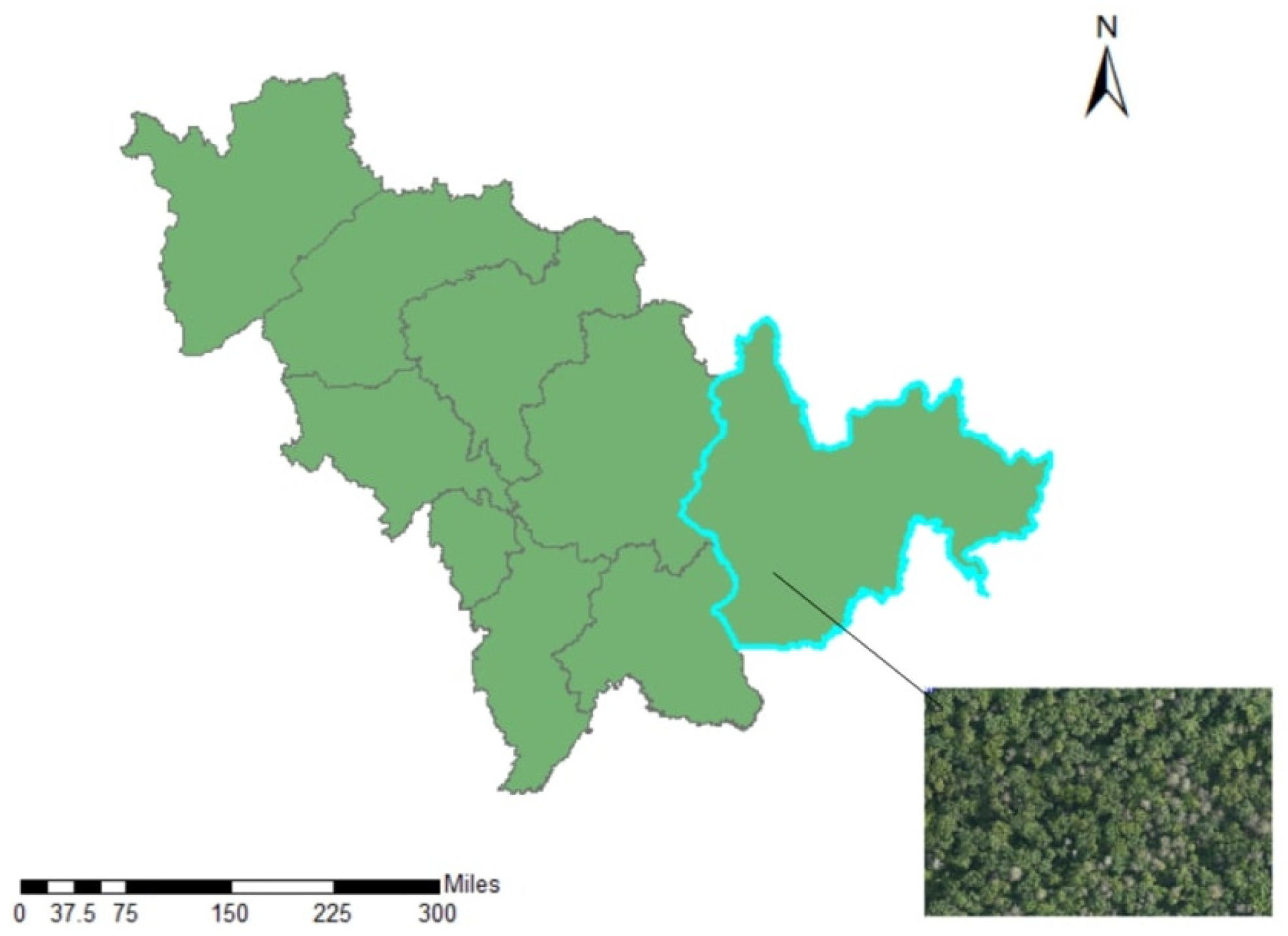

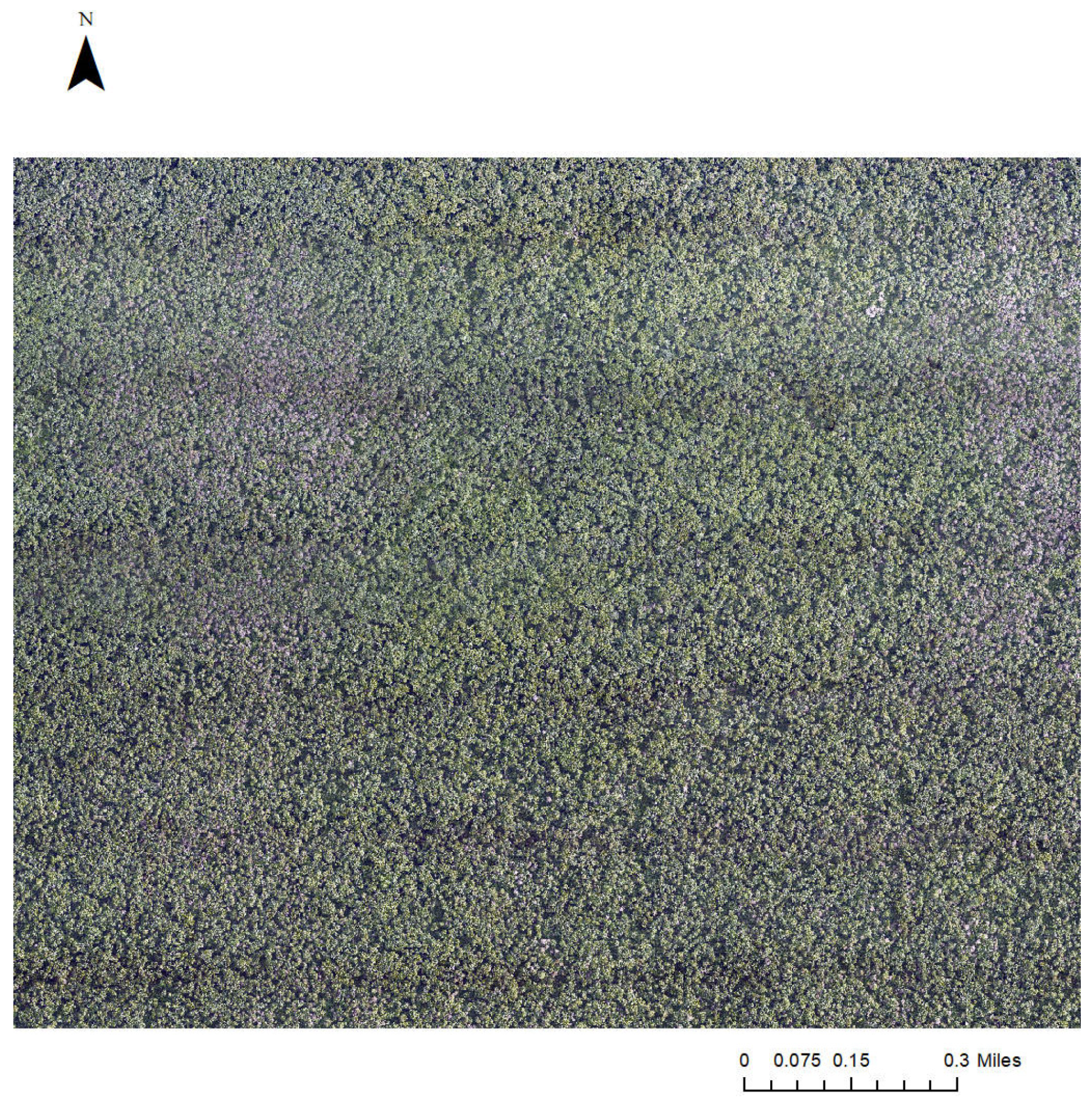

2.1. Study Area and Data Acquisition

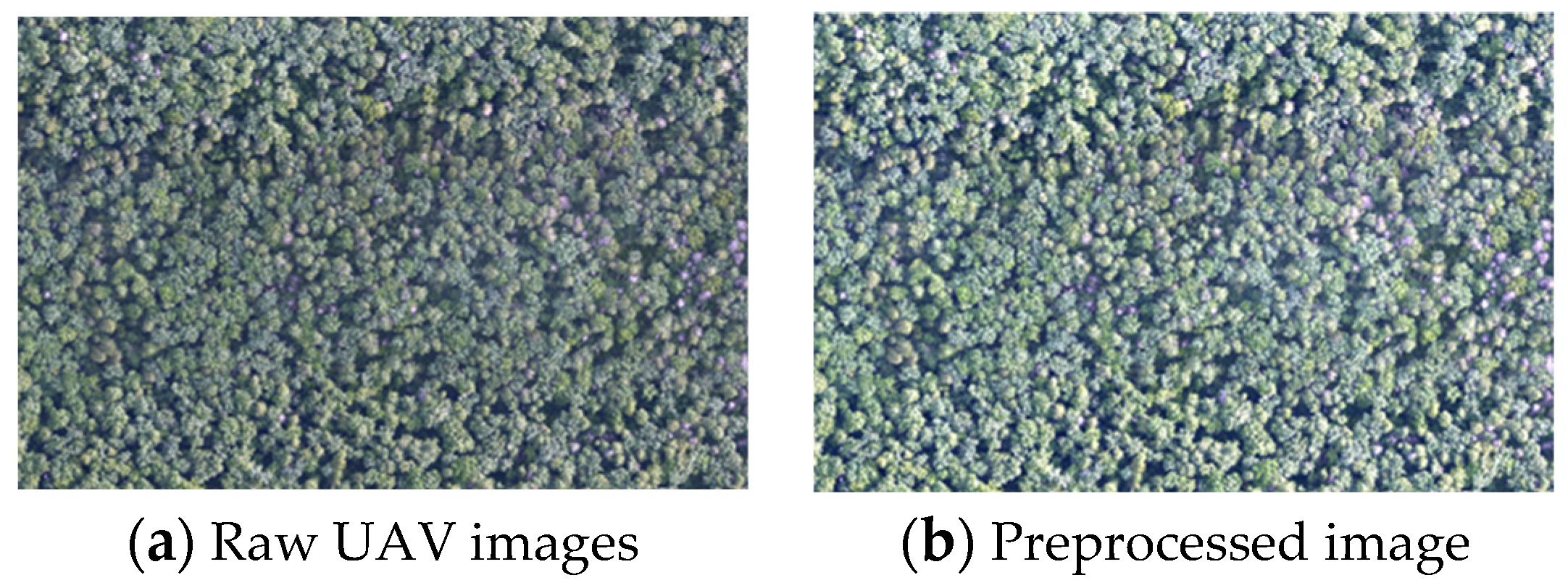

2.2. Data Preprocessing

2.3. Model Construction and Optimization

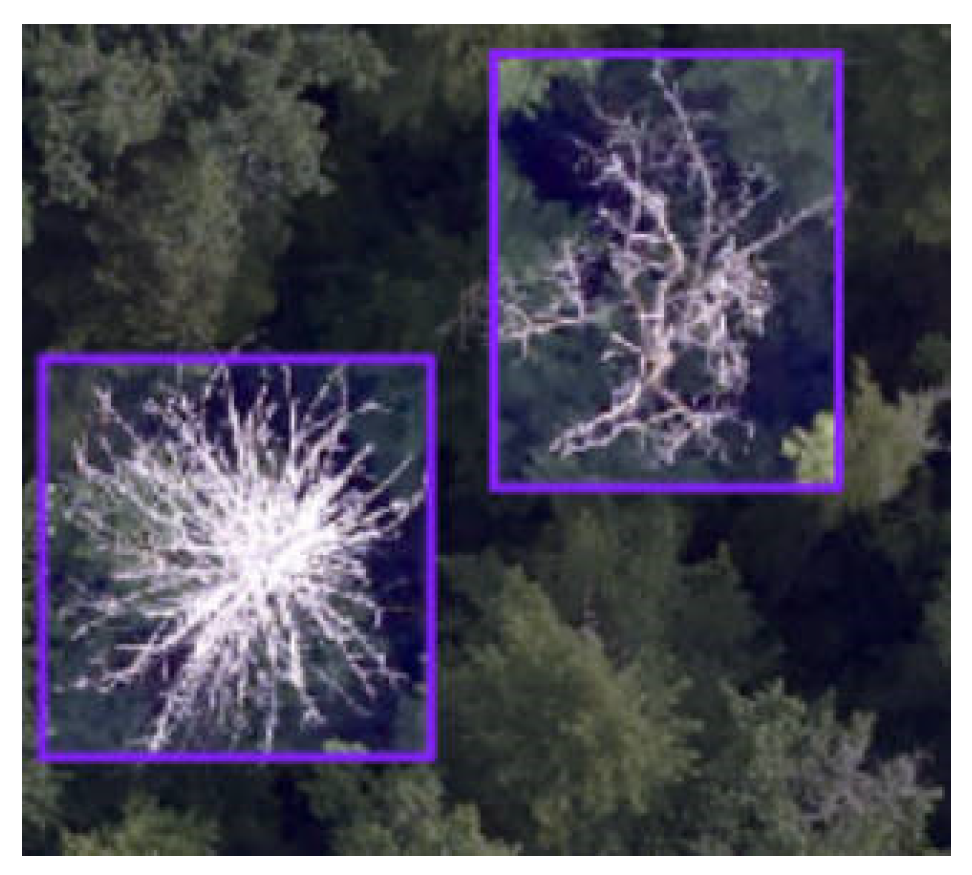

2.3.1. Dataset Construction

2.3.2. Model Architecture and Optimization

- (1) Input Data: High-resolution images of the study area, typically preprocessed for noise reduction and normalization, are fed into the network.

- (2) Residual Blocks: These blocks allow the network to learn deep representations through skip connections. Each residual block applies a transformation $F(x)$ to its input $x$ and adds the result back to $x$ to produce the block output $y$:

  $$y = F(x) + x$$

- (3) Feature Extraction with FPN: The FPN component aggregates features from several layers to capture both fine-grained details and coarse features. By upsampling the coarser, higher-level feature maps and merging them with the finer, higher-resolution maps from lower layers through lateral connections, the network builds a multi-scale representation. This step improves the detection of targets of varying scale, such as tree crowns in forest environments.

- (4) Output Features: After passing through the residual blocks and the FPN, the feature maps are used by the region proposal network (RPN) to generate candidate bounding boxes. These proposals are then processed further to refine the localization and classification of discolored tree crowns.
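The residual addition in step (2) and the top-down merge in step (3) can be sketched in plain Python. This is a toy illustration on small 2-D lists, not the network used in the paper: `residual_block`, `upsample2x`, `fpn_merge`, and `halve` are hypothetical helper names, `halve` is an arbitrary stand-in for the convolutional transformation F, and nearest-neighbour upsampling followed by element-wise addition is assumed, as in the standard FPN design. The 1×1 lateral and 3×3 smoothing convolutions are omitted for brevity.

```python
from typing import Callable, List

Matrix = List[List[float]]


def residual_block(x: Matrix, transform: Callable[[Matrix], Matrix]) -> Matrix:
    """Return y = F(x) + x, where `transform` plays the role of F."""
    fx = transform(x)
    return [[f + v for f, v in zip(f_row, x_row)] for f_row, x_row in zip(fx, x)]


def upsample2x(feat: Matrix) -> Matrix:
    """Nearest-neighbour upsampling: repeat every value twice along both axes."""
    out: Matrix = []
    for row in feat:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def fpn_merge(coarse: Matrix, fine: Matrix) -> Matrix:
    """Top-down FPN step: upsample the coarse map, then add the finer lateral map."""
    up = upsample2x(coarse)
    return [[u + f for u, f in zip(u_row, f_row)] for u_row, f_row in zip(up, fine)]


def halve(x: Matrix) -> Matrix:
    """Toy stand-in for F(x) (an assumption, not the paper's layers)."""
    return [[0.5 * v for v in row] for row in x]


# A 4x4 high-resolution map and a 2x2 low-resolution map (made-up values).
fine_map = residual_block([[2.0, 4.0, 6.0, 8.0] for _ in range(4)], halve)
coarse_map = residual_block([[10.0, 20.0] for _ in range(2)], halve)

merged = fpn_merge(coarse_map, fine_map)
print(merged[0])
```

The merged map keeps the spatial size of the finer input while carrying information from the coarser one, which is the property that helps with crowns of different sizes.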

2.3.3. Model Training

2.3.4. Model Accuracy Validation

- (1) mAP (mean average precision) measures the overall performance of an object detection algorithm. The average precision (AP) of a class is the area under its precision–recall curve $P(R)$, and mAP is the mean of the AP values over all $N$ classes. Higher mAP values (closer to 100%) indicate better performance:

  $$AP = \int_0^1 P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

- (2) Precision is the ratio of correctly predicted positive samples to the total number of positive predictions:

  $$Precision = \frac{TP}{TP + FP}$$

  where $TP$ is the number of true positives and $FP$ is the number of false positives.

- (3) Recall is the ratio of correctly predicted positive samples to the total number of actual positive samples:

  $$Recall = \frac{TP}{TP + FN}$$

  where $FN$ is the number of false negatives.
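The three metrics can be computed from a ranked list of detections, as in the following sketch. It is illustrative only: the function names and the toy detection list are hypothetical, and all-point interpolation of the precision–recall curve is an assumption, since the text does not state which AP variant or IoU threshold was used. Each detection is assumed to have been matched against the ground truth already, so it arrives as a `(confidence, is_true_positive)` pair.

```python
from typing import List, Sequence, Tuple


def precision(tp: int, fp: int) -> float:
    """TP / (TP + FP); defined as 0.0 when there are no positive predictions."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """TP / (TP + FN); defined as 0.0 when there are no actual positives."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def average_precision(detections: Sequence[Tuple[float, bool]], num_gt: int) -> float:
    """Area under the precision-recall curve for one class (all-point interpolation)."""
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(recall(tp, num_gt - tp))
        precisions.append(precision(tp, fp))
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangles formed wherever recall increases.
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# Toy example: four detections against three ground-truth crowns (made-up numbers).
dets = [(0.9, True), (0.8, False), (0.7, True), (0.6, True)]
ap = average_precision(dets, num_gt=3)
print(round(ap, 4))
```

With a single target class, as in discolored-crown detection, mAP reduces to the AP of that class.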

3. Results

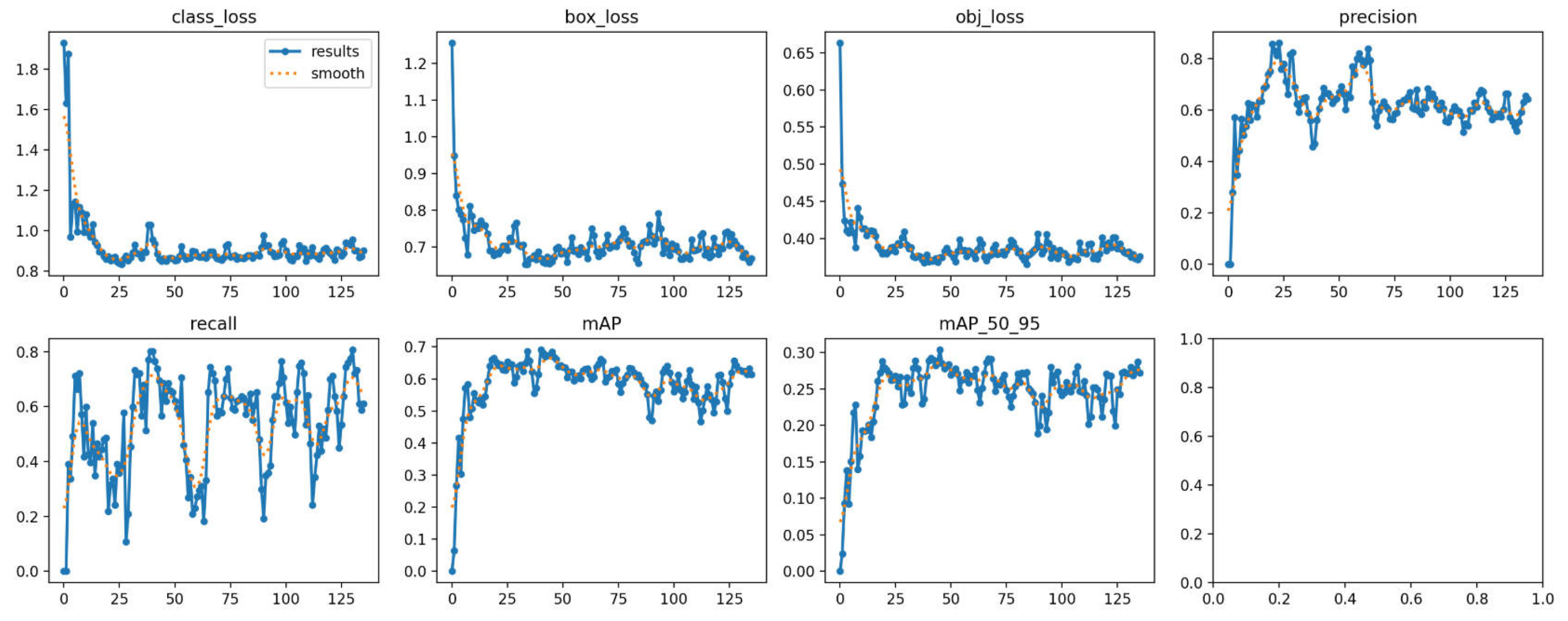

3.1. Model Indices and Decision Values

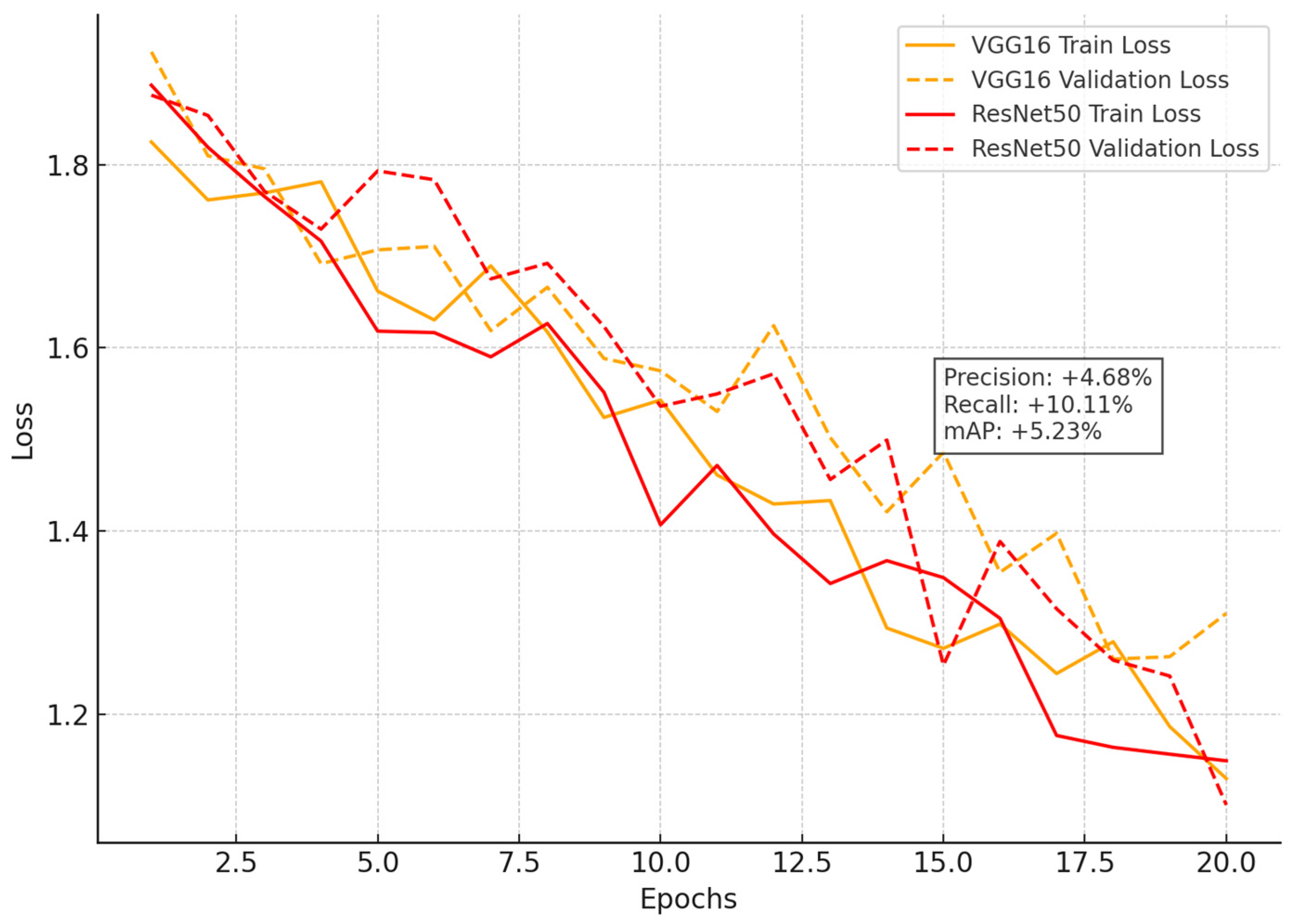

3.2. The Improvement of the Model

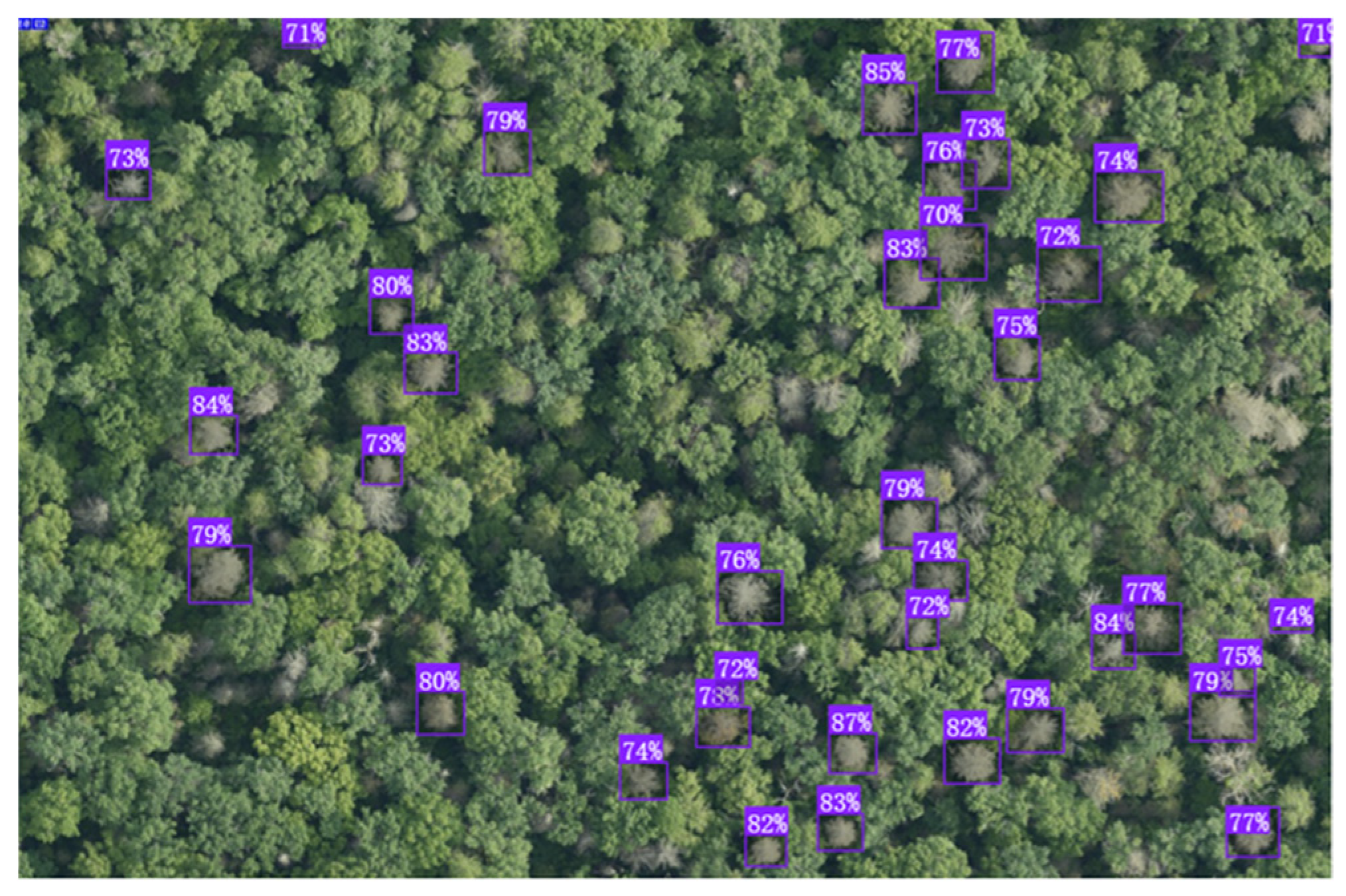

3.3. Application of the Model

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Metric | VGG16 | ResNet50 FPN V2 | Improvement (percentage points) |
|---|---|---|---|
| Precision | 85.54% | 90.22% | 4.68 |
| Recall | 82.22% | 92.33% | 10.11 |
| mAP | 78.40% | 83.63% | 5.23 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ma, H.; Yang, B.; Wang, R.; Yu, Q.; Yang, Y.; Wei, J. Automatic Extraction of Discolored Tree Crowns Based on an Improved Faster-RCNN Algorithm. Forests 2025, 16, 382. https://doi.org/10.3390/f16030382
