Accuracy Assessment of Drone Real-Time Open Burning Imagery Detection for Early Wildfire Surveillance

Abstract

1. Introduction

- A model combining a YOLOv5 detector with an LSTM classifier is proposed for training and testing drone real-time open burning imagery detection, called Dr-TOBID, for a wildfire surveillance platform.

- The deep learning framework is built in an Anaconda environment with tools such as OpenCV, YOLOv5, TensorFlow, LabelImg, PyCharm, and OBS.

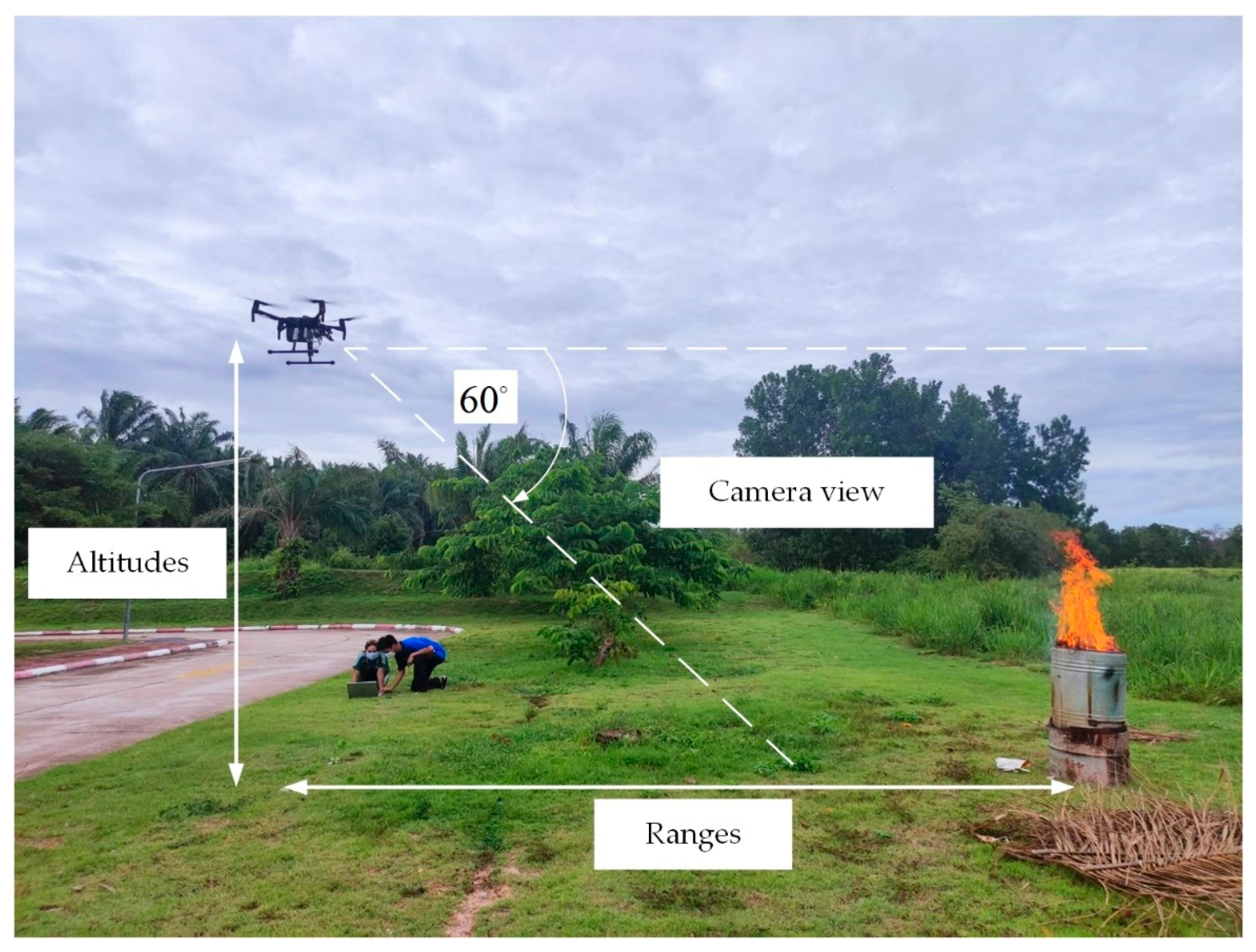

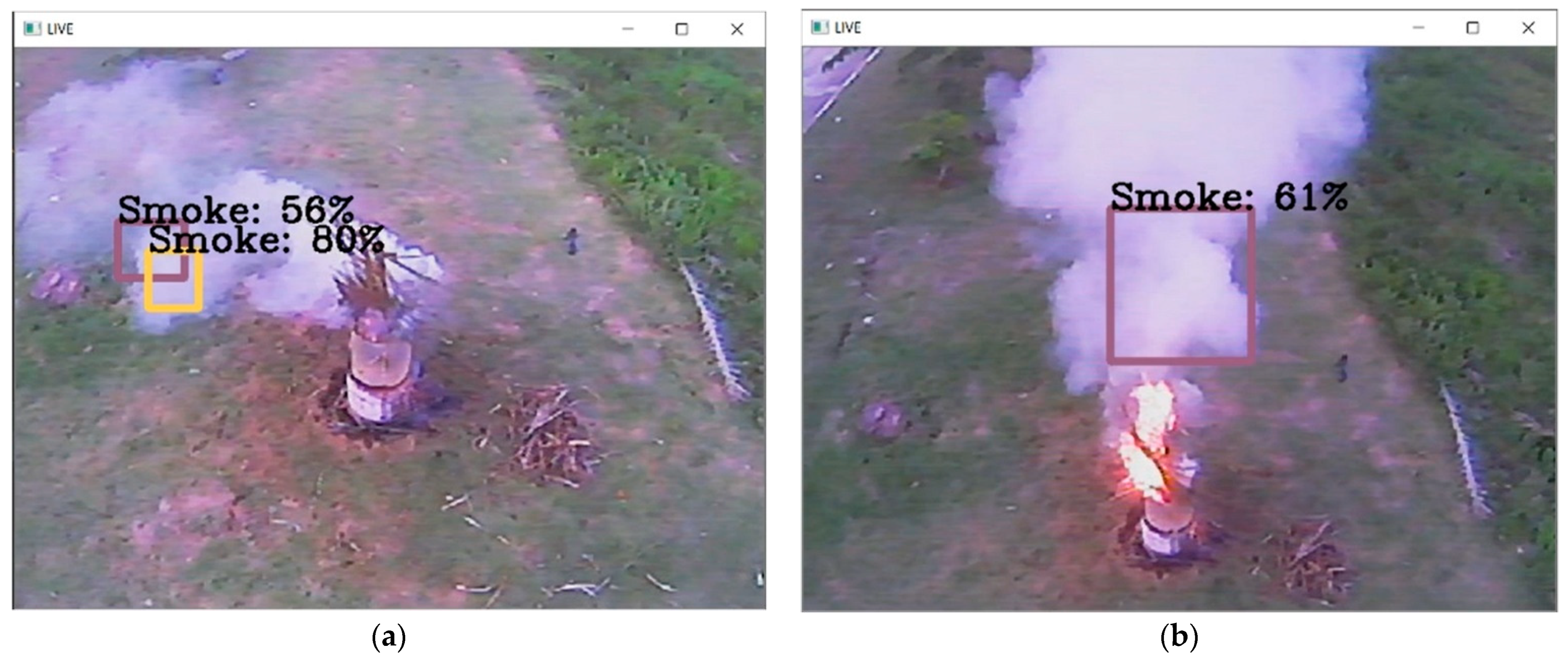

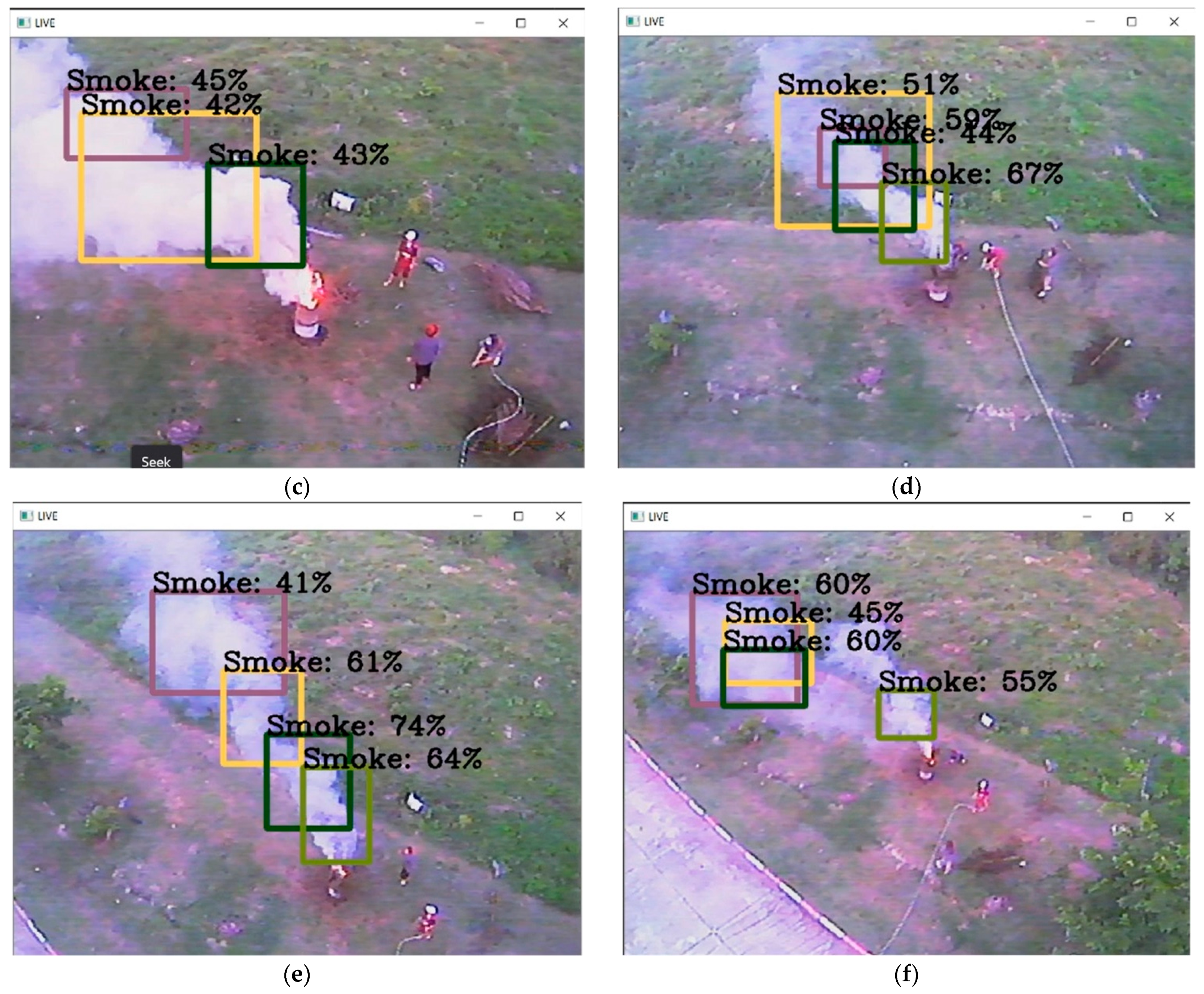

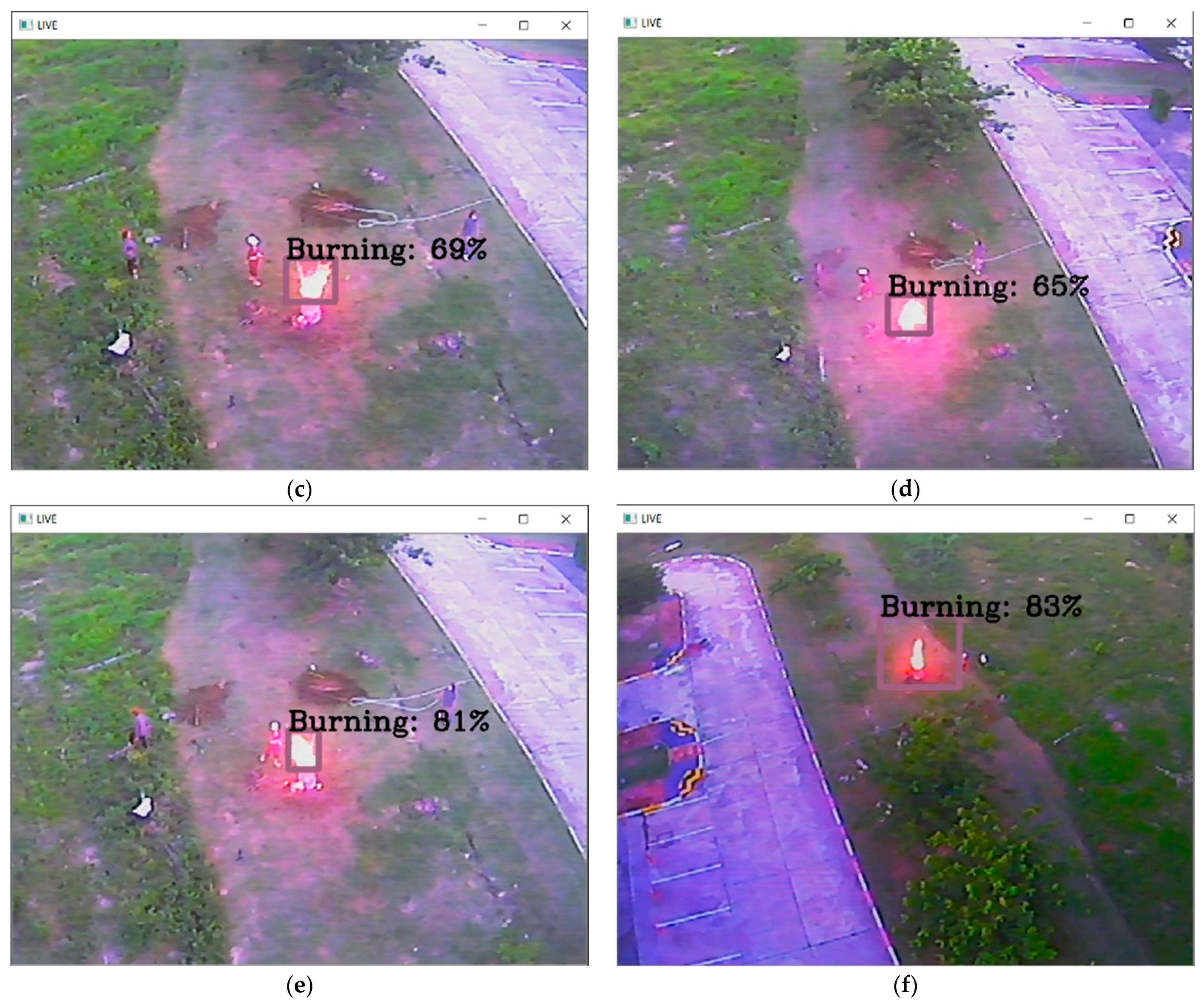

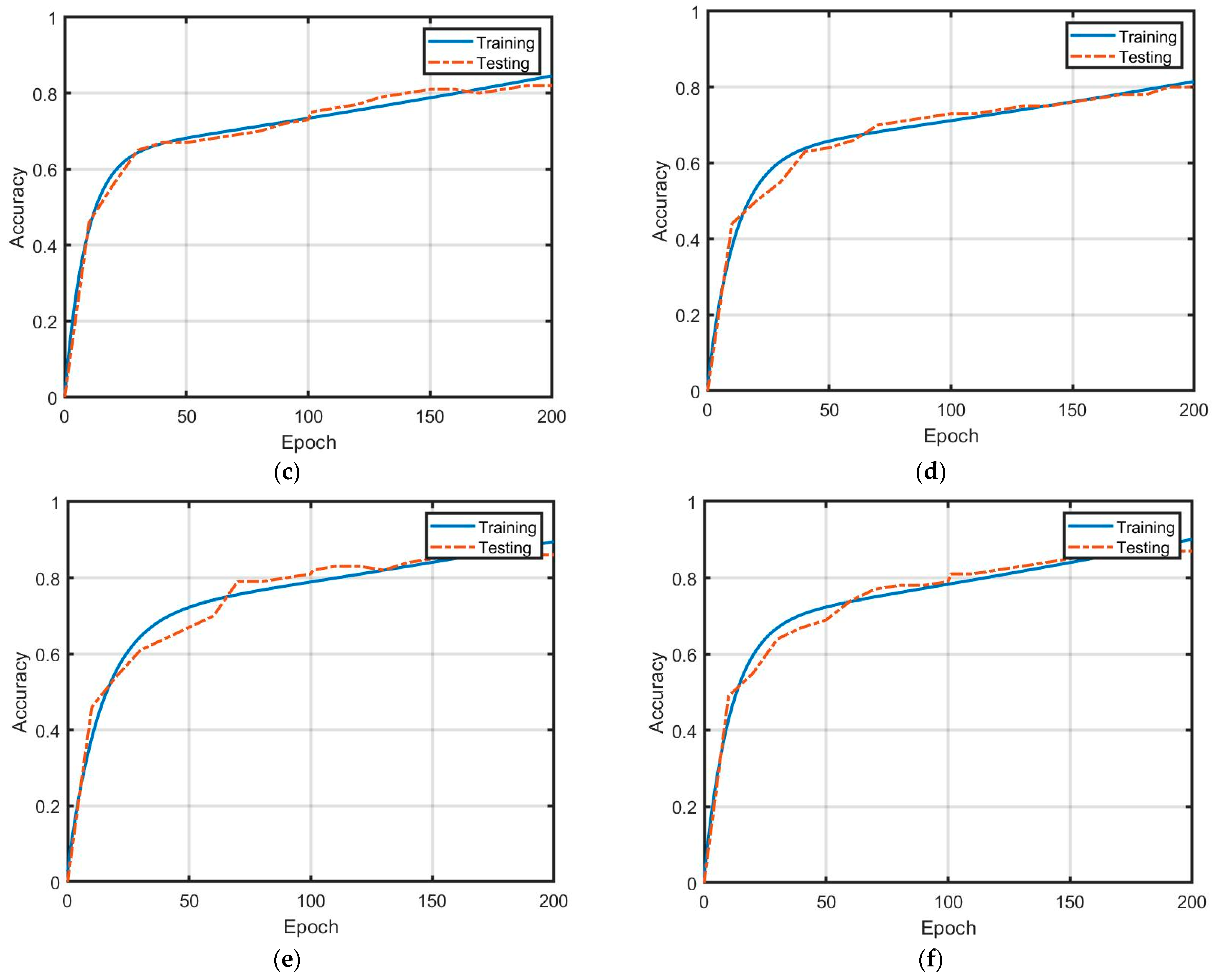

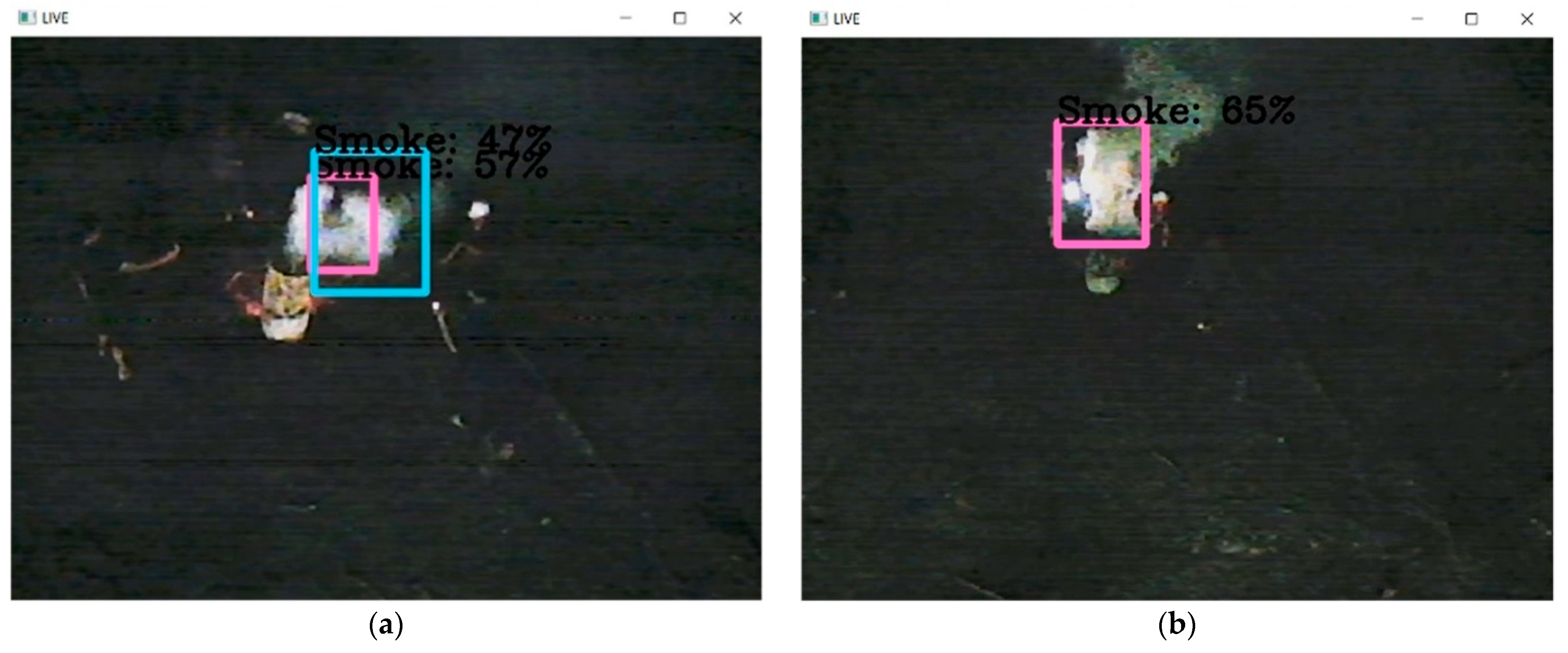

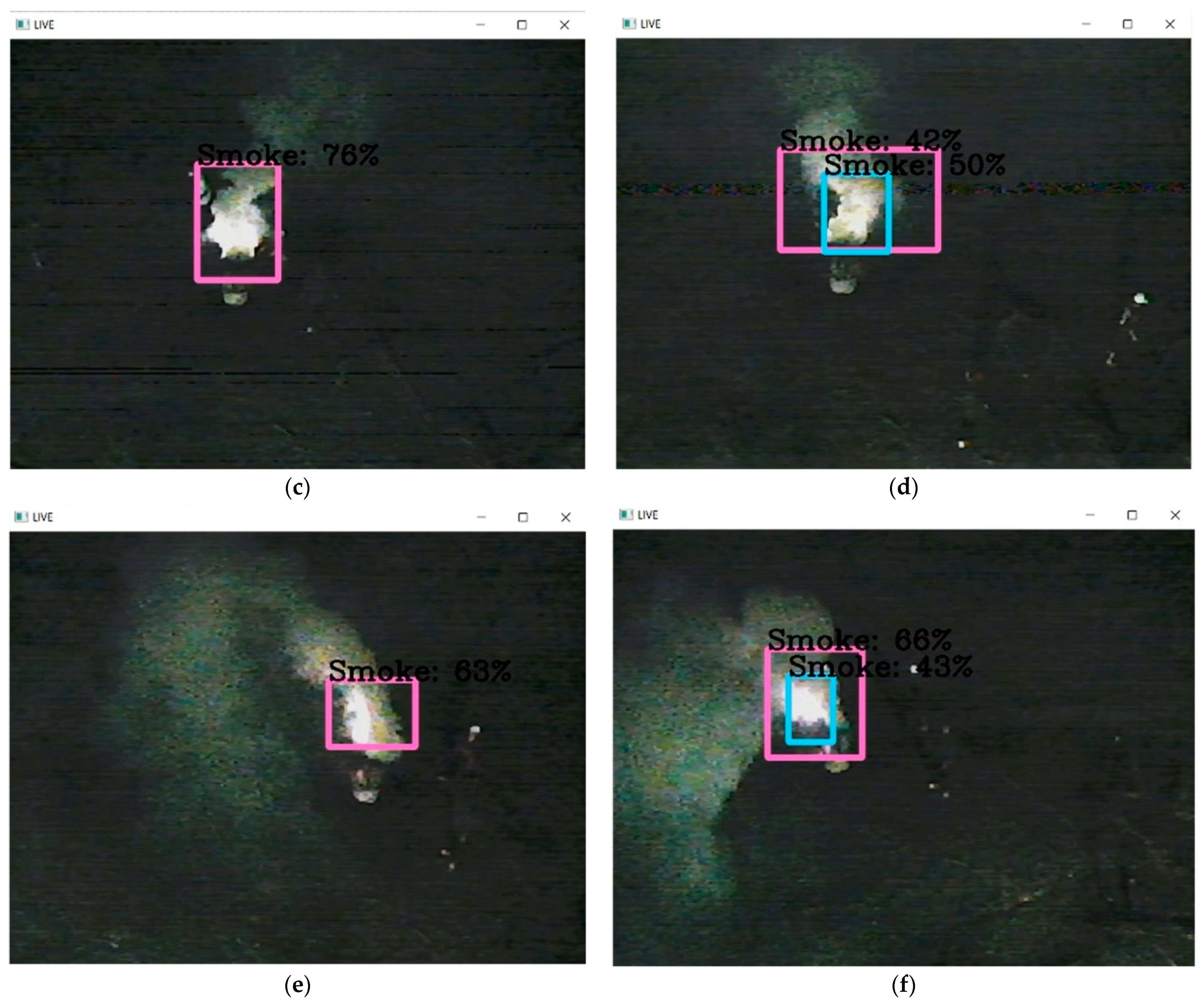

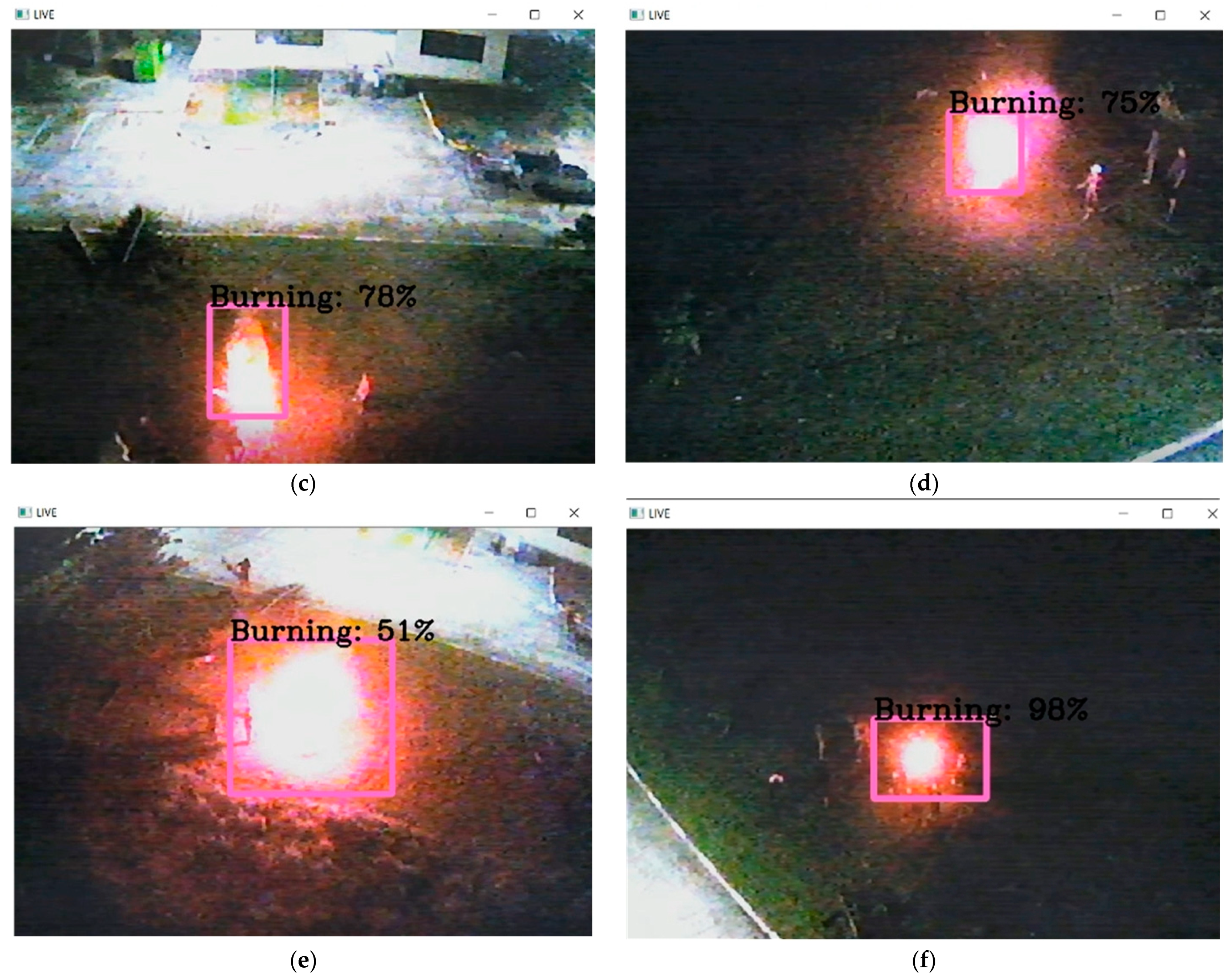

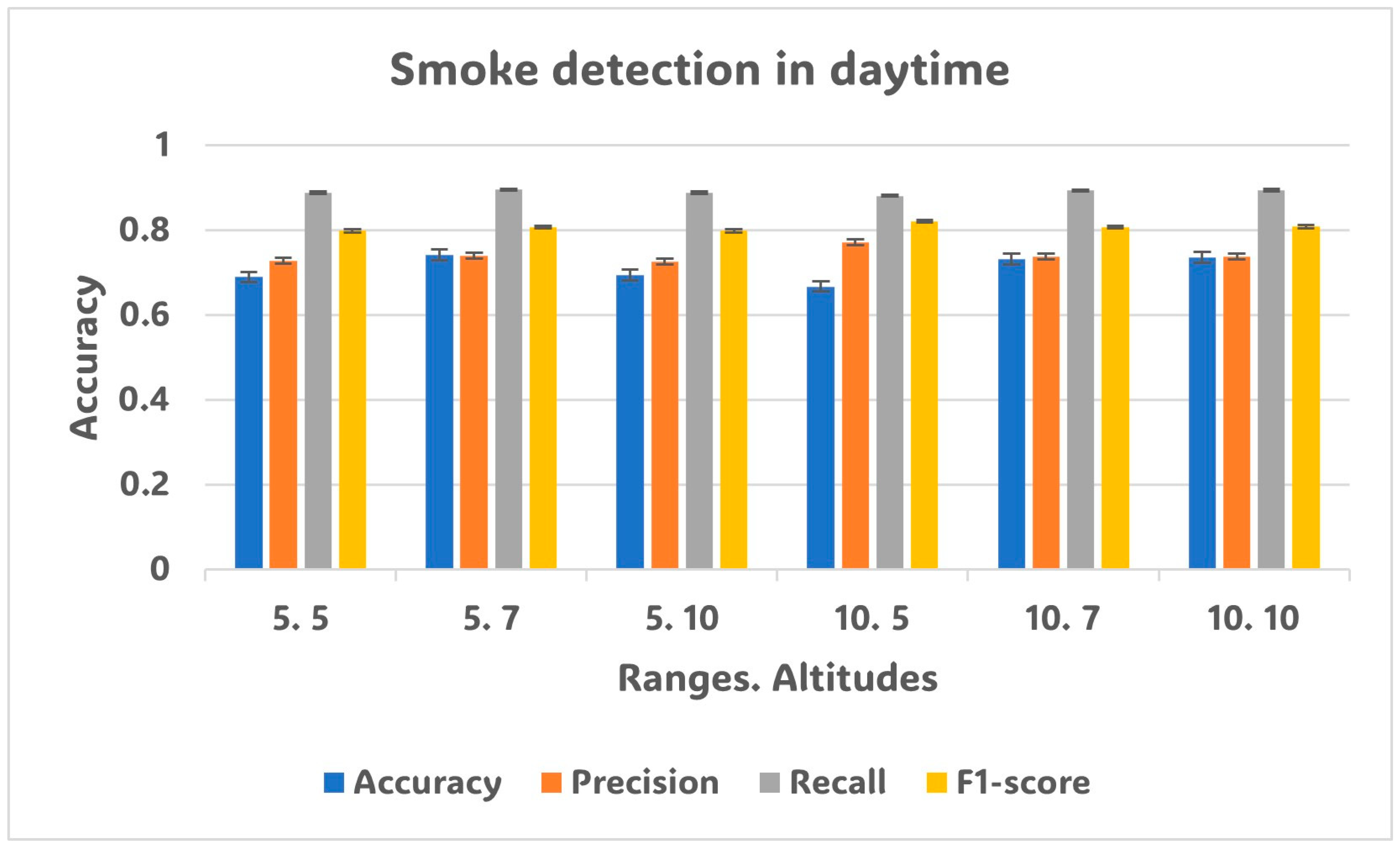

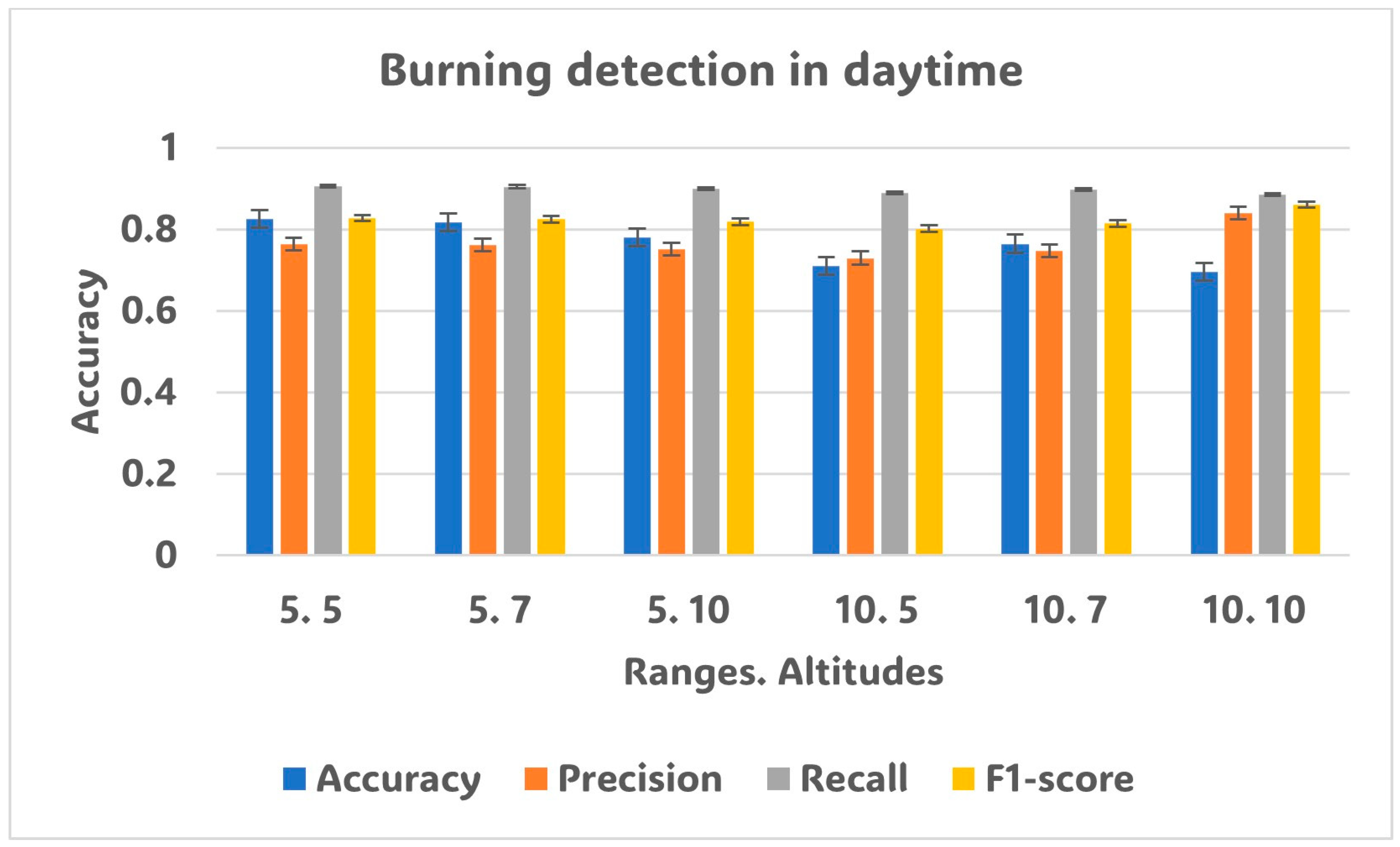

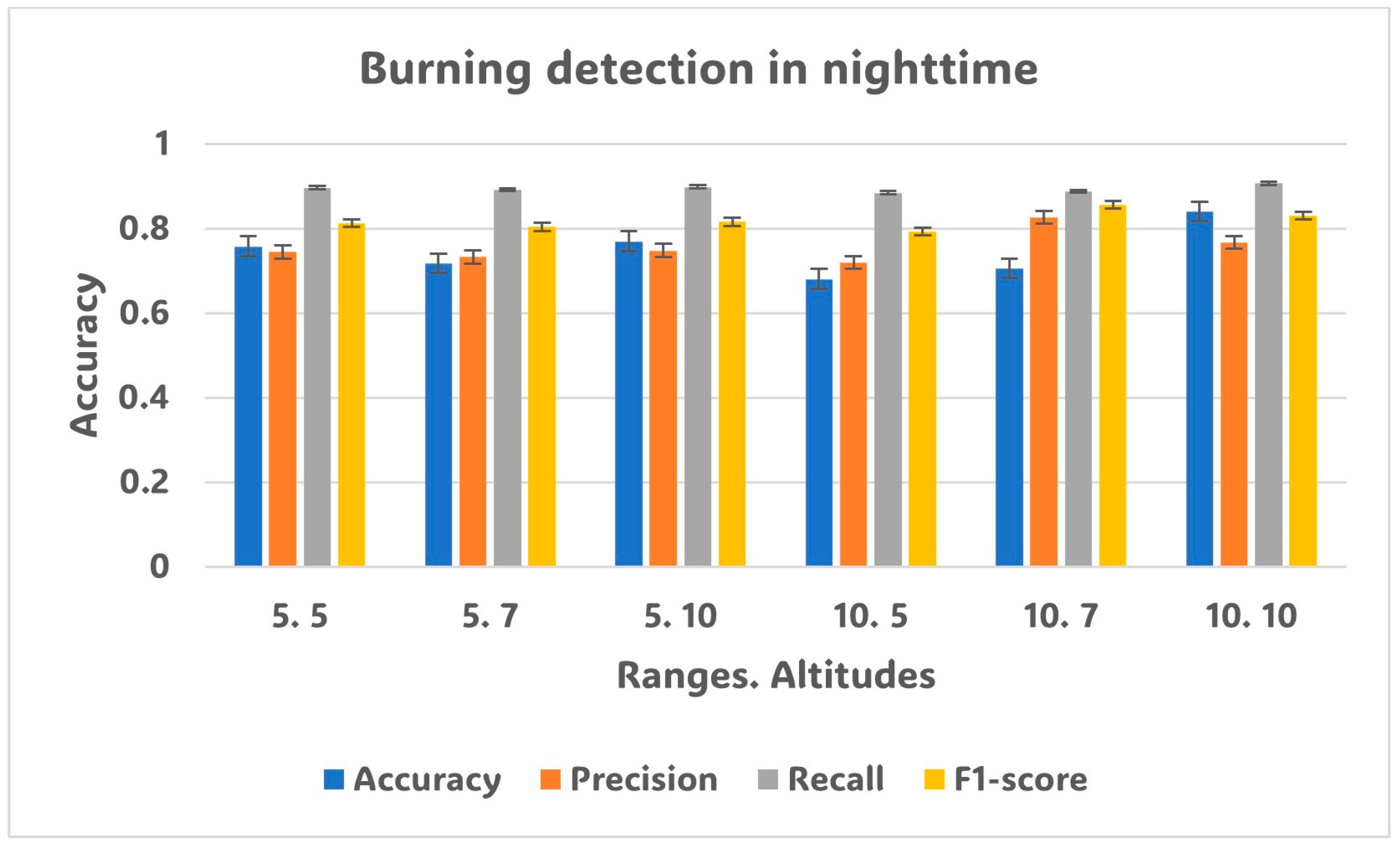

- Smoke and burning detection at open burning locations is investigated under different drone altitudes and ranges, using RGB mode in both daytime and nighttime.

- Finally, the accuracy of Dr-TOBID is assessed with the evaluation metrics accuracy, precision, recall, and F1-score.
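The four metrics named in the last point can be computed from confusion counts. The sketch below is only an illustration, assuming the standard binary definitions (a detection is either correct or not for one class). `ConfusionCounts` and `evaluate` are hypothetical names, and the counts in the usage line are made up rather than taken from the paper's experiments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Outcome counts over a test set (hypothetical container)."""
    tp: int  # true positives: fire/smoke present and detected
    fp: int  # false positives: detected but not present
    fn: int  # false negatives: present but missed
    tn: int  # true negatives: absent and not detected


def _safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def evaluate(c: ConfusionCounts) -> dict[str, float]:
    """Compute accuracy, precision, recall, and F1 from confusion counts."""
    precision = _safe_div(c.tp, c.tp + c.fp)
    recall = _safe_div(c.tp, c.tp + c.fn)
    return {
        "accuracy": _safe_div(c.tp + c.tn, c.tp + c.fp + c.fn + c.tn),
        "precision": precision,
        "recall": recall,
        # F1 is the harmonic mean of precision and recall.
        "f1": _safe_div(2 * precision * recall, precision + recall),
    }


if __name__ == "__main__":
    # Made-up counts for illustration only.
    print(evaluate(ConfusionCounts(tp=80, fp=20, fn=10, tn=40)))
```

Because F1 depends only on precision and recall, it can stay high even when accuracy is lower, since accuracy also counts true negatives.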

2. Related Works

Early Wildfire Surveillance

3. Methodology

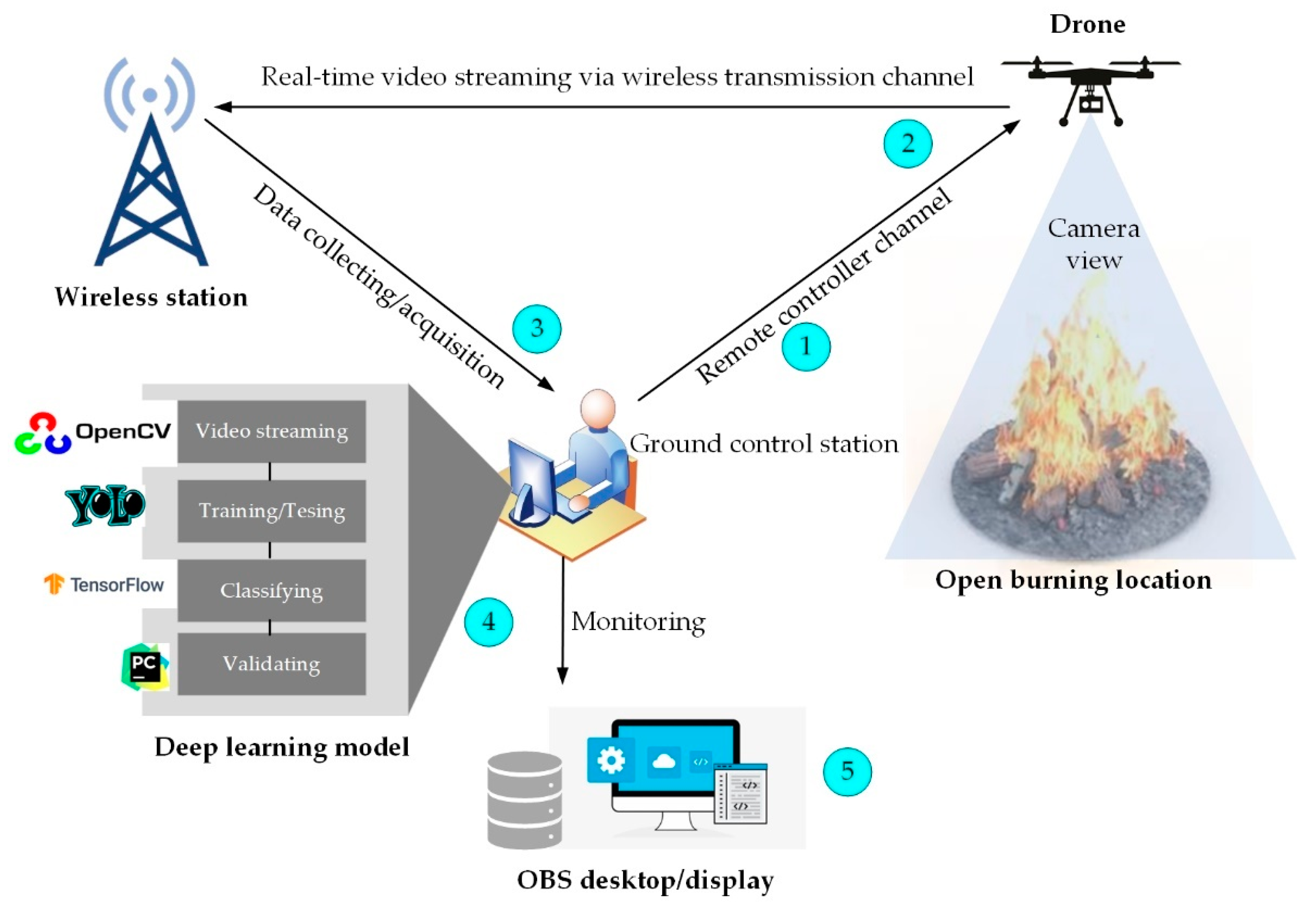

3.1. Frameworks

- The drone is flown by remote controller or along a planned path to search for open burning locations.

- Real-time video is streamed over the wireless transmission channel.

- The collected data are sent to the ground control station.

- The deep learning procedures follow: feature extraction, training/testing, classification, and validation of results.

- The monitoring data are displayed in the OBS software.

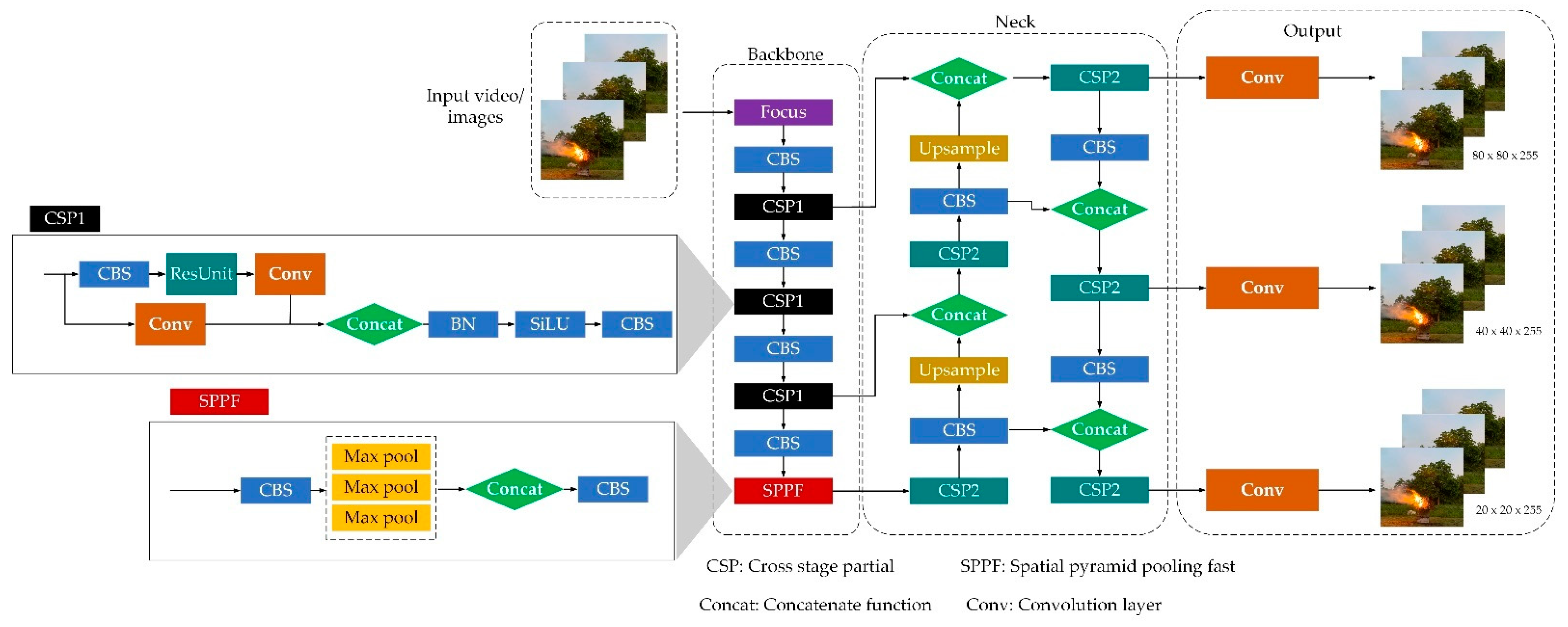
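The order of the framework steps (frames arrive at the ground station, a model processes them, results go to a display) can be sketched as a small generator pipeline. This is a structural illustration only, not the authors' implementation: `Frame`, `Detection`, `run_pipeline`, `fake_detector`, and the `min_confidence` threshold are all hypothetical, and the detector is a stub standing in for the trained YOLOv5 and LSTM model.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List


@dataclass
class Frame:
    index: int
    pixels: bytes  # placeholder for image data received at the ground station


@dataclass
class Detection:
    frame_index: int
    label: str  # "smoke" or "burning"
    confidence: float


# Hypothetical detector signature: one frame in, zero or more detections out.
Detector = Callable[[Frame], List[Detection]]


def run_pipeline(stream: Iterable[Frame], detect: Detector,
                 min_confidence: float = 0.5) -> Iterator[Detection]:
    """Yield detections in arrival order, dropping low-confidence ones."""
    for frame in stream:
        for det in detect(frame):
            if det.confidence >= min_confidence:
                yield det  # a display stage would consume these results


def fake_detector(frame: Frame) -> List[Detection]:
    """Stub model: confident smoke on every third frame, weak burning otherwise."""
    if frame.index % 3 == 0:
        return [Detection(frame.index, "smoke", 0.9)]
    return [Detection(frame.index, "burning", 0.2)]


if __name__ == "__main__":
    frames = (Frame(i, b"") for i in range(6))
    for det in run_pipeline(frames, fake_detector):
        print(det)
```

Passing the detector as a callable keeps the streaming loop independent of the model, so a stub can be swapped for a real model without changing the loop.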

3.1.1. YOLOv5 Model

3.1.2. Extension of YOLOv5 with LSTM layers

4. Experimental Setup

4.1. Dr-TOBID

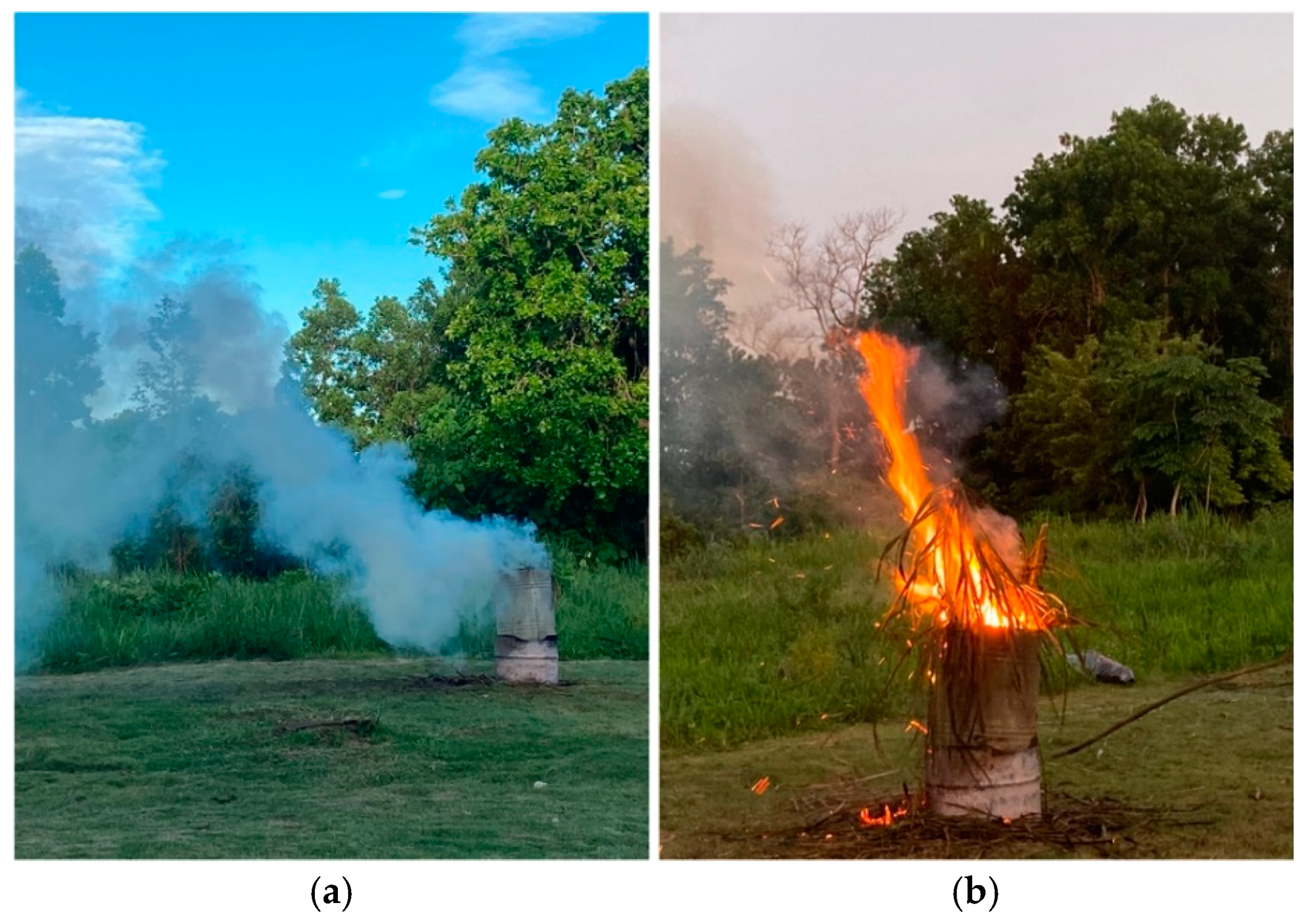

4.2. Dataset

4.3. Experimental Model

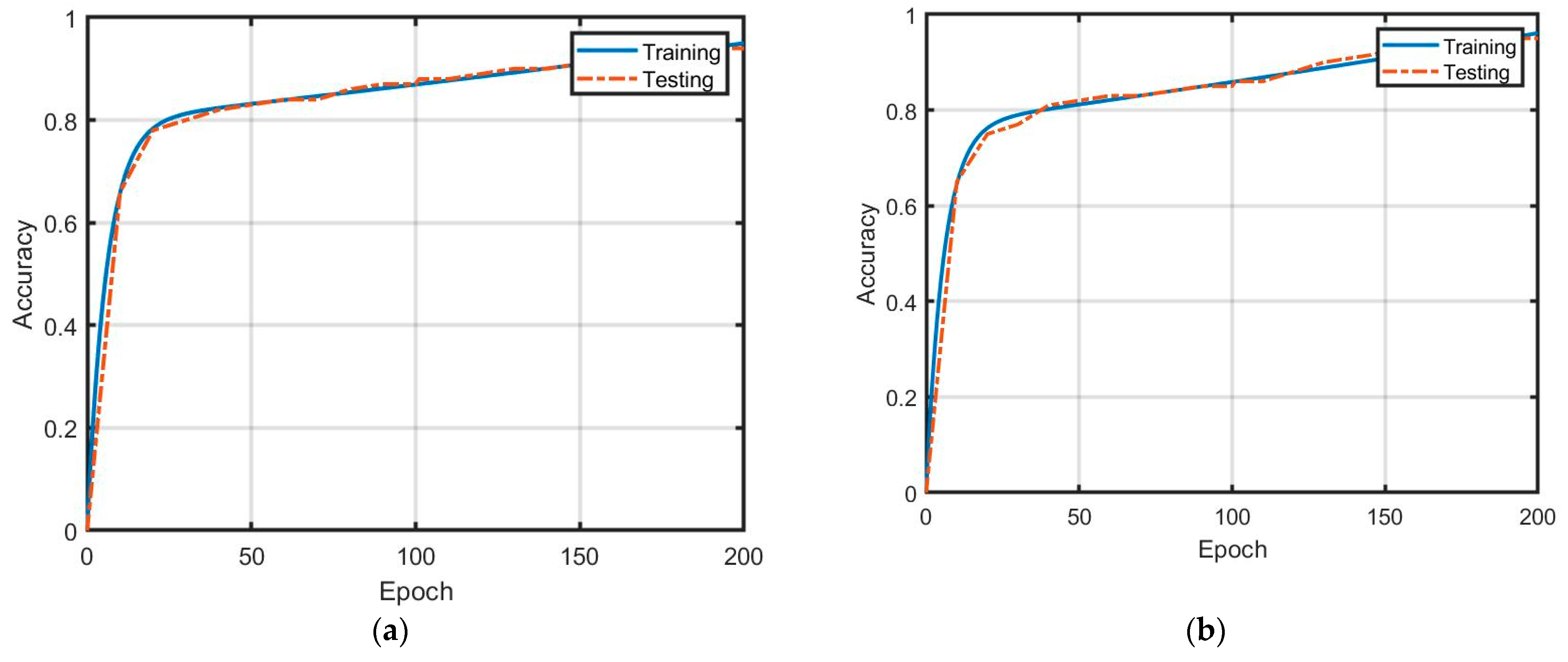

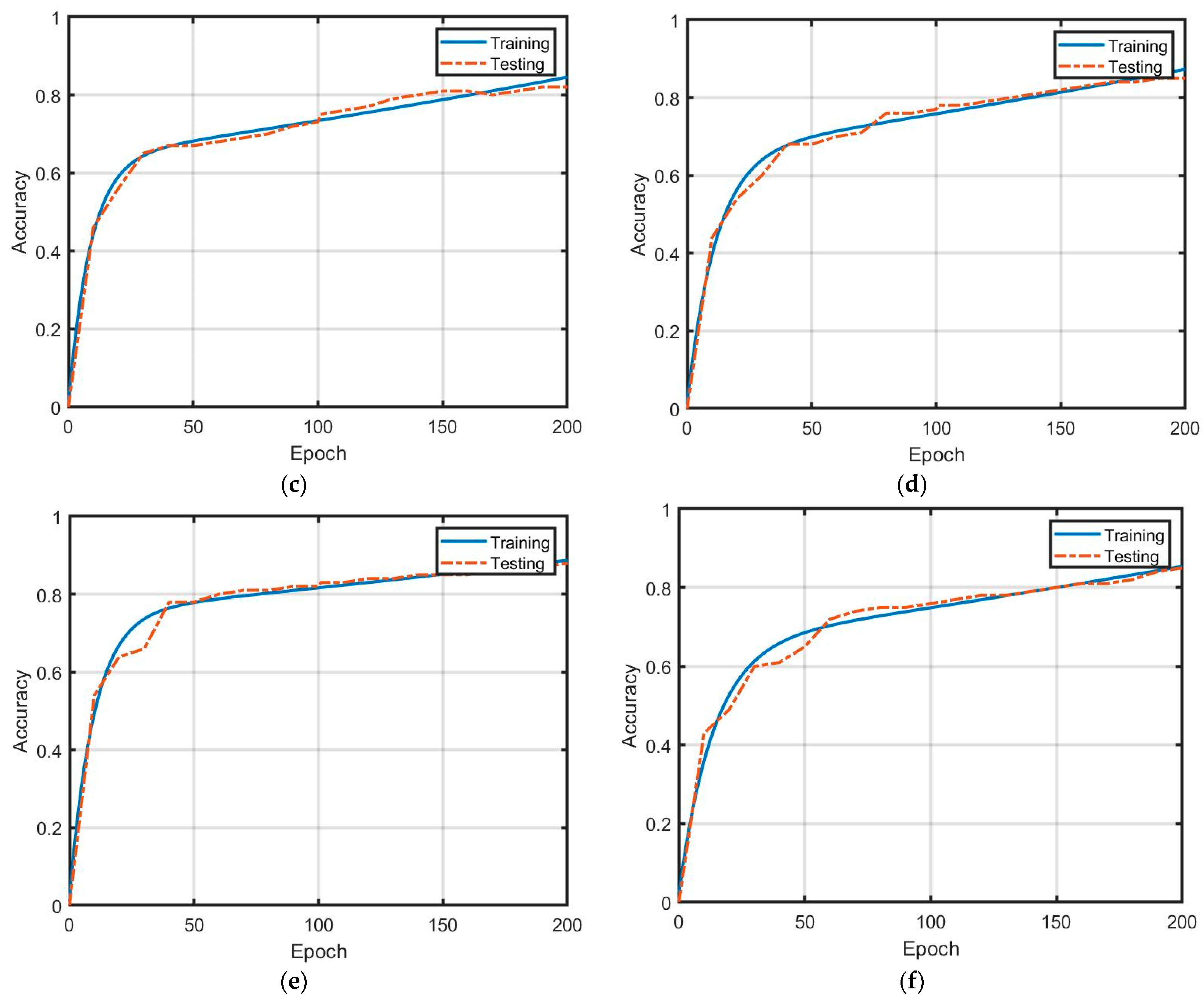

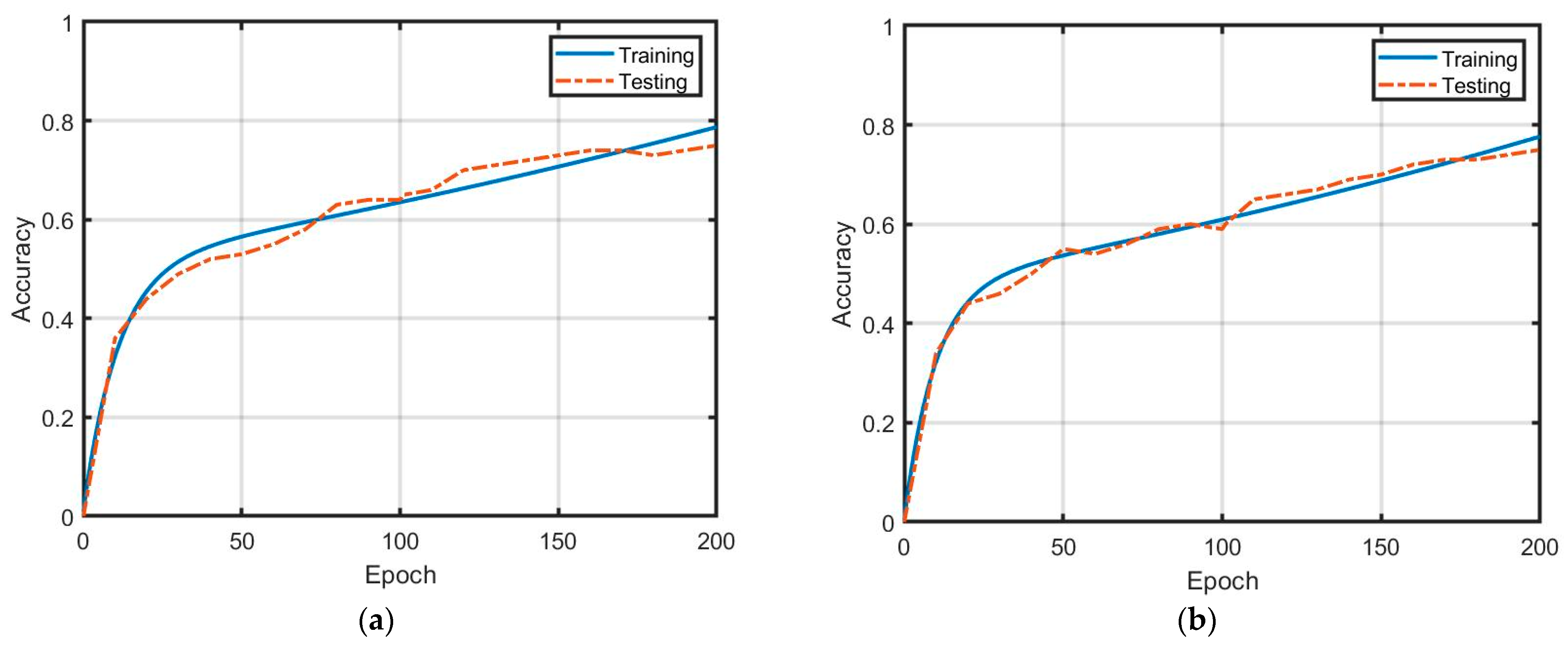

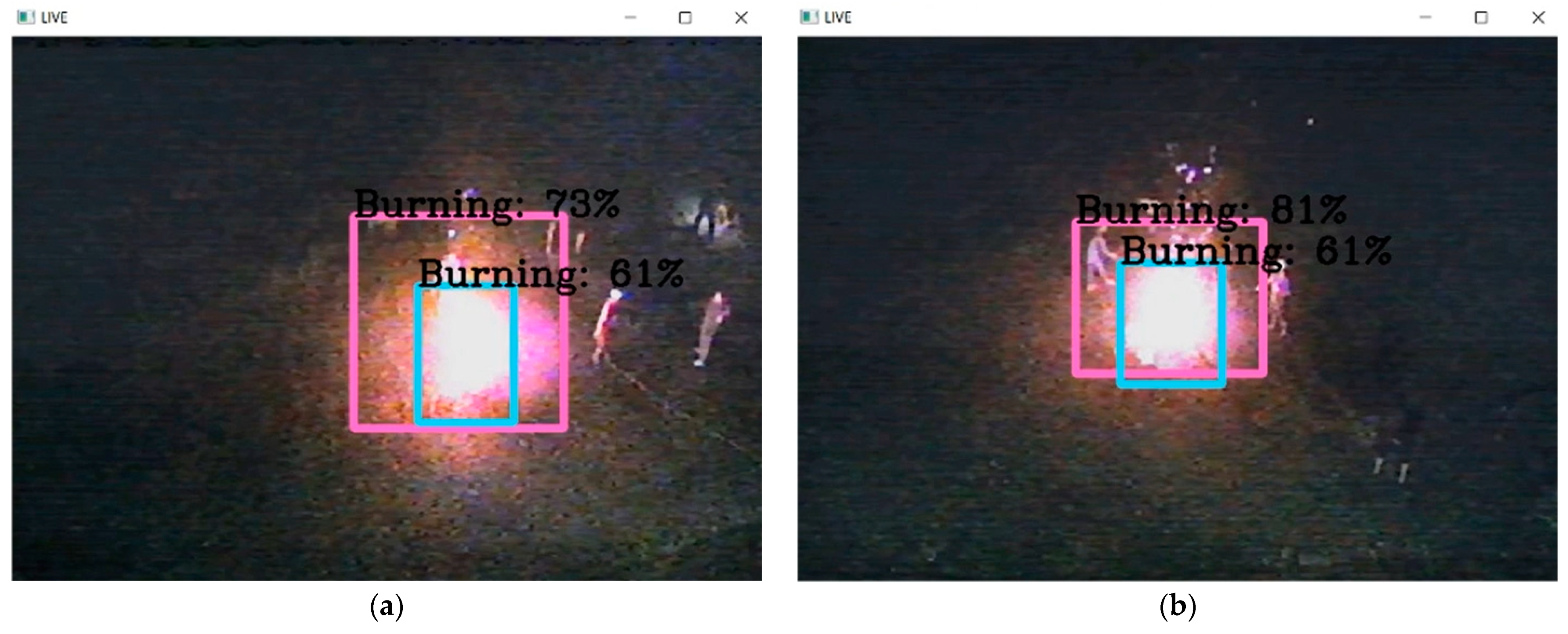

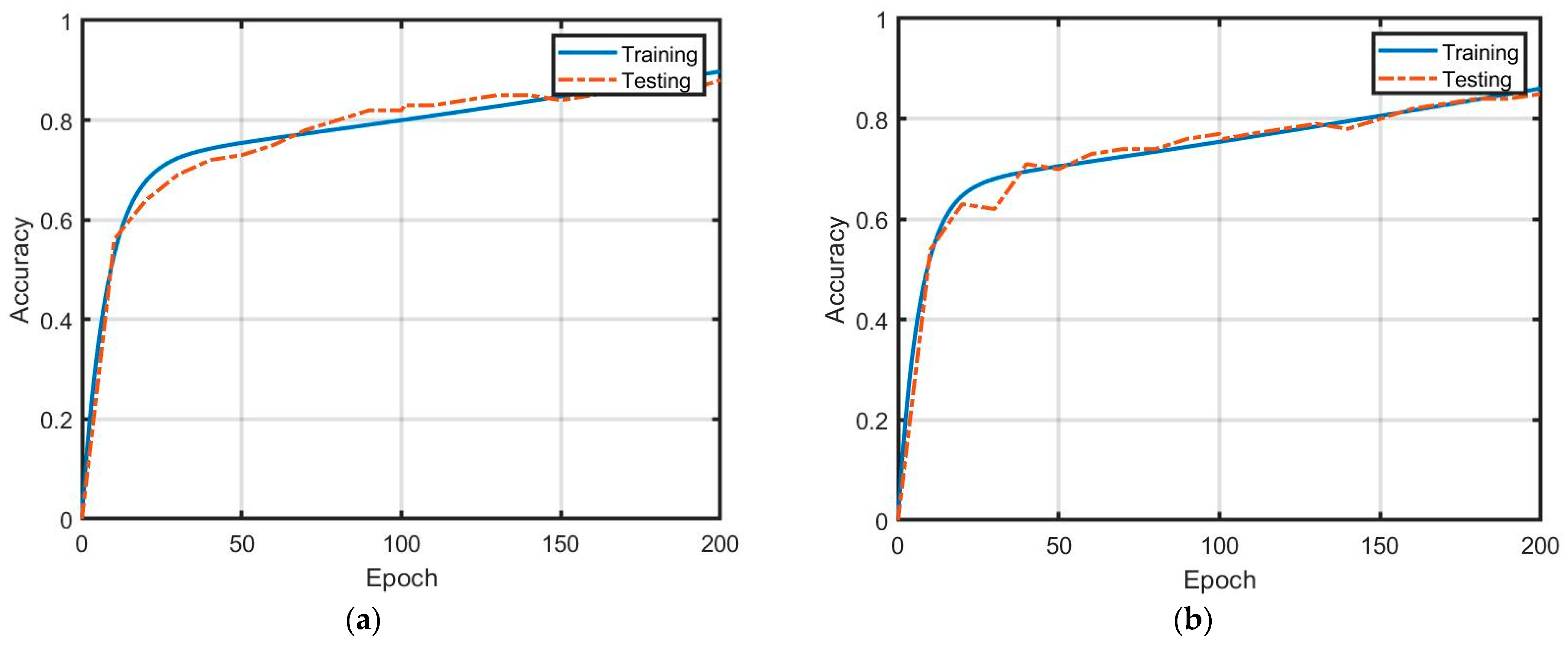

5. Results

5.1. Experimental Results

5.2. Discussion

- Communication network: Dr-TOBID uses a WiFi link with a maximum data rate of 12 Mbps. In practice, the usable data rate is 2 Mbps because of attenuation on the wireless link, which reduces the resolution of the video stream. An alternative solution is to use a mobile network [12]. In addition, HSV mode can guarantee resolution.

- Warning system: Forest fires must be detected early. The proposal in [33] can be applied to extend the system further.
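To see why the drop from 12 Mbps to 2 Mbps affects video quality, the link rate can be converted into a bit budget per frame. The sketch below assumes 1 Mbps = 10^6 bits per second, that the whole link carries video, and a Full HD frame of 1920 × 1080 pixels at 30 frames per second (the camera mode listed in the specifications). The function names are hypothetical.

```python
def bits_per_frame(link_rate_mbps: float, fps: float) -> float:
    """Average number of bits available for each video frame."""
    return link_rate_mbps * 1_000_000 / fps


def bits_per_pixel(link_rate_mbps: float, fps: float,
                   width: int, height: int) -> float:
    """Average bit budget per pixel after compression."""
    return bits_per_frame(link_rate_mbps, fps) / (width * height)


if __name__ == "__main__":
    for rate in (12.0, 2.0):  # maximum and usable data rates from the text
        bpf = bits_per_frame(rate, fps=30)
        bpp = bits_per_pixel(rate, fps=30, width=1920, height=1080)
        print(f"{rate:>4.0f} Mbps: {bpf:,.0f} bits/frame, {bpp:.4f} bits/pixel")
```

Under these assumptions the per-frame budget at 2 Mbps is one sixth of the budget at 12 Mbps, so the encoder has to compress harder, lower the resolution, or lower the frame rate.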

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Phairuang, W.; Hata, M.; Furuuchi, M. Influence of agricultural activities, forest fires and agro-industries on air quality in Thailand. J. Environ. Sci. 2017, 52, 85–97.

2. Jirataya, P.; Agapol, J.; Savitri, G. Assessment of air pollution from household solid waste open burning in Thailand. Sustainability 2018, 10, 2553.

3. Phairuang, W.; Suwattiga, P.; Chetiyanukornkul, T.; Hongteiab, S.; Limpaseni, W.; Ikemori, F.; Hata, M.; Furuuchi, M. The influence of the open burning of agricultural biomass and forest fires in Thailand on the carbonaceous components in size-fractionated particles. Environ. Poll. 2019, 247, 238–247.

4. Thangavel, K.; Spiller, D.; Sabatini, R.; Amici, S.; Sasidharan, S.T.; Fayek, H.; Marzocca, P. Autonomous satellite wildfire detection using hyperspectral imagery and neural network: A case study on Australian wildfire. Remote Sens. 2023, 15, 720.

5. Dash, P.J.; Pearse, D.G.; Watt, S.M. UAV multispectral imagery can complement satellite data for monitoring forest health. Remote Sens. 2018, 10, 1216.

6. Priya, S.R.; Vani, K. Deep learning based forest fire classification and detection in satellite images. In Proceedings of the 11th International Conference on Advanced Computing (ICoAC), Chennai, India, 18–20 December 2019.

7. Cao, Y.; Yang, F.; Tang, Q.; Lu, X. An attention enhanced bidirectional LSTM for early forest fire smoke recognition. IEEE Access 2019, 7, 154732–154742.

8. Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A review on early forest fire detection systems using optical remote sensing. Sensors 2020, 20, 6442.

9. Barmpoutis, P.; Kastridis, A.; Stathaki, T.; Yuan, J.; Shi, M.; Grammalidis, N. Suburban forest risk assessment and forest surveillance using 360-degree cameras and a multiscale deformable transformer. Remote Sens. 2023, 15, 1995.

10. Yuan, C.; Liu, Z.; Zhang, Y. Learning-based smoke detection for unmanned aerial vehicles applied to forest fire surveillance. J. Intel. Robot. Syst. 2019, 93, 337–349.

11. Partheepan, S.; Sanati, F.; Hassan, J. Autonomous unmanned aerial vehicles in bushfire management: Challenges and opportunities. Drones 2023, 7, 47.

12. Pandey, S.; Singh, R.; Kathuria, S.; Negi, P.; Chhabra, G.; Joshi, K. Emerging technologies for prevention and monitoring of forest fire. In Proceedings of the International Conference on Innovative Data Communication Technologies and Application (ICIDCA), Uttarakhand, India, 14–16 March 2023; pp. 1115–1121.

13. Geetha, S.; Adhishek, C.S.; Akshayanat, C.S. Machine vision based fire detection techniques: A survey. Fire Technol. 2021, 57, 591–623.

14. Kim, B.; Lee, J. A video-based fire detection using deep learning models. Appl. Sci. 2019, 9, 2862.

15. Reder, S.; Mund, J.P.; Albert, N.; Wabermann, L.; Miranda, L. Detection of windthrown tree stems on UAV-orthomosaics using U-Net convolutional networks. Remote Sens. 2022, 14, 75.

16. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intel. 2018, 40, 834–848.

17. Natekar, S.; Patil, S.; Nair, A.; Roychowdhury, S. Forest fire prediction using LSTM. In Proceedings of the 2nd International Conference for Emerging Technology (INCET), Belagavi, India, 21–23 May 2021; pp. 1–5.

18. Park, M.; Tran, D.Q.; Bak, J.; Park, S. Advanced wildfire detection using generative adversarial network-based augmented datasets and weakly supervised object localization. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 103052.

19. Haq, M.A.; Rahaman, G.; Baral, P.; Ghosh, A. Deep learning based supervised image classification using UAV images for forest areas classification. J. Indian Soc. Remote Sens. 2021, 43, 601–606.

20. Rahman Rasel, A.K.Z.; Sakif Nabil, S.M.; Sikder, N.; Masud, M.; Aljuaid, H.; Bairagi, A.K. Unmanned aerial vehicle assisted forest fire detection using deep convolutional neural network. Intel. Autom. Soft Comput. 2023, 35, 3259–3277.

21. Novac, I.; Geipel, K.G.; Domingo Gil, E.; Paula, L.G.; Hyttel, K.; Chrysostomou, D. A framework for wildfire inspection using deep convolutional neural networks. In Proceedings of the IEEE/SICE International Symposium on System Integration (SII), Honolulu, HI, USA, 12–15 January 2020; pp. 867–872.

22. Chandana, V.S.; Vasavi, S. Autonomous drones based forest surveillance using faster R-CNN. In Proceedings of the International Conference on Electronics and Renewable Systems (ICEARS), Tuticorin, India, 16–18 March 2022; pp. 1718–1723.

23. Guede-Fernandaz, F.; Martins, L.; Almeida, R.V.; Gamboa, H.; Vieira, P. A deep learning based object identification system for forest fire detection. Fire 2021, 4, 75.

24. Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early fire detection based on aerial 360-degree sensors, deep convolution neural networks and exploitation of fire dynamic textures. Remote Sens. 2020, 12, 3177.

25. Khudayberdiev, O.; Zhang, J.; Abdullahi, S.M.; Zhang, S. Light-Firenet: An efficient lightweight network for fire detection in diverse environments. Multi. Tools App. 2022, 81, 24553–24572.

26. Harkat, H.; Nascimento, J.M.P.; Bernardino, A.; Thariq Ahmed, H.F. Assessing the impact of the loss function and encoder architecture for fire aerial images segmentation using Deeplabv3+. Remote Sens. 2022, 14, 2023.

27. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intel. 2017, 39, 2481–2495.

28. Mohapatra, A.; Trinh, T. Early wildfire detection technologies in practice—A Review. Sustainability 2022, 14, 12270.

29. Maqbool, A.; Mirza, A.; Afzal, F.; Shah, T.; Khan, W.Z.; Zikria, Y.B.; Kim, S.W. System-level performance analysis of cooperative multiple unmanned aerial vehicles for wildfire surveillance using agent-based modeling. Sustainability 2022, 14, 5927.

30. Thangavel, K.; Spiller, D.; Sabatini, R.; Marzocca, P.; Esposito, M. Near real-time wildfire management using distributed satellite system. IEEE Geo. Remot. Sens. Let. 2023, 20, 550070.

31. Carta, F.; Zidda, C.; Putzu, M.; Loru, D.; Anedda, M. Advancements in forest fire prevention: A comprehensive survey. Sensors 2023, 23, 6635.

32. Peruzzi, G.; Pozzebon, A.; Van Der Meer, M. Fight fire with fire: Detecting forest fires with embedded machine learning models dealing with audio and images on low power IoT devices. Sensors 2023, 23, 783.

33. Jemmali, M.; B.Melhim, L.K.; Boulila, W.; Amdouni, H.; Alharbi, M.T. Optimizing forest fire prevention: Intelligent scheduling algorithms for drone-based surveillance system. arXiv 2023, arXiv:2035:10444.

34. Talaat, F.M.; ZainEldin, H. An improved fire detection approach based on YOLO-v8 for smart cities. Neural Comp. Appli. 2023, 35, 20939.

35. Ghali, R.; Akhloufi, M.A.; Mseddi, W.S. Deep learning and transformer approaches for UAV-based wildfire detection and segmentation. Sensors 2022, 22, 1977.

36. Khan, S.; Khan, A. FFireNet: Deep learning based forest fire classification and detection in smart cities. Symmetry 2022, 14, 2155.

37. Micheal, A.A.; Vani, K.; Sanjeevi, S.; Lin, C.-H. Object detection and tracking with UAV data using deep learning. J. Indian Soc. Remote Sens. 2021, 49, 463–469.

38. Jeong, M.; Park, M.; Nam, J.; Chul Ko, B. Light-weight student LSTM for real-time wildfire smoke detection. Sensors 2020, 20, 5508.

39. Zhao, H.; Zhou, Y.; Zhang, L.; Peng, Y.; Hu, X.; Peng, H.; Cai, X. Mixed YOLOv3-Lite: A lightweight real-time object detection method. Sensors 2020, 20, 1861.

40. Bouguettaya, A.; Zarzour, H.; Taberkit, A.M. A review on early wildfire detection from unmanned aerial vehicles using deep learning-based computer vision algorithms. Signal Proc. 2022, 190, 108309.

41. Mukhiddinov, M.; Abdusalomov, A.B.; Cho, J. A wildfire smoke detection system using unmanned aerial vehicles images based on the optimized YOLOv5. Sensors 2022, 22, 9384.

42. Bahher, C.; Ksibi, A.; Ayadi, M.; Jamjoom, M.M.; Ullah, Z.; Soufiene, B.O. Wildfire and smoke detection using staged YOLO model and ensemble CNN. Electronics 2023, 12, 228.

43. Battistoni, P.; Cantone, A.A.; Martino, G.; Passamano, V.; Romano, M.; Sebillo, M.; Vitiello, G. A cyber-physical system for wildfire detection and firefighting. Future Internet 2023, 15, 237.

| Types | Images | Videos | Total |
|---|---|---|---|
| Smoke | 1489 | 89 | 1578 |
| Burning | 1462 | 72 | 1534 |

| Description | Specifications |
|---|---|
| FPV Camera 1 | Full HD 1080p at 30 fps |
| WiFi module | 5.8 GHz wireless link |
| Flight time | 25 min |
| Storage | SSD: 512 GB |
| CPU | Intel Core i7-7700K |
| GPU | NVIDIA GeForce GTX 1080 Ti |
| RAM | DDR4 16 GB |
| WiFi module transmit power | 26 dBm |
| Operating system | Windows 11 |
| Software installations | Anaconda Navigator 3, OpenCV, YOLOv5, TensorFlow, LabelImg, PyCharm, and OBS |

| Time of day | Detection | Range (m) | Altitude (m) | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Daytime | Smoke | 5 | 5 | 0.689 | 0.727 | 0.888 | 0.798 |
| Daytime | Smoke | 5 | 7 | 0.742 | 0.739 | 0.895 | 0.807 |
| Daytime | Smoke | 5 | 10 | 0.694 | 0.725 | 0.888 | 0.798 |
| Daytime | Smoke | 10 | 5 | 0.667 | 0.772 | 0.881 | 0.821 |
| Daytime | Smoke | 10 | 7 | 0.732 | 0.737 | 0.893 | 0.807 |
| Daytime | Smoke | 10 | 10 | 0.736 | 0.738 | 0.894 | 0.808 |
| Daytime | Burning | 5 | 5 | 0.826 | 0.764 | 0.906 | 0.828 |
| Daytime | Burning | 5 | 7 | 0.818 | 0.762 | 0.905 | 0.825 |
| Daytime | Burning | 5 | 10 | 0.780 | 0.751 | 0.900 | 0.819 |
| Daytime | Burning | 10 | 5 | 0.710 | 0.730 | 0.890 | 0.802 |
| Daytime | Burning | 10 | 7 | 0.765 | 0.747 | 0.898 | 0.815 |
| Daytime | Burning | 10 | 10 | 0.696 | 0.840 | 0.885 | 0.861 |
| Nighttime | Smoke | 5 | 5 | 0.602 | 0.689 | 0.869 | 0.768 |
| Nighttime | Smoke | 5 | 7 | 0.583 | 0.683 | 0.866 | 0.761 |
| Nighttime | Smoke | 5 | 10 | 0.644 | 0.744 | 0.878 | 0.805 |
| Nighttime | Smoke | 10 | 5 | 0.667 | 0.715 | 0.882 | 0.788 |
| Nighttime | Smoke | 10 | 7 | 0.635 | 0.703 | 0.877 | 0.779 |
| Nighttime | Smoke | 10 | 10 | 0.634 | 0.702 | 0.875 | 0.778 |
| Nighttime | Burning | 5 | 5 | 0.758 | 0.745 | 0.897 | 0.813 |
| Nighttime | Burning | 5 | 7 | 0.718 | 0.733 | 0.892 | 0.804 |
| Nighttime | Burning | 5 | 10 | 0.770 | 0.748 | 0.899 | 0.816 |
| Nighttime | Burning | 10 | 5 | 0.681 | 0.720 | 0.885 | 0.793 |
| Nighttime | Burning | 10 | 7 | 0.706 | 0.827 | 0.888 | 0.857 |
| Nighttime | Burning | 10 | 10 | 0.841 | 0.767 | 0.907 | 0.831 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Duangsuwan, S.; Klubsuwan, K. Accuracy Assessment of Drone Real-Time Open Burning Imagery Detection for Early Wildfire Surveillance. Forests 2023, 14, 1852. https://doi.org/10.3390/f14091852
