A Tree Point Cloud Simplification Method Based on FPFH Information Entropy

Abstract

1. Introduction

2. Related Works

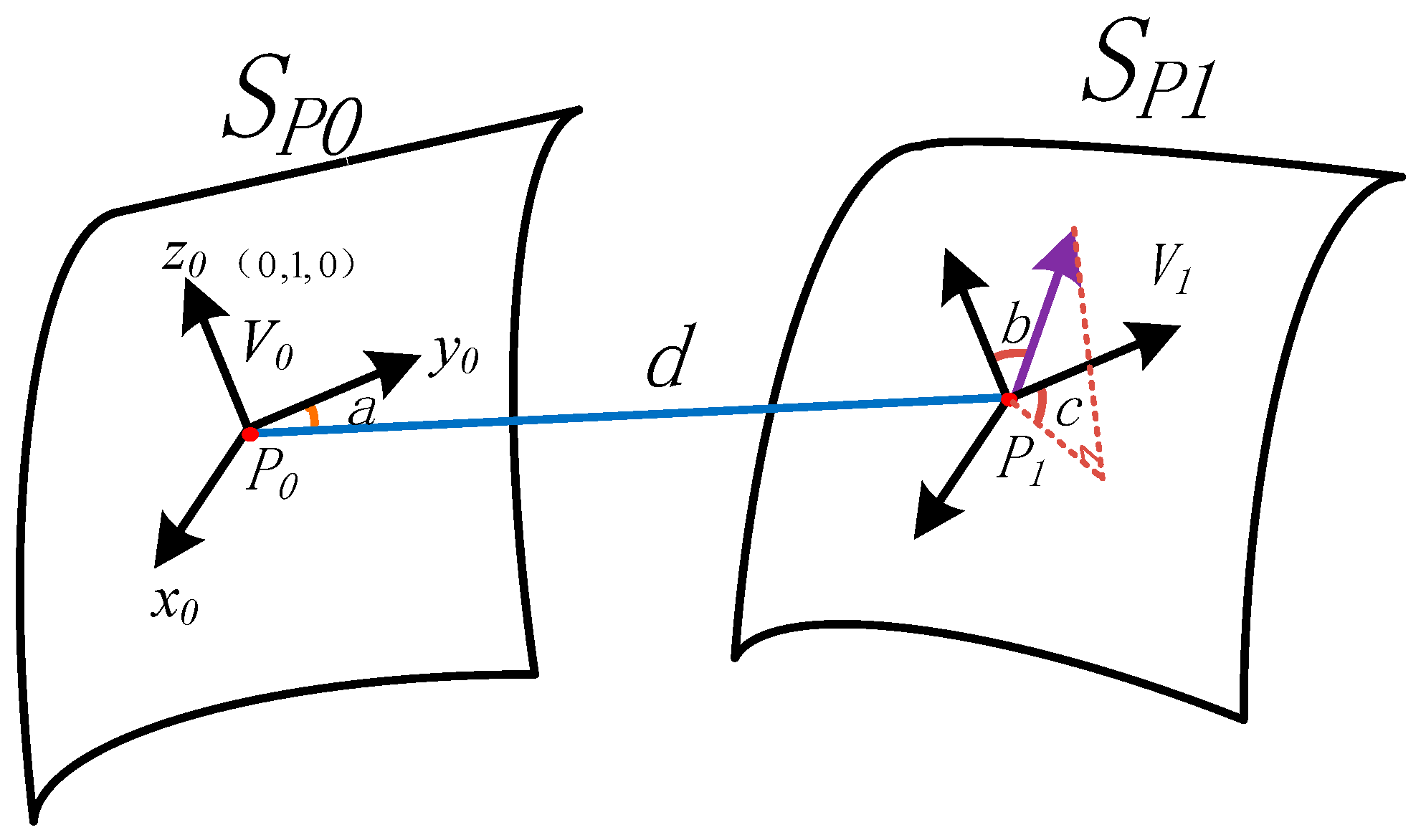

2.1. Fast Point Feature Histograms Principle

2.2. The Proposed Method

2.2.1. Determination of FPFH Neighborhood Searching Ranges

2.2.2. Extraction of Feature Points

- (1) Encoding: each chromosome is represented by a real number in the range (0, 1).

- (2) Population initialization: randomly generate n real numbers as the initial population, where n is the population size.

- (3) Fitness evaluation: calculate the objective function value of each chromosome; the smaller the value, the higher the fitness assigned to the chromosome.

- (4) Selection: use roulette wheel selection to choose the chromosomes that form the next generation, so that chromosomes with higher fitness are more likely to be selected.

- (5) Crossover: with crossover probability $p_c$, apply two-point crossover: randomly select two parent chromosomes and exchange the segment between the two crossover points to produce two offspring chromosomes.

- (6) Mutation: with mutation probability $p_m$, randomly select a gene of a chromosome and add a small random value to it.

- (7) Repeat steps (3) to (6) until the maximum number of generations is reached.

- (8) Select the chromosome with the highest fitness in the last generation as the optimal solution.
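The steps above can be sketched as a small real-coded genetic algorithm. This is a minimal illustration, not the authors' code. Because the steps refer to genes and two-point crossover, it assumes each chromosome is a short vector of values in (0, 1) (for instance, the two feature-point thresholds named in the conclusions). The objective, the Gaussian mutation step `sigma`, the `seed`, and the default values of `pc`, `pm`, and the population size are all hypothetical. Mapping each objective value to `worst - value` is one common way to turn minimization into positive roulette weights.

```python
import random
from typing import Callable, List

Chromosome = List[float]


def genetic_minimize(
    objective: Callable[[Chromosome], float],
    n_genes: int = 2,          # assumption: e.g. two thresholds per chromosome
    pop_size: int = 30,        # n in step (2); hypothetical default
    generations: int = 100,    # maximum number of generations, step (7)
    pc: float = 0.8,           # crossover probability (hypothetical value)
    pm: float = 0.1,           # mutation probability (hypothetical value)
    sigma: float = 0.05,       # size of the "small random value" in step (6)
    seed: int = 0,
) -> Chromosome:
    """Real-coded GA following steps (1)-(8); smaller objective values are better."""
    rng = random.Random(seed)
    eps = 1e-9

    def clip(x: float) -> float:
        # Keep every gene inside the open interval (0, 1) used for encoding.
        return min(max(x, eps), 1.0 - eps)

    def fitness(population: List[Chromosome]) -> List[float]:
        # Step (3): lower objective -> higher fitness; all weights stay positive.
        values = [objective(c) for c in population]
        worst = max(values)
        return [worst - v + eps for v in values]

    # Step (2): random initial population.
    pop = [[rng.uniform(eps, 1.0 - eps) for _ in range(n_genes)] for _ in range(pop_size)]

    for _ in range(generations):
        # Step (4): roulette-wheel selection, probability proportional to fitness.
        parents = rng.choices(pop, weights=fitness(pop), k=pop_size)
        offspring: List[Chromosome] = []
        for a, b in zip(parents[0::2], parents[1::2]):
            a, b = a[:], b[:]  # copy so offspring do not alias their parents
            # Step (5): two-point crossover with probability pc.
            if rng.random() < pc:
                i, j = sorted(rng.sample(range(n_genes + 1), 2))
                a[i:j], b[i:j] = b[i:j], a[i:j]
            offspring += [a, b]
        if len(offspring) < pop_size:  # odd population size: carry one parent over
            offspring.append(parents[-1][:])
        # Step (6): with probability pm, add a small Gaussian step to one gene.
        for c in offspring:
            if rng.random() < pm:
                g = rng.randrange(n_genes)
                c[g] = clip(c[g] + rng.gauss(0.0, sigma))
        pop = offspring

    # Step (8): best chromosome of the last generation.
    scores = fitness(pop)
    return pop[scores.index(max(scores))]
```

For example, `genetic_minimize(lambda c: sum((g - 0.3) ** 2 for g in c))` should return a chromosome near `[0.3, 0.3]`. In the method itself the objective would combine simplification quality and area, which is not reproduced here.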

2.3. Evaluation Metrics of Simplification

2.4. Platforms and Software

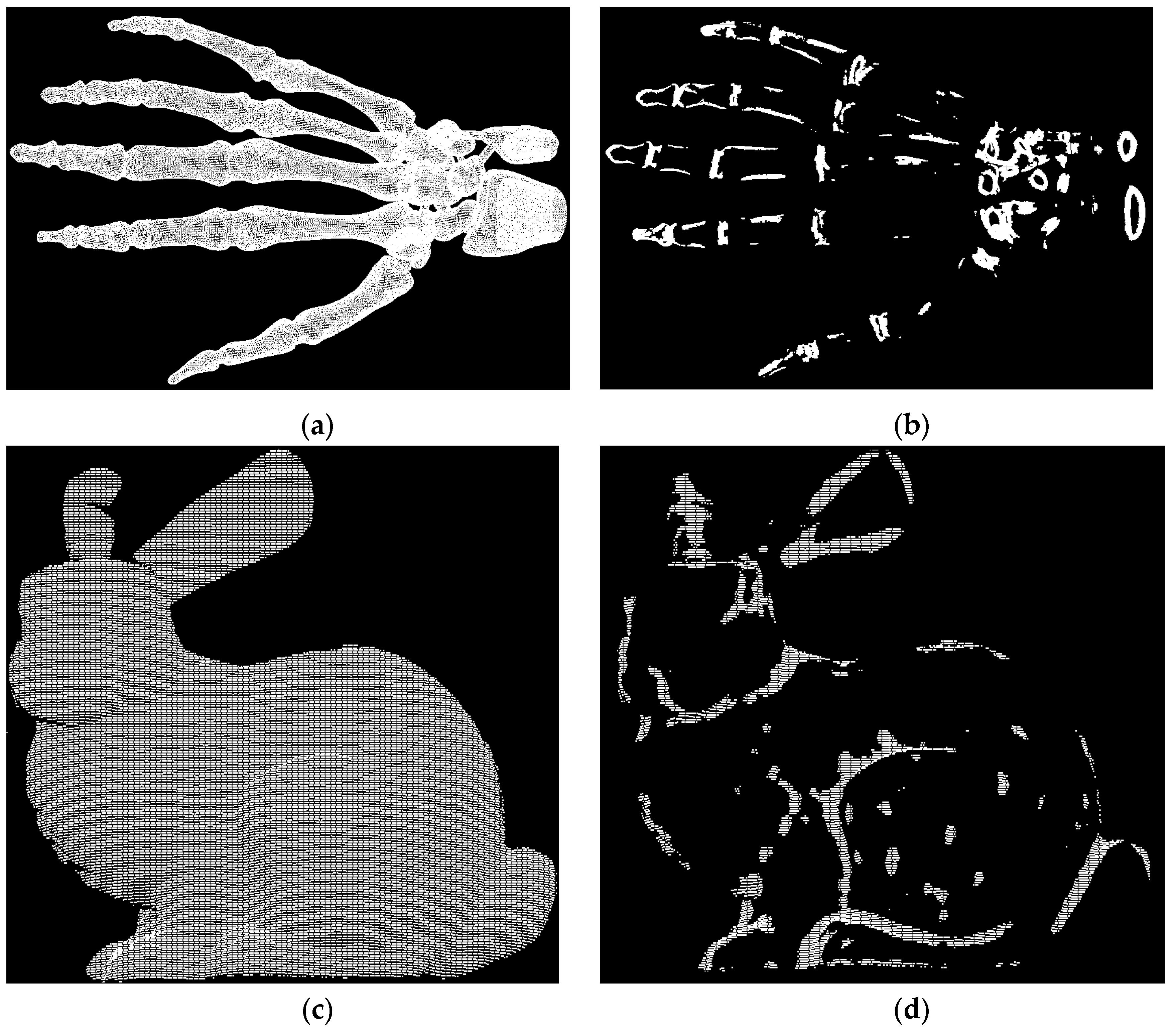

2.5. Test Data Set and Process

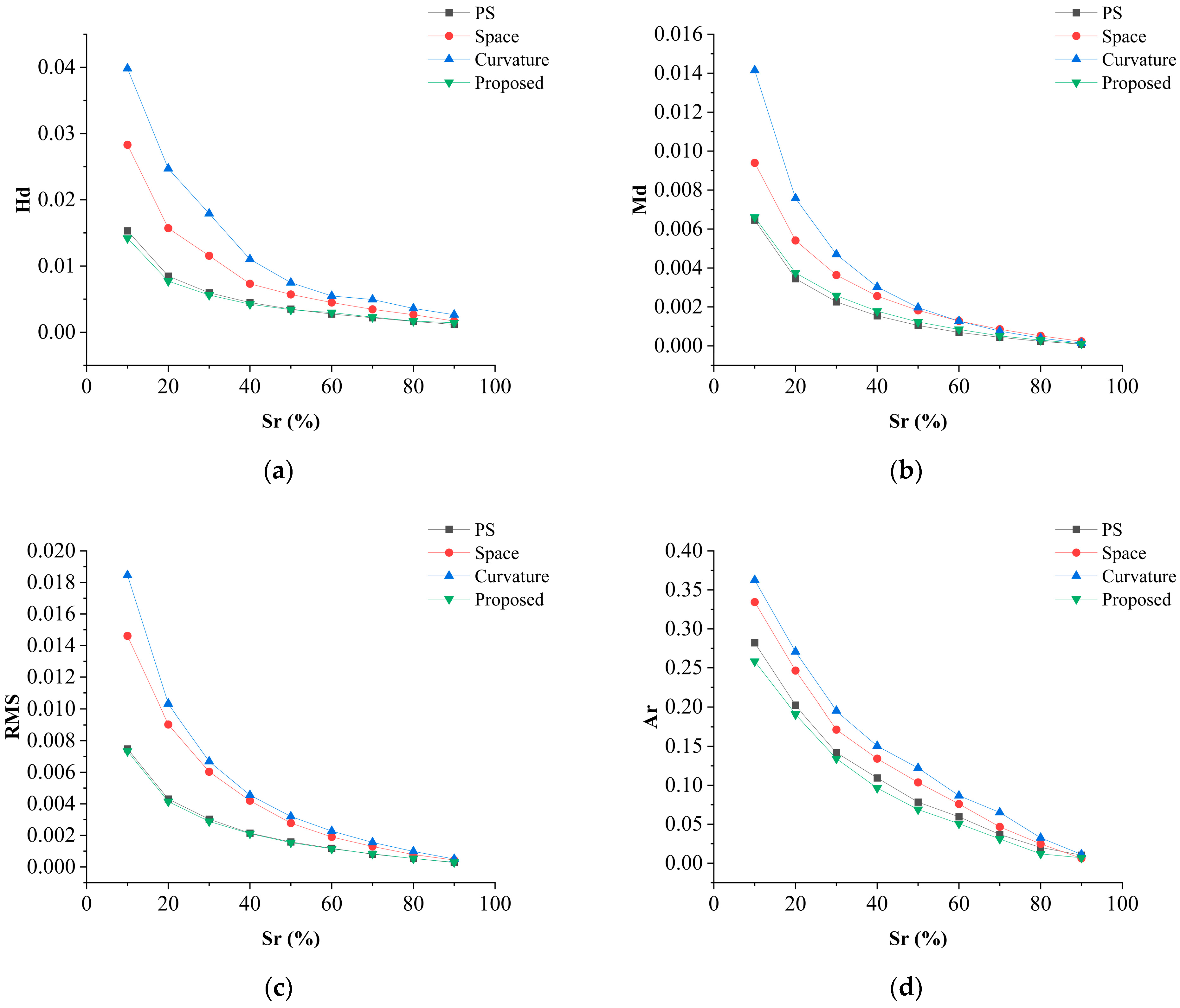

3. Results

4. Discussion

4.1. FPFH and Neighborhood Search

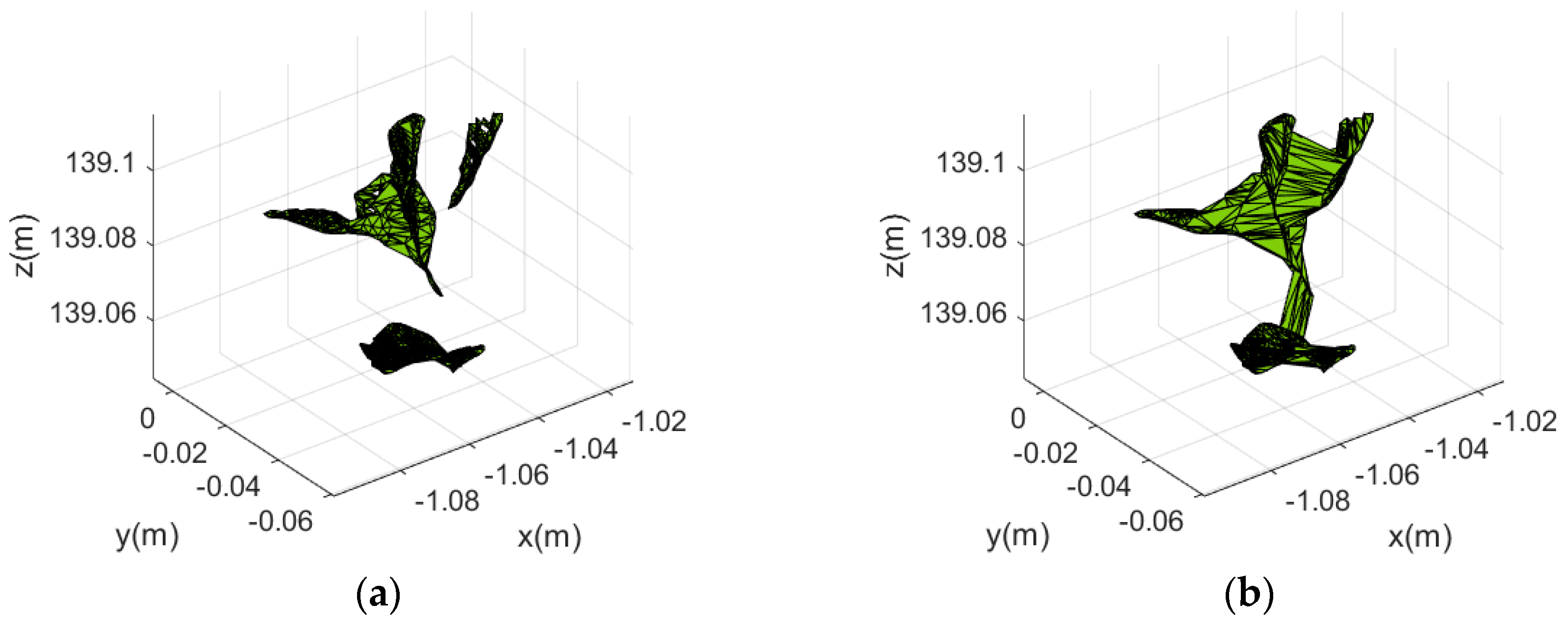

4.2. Key Points and Area of Point Cloud

- (1) Boundary points and high-curvature feature points have a large influence on the computed area; ignoring or losing them during simplification causes a large change in the calculated area.

- (2) In the acquired point cloud, points are denser near the center and become sparser farther away, so the final point cloud cannot guarantee a uniform point density at every position.

- (3) If the points in $P_l$ are too sparse, the area calculation is also strongly affected, and the area may be overestimated or underestimated.
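The items above tie the computed area to how evenly the retained points cover the surface, and the conclusions state that Poisson disk sampling is used to sample each partition. The sketch below is a generic dart-throwing variant of Poisson disk subsampling applied to an existing point set; it is an illustration under that assumption, not the authors' implementation. The function name `poisson_disk_subsample`, the radius `r`, and the `seed` are hypothetical. Points are visited in random order and kept only if no already-kept point lies closer than `r`; a uniform grid with cell size `r` limits each check to the 27 surrounding cells.

```python
import itertools
import math
import random
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def poisson_disk_subsample(points: Sequence[Point], r: float, seed: int = 0) -> List[int]:
    """Return sorted indices of a subset whose points are pairwise at least r apart."""
    order = list(range(len(points)))
    random.Random(seed).shuffle(order)  # random visiting order ("dart throwing")
    grid: Dict[Tuple[int, int, int], List[Point]] = {}
    kept: List[int] = []
    for idx in order:
        p = points[idx]
        cx, cy, cz = (math.floor(c / r) for c in p)
        # Any kept point closer than r lies in one of the 27 surrounding cells of size r.
        conflict = any(
            math.dist(p, q) < r
            for dx, dy, dz in itertools.product((-1, 0, 1), repeat=3)
            for q in grid.get((cx + dx, cy + dy, cz + dz), ())
        )
        if not conflict:
            grid.setdefault((cx, cy, cz), []).append(p)
            kept.append(idx)
    return sorted(kept)
```

A larger `r` keeps fewer points. Because every discarded point is within `r` of some kept point, the subset never leaves an original point farther than `r` from a retained one, which limits how sparse a sampled region can become.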

4.3. Density of Point Cloud and Sr

- (1) When the point density is high, the generated triangular mesh accurately covers all points, and the calculated area is close to the actual area.

- (2) When the point density is low, the generated triangular mesh cannot cover all points, so the calculated area is inaccurate: some regions are overestimated while others are missing.

- (3) When Sr is small but the point density is high, the simplified point cloud contains few points yet remains dense; the generated triangular mesh still covers the overall shape of the point cloud, and the calculated area stays close to the actual value.

- (4) When Sr is small and the point density is low, the simplified point cloud contains few points at an even lower density, which leads to a large error in the calculated area.
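The cases above depend on how much surface the triangular mesh covers. This section does not say how the mesh is built or measured; assuming the reported area is the sum of the triangle areas of the reconstructed mesh, each triangle contributes half the length of the cross product of two of its edges. The function `mesh_area` is a hypothetical helper, and the meshing step itself is not shown.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def mesh_area(vertices: Sequence[Vec3], triangles: Sequence[Tuple[int, int, int]]) -> float:
    """Total surface area of a triangle mesh given vertex coordinates and index triples."""
    total = 0.0
    for i, j, k in triangles:
        a, b, c = vertices[i], vertices[j], vertices[k]
        u = [b[n] - a[n] for n in range(3)]  # edge a -> b
        v = [c[n] - a[n] for n in range(3)]  # edge a -> c
        # Cross product u x v; its length is twice the triangle's area.
        cx = u[1] * v[2] - u[2] * v[1]
        cy = u[2] * v[0] - u[0] * v[2]
        cz = u[0] * v[1] - u[1] * v[0]
        total += 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)
    return total
```

For a unit square split into triangles `(0, 1, 2)` and `(0, 2, 3)` the result is 1.0; dropping the second triangle, as when sparse points leave a hole in the mesh, gives 0.5, which mirrors the "missing areas" in case (2).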

5. Conclusions and Future Work

- (1) A method is proposed for determining the optimal search neighborhood from the standard deviation of FPFH information entropy, providing a basis for subsequent sampling and optimization;

- (2) FPFH features and Poisson disk sampling are used to partition and sample each point cloud so that its geometric features are retained;

- (3) With point cloud simplification quality and area as the objective function, a genetic algorithm optimizes the thresholds for significant and less-significant feature points, thereby controlling the simplification quality and the area.
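Item (1) selects the FPFH search neighborhood from the standard deviation of FPFH information entropy. Taking "information entropy" to mean the Shannon entropy of each point's normalized FPFH histogram (an assumption, since the defining equations are not given in this section), the per-radius statistic can be computed as below. The input is a hypothetical mapping from candidate radius to per-point 33-bin histograms, which an FPFH implementation such as PCL's or Open3D's could supply. The rule that turns these standard deviations into a chosen radius is not stated here, so the sketch stops at the statistic; the use of the population standard deviation is also an assumption.

```python
import math
from statistics import pstdev
from typing import Dict, List, Sequence


def histogram_entropy(hist: Sequence[float]) -> float:
    """Shannon entropy (natural log) of one non-negative histogram, e.g. a 33-bin FPFH."""
    total = sum(hist)
    if total <= 0:
        return 0.0  # empty histogram: treat as carrying no information
    probs = [h / total for h in hist if h > 0]  # zero bins contribute nothing
    return -sum(p * math.log(p) for p in probs)


def entropy_std_by_radius(
    fpfh_by_radius: Dict[float, Sequence[Sequence[float]]],
) -> Dict[float, float]:
    """For each candidate radius, the population standard deviation of per-point entropies."""
    result: Dict[float, float] = {}
    for radius, histograms in fpfh_by_radius.items():
        entropies: List[float] = [histogram_entropy(h) for h in histograms]
        result[radius] = pstdev(entropies)  # assumption: population, not sample, std
    return result
```

A flat 33-bin histogram has entropy ln 33, and a histogram with a single occupied bin has entropy 0; the logarithm base only rescales the values and does not change which radius ranks highest or lowest.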

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


|  | PS | Space | Curvature | Proposed |
|---|---|---|---|---|
| Sr = 20% |  |  |  |  |
| Sr = 80% |  |  |  |  |

| Method | Sr = 20% | Sr = 50% | Sr = 80% |
|---|---|---|---|
| PS |  |  |  |
| Proposed |  |  |  |
| Space |  |  |  |
| Curvature |  |  |  |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, C.; Ru, Y.; Fang, S.; Zhou, H.; Xue, J.; Zhang, Y.; Li, J.; Xu, G.; Fan, G. A Tree Point Cloud Simplification Method Based on FPFH Information Entropy. Forests 2023, 14, 1507. https://doi.org/10.3390/f14071507


Chicago/Turabian Style: Hu, Chenming, Yu Ru, Shuping Fang, Hongping Zhou, Jiangkun Xue, Yuheng Zhang, Jianping Li, Guopeng Xu, and Gaoming Fan. 2023. "A Tree Point Cloud Simplification Method Based on FPFH Information Entropy" Forests 14, no. 7: 1507. https://doi.org/10.3390/f14071507
