A Forest Fire Identification System Based on Weighted Fusion Algorithm

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Environment

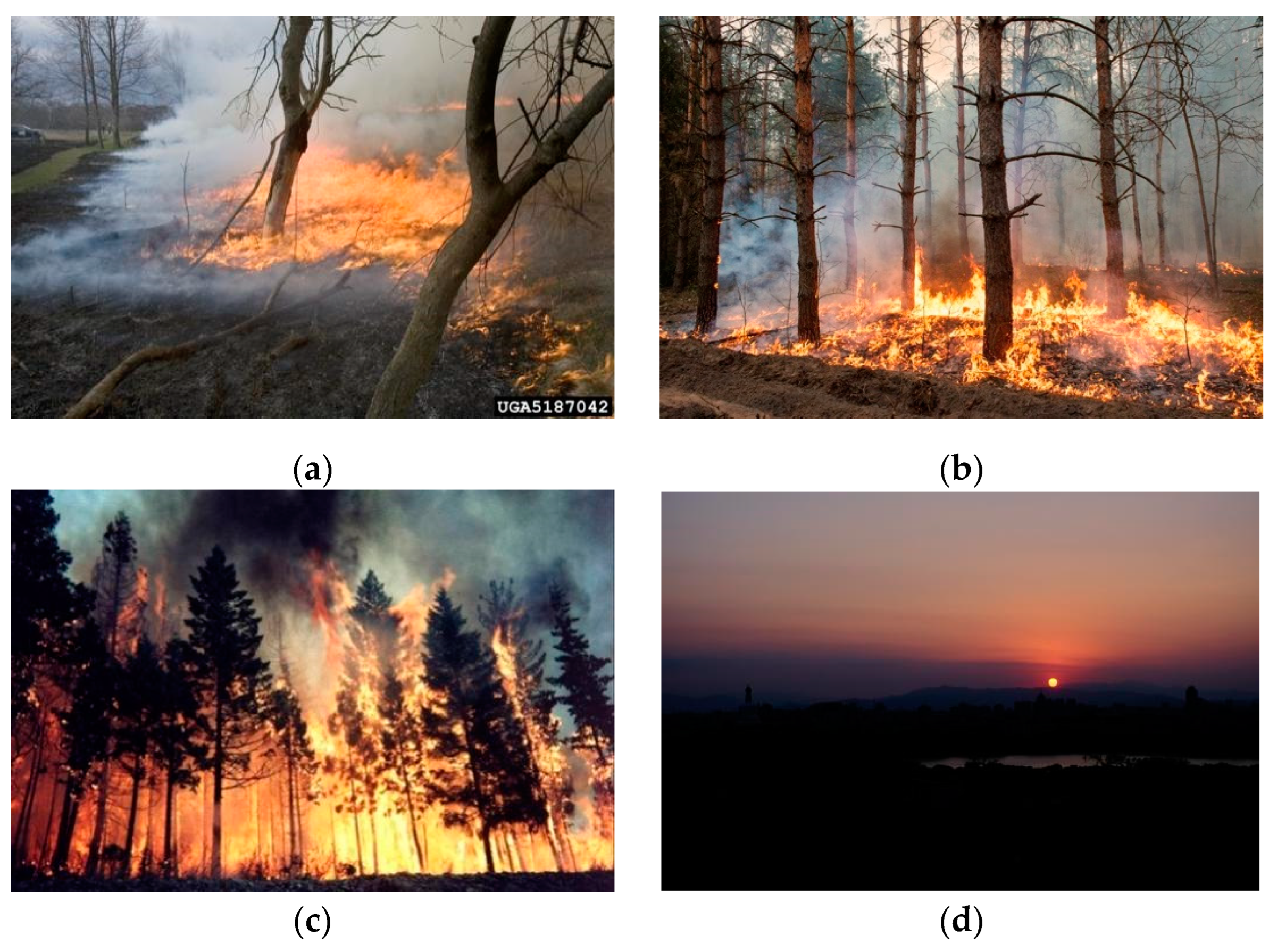

2.2. Data Set

2.3. Integrated Learning

- According to network depth and width, Yolov5 is divided into four variants: Yolov5s, Yolov5m, Yolov5l, and Yolov5x. This paper uses Yolov5s, which has the smallest depth in the Yolov5 series [21]. The Yolov5s model reaches an image inference speed of 455 FPS, and this speed advantage has led to its wide adoption by researchers.

- SSD is another single-stage object detection model that followed Yolo. Like Yolo, it directly regresses bounding boxes and classification probabilities. It also borrows the anchor mechanism of Faster R-CNN and uses anchors extensively to improve recognition accuracy. It offers high precision and real-time performance, but its recognition of small targets is only mediocre.

- Because hardware computing resources were limited, EfficientDet-D2 was used for the experiments in this paper. For forest fire detection, the advantage of the EfficientDet family is that it offers different backbone networks, feature fusion networks, and output networks [22]. A detection framework can therefore be selected according to the cost and performance of the available software and hardware and the accuracy and efficiency required in the real environment, so as to design a more efficient forest fire detector.
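
The depth and width scaling that separates the four Yolov5 variants can be sketched as follows. The multiplier values are an assumption taken from the public Ultralytics configuration files rather than from this paper, and `base_repeats` and `base_channels` are illustrative inputs only.

```python
import math

# (depth_multiple, width_multiple) per variant.
# Assumption: values from the public Ultralytics yolov5*.yaml files,
# not stated in this paper.
VARIANTS = {
    "yolov5s": (0.33, 0.50),
    "yolov5m": (0.67, 0.75),
    "yolov5l": (1.00, 1.00),
    "yolov5x": (1.33, 1.25),
}


def scale_depth(repeats, depth_multiple):
    """Scale how often a block is repeated; never drop below one repeat."""
    return max(round(repeats * depth_multiple), 1) if repeats > 1 else repeats


def scale_width(channels, width_multiple, divisor=8):
    """Scale a channel count and round it up to a multiple of `divisor`."""
    return math.ceil(channels * width_multiple / divisor) * divisor


def describe(variant, base_repeats=9, base_channels=1024):
    """Return (scaled repeats, scaled channels) for one illustrative layer."""
    depth, width = VARIANTS[variant]
    return scale_depth(base_repeats, depth), scale_width(base_channels, width)


if __name__ == "__main__":
    for name in VARIANTS:
        print(name, describe(name))
```

Under these assumed multipliers, the same layer definition yields the fewest repeats and the narrowest channels for Yolov5s, which is consistent with its position as the smallest network of the series.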

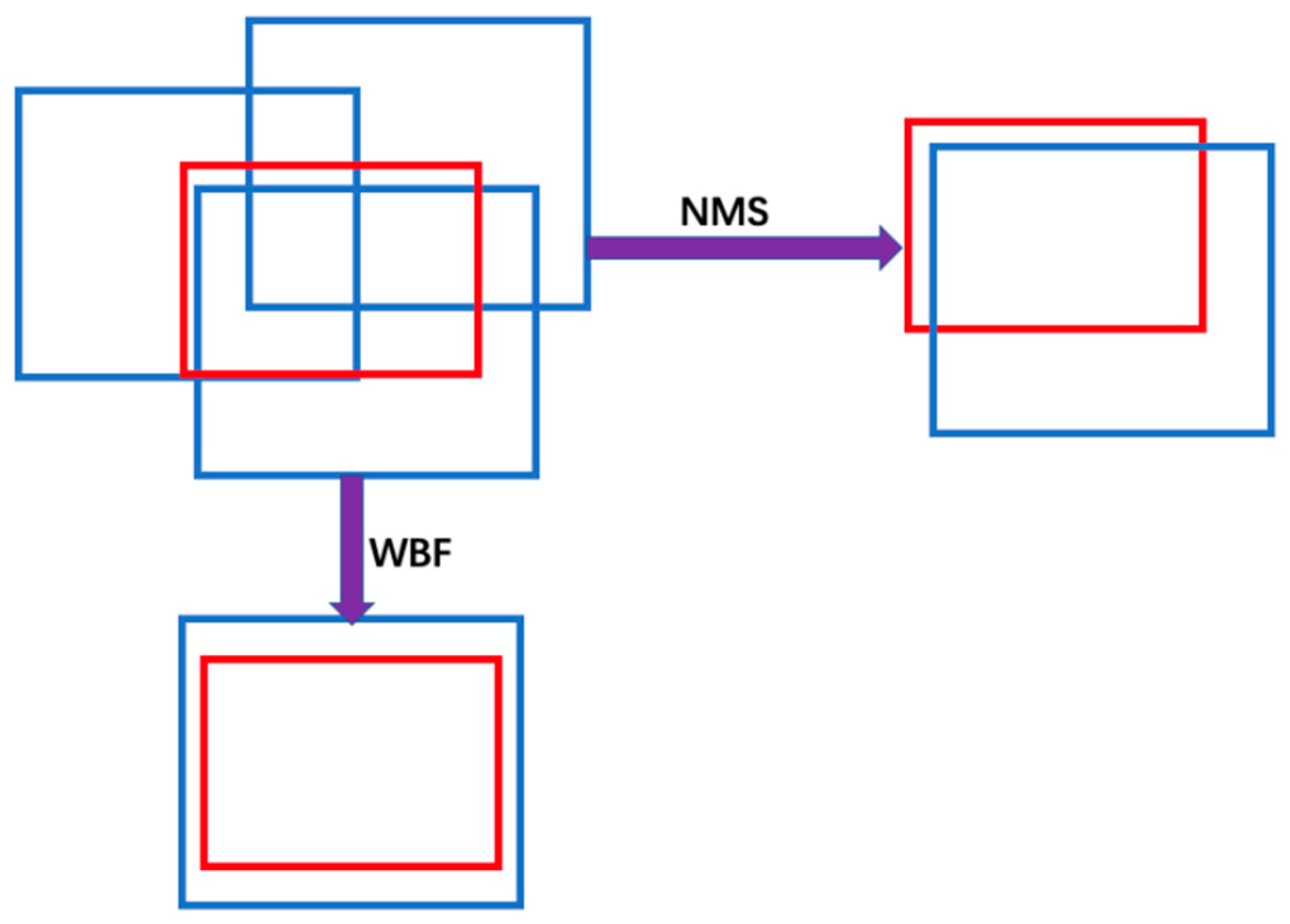

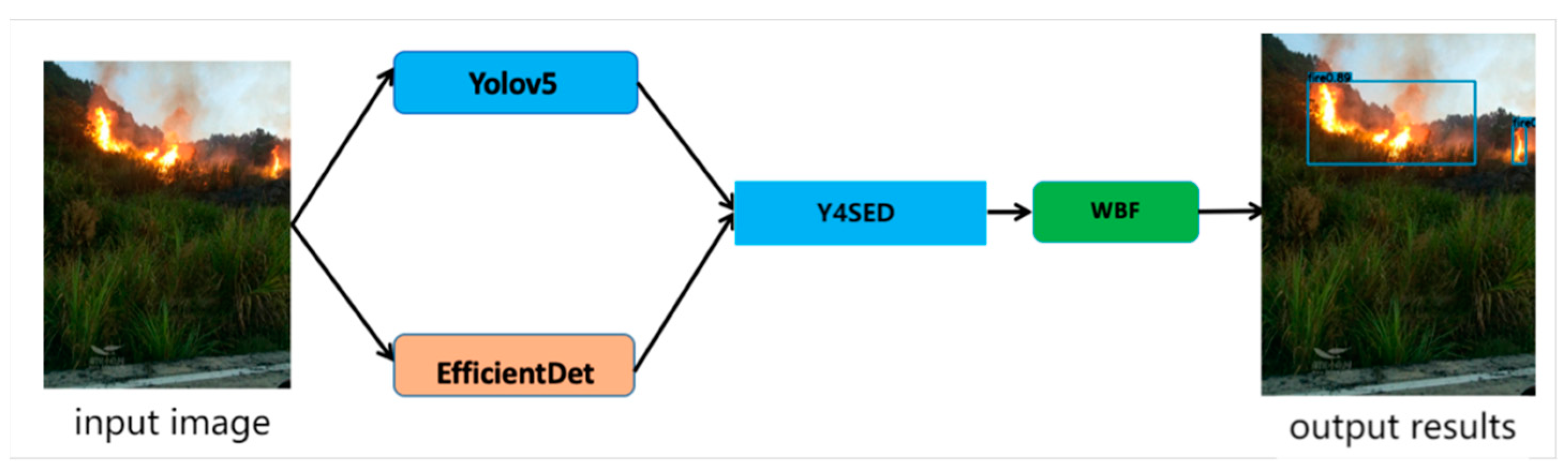

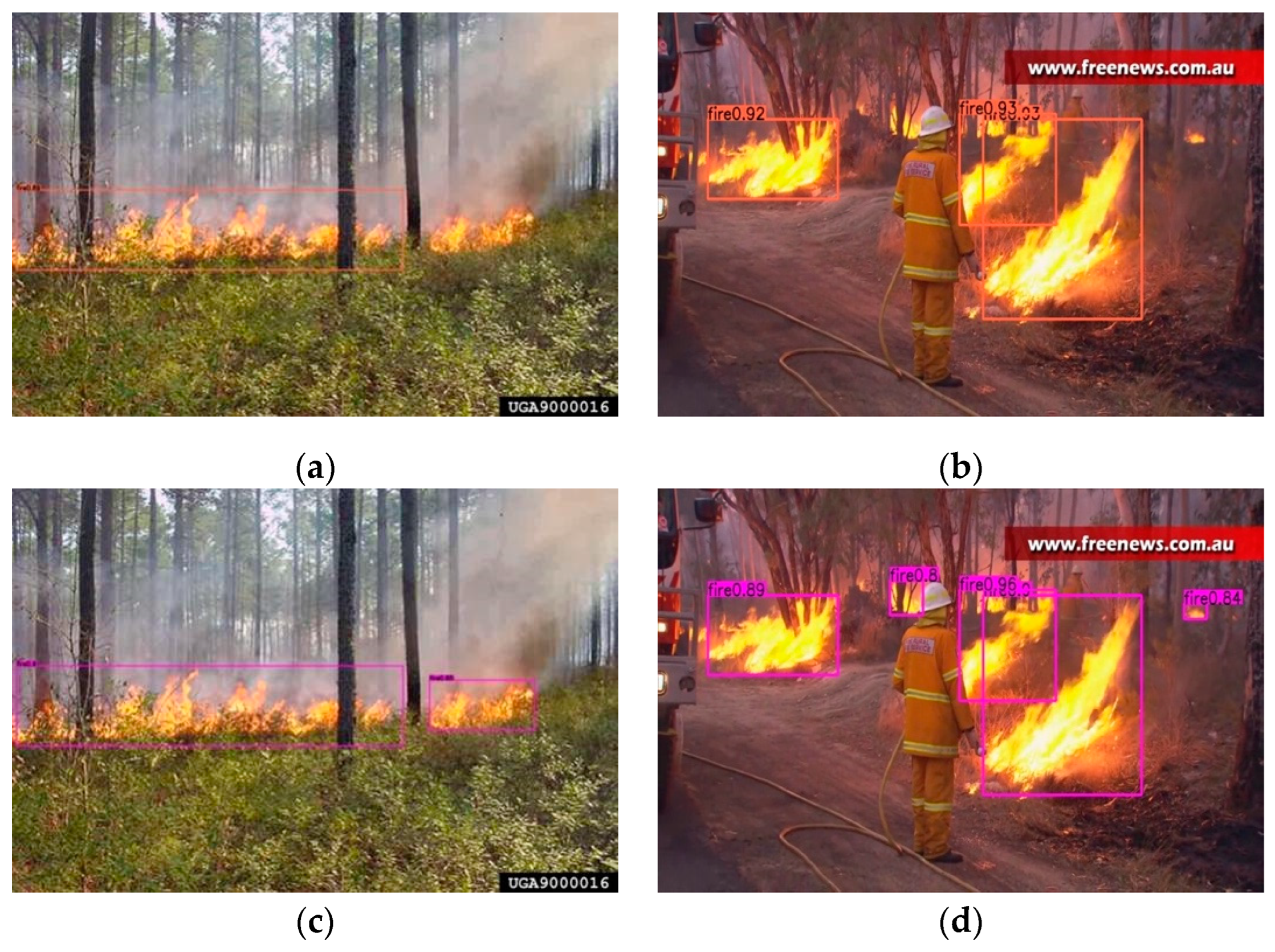

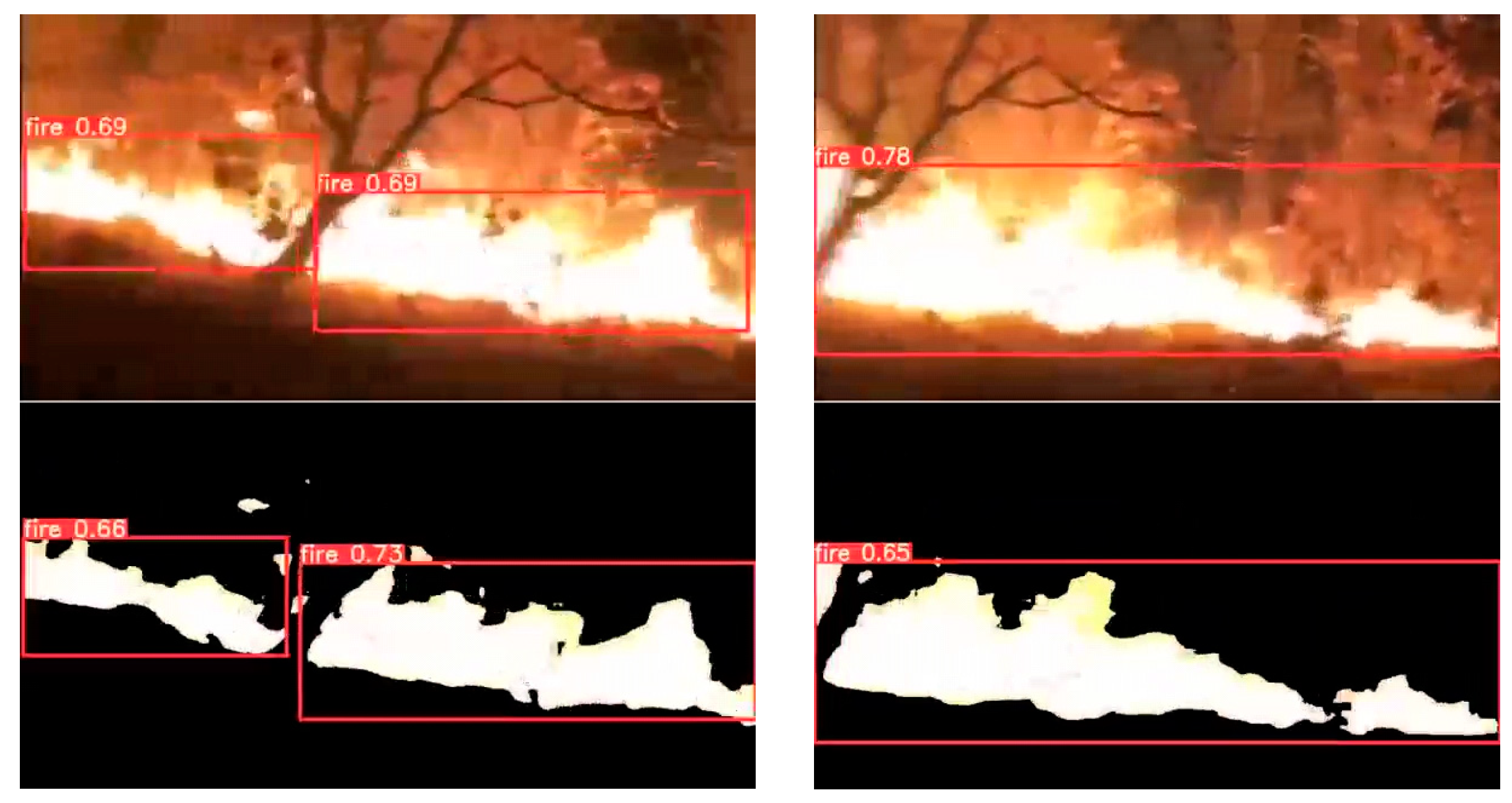

2.4. Fusion Model Y4SED

2.5. Evaluation Indicators

3. Experimental Results and Analysis

3.1. Parameter Setting

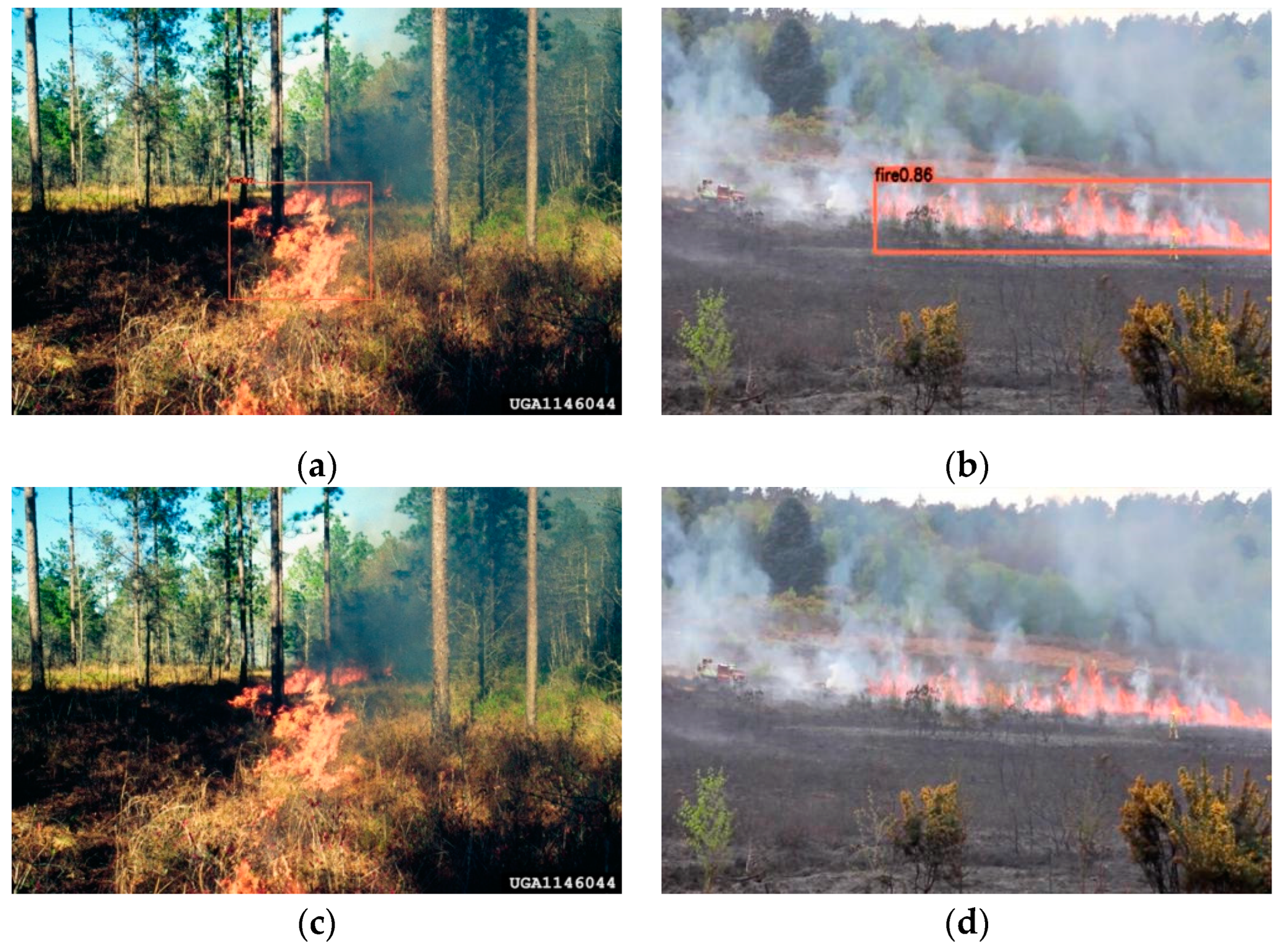

3.2. Experimental Analysis

3.3. Experimental Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Chowdhury, N.; Mushfiq, D.R.; Chowdhury, A.E. Computer Vision and Smoke Sensor Based Fire Detection System. In Proceedings of the 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology (ICASERT), Dhaka, Bangladesh, 3–5 May 2019; pp. 1–5.

2. Varela, N.; Ospino, A.; Zelaya, N.A.L. Wireless sensor network for forest fire detection. Procedia Comput. Sci. 2020, 175, 435–440.

3. Lin, H.; Liu, X.; Wang, X.; Liu, Y. A fuzzy inference and big data analysis algorithm for the prediction of forest fire based on rechargeable wireless sensor networks. Sustain. Comput. Inform. Syst. 2018, 18, 101–111.

4. Sun, F.; Yang, Y.; Lin, C.; Liu, Z.; Chi, L. Forest Fire Compound Feature Monitoring Technology Based on Infrared and Visible Binocular Vision. J. Phys. Conf. Ser. 2021, 1792, 012022.

5. Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 6442.

6. Zhan, J.; Hu, Y.; Cai, W.; Zhou, G.; Li, L. PDAM–STPNNet: A Small Target Detection Approach for Wildland Fire Smoke through Remote Sensing Images. Symmetry 2021, 13, 2260.

7. Chen, T.H.; Wu, P.H.; Chiou, Y.C. An early fire-detection method based on image processing. In Proceedings of the 2004 International Conference on Image Processing, Singapore, 24–27 October 2004; Volume 3, pp. 1707–1710.

8. Horng, W.B.; Peng, J.W.; Chen, C.Y. A new image-based real-time flame detection method using color analysis. In Proceedings of the 2005 IEEE Networking, Sensing and Control, Tucson, AZ, USA, 19–22 March 2005; pp. 100–105.

9. Çelik, T.; Özkaramanlı, H.; Demirel, H. Fire and smoke detection without sensors: Image processing based approach. In Proceedings of the 2007 15th European Signal Processing Conference, Poznan, Poland, 3–7 September 2007; pp. 1794–1798.

10. Khan, M.N.A.; Tanveer, T.; Khurshid, K.; Zaki, H.; Zaidi, S.S.I. Fire Detection System using Raspberry Pi. In Proceedings of the 2019 International Conference on Information Science and Communication Technology (ICISCT), Karachi, Pakistan, 9 March 2019; pp. 1–6.

11. Priya, R.S.; Vani, K. Deep Learning Based Forest Fire Classification and Detection in Satellite Images. In Proceedings of the 2019 11th International Conference on Advanced Computing (ICoAC), Chennai, India, 18–20 December 2019; pp. 61–65.

12. Zheng, X.; Chen, F.; Lou, L.; Cheng, P.; Huang, Y. Real-Time Detection of Full-Scale Forest Fire Smoke Based on Deep Convolution Neural Network. Remote Sens. 2022, 14, 536.

13. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Processing Syst. 2015, 28, 91–99.

14. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 779–788.

15. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

16. Ultralytics. Yolov5. Available online: https://github.com/ultralytics/yolov5 (accessed on 1 May 2022).

17. Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 10781–10790.

18. BoWFire Dataset. Available online: https://bitbucket.org/gbdi/bowfire-dataset/downloads/ (accessed on 1 May 2022).

19. Xie, Y.; Peng, M. Forest fire forecasting using ensemble learning approaches. Neural Comput. Appl. 2019, 31, 4541–4550.

20. Dong, X.; Yu, Z.; Cao, W.; Shi, Y.; Ma, Q. A survey on ensemble learning. Front. Comput. Sci. 2020, 14, 241–258.

21. Yang, G.; Feng, W.; Jin, J.; Lei, Q.; Li, X.; Gui, G.; Wang, W. Face mask recognition system with YOLOV5 based on image recognition. In Proceedings of the 2020 IEEE 6th International Conference on Computer and Communications (ICCC), Chengdu, China, 11–14 December 2020; pp. 1398–1404.

22. Song, S.; Jing, J.; Huang, Y.; Shi, M. EfficientDet for fabric defect detection based on edge computing. J. Eng. Fibers Fabr. 2021, 16, 15589250211008346.

23. Zhou, D.; Fang, J.; Song, X.; Guan, C.; Yin, J.; Dai, Y.; Yang, R. Iou loss for 2d/3d object detection. In Proceedings of the 2019 International Conference on 3D Vision (3DV), Quebec City, QC, Canada, 16–19 September 2019; pp. 85–94.

24. Solovyev, R.; Wang, W.; Gabruseva, T. Weighted boxes fusion: Ensembling boxes from different object detection models. Image Vis. Comput. 2021, 107, 104117.

25. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 658–666.

26. Lydia, A.; Francis, S. Adagrad - An optimizer for stochastic gradient descent. Int. J. Inf. Comput. Sci. 2019, 5, 566–568.

27. Yao, Z.; Gholami, A.; Shen, S.; Mustafa, M.; Keutzer, K.; Mahoney, M. Adahessian: An adaptive second order optimizer for machine learning. Proc. AAAI Conf. Artif. Intell. 2021, 35, 10665–10673.

| Experimental Environment | Configuration Parameters |
|---|---|
| Programming language | Python 3.8 |
| Deep learning framework | PyTorch 1.7.1 |
| GPU | NVIDIA GeForce RTX 3060 |
| GPU acceleration package | CUDA 11.0 |
| Operating system | Windows 10 |
| CPU | AMD Ryzen 7 5800H |

| Model | Test Case 1 | Test Case 2 | Test Case 3 |
|---|---|---|---|
| m1 | ✓ | ✓ | ✕ |
| m2 | ✓ | ✕ | ✓ |
| m3 | ✕ | ✓ | ✓ |
| Integration | ✓ | ✓ | ✓ |

| Model | Test Case 1 | Test Case 2 | Test Case 3 |
|---|---|---|---|
| m1 | ✓ | ✓ | ✕ |
| m2 | ✓ | ✓ | ✕ |
| m3 | ✓ | ✓ | ✕ |
| Integration | ✓ | ✓ | ✕ |

| Model | Test Case 1 | Test Case 2 | Test Case 3 |
|---|---|---|---|
| m1 | ✓ | ✕ | ✕ |
| m2 | ✕ | ✓ | ✕ |
| m3 | ✕ | ✕ | ✓ |
| Integration | ✕ | ✕ | ✕ |
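
Read as a vote among three models (m1, m2, m3), the Integration rows of the three tables above can be reproduced in a few lines. This is a minimal sketch that assumes simple majority voting; `majority_vote` and the table encodings below are illustrative names, not taken from the paper.

```python
def majority_vote(predictions):
    """Combine per-model results for each test case by simple majority.

    predictions: one list per model, True where that model is correct.
    Returns one boolean per test case for the integrated model.
    """
    n_models = len(predictions)
    return [sum(column) * 2 > n_models for column in zip(*predictions)]


# Rows m1, m2, m3 of the three tables (True = check mark, False = cross).
COMPLEMENTARY = [[True, True, False], [True, False, True], [False, True, True]]
IDENTICAL = [[True, True, False], [True, True, False], [True, True, False]]
WEAK = [[True, False, False], [False, True, False], [False, False, True]]

if __name__ == "__main__":
    for name, table in [("complementary", COMPLEMENTARY),
                        ("identical", IDENTICAL),
                        ("weak", WEAK)]:
        print(name, majority_vote(table))
```

The three cases show when integration helps: models that are accurate and make different mistakes improve together, identical models gain nothing, and models that are mostly wrong become worse when combined.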

| Indicator | Description |
|---|---|
| Average precision (AP) | |
| AP0.5 | Average precision when IoU = 0.5 |
| Average precision at multiple scales | |
| APS | AP0.5 of small targets (size < 32²) |
| APM | AP0.5 of medium targets (32² < size < 96²) |
| APL | AP0.5 of large targets (size > 96²) |
| Average recall (AR) | |
| AR0.5 | Average recall when IoU = 0.5 |
| Average recall at multiple scales | |
| ARS | AR0.5 of small targets (size < 32²) |
| ARM | AR0.5 of medium targets (32² < size < 96²) |
| ARL | AR0.5 of large targets (size > 96²) |
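
The two quantities behind these indicators, the IoU between a predicted and a ground-truth box and the size bucket of a target, can be sketched as below. The box format `(x1, y1, x2, y2)` in pixels, the function names, and the handling of areas that fall exactly on a boundary are assumptions made for illustration; the thresholds of 32 × 32 and 96 × 96 pixels follow the common COCO-style convention.

```python
def area(box):
    """Area of a box given as (x1, y1, x2, y2)."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a, b):
    """Intersection over union of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def size_bucket(box):
    """Classify a target as small, medium, or large by pixel area."""
    a = area(box)
    if a < 32 ** 2:
        return "small"
    if a < 96 ** 2:  # boundary handling is an assumption
        return "medium"
    return "large"


def is_match(pred, truth, threshold=0.5):
    """A prediction counts as a hit at the 0.5 level when IoU >= threshold."""
    return iou(pred, truth) >= threshold


if __name__ == "__main__":
    truth = (0, 0, 10, 10)
    pred = (5, 0, 15, 10)
    print(iou(pred, truth), is_match(pred, truth), size_bucket(truth))
```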

| Model | Training Images | Test Images | Optimizer | Learning Rate | Batch Size | Number of Iterations |
|---|---|---|---|---|---|---|
| Yolov5-S | 2678 | 298 | SGD [26] | 1 × 10⁻² | 12 | 300 |
| EfficientDet-D2 | 2678 | 298 | AdamW [27] | 1 × 10⁻⁴ | 12 | 300 |
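
The figures in this table imply a roughly 90/10 split and a fixed number of batches per pass over the training set. The short calculation below makes that arithmetic explicit. It assumes that "Number of Iterations" means full passes over the training images, which the table does not state; the constant names are illustrative.

```python
import math

TRAIN_IMAGES = 2678
TEST_IMAGES = 298
BATCH_SIZE = 12
PASSES = 300  # assumption: "Number of Iterations" read as full passes

total_images = TRAIN_IMAGES + TEST_IMAGES
test_fraction = TEST_IMAGES / total_images

# The last batch of a pass is smaller when the split is not divisible by 12.
batches_per_pass = math.ceil(TRAIN_IMAGES / BATCH_SIZE)
last_batch_size = TRAIN_IMAGES - (batches_per_pass - 1) * BATCH_SIZE
total_updates = batches_per_pass * PASSES

if __name__ == "__main__":
    print(f"total images:     {total_images}")
    print(f"test fraction:    {test_fraction:.3f}")
    print(f"batches per pass: {batches_per_pass} (last batch: {last_batch_size})")
    print(f"weight updates:   {total_updates}")
```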

| Algorithm Used in the Integration Model | AP0.5 |
|---|---|
| NMS | 79 |
| WBF | 87 |
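
The difference between the two algorithms in this table is that NMS discards all but the highest-scoring box of an overlapping group, whereas weighted boxes fusion (WBF) averages the group into a single box. The sketch below is a simplified single-class illustration of that idea, following the general description by Solovyev et al. listed in the references. It is not the authors' implementation: the function names, the detection format `((x1, y1, x2, y2), score)`, and the first-match clustering rule are assumptions.

```python
def box_iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def nms(dets, iou_thr=0.5):
    """Keep the best box of each overlapping group and drop the rest."""
    kept = []
    for box, score in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(box_iou(box, k[0]) < iou_thr for k in kept):
            kept.append((box, score))
    return kept


def fuse_cluster(cluster):
    """Confidence-weighted mean of coordinates, plain mean of scores."""
    total = sum(score for _, score in cluster)
    box = tuple(sum(b[i] * s for b, s in cluster) / total for i in range(4))
    return box, total / len(cluster)


def weighted_fusion(dets, n_models, iou_thr=0.5):
    """Simplified WBF: merge each detection into the first fused box it overlaps."""
    clusters, fused = [], []
    for det in sorted(dets, key=lambda d: d[1], reverse=True):
        for k, (fused_box, _) in enumerate(fused):
            if box_iou(det[0], fused_box) > iou_thr:
                clusters[k].append(det)
                fused[k] = fuse_cluster(clusters[k])
                break
        else:
            clusters.append([det])
            fused.append(det)
    # Lower the confidence of boxes that only some of the models predicted.
    return [(box, score * min(len(c), n_models) / n_models)
            for (box, score), c in zip(fused, clusters)]


if __name__ == "__main__":
    # One box from each of two hypothetical models for the same flame.
    dets = [((10, 10, 50, 50), 0.9), ((12, 12, 52, 52), 0.6)]
    print("NMS:", nms(dets))
    print("WBF:", weighted_fusion(dets, n_models=2))
```

In this example NMS returns the first model's box unchanged, while the fused box lies between the two inputs and is pulled toward the more confident one, so every model contributes to the final localization.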

| Model | AP0.5 | APS | APM | APL | AR0.5 | ARS | ARM | ARL |
|---|---|---|---|---|---|---|---|---|
| Yolov5-S | 82.5 | 36.0 | 48.7 | 66.0 | 69.2 | 48 | 59 | 76 |
| EfficientDet-D2 | 84.5 | 36.3 | 50.2 | 64.1 | 68.6 | 49.8 | 64.2 | 73 |
| Y4SED | 87 | 36 | 50.1 | 68.6 | 71.5 | 52.4 | 63.4 | 77.3 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qian, J.; Lin, H. A Forest Fire Identification System Based on Weighted Fusion Algorithm. Forests 2022, 13, 1301. https://doi.org/10.3390/f13081301
