Abstract

We show how the accessibility of Newton’s method for approximating a solution of a nonlinear equation in Banach spaces can be improved when a center Hölder condition on the first derivative is used to prove its semi-local convergence.

1. Introduction

Many scientific and engineering problems require finding a root of a nonlinear equation, and iterative methods are the usual tool for doing so. To treat the problem in sufficient generality, we are concerned, in this work, with the problem of approximating a locally unique solution of the equation $F(x) = 0$, where F is a nonlinear operator defined on a nonempty open convex subset Ω of a Banach space X with values in a Banach space Y. Many scientific and engineering problems can be written as a nonlinear equation of this form in Banach spaces.

Newton’s method is probably the best-known iterative method, and it is well known that it converges quadratically. Newton’s method for solving scalar equations was extended to nonlinear equations in Banach spaces by Kantorovich in 1948 [1] in the following way:

$$x_0 \in \Omega \ \text{given}, \qquad x_{n+1} = x_n - [F'(x_n)]^{-1} F(x_n), \quad n = 0, 1, 2, \ldots, \tag{1}$$

where $F'(x_n)$ is the Fréchet derivative of the operator F at $x_n$. For this reason, many authors refer to it as the Newton–Kantorovich method.
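In finite dimensions, the iteration can be sketched as follows. This is a minimal illustration, assuming $X = Y = \mathbb{R}^m$; the function name `newton`, the tolerance, the stopping rule on the step size, and the test system are illustrative choices and are not taken from the paper.

```python
import numpy as np

def newton(F, dF, x0, tol=1e-12, max_iter=50):
    """Newton iteration x_{n+1} = x_n - [F'(x_n)]^{-1} F(x_n) in R^m."""
    x = np.asarray(x0, dtype=float)
    for n in range(max_iter):
        # Solve F'(x_n) s = F(x_n) rather than forming the inverse explicitly.
        step = np.linalg.solve(dF(x), F(x))
        x = x - step
        # Stop when the infinity norm of the correction is below the tolerance.
        if np.linalg.norm(step, ord=np.inf) < tol:
            return x, n + 1
    raise RuntimeError("Newton's method did not converge")

# Illustrative system (hypothetical, not from the paper): x^2 + y^2 = 4, x*y = 1.
def F(v):
    x, y = v
    return np.array([x**2 + y**2 - 4.0, x * y - 1.0])

def dF(v):
    x, y = v
    return np.array([[2.0 * x, 2.0 * y], [y, x]])

root, iterations = newton(F, dF, [2.0, 0.5])
```

Whether the iteration converges depends on the starting point, which is precisely the question that a semi-local convergence result answers.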

Kantorovich proved the semi-local convergence of Newton’s method under the hypothesis that the operator F is twice Fréchet differentiable with bounded second derivative:

There exists a constant $M \ge 0$ such that $\|F''(x)\| \le M$ for $x \in \Omega$.

It is well known that this condition can be replaced by a Lipschitz condition on the first derivative of the operator involved [2]:

There exists a constant $L \ge 0$ such that $\|F'(x) - F'(y)\| \le L\|x - y\|$ for $x, y \in \Omega$.

In view of the applications, numerous papers have recently appeared where the convergence of the method is proven under different conditions for the derivatives of the operator F. A known variant is the study of the convergence of the method under a Hölder condition on the first derivative [3,4,5]:

There exist two constants $K \ge 0$ and $p \in (0, 1]$ such that $\|F'(x) - F'(y)\| \le K\|x - y\|^p$ for $x, y \in \Omega$.

In order to improve the accessibility of Newton’s method under the last condition, we can use different strategies that change the condition. In this work, we use a strategy similar to that used by Argyros in [6] for the Lipschitz condition on the first derivative $F'$, which consists of noticing that, as a consequence of the last condition being satisfied in Ω, we have, for the starting point $x_0$, that:

- There exist two constants $K_0 \ge 0$ and $p \in (0, 1]$ such that $\|F'(x) - F'(x_0)\| \le K_0\|x - x_0\|^p$ for $x \in \Omega$.
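The passage from a Hölder condition on all of Ω to a condition centered at the starting point can be made explicit. In the sketch below, $K$ and $p$ denote the Hölder constants on Ω, $x_0$ is the starting point, and $K_0$ is a symbol used here for the center constant (an assumption of notation). Taking $y = x_0$ in the Hölder condition gives

$$\|F'(x) - F'(x_0)\| \le K\|x - x_0\|^p \quad \text{for all } x \in \Omega,$$

so the centered condition always holds with the constant $K$, and the smallest admissible center constant

$$K_0 = \sup_{x \in \Omega,\; x \ne x_0} \frac{\|F'(x) - F'(x_0)\|}{\|x - x_0\|^p}$$

satisfies $K_0 \le K$. The inequality can be strict. As an illustrative scalar example with $p = 1$ (not taken from the paper), let $f'(x) = x^2$ on $\Omega = (0, 2)$ and $x_0 = 1/2$:

$$\sup_{x, y \in \Omega} \frac{|x^2 - y^2|}{|x - y|} = \sup_{x, y \in \Omega} |x + y| = 4, \qquad \sup_{x \in \Omega} \frac{|x^2 - \tfrac14|}{|x - \tfrac12|} = \sup_{x \in \Omega} \left|x + \tfrac12\right| = \tfrac52 .$$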

In this paper, we focus our attention on the analysis of the semi-local convergence of Sequence (1), which is based on requiring the last condition for the initial approximation $x_0$ and provides the so-called domain of parameters, that is, the set of parameter values, determined by the conditions required for the initial approximation, that guarantee the convergence of Sequence (1) to the solution $x^*$.

In this work, we carry out an analysis of the domain of parameters for Newton’s method under the last two conditions for $F'$ and use a technique based on recurrence relations. As a consequence, we improve the domains of parameters associated with the semi-local convergence result given for Newton’s method by Hernández in [4].

We prove in this paper that center conditions on the first derivative of the operator involved in the solution of nonlinear equations play an important role in the semi-local convergence of Newton’s method, since we can improve the accessibility of Newton’s method from them.

Throughout the paper, we denote and .

2. Preliminaries

The best-known semi-local convergence result for Newton’s method under a Hölder condition on the first derivative of the operator involved, when the technique of recurrence relations is used to prove it, is that given by Hernández in [4], which is established under the following conditions:

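- (A1) There exists $\Gamma_0 = [F'(x_0)]^{-1} \in \mathcal{L}(Y, X)$, for some $x_0 \in \Omega$, with $\|\Gamma_0\| \le \beta$ and $\|\Gamma_0 F(x_0)\| \le \eta$, where $\mathcal{L}(Y, X)$ is the set of bounded linear operators from Y to X.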

- (A2) There exist two constants $K \ge 0$ and $p \in (0, 1]$ such that $\|F'(x) - F'(y)\| \le K\|x - y\|^p$ for $x, y \in \Omega$.

- (A3) If is the unique zero of the auxiliary function: in the interval , it is satisfied that and , where .

Theorem 1. (Theorem 2.1 of [4]) Let $F: \Omega \subseteq X \to Y$ be a continuously differentiable operator defined on a nonempty open convex domain Ω of a Banach space X with values in a Banach space Y. Suppose that Conditions (A1)–(A3) are satisfied. Then, Newton’s sequence, given by (1), converges to a solution $x^*$ of the equation $F(x) = 0$, starting at $x_0$, and , for all

Note that Condition (A1), required for the initial approximation , defines the parameters β and η, and Condition (A2), required for the operator F, defines the parameter K fixed for all point of Ω. Observe that, every point , such that the operator exists with and , has associated the pair of the -plane, where and . In addition, fixed , if we consider the set:

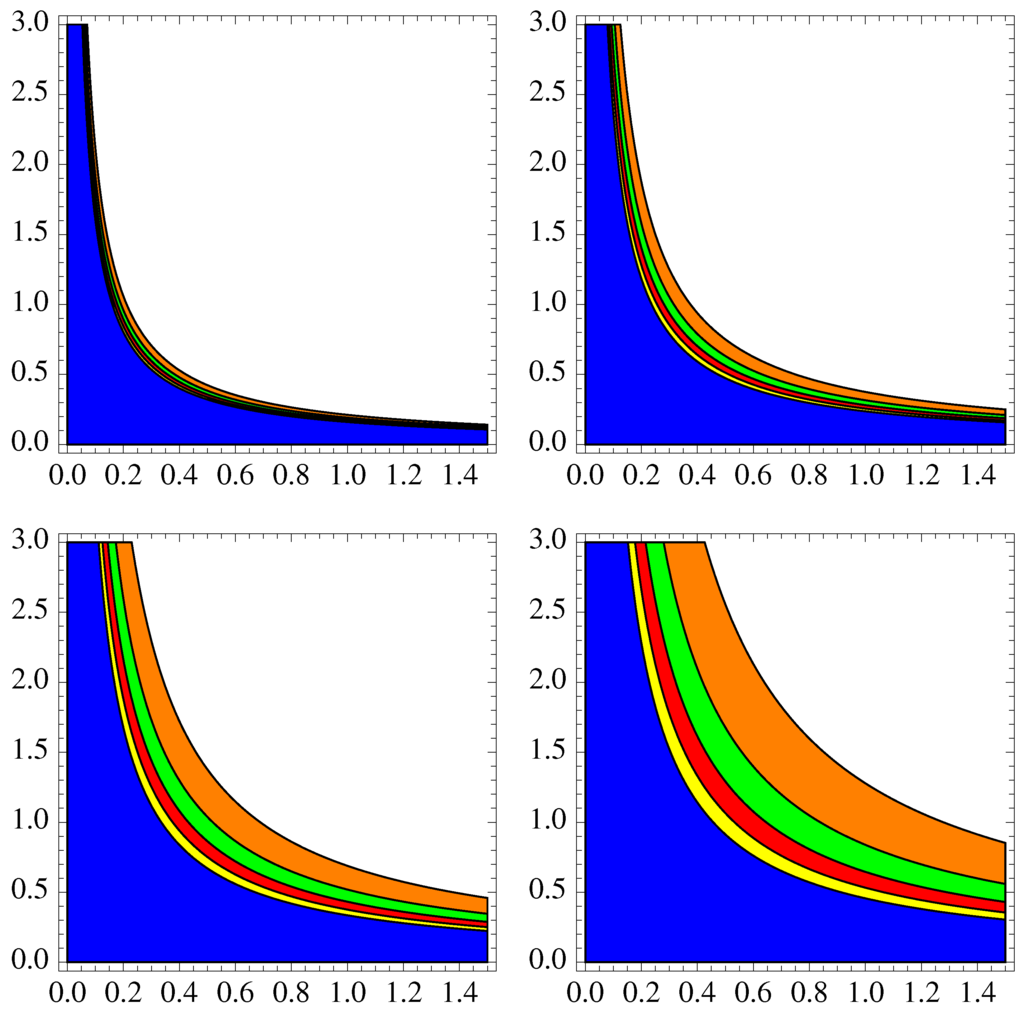

we can observe that every point z, such that its associated pair belongs to , can be chosen as the starting point for Newton’s method, so that the method converges to a solution of the equation $F(x) = 0$ when it starts at z. The set is then called the domain of parameters associated with Theorem 1 and can be drawn by choosing , and coloring the region of the -plane whose points satisfy the condition (namely, ) of Theorem 1. In Figure 1, we see the domain of parameters (blue region).

In view of the above, the larger the domain of parameters is, the more possibilities we have for choosing good starting points for Newton’s method. As a consequence, we are interested in making the domain of parameters as large as possible, since this allows us to find a greater number of good starting points for Newton’s method.
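As a rough illustration of how a convergence condition selects admissible starting points, the following sketch treats the scalar Lipschitz case $p = 1$, for which the classical Newton–Kantorovich condition reads $K\beta\eta \le 1/2$ (the accompanying requirement that a suitable ball lies in Ω is ignored here). The helper name `kantorovich_check`, the test function, and the two starting points are illustrative assumptions, not taken from the paper.

```python
def kantorovich_check(f, df, lipschitz_K, x0):
    """Return (beta, eta, K*beta*eta) for a scalar equation f(x) = 0."""
    beta = 1.0 / abs(df(x0))       # |[f'(x0)]^{-1}|
    eta = abs(f(x0) / df(x0))      # |[f'(x0)]^{-1} f(x0)|
    return beta, eta, lipschitz_K * beta * eta

# Hypothetical test problem: f(x) = x^2 - 2, so |f'(x) - f'(y)| = 2|x - y|.
f = lambda x: x**2 - 2.0
df = lambda x: 2.0 * x
K = 2.0

_, _, h_good = kantorovich_check(f, df, K, 1.5)
_, _, h_bad = kantorovich_check(f, df, K, 0.5)

inside_good = h_good <= 0.5   # condition holds at x0 = 1.5
inside_bad = h_bad <= 0.5     # condition fails at x0 = 0.5
```

The condition is only sufficient: for this particular function, Newton’s method started at 0.5 still converges, even though the condition fails there. Points of this kind are the ones that a larger domain of parameters may recover.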

3. Semi-Local Convergence of Newton’s Method

To improve the semi-local convergence of Newton’s method by enlarging the domain of parameters, we consider a procedure that consists of observing that, as a consequence of Condition (A2), once $x_0 \in \Omega$ is fixed, the condition:

$$\|F'(x) - F'(x_0)\| \le K_0\|x - x_0\|^p, \quad x \in \Omega, \tag{3}$$

is satisfied with $K_0 \le K$. Then, by considering jointly the parameters K and $K_0$, we can relax the semi-local convergence conditions of Newton’s method given in Theorem 1 and obtain a larger domain of parameters.

Now, we present a semi-local convergence result for Newton’s method under Condition (A1) for the starting point and Condition (A2) for the first derivative . Note that Condition (3) follows from Condition (A2) for the starting point . In addition, we obtain a semi-local convergence result by combining Conditions (A2) and (3), which allows increasing the domain of parameters , so that the possibility of choosing good starting points for Newton’s method is increased, as we can see later in the applications. In particular, we study the convergence of Newton’s method to a solution of the equation under certain conditions for the pair . From some real parameters, a system of four recurrence relations is constructed in which two sequences of positive real numbers are involved. The convergence of Newton’s method is then guaranteed from them.

3.1. Auxiliary Scalar Sequences

From Conditions (A1)–(A2), we define , and , where . Now, we define and:

Observe that we consider the case , since if , a trivial problem results, as the solution of the equation is .

Next, we prove the following four recurrence relations for Sequences (1), (4) and (5):

provided that:

If , then:

Then, by the Banach lemma on invertible operators, it follows that the operator exists and:

After that, from Taylor’s series and Sequence (1), we have:

Thus,

provided that and .
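The two tools used in this step, the Banach lemma and the integral form of Taylor’s formula, can be sketched for the first iterate as follows. The notation $\Gamma_0 = [F'(x_0)]^{-1}$, $\|\Gamma_0\| \le \beta$, $\|\Gamma_0 F(x_0)\| \le \eta$ and the center constant $K_0$ are assumptions of this sketch, and the resulting bounds need not coincide term by term with the recurrence relations of the paper.

Banach lemma: if $A \in \mathcal{L}(X, X)$ satisfies $\|I - A\| < 1$, then $A^{-1}$ exists and

$$\|A^{-1}\| \le \frac{1}{1 - \|I - A\|}.$$

Applying it to $A = \Gamma_0 F'(x_1)$, with $\|x_1 - x_0\| = \|\Gamma_0 F(x_0)\| \le \eta$ and a center Hölder condition at $x_0$,

$$\|I - \Gamma_0 F'(x_1)\| \le \|\Gamma_0\|\,\|F'(x_0) - F'(x_1)\| \le \beta K_0 \|x_1 - x_0\|^p \le \beta K_0 \eta^p,$$

so that, if $\beta K_0 \eta^p < 1$, the operator $[F'(x_1)]^{-1}$ exists and

$$\|[F'(x_1)]^{-1}\| \le \frac{\beta}{1 - \beta K_0 \eta^p}.$$

For the residual, since $F(x_0) + F'(x_0)(x_1 - x_0) = 0$ by the definition of the Newton step,

$$F(x_1) = \int_0^1 \big[F'(x_0 + t(x_1 - x_0)) - F'(x_0)\big](x_1 - x_0)\,dt,$$

and therefore

$$\|F(x_1)\| \le \int_0^1 K_0\, t^p\, \|x_1 - x_0\|^{1+p}\,dt \le \frac{K_0}{1+p}\,\eta^{1+p}.$$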

Now, we prove in the same way as above the following four recurrence relations for Sequences (1), (4) and (5):

provided that:

In addition, we generalize the last recurrence relations to every point of Sequence (1), so that we can guarantee that (1) is a Cauchy sequence from them. For this, we analyze the scalar sequences defined in (4) and (5) in order to prove later the semi-local convergence of Sequence (1). For this, it suffices to see that (1) is a Cauchy sequence and (10) and (15) are true for all and with . We begin by presenting a technical lemma.

Lemma 2. If and is such that

then:

- (a) the sequences and are strictly decreasing,

- (b) and , for all .

If , then and for all .

Proof. We first consider the case in which satisfies (16). Item (a) is proven by mathematical induction on n. As , then and . If we now suppose that and , for all , then:

As a result, the sequences and are strictly decreasing for all .

To see Item (b), we have and , for all , by Item (a) and the conditions given in (16).

Second, if , then , for all . Moreover, if , then we have , for all . □

3.2. Main Result

We now give a semi-local convergence result for Newton’s method from a modification of the convergence conditions required in Theorem 1. Therefore, we consider Conditions (A1), (A2) and the modification of Condition (A3) given by:

- (A3b) , satisfies (16) and , where .

Remember that Condition (3) follows from Condition (A2) for the starting point .

Theorem 3. Let $F: \Omega \subseteq X \to Y$ be a continuously differentiable operator defined on a nonempty open convex domain Ω of a Banach space X with values in a Banach space Y. Suppose that Conditions (A1), (A2) and (A3b) are satisfied. Then, Newton’s sequence, given by (1), converges to a solution $x^*$ of the equation $F(x) = 0$, starting at $x_0$, and , for all .

Proof. We begin by proving the following four items for Sequences (1), (4) and (5) and :

- (I)

- There exists and ,

- (II)

- ,

- (III)

- ,

- (IV)

- .

Observe that , since . Moreover, from (6), (7), (8) and (9), it follows that . Furthermore, from (11), (12), (13) and (14), we have that Items (I), (II), (III) and (IV) hold for . If we now suppose that Items (I), (II) and (III) are true for some , it follows, by analogy to the case where and induction, that Items (I), (II) and (III) also hold for n. Notice that for all . Now, we prove (IV). Therefore,

and . As , then for all . Note that the conditions given in (15) are satisfied for all and with .

Next, we prove that is a Cauchy sequence. For this, we follow an analogous procedure to the latter. Therefore, for and , we have:

Thus, is a Cauchy sequence.

After that, we prove that is a solution of equation . As when , if we take into account that:

and is bounded, since:

it follows that when . As a consequence, we obtain by the continuity of F in . □

4. Accessibility of Newton’s Method

The accessibility of an iterative method is analyzed from the set of possible starting points that guarantee the convergence of the iterative method when it starts at them. As we have indicated, the set of starting points that guarantees the convergence of the iterative method is related to the domain of parameters associated with a result of semi-local convergence of the iterative method.

Next, we study the domain of parameters associated with Theorem 1 and compare it with that associated with Theorem 3. To guarantee the convergence of Newton’s method from Theorem 3, the following three conditions must be satisfied:

where .

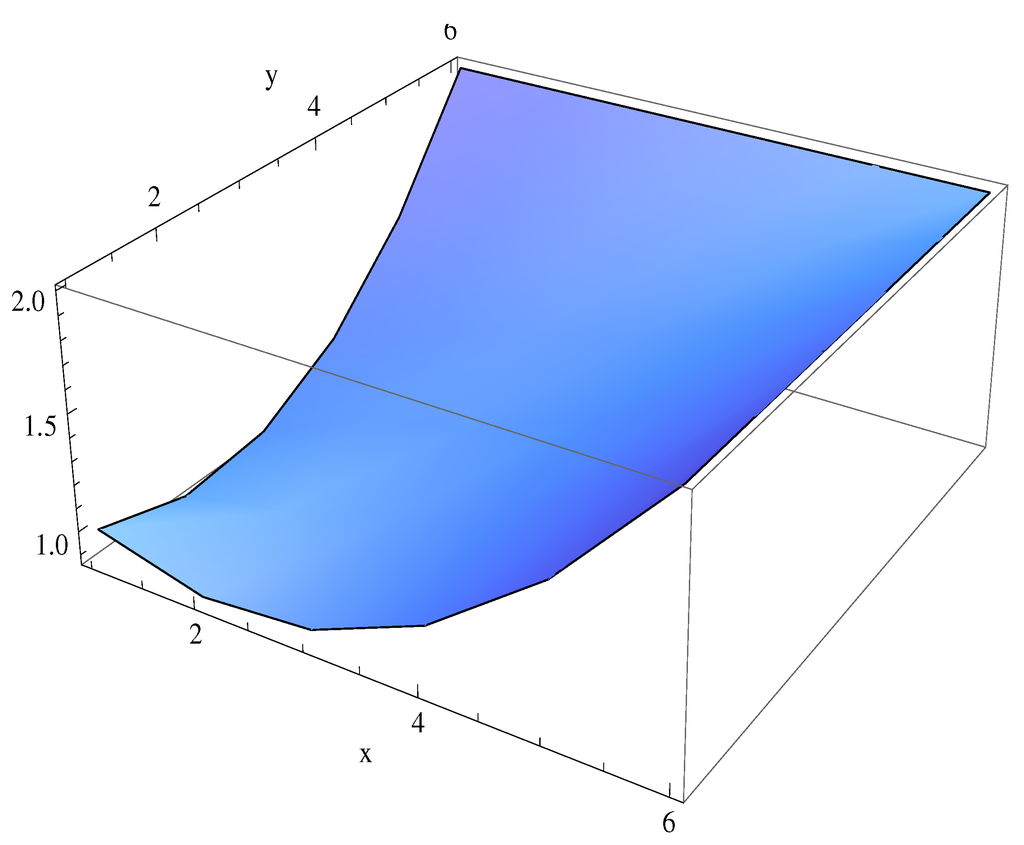

Observe that is a non-increasing and convex function and and , for all . Besides, if , the unique zero of in the interval is . If we now denote, for a fixed , the unique zero of in by and demand , then Condition (18) holds. Moreover, since , the second condition of (17) is satisfied. Now, as:

the second and third conditions of (17) are satisfied, provided that:

After that, we write the first condition of (17) as:

and take into account that the second and third conditions of (17) are satisfied if:

since and . In addition, Condition (20) is equivalent to:

Furthermore, , so that is a nondecreasing function for all , and and . Therefore, Condition (19) is satisfied if Condition (20) holds, and we can then give the following result.

Corollary 4. Let $F: \Omega \subseteq X \to Y$ be a continuously differentiable operator defined on a nonempty open convex domain Ω of a Banach space X with values in a Banach space Y. Suppose that (A1)–(A2) are satisfied. If Condition (20), where is the unique zero of Function (2) in the interval , is satisfied and , where , , and , then Newton’s sequence, given by (1), converges to a solution $x^*$ of the equation $F(x) = 0$, starting at $x_0$, and , for all

From the last result, we can define the following domains of parameters:

where , and is the unique zero of Function (2) in the interval .

Next, we compare the conditions required for the semi-local convergence of Newton’s method in Theorem 1 and Corollary 4. In Figure 1, we see that for , so that we can guess that the smaller the quantity is, the larger the domain of parameters is: orange for , green for , red for and yellow for . Note that, if , the domain of parameters associated with Corollary 4, , tends to be that obtained by Theorem 1 (blue region). As a consequence,

Figure 1.

Domains of parameters of Newton’s method associated with Theorem 1 (blue region) and Corollary 4 (orange for , green for , red for and yellow for ) for .

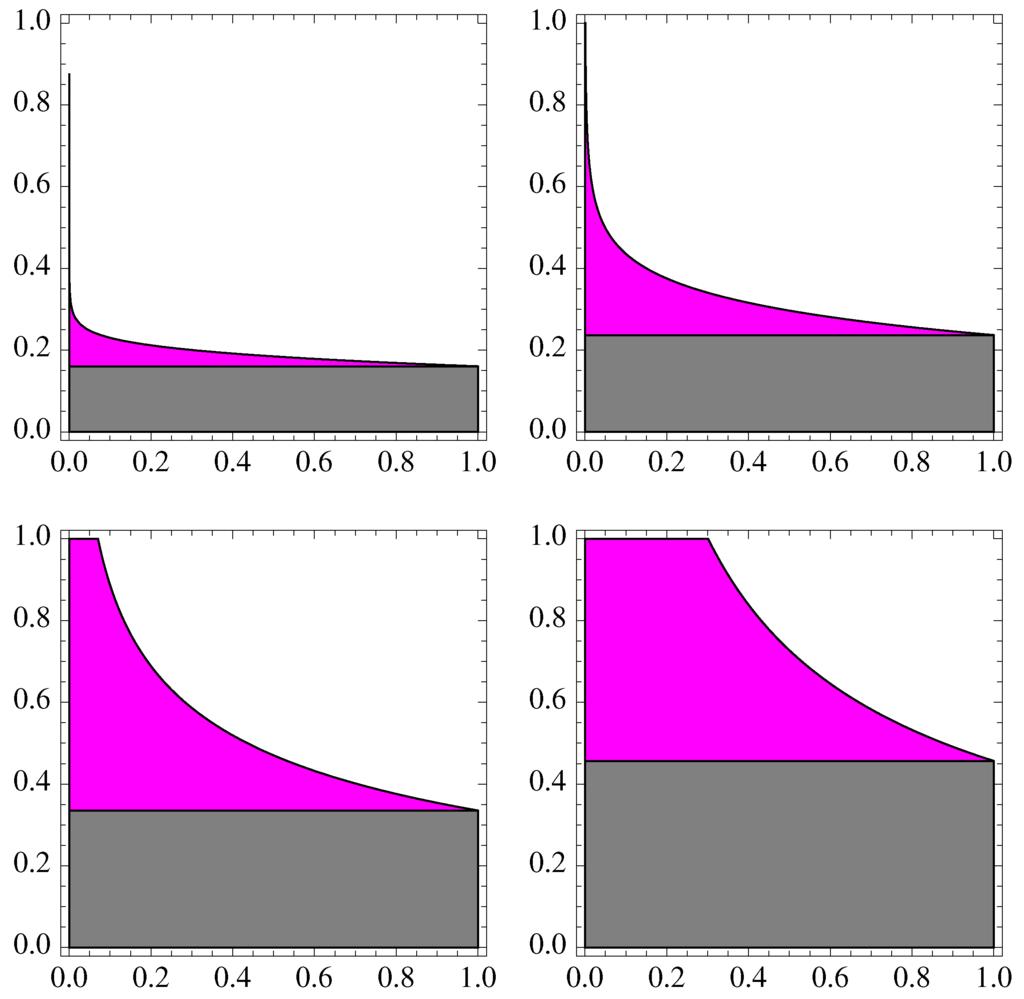

On the other hand, in Figure 2, we observe the relationship between the domains of parameters associated with Theorem 1 (gray region) and Corollary 4 (magenta region) from the variability of ε and for four different values of p: . As we can see, the domain associated with Corollary 4 is always larger, for all , than that associated with Theorem 1.

Figure 2.

Domains of parameters of Newton’s method associated with Corollary 4 (magenta region) and Theorem 1 (gray region) for .

In addition, we prove analytically in the following what we have just seen graphically. First, we prove that , for each and . For this, if , then , since and . Moreover, , since when and . Therefore, for each and .

Finally, we see that if with . Indeed, from and , we have and , since , so that and , and therefore .

5. Application

Now, we illustrate all of that mentioned above with the following mildly nonlinear elliptic equation:

This type of equation is of interest in the theory of gas dynamics [7]. An associated Dirichlet problem can be formulated as follows. Suppose that the equation is satisfied in the interior of the square in and that is given and continuous on the boundary of the square ([8]):

Our discussion focuses on the formulation of the finite difference equation for the elliptic boundary value Problems (21)–(22). The method of finite differences applied to this problem yields a finite system of equations. For general use, iterative techniques often represent the best approach to the solution of such finite systems of equations.

Specifically, central difference approximations for (21) are used, so that Problems (21)–(22) are reduced to the problem of finding a real zero of a function $F: \mathbb{R}^m \to \mathbb{R}^m$, namely a real solution of a nonlinear system with m equations and m unknowns. The common technique used to approximate such a solution is the application of iterative methods. In this case, Newton’s method remains the most widely used iterative method for this purpose, since it is one of the most efficient.

For Problems (21)–(22) in , Equation (21) can be approximated using central difference approximations for the spatial derivatives. Consider a grid with step size in x and in y defined over the region D, so that D is partitioned into a grid consisting of rectangles with sides and . The mesh points are given by:

Considering the following finite difference expressions to approximate the partial differentials:

Equation (21) is approximated at each interior grid point by the difference equation:

for and . The boundary conditions are:

If we now denote the approximate value of as , we obtain the difference equation:

for and , with:

Equation (21) with the boundary conditions given in (22) forms an nonlinear system of equations. To set up the nonlinear system, the interior grid points are labeled row-by-row from to starting from the left-bottom corner point. The resulting system is:

where:

, , I is the identity matrix in , , and is a vector formed from the boundary conditions (systems of this type are so-called mildly nonlinear systems).
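A block tridiagonal matrix of the kind that arises from a five-point central difference stencil with row-by-row ordering can be assembled compactly with Kronecker products. The sketch below is only an illustration under stated assumptions: the grid size `n`, the step `h`, the exponent `p`, the nonlinearity `|u|^(1+p)` and the right-hand side `b` are hypothetical choices, since the actual system is determined by Equation (21) and the boundary data (22).

```python
import numpy as np

def laplacian_2d(n):
    """5-point finite-difference matrix on an n-by-n interior grid, row-by-row ordering."""
    T = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)  # 1D second difference
    I = np.eye(n)
    # Block tridiagonal: diagonal blocks tridiag(-1, 4, -1), off-diagonal blocks -I.
    return np.kron(I, T) + np.kron(T, I)

n, h, p = 4, 0.2, 0.5        # hypothetical grid size, step size and Hölder exponent
A = laplacian_2d(n)
b = np.ones(n * n)           # hypothetical stand-in for the boundary-data vector

def F(u):
    # Mildly nonlinear system: linear part plus a diagonal nonlinearity.
    return A @ u + h**2 * np.abs(u) ** (1.0 + p) - b

def dF(u):
    # Jacobian: A plus a diagonal matrix whose entries are only p-Hölder continuous in u.
    return A + h**2 * np.diag((1.0 + p) * np.sign(u) * np.abs(u) ** p)

u0 = np.zeros(n * n)
Gamma0 = np.linalg.inv(dF(u0))
beta = np.linalg.norm(Gamma0, np.inf)          # ||[F'(u0)]^{-1}|| in the infinity norm
eta = np.linalg.norm(Gamma0 @ F(u0), np.inf)   # ||[F'(u0)]^{-1} F(u0)|| in the infinity norm

u = u0.copy()
for _ in range(20):
    step = np.linalg.solve(dF(u), F(u))
    u = u - step
    if np.linalg.norm(step, np.inf) < 1e-12:
        break
residual = np.linalg.norm(F(u), np.inf)
```

With a nonlinearity of this type and $p < 1$, the Jacobian is Hölder continuous but not Lipschitz continuous near $u = 0$, which is the situation that a Hölder-type convergence result is designed to cover.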

If we denote the previous system by , where:

then is a linear operator, which is given by the matrix:

We now choose and the infinity norm. In addition, the number of equations is , and . Besides, and:

In this case, we observe that a solution of the system with F defined in (23) satisfies:

where and , so that , where and are the two positive real roots of the scalar equation . Then, we can consider:

since .

Moreover, is the linear operator given by the matrix:

and:

where . In addition,

Thus, , and .

If we choose the starting point , we obtain and , so that the condition of Theorem 1 is not satisfied, since , where is the unique zero of the corresponding auxiliary function given by (2), , in the interval . As a consequence, we cannot use Theorem 1 to guarantee the convergence of Newton’s method for approximating a solution of the system , where F is defined in (23).

However, we can guarantee the convergence of Newton’s method from Corollary 4, since Condition (20) is satisfied: with . Therefore, we can then apply Newton’s method for approximating a solution of the system with F defined in (23) and obtain the approximation given by the vector that is shown in Table 1, reached after four iterations with a tolerance . In Table 2, we show the errors using the stopping criterion . Notice that the vector shown in Table 1 is a good approximation of the solution of the system, since . See the sequence in Table 2.

Table 1.

Approximation of the solution $x^*$ of $F(x) = 0$ with F given in (23).

| i | | i | | i | | i | |
|---|---|---|---|---|---|---|---|
| 1 | | 5 | | 9 | | 13 | |
| 2 | | 6 | | 10 | | 14 | |
| 3 | | 7 | | 11 | | 15 | |
| 4 | | 8 | | 12 | | 16 | |

Table 2.

Absolute errors obtained by Newton’s method and .

| n | | |
|---|---|---|
| 0 | | |
| 1 | | |
| 2 | | |
| 3 | | |

Acknowledgments

This work has been partially supported by the project MTM2014-52016-C2-1-P of the Spanish Ministry of Economy and Competitiveness.

Author Contributions

The contributions of the two authors have been similar. Both authors have worked together to develop the present manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Kantorovich, L.V.; Akilov, G.P. Functional Analysis; Pergamon Press: Oxford, UK, 1982.

2. Fenyö, I. Über die Lösung der in Banachschen Raume definierten nichtlinearen Gleichungen. Acta Math. Acad. Sci. Hung. 1954, 5, 85–93.

3. Argyros, I.K. Remarks on the convergence of Newton’s method under Hölder continuity conditions. Tamkang J. Math. 1992, 23, 269–277.

4. Hernández, M.A. The Newton method for operators with Hölder continuous first derivative. J. Optim. Theory Appl. 2001, 109, 631–648.

5. Rokne, J. Newton’s method under mild differentiability conditions with error analysis. Numer. Math. 1972, 18, 401–412.

6. Argyros, I.K. On the Newton-Kantorovich hypothesis for solving equations. J. Comput. Appl. Math. 2004, 169, 315–332.

7. Greenspan, D. Introductory Numerical Analysis of Elliptic Boundary Value Problems; Harper and Row: New York, NY, USA, 1965.

8. Rall, L.B. Computational Solution of Nonlinear Operator Equations; Robert E. Krieger Publishing Company: Malabar, FL, USA, 1979.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).