Future Skills in the GenAI Era: A Labor Market Classification System Using Kolmogorov–Arnold Networks and Explainable AI

Abstract

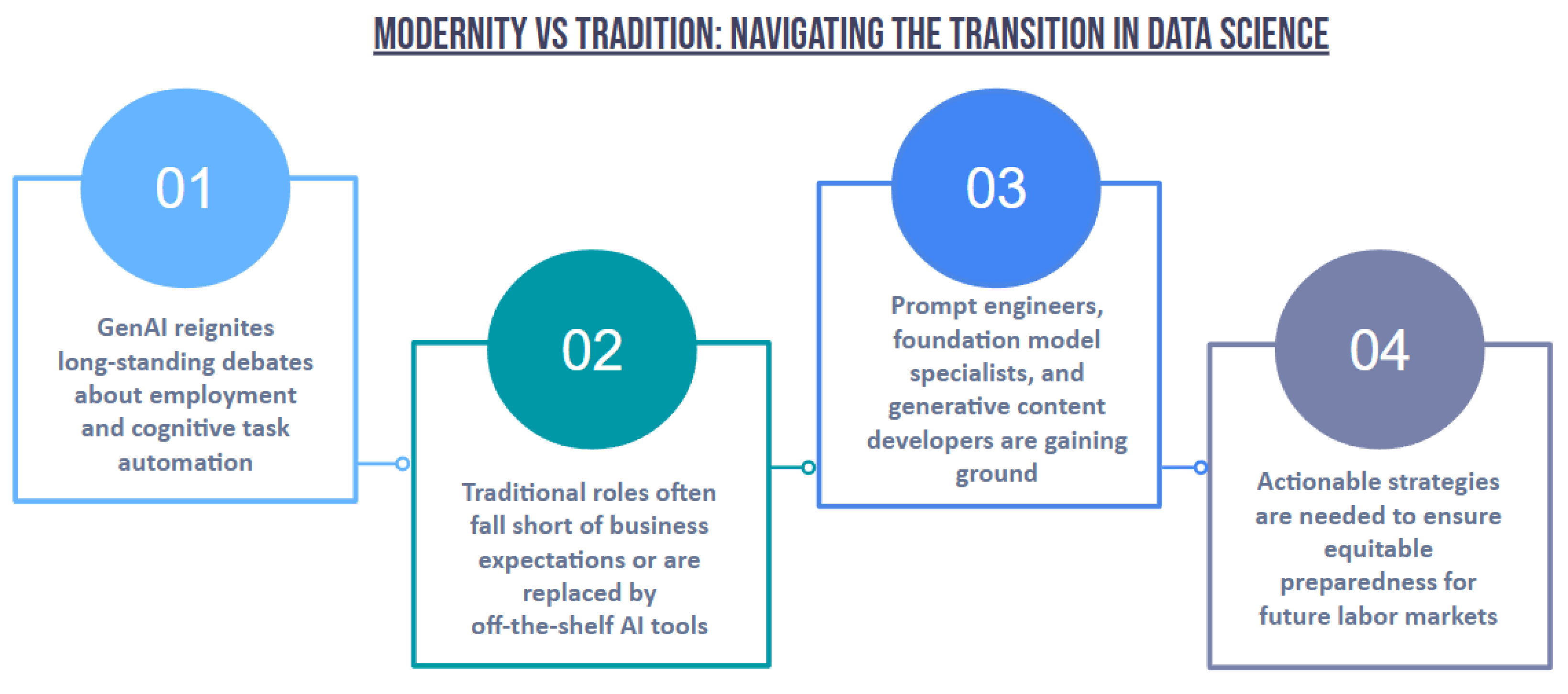

1. Introduction

- RQ1: Can KANs accurately and transparently distinguish between modern GenAI roles and traditional AI roles based solely on skill profiles?

- RQ2: How is traditional AI impacted by GenAI and which skills are mainly affected?

- RQ3: Can traditional AI roles be distinguished from their GenAI counterparts?

- RQ4: How can real-time skill explainability be used to support stakeholders in identifying GenAI readiness and guiding upskilling decisions?

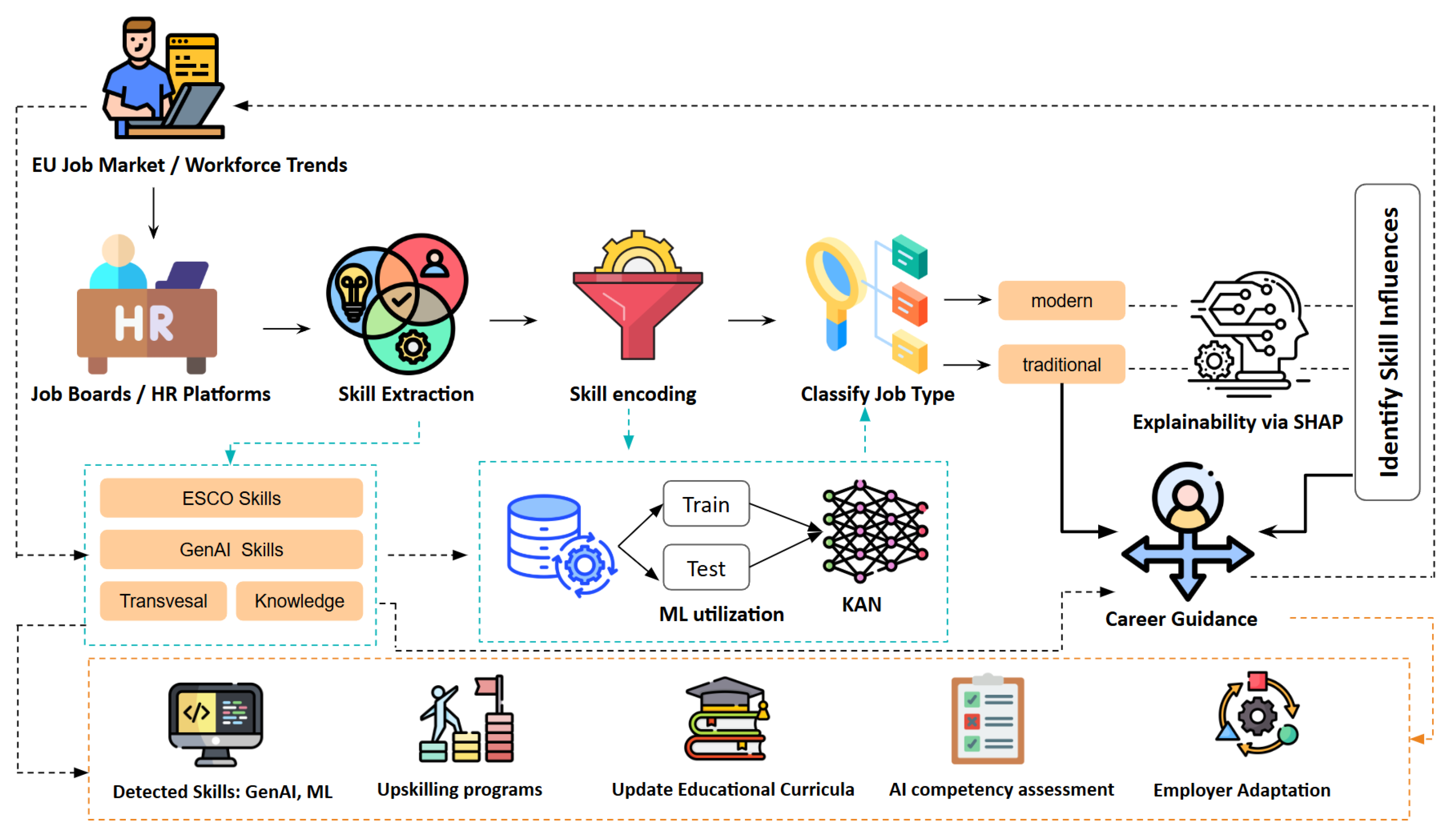

- We introduce KANVAS, the first KAN-based framework for distinguishing traditional and GenAI-related job roles using interpretable, skill-driven classification.

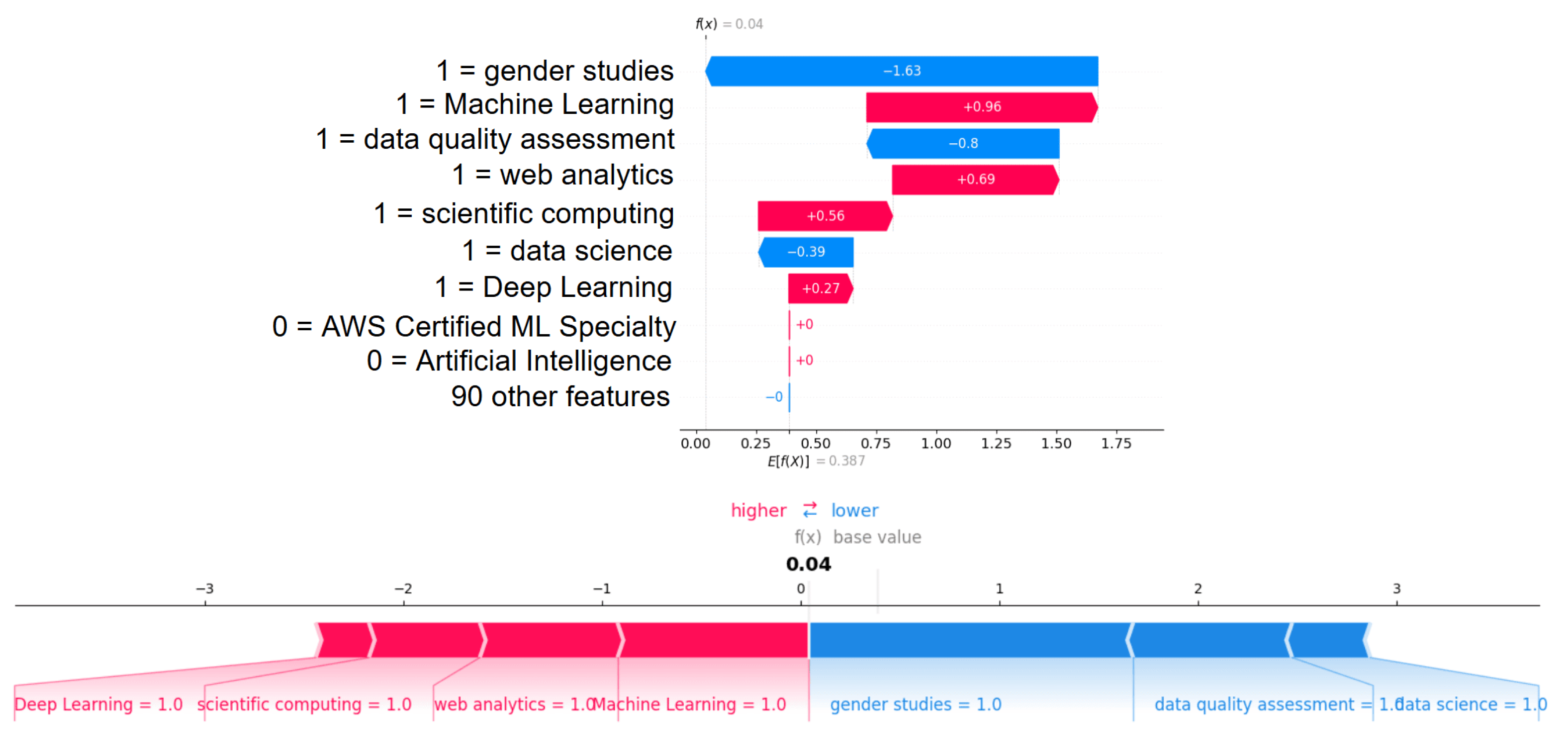

- Our approach leverages Explainable AI (XAI) techniques to identify the most influential skills differentiating modern and traditional AI roles, based on large-scale job postings.

- KANVAS is validated on real-world job advertisements, offering actionable insights for workforce planning and upskilling strategies.

- The trained KAN models enable augmentation of traditional AI job profiles with GenAI skills, illustrating their added value in modern data science.

- Our implementation is publicly available at https://github.com/dkavargy/KANVAS (accessed on 19 August 2025).

2. Related Work

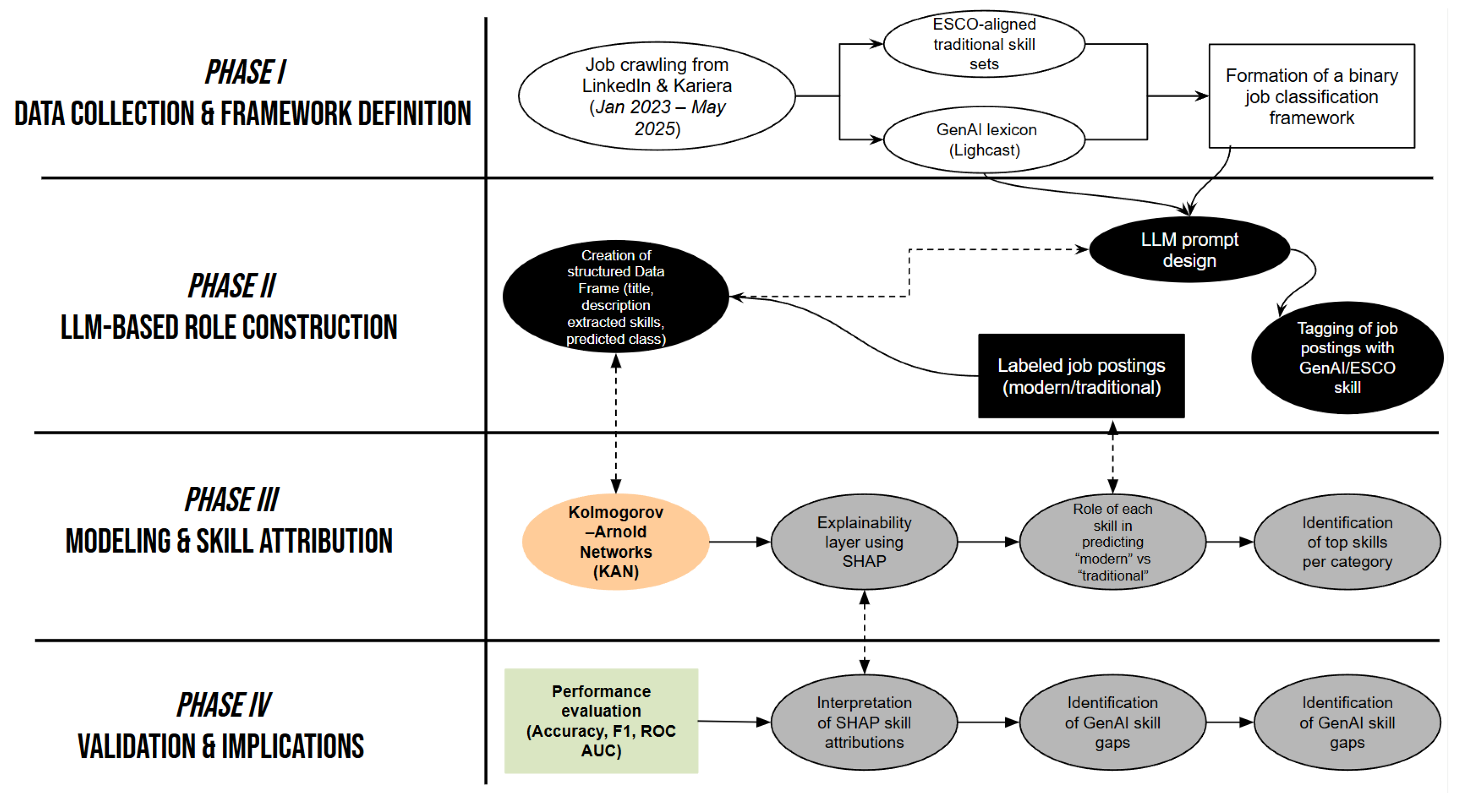

3. Methodological Framework

3.1. Data Collection and Framework Definition

Skill Extraction from OJA

3.2. LLM-Based Role Construction

Listing 1. Prompt generated using an LLM to guide classification decisions.

“You are a highly specialized labor market expert trained to classify job roles based on current AI and data trends. Your task is to analyze the job title and description provided, and classify the job into one of two distinct categories: 1. ‘modern’: Roles that involve recent advances in artificial intelligence and generative technologies. Examples include work with large language models (LLMs), GenAI, prompt engineering, RAG (Retrieval-Augmented Generation), LangChain, CrewAI, synthetic data generation, vector databases (e.g., FAISS, Pinecone), diffusion models, multimodal AI, transformers, embeddings, fine-tuning of models, and modern MLOps or LLMOps practices. 2. ‘traditional’: Roles that rely on established methods in data science and machine learning, such as regression, classification, clustering, feature engineering, statistical analysis, BI/reporting, and traditional ML frameworks like scikit-learn, TensorFlow (classic usage), and general analytics. These jobs do NOT involve GenAI or advanced generative AI components. Only reply with one word: modern or traditional. No explanation. No punctuation. No formatting”
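The prompt in Listing 1 asks for a one-word reply, but model output may still carry stray casing, whitespace, or punctuation. The following is a minimal sketch of how a posting could be paired with the prompt and how the reply could be normalized. The function names, the message layout, and the rule of rejecting ambiguous replies are illustrative assumptions, not the authors' implementation, and no specific LLM client is assumed.

```python
import re
from typing import Dict, List, Optional

VALID_LABELS = ("modern", "traditional")


def build_messages(system_prompt: str, title: str, description: str) -> List[Dict[str, str]]:
    """Pair the Listing 1 instructions with one job posting.

    The system/user message layout is a common chat convention and is an
    assumption here; adapt it to whichever LLM client is actually used.
    """
    user_text = f"Job title: {title}\nJob description: {description}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]


def parse_label(reply: str) -> Optional[str]:
    """Map a raw reply to 'modern' or 'traditional'.

    Returns None when the reply names neither label or both labels,
    so that ambiguous answers can be re-queried or reviewed by hand.
    """
    tokens = re.findall(r"[a-z]+", reply.lower())
    hits = {token for token in tokens if token in VALID_LABELS}
    return hits.pop() if len(hits) == 1 else None


if __name__ == "__main__":
    for raw in ["Modern.", "  traditional\n", "modern or traditional", ""]:
        print(repr(raw), "->", parse_label(raw))
```

Returning `None` rather than guessing keeps malformed replies out of the labeled set, which matters when the labels are later used as training targets.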

3.3. Modeling & Skill Attribution
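As background for the KAN classifier, the sketch below shows the forward pass of a single KAN-style layer, in which every input-output edge carries its own learnable one-dimensional function and each output is the sum of those functions. It is a simplified illustration under stated assumptions: Gaussian radial basis functions stand in for the B-splines of the original KAN formulation, the weights are random rather than trained, and the class name and parameters are hypothetical rather than taken from the KANVAS code base.

```python
import numpy as np


class RBFKANLayer:
    """One KAN-style layer: y_j = sum_i phi_ji(x_i).

    Assumption: each edge function phi_ji is a weighted sum of Gaussian
    bumps on a fixed grid, used here in place of B-splines.
    """

    def __init__(self, n_in, n_out, n_basis=8, x_min=0.0, x_max=1.0, seed=0):
        rng = np.random.default_rng(seed)
        self.centers = np.linspace(x_min, x_max, n_basis)  # grid shared by all edges
        self.width = (x_max - x_min) / (n_basis - 1)
        # coef[j, i, k]: weight of basis k on the edge from input i to output j
        self.coef = rng.normal(scale=0.1, size=(n_out, n_in, n_basis))

    def basis(self, x):
        """Evaluate all basis functions: (batch, n_in) -> (batch, n_in, n_basis)."""
        z = (x[..., None] - self.centers) / self.width
        return np.exp(-z ** 2)

    def forward(self, x):
        """Sum the edge functions over inputs i and basis index k."""
        return np.einsum("bik,jik->bj", self.basis(x), self.coef)

    def edge_function(self, j, i, grid):
        """Evaluate phi_ji on a 1-D grid, e.g. to inspect one skill's effect."""
        z = (grid[:, None] - self.centers) / self.width
        return np.exp(-z ** 2) @ self.coef[j, i]


if __name__ == "__main__":
    layer = RBFKANLayer(n_in=4, n_out=1)
    skills = np.array([[1.0, 0.0, 1.0, 0.0], [0.0, 0.0, 1.0, 1.0]])
    print(layer.forward(skills).shape)
```

Because the output is an explicit sum of per-input functions, each edge function can be evaluated or plotted separately, which is the property that makes this family of models attractive for skill-level interpretation.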

3.4. Validation & Implications

4. Results

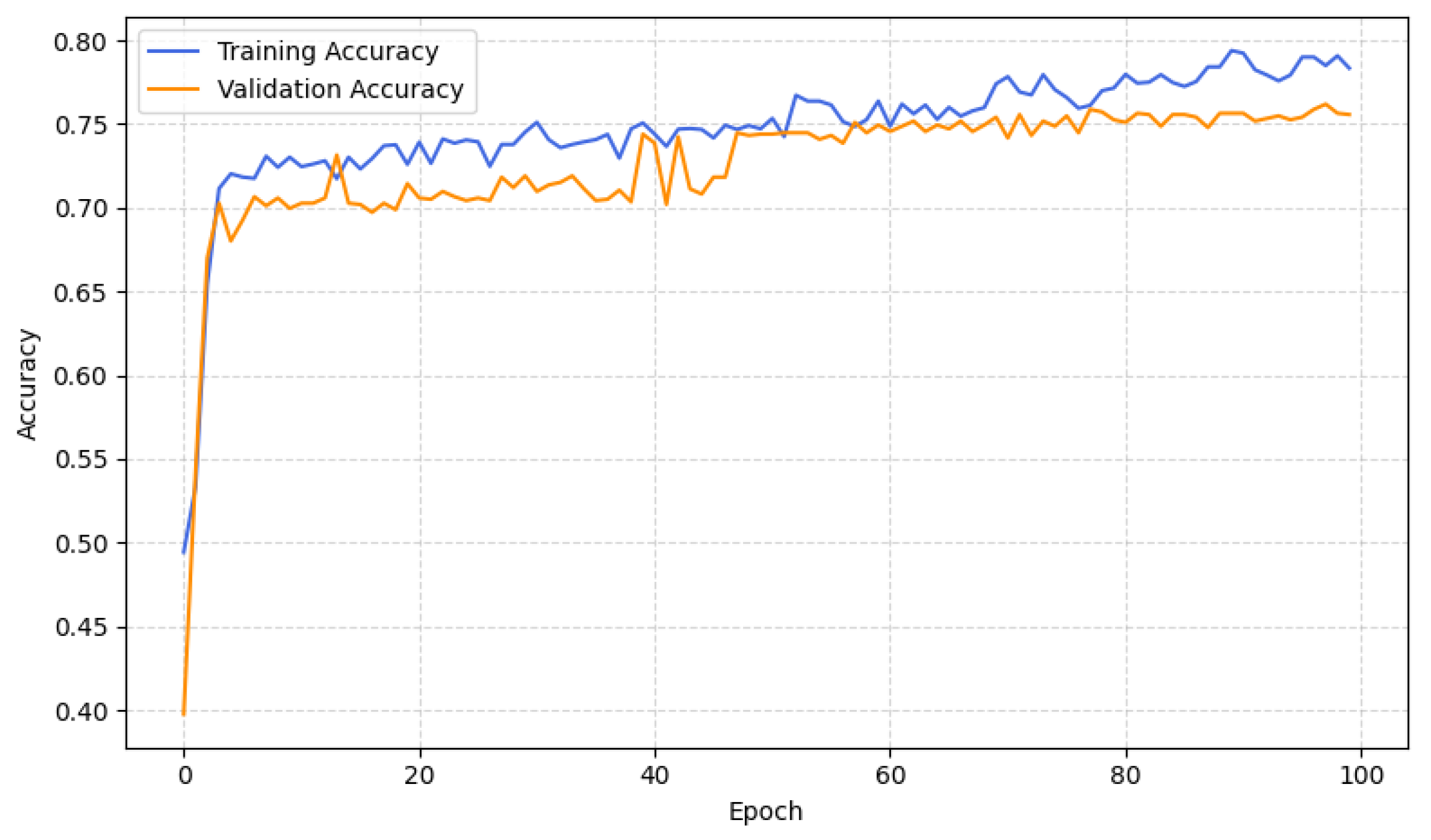

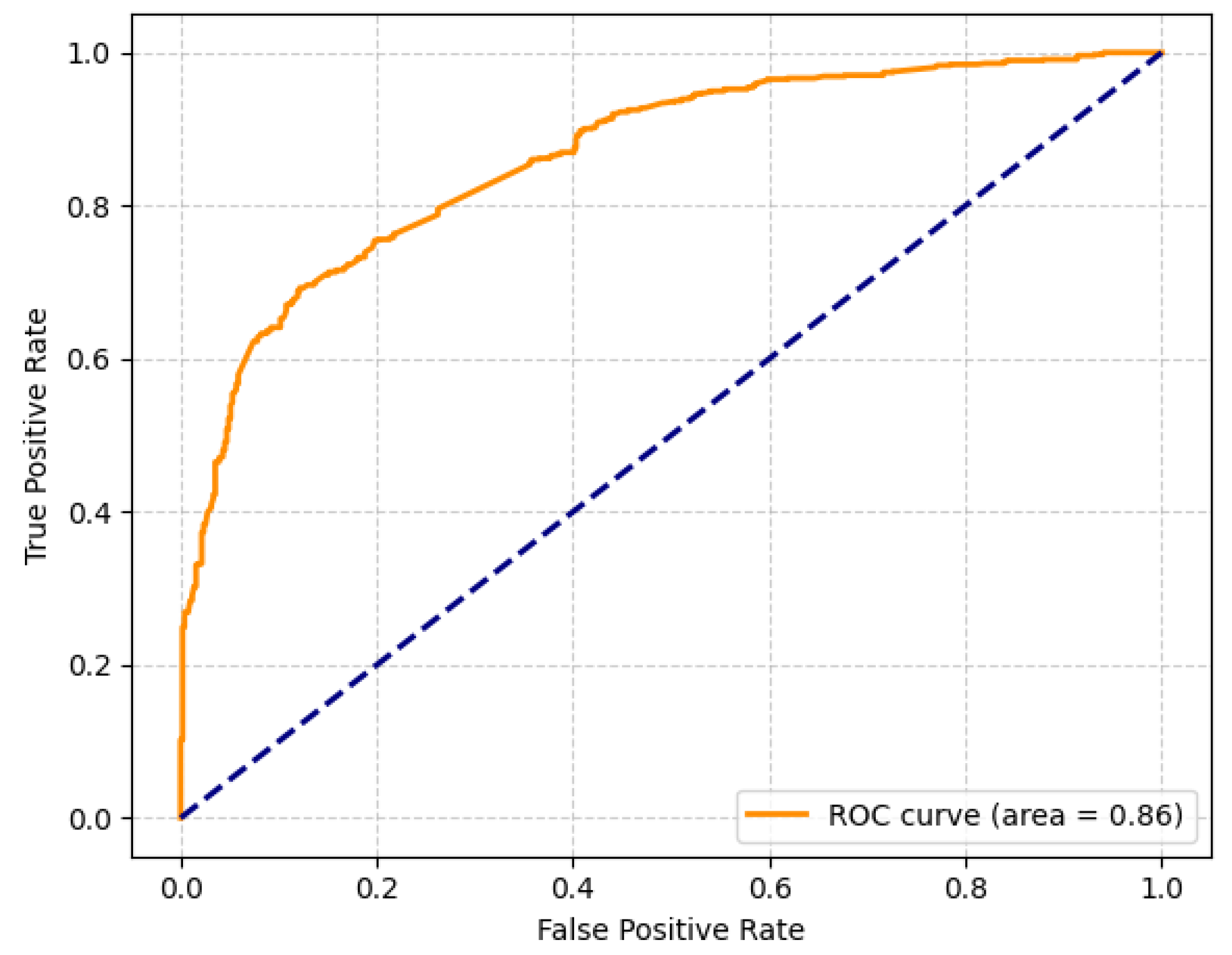

4.1. Evaluating the KAN Model

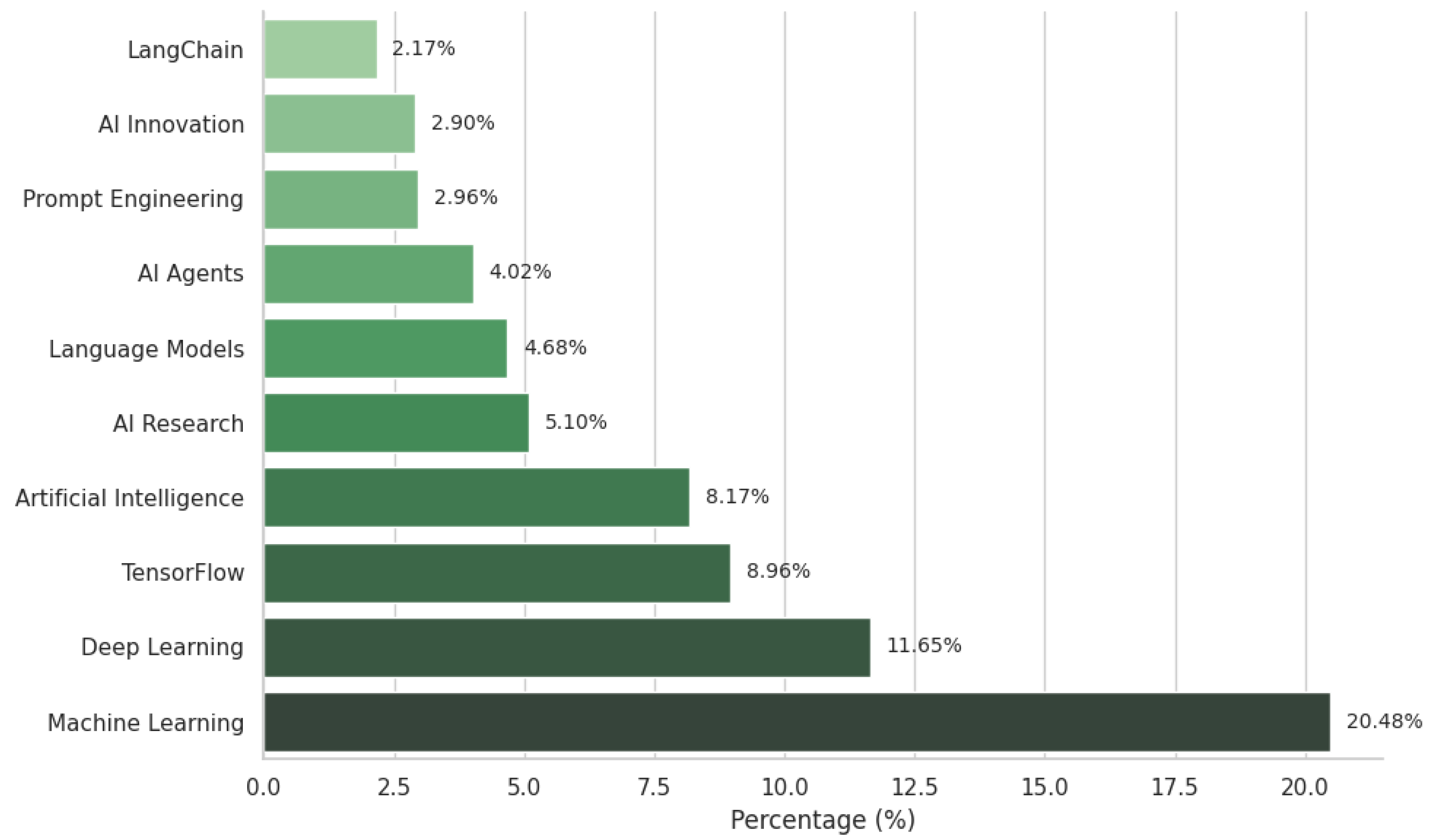

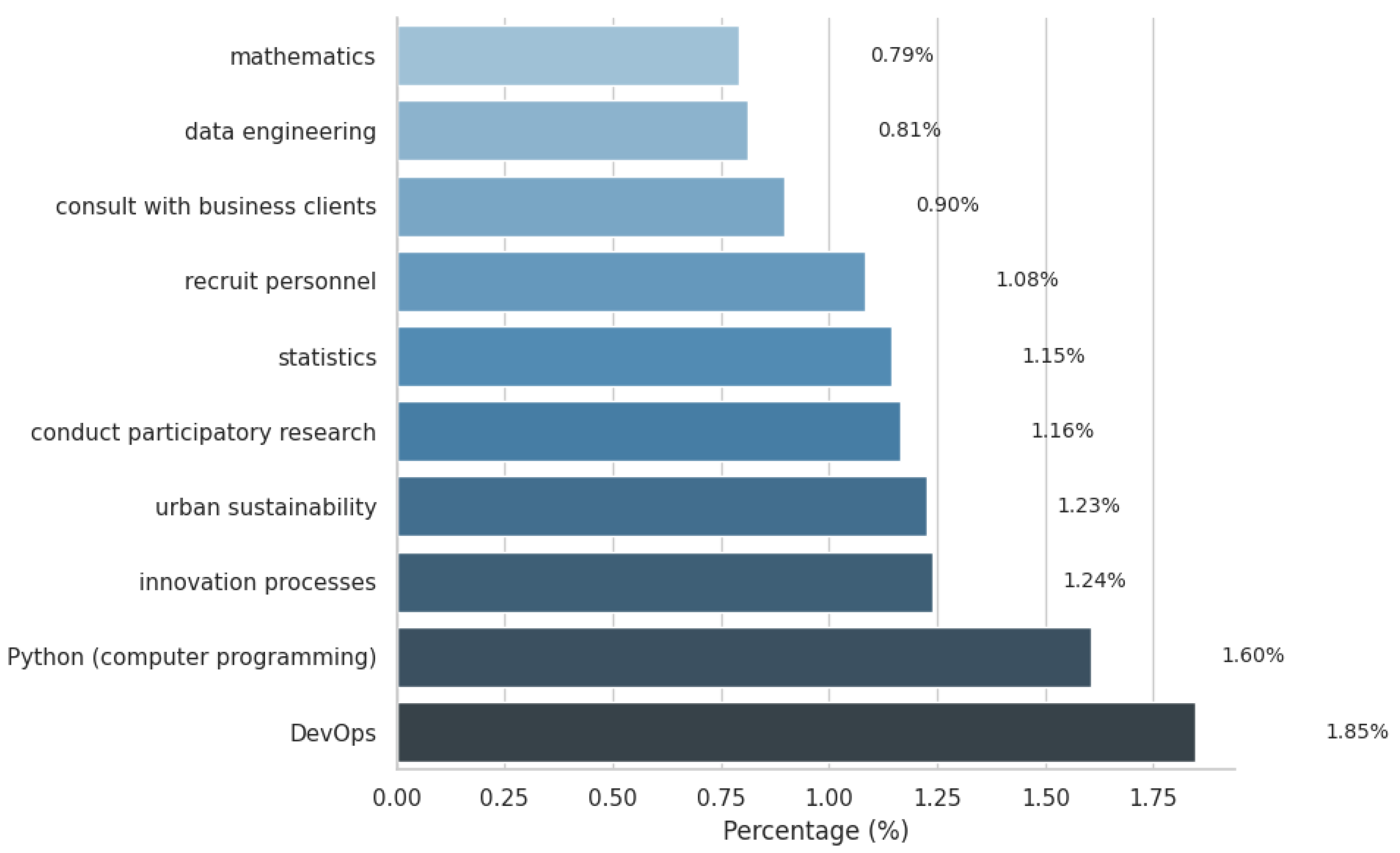

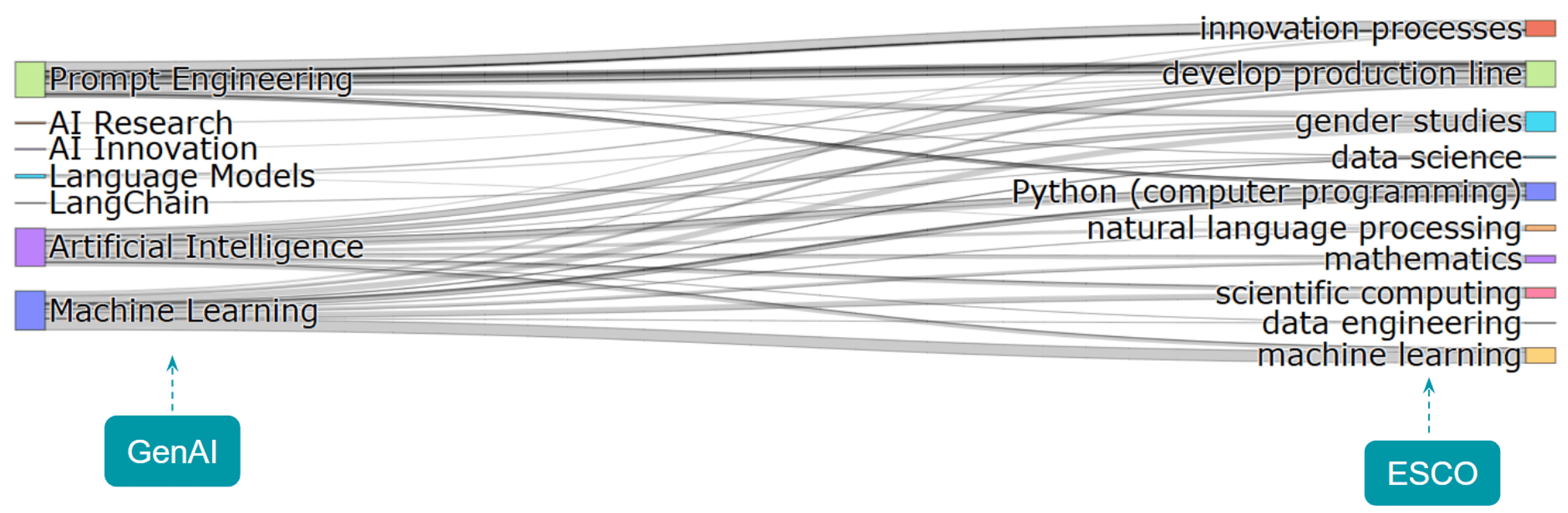

4.2. GenAI Roles Are on the Rise

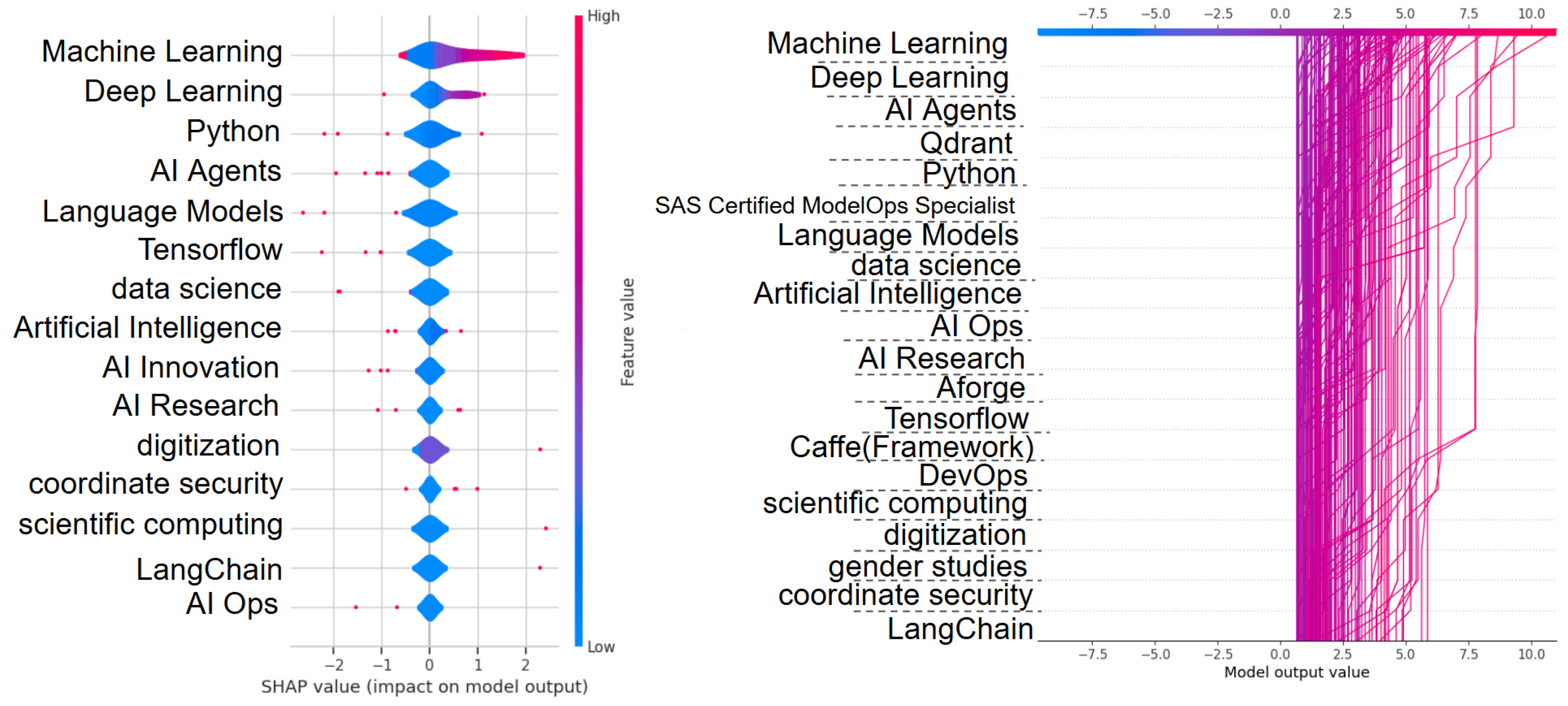

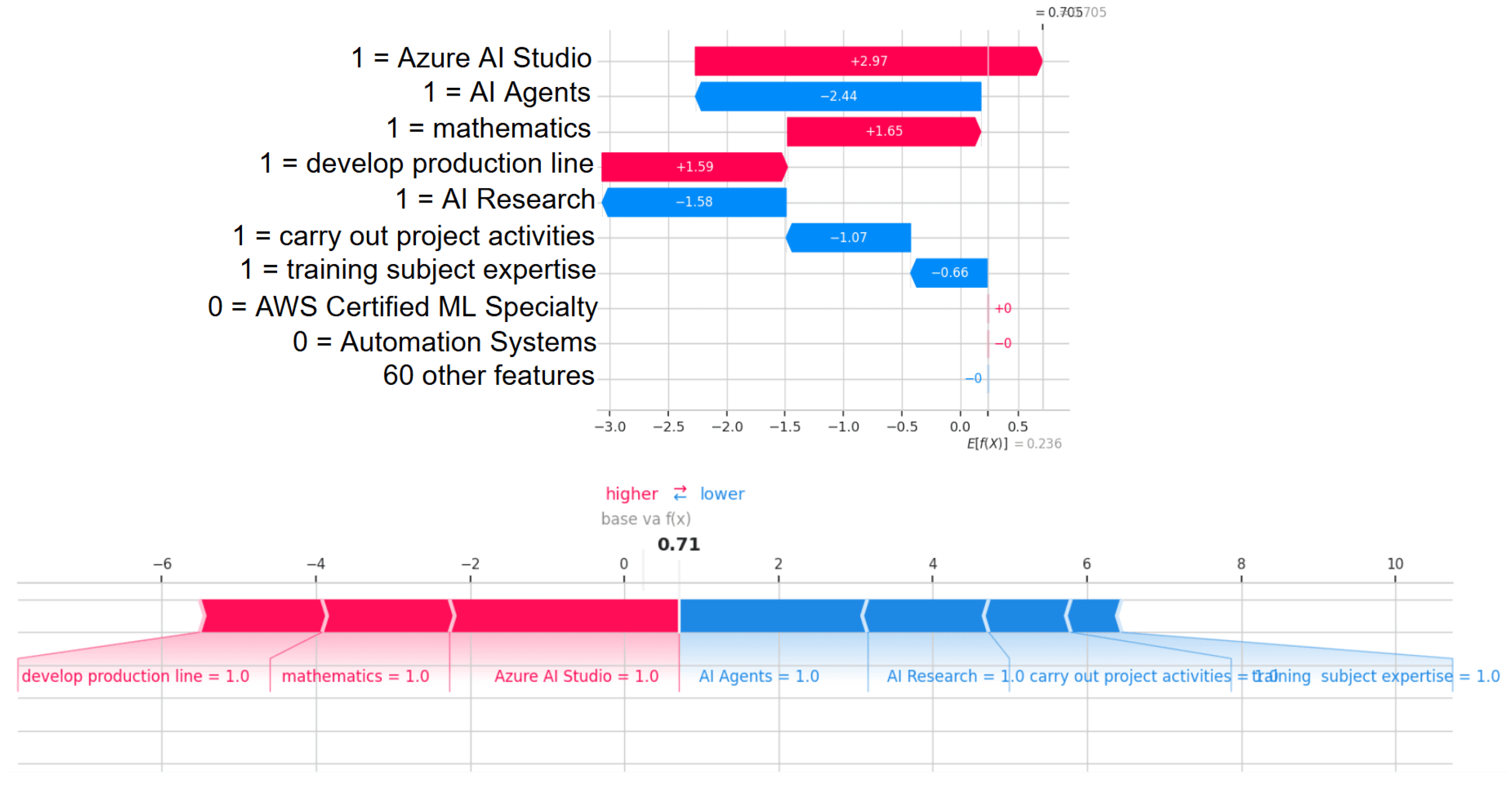

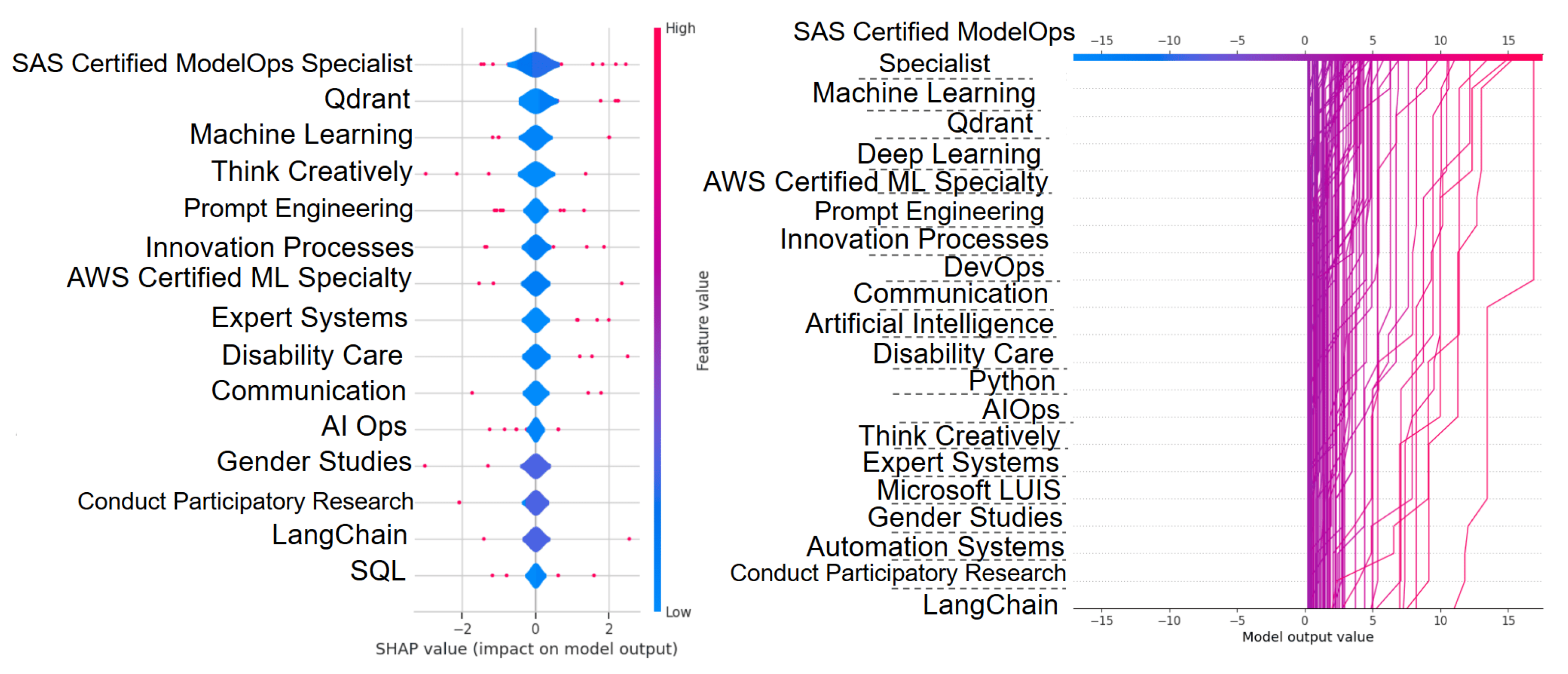

4.3. KANs Classification on Modern Job Roles

{Machine Learning, SAS Certified ModelOps Specialist, Language Models, Artificial Intelligence, TensorFlow, AI Agents, Python (computer programming), AI Research, data science, Deep Learning}
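A skill set like the one above has to be turned into numbers before a classifier can consume it. One common choice, assumed here because the encoding is not specified in this section, is a binary (multi-hot) vector over a fixed skill vocabulary. The vocabulary below is a small hypothetical sample, not the full skill list used in the study.

```python
def encode_skills(profile, vocabulary):
    """Return a 0/1 vector marking which vocabulary skills appear in the profile.

    Matching is case-insensitive; skills outside the vocabulary are ignored.
    """
    index = {skill.lower(): pos for pos, skill in enumerate(vocabulary)}
    vector = [0] * len(vocabulary)
    for skill in profile:
        pos = index.get(skill.lower())
        if pos is not None:
            vector[pos] = 1
    return vector


# Skill set quoted above.
profile = [
    "Machine Learning", "SAS Certified ModelOps Specialist", "Language Models",
    "Artificial Intelligence", "TensorFlow", "AI Agents",
    "Python (computer programming)", "AI Research", "data science", "Deep Learning",
]

# Hypothetical five-skill vocabulary, for illustration only.
vocabulary = ["Prompt Engineering", "Language Models", "TensorFlow", "Deep Learning", "AI Agents"]

if __name__ == "__main__":
    print(encode_skills(profile, vocabulary))
```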

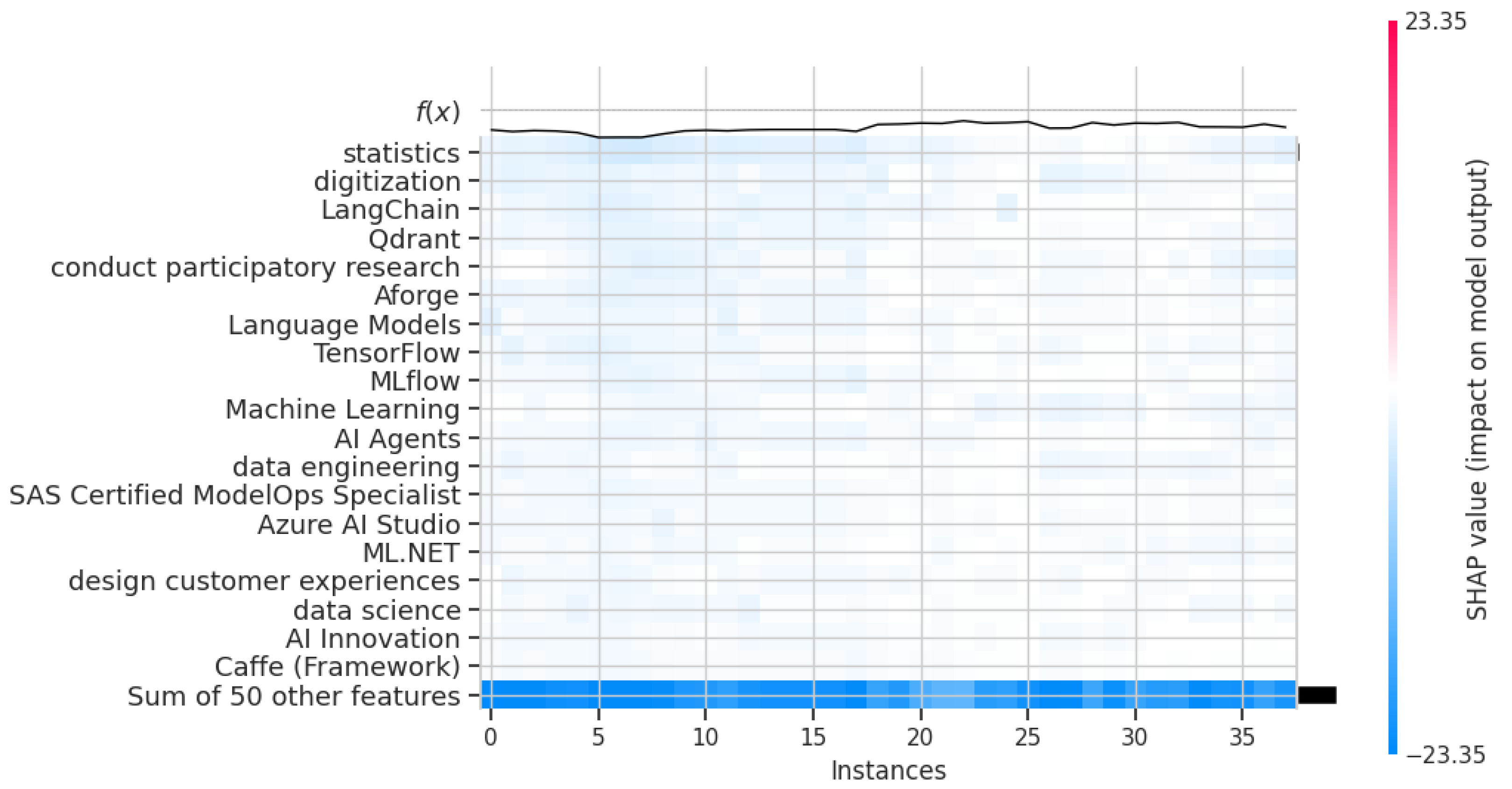

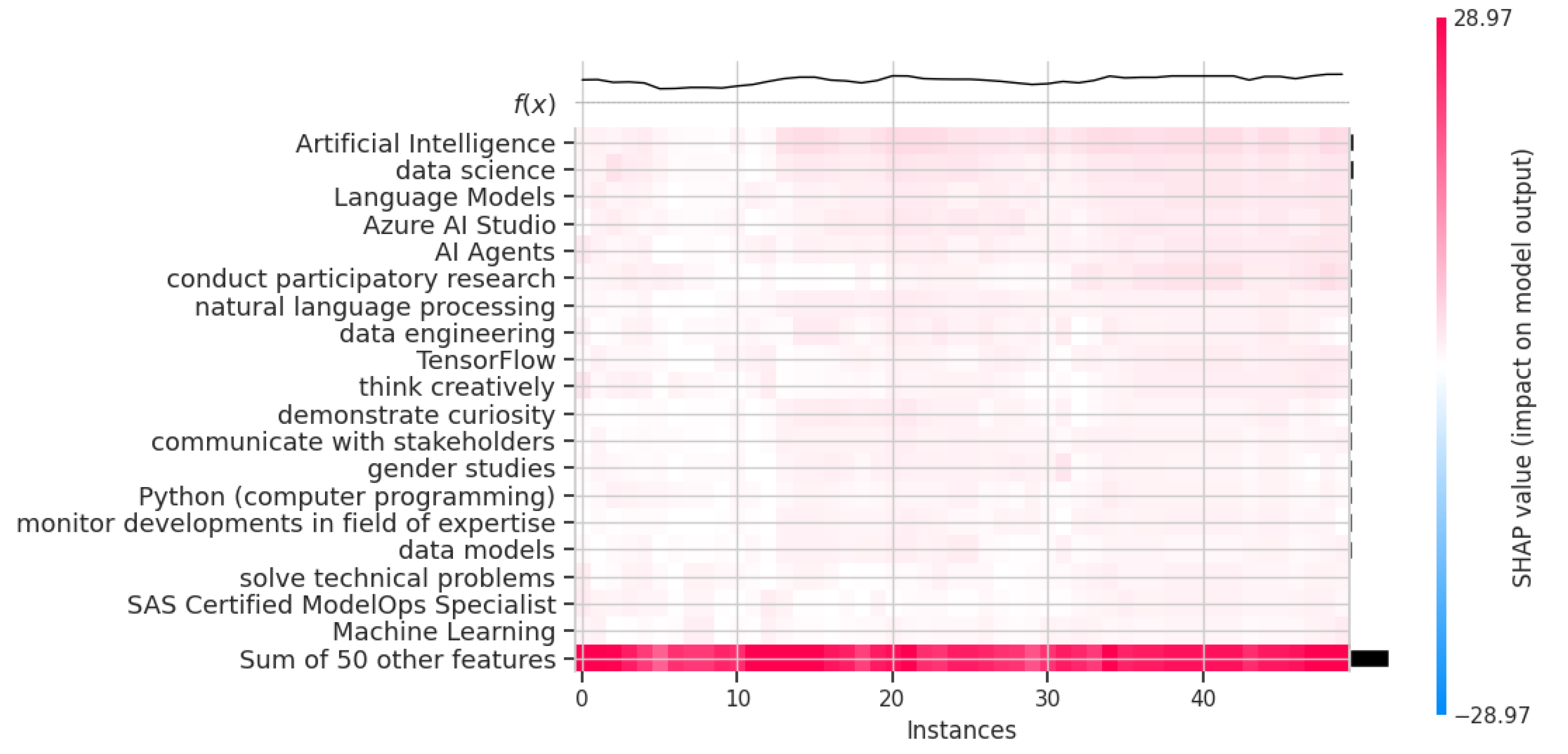

4.4. KANs Classification on Traditional Job Roles

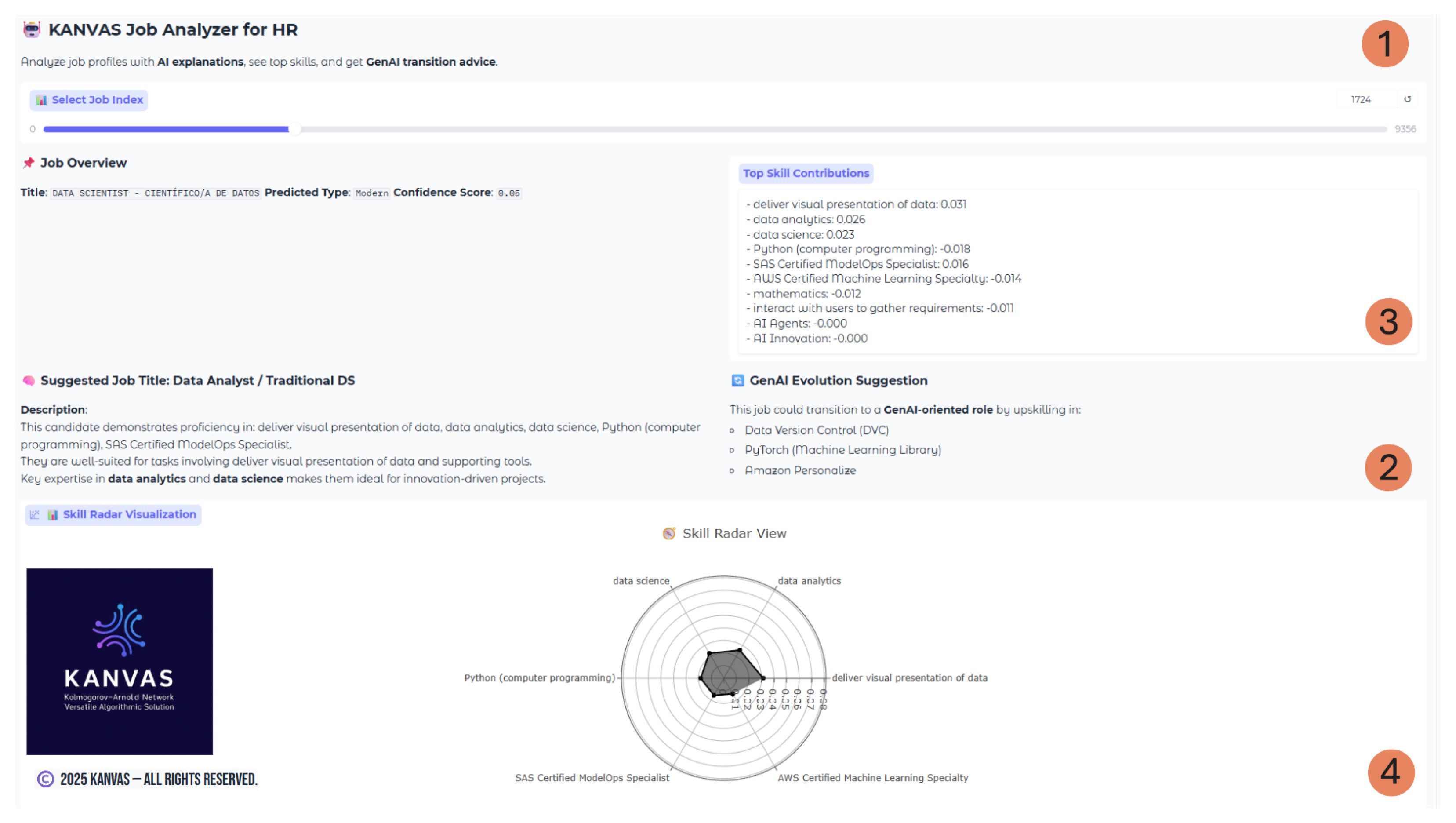

5. Practical Implications

Step 1 allows HR managers to select a job index from the dataset using an intuitive slider. Each index corresponds to a unique job posting, and selecting one initiates the analysis pipeline. Step 2 displays the system’s prediction of whether the selected role is modern or traditional, supported by a confidence score and a generated job title and description based on the most influential skills. Step 3 highlights the top contributing skills using a ranked textual explanation derived from gradient-based SHAP analysis, helping HR professionals understand the model’s reasoning. Step 4 presents a radar plot that visualizes the importance of the top six skills, offering a compact and comparative overview that supports decision-making around training and reskilling initiatives.
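The ranking and radar-plot steps described above can be sketched as follows. The attribution values are made-up placeholders standing in for per-skill SHAP scores, and the function names are hypothetical; the sketch only shows how a top-six ranking and the corresponding radar coordinates could be derived, not how the dashboard is actually implemented.

```python
import math


def top_skills(attributions, k=6):
    """Return the k skills with the largest absolute attribution, strongest first."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return ranked[:k]


def radar_points(ranked):
    """Place each skill on an evenly spaced spoke.

    Returns (skill, angle in radians, radius), with the radius scaled so that
    the most influential skill sits at 1.0.
    """
    peak = max(abs(value) for _, value in ranked) or 1.0
    step = 2 * math.pi / len(ranked)
    return [(name, i * step, abs(value) / peak) for i, (name, value) in enumerate(ranked)]


# Placeholder attribution scores (assumed, not taken from the study).
example = {
    "Prompt Engineering": 0.42,
    "LangChain": 0.31,
    "Language Models": 0.27,
    "Regression": -0.35,
    "Deep Learning": 0.12,
    "TensorFlow": -0.08,
    "Python": 0.03,
}

if __name__ == "__main__":
    for name, angle, radius in radar_points(top_skills(example)):
        print(f"{name:20s} angle={angle:.2f} radius={radius:.2f}")
```

Ranking by absolute value keeps skills that push strongly toward either class; the sign can then be shown separately, for example through color, if the direction of influence matters to the reader.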

6. Discussion

6.1. Generalizability and Limitations

6.1.1. Internal Validity

6.1.2. External Validity

6.1.3. Privacy and Policy

7. Conclusions & Future Work

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| GenAI | Generative Artificial Intelligence |

| LLM | Large Language Model |

| XAI | Explainable Artificial Intelligence |

| SHAP | SHapley Additive exPlanations |

| ESCO | European Skills, Competences, Qualifications, and Occupations |

| ISCO | International Standard Classification of Occupations |

| KAN | Kolmogorov–Arnold Network |

| ML | Machine Learning |

| CV | Curriculum Vitae |

| RAG | Retrieval-Augmented Generation |

| CSV | Comma-Separated Values |

Appendix A

| GenAI Skills | |

|---|---|

| Artificial Intelligence Development | DALL-E Image Generator |

| Artificial Intelligence Risk | CrewAI |

| Artificial Intelligence Systems | Azure OpenAI |

| Artificial General Intelligence | AutoGen |

| Artificial Neural Networks | Image Captioning |

| AI/ML Inference | Image Inpainting |

| Applications of Artificial Intelligence | Image Super-Resolution |

| AI Agents | Natural Language Generation (NLG) |

| AI Alignment | Large Language Modeling |

| AI Innovation | Language Models |

| AI Research | Natural Language Understanding (NLU) |

| AI Safety | Natural Language User Interface |

| Attention Mechanisms | LangChain |

| Adversarial Machine Learning | Langgraph |

| Agentic AI | Microsoft Copilot |

| Agentic Systems | Microsoft LUIS |

| Autoencoders | Prompt Engineering |

| Association Rule Learning | Retrieval Augmented Generation |

| Activity Recognition | Sentence Transformers |

| 3D Reconstruction | Operationalizing AI |

| Backpropagation | Supervised Learning |

| Bagging Techniques | Unsupervised Learning |

| Bayesian Belief Networks | Transfer Learning |

| Boltzmann Machine | Zero Shot Learning |

| Classification and Regression Tree (CART) | Soft Computing |

| Deeplearning4j | Sorting Algorithm |

| Concept Drift Detection | Training Datasets |

| Deep Learning | Test Datasets |

| Deep Learning Methods | Test Retrieval Systems |

| Deep Reinforcement Learning (DRL) | Dlib (C++ Library) |

| Computational Intelligence | Topological Data Analysis (TDA) |

| Convolutional Neural Networks | Swarm Intelligence |

| Cognitive Computing | Spiking Neural Networks |

| Collaborative Filtering | Variational Autoencoders |

| Ensemble Methods | Sequence-to-Sequence Models (Seq2Seq) |

| Expectation Maximization Algorithm | Transformer (Machine Learning Model) |

| Expert Systems | Stable Diffusion |

| Federated Learning | Small Language Model |

| Few Shot Learning | Apache Mahout |

| Gradient Boosting | Apache MXNet |

| Gradient Boosting Machines (GBM) | Apache SINGA |

| Hidden Markov Model | Aforge |

| Incremental Learning | Amazon Forecast |

| Inference Engine | Azure OpenAI |

| Hyperparameter Optimization | ChatGPT |

| Fuzzy Set | DALL-E Image Generator |

| Genetic Algorithm | CatBoost (Machine Learning Library) |

| Genetic Programming | Chainer (Deep Learning Framework) |


| Field | Value |

|---|---|

| Job Title | Developer of Generative AI solutions F/M |

| Company | XXXX |

| Location | Clermont-Ferrand, France |

| Domain | lesjeudis |

| Date | 12 May 2025 |

| Seniority Level | Mid-Senior level |

| Salary Range | €55,000–€70,000 annually |

| Contract Type | Permanent |

| Description | As a GenAI developer in XXXX you must possible and technical expertise to provide Solution Architecture and Design: Define the architecture of enterprise-grade AI applications, ensuring scalability, security, and maintainability. The employee will guide the design of RAG-based pipelines, AI agents and API-driven AI solutions. Optimize prompt engineering and LLM interactions to improve response accuracy & relevance. Ensure LLM security best practices, data privacy… (cont’d) |

| Model | Size (GB) | Accuracy (%) | Error (%) |

|---|---|---|---|

| deepseek-r1:1.5b | 1.1 | 79 | 21 |

| deepseek-r1:8b | 5.2 | 80 | 20 |

| mistral:instruct | 4.1 | 78 | 22 |

| deepseek-r1:latest | 5.2 | 82 | 18 |

| llama3:8b (Ours) | 4.7 | 83 | 17 |

| Class | Precision | Recall | F1-Score | Support |

|---|---|---|---|---|

| Modern | 0.67 | 0.88 | 0.76 | 777 |

| Traditional | 0.90 | 0.68 | 0.77 | 1095 |

| Accuracy | 0.79 (on 1872 samples) | | | |

| Macro Avg | 0.78 | 0.78 | 0.78 | 1872 |

| Weighted Avg | 0.80 | 0.78 | 0.78 | 1872 |
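The per-class F1 scores in the classification report above follow from the reported precision and recall through the harmonic mean, F1 = 2PR / (P + R). The short check below recomputes them from the rounded table values; the variable names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the classification report.
report = {"Modern": (0.67, 0.88), "Traditional": (0.90, 0.68)}

f1 = {label: round(f1_score(p, r), 2) for label, (p, r) in report.items()}

if __name__ == "__main__":
    print(f1)
```

The asymmetry is visible in the inputs: the Modern class trades precision for recall, while the Traditional class does the opposite, yet both end up with nearly the same F1.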

| Model | Accuracy | F1 Score | Precision | Recall | ROC AUC |

|---|---|---|---|---|---|

| Logistic Regression | 0.8040 | 0.7607 | 0.7716 | 0.8393 | 0.8292 |

| Decision Tree | 0.7838 | 0.8258 | 0.8162 | 0.8356 | 0.8379 |

| Random Forest | 0.7921 | 0.8515 | 0.8319 | 0.8721 | 0.8861 |

| Naive Bayes | 0.7329 | 0.7784 | 0.7562 | 0.8018 | 0.8107 |

| K-Nearest Neighbors | 0.7826 | 0.8236 | 0.7838 | 0.8676 | 0.8441 |

| SVM | 0.7943 | 0.8318 | 0.7973 | 0.8694 | 0.8428 |

| Gradient Boosting | 0.7730 | 0.8140 | 0.7815 | 0.8493 | 0.8462 |

| KANs (Ours) | 0.7960 | 0.8532 | 0.8384 | 0.8695 | 0.8885 |
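To read the comparison table above metric by metric, the following sketch picks the top-scoring model in each column. The numbers are copied from the table; the helper name and the tuple layout are illustrative choices.

```python
METRICS = ("accuracy", "f1", "precision", "recall", "roc_auc")

# Rows copied from the comparison table, in the column order of METRICS.
RESULTS = {
    "Logistic Regression": (0.8040, 0.7607, 0.7716, 0.8393, 0.8292),
    "Decision Tree": (0.7838, 0.8258, 0.8162, 0.8356, 0.8379),
    "Random Forest": (0.7921, 0.8515, 0.8319, 0.8721, 0.8861),
    "Naive Bayes": (0.7329, 0.7784, 0.7562, 0.8018, 0.8107),
    "K-Nearest Neighbors": (0.7826, 0.8236, 0.7838, 0.8676, 0.8441),
    "SVM": (0.7943, 0.8318, 0.7973, 0.8694, 0.8428),
    "Gradient Boosting": (0.7730, 0.8140, 0.7815, 0.8493, 0.8462),
    "KANs (Ours)": (0.7960, 0.8532, 0.8384, 0.8695, 0.8885),
}


def best_per_metric(results):
    """Return, for each metric, the name of the model with the highest score."""
    best = {}
    for col, metric in enumerate(METRICS):
        best[metric] = max(results, key=lambda name: results[name][col])
    return best


if __name__ == "__main__":
    for metric, model in best_per_metric(RESULTS).items():
        print(f"{metric:10s} {model}")
```

On these figures the KAN model leads on F1, precision, and ROC AUC, while Logistic Regression has the highest accuracy and Random Forest the highest recall, so the ranking depends on which metric is prioritized.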

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kavargyris, D.C.; Georgiou, K.; Papaioannou, E.; Moysiadis, T.; Mittas, N.; Angelis, L. Future Skills in the GenAI Era: A Labor Market Classification System Using Kolmogorov–Arnold Networks and Explainable AI. Algorithms 2025, 18, 554. https://doi.org/10.3390/a18090554
