Comprehensive Forensic Tool for Crime Scene and Traffic Accident 3D Reconstruction

Abstract

1. Introduction

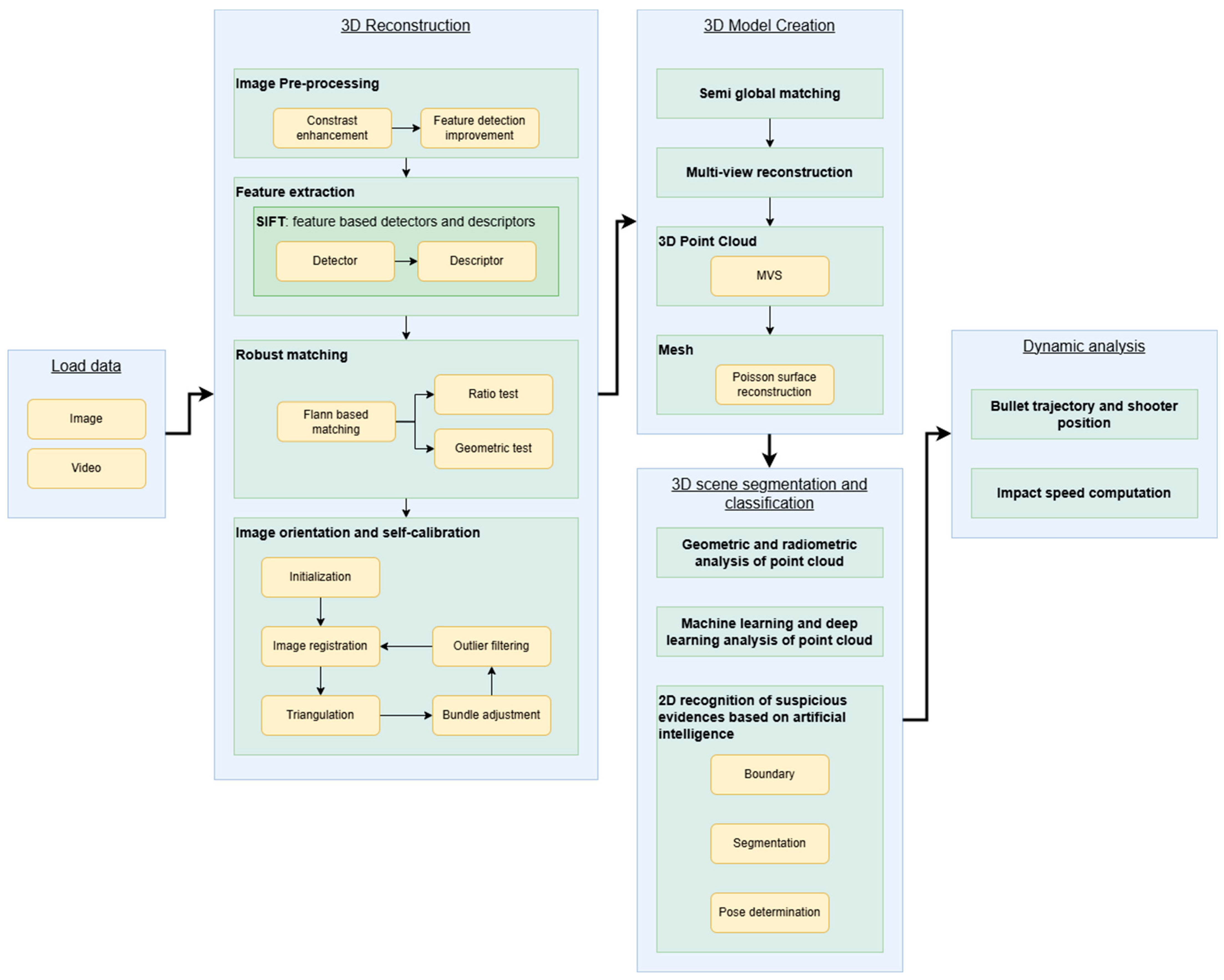

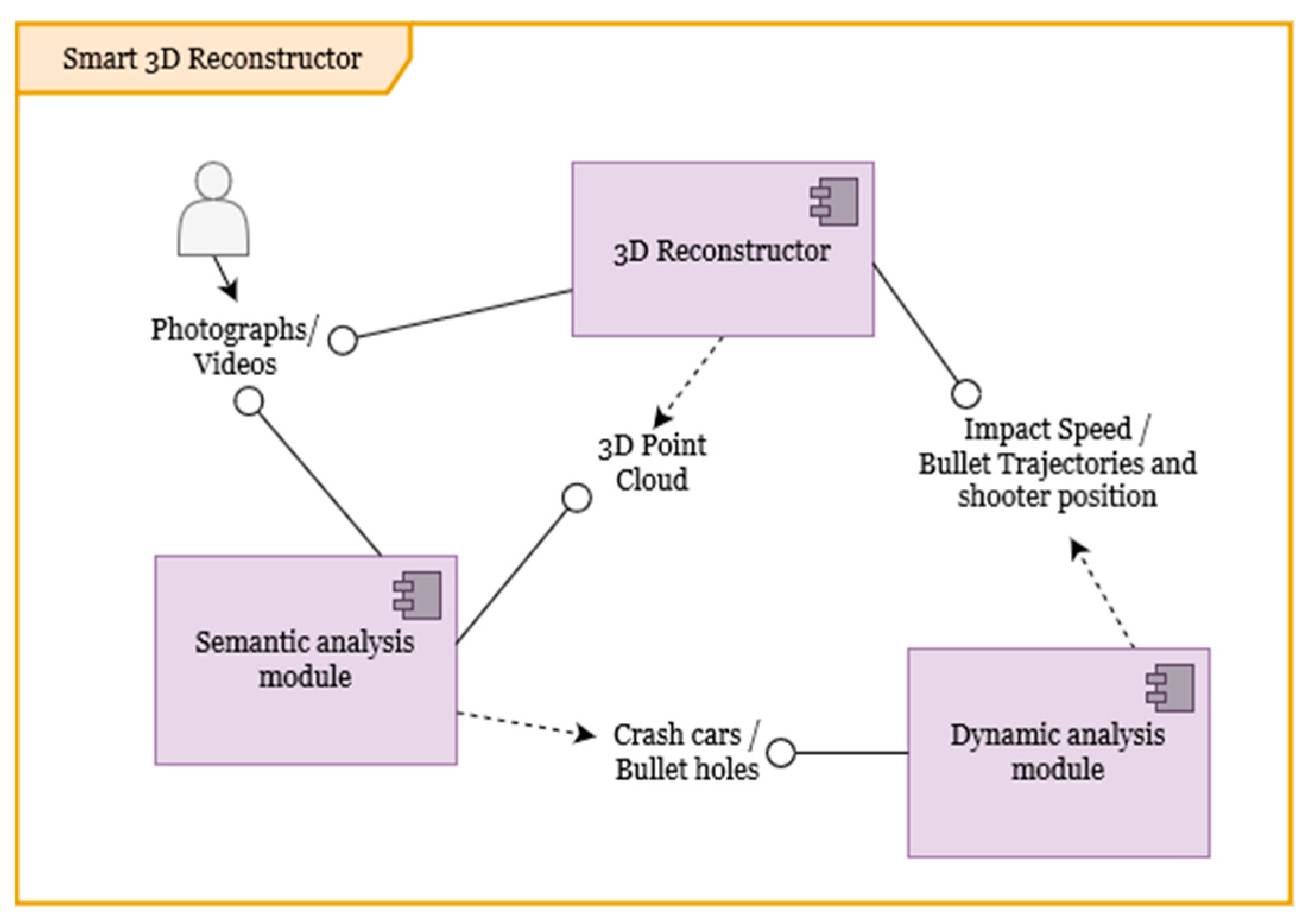

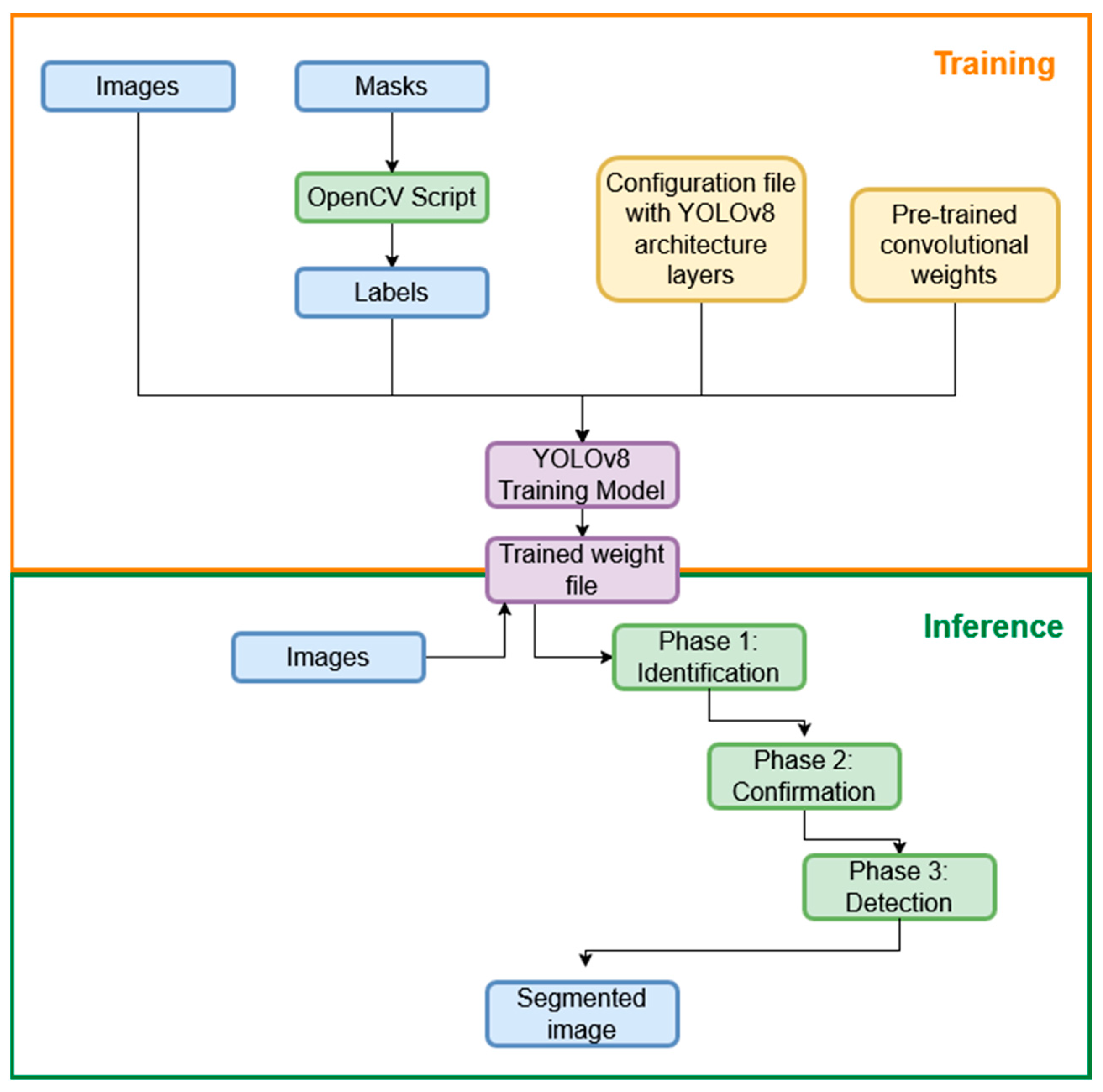

- Photo or video-based 3D reconstruction: from photographic evidence, the tool reconstructs detailed 3D environments that support metric measurements, using image processing and computer vision techniques.

- Semantic 2D/3D analysis module: the tool includes a module for the semantic classification of the scene in 2D (based on photographs) and 3D (based on the 3D point clouds generated).

- Forensic analysis modules: the tool includes tailored modules focused on ballistics and car impact speeds. It offers an analysis and visual representation of bullet trajectories and collision dynamics.

- Intuitive user interface: a user-friendly interface allows easy navigation and utilization for forensic investigators and analysts.

- Applicability: the tool was designed for security forces and law enforcement organizations, forensic experts, and other professionals involved in forensic investigations. It serves as a resource for comprehensive scene analysis.

1.1. State of the Art

1.1.1. Forensic 3D Digitisation and Analysis Methods

- Pure geometric data (linear, angular, surface, or volume measurements).

- Pure radiometric data (detection of fluids such as water, gasoline, and blood, among others).

- A combination of both for the detection of relevant objects in the scene. This process is known as semantic information inference of the scene or the semantic classification of point clouds.

1.1.2. Software and Tools for 3D Reconstruction

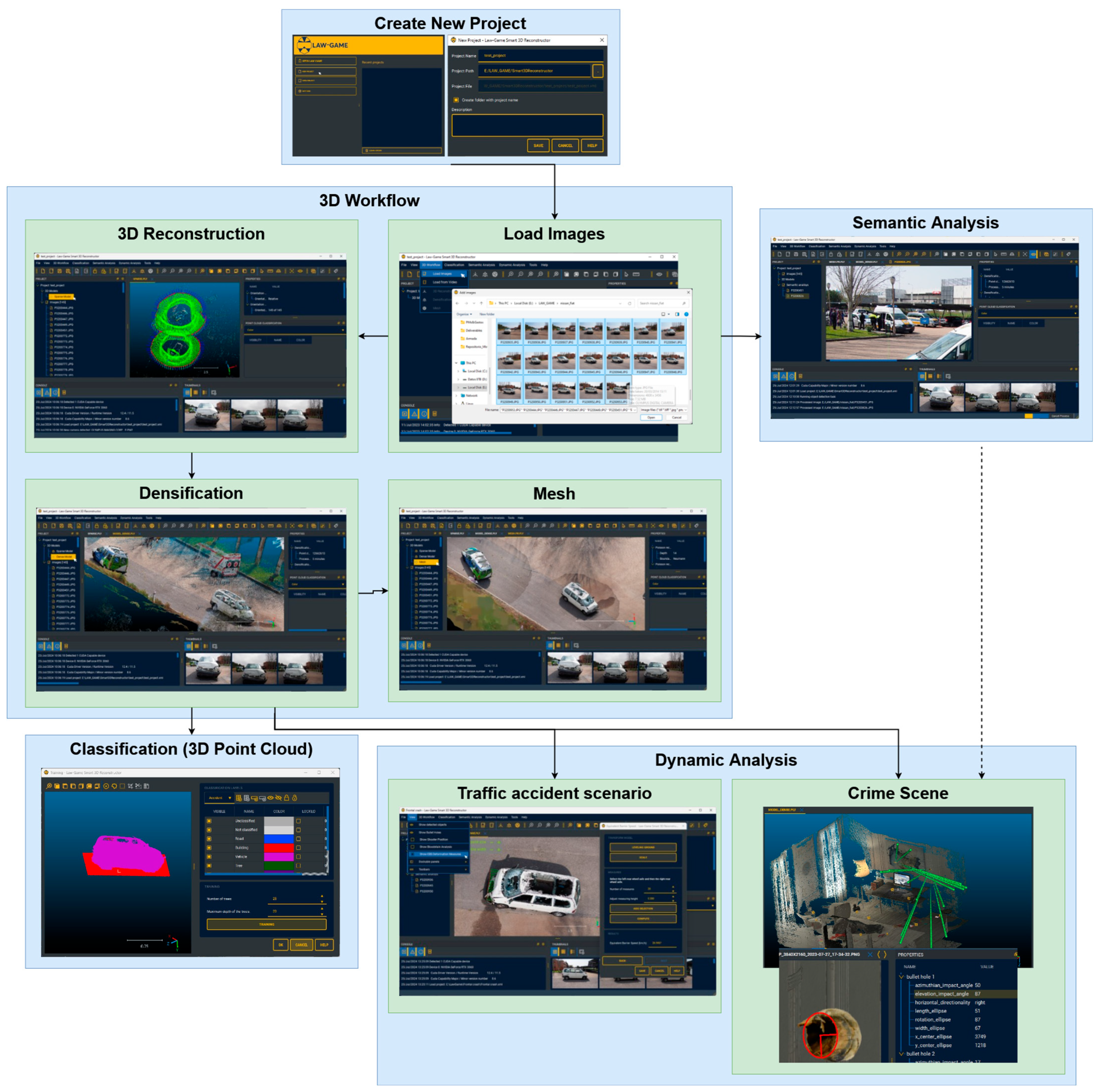

2. Materials and Methods

2.1. System Architecture Overview

2.2. Generation of Smart 3D Scenarios

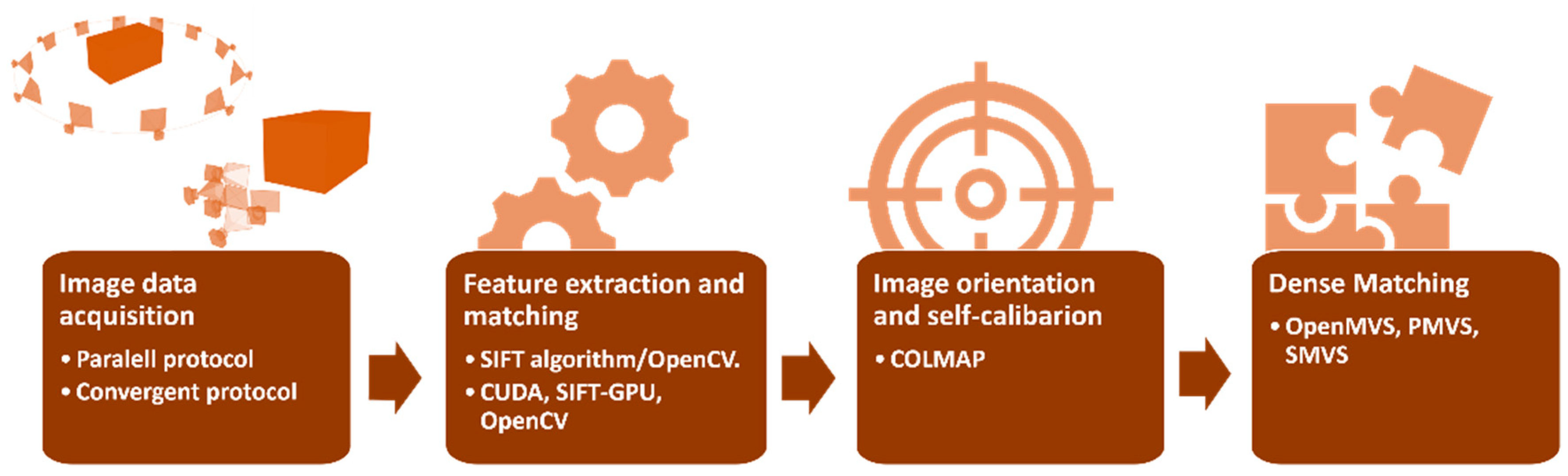

2.2.1. From 2D Photographs/Videos to 3D Environments

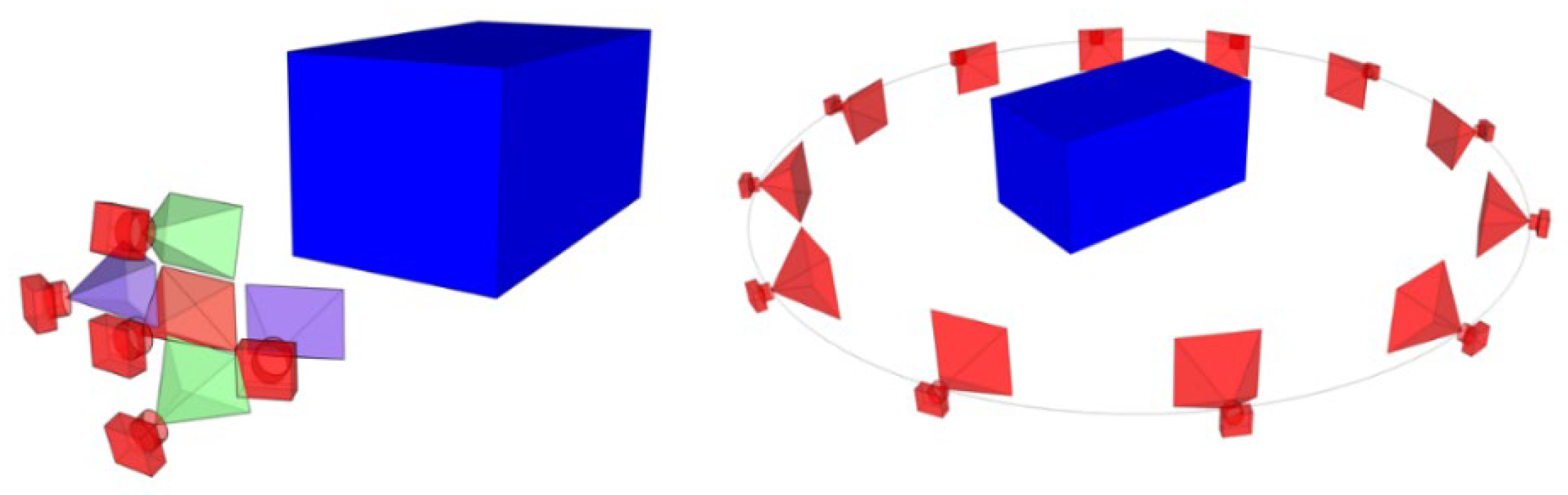

- Parallel Protocol: This protocol is optimal for detailed reconstructions of specific areas, such as vehicle deformations or crime scene features (e.g., bullet holes, footprints, bloodstains). The user should capture five images arranged in a cross pattern (Figure 5, left), with at least 80% overlap between images. The central image (red) should focus on the area of interest, while the four surrounding images (left, right, top, and bottom) should be taken with the camera angled towards the central area. Each image must cover the entire region of interest for a comprehensive reconstruction.

- Convergent Protocol: This protocol is well-suited for reconstructing 360° 3D point clouds, such as those of accident scenes or entire crime scenes. The user should capture images while moving in a ring around the object, maintaining a constant distance and ensuring more than 80% overlap between images (Figure 5, right). If the object cannot be captured in a single ring, a similar approach using a half-ring can be employed.

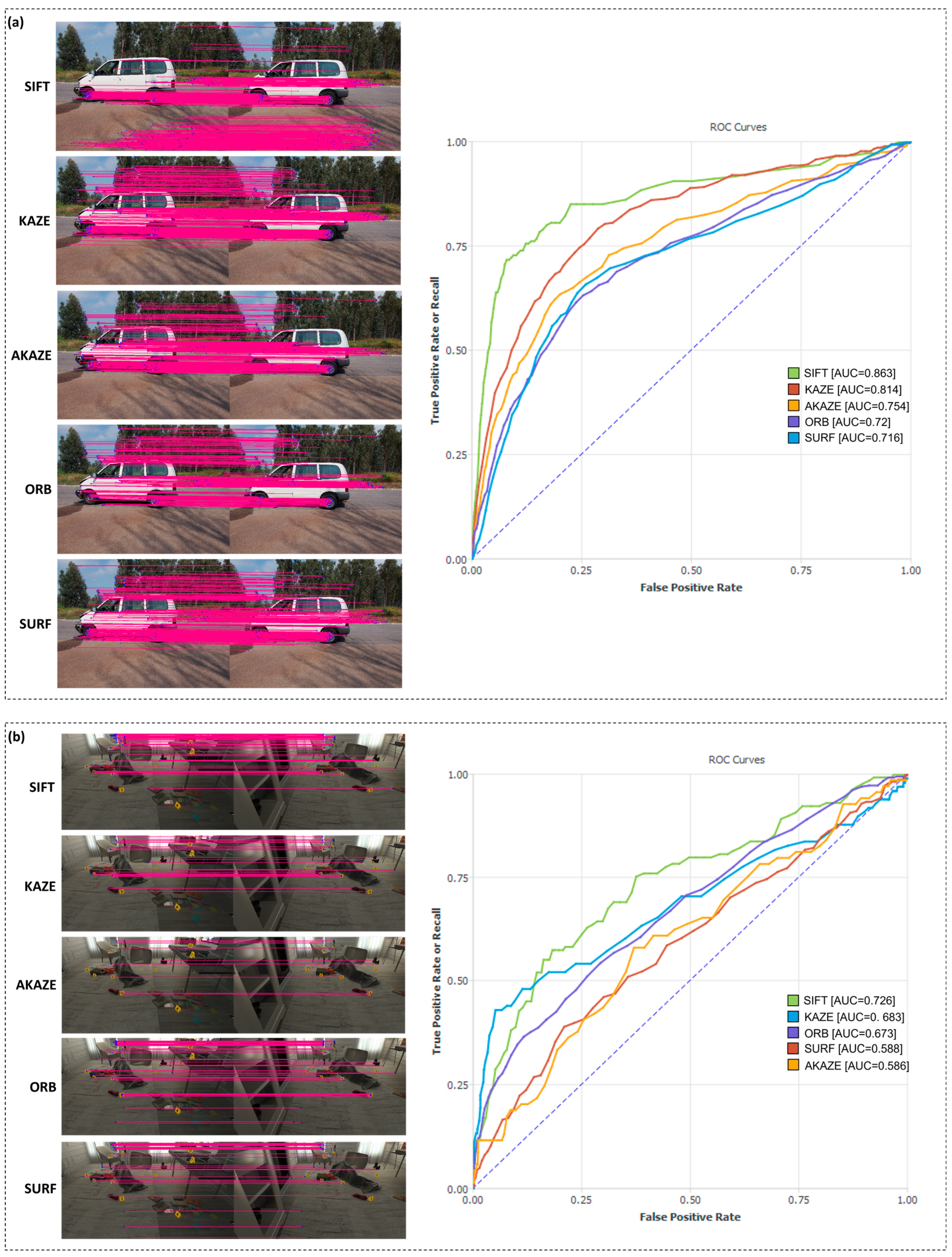

- SIFT [96]: A scale- and rotation-invariant algorithm that detects and describes local features with high robustness to illumination and viewpoint changes.

- KAZE [99]: Operates in a nonlinear scale space, offering strong performance in textured regions and preserving edge information.

- AKAZE [100]: An accelerated version of KAZE optimized for computational efficiency while maintaining good feature quality.

- ORB [101]: Combines FAST keypoint detection with BRIEF descriptors, designed for speed and low resource consumption, though less precise in complex scenes.

- SURF [102]: A faster alternative to SIFT, using integral images and approximated filters, but with reduced accuracy in forensic contexts.
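As a rough illustration of how descriptors from the detectors listed above are compared during matching, the sketch below performs brute-force nearest-neighbour matching with two distance functions: Euclidean distance, which suits floating-point descriptors such as those of SIFT, SURF, and KAZE, and Hamming distance, which suits binary descriptors such as those of ORB. The function names and the toy descriptors are hypothetical and chosen only for illustration; this is not the tool's implementation.

```python
import math


def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def euclidean(a, b) -> float:
    """L2 distance between two equal-length floating-point descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_nearest(query, train, distance):
    """For each query descriptor, return (query_idx, train_idx, distance)
    of its nearest train descriptor (brute-force search)."""
    matches = []
    for qi, q in enumerate(query):
        ti, d = min(
            ((i, distance(q, t)) for i, t in enumerate(train)),
            key=lambda pair: pair[1],
        )
        matches.append((qi, ti, d))
    return matches


# Toy one-byte binary descriptors (hypothetical values).
binary_query = [bytes([0b10110000]), bytes([0b00001111])]
binary_train = [bytes([0b00001110]), bytes([0b10110001])]
binary_matches = match_nearest(binary_query, binary_train, hamming)

# Toy two-dimensional float descriptors (hypothetical values).
float_query = [[0.0, 1.0], [1.0, 0.0]]
float_train = [[0.9, 0.1], [0.1, 0.8]]
float_matches = match_nearest(float_query, float_train, euclidean)
```

In practice, brute-force search is usually replaced by an approximate nearest-neighbour index, and the resulting matches are filtered for outliers before orientation.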

- The reconstruction is initialized with the best available image pair. The pair is selected according to three criteria: (i) it guarantees a good ray intersection; (ii) it contains a high number of matching points; and (iii) its matching points are well distributed across the image format.

- Once the image pair has been chosen, it is triangulated by applying Direct Linear Transformation (DLT) [105], using the camera pose and the matching points obtained from the fundamental matrix. Then, taking the initial image pair as a reference, new images are registered and triangulated, again by applying DLT. DLT estimates the camera pose and triangulates the matching points without requiring initial approximations or camera calibration parameters. The result of this step is a set of 2D–3D correspondences and the registered images.

- Although at this point all images have been registered and triangulated using DLT, this method has limited accuracy and reliability, which can easily lead to non-convergence. To address this problem, a bundle adjustment based on the collinearity condition [56] was carried out. It computes registration and triangulation jointly and globally, includes camera self-calibration, and improves the accuracy of the image orientation through an iterative nonlinear process that minimizes the reprojection error.
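The triangulation step and the reprojection error mentioned above can be sketched as follows. This is a minimal illustration of linear (DLT-style) triangulation for a single point, assuming the 3x4 projection matrices are already known; the pose estimation part of DLT and the bundle adjustment itself are not shown. The camera matrices, the test point, and all function names are hypothetical.

```python
import math


def project(P, X):
    """Project a 3D point X with a 3x4 camera matrix P; returns pixel (u, v)."""
    Xh = (X[0], X[1], X[2], 1.0)
    x, y, w = (sum(p * c for p, c in zip(row, Xh)) for row in P)
    return (x / w, y / w)


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate_dlt(cameras, pixels):
    """Linear triangulation. Each view contributes two linear equations,
    u * (P3 . X) - P1 . X = 0 and v * (P3 . X) - P2 . X = 0,
    solved here in the least-squares sense via the normal equations."""
    rows, rhs = [], []
    for P, (u, v) in zip(cameras, pixels):
        for coord, r in ((u, P[0]), (v, P[1])):
            rows.append([coord * P[2][k] - r[k] for k in range(3)])
            rhs.append(r[3] - coord * P[2][3])
    AtA = [[sum(row[i] * row[j] for row in rows) for j in range(3)]
           for i in range(3)]
    Atb = [sum(row[i] * b for row, b in zip(rows, rhs)) for i in range(3)]
    d = det3(AtA)
    # Cramer's rule on the 3x3 normal equations.
    solution = []
    for k in range(3):
        M = [[Atb[i] if j == k else AtA[i][j] for j in range(3)]
             for i in range(3)]
        solution.append(det3(M) / d)
    return tuple(solution)


def reprojection_rmse(cameras, pixels, X):
    """Root-mean-square distance between observed and reprojected pixels."""
    squared = []
    for P, (u, v) in zip(cameras, pixels):
        pu, pv = project(P, X)
        squared.append((pu - u) ** 2 + (pv - v) ** 2)
    return math.sqrt(sum(squared) / len(squared))


# Two hypothetical cameras: same intrinsics, second one shifted along x.
P_a = [[1000.0, 0.0, 500.0, 0.0], [0.0, 1000.0, 500.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
P_b = [[1000.0, 0.0, 500.0, -1000.0], [0.0, 1000.0, 500.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
X_true = (0.5, 0.2, 4.0)
observations = [project(P_a, X_true), project(P_b, X_true)]
X_est = triangulate_dlt([P_a, P_b], observations)
rmse = reprojection_rmse([P_a, P_b], observations, X_est)
```

A bundle adjustment would go further and minimize this reprojection error over all camera parameters and all 3D points at once, rather than for one point with fixed cameras.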

- Pinhole: ideal projection model without distortion.

- Radial: radial distortion model.

- Radial–tangential: complete distortion model (radial and tangential).

- Fisheye.
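To make the difference between the first three camera models concrete, the sketch below applies a Brown–Conrady style distortion to normalized image coordinates. It assumes the widely used parametrisation with radial coefficients `k1`, `k2` and tangential coefficients `p1`, `p2`; the exact parametrisation used by the tool may differ, and the fisheye model is not covered. All names and numerical values are illustrative.

```python
def distort(x, y, k1=0.0, k2=0.0, p1=0.0, p2=0.0):
    """Apply radial and tangential distortion to normalized coordinates.

    All coefficients zero  -> pinhole model (no distortion).
    Only k1, k2 non-zero   -> radial model.
    k1, k2, p1, p2 set     -> radial-tangential model.
    """
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d


def to_pixels(x, y, focal, cx, cy):
    """Map normalized coordinates to pixels with focal length and principal point."""
    return focal * x + cx, focal * y + cy


pinhole = distort(0.1, 0.2)                            # unchanged
radial_only = distort(0.1, 0.2, k1=0.1)                # pushed outwards
radial_tangential = distort(0.1, 0.2, k1=0.1, p1=0.01)
```

During self-calibration, coefficients of this kind are estimated together with the focal length and principal point.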

- Detailed 3D Point Cloud: High-resolution point cloud representing specific damaged areas, such as vehicle damage in traffic accidents or bullet holes in crime scenes.

- General 3D Point Cloud: Represents the entire crime scene or traffic accident scenario, encompassing metric properties for dimensional analysis.

2.2.2. Semantic Analysis: Detection of Evidence and Relevant Objects

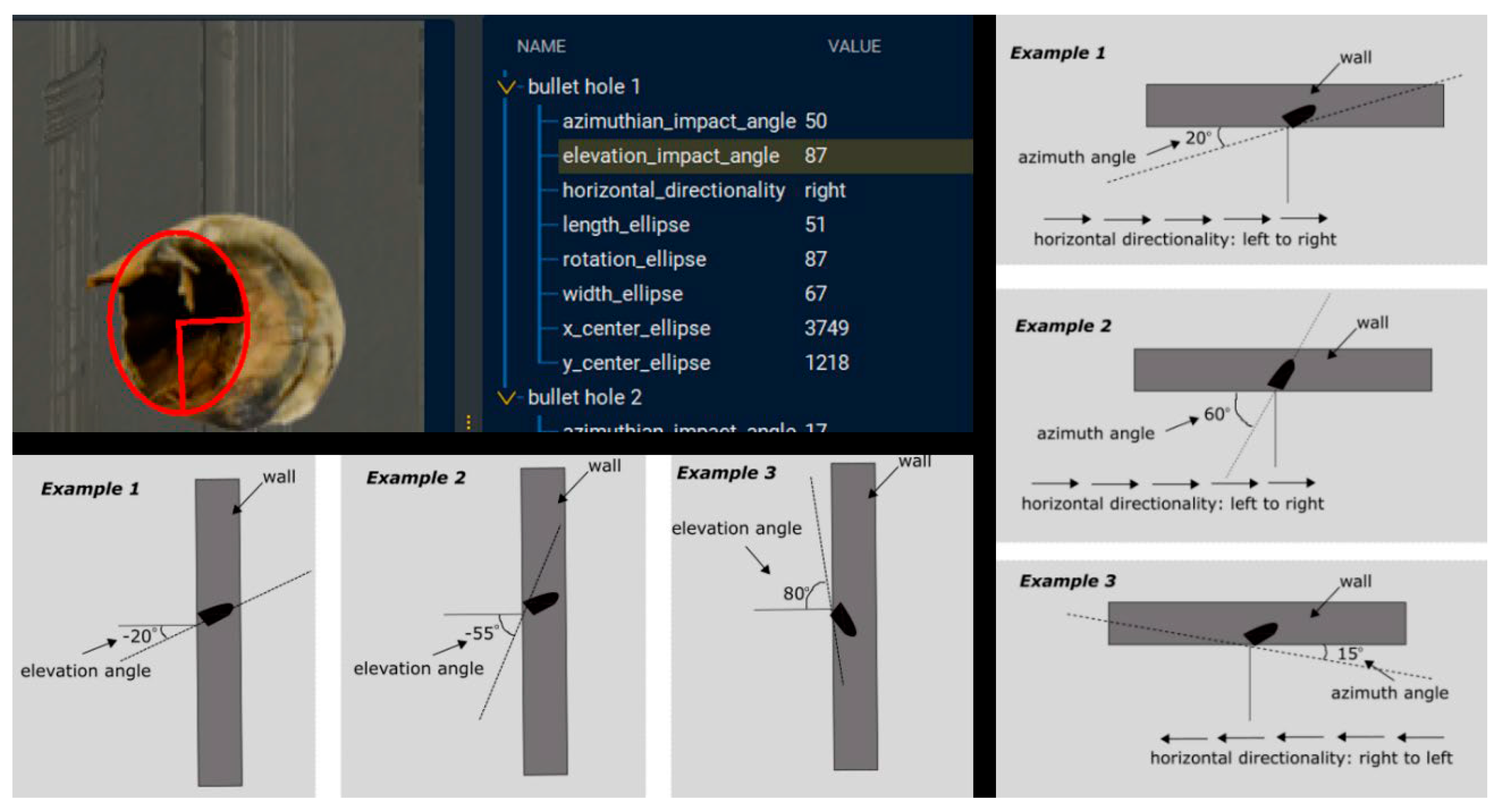

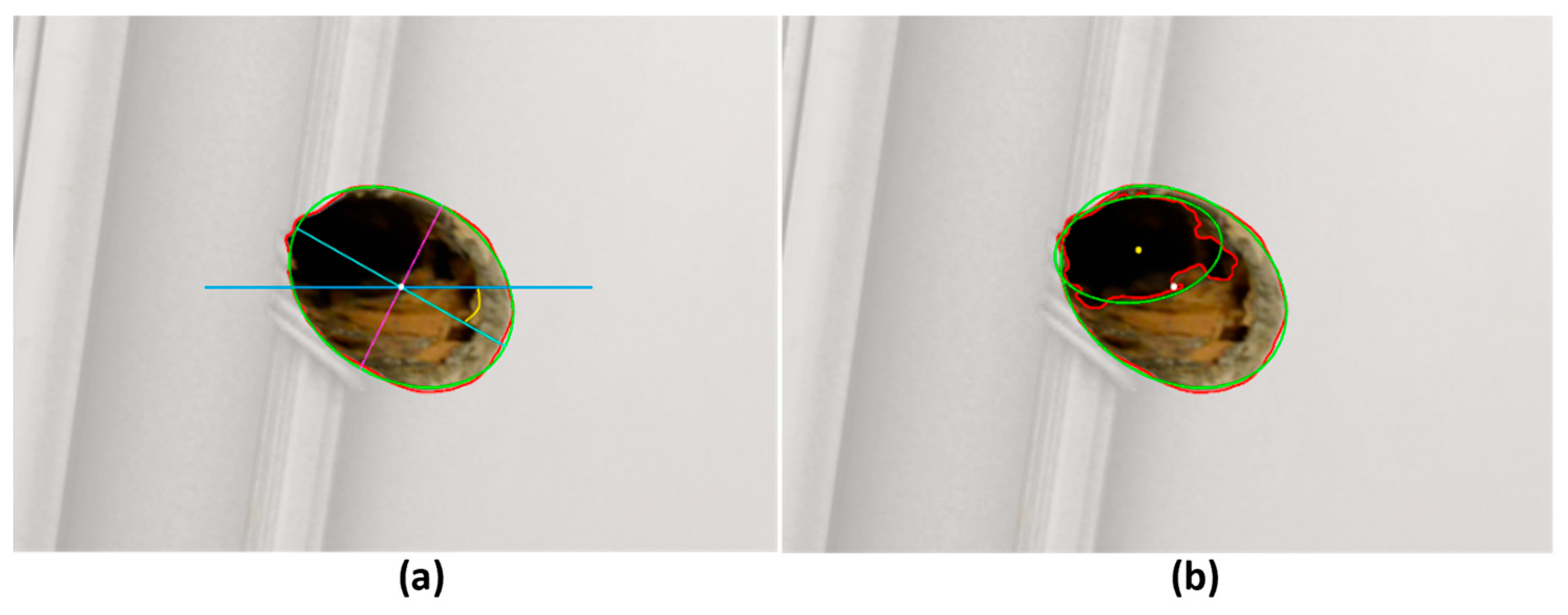

- N: neighbourhood

- p: neighbourhood centroid

- a: azimuth angle

- l: ellipse length (major axis)

- w: ellipse width (minor axis)

- e: elevation angle

- r: ellipse rotation
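The relationship between an elliptical impact mark and the incidence direction can be illustrated with the classical relation sin(e) = minor axis / major axis. The sketch below assumes this relation and an assumed local frame in which the z axis is the surface normal and the azimuth is measured in the surface plane; whether the tool uses exactly these conventions is not stated here, and the function names are hypothetical.

```python
import math


def elevation_from_ellipse(axis_1, axis_2):
    """Elevation angle (radians) above the surface from the two ellipse axes.

    The axes may be given in any order: the shorter one is treated as the
    minor axis and the longer one as the major axis.
    """
    minor, major = sorted((axis_1, axis_2))
    if minor <= 0.0:
        raise ValueError("ellipse axes must be positive")
    return math.asin(minor / major)


def direction_vector(azimuth, elevation):
    """Unit direction in a local frame whose z axis is the surface normal
    (assumed convention); both angles are in radians."""
    return (
        math.cos(elevation) * math.cos(azimuth),
        math.cos(elevation) * math.sin(azimuth),
        math.sin(elevation),
    )


# An ellipse twice as long as it is wide corresponds to a 30 degree elevation.
e = elevation_from_ellipse(1.0, 2.0)
d = direction_vector(math.radians(45.0), e)
```

A circular mark (equal axes) gives an elevation of 90°, that is, a perpendicular impact.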

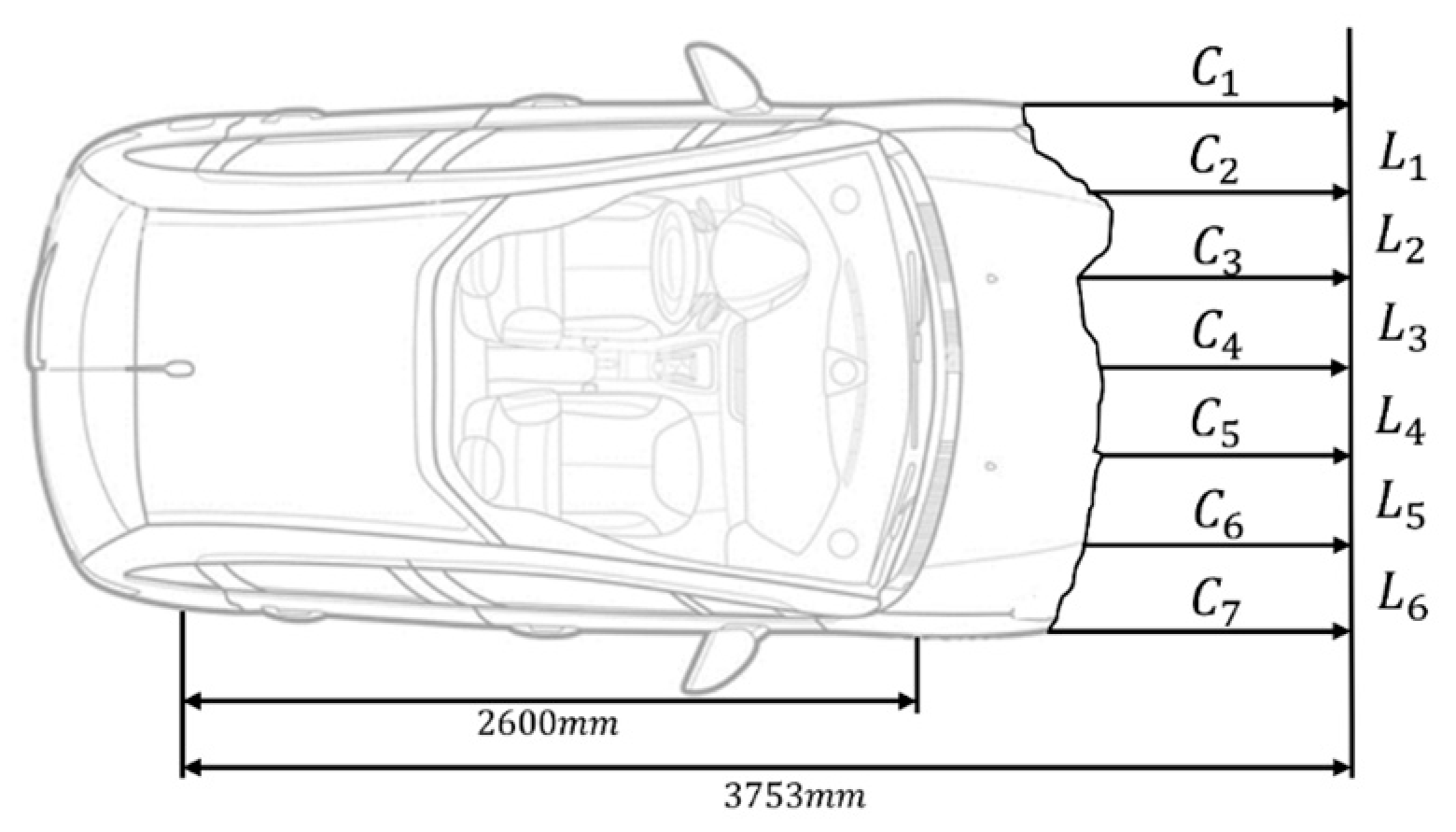

- L: Width of the deformed area.

- d0, d1: Prasad coefficients

- ci: Deformation measurements

- Lc: Distances between measurements

- : mass of the vehicle.

- : energy absorbed during the collision
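The quantities listed above can be combined into a speed estimate as sketched below. The sketch assumes the commonly cited linear Prasad relation, in which the square root of twice the absorbed energy per unit width equals d0 + d1·c, integrates it across the damaged width with the trapezoidal rule, and converts the energy to an Equivalent Barrier Speed through E = ½·m·v². The exact expressions used by the tool are not reproduced here; function names, coefficient values, and units (metres, kilograms, joules) are assumptions for illustration.

```python
import math


def absorbed_energy(crush, spacing, d0, d1):
    """Absorbed energy (J) from crush depths c_i (m) measured across the
    damaged width, with spacing[i] (m) the distance between c_i and c_(i+1).

    Assumes the energy per unit width is 0.5 * (d0 + d1 * c)**2 and
    integrates it with the trapezoidal rule.
    """
    if len(spacing) != len(crush) - 1:
        raise ValueError("need one spacing value between each pair of measurements")
    density = [0.5 * (d0 + d1 * c) ** 2 for c in crush]
    return sum(0.5 * (density[i] + density[i + 1]) * s
               for i, s in enumerate(spacing))


def equivalent_barrier_speed(energy, mass):
    """Speed (m/s) at which the kinetic energy 0.5 * m * v**2 equals the
    absorbed energy."""
    return math.sqrt(2.0 * energy / mass)


# Hypothetical values: uniform 0.2 m crush over a 1 m wide damaged area.
energy = absorbed_energy([0.2, 0.2], [1.0], d0=100.0, d1=500.0)
ebs = equivalent_barrier_speed(energy, mass=1000.0)
```

With these illustrative inputs the absorbed energy is about 20 kJ, which for a 1000 kg vehicle corresponds to roughly 6.3 m/s.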

3. Results

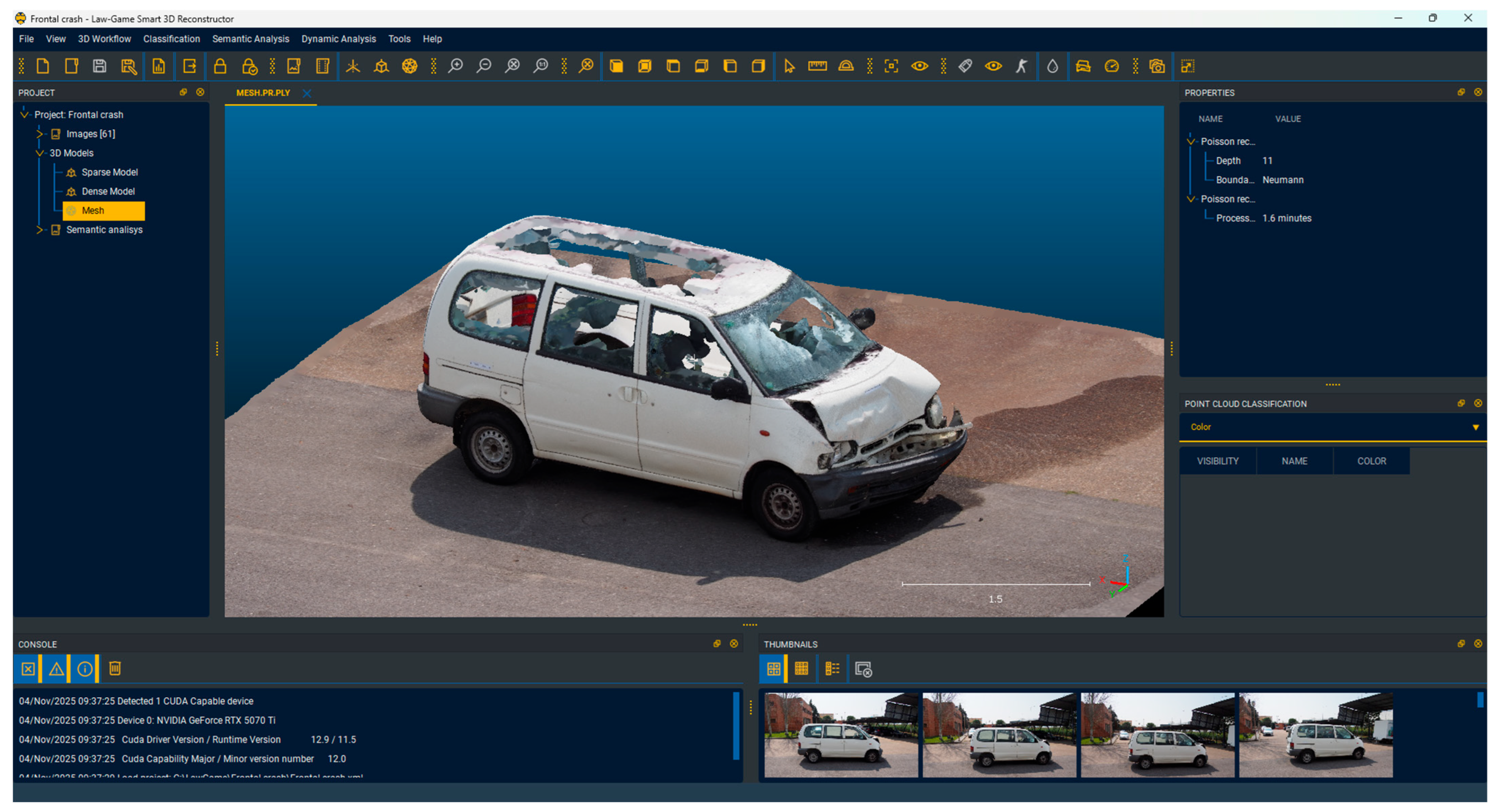

3.1. Car Accident Scenario

3.1.1. Three-Dimensional Reconstruction

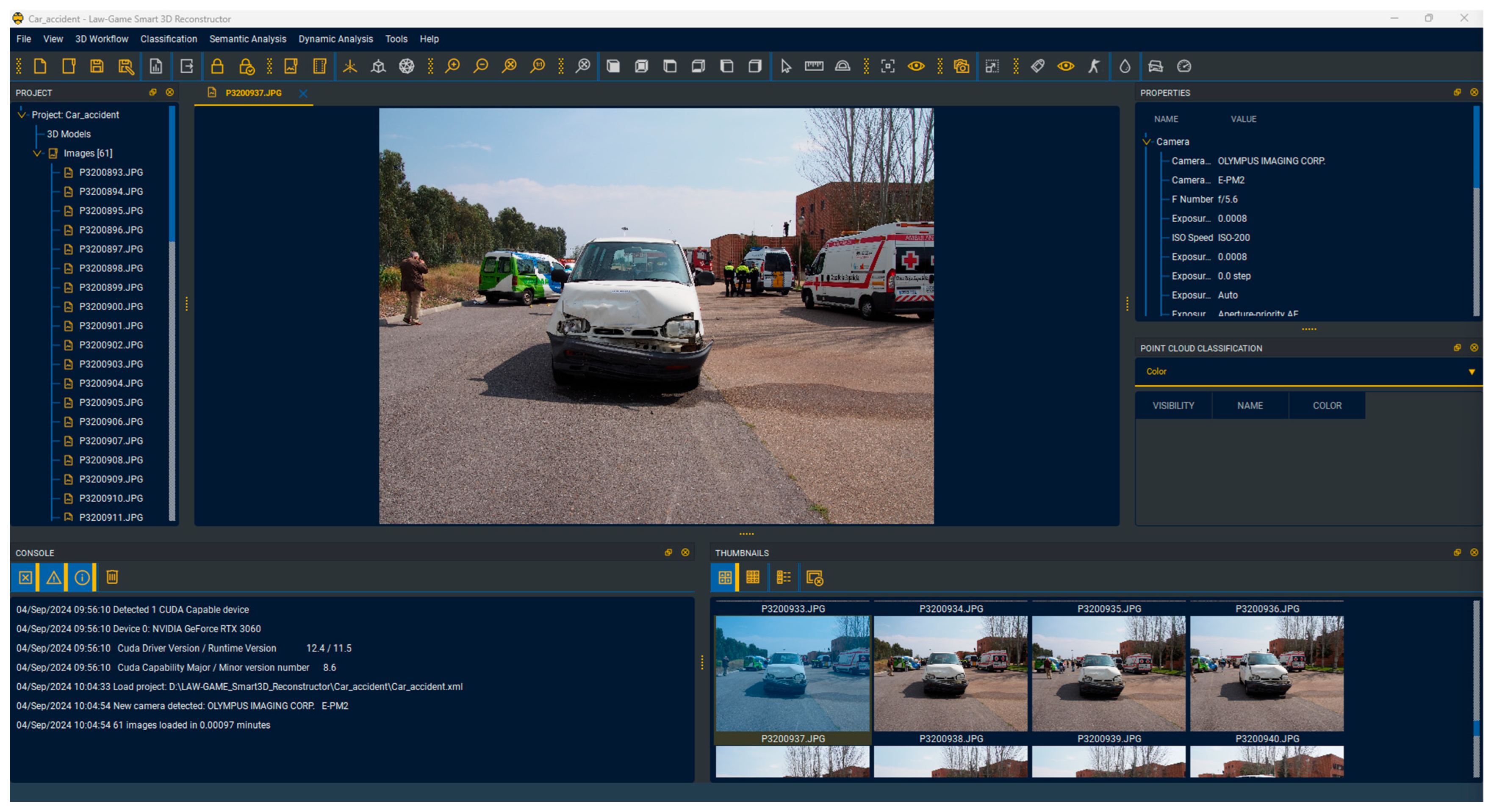

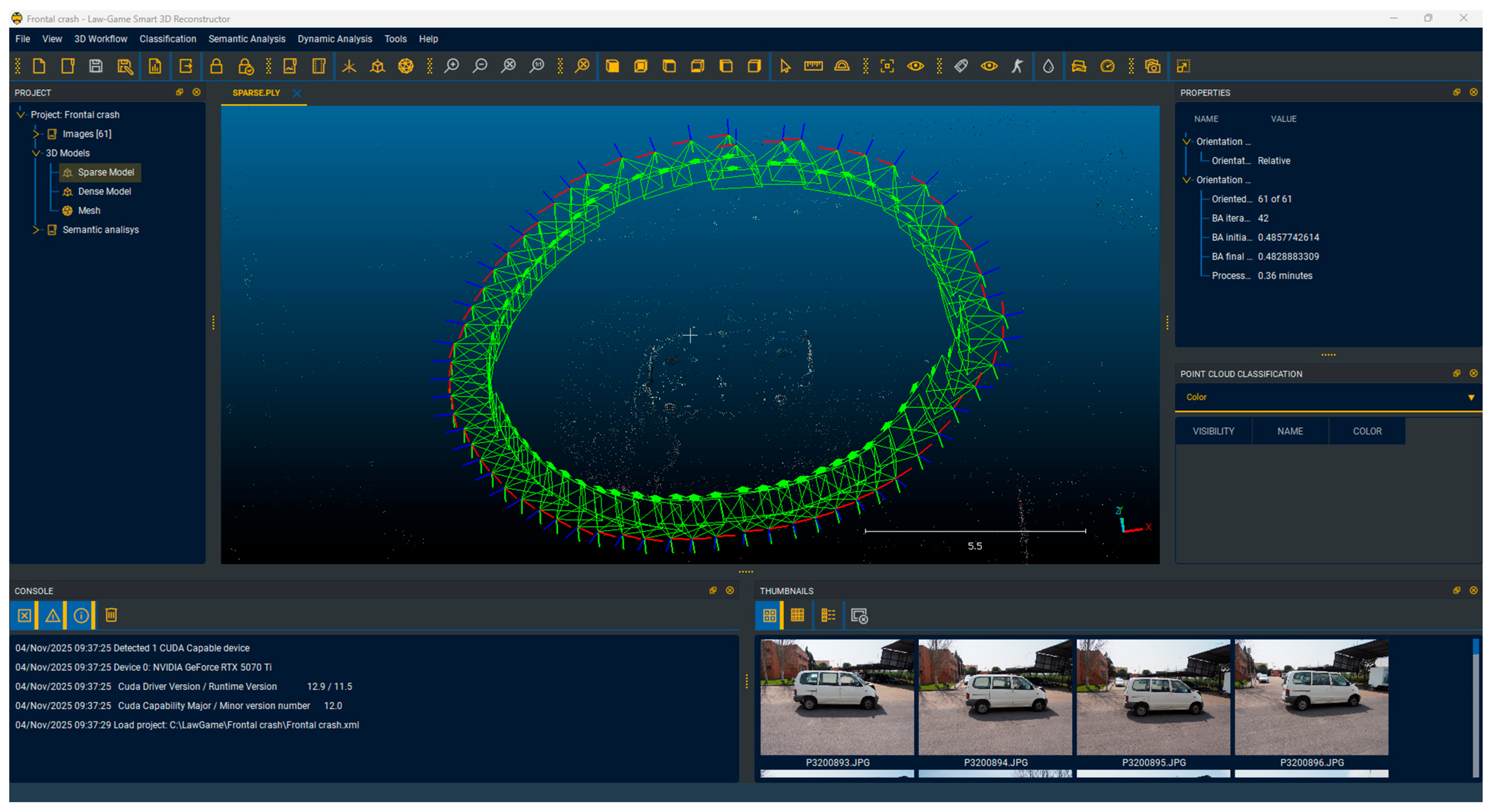

- The first step is to upload the traffic accident photos into the tool (Figure 12). For this scenario, the images were captured following the convergent protocol so that the entire scene could be reconstructed. A total of 61 photographs were taken.

3.1.2. Semantic Analysis

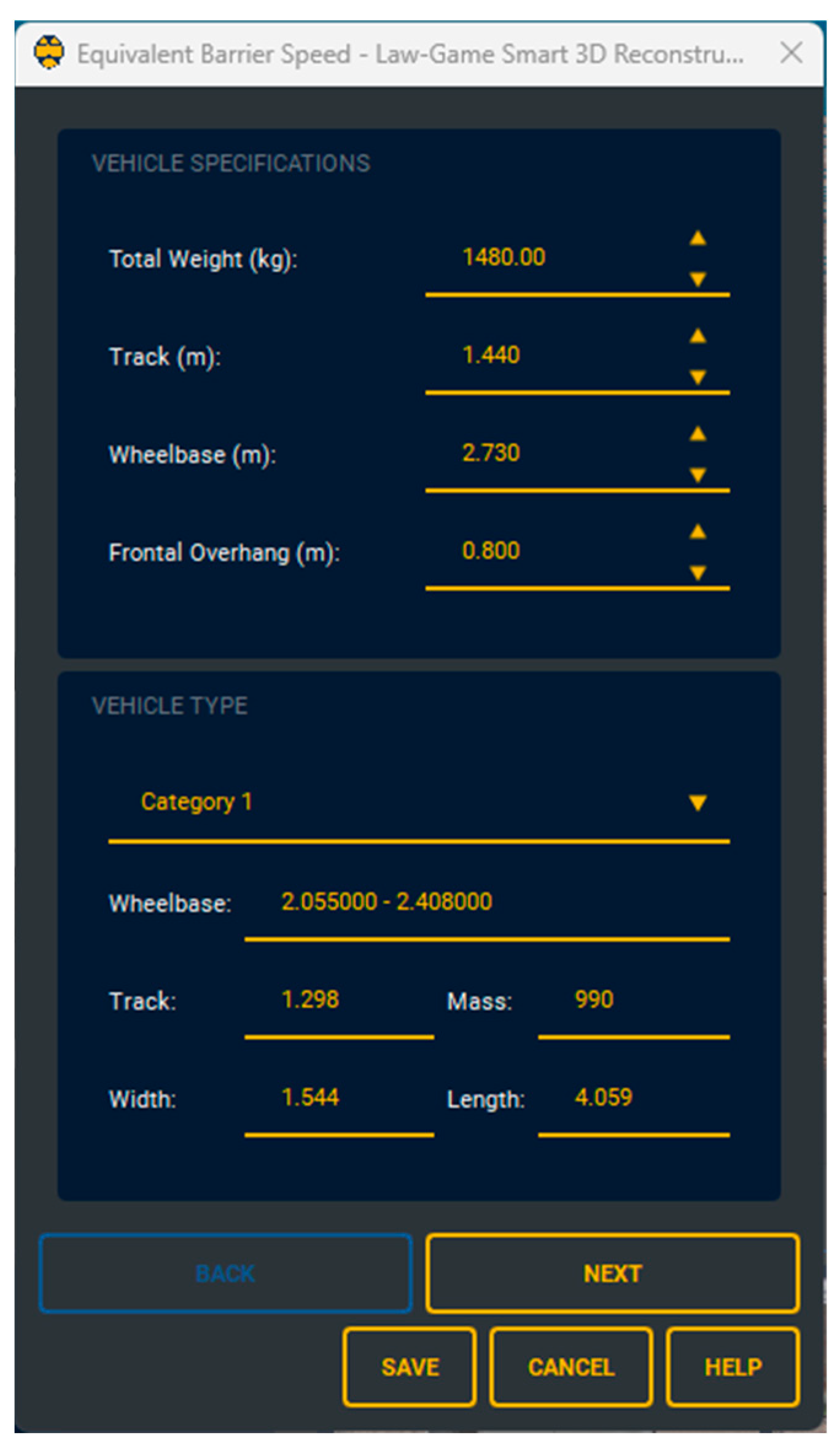

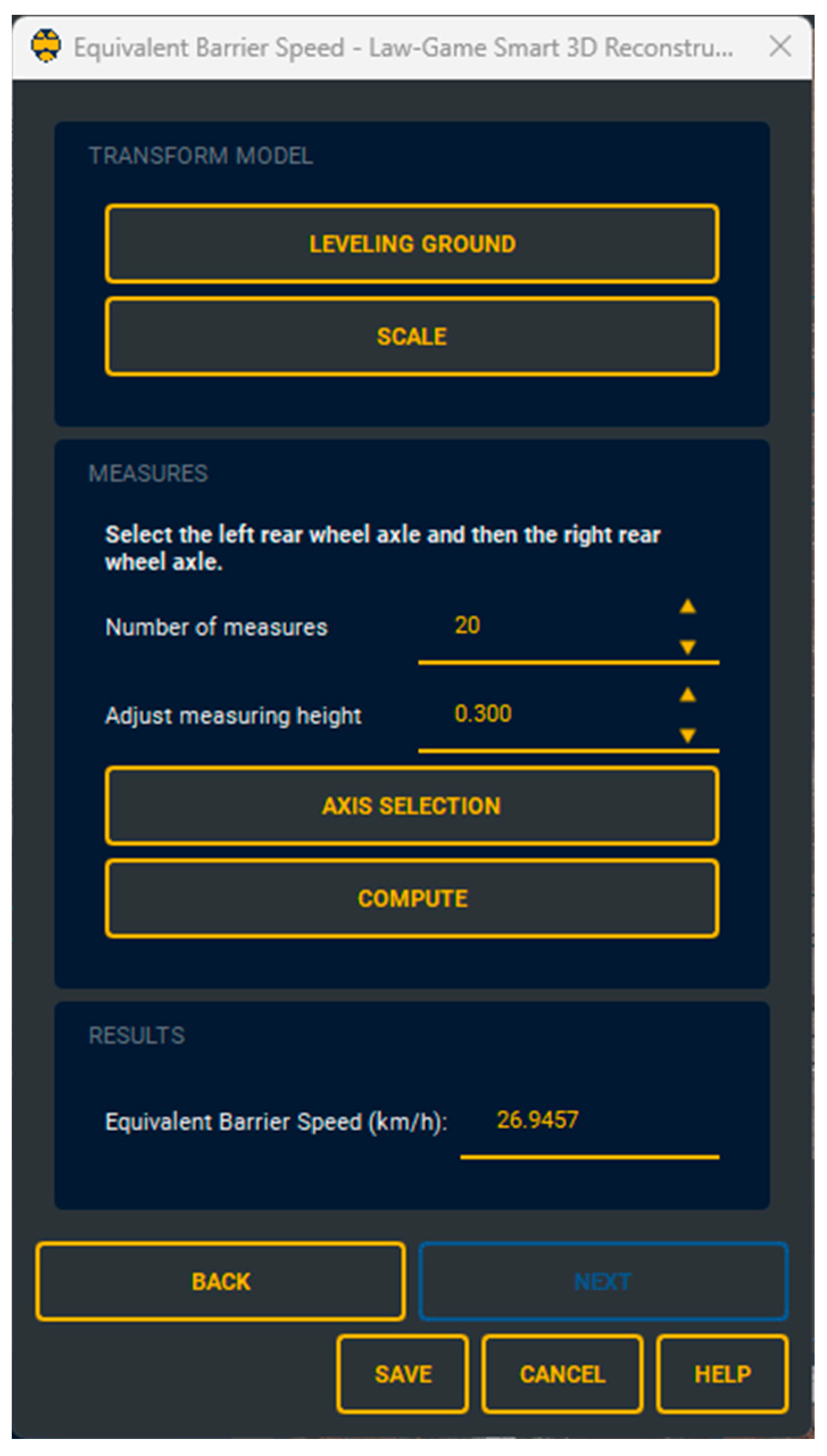

3.1.3. Dynamic Analysis

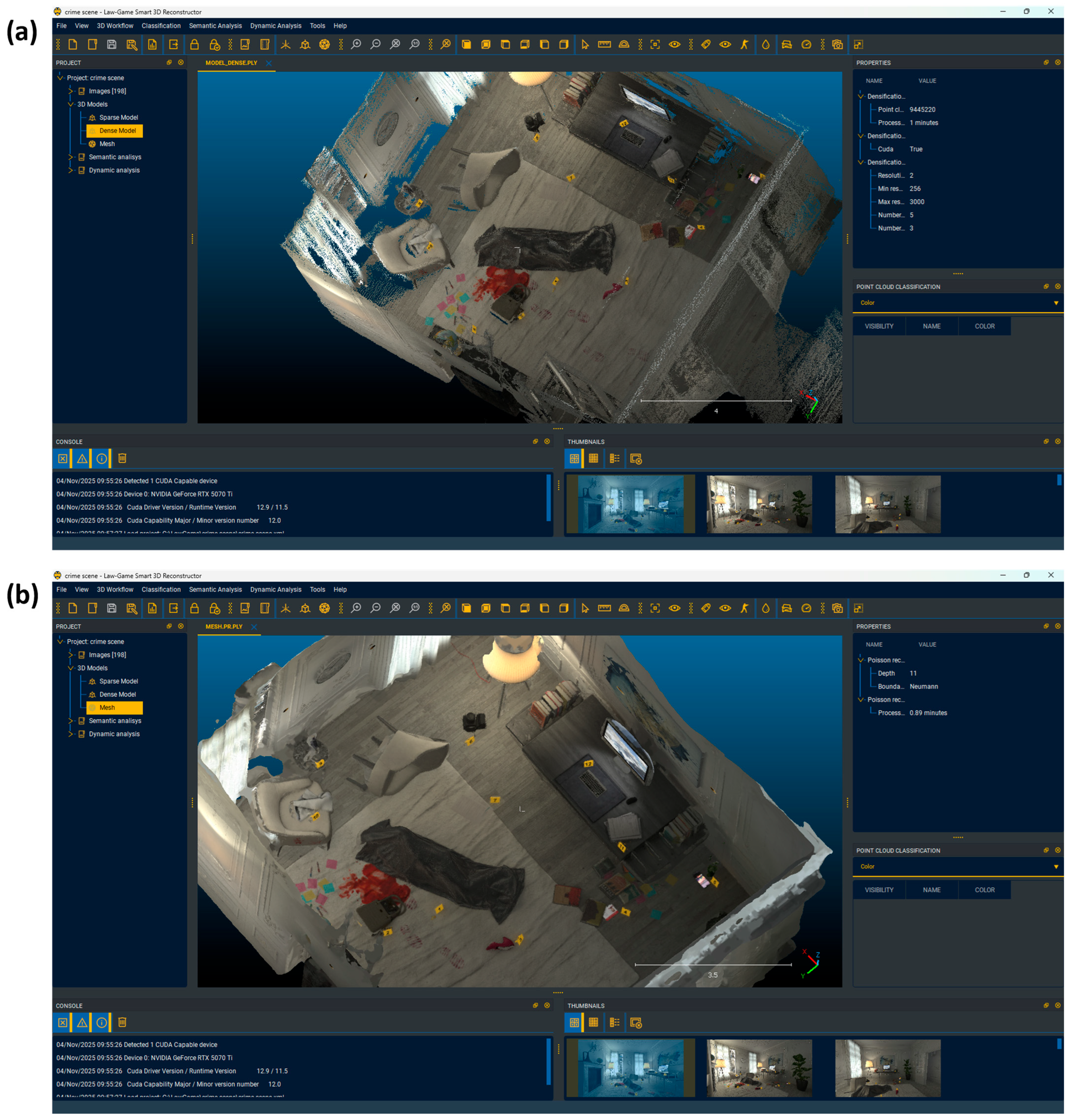

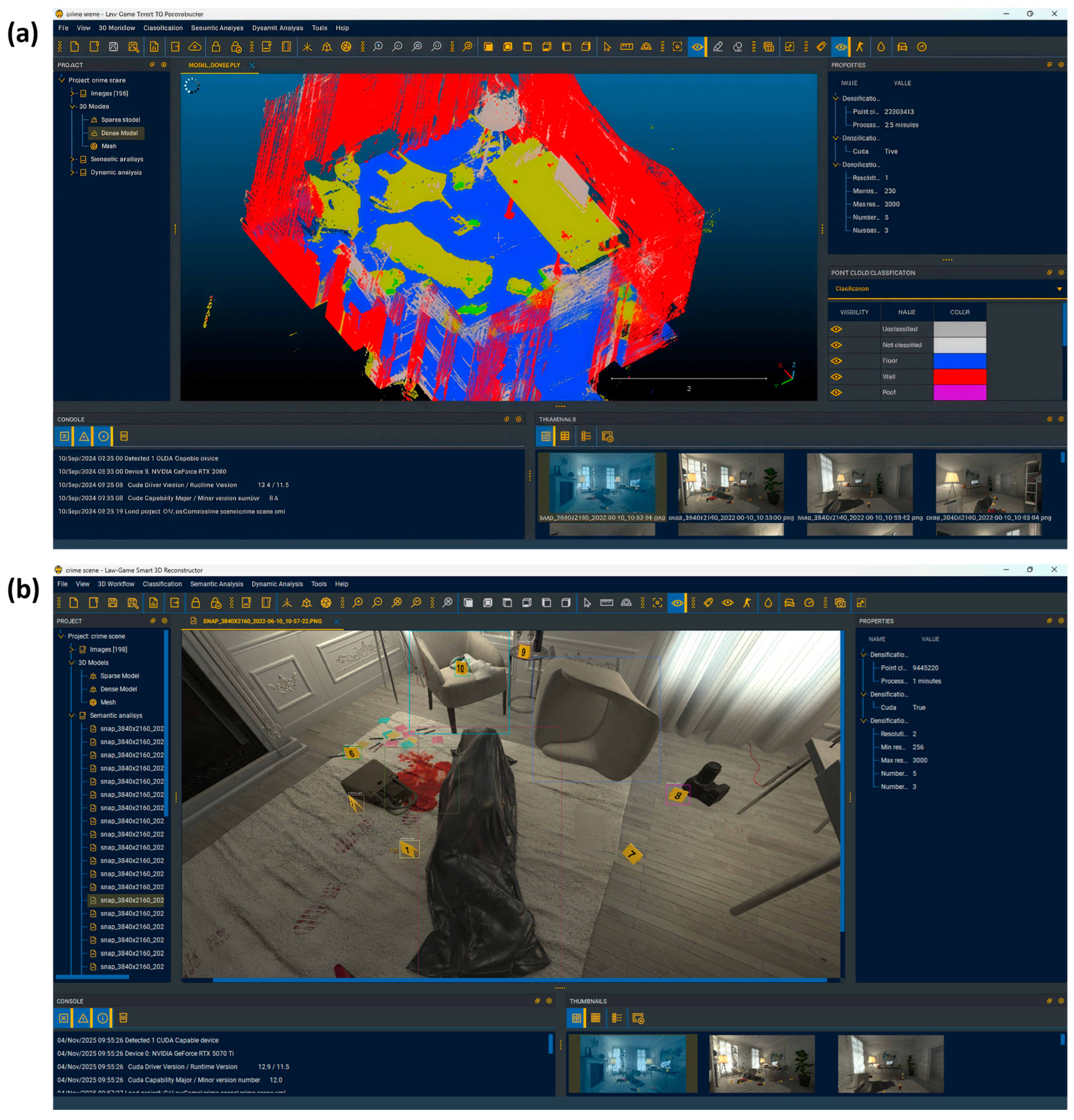

3.2. Crime Scene

3.2.1. Three-Dimensional Reconstruction

3.2.2. Semantic Analysis

3.2.3. Dynamic Analysis

4. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| EBS | Equivalent Barrier Speed |
| ABS | Anti-lock braking system |
| SfM | Structure from Motion |
| UAV | Unmanned aerial vehicle |
| DEM | Digital elevation model |
| GUI | Graphical user interface |
| ODM | Open Drone Map |
| GCP | Ground control point |
| MVE | Multi-view environment |
| ABM | Area-based matching |
| LSM | Least Squares Matching |
| SUSAN | Smallest Univalue Segment Assimilation Nucleus |
| MSER | Maximally Stable Extremal Regions |
| SURF | Speeded Up Robust Features |
| SIFT | Scale Invariant Feature Transform |
| RANSAC | Random Sample Consensus |
| DLT | Direct Linear Transformation |
| SGM | Semi-Global Matching |
| COCO | Common Objects in Context |

References

1. Stuart, H.J.; Nordby, J.J.; Bell, S. Forensic Science: An Introduction to Scientific and Investigative Techniques; CRC Press: Boca Raton, FL, USA, 2002.

2. Docchio, F.; Sansoni, G.; Tironi, M.; Bui, C. Sviluppo di procedure di misura per il rilievo ottico tridimensionale di scene del crimine. In Proceedings of the XXIII Congresso Nazionale Associazione Gruppo di Misure Elettriche ed Elettroniche, L’Aquila, Italy, 11–13 September 2006.

3. Kovacs, L.; Zimmermann, A.; Brockmann, G.; Gühring, M.; Baurecht, H.; Papadopulos, N.A.; Zeilhofer, H.F. Three-dimensional recording of the human face with a 3D laser scanner. J. Plast. Reconstr. Aesthetic Surg. 2006, 59, 1193–1202.

4. Cavagnini, G.; Sansoni, G.; Trebeschi, M. Using 3D range cameras for crime scene documentation and legal medicine. In Proceedings of the SPIE—The International Society for Optical Engineering, San Diego, CA, USA, 2–6 August 2009.

5. Sansoni, G.; Trebeschi, M.; Docchio, F. State-of-The-Art and Applications of 3D Imaging Sensors in Industry, Cultural Heritage, Medicine, and Criminal Investigation. Sensors 2009, 9, 568–601.

6. Pastra, K.; Saggion, H.; Wilks, Y. Extracting relational facts for indexing and retrieval of crime-scene photographs. Knowl.-Based Syst. 2003, 16, 313–320.

7. Gonzalez-Aguilera, D.; Gomez-Lahoz, J. Forensic terrestrial photogrammetry from a single image. J. Forensic Sci. 2009, 54, 1376–1387.

8. D’Apuzzo, N.; Harvey, M. Medical applications. In Advances in Photogrammetry, Remote Sensing and Spatial Information Sciences: 2008 ISPRS Congress Book; CRC Press: Boca Raton, FL, USA, 2008; pp. 443–456.

9. Rönnholm, P.; Honkavaara, E.; Litkey, P.; Hyyppä, H.; Hyyppä, J. Integration of Laser Scanning and Photogrammetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 355–362.

10. El-Hakim, S.; Beraldin, J.-A.; Blais, F. A Comparative Evaluation of the Performance of Passive and Active 3-D Vision Systems. In Proceedings of the SPIE—The International Society for Optical Engineering, San Diego, CA, USA, 4–8 August 2003.

11. Remondino, F.; Guarnieri, A.; Vettore, A. 3D modeling of Close-Range Objects: Photogrammetry or Laser Scanning. In Proceedings of the SPIE, Kissimmee, FL, USA, 12–16 April 2004.

12. Remondino, F.; Fraser, C. Digital camera calibration methods: Considerations and comparisons. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2005, 36, 266–272.

13. Apero-Micmac Open Source Tools. Available online: http://www.tapenade.gamsau.archi.fr/TAPEnADe/Tools.html (accessed on 5 August 2024).

14. Cloud Compare Open Source Tool. Available online: http://www.danielgm.net/cc/ (accessed on 5 August 2024).

15. Deseilligny, M.; Clery, I. APERO, an open source bundle adjusment software for automatic calibration and orientation of set of images. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, XXXVIII-5/W16, 269–276.

16. Samaan, M.; Heno, R.; Deseilligny, M. Close-range photogrammetric tools for small 3D archeological objects. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, XL-5/W2, 549–553.

17. Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. Commun. ACM 2021, 65, 99–106.

18. Ponto, K.; Tredinnick, R. Opportunities for utilizing consumer grade 3D capture tools for insurance documentation. Int. J. Inf. Technol. 2022, 14, 2757–2766.

19. Martin-Brualla, R.; Radwan, N.; Sajjadi, M.S.M.; Barron, J.T.; Dosovitskiy, A.; Duckworth, D. NeRF in the Wild: Neural Radiance Fields for Unconstrained Photo Collections. In Proceedings of the Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

20. Pumarola, A.; Corona, E.; Pons-Moll, G.; Moreno-Noguer, F. D-NeRF: Neural Radiance Fields for Dynamic Scenes. In Proceedings of the Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

21. Tretschk, E.; Tewari, A.; Golyanik, V.; Zollhöfer, M.; Lassner, C.; Theobalt, C. Non-Rigid Neural Radiance Fields: Reconstruction and Novel View Synthesis of a Dynamic Scene From Monocular Video. In Proceedings of the Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

22. Wang, Z.; Wu, S.; Xie, W.; Chen, M.; Prisacariu, V.A. NeRF--: Neural Radiance Fields Without Known Camera Parameters. In Proceedings of the Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

23. Zhu, H.; Wu, W.; Zhu, W.; Jiang, L.; Tang, S.; Zhang, L.; Liu, Z.; Loy, C.C. CelebV-HQ: A Large-Scale Video Facial Attributes Dataset. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

24. Rodríguez, M.U.P.; Álvarez, J.A.S.; Cárdenas, J.G.M. Investigación & Reconstrucción de accidentes: La Reconstrucción práctica de un accidente de tráfico. Secur. Vialis 2011, 1, 27–37.

25. Sánchez, J.L.D.; Andreu, J.S.-F. La reconstrucción de accidentes desde el punto de vista policial. Cuad. Guard. Civ. Rev. Segur. Pública 2004, 1, 109–118.

26. Carballo, H. Pericias Tecnico-Mecanicas; Ediciones Larocca: Buenos Aires, Argentina, 2005.

27. Robson, S.; Kyle, S.; Harley, I. Close Range Photogrammetry: Principles, Techniques and Applications; Whittles Publishing: Hertfordshire, UK, 2011.

28. González-Aguilera, D.; Muñoz-Nieto, Á.; Rodríguez-Gonzalvez, P.; Mancera-Taboada, J. Accuracy assessment of vehicles surface area measurement by means of statistical methods. Measurement 2013, 46, 1009–1018.

29. Du, X.; Jin, X.; Zhang, X.; Shen, J.; Hou, X. Geometry features measurement of traffic accident for reconstruction based on close-range photogrammetry. Adv. Eng. Softw. 2009, 40, 497–505.

30. Fraser, C.; Hanley, H.; Cronk, S. Close-range photogrammetry for accident reconstruction. In Optical 3D Measurements VII; Gruen, A., Kahmen, H., Eds.; SPIE: Bellingham, WA, USA, 2005; Volume 2, pp. 115–123.

31. Fraser, C.; Cronk, S.; Hanley, H. Close-range photogrammetry in traffic incident management. In Proceedings of the XXI ISPRS Congress Commission V, Beijing, China, 3–11 July 2008.

32. Hattori, S.; Akimoto, K.; Fraser, C.; Imoto, H. Automated procedures with coded targets in industrial vision metrology. Photogramm. Eng. Remote Sens. 2002, 68, 441–446.

33. Han, I.; Kang, H. Determination of the collision speed of a vehicle from evaluation of the crush volume using photographs. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2016, 230, 479–490.

34. Pool, G.; Venter, P. Measuring accident scenes using laser scanning systems and the use of scan data in 3D simulation and animation. In Proceedings of the 23rd Annual Southern African Transport Conference, Pretoria, South Africa, 12–15 July 2004.

35. Buck, U.; Naether, S.; Braun, M.; Bolliger, S.; Friederich, H.; Jackowski, C.; Aghayev, E.; Christe, A.; Vock, P.; Dirnhofer, R.; et al. Application of 3D documentation and geometric reconstruction methods in traffic accident analysis: With high resolution surface scanning, radiological MSCT/MRI scanning and real data based animation. Forensic Sci. Int. 2007, 170, 20–28.

36. Buck, U.; Naether, S.; Räss, B.; Jackowski, C.; Thali, M.J. Accident or homicide—Virtual crime scene reconstruction using 3D methods. Forensic Sci. Int. 2013, 225, 75–84.

37. Wu, T.-H.; Liu, Y.-C.; Huang, Y.-K.; Lee, H.-Y.; Su, H.-T.; Huang, P.-C.; Hsu, W.H. ReDAL: Region-Based and Diversity-Aware Active Learning for Point Cloud Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021.

38. Li, L.; Sung, M.; Dubrovina, A.; Yi, L.; Guibas, L.J. Supervised Fitting of Geometric Primitives to 3D Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

39. Kang, Z.; Yang, J. A probabilistic graphical model for the classification of mobile LiDAR point clouds. ISPRS J. Photogramm. Remote Sens. 2018, 143, 108–123.

40. Bretar, F. Feature Extraction from LiDAR Data in Urban Areas. In Topographic Laser Ranging and Scanning; Productivity Press: New York, NY, USA, 2017.

41. Li, Y.; Lin, Q.; Zhang, Z.; Zhang, L.; Chen, D.; Shuang, F. MFNet: Multi-Level Feature Extraction and Fusion Network for Large-Scale Point Cloud Classification. Remote Sens. 2022, 14, 5707.

42. Zeybek, M. Classification of UAV point clouds by random forest machine learning algorithm. Turk. J. Eng. 2021, 5, 48–57.

43. Zhang, J.; Zhao, X.; Chen, Z.; Lu, Z. A Review of Deep Learning-Based Semantic Segmentation for Point Cloud. IEEE Access 2019, 7, 179118–179133.

44. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 16–21 July 2017.

45. Zhao, H.; Jiang, L.; Jia, J.; Torr, P.H.; Koltun, V. Point Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021.

46. Landrieu, L.; Simonovsky, M. Large-Scale Point Cloud Semantic Segmentation With Superpoint Graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

47. Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–16 June 2020.

48. Thomas, H.; Qi, C.R.; Deschaud, J.-E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. KPConv: Flexible and Deformable Convolution for Point Clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

49. Robert, D.; Raguet, H.; Landrieu, L. Efficient 3D Semantic Segmentation with Superpoint Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023.

50. Maini, R.; Aggarwal, H. A Comprehensive Review of Image Enhancement Techniques. J. Comput. 2010, 2, 8–13.

51. Verhoeven, G.; Karel, W.; Štuhec, S.; Doneus, M.; Trinks, I.; Pfeifer, N. Mind your grey tones: Examining the influence of decolourization methods on interest point extraction and matching for architectural image-based modelling. In Proceedings of the 3D-Arch 2015: 3D Virtual Reconstruction and Visualization of Complex Architectures, Avila, Spain, 25–27 February 2015.

52. Apollonio, F.I.; Ballabeni, A.; Gaiani, M.; Remondino, F. Evaluation of feature-based methods for automated network orientation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 47–54.

53. Hartmann, W.; Havlena, M.; Schindler, K. Recent developments in large-scale tie-point matching. ISPRS J. Photogramm. Remote Sens. 2016, 115, 47–62.

54. Agarwal, S.; Snavely, N.; Seitz, S.M.; Szeliski, R. Bundle Adjustment in the Large. In Proceedings of the Computer Vision–ECCV 2010: 11th European Conference on Computer Vision, Crete, Greece, 5–11 September 2010.

55. Wu, C.; Agarwal, S.; Curless, B.; Seitz, S.M. Multicore bundle adjustment. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011.

56. Schonberger, J.L.; Frahm, J.-M. Structure-From-Motion Revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

57. Remondino, F.; Spera, M.G.; Nocerino, E.; Menna, F.; Nex, F. State of the art in high density image matching. Photogramm. Rec. 2014, 29, 144–166.

58. Snavely, N.; Seitz, S.M.; Szeliski, R. Modeling the World from Internet Photo Collections. Int. J. Comput. Vis. 2008, 80, 189–210.

59. Frahm, J.-M.; Fite-Georgel, P.; Gallup, D.; Johnson, T.; Raguram, R.; Wu, C.; Jen, Y.-H.; Dunn, E.; Clipp, B.; Lazebnik, S.; et al. Building Rome on a Cloudless Day. In Proceedings of the Computer Vision—ECCV 2010, Crete, Greece, 5–11 September 2010.

60. Rothermel, M.; Wenzel, K.; Fritsch, D.; Haala, N. SURE: Photogrammetric surface reconstruction from imagery. In Proceedings of the LC3D Workshop, Berlin, Germany, 4–5 December 2012.

61. Heinly, J.; Schonberger, J.L.; Dunn, E.; Frahm, J.-M. Reconstructing the World* in Six Days *(As Captured by the Yahoo 100 Million Image Dataset). In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

62. Schops, T.; Schonberger, J.L.; Galliani, S.; Sattler, T.; Schindler, K.; Pollefeys, M.; Geiger, A. A Multi-View Stereo Benchmark With High-Resolution Images and Multi-Camera Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

63. Knapitsch, A.; Park, J.; Zhou, Q.Y.; Koltun, V. Tanks and temples: Benchmarking large-scale scene reconstruction. ACM Trans. Graph. (ToG) 2017, 36, 1–13.

64. Aguilera, D.G.; Lahoz, J.G. sv3DVision: Didactical photogrammetric software for single image-based modeling. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 171–179.

65. Grussenmeyer, P.; Drap, P. Possibilities and limits of web photogrammetry. In Proceedings of the Photogrammetric Week ’01, Stuttgart, Germany, 23–27 April 2001.

66. Piatti, E.J.; Lerma, J.L. Virtual Worlds for Photogrammetric Image-Based Simulation and Learning. Photogramm. Rec. 2013, 28, 27–42.

67. González-Aguilera, D.; Guerrero, D.; López, D.H.; Rodríguez-González, P.; Pierrot, M.; Fernández-Hernández, J. PW, Photogrammetry Workbench. CATCON Silver Award, ISPRS WG VI/2. In Proceedings of the 22nd ISPRS Congress, Melbourne, Australia, 25 August–1 September 2012.

68. Luhmann, T. Learning Photogrammetry with Interactive Software Tool PhoX. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 39–44.

69. Wu, C. VisualSFM: A Visual Structure from Motion System. 2011. Available online: http://ccwu.me/vsfm/ (accessed on 4 November 2025).

70. Furukawa, Y.; Ponce, J. Accurate, Dense, and Robust Multiview Stereopsis. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1362–1376.

71. ARC-Team Engineering srls. Arc-Team. 2020. Available online: https://www.arc-team.it/ (accessed on 3 November 2025).

72. Waechter, M.; Moehrle, N.; Goesele, M. Let There Be Color! Large-Scale Texturing of 3D Reconstructions. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014.

73. Fuhrmann, S.; Langguth, F.; Moehrle, N.; Waechter, M.; Goesele, M. MVE—An image-based reconstruction environment. Comput. Graph. 2015, 53, 44–53.

74. Sweeney, C. TheiaSfM. 2016. Available online: https://github.com/sweeneychris/TheiaSfM (accessed on 3 November 2025).

75. Pan, L.; Baráth, D.; Pollefeys, M.; Schönberger, J.L. Global Structure-from-Motion Revisited. In Proceedings of the Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024.

76. González-Aguilera, D.; Fernández, L.L.; Rodríguez-González, P.; López, D.H.; Guerrero, D.; Remondino, F.; Menna, F.; Nocerino, E.; Toschi, I.; Ballabeni, A.; et al. GRAPHOS—Open-source software for photogrammetric applications. Photogramm. Rec. 2018, 33, 11–29.

77. Condorelli, F.; Rinaudo, F.; Salvadore, F.; Tagliaventi, S. A comparison between 3D reconstruction using nerf neural networks and mvs algorithms on cultural heritage images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 43, 565–570.

78. Kerbl, B.; Kopanas, G.; Leimkühler, T.; Drettakis, G. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. 2023, 42, 1–14.

79. Aziz, S.A.B.A.; Majid, Z.B.; Setan, H.B. Application of close range photogrammetry in crime scene investigation (CSI) mapping using iwitness and crime zone software. Geoinf. Sci. J. 2010, 10, 1–16.

80. Olver, A.M.; Guryn, H.; Liscio, E. The effects of camera resolution and distance on suspect height analysis using PhotoModeler. Forensic Sci. Int. 2021, 318, 110601.

81. Engström, P. Visualizations techniques for forensic training applications. In Proceedings of the Virtual, Augmented, and Mixed Reality (XR) Technology for Multi-Domain Operations, Online, 27 April–8 May 2020; Volume 11426.

82. Kottner, S.; Thali, M.J.; Gascho, D. Using the iPhone’s LiDAR technology to capture 3D forensic data at crime and crash scenes. Forensic Imaging 2023, 32, 200535.

83. Chaves, L.B.; Barbosa, T.L.; Casagrande, C.P.M.; Alencar, D.S.; Capelli, J., Jr.; Carvalho, F.D.A.R. Evaluation of two stereophotogrametry software for 3D reconstruction of virtual facial models. Dent. Press J. Orthod. 2022, 27, e2220230.

84. Galanakis, G.; George, X.; Xenophon, X.; Fikenscher, S.-E.; Allertseder, A.; Tsikrika, T.; Vrochidis, S. A Study of 3D Digitisation Modalities for Crime Scene Investigation. Forensic Sci. 2021, 1, 56–85.

85. Cunha, R.R.; Arrabal, C.T.; Dantas, M.M.; Bassanelli, H.R. Laser scanner and drone photogrammetry: A statistical comparison between 3-dimensional models and its impacts on outdoor crime scene registration. Forensic Sci. Int. 2022, 330, 111100.

86. Al-Top Topografía, S.A. Trimble Forensic Reveal. Available online: https://al-top.com/producto/trimble-forensics-reveal/ (accessed on 3 November 2025).

87. Franț, A.-E. Forensic architecture: A new dimension in Forensics. Analele Științifice ale Universităţii Alexandru Ioan Cuza din Iași. Ser. Ştiinţe Jurid. 2022, 68, 61–78.

88. Mezhenin, A.; Polyakov, V.; Prishhepa, A.; Izvozchikova, V.; Zykov, A. Using Virtual Scenes for Comparison of Photogrammetry Software. In Proceedings of the Advances in Intelligent Systems, Computer Science and Digital Economics II, Moscow, Russia, 18–20 December 2020; Springer: Berlin/Heidelberg, Germany, 2021.

89. Joglekar, J.; Gedam, S.S. Area Based Image Matching Methods—A Survey. Int. J. Emerg. Technol. Adv. Eng. 2012, 2, 130–136.

90. Gruen, A.W. Adaptive least squares correlation: A powerful image matching technique. S. Afr. J. Photogramm. Remote Sens. Cartogr. 1985, 14, 175–187.

91. Smith, S.M.; Brady, J.M. SUSAN—A New Approach to Low Level Image Processing. Int. J. Comput. Vis. 1997, 23, 45–78.

92. Matas, J.; Chum, O.; Urban, M.; Pajdla, T. Robust wide-baseline stereo from maximally stable extremal regions. Image Vis. Comput. 2004, 22, 761–767.

93. Bay, H.; Ess, A.; Tuytelaars, T.; Gool, L.V. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

94. Dusmanu, M.; Rocco, I.; Pajdla, T.; Pollefeys, M.; Sivic, J.; Torii, A.; Sattler, T. D2-Net: A Trainable CNN for Joint Description and Detection of Local Features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

95. Revaud, J.; De Souza, C.; Humenberger, M.; Weinzaepfel, P. R2D2: Reliable and Repeatable Detector and Descriptor. In Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019.

96. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

97. Wu, C. SiftGPU. Available online: https://github.com/pitzer/SiftGPU (accessed on 3 October 2024).

98. OpenCV. SIFT Feature Detection Tutorial. Available online: https://docs.opencv.org/4.x/da/df5/tutorial_py_sift_intro.html?ref=blog.roboflow.com (accessed on 3 October 2024).

99. Alcantarilla, P.F.; Bartoli, A.; Davison, A.J. KAZE Features. In Computer Vision—ECCV 2012; Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7577.

100. Alcantarilla, P.; Nuevo, J.; Bartoli, A. Fast Explicit Diffusion for Accelerated Features in Nonlinear Scale Spaces. In Proceedings of the British Machine Vision Conference 2013, Bristol, UK, 9–13 September 2013; pp. 13.1–13.11.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571. [Google Scholar]

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Computer Vision—ECCV 2006; Leonardis, A., Bischof, H., Pinz, A., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; Volume 3951. [Google Scholar]

- Muja, M.; Lowe, D.G. Fast Approximate Nearest Neighbors with Automatic Algorithm Configuration. In Proceedings of the International Conference on Computer Vision Theory and Applications, Lisboa, Portugal, 5–8 February 2009. [Google Scholar]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Abdel-Aziz, Y.; Karara, H.; Hauck, M. Direct Linear Transformation from Comparator Coordinates into Object Space Coordinates in Close-Range Photogrammetry. Photogramm. Eng. Remote Sens. 2015, 81, 103–107. [Google Scholar] [CrossRef]

- Morel, J.-M.; Yu, G. ASIFT: A New Framework for Fully Affine Invariant Image Comparison. SIAM J. Imaging Sci. 2009, 2, 438–469. [Google Scholar] [CrossRef]

- Walk, S. Random Forest Template Forest. Available online: https://prs.igp.ethz.ch/research/Source_code_and_datasets/legacy-code-and-datasets-archive.html (accessed on 3 September 2024).

- OpenMVS. OpenMVS—Open Multi-View Stereo Reconstruction Library. Available online: https://cdcseacave.github.io/ (accessed on 25 September 2024).

- ENS Patch-Based Multi-View Stereo Software (PMVS). 2008. Available online: https://www.di.ens.fr/pmvs/pmvs-1/index.html (accessed on 25 September 2024).

- Furukawa, Y.; Ponce, J. Accurate, Dense, and Robust Multi-View Stereopsis. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8. [Google Scholar]

- Langguth, F.; Sunkavalli, K.; Hadap, S.; Goesele, M. Shading-Aware Multi-view Stereo. In Computer Vision (ECCV). 2016. Available online: http://www.kalyans.org/research/2016/ShadingAwareMVS_ECCV16_supp.pdf (accessed on 3 November 2025).

- Thomas, H.; Deschaud, J.-E.; Marcotegui, B.; Goulette, F.; Gall, Y.L. Semantic Classification of 3D Point Clouds with Multiscale Spherical Neighborhoods. In Proceedings of the Internation Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV, Zurich, Switzerland, 6–12 September 2014.

- Patsiouras, E.; Vasileiou, S.K.; Papadopoulos, S.; Dourvas, N.I.; Ioannidis, K.; Vrochidis, S.; Kompatsiaris, I. Integrating AI and Computer Vision for Ballistic and Bloodstain Analysis in 3D Digital Forensics. In Proceedings of the 2024 IEEE International Conference on Metrology for eXtended Reality, Artificial Intelligence and Neural Engineering (MetroXRAINE), St Albans, UK, 21–23 October 2024; pp. 734–739.

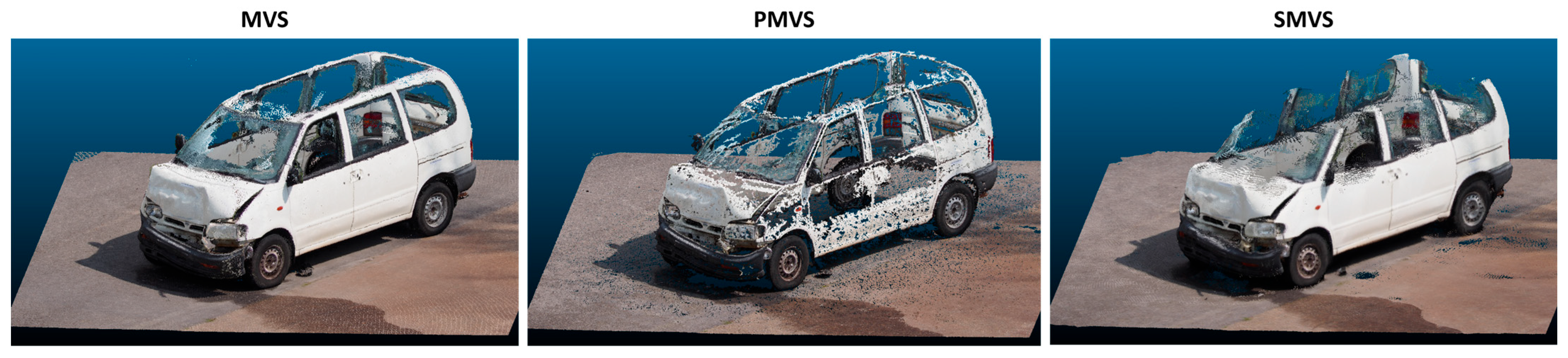

| Algorithm | Execution Time | Number of Generated 3D Points |
|---|---|---|
| MVS | 0.47 min | 5,427,919 |
| PMVS | 12 min | 1,868,967 |
| SMVS | 1.2 min | 4,029,451 |

| Features | Definitions |
|---|---|
| Sum of eigenvalues | |
| Omnivariance | |
| Eigenentropy | |
| Linearity | |
| Planarity | |
| Sphericity | |
| Change in curvature | |
| Verticality (x2) | |
| Absolute moment (x6) | |
| Vertical moment (x2) | |
| Number of points | |
| Average colour (x3) | |
| Colour variance (x3) | |
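
The eigenvalue-based features listed above are commonly derived from the covariance matrix of the points in a local neighbourhood. The following is a minimal sketch under that assumption, using definitions that are widespread in the point cloud classification literature. The function name `eigen_features`, the normalisation of the eigenvalues before computing eigenentropy, and the use of the first and third eigenvectors for the two verticality values are illustrative assumptions, not necessarily the implementation used in the tool. Moment and colour features are omitted for brevity.

```python
import numpy as np


def eigen_features(points: np.ndarray) -> dict:
    """Shape features of one neighbourhood given as an (N, 3) array."""
    centered = points - points.mean(axis=0)
    cov = centered.T @ centered / len(points)

    # eigh returns eigenvalues in ascending order; flip so that l1 >= l2 >= l3.
    evals, evecs = np.linalg.eigh(cov)
    evals = np.clip(evals[::-1], 1e-12, None)  # guard against zero eigenvalues
    evecs = evecs[:, ::-1]
    l1, l2, l3 = evals
    total = evals.sum()
    p = evals / total  # assumption: entropy is taken on normalised eigenvalues

    def verticality(vec: np.ndarray) -> float:
        # |pi/2 - angle(vec, z)| equals arcsin(|z-component|) for a unit vector.
        return float(np.arcsin(np.clip(abs(vec[2]), 0.0, 1.0)))

    return {
        "sum_of_eigenvalues": float(total),
        "omnivariance": float(np.cbrt(l1 * l2 * l3)),
        "eigenentropy": float(-(p * np.log(p)).sum()),
        "linearity": float((l1 - l2) / l1),
        "planarity": float((l2 - l3) / l1),
        "sphericity": float(l3 / l1),
        "change_in_curvature": float(l3 / total),
        "verticality_e1": verticality(evecs[:, 0]),
        "verticality_e3": verticality(evecs[:, 2]),
        "number_of_points": len(points),
    }


# Usage: a flat, horizontal square patch should score as strongly planar,
# with its normal direction (third eigenvector) close to vertical.
xs, ys = np.meshgrid(np.linspace(0, 1, 11), np.linspace(0, 1, 11))
patch = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
features = eigen_features(patch)
print(features["planarity"], features["verticality_e3"])
```

In a multiscale setting, a function of this kind would be evaluated once per point and per neighbourhood radius, and the resulting values concatenated into the feature vector passed to the classifier.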

| Categories | Frontal Crash d0 | Frontal Crash d1 | Side Impact d0 | Side Impact d1 | Rear Impact d0 | Rear Impact d1 |
|---|---|---|---|---|---|---|
| Category 1 | 92.87 | 569.06 | 125.18 | 511.68 | 26.69 | 504.90 |
| Category 2 | 83.27 | 544.31 | 128.74 | 531.74 | 36.06 | 679.43 |
| Category 3 | 89.31 | 621.16 | 130.31 | 550.60 | 48.31 | 626.68 |
| Category 4 | 128.68 | 484.16 | 100.09 | 624.16 | 42.64 | 586.94 |
| Category 5 | 112.64 | 504.91 | 74.84 | 694.48 | 54.43 | 569.06 |
| Vans | 71.94 | 931.74 | 85.28 | 615.50 | 0.00 | 0.00 |

fx/fy: focal lengths; cx/cy: principal point coordinates; k1/k2: radial lens distortion.

| Measurement | Deformation |
|---|---|
| C1 | 0.446 |
| C2 | 0.392 |
| C3 | 0.315 |
| C4 | 0.234 |
| C5 | 0.185 |
| C6 | 0.152 |
| C7 | 0.136 |
| C8 | 0.238 |
| C9 | 0.286 |
| C10 | 0.331 |
| C11 | 0.334 |
| C12 | 0.325 |
| C13 | 0.322 |
| C14 | 0.306 |
| C15 | 0.209 |
| C16 | 0.131 |
| C17 | 0.076 |
| C18 | 0.023 |
| C19 | 0.339 |
| C20 | 0.281 |


| Parameter | Bullet Hole 1 | Bullet Hole 2 |
|---|---|---|
| Azimuthal impact angle | 24.4746° | 40.395° |
| Elevation impact angle | 0.264709° | 5.57001° |
| Horizontal directionality | Right | Right |
| Ellipse length | 111 px | 151 px |
| Ellipse width | 267 px | 232 px |
| Cx | 2137 px | 1788 px |
| Cy | 1384 px | 1276 px |

Cx/Cy: ellipse center.

| | | | | | | |
|---|---|---|---|---|---|---|
| Bullet hole 1 | 0.515 | −1.749 | −0.481 | −0.904 | 0.423 | −0.070 |
| Bullet hole 2 | −0.769 | −1.772 | −0.490 | −0.747 | 0.658 | −0.095 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ospina-Bohórquez, A.; Ruiz de Oña, E.; Yali, R.; Patsiouras, E.; Margariti, K.; González-Aguilera, D. Comprehensive Forensic Tool for Crime Scene and Traffic Accident 3D Reconstruction. Algorithms 2025, 18, 707. https://doi.org/10.3390/a18110707
