1. Introduction

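In this paper, we revisit GMRES and GMRES(m) for solving

$$A\mathbf{x}=\mathbf{b},\quad A\in\mathbb{R}^{n\times n},\ \mathbf{b}\in\mathbb{R}^{n}. \tag{1}$$

In terms of an initial guess, $\mathbf{x}_0$, and the initial residual, $\mathbf{r}_0=\mathbf{b}-A\mathbf{x}_0$, Equation (1) is equivalent to

$$A\mathbf{u}=\mathbf{r}_0, \tag{2}$$

where

$$\mathbf{u}=\mathbf{x}-\mathbf{x}_0 \tag{3}$$

is the descent vector, also called a corrector.

Equation (2) can be used to search for a proper descent vector, $\mathbf{u}$, which is inserted into Equation (3) to produce a solution, $\mathbf{x}=\mathbf{x}_0+\mathbf{u}$, closer to the exact solution. We suppose an $m$-dimensional Krylov subspace:

$$\mathcal{K}_m:=\operatorname{span}\{\mathbf{r}_0,A\mathbf{r}_0,\ldots,A^{m-1}\mathbf{r}_0\}. \tag{4}$$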

Krylov subspaces are named after A. N. Krylov, who, in [1], described a method for solving the secular equation that determines the frequencies of small vibrations of material systems.

Many well-developed Krylov subspace methods for solving Equation (1) have been discussed in textbooks [2,3,4,5]. Godunov and Gordienko [6] used an optimal representation of vectors in the Krylov space with the help of a variational problem; the extremum of the variational problem is the solution of the Kalman matrix equation, in which the 2-norm minimal solution is used as a characteristic of the Krylov space.

The Krylov subspace methods [7,8] and the preconditioned Krylov subspace methods [9] are among the most important classes of numerical methods for iteratively solving Equation (1). Recently, Bouyghf et al. [10] presented a comprehensive framework unifying the Krylov subspace methods for Equation (1).

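The GMRES method in [11] uses the Petrov–Galerkin method to search for $\mathbf{u}\in\mathcal{K}_m$ via a perpendicular property:

$$\mathbf{r}_0-A\mathbf{u}\perp\mathcal{L}_m:=A\mathcal{K}_m. \tag{5}$$

The descent vector $\mathbf{u}$ is obtained by minimizing the length of the residual vector [2]:

$$\min_{\mathbf{u}\in\mathcal{K}_m}\|\mathbf{r}\|, \tag{6}$$

where

$$\mathbf{r}=\mathbf{b}-A\mathbf{x}=\mathbf{r}_0-A\mathbf{u} \tag{7}$$

and $\|\mathbf{r}\|$ is the Euclidean norm of the residual vector, $\mathbf{r}$.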

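In many Krylov subspace methods, the central idea is projection. GMRES is a special case of the Krylov subspace methods with an oblique projection. Let $U\in\mathbb{R}^{n\times m}$ and $V\in\mathbb{R}^{n\times m}$ be the matrix representations of $\mathcal{K}_m$ and $\mathcal{L}_m$, respectively. We project the residual Equation (2) from an $n$-dimensional space to an $m$-dimensional subspace:

$$V^{\mathrm{T}}A\mathbf{u}=V^{\mathrm{T}}\mathbf{r}_0. \tag{8}$$

We seek $\mathbf{u}$ in the subspace $\mathcal{K}_m$ by

$$\mathbf{u}=U\boldsymbol{\alpha},\quad \boldsymbol{\alpha}\in\mathbb{R}^{m}; \tag{9}$$

hence, Equation (8) becomes

$$V^{\mathrm{T}}AU\boldsymbol{\alpha}=V^{\mathrm{T}}\mathbf{r}_0. \tag{10}$$

Solving $\boldsymbol{\alpha}$ from Equation (10) and inserting it into Equation (9), we obtain $\mathbf{u}$ and, hence, the next $\mathbf{x}=\mathbf{x}_0+\mathbf{u}$; $\mathbf{u}$ is a correction of $\mathbf{x}_0$, and we hope that $\|\mathbf{r}\|<\|\mathbf{r}_0\|$.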

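Meanwhile, Equation (7) becomes

$$\mathbf{r}=\mathbf{r}_0-\mathbf{y}, \tag{11}$$

where $\mathbf{y}:=A\mathbf{u}\in\mathcal{L}_m$. By means of Equations (5), (7), and (11), we enforce the perpendicular property by

$$\mathbf{r}\cdot\mathbf{y}=(\mathbf{r}_0-\mathbf{y})\cdot\mathbf{y}=0; \tag{12}$$

it is equivalent to the projection of $\mathbf{r}_0$ on $\mathcal{L}_m$. That is, $\mathbf{y}$ is the projection of $\mathbf{r}_0$ on $\mathcal{L}_m$, and $\mathbf{r}$ is perpendicular to $\mathbf{y}$, so that $\|\mathbf{r}\|^2=\|\mathbf{r}_0\|^2-\|\mathbf{y}\|^2$, which ensures that the length of the residual vector decreases. For seeking a fast convergent iterative algorithm, we must keep the orthogonality and, simultaneously, maximize the projection quantity $\|\mathbf{y}\|^2=\mathbf{r}_0\cdot\mathbf{y}$. Later on, we will modify the GMRES method from these two aspects.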
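The projection described above can be checked numerically. The sketch below is a minimal illustration, not the authors' implementation: it assumes a dense NumPy matrix, builds an orthonormal basis of $\mathcal{K}_m$ by a QR factorization of the raw Krylov vectors (instead of the Arnoldi process of Section 2), and solves the minimal-residual problem (6) with `numpy.linalg.lstsq`. Its normal equations coincide with Equation (10) for the choice $V=AU$. The function name `minimal_residual_correction` and the test matrix are hypothetical.

```python
import numpy as np

def minimal_residual_correction(A, b, x0, m):
    """Hypothetical helper: x = x0 + u with u in K_m minimizing ||r0 - A u||; also returns r0 and y = A u."""
    r0 = b - A @ x0
    # Raw Krylov vectors r0, A r0, ..., A^{m-1} r0 (Equation (4)), each scaled to unit length
    K = np.empty((r0.size, m))
    K[:, 0] = r0 / np.linalg.norm(r0)
    for j in range(1, m):
        w = A @ K[:, j - 1]
        K[:, j] = w / np.linalg.norm(w)
    U, _ = np.linalg.qr(K)             # orthonormal basis of K_m
    AU = A @ U                         # columns span L_m = A K_m
    # Least-squares solution; its normal equations are Equation (10) with V = A U
    alpha, *_ = np.linalg.lstsq(AU, r0, rcond=None)
    u = U @ alpha                      # Equation (9)
    return x0 + u, r0, A @ u

rng = np.random.default_rng(0)
n, m = 50, 5
A = rng.standard_normal((n, n)) + 10.0 * np.eye(n)   # hypothetical test matrix
b = rng.standard_normal(n)
x, r0, y = minimal_residual_correction(A, b, np.zeros(n), m)
r = r0 - y                                             # Equation (11)
print(abs(r @ y) < 1e-8 * (r0 @ r0))                   # perpendicular property: r is orthogonal to y
print(np.isclose(r @ r, r0 @ r0 - y @ y))              # ||r||^2 = ||r0||^2 - ||y||^2
```

Because the minimizer makes $\mathbf{r}$ orthogonal to every column of $AU$, the residual length necessarily drops by exactly $\|\mathbf{y}\|^2$.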

Liu [12] derived an effective iterative algorithm based on the maximal projection method to solve Equation (1) in the column space of $A$. Recently, Liu et al. [13] applied the maximal projection method and the minimal residual norm method to solve least-squares problems.

Two causes lead to the slowdown and even the stagnation of GMRES: one is the loss of orthogonality, and the other is that its descent vector, $\mathbf{u}$, is not the best one. Different methods have been proposed to accelerate and improve GMRES [14,15,16,17]. The restart technique is often applied to the Krylov subspace methods; however, it generally slows their convergence. Another way to accelerate the convergence is to update the initial value [18,19]. Preconditioning is often used to speed up GMRES and other Krylov subspace methods [20,21,22,23,24]. The multipreconditioned GMRES [25] uses two or more preconditioners simultaneously. The polynomial preconditioned GMRES and GMRES-DR were developed in [26]. By augmenting the Krylov subspace, some authors sought better descent vectors in a larger space to accelerate the convergence of GMRES [27,28,29]. Recently, Carson et al. [30] presented mathematically important and practically relevant phenomena that uncover the behavior of GMRES through a discussion of computed examples; they considered the conjugate gradient (CG) and GMRES methods, as well as other Krylov subspace methods, crucially important for further development and practical applications.

Contribution and Novelty:

The major contribution and novelty of the present paper is a simple strategy to overcome the slowdown and stagnation of GMRES: a stabilization factor, $\eta_k$, is inserted into the iterative equation. In doing so, the orthogonality of consecutive residual vectors and the stepwise decrease of the residual length are automatically preserved. We extend the search space of GMRES to an affine Krylov subspace to seek a better descent vector. By combining the maximal projection method and the affine technique with the orthogonalization technique, we develop two powerful iterative algorithms for solving linear systems.

Highlight:

Examine the iterative algorithm for linear systems from the viewpoints of maximizing the decrease of the residual and maintaining the orthogonality of consecutive residual vectors.

A stabilization factor that measures the deviation from orthogonality is inserted into GMRES to preserve the orthogonality automatically.

The re-orthogonalized GMRES guarantees the preservation of orthogonality, even when GMRES gradually loses orthogonality during the iterations.

Improve GMRES by seeking the descent vector that minimizes the length of the residual vector in a larger space, the affine Krylov subspace.

The new algorithms are all absolutely convergent.

Outline:

The Arnoldi process and the conventional GMRES and GMRES(m) are described in Section 2. In Section 3, we propose a new algorithm modified from GMRES by inserting a stabilization factor, $\eta_k$, which is named the re-orthogonalized GMRES (ROGMRES). We discuss ROGMRES from two aspects, preserving the orthogonality and maximally decreasing the length of the residual vector; we also prove that ROGMRES automatically keeps the stabilization factor equal to 1 and preserves the good properties of orthogonality and maximal reduction of the residual. In Section 4, a better descent vector is sought in a larger affine Krylov subspace by using the maximal projection method; upon combining it with the orthogonalization technique, we propose a new algorithm, the orthogonalized maximal projection algorithm (OMPA). In Section 5, the iterative equations of GMRES are extended to the affine Krylov subspace by using the minimum residual method, which results in an affine GMRES (A-GMRES) method. Numerical tests of several examples are given in Section 6. Section 7 concludes the achievements, novelties, and contributions of this paper.

2. GMRES and GMRES(m)

The Arnoldi process [2] is often used to orthonormalize the Krylov vectors $A^{j-1}\mathbf{r}_0$, $j=1,\ldots,m$, in Equation (4), such that the resultant vectors $\mathbf{u}_j$, $j=1,\ldots,m$, satisfy $\mathbf{u}_i\cdot\mathbf{u}_j=\delta_{ij}$, $i,j=1,\ldots,m$, where $\delta_{ij}$ is the Kronecker delta symbol. The full orthonormalization procedure is given in Algorithm 1.

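| Algorithm 1: Arnoldi process |

1: Select $m$ and give an initial vector $\mathbf{v}$ 2: $\mathbf{u}_1=\mathbf{v}/\|\mathbf{v}\|$ 3: Do $j=1:m$ 4: $\mathbf{w}_j=A\mathbf{u}_j$ 5: Do $i=1:j$ 6: $h_{ij}=\mathbf{u}_i\cdot\mathbf{w}_j$ 7: $\mathbf{w}_j=\mathbf{w}_j-h_{ij}\mathbf{u}_i$ 8: Enddo of $i$ 9: $h_{j+1,j}=\|\mathbf{w}_j\|$ 10: $\mathbf{u}_{j+1}=\mathbf{w}_j/h_{j+1,j}$ 11: Enddo of $j$ |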

A dot between two vectors represents the inner product, e.g., $\mathbf{a}\cdot\mathbf{b}=\mathbf{a}^{\mathrm{T}}\mathbf{b}$.
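Algorithm 1 can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' code: the inner loop uses the modified Gram–Schmidt ordering (each $h_{ij}$ is taken against the partially orthogonalized $\mathbf{w}_j$), a breakdown $h_{j+1,j}=0$ simply raises an error, and the name `arnoldi` and the random test data are hypothetical. The last check verifies the matrix form $AU_m=U_{m+1}\bar{H}_m$ of the Arnoldi relation.

```python
import numpy as np

def arnoldi(A, r0, m):
    """Hypothetical sketch of Algorithm 1 (modified Gram-Schmidt variant).

    Returns U with m + 1 orthonormal columns and the (m + 1) x m augmented
    upper Hessenberg matrix H_bar such that A @ U[:, :m] == U @ H_bar.
    """
    n = r0.shape[0]
    U = np.zeros((n, m + 1))
    H_bar = np.zeros((m + 1, m))
    U[:, 0] = r0 / np.linalg.norm(r0)          # u_1 = r0 / ||r0||
    for j in range(m):
        w = A @ U[:, j]                        # w_j = A u_j
        for i in range(j + 1):
            H_bar[i, j] = U[:, i] @ w          # h_ij = u_i . w_j
            w = w - H_bar[i, j] * U[:, i]      # remove the u_i component
        H_bar[j + 1, j] = np.linalg.norm(w)    # h_{j+1,j} = ||w_j||
        if H_bar[j + 1, j] == 0.0:
            raise ValueError(f"Arnoldi breakdown at step {j + 1}")
        U[:, j + 1] = w / H_bar[j + 1, j]      # u_{j+1} = w_j / h_{j+1,j}
    return U, H_bar

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 20))
r0 = rng.standard_normal(20)
U, H_bar = arnoldi(A, r0, m=6)
print(np.allclose(U.T @ U, np.eye(7)))         # orthonormal columns
print(np.allclose(A @ U[:, :6], U @ H_bar))     # A U_m = U_{m+1} H_bar_m
```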

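$U$ denotes the Arnoldi matrix whose $j$th column is $\mathbf{u}_j$:

$$U:=[\mathbf{u}_1,\mathbf{u}_2,\ldots,\mathbf{u}_m]\in\mathbb{R}^{n\times m}. \tag{13}$$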

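After $m$ steps of the Arnoldi process, we can construct an upper Hessenberg matrix, $H_m$, as follows:

$$H_m=\begin{bmatrix}h_{11}&h_{12}&\cdots&h_{1,m-1}&h_{1m}\\h_{21}&h_{22}&\cdots&h_{2,m-1}&h_{2m}\\0&h_{32}&\cdots&h_{3,m-1}&h_{3m}\\\vdots&\vdots&\ddots&\vdots&\vdots\\0&0&\cdots&h_{m,m-1}&h_{mm}\end{bmatrix}. \tag{14}$$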

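Utilizing $H_m$, the Arnoldi process can be expressed in a matrix product form:

$$AU=UH_m+h_{m+1,m}\mathbf{u}_{m+1}\mathbf{e}_m^{\mathrm{T}}, \tag{15}$$

where $\mathbf{e}_m=[0,\ldots,0,1]^{\mathrm{T}}\in\mathbb{R}^{m}$, and $h_{m+1,m}=\|\mathbf{w}_m\|$. Now, the augmented upper Hessenberg matrix, $\bar{H}_m\in\mathbb{R}^{(m+1)\times m}$, can be formulated as

$$\bar{H}_m=\begin{bmatrix}H_m\\h_{m+1,m}\mathbf{e}_m^{\mathrm{T}}\end{bmatrix}. \tag{16}$$

According to [2], the GMRES method is given as Algorithm 2.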

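| Algorithm 2: GMRES |

1: Give $m$, $\mathbf{x}_0$, and $\varepsilon$ 2: Do $k=0,1,\ldots$, until $\|\mathbf{r}_k\|<\varepsilon$ 3: $\mathbf{r}_k=\mathbf{b}-A\mathbf{x}_k$ 4: $\mathbf{u}_1^k=\mathbf{r}_k/\|\mathbf{r}_k\|$ 5: $U_k$, $\bar{H}_k$ (by Arnoldi process) 6: Solve $\min_{\boldsymbol{\alpha}}\big\|\|\mathbf{r}_k\|\mathbf{e}_1-\bar{H}_k\boldsymbol{\alpha}\big\|$ for $\boldsymbol{\alpha}_k$ 7: $\mathbf{x}_{k+1}=\mathbf{x}_k+U_k\boldsymbol{\alpha}_k$ |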

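In the above, $\mathbf{x}_k$ is the $k$th step value of $\mathbf{x}$; $\mathbf{r}_k$ is the $k$th residual vector; $\boldsymbol{\alpha}_k$ is the vector of $m$ expansion coefficients used in $U_k\boldsymbol{\alpha}_k$; $\mathbf{e}_1$ is the first column of $I_{m+1}$; $U_k$ denotes the $k$th step $U$ in Equation (13); $\bar{H}_k$ is the $k$th step augmented upper Hessenberg matrix in Equation (16); and $\mathbf{u}_k$ is the $k$th step descent vector. Notice that $\mathbf{u}_k=U_k\boldsymbol{\alpha}_k$.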
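One pass of Algorithm 2, with $\mathbf{x}_k$ (rather than $\mathbf{x}_0$) used in Step 7, can be sketched as below. This is a hedged illustration, not the paper's code: it assumes dense NumPy arrays, uses modified Gram–Schmidt in the Arnoldi loop, and solves the small problem of Step 6 with `numpy.linalg.lstsq`; the name `gmres_step` and the test problem are hypothetical.

```python
import numpy as np

def gmres_step(A, b, x, m):
    """Hypothetical sketch of one pass of Algorithm 2 started from x_k; returns x_{k+1}."""
    r = b - A @ x                                # Step 3
    beta = np.linalg.norm(r)
    if beta == 0.0:                              # x is already exact
        return x
    U = np.zeros((r.size, m + 1))
    H_bar = np.zeros((m + 1, m))
    U[:, 0] = r / beta                           # Step 4: first Arnoldi vector
    for j in range(m):                           # Step 5: Arnoldi process
        w = A @ U[:, j]
        for i in range(j + 1):
            H_bar[i, j] = U[:, i] @ w
            w = w - H_bar[i, j] * U[:, i]
        H_bar[j + 1, j] = np.linalg.norm(w)
        if H_bar[j + 1, j] == 0.0:               # lucky breakdown: K_{j+1} is A-invariant
            H_bar, U = H_bar[: j + 2, : j + 1], U[:, : j + 1]
            break
        U[:, j + 1] = w / H_bar[j + 1, j]
    rhs = np.zeros(H_bar.shape[0])
    rhs[0] = beta                                # ||r_k|| e_1
    alpha, *_ = np.linalg.lstsq(H_bar, rhs, rcond=None)   # Step 6
    return x + U[:, : H_bar.shape[1]] @ alpha    # Step 7 with x_k in place of x_0

rng = np.random.default_rng(1)
n = 100
A = rng.standard_normal((n, n)) / np.sqrt(n) + 3.0 * np.eye(n)   # hypothetical test matrix
x_true = np.ones(n)
b = A @ x_true
x = np.zeros(n)
res = [np.linalg.norm(b - A @ x)]
for k in range(30):
    x = gmres_step(A, b, x, m=10)
    res.append(np.linalg.norm(b - A @ x))
    if res[-1] < 1e-10:
        break
print(len(res) - 1, res[-1])
```

Since each pass minimizes the residual over a subspace that contains $\mathbf{u}=\mathbf{0}$, the residual norms in `res` cannot increase in exact arithmetic.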

In addition to Algorithm 2, there is a restarted version, GMRES(m) [11,31], described as follows.

| Algorithm 3: GMRES(m) |

1: Give , , , and 2: Do 3: Do () 4: 5: 6: (by Arnoldi process) 7: Solve 8: 9: If , stop 10: Otherwise, ; go to 2 |

Instead of

used in the original GMRES and GMRES(m), a suitable value of

can speed up the convergence; hence,

is the frequency for restart. Unlike the original GMRES and GMRES(m) [2,11,31], we take $\mathbf{x}_k$, not $\mathbf{x}_0$, in Step 7 of Algorithm 2 (GMRES) and in Step 8 of Algorithm 3 (GMRES(m)). Because $\mathbf{x}_0$ is not updated in the original GMRES and GMRES(m), they converge slowly. In Algorithm GMRES(m), if we take

, then we return to the usual iterative algorithm for GMRES, with a fixed-dimension,

, of the Krylov subspace.

The restart remedies the long-term recurrence and the accumulation of round-off errors in GMRES. To improve the restart process, several techniques have been proposed, as mentioned above. Some techniques based on adaptively determining the restart frequency can be found in [32,33,34,35]. For quicker convergence, we can update the current value of $\mathbf{x}_0$ at each iterative step. Because $\mathbf{x}_k$ carries new information for determining the next value, $\mathbf{x}_{k+1}$, it should not be wasted, which also saves computational cost.

3. Maximally Decreasing Residual and Automatically Preserving Orthogonality

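In this section, we develop a new iterative algorithm based on GMRES to solve Equation (1). The iterative form is

$$\mathbf{x}_{k+1}=\mathbf{x}_k+\mathbf{u}_k, \tag{17}$$

where $\mathbf{u}_k$ is the $k$th step descent vector, which is determined by the iterative algorithm. For instance, $\mathbf{u}_k$ in GMRES is $U_k\boldsymbol{\alpha}_k$.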

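Lemma 1. A better iterative scheme than that in Equation (17) is

$$\mathbf{x}_{k+1}=\mathbf{x}_k+\frac{\mathbf{r}_k\cdot\mathbf{v}_k}{\|\mathbf{v}_k\|^2}\mathbf{u}_k,\quad \mathbf{v}_k:=A\mathbf{u}_k. \tag{18}$$

Proof. Consider the scaled iteration

$$\mathbf{x}_{k+1}=\mathbf{x}_k+\eta\,\mathbf{u}_k, \tag{19}$$

where $\eta$ is to be determined. Multiplying by $A$ and subtracting the result from $\mathbf{b}$ gives

$$\mathbf{r}_{k+1}=\mathbf{r}_k-\eta\,\mathbf{v}_k. \tag{20}$$

Taking the squared norms of both sides yields

$$\|\mathbf{r}_{k+1}\|^2=\|\mathbf{r}_k\|^2-h, \tag{21}$$

$$h:=2\eta\,\mathbf{r}_k\cdot\mathbf{v}_k-\eta^2\|\mathbf{v}_k\|^2. \tag{22}$$

To maximally reduce the residual, we consider $\max_\eta h$, i.e., $\partial h/\partial\eta=2\mathbf{r}_k\cdot\mathbf{v}_k-2\eta\|\mathbf{v}_k\|^2=0$, which leads to

$$\eta=\eta_k:=\frac{\mathbf{r}_k\cdot\mathbf{v}_k}{\|\mathbf{v}_k\|^2}. \tag{23}$$

Inserting $\eta=\eta_k$ into Equation (19), the proof of Equation (18) is finished. □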

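Lemma 2. In Equation (18), the following orthogonal property holds:

$$\mathbf{r}_{k+1}\cdot\mathbf{v}_k=0. \tag{24}$$

Proof. Apply $A$ to Equation (18) and subtract the result from $\mathbf{b}$:

$$\mathbf{b}-A\mathbf{x}_{k+1}=\mathbf{b}-A\mathbf{x}_k-\eta_kA\mathbf{u}_k. \tag{25}$$

Using $\mathbf{r}_k=\mathbf{b}-A\mathbf{x}_k$ and $\mathbf{v}_k=A\mathbf{u}_k$, Equation (25) changes to

$$\mathbf{r}_{k+1}=\mathbf{r}_k-\eta_k\mathbf{v}_k. \tag{26}$$

Taking the inner product with $\mathbf{v}_k$ and using Equation (23) yields

$$\mathbf{r}_{k+1}\cdot\mathbf{v}_k=\mathbf{r}_k\cdot\mathbf{v}_k-\eta_k\|\mathbf{v}_k\|^2=0. \tag{27}$$

Equation (24) is proven. □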
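Lemmas 1 and 2 hold for any pair of vectors, so they are easy to check directly. In the sketch below, hypothetical random vectors stand in for $\mathbf{r}_k$ and $\mathbf{v}_k=A\mathbf{u}_k$; with $\eta_k$ from Equation (23), no other step length on a grid gives a smaller residual, and the updated residual is orthogonal to $\mathbf{v}_k$ as in Equation (24). The helper name `stabilization_factor` is an illustrative choice, not from the paper.

```python
import numpy as np

def stabilization_factor(r, v):
    """Hypothetical helper: eta_k = (r_k . v_k) / ||v_k||^2, Equation (23)."""
    return (r @ v) / (v @ v)

rng = np.random.default_rng(1)
r = rng.standard_normal(8)      # stands in for r_k
v = rng.standard_normal(8)      # stands in for v_k = A u_k
eta = stabilization_factor(r, v)
r_new = r - eta * v             # residual after x_{k+1} = x_k + eta_k u_k

# Lemma 2: the new residual is orthogonal to v_k
print(abs(r_new @ v) < 1e-12)
# Lemma 1: no step length on a grid around eta_k gives a smaller residual
grid = np.linspace(eta - 2.0, eta + 2.0, 401)
print(all(np.linalg.norm(r_new) <= np.linalg.norm(r - g * v) + 1e-12 for g in grid))
```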

It follows from Equation (5) that $\eta_k=1$ is implied by GMRES. However, during the GMRES iteration, the numerical values of $\eta_k$ may deviate greatly from 1, even becoming zero or negative. Therefore, we propose a re-orthogonalized version of GMRES, namely the ROGMRES method (Algorithm 4), as follows.

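| Algorithm 4: ROGMRES |

1: Give $m$, $\mathbf{x}_0$, and $\varepsilon$ 2: Do $k=0,1,\ldots$, until $\|\mathbf{r}_k\|<\varepsilon$ 3: $\mathbf{r}_k=\mathbf{b}-A\mathbf{x}_k$ 4: $\mathbf{u}_1^k=\mathbf{r}_k/\|\mathbf{r}_k\|$ 5: $U_k$, $\bar{H}_k$ (by Arnoldi process) 6: Solve $\min_{\boldsymbol{\alpha}}\big\|\|\mathbf{r}_k\|\mathbf{e}_1-\bar{H}_k\boldsymbol{\alpha}\big\|$ for $\boldsymbol{\alpha}_k$ 7: $\mathbf{u}_k=U_k\boldsymbol{\alpha}_k$, $\mathbf{v}_k=A\mathbf{u}_k$ 8: $\mathbf{x}_{k+1}=\mathbf{x}_k+\frac{\mathbf{r}_k\cdot\mathbf{v}_k}{\|\mathbf{v}_k\|^2}\mathbf{u}_k$ |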

Correspondingly, the ROGMRES(m) Algorithm 5 is given as follows.

| Algorithm 5: ROGMRES(m) |

1: Give , , , and 2: Do 3: Do () 4: 5: 6: (by Arnoldi process) 7: Solve 8: 9: 10: If , stop 11: Otherwise, ; go to 2 |

Compared with GMRES(m) in Section 2, the computational cost of ROGMRES(m) is slightly increased by computing an extra term, $\eta_k$, at each iteration; it needs one matrix-vector product and two inner products of vectors.
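A compact sketch of Algorithm 4 (ROGMRES) follows. It is illustrative only and assumes dense NumPy arrays; the helper names `gmres_direction` and `rogmres`, the flag `reorthogonalize`, and the test problem are hypothetical. The extra cost noted above is visible in the loop: one product `A @ u` and two inner products per step. With `reorthogonalize=False`, the loop reduces to the GMRES variant of Algorithm 2 with $\mathbf{x}_0$ updated at every step, which makes a side-by-side comparison easy. The restarted ROGMRES(m) of Algorithm 5 is not reproduced here.

```python
import numpy as np

def gmres_direction(A, r, m):
    """Hypothetical helper: GMRES descent vector u = U alpha in K_m(A, r) minimizing ||r - A u||."""
    beta = np.linalg.norm(r)
    U = np.zeros((r.size, m + 1))
    H_bar = np.zeros((m + 1, m))
    U[:, 0] = r / beta
    for j in range(m):                           # Arnoldi process (Algorithm 1)
        w = A @ U[:, j]
        for i in range(j + 1):
            H_bar[i, j] = U[:, i] @ w
            w = w - H_bar[i, j] * U[:, i]
        H_bar[j + 1, j] = np.linalg.norm(w)
        if H_bar[j + 1, j] == 0.0:               # lucky breakdown
            H_bar, U = H_bar[: j + 2, : j + 1], U[:, : j + 1]
            break
        U[:, j + 1] = w / H_bar[j + 1, j]
    rhs = np.zeros(H_bar.shape[0])
    rhs[0] = beta
    alpha, *_ = np.linalg.lstsq(H_bar, rhs, rcond=None)
    return U[:, : H_bar.shape[1]] @ alpha

def rogmres(A, b, x0, m, eps=1e-10, max_steps=500, reorthogonalize=True):
    """Hypothetical sketch of Algorithm 4: x_{k+1} = x_k + eta_k u_k, eta_k from Equation (23)."""
    x = x0.copy()
    history, etas = [], []
    for _ in range(max_steps):
        r = b - A @ x
        history.append(np.linalg.norm(r))
        if history[-1] < eps:
            break
        u = gmres_direction(A, r, m)
        v = A @ u                                # one extra matrix-vector product
        eta = (r @ v) / (v @ v)                  # two inner products
        etas.append(eta)
        x = x + (eta if reorthogonalize else 1.0) * u
    return x, np.array(history), np.array(etas)

rng = np.random.default_rng(4)
n = 60
A = rng.standard_normal((n, n)) + 30.0 * np.eye(n)   # hypothetical test matrix
b = A @ np.ones(n)
x, history, etas = rogmres(A, b, np.zeros(n), m=5)
print(len(history) - 1, history[-1], etas[0])
```

Recording `etas` is also a cheap way to watch, in a given run, for the deviation of $\eta_k$ from 1 that the text associates with the loss of orthogonality in GMRES.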

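Theorem 1. For GMRES, if $\eta_k\neq1$ happens at the $k$th step, the orthogonality in Equation (5) cannot be continued after the $k$th step, i.e.,

$$\mathbf{r}_{k+1}\cdot\mathbf{v}_k\neq0; \tag{28}$$

moreover, if $\eta_k\le1/2$, the residual does not decrease, i.e.,

$$\|\mathbf{r}_{k+1}\|\ge\|\mathbf{r}_k\|. \tag{29}$$

For ROGMRES in Equation (18), the residual decreases absolutely:

$$\|\mathbf{r}_{k+1}\|<\|\mathbf{r}_k\|\quad\text{whenever}\ \mathbf{r}_k\cdot\mathbf{v}_k\neq0, \tag{30}$$

and the orthogonality holds.

Proof. Applying $A$ to $\mathbf{x}_{k+1}=\mathbf{x}_k+\mathbf{u}_k$ in Algorithm GMRES and subtracting the result from $\mathbf{b}$ yields

$$\mathbf{r}_{k+1}=\mathbf{r}_k-\mathbf{v}_k, \tag{31}$$

where $\mathbf{v}_k=A\mathbf{u}_k$.

It follows from Equation (23) that

$$\mathbf{r}_k\cdot\mathbf{v}_k=\eta_k\|\mathbf{v}_k\|^2, \tag{32}$$

which can be rearranged to

$$(\mathbf{r}_k-\mathbf{v}_k)\cdot\mathbf{v}_k=(\eta_k-1)\|\mathbf{v}_k\|^2, \tag{33}$$

and then, by Equation (31),

$$\mathbf{r}_{k+1}\cdot\mathbf{v}_k=(\eta_k-1)\|\mathbf{v}_k\|^2. \tag{34}$$

If $\eta_k\neq1$, Equation (28) follows. Taking the squared norm of Equation (31) and using Equation (23) generates

$$\|\mathbf{r}_{k+1}\|^2=\|\mathbf{r}_k\|^2-2\mathbf{r}_k\cdot\mathbf{v}_k+\|\mathbf{v}_k\|^2=\|\mathbf{r}_k\|^2-(2\eta_k-1)\|\mathbf{v}_k\|^2. \tag{35}$$

If $\eta_k\le1/2$, then $(2\eta_k-1)\|\mathbf{v}_k\|^2\le0$, and Equation (29) is proven.

For ROGMRES, taking the squared norm of Equation (26), we have

$$\|\mathbf{r}_{k+1}\|^2=\|\mathbf{r}_k\|^2-2\eta_k\mathbf{r}_k\cdot\mathbf{v}_k+\eta_k^2\|\mathbf{v}_k\|^2=\|\mathbf{r}_k\|^2-\frac{(\mathbf{r}_k\cdot\mathbf{v}_k)^2}{\|\mathbf{v}_k\|^2}. \tag{36}$$

Because $(\mathbf{r}_k\cdot\mathbf{v}_k)^2/\|\mathbf{v}_k\|^2>0$ whenever $\mathbf{r}_k\cdot\mathbf{v}_k\neq0$, Equation (30) is proven. The orthogonality $\mathbf{r}_{k+1}\cdot\mathbf{v}_k=0$ was proven in Equation (24) of Lemma 2. □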
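The identities behind Theorem 1 can be verified on a small hand-picked case. The 2-D vectors below are hypothetical data chosen so that $\eta_k<1/2$, the situation in which Theorem 1 predicts that the unscaled GMRES-type update enlarges the residual, whereas the ROGMRES update still reduces it. The printed comparisons correspond to Equations (34), (35), and (36).

```python
import numpy as np

# Hypothetical 2-D data with eta_k < 1/2
r = np.array([1.0, 0.0])        # r_k
v = np.array([0.2, 1.0])        # v_k = A u_k
eta = (r @ v) / (v @ v)         # Equation (23): 0.2 / 1.04, about 0.192

r_gmres = r - v                 # unscaled update x_{k+1} = x_k + u_k
r_ro = r - eta * v              # ROGMRES update x_{k+1} = x_k + eta_k u_k

print(np.isclose(r_gmres @ v, (eta - 1.0) * (v @ v)))                  # Equation (34)
print(np.isclose(r_gmres @ r_gmres, r @ r - (2 * eta - 1) * (v @ v)))  # Equation (35)
print(np.isclose(r_ro @ r_ro, r @ r - (r @ v) ** 2 / (v @ v)))         # Equation (36)
print(r_gmres @ r_gmres, r_ro @ r_ro)   # about 1.64 (grew from 1) vs. about 0.962 (shrank)
```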

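Corollary 1. For GMRES, the orthogonality of consecutive residual vectors is equivalent to the maximality of $h$ in Equation (22), where

$$\mathbf{r}_k\cdot\mathbf{v}_k=\|\mathbf{v}_k\|^2. \tag{37}$$

Proof. By means of Equation (34), to satisfy the orthogonality condition of the residual vector, we require $\eta_k=1$; it implies Equation (37) by the definition in Equation (23). At the same time, Equation (36) changes to

$$\|\mathbf{r}_{k+1}\|^2=\|\mathbf{r}_k\|^2-\|\mathbf{v}_k\|^2. \tag{38}$$

Inserting $\eta=1$ into Equation (22), we have

$$h=2\mathbf{r}_k\cdot\mathbf{v}_k-\|\mathbf{v}_k\|^2=\|\mathbf{v}_k\|^2=\frac{(\mathbf{r}_k\cdot\mathbf{v}_k)^2}{\|\mathbf{v}_k\|^2}, \tag{39}$$

in which $\mathbf{r}_k\cdot\mathbf{v}_k=\|\mathbf{v}_k\|^2$ from Equation (37) was used; this is the maximal value of $h$ given by Equation (36). For GMRES, obtaining the maximal value of $h$ and preserving the orthogonality are the same. □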

Remark 1. Maintaining $\eta_k=1$ in GMRES is the key to preserving the orthogonality and to maximally reducing the residual. However, the traditional GMRES cannot always maintain $\eta_k=1$ during the iteration process.

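Theorem 2. Let

$$\mathbf{u}_k'=\eta_k\mathbf{u}_k \tag{40}$$

be the descent vector of ROGMRES in

$$\mathbf{x}_{k+1}=\mathbf{x}_k+\mathbf{u}_k'. \tag{41}$$

The following identity holds:

$$\eta_k':=\frac{\mathbf{r}_k\cdot\mathbf{v}_k'}{\|\mathbf{v}_k'\|^2}=1, \tag{42}$$

where

$$\mathbf{v}_k':=A\mathbf{u}_k', \tag{43}$$

such that ROGMRES can automatically maintain the orthogonality of the residual vector and achieve the maximal decrease of the length of the residual vector.

Proof. By means of Equation (43), Equation (40) is written as $\mathbf{v}_k'=\eta_k\mathbf{v}_k$. Inserting it into Equation (42) produces (assuming $\eta_k\neq0$)

$$\eta_k'=\frac{\eta_k\,\mathbf{r}_k\cdot\mathbf{v}_k}{\eta_k^2\|\mathbf{v}_k\|^2}=\frac{\mathbf{r}_k\cdot\mathbf{v}_k}{\eta_k\|\mathbf{v}_k\|^2};$$

by means of Equation (23), it follows that $\eta_k'=1$. Thus, Equation (42) is proven. The proof of the orthogonality condition of consecutive residual vectors and the maximality of $h$ is the same as that given in Corollary 1. □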
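The self-correcting property in Theorem 2 can be seen numerically: scaling an arbitrary descent vector $\mathbf{u}_k$ by its stabilization factor $\eta_k$ yields a new vector whose own stabilization factor is exactly 1, up to rounding. The matrix and vectors below are hypothetical random data, and `stabilization_factor` is an illustrative helper name.

```python
import numpy as np

def stabilization_factor(r, v):
    """Hypothetical helper: eta = (r . v) / ||v||^2, as in Equation (23)."""
    return (r @ v) / (v @ v)

rng = np.random.default_rng(5)
n = 12
A = rng.standard_normal((n, n))
r = rng.standard_normal(n)                   # stands in for r_k
u = rng.standard_normal(n)                   # an arbitrary, non-optimal descent vector u_k
eta = stabilization_factor(r, A @ u)         # generally far from 1
u_prime = eta * u                            # ROGMRES descent vector eta_k u_k
eta_prime = stabilization_factor(r, A @ u_prime)
print(eta, eta_prime)                        # eta_prime equals 1 up to rounding
```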

Remark 2. These properties are crucial: ROGMRES automatically keeps the stabilization factor of its descent vector equal to 1, which preserves its good properties of orthogonality and maximal reduction of the residual. The method in Equation (18) can be viewed as an automatically orthogonality-preserving method. Numerical experiments will verify these points.

Theorem 3. For ROGMRES in Equation (18), the iterative point, $\mathbf{x}_k$, is located on an invariant manifold: where ; with is an increasing sequence.

Proof. We begin with the following time-invariant manifold:

where

, and

is an increasing function of

t.

We suppose that the evolution of

in time is driven by a descent vector,

:

Equation (

48) is a time-invariant manifold, such that its time differential is zero, i.e.,

where

was used.

Inserting Equation (

49) into Equation (

50) yields

Inserting

into Equation (

49) and using

, we can derive

where

By applying the forward Euler scheme to Equation (

52), we have

where

An iterative form of Equation (

54) is

It generates the residual form:

Taking the squared norms of both sides yields

Dividing both sides by

renders

where

By means of Equation (

47), Equation (

59) changes to

Equation (

61) is satisfied. In terms of

, Equation (

56) is recast to

Noticing

, Equation (

63) is just the iterative Equation (

18) for ROGMRES. □

Remark 3. The factor $\eta_k$ defined in Equation (23) plays a vital role in Equation (34), where it dominates the orthogonality of GMRES, and in Equation (35), where it controls the residual-decreasing property of GMRES. When GMRES blows up for some problems, introducing $\eta_k$ into GMRES stabilizes the iterations and avoids the blow-up. From this viewpoint, $\eta_k$ is a stabilization factor for GMRES.

Inspired by Remark 3, two simple methods can be developed for GMRES and GMRES(m): we compute $\eta_k$ at each step and consider or to be the stopping criteria of the iterations; they are labeled GMRES($\eta$) and GMRES(m,$\eta$), respectively.

Remark 4. h given in Equation (21) is a decreasing quantity of the residual, whose maximal value by means of Equation (36) is Upon comparing with in Equation (60), we have Because , the best value of that can be obtained is when ; in this situation, it will directly lead to the exact solution at the step, owing to , which is obtained by inserting into Equation (38). In this sense, can also be an objective function. correlates to , which is the quantity of the projection from on , by In the next section, we will maximize f, i.e., minimize , to find the best descent vector for .

4. Orthogonalized Maximal Projection Algorithm

As seen from Equation (

38), we must make

as large as possible.

, mentioned in

Section 1, is the projection of

on

. Therefore we consider

to be the maximal projection of

on the direction

, where

. In view of Equation (

66), the maximization of

f is equivalent to the minimization of

.

We can expand

via

which is slightly different from the descent vector

used in GMRES.

Theorem 4. For , , the optimal $\mathbf{u}$ approximately satisfying Equation (2) and subject to Equation (67) is given in Equation (68), where

Proof. Due to

and Equation (

68),

where

and

By Equation (

71), one has

where

Resorting to the maximality condition for

f in Equation (

67), we can obtain

where

From Equation (

76),

is equal to

:

because of

according to Corollary 1.

Then, it follows from Equations (

77)–(

79) that

which can be arranged to Equation (70). □

We pull back the minimization problem used in the GMRES method to the following one:

which is derived from Equations (

6) and (

7).

Theorem 5. For , derived from the minimization problem in Equation (82), $\mathbf{u}$ in Equation (2) is optimized to be that in Theorem 4.

Proof. From

and Equations (78) and (

77) it follows that

which is just Equation (70). □

According to Theorems 4 and 5, the maximal projection is equivalent to the minimization of the residual. Therefore, the maximal projection algorithm (MPA) extends GMRES from the Krylov subspace to a larger space, the affine Krylov subspace.
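The equivalence stated in Theorems 4 and 5 rests on an elementary fact: over any subspace $S$, maximizing a projection quantity of the form $(\mathbf{r}_0\cdot\mathbf{y})^2/\|\mathbf{y}\|^2$ and minimizing $\|\mathbf{r}_0-\mathbf{y}\|$ select the same direction, namely the orthogonal projection of $\mathbf{r}_0$ onto $S$. The sketch below checks this numerically for a hypothetical random basis `W`; it does not reproduce the paper's affine Krylov basis or the closed-form solution of Theorem 4.

```python
import numpy as np

rng = np.random.default_rng(3)
n, m = 30, 4
r0 = rng.standard_normal(n)
W = rng.standard_normal((n, m))          # hypothetical basis of a search space S = span(W)

def projection_quantity(y):
    """(r0 . y)^2 / ||y||^2: squared length of the projection of r0 on the direction y."""
    return (r0 @ y) ** 2 / (y @ y)

# Minimal residual over S: y_min = W c with c = argmin ||r0 - W c||
c, *_ = np.linalg.lstsq(W, r0, rcond=None)
y_min = W @ c
min_res2 = (r0 - y_min) @ (r0 - y_min)

# The minimal-residual direction attains ||r0||^2 - min ||r0 - y||^2 ...
print(np.isclose(projection_quantity(y_min), r0 @ r0 - min_res2))
# ... and no sampled direction in S gives a larger projection quantity
Y = W @ rng.standard_normal((m, 2000))
best_random = max(projection_quantity(Y[:, j]) for j in range(Y.shape[1]))
print(best_random <= projection_quantity(y_min) * (1 + 1e-12))
```

The second check is a consequence of the Cauchy–Schwarz inequality, since $\mathbf{r}_0\cdot\mathbf{y}$ equals the inner product of $\mathbf{y}$ with the projection of $\mathbf{r}_0$ onto $S$.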

By considering Lemma 1 and Theorem 5, we arrive at an improvement of ROGMRES, which is also a double improvement of GMRES, in the following iterative formulas for the orthogonalized maximal projection algorithm (OMPA):

Equation (

85) can be further simplified in the next section. The algorithms of OMPA (Algorithm 6) and OMPA(m) (Algorithm 7) are given as follows.

| Algorithm 6: OMPA |

1: Give , , and 2: Do (), until 3: 4: 5: (by Arnoldi process) 6: 7: Solve 8: 9: |

| Algorithm 7: OMPA(m) |

1: Give , , , and 2: Do 3: Do () 4: 5: 6: (by Arnoldi process) 7: 8: Solve 9: 10: 11: If , stop 12: Otherwise, ; go to 2 |

In Algorithm OMPA(m), if we take , then we return to the usual iterative algorithm with a fixed-dimension of the Krylov subspace. In Algorithm OMPA(m), is the frequency for restart.

Remark 5. In view of Equations (67) and (80), f and have the following relation: Therefore, the maximization of f is equivalent to the maximization of the length of the projection vector . By means of Equation (64), hence, follows from Equation (22). Through a double maximization, we have derived the maximal projection algorithm.

6. Examples

In this section, we apply GMRES, GMRES(m), A-GMRES, A-GMRES(m), ROGMRES, ROGMRES(m), OMPA, and MPA(m) to solve examples involving a symmetric cyclic matrix, a random matrix, an ill-posed inverse Cauchy problem, a diagonally dominant matrix, and a highly ill-conditioned matrix. All runs are subject to the convergence criterion $\|\mathbf{r}_k\|<\varepsilon$, where $\varepsilon$ is the error tolerance.

6.1. Example 1: Cyclic Matrix Linear System

A cyclic matrix,

, is generated from the first row

. The exact solution is assumed to be

, and

can be obtained from Equation (

1). First we apply GMRES to this problem with

and

. The initial values are

. For this problem, we encounter the situation that

after 150 steps, not

as shown in

Figure 1a by a solid line. As shown in

Figure 1b, the residuals blow up for GMRES.

This problem shows that the original GMRES method cannot be applied to solve this linear system, since

happened after 150 steps, and the GMRES iteration blows up after about 157 steps. In this situation, by Equation (

35),

the residual obtained by GMRES grows step by step; hence, GMRES is no longer stable after 157 steps.

To overcome this drawback of GMRES, we can either relax the convergence criterion or use the GMRES iterate obtained before it diverges.

Table 1 compares the maximum error (ME) and number of steps (NS) for different methods under

.

is used in GMRES and ROGMRES;

is used in GMRES(

);

and

are used in GMRES(m);

and

are used in ROGMRES(m) and GMRES(m,

);

and

are used in MPA(m) and A-GMRES(m). As shown in

Figure 1a by a dashed line and a dashed–dotted line, both ROGMRES and ROGMRES(m) can keep the value of

up to the termination; as shown in

Figure 1b, they converge faster than GMRES. ROGMRES(m) converges faster than ROGMRES. This example shows that ROGMRES can stabilize GMRES when it is unstable, as shown in

Figure 1b.

Interestingly, MPA(m), with a fixed value

, attains a highly accurate solution within ten steps, as shown in

Figure 1b; A-GMRES(m) obtains ME =

with 15 steps by using

. If the orthogonality is not adopted in A-GMRES(m), ME =

is obtained with 14 steps; it means that

can be kept, even though the orthogonalization technique was not employed. Within ten steps, A-GMRES with

can obtain ME =

. Within nine steps, A-GMRES(m) with

and

can obtain ME =

.

6.2. Example 2: Random Matrix Linear System

The coefficient matrix

of size

is randomly generated with

. The exact solution is

, and

can be obtained by Equation (

1).

We consider the initial values

and take

.

Table 2 compares ME and NS for different methods under

.

is used in ROGMRES, OMPA, A-GMRES, and GMRES(

);

and

are used in GMRES(m), ROGMRES(m) and GMRES(m,

). ROGMRES performs better than OMPA and A-GMRES, and the other methods are not accurate. For this example, the restarted ROGMRES(m) does not perform well.

6.3. Example 3: Inverse Cauchy Problem and Boundary Value Problem

Consider the inverse Cauchy problem:

The method of fundamental solutions is taken as

where

We consider

where

is an exact solution of the Laplace equation, and

describes the boundary contour in the polar coordinates.

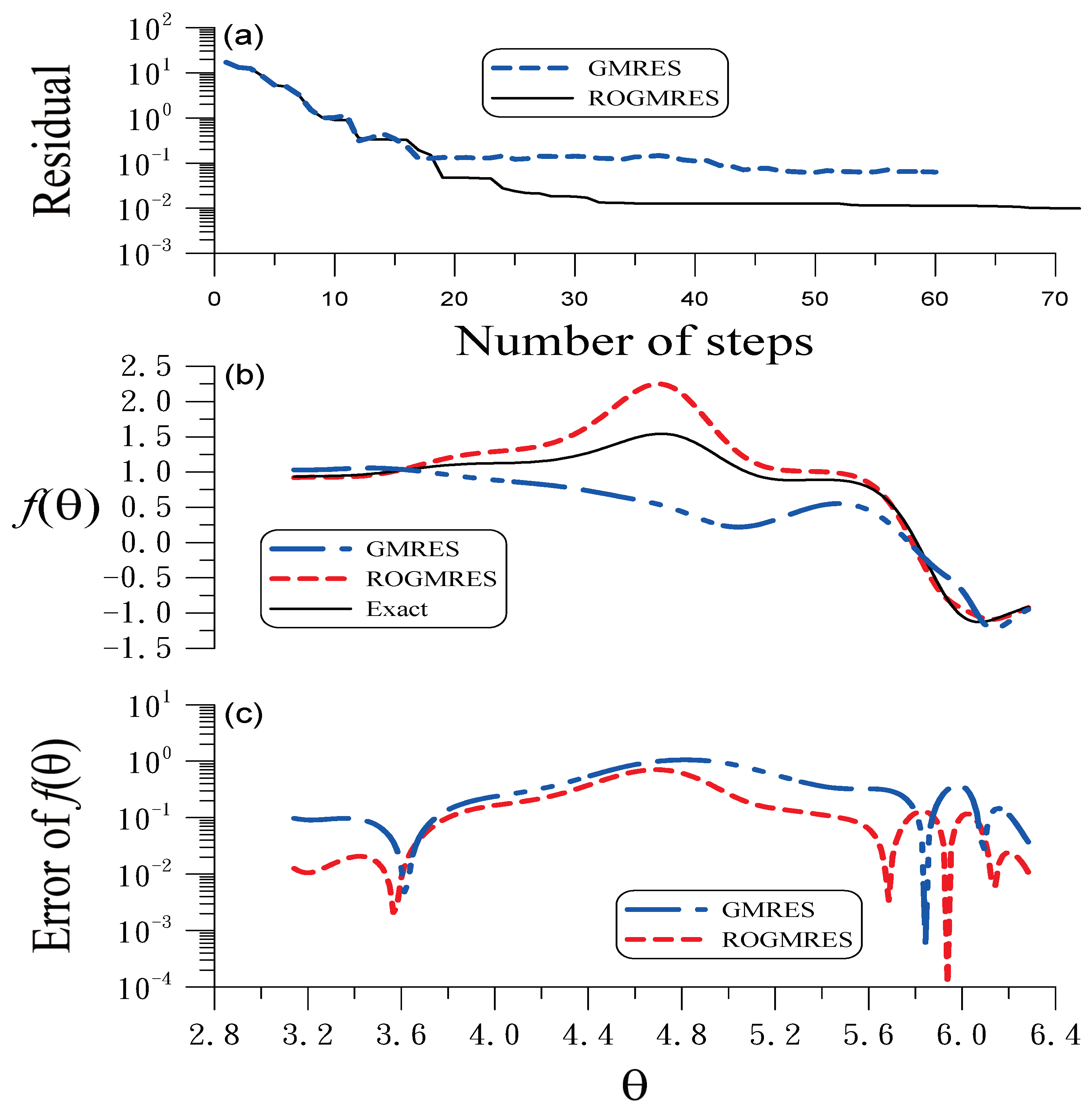

By GMRES with

, through 60 iterations the residual is shown in

Figure 2a, which does not satisfy the convergence criterion with

. If we run it to 70 steps, GMRES blows up. In contrast, ROGMRES converges in 72 steps. As compared in Figure 2b and shown in Figure 2c, the ME obtained by ROGMRES is smaller than 0.27, whereas GMRES yields a worse result with ME = 0.5.

Because the inverse Cauchy problem is highly ill-posed, GMRES does not converge even under the loose convergence criterion , and it blows up after 70 steps. Clearly, GMRES cannot solve the Cauchy problem adequately. ROGMRES is stable for the Cauchy problem and reduces the ME below 0.27; however, there is still room to improve the Krylov subspace methods for solving the Cauchy problem.

Next, we consider the mixed boundary value problem. Under

, for GMRES(m) with

and

, as shown in

Figure 3a,

after 12 steps is irregular; it causes the residuals to decrease non-monotonically, as shown in

Figure 3b. GMRES(m) does not converge within 200 steps and yields an incorrect solution with ME = 0.92. If

is used as a stopping criterion, through 14 steps, ME =

is obtained. When the ROGMRES method is applied, we obtain ME =

with 187 steps. As shown in

Figure 3a by a dashed line,

is kept well, and the residuals monotonically decrease, as shown in

Figure 3b by a dashed line for ROGMRES. ME =

is obtained through 167 steps by OMPA with

, which converges faster than ROGMRES. ME =

is obtained through 109 steps by A-GMRES with

, which converges faster than both ROGMRES and OMPA.

6.4. Example 4: Diagonal Dominant Linear System

Consider an example borrowed from Morgan [

27]. The matrix

consists of main diagonal elements from 1 to 1000; the superdiagonal elements are 0.1, and all other elements are zero. We take

.

Table 3 compares the number of steps (NS) for different methods under

.

is used in OMPA and A-GMRES;

are used in the original GMRES(m), ROGMRES(m), and the current GMRES(m). ROGMRES(m) and the current GMRES(m) require the same NS because of

for the current GMRES(m) before convergence.

Figure 4 compares the residuals.

The method in Equation (18) is an automatically orthogonality-preserving method. When OMPA and A-GMRES do not incorporate this automatic orthogonality preservation, the results are drastically different, as shown in Figure 5. Because

is not preserved, both OMPA and A-GMRES diverge very quickly after 40 steps.

6.5. Example 5: Densely Clustered Non-Symmetric Linear System

Consider an example with

, where

. Suppose that

are exact solutions, and the initial values are

. The values of matrix elements are densely clustered in a narrow range with

. This problem is a highly ill-conditioned non-symmetric linear system with the condition number over the order

.

Table 4 compares the maximal errors (MEs) and number of steps (NS) for different methods under

.

is used in OMPA and A-GMRES;

are used in current GMRES(m), and

are used in ROGMRES(m). ROGMRES(m) converges faster than the other algorithms. Even for this highly ill-conditioned problem, the proposed methods find accurate solutions efficiently.

7. Conclusions

In this paper, GMRES was re-examined from two aspects, preserving the orthogonality and maximizing the decrease in the length of the residual vector, both of which can significantly enhance the stability and accelerate the convergence of GMRES. If $\eta_k$ in GMRES is kept at 1 during the iterations, GMRES is stable; otherwise, it may lose orthogonality and become unstable. Any iterative form $\mathbf{x}_{k+1}=\mathbf{x}_k+\mathbf{u}_k$ can be improved to $\mathbf{x}_{k+1}=\mathbf{x}_k+\eta_k\mathbf{u}_k$, such that the orthogonality is preserved automatically and, simultaneously, the length of the residual vector is reduced maximally. This turns GMRES into the re-orthogonalized GMRES (ROGMRES) method, which preserves the orthogonality automatically and has the property of absolute convergence. We also derived the new iterative form from the invariant-manifold theory.

In order to find a better descent vector, we solved a maximal projection problem in a larger affine Krylov subspace to derive the descent vector. We proved that the maximal projection is equivalent to the minimal residual length in the m-dimensional affine Krylov subspace. After taking the automatically orthogonality preserving method into account, a new orthogonalized maximal projection algorithm (OMPA) method was developed. We derived formulas similar to GMRES in the affine Krylov subspace, namely the affine GMRES (A-GMRES) method, which had superior performance for the problems tested in the paper; it is even better than OMPA for some problems. The algorithm A-GMRES possessed two advantages: solving a simpler least squares problem in the affine Krylov subspace, and preserving the orthogonality automatically.

Through the studies conducted in the paper, the novelty points and main contributions are summarized as follows.

GMRES was examined from the viewpoints of maximizing the decrease of the residual and maintaining the orthogonality of consecutive residual vectors.

A stabilization factor, $\eta_k$, was inserted into GMRES to preserve the orthogonality automatically.

GMRES was improved in a larger space of the affine Krylov subspace.

A new orthogonalized maximal projection algorithm, OMPA, was derived; a new affine A-GMRES was derived.

The new algorithms ROGMRES, OMPA, and A-GMRES guarantee absolute convergence.

Through examples, we showed that the orthogonalization techniques are useful to stabilize the methods of GMRES, MPA, and A-GMRES.

Numerical tests on five different problems confirmed that ROGMRES, ROGMRES(m), OMPA, OMPA(m), A-GMRES, and A-GMRES(m) can significantly accelerate the convergence compared with the original GMRES and GMRES(m).