Abstract

Prediction of intrinsically disordered proteins is an active area of bioinformatics. Because determining the disordered regions of protein sequences experimentally is costly, we propose a prediction scheme with low computational complexity. The scheme uses sequence complexity to calculate five features for each residue of a protein sequence: the Shannon entropy, the topological entropy, the permutation entropy and the weighted average values of two amino acid propensities. To our knowledge, this is the first time that permutation entropy has been applied to disordered protein prediction. In the data preprocessing stage, an appropriately sized sliding window and a comprehensive oversampling scheme are used to improve prediction performance, and two ensemble learning algorithms are used to evaluate the prediction results. The results show that adding permutation entropy improves the performance of both prediction algorithms; in our scheme, the MCC value increases from 0.465 to 0.526. Finally, we compare our results with those of several existing schemes to demonstrate the effectiveness of our scheme.

1. Introduction

Proteins are the most abundant organic compounds in the human body and the main carriers of life activities. The “amino acid sequence → three-dimensional structure → protein function” paradigm has long been generally accepted [1]. However, over the past few decades, researchers have found that not all proteins adopt a fixed three-dimensional structure along their entire sequence, and a growing number of proteins lacking a specific three-dimensional structure have been discovered [2]. These proteins lack a stable three-dimensional structure in at least one region yet still perform normal biological functions; they are therefore called intrinsically disordered proteins (IDPs). IDPs play important roles in physiological processes such as transcription and translation [3]. Studies have shown that disordered proteins are associated with several major human diseases: IDP dysfunction may contribute to heart disease, Parkinson’s disease, neurological disorders, cancer and other conditions [4,5,6,7,8]. For example, the first pathogenic mutation in SNCA, the gene encoding α-synuclein, was discovered in cases of familial Parkinson’s disease [9], and some of these point mutations cause Parkinson’s disease with high penetrance. In Alzheimer’s disease, the severity of cognitive impairment was later shown to correlate better with low-molecular-weight, soluble amyloid-β aggregates; when amyloid-β is highly disordered in shape, it is actually less likely to stick together and form the toxic clusters that lead to brain cell death [10]. Numerous biophysical studies have also shown that type 2 diabetic islets are characterized by islet amyloid derived from islet amyloid polypeptide (IAPP), a protein co-expressed with insulin by β-cells that, when misfolded and aggregated, may lead to β-cell failure [11].
Consequently, disordered proteins have attracted increasing attention in recent years, and research on their characteristics, functions and prediction has advanced considerably.

Over the past few decades, many schemes for predicting IDPs have emerged. They can be roughly divided into two categories: physicochemical-based and computation-based. The first category detects IDPs using amino acid propensity scales and physicochemical properties of the protein sequence; examples include GlobPlot [12], IUPred [13], FoldIndex [14] and IsUnstruct [15]. The second category trains on disordered (positive) and ordered (negative) samples, combines various features, and makes predictions with machine learning classifiers such as support vector machines (SVM), naive Bayes (NB), k-nearest neighbors (KNN) and decision trees (DT). These schemes include DISOPRED3 [16], SPINE-D [17], ESpritz [18] and MetaDisorder [19]. DISOPRED3 computes the position-specific scoring matrix (PSSM) of all residues with three iterations of PSI-BLAST and predicts disordered regions and protein-binding sites using SVM classifiers. SPINE-D uses a neural network to predict disordered regions: it first makes a ternary prediction for every residue (ordered, in a short disordered region, or in a long disordered region), then reduces this to a binary prediction, training on both short and long disordered regions. Hybrid schemes integrate several individual predictors and exploit the complementary strengths of each to improve prediction accuracy. For example, MetaDisorder integrates DISOPRED2 [20], GlobPlot, IUPred, PrDOS [21], POODLE [22], DISPI [23], RONN [24] and others, so that its predictions score higher than those of any single predictor.

Most of the above IDP prediction schemes use a large number of features, which leads to high computational complexity and makes efficient prediction on large data sets difficult. Disordered proteins often contain repetitive regions in their amino acid sequences and therefore have lower sequence complexity than ordered proteins [25]. We propose a new feature extraction scheme based on sequence complexity that uses five features: Shannon entropy, topological entropy, permutation entropy and two amino acid preferences. With the proposed preprocessing strategy, the selected features better reflect the characteristics of disordered regions, and the scheme has lower computational complexity than existing prediction schemes. Finally, two boosting algorithms are used to verify the feasibility of the scheme.

The specific steps of the scheme are as follows:

Step 1: Download 2209 intrinsically disordered protein sequences from the latest release of DisProt (https://www.disprot.org/, accessed on 6 October 2021). The data set contains 1,217,223 amino acid residues, of which 995,189 are ordered and 222,034 are disordered.

Step 2: Because the genes encoding disordered proteins differ in nucleotide composition from those encoding ordered proteins, the amino acid sequences of disordered proteins show a more pronounced compositional bias; in particular, disordered proteins contain fewer hydrophobic residues than ordered proteins. We therefore map the 20 amino acids to the numbers 0 and 1, and use the resulting binary sequence to calculate the topological and permutation entropies.

Step 3: Select a suitable sliding window, calculate the Shannon entropy, topological entropy, permutation entropy and two amino acid preferences for each residue, and obtain a 1,217,223 × 5 data set, DIS2209.

Step 4: Because the data samples are imbalanced, we apply three oversampling schemes and select the best-performing comprehensive sampling scheme. We then use ten-fold cross-validation, in which a random 90% of the DIS2209 data set is used for training and the remaining 10% for testing, use grid search to find the optimal parameter combination of the learner, and finally calculate the four metrics we need: sensitivity (Sens), specificity (Spec), F1 score (F1) and Matthews correlation coefficient (MCC).

Step 5: Compare our scheme with existing schemes.
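The parameter search in Step 4 can be sketched as an exhaustive grid search that scores every hyperparameter combination with a cross-validation callback. This is a minimal, library-free sketch under stated assumptions: `grid_search`, the `evaluate` callback and the toy parameter names are hypothetical and do not reproduce the grid actually searched in this work.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple


def grid_search(
    param_grid: Dict[str, List],
    evaluate: Callable[[Dict], float],
) -> Tuple[Dict, float]:
    """Try every combination in param_grid and return the best one and its score.

    `evaluate` is assumed to train the learner with the given parameters and return a
    cross-validated score (for example the mean MCC over ten folds); higher is better.
    """
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Toy usage: a made-up scoring function stands in for ten-fold cross-validation.
grid = {"num_trees": [50, 100, 200], "max_depth": [3, 5, 7]}
toy_score = lambda p: -abs(p["num_trees"] - 100) - abs(p["max_depth"] - 5)
best, score = grid_search(grid, toy_score)
print(best, score)  # {'max_depth': 5, 'num_trees': 100} 0
```

The cost grows with the product of the grid sizes, which is one reason a low-dimensional feature set keeps each evaluation cheap.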

2. Feature Selection and Preprocessing Process

The amino acid sequences of disordered proteins often contain repeated regions, so their sequence complexity is lower than that of ordered proteins. Based on this characteristic, we use Shannon entropy, topological entropy, permutation entropy and two amino acid preferences to describe the sequence complexity of proteins. These features are described in detail below.

2.1. Shannon Entropy

The Shannon entropy [26] is a standard measure of the order state of a sequence and has previously been applied to protein sequences; it quantifies the uncertainty of the distribution of symbols in the sequence. For a protein sequence S of length N, its Shannon entropy can be expressed as:

$$H(S) = -\sum_{i=1}^{20} p_i \log_2 p_i \qquad (1)$$

where p_i (i = 1, 2, …, 20) is the frequency of the i-th amino acid in the sequence:

$$p_i = \frac{n_i}{N} \qquad (2)$$

and n_i is the number of occurrences of the i-th amino acid. When p_i = 0, the term p_i log_2 p_i is taken as 0.
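Equations (1) and (2) can be computed directly from residue counts. A minimal sketch, assuming a base-2 logarithm (the base only rescales the result) and a plain one-letter sequence string; `shannon_entropy` is a hypothetical helper name.

```python
import math
from collections import Counter


def shannon_entropy(seq: str) -> float:
    """Shannon entropy H = -sum_i p_i log2 p_i of the residue composition of `seq`.

    p_i = n_i / N is the relative frequency of amino acid i. Amino acids that do not occur
    are simply absent from the counts, which matches the convention 0 * log 0 = 0.
    Written as p * log2(1/p) so every term is non-negative.
    """
    n = len(seq)
    if n == 0:
        return 0.0
    counts = Counter(seq)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


print(shannon_entropy("AAAA"))                  # 0.0: one repeated residue, no uncertainty
print(shannon_entropy("ACDEFGHIKLMNPQRSTVWY"))  # about 4.32 = log2(20), the maximum
```

Low values indicate compositionally biased, low-complexity stretches, which is the property the scheme exploits.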

2.2. Topological Entropy

Topological entropy [27,28,29,30] also reflects the complexity of protein sequences well. For a protein sequence w of length N, the complexity function

$$p_w(n) = \left|\{u : |u| = n,\ u \text{ is a subword of } w\}\right| \qquad (3)$$

is the total number of distinct subwords u of length n in w, where a subword is a run of n consecutive residues of w (1 ≤ n ≤ N). For example, for w = ACAC and n = 2, the subwords of length 2 are AC, CA and AC, so p_w(2) = 2.

For a sequence of length N and a subword length n, the following condition must be satisfied:

$$20^n + n - 1 \le N \qquad (4)$$

Denote the first segment of w consisting of 20^n + n − 1 consecutive characters as w_1^{20^n+n-1}, namely:

$$w_1^{20^n+n-1} = w_1 w_2 \cdots w_{20^n+n-1} \qquad (5)$$

Then the topological entropy of the protein sequence can be expressed as:

$$H_{top}(w) = \frac{\log_{20} p_{w_1^{20^n+n-1}}(n)}{n} \qquad (6)$$

where p_{w_1^{20^n+n-1}}(n), calculated by Equation (3), is the number of distinct subwords of length n contained in the first segment of length 20^n + n − 1 of w. If this segment contains all 20^n possible subwords of length n, then H_top(w) = 1; if it contains only one subword of length n, then H_top(w) = 0.

To refine this estimate, we traverse the sequence, compute the topological entropy of every segment of length 20^n + n − 1, and take the average as the final topological entropy:

$$\overline{H}_{top}(w) = \frac{1}{N - 20^n - n + 2} \sum_{i=1}^{N - 20^n - n + 2} \frac{\log_{20} p_{w_i^{\,i+20^n+n-2}}(n)}{n} \qquad (7)$$

However, Equation (7) requires the protein sequence to be longer than 400 residues even for n = 2, which exceeds the length of most protein sequences. Therefore, exploiting the fact that disordered proteins contain few hydrophobic residues [31], we map the sequence to 0 and 1: hydrophobic residues (I, L, V, F, W, Y) are mapped to 1 and all other residues to 0, as shown in Table 1. Equation (7) then becomes:

$$\overline{H}_{top}(w) = \frac{1}{N - 2^n - n + 2} \sum_{i=1}^{N - 2^n - n + 2} \frac{\log_{2} p_{w_i^{\,i+2^n+n-2}}(n)}{n} \qquad (8)$$
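The binary mapping and the segment-averaged topological entropy for a two-letter alphabet can be sketched as follows. This is an illustrative sketch, not the authors' code: `to_binary` and `topological_entropy` are hypothetical names, and the averaging over every length-(2^n + n − 1) segment follows the traversal described above.

```python
import math

# Hydrophobic residues are mapped to 1 and all other residues to 0 (the Table 1 mapping).
HYDROPHOBIC = set("ILVFWY")


def to_binary(seq: str) -> str:
    """Map a protein sequence to a 0/1 string: I, L, V, F, W, Y -> '1', all others -> '0'."""
    return "".join("1" if aa in HYDROPHOBIC else "0" for aa in seq.upper())


def topological_entropy(bits: str, n: int) -> float:
    """Segment-averaged topological entropy of a binary string for subword length n.

    Each segment has length 2**n + n - 1, the shortest length that can contain all 2**n
    binary subwords of length n. For every segment we count the distinct length-n
    subwords p(n), compute log2(p(n)) / n, and return the mean over all segments.
    """
    seg_len = 2 ** n + n - 1
    if len(bits) < seg_len:
        raise ValueError(f"need at least {seg_len} symbols for n={n}")
    values = []
    for start in range(len(bits) - seg_len + 1):
        segment = bits[start:start + seg_len]
        distinct = {segment[i:i + n] for i in range(seg_len - n + 1)}
        values.append(math.log2(len(distinct)) / n)
    return sum(values) / len(values)


print(to_binary("MKVLAAGIF"))                 # 001100011
print(topological_entropy("0000000000", 3))   # 0.0: only the subword '000' occurs
print(topological_entropy("0001011100", 3))   # 1.0: all 8 binary subwords of length 3 occur
```

With the binary alphabet, n = 3 needs only 10 residues per segment instead of the 20^3 + 2 required by Equation (7).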

Table 1.

Mapping values of topological entropy.

2.3. Permutation Entropy

To better capture the complexity of protein sequences, we introduce permutation entropy, which, to our knowledge, has not previously been used for IDP prediction. Permutation entropy measures complexity from the ordinal patterns (permutations) of reconstructed subsequences, and it can be calculated for arbitrary real-world time series. Because the method is extremely fast and robust, it is preferable when there are huge data sets and no time for preprocessing and fine-tuning of parameters [32].

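Given a protein sequence X = {x(1), x(2), …, x(N)} of length N, specify an embedding dimension m and a time delay τ to reconstruct the original sequence:

$$\begin{bmatrix} x(1) & x(1+\tau) & \cdots & x(1+(m-1)\tau) \\ x(2) & x(2+\tau) & \cdots & x(2+(m-1)\tau) \\ \vdots & \vdots & \ddots & \vdots \\ x(K) & x(K+\tau) & \cdots & x(K+(m-1)\tau) \end{bmatrix}$$

Each row of the matrix is a reconstructed subsequence, and there are K = N − (m − 1)τ reconstructed subsequences in total. The j-th reconstructed subsequence of X is X(j) = {x(j), x(j + τ), …, x(j + (m − 1)τ)}. Sort its elements in ascending order of value:

$$x(j + (k_1 - 1)\tau) \le x(j + (k_2 - 1)\tau) \le \cdots \le x(j + (k_m - 1)\tau)$$

If two values are equal, i.e., x(j + (k_p − 1)τ) = x(j + (k_q − 1)τ), they are ordered by their indices, with the smaller index first. In this way, the subsequence X(j) is mapped to the index pattern (k_1, k_2, …, k_m). Therefore, each row of the reconstructed matrix yields a symbol sequence:

$$S(l) = (k_1, k_2, \ldots, k_m)$$

where l = 1, 2, …, L and L ≤ m!, so every m-dimensional subsequence is mapped to one of the m! permutations.

Through the above steps, each m-dimensional subsequence is represented by a symbol sequence. The probability distribution of the L distinct symbol sequences is P_1, P_2, …, P_L, where Σ_{l=1}^{L} P_l = 1.

Finally, the permutation entropy of the protein sequence is calculated as:

$$H_{PE}(m) = -\sum_{l=1}^{L} P_l \ln P_l$$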

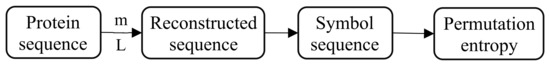

For ease of calculation, we represent the 20 amino acids of the protein sequence by 0 and 1, as shown in Table 1. If the embedding dimension m is too small, the reconstructed vectors contain too few states and the subsequences lose their validity and meaning. If m is too large, the protein sequence is homogenized, the amount of calculation increases, and the subtle local variations of the sequence are no longer reflected. Therefore, m is generally chosen between 3 and 7. The influence of the time delay τ can usually be ignored. The overall calculation process is shown in Figure 1.
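The procedure above (embedding, ordinal patterns with ties broken by index, then the entropy of the pattern distribution) can be sketched in a few lines. This sketch assumes the input has already been mapped to numbers (for example the 0/1 mapping of Table 1) and uses the natural logarithm as in Bandt and Pompe [32]; `permutation_entropy` is a hypothetical helper, and no normalization by ln(m!) is applied.

```python
import math
from collections import Counter
from typing import Sequence


def permutation_entropy(x: Sequence[float], m: int = 3, tau: int = 1) -> float:
    """Permutation entropy H = -sum_l P_l ln P_l of a numeric sequence.

    Each embedded vector (x[j], x[j+tau], ..., x[j+(m-1)tau]) is replaced by the order of
    positions that sorts it ascending; equal values are ordered by position.
    """
    k = len(x) - (m - 1) * tau  # number of reconstructed subsequences K
    if k <= 0:
        raise ValueError("sequence too short for the chosen m and tau")
    patterns = Counter()
    for j in range(k):
        window = [x[j + i * tau] for i in range(m)]
        # sort positions by value, then by position, so ties keep their index order
        pattern = tuple(sorted(range(m), key=lambda i: (window[i], i)))
        patterns[pattern] += 1
    # -sum P ln P written as sum P ln(1/P) so each term is non-negative
    return sum((c / k) * math.log(k / c) for c in patterns.values())


print(permutation_entropy([0] * 10, m=3))            # 0.0: every window has the same pattern
print(permutation_entropy([0, 1, 0, 1, 0, 1], m=3))  # about 0.693 (ln 2): two equally frequent patterns
```

On binary input many windows contain ties, so the index-based tie-breaking rule determines which of the m! patterns can actually occur.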

Figure 1.

Calculation process of permutation entropy.

2.4. Two Amino Acid Preferences

In addition to the three entropies above, we add two amino acid propensity indicators, Remark465 and Deleage/Roux, both given in the GlobPlot article in Nucleic Acids Research [12], to characterize the protein sequence. We use Equation (11) to calculate the values of the two amino acid preferences:

Here the mapping values of the k-th amino acid preference (k = 1, 2, corresponding to Remark465 and Deleage/Roux, respectively) are listed in Table 2.

Table 2.

Mapping values of amino acids according to the k-th preference.

2.5. Preprocessing Process

Training directly on the feature values computed for whole sequences gives unsatisfactory predictions, so we use a sliding window that moves along the sequence, computes the five selected features within each window, and assigns them to all residues at the corresponding positions.

Given a protein sequence of length N, we select a sliding window of length w and pad both ends of the sequence with zeros. As the window slides, we calculate the five-dimensional feature vector of each window (Shannon entropy, topological entropy, permutation entropy and two amino acid preferences) and add it to every residue in the window. Finally, dividing the accumulated value of each residue by the number of accumulations gives the five-dimensional feature vector of that residue:

$$\mathbf{F}(i) = \frac{1}{c_i} \sum_{j:\, i \in W_j} \mathbf{f}(W_j), \qquad i = 1, 2, \ldots, N$$

where W_j is the j-th window, f(W_j) is its five-dimensional feature vector, and c_i is the number of windows that contain residue i.
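The accumulate-then-average step can be sketched generically, with the window feature passed in as a function. This is a sketch under assumptions: the padding of w // 2 zeros on each side (for odd w) is our choice, since the text only says that zeros are added at both ends, and `window_average_features` is a hypothetical name; in the scheme, `feature` would return the five features of a window.

```python
from typing import Callable, List, Sequence


def window_average_features(
    values: Sequence[float],
    w: int,
    feature: Callable[[List[float]], List[float]],
) -> List[List[float]]:
    """Per-residue feature vectors averaged over all windows that cover each residue.

    values  : numeric encoding of the residues (e.g. the 0/1 mapping or a propensity scale)
    w       : odd window length; w // 2 zeros are padded on each side (assumed convention)
    feature : maps one window to its feature vector
    """
    n = len(values)
    half = w // 2
    padded = [0.0] * half + list(values) + [0.0] * half
    sums: List[List[float]] = [[] for _ in range(n)]
    counts = [0] * n
    for start in range(len(padded) - w + 1):
        f = feature(padded[start:start + w])
        # add this window's feature vector to every real (non-padding) residue it covers
        for p in range(start, start + w):
            i = p - half
            if 0 <= i < n:
                if not sums[i]:
                    sums[i] = [0.0] * len(f)
                for d, v in enumerate(f):
                    sums[i][d] += v
                counts[i] += 1
    # divide each residue's accumulated sum by the number of windows that covered it
    return [[s / counts[i] for s in sums[i]] for i in range(n)]


mean_feature = lambda win: [sum(win) / len(win)]
result = window_average_features([1, 1, 1, 1, 1], w=3, feature=mean_feature)
print([round(v[0], 3) for v in result])  # [0.833, 0.889, 1.0, 0.889, 0.833]
```

Residues near the termini are covered by fewer windows and see the zero padding, which is why their averaged values are lower in this toy example.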

In the DIS2209 data set, 995,189 residues are ordered and only 222,034 are disordered, so the positive and negative samples are imbalanced. We therefore add an oversampling step that generates new minority-class samples according to the distribution of the existing ones, so that the data become balanced and the predictions more accurate. After comparing several existing oversampling schemes, we adopted SMOTE oversampling. The specific steps are as follows:

Step 1: For each minority-class sample x, compute its Euclidean distance to all samples in the minority-class set to obtain its k nearest neighbors.

Step 2: Set a sampling ratio according to the class imbalance ratio to determine the sampling rate. For each minority sample x, randomly select several samples from its k nearest neighbors; denote a selected neighbor by x_n.

Step 3: For each randomly selected neighbor x_n, a new sample is constructed as:

$$x_{new} = x + \mathrm{rand}(0, 1) \times (x_n - x)$$

where rand(0, 1) is a random number between 0 and 1.
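Steps 1 to 3 can be sketched in plain Python as follows. This is an illustrative sketch: `smote` and its `rate` parameter (the number of synthetic samples per minority sample) are hypothetical names, and the brute-force neighbor search is only suitable for small inputs. In practice, the imbalanced-learn package provides `imblearn.over_sampling.SMOTE` with a `k_neighbors` parameter.

```python
import math
import random
from typing import List, Sequence


def smote(minority: Sequence[Sequence[float]], rate: int, k: int = 5,
          seed: int = 0) -> List[List[float]]:
    """Create `rate` synthetic samples per minority sample.

    Step 1: find the k nearest minority neighbors of each sample (Euclidean distance).
    Step 2: for each sample x, pick a neighbor x_n at random, `rate` times.
    Step 3: new sample = x + rand(0, 1) * (x_n - x).
    """
    data = [list(map(float, p)) for p in minority]
    if len(data) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    k = min(k, len(data) - 1)
    rng = random.Random(seed)
    # Step 1: k nearest neighbors of every minority sample, excluding the sample itself
    neighbors = []
    for i, x in enumerate(data):
        dists = sorted((math.dist(x, y), j) for j, y in enumerate(data) if j != i)
        neighbors.append([j for _, j in dists[:k]])
    synthetic = []
    for i, x in enumerate(data):
        for _ in range(rate):
            x_n = data[rng.choice(neighbors[i])]  # Step 2
            gap = rng.random()  # rand(0, 1)
            synthetic.append([a + gap * (b - a) for a, b in zip(x, x_n)])  # Step 3
    return synthetic


minority = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
new_samples = smote(minority, rate=2, k=2)
print(len(new_samples))  # 8: two synthetic samples per minority sample
```

Because every synthetic point lies on a segment between two real minority samples, SMOTE interpolates within the minority region instead of duplicating existing points.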

3. Algorithm Scheme

Common remedies for imbalanced data include sampling (oversampling or undersampling), cost-sensitive learning and ensemble learning. As mentioned above, we use oversampling to increase the number of minority-class samples and balance the data. However, the learner then encounters many similar samples and may learn an overly specific pattern, which greatly increases the risk of overfitting. Ensemble learning combines multiple base classifiers and takes into account the uncertainty and possible misclassification of samples.

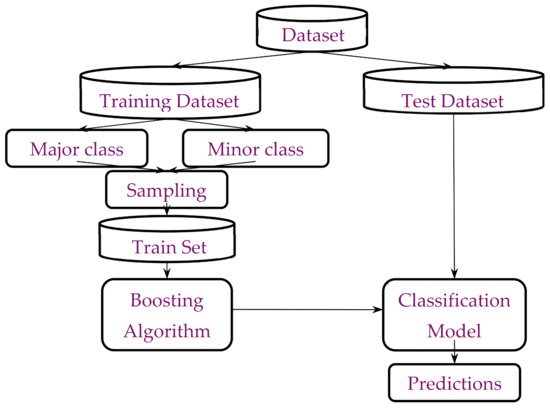

Therefore, we preprocess the data with oversampling and predict the DIS2209 data set with ensemble learning (boosting) algorithms. Figure 2 shows the flow chart of the scheme.

Figure 2.

Flow chart of the proposed scheme.

3.1. Gradient Boosting Decision Tree

Gradient Boosting Decision Tree (GBDT) is a classification and regression algorithm that uses an additive model (a linear combination of basis functions) and, in each round, fits the negative gradient of the loss function as an approximation of the remaining loss.

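For a given sample set {(x_1, y_1), (x_2, y_2), …, (x_N, y_N)}, first initialize the learner with the constant that minimizes the loss L:

$$f_0(x) = \arg\min_{c} \sum_{i=1}^{N} L(y_i, c)$$

For iterations t = 1, 2, …, T and samples i = 1, 2, …, N, calculate the negative gradient:

$$r_{ti} = -\left[\frac{\partial L(y_i, f(x_i))}{\partial f(x_i)}\right]_{f(x) = f_{t-1}(x)}$$

Fit a regression tree to (x_i, r_{ti}) to obtain the leaf regions R_{tj} (j = 1, 2, …, J) of the t-th tree, where J is the number of leaf nodes. For each leaf, the value that minimizes the loss over the samples in it is the best output value of that leaf:

$$c_{tj} = \arg\min_{c} \sum_{x_i \in R_{tj}} L\big(y_i, f_{t-1}(x_i) + c\big)$$

Update the strong learner for this round:

$$f_t(x) = f_{t-1}(x) + \sum_{j=1}^{J} c_{tj}\, I(x \in R_{tj})$$

Finally, obtain the learner:

$$f(x) = f_T(x) = f_0(x) + \sum_{t=1}^{T} \sum_{j=1}^{J} c_{tj}\, I(x \in R_{tj})$$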

Fitting the negative gradient of the loss function gives a general way to fit the loss error, so GBDT can solve both classification and regression problems in the same way. The only difference lies in the negative gradients induced by different loss functions.
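The GBDT recursion can be illustrated on a one-dimensional regression toy problem. This sketch makes several simplifying assumptions that are not part of the scheme: squared loss (so the negative gradient is the ordinary residual and the optimal leaf value c_tj is the mean residual), depth-1 trees with J = 2 leaves, and a shrinkage factor `learning_rate` (the equations above correspond to `learning_rate=1.0`). For real training, a library implementation such as scikit-learn's `GradientBoostingClassifier` or LightGBM's `LGBMClassifier` would be used.

```python
from statistics import mean
from typing import Callable, List, Sequence


def fit_stump(xs: Sequence[float], residuals: Sequence[float]) -> Callable[[float], float]:
    """Fit a one-split regression tree (J = 2 leaves) to the residuals by least squares.

    For squared loss, the optimal leaf output c_tj is the mean residual in the leaf.
    """
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        thr = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= thr]
        right = [r for x, r in zip(xs, residuals) if x > thr]
        c_left, c_right = mean(left), mean(right)
        sse = sum((r - c_left) ** 2 for r in left) + sum((r - c_right) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, thr, c_left, c_right)
    if best is None:  # all x identical: a single leaf
        c = mean(residuals)
        return lambda x: c
    _, thr, c_left, c_right = best
    return lambda x: c_left if x <= thr else c_right


def gbdt_fit(xs: Sequence[float], ys: Sequence[float], n_rounds: int = 50,
             learning_rate: float = 0.1) -> Callable[[float], float]:
    f0 = mean(ys)  # f_0 = argmin_c sum L(y_i, c), which is the mean for squared loss
    trees: List[Callable[[float], float]] = []
    preds = [f0] * len(ys)
    for _ in range(n_rounds):
        # negative gradient of L = (y - f)^2 / 2 is the residual y - f_{t-1}(x)
        residuals = [y - p for y, p in zip(ys, preds)]
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        preds = [p + learning_rate * tree(x) for p, x in zip(preds, xs)]

    def predict(x: float) -> float:
        return f0 + learning_rate * sum(tree(x) for tree in trees)

    return predict


predict = gbdt_fit([1, 2, 3, 4, 5, 6], [0, 0, 0, 1, 1, 1])
print(round(predict(1), 3), round(predict(6), 3))  # 0.003 0.997
```

Swapping the squared loss for the logistic loss changes only the residual line and the leaf values, which is the point made above.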

3.2. LightGBM

LightGBM is a framework that implements the GBDT algorithm. It optimizes the traditional GBDT algorithm to speed up training without compromising accuracy, which further improves the accuracy of IDP prediction in our setting. The specific optimizations are:

- A histogram-based decision tree algorithm, which reduces memory usage and computation time by discretizing features into bins.

- A leaf-wise growth strategy with a depth limit: at each step, the leaf with the largest split gain among all current leaves is split, which reduces the loss more than level-wise growth for the same number of splits, while the maximum-depth limit controls model complexity and reduces the risk of overfitting.

- Gradient-based one-side sampling (GOSS), which keeps all samples with large gradients, randomly samples a fraction of the samples with small gradients, and computes the information gain from the retained samples only. This balances a reduced amount of data against accuracy.

- Exclusive feature bundling (EFB), which bundles many mutually exclusive sparse features (features that are rarely nonzero at the same time) into fewer dense features, avoiding unnecessary computation on zero values.

- Efficient parallel learning, including feature parallel, data parallel and voting parallel modes.
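The sampling step of GOSS can be sketched on its own. This is a simplified illustration, not LightGBM's implementation: `goss_sample` is a hypothetical helper that returns the retained sample indices with their weights, and the small-gradient samples are up-weighted by (1 − top_rate) / other_rate to keep the gain estimate roughly unbiased. In LightGBM itself, the two ratios are exposed as the `top_rate` and `other_rate` parameters.

```python
import random
from typing import List, Sequence, Tuple


def goss_sample(gradients: Sequence[float], top_rate: float = 0.2,
                other_rate: float = 0.1, seed: int = 0) -> List[Tuple[int, float]]:
    """Gradient-based one-side sampling: return (sample index, weight) pairs.

    The top_rate fraction of samples with the largest |gradient| is always kept with
    weight 1; an other_rate fraction (of all samples) is drawn at random from the rest
    and up-weighted by (1 - top_rate) / other_rate.
    """
    n = len(gradients)
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)
    n_top = int(top_rate * n)
    n_other = int(other_rate * n)
    rng = random.Random(seed)
    rest = order[n_top:]
    sampled = rng.sample(rest, min(n_other, len(rest)))
    weight = (1 - top_rate) / other_rate
    return [(i, 1.0) for i in order[:n_top]] + [(i, weight) for i in sampled]


grads = [i / 100 for i in range(100)]
picked = goss_sample(grads, top_rate=0.2, other_rate=0.1)
print(len(picked))  # 30: 20 large-gradient samples + 10 sampled small-gradient ones
```

Only 30% of the samples are then used to evaluate candidate splits, which is where the speed-up comes from.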

4. Performance Evaluation

We use four metrics to evaluate model performance: sensitivity (Sens), specificity (Spec), F1-score (F1) and Matthews correlation coefficient (MCC). Sens, Spec and MCC are commonly used to evaluate predictions in bioinformatics [33,34]; we add the F1-score to balance the precision and recall of the classifier. The mathematical definitions of the four metrics are as follows:

Sensitivity:

$$\mathrm{Sens} = \frac{TP}{TP + FN}$$

Specificity:

$$\mathrm{Spec} = \frac{TN}{TN + FP}$$

F1-Score:

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where Precision = TP/(TP + FP) and Recall = TP/(TP + FN) = Sens.

Matthews correlation coefficient:

$$\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$$

In all the above formulas, TP is the number of actual disordered residues predicted as disordered, FP is the number of actual ordered residues predicted as disordered, TN is the number of actual ordered residues predicted as ordered, and FN is the number of actual disordered residues predicted as ordered.
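The four metrics follow directly from the confusion-matrix counts. A minimal sketch, assuming residue labels with 1 = disordered (positive) and 0 = ordered; `evaluate` is a hypothetical helper, and returning 0.0 when a denominator is zero is our convention, not part of the definitions.

```python
import math
from typing import Dict, Sequence


def evaluate(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Sens, Spec, F1 and MCC for residue labels (1 = disordered, 0 = ordered)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    sens = tp / (tp + fn) if tp + fn else 0.0
    spec = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * sens / (precision + sens) if precision + sens else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"Sens": sens, "Spec": spec, "F1": f1, "MCC": mcc}


# 60 disordered residues (50 predicted correctly), 40 ordered residues (30 predicted correctly)
y_true = [1] * 60 + [0] * 40
y_pred = [1] * 50 + [0] * 10 + [0] * 30 + [1] * 10
print(evaluate(y_true, y_pred))
```

Here TP = 50, FN = 10, TN = 30 and FP = 10, so MCC = (1500 − 100)/2400 ≈ 0.583. Unlike F1, MCC uses all four counts, which makes it more informative on imbalanced residue data.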

5. Results and Discussion

5.1. The Effect of Permutation Entropy

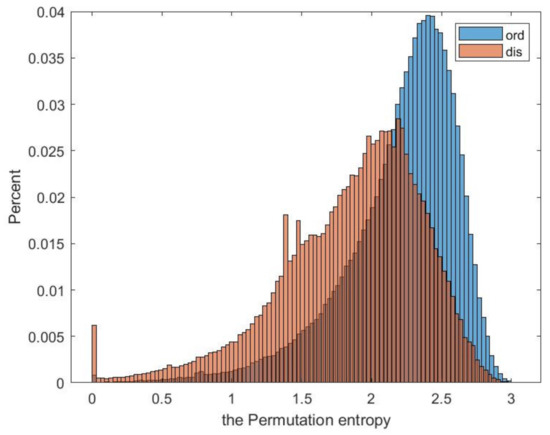

In this article, we use permutation entropy to describe sequence complexity. Experimental studies have shown that low-complexity protein regions are often disordered. Permutation entropy has not previously been used to predict IDPs; this study is the first to do so. We calculated the permutation entropy of all ordered and disordered regions in the DIS2209 data set, and their probability density distributions are shown in Figure 3. There is a clear difference between ordered and disordered regions.

Figure 3.

Probability density distributions of permutation entropy for ordered and disordered regions.

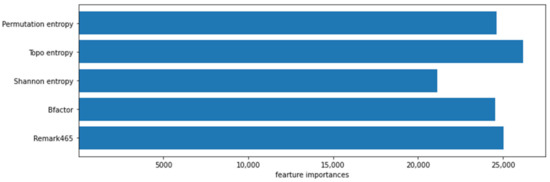

In classification, the feature importance scores of a predictive model show which features matter to the model and which do not, which helps us better understand both the data and the model. Figure 4 shows the importance of the five features: permutation entropy is more important than Shannon entropy and about as important as the two amino acid preferences.

Figure 4.

Importance of the five features in the algorithm.

To verify that permutation entropy contributes positively to the prediction of intrinsically disordered proteins, we compared the prediction results with and without the permutation entropy feature for both ensemble learning algorithms. The results are shown in Tables 3 and 4.

Table 3.

The influence of permutation entropy on GBDT-PE.

Table 4.

The influence of permutation entropy on LightGBM-PE.

After permutation entropy is added, the predictions of both ensemble learning algorithms improve markedly. In GBDT-PE, F1 and MCC increase by 4% and 5%, respectively. The improvement is most pronounced in LightGBM-PE, where F1 and MCC increase by 9% and 6%, respectively. These results indicate that permutation entropy contributes positively to the prediction of intrinsically disordered proteins.

5.2. The Influence of Sliding Window and Oversampling

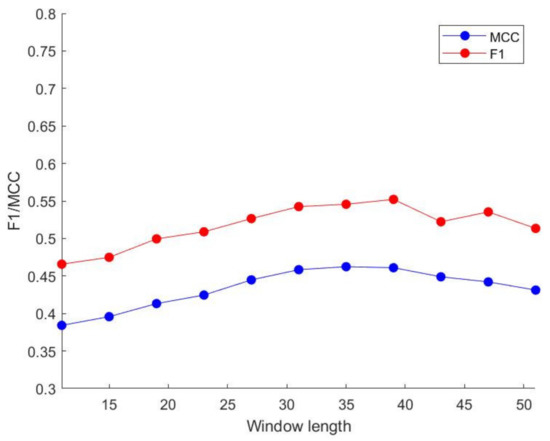

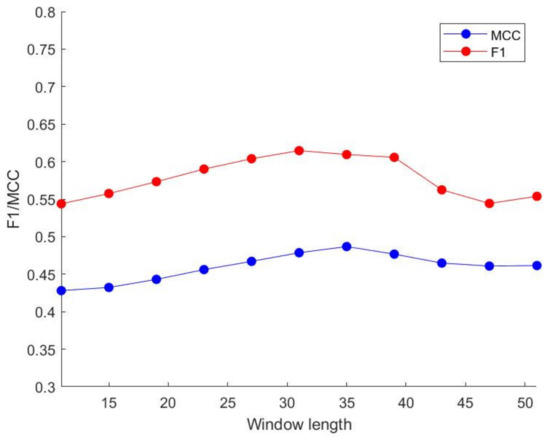

For the DIS2209 data set, we use ten-fold cross-validation, randomly dividing the protein sequences into 10 subsets of roughly equal size, and use GBDT and LightGBM to train and predict with different window sizes. The results are shown in Table 5.
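Dividing whole protein sequences, rather than individual residues, into folds can be sketched as below. This is a sketch under an assumed design choice: keeping all residues of a protein in the same fold prevents overlapping windowed features from appearing in both training and test data. `protein_folds` is a hypothetical helper; only the count of 2209 sequences comes from the text.

```python
import random
from typing import List


def protein_folds(n_proteins: int, k: int = 10, seed: int = 0) -> List[List[int]]:
    """Randomly split protein indices into k folds whose sizes differ by at most one.

    Whole sequences are assigned to folds, so all residues of one protein (and all
    windows computed from it) end up on the same side of every train/test split.
    """
    ids = list(range(n_proteins))
    random.Random(seed).shuffle(ids)
    return [ids[f::k] for f in range(k)]


folds = protein_folds(2209, k=10)
test_ids = folds[0]                                  # proteins held out in the first fold
train_ids = [i for fold in folds[1:] for i in fold]  # proteins used for training
print(len(train_ids), len(test_ids))  # 1988 221
```

Repeating this for each of the 10 folds and averaging the metrics gives the cross-validated estimate.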

Table 5.

Performance comparison of different window sizes.

Comparing the metrics shows that MCC and F1 increase gradually as the window length increases and stabilize once the window size exceeds 35, so we choose a window length of 35 to compute our features. This trend is shown in Figures 5 and 6. Similarly, we apply three oversampling schemes to the DIS2209 data set with GBDT and LightGBM; the SMOTE scheme gives the best predictions. The results are shown in Table 6.

Figure 5.

Different window size performance in GBDT.

Figure 6.

Different window size performance in LightGBM.

Table 6.

Performance comparison of different oversampling schemes.

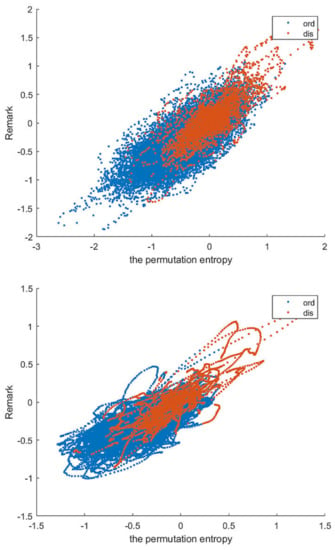

Our preprocessing scheme improves the accuracy and stability of the predictions of each learning method. Table 7 compares the MCC values before and after preprocessing, using a sliding window of size 35 and SMOTE oversampling. We also selected the Remark465 and permutation entropy features of all residues in the DIS2209 data set and compared them before and after preprocessing with a window length of 31, as shown in Figure 7.

Table 7.

The influence of the preprocessing scheme.

Figure 7.

Remark465 and permutation entropy before and after windowing.

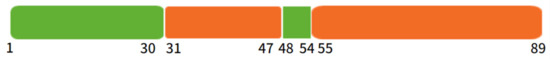

To test the model’s predictions, we take the IAPP protein associated with type 2 diabetes from DisProt as an example; our prediction result is shown in Figure 8, and the reference annotation from DisProt is shown in Figure 9.

Figure 8.

The prediction result of IAPP based on our system.

Figure 9.

The prediction result of IAPP based on DisProt.

5.3. Comparison with Existing Prediction Schemes

To compare our scheme with existing schemes, we tested them on the R80 data set collected by Yang et al. The R80 data set contains 78 sequences with 29,243 ordered residues and 3566 disordered residues. The existing schemes are DISOPRED2 [20], BVDEA [35], DisPSSMP [36], RONN [24], IsUnstruct [15] and FoldIndex [14]. Table 8 shows the prediction results of each scheme.

Table 8.

Prediction performance comparison based on test set R80.

Reflecting the classification method used, we denote our schemes as GBDT-PE and LightGBM-PE. Among these schemes, the highest Sens, Spec, F1 and MCC are achieved by DISOPRED2, RONN, LightGBM-PE and LightGBM-PE, respectively. Only LightGBM-PE and IsUnstruct have MCC values above 0.5, and the F1 values of LightGBM-PE, DisPSSMP and IsUnstruct all exceed 0.6. Overall, the results of our scheme are comparable to those of BVDEA, DisPSSMP and IsUnstruct. However, DisPSSMP and BVDEA compute 188 and 120 features per residue, respectively, whereas our scheme computes only 5. Our scheme therefore has lower computational complexity and a simpler decision function, so we expect it to be more robust than DisPSSMP and BVDEA and to require fewer training samples.

6. Conclusions

In this paper, five features are selected to predict intrinsically disordered proteins: Shannon entropy, topological entropy, permutation entropy and two amino acid preferences. Among them, permutation entropy is applied to this field for the first time. In the data preprocessing stage, we used a sliding window to incorporate information from adjacent residues in the sequence. We also used SMOTE oversampling and two ensemble learning algorithms to address the imbalance between positive and negative samples in the original data. These measures substantially improved prediction accuracy. Compared with several existing schemes, our scheme achieves better F1 and MCC values, and the LightGBM-PE scheme reaches the highest MCC value of 0.526. Because our scheme uses only five features, it has lower computational complexity, shorter training time and lower memory usage, and it can handle training on large numbers of samples.

Author Contributions

Conceptualization, H.L.; project administration, H.L.; supervision, H.L.; validation, H.L. and X.Z.; formal analysis, X.Z.; investigation, X.Z.; methodology, X.Z.; software, X.Z.; writing—original draft preparation, X.Z.; writing—review and editing, X.Z.; resources, H.H. All authors have read and agreed to the published version of the manuscript.

Funding

The author(s) received no specific funding for this study.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found on the website: https://disprot.org (accessed on 6 October 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dunker, A.K.; Lawson, J.D.; Brown, C.J.; Williams, R.M.; Romero, P.; Oh, J.S.; Oldfield, C.J.; Campen, A.M.; Ratliff, C.M.; Hipps, K.W.; et al. Intrinsically Disordered Protein. J. Mol. Graph. Model. 2001, 19, 26–59.

- Uversky, V.N.; Oldfield, C.J.; Dunker, A.K. Intrinsically Disordered Proteins in Human Diseases: Introducing the D2 Concept. Annu. Rev. Biophys. 2008, 37, 215.

- Dyson, H.J.; Wright, P.E. Intrinsically Unstructured Proteins and Their Functions. Nat. Rev. Mol. Cell Biol. 2005, 6, 197–208.

- Cheng, Y.; LeGall, T.; Oldfield, C.J.; Dunker, A.K.; Uversky, V.N. Abundance of Intrinsic Disorder in Protein Associated with Cardiovascular Disease. Biochemistry 2006, 45, 10448–10460.

- Uversky, V.N.; Davé, V.; Iakoucheva, L.M.; Malaney, P.; Metallo, S.J.; Pathak, R.R.; Joerger, A.C. Pathological Unfoldomics of Uncontrolled Chaos: Intrinsically Disordered Proteins and Human Diseases. Chem. Rev. 2014, 114, 6844–6879.

- Goh, K.M.; Dunker, A.K.; Uversky, V.N. Protein Intrinsic Disorder Toolbox for Comparative Analysis of Viral Proteins. BMC Genom. 2008, 9, S4.

- Uversky, V.N.; Roman, A.; Oldfield, C.J.; Dunker, A.K. Protein Intrinsic Disorder and Human Papillomaviruses: Increased Amount of Disorder in E6 and E7 Oncoproteins from High Risk HPVs. J. Proteome Res. 2006, 5, 1829–1842.

- Xue, B.; Williams, R.J.; Oldfield, C.; Kian-Meng Goh, G.; Keith Dunker, A.; Uversky, V.N. Viral Disorder or Disordered Viruses: Do Viral Proteins Possess Unique Features? Protein Pept. Lett. 2010, 17, 932–951.

- Oliveira, L.; Gasser, T.; Edwards, R.; Zweckstetter, M.; Melki, R.; Stefanis, L.; Lashuel, H.A.; Sulzer, D.; Vekrellis, K.; Halliday, G.M.; et al. Alpha-Synuclein Research: Defining Strategic Moves in the Battle Against Parkinson’s Disease. NPJ Parkinson Dis. 2021, 7, 1–23.

- Tempra, C.; Scollo, F.; Pannuzzo, M.; Lolicato, F.; La Rosa, C. A Unifying Framework for Amyloid-Mediated Membrane Damage: The Lipid-Chaperon Hypothesis. Biochim. Biophys. Acta BBA Proteins Proteom. 2021, 1870, 140767.

- Milardi, D.; Gazit, E.; Radford, S.E.; Xu, Y.; Gallardo, R.U.; Caflisch, A.; Westermark, G.T.; Westermark, P.; Rosa, C.L.; Ramamoorthy, A. Proteostasis of Islet Amyloid Polypeptide: A Molecular Perspective of Risk Factors and Protective Strategies for Type II Diabetes. Chem. Rev. 2021, 121, 1845–1893.

- Linding, R.; Russell, R.B.; Neduva, V.; Gibson, T.J. GlobPlot: Exploring Protein Sequences for Globularity and Disorder. Nucleic Acids Res. 2003, 31, 3701–3708.

- Dosztanyi, Z.; Csizmok, V.; Tompa, P.; Simon, I. IUPred: Web Server for the Prediction of Intrinsically Unstructured Regions of Proteins Based on Estimated Energy Content. Bioinformatics 2005, 21, 3433–3434.

- Prilusky, J.; Felder, C.E.; Zeev-Ben-Mordehai, T.; Rydberg, E.H.; Man, O.; Beckmann, J.S.; Silman, I.; Sussman, J.L. FoldIndex: A Simple Tool to Predict Whether a given Protein Sequence Is Intrinsically Unfolded. Bioinformatics 2005, 21, 3435–3438.

- Lobanov, M.Y.; Galzitskaya, O.V. The Ising Model for Prediction of Disordered Residues from Protein Sequence Alone. Phys. Biol. 2011, 8, 035004.

- Ward, J.J.; Sodhi, J.S.; McGuffin, L.J.; Buxton, B.F.; Jones, D.T. Prediction and Functional Analysis of Native Disorder in Proteins from the Three Kingdoms of Life. J. Mol. Biol. 2004, 337, 635–645.

- Zhang, T.; Faraggi, E.; Xue, B.; Dunker, A.K.; Uversky, V.N.; Zhou, Y. SPINE-D: Accurate Prediction of Short and Long Disordered Regions by a Single Neural-network based Method. J. Biomol. Struct. Dyn. 2012, 29, 799–813.

- Tosatto, S. ESpritz: Accurate and Fast Prediction of Protein Disorder. Bioinformatics 2012, 28, 503.

- Kozlowski, L.P.; Bujnicki, J.M. MetaDisorder: A Meta-Server for the Prediction of Intrinsic Disorder in Proteins. BMC Bioinform. 2012, 13, 111.

- Ward, J.J.; McGuffin, L.J.; Bryson, K.; Buxton, B.F.; Jones, D.T. The DISOPRED Server for the Prediction of Protein Disorder. Bioinformatics 2004, 20, 2138–2139.

- Ishida, T.; Kinoshita, K. PrDOS: Prediction of Disordered Protein Regions from Amino Acid Sequence. Nucleic Acids Res. 2007, 35, W460–W464.

- Shimizu, K.; Hirose, S.; Noguchi, T. POODLE-S: Web Application for Predicting Protein Disorder by Using Physicochemical Features and Reduced Amino Acid Set of a Position-Specific Scoring Matrix. Bioinformatics 2007, 23, 2337.

- Medina, M.W.; Gao, F.; Naidoo, D.; Rudel, L.L.; Temel, R.E.; McDaniel, A.L.; Marshall, S.M.; Krauss, R.M. Coordinately Regulated Alternative Splicing of Genes Involved in Cholesterol Biosynthesis and Uptake. PLoS ONE 2011, 6, 19420.

- Yang, Z.R.; Thomson, R.; Mcneil, P.; Esnouf, R.M. RONN: The Bio-Basis Function Neural Network Technique Applied to the Detection of Natively Disordered Regions in Proteins. Bioinformatics 2005, 21, 3369–3376.

- Jones, D.T.; Ward, J.J. Prediction of Disordered Regions in Proteins from Position Specific Score Matrices. Proteins 2003, 53, 573–578.

- Pritišanac, I.; Vernon, R.M.; Moses, A.M.; Forman Kay, J.D. Entropy and Information within Intrinsically Disordered Protein Regions. Entropy 2019, 21, 662.

- Hao, H.; Jiaxiang, Z.; Aimé, L.-E. A Low Computational Complexity Scheme for the Prediction of Intrinsically Disordered Protein Regions. Math. Probl. Eng. 2018, 2018, 1–7.

- Jin, S.; Tan, R.; Jiang, Q.; Xu, L.; Peng, J.; Wang, Y.; Wang, Y. A Generalized Topological Entropy for Analyzing the Complexity of DNA Sequences. PLoS ONE 2014, 9, e88519.

- Koslicki, D. Topological Entropy of DNA Sequences. Bioinformatics 2011, 27, 1061–1067.

- Hao, H.; Jiaxiang, Z.; Guiling, S. The Prediction of Intrinsically Disordered Proteins Based on Feature Selection. Algorithms 2019, 12, 46.

- Orosz, F.; Ovádi, J. Proteins without 3D Structure: Definition, Detection and Beyond. Bioinformatics 2011, 27, 1449–1454.

- Bandt, C.; Pompe, B. Permutation Entropy: A Natural Complexity Measure for Time Series. Phys. Rev. Lett. 2002, 88, 174102.

- Le, N.Q.K.; Do, D.T.; Hung, T.N.K.; Lam, L.H.T.; Huynh, T.T.; Nguyen, N.T.K. A Computational Framework Based on Ensemble Deep Neural Networks for Essential Genes Identification. Int. J. Mol. Sci. 2020, 21, 9070.

- Ho Thanh Lam, L.; Le, N.H.; Van Tuan, L.; Tran Ban, H.; Nguyen Khanh Hung, T.; Nguyen, N.T.K.; Huu Dang, L.; Le, N.Q.K. Machine Learning Model for Identifying Antioxidant Proteins Using Features Calculated from Primary Sequences. Biology 2020, 9, 325.

- Kaya, I.E.; Ibrikci, T.; Ersoy, O.K. Prediction of Disorder with New Computational Tool: BVDEA. Expert Syst. Appl. 2011, 38, 14451–14459.

- Su, C.T.; Chen, C.Y.; Ou, Y.Y. Protein Disorder Prediction by Condensed PSSM Considering Propensity for Order or Disorder. BMC Bioinform. 2006, 7, 1–16.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).