1. Introduction

The Lempel–Ziv-77 (LZ77) [1] and Lempel–Ziv-78 (LZ78) [2] factorizations are among the most well-studied techniques for lossless data compression. Several variants such as Lempel–Ziv–Storer–Szymanski (LZSS) [3] have been proposed, and nowadays the LZSS factorization is often perceived as the standard variant of the LZ77 factorization. Both are defined as follows: Given a factorization $T = F_1 \cdots F_z$ of a string $T$,

- it is the LZSS factorization of $T$ if each factor $F_x$, for $x \in [1..z]$, is either the leftmost occurrence of a character or the longest prefix of $F_x \cdots F_z$ that occurs at least twice in $F_1 \cdots F_x$; or
- it is the classic LZ77 factorization of $T$ if each factor $F_x$, for $x \in [1..z-1]$, is the shortest prefix of $F_x \cdots F_z$ that has only one occurrence in $F_1 \cdots F_x$ (as a suffix). The last factor $F_z$ is the suffix that may have multiple occurrences in $F_1 \cdots F_z$.

The non-overlapping variant restricts, when computing $F_x$, all candidate occurrences of $F_x$ to end before $F_x$ starts. For LZSS, this means that a factor $F_x$ that is not the leftmost occurrence of a character must occur at least once in $F_1 \cdots F_{x-1}$. Given a text $T$ of length $n$ whose characters are drawn from an integer alphabet of size $\sigma$, we study the problem of computing the non-overlapping LZSS factorization memory-efficiently with the aid of two suffix tree representations, which were used by Fischer et al. ([4] [Section 2.2]) to compute the classic LZ77, LZSS, and LZ78 factorizations in linear time within the asymptotic space requirements of the respective suffix tree. In this article, we obtain the non-overlapping LZSS factorization with similar techniques and within the same space bounds:

Theorem 1. Given a text of length n whose characters are drawn from an integer alphabet with size $\sigma$, we can compute its non-overlapping LZSS factorization

- in time using bits (excluding the read-only text T); or
- in time using bits,

for a selectable constant $\epsilon$. We support outputting the factors directly or storing the factors within the (asymptotic) bounds of the working space such that we can retrieve a factor in constant time.

We also show that we can compute the longest previous non-overlapping factor table $\mathsf{LPnF}$ [5] within the same space and time complexities (Theorem 3) by providing a succinct representation of this table (Lemma 1).
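The two LZSS variants defined above can be made concrete with a brute-force sketch. The function `lzss_factorize` below is a hypothetical illustration (not an algorithm of this article or of [4]); it uses 0-based indices, tries every earlier starting position for every factor, and therefore runs in super-quadratic time. Its only purpose is to show how the non-overlapping restriction shortens factors.

```python
from typing import List


def lzss_factorize(text: str, non_overlapping: bool = False) -> List[str]:
    """Brute-force LZSS factorization (overlapping or non-overlapping).

    Toy sketch illustrating the definitions only: every earlier start j
    is tried for every factor, so it is slow.
    """
    factors: List[str] = []
    i, n = 0, len(text)
    while i < n:
        best = 0
        for j in range(i):  # candidate earlier occurrence starting at j < i
            length = 0
            while i + length < n and text[j + length] == text[i + length]:
                if non_overlapping and j + length >= i:
                    break  # the occurrence would reach into position i
                length += 1
            best = max(best, length)
        # best == 0 means text[i] has no earlier occurrence: a fresh factor
        length = max(best, 1)
        factors.append(text[i:i + length])
        i += length
    return factors
```

For instance, on `aaaa` the overlapping variant yields `a|aaa`, whereas the non-overlapping variant yields `a|a|aa`; on `banana$` the overlapping factor `ana` becomes the two non-overlapping factors `an|a`.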

Subsequently, we study the substring compression query problem [6], where the task is to compute the factorization of a given substring of the text in time related to the number of computed factors and possibly a logarithmic dependency on the text length. However, this problem has so far only been studied for the LZ77 factorization family. Here, we provide the first non-trivial solutions for LZ78, again with the help of several suffix tree representations:

Theorem 2. Given a text T of length n whose characters are drawn from an integer alphabet with size $\sigma$, we can compute a data structure on T in time that computes, given an interval $[i..j] \subseteq [1..n]$, the LZ78 factorization of $T[i..j]$ in

- time using bits of space;
- time using bits of space; or
- time using bits of space,

where is the number of computed LZ78 factors and $\epsilon$ is a selectable constant. In the last result, we additionally need the $n \lg \sigma$ bits of space for the read-only text during the queries if some character of the alphabet does not occur in the text (otherwise, we can simulate a text access with the $\Psi$-function as described in [4]). We can further speed up the last two solutions of Theorem 2 by spending more space (Theorem 4).

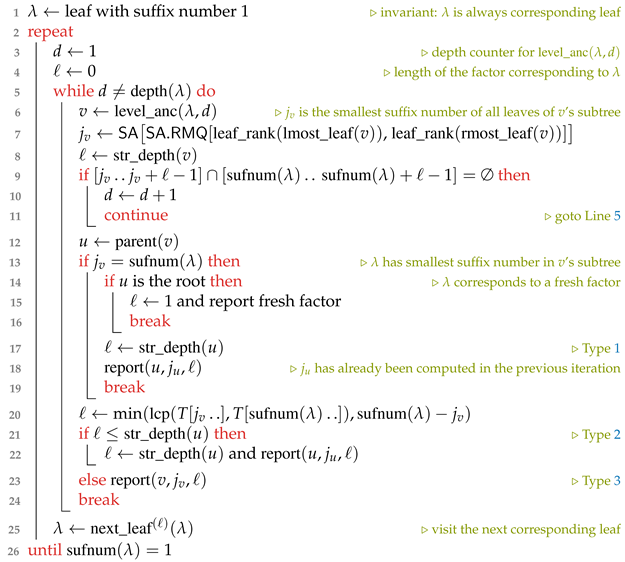

Figure 1 shows a juxtaposition of all Lempel–Ziv factorizations addressed in this article.

2. Preliminaries

With lg we denote the logarithm to base two. Our computational model is the word RAM model with machine word size $\Omega(\lg n)$ for a given input size n. Accessing a word costs $O(1)$ time.

Let T be a text of length n whose characters are drawn from an integer alphabet $\Sigma = [1..\sigma]$. Given $X, Y, Z \in \Sigma^*$ with $T = XYZ$, then X, Y, and Z are called a prefix, substring, and suffix of T, respectively. We call $T[i..] := T[i..n]$ the ith suffix of T and denote a substring $T[i] \cdots T[j]$ with $T[i..j]$.

Given a character $c \in \Sigma$ and an integer j, the rank query $\mathsf{rank}_c(T, j)$ counts the occurrences of c in $T[1..j]$, and the select query $\mathsf{select}_c(T, j)$ gives the position of the jth c in T. We stipulate that $\mathsf{rank}_c(T, 0) = \mathsf{select}_c(T, 0) = 0$. If the alphabet is binary, i.e., when T is a bit vector, there are data structures [7,8] that use $o(|T|)$ extra bits of space and can compute $\mathsf{rank}$ and $\mathsf{select}$ in constant time, respectively. Each of those data structures can be constructed in time linear in $|T|$. We say that a bit vector has a rank-support and a select-support if it is endowed with data structures providing constant time access to $\mathsf{rank}$ and $\mathsf{select}$, respectively.
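A plain stand-in for rank and select on a bit vector can clarify the query semantics, including the convention for the argument 0. The class `BitVector` below is hypothetical and deliberately not succinct: it stores a full prefix-sum array of integers instead of the $o(|T|)$-bit directories of [7,8], and its select runs in logarithmic rather than constant time. Only the semantics, not the space or time bounds, match the text.

```python
from bisect import bisect_left
from itertools import accumulate
from typing import List


class BitVector:
    """rank/select over a 0/1 list with 1-based positions (not succinct)."""

    def __init__(self, bits: List[int]) -> None:
        self.bits = list(bits)
        # prefix[j] = number of ones in bits[1..j]; prefix[0] = 0
        self.prefix = [0] + list(accumulate(self.bits))

    def rank1(self, j: int) -> int:
        """Number of ones among the first j bits (rank_1(B, 0) = 0)."""
        return self.prefix[j]

    def rank0(self, j: int) -> int:
        """Number of zeros among the first j bits."""
        return j - self.prefix[j]

    def select1(self, k: int) -> int:
        """Position of the k-th one (select_1(B, 0) = 0); assumes k <= rank1(len)."""
        if k == 0:
            return 0
        # smallest j with prefix[j] >= k, found by binary search
        return bisect_left(self.prefix, k)
```

For the bit vector 10110, `rank1(4)` counts three ones, and `select1(2)` returns position 3.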

From now on, we assume that T ends with a special character \$ smaller than all other characters appearing in T. Under this assumption, no suffix of T has another suffix of T as a prefix. The suffix trie of T is the trie of all suffixes of T. There is a one-to-one relationship between the suffix trie leaves and the suffixes of T. The suffix tree ST of T is the tree obtained by compacting the suffix trie of T. Similar to the suffix trie, the suffix tree has n leaves, but the number of internal nodes of the suffix tree is at most n because every internal node is branching. The string stored in a suffix tree edge e is called the label of e. We define a function returning, for each edge e, the length of e's label. The string label of a node v is defined as the concatenation of all edge labels on the path from the root to v; its string depth, denoted by $\mathsf{str\_depth}(v)$, is the length of its string label. The leaf corresponding to the ith suffix is labeled with the suffix number $i \in [1..n]$. We write $\mathsf{sufnum}(\lambda)$ for the suffix number of a leaf $\lambda$. The leaf-rank is the preorder rank (starting at 1) of a leaf among the set of all leaves, denoted by $\mathsf{leaf\_rank}(\lambda)$ for a leaf $\lambda$. For instance, the leftmost leaf in ST has leaf-rank 1, while the rightmost leaf has leaf-rank n. The function $\mathsf{next\_leaf}(\lambda)$ returns the leaf whose suffix number is $\mathsf{sufnum}(\lambda) + 1$, or the leaf with suffix number 1 if $\mathsf{sufnum}(\lambda) = n$.

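Reading the suffix numbers stored in the leaves of ST in leaf-rank order gives the suffix array [9]. We denote the suffix array and the inverse suffix array of T by $\mathsf{SA}$ and $\mathsf{ISA}$, respectively. The array $\mathsf{ISA}$ is defined such that $\mathsf{ISA}[\mathsf{SA}[i]] = i$ for every $i \in [1..n]$. The two arrays $\mathsf{SA}$ and $\mathsf{ISA}$ have the following relation with the two operations $\mathsf{sufnum}$ and $\mathsf{leaf\_rank}$ on the ST leaves:

- For the leaf $\lambda$ with $\mathsf{leaf\_rank}(\lambda) = i$, we have $\mathsf{sufnum}(\lambda) = \mathsf{SA}[i]$.
- For the leaf $\lambda$ with $\mathsf{sufnum}(\lambda) = i$, we have $\mathsf{leaf\_rank}(\lambda) = \mathsf{ISA}[i]$.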

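$\mathsf{LCP}$ is an array with $\mathsf{LCP}[1] = 0$ and $\mathsf{LCP}[j]$ being the length of the longest common prefix (LCP) of the lexicographically jth smallest suffix $T[\mathsf{SA}[j]..]$ with its lexicographic predecessor $T[\mathsf{SA}[j-1]..]$ for $j \in [2..n]$. The permuted LCP array $\mathsf{PLCP}$ ([10] [Section 4]) is a permutation of $\mathsf{LCP}$ with $\mathsf{PLCP}[\mathsf{SA}[i]] = \mathsf{LCP}[i]$ for $i \in [1..n]$, and can be stored within $2n + o(n)$ bits of space. The $\Psi$-function [11] is defined by $\Psi(i) = \mathsf{ISA}[\mathsf{SA}[i] + 1]$ for $i \in [1..n]$ with $\mathsf{SA}[i] \neq n$ (and $\Psi(i) = \mathsf{ISA}[1]$ for $\mathsf{SA}[i] = n$). It can be stored in bits while supporting constant access time [12].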
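To make the relations between $\mathsf{SA}$, $\mathsf{ISA}$, $\mathsf{LCP}$, $\mathsf{PLCP}$, and $\Psi$ tangible, the following hypothetical sketch builds all five arrays naively by sorting the suffixes explicitly, for a short text ending with the sentinel `$`. The arrays are 1-based with an unused slot 0 to mirror the notation above; the construction is quadratic-time and merely illustrative, unlike the compressed representations used in this article.

```python
from typing import List, Tuple


def build_arrays(t: str) -> Tuple[List[int], ...]:
    """Naive SA, ISA, LCP, PLCP, and Psi of t (1-based; index 0 unused)."""
    n = len(t)
    sa = [0] + [p + 1 for p in sorted(range(n), key=lambda p: t[p:])]
    isa = [0] * (n + 1)
    for k in range(1, n + 1):
        isa[sa[k]] = k                      # ISA[SA[k]] = k
    lcp = [0] * (n + 1)                     # LCP[1] = 0
    for k in range(2, n + 1):
        a, b = t[sa[k] - 1:], t[sa[k - 1] - 1:]
        length = 0
        while length < min(len(a), len(b)) and a[length] == b[length]:
            length += 1
        lcp[k] = length                     # LCP with the lexicographic predecessor
    plcp = [0] * (n + 1)
    for k in range(1, n + 1):
        plcp[sa[k]] = lcp[k]                # PLCP[SA[k]] = LCP[k]
    psi = [0] * (n + 1)
    for k in range(1, n + 1):
        psi[k] = isa[sa[k] + 1] if sa[k] != n else isa[1]
    return sa, isa, lcp, plcp, psi


sa, isa, lcp, plcp, psi = build_arrays("banana$")
print(sa[1:])   # [7, 6, 4, 2, 1, 5, 3]
print(lcp[1:])  # [0, 0, 1, 3, 0, 0, 2]
```

Since $\mathsf{SA}[\Psi(i)] = \mathsf{SA}[i] + 1$ (cyclically), $\Psi$ maps the leaf-rank of a leaf to the leaf-rank of its successor in text order, which is what $\mathsf{next\_leaf}$ needs.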

In this article, we focus on the following two suffix tree representations, which are an ensemble of some of the aforementioned data structures:

- The succinct suffix tree (SST), using bits of space ([4] [Section 2.2.3]) for a selectable constant $\epsilon$, contains, among others, representations of $\mathsf{SA}$ and $\mathsf{ISA}$ with access time for each array.
- The compressed suffix tree (CST), using bits of space [10,13], contains, among others, the $\Psi$-function.

Both suffix tree representations can be constructed in linear time within their final space requirements (asymptotically) when neglecting the space requirements of the read-only text T. They store the $\mathsf{PLCP}$ array and a succinct representation of the suffix tree topology such as a balanced parentheses (BP) [7] sequence. The BP sequence represents a rooted, unlabeled but ordered tree of n nodes by a bit vector of length $2n$ bits. Since the suffix tree has at most $2n$ nodes, the BP representation of the ST topology uses at most $4n$ bits. For example, the BP sequence of the suffix tree given in Figure 2 is , where we label the start of an internal node and the center of a leaf '()' with the respective preorder number on top. The BP sequence can be conceptually constructed by performing a preorder traversal on the tree, writing an opening parenthesis when walking down an edge and a closing parenthesis when climbing up an edge. We augment the BP sequence of ST with auxiliary data structures [14] of $o(n)$ bits to support queries such as $\mathsf{parent}(v)$ returning the parent of a node v, or a level ancestor query $\mathsf{level\_anc}(\lambda, d)$ returning the ancestor on depth d of the leaf $\lambda$, among others, all in constant time. Note that the depth of a node v, i.e., the number of edges from v to the root, is at most $\mathsf{str\_depth}(v)$.
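The preorder construction of the BP sequence described above can be sketched in a few lines. In this hypothetical sketch, a tree is given as nested Python lists (a node is the list of its children, so a leaf is the empty list). It also shows that leaves appear as the empty parentheses '()', so the leaf with leaf-rank k is the k-th occurrence of '()'; the linear scan in `select_leaf` merely stands in for the constant-time auxiliary structures of [14].

```python
from typing import List

Tree = list  # a node is the (possibly empty) list of its children


def bp_sequence(root: Tree) -> str:
    """Balanced parentheses via preorder: '(' when entering, ')' when leaving a node."""
    out: List[str] = []

    def visit(node: Tree) -> None:
        out.append("(")           # walk down the edge into node
        for child in node:
            visit(child)
        out.append(")")           # climb back up the edge

    visit(root)
    return "".join(out)


def select_leaf(bp: str, k: int) -> int:
    """0-based position of the '(' of the k-th leaf in preorder (leaf-rank k), by a scan."""
    seen = 0
    for p in range(len(bp) - 1):
        if bp[p] == "(" and bp[p + 1] == ")":
            seen += 1
            if seen == k:
                return p
    raise ValueError("fewer than k leaves")


tree = [[], [[], []], []]   # root: a leaf, an internal node with two leaves, a leaf
bp = bp_sequence(tree)
print(bp)                   # (()(()())())
```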

For our algorithms, we want to simulate a linear scan on the text from its beginning to its end by visiting the leaves in ascending order with respect to their suffix numbers (starting with the leaf with suffix number 1, and ending at the leaf with suffix number n). For that, we iteratively call $\mathsf{next\_leaf}$. We can compute $\mathsf{next\_leaf}(\lambda)$ by first computing the leaf-rank $\Psi(i)$ of the succeeding leaf of a leaf $\lambda$ with $\mathsf{leaf\_rank}(\lambda) = i$, and then selecting the succeeding leaf by its leaf-rank; we can select a leaf by its leaf-rank in constant time due to the BP sequence representation of the suffix tree topology (the BP sequence can be augmented with a rank- and select-support for leaves represented by the empty parentheses '()'). Since we can simulate $\Psi$ with $\mathsf{SA}$ and $\mathsf{ISA}$, the SST needs time for evaluating $\Psi$.

Finally, a factorization of T of size z partitions T into z substrings $F_1 \cdots F_z = T$. Each such substring $F_x$ is called a factor. In what follows, we deal with the non-overlapping LZSS factorization in Section 3, and subsequently (in Section 4) with the LZ78 factorization in the special context that we want to compute it on a substring of T after a preprocessing step.

3. Non-Overlapping LZSS

Let and z denote the number of factors of the overlapping LZSS factorization (i.e., the standard LZSS factorization supporting overlaps) and of the non-overlapping LZSS factorization, respectively. Kosolobov and Shur [15] showed that . Although inferior to the overlapping LZSS factorization with respect to the number of factors, the non-overlapping LZSS factorization is an important tool for finding approximate repetitions [16], periods [17], seeds [18], tandem repeats [19], and other regular structures (cf. the non-overlapping s-factorization in ([20] [Chpt. 8])).

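Algorithms computing the non-overlapping LZSS factorization usually compute the longest previous non-overlapping factor table $\mathsf{LPnF}[1..n]$, where $\mathsf{LPnF}[i]$ stores the maximum length of the LCP of $T[i..]$ with any substring $T[j..i-1]$ for $j \in [1..i-1]$, which we set to zero if no such substring exists (i.e., $\mathsf{LPnF}[1] = 0$). Having $\mathsf{LPnF}$, we can iteratively compute the non-overlapping LZSS factorization because $|F_x| = \max(1, \mathsf{LPnF}[k_x])$ with $k_x := 1 + |F_1 \cdots F_{x-1}|$ for $x \in [1..z]$.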
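The definition of $\mathsf{LPnF}$ and the way the factorization is read off from it can be checked with a brute-force sketch. The helpers `lpnf_naive` and `factorize_from_lpnf` below are hypothetical illustrations. They rely on Python's `str.find(sub, start, end)`, which only reports occurrences lying completely inside `t[start:end]`; with `end` set to the current position, this is exactly the non-overlapping condition. They run in polynomial, but far from linear, time.

```python
from typing import List


def lpnf_naive(t: str) -> List[int]:
    """LPnF[1..n] by brute force; index 0 is unused."""
    n = len(t)
    lpnf = [0] * (n + 1)
    for i in range(1, n + 1):              # 1-based text position i
        length = 0
        # can t[i..i+length] (1-based) be found entirely inside t[1..i-1]?
        while i - 1 + length < n and t.find(t[i - 1:i + length], 0, i - 1) != -1:
            length += 1
        lpnf[i] = length
    return lpnf


def factorize_from_lpnf(t: str, lpnf: List[int]) -> List[str]:
    """Non-overlapping LZSS factors: |F_x| = max(1, LPnF[k_x]) for the start k_x of F_x."""
    factors, k = [], 1
    while k <= len(t):
        length = max(1, lpnf[k])
        factors.append(t[k - 1:k - 1 + length])
        k += length
    return factors
```

For `banana$`, the sketch yields $\mathsf{LPnF}[1..7] = 0,0,0,2,2,1,0$ and the factors `b|a|n|an|a|$`.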

We are aware of the algorithms of Crochemore and Tischler [5] and Crochemore et al. [21] computing $\mathsf{LPnF}$ in linear time with a linear number of words. There are further practical optimizations [22,23,24] computing $\mathsf{LPnF}$ in linear time for constant alphabets. Finally, Ohlebusch and Weber [25] gave a linear time conversion algorithm from the longest previous factor table $\mathsf{LPF}$ [26] to $\mathsf{LPnF}$ if, for each text position i, the leftmost possible referred position $P[i]$ of the longest previous factor starting at i is provided. It seems possible that, instead of overwriting the $\mathsf{LPF}$ array with the $\mathsf{LPnF}$ array, we could run their algorithm on a succinct representation of the $\mathsf{LPF}$ array supporting sequential scan in constant time ([27] [Corollary 5]) to produce an $\mathsf{LPnF}$ array representation within the same space due to the following lemma:

Lemma 1. $\mathsf{LPnF}[i-1] - 1 \le \mathsf{LPnF}[i] \le n - i + 1$ for $i \in [2..n]$.

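Proof. Let $\ell := \mathsf{LPnF}[i-1]$ and assume that $\ell \ge 2$ (otherwise, $\mathsf{LPnF}[i] \ge 0 \ge \ell - 1$ trivially holds). According to the definition, there exists an occurrence $T[j..j+\ell-1] = T[i-1..i+\ell-2]$ with $j + \ell - 1 < i - 1$. Hence, $T[j+1..j+\ell-1] = T[i..i+\ell-2]$ and $j + \ell - 1 < i$. Thus, $T[i..]$ has a common prefix with a substring of $T[1..i-1]$ of (at least) length $\ell - 1$, i.e., $\mathsf{LPnF}[i] \ge \ell - 1$. The upper bound follows from the fact that a factor cannot protrude beyond the right end of T. □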

Consequently, $i + \mathsf{LPnF}[i]$ is non-decreasing in i. By storing the differences $(i + \mathsf{LPnF}[i]) - (i - 1 + \mathsf{LPnF}[i-1]) = \mathsf{LPnF}[i] - \mathsf{LPnF}[i-1] + 1 \ge 0$ for $i \in [2..n]$ in a unary bit sequence, we can linearly decode $\mathsf{LPnF}$ from this unary bit sequence because we know that $\mathsf{LPnF}[1] = 0$. Since $i + \mathsf{LPnF}[i] \le n + 1$ by the above lemma (in particular $1 + \mathsf{LPnF}[1] = 1$), the differences sum up to at most n, and therefore the sequence has at most $2n$ bits. This sequence can be written sequentially from right to left in constant time per $\mathsf{LPnF}$ value, i.e., in the reverse order $\mathsf{LPnF}[n], \ldots, \mathsf{LPnF}[1]$ (the algorithm of Ohlebusch and Weber [25] computes $\mathsf{LPnF}$ in this order). It is therefore possible to compute $\mathsf{LPnF}$ within bits on top of P and a compressed indexing data structure such as the FM-index [28] of the text: For that purpose, Okanohara and Sadakane [29] proposed an algorithm computing $\mathsf{LPF}$ and P with the FM-index in time, which was improved by Prezza and Rosone [30] to time. However, the need for P, using $n \lg n$ bits when stored in a plain array, makes an approach that transforms $\mathsf{LPF}$ to $\mathsf{LPnF}$ after computing $\mathsf{LPF}$ and P rather unattractive. In what follows, we present a different approach that directly computes the non-overlapping LZSS factorization or $\mathsf{LPnF}$ with near-linear or linear running time, without the need for P.
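The unary representation enabled by Lemma 1 can be sketched as follows. This hypothetical sketch writes the code from left to right (the text notes that it can equally be written from right to left) and keeps one Python integer per bit, so it illustrates the code and its length of at most $2n$ bits, not a bit-packed layout.

```python
from typing import List


def encode_lpnf(lpnf: List[int]) -> List[int]:
    """Unary code of (i + LPnF[i]) - (i - 1 + LPnF[i-1]) for i = 2..n (lpnf is 1-based)."""
    n = len(lpnf) - 1
    bits: List[int] = []
    for i in range(2, n + 1):
        diff = lpnf[i] - lpnf[i - 1] + 1
        if diff < 0:
            raise ValueError("input violates Lemma 1")
        bits.extend([0] * diff + [1])     # diff zeros terminated by a one
    return bits


def decode_lpnf(bits: List[int]) -> List[int]:
    """Inverse of encode_lpnf, starting from LPnF[1] = 0."""
    lpnf = [0, 0]                         # slot 0 unused, LPnF[1] = 0
    zeros = 0
    for b in bits:
        if b == 0:
            zeros += 1
        else:
            lpnf.append(lpnf[-1] - 1 + zeros)
            zeros = 0
    return lpnf
```

For $\mathsf{LPnF}[1..7] = 0,0,0,2,2,1,0$ (the table of `banana$`), the code has 12 bits, below the bound $2n = 14$.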

3.1. Setup

Our idea is an adaptation of the LZSS factorization algorithm introduced in ([4] [Section 3]). To explain our approach, we first stipulate that T ends with a unique character \$ that is smaller than all other characters appearing in T. Next, we distinguish between fresh and referencing factors. We say that a factor is fresh if it is the leftmost occurrence of a character. We call all other factors referencing. A referencing factor has a reference pointing to the starting position of its longest previous occurrence (as a tie break, we always select the leftmost such position). We call this starting position the referred position of the factor. More precisely, the referred position of a factor $T[i..i+\ell-1]$ is the smallest text position j with $j < i$ and $T[j..j+\ell-1] = T[i..i+\ell-1]$. Compared to the overlapping LZSS factorization, we require here the additional restriction that $j + \ell \le i$. This makes the computation of the referred positions more technical: Let j be the referred position of a factor $F = T[i..i+\ell-1]$, and let S be the longest substring starting before i that is a prefix of $T[i..]$. We associate the factor F with one of the following three types:

- Type 1: $F = S$ (the factor F coincides with the overlapping LZSS factor that would start at i);
- Type 2: F is shorter than S, but $T[j+\ell] \neq T[i+\ell]$ (then there is a suffix tree node that has the string label F); or
- Type 3: F is shorter than S and $T[j+\ell] = T[i+\ell]$, which implies $j + \ell = i$ (otherwise, the factor F could be extended to the right).
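The case distinction into Types 1 to 3 can be checked by brute force on small texts. The function `classify` below is a hypothetical sketch: given a referencing factor $F = T[i..i+\ell-1]$ of the non-overlapping LZSS factorization (1-based, supplied by the caller), it recomputes the referred position j and the length of S naively and applies the three conditions in order.

```python
from typing import Tuple


def classify(t: str, i: int, length: int) -> Tuple[int, int]:
    """Return (type, referred position j) of the referencing factor t[i..i+length-1] (1-based)."""
    factor = t[i - 1:i - 1 + length]
    j0 = t.find(factor, 0, i - 1)           # leftmost occurrence ending before i
    if j0 == -1:
        raise ValueError("not a referencing factor")
    j = j0 + 1
    # |S|: longest prefix of t[i..] that also starts at an earlier position (overlaps allowed)
    s_len = 0
    for start in range(i - 1):              # 0-based earlier starting positions
        lcp = 0
        while i - 1 + lcp < len(t) and t[start + lcp] == t[i - 1 + lcp]:
            lcp += 1
        s_len = max(s_len, lcp)
    if length == s_len:
        return 1, j                          # F = S
    if t[j - 1 + length] != t[i - 1 + length]:
        return 2, j                          # T[j + l] != T[i + l]
    return 3, j                              # T[j + l] = T[i + l], hence j + l = i
```

In `banana$`, the non-overlapping factor `an` at position 4 is of Type 3 (its overlapping counterpart would be `ana`), the factor `a` at position 6 is of Type 1, and in `acaaa$` the factor `a` at position 4 is of Type 2.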

An example is

, where the factor borders are symbolized by the vertical bar ∣, and the referencing factors are labeled with their types (fresh factors are not labeled). If

F is of Type 3, the suffixes

and

share more than

ℓ characters such that

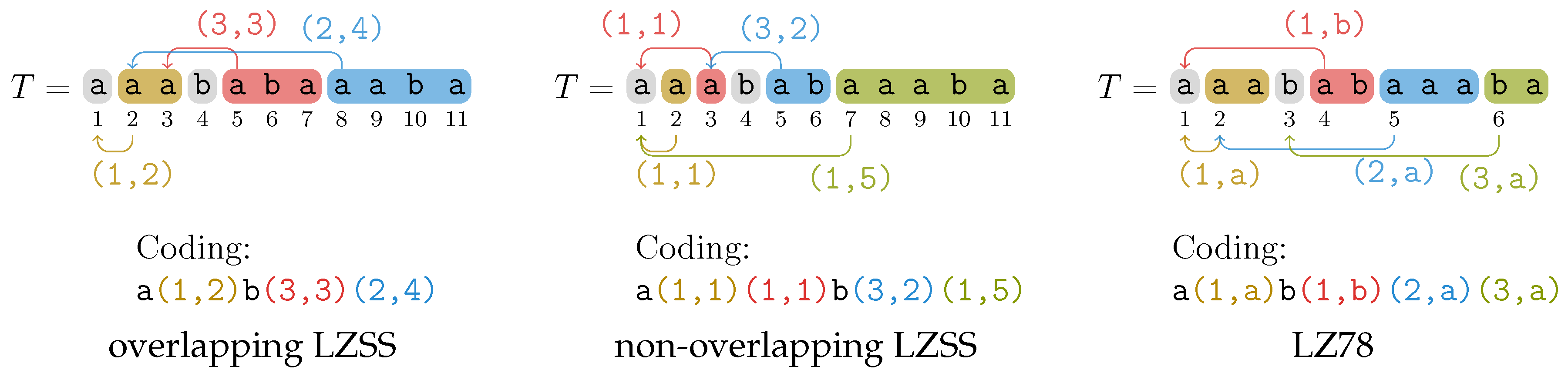

F is not a string label of any suffix tree node in general, but it is at least a prefix of the string label of a node. This is the case for the third factor

ab in the aforementioned example, as can be seen in

Figure 2.

To find the referred positions, we mark certain nodes as witnesses, which create a connection between corresponding leaves and their referred positions. A leaf is called corresponding if its suffix number is the starting position of a factor. We say that the witness of a fresh factor is the root. For a referencing factor F, the witness of F is the highest node whose string label has F as a prefix; the witness of F determines the referred position of F, which is the smallest suffix number among all leaves in its subtree.

Despite this increased complexity compared to the overlapping LZSS factorization, the non-overlapping factorization can be computed with the suffix tree in time using bits of space ([31] [APL16]). Here, we adapt the algorithms of Fischer et al. ([4] [Section 3]) computing the overlapping LZSS factorization to compute the non-overlapping factorization by following the approach of Gusfield [31]. Our goal is to compute the coding of the factors, i.e., the referred position and the length of each factor (cf. Figure 1).

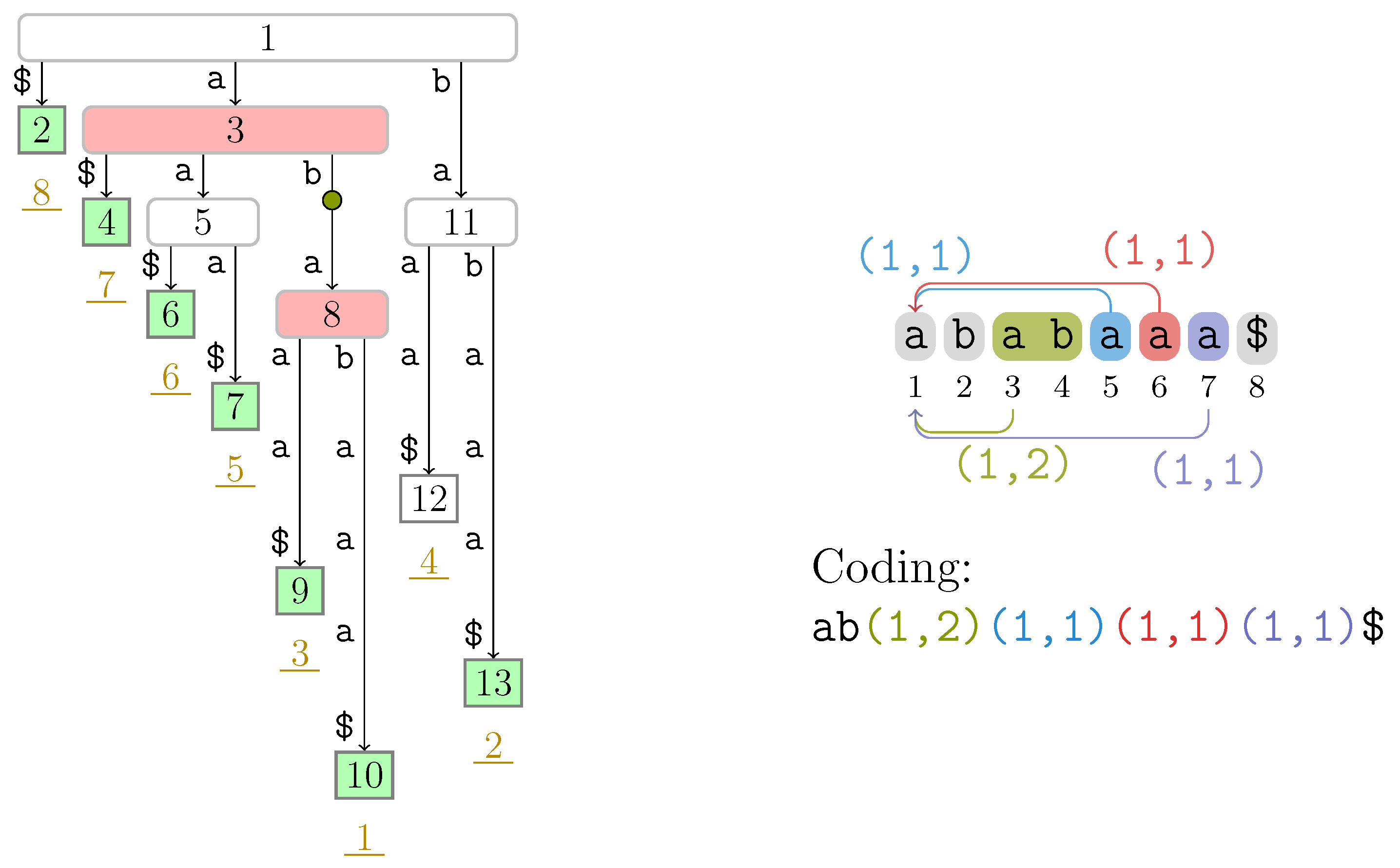

3.2. The Factorization Algorithm

All LZSS factorization algorithms of Fischer et al. ([4] [Section 3]) are divided into passes. A pass consists of visiting suffix tree leaves in text order (i.e., in the order of their suffix numbers). On visiting a leaf, they conduct a leaf-to-root traversal. In what follows, we present our modification, which merely consists of a modification of Pass (a) in all LZSS factorization variants of ([4] [Section 3]): In Pass (a), Fischer et al. computed the factor lengths and the witnesses. To maintain the witnesses and lengths in future passes, they marked the preorder numbers of the witnesses and the starting positions of the LZSS factors in two bit vectors, respectively. In succeeding passes, they computed, based on the factor lengths and the witnesses, the referred positions and with that the final coding. Therefore, it suffices to only change Pass (a) according to our definition of witnesses and factors, while keeping the subsequent passes untouched. In this pass, we do the following:

- Pass (a): Create the two bit vectors marking the witnesses and the factor starting positions, which determine the witnesses and the factor lengths, respectively.

The main technique of a pass in [4] is the leaf-to-root traversal. Here, we do the opposite: We traverse from the root to a specific leaf. We perform a root-to-leaf traversal by level ancestor queries such that visiting a node takes constant time. We perform these traversals only for the corresponding leaves, since the other leaves do not determine a factor.

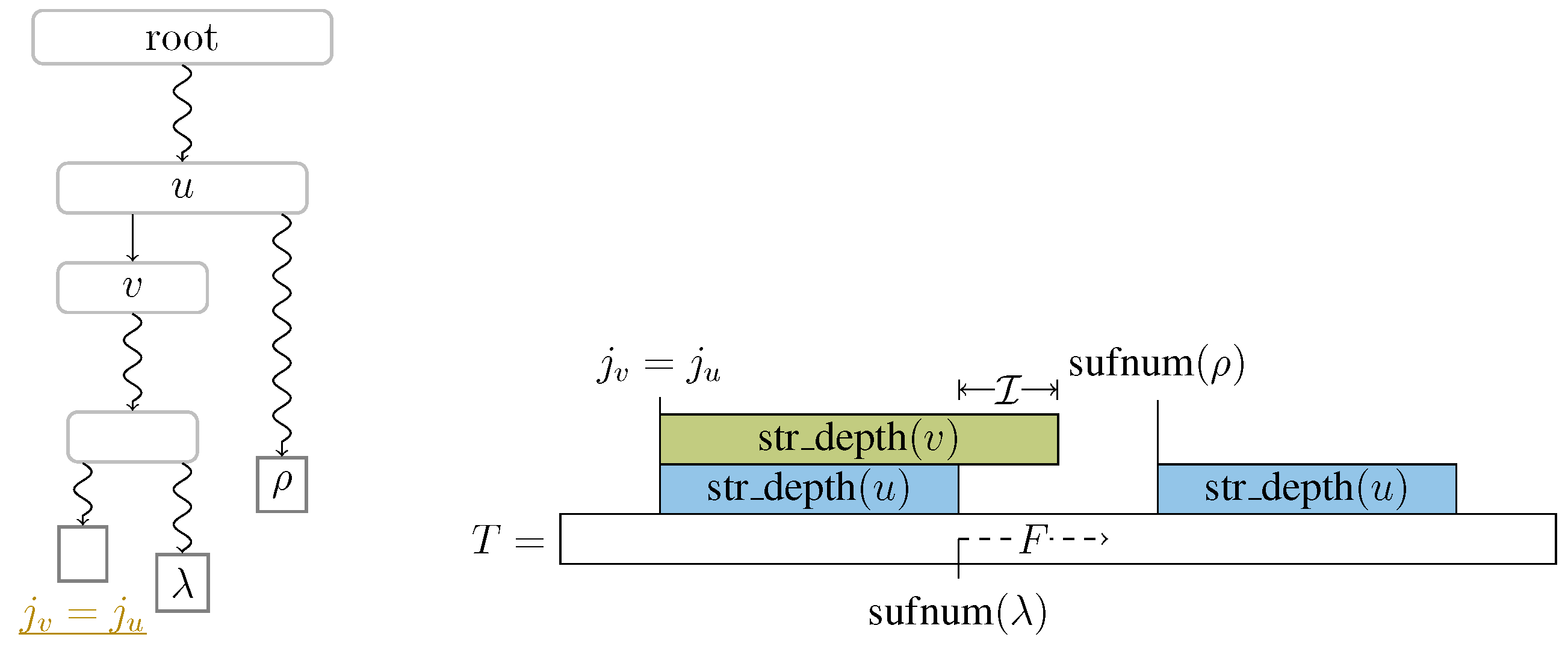

Suppose we visit a leaf

corresponding to a factor

F. We already know the starting position of

F (i.e.,

), but not its length, referred position, or witness

w. To detect

w, we use the following observation: Given

is the smallest suffix number among all leaves in the subtree rooted at a node

u,

w is the highest node that maximizes

If

, then

F is a fresh factor. Otherwise,

w determines the length

and the referred position

of

F. However, the two functions

and

are strictly increasing and monotonically decreasing, respectively, when applied to each node

v visited when walking downwards the path from the root to

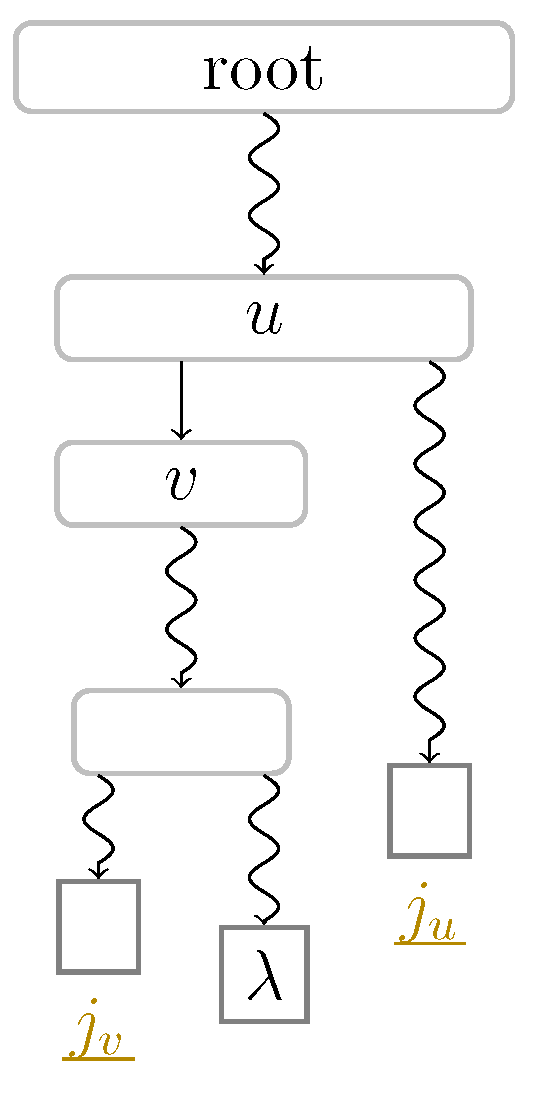

. Thus, our goal is to find the lowest node

u, where the value

of Equation (

1) still results from

, and not from the second argument

. We give a sketch in

Figure 3 and study a particular case in

Figure 4 for factors of Types 2 and 3.
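The search for the lowest node whose value in Equation (1) is still determined by its string depth can be imitated on the level of characters. The hypothetical sketch below walks the string depths d = 1, 2, ... instead of suffix tree nodes, computes the smallest suffix number among all suffixes having $T[i..i+d-1]$ as a prefix (these suffixes form a contiguous range of $\mathsf{SA}$; here a linear scan replaces the RMQ), and stops as soon as this leftmost occurrence would overlap position i. It is neither Algorithm 1 of this article nor efficient; it only mirrors the monotonicity argument above.

```python
from typing import List, Optional, Tuple


def suffix_array(t: str) -> List[int]:
    """Naive suffix array with 1-based suffix numbers."""
    return [p + 1 for p in sorted(range(len(t)), key=lambda p: t[p:])]


def factor_at(t: str, sa: List[int], i: int) -> Tuple[int, Optional[int]]:
    """Length and referred position of the non-overlapping LZSS factor starting at i (1-based)."""
    length, ref = 0, None
    d = 1
    while i - 1 + d <= len(t):
        pattern = t[i - 1:i - 1 + d]
        # smallest suffix number among suffixes prefixed by pattern (an RMQ on SA in the article)
        m = min(p for p in sa if t.startswith(pattern, p - 1))
        if m + d > i:             # [m..m+d-1] overlaps [i..i+d-1]: stop descending
            break
        length, ref = d, m
        d += 1
    return length, ref


def factorize(t: str) -> List[Tuple[int, int, Optional[int]]]:
    """(start, length, referred position) triples; the referred position is None for fresh factors."""
    sa, out, i = suffix_array(t), [], 1
    while i <= len(t):
        length, ref = factor_at(t, sa, i)
        out.append((i, max(length, 1), ref))
        i += max(length, 1)
    return out
```

Because the smallest suffix number can only grow while d grows, the value $m + d$ is strictly increasing in d, so stopping at the first overlap is safe.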

To achieve our goal, let and be two intervals. These two intervals have the property that . The idea is that is a candidate for F with being its leftmost occurrence in T. We compute the values of , and for every node v on the path from the root to until reaching a node v such that the intervals and overlap (cf. Line 9 in Algorithm 1). Let u be the parent of v. Then, the edge determines the factor F: We consider the following two cases that determine whether F is a fresh or referencing factor, and whether the witness and the referred position of F are u and , or v and , respectively, in case F is a referencing factor:

3.3. Complexity Bounds

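To determine the smallest suffix number $\mathsf{minsuf}(v)$ among the leaves in the subtree of a node v, we need to answer a range minimum query (RMQ) on $\mathsf{SA}$. Given an array A, an RMQ for an interval $[i..j]$ asks for the index of the minimum value in $A[i..j]$. To answer such RMQs, we can make use of the following data structure: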

Lemma 2 ([32] [Thm 5.8]). Let A be an integer array, where accessing an element takes time. There exists a data structure of size bits built on top of A that answers RMQs in constant time. It is constructed in time with additional bits of working space.

According to Lemma 2, we can construct an RMQ data structure on $\mathsf{SA}$ in time using bits of space, where is the time for accessing $\mathsf{SA}$. We can access $\mathsf{SA}$ in time and in time with the SST and CST, respectively, where the last time complexity is due to the following lemma:

Lemma 3 (Grossi and Vitter [11] [Section 3.2]). There is a data structure using bits that can access $\mathsf{SA}$ in time, where $\epsilon$ is a selectable constant.

As shown by Fischer et al. ([33] [Lemma 3]), the string depth $\mathsf{str\_depth}(u)$ of an internal node u can be computed with $\mathsf{SA}$, $\mathsf{PLCP}$, and an RMQ data structure on $\mathsf{LCP}$, because the leaf-ranks of the leftmost leaf $\lambda_l$ and the rightmost leaf $\lambda_r$ in the subtree rooted at u define the interval $[\mathsf{leaf\_rank}(\lambda_l) + 1..\mathsf{leaf\_rank}(\lambda_r)]$ in $\mathsf{LCP}$, and selecting the minimum value in $\mathsf{LCP}$ within this interval gives the length of the longest common prefix shared among all leaves in u's subtree, which is $\mathsf{str\_depth}(u)$. However, we do not store $\mathsf{LCP}$ explicitly, but instead simulate an access of its jth entry for $j \in [1..n]$ by $\mathsf{LCP}[j] = \mathsf{PLCP}[\mathsf{SA}[j]]$. Hence, we can access an entry of $\mathsf{LCP}$ in time. Consequently, we can build the data structure of Lemma 2 on $\mathsf{LCP}$ in time, which takes bits of additional space. Equipped with this data structure, we can finally evaluate $\mathsf{str\_depth}$ in time. The total time bounds are composed as follows:

- (a) Since the number of nodes visited during a root-to-leaf traversal to a corresponding leaf is at most the length of the respective factor, and the factor lengths sum up to n, we conclude that the RMQs take time in total.
- (b) For each root-to-leaf traversal to a leaf corresponding to a factor F, we stop at an edge and compute the length of the LCP of and by naïvely comparing characters. In total, the number of compared characters is .

Altogether, Pass (a) takes time, since all applied tree navigational operations take constant time. With Lemma 3, we obtain the time and space complexities claimed in Theorem 1.
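Both uses of RMQs in this section, obtaining the smallest suffix number of a subtree from $\mathsf{SA}$ and the string depth of an internal node from $\mathsf{LCP}$, can be sketched with a sparse table. This is a hedged stand-in, not an implementation of Lemma 2: a sparse table needs $O(n \lg n)$ words instead of the compact representation of [32]. In the sketch, a node is represented by its (hypothetical) leaf-rank interval [lb..rb], the arrays are those of `banana$`, and indices are 0-based.

```python
from typing import List


class SparseTableRMQ:
    """Index of a minimum of a[l..r] (0-based, inclusive) in O(1) time after O(n lg n) preprocessing."""

    def __init__(self, a: List[int]) -> None:
        self.a = a
        self.table = [list(range(len(a)))]          # level k covers windows of length 2^k
        k = 1
        while (1 << k) <= len(a):
            prev, half = self.table[-1], 1 << (k - 1)
            self.table.append([
                prev[s] if a[prev[s]] <= a[prev[s + half]] else prev[s + half]
                for s in range(len(a) - (1 << k) + 1)
            ])
            k += 1

    def query(self, l: int, r: int) -> int:
        k = (r - l + 1).bit_length() - 1            # largest power of two fitting into [l..r]
        x, y = self.table[k][l], self.table[k][r - (1 << k) + 1]
        return x if self.a[x] <= self.a[y] else y


# SA and LCP of "banana$" indexed by 0-based leaf-rank; lcp[k] relates rank k to rank k - 1
sa = [7, 6, 4, 2, 1, 5, 3]
lcp = [0, 0, 1, 3, 0, 0, 2]
rmq_sa, rmq_lcp = SparseTableRMQ(sa), SparseTableRMQ(lcp)


def smallest_suffix(lb: int, rb: int) -> int:
    """Smallest suffix number among the leaves with leaf-ranks lb..rb."""
    return sa[rmq_sa.query(lb, rb)]


def string_depth(lb: int, rb: int) -> int:
    """String depth of the internal node whose leaves have leaf-ranks lb..rb (lb < rb)."""
    return lcp[rmq_lcp.query(lb + 1, rb)]
```

For example, the node with string label `a` spans the leaf-ranks 1..3; its string depth is 1 and its smallest suffix number is 2.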

Algorithm 1: Pass (a) of the non-overlapping LZSS algorithm of Section 3. The function outputs the referred position j and the length ℓ of the respective referencing factor; marks the witness w and the starting position of the next factor (determined by ℓ) in and in , respectively; and appends the unary value of to (defined in Section 3.4). and return the leftmost and the rightmost leaf of the subtree rooted at v in constant time, respectively. All break statements exit the nested inner loop and jump to Line 25.

![Algorithm 1]()

3.4. Storing the Factorization

From here on, we have two options: We can either directly output the referred positions and the lengths of the computed factors during Pass (a), or we can store additional information for retrieving the witnesses in a later pass. Such a later pass is interesting when working with the SST, as we can store the factors within the working space ([4] [Section 3.3]). There, a later pass overwrites the space occupied by the SST, in particular the suffix array representation, such that later passes can no longer determine witnesses. Although we mark each witness in the witness bit vector during Pass (a), there can be multiple nodes marked in this bit vector on the path from the root to a leaf $\lambda$ corresponding to a factor F. The overlapping LZSS factorization obeys the invariant that the witness of F is the lowest ancestor of $\lambda$ that is marked in the witness bit vector, given that all ancestors of the leaves with a suffix number smaller than $\mathsf{sufnum}(\lambda)$ are marked when conducting a leaf-to-root traversal at $\lambda$ during the overlapping LZSS computation ([4] [Section 3]). Due to the existence of factors of Types 2 and 3, this invariant does not hold for the non-overlapping factorization.

For the later passes, we want a data structure that finds the witness w of a factor F based on F's starting position in constant time. Fortunately, w is determined by the leaf $\lambda$ corresponding to F and w's depth due to $w = \mathsf{level\_anc}(\lambda, \mathsf{depth}(w))$. To remember the depth of each witness, we maintain a bit vector D that stores the depth of each witness in unary coding, sorted by the suffix number of the respective corresponding leaf. Given that we find the witness w of a leaf $\lambda$ in Pass (a) during the traversal from the root to $\lambda$, we store the unary code $0^{d}1$ in D, where $d := \mathsf{depth}(w)$. For a leaf corresponding to a fresh factor, we store the unary code 1 in D. Similar to the corresponding bit vector in ([4] [Sect. 3.4.3 Pass (2)]), we do not need to add a select-support to D, since we always process the corresponding leaves sequentially in text order. Given a corresponding leaf $\lambda$, we can jump to its witness (or to the root if $\lambda$ corresponds to a fresh factor) with a level ancestor query from $\lambda$ with the depth d. The length of D is at most $n + z \le 2n$, since the depth of a witness is bounded by the length of its corresponding factor and the sum of all factor lengths is n.
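Storing and sequentially reading the witness depths can be sketched as follows. The helpers are hypothetical; because the corresponding leaves are processed in text order, a generator that reads the unary codes one after another replaces a select-support, as argued above. The subsequent jump to the witness by a level ancestor query is not shown.

```python
from typing import Iterator, List


def encode_depths(depths: List[int]) -> List[int]:
    """Concatenate 0^d 1 for each witness depth d, in text order (d = 0 for a fresh factor)."""
    bits: List[int] = []
    for d in depths:
        bits.extend([0] * d + [1])
    return bits


def read_depths(bits: List[int]) -> Iterator[int]:
    """Yield the depths again, one per factor, in a single left-to-right scan."""
    d = 0
    for b in bits:
        if b == 0:
            d += 1
        else:
            yield d
            d = 0
```

The code has exactly one 1 per factor plus one 0 per unit of depth, which is where the bound $n + z$ comes from.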

3.5. Computing LPnF

Finally, we can compute $\mathsf{LPnF}$ with the same algorithm by visiting all leaves (i.e., not only the corresponding ones). However, we can no longer charge the visited nodes during a root-to-leaf traversal with the length of a factor as in Section 3.3 (a). In fact, such an algorithm may visit $\Theta(n^2)$ nodes, since the number of nodes visited for position i can be proportional to $\mathsf{LPnF}[i]$, and $\sum_{i=1}^{n} \mathsf{LPnF}[i]$ is $\Theta(n^2)$ for the string $\mathtt{a}^{n-1}\$$. To reduce the number of nodes to visit, we can make use of Lemma 1: having $\mathsf{LPnF}[i-1]$ computed, we know that $\mathsf{LPnF}[i] \ge \mathsf{LPnF}[i-1] - 1$; hence, it suffices to start the root-to-leaf traversal at the lowest node whose string depth is at most $\mathsf{LPnF}[i-1] - 1$. We find this node by a suffix link. A suffix link connects a node with string label $cS$, for a character c and a string S, to the node with string label S, or to the root node if S is empty. All nodes except the root have a suffix link. However, we do not store suffix links as pointers explicitly, but simulate them with the leaves, since we can compute the suffix link of a leaf $\lambda$ with $\mathsf{next\_leaf}(\lambda)$: Suppose that we have processed the leaf $\lambda$ with suffix number $i - 1$ for computing $\mathsf{LPnF}[i-1]$. In what follows, we first assume that the computed factor starting at $i - 1$ is not of Type 3. Then, the witness of $\lambda$ is $\lambda$'s ancestor u with $\mathsf{str\_depth}(u) = \ell$, where $\ell$ is the computed factor length. First, we select another leaf $\lambda'$ of the subtree rooted at u such that the lowest common ancestor (LCA) of $\lambda$ and $\lambda'$ is u (e.g., we can select the leftmost or rightmost leaf in u's subtree). Then, $\mathsf{next\_leaf}(\lambda)$ is the leaf with suffix number i, and the LCA $u'$ of $\mathsf{next\_leaf}(\lambda)$ and $\mathsf{next\_leaf}(\lambda')$ is the node on the path from the root to $\mathsf{next\_leaf}(\lambda)$ with $\mathsf{str\_depth}(u') = \ell - 1$. By omitting the nodes from the root to $u'$ in the traversal to $\mathsf{next\_leaf}(\lambda)$ for computing $\mathsf{LPnF}[i]$, we only need to visit at most $\mathsf{LPnF}[i] - \mathsf{LPnF}[i-1] + O(1)$ nodes for determining $\mathsf{LPnF}[i]$. A telescoping sum with the upper bound of Lemma 1 shows that we visit $O(n)$ nodes in total.
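The effect of Lemma 1 on the computation of $\mathsf{LPnF}$ can be imitated on the level of characters. Instead of restarting each search from length 0, the hypothetical sketch below starts at $\max(0, \mathsf{LPnF}[i-1] - 1)$ and only tries to extend. It replaces the suffix tree traversal and the suffix link by naive substring searches, so only the telescoping count of extension attempts mirrors the argument above, not the running time: the number of searches is at most $\mathsf{LPnF}[n] + 2n - 1 \le 2n$.

```python
from typing import List, Tuple


def lpnf_incremental(t: str) -> Tuple[List[int], int]:
    """LPnF[1..n] (index 0 unused and 0) and the number of substring searches performed."""
    n = len(t)
    lpnf = [0] * (n + 1)
    searches = 0
    for i in range(1, n + 1):
        length = max(0, lpnf[i - 1] - 1)    # Lemma 1: LPnF[i] >= LPnF[i-1] - 1
        while i - 1 + length < n:
            searches += 1
            # does t[i..i+length] (1-based) occur entirely inside t[1..i-1]?
            if t.find(t[i - 1:i + length], 0, i - 1) == -1:
                break
            length += 1
        lpnf[i] = length
    return lpnf, searches
```

For `banana$`, the sketch returns the table $0,0,0,2,2,1,0$ after 10 searches.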

It is left to deal with the text positions for which we computed a factor of Type 3. Here, the leaf $\lambda$ has a witness v with $\mathsf{str\_depth}(v) > \ell$, i.e., the computed factor is implicitly represented on the edge from $u := \mathsf{parent}(v)$ to v. We apply the same technique (i.e., taking the suffix link) as for the other types, but apply this technique on u instead of the witness v, such that we end up at a node $u'$ with $\mathsf{str\_depth}(u') = \mathsf{str\_depth}(u) - 1$. We sketch the setting in Figure 5. Now, we additionally need to walk down from $u'$ towards $\mathsf{next\_leaf}(\lambda)$ to reach the lowest node with . There can be at most nodes on the path from to . We can refine this number to at most , where is the number of characters on the edge contributing to . Nevertheless, these extra nodes seem to invalidate the bound on the number of visited nodes.

To retain our claimed time complexity, we switch from counting nodes to counting characters and use the following charging argument: We charge each edge we traversed by characters, or characters if we only traversed characters on an edge. With the above analysis, we again obtain time for the algorithm computing the non-overlapping factorization (as well as the non-Type 3 values) by spending time for each charged character (instead of each visited node).

Let us reconsider that the factor of is of Type 3, where we charge the last edge for computing with characters. Here, we observe that we actually spend only time for processing this edge. Hence, we have characters as a credit left, which we can spend on traversing descendants of . If the factor starting at i is again of Type 3, we add the remaining credit to the newly gained credit, and recurse.

Regarding Section 3.3 (b), computing the length of the LCP of and naïvely results again in overall running time, since we need to compute these lengths for all n positions. Here, instead of computing the length of such an LCP naïvely, we determine it by computing the string depth of the LCA of the current leaf and the leaf whose suffix number is the candidate referred position, in time. We find this leaf with the RMQ data structure on $\mathsf{SA}$, which reports its leaf-rank rather than its suffix number; we obtain the latter by accessing $\mathsf{SA}$. Altogether, we obtain the same time and space bounds for computing $\mathsf{LPnF}$ as for computing the non-overlapping LZSS factorization:

Theorem 3. We can compute the $2n$-bits representation of $\mathsf{LPnF}$ within the same time and space as the non-overlapping LZSS factorization described in Theorem 1.

4. Substring Compression Query Problem

The substring compression query problem [6] is to find the compressed representation of $T[i..j]$, given a query interval $[i..j] \subseteq [1..n]$. Cormode and Muthukrishnan [6] solved this problem for LZSS with a data structure answering the query for $T[i..j]$ in time, where denotes the number of produced LZSS factors of the queried substring $T[i..j]$. Their data structure uses space, and it can be constructed in time. This result was improved by Keller et al. [34] to query time for the same space or to query time for linear space. They also gave other trade-offs between query time and the size of the used data structure for larger data structures.

The main idea of tackling the problem for LZSS (and similarly for the classic LZ77 factorization) is to use a data structure answering interval LCP queries, which are usually answered by two-dimensional range successor/predecessor data structures. Most recently, Matsuda et al. [35] proposed a data structure answering an interval LCP query in time while taking bits of space, where $H_0$ denotes the zeroth order empirical entropy. Therefore, they could implicitly answer a substring compression query in time within compressed space. Recently, Bille et al. [36] proposed data structures storing the LZSS-compressed suffixes of T for answering a pattern matching query of an LZSS-compressed pattern P without decompressing P. Their proposed data structures also seem to be capable of answering substring compression queries.

As a warm-up for the more involved techniques for the LZ78 factorization below (cf. Section 4.5), we show that our techniques studied for the non-overlapping LZSS factorization in Section 3 can be adapted to the substring compression query problem under the restriction that the query interval starts at text position 1 (meaning that we query for prefixes instead of arbitrary substrings). Given an interval $[1..p]$ for a text position $p \in [1..n]$, the algorithm of Theorem 1 achieves time, where is the time to access $\mathsf{SA}$. We can improve the running time by replacing the linear scan on Line 10 of Algorithm 1 with an exponential search [37]: As long as the condition on Line 9 is true (the condition for walking downwards), we do not increment the depth d by one, but instead double d. Now, when the condition on Line 9 becomes false, we may have overestimated the desired depth (we want the first d for which the condition on Line 9 becomes false). Thus, we need to additionally backtrack by performing a binary search on the interval $[d/2..d]$. If we perform this search for computing a factor of length $\ell$, then we double d $O(\lg \ell)$ times, and visit $O(\lg \ell)$ depths during the binary search (see also Figure 6 for a visualization). In total, we obtain time, where $\ell$ is the length of the longest non-overlapping LZSS factor (here, denotes the number of computed non-overlapping factors). Note that the result is not particularly interesting, since we can just store the whole factorization of T, scan for the leftmost factor $F_x$ that ends at p or after, trim $F_x$'s length to end at p, and finally return $F_1, \ldots, F_x$, all in time.
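The exponential search followed by a binary search can be sketched on a monotone predicate. In the hypothetical sketch below, the predicate `occurs_before(t, i, d)` asks whether $T[i..i+d-1]$ occurs completely inside $T[1..i-1]$; it is monotone in d, which is what makes doubling followed by binary search valid. The article applies the search to node depths on the root-to-leaf path and answers the predicate with $\mathsf{SA}$ and RMQs; here it is answered naively with `str.find`, and a prefix query simply factorizes the prefix $T[1..p]$.

```python
from typing import List


def occurs_before(t: str, i: int, d: int) -> bool:
    """True iff t[i..i+d-1] (1-based) lies in t and occurs entirely inside t[1..i-1]."""
    return i - 1 + d <= len(t) and t.find(t[i - 1:i - 1 + d], 0, i - 1) != -1


def factor_length(t: str, i: int) -> int:
    """Largest d with occurs_before(t, i, d), found by doubling and then binary search."""
    if not occurs_before(t, i, 1):
        return 0                          # fresh factor
    d = 1
    while occurs_before(t, i, 2 * d):     # exponential phase
        d *= 2
    lo, hi = d, 2 * d                     # invariant: predicate true at lo, false at hi
    while hi - lo > 1:                    # binary search phase
        mid = (lo + hi) // 2
        if occurs_before(t, i, mid):
            lo = mid
        else:
            hi = mid
    return lo


def prefix_factorization(t: str, p: int) -> List[int]:
    """Lengths of the non-overlapping LZSS factors of the prefix t[1..p]."""
    s, lengths, i = t[:p], [], 1
    while i <= p:
        length = max(1, factor_length(s, i))
        lengths.append(length)
        i += length
    return lengths
```

For the prefix `banan` of `banana$`, the sketch returns the factor lengths 1, 1, 1, 2.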

To generalize this algorithm to an interval $[i..j]$ with $i > 1$, we need to change the definition of the smallest suffix number $\mathsf{minsuf}(v)$ of a node v in Section 3.2 to be the smallest suffix number of at least i among the leaves in the subtree rooted at v. However, this additional complexity makes the approach of selecting $\mathsf{minsuf}(v)$ with an RMQ on $\mathsf{SA}$ infeasible and leads us back to the interval LCP query problem.

4.1. Related Substring Compression Query Problems

As far as the author is aware, the substring compression query problem has only been studied for LZSS. However, Lifshits [38] mentioned that it is also feasible to think about the substring compression query problem in the context of straight-line programs (SLPs): Given an SLP of size g representing T, we can construct an SLP of size on $T[i..j]$ in time. Actually, we can do better if the SLP is locally consistent. For that, we augment each non-terminal with the number of terminal symbols it expands to (after recursively expanding all non-terminals by their right-hand sides). For a grammar such as HSP ([39] [Theorem 3.5]), we can compute the SLP variant of HSP (analogously to the SLP variant of ESP [40]) in time, or ESP [41] in time due to ([39] [Lemma 2.11]).

Here, we consider answering substring compression queries with the LZ78 factorization (which is actually also an SLP ([42] [Section VI.A.1])), i.e., the goal is to compress the substring $T[i..j]$ with LZ78. Let $z$ denote the number of LZ78 factors of the string $T[i..j]$. When the text is given as an SLP of size $g$, we can first transform this SLP into an SLP of size $O(g)$ on $T[i..j]$ in $O(g)$ time, and then apply the algorithm of Bannai et al. [

43] on this SLP to compute the LZ78 factorization in

time. Let us consider from now on that $T$ is given in its plain form as a string with $n \lceil \lg \sigma \rceil$ bits. A possible way is to first apply a solution for computing an LZ77 substring compression query, then transform the LZ77-compressed substring into an SLP by a transformation due to Rytter [44], and finally apply the aforementioned algorithm of Bannai et al. [43]. The fastest LZ78 factorization algorithms [4,45] can answer an LZ78 substring compression query in $O(j - i + 1)$ time, i.e., in time linear in the length of the queried substring $T[i..j]$, independently of the alphabet size. For small alphabet sizes, the LZ78 factorization algorithm of Jansson et al. [46] even runs in time sub-linear in $j - i + 1$. However, for large $\sigma$ and a compressible text $T$, these approaches are rather slow compared to the solutions for LZSS mentioned above, whose running times are bounded by the number of computed factors times a factor logarithmic in the text length.

To obtain similar bounds for LZ78, we could adapt the approach of Bille et al. [36] to preprocess the LZ78 factorization of all suffixes of $T$, but that would give us a data structure with super-linear preprocessing time (and possibly super-linear space). Here, we borrow the idea from Nakashima et al. [45] to superimpose the suffix tree with the LZ78 trie, and use a data structure for answering nearest marked ancestor queries to find the lowest marked suffix tree node on the path from the root to a leaf. This data structure [47] takes $O(n \lg n)$ bits of space, and can answer a nearest marked ancestor query in $O(1)$ amortized time. We are unaware of any improvements for this type of query, even in light of the fact that we only need to answer fringe marked ancestor queries, a notion coined by Breslauer and Italiano [48], which is a special case of nearest marked ancestor queries: in the fringe marked ancestor query problem, the root of a tree (here: the suffix tree) is already marked, and we can only mark the children of an already marked node. In what follows, we formally define the LZ78 factorization, and then propose approaches for the LZ78 substring compression query problem based on different suffix tree representations.

4.2. LZ78 Factorization

Stipulating that $F_0$ is the empty string, a factorization $T = F_1 \cdots F_z$ is called the LZ78 factorization [2] of $T$ iff, for all $x \in [1..z]$, the factor $F_x$ is the longest prefix of $F_x \cdots F_z$ with $F_x = F_y c$ for some $y \in [0..x-1]$ and $c \in \Sigma$, that is, $F_x$ is the longest possible previous factor $F_y$ appended by the following character $c$ in the text. We say that $y$ is the referred index of the factor $F_x$. A factor is thus determined by its referred index and its last character, which lets us encode the factors in a list of (integer, character)-pairs, as shown in the example of Figure 1, where we simplify the coding of factors with referred index 0 to plain characters (to ease the comparison with the LZSS variants).
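To make the (integer, character) coding concrete, here is a minimal Python sketch that computes the LZ78 factorization with an explicit, dictionary-based LZ trie and decodes it again. The function names are hypothetical, and the example text `abababa$` is our own rather than the one of Figure 1. As an illustrative choice, a trailing factor that equals a previous factor (possible only if the text does not end with a unique terminator) is emitted with an empty character.

```python
from typing import Dict, List, Tuple

Pair = Tuple[int, str]


def lz78_encode(text: str) -> List[Pair]:
    """LZ78 factorization as (referred index, character) pairs; index 0 is F_0 = ''."""
    trie: Dict[Tuple[int, str], int] = {}  # (node index, char) -> child factor index
    pairs: List[Pair] = []
    pos, n = 0, len(text)
    while pos < n:
        node = 0  # start at the root, which represents the empty factor F_0
        # Descend the LZ trie as long as the next character extends a previous factor.
        while pos < n and (node, text[pos]) in trie:
            node = trie[(node, text[pos])]
            pos += 1
        if pos == n:
            # The remaining suffix equals a previous factor: no new character follows.
            pairs.append((node, ""))
            break
        pairs.append((node, text[pos]))
        trie[(node, text[pos])] = len(pairs)  # the new factor F_x gets index x
        pos += 1
    return pairs


def lz78_decode(pairs: List[Pair]) -> str:
    factors = [""]  # factors[y] is F_y
    for y, c in pairs:
        factors.append(factors[y] + c)
    return "".join(factors[1:])


print(lz78_encode("abababa$"))  # [(0, 'a'), (0, 'b'), (1, 'b'), (3, 'a'), (0, '$')]
```

The factorization here is a|b|ab|aba|$; for instance, the fourth factor aba refers to the third factor ab and appends a.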

Figure 7 gives another visualization of the same example with the LZ trie, which represents each factor as a node (the root represents the factor $F_0$). The node representing the factor $F_y$ has a child representing the factor $F_x$ connected with an edge labeled by a character $c \in \Sigma$ if and only if $F_x = F_y c$. An observation of Nakashima et al. [45] (Section 3) is that the LZ trie is a connected subgraph of the suffix trie containing its root. We can therefore simulate the LZ trie by marking nodes in the suffix trie. Since the suffix trie has $O(n^2)$ nodes, we use the suffix tree ST instead of the suffix trie to save space. In ST, however, not every LZ trie node is represented; these implicit LZ trie nodes are on the ST edges between two ST nodes (cf. Figure 8). Since the LZ trie is a connected subgraph of the suffix trie sharing the root node, implicit LZ trie nodes on the same ST edge have the property that they are all consecutive and that they start at the first character of the edge. To represent them, it thus suffices to augment an ST edge with a counter counting the number of its implicit LZ trie nodes. We call this counter an exploration counter. The exploration counter of an edge $e = (u, v)$ is stored in the lower node $v$ that $e$ connects to, and we also call it the exploration counter of $v$. Additionally, we call an ST node $v$ an edge witness if the exploration counter of $v$ becomes incremented during the factorization. We additionally stipulate that the root of ST is an edge witness, whose exploration counter is always full. Then, all edge witnesses form a connected subgraph of ST sharing the root node. We say that the exploration counter of $v$ is full if it equals the length of the label of the edge $e = (u, v)$, meaning that $v$ is an explicit LZ78 trie node. We give an example in Figure 9.
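A brute-force way to inspect exploration counters and full edges on a small example is the following sketch. It assumes a text ending with a unique `$`, represents each suffix tree node by its string label (the root, the leaves, and all right-maximal substrings), and, for every LZ78 factor, records at the lower node $v$ of the edge containing the factor how far down this edge the LZ trie reaches. All function names are hypothetical, and the cubic-time construction only serves illustration; it is not the suffix-tree-based method of this section.

```python
from typing import Dict, List, Set, Tuple


def lz78_factors(text: str) -> List[str]:
    """LZ78 factors as strings (greedy: longest previous factor plus one character)."""
    seen: Set[str] = {""}
    factors: List[str] = []
    pos = 0
    while pos < len(text):
        end = pos + 1
        while end <= len(text) and text[pos:end] in seen:
            end += 1
        factors.append(text[pos:end])
        seen.add(text[pos:end])
        pos = end
    return factors


def suffix_tree_labels(text: str) -> Set[str]:
    """String labels of all suffix tree nodes (naive; text must end with a unique '$')."""
    n = len(text)
    labels = {""} | {text[i:] for i in range(n)}  # root and leaves
    for i in range(n):
        for j in range(i + 1, n):
            s = text[i:j]
            # s is an internal node iff it is followed by at least two distinct characters.
            nxt = {text[k + len(s)] for k in range(n - len(s)) if text.startswith(s, k)}
            if len(nxt) >= 2:
                labels.add(s)
    return labels


def exploration_counters(text: str) -> Tuple[Dict[str, int], Dict[str, int], List[str]]:
    """Return (counter, edge_length, factors); both dicts are keyed by the lower node's label."""
    labels = suffix_tree_labels(text)
    counter: Dict[str, int] = {}
    edge_length: Dict[str, int] = {}
    factors = lz78_factors(text)
    for f in factors:
        v = min((x for x in labels if x.startswith(f)), key=len)  # lower end of f's edge
        u = max((x for x in labels if f.startswith(x) and len(x) < len(f)), key=len)  # upper end
        counter[v] = max(counter.get(v, 0), len(f) - len(u))
        edge_length[v] = len(v) - len(u)
    return counter, edge_length, factors


counter, edge_length, factors = exploration_counters("abababa$")
full = sorted(v for v in counter if counter[v] == edge_length[v])
print(factors)  # ['a', 'b', 'ab', 'aba', '$']
print(full)     # ['$', 'a', 'aba']
```

The nodes with a non-zero counter (here a, ba, aba, and $) are the edge witnesses besides the root. Because every prefix of an LZ78 factor is again a factor, the counters sum up to the number of distinct factors.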

However, since we do not know the shape of the LZ trie in advance, we also do not know which nodes will become edge witnesses. For the time being, we augment each node with an exploration counter, spending $O(n \lg n)$ bits in total. As in Section 3, we assume that our text $T$ has length $n$ and ends with a special symbol $ smaller than all other characters appearing in $T$.

4.3. Linear-Time Computation

Now, we can give our first result of Theorem 2 on the LZ78 substring compression query problem by a simple modification of the LZ78 factorization algorithm presented by Nakashima et al. [45]. This algorithm uses a pointer-based suffix tree, which is augmented by a nearest marked ancestor data structure [47], using altogether $O(n \lg n)$ bits of space.

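The algorithm works as follows: Suppose that we have computed the factors $F_1, \ldots, F_{x-1}$ and now want to compute $F_x$. Since $F_x$ is a prefix of the suffix $T[p..]$ with $p = |F_1 \cdots F_{x-1}| + 1$, $F_x$ is a prefix of the concatenation of edge labels on the path from the root to the leaf with suffix number $p$ in the suffix tree. The additional requirement that $F_x$, excluding its last character, has to coincide with a preceding factor $F_y$ means that $F_y$ is the string label of the lowest LZ trie node on this path; this LZ trie node is represented either explicitly by a suffix tree node $w$ whose exploration counter is full, or implicitly on the edge ending at $w$.

In either case, $w$ is the edge witness of $F_y$ and determines its length $|F_y|$. We create an LZ trie node representing $F_x$ as follows:

If the exploration counter of $w$ is not full, we make $w$ the edge witness of $F_x$, and increment the exploration counter of $w$ by one.

Otherwise (the exploration counter of $w$ is full), we make the child of $w$ on the path the edge witness of $F_x$, and set the exploration counter of this child to one.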

It is left to find $w$, which we can do by traversing the path from the root until reaching an edge $e = (u, v)$ whose exploration counter is less than the length of its label, where either $u$ or $v$ is $w$. However, a linear scan of this path for finding $w$ would result in $O(|F_x|)$ time per factor. Here, the fringe marked ancestor queries come into the picture, which allow us to find a lowest edge witness in amortized constant time: by marking all edge witnesses, querying the lowest marked ancestor of the leaf with suffix number $p$ yields either $u$ or $v$. This gives us $O(1)$ amortized time per LZ78 factor, and concludes the LZ78 factorization algorithm of Nakashima et al. [45] (Theorem 3).

Finally, to obtain the LZ78 factorization of $T[i..j]$ for a given interval $[i..j]$ with $1 \le i \le j \le n$, we do not start the computation at $T[1]$, but directly at $T[i]$, and terminate when a factor ends at $T[j]$ or protrudes to the right. In the latter case, we trim this factor. Hence, we can compute the factorization of $T[i..j]$ in $O(z)$ time, where $z$ is the number of its LZ78 factors, with $O(n \lg n)$ bits of space, in which we can store a pointer-based suffix tree on $T$.
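As a sanity check of the trimming argument, the following sketch computes the factors greedily on the whole text starting at position $i$, and trims the factor that reaches or crosses position $j$. It uses hypothetical function names and a naive set of previous factors in place of the suffix tree with exploration counters. The result coincides with factorizing the extracted substring directly, because every proper prefix of an LZ78 factor is itself a previous factor.

```python
from typing import List, Set


def lz78_factors(s: str) -> List[str]:
    """Plain LZ78 factors of s; a final factor may repeat a previous one."""
    seen: Set[str] = {""}
    out: List[str] = []
    pos = 0
    while pos < len(s):
        end = pos + 1
        while end <= len(s) and s[pos:end] in seen:
            end += 1
        f = s[pos:end]
        seen.add(f)
        out.append(f)
        pos += len(f)
    return out


def lz78_substring_query(text: str, i: int, j: int) -> List[str]:
    """LZ78 factors of text[i..j] (1-indexed, inclusive), computed on the whole text:
    start at position i and trim the factor that ends at or protrudes beyond j."""
    seen: Set[str] = {""}
    out: List[str] = []
    pos, stop = i - 1, j  # 0-indexed start and exclusive end of the query interval
    while pos < stop:
        end = pos + 1
        # Greedy extension on the whole text, i.e., without looking at the boundary j.
        while end <= len(text) and text[pos:end] in seen:
            end += 1
        f = text[pos:end]
        if pos + len(f) >= stop:
            out.append(text[pos:stop])  # ends at j or protrudes: trim and terminate
            break
        seen.add(f)
        out.append(f)
        pos += len(f)
    return out


print(lz78_substring_query("abababa$", 3, 7))  # ['a', 'b', 'ab', 'a']
```

In the example, the greedy factor a$ starting at position 7 protrudes beyond $j = 7$ and is trimmed to a.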

4.4. Outline

In what follows, we want to study variants of this algorithm that use more lightweight data structures at the expense of additional running time. All LZ78 factorization algorithms presented here stick to the following general framework, which we call a pass: For each leaf $\lambda$ whose suffix number is the starting position of a factor $F$, locate the lowest edge witness $w$ on the path from the root to $\lambda$, and create a new LZ trie node by incrementing either the exploration counter of $w$ or the exploration counter of its child on the path towards $\lambda$, as described in Section 4.3. Since $w$ determines the length of the factor $F$, we know the suffix number of the leaf that starts with the next factor.

After a pass, we know the LZ trie topology due to the exploration counters. In a subsequent pass (Section 4.7), we use this knowledge to associate an edge witness $w$ with the index of the most recent factor having $w$ as its edge witness, such that we can identify the referred indices with this association. Before that, however, we reduce the space (Section 4.5) and subsequently show how to perform a pass within the reduced working space (Section 4.6). Finally, we accelerate a root-to-leaf traversal for long factors in Section 4.8.

4.5. Space-Efficient Computation

In what follows, we give trade-offs for less space but slightly larger time bounds by using SST and CST. To get below $O(n \lg n)$ bits of space, we need to get rid of: (a) the $O(n \lg n)$-bit marked ancestor data structure; and (b) the $O(n \lg n)$ bits for the exploration counters. For the latter (b), Fischer et al. [4] (Section 4.1) presented a space-efficient representation of the exploration counters on top of the suffix tree. For the former (a), we use level ancestor queries to simulate a fringe marked ancestor query: to this end, we mark all edge witnesses in a bit vector $B$ of length $2n$ such that $B[j] = 1$ if and only if the suffix tree node with preorder rank $j$ is an edge witness (remember that the number of nodes in the suffix tree is at most $2n$). Suppose now that we want to compute the factor $F_x$. For that, we visit the leaf $\lambda$ with suffix number $|F_1 \cdots F_{x-1}| + 1$. As in Section 4.3, we want to find the lowest edge witness on the path from the root to $\lambda$, which we find with a fringe marked ancestor query. Here, we answer this query by scanning the path from the root to $\lambda$ until reaching the lowest node marked in $B$. We can traverse linearly from the root to this node by querying the level ancestor of $\lambda$ for each depth $d = 1, 2, \ldots$. However, we then visit $O(|F_x|)$ nodes for computing the factor $F_x$, or $O(n)$ nodes in total. To improve this bound, we can again apply exponential search (cf. Figure 6). To see why this can be done, let $v_0, v_1, \ldots, v_m = \lambda$ be the path from the root $v_0$ to $\lambda$. If each node $v_d$ (for each depth $d \in [0..m]$) is represented by its preorder number, then $B[v_0] B[v_1] \cdots B[v_m] = 1^{k+1} 0^{m-k}$ if the lowest edge witness has depth $k$, since the edge witnesses form a connected subgraph containing the root. Although we do not know $k$ in advance, we can find the rightmost '1' in $B[v_0] \cdots B[v_m]$ with an exponential search visiting $O(1 + \lg k)$ nodes (we evaluate the level ancestor of $\lambda$ at specific depths $d$ and check each time whether the returned node is marked in $B$). Thus, we can determine $v_k$ and $F_x$'s edge witness with $O(1 + \lg k)$ level ancestor queries. Since $k \le |F_x| \le \ell$, where $\ell$ denotes the length of the longest factor, we issue $O(z \lg \ell)$ level ancestor queries in total, where $z$ is the number of computed factors.
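The exponential search over the marks on a root-to-leaf path can be sketched as follows. This is a minimal sketch, assuming a toy tree given by parent and depth arrays indexed by preorder number, and a plain Boolean list in place of the bit vector $B$; the linear-time `level_ancestor` is only a stand-in for the level ancestor query of the SST or CST. All names are hypothetical.

```python
from typing import List


def level_ancestor(parent: List[int], depth: List[int], v: int, d: int) -> int:
    """Ancestor of v at depth d (naive walk; stands in for a fast level ancestor query)."""
    while depth[v] > d:
        v = parent[v]
    return v


def lowest_marked_on_path(parent: List[int], depth: List[int],
                          marked: List[bool], leaf: int) -> int:
    """Lowest marked node on the root-to-leaf path, assuming that the marks on this path
    form a prefix starting at the marked root (as for edge witnesses)."""
    h = depth[leaf]

    def is_marked(d: int) -> bool:
        return d <= h and marked[level_ancestor(parent, depth, leaf, d)]

    d = 1
    while is_marked(d):  # exponential search for the first unmarked depth
        d *= 2
    lo, hi = d // 2, d  # is_marked(lo) holds (lo == 0 is the root), is_marked(hi) fails
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_marked(mid):
            lo = mid
        else:
            hi = mid
    return level_ancestor(parent, depth, leaf, lo)


# Toy tree in preorder: 0 -> {1, 4}, 1 -> {2, 3}, 4 -> 5 -> 6; marked nodes: 0, 4, 5.
parent = [-1, 0, 1, 1, 0, 4, 5]
depth = [0, 1, 2, 2, 1, 2, 3]
marked = [True, False, False, False, True, True, False]
print(lowest_marked_on_path(parent, depth, marked, 6))  # 5
```

Each iteration issues one level ancestor query, so a lowest marked node at depth $k$ is found with $O(1 + \lg k)$ queries.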

4.6. Navigation in Small Space

To complete our algorithm for SST and CST, it is left to study how to access the leaves when issuing the level ancestor queries. While the LZ78 factorization algorithms of Fischer et al. [4] used the fact that they can scan the leaves linearly in suffix number order to simulate the scan of the text in text order within their time budget, we want to accelerate this algorithm by visiting only the leaves whose suffix numbers match the starting positions of the factors. With the SST, we can directly select the leaf with suffix number $p$, since we have access to the inverse suffix array, which returns the leaf-rank of this leaf.

With the CST, we can visit the leaf with the subsequent suffix number with in constant time, but may need time to visit an arbitrary leaf. Here, the idea is to store a sampling of within bits of space during a precomputation step. We can produce the values of this sampling by iterating over such that we obtain an array that stores in its ith entry the leaf-rank of the leaf with suffix number . Consequently, we can jump to a leaf with suffix number in time by jumping to the closest sampled predecessor of j, and subsequently applying times to reach the leaf with suffix number j. To sum up, we need time to traverse between two corresponding leaves. The total time becomes and for the SST and the CST, respectively.
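The sampling idea for the CST can be illustrated with plain arrays. This is a minimal sketch, assuming 0-indexed suffix numbers, a naive suffix array, and that the CST primitive for visiting the leaf with the subsequent suffix number behaves like the $\psi$ function (mapping the leaf-rank of suffix $i$ to that of suffix $i + 1$). The sampling rate `s` and all function names are hypothetical; in a real CST, $\psi$ is given and is not derived from a plain inverse suffix array as done here for brevity.

```python
from typing import List, Tuple


def build_sampled_leaf_ranks(text: str, s: int) -> Tuple[List[int], List[int], List[int]]:
    """Return (sa, psi, samples), where samples[k] is the leaf-rank of suffix k*s."""
    n = len(text)
    sa = sorted(range(n), key=lambda i: text[i:])  # naive suffix array
    isa = [0] * n
    for rank, i in enumerate(sa):
        isa[i] = rank
    # psi maps the leaf-rank of suffix i to that of suffix i+1 (cyclically for the last one).
    psi = [isa[(sa[r] + 1) % n] for r in range(n)]
    # Produce the samples by iterating psi from the leaf of suffix 0, i.e., in text order.
    samples, r = [], isa[0]
    for i in range(n):
        if i % s == 0:
            samples.append(r)
        r = psi[r]
    return sa, psi, samples


def leaf_rank(j: int, psi: List[int], samples: List[int], s: int) -> int:
    """Jump to the closest sampled predecessor of j, then apply psi (j mod s) times."""
    r = samples[j // s]
    for _ in range(j % s):
        r = psi[r]
    return r


sa, psi, samples = build_sampled_leaf_ranks("mississippi$", 3)
print(all(sa[leaf_rank(j, psi, samples, 3)] == j for j in range(12)))  # True
```

A larger sampling rate `s` stores fewer samples but needs up to `s - 1` applications of $\psi$ per jump, which is the space-time trade-off described above.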

4.7. LZ78 Coding

Finally, to obtain the LZ78 coding, we need to compute the referred indices. In a classic LZ78 trie, we would augment each trie node with the index of its corresponding factor. Here, we additionally need a trick for the implicitly represented LZ trie nodes: for them, we can leverage the edge witnesses by augmenting each of them with the factor index of the currently lowest LZ trie node created on its ingoing edge. Fortunately, we know all nodes that become edge witnesses thanks to the bit vector (cf. Section 4.5) marking the preorder numbers of all edge witnesses. We now enhance this bit vector with a rank-support such that we can give each edge witness a distinct rank, ranging from one to the number of edge witnesses. Therefore, we can maintain the most recent factor indices corresponding to each edge witness in an array $W$ of $O(z \lg z)$ bits, where $z$ is the number of LZ78 factors. We again conduct a pass as described in Section 4.4, but this time we use $W$ to write out the referred indices (see ([4] [Section 4.2.1 Pass (b)]) for a detailed description on how to read the referred indices from $W$). By doing so, we finally obtain Theorem 2. For an overview, we present the obtained complexity bounds in Table 1.
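The following sketch shows how a rank query compacts per-node information to one slot per edge witness. It is hypothetical and simplified: it uses a Python list of prefix counts instead of a succinct rank-support, and it only maintains the most recent factor index per edge witness; reading the referred indices from $W$ follows Fischer et al. [4] and is not reproduced here.

```python
from typing import List


class EdgeWitnessTable:
    """Hypothetical helper: the array W indexed by the rank of an edge witness.

    bits[j] == 1 iff the suffix tree node with preorder number j is an edge witness.
    A plain prefix-sum list stands in for a succinct rank-support.
    """

    def __init__(self, bits: List[int]) -> None:
        self.bits = bits
        self.prefix = [0]
        for b in bits:
            self.prefix.append(self.prefix[-1] + b)
        self.W = [0] * self.prefix[-1]  # one slot per edge witness

    def rank(self, j: int) -> int:
        """Number of edge witnesses with a preorder number smaller than j."""
        return self.prefix[j]

    def record(self, j: int, factor_index: int) -> None:
        """Remember factor_index as the most recent factor with edge witness j."""
        assert self.bits[j] == 1, "node j is not an edge witness"
        self.W[self.rank(j)] = factor_index

    def most_recent(self, j: int) -> int:
        assert self.bits[j] == 1, "node j is not an edge witness"
        return self.W[self.rank(j)]


table = EdgeWitnessTable([1, 0, 1, 1, 0, 0, 1])
table.record(2, 5)
table.record(6, 9)
table.record(2, 7)  # a later factor with the same edge witness overwrites the entry
print(table.most_recent(2), table.most_recent(6), len(table.W))  # 7 9 4
```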

4.8. Centroid-Path Decomposed Suffix Tree

If the length $\ell$ of the longest factor is large, then we can speed up the exponential search of Section 4.5 by searching in the centroid-path decomposed suffix tree. The centroid path decomposition [49] of the suffix tree is defined as follows: For each internal node, we call its child whose subtree is the largest among all its siblings (ties are broken arbitrarily if there are multiple such children) a heavy node, while we call all other children light nodes. Additionally, we make the root and all leaves light nodes (here we differ from the standard definition because we need a one-to-one relationship between leaves in the original tree and in the path-decomposed one). A heavy path is a path from a light node $u$ to the parent of a leaf containing, except for $u$, only heavy nodes. There is a one-to-one relationship between light nodes and heavy paths. Since heavy paths do not overlap, we can contract all heavy paths to single nodes and thus form the centroid-path decomposed suffix tree (see ([49] [Section 4.2]) for details and Figure 8 for an example). The centroid path decomposition is helpful because the number of light nodes on a path from the root to a leaf is $O(\lg n)$, which means that a path from the root to a leaf in the centroid-path decomposed suffix tree contains $O(\lg n)$ nodes. This can be seen by the fact that the subtree size of a light node (other than the root and a leaf made light by our convention) is at most half of the subtree size of its parent, since the subtree of its heavy sibling is at least as large; thus, when visiting such a light node during a top-down traversal in the suffix tree, we at least halve the number of nodes we can visit from then on. Consequently, a root-to-leaf path in the centroid-path decomposed suffix tree has $O(\lg n)$ nodes.
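To illustrate why root-to-leaf paths contain few light nodes, the following sketch computes subtree sizes, picks one heavy child per internal node, and makes the root and all leaves light, as described above. It is a minimal sketch on an arbitrary ordered tree given by child lists (not necessarily a suffix tree); the function names and the pseudo-random example tree are hypothetical. The check uses the bound $\lfloor \lg N \rfloor + 2$ for a tree with $N$ nodes: at most $\lfloor \lg N \rfloor$ light children on a path, each at most halving the subtree size, plus the root and the final leaf.

```python
from typing import Dict, List, Set, Tuple


def heavy_light(children: List[List[int]], root: int = 0) -> Tuple[List[int], Dict[int, int], Set[int]]:
    """Return (preorder, subtree sizes, light nodes), making the root and all leaves light."""
    order, stack = [], [root]
    while stack:  # iterative preorder traversal
        v = stack.pop()
        order.append(v)
        stack.extend(reversed(children[v]))
    size = {v: 1 for v in order}
    for v in reversed(order):  # children are finished before their parent
        for c in children[v]:
            size[v] += size[c]
    light = {root}
    for v in order:
        if not children[v]:
            light.add(v)  # deviation from the standard definition, as in the text
            continue
        heavy = max(children[v], key=lambda c: size[c])  # ties: leftmost largest child
        light.update(c for c in children[v] if c != heavy)
    return order, size, light


def light_nodes_on_path(parent: List[int], light: Set[int], leaf: int) -> int:
    """Number of light nodes on the path from leaf up to the root."""
    count, v = 0, leaf
    while v != -1:
        if v in light:
            count += 1
        v = parent[v]
    return count


# Pseudo-random tree: node i gets a parent in [0, i) from a linear congruential generator.
N, x = 1000, 12345
parent = [-1]
for i in range(1, N):
    x = (1103515245 * x + 12345) % 2**31
    parent.append(x % i)
children: List[List[int]] = [[] for _ in range(N)]
for v in range(1, N):
    children[parent[v]].append(v)
_, size, light = heavy_light(children)
leaves = [v for v in range(N) if not children[v]]
print(max(light_nodes_on_path(parent, light, f) for f in leaves) <= N.bit_length() + 1)  # True
```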

For that to be of use, we need a connection between the suffix tree and its centroid-path decomposed counterpart: observe that the number of leaves and their respective order is the same in both trees, such that we can map leaves by their leaf-ranks in constant time. If we mark the light nodes of the suffix tree in a bit vector $B_L$, then the rank of a light node $v$ in $B_L$ is the preorder number of the node in the centroid-path decomposed suffix tree representing the heavy path whose highest node is $v$. To stay within our space budget, we represent the tree topology of the centroid-path decomposed suffix tree with a BP sequence (which we briefly introduced in Section 2). First, we mark all light nodes in $B_L$ by an Euler tour, where we query the suffix tree topology for the subtree size rooted at an arbitrary node in constant time. Next, we perform a depth-first search traversal on the suffix tree while producing the BP sequence of the centroid-path decomposed suffix tree. For that, we use a stack to store the light node ancestors of the currently visited node. Since a node has $O(\lg n)$ light nodes as ancestors, the stack uses $O(\lg^2 n)$ bits of space. Finally, we endow $B_L$ with a select-support such that we can map a node of the centroid-path decomposed suffix tree to its corresponding light node in the suffix tree.
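The stack-based construction of the BP sequence can be sketched on an ordered tree given by child lists. This minimal sketch, with hypothetical names, recomputes the heavy and light nodes, then scans the nodes in preorder and keeps on a stack exactly the light ancestors of the current node, so that the parent of a light node in the centroid-path decomposed tree is the top of the stack. For simplicity, it emits the parentheses as a Python string rather than a succinct bit sequence, and it uses explicit subtree sizes instead of constant-time queries on the BP topology of the suffix tree.

```python
from typing import List


def cpd_bp(children: List[List[int]], root: int = 0) -> str:
    """BP sequence of the centroid-path decomposed tree, built in one DFS with a stack."""
    order, stack = [], [root]
    while stack:  # iterative preorder traversal
        v = stack.pop()
        order.append(v)
        stack.extend(reversed(children[v]))
    size = {v: 1 for v in order}
    for v in reversed(order):
        for c in children[v]:
            size[v] += size[c]
    light = {root} | {v for v in order if not children[v]}  # root and leaves are light
    for v in order:
        if children[v]:
            heavy = max(children[v], key=lambda c: size[c])
            light.update(c for c in children[v] if c != heavy)
    pre = {v: i for i, v in enumerate(order)}
    bp: List[str] = []
    ancestors: List[int] = []  # light ancestors of the current node, one per open parenthesis
    for v in order:
        if v not in light:
            continue  # heavy nodes are contracted into the heavy path of a light ancestor
        # Close the heavy paths whose highest node is not an ancestor of v.
        while ancestors and not pre[ancestors[-1]] < pre[v] < pre[ancestors[-1]] + size[ancestors[-1]]:
            ancestors.pop()
            bp.append(")")
        ancestors.append(v)
        bp.append("(")
    bp.extend(")" * len(ancestors))
    return "".join(bp)


# Root 0 with children 1 and 2; node 1 with leaf children 3 and 4.
print(cpd_bp([[1, 2], [3, 4], [], [], []]))  # (()()())
```

In the example, the heavy path consists of the root and node 1, and the three leaves 3, 4, and 2 become its children in the original leaf order.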

Our algorithm conducting a pass works as follows: Suppose that we visit the leaf with suffix number . This time, we map to the leaf of having the same leaf-rank as in . Next, we apply the exponential search with on , to obtain a node representing the heavy path whose highest node is a light node v, i.e., v is the lowest light node on the path from the root to the leaf that is an edge witness. Since a root-to-leaf path in has light nodes, we spend time to find v.

Finally, it is left to move from $v$ to the lowest edge witness on the path from $v$ to the leaf in the suffix tree. For that, we use a dictionary $D$ that associates a light node with the number of edge witnesses in its heavy path. This number is at most

, and thus it can be stored in

bits, while a light node can be represented with its preorder number in $O(\lg n)$ bits.

This dictionary has to be dynamic since we do not know in advance which nodes will become edge witnesses; we can make use of one of the dynamic dictionaries given in Table 2, which lists for each dictionary the time of an operation such as a lookup or an insertion and its size in bits.

Now, suppose that the dictionary stores that $d$ nodes in $v$'s heavy path are edge witnesses. Let $w$ be the next light node on the path from $v$ to the leaf (i.e., $w$ is the highest light node on the path from the root to the leaf whose exploration counter is still zero).

If is at least the height difference between v and w, then the parent u of w is already an edge witness, and u is a node on the heavy path of v. If the exploration counter of u is full, then we increment the exploration counter of w, and hence make w an edge witness and add w to the dictionary.

Otherwise ( is smaller than this height difference), the node whose exploration counter we want to increment is within the heavy path, and is either the dth or th descendant of v.

In total, for

, we can improve the

factor in the time bounds to

, which is

when implementing

with the dynamic dictionary of Raman et al. [

50], costing

bits of additional working space during a query. More formally:

Theorem 4. Given a text of length n whose characters are drawn from an alphabet with size , we can compute a data structure on T in time that computes, given an interval , the LZ78 factorization of in

time using bits of space, or

time using bits of space,

where is the number of computed LZ78 factors, is a selectable constant, and and are the time and space complexities of a dynamic dictionary associating a -bit integer with a -bit value (cf. Table 2). Similar to Theorem 2, we need the read-only text stored for queries if there is a character in the alphabet that does not appear in T.

) and in green, respectively. We additionally mark the string ab with an implicit node whose string label is equal to the factor with Type 3. The nodes are labeled by their preorder numbers. The suffix number of each leaf is the underlined number drawn in dark yellow below it. (Right) Non-overlapping LZSS factorization of T.


). Here, the leaf has depth 18, and we want to find the first unshaded node on depth 15. The exponential search and the subsequent binary search are conducted by following the edges below and above the path array, respectively.


) colored nodes represent the explicit LZ trie nodes, i.e., those nodes that are present in the suffix tree. Implicit LZ trie nodes are represented by the small rounded nodes. The edge witnesses are the nodes with the preorder numbers 3, 5, 6, 12, and 16. (Right) The centroid-path decomposed suffix tree of T described in Section 4.8. The label of a node is the list of preorder numbers of the nodes in its respective heavy path. For instance, the heavy path from the root contains the nodes with the preorder numbers 1, 3, 5, and 6.


). The explicit LZ trie nodes u and w are shaded in blue. According to the figure, and . In particular, the exploration counters of u and w are full.
