Constructing Reliable Computing Environments on Top of Amazon EC2 Spot Instances

Abstract

1. Introduction

- A review of multi-criterion scheduling algorithms for EC2 resource provisioning that considers the diversity of instance types and pricing models;

- The development of a best-effort, multi-workflow, deadline- and budget-constrained scheduling algorithm to execute scientific workflows on Amazon EC2 infrastructures;

- The development of a dynamic scheduling strategy to provide reliable virtual computing environments on top of Amazon EC2 on-demand, spot block, and unreliable SIs, which reactively detects reclaimed SIs and unfulfilled spot requests and performs resource reconfiguration to maintain the required QoS in terms of deadline and budget;

- An extensive evaluation of the dynamic strategy and the scheduling algorithm with applications that follow real-life scientific workflow characteristics constrained by user-defined deadline and budget QoS parameters, and with SIs behaving and performing according to the results of recent experimental evaluations of Amazon EC2 SIs.
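The reactive behaviour named in the third contribution (detecting reclaimed SIs and unfulfilled spot requests, then reconfiguring resources) can be illustrated with a minimal Python sketch. This is not the paper's cloud manager algorithm: the `State` and `Instance` types, the `plan_reconfiguration` function, and in particular the fallback order (spot to spot block to on-demand) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    RUNNING = "running"
    RECLAIMED = "reclaimed"      # spot instance taken back by the provider
    UNFULFILLED = "unfulfilled"  # spot request that was never granted


@dataclass
class Instance:
    name: str
    pricing: str  # "spot", "spot_block", or "on_demand"
    state: State


# Assumption: a failed request falls back to the next more reliable
# pricing model. The paper may use a different policy.
FALLBACK = {"spot": "spot_block", "spot_block": "on_demand"}


def plan_reconfiguration(instances):
    """Return (name, old_pricing, new_pricing) for every instance
    that was reclaimed or whose spot request was not fulfilled."""
    plan = []
    for inst in instances:
        if inst.state in (State.RECLAIMED, State.UNFULFILLED):
            target = FALLBACK.get(inst.pricing, "on_demand")
            plan.append((inst.name, inst.pricing, target))
    return plan


if __name__ == "__main__":
    fleet = [
        Instance("vm-1", "spot", State.RUNNING),
        Instance("vm-2", "spot", State.RECLAIMED),
        Instance("vm-3", "spot_block", State.UNFULFILLED),
    ]
    for step in plan_reconfiguration(fleet):
        print(step)
```

A real strategy would also have to check that the replacement instance still fits the remaining deadline and budget before committing to it; the sketch leaves that decision out.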

2. Related Work

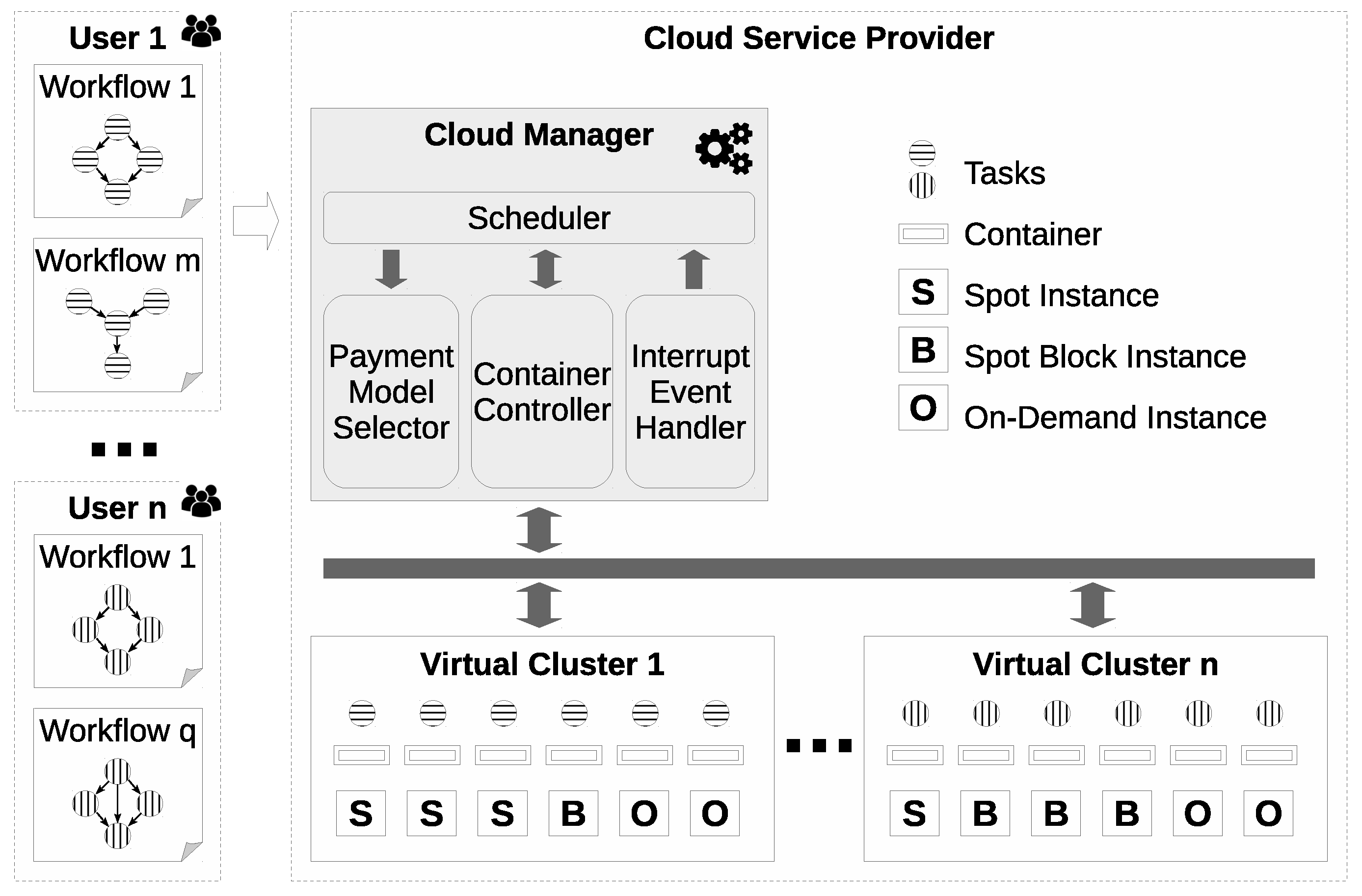

3. Reliable Computing Environments

3.1. System Overview

3.2. Achieving Computational Reliability

3.3. Workflow Application Model

3.4. Scheduling Algorithm

Algorithm 1. MISER scheduling algorithm.

Algorithm 2. Cloud manager.

4. Evaluation Scenario

4.1. Workloads Description

4.2. SI Interruption Description

4.3. Specification of Instances

4.4. Algorithms Considered for Comparison

4.5. Performance Metrics

4.6. Simulation Setup

5. Results and Analysis

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| VM Instance | On-demand, O (USD/hour) | Spot, S (USD/hour) |
|---|---|---|
| t2.small | 0.0230 | 0.0069 |
| t2.medium | 0.0464 | 0.0139 |
| t2.xlarge | 0.1856 | 0.0557 |
| t2.2xlarge | 0.3712 | 0.1114 |

| VM Instance | Spot block, B 1 h (USD/hour) | Spot block, B 6 h (USD/hour) |
|---|---|---|
| t2.small | 0.0130 | 0.0160 |
| t2.medium | 0.0260 | 0.0320 |
| t2.xlarge | 0.1020 | 0.1300 |
| t2.2xlarge | 0.2040 | 0.2600 |
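To make the price gap between the pricing models concrete, the short Python sketch below computes the saving of each option relative to the on-demand price, using only the values from the two price tables above. It assumes that O denotes on-demand, S denotes spot, and B denotes spot block with a 1 h or 6 h duration, following the pricing models listed in the introduction; the dictionary layout and the `saving` helper are illustrative names, not part of the paper.

```python
# Hourly prices in USD, copied from the two price tables.
# Keys: O = on-demand, S = spot, B1h / B6h = spot block (assumed reading).
PRICES = {
    "t2.small":   {"O": 0.0230, "S": 0.0069, "B1h": 0.0130, "B6h": 0.0160},
    "t2.medium":  {"O": 0.0464, "S": 0.0139, "B1h": 0.0260, "B6h": 0.0320},
    "t2.xlarge":  {"O": 0.1856, "S": 0.0557, "B1h": 0.1020, "B6h": 0.1300},
    "t2.2xlarge": {"O": 0.3712, "S": 0.1114, "B1h": 0.2040, "B6h": 0.2600},
}


def saving(instance_type, model):
    """Fraction of the on-demand price saved by the given pricing model."""
    row = PRICES[instance_type]
    return 1.0 - row[model] / row["O"]


if __name__ == "__main__":
    for vm in PRICES:
        parts = [f"{m}: {saving(vm, m):.1%}" for m in ("S", "B1h", "B6h")]
        print(vm, ", ".join(parts))
```

Across all four instance types, the spot price is roughly 30% of the on-demand price, the 1 h spot block costs about 55% to 57% of it, and the 6 h spot block about 69% to 70%.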

| Metric | Budget = 0.25, Deadline = 1.0 | Budget = 0.25, Deadline = 1.5 | Budget = 0.50, Deadline = 1.0 | Budget = 0.50, Deadline = 1.5 |
|---|---|---|---|---|
| Unfulfilled Spot Requests (*-OB) | 110 | 126 | 118 | 122 |
| Unfulfilled Spot Requests (*-OBS) | 175 | 184 | 175 | 174 |
| Migration of Tasks (*-OBS) | 36 | 47 | 35 | 30 |
| Additional Schedules (*-OB) | 2.05% | 2.35% | 2.18% | 2.27% |
| Additional Schedules (*-OBS) | 3.93% | 4.30% | 3.89% | 3.80% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sampaio, A.M.; Barbosa, J.G. Constructing Reliable Computing Environments on Top of Amazon EC2 Spot Instances. Algorithms 2020, 13, 187. https://doi.org/10.3390/a13080187
