To confirm the effectiveness of the proposed method, we conducted verification experiments on benchmark datasets, focusing on a binary classification problem.

4.1. Datasets

We conducted a verification experiment using two UCI benchmark datasets: the “abalone dataset” [10] and the “wine quality dataset” [11]. The abalone dataset summarizes measurements of the physical characteristics of abalone (eight dimensions) together with the age of each specimen. The wine quality dataset summarizes measured values of the chemical composition of wine (11 dimensions) together with the quality determined through sensory evaluation by wine experts (10 levels). To treat each task as a binary classification problem, we used the age of the abalone as the target variable in the abalone dataset and the quality of the wine as the target variable in the wine quality dataset. In the abalone dataset, data under 10 years old were assigned class label #1, and data of 10 years and older were assigned class label #2. In the wine quality dataset, data with quality level 5 or lower were assigned class label #1, and data with quality level 6 or higher were assigned class label #2.
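The labeling rule described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: the function names and the toy values are hypothetical, and only the two thresholds (age 10 and quality level 6) come from the text.

```python
def binarize_abalone_age(age_years: float) -> int:
    """Class 1 for abalone under 10 years old, class 2 for 10 years and older."""
    return 1 if age_years < 10 else 2


def binarize_wine_quality(quality: int) -> int:
    """Class 1 for quality level 5 or lower, class 2 for level 6 or higher."""
    return 1 if quality <= 5 else 2


# Toy values chosen only to exercise both sides of each threshold.
ages = [7.5, 10.0, 12.5]
qualities = [3, 5, 6, 8]
print([binarize_abalone_age(a) for a in ages])        # [1, 2, 2]
print([binarize_wine_quality(q) for q in qualities])  # [1, 1, 2, 2]
```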

Table 1 shows the abalone dataset and Table 2 the wine quality dataset used in this experiment. As shown in Table 1 and Table 2, the abalone dataset was a balanced problem with approximately the same number of data items in each class, and the wine quality dataset was an imbalanced problem with a class ratio of about 1:2.

4.2. Experimental Conditions

From the datasets prepared in Section 4.1, we randomly created the target and source domains so that they were disjoint. Specifically, first,

data items are extracted from the dataset and used as the target domain. The remaining data then form the source domain. Since transfer learning is usually employed in situations where sufficient data for the target domain cannot be secured, we set

, to reproduce such situations. In addition, since the source domain contains data labeled with a standard different from the target concept, we intentionally included data with an erroneous label, referred to in this paper as non-target data. The number of non-target data items was determined based on the size of the source domain; that is, we set the percentage of non-target data contained in the source domain at α

, and

α ×

data items were mislabeled in the source domain. As the base classifier, we used a decision tree, which is commonly used in ensemble learning, and generated multiple classifiers using bootstrap sampling. We set the amount of data extracted by bootstrap sampling at 50% of the

. In addition, the target-domain accuracy of the base classifiers learned from the target domain was calculated using five-fold cross-validation and used as the adoption criterion for the classifiers. Each method was also evaluated using five-fold cross-validation.
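The data preparation described in this subsection (a random disjoint split, deliberate mislabeling of a fraction α of the source domain, and bootstrap sampling) can be sketched as follows. This is a hedged illustration rather than the authors' implementation: the function names, the toy sizes, the rounding-down of the number of flipped items, and the choice to bootstrap from the source indices are all assumptions made for the example.

```python
import random


def split_domains(n_items, n_target, rng):
    """Randomly split item indices into disjoint target and source domains."""
    indices = list(range(n_items))
    rng.shuffle(indices)
    return indices[:n_target], indices[n_target:]


def mislabel_source(labels, source_idx, alpha, rng):
    """Flip the binary label (1 <-> 2) of a fraction alpha of the source items."""
    n_flip = int(alpha * len(source_idx))  # assumption: round down
    flipped = set(rng.sample(source_idx, n_flip))
    source_labels = {
        i: 3 - labels[i] if i in flipped else labels[i] for i in source_idx
    }
    return source_labels, flipped


def bootstrap_sample(pool, fraction, rng):
    """Draw int(fraction * len(pool)) items from pool with replacement."""
    return rng.choices(pool, k=int(fraction * len(pool)))


rng = random.Random(0)
labels = [1, 2] * 50  # 100 toy items with class labels 1 and 2
target_idx, source_idx = split_domains(len(labels), n_target=20, rng=rng)
source_labels, flipped = mislabel_source(labels, source_idx, alpha=0.25, rng=rng)
sample = bootstrap_sample(source_idx, fraction=0.5, rng=rng)
print(len(target_idx), len(source_idx), len(flipped), len(sample))  # 20 80 20 40
```

Because the problem is binary, an erroneous label is modeled here simply as the opposite class.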

4.4. Discussion

Consider the experimental results described in Section 4.3. The target variable in the abalone dataset is a quantitative label because it is the age of the abalone, while the target variable in the wine quality dataset is a qualitative label because it is based on sensory evaluation by wine experts. Therefore, the labels in the wine quality dataset are considered to contain variation. First, we discuss the accuracy of the two bagging variants (one learned from the target domain and one learned from the source domain). With bagging learned from the target domain, the accuracy improves as the number of data items in the target domain increases. In contrast, bagging learned from the source domain shows stable accuracy. It is important to note that as α increases, the accuracy of bagging with the source domain tends to decrease. When the target domain contains many data items, learning from the source domain yields worse accuracy than learning from the target domain. Next, we discuss the accuracy of TrBagg, a conventional transfer learning method, under the conditions of this experiment. In the case of

, TrBagg exhibits higher accuracy than bagging learned from the target domain under all conditions. However, when

and

, TrBagg exhibits lower accuracy than bagging. There are two possible causes. One is that when

, few classifiers learned from the source domain could be adopted. Because it is not known which data in the source domain accord with the target concept, the training data are drawn at random by bootstrap sampling. In a source domain in which more than half the data are erroneously labeled, many erroneously labeled data items will inevitably be drawn. It can therefore be inferred from the results above that many classifiers could not properly learn the target concept. The other possible cause is the evaluation method for classifier adoption. Each classifier learned from data in the source domain is adopted or discarded based on its accuracy on the target domain. In this process, the target-domain accuracy of bagging learned from the target domain is used as the adoption criterion. In the case of

, the accuracy of bagging learned from the target domain improves. As a result, the adoption criterion also rises, which likely makes it difficult to adopt many classifiers. Thus, TrBagg is not fully effective when the target domain contains a large number of data items and more than half the data in the source domain are erroneously labeled.
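The adoption step discussed above can be illustrated with a small sketch. It is a simplification under stated assumptions, not TrBagg itself: classifiers are plain Python callables, the names are hypothetical, and adopting a classifier when its accuracy is greater than or equal to the criterion (rather than strictly greater) is an assumption.

```python
def accuracy(classifier, samples, labels):
    """Fraction of samples for which the classifier predicts the given label."""
    correct = sum(1 for x, y in zip(samples, labels) if classifier(x) == y)
    return correct / len(labels)


def adopt_classifiers(candidates, target_x, target_y, criterion):
    """Keep only candidates whose target-domain accuracy reaches the criterion."""
    return [c for c in candidates if accuracy(c, target_x, target_y) >= criterion]


# Toy target domain: one feature, class 1 below 0.5 and class 2 above.
target_x = [0.1, 0.4, 0.6, 0.9]
target_y = [1, 1, 2, 2]


def good_classifier(x):
    return 1 if x < 0.5 else 2  # matches the target concept


def constant_classifier(x):
    return 2  # always predicts class 2


def inverted_classifier(x):
    return 2 if x < 0.5 else 1  # learned from flipped labels


candidates = [good_classifier, constant_classifier, inverted_classifier]
lenient = adopt_classifiers(candidates, target_x, target_y, criterion=0.5)
strict = adopt_classifiers(candidates, target_x, target_y, criterion=0.75)
print(len(lenient), len(strict))  # 2 1
```

Raising the criterion from 0.5 to 0.75 discards one more candidate, which mirrors the point that a more accurate target-domain bagging baseline leaves fewer source-domain classifiers to adopt.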

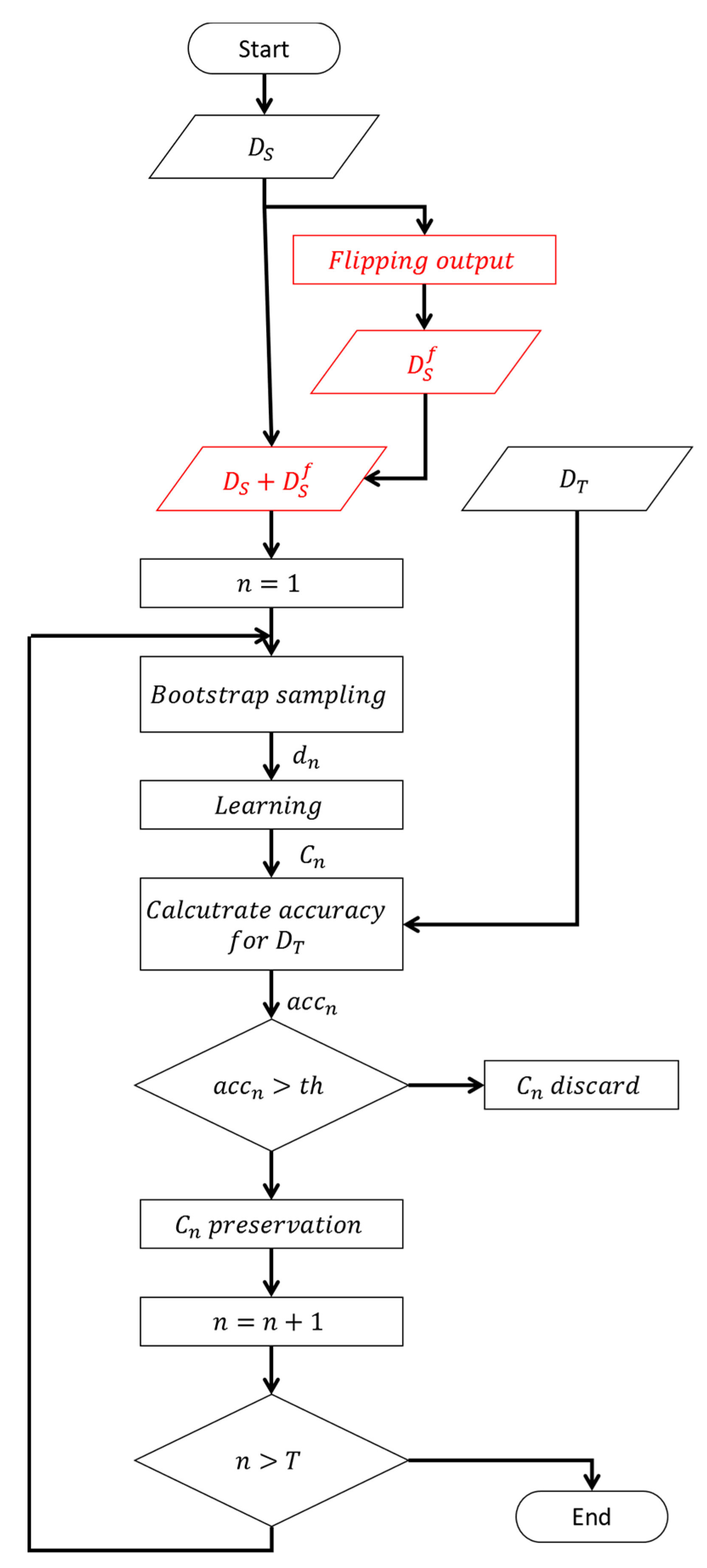

Next, we consider the proposed method. Under all conditions of the validation experiments, the accuracy of the proposed method is higher than that of TrBagg. This shows the effect of extending the source domain by flipping output. Flipping output has two expected effects: (1) an increase in diversity as the number of data items increases, and (2) proper labeling of mislabeled data. Let us examine each of these effects. We assume that all the data in the source domain are assigned a correct label (i.e., α = 0), and evaluate the improvement in accuracy of the proposed method. Table 5 shows the results. In many cases, the accuracy of the proposed method is not improved compared to TrBagg. This indicates that little performance improvement can be expected from an increase in diversity. Therefore, the improvement in performance of the proposed method is attributed to proper labeling of mislabeled data. Notably, even when the accuracy of TrBagg is worse than that of bagging (

), the accuracy can be improved by using the proposed method.

To further verify the effectiveness of the proposed method, we introduce effect size, a statistical indication of the effectiveness of a proposed method. In this paper, we used an effect size [12] that represents the difference between two groups. It is computed from the sample size, the variance, and the sample average of each of the two groups. The larger its value, the larger the difference between the average values of the two groups.
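The effect size used here is described in terms of the sample size, variance, and sample average of each group. As a hedged illustration, the sketch below computes one common statistic built from exactly those quantities: a standardized mean difference with a pooled standard deviation (often called Cohen's d). Whether this matches the formula of [12] term by term is an assumption. The function name, the use of unbiased sample variances, the absolute value, and the toy numbers are all choices made for the example.

```python
import math


def pooled_effect_size(mean_a, var_a, n_a, mean_b, var_b, n_b):
    """Standardized mean difference between two groups with a pooled SD.

    Assumes var_a and var_b are unbiased sample variances (divisor n - 1).
    """
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)
    return abs(mean_a - mean_b) / math.sqrt(pooled_var)


# Toy numbers: means two units apart, both variances 4.0 (standard deviation 2.0).
d = pooled_effect_size(mean_a=5.0, var_a=4.0, n_a=10, mean_b=3.0, var_b=4.0, n_b=10)
print(d)  # 1.0
```

Under this form, a value near 0.2 means the group averages differ by about one fifth of a pooled standard deviation, and a value of 0.9 or more means they differ by nearly a full standard deviation.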

Table 6 shows the effect size between the proposed method and bagging under each condition of the verification experiment, and Table 7 shows the effect size between the proposed method and TrBagg. Table 6 and Table 7 clearly show that the proposed method has a large effect size.

Table 6 shows that the effect size increases as α increases. In particular, when

, the effect size compared with TrBagg is very large, at 0.9 or more. Therefore, the proposed method becomes more effective as more data in the source domain are incorrectly labeled. However, when α is small, the effect size is as small as 0.2. This suggests that the proposed method is most effective when the source domain contains many errors.

Table 7 also shows that the proposed method is effective when

is small.