Matrix Adaptation Evolution Strategy with Multi-Objective Optimization for Multimodal Optimization

Abstract

1. Introduction

- The MMOP is transferred into two objective optimization problems with strong objective confliction; the advantage of multi-objective optimization can be fully used to ensure the diversity of the population.

- The information of the population landscape and the fitness of the objective function are employed to construct two conflicting objective functions instead of utilizing classical niching strategies. Moreover, the archive is employed to save better individuals, which are helpful to ensure the convergence of the algorithm. In this manner, the exploration and exploitation abilities of the algorithm are balanced effectively.

- The population is divided into several subpopulations in MA-ES instead of using one population in CMA-ES. In this way, the algorithm can explore and exploit in parallel within these subpopulations to find multiple optimal solutions for the given problem. Moreover, the niching method is employed to improve the diversity of the population.

- Systematic experiments conducted to compare the algorithms including CMA-ES, MA-ES, CMA-ES-Niching (CMA-N), CMA-ES-Niching-MO (CMA-NMO), CMA-ES Niching with Mahalanobis Metric [20] (CMA-NMM), CMA-NMM-MO, MA-ES-Niching (MA-ESN), and MA-ESN-MO on CEC2013 multimodal benchmark problems [21] are described. CMA-NMO and CMA-NMM-MO are obtained by introducing the proposed method into CMA-ES and CMA-NMM. The experimental results show that the proposed method is promising for solving multimodal optimization problems.

2. Related Work

3. Variants of CMA-ES and MA-ES Algorithm

3.1. Variants of CMA-ES

3.2. MA-ES Algorithm

| Algorithm 1. MA-ES algorithm. | |

| 1: | Initialize (y(0), σ(0), g = 0, s(0) := 0, M(0) = I) |

| 2: | while termination condition(s) is not fulfilled |

| 3: | for l = 1 to λ do |

| 4: | |

| 5: | |

| 6: | |

| 7: | end for |

| 8: | SortOffspringPopulation |

| 9: | Update y(g+1) according to (1) |

| 10: | Update s(g+1) according to (2) |

| 11: | Update M(g+1) according to (5) |

| 12: | Update σ(g+1) according to (6) |

| 13: | g = g + 1 |

| 14: | end while |

4. The MA-ESN-MO Algorithm

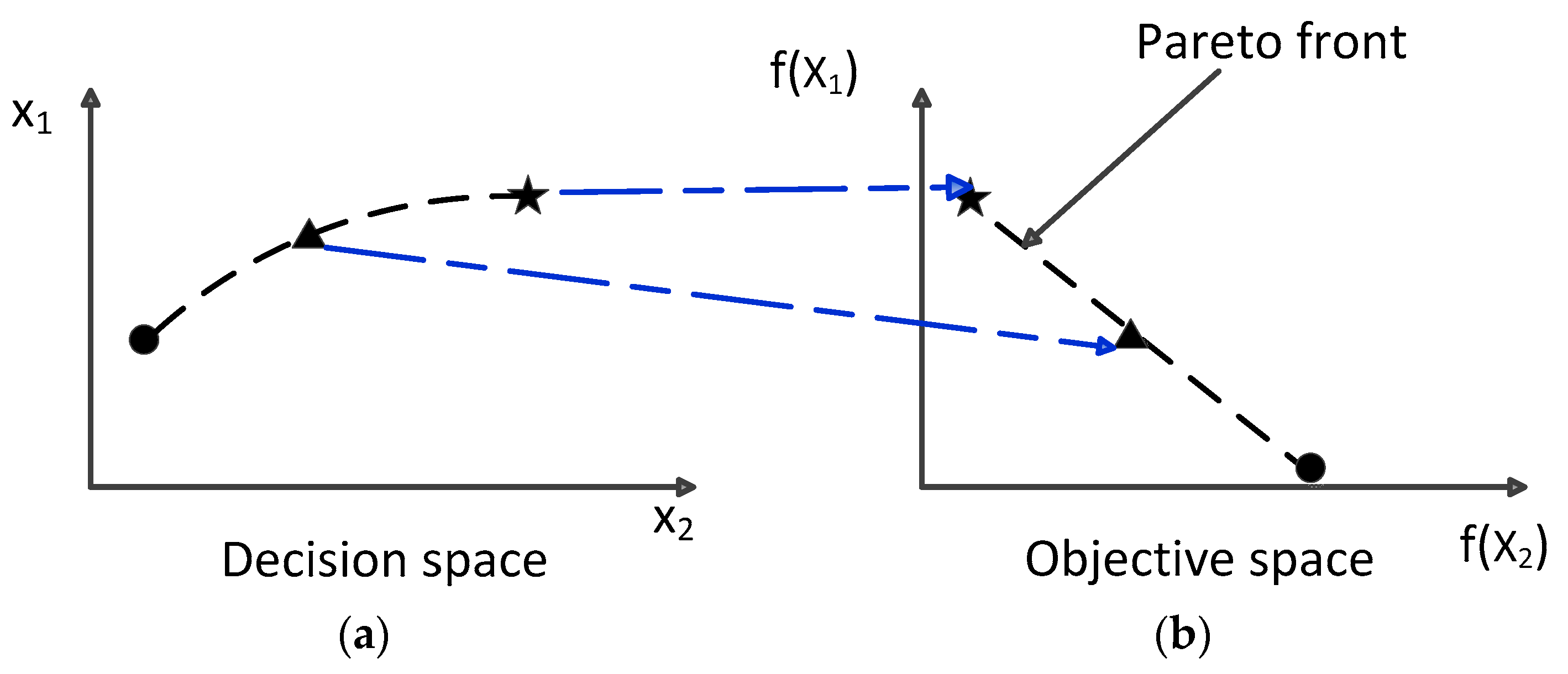

4.1. Transforming an MMOP into an MOP

4.2. Matrix Adaptation Evolution Strategy with Multi-Objective Optimization Algorithm

| Algorithm 2. MA-ESN-MO algorithm. | |

| 1: | Initialize D(number of dimensions), λ, y(0), σ(0), s(0), d(0), M(0) and NP, g = 0 |

| 2: | Initialize NP parents |

| 3: | while the termination condition is not satisfied |

| 4: | Generate the individuals according to Equation (1) |

| 5: | Calculate the objective function value of each individual |

| 6: | Sort the population according to the objective function value |

| 7: | Compute the f1 and f2 values of each dimension according to equations (7) and (8) |

| 8: | if rand > 0.5 |

| 9: | Generate the seeds of niching according to the non-dominated sorting procedure |

| 10: | else |

| 11: | Generate the seeds of niching with the dynamic peak identification algorithm (Algorithm 2) |

| 12: | end if |

| 13: | Update s(g+1) according to Equation (2) |

| 14: | Update M(g+1) according to Equation (5) |

| 15: | Update σ(g+1) according to Equation (6) |

| 16: | g = g + 1 |

| 17: | if g = = 1 |

| 18: | Archive = x(g) |

| 19: | else |

| 20: | if C(g) < C(g−1) |

| 21: | x(g) = Archive |

| 22: | else |

| 23: | Archive = x(g) |

| 24: | end if |

| 25: | end if |

| 26: | end while |

| Output: the global optima with the maximum objective function value in the population. | |

| Algorithm 3. Dynamic Peak Identification [15]. | |

| 1: | Input niche radius ρ, population Pop, and population size NP |

| 2: | Sort Pop according to the objective function value |

| 3: | NumPeak = 1 |

| 4: | DPS = {Pop{1}} |

| 5: | Niche (NumPeak) = {Pop{1}} |

| 6: | fori = 2 to NP |

| 7: | for k = 1 to NumPeak |

| 8: | if Pop{i} and DPS (k) belong to the same niche |

| 9: | Niche (k) = Niche (k)∪{Pop{i}} |

| 10: | end if |

| 11: | end for |

| 12: | ifPop{i} is not within ρ of peak in DPS |

| 13: | NumPeak = NumPeak + 1 |

| 14: | DPS = DPS∪{Pop{i}} |

| 15: | end if |

| 16: | end for |

| Output: DPS and Niche | |

- (1)

- Two conflicting objective functions are constructed differently. The landscape information of the population is usually helpful for judging the diversity of the population. The fitness of the objective function is helpful for obtaining the global optimum. Therefore, the two strongly conflicting objectives are designed by the landscape information of the population and the fitness of the objective function. In order to find multiple optimal solutions, exploration should be paid attention to in the early stage of evolution, and exploitation should be considered in the later stage of evolution. Therefore, the parameter α, which is proportional to the number of function evaluations, is introduced to adjust for exploration and exploitation.

- (2)

- The archive is employed to save the seeds of niching. In the classical CMA-ES algorithm and MA-ES algorithm, the parent will be discarded after the offspring are yielded. The CMA-ES and MA-ES perform well in solving unimodal optimization problems because of their strong exploratory ability. In multimodal optimization problems, the parent is responsible for finding the area where the potential optimal solution existed, while the offspring are responsible for exploitation. However, sometimes the offspring perform worse than their parents. As a result, the offspring may fail to find the optimal solution. As the evolution continues, the area where the potential optimal solution existed may be abandoned. Therefore, it will be difficult for the population to find all the global optimal solutions. To alleviate this issue, the archive is introduced in the MA-ESN-MO to save better individuals. At each generation, the individuals in the archive will be used as the parents for the next generation. If the optimal individual from offspring performs better than its parent, the individual will be saved in the archive. Otherwise, the parent will be saved in the archive. Then, it ensures the population to find all the global optimal solutions.

- (3)

- The non-dominated solutions include all the multiple optima of an MMOP. However, they also include some inferior solutions. Then, the best niche may occupy the population’s resources during the evolution. To address this issue, the dynamic peak identification strategy is used to avoid converging toward a single global optimum. However, the performance of the dynamic peak identification strategy is highly sensitive to the niching radius. In other words, the performance of the algorithm deteriorates with an inappropriate niching radius. Therefore, the transformation strategy and the dynamic peak identification strategy are dynamically employed in MA-ESN-MO to improve the performance of the algorithm.

5. Experiments and Discussions

5.1. Parameter Settings and Performance Criteria

5.1.1. Peak Ratio

5.1.2. Success Rate

5.1.3. Average Number of Peaks Found

5.2. Experimental Results of Eight Optimization Algorithms

6. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

- Singh, G.; Deb, K. Comparison of multimodal optimization algorithms based on evolutionary algorithms. In Proceedings of the Genetic and Evolutionary Computation Conference, Seattle, WA, USA, 8–12 July 2006; pp. 1305–1312. [Google Scholar]

- Li, X. Efficient differential evolution using speciation for multimodal function optimization. In Proceedings of the Genetic and Evolutionary Computation Conference, Washington DC, USA, 25–29 June 2005; pp. 873–880. [Google Scholar]

- Li, X. Niching without niching parameters: Particle swarm optimization using a ring topology. IEEE Trans. Evol. Comput. 2010, 14, 150–169. [Google Scholar] [CrossRef]

- Shir, O.M.; Emmerich, M.; Back, T. Adaptive niche radii and niche shapes approaches for niching with the CMA-ES. Evol. Comput. 2010, 18, 97–126. [Google Scholar] [CrossRef] [PubMed]

- Li, J.P.; Balazs, M.E.; Parks, G.T.; Clarkson, P.J. A species conserving genetic algorithm for multimodal function optimization. Evol. Comput. 2002, 10, 207–234. [Google Scholar] [CrossRef] [PubMed]

- Thomsen, R. Multimodal optimization using crowding-based differential evolution. In Proceedings of the IEEE Congress on Evolutionary Computation, Portland, OR, USA, 19–23 June 2004; pp. 1382–1389. [Google Scholar]

- Harik, G.R. Finding multimodal solutions using restricted tournament selection. In Proceedings of the International Conference on Genetic Algorithms, Pittsburgh, PA, USA, 15–19 June 1995; pp. 24–31. [Google Scholar]

- Goldberg, D.E.; Richardson, J. Genetic algorithms with sharing for multimodal function optimization. In Proceedings of the International Conference on Genetic Algorithms, Cambridge, MA, USA, 6–8 July 1987; pp. 41–49. [Google Scholar]

- Petrowski, A. A clearing procedure as a niching method for genetic algorithms. In Proceedings of the IEEE Congress on Evolutionary Computation, Nagoya, Japan, 20–22 May 1996; pp. 798–803. [Google Scholar]

- Hansen, N.; Müller, S.D.; Koumoutsakos, P. Reducing the Time Complexity of the Derandomized Evolution Strategy with Covariance Matrix Adaptation (CMA-ES). Evol. Comput. 2003, 11, 1–18. [Google Scholar] [CrossRef] [PubMed]

- Beyer, H.G.; Sendhoff, B. Simplify Your Covariance Matrix Adaptation Evolution Strategy. IEEE Trans. Evol. Comput. 2017, 21, 746–759. [Google Scholar] [CrossRef]

- Hansen, N.; Kern, S. Evaluating the CMA Evolution Strategy on Multimodal Test Functions. In Parallel Problem Solving from Nature—PPSN VIII; Springer: Berlin/Heidelberg, Germany, 2004; pp. 282–291. [Google Scholar]

- Shir, O.M.; Bäck, T. Niche Radius Adaptation in the CMA-ES Niching Algorithm. In Parallel Problem Solving from Nature—PPSN IX; Springer: Berlin/Heidelberg, Germany, 2006; pp. 142–151. [Google Scholar]

- Shir, O.M.; Back, T. Dynamic niching in evolution strategies with covariance matrix adaptation. In Proceedings of the IEEE Congress on Evolutionary Computation, Edinburgh, UK, 2–5 September 2005; Volume 3, pp. 2584–2591. [Google Scholar]

- Basak, A.; Das, S.; Tan, K.C. Multimodal Optimization Using a Biobjective Differential Evolution Algorithm Enhanced with Mean Distance-Based Selection. IEEE Trans. Evol. Comput. 2013, 17, 666–685. [Google Scholar] [CrossRef]

- Deb, K.; Saha, A. Multimodal optimization using a bi-objective evolutionary algorithm. Evol. Comput. 2012, 20, 27–62. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Li, H.X.; Yen, G.G.; Song, W. MOMMOP: Multiobjective Optimization for Locating Multiple Optimal Solutions of Multimodal Optimization Problems. IEEE Trans. Cybern. 2015, 45, 830–843. [Google Scholar] [CrossRef] [PubMed]

- Yao, J.; Kharma, N.; Grogono, P. Bi-Objective Multipopulation Genetic Algorithm for Multimodal Function Optimization. IEEE Trans. Evol. Comput. 2010, 14, 80–102. [Google Scholar]

- Yu, W.J.; Ji, J.Y.; Gong, Y.J.; Yang, Q.; Zhang, J. A Tri-Objective Differential Evolution Approach for Multimodal Optimization. Inf. Sci. 2017, 423, 1–23. [Google Scholar] [CrossRef]

- Shir, O.M.; Emmerich, M.; Back, T. Self-Adaptive Niching CMA-ES with Mahalanobis Metric. In Proceedings of the IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007. [Google Scholar]

- Li, X.; Engelbrecht, A.; Epitropakis, M. Benchmark Functions for CEC 2013 Special Session and Competition on Niching Methods for Multimodal Function Optimization; Technical Report; Royal Melbourne Institute of Technology: Melbourne, VIC, Australia, 2013. [Google Scholar]

- Cheng, R.; Li, M.; Li, K.; Yao, X. Evolutionary Multiobjective Optimization Based Multimodal Optimization: Fitness Landscape Approximation and Peak Detection. IEEE Trans. Evol. Comput. 2018, 22, 692–706. [Google Scholar] [CrossRef]

- Qu, B.Y.; Suganthan, P.N.; Liang, J.J. Differential Evolution with neighborhood mutation for multimodal optimization. IEEE Trans. Evol. Comput. 2012, 16, 601–614. [Google Scholar] [CrossRef]

- SMahfoud, W. Niching Methods for Genetic Algorithms. Ph.D. Thesis, University of Illinois at Urbana-Champaign, Champaign, IL, USA, 1995. [Google Scholar]

- Mengsheol, O.; Goldberg, D. Probabilistic crowding: Deterministic crowding with probabilistic replacement. In Proceedings of the Genetic and Evolutionary Computation Conference, Orlando, FL, USA, 13–17 July 1999; pp. 409–416. [Google Scholar]

- Fayek, M.B.; Darwish, N.M.; Ali, M.M. Context based clearing procedure: A niching method for genetic algorithms. J. Adv. Res. 2010, 1, 301–307. [Google Scholar] [CrossRef]

- Hui, S.; Suganthan, P.N. Ensemble and Arithmetic Recombination-Based Speciation Differential Evolution for Multimodal Optimization. IEEE Trans. Cybern. 2016, 46, 64–74. [Google Scholar] [CrossRef] [PubMed]

- Yang, Q.; Chen, W.N.; Yu, Z.; Gu, T. Adaptive Multimodal Continuous Ant Colony Optimization. IEEE Trans. Evol. Comput. 2017, 21, 191–205. [Google Scholar] [CrossRef]

- Haghbayan, P.; Nezamabadi-Pour, H.; Kamyab, S. A niche GSA method with nearest neighbor scheme for multimodal optimization. Swarm Evol. Comput. 2017, 35, 78–92. [Google Scholar] [CrossRef]

- Qu, B.Y.; Suganthan, P.N.; Das, S. A Distance-Based Locally Informed Particle Swarm Model for Multimodal;Optimization. IEEE Trans. Evol. Comput. 2013, 17, 387–402. [Google Scholar] [CrossRef]

- He, X.; Zhou, Y. Enhancing the performance of differential evolution with covariance matrix self-adaptation. Appl. Soft Comput. 2018, 64, 227–243. [Google Scholar] [CrossRef]

- Wang, Y.; Li, H.X.; Huang, T.; Li, L. Differential evolution based on covariance matrix learning and bimodal distribution parameter setting. Appl. Soft Comput. J. 2014, 18, 232–247. [Google Scholar] [CrossRef]

- Chen, X.; Tianfield, H.; Du, W.; Liu, G. Biogeography-based optimization with covariance matrix based migration. Appl. Soft Comput. 2016, 45, 71–85. [Google Scholar] [CrossRef]

- Ghosh, S.; Das, S.; Roy, S.; Sk, M.I. A Differential Covariance Matrix Adaptation Evolutionary Algorithm for real parameter optimization. Inf. Sci. 2012, 182, 199–219. [Google Scholar] [CrossRef]

- He, X.Y.; Zhou, Y.R.; Chen, Z.F. Evolutionary Bilevel Optimization based on Covariance Matrix Adaptation. IEEE Trans. Evol. Comput. 2018, 1–21. [Google Scholar] [CrossRef]

- Awad, N.; Ali, M.Z.; Suganthan, P.N.; Reynolds, R.G. A novel differential crossover strategy based on covariance matrix learning with Euclidean neighborhood for solving real-world problems. In Proceedings of the IEEE Congress on Evolutionary Computation, San Sebastian, Spain, 5–8 June 2017; pp. 380–386. [Google Scholar]

- Jiang, Q.; Wang, L.; Cheng, J.; Zhu, X.; Li, W.; Lin, Y.; Yu, G.; Hei, X.; Zhao, J.; Lu, X. Multi-objective differential evolution with dynamic covariance matrix learning for multi-objective optimization problems with variable linkages. Knowl. Based Syst. 2017, 121, 111–128. [Google Scholar] [CrossRef]

- Wang, T.C.; Liaw, R.T.; Ting, C.K. MOEA/D using covariance matrix adaptation evolution strategy for complex multi-objective optimization problems. In Proceedings of the IEEE Congress on Evolutionary Computation, Vancouver, BC, Canada, 24–29 July 2016; pp. 983–990. [Google Scholar]

- Wang, J.H.; Liao, J.J.; Zhou, Y.; Cai, Y.Q. Differential Evolution Enhanced with Multiobjective Sorting-Based Mutation Operators. IEEE Trans. Cybern. 2014, 44, 2792–2805. [Google Scholar] [CrossRef] [PubMed]

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197. [Google Scholar] [CrossRef]

- Wang, Y.; Cai, Z.X.; Zhang, Q.F. Differential evolution with composite trial vector generation strategies and control parameters. IEEE Trans. Evol. Comput. 2011, 15, 55–66. [Google Scholar] [CrossRef]

- Alcalá-Fdez, J.; Sánchez, L.; García, S.; del Jesus, M.J.; Ventura, S.; Garrell, J.M.; Otero, J.; Romero, C.; Bacardit, J.; Rivas, V.M.; et al. KEEL: A software tool to assess evolutionary algorithms to data mining problems. Soft Comput. 2009, 13, 307–318. [Google Scholar] [CrossRef]

| Fun. | ε | r | D | Number of Global Optima | Number of Function Evaluations | Population Size |

|---|---|---|---|---|---|---|

| f1 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 1 | 2 | 5 × 104 | 80 |

| f2 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 1 | 5 | 5 × 104 | 80 |

| f3 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 1 | 1 | 5 × 104 | 80 |

| f4 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 2 | 4 | 5 × 104 | 80 |

| f5 | 0.1/0.01/0.001/0.0001/0.00001 | 0.5 | 2 | 2 | 5 × 104 | 80 |

| f6 | 0.1/0.01/0.001/0.0001/0.00001 | 0.5 | 2 | 18 | 2 × 105 | 100 |

| f7 | 0.1/0.01/0.001/0.0001/0.00001 | 0.2 | 2 | 36 | 2 × 105 | 300 |

| f8 | 0.1/0.01/0.001/0.0001/0.00001 | 0.5 | 3 | 81 | 4 × 105 | 300 |

| f9 | 0.1/0.01/0.001/0.0001/0.00001 | 0.2 | 3 | 216 | 4 × 105 | 300 |

| f10 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 2 | 12 | 2 × 105 | 100 |

| f11 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 2 | 6 | 2 × 105 | 200 |

| f12 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 2 | 8 | 2 × 105 | 200 |

| f13 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 2 | 6 | 2 × 105 | 200 |

| f14 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 3 | 6 | 4 × 105 | 200 |

| f15 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 3 | 8 | 4 × 105 | 200 |

| f16 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 5 | 6 | 4 × 105 | 200 |

| f17 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 5 | 8 | 4 × 105 | 200 |

| f18 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 10 | 6 | 4 × 105 | 200 |

| f19 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 10 | 8 | 4 × 105 | 200 |

| f20 | 0.1/0.01/0.001/0.0001/0.00001 | 0.01 | 20 | 8 | 4 × 105 | 200 |

| Fun. | ε | CMA-ES | MA-ES | CMA-N | CMA-NMO | CMA-NMM | CMA-NMM-MO | MA-ESN | MA-ESN-MO |

|---|---|---|---|---|---|---|---|---|---|

| f1 | 0.1 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |

| 0.01 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | |

| 0.001 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | |

| 0.00001 | 2 | 1.96 | 2 | 2 | 2 | 2 | 2 | 2 | |

| f2 | 0.1 | 1 | 1 | 5 | 5 | 5 | 5 | 5 | 5 |

| 0.01 | 1 | 1 | 5 | 5 | 5 | 5 | 4.92 | 5 | |

| 0.001 | 1 | 1 | 4.52 | 5 | 5 | 5 | 4.32 | 5 | |

| 0.00001 | 1 | 1 | 3.20 | 3.68 | 5 | 1.96 | 2.16 | 1.44 | |

| f3 | 0.1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 0.01 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| 0.001 | 1 | 1 | 1 | 1 | 1 | 1 | 0.96 | 1 | |

| 0.00001 | 1 | 1 | 0.60 | 0.28 | 1 | 0.48 | 0.56 | 0.60 | |

| f4 | 0.1 | 1 | 1 | 4 | 4 | 2.68 | 4 | 4 | 4 |

| 0.01 | 1 | 1 | 4 | 4 | 2.68 | 4 | 4 | 4 | |

| 0.001 | 1 | 1 | 2.64 | 4 | 2.68 | 4 | 2.56 | 4 | |

| 0.00001 | 1 | 1 | 2.56 | 3.96 | 2.56 | 3.80 | 2.36 | 3.96 | |

| f5 | 0.1 | 1.12 | 1.04 | 2 | 2 | 2 | 2 | 2 | 2 |

| 0.01 | 1.12 | 1.04 | 1.80 | 2 | 1.64 | 2 | 1.80 | 2 | |

| 0.001 | 1.12 | 1.04 | 1.52 | 2 | 1.48 | 2 | 1.60 | 2 | |

| 0.00001 | 1.12 | 1.04 | 1.40 | 1.80 | 1.32 | 1.72 | 1.32 | 1.80 | |

| f6 | 0.1 | 1 | 1 | 13.56 | 17.56 | 12.72 | 17.52 | 14.12 | 17.40 |

| 0.01 | 1 | 1 | 13.48 | 17.48 | 12.72 | 17.48 | 14.04 | 17.36 | |

| 0.001 | 1 | 1 | 13.44 | 17.40 | 12.72 | 17.36 | 14.00 | 17.36 | |

| 0.00001 | 1 | 0.96 | 13.40 | 17.20 | 12.72 | 17.24 | 14.00 | 17.04 | |

| f7 | 0.1 | 1 | 1 | 36.00 | 35.28 | 27.68 | 35.04 | 36.00 | 35.20 |

| 0.01 | 1 | 1 | 29.68 | 30.44 | 27.68 | 29.00 | 28.84 | 30.00 | |

| 0.001 | 1 | 1 | 29.40 | 30.28 | 27.68 | 28.96 | 28.84 | 29.64 | |

| 0.00001 | 1 | 1 | 29.52 | 29.92 | 27.16 | 28.32 | 28.56 | 29.12 | |

| f8 | 0.1 | 1.08 | 0.96 | 42.64 | 53.40 | 44.04 | 54.04 | 43.44 | 50.84 |

| 0.01 | 1.08 | 0.92 | 42.64 | 52.96 | 44.04 | 53.48 | 43.40 | 50.20 | |

| 0.001 | 1.08 | 0.76 | 42.64 | 52.40 | 44.04 | 52.92 | 43.40 | 49.68 | |

| 0.00001 | 1.08 | 0.64 | 42.60 | 50.80 | 44.04 | 51.28 | 43.36 | 47.72 | |

| f9 | 0.1 | 1 | 1.04 | 216 | 89.20 | 28.92 | 73.32 | 216 | 81.48 |

| 0.01 | 1 | 1.00 | 31.40 | 57.32 | 28.92 | 52.16 | 30.48 | 54.40 | |

| 0.001 | 1 | 1.00 | 31.40 | 46.92 | 28.92 | 41.60 | 30.48 | 43.72 | |

| 0.00001 | 1 | 0.96 | 31.40 | 33.56 | 28.92 | 31.00 | 30.48 | 31.00 | |

| f10 | 0.1 | 1 | 1 | 9.92 | 11.68 | 8.64 | 11.72 | 9.68 | 11.72 |

| 0.01 | 1 | 1 | 9.92 | 11.60 | 8.64 | 11.72 | 9.68 | 11.56 | |

| 0.001 | 1 | 1 | 9.92 | 11.40 | 8.64 | 11.64 | 9.68 | 11.28 | |

| 0.00001 | 1 | 1 | 9.92 | 11.32 | 8.64 | 11.24 | 9.68 | 10.72 |

| Fun. | ε | CMA-ES | MA-ES | CMA-N | CMA-NMO | CMA-NMM | CMA-NMM-MO | MA-ESN | MA-ESN-MO |

|---|---|---|---|---|---|---|---|---|---|

| f11 | 0.1 | 1 | 1.04 | 6.00 | 4.28 | 3.72 | 4.16 | 6.00 | 4.04 |

| 0.01 | 1 | 1.04 | 3.68 | 3.96 | 3.72 | 3.96 | 3.72 | 4.00 | |

| 0.001 | 1 | 1.04 | 3.68 | 3.92 | 3.72 | 3.96 | 3.60 | 4.00 | |

| 0.00001 | 1 | 1.04 | 3.56 | 3.88 | 3.72 | 3.92 | 3.36 | 3.96 | |

| f12 | 0.1 | 1 | 1 | 2.84 | 6.92 | 2.80 | 6.24 | 3.00 | 7.04 |

| 0.01 | 1 | 1 | 2.76 | 6.44 | 2.80 | 5.60 | 2.68 | 6.60 | |

| 0.001 | 1 | 1 | 2.64 | 6.08 | 2.80 | 5.40 | 2.48 | 6.08 | |

| 0.00001 | 1 | 1 | 2.64 | 5.80 | 2.80 | 5.36 | 2.40 | 5.80 | |

| f13 | 0.1 | 1 | 1 | 5.76 | 3.92 | 3.44 | 3.96 | 5.64 | 3.92 |

| 0.01 | 1 | 1 | 3.36 | 3.84 | 3.44 | 3.96 | 3.44 | 3.92 | |

| 0.001 | 1 | 1 | 3.32 | 3.84 | 3.44 | 3.96 | 3.32 | 3.92 | |

| 0.00001 | 1 | 1 | 3.16 | 3.72 | 3.24 | 3.92 | 3.20 | 3.92 | |

| f14 | 0.1 | 1 | 0.96 | 6.00 | 3.56 | 1.72 | 3.68 | 5.80 | 3.64 |

| 0.01 | 1 | 0.76 | 1.76 | 3.52 | 1.72 | 3.68 | 1.84 | 3.60 | |

| 0.001 | 1 | 0.76 | 1.76 | 3.52 | 1.72 | 3.68 | 1.84 | 3.52 | |

| 0.00001 | 1 | 0.72 | 1.76 | 3.48 | 1.72 | 3.48 | 1.84 | 3.48 | |

| f15 | 0.1 | 1 | 2.08 | 8.00 | 2.40 | 1.12 | 2.44 | 7.44 | 2.20 |

| 0.01 | 1 | 0.84 | 1.20 | 2.24 | 1.12 | 2.44 | 1.32 | 2.12 | |

| 0.001 | 1 | 0.80 | 1.20 | 2.08 | 1.12 | 2.44 | 1.32 | 2.12 | |

| 0.00001 | 1 | 0.72 | 1.20 | 2.00 | 1.12 | 2.40 | 1.32 | 2.12 | |

| f16 | 0.1 | 1 | 0 | 6.00 | 2.28 | 1.16 | 2.72 | 6.00 | 2.32 |

| 0.01 | 1 | 0 | 1.24 | 2.16 | 1.16 | 2.44 | 1.08 | 2.08 | |

| 0.001 | 1 | 0 | 1.24 | 2.04 | 1.16 | 2.28 | 1.08 | 2.00 | |

| 0.00001 | 1 | 0 | 1.24 | 1.92 | 1.16 | 2.24 | 1.08 | 1.84 | |

| f17 | 0.1 | 0.84 | 0 | 6.32 | 1.72 | 1 | 1.52 | 5.92 | 1.56 |

| 0.01 | 0.84 | 0 | 1.00 | 1.52 | 1 | 1.44 | 1.04 | 1.56 | |

| 0.001 | 0.84 | 0 | 1.00 | 1.52 | 1 | 1.44 | 1.04 | 1.56 | |

| 0.00001 | 0.84 | 0 | 1.00 | 1.48 | 1 | 1.40 | 1.04 | 1.48 | |

| f18 | 0.1 | 3.88 | 0 | 6 | 1.84 | 2.32 | 1.52 | 6 | 1.60 |

| 0.01 | 0.88 | 0 | 1 | 1.60 | 1.00 | 1.48 | 1 | 1.60 | |

| 0.001 | 0.24 | 0 | 1 | 1.56 | 1.00 | 1.48 | 1 | 1.56 | |

| 0.00001 | 0.16 | 0 | 1 | 1.16 | 1.00 | 1.08 | 1 | 1.04 | |

| f19 | 0.1 | 0.72 | 0 | 1.20 | 1.12 | 1.08 | 1.04 | 1.40 | 1.08 |

| 0.01 | 0.20 | 0 | 1.00 | 1.12 | 1.00 | 1.00 | 1.00 | 1.04 | |

| 0.001 | 0.16 | 0 | 1.00 | 1.08 | 1.00 | 0.84 | 1.00 | 0.96 | |

| 0.00001 | 0 | 0 | 1.00 | 1.00 | 1.00 | 0.80 | 1.00 | 0.80 | |

| f20 | 0.1 | 0 | 0 | 8 | 1 | 7.72 | 1 | 8 | 0.92 |

| 0.01 | 0 | 0 | 0.88 | 0.16 | 0.96 | 0.36 | 0.56 | 0 | |

| 0.001 | 0 | 0 | 0.04 | 0 | 0 | 0 | 0 | 0 | |

| 0.00001 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Fun. | CMA-ES | MA-ES | CMA-N | CMA-NMO | CMA-NMM | CMA-NMM-MO | MA-ESN | MA-ESN-MO | |

|---|---|---|---|---|---|---|---|---|---|

| f1 | ANP | 2(≈) | 1.96(≈) | 2(≈) | 2(≈) | 2(≈) | 2(≈) | 2(≈) | 2 |

| PR | 1 | 0.98 | 1 | 1 | 1 | 1 | 1 | 1 | |

| SR | 100% | 96% | 100% | 100% | 100% | 100% | 100% | 100% | |

| f2 | ANP | 1(−) | 1(−) | 3.64(−) | 4.32(≈) | 5(+) | 4.28(≈) | 3.04(−) | 4.40 |

| PR | 0.2 | 0.2 | 0.72 | 0.86 | 1 | 8.56 | 0.68 | 0.88 | |

| SR | 0% | 0% | 28% | 40% | 100% | 40% | 4% | 44% | |

| f3 | ANP | 1(≈) | 1(≈) | 0.72(−) | 1(≈) | 1(≈) | 1(≈) | 0.72(−) | 1 |

| PR | 1 | 1 | 0.72 | 1 | 1 | 1 | 0.72 | 1 | |

| SR | 100% | 100% | 72% | 100% | 100% | 100% | 72% | 100% | |

| f4 | ANP | 1(−) | 1(−) | 2.60(−) | 3.96(≈) | 2.56(−) | 4(≈) | 2.48(−) | 4 |

| PR | 0.25 | 0.25 | 0.65 | 0.99 | 0.64 | 1 | 0.62 | 1 | |

| SR | 0% | 0% | 8% | 96% | 8% | 100% | 4% | 100% | |

| f5 | ANP | 1.12(−) | 1.04(−) | 1.52(−) | 1.92(≈) | 1.36(−) | 1.92(≈) | 1.48(−) | 1.92 |

| PR | 0.56 | 0.52 | 0.76 | 0.96 | 0.68 | 0.96 | 0.74 | 0.96 | |

| SR | 12% | 4% | 60% | 92% | 48% | 92% | 52% | 92% | |

| f6 | ANP | 1(−) | 1(−) | 13.40(−) | 17.36(≈) | 12.72(−) | 17.36(≈) | 14.00(−) | 17.20 |

| PR | 0.05 | 0.05 | 0.74 | 0.96 | 0.70 | 0.96 | 0.77 | 0.95 | |

| SR | 0% | 0% | 0% | 52% | 4% | 60% | 0% | 44% | |

| f7 | ANP | 1(−) | 1(−) | 29.52(≈) | 30.00(≈) | 27.60(−) | 28.72(≈) | 28.60(≈) | 29.20 |

| PR | 0.02 | 0.02 | 0.82 | 0.83 | 0.76 | 0.79 | 0.79 | 0.82 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f8 | ANP | 1.08(−) | 0.68(−) | 42.60(−) | 51.60(≈) | 44.04(−) | 52.04(≈) | 43.36(−) | 48.88 |

| PR | 0.01 | 0.01 | 0.52 | 0.63 | 0.54 | 0.64 | 0.53 | 0.60 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f9 | ANP | 1(−) | 0.96(−) | 31.40(−) | 39.68(≈) | 28.92(−) | 35.88(≈) | 30.48(−) | 36.44 |

| PR | 0.004 | 0.004 | 0.14 | 0.18 | 0.13 | 0.16 | 0.14 | 0.16 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f10 | ANP | 1(−) | 1(−) | 9.92(−) | 11.40(≈) | 8.64(−) | 11.44(≈) | 9.68(−) | 11.20 |

| PR | 0.08 | 0.08 | 0.82 | 0.95 | 0.72 | 0.95 | 0.80 | 0.92 | |

| SR | 0% | 0% | 0% | 56% | 4% | 60% | 0% | 48% | |

| −/≈/+ | 8/2/0 | 8/2/0 | 8/2/0 | 0/10/0 | 7/2/1 | 0/10/0 | 8/2/0 | \ | |

| Fun. | CMA-ES | MA-ES | CMA-N | CMA-NMO | CMA-NMM | CMA-NMM-MO | MA-ESN | MA-ESN-MO | |

|---|---|---|---|---|---|---|---|---|---|

| f11 | ANP | 1(−) | 1.04(−) | 3.56(−) | 3.92(≈) | 3.72(−) | 3.96(≈) | 3.60(−) | 4 |

| PR | 0.16 | 0.17 | 0.60 | 0.65 | 0.62 | 0.66 | 0.60 | 0.66 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f12 | ANP | 1(−) | 1(−) | 2.64(−) | 6.00(≈) | 2.80(−) | 5.40(−) | 2.40(−) | 5.96 |

| PR | 0.12 | 0.12 | 0.33 | 0.75 | 0.35 | 0.67 | 0.31 | 0.75 | |

| SR | 0% | 0% | 0% | 16% | 0% | 0% | 0% | 4% | |

| f13 | ANP | 1(−) | 1(−) | 3.20(−) | 3.80(≈) | 3.44(−) | 3.96(≈) | 3.20(−) | 3.92 |

| PR | 0.16 | 0.16 | 0.54 | 0.63 | 0.57 | 0.66 | 0.53 | 0.65 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f14 | ANP | 1(−) | 0.72(−) | 1.76(−) | 3.52(≈) | 1.72(−) | 3.64(≈) | 1.84(−) | 3.48 |

| PR | 0.16 | 0.12 | 0.29 | 0.58 | 0.28 | 0.60 | 0.30 | 0.58 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f15 | ANP | 1(−) | 0.76(−) | 1.20(−) | 2.04(≈) | 1.12(−) | 2.40(≈) | 1.32(−) | 2.12 |

| PR | 0.12 | 0.09 | 0.15 | 0.25 | 0.14 | 0.30 | 0.16 | 0.26 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f16 | ANP | 1(−) | 0(−) | 1.24(−) | 1.96(≈) | 1.16(−) | 2.24(≈) | 1.08(−) | 1.84 |

| PR | 0.16 | 0 | 0.20 | 0.33 | 0.19 | 0.37 | 0.18 | 0.32 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f17 | ANP | 0.84(−) | 0(−) | 1(−) | 1.48(≈) | 1(−) | 1.44(≈) | 1.04(−) | 1.48 |

| PR | 0.10 | 0 | 0.12 | 0.18 | 0.12 | 0.18 | 0.13 | 0.19 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f18 | ANP | 0.16(−) | 0(−) | 1(≈) | 1.44(+) | 1(≈) | 1.32(+) | 1(≈) | 1.08 |

| PR | 0.02 | 0 | 0.16 | 0.24 | 0.16 | 0.22 | 0.16 | 0.18 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f19 | ANP | 0.04(−) | 0(−) | 1(≈) | 1.04(≈) | 1(≈) | 0.80(≈) | 1(≈) | 0.96 |

| PR | 0.005 | 0 | 0.12 | 0.13 | 0.12 | 0.10 | 0.12 | 0.12 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| f20 | ANP | 0(≈) | 0(≈) | 0.04(≈) | 0(≈) | 0(≈) | 0(≈) | 0(≈) | 0 |

| PR | 0 | 0 | 0.005 | 0 | 0 | 0 | 0 | 0 | |

| SR | 0% | 0% | 0% | 0% | 0% | 0% | 0% | 0% | |

| −/≈/+ | 9/1/0 | 9/1/0 | 7/3/0 | 0/9/1 | 7/3/0 | 1/8/1 | 7/3/0 | \ | |

| VS | R+ | R− | Exact p-Value | Asymptotic p-Value |

|---|---|---|---|---|

| CMA-ES | 188.5 | 1.5 | ≥0.2 | 0.000144 |

| MA-ES | 208.5 | 1.5 | ≥0.2 | 0.000103 |

| CMA-N | 182.0 | 8.0 | ≥0.2 | 0.00043 |

| CMA-NMO | 52.5 | 137.5 | ≥0.2 | 1 |

| CMA-NMM | 176.5 | 13.5 | ≥0.2 | 0.000911 |

| CMA-NMM-MO | 85.0 | 105.0 | ≥0.2 | 1 |

| MA-ESN | 205.5 | 4.5 | ≥0.2 | 0.000152 |

| Algorithm | Ranking |

|---|---|

| CMA-ES | 6.8 |

| MA-ES | 7.4 |

| CMA-N | 4.525 |

| CMA-NMO | 2.25 |

| CMA-NMM | 4.75 |

| CMA-NMM-MO | 2.55 |

| MA-ESN | 5 |

| MA-ESN-MO | 2.725 |

| Fun. | CMA-ES | MA-ES | CMA-N | CMA-NMO | CMA-NMM | CMA-NMM-MO | MA-ESN | MA-ESN-MO |

|---|---|---|---|---|---|---|---|---|

| f1 | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% |

| f2 | 20% | 20% | 90.4% | 100% | 100% | 100% | 86.4% | 100% |

| f3 | 100% | 100% | 100% | 100% | 100% | 100% | 96% | 100% |

| f4 | 25% | 25% | 60% | 100% | 67% | 100% | 65% | 100% |

| f5 | 62% | 50% | 80% | 100% | 82% | 100% | 84% | 100% |

| f6 | 5.5% | 5.3% | 71.7% | 98% | 76% | 97.1% | 77.5% | 97.1% |

| f7 | 2.7% | 2.7% | 79% | 83.3% | 77.8% | 80.2% | 81.5% | 83% |

| f8 | 1.3% | 0.7% | 52.8% | 64.1% | 54.6% | 66.1% | 51.1% | 60.9% |

| f9 | 0.4% | 0.4% | 14.4% | 22.2% | 13.7% | 17.6% | 14.6% | 21.1% |

| f10 | 8.3% | 8.3% | 85.3% | 97% | 75.6% | 98% | 83.6% | 94.6% |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, W. Matrix Adaptation Evolution Strategy with Multi-Objective Optimization for Multimodal Optimization. Algorithms 2019, 12, 56. https://doi.org/10.3390/a12030056

Li W. Matrix Adaptation Evolution Strategy with Multi-Objective Optimization for Multimodal Optimization. Algorithms. 2019; 12(3):56. https://doi.org/10.3390/a12030056

Chicago/Turabian StyleLi, Wei. 2019. "Matrix Adaptation Evolution Strategy with Multi-Objective Optimization for Multimodal Optimization" Algorithms 12, no. 3: 56. https://doi.org/10.3390/a12030056

APA StyleLi, W. (2019). Matrix Adaptation Evolution Strategy with Multi-Objective Optimization for Multimodal Optimization. Algorithms, 12(3), 56. https://doi.org/10.3390/a12030056