Microscopic Object Recognition and Localization Based on Multi-Feature Fusion for In-Situ Measurement In Vivo

Abstract

1. Introduction

2. Materials and Methods

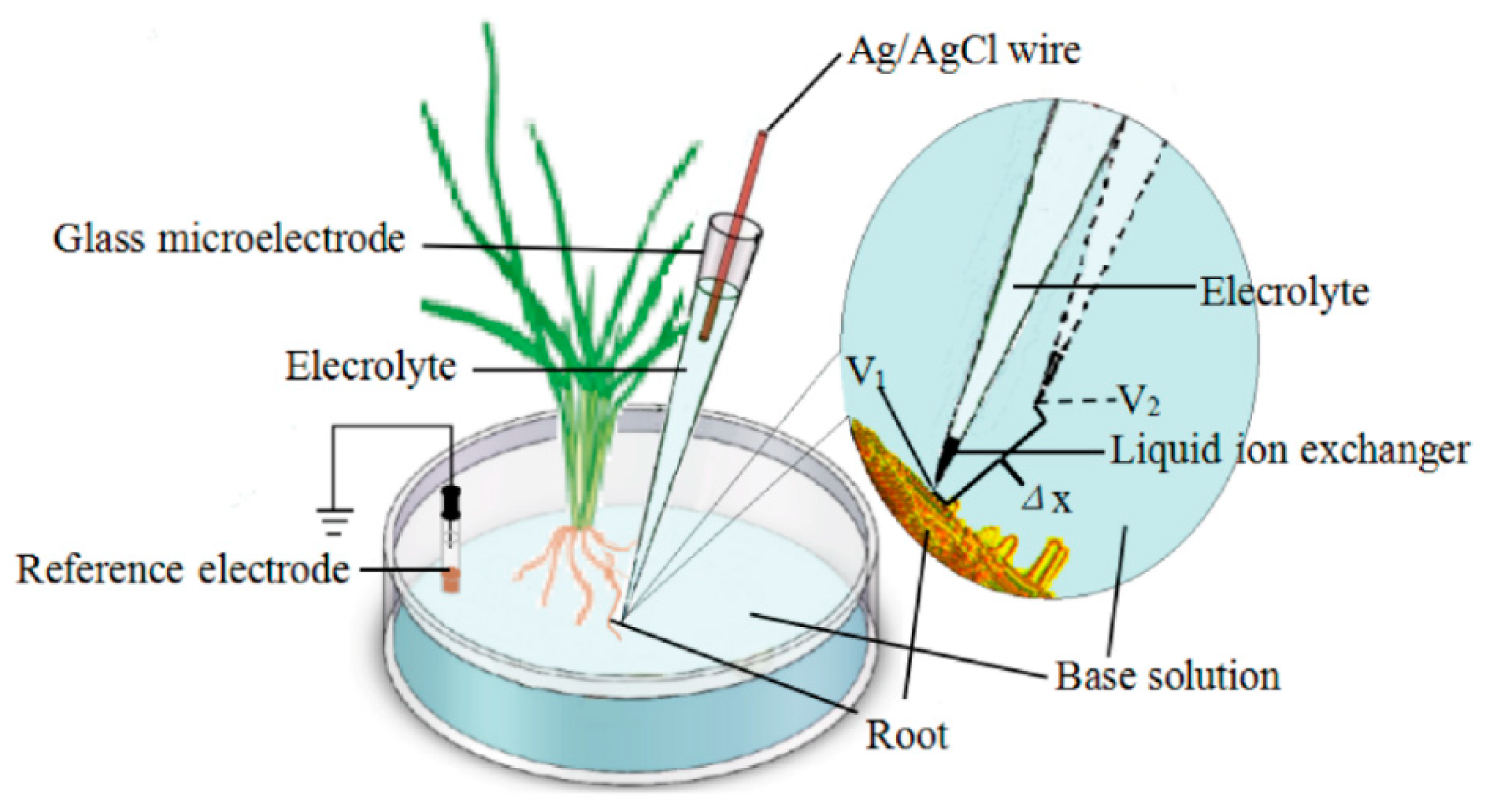

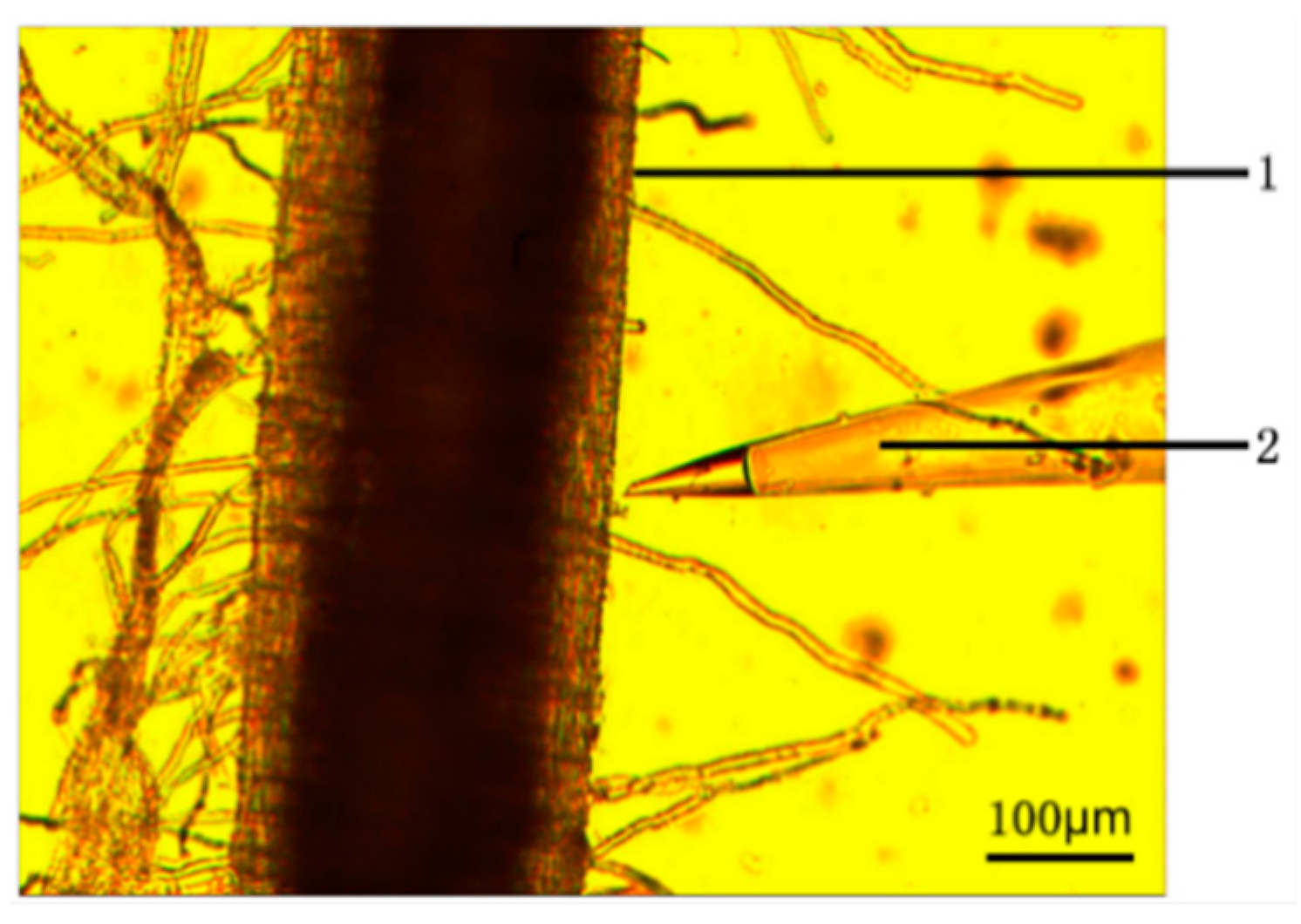

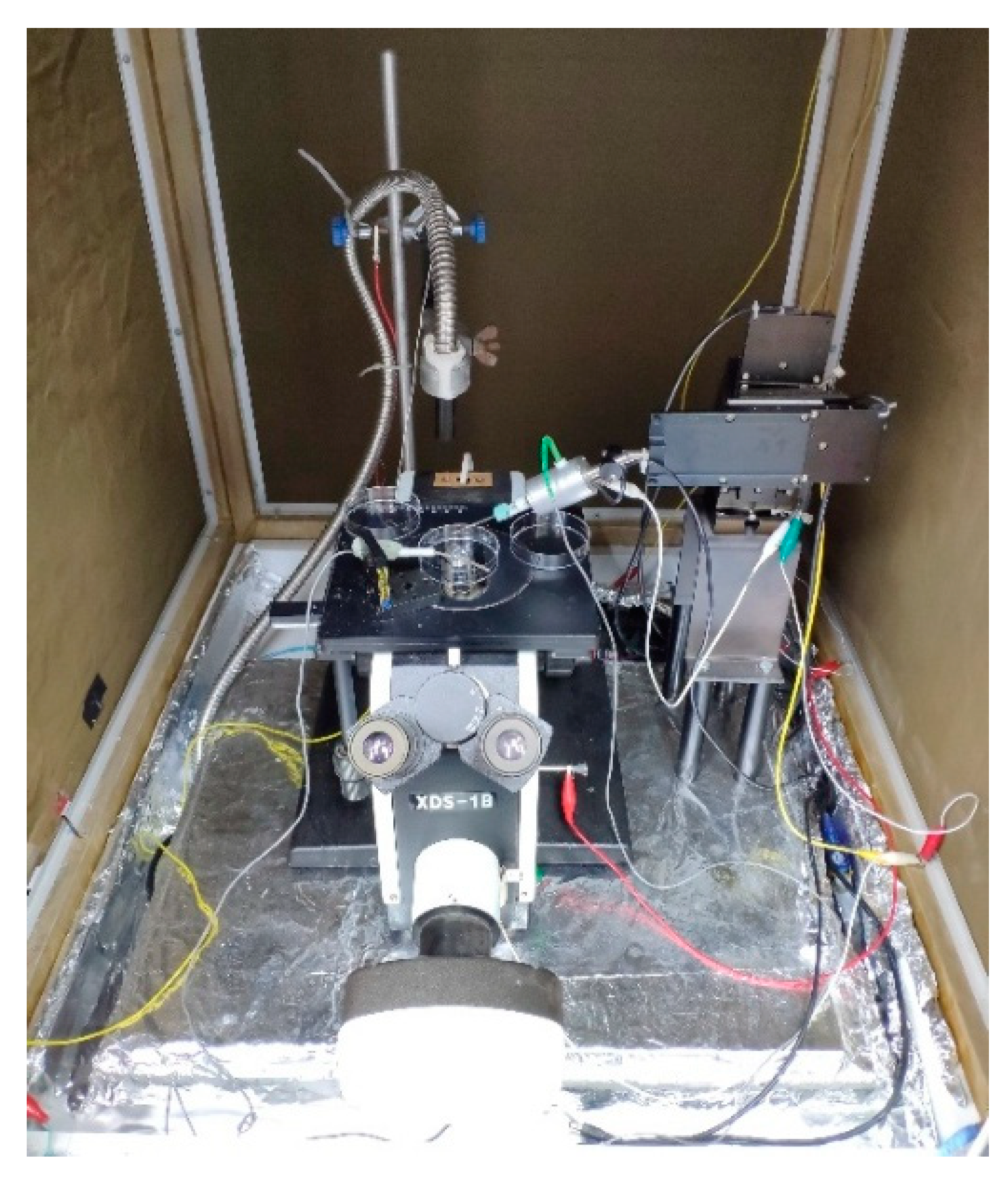

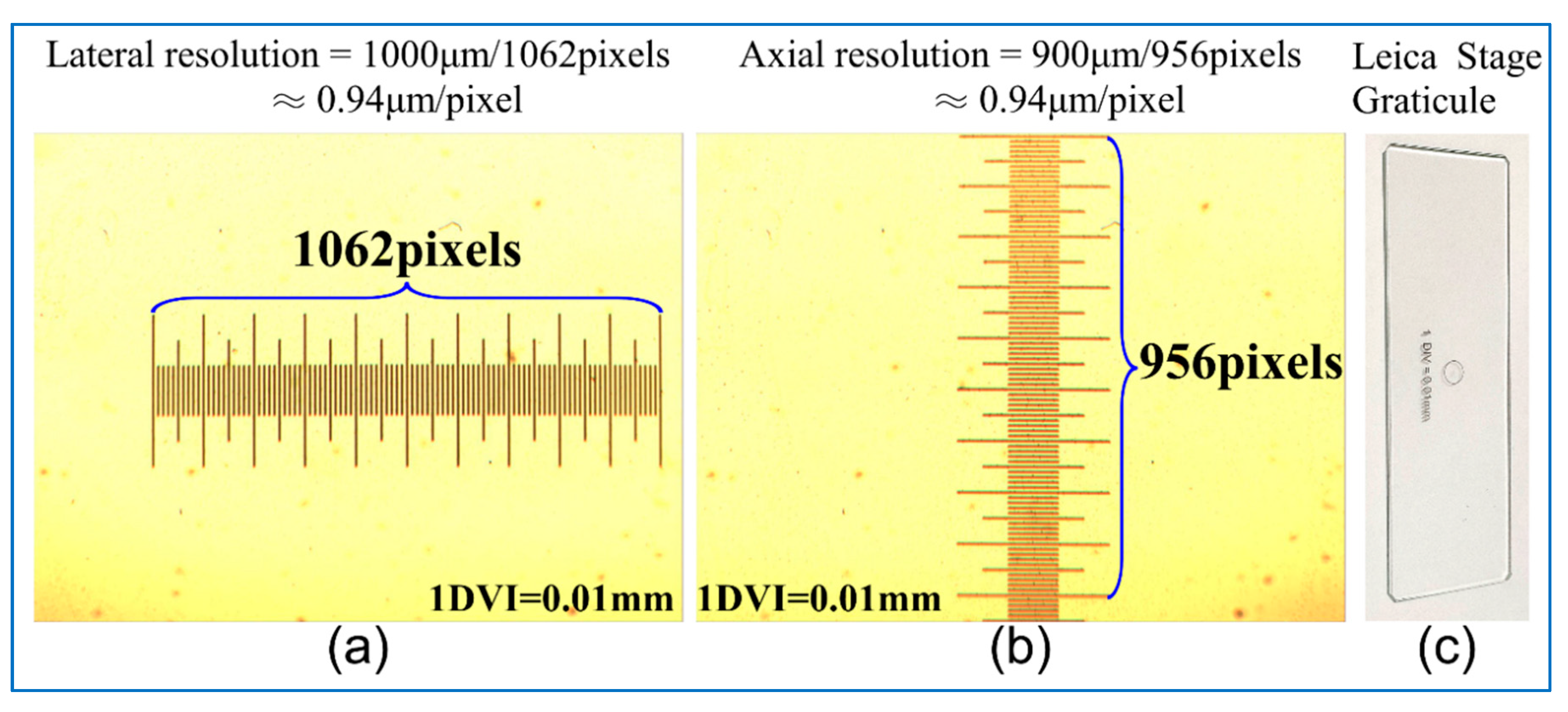

2.1. System Description

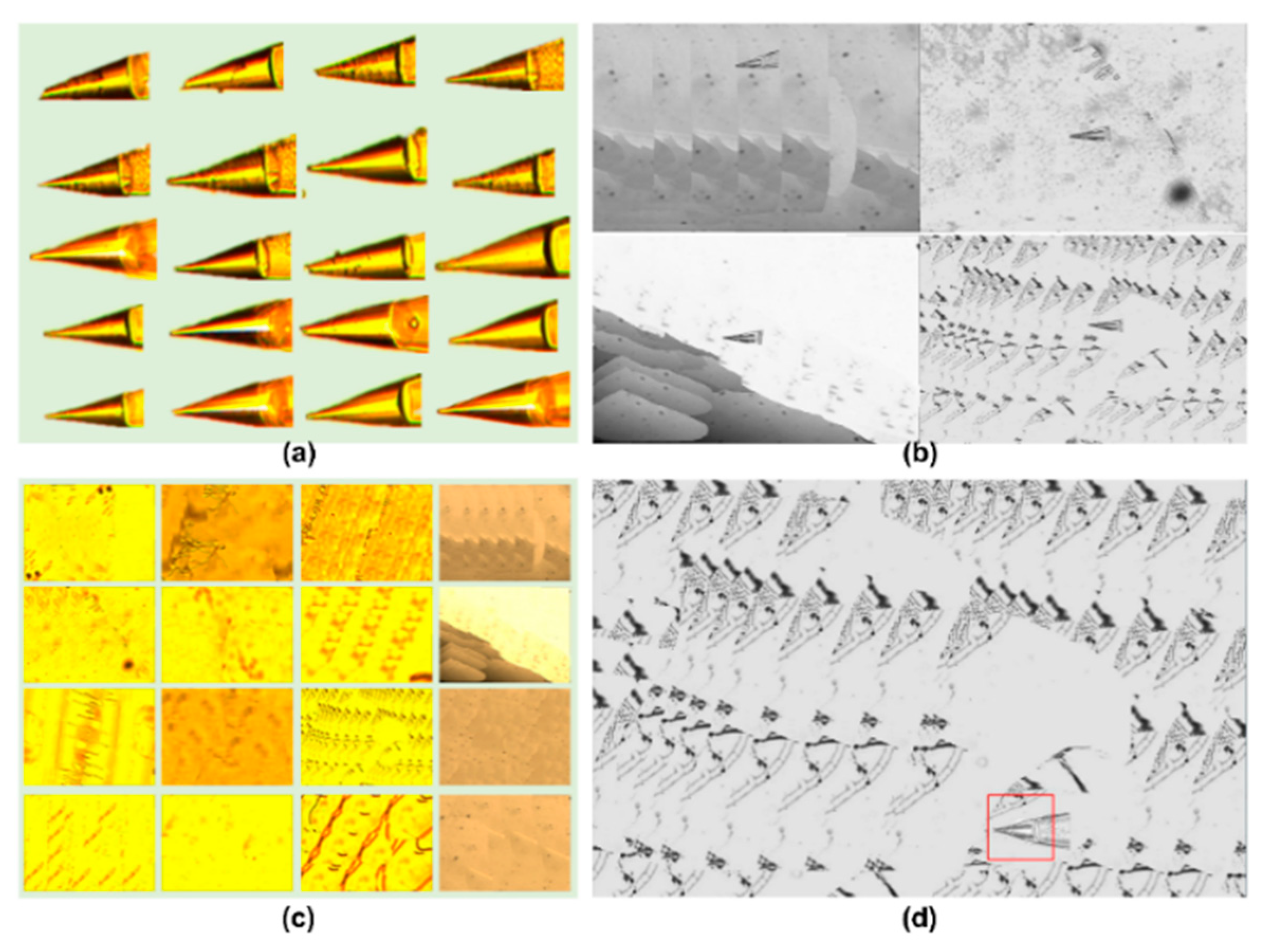

2.2. The Dataset

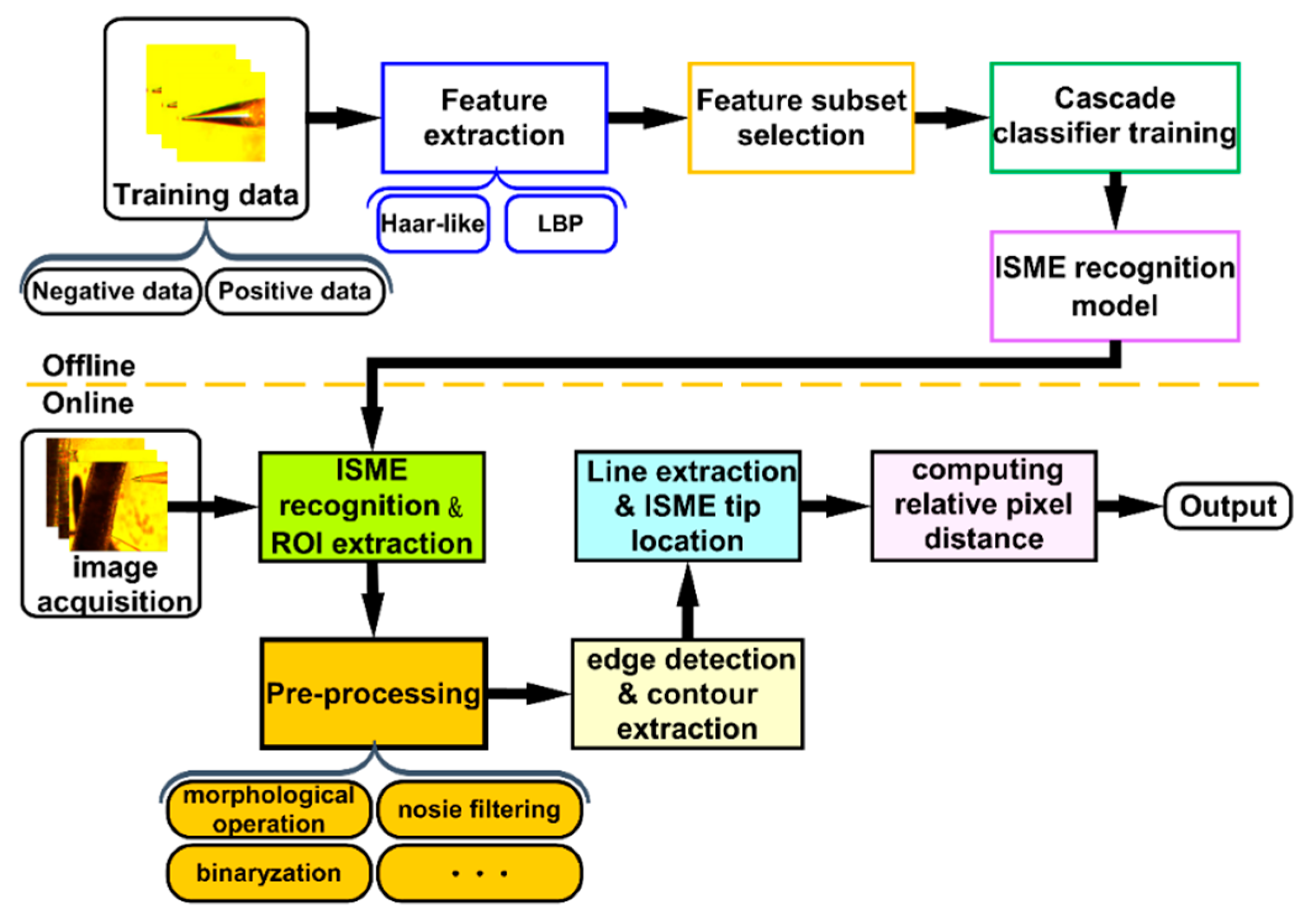

2.3. The Proposed Algorithm

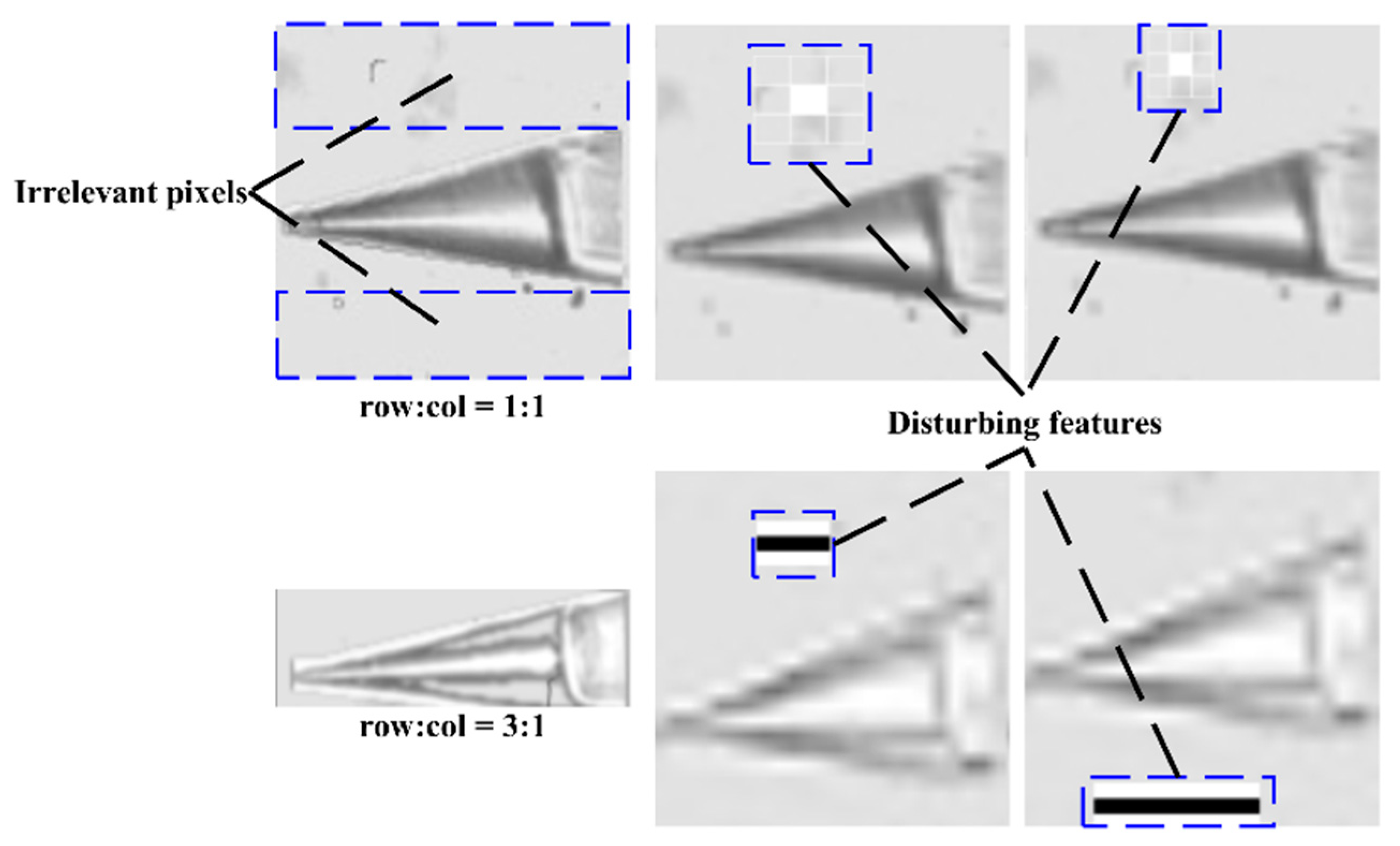

2.3.1. Image Preprocessing

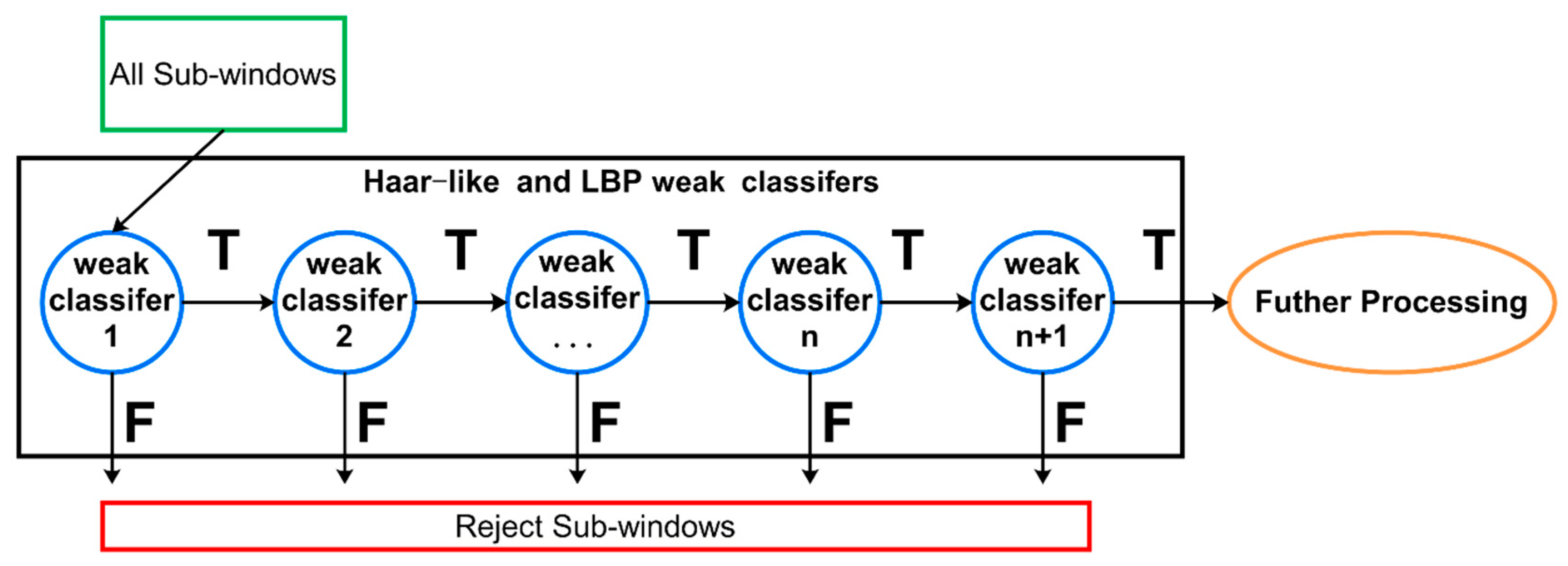

2.3.2. Training a Cascade Classifier for ISME

2.3.3. Edge Detection of the Target in the Microscopic Image

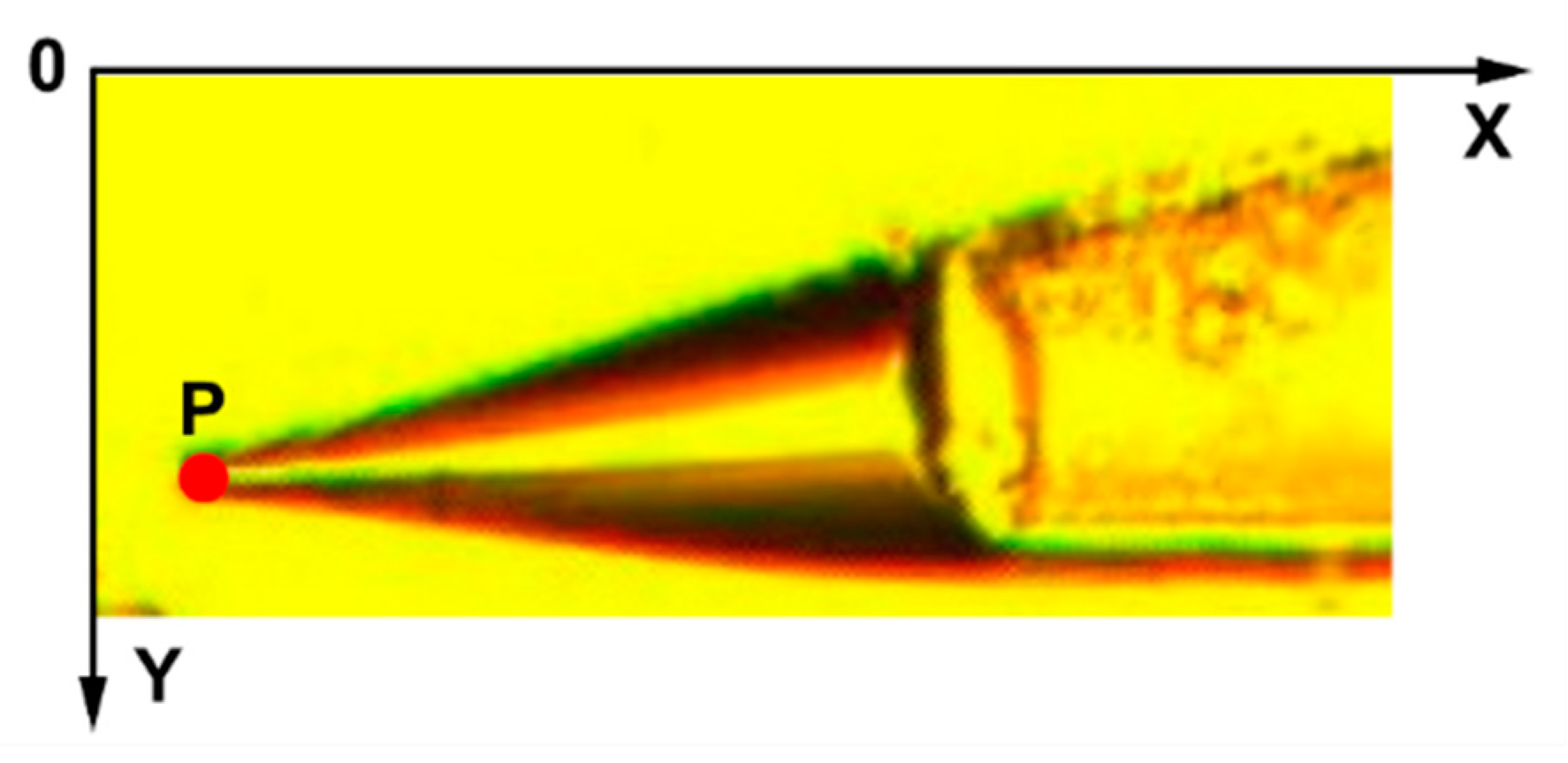

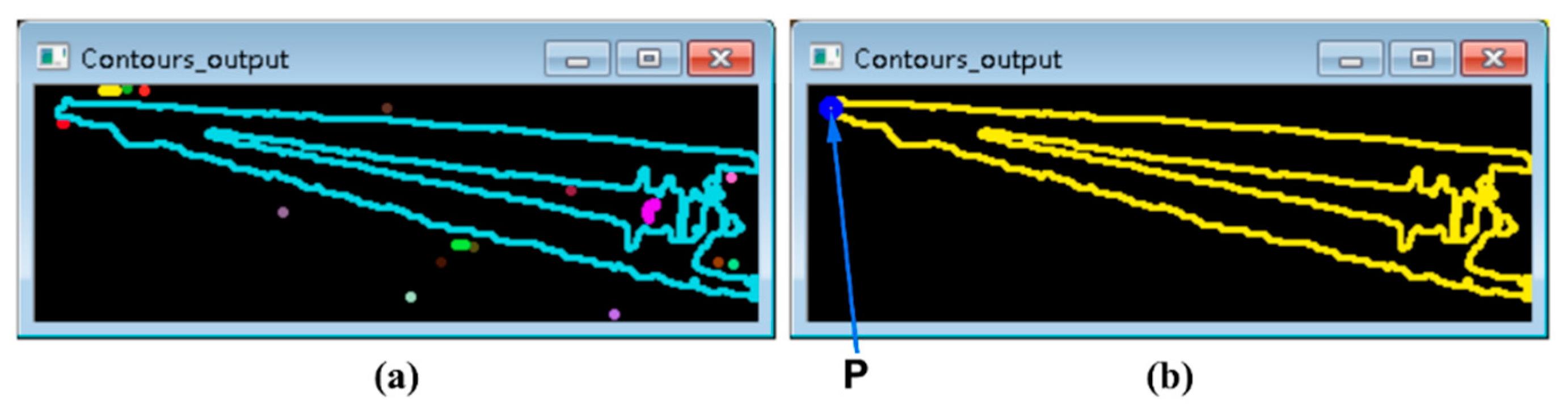

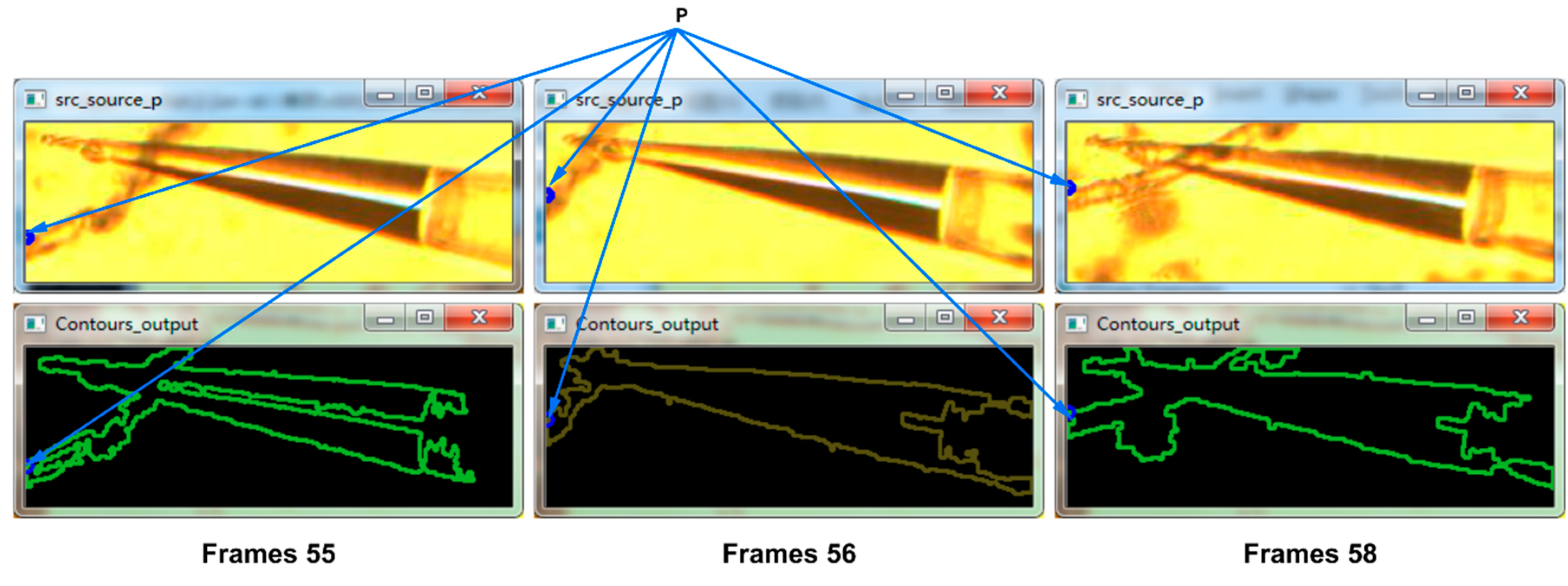

2.3.4. The Contour Extraction and the Localization of ISME Tip

| Algorithm 1: ISME Tip Localization |
|---|
| Input: edge_image |
| Output: tipLoc_image (the tip localization image) |
| BEGIN |
| 1. Find contours in edge_image |
| 2. For i = 0 : contours.size // contours.size is the number of contours |
| 3. Get the length of all of the contours |
| 4. END |
| 5. Get the length of every contour |
| 6. Con = the longest contour |
| 7. Draw Con on tipLoc_image |
| 8. For j = 0 : Con.size // Con.size is the number of points in Con |
| 9. Find the point, named TIP, with the minimum x |
| 10. Draw a circle around TIP |
| END |
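The selection logic of Algorithm 1 can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes the contours have already been extracted (for example with a border-following routine such as OpenCV's `findContours`) and are given as lists of `(x, y)` pixel coordinates. It also assumes that the "length" of a contour is the summed Euclidean distance between consecutive points. The names `contour_length` and `locate_tip` are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[int, int]   # (x, y) in pixel coordinates
Contour = List[Point]


def contour_length(contour: Contour) -> float:
    """Length of an open polyline: sum of distances between consecutive points.

    Assumption: the source does not define "length"; the point count
    would be another plausible choice.
    """
    return sum(math.dist(p, q) for p, q in zip(contour, contour[1:]))


def locate_tip(contours: List[Contour]) -> Tuple[Contour, Point]:
    """Steps 2-9 of Algorithm 1: pick the longest contour, then its min-x point."""
    candidates = [c for c in contours if c]   # ignore empty contours
    if not candidates:
        raise ValueError("no contours found")
    longest = max(candidates, key=contour_length)   # Con
    tip = min(longest, key=lambda p: p[0])          # TIP: minimum x
    return longest, tip


if __name__ == "__main__":
    demo = [
        [(5, 5), (6, 5)],
        [(10, 3), (7, 3), (4, 4), (2, 6)],
        [(1, 1)],
    ]
    con, tip = locate_tip(demo)
    print("longest contour:", con)
    print("tip:", tip)
```

Taking the minimum-x point as the tip only works if the microelectrode enters the field of view from the right, as Algorithm 1 implies. A different mounting orientation would need a different extreme point.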

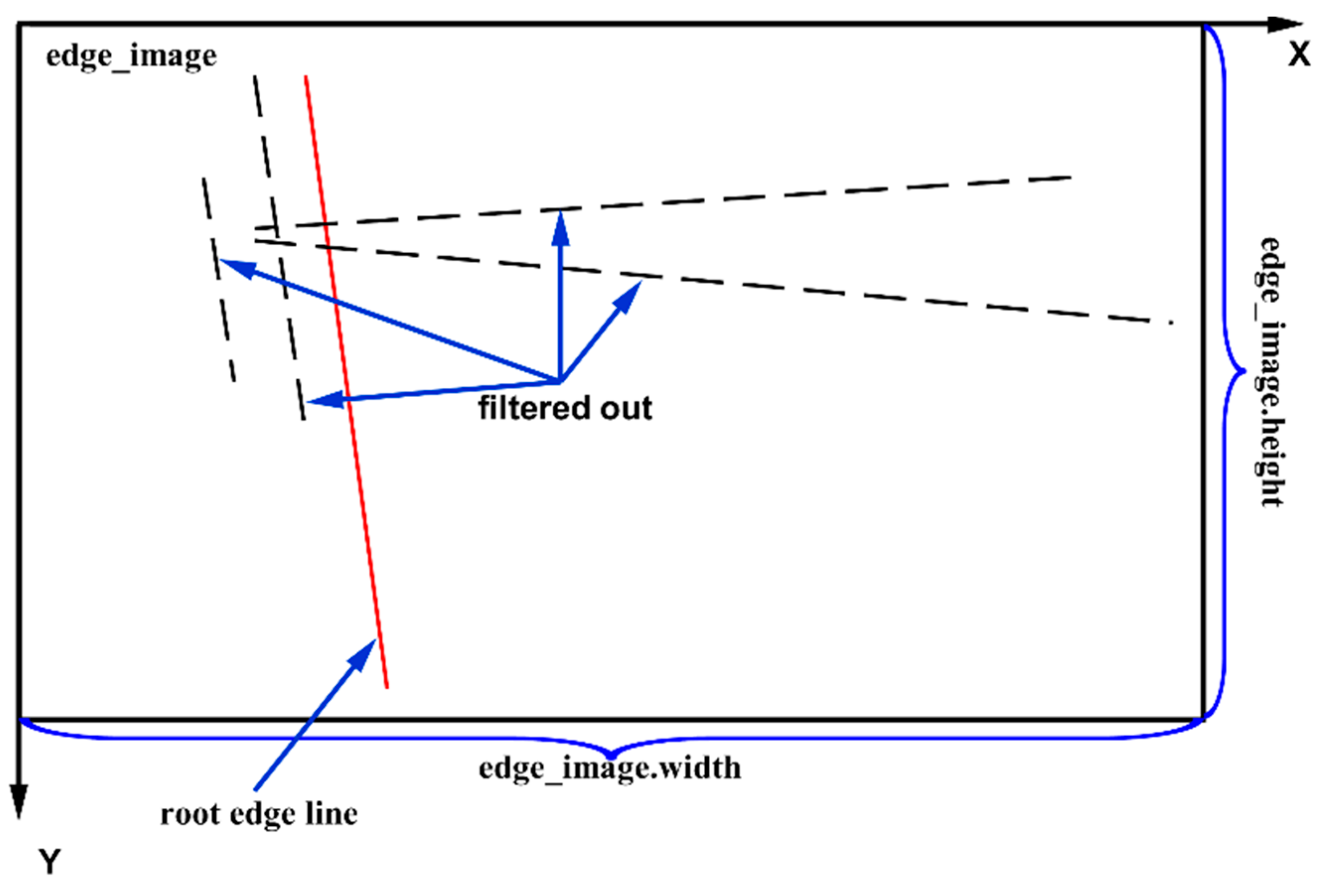

2.3.5. Edge Detection of Plant Root Using Hough Transformation

| Algorithm 2: Boundary Detection of Root |
|---|
| Input: edge_image |
| Output: tipLoc_image (the tip localization image) |
| BEGIN |
| 1. plines = Hough line detection of edge_image |
| 2. For i = 0 : plines.size // plines.size is the number of lines |
| 3. Point_A = one end point of plines(i); Point_B = the other end point of plines(i) |
| 4. IF abs(Point_A.x − Point_B.x) < 1/3 × edge_image.width AND abs(Point_A.y − Point_B.y) > 3/4 × edge_image.height |
| 5. THEN retain plines(i) and draw plines(i) |
| 6. ENDIF |
| 7. END |
| END |
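The screening rule in step 4 of Algorithm 2 can be written as a small filter. This is a sketch under stated assumptions rather than the authors' code: it assumes the line segments come from a probabilistic Hough transform (for example OpenCV's `HoughLinesP`) and are given as `(x1, y1, x2, y2)` tuples. The function name `screen_root_boundaries` is hypothetical.

```python
from typing import List, Tuple

Segment = Tuple[int, int, int, int]   # (x1, y1, x2, y2) end points in pixels


def screen_root_boundaries(
    segments: List[Segment], width: int, height: int
) -> List[Segment]:
    """Keep segments that are nearly vertical and span most of the image height.

    A segment is retained when its horizontal extent is below one third of
    the image width and its vertical extent exceeds three quarters of the
    image height, as in step 4 of Algorithm 2.
    """
    kept = []
    for x1, y1, x2, y2 in segments:
        narrow = abs(x1 - x2) < width / 3
        tall = abs(y1 - y2) > 3 * height / 4
        if narrow and tall:
            kept.append((x1, y1, x2, y2))
    return kept


if __name__ == "__main__":
    lines = [
        (100, 10, 120, 470),   # long, almost vertical: kept
        (0, 100, 600, 110),    # horizontal: rejected
        (300, 0, 310, 200),    # vertical but too short: rejected
        (50, 0, 400, 480),     # tall but too oblique: rejected
    ]
    print(screen_root_boundaries(lines, width=640, height=480))
```

The two thresholds encode an assumption about the scene: the root runs roughly top to bottom through the frame, so short or strongly slanted segments are treated as clutter.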

2.3.6. Distance Calculation

2.4. Experiments

3. Results

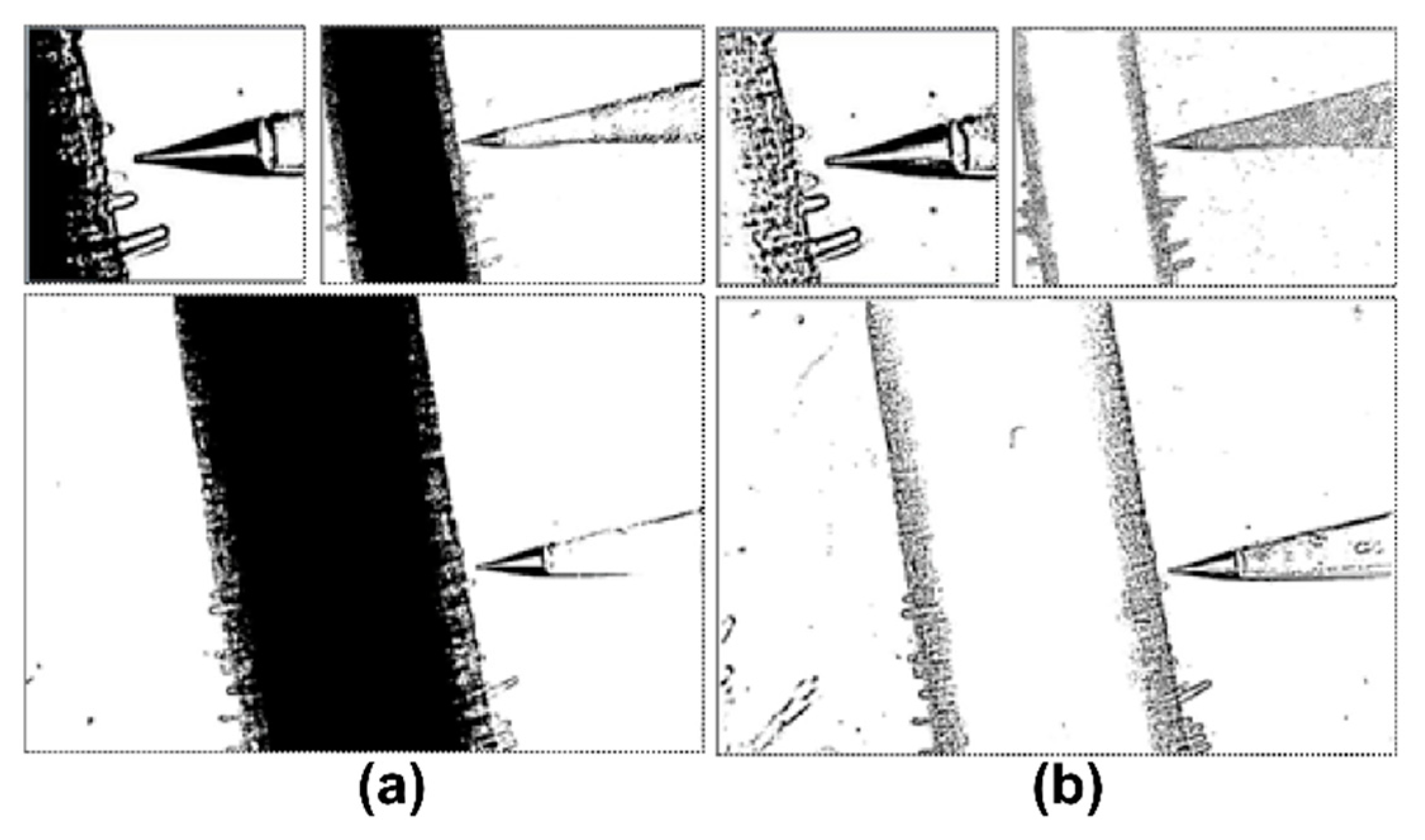

3.1. Image Preprocessing

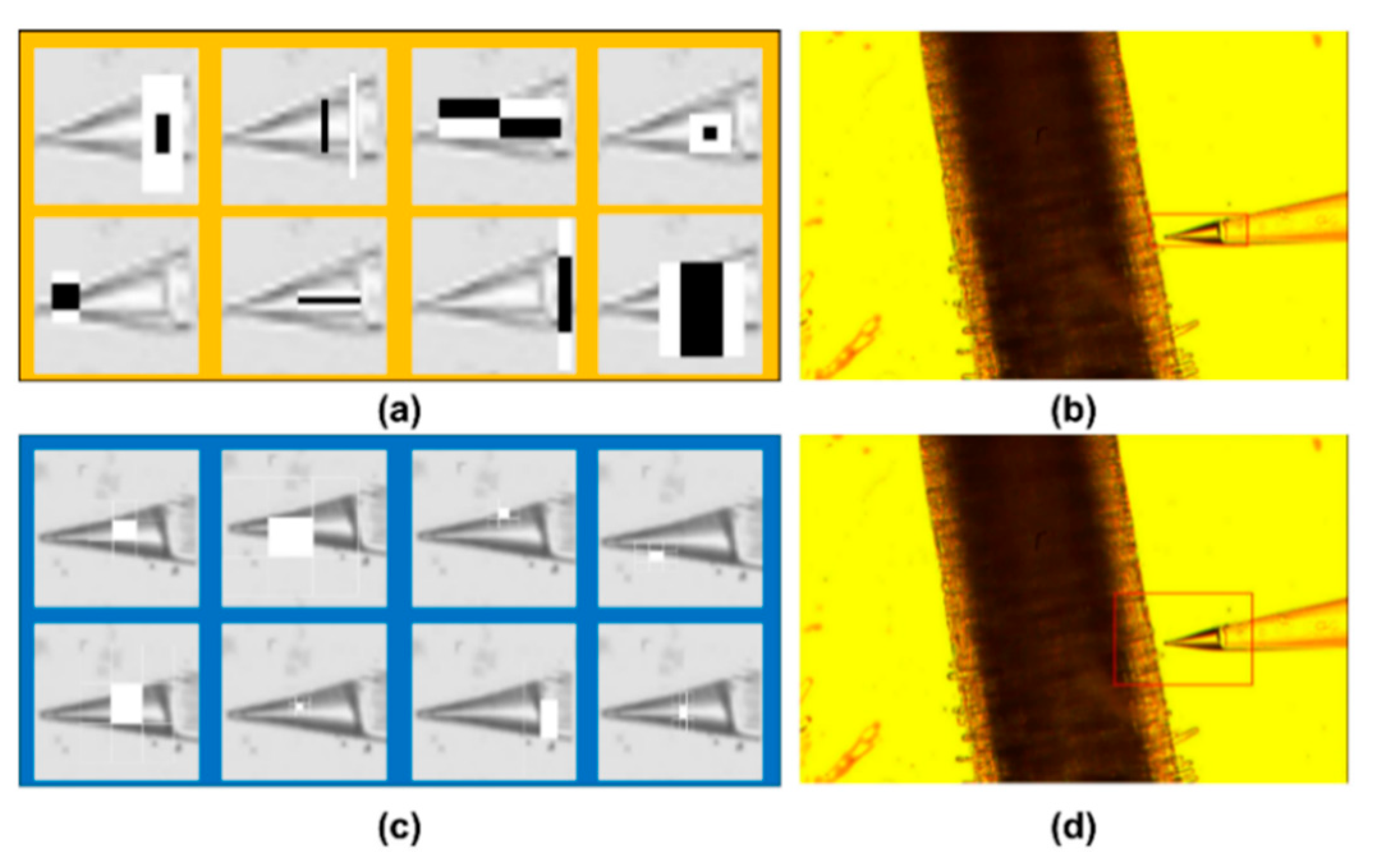

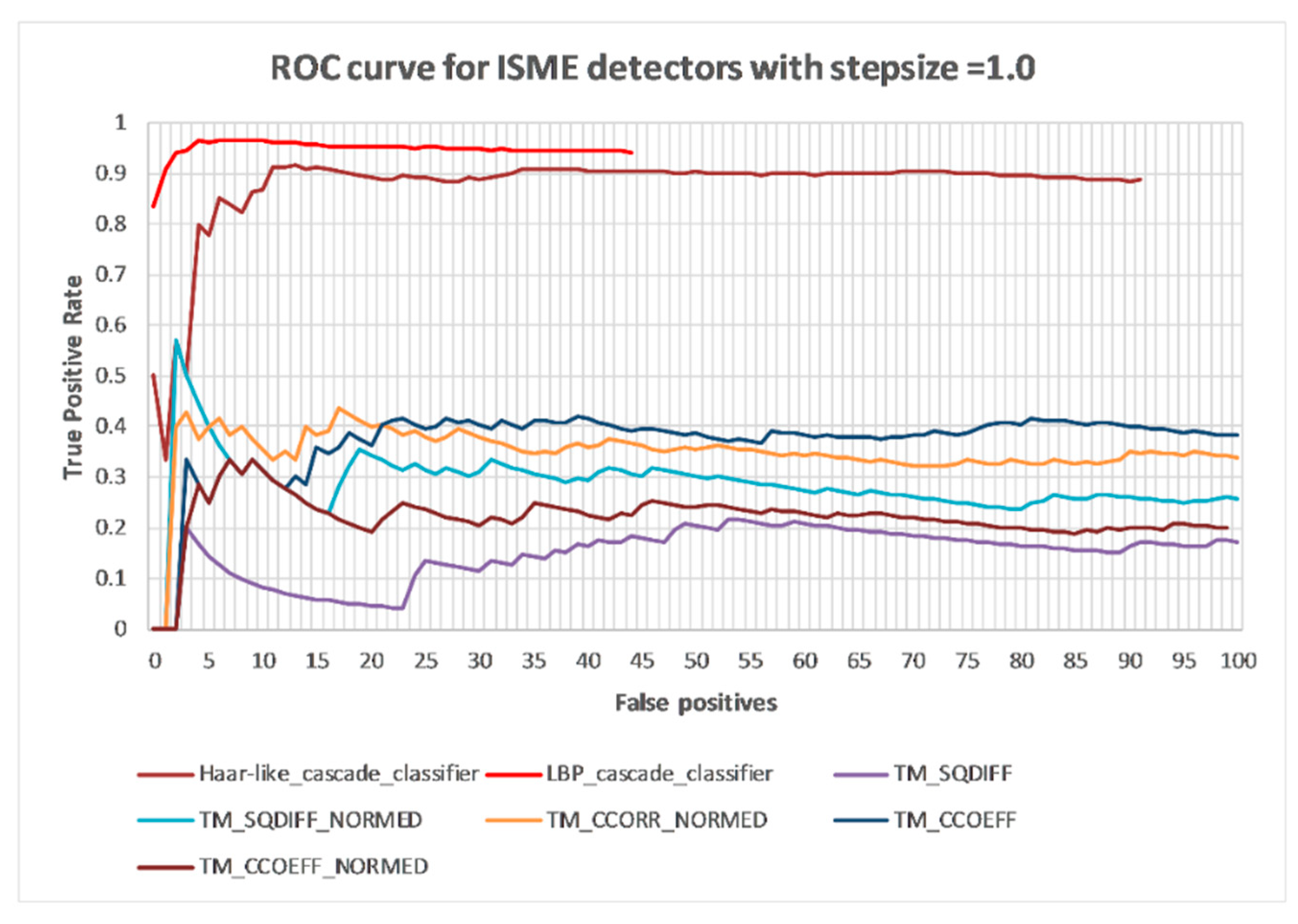

3.2. ISME Cascade Classifier Training and ISME Detection

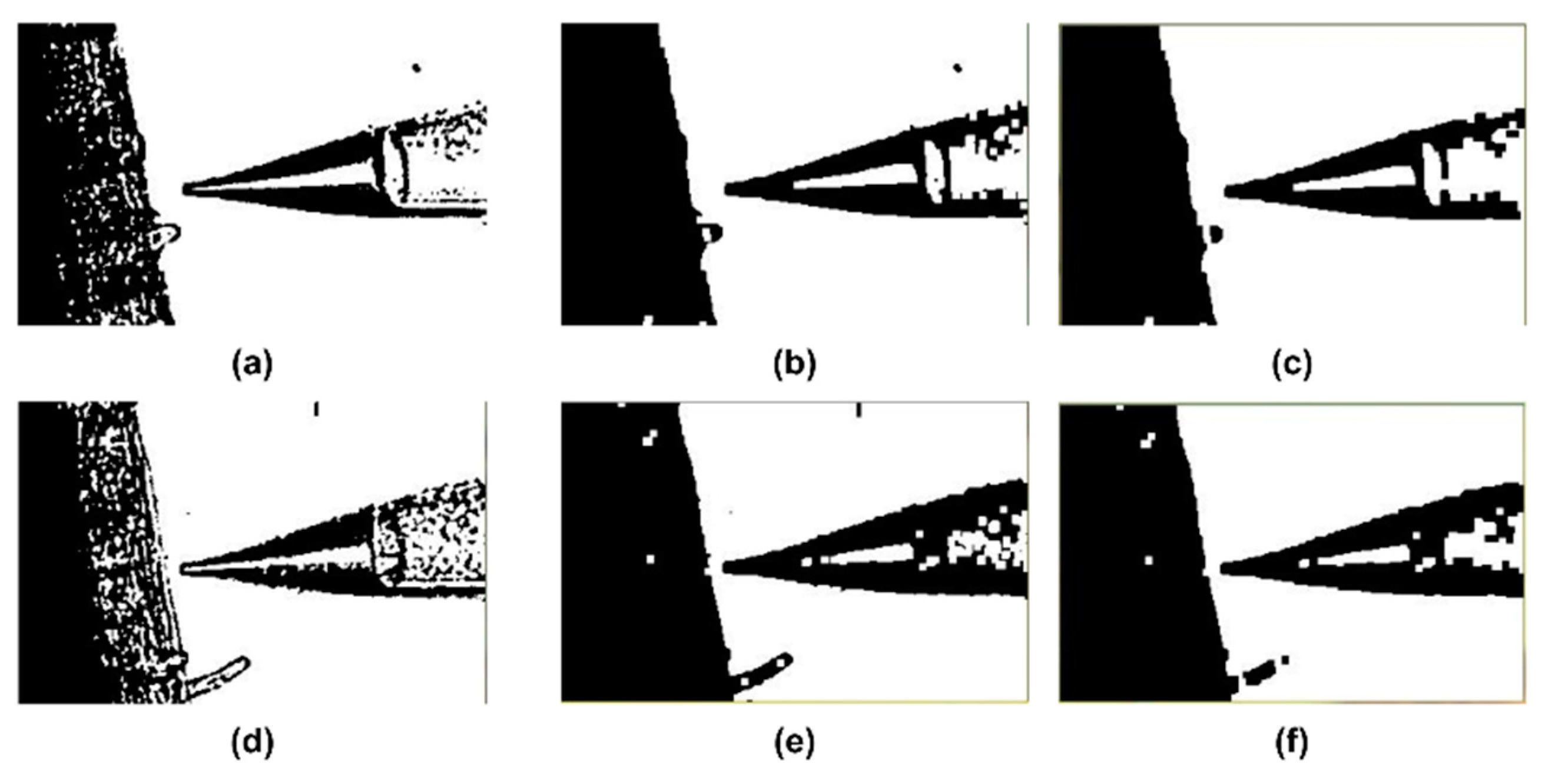

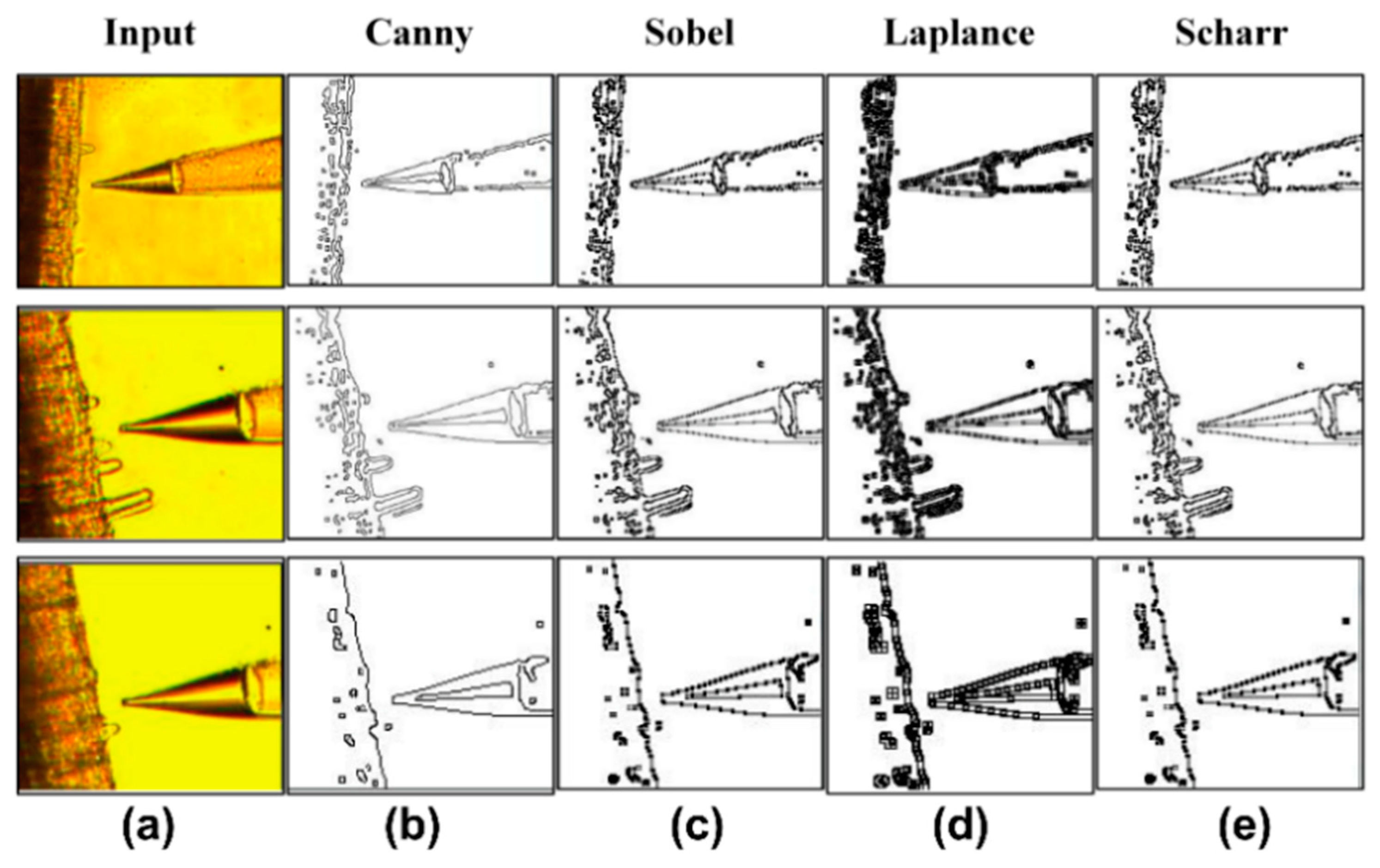

3.3. Edge Detection of Plant Tissues and Microelectrodes

- (1) The signal-to-noise ratio must be high.

- (2) The edge points must be located accurately; in other words, the detected edge points should be as close as possible to the center of the actual edge.

- (3) The detector must give only one response to a single edge and must suppress responses to false edges.

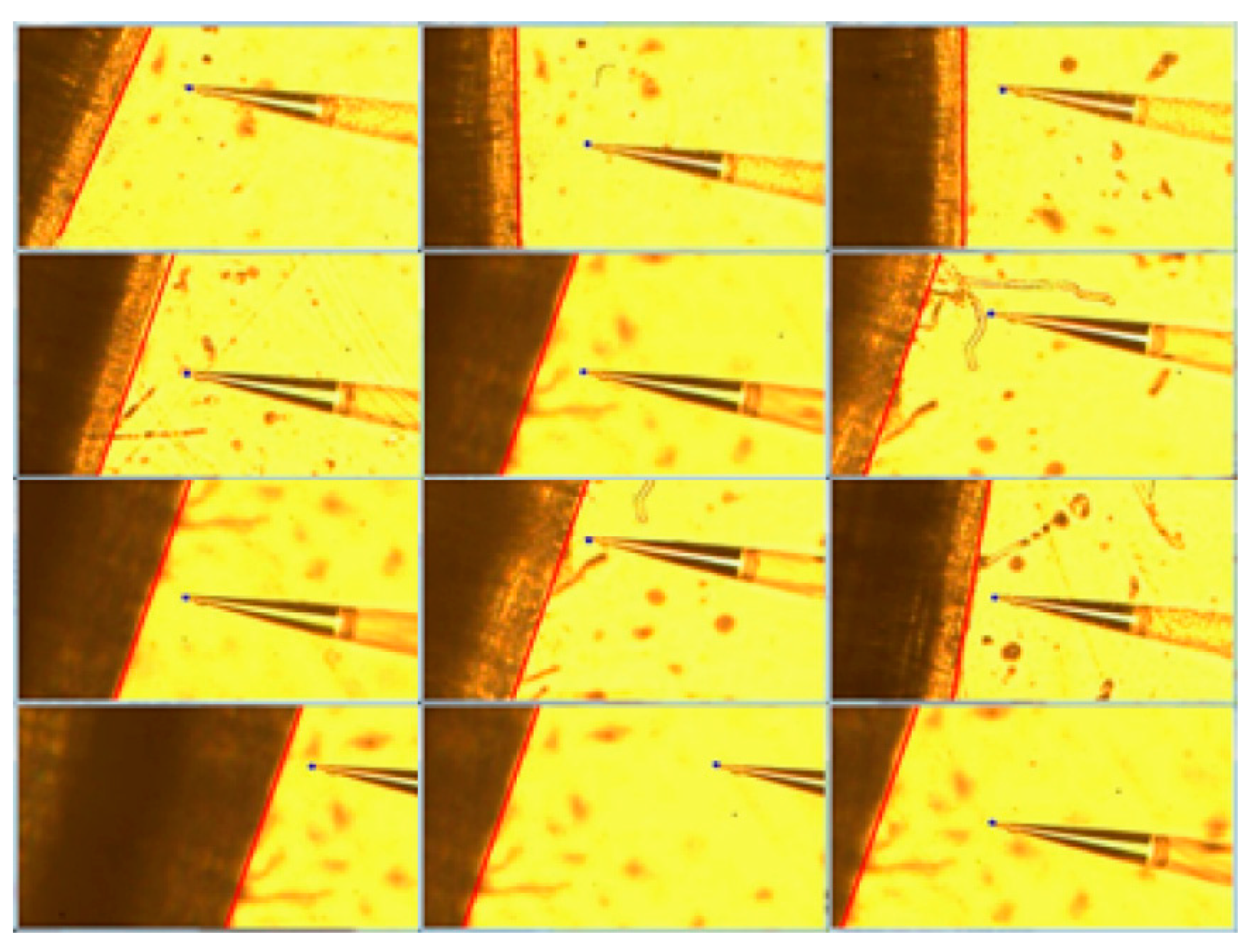

3.4. Contour Extraction and ISME Tip Localization

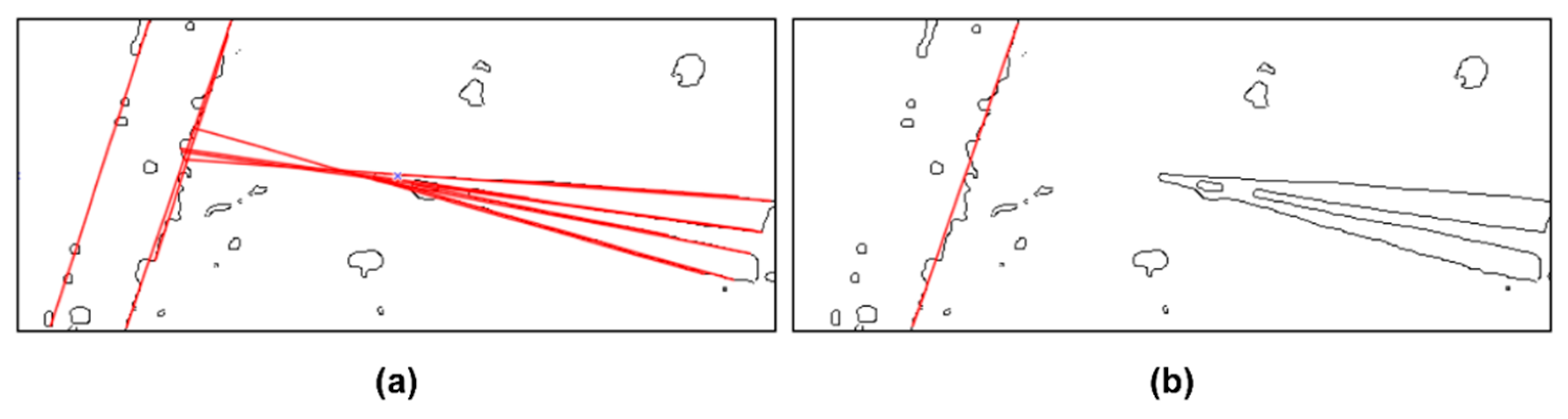

3.5. Edge Detection of Plant Root Using Hough Transformation

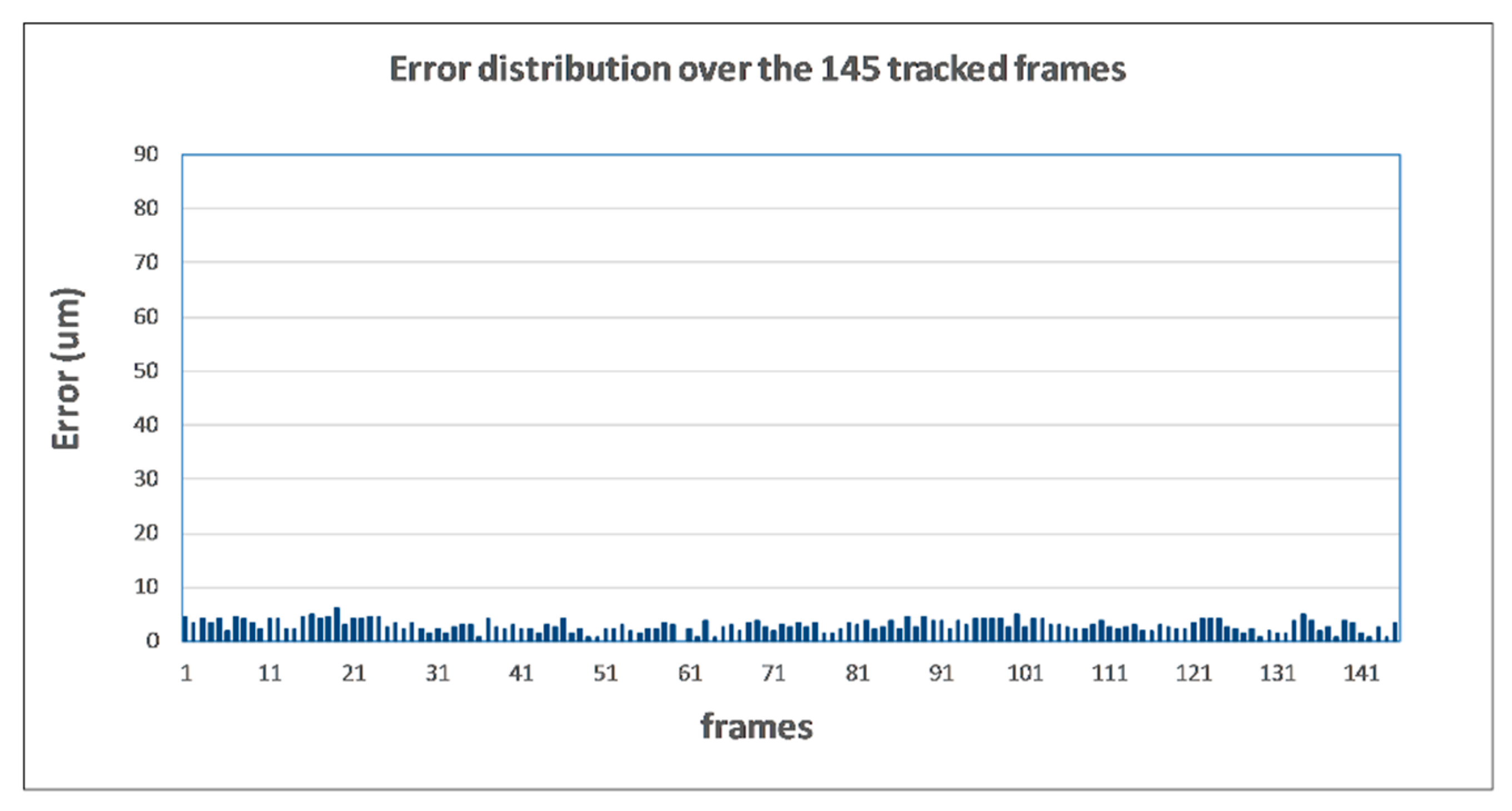

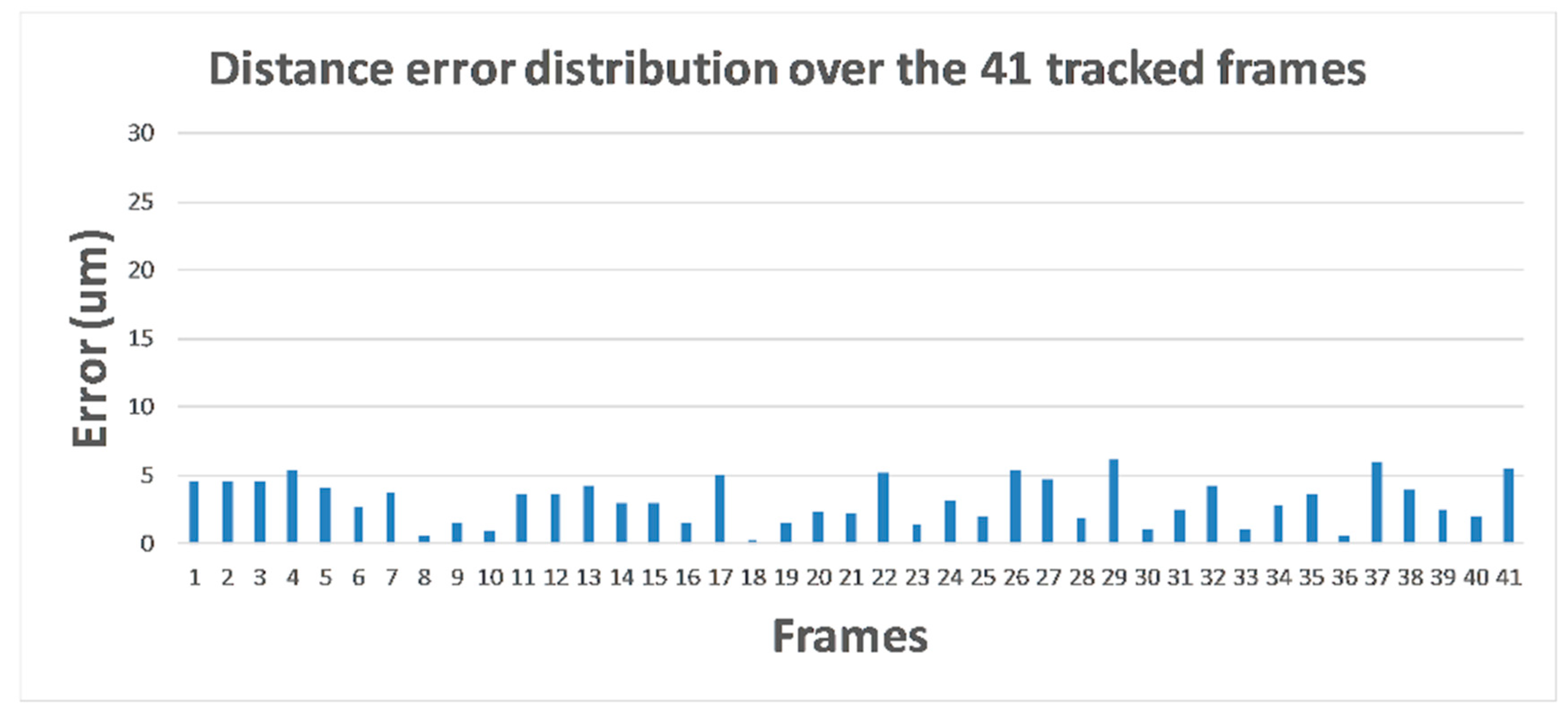

3.6. Computation of Relative Distance

4. Discussion

4.1. ISME Recognition

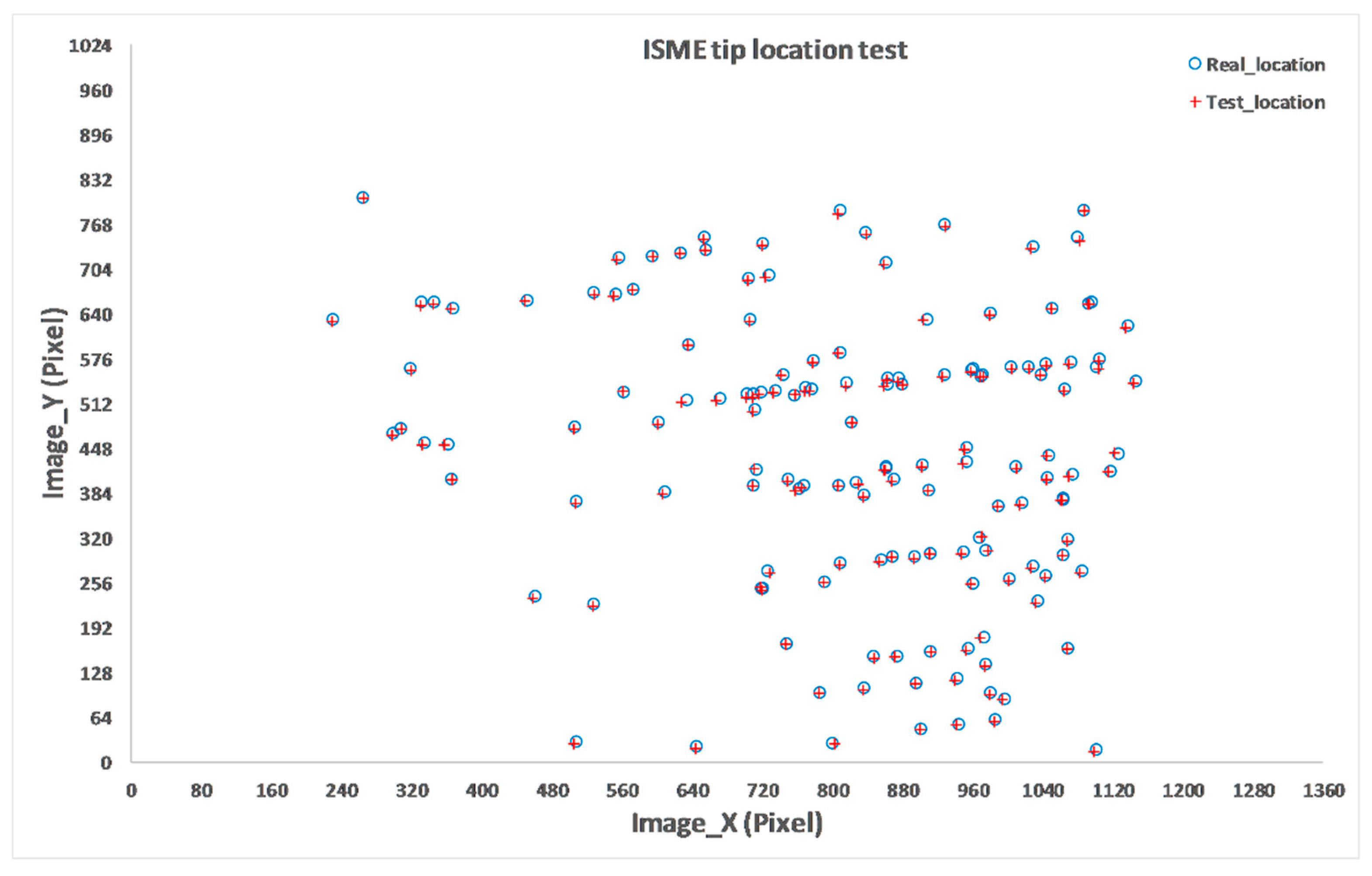

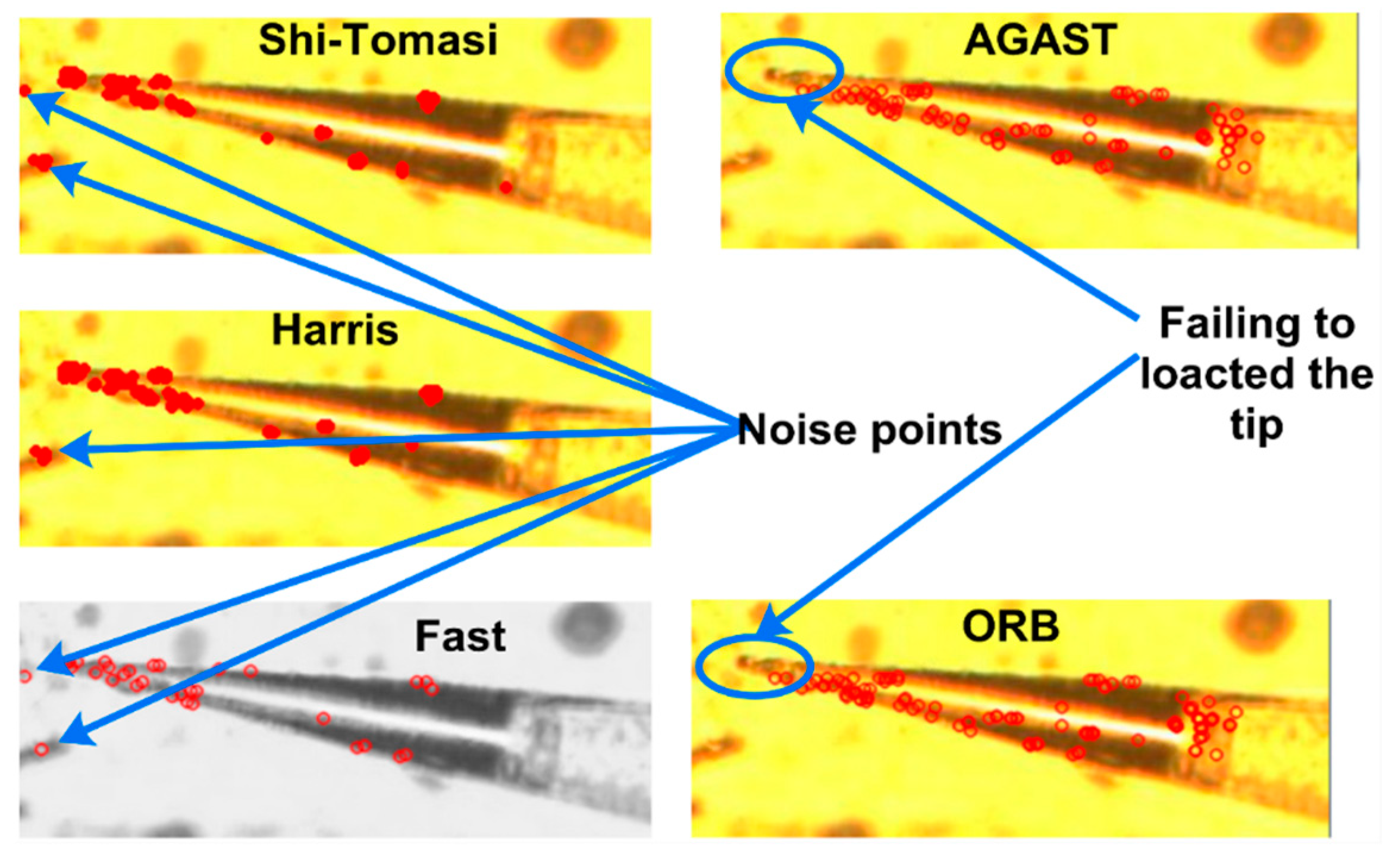

4.2. Location of the ISME Tip

4.3. Straight-Line Screening

4.4. Evaluation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Method (feature type) | Number of positive samples | Number of negative samples | Positive sample resolution (pixels) | Training time (hours) | Average test time (s/frame) | Detection rate |
|---|---|---|---|---|---|---|
| Haar-like | 1118 | 1000 | 24 × 24 | 4.5 | 0.23 | 10.97% |
| LBP | 1118 | 1000 | 24 × 24 | 1 | 0.30 | 4.31% |
| Haar-like | 6600 | 3000 | 24 × 24 | 34 | 0.13 | 57.34% |
| LBP | 6600 | 3000 | 24 × 24 | 4.78 | 0.21 | 47.22% |
| Haar-like | 6600 | 3000 | 60 × 20 | weeks | 0.07 | 90.75% |
| LBP | 6600 | 3000 | 60 × 20 | 26 | 0.13 | 92.73% |
| LBP | 1118 | 1000 | 48 × 48 | 2.5 | 0.31 | 5.55% |
| LBP | 6600 | 3000 | 48 × 48 | 28.5 | 1.14 | 50.82% |
| LBP | 6600 | 3000 | 90 × 30 | 59.5 | 0.16 | 95.68% |
| LBP + Haar-like | 6600 | 3000 | 90 × 30 (LBP) / 60 × 20 (Haar-like) | -- | 0.23 | 99.14% |
| Template matching | -- | -- | -- | -- | 0.33–0.44 | 17.50–39.95% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yan, S.-X.; Zhao, P.-F.; Gao, X.-Y.; Zhou, Q.; Li, J.-H.; Yao, J.-P.; Chai, Z.-Q.; Yue, Y.; Wang, Z.-Y.; Huang, L. Microscopic Object Recognition and Localization Based on Multi-Feature Fusion for In-Situ Measurement In Vivo. Algorithms 2019, 12, 238. https://doi.org/10.3390/a12110238
