Facial Expression Recognition Based on Discrete Separable Shearlet Transform and Feature Selection

Abstract

1. Introduction

2. Theoretical Analysis

3. The Proposed Algorithm

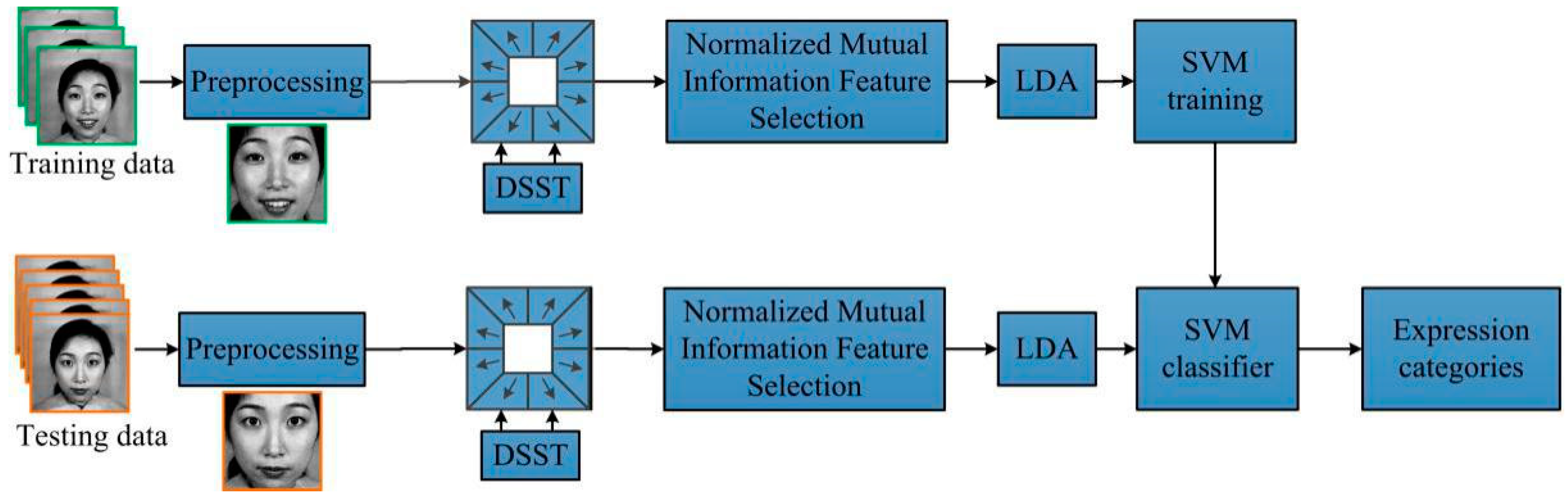

3.1. Framework of the Algorithm

- To reduce the computational complexity and satisfy the requirement of DSST for the input image size, the original image is first preprocessed.

- After preprocessing, the discrete separable shearlet transform (DSST) is applied to the training and test images, and all DSST coefficients are extracted as the original expression feature set.

- The improved normalized mutual information feature selection method proposed in this paper is used to find the optimal feature subset of the original feature set. The feature subset retains the key classification information of the original set.

- After feature extraction and selection, the dimensionality of the feature space is reduced with linear discriminant analysis (LDA).

- The support vector machine (SVM) is used to recognize the expressions.

3.2. Preprocessing

3.3. Discrete Separable Shearlet Transform

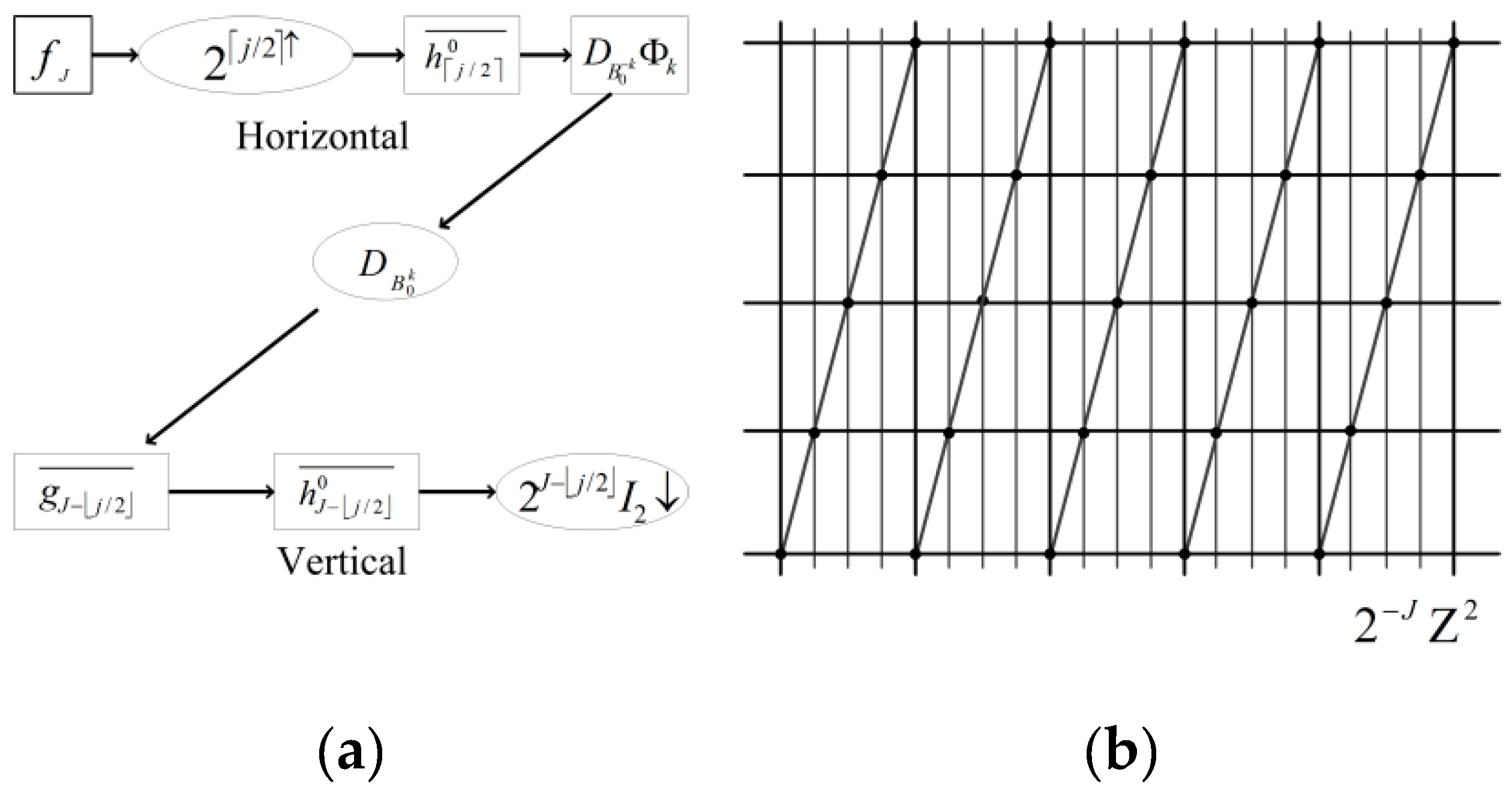

- is up-sampled by to obtain .

- Compute the 1D convolution of and , where is a 1D low-pass filter to obtain .

- is up-sampled by the shear matrix to obtain .

- is down-sampled by to obtain . The 1D convolution of and is then computed.

- Use the separable wavelet transform call and proceed through all the scales, ,.

3.4. Feature Selection

| Algorithm 1 Quantization algorithm | |

| Input: | -Total number of features, -Feature data, -The expected quantization error |

| Output: -Number of quantization levels, -Quantized data | |

| 1. begin | |

| 2. | |

| 3. while 1 do | |

| 4. for do | |

| 5. , , , | |

| , | |

| 6. if then | |

| 7. | |

| 8. end | |

| 9. end | |

| 10. If then | |

| 11. Break | |

| 12. end | |

| 13. | |

| 14. end | |

| 15. end | |
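The symbols of Algorithm 1 did not survive in this copy, so the following Python sketch is only one plausible reading of its structure: quantize every feature, check the quantization error against the expected error, and increase the number of levels until all features pass. Uniform binning, the bin-centre error measure, and the names `quantize_feature`, `quantize_all`, `start_levels`, and `max_levels` are assumptions made for illustration, not the authors' definitions.

```python
from typing import List, Tuple


def quantize_feature(values: List[float], levels: int) -> Tuple[List[int], float]:
    """Uniformly quantize one feature into `levels` bins (assumed scheme).

    Returns the bin indices and the largest reconstruction error, where each
    value is reconstructed as the centre of its bin.
    """
    lo, hi = min(values), max(values)
    if hi == lo:  # constant feature: a single bin reproduces it exactly
        return [0] * len(values), 0.0
    step = (hi - lo) / levels
    indices, worst = [], 0.0
    for v in values:
        k = min(int((v - lo) / step), levels - 1)  # clamp the maximum into the last bin
        centre = lo + (k + 0.5) * step
        indices.append(k)
        worst = max(worst, abs(v - centre))
    return indices, worst


def quantize_all(features: List[List[float]], max_error: float,
                 start_levels: int = 2, max_levels: int = 1024):
    """Raise the number of levels until every feature meets `max_error`."""
    levels = start_levels
    while True:
        results = [quantize_feature(f, levels) for f in features]
        # Stop when all features pass, or when the safety cap is reached.
        if all(err <= max_error for _, err in results) or levels >= max_levels:
            return levels, [idx for idx, _ in results]
        levels += 1


features = [[0.0, 1.0, 2.0, 3.0], [5.0, 5.0, 5.0, 5.0]]
levels, quantized = quantize_all(features, max_error=0.35)
```

The `max_levels` cap is a safety guard added here so that the loop always terminates; the algorithm as printed has no such cap.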

- Initialization: Assume as the original set of features; initialize as an empty set.

- Calculate the joint mutual information of each feature and class: .

- Find the first selected feature: find the feature that maximizes ; delete from set ; and then add to set , i.e., , .

- Repeat the following procedure until : (a) compute the feature-feature mutual information ; (b) find the next selected feature: that maximizes the criterion function shown in (18); (c) delete from set , then add to set , i.e., , ; (d) the output set , which contains selected features, is the most relevant feature subset of the initial set of .
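The greedy procedure above can be sketched in Python. Because criterion (18) is not reproduced in this copy, the sketch uses the standard NMIFS criterion (relevance to the class minus the average normalized mutual information with the features already selected) as a stand-in, not the improved criterion proposed in the paper. It assumes features have already been quantized to discrete values; the function names and the stopping parameter `k` are hypothetical.

```python
from collections import Counter
from math import log2
from typing import List, Sequence


def entropy(x: Sequence) -> float:
    """Shannon entropy (in bits) of a discrete sequence."""
    n = len(x)
    return -sum((c / n) * log2(c / n) for c in Counter(x).values())


def mutual_information(x: Sequence, y: Sequence) -> float:
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for discrete sequences."""
    return entropy(x) + entropy(y) - entropy(list(zip(x, y)))


def normalized_mi(x: Sequence, y: Sequence) -> float:
    """Mutual information normalized by the smaller of the two entropies."""
    denom = min(entropy(x), entropy(y))
    return mutual_information(x, y) / denom if denom > 0 else 0.0


def nmifs(features: List[Sequence], labels: Sequence, k: int) -> List[int]:
    """Greedily select k feature indices (standard NMIFS stand-in)."""
    remaining = list(range(len(features)))
    # Relevance of every feature to the class labels.
    relevance = {i: mutual_information(features[i], labels) for i in remaining}
    # First selected feature: the one with maximal relevance.
    first = max(remaining, key=relevance.get)
    selected = [first]
    remaining.remove(first)
    while len(selected) < k and remaining:
        def score(i: int) -> float:
            redundancy = sum(normalized_mi(features[i], features[s])
                             for s in selected) / len(selected)
            return relevance[i] - redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Toy data: features 0 and 1 are duplicates, feature 2 is complementary.
a = [0, 0, 1, 1, 0, 0, 1, 1]
b = [0, 1, 0, 1, 0, 1, 0, 1]
labels = [0, 1, 2, 3, 0, 1, 2, 3]
chosen = nmifs([a, list(a), b], labels, k=2)
```

In the toy data all three features are equally relevant to the class, but the duplicate of the first feature is fully redundant, so the complementary feature is picked second.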

3.5. Dimension Reduction Based on Linear Discriminant Analysis

3.6. Facial Expression Recognition and Classification Using Support Vector Machine

- Selection of the kernel function: we choose the radial basis function (RBF) as the kernel function, as shown in (22). The RBF kernel realizes a nonlinear mapping and can therefore handle problems that are not linearly separable. Compared with other kernel functions, it has only one kernel parameter, so its model complexity is lower.

- Selection of the RBF kernel parameter and the penalty coefficient: with the RBF kernel, two values must be chosen, the kernel parameter and the penalty coefficient. There is no theoretical guidance on how to select them; therefore, we use Dr. Lin’s grid.py tool in LibSVM to search for their optimal values.

- Construction of the multiclass SVM classifier: we adopt the one-against-one voting strategy. In the training stage, the 6 categories of samples are used to construct 15 binary SVM classifiers, one for each pair of categories. The result of each binary classifier is saved into an array of structural cells, which therefore holds all the information needed for multiclass SVM classification. In the classification stage, each test sample is passed successively through the 15 binary SVM classifiers, and its category is determined by one-against-one voting.
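The one-against-one voting step can be illustrated with a short Python sketch. To keep it self-contained, the trained binary SVMs are replaced by toy nearest-centroid rules on a one-dimensional feature; the integer class labels, the centroids, and the function names are assumptions for illustration only. What carries over to the method described above is the structure: one binary classifier per pair of the 6 categories (15 in total) and a majority vote over their outputs.

```python
from collections import Counter
from itertools import combinations


def build_pairwise_classifiers(centroids):
    """Return one toy binary classifier per unordered pair of classes.

    Each classifier stands in for a trained binary SVM: it simply picks
    whichever of its two class centroids is closer to the sample.
    """
    classifiers = {}
    for a, b in combinations(sorted(centroids), 2):
        def classify(x, a=a, b=b):
            return a if abs(x - centroids[a]) <= abs(x - centroids[b]) else b
        classifiers[(a, b)] = classify
    return classifiers


def predict_one_against_one(x, classifiers):
    """Pass the sample through every binary classifier and take the majority vote."""
    votes = Counter(clf(x) for clf in classifiers.values())
    return votes.most_common(1)[0][0]


# Six hypothetical categories with centroids 0.0 .. 5.0.
centroids = {label: float(label) for label in range(6)}
classifiers = build_pairwise_classifiers(centroids)
prediction = predict_one_against_one(3.2, classifiers)
```

With 6 categories, `combinations` yields 6 × 5 / 2 = 15 pairs, which matches the number of binary classifiers stated above.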

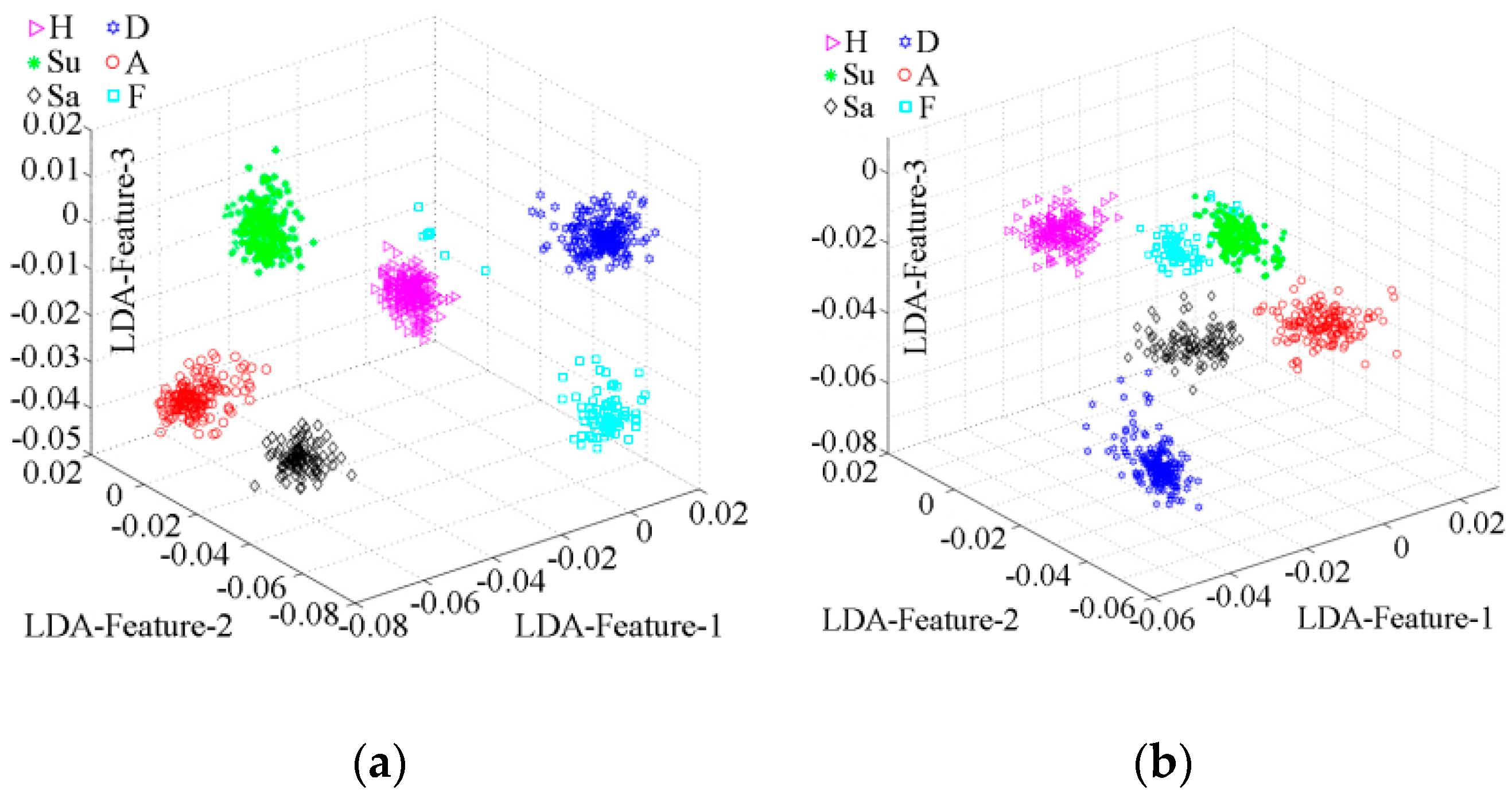

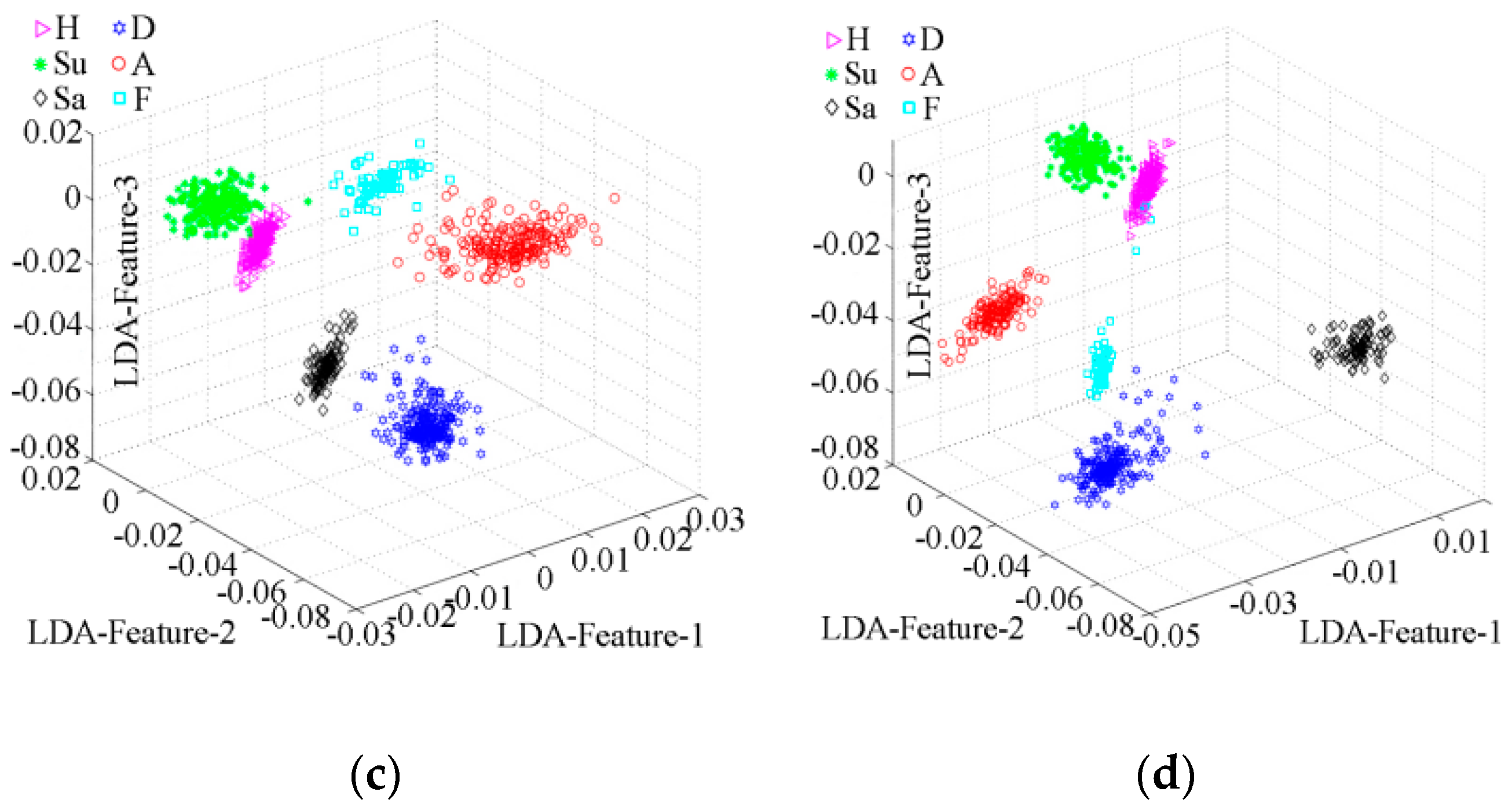

4. Results and Discussion

4.1. Experimental Database

4.2. Recognition Rates of the Proposed Method

4.3. Contrast Experiment

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


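| Method | Data Set | Average Accuracy Rate (%) |
|---|---|---|
| With feature selection | JAFFE | 98.00 |
| With feature selection | CK+ | 95.17 |
| With feature selection | MMI | 96.02 |
| With feature selection | PICS | 97.33 |
| Without feature selection | JAFFE | 94.06 |
| Without feature selection | CK+ | 91.50 |
| Without feature selection | MMI | 92.23 |
| Without feature selection | PICS | 92.50 |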
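As a quick check on the effect of feature selection, the per-dataset gain in average accuracy can be computed directly from the table values. The snippet below is only arithmetic on the reported numbers; the variable names are illustrative.

```python
# Average accuracy rates (%) copied from the table above.
with_fs = {"JAFFE": 98.00, "CK+": 95.17, "MMI": 96.02, "PICS": 97.33}
without_fs = {"JAFFE": 94.06, "CK+": 91.50, "MMI": 92.23, "PICS": 92.50}

# Gain in percentage points for each data set, rounded to two decimals.
gains = {name: round(with_fs[name] - without_fs[name], 2) for name in with_fs}

for name, gain in gains.items():
    print(f"{name}: +{gain:.2f} percentage points")
```

The gain ranges from 3.67 percentage points on CK+ to 4.83 on PICS.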

| Training Dataset | Testing Datasets | Average Accuracy Rate (%) |
|---|---|---|
| JAFFE | CK+, MMI, PICS | 87.89 |
| CK+ | JAFFE, MMI, PICS | 84.97 |
| MMI | JAFFE, CK+, PICS | 85.62 |
| PICS | JAFFE, CK+, MMI | 86.38 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lu, Y.; Wang, S.; Zhao, W. Facial Expression Recognition Based on Discrete Separable Shearlet Transform and Feature Selection. Algorithms 2019, 12, 11. https://doi.org/10.3390/a12010011
