1. Introduction

Converter steelmaking uses top-blown oxygen injected through a lance and bottom-blown gas stirring to lower the carbon content and harmful elements (e.g., phosphorus, sulfur) of a charge consisting of molten iron, scrap, and slag formers (such as lime and dolomite), so that the steel meets grade-specific standards. The process also adjusts the temperature and carbon content of the molten steel to meet tapping specifications [1]. Converter steelmaking is a highly complex, high-temperature physicochemical process characterized by turbulent multiphase flow and coupled chemical reactions [2]. Because the melt pool is a high-temperature, chemically corrosive environment, existing detection methods cannot continuously monitor its composition and temperature. As a result, most steel mills still rely on operator experience for converter endpoint control, which leads to low and unstable control accuracy. Endpoint carbon control is a key step: accurate regulation of the endpoint carbon content avoids over-oxidation of the molten steel, reduces post-furnace alloy consumption, and helps lower carbon emissions from steelmaking, thereby supporting high-quality, efficient, and stable converter operation.

Currently, the primary methods for real-time prediction of converter carbon content are mechanistic models (MMs) [3], data-driven models [4], and hybrid models [5]. Mechanistic models are primarily based on material balances and decarburization kinetics. Dering et al. [6] proposed a first-principles dynamic model of the basic oxygen furnace (BOF) that accounts for the major physical and chemical processes in the converter, including slag formation and heat balance; the model is formulated as a system of differential algebraic equations (DAEs) that predicts the progress of the decarburization reaction. Based on multi-zone reaction kinetics, Root et al. [7] divided converter decarburization into a jet impact zone and a slag-metal emulsion zone; they introduced a corrected mass transfer coefficient and a droplet size distribution function (RRS distribution) and computed the total decarburization rate by adjusting the droplet generation rate. Wang et al. [8], building on the three-stage decarburization theory and time-series data, used an explicit finite difference method to establish a continuous carbon content model for real-time prediction. Although these mechanistic models are highly interpretable, they involve many key parameters that cannot be measured or calculated directly, and they rely on numerous assumptions and simplifications, which limits their accuracy.

With the rapid development of computing, networking, artificial intelligence, and big data technologies, data-driven models are now widely used to predict converter endpoint carbon content [9,10,11]. Real-time data-driven carbon prediction relies mainly on real-time detection techniques such as converter flue gas analysis and flame imaging. These approaches do not require an in-depth understanding of the system's underlying physical mechanisms; instead, they extract patterns directly from the data. Because the flue gas sensors are located far from the reaction zone of the molten bath, the flue gas data exhibit a measurable time lag. To address this issue, Gu et al. [12] used historical flue gas data to fit real-time carbon content changes and, with time-series data as input, established a dynamic carbon prediction model for the reblowing phase that combines case-based reasoning with long short-term memory neural networks (CBR-LSTM). Because converter flames exhibit multi-directional, multi-scale, irregular patterns that are difficult to describe, Liu et al. [13] developed a flame image feature extraction approach based on a derivative-driven nonlinear mapping direction-weighted multi-layer complex network (DDMCN) and combined it with a KNN regression model for real-time carbon prediction. Data-driven approaches can uncover hidden patterns and correlations, but their accuracy depends strongly on the quality and quantity of the data [14,15]. In addition, the absence of mechanistic knowledge may lead to predictions that violate physical laws such as conservation of mass.

To address both the prediction bias of mechanistic models caused by simplifying assumptions and the lack of physical interpretability of data-driven models, hybrid models that integrate the two approaches have been developed. They use mechanistic knowledge to constrain the model structure and reduce reliance on data, while using data-driven methods to capture complex nonlinear relationships [16]. Li et al. [17] introduced melt pool homogeneity into the traditional exponential model based on furnace gas analysis data and used nonlinear fitting to quantify the effect of time-series data on the decarburization rate; combining this with slag molecular theory, they developed a hybrid model for real-time carbon prediction. However, the core of this model still relies on predefined functions and offline parameter calibration, so it responds poorly in real time to the dynamic effects of instantaneous operations such as lance position changes and flow fluctuations. To improve adaptability to dynamic processes, Wang et al. [18] converted the conventional time-based exponential decarburization kinetic model into an oxygen-based form, used case-based reasoning (CBR) to determine key model parameters, and thereby built a hybrid model for real-time carbon prediction in the reblowing phase. Although this method improves parameter adaptability through data-driven techniques, it imposes no direct constraint from the core principles of decarburization kinetics, so physically inconsistent biases introduced by the data-driven component are difficult to avoid. To strengthen the mechanistic constraints further, Xia et al. [19] developed a physics-informed neural network (PINN) model for predicting the endpoint carbon content of a converter, achieving a hit rate of 93.52% within an error range of ±0.02%. A PINN embeds physical laws directly into the neural network's loss function, so the model learns correlations in the data while respecting predefined physical constraints [20]. However, its static network structure cannot capture dynamic variations in time-series data [21], which restricts it to predicting the endpoint carbon content of the blowing process rather than continuously tracking carbon content throughout the process [22].

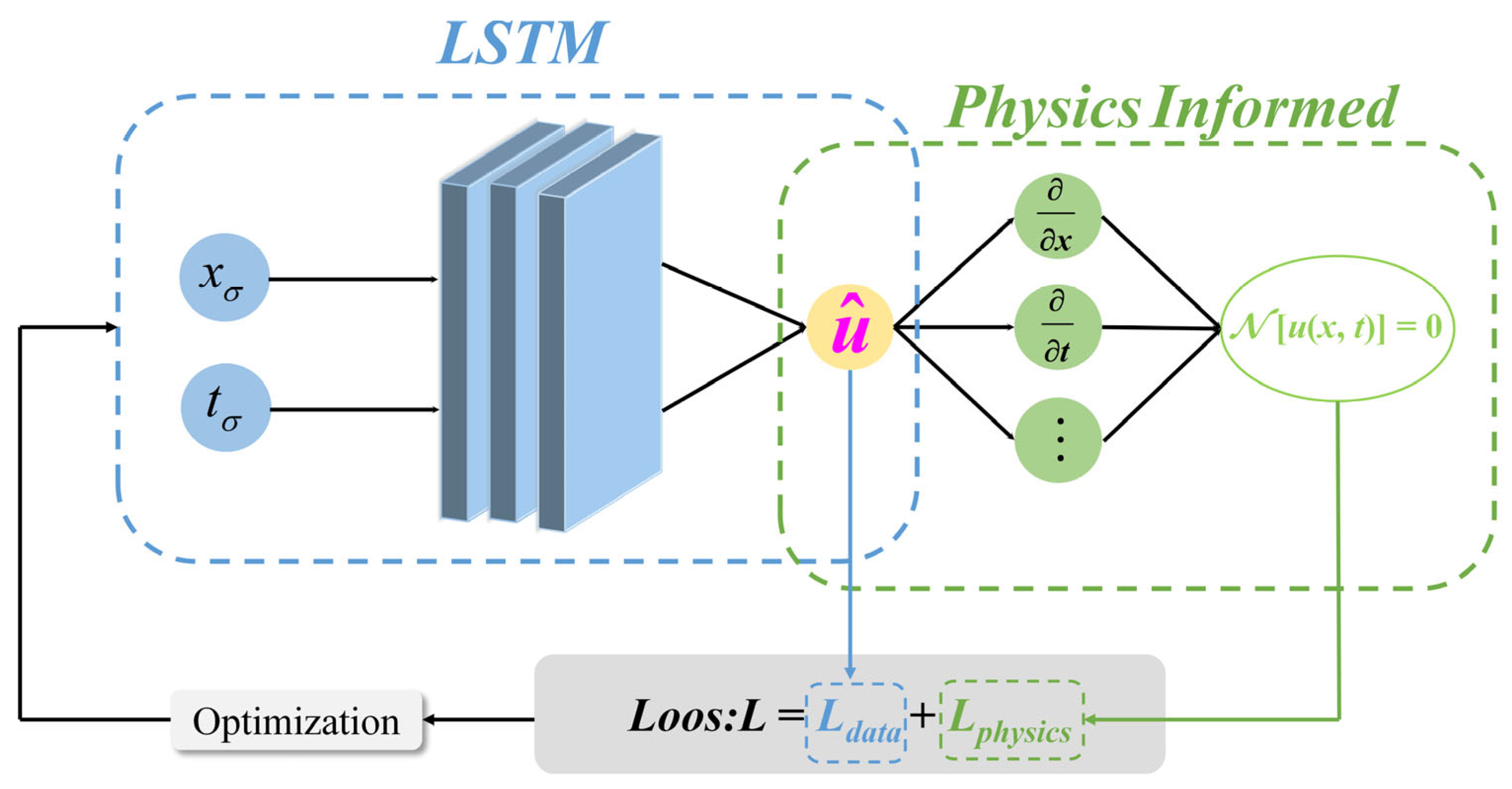

Replacing the conventional neural network in the PINN framework with an LSTM [23] yields a physics-informed long short-term memory neural network (PI-LSTM) [24]. PI-LSTM hybrid models have been applied successfully in several domains, including wave forecasting, material property prediction, and motor stator evaluation [25,26,27,28]. To the best of our knowledge, however, no studies have reported their use for real-time prediction of converter carbon content. The approach combines the physical-law compliance of PINNs with the ability of LSTMs to model nonlinear time series [29], which makes it well suited to dynamic modeling of converter carbon content. Compared with other hybrid modeling techniques, PI-LSTM embeds physical constraints directly in training, reducing reliance on data and improving generalizability and interpretability [30]. Compared with a PINN, the gating mechanism of the LSTM can capture the temporal effects of lance position and flow rate adjustments during the reblowing phase on carbon content fluctuations. In summary, by combining the time-series characteristics of converter smelting data with decarburization kinetics, PI-LSTM is well positioned to handle the complex dynamics of converter decarburization modeling.

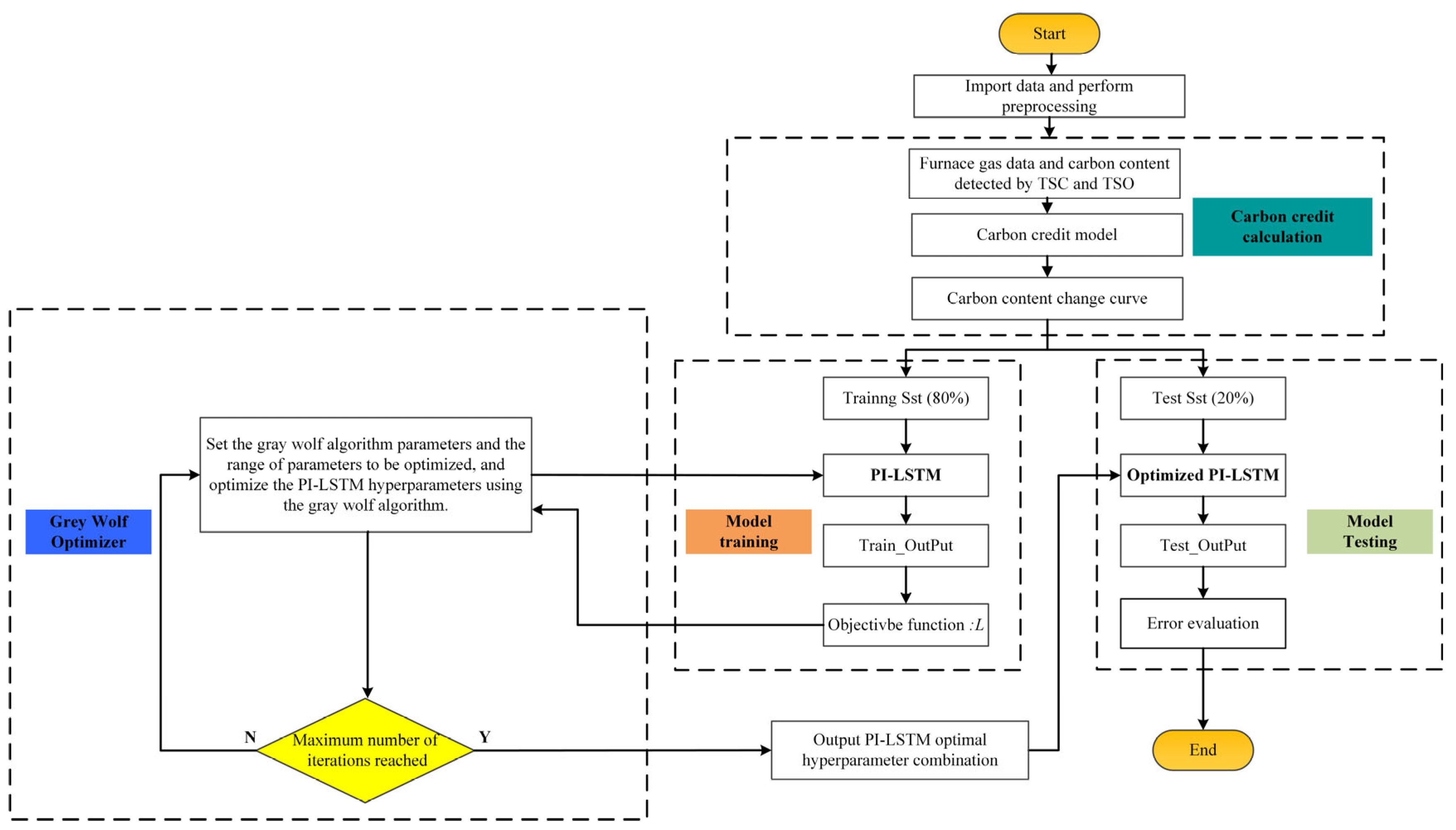

This study addresses the severe lag of flue gas data in the converter blowing process and the limitations of current real-time carbon prediction models by constructing a PI-LSTM-based real-time carbon content prediction model for the converter reblowing phase. First, historical converter flue gas data were aligned in time. Based on the CO and CO2 concentrations in the flue gas, a carbon integration model was used to fit the carbon content curve of each historical heat during the reblowing stage; these curves serve as the reference values for actual carbon content. Second, based on an analysis of decarburization kinetics, the study focused on estimating the non-measurable parameters of the decarburization equation in the final stage of blowing. The model uses a dual-branch LSTM network that simultaneously predicts the carbon content (data-driven branch) and the unmeasurable parameters of the mechanistic formula (mechanism branch). It is trained with a joint loss that combines a data-driven loss and a physical-mechanism constraint loss, with process data as input and the fitted carbon content curves as targets. The model hyperparameters were optimized with the grey wolf optimizer (GWO). The resulting PI-LSTM model provides real-time carbon content prediction for the converter reblowing stage and a basis for accurate closed-loop control of carbon content in the converter blowing process.
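The joint loss described above can be sketched as follows. This is a minimal NumPy illustration, not the authors' implementation: it assumes Equation (17) is an unweighted sum of a data-driven mean squared error and a mechanism-branch mean squared error, both measured against the carbon curves fitted by the carbon integration model (the losses reported in Section 4.3, 0.0057 + 0.0085 = 0.0142, are consistent with such a sum). The mechanistic decarburization formula itself is not reproduced; `c_mech` stands for the carbon values obtained after the mechanism branch's predicted parameters are inserted into that formula. All function names are hypothetical.

```python
import numpy as np


def mse(pred, target):
    """Mean squared error between two equally shaped arrays."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.mean((pred - target) ** 2))


def joint_loss(c_data, c_mech, c_label):
    """Hypothetical dual-branch loss (assumed to be an unweighted sum).

    c_data  : carbon content predicted directly by the data-driven branch
    c_mech  : carbon content from the mechanism branch, i.e. the decarburization
              formula evaluated with the branch's predicted parameters
    c_label : carbon content fitted by the carbon integration model
    Returns (total, data_term, mechanism_term).
    """
    l_data = mse(c_data, c_label)
    l_mech = mse(c_mech, c_label)
    return l_data + l_mech, l_data, l_mech


# Toy reblowing-stage values in % carbon (illustrative only).
label = [0.060, 0.052, 0.046]
data_branch = [0.061, 0.051, 0.046]
mech_branch = [0.058, 0.053, 0.047]
total, l_data, l_mech = joint_loss(data_branch, mech_branch, label)
```

In an actual PI-LSTM the same arithmetic would be written in an automatic-differentiation framework so that gradients flow through both branches; NumPy is used here only to make the structure of the loss explicit.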

2. Analysis of the Converter Steelmaking Process

2.1. Converter Smelting Process

As a high-temperature, high-pressure metallurgical reactor, the converter hosts intense multi-component, multi-phase reactions [31], as shown in Figure 1. The objective of smelting is to reduce the impurity elements in the molten iron to specified limits and raise its temperature from about 1350 °C to around 1650 °C [32]. Based on when the auxiliary lance is operated, the blowing process is divided into the main blowing stage and the reblowing stage. In the main blowing stage, scrap and molten iron are charged into the converter in sequence. Oxygen is then injected into the molten bath through the oxygen lance at a defined flow rate, while fluxes such as lime and calcined dolomite are added in batches. When the oxygen supply reaches approximately 85%, the auxiliary lance is inserted to measure the carbon content and temperature of the melt pool (this measurement is denoted TSC). In the subsequent reblowing (secondary blowing) stage, the required oxygen supply or coolant addition is adjusted based on the TSC measurement. After blowing is completed, the auxiliary lance is inserted again to measure the carbon content and temperature (denoted TSO). If the TSO values meet the endpoint control requirements, alloying is performed; otherwise, additional blowing is carried out [33].

2.2. Converter Decarbonization Process

Based on the stage-specific characteristics of decarburization kinetics during blowing, the decarburization process is divided into three phases: the initial, middle, and terminal phases of blowing [34], as illustrated in Figure 2. In the initial phase, the melt pool temperature is relatively low (typically < 1400 °C) and oxygen preferentially oxidizes the silicon and manganese in the molten iron, which suppresses the carbon-oxygen reaction; the decarburization rate is low and increases approximately linearly [35]. In the middle phase, as the silicon and manganese contents drop sharply and the melt pool temperature rises above 1500 °C, decarburization enters a fast, stable regime in which the rate reaches its peak and remains roughly constant. In this regime the rate is governed mainly by the oxygen supply intensity: the higher the oxygen flow rate, the higher the decarburization rate. In the terminal phase, once the carbon content falls below a critical value, the rate-limiting step shifts to carbon mass transfer and diffusion, and the decarburization rate decreases exponentially as the carbon concentration falls. Increasing the oxygen supply at this point lowers oxygen utilization and aggravates the accumulation of oxygen in the melt pool [8].
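The three-stage behavior described above can be illustrated with a simple piecewise rate law. The sketch below is a toy under explicit assumptions, not the kinetic formula used in this study or in reference [8]: the stage I ramp duration `t1`, the proportionality constant `k_o2`, the critical carbon content `c_crit`, and the decay constant `beta` are hypothetical placeholders chosen only so that the three regimes are visible, and the simulation uses a plain forward-Euler step.

```python
import math


def decarb_rate(t, carbon, o2_flow, t1=180.0, k_o2=3e-4, c_crit=0.4, beta=8.0):
    """Hypothetical three-stage decarburization rate in % carbon per second.

    t       : blowing time, s
    carbon  : current bath carbon content, %
    o2_flow : oxygen flow rate, Nm3/s
    All parameter values are illustrative placeholders, not fitted values.
    """
    r_peak = k_o2 * o2_flow  # stage II plateau scales with oxygen supply intensity
    if carbon < c_crit:
        # Stage III: carbon mass transfer limits the reaction, so the rate falls
        # exponentially as carbon drops further below the critical value.
        return r_peak * math.exp(-beta * (c_crit - carbon))
    if t < t1:
        # Stage I: Si and Mn oxidize first; the rate ramps up roughly linearly.
        return r_peak * t / t1
    # Stage II: high, stable rate controlled by the oxygen supply.
    return r_peak


def simulate(c0=4.2, o2_flow=15.0, dt=1.0, t_end=1100.0):
    """Forward-Euler integration of the toy rate law; returns (t, carbon) pairs."""
    t, c, history = 0.0, c0, []
    while t <= t_end:
        history.append((t, c))
        c -= decarb_rate(t, c, o2_flow) * dt
        t += dt
    return history
```

With an oxygen flow of 15 Nm³/s (54,000 Nm³/h, inside the range reported in Table 1), the simulated carbon curve ramps up slowly, falls linearly during the plateau, and then flattens once it crosses `c_crit`, which is the qualitative shape shown in Figure 2.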

2.3. Converter Flue Gas Analysis

During the converter smelting process, CO generated by the decarburization reaction undergoes secondary combustion within the furnace, with part of it oxidizing into CO2, forming converter furnace gas primarily composed of CO and CO2. The converter furnace gas rises to the hood, where it mixes with air drawn in from outside the hood and undergoes secondary combustion outside the furnace, forming converter flue gas primarily composed of CO, CO2, O2, N2, Ar, H2, and other gases. Converter flue gas analysis and dynamic control continuously monitor the composition and flow rate of flue gas generated during the blowing process. Through model calculations, it provides real-time online forecasts of steel melt element composition and temperature changes, issues warnings, and controls slag conditions. It adjusts oxygen supply flow rates and slag-making regimes online to improve steel melt quality and final target achievement rates.

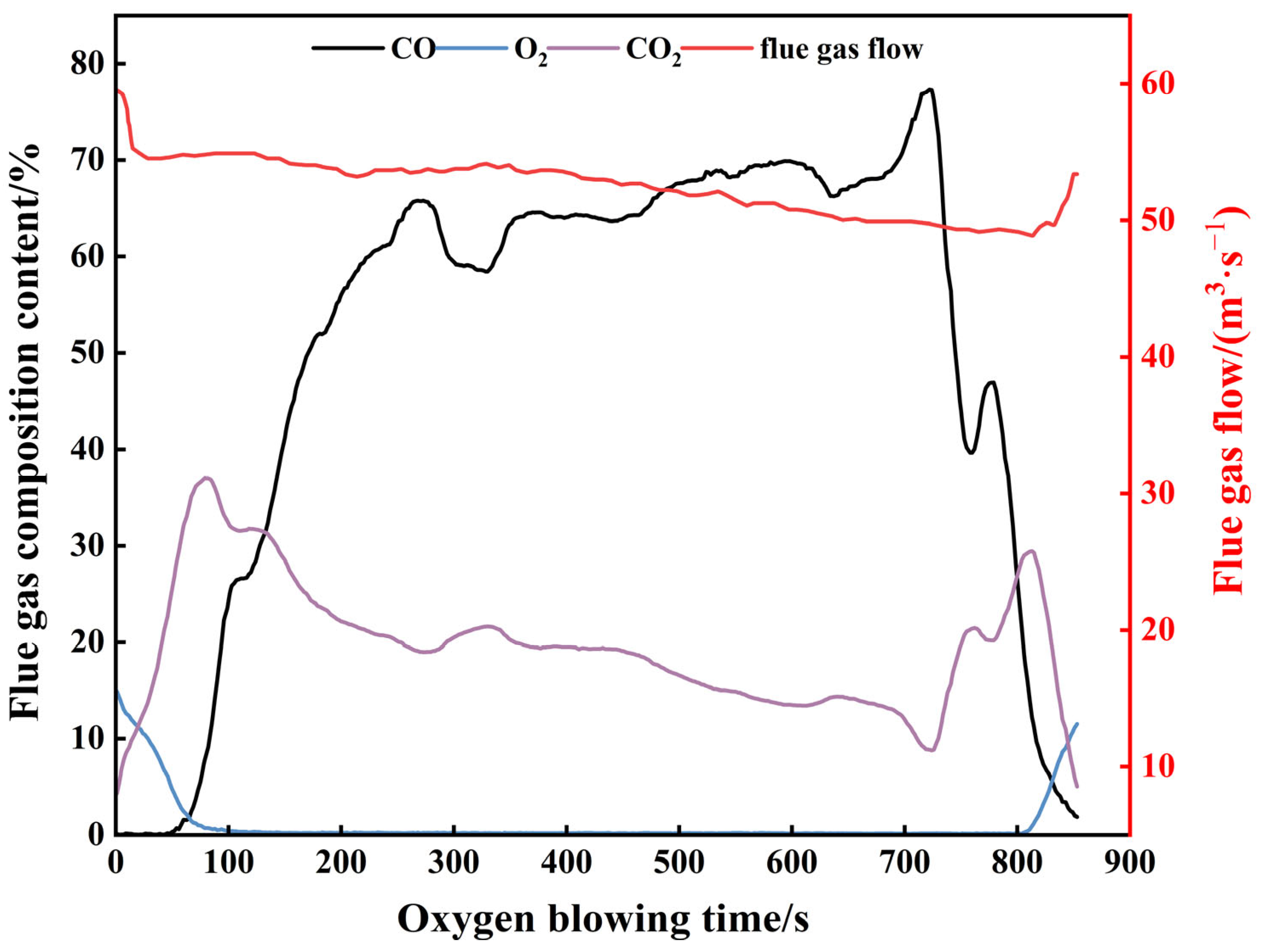

Key components of the flue gas and the variation of the flue gas flow rate during converter smelting are illustrated in Figure 3. During smelting, CO and CO2 follow opposite trends. At the end of smelting, the fluctuations in CO and CO2 content are caused by auxiliary lance sampling: after the auxiliary lance is inserted, the oxygen lance stops supplying oxygen, which slows CO combustion and causes a slight rise in CO content. The CO trend in the figure broadly agrees with the three-stage decarburization theory, because CO generated by the carbon-oxygen reaction in the furnace accounts for a very high proportion of the flue gas [36]. Using the volume fractions of CO and CO2 in the flue gas and the flue gas flow rate, the decarburization rate of the melt pool can be computed from the carbon balance during converter smelting (Equation (1)):

$$ v_C = \frac{12}{22.4}\, Q \left( \varphi_{\mathrm{CO}} + \varphi_{\mathrm{CO_2}} \right) \qquad (1) $$

In Equation (1), $v_C$ is the decarburization rate, kg/s; $Q$ is the flue gas flow rate, Nm³/s; and $\varphi_{\mathrm{CO}}$ and $\varphi_{\mathrm{CO_2}}$ are the volume fractions of CO and CO2 in the flue gas.

The carbon integration model calculates the carbon content of the molten pool by subtracting the carbon continuously released in the furnace gas as CO and CO2 from the total carbon in the molten pool at TSC; the remainder is the carbon content of the molten steel [17]. The instantaneous carbon content of the molten pool is obtained from Equation (2):

$$ C_t = \frac{m_{C,0} - \int_0^t v_C \, \mathrm{d}\tau}{W_{\mathrm{steel}}} \times 100\% \qquad (2) $$

In Equation (2), $C_t$ is the carbon content at time t, %; $m_{C,0}$ is the total carbon mass at the initial (TSC) condition, kg; $v_C$ is the decarburization rate given by Equation (1), kg/s; and $W_{\mathrm{steel}}$ is the molten steel mass, kg.
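The carbon integration model can be sketched in a few lines. This is a minimal illustration under stated assumptions: the decarburization rate is taken from a carbon balance of the form v_C = (12/22.4) · Q · (φ_CO + φ_CO2), with Q in Nm³/s and the gas contents given as volume fractions rather than percentages; the flue gas series has already been time-aligned; the integral starts at the TSC time; and the integral is approximated with the trapezoidal rule. The function names are hypothetical.

```python
import numpy as np

M_CARBON = 12.0      # molar mass of carbon, kg/kmol
MOLAR_VOLUME = 22.4  # molar volume of an ideal gas at normal conditions, Nm3/kmol


def decarb_rate_from_flue_gas(q_flue, phi_co, phi_co2):
    """Carbon leaving the bath as CO and CO2, kg/s.

    q_flue          : flue gas flow rate, Nm3/s
    phi_co, phi_co2 : volume fractions (0-1) of CO and CO2 in the flue gas
    """
    q = np.asarray(q_flue, dtype=float)
    phi = np.asarray(phi_co, dtype=float) + np.asarray(phi_co2, dtype=float)
    return M_CARBON / MOLAR_VOLUME * q * phi


def carbon_integration(t, v_c, c_tsc, w_steel):
    """Bath carbon content (%) from the TSC measurement onward.

    t       : time stamps since TSC, s (increasing)
    v_c     : decarburization rate at each time stamp, kg/s
    c_tsc   : carbon content measured at TSC, %
    w_steel : molten steel mass, kg
    """
    t = np.asarray(t, dtype=float)
    v_c = np.asarray(v_c, dtype=float)
    m_c0 = c_tsc / 100.0 * w_steel  # carbon mass in the bath at TSC, kg
    # Cumulative trapezoidal integral of the rate = carbon removed so far, kg.
    removed = np.concatenate(([0.0], np.cumsum(0.5 * (v_c[1:] + v_c[:-1]) * np.diff(t))))
    return (m_c0 - removed) / w_steel * 100.0


# Illustrative numbers: 300 t of steel, C_TSC = 0.2 %, samples every 2 s.
t = np.arange(0.0, 12.0, 2.0)
v_c = decarb_rate_from_flue_gas(np.full(t.shape, 22.4), 0.4, 0.1)  # 6 kg/s
carbon = carbon_integration(t, v_c, c_tsc=0.2, w_steel=300_000.0)  # 0.2 % -> 0.18 %
```

Over the 10 s of this toy record, 60 kg of carbon leaves a 300 t bath, lowering the carbon content by 0.02%.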

Currently, on-site carbon content measurements are available only at the TSC and TSO time points; no carbon data are available in between. Flue gas data inherently lag the bath state because of the gas transport path and the response characteristics of the detection equipment, which causes delays during online smelting. For historical data, however, the flue gas data can be aligned with non-delayed time-series data, such as the oxygen flow rate, using the known delay time. This allows the carbon content of historical heats during the reblowing stage to be calculated with the carbon integration model.

4. Model Results and Analysis

4.1. Data Preprocessing

This study uses production data for the low-carbon, low-phosphorus steel grade SDC collected from a 300 t converter at a steel mill. To mitigate the impact of anomalous measurements on model performance, outliers were identified and removed using the interquartile range (IQR) criterion, which is robust to non-normal data distributions. After sorting the data, the first quartile Q1 (25th percentile) and third quartile Q3 (75th percentile) were computed. The IQR (IQR = Q3 − Q1), a measure of statistical dispersion, was used to define a lower bound (Q1 − 1.5 × IQR) and an upper bound (Q3 + 1.5 × IQR); observations outside this interval were classified as outliers and discarded. This filtering yielded 1207 valid heat records. The data were randomly partitioned into a training set (80%) and a test set (20%).
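The IQR screening and random split can be sketched as follows. This is a minimal illustration, not the study's code: it assumes one row per heat, applies the 1.5 × IQR rule to every numeric column independently and drops a heat if any column is flagged (the text does not state which variables were screened), and relies on NumPy's default linear interpolation for percentiles. The function names and the random seed are hypothetical.

```python
import numpy as np


def iqr_inlier_mask(x, k=1.5):
    """True where x lies inside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    return (x >= q1 - k * iqr) & (x <= q3 + k * iqr)


def clean_and_split(heats, train_frac=0.8, seed=0):
    """Drop outlier heats, then split the remaining heats at random into train/test."""
    heats = np.asarray(heats, dtype=float)
    keep = np.all([iqr_inlier_mask(heats[:, j]) for j in range(heats.shape[1])], axis=0)
    clean = heats[keep]
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(clean))
    n_train = int(round(train_frac * len(clean)))
    return clean[order[:n_train]], clean[order[n_train:]]


# Toy table: column 0 contains one gross outlier (100.0).
heats = np.column_stack([[1, 2, 3, 4, 5, 6, 7, 8, 9, 100], np.arange(10)])
train, test = clean_and_split(heats)
```

Splitting whole heats (our assumption) rather than individual sliding-window samples keeps the overlapping windows of one heat from appearing in both sets.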

The statistics of the single-valued data and the reblowing-stage time-series data from the converter blowing process are shown in Table 1. All 1207 processed heats belong to the low-carbon, low-phosphorus steel grade SDC. The initial carbon content (C_TSC) ranged from 0.064% to 0.554% (mean 0.244%, standard deviation 0.1068%), while the final carbon content (C_TSO) ranged from 0.025% to 0.066% (mean 0.042%, standard deviation 0.00782%). The composition range covers the typical smelting requirements for this grade, with no extreme deviations. The key process parameters (oxygen flow rate 28,164–64,228 Nm³·h⁻¹; oxygen lance height 1498–5580 mm; bottom-blown gas flow rate 424–2868 Nm³·h⁻¹) all fall within the standard operating range of 300 t converters. The reblowing phase lasted 1–3 min, consistent with industrial practice.

In Table 1, C_TSC and C_TSO denote the carbon contents measured at the TSC and TSO time points, respectively.

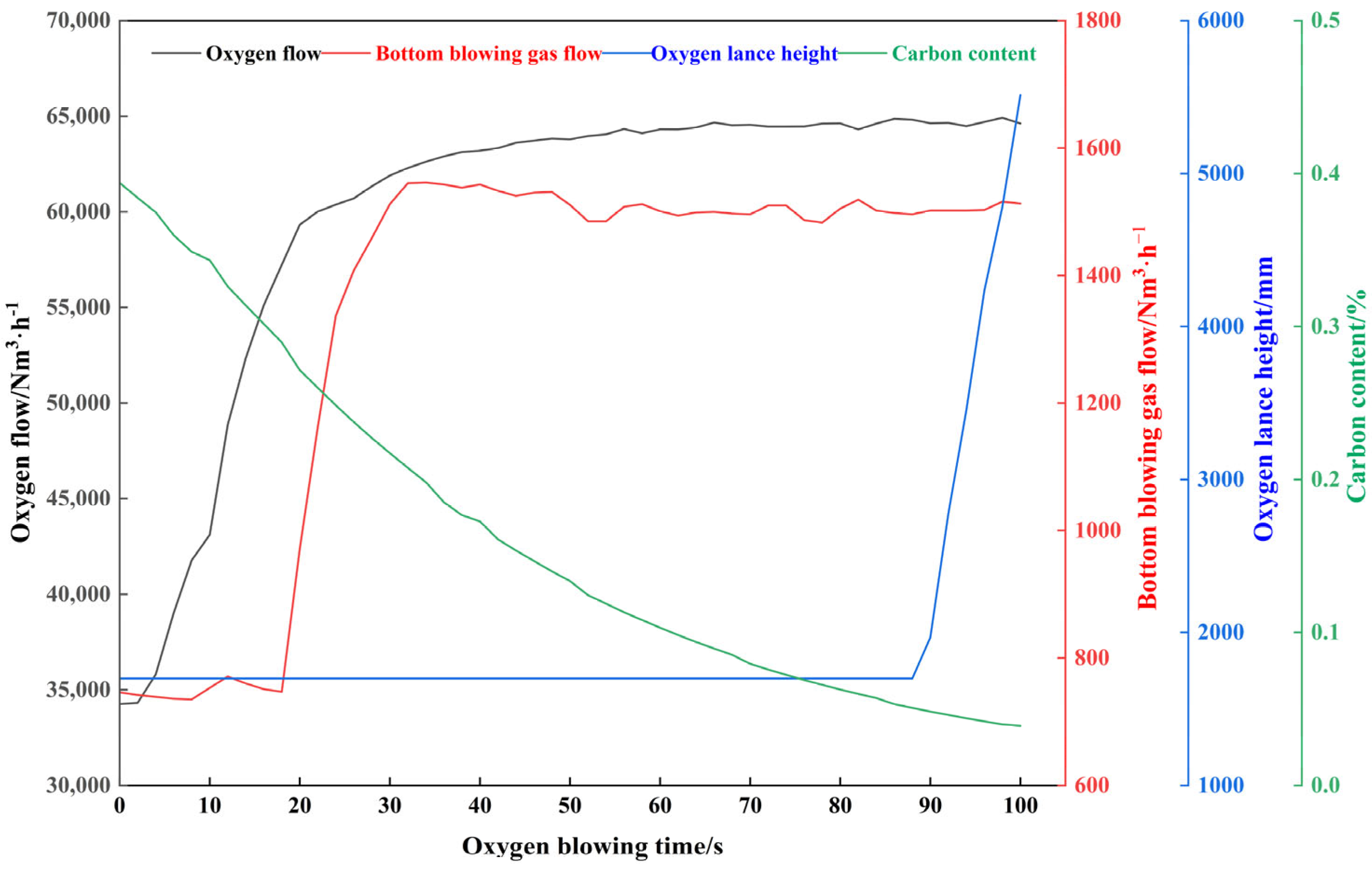

To address the lag in the flue gas data, the carbon content calculated from the flue gas data is aligned with non-delayed time-series data such as the oxygen flow rate. The on-site data delay is 70 s. The aligned time-series data for the reblowing stage of a representative heat are shown in Figure 8.
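The alignment step can be sketched as a fixed-lag shift. This minimal illustration assumes that the flue gas and non-delayed signals share the 0.5 Hz sampling rate given in Section 4.3 and that the 70 s delay is constant within a heat, which gives a lag of 35 samples; a real implementation might instead estimate the delay per heat. The function name is hypothetical.

```python
import numpy as np


def align_with_delay(fast, flue, delay_s=70.0, dt_s=2.0):
    """Pair non-delayed samples with the flue gas samples that describe them.

    fast : non-delayed series, e.g. oxygen flow rate, shape (T, ...)
    flue : flue gas series recorded on the same clock, shape (T, ...)
    Flue gas recorded at time t + delay reflects the bath at time t, so the
    flue series is advanced by `lag` samples and the fast series is trimmed.
    """
    fast = np.asarray(fast, dtype=float)
    flue = np.asarray(flue, dtype=float)
    if len(fast) != len(flue):
        raise ValueError("series must share the same time base")
    lag = int(round(delay_s / dt_s))
    if lag >= len(flue):
        raise ValueError("record is shorter than the delay")
    return fast[: len(fast) - lag], flue[lag:], lag


# Synthetic check: the flue series is the fast series delayed by 35 samples.
fast = np.arange(50.0)
flue = np.concatenate([np.zeros(35), fast[:15]])
fast_aligned, flue_aligned, lag = align_with_delay(fast, flue)
```

After alignment, the two series describe the same bath state at each index, so the carbon curve from the carbon integration model can be paired directly with oxygen flow, lance height, and the other process signals.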

Min-max normalization is used to scale all data to the range [0, 1], which prevents gradient instability caused by the differing magnitudes of process parameters, speeds up convergence, and improves training efficiency and prediction stability:

$$ x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \qquad (21) $$

In Equation (21), $x'$ is the normalized value, $x$ is the original value, and $x_{\max}$ and $x_{\min}$ are the maximum and minimum of the original data, respectively.
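Equation (21) can be sketched as follows. In this minimal illustration the minima and maxima are estimated on the training set only and then reused for the test set and for mapping predictions back to % carbon; that choice, the guard for constant columns, and the function names are our assumptions, not details given in the text.

```python
import numpy as np


def fit_min_max(train):
    """Column-wise minimum and maximum of the training data."""
    train = np.asarray(train, dtype=float)
    return train.min(axis=0), train.max(axis=0)


def _span(x_min, x_max):
    # Avoid division by zero for a column that is constant in the training set.
    return np.where(x_max > x_min, x_max - x_min, 1.0)


def min_max_transform(x, x_min, x_max):
    """x' = (x - x_min) / (x_max - x_min), as in Equation (21)."""
    return (np.asarray(x, dtype=float) - x_min) / _span(x_min, x_max)


def min_max_inverse(x_scaled, x_min, x_max):
    """Map normalized values (e.g. predicted carbon) back to original units."""
    return np.asarray(x_scaled, dtype=float) * _span(x_min, x_max) + x_min


# Columns: oxygen flow rate (Nm3/h) and lance height (mm); the first two rows are
# the Table 1 extremes and the third row is their midpoint.
train = np.array([[28164.0, 1498.0], [64228.0, 5580.0], [46196.0, 3539.0]])
x_min, x_max = fit_min_max(train)
scaled = min_max_transform(train, x_min, x_max)   # rows -> [0, 0], [1, 1], [0.5, 0.5]
restored = min_max_inverse(scaled, x_min, x_max)
```

Because the scaler is fitted on training data only, test-set values outside the training range map slightly outside [0, 1]; this is expected and avoids leaking test information into training.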

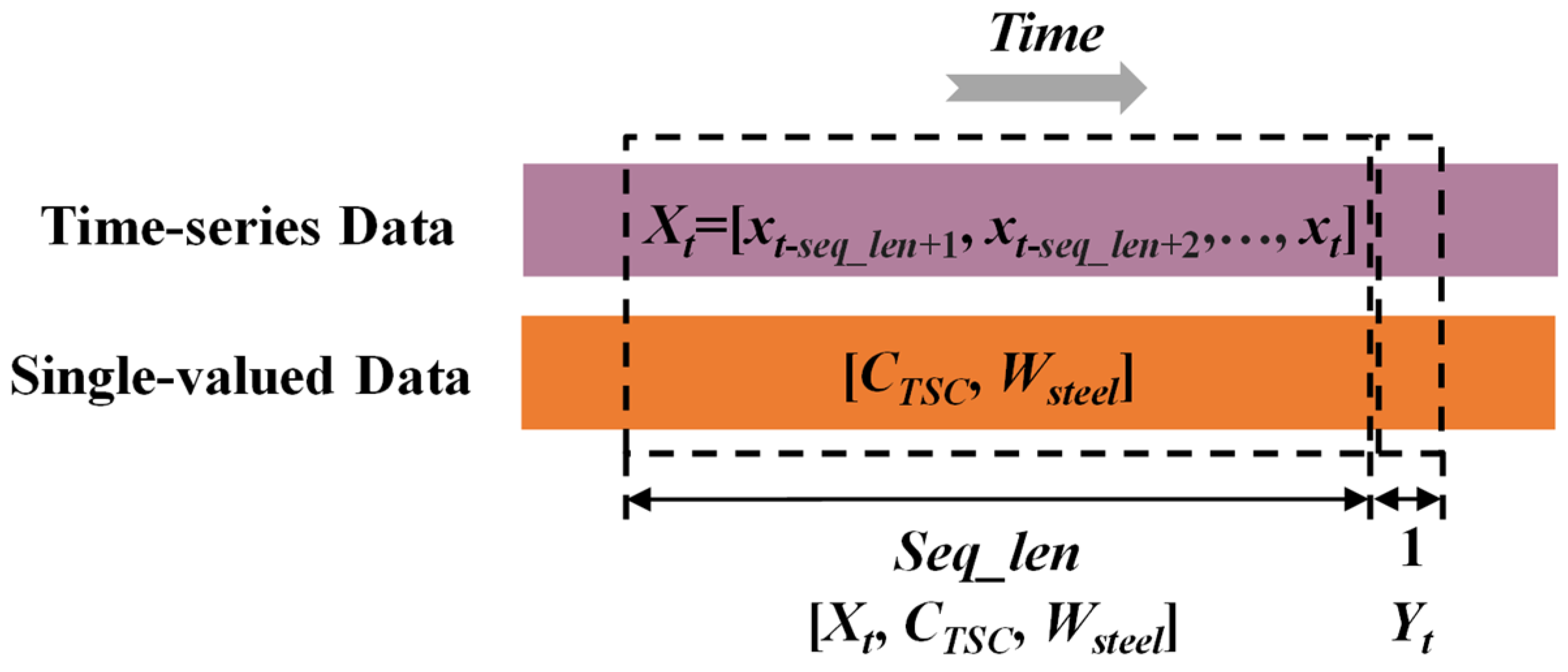

In real-time carbon content prediction, the temporal dependency of the time-series data is critical. To exploit it, a sliding window mechanism is used to structure the continuous data [45] into pairs of input sequences and output labels; the workflow is illustrated in Figure 9. For the time-series features, each input sequence consists of the observations at the most recent seq_len consecutive time points, X_t = [x_{t−seq_len+1}, x_{t−seq_len+2}, …, x_t], and the corresponding output label Y_t is the carbon content at the next time point. The input sequence length seq_len is determined by hyperparameter optimization. Single-valued data, such as the TSC carbon content and the molten steel weight, are concatenated with the temporal input of each pair to form the complete input vector [X_t, C_TSC, W_steel]. The many overlapping samples produced in this way enlarge the training set and improve generalization. During prediction, the window slides forward at each time step so that changes in carbon content are tracked in real time.
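The windowing scheme can be sketched as follows for a single heat. This minimal illustration assumes the single-valued inputs (C_TSC and W_steel) are repeated at every time step of the window, so that each step carries the full vector [x_t, C_TSC, W_steel]; the text does not specify how the concatenation is done, and feeding the static values through a separate dense input would be an equally plausible design. The function name is hypothetical.

```python
import numpy as np


def make_windows(series, carbon, static, seq_len):
    """Build (input sequence, next-step carbon) pairs for one heat.

    series : (T, F) time-series features, already normalized
    carbon : (T,) carbon content labels on the same time grid
    static : (S,) single-valued inputs, e.g. [C_TSC, W_steel] after scaling
    Returns X with shape (T - seq_len, seq_len, F + S) and y with shape (T - seq_len,).
    """
    series = np.asarray(series, dtype=float)
    carbon = np.asarray(carbon, dtype=float)
    static = np.asarray(static, dtype=float)
    n_steps = len(series)
    if n_steps <= seq_len:
        raise ValueError("heat is too short for the chosen seq_len")
    static_block = np.tile(static, (seq_len, 1))  # repeat static inputs per step
    xs, ys = [], []
    for t in range(seq_len - 1, n_steps - 1):
        window = series[t - seq_len + 1 : t + 1]  # x_{t-seq_len+1} ... x_t
        xs.append(np.hstack([window, static_block]))
        ys.append(carbon[t + 1])                  # label: carbon at the next time point
    return np.stack(xs), np.array(ys)


# 20 samples (40 s at 0.5 Hz), 3 process features, 2 static inputs, seq_len = 10.
rng = np.random.default_rng(0)
series = rng.random((20, 3))
carbon = np.linspace(0.08, 0.04, 20)
X, y = make_windows(series, carbon, static=[0.5, 0.7], seq_len=10)
```

At prediction time the same function applied to the most recent seq_len samples yields a single window, which is how the window "slides" as new measurements arrive.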

4.2. Model Evaluation Indicators

Model prediction performance is evaluated with the root mean square error (RMSE), the mean absolute error (MAE), and the hit rate (HR) of the endpoint carbon content. RMSE is the square root of the mean squared difference between predicted and actual values; it reflects the overall deviation of the predictions, with smaller values indicating higher accuracy. MAE is the mean absolute deviation between predicted and actual values; it is less affected by extreme errors and reflects the typical magnitude of the prediction error. HR assesses the accuracy of the endpoint carbon prediction and is defined as the proportion of heats whose predicted endpoint carbon content lies within a predefined tolerance of the measured value (Equations (22)–(24)):

$$ \mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \qquad (22) $$

$$ \mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \qquad (23) $$

$$ \mathrm{HR} = \frac{n_{\mathrm{hit}}}{n} \times 100\% \qquad (24) $$

In Equations (22)–(24), $y_i$ is the actual carbon content, $\hat{y}_i$ is the model-predicted carbon content, $n_{\mathrm{hit}}$ is the number of heats for which the absolute difference between the predicted endpoint carbon content and the TSO carbon content does not exceed 0.005%, 0.01%, or 0.015%, and $n$ is the total number of heats used in the evaluation.
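Equations (22)–(24) can be sketched as below. The function names are hypothetical; the hit-rate tolerance is passed in the same units as the carbon content (e.g. 0.01 for ±0.01%), and a tiny epsilon is added so that errors lying exactly on the band edge are not lost to floating-point round-off, which is our choice rather than something stated in the text.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean square error, Equation (22)."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    return float(np.sqrt(np.mean(err ** 2)))


def mae(y_true, y_pred):
    """Mean absolute error, Equation (23)."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    return float(np.mean(np.abs(err)))


def hit_rate(c_tso, c_pred_end, tol, eps=1e-12):
    """Share of heats (in %) whose endpoint error is within +/- tol, Equation (24)."""
    err = np.abs(np.asarray(c_pred_end, dtype=float) - np.asarray(c_tso, dtype=float))
    return 100.0 * float(np.mean(err <= tol + eps))


# Four illustrative heats, endpoint carbon in %.
c_tso = [0.040, 0.050, 0.030, 0.045]
c_pred = [0.043, 0.058, 0.030, 0.060]   # absolute errors: 0.003, 0.008, 0.000, 0.015
rates = [hit_rate(c_tso, c_pred, tol) for tol in (0.005, 0.01, 0.015)]  # [50.0, 75.0, 100.0]
```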

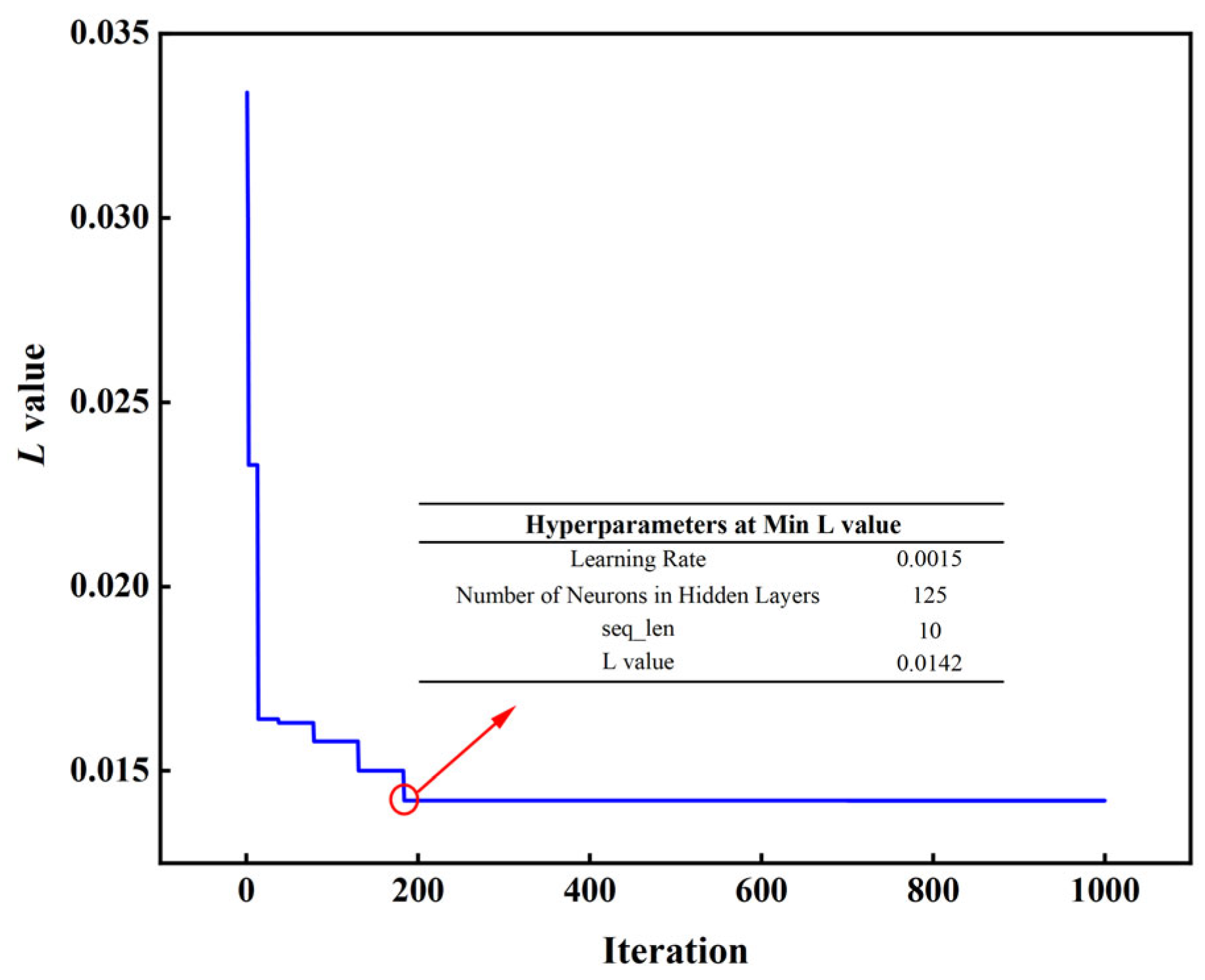

4.3. PI-LSTM Model Hyperparameter Optimization

To improve the convergence speed, prediction accuracy, and generalization of the PI-LSTM model, GWO is used to tune three LSTM hyperparameters: the learning rate, the number of hidden-layer neurons, and the sequence length (seq_len). The learning rate sets the step size of weight updates during training; if it is too large, training may oscillate or diverge, and if it is too small, convergence is slow. Its search range is [0.0001, 0.1]. The number of hidden-layer neurons determines the capacity and complexity of the LSTM network: too few neurons may cause underfitting and miss complex temporal patterns, whereas too many may cause overfitting. Balancing model capacity and computational cost, its range is [10, 500]. The input sequence length of the sliding window affects the model's ability to capture temporal dependencies: too short a window may miss long-term dependencies, whereas too long a window increases computation and may introduce noise. Given the sampling frequency (0.5 Hz, i.e., one sample every 2 s) and the duration of the reblowing stage (1–3 min), its range is [1, 15]. GWO is configured with a population of 30 grey wolves and a maximum of 1000 iterations, and the fitness function is the joint loss L defined in Equation (17). The training set is fed into the model, and the iterative process is illustrated in Figure 10.
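The GWO search can be sketched as follows. This is a minimal textbook-style grey wolf optimizer (leaders α, β, δ taken from the current pack, control parameter a decreasing linearly from 2 to 0, best-ever position retained), not the study's implementation. The mapping from a wolf's position to hyperparameters is our assumption: the learning rate is searched on a log10 scale over [0.0001, 0.1], and the neuron count and seq_len are rounded to integers within [10, 500] and [1, 15]. Because training a PI-LSTM is out of scope here, the usage example minimizes a toy quadratic instead of the joint loss L of Equation (17); `decode`, `gwo`, and `toy_objective` are hypothetical names.

```python
import numpy as np

# Search space: log10(learning rate), hidden neurons, seq_len.
LOWER = np.array([np.log10(1e-4), 10.0, 1.0])
UPPER = np.array([np.log10(1e-1), 500.0, 15.0])


def decode(position):
    """Map a continuous wolf position to (learning_rate, hidden_units, seq_len)."""
    lr = float(10.0 ** position[0])
    hidden = int(round(position[1]))
    seq_len = int(round(position[2]))
    return lr, hidden, seq_len


def gwo(objective, lower, upper, n_wolves=30, n_iter=1000, seed=0):
    """Minimize `objective` over a box with the grey wolf optimizer."""
    rng = np.random.default_rng(seed)
    dim = lower.size
    wolves = rng.uniform(lower, upper, size=(n_wolves, dim))
    fitness = np.array([objective(w) for w in wolves])
    best_i = int(np.argmin(fitness))
    best_x, best_f = wolves[best_i].copy(), float(fitness[best_i])
    for it in range(n_iter):
        leaders = wolves[np.argsort(fitness)[:3]]      # alpha, beta, delta
        a = 2.0 - 2.0 * it / n_iter                    # decreases linearly 2 -> 0
        new_pos = np.zeros_like(wolves)
        for leader in leaders:
            A = 2.0 * a * rng.random((n_wolves, dim)) - a
            C = 2.0 * rng.random((n_wolves, dim))
            D = np.abs(C * leader - wolves)            # distance to this leader
            new_pos += leader - A * D
        wolves = np.clip(new_pos / 3.0, lower, upper)  # average of the three pulls
        fitness = np.array([objective(w) for w in wolves])
        i = int(np.argmin(fitness))
        if fitness[i] < best_f:
            best_x, best_f = wolves[i].copy(), float(fitness[i])
    return best_x, best_f


# Toy stand-in for the joint loss: a quadratic with its minimum at (0.3, 0.3, 0.3).
def toy_objective(x):
    return float(np.sum((x - 0.3) ** 2))


best_x, best_f = gwo(toy_objective, np.full(3, -5.0), np.full(3, 5.0), n_iter=200)
```

With a real model, the call would look like `gwo(lambda p: train_and_score(*decode(p)), LOWER, UPPER, n_wolves=30, n_iter=1000)`, where `train_and_score` is a hypothetical function that trains the PI-LSTM with the decoded hyperparameters and returns its joint loss. Searching the learning rate on a log scale spreads the wolves evenly across its three orders of magnitude.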

GWO converged after 185 iterations, with the joint loss L stabilizing at 0.0142, indicating that it searches the hyperparameter space efficiently for a complex nonlinear problem such as carbon content prediction in the reblowing stage of a converter. The optimal configuration was a learning rate of 0.0015, 125 hidden-layer neurons, and an input sequence length of 10. This learning rate balances sensitivity to dynamic changes in carbon content against convergence stability; 125 neurons provide enough capacity to extract multi-scale features (such as short-term operational fluctuations and long-term decarburization trends) without overfitting; and a sequence length of 10 corresponds to a 20 s historical window, which matches the delay characteristics of the decarburization reaction and allows lag effects to be captured. Overall, GWO optimization substantially improves the predictive performance of the PI-LSTM model.

For the optimized model, the joint loss L is 0.0142, of which the data-driven prediction loss L_LSTM is 0.0057 and the mechanism-based prediction loss L_mech is 0.0085. The weighting coefficient λ used for result fusion in Equation (18) is calculated with Equation (25), giving a final value of λ = 0.6: the data-driven branch contributes 60% of the fused prediction and the mechanism-based branch 40%. This allocation gives the data-driven branch, which has the lower loss, the dominant role, exploiting its ability to capture the nonlinear relationships among time-series operating parameters. The mechanism-based branch retains a 40% weight despite its larger loss, which keeps the fused prediction consistent with decarburization kinetics and guards against physically implausible deviations that purely data-driven predictions may produce, achieving a balance between data features and mechanistic constraints.
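The text does not reproduce Equation (25), but the reported losses are consistent with inverse-loss weighting: λ = (1/L_LSTM) / (1/L_LSTM + 1/L_mech) = L_mech / (L_LSTM + L_mech) = 0.0085 / 0.0142 ≈ 0.599, which rounds to 0.6. The sketch below uses that form as an assumption, together with a weighted-average fusion matching the 60%/40% description of Equation (18); the function names are hypothetical.

```python
def inverse_loss_weight(l_data, l_mech):
    """Assumed form of Equation (25): weight each branch by its inverse loss.

    lambda = (1/l_data) / (1/l_data + 1/l_mech), which simplifies to
    l_mech / (l_data + l_mech).
    """
    return l_mech / (l_data + l_mech)


def fuse(c_data, c_mech, lam):
    """Weighted fusion of the two branch predictions (Equation (18) style)."""
    return lam * c_data + (1.0 - lam) * c_mech


lam = inverse_loss_weight(0.0057, 0.0085)   # about 0.5986
lam_rounded = round(lam, 1)                 # 0.6, the value reported in the text
c_fused = fuse(0.040, 0.045, lam_rounded)   # 0.6 * 0.040 + 0.4 * 0.045 = 0.042
```

Inverse-loss weighting automatically gives more influence to whichever branch currently fits the training data better, while never letting the weaker branch drop to zero.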

4.4. Model Results Analysis

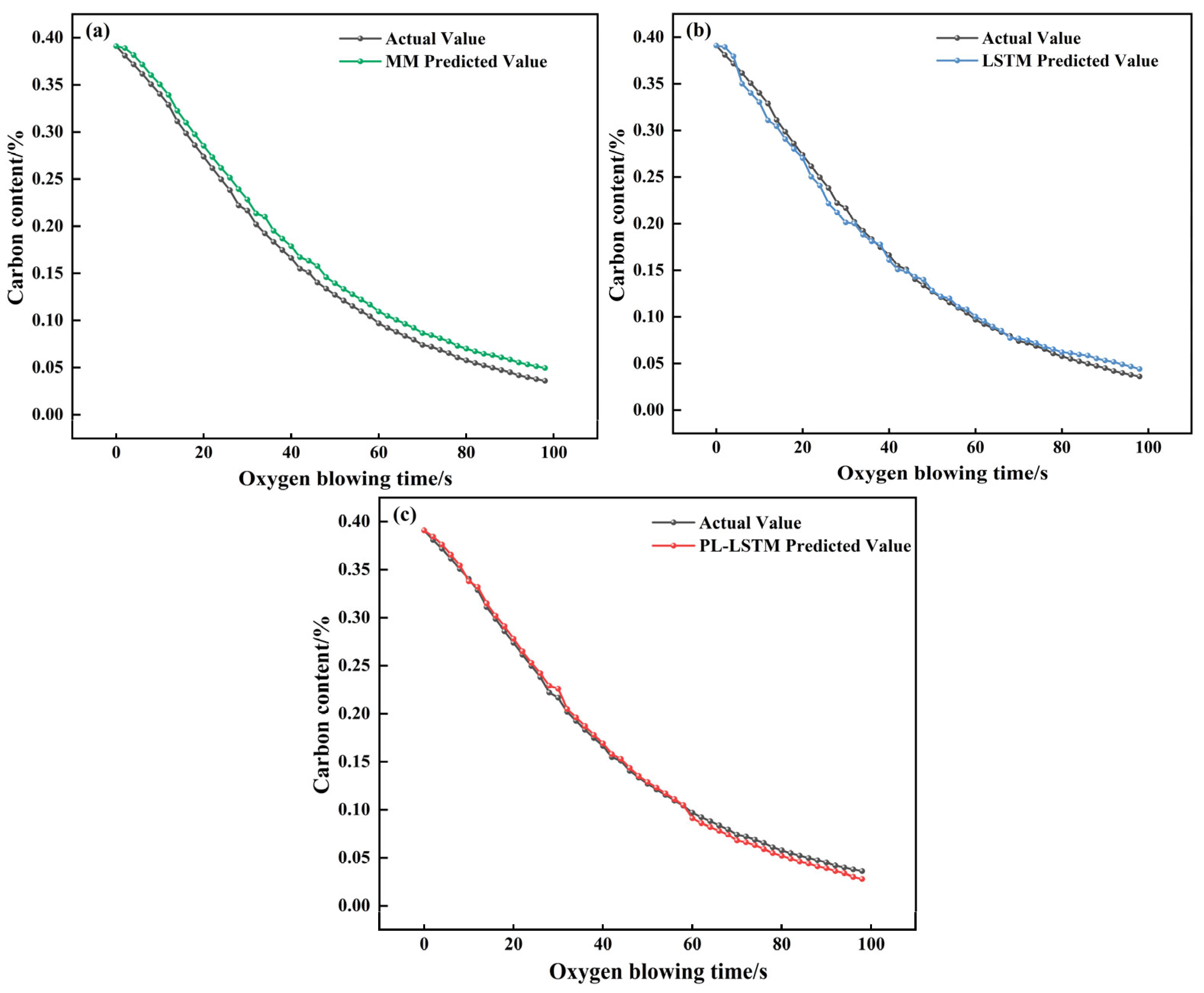

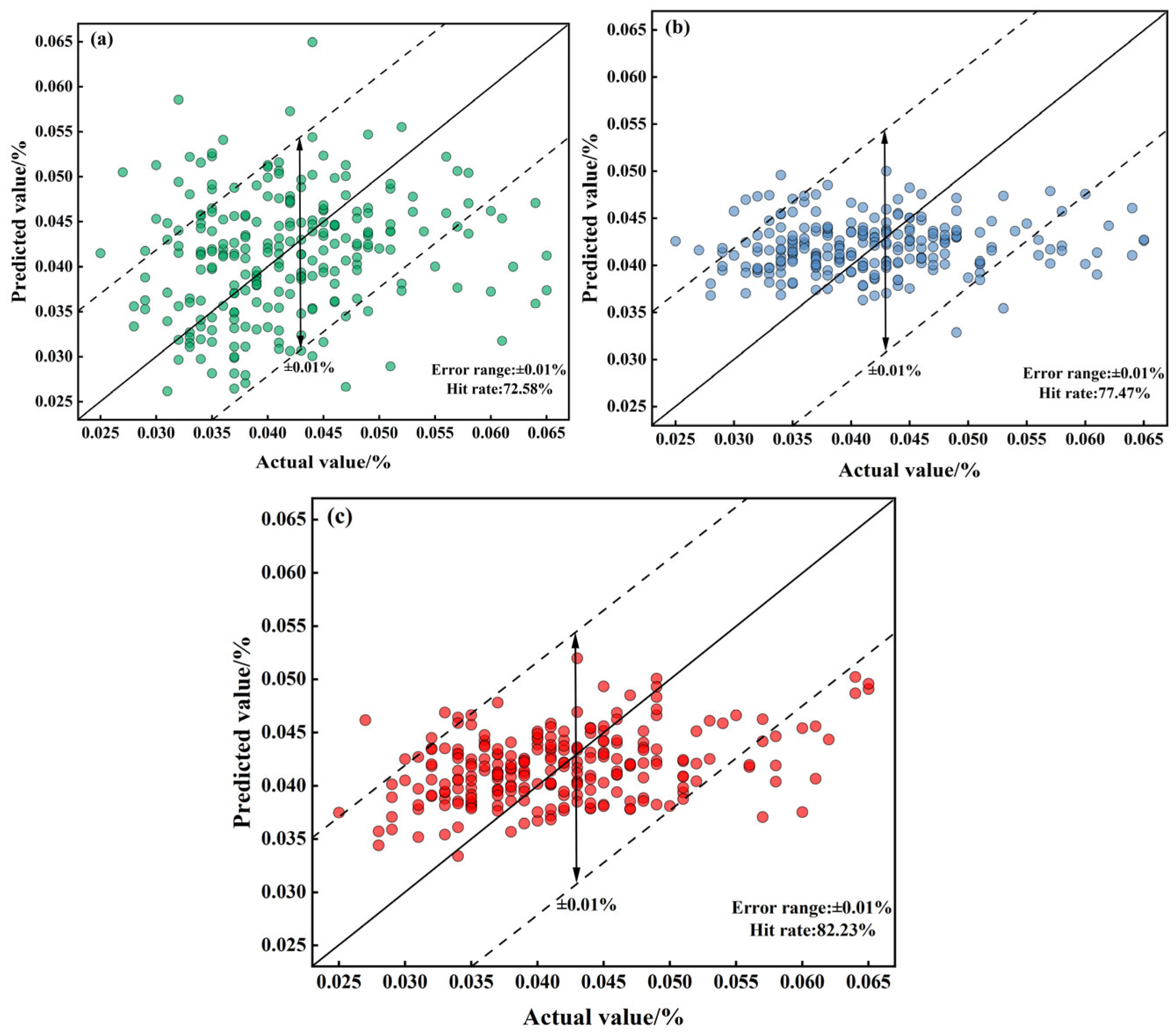

4.4.1. Analysis of Prediction Results

The predictive performance of the PI-LSTM model was verified by comparison with a mechanistic model (MM) and a purely data-driven LSTM model. For fairness, the unobservable parameters of the MM and the hyperparameters of the LSTM were also optimized on the training set with GWO. In addition, the self-attention-based convolutional parallel network (SabCP) carbon content prediction model from reference [22], the CBR-LSTM model from reference [12], and the PINN model from reference [19] were included for comparison with the proposed model. The results are shown in Table 2 and Figures 11–13.

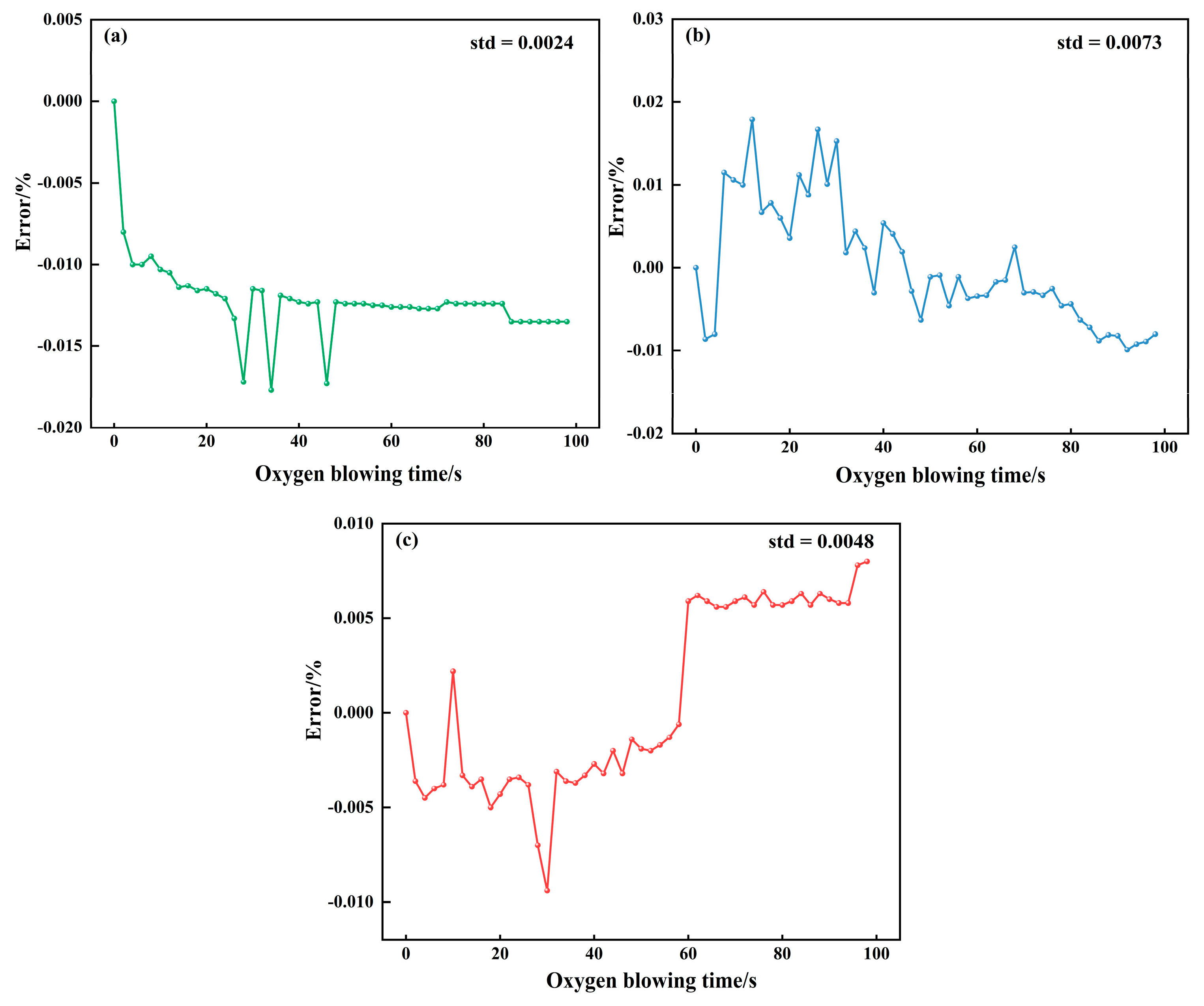

The mechanistic model predicts carbon content from the converter decarburization kinetic formula and achieves an MAE of 0.0172 and an RMSE of 0.0207. Its hit rates within ±0.005%, ±0.01%, and ±0.015% are 38.84%, 72.98%, and 89.53%, respectively. The prediction curve for a typical heat (Figure 11a) shows that, although the key parameters of the decarburization process are optimized with GWO, the model cannot adapt to the dynamic changes in real-time smelting conditions, so the predicted curve deviates markedly from the actual values. It also fails to capture the short-term fluctuations in carbon content caused by operations such as oxygen lance adjustments and bottom-blowing flow rate changes. In the error curve for the same heat (Figure 12a), the standard deviation of the MM error is only 0.0024; although the fluctuation range is small, the error sequence shows a pronounced systematic offset (errors concentrated between −0.015% and −0.020%) with no dynamic correction. This stems fundamentally from the inability of a fixed decarburization formula to match the real-time reaction state of the molten pool. The endpoint carbon scatter plot (Figure 13a) shows relatively dispersed predictions, with some points outside the ±0.01% error band. Thus, although the mechanistic model follows the overall decarburization trend, it adapts poorly to differences in smelting conditions between heats, which limits its performance in high-precision control.

The LSTM model captures temporal features with a purely data-driven approach, achieving an MAE of 0.0098 and an RMSE of 0.0134. Its hit rates within ±0.005%, ±0.01%, and ±0.015% are 45.03%, 77.47%, and 92.14%, respectively. The pure LSTM model is more accurate than the mechanistic model but has a critical flaw: it has no knowledge of physical laws. Because it is not constrained by the principle that carbon content must decrease monotonically during blowing, its predictions can show unphysical fluctuations, such as temporary increases (Figure 11b). Furthermore, as shown in Figure 12b, its prediction error is unstable and can change abruptly. Although most of its endpoint predictions are accurate (Figure 13b), it produces large errors in conditions rarely seen in the training data (e.g., very low carbon content), which is consistent with overfitting. This shows that purely data-driven models depend heavily on data quality and have limited generalization capability.

The PI-LSTM model combines the temporal modeling capability of the LSTM with decarburization mechanism constraints and achieves the best performance, with an MAE of 0.0077 and an RMSE of 0.0112. Its hit rates within ±0.005%, ±0.01%, and ±0.015% are 53.71%, 82.23%, and 95.45%, respectively. Its predictions closely track the actual measurements (Figure 11c). This follows from the dual-branch design: one branch learns from real-time data to capture process dynamics, while the other enforces physical laws so that predictions follow a realistic exponential decay consistent with the three-stage decarburization theory. The error profile (Figure 12c) has a low standard deviation (0.0048) and fluctuates randomly within a narrow band around zero, with no systematic bias. The endpoint scatter plot (Figure 13c) confirms the high precision: 82.23% of endpoint predictions fall within ±0.01% and 95.45% within ±0.015%. The model therefore balances general physical principles with the specifics of each heat, delivering accurate, reliable, and physically plausible predictions for real-time control.

Comparisons with methods from the literature show the following. Compared with the data-driven SabCP soft-sensor model [22], PI-LSTM improves the hit rate by 6.73 percentage points at ±0.01% (from 75.50% to 82.23%) and by 8.55 percentage points at ±0.015% (from 86.9% to 95.45%). This improvement stems from the integration of mechanism-based constraints, which mitigates the physical inconsistencies inherent in purely data-driven predictions. Compared with the PINN (physics-informed neural network) hybrid model [19], PI-LSTM improves the ±0.005% hit rate by 3.49 percentage points (from 50.22% to 53.71%) while achieving a low MAE of 0.0077. This is attributed to the LSTM gating mechanism, which better captures the effect of dynamic temporal features, such as oxygen lance position adjustments during the reblowing phase, on carbon content. Compared with the CBR-LSTM (case-based reasoning) hybrid model [12], PI-LSTM improves the hit rate by 29.23 percentage points at ±0.01% (from 53.00% to 82.23%) and by 23.45 percentage points at ±0.015% (from 72.00% to 95.45%). The dual-branch architecture optimizes the unobservable parameters of the mechanistic model directly instead of relying on case matching, which adapts poorly to new smelting conditions.

To verify the mechanism parameters predicted by the PI-LSTM model, Figure 14 shows the real-time evolution of the key parameters for a typical heat.

Combining

Figure 8 and

Figure 14 reveals that the real-time predicted values of αₘ and βₘ in a typical furnace cycle exhibit dynamic changes highly consistent with the process operations during the secondary blowing stage and the decarbonization kinetics. αₘ remained within the range [1.48, 1.62] throughout the process, consistently falling within the theoretical range αₘ ∈ [1, 2] reported in reference [

37]. Its trend precisely responded to operational adjustments: it rose steadily from 0 to 20 s as oxygen supply intensity increased, reflecting enhanced oxygen utilization efficiency; reaching a peak and maintaining high levels during the stable operation period from 20 to 80 s; then steadily decreasing from 80 to 100 s as the oxygen lance was raised and oxygen supply weakened, aligning with the requirements of the diffusion-controlled phase in the late blowing stage. βₘ ranged between [25.2, 36.5], fully consistent with the expected range of βₘ ∈ [20, 40] reported in reference [

19]. It exhibited a monotonically decreasing trend with decreasing carbon content, perfectly aligning with the kinetic characteristic where decarburization reaction rates slow down due to carbon mass transfer limitations in the final stage. No abnormal fluctuations contrary to process logic were observed, validating the rationality of the model predictions.
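The range and trend checks described above can be written as explicit tests. The sketch below assumes that the predicted αₘ and βₘ trajectories for one heat are available as plain lists. It checks them against the ranges cited from [37] and [19] and tests whether βₘ is non-increasing. The trajectories are illustrative values chosen inside the reported ranges, not model output, and the function names are hypothetical.

```python
from typing import Dict, Sequence, Tuple

ALPHA_RANGE = (1.0, 2.0)   # theoretical range of alpha_m cited from [37]
BETA_RANGE = (20.0, 40.0)  # expected range of beta_m cited from [19]


def within(values: Sequence[float], bounds: Tuple[float, float]) -> bool:
    """True if every value lies inside the closed interval `bounds`."""
    lo, hi = bounds
    return all(lo <= v <= hi for v in values)


def non_increasing(values: Sequence[float]) -> bool:
    """True if each value is no larger than the one before it."""
    return all(b <= a for a, b in zip(values, values[1:]))


def check_parameters(alpha: Sequence[float], beta: Sequence[float]) -> Dict[str, bool]:
    """Plausibility checks for one heat's predicted kinetic parameters."""
    return {
        "alpha_in_range": within(alpha, ALPHA_RANGE),
        "beta_in_range": within(beta, BETA_RANGE),
        "beta_monotone": non_increasing(beta),
    }


# Illustrative trajectories (not model output): alpha rises, plateaus, falls;
# beta decreases as carbon content drops.
alpha = [1.48, 1.55, 1.62, 1.62, 1.50]
beta = [36.5, 33.0, 30.1, 27.4, 25.2]
print(check_parameters(alpha, beta))
```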

4.4.2. Impact of Measurement Errors on the Model

In the carbon content prediction model for the converter reblowing stage, the carbon content at the TSC time and the molten steel weight are two essential input parameters. Measurement errors in these parameters directly affect the accuracy of the model's initial inputs and the reliability of subsequent predictions. In industry, TSC carbon content is measured with a sublance, which introduces systematic errors. Random errors may also arise from variations in sublance insertion depth and from inhomogeneity of the molten pool during smelting. As a result, TSC carbon content is one of the single-valued inputs to which the predictions are most sensitive. Industrial molten steel weight is calculated indirectly from the charged amounts of pig iron and scrap. It is therefore susceptible to weighing errors during charging and is among the initial material conditions most prone to inaccuracy.

The injected measurement errors were chosen to be both realistic and graded. A 5% error represents the mild-to-moderate deviations common in industrial practice, whereas a 10% error represents moderate-to-extreme deviations. This gradient delineates the boundaries of the model's robustness. The errors are drawn from a normal distribution to ensure randomness, as expressed in Equations (26) and (27).

In Equations (26) and (27), the original TSC carbon content and the original molten steel weight are each perturbed by a normally distributed random variable. The magnitude of the perturbation corresponds to either 5% or 10% noise, and the results are the TSC carbon content and molten steel weight after noise addition.
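Equations (26) and (27) are not reproduced in this text. The sketch below assumes the common multiplicative form x̃ = x(1 + ε), with ε ~ N(0, σ²), σ = 0.05 for 5% noise, and σ = 0.10 for 10% noise. Both this form and the helper name `add_relative_noise` are assumptions for illustration, as are the input values.

```python
import random
import statistics
from typing import List, Sequence


def add_relative_noise(values: Sequence[float], level: float, seed: int = 0) -> List[float]:
    """Perturb each value as x * (1 + eps), with eps ~ N(0, level**2).

    Assumed reading of Eqs. (26)-(27): level = 0.05 for "5% noise" and
    level = 0.10 for "10% noise". The seed makes retests reproducible.
    """
    if level < 0:
        raise ValueError("noise level must be non-negative")
    rng = random.Random(seed)
    return [x * (1.0 + rng.gauss(0.0, level)) for x in values]


# Hypothetical inputs for three heats (illustration only).
c_tsc = [0.35, 0.42, 0.28]            # TSC carbon content, wt%
steel_weight = [290.0, 305.0, 298.0]  # molten steel weight, t

noisy_c = add_relative_noise(c_tsc, 0.05, seed=1)
# Combined-noise case: independent draws for each input (different seeds).
noisy_w = add_relative_noise(steel_weight, 0.05, seed=2)
print(noisy_c, noisy_w)

# Sanity check: for many draws of 1.0, the spread should be close to `level`.
draws = add_relative_noise([1.0] * 20000, 0.10, seed=3)
print(round(statistics.pstdev(draws), 3))  # close to 0.10
```

Fixing the seeds keeps the noisy test set identical across repeated evaluations, so differences between runs reflect the model and not the noise draw.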

To examine the stability of the PI-LSTM model under noisy conditions, noise of different types and intensities was introduced into the model inputs and the model was retested. The results are presented in Table 3.

Adding 5% noise to either the TSC carbon content or the molten steel weight causes only a limited decline in accuracy, which demonstrates the model's reliability under normal industrial tolerances. With 10% noise on the TSC carbon content, the MAE increased from 0.0077 to 0.0092 (a 19.5% relative increase). The ±0.01% hit rate decreased from 82.23% to 75.67% (an 8.0% relative decrease). In contrast, 10% noise on the molten steel weight increased the MAE only to 0.0088 (a 14.3% relative increase) and reduced the ±0.01% hit rate to 77.85% (a 5.3% relative decrease). The difference arises because the TSC value is the initial carbon content, so its error propagates continuously through the carbon integration model and the mechanism formula. Deviations caused by an erroneous molten steel weight, by contrast, are partially corrected in real time. The mechanism branch (based on the decarburization formula) performs this correction through the accumulated oxygen consumption, which mitigates the impact of the error.
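The percentages in this paragraph are relative changes with respect to the noise-free results. They differ from the percentage-point differences used in the literature comparison. The short check below recomputes them from the values stated in the text and uses an illustrative helper name.

```python
def relative_change(before: float, after: float) -> float:
    """Signed relative change, in percent of the baseline value."""
    return 100.0 * (after - before) / before


BASELINE_MAE, BASELINE_HIT = 0.0077, 82.23  # noise-free PI-LSTM results
CASES = {  # 10% noise on a single input: (MAE, ±0.01% hit rate)
    "TSC carbon content": (0.0092, 75.67),
    "molten steel weight": (0.0088, 77.85),
}

for name, (mae_noisy, hit_noisy) in CASES.items():
    print(f"{name}: MAE {relative_change(BASELINE_MAE, mae_noisy):+.1f}%, "
          f"hit rate {relative_change(BASELINE_HIT, hit_noisy):+.1f}% "
          f"({hit_noisy - BASELINE_HIT:+.2f} pp)")
# TSC carbon content: MAE +19.5%, hit rate -8.0% (-6.56 pp)
# molten steel weight: MAE +14.3%, hit rate -5.3% (-4.38 pp)
```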

When 5% noise was added simultaneously to both the TSC carbon content and the molten steel weight, the ±0.01% hit rate remained at 78.14%, and errors stayed within acceptable limits. With 10% noise on both inputs, the MAE was 0.0102, still substantially lower than the mechanism model's MAE of 0.0172. The ±0.015% hit rate of 90.27% continued to meet industrial requirements.

The strong robustness of PI-LSTM against measurement errors stems from two factors. First, MAE is less sensitive to extreme errors than squared-error measures, so input errors in individual heats do not cause a significant degradation in accuracy. Second, even when the model inputs contain errors, the mechanism branch corrects deviations in the data-driven branch according to physical laws. Examples are the monotonic decrease of carbon content during the reblowing phase and decarburization rates that conform to the three-stage theory. This keeps the predictions consistent with metallurgical principles.
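The first factor rests on a general property of the two metrics. A single large error raises MAE linearly but raises RMSE through its square. The toy comparison below uses hypothetical per-heat absolute errors, with one heat affected by a large input error. It only illustrates this property and does not reproduce results from Table 3.

```python
import math
from typing import Sequence


def mae(errors: Sequence[float]) -> float:
    """Mean absolute error of a list of per-heat errors."""
    return sum(abs(e) for e in errors) / len(errors)


def rmse(errors: Sequence[float]) -> float:
    """Root-mean-square error of a list of per-heat errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Hypothetical absolute errors (wt% C) for ten heats; in the second set one
# heat is hit by a large input error.
clean = [0.005] * 10
with_outlier = [0.005] * 9 + [0.05]

for name, metric in (("MAE", mae), ("RMSE", rmse)):
    growth = metric(with_outlier) / metric(clean)
    print(f"{name}: {metric(clean):.4f} -> {metric(with_outlier):.4f} (x{growth:.2f})")
# MAE: 0.0050 -> 0.0095 (x1.90)
# RMSE: 0.0050 -> 0.0165 (x3.30)
```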

4.4.3. Model Generalization Analysis

In converter steelmaking, process parameters and composition targets vary across steel grades. A key criterion for a model's industrial applicability is whether it maintains stable prediction accuracy when applied to different steel grades on the same equipment. To evaluate the generalization capability of the PI-LSTM model, the weathering steel SPA-H was selected as the test subject. Its process characteristics differ from those of the original study subject, the low-carbon, low-phosphorus steel SDC. To ensure a fair test, the data preprocessing workflow was identical to that used for SDC steel: IQR outlier removal, Min-Max normalization, sliding-window time-series reconstruction, and an 8:2 random split into training and test sets. The processed dataset comprised 1012 heats. Statistics for the key input parameters of SPA-H steel are shown in Table 4.
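The four preprocessing steps can be sketched as follows. Several details are assumptions not stated in the text: Tukey fences with k = 1.5 for the IQR filter, a window length of 10 taken from the GWO-optimized input sequence length, one-step-ahead targets, and a split at heat level. All function names are hypothetical. In practice, the Min-Max bounds would normally be fitted on the training heats only.

```python
import random
import statistics
from typing import List, Sequence, Tuple


def iqr_filter(values: Sequence[float], k: float = 1.5) -> List[float]:
    """Drop values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if lo <= v <= hi]


def min_max(values: Sequence[float]) -> List[float]:
    """Scale values to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0
    return [(v - lo) / span for v in values]


def sliding_windows(series: Sequence[float],
                    window: int = 10) -> List[Tuple[List[float], float]]:
    """Pair each run of `window` consecutive steps with the following step."""
    return [(list(series[i:i + window]), series[i + window])
            for i in range(len(series) - window)]


def split_heats(heat_ids: Sequence[str], train_frac: float = 0.8,
                seed: int = 0) -> Tuple[List[str], List[str]]:
    """Random 8:2 split at heat level, so one heat never straddles both sets."""
    ids = list(heat_ids)
    random.Random(seed).shuffle(ids)
    cut = round(train_frac * len(ids))
    return ids[:cut], ids[cut:]


heats = [f"H{i:04d}" for i in range(1012)]  # hypothetical heat identifiers
train_ids, test_ids = split_heats(heats)
print(len(train_ids), len(test_ids))  # 810 202
```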

The smelting process parameters for SPA-H steel, such as oxygen flow rate and oxygen lance height, differ from those for SDC steel in two main respects. The maximum oxygen flow rate is higher, to meet the decarburization requirements of weathering steel, and the initial carbon content is slightly higher. These differences reflect the real industrial scenario in which different steel grades are produced on the same equipment. They therefore test the model's adaptability to shifts in parameter distributions.

For the 1012 heats of SPA-H steel, the PI-LSTM model structure (dual-branch network), the hyperparameter optimization method (GWO), and the loss function were kept the same as for SDC steel. After independent training, the optimized SPA-H model was evaluated on its test set. Table 5 compares its prediction results with those of the SDC model.
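GWO is named here as the hyperparameter optimizer but is not spelled out in this section. The following is a minimal sketch of the standard grey wolf optimizer. It keeps the three best positions found so far as alpha, beta, and delta, shrinks the coefficient a linearly from 2 to 0, and moves each wolf to the average of the three leader-guided positions. The toy objective is a stand-in for the expensive step of training PI-LSTM and returning a validation loss. The function names, bounds, and pack settings are assumptions.

```python
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def gwo(objective: Callable[[Vector], float],
        bounds: Sequence[Tuple[float, float]],
        n_wolves: int = 12, n_iter: int = 60,
        seed: int = 0) -> Tuple[Vector, float]:
    """Minimize `objective` inside the box `bounds` with the grey wolf optimizer."""
    if n_wolves < 3:
        raise ValueError("GWO needs at least three wolves (alpha, beta, delta)")
    rng = random.Random(seed)
    wolves = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_wolves)]
    elite: List[Tuple[float, Vector]] = []  # best-so-far alpha, beta, delta
    for t in range(n_iter):
        # Evaluate the pack and keep the three best positions seen so far.
        elite = sorted(elite + [(objective(w), list(w)) for w in wolves],
                       key=lambda p: p[0])[:3]
        leaders = [pos for _, pos in elite]
        a = 2.0 - 2.0 * t / n_iter  # shrinks linearly from 2 towards 0
        new_wolves = []
        for x in wolves:
            new_x = []
            for d, (lo, hi) in enumerate(bounds):
                pulls = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a  # |A| > 1 explores, < 1 exploits
                    C = 2.0 * rng.random()
                    pulls.append(leader[d] - A * abs(C * leader[d] - x[d]))
                # Average the three leader-guided positions, then clip to the box.
                new_x.append(min(max(sum(pulls) / 3.0, lo), hi))
            new_wolves.append(new_x)
        wolves = new_wolves
    elite = sorted(elite + [(objective(w), list(w)) for w in wolves],
                   key=lambda p: p[0])[:3]
    return elite[0][1], elite[0][0]


# Toy stand-in for "train PI-LSTM, return validation loss"; optimum at TARGET.
TARGET = (0.5, -1.0, 2.0)


def toy_loss(x: Vector) -> float:
    return sum((xi - ti) ** 2 for xi, ti in zip(x, TARGET))


pos, fit = gwo(toy_loss, bounds=[(-5.0, 5.0)] * 3, n_wolves=15, n_iter=100, seed=42)
print([round(v, 3) for v in pos], fit)
```

For the three hyperparameters reported in the conclusions, each wolf could encode, for example, log10 of the learning rate, the hidden-layer neuron count, and the input sequence length. The two integers would be rounded before training. This decoding scheme is likewise a hypothetical choice.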

As shown in Table 5, PI-LSTM applied to SPA-H steel achieved an MAE of 0.0089 and an RMSE of 0.0125 on the SPA-H test data, with a ±0.01% hit rate of 79.50%. This performance is slightly lower than on the original SDC data but still meets industrial accuracy requirements. The result reduces dependence on data from a single steel grade and indicates potential for application across multiple steel grades on the same equipment.

4.4.4. Scope of Model Applicability

The application scope and validation prerequisites of the PI-LSTM model developed in this study are defined based on the following conditions:

Equipment Boundary: The model is applicable to converters with a nominal capacity of 300 t. For converters smaller than 120 t or larger than 500 t, the model hyperparameters must be re-optimized using GWO.

Steel Grade Boundary: The model is suitable for low-carbon steels, including low-carbon low-phosphorus steels (SDC type) and weather-resistant steels (SPA-H type).

Data Boundary: The application requires the availability of both single-value process data and time-series data, supported by flue gas analysis system data.

Model Validation and Comparison: Validation and comparison with other models (e.g., MM, LSTM) must be performed under consistent equipment specifications (300 t converter) and similar steel grade compositions (low-carbon steel). This avoids distorted accuracy comparisons caused by differences in equipment or steel grade.
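The boundaries above can be encoded as a pre-deployment check. The sketch below is hypothetical in all its names. It also makes one interpretive assumption: capacities between 120 t and 500 t other than 300 t are flagged as not yet validated, because the text does not state how they should be treated.

```python
from dataclasses import dataclass
from typing import List

VALIDATED_CAPACITY_T = 300.0
VALIDATED_GRADES = {"SDC", "SPA-H"}


@dataclass
class Deployment:
    capacity_t: float            # nominal converter capacity, t
    steel_grade: str
    has_single_value_data: bool  # e.g. TSC carbon content, molten steel weight
    has_time_series_data: bool   # e.g. oxygen flow rate, lance height
    has_flue_gas_data: bool      # flue gas analysis system available


def applicability_issues(dep: Deployment) -> List[str]:
    """Return the applicability boundaries violated by a deployment (empty if none)."""
    issues = []
    if dep.capacity_t < 120 or dep.capacity_t > 500:
        issues.append("capacity outside 120-500 t: re-optimize hyperparameters with GWO")
    elif dep.capacity_t != VALIDATED_CAPACITY_T:
        issues.append("capacity not validated (model validated on a 300 t converter)")
    if dep.steel_grade not in VALIDATED_GRADES:
        issues.append("steel grade outside validated set (SDC, SPA-H)")
    if not (dep.has_single_value_data and dep.has_time_series_data
            and dep.has_flue_gas_data):
        issues.append("required single-value, time-series or flue gas data missing")
    return issues


print(applicability_issues(Deployment(300, "SDC", True, True, True)))  # []
```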

5. Conclusions

This paper addresses real-time forecasting of carbon content during the converter reblowing phase. A PI-LSTM model that integrates data-driven and mechanism-constrained approaches was developed for this purpose, providing a basis for dynamic adjustment of the steelmaking process. The main conclusions are as follows:

- (1) A dual-branch PI-LSTM structure was proposed. The data-driven branch uses an LSTM to capture the dynamics of sequential operating parameters, such as oxygen flow rate and oxygen lance height. It predicts both the carbon content and the key parameters of the decarburization kinetics. The mechanism-based branch computes the carbon content under mechanism constraints from these key parameters and the decarburization formulas. Together, the two branches combine data fitting with mechanism constraints. This addresses both the insufficient physical consistency of purely data-driven models and the limited accuracy of mechanism-based models.

- (2) The grey wolf optimizer (GWO) was used to optimize the LSTM hyperparameters, yielding a learning rate of 0.0015, 125 hidden-layer neurons, and an input sequence length of 10. The joint loss function converged to 0.0142, balancing the data-driven loss (0.0057) and the mechanism-constrained loss (0.0085). This improved both convergence speed and prediction stability.

- (3) Tests on converter data showed that the MAE of PI-LSTM was 0.0077. This is a 55.2% reduction relative to the mechanism model (0.0172) and a 21.4% reduction relative to LSTM (0.0098). The hit rate for endpoint carbon content within ±0.01% reached 82.23%, outperforming the comparison models. The prediction curve closely tracked the dynamic changes in carbon content, consistent with the three-stage decarburization theory.

- (4) The PI-LSTM model shows the robustness and cross-grade generalization required in industrial applications. First, under common field measurement errors, it maintains a hit rate above 90% within ±0.015%, withstanding disturbances such as sublance measurement deviations and charging-weight inaccuracies. Second, when transferred from the low-carbon, low-phosphorus steel SDC to the weathering steel SPA-H, which has markedly different process characteristics, its MAE increases only from 0.0077 to 0.0089, and the ±0.01% hit rate remains at 79.50%. These properties allow the model to adapt to complex industrial conditions, including different steel grades and sensor noise.
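The figures in conclusions (2) and (3) can be cross-checked arithmetically. The sketch below assumes that the joint loss is an unweighted sum of the two loss terms (λ = 1). The text does not state the weighting, but this assumption is consistent with 0.0057 + 0.0085 = 0.0142. The helper names are illustrative.

```python
def joint_loss(l_data: float, l_mech: float, lam: float = 1.0) -> float:
    """Joint objective as a weighted sum; lam = 1.0 is an assumption."""
    return l_data + lam * l_mech


def reduction(reference: float, new: float) -> float:
    """Relative reduction of `new` versus `reference`, in percent."""
    return 100.0 * (reference - new) / reference


print(round(joint_loss(0.0057, 0.0085), 4))  # 0.0142
print(round(reduction(0.0172, 0.0077), 1))   # 55.2 (vs. mechanism model)
print(round(reduction(0.0098, 0.0077), 1))   # 21.4 (vs. LSTM)
```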

The PI-LSTM model developed in this study demonstrates key advantages, including high real-time prediction accuracy, enhanced interpretability through the integration of physical mechanisms, and strong practical applicability in industrial settings. The model exhibits good robustness against measurement errors and variations in steel grades, providing reliable support for real-time closed-loop control.

However, the model also has certain limitations. Firstly, its training and validation are based on data from a specific 300-ton converter at a single steel plant and limited steel grades (SDC and SPA-H). Its applicability to larger converters and steel grades with higher carbon content requires further verification. Secondly, although the model reduces data dependency through mechanism-based constraints, it still requires sufficient high-quality historical data for training.

Future work will focus on collecting more diverse industrial data to evaluate the model’s generalization capability and exploring integration with sensor error estimation techniques to further enhance its stability in complex industrial environments.