Performance Evaluation of Similarity Metrics in Transfer Learning for Building Heating Load Forecasting

Abstract

1. Introduction

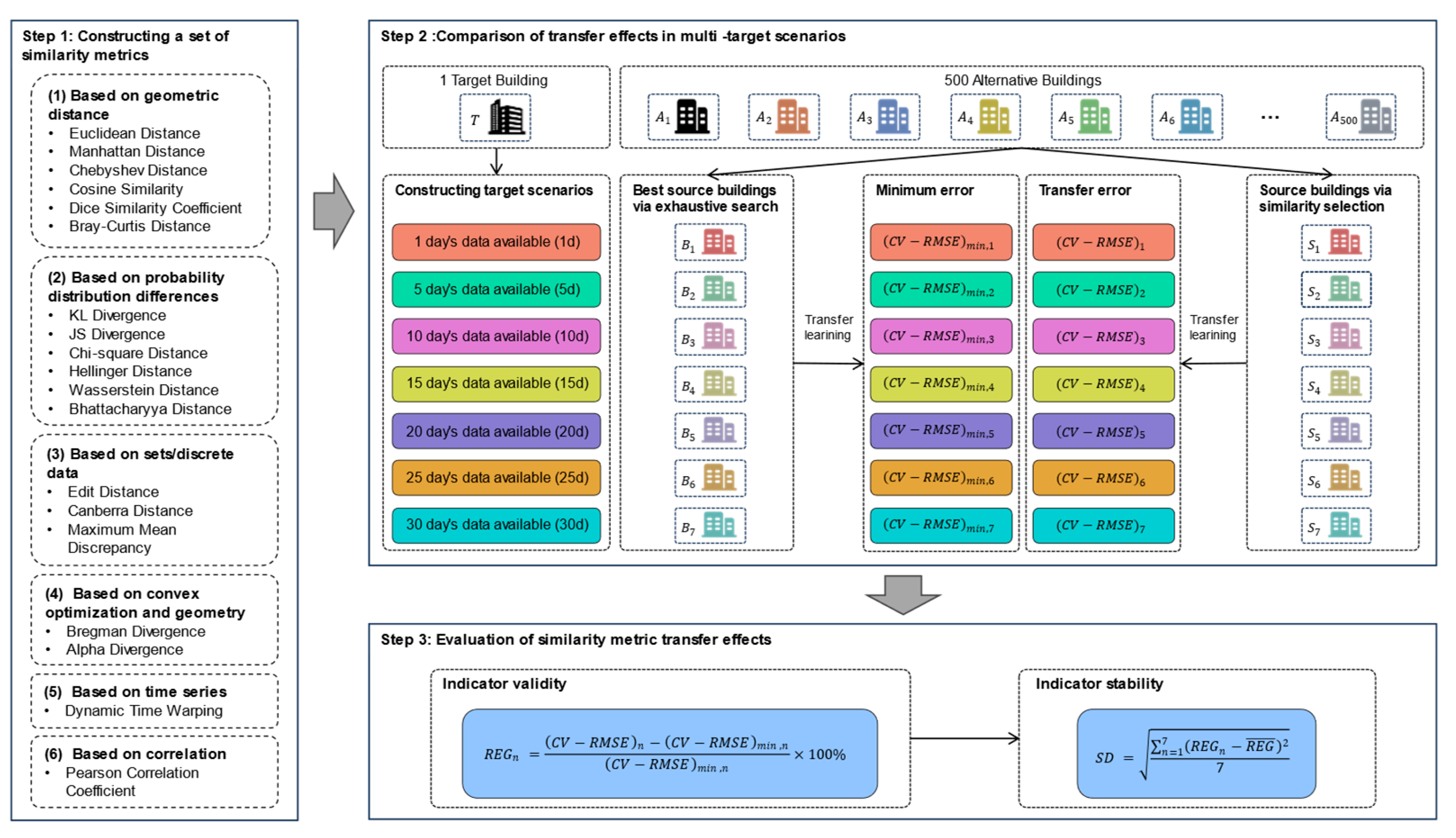

2. Methodology

2.1. Constructing a Set of Similarity Metrics

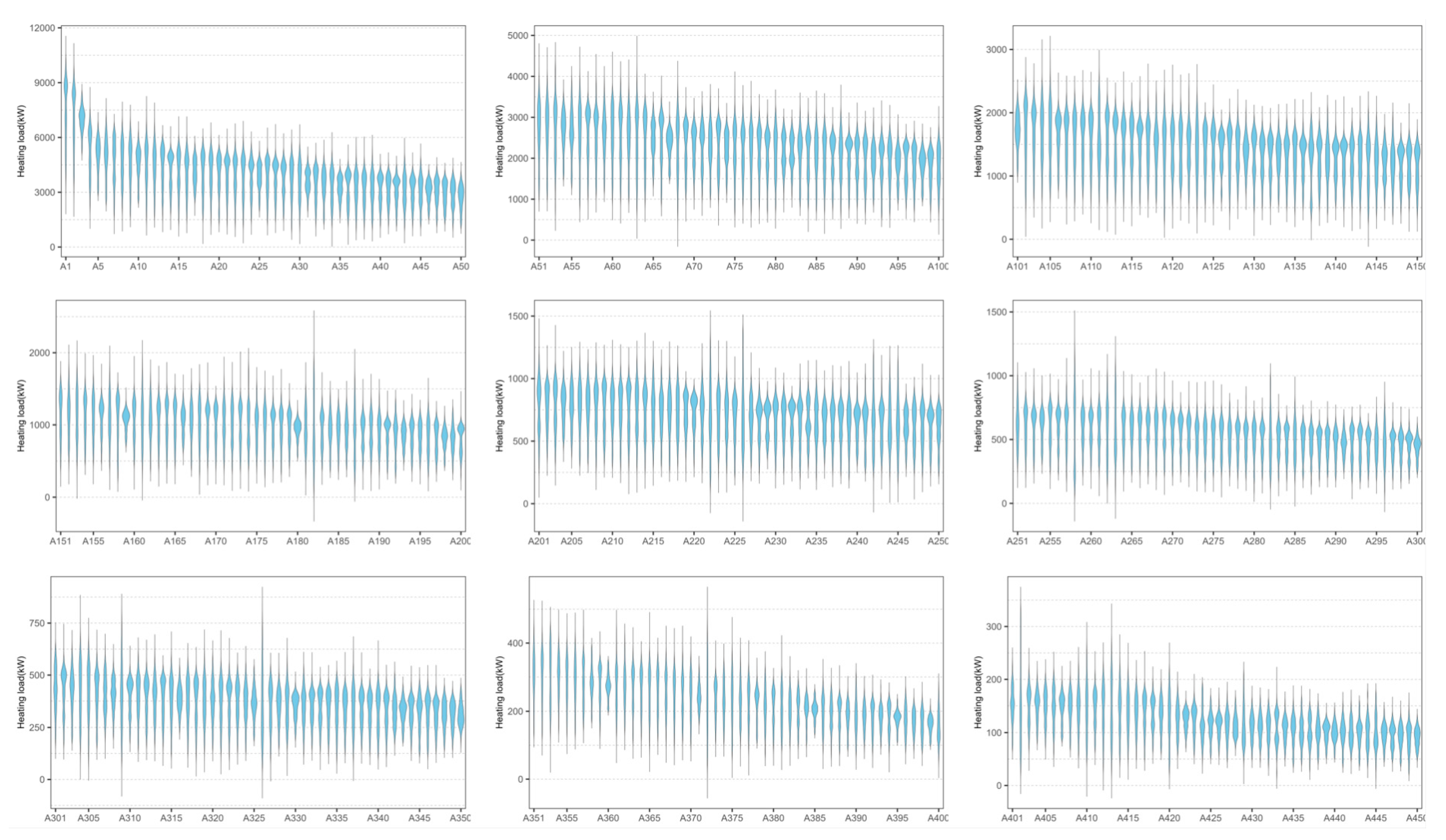

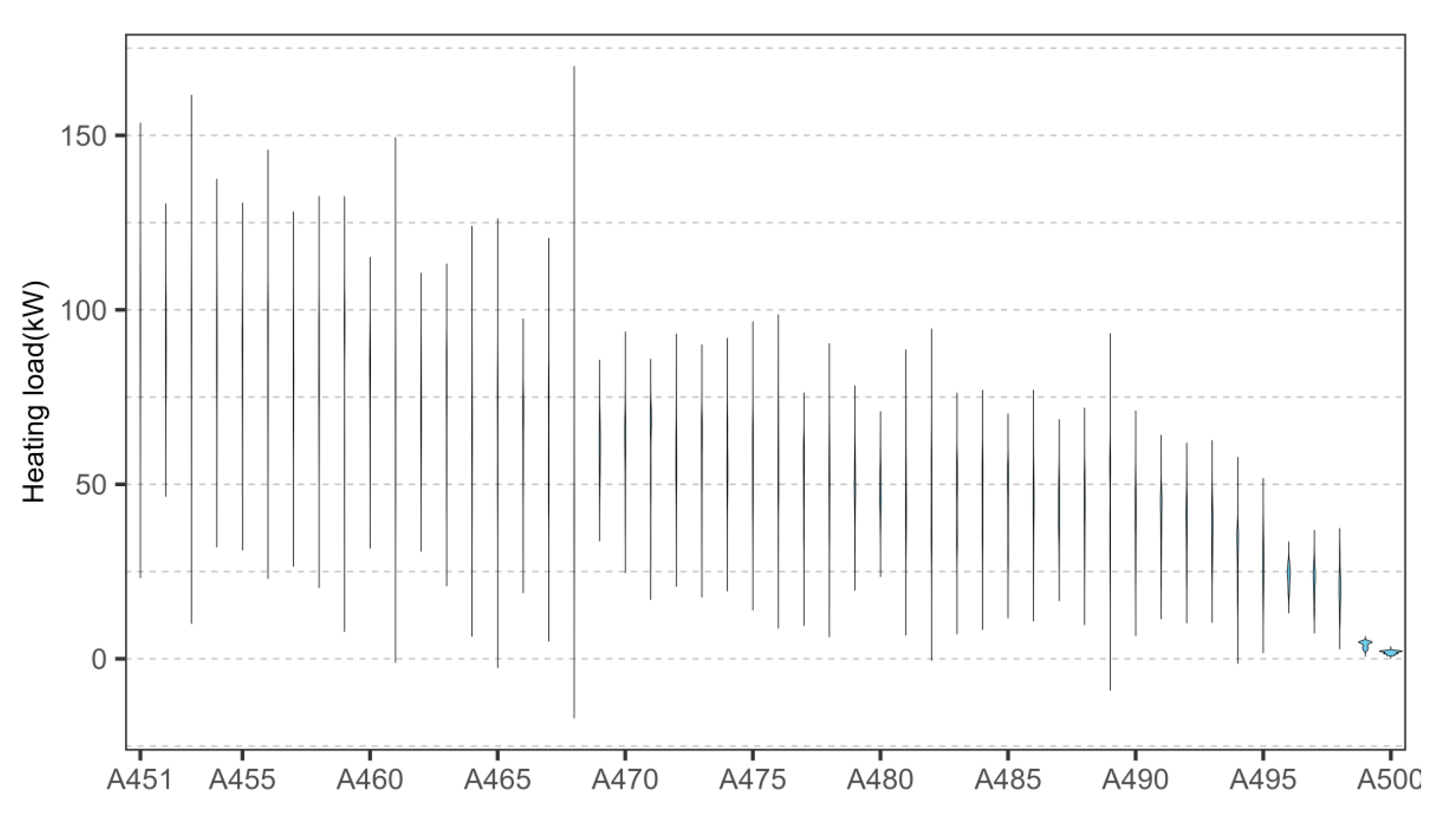

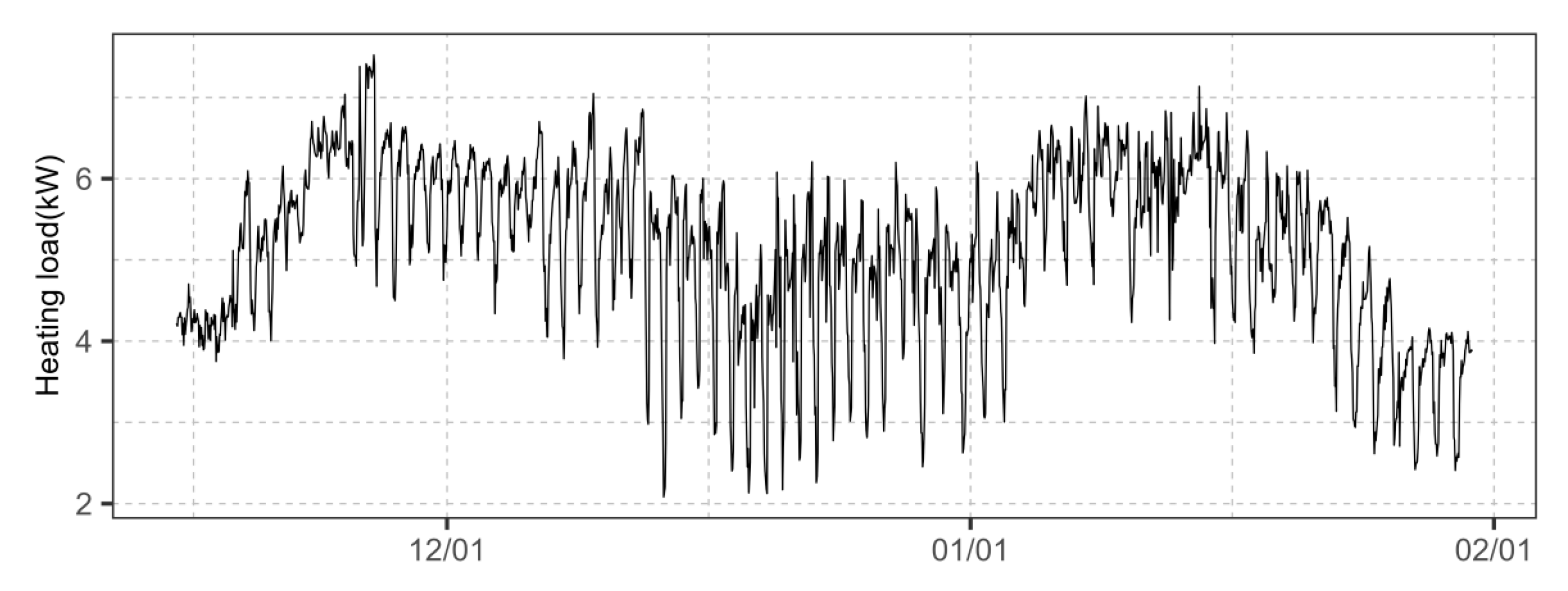

2.2. Comparison of Transfer Effects in Multi-Objective Scenarios

2.2.1. Best Source Buildings via Exhaustive Search

- ① Pre-train using the 500 candidate buildings to generate 500 pre-trained models.

- ② For each target scenario, fine-tune these pre-trained models to obtain 500 target task models specific to that scenario, resulting in a total of 7 × 500 models for the 7 target scenarios.

- ③ Test the target task models for each target scenario, select the model with the smallest error as the optimal target task model, and record its corresponding source building as the optimal source building for that scenario, ultimately determining the best source buildings for the 7 different scenarios.
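Steps ① to ③ amount to a brute-force argmin over all candidate source buildings. The sketch below shows that control flow; `pretrain`, `finetune`, and `evaluate` are hypothetical placeholders for the paper's training and testing routines, which are not specified in this section.

```python
from typing import Callable, Dict, Hashable, Sequence, Tuple


def exhaustive_source_search(
    candidates: Sequence[Hashable],
    scenarios: Sequence[Hashable],
    pretrain: Callable[[Hashable], object],          # hypothetical: source building -> model
    finetune: Callable[[object, Hashable], object],  # hypothetical: (model, scenario) -> model
    evaluate: Callable[[object, Hashable], float],   # hypothetical: (model, scenario) -> test error
) -> Dict[Hashable, Tuple[Hashable, float]]:
    """Return {scenario: (best source building, smallest test error)}."""
    # Step 1: one pre-trained model per candidate building (500 in the paper).
    pretrained = {b: pretrain(b) for b in candidates}
    best = {}
    for s in scenarios:
        # Step 2: fine-tune every pre-trained model on this target scenario.
        errors = {b: evaluate(finetune(m, s), s) for b, m in pretrained.items()}
        # Step 3: the source whose target task model has the smallest error wins.
        b_star = min(errors, key=errors.get)
        best[s] = (b_star, errors[b_star])
    return best
```

Because `finetune` runs once per (scenario, candidate) pair, the paper's setting of 7 scenarios and 500 candidates requires 7 × 500 = 3,500 fine-tuning runs.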

2.2.2. Source Building Selection Using Similarity Metrics

- ① In each target scenario, use each of the 20 similarity metrics to select the source building it rates as most similar to the target, giving 20 selections per scenario and 7 × 20 selected source buildings in total.

- ② Pre-train using these selected source buildings to generate 20 pre-trained models per target scenario, for a total of 7 × 20 pre-trained models.

- ③ Fine-tune these pre-trained models for each target scenario to form 20 target task models specific to that scenario, for a total of 7 × 20 target task models.

- ④ Test the target task models for each target scenario to assess the transfer effects of each metric in each target scenario.
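Compared with the exhaustive search, only the selection step changes: each metric picks the candidate it scores as closest to the target, and only those picks are trained. A minimal sketch with two of the 20 metrics, assuming each building is summarized by a numeric feature vector of equal length (the paper's feature representation is not given in this section); `select_sources` and `METRICS` are hypothetical names.

```python
import numpy as np


def euclidean(x, y):
    return float(np.linalg.norm(x - y))


def cosine(x, y):
    return float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))


# metric name -> (function, True if a larger value means "more similar")
METRICS = {"Euclidean": (euclidean, False), "Cosine": (cosine, True)}


def select_sources(target, candidates, metrics=METRICS):
    """For each metric, return the ID of the candidate it rates most similar to the target."""
    chosen = {}
    for name, (fn, higher_is_better) in metrics.items():
        scores = {bid: fn(target, feats) for bid, feats in candidates.items()}
        pick = max if higher_is_better else min  # distances: smallest; similarities: largest
        chosen[name] = pick(scores, key=scores.get)
    return chosen


target = np.array([1.0, 2.0, 3.0])
candidates = {"A": np.array([2.0, 4.0, 6.0]), "B": np.array([1.1, 2.1, 2.9])}
print(select_sources(target, candidates))  # {'Euclidean': 'B', 'Cosine': 'A'}
```

In this toy case candidate A is a scaled copy of the target, so cosine similarity (which ignores magnitude) prefers it, while Euclidean distance prefers the closer-in-magnitude candidate B. Different metrics can therefore hand different source buildings to the fine-tuning stage.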

2.3. Evaluation of Similarity Metric Transfer Effects

2.3.1. Effectiveness Evaluation: Relative Error Gap (REG)

2.3.2. Stability Evaluation: Standard Deviation (SD)

3. Results

3.1. Transfer Results of Different Similarity Metrics in Different Target Scenarios

3.2. Classification of Metrics Based on Source Domain Selection Results

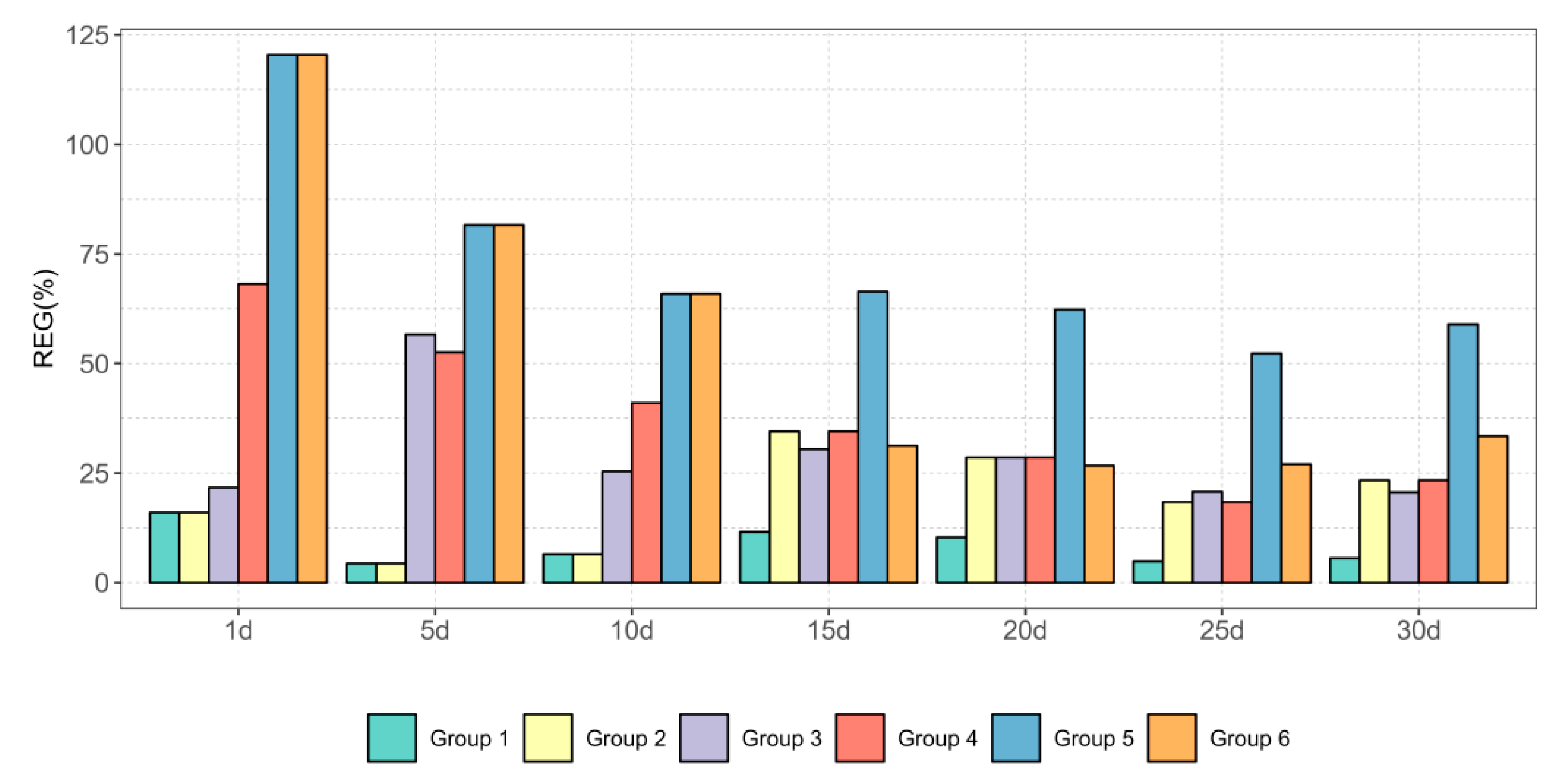

3.3. REG of Each Group’s Transfer Effects Compared to the Best Transfer Effects

4. Conclusions

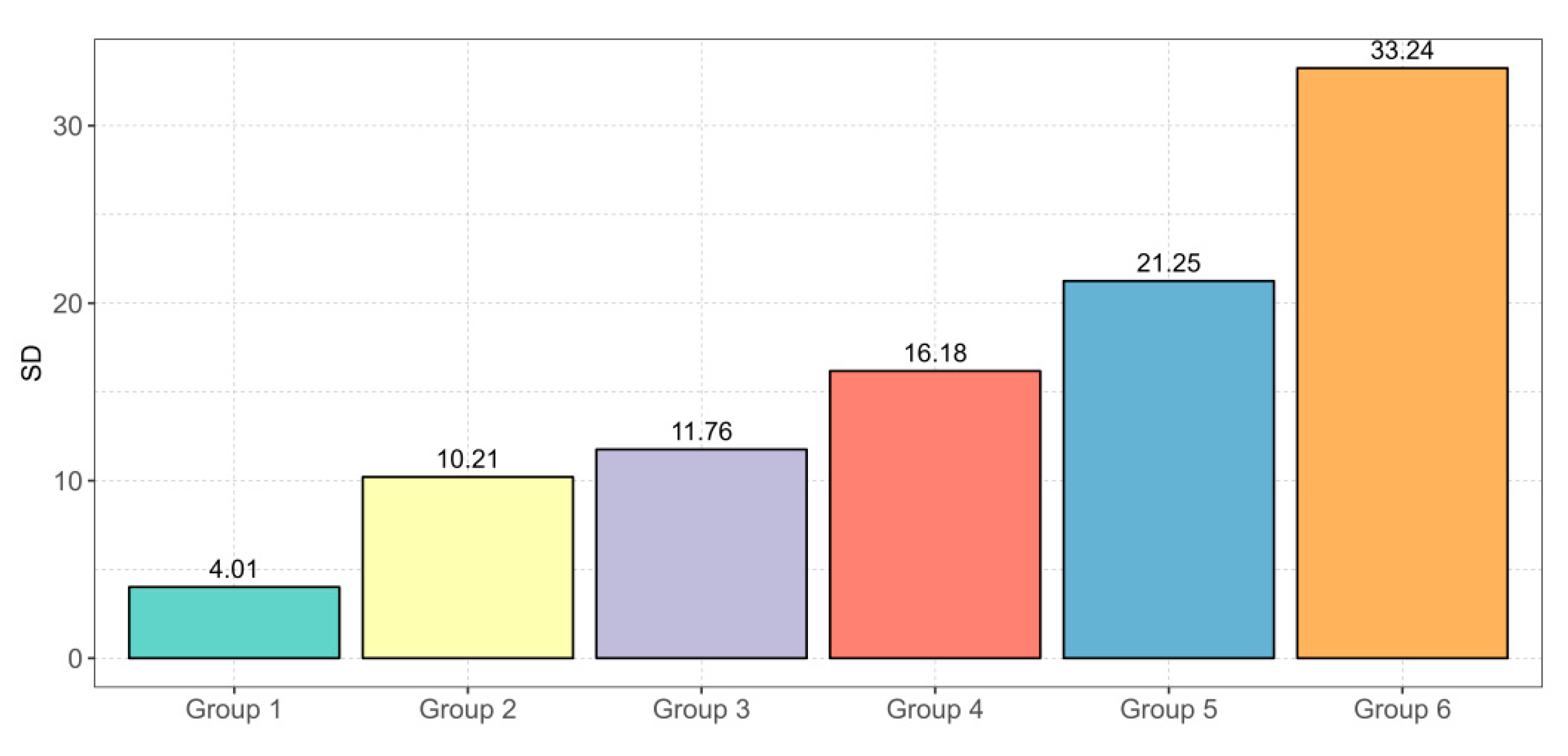

- The effectiveness of transfer learning differs markedly depending on which similarity metric is used to select the source building. The metrics in Group 1 (such as Euclidean distance) have low REG values (an average of 5%) in most scenarios, with transfer effects close to optimal. In contrast, metrics in Groups 4 to 6 (such as Bhattacharyya distance) have high REG values (an average of 15%), exhibiting poor performance and stability.

- The stability evaluation shows that Group 1 metrics not only achieve low REG values but also maintain a low standard deviation (an average of 2%), indicating superior stability. The metrics in Groups 2 and 3 (Chebyshev distance and cosine similarity) perform well in specific scenarios but have a larger standard deviation (an average of 5%), indicating fluctuating performance. Metrics in Groups 4 to 6 have both high REG values and high standard deviations (an average of 8%), indicating poor stability.

- As the time span of the target scenarios increases from 1 to 30 days, some metrics (such as cosine similarity in Group 3) change the source building they select. Cosine similarity has a low REG value for short time spans (an average of 6%), but once the time span reaches 10 to 30 days, its REG value rises to 10%. This may indicate that the distribution characteristics of the data change over time, which in turn changes the source building the metric selects.

- The underlying reason for the superior performance of Group 1 metrics lies in their alignment with the data characteristics of the building heating load forecasting problem. Distance and distribution metrics effectively capture the geometric and statistical similarities in the multi-variate input features that are most relevant for the regression model. This suggests that for transfer learning in building energy forecasting, metrics that prioritize overall magnitude and distribution alignment are more reliable than those focused solely on trend similarity or distribution overlap.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Category | Metric | Description |
|---|---|---|
| Based on geometric distance | Euclidean Distance | Measures the straight-line distance between two points in space. |
| | Manhattan Distance | Sums the absolute differences between points along each dimension. |
| | Chebyshev Distance | Takes the largest absolute difference between the points along any single dimension. |
| | Cosine Similarity | Compares the direction of vectors, not their magnitude. |
| | Dice Similarity Coefficient | Compares the overlap between two sets. |
| | Bray–Curtis Distance | Divides the sum of absolute differences by the sum of all values in both vectors. |
| Based on probability distribution differences | KL Divergence | Measures how one probability distribution diverges from a second, reference distribution. |
| | JS Divergence | A symmetric, bounded variant of KL divergence: the average KL divergence of each distribution from their mixture. |
| | Chi-square Distance | Sums the squared differences between corresponding bins, each divided by the sum of the two bin values. |
| | Hellinger Distance | Measures the difference between two probability distributions via the square roots of their probabilities. |
| | Wasserstein Distance | Measures the minimum cost of transporting probability mass to turn one distribution into the other. |
| | Bhattacharyya Distance | Measures the overlap between two probability distributions (negative logarithm of the Bhattacharyya coefficient). |
| Based on sets/discrete data | Edit Distance | Counts the minimum number of insertions, deletions, and substitutions needed to transform one sequence into another. |
| | Canberra Distance | Sums the absolute differences of corresponding elements, each divided by the sum of their absolute values. |
| | Maximum Mean Discrepancy (MMD) | Measures the distance between the mean embeddings of two distributions in a kernel feature space. |
| Based on convex optimization and geometry | Bregman Divergence | Measures the gap between a convex function at one point and its first-order (tangent) approximation taken at the other point. |
| | Alpha Divergence | A family of divergences indexed by a parameter α that includes KL divergence as a limiting case. |
| Based on time series | Dynamic Time Warping (DTW) | Aligns and compares time series data, accounting for time shifts. |
| Based on correlation | Pearson Correlation Coefficient | Measures the linear relationship between two variables. |
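Most of the metrics in the table above have short closed forms. The NumPy sketch below implements a subset using common textbook conventions (for example, the 1/√2 scaling of the Hellinger distance and the ½ factor in the chi-square distance; other conventions exist). How the paper preprocesses load series before applying each metric is not stated in this section; rescaling non-negative vectors to sum to 1 before the distribution-based metrics is our assumption, and all function names are hypothetical.

```python
import numpy as np


def _pair(x, y):
    """Convert both inputs to float arrays."""
    return np.asarray(x, dtype=float), np.asarray(y, dtype=float)


def _as_dist(p):
    """Rescale a non-negative vector so it sums to 1 (treat it as a distribution)."""
    p = np.asarray(p, dtype=float)
    return p / p.sum()


# --- geometric distance --------------------------------------------------
def euclidean(x, y):
    x, y = _pair(x, y)
    return float(np.sqrt(np.sum((x - y) ** 2)))


def manhattan(x, y):
    x, y = _pair(x, y)
    return float(np.sum(np.abs(x - y)))


def chebyshev(x, y):
    x, y = _pair(x, y)
    return float(np.max(np.abs(x - y)))  # largest gap along any single dimension


def cosine_similarity(x, y):
    x, y = _pair(x, y)
    return float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))


def bray_curtis(x, y):
    x, y = _pair(x, y)
    return float(np.sum(np.abs(x - y)) / np.sum(np.abs(x + y)))


def canberra(x, y):
    x, y = _pair(x, y)
    num, den = np.abs(x - y), np.abs(x) + np.abs(y)
    # terms where both elements are zero (0/0) contribute 0
    return float(np.sum(np.divide(num, den, out=np.zeros_like(num), where=den > 0)))


def pearson(x, y):
    x, y = _pair(x, y)
    return float(np.corrcoef(x, y)[0, 1])


# --- probability distribution differences (inputs rescaled to sum to 1) --
def kl_divergence(p, q):
    p, q = _as_dist(p), _as_dist(q)
    m = p > 0  # 0 * log(0 / q) is taken as 0
    return float(np.sum(p[m] * np.log(p[m] / q[m])))


def js_divergence(p, q):
    p, q = _as_dist(p), _as_dist(q)
    mix = 0.5 * (p + q)
    return 0.5 * kl_divergence(p, mix) + 0.5 * kl_divergence(q, mix)


def chi_square(p, q):
    p, q = _as_dist(p), _as_dist(q)
    den = p + q
    return float(0.5 * np.sum(np.divide((p - q) ** 2, den, out=np.zeros_like(den), where=den > 0)))


def hellinger(p, q):
    p, q = _as_dist(p), _as_dist(q)
    return float(np.sqrt(0.5 * np.sum((np.sqrt(p) - np.sqrt(q)) ** 2)))


def bhattacharyya(p, q):
    p, q = _as_dist(p), _as_dist(q)
    return float(-np.log(np.sum(np.sqrt(p * q))))


def wasserstein_1d(p, q):
    # Wasserstein-1 for histograms on the same equally spaced bins (unit bin width)
    p, q = _as_dist(p), _as_dist(q)
    return float(np.sum(np.abs(np.cumsum(p) - np.cumsum(q))))
```

For a profile x = [1, 2, 3] and its scaled copy 2x, cosine similarity and the Pearson coefficient are 1 and, under this normalization, every distribution-based metric is 0, whereas the Euclidean, Manhattan, and Chebyshev distances are not zero. This illustrates the table's note that cosine similarity compares direction rather than magnitude.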

| Layer | Number of Neurons | Activation Function |
|---|---|---|
| Input | 25 | - |
| Hidden Layer 1 | 100 | ReLU |
| Dropout | - | - |
| Hidden Layer 2 | 100 | ReLU |
| Dropout | - | - |
| Hidden Layer 3 | 100 | ReLU |
| Dropout | - | - |
| Output | 1 | Linear |

| Stage | Batch Size | Epochs | Learning Rate |
|---|---|---|---|
| Pre-training | 32 | 300 | 0.001 |
| Fine-tuning | 32 | 10 | 0.0001 |
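The two tables above describe a fully connected regression network (25 inputs, three 100-neuron ReLU layers each followed by dropout, and one linear output) and its two training stages. A NumPy sketch of the forward pass and the stage settings follows. The dropout rate (0.2), He initialization, and all names are assumptions, since they are not given here; the optimizer and loss are also unspecified, so training itself is omitted.

```python
from dataclasses import dataclass

import numpy as np

LAYER_SIZES = [25, 100, 100, 100, 1]  # from the architecture table
DROPOUT_RATE = 0.2                    # ASSUMPTION: rate not given in the table


@dataclass(frozen=True)
class Stage:
    batch_size: int
    epochs: int
    learning_rate: float


# Values from the training hyperparameter table.
STAGES = {
    "pre-training": Stage(batch_size=32, epochs=300, learning_rate=1e-3),
    "fine-tuning": Stage(batch_size=32, epochs=10, learning_rate=1e-4),
}


def init_params(rng, sizes=LAYER_SIZES):
    """He-initialized weights and zero biases for each dense layer (assumed scheme)."""
    return [(rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(params, x, rng=None, rate=DROPOUT_RATE):
    """Dense + ReLU + dropout (x3), then a linear output; dropout is applied only if rng is given."""
    h = x
    for W, b in params[:-1]:
        h = np.maximum(h @ W + b, 0.0)        # hidden layer with ReLU
        if rng is not None:                   # inverted dropout during training
            keep = rng.random(h.shape) >= rate
            h = h * keep / (1.0 - rate)
    W, b = params[-1]
    return h @ W + b                          # linear output: predicted heating load


def n_params(params):
    return sum(W.size + b.size for W, b in params)
```

This layout has 22,901 trainable parameters. Under this sketch, fine-tuning would start from the pre-trained `params` and continue with the `fine-tuning` stage settings (a learning rate ten times smaller and 10 epochs); whether any layers are frozen during fine-tuning is not stated in this section.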

| Group | Metric | 1d | 5d | 10d | 15d | 20d | 25d | 30d |
|---|---|---|---|---|---|---|---|---|
| Group 1 | Euclidean | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| | Standardized Euclidean | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| | Manhattan | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| | Chi-square | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| | KL Divergence | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| | JS Divergence | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| | Hellinger | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| | Alpha Divergence | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| | Bregman Divergence | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| | MMD | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| | Dice | A237 | A237 | A237 | A237 | A237 | A237 | A237 |
| Group 2 | Chebyshev | A237 | A237 | A237 | A89 | A89 | A89 | A89 |
| Group 3 | Cosine | A415 | A302 | A176 | A176 | A176 | A176 | A176 |
| Group 4 | Bhattacharyya | A89 | A89 | A89 | A89 | A89 | A89 | A89 |
| | Bray–Curtis | A89 | A89 | A89 | A89 | A89 | A89 | A89 |
| | DTW | A89 | A89 | A89 | A89 | A89 | A89 | A89 |
| | Wasserstein Distance | A89 | A89 | A89 | A89 | A89 | A89 | A89 |
| Group 5 | Canberra | A421 | A421 | A421 | A421 | A421 | A421 | A421 |
| | Edit | A421 | A421 | A421 | A421 | A421 | A421 | A421 |
| Group 6 | Pearson | A421 | A421 | A421 | A58 | A58 | A311 | A311 |
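The six groups in the table above coincide with sets of metrics whose selected source building is identical in all seven scenarios. The sketch below recovers that grouping from the table entries; treating an identical selection sequence as the grouping rule is our reading, not a procedure stated in the paper, and the names are hypothetical.

```python
from collections import defaultdict

SCENARIOS = ["1d", "5d", "10d", "15d", "20d", "25d", "30d"]

# Selected source building per scenario, transcribed from the table above.
SELECTIONS = {m: ["A237"] * 7 for m in [
    "Euclidean", "Standardized Euclidean", "Manhattan", "Chi-square", "KL Divergence",
    "JS Divergence", "Hellinger", "Alpha Divergence", "Bregman Divergence", "MMD", "Dice"]}
SELECTIONS.update({m: ["A89"] * 7 for m in ["Bhattacharyya", "Bray–Curtis", "DTW", "Wasserstein Distance"]})
SELECTIONS.update({m: ["A421"] * 7 for m in ["Canberra", "Edit"]})
SELECTIONS["Chebyshev"] = ["A237"] * 3 + ["A89"] * 4
SELECTIONS["Cosine"] = ["A415", "A302"] + ["A176"] * 5
SELECTIONS["Pearson"] = ["A421"] * 3 + ["A58"] * 2 + ["A311"] * 2


def group_by_selection(selections, scenarios=SCENARIOS):
    """Place metrics in the same group iff they pick the same building in every scenario."""
    groups = defaultdict(list)
    for metric, picks in selections.items():
        if len(picks) != len(scenarios):
            raise ValueError(f"{metric}: expected {len(scenarios)} picks, got {len(picks)}")
        groups[tuple(picks)].append(metric)
    return list(groups.values())


def changes_selection(picks):
    """True if a metric's chosen source building varies across scenarios."""
    return len(set(picks)) > 1


for group in group_by_selection(SELECTIONS):
    print(group)
```

Running this yields six groups with 11, 4, 2, 1, 1, and 1 members, matching the table. `changes_selection` flags only Chebyshev, cosine, and Pearson, the three metrics whose chosen source building shifts as the target scenario lengthens.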

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bai, D.; Ma, S.; Ma, H. Performance Evaluation of Similarity Metrics in Transfer Learning for Building Heating Load Forecasting. Energies 2025, 18, 4678. https://doi.org/10.3390/en18174678
