A Confidence Calibration Based Ensemble Method for Oriented Electrical Equipment Detection in Thermal Images

Abstract

1. Introduction

2. Related Works

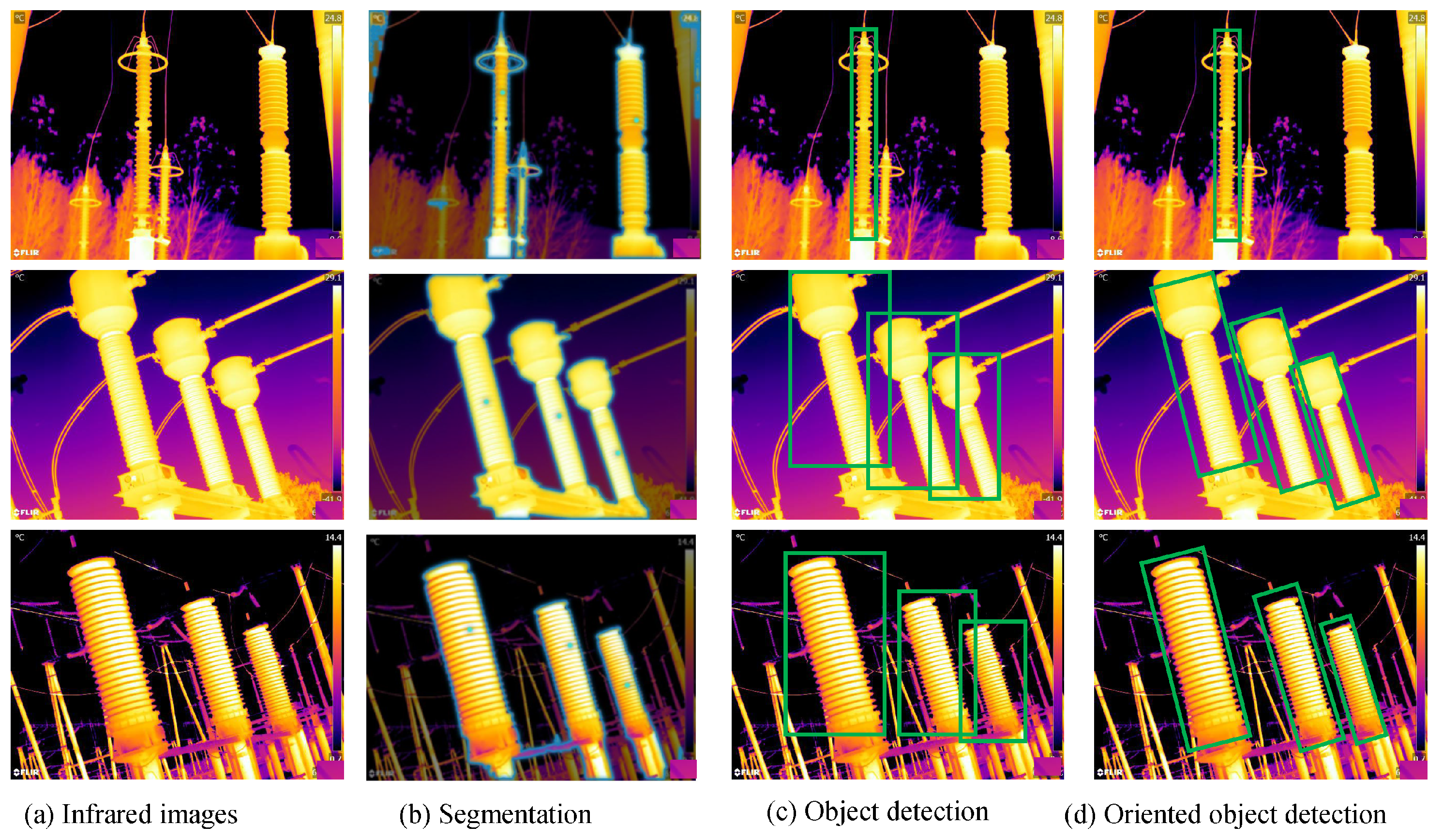

2.1. Electrical Equipment Detection

2.2. Oriented Object Detection

2.3. Ensemble Learning

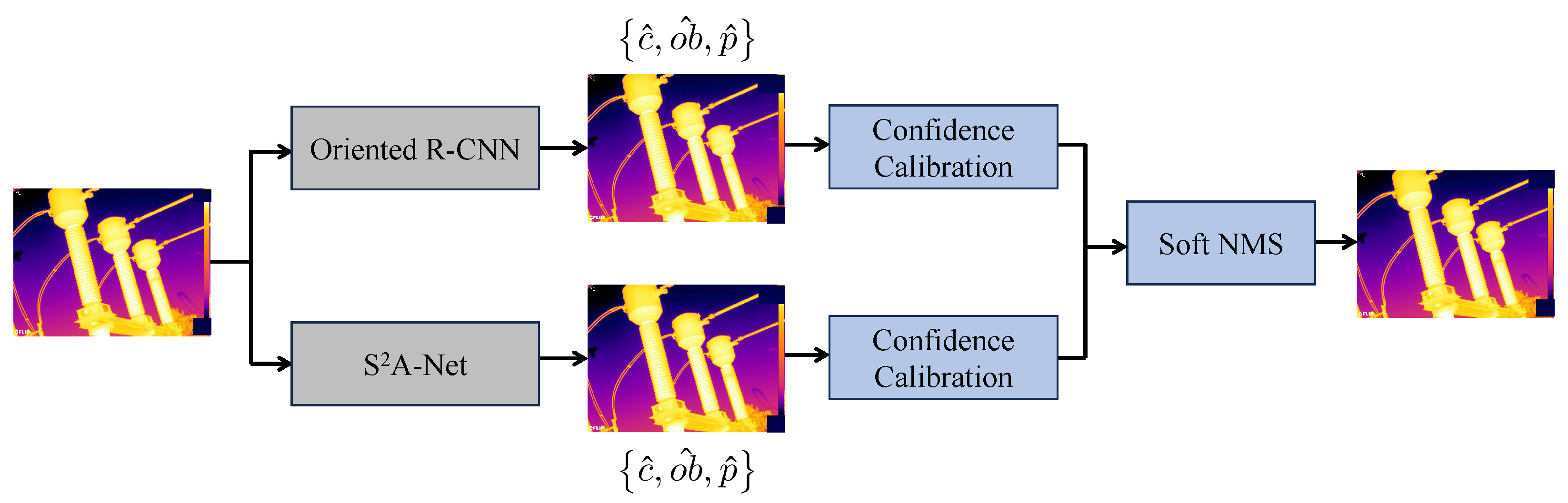

3. The Proposed Method

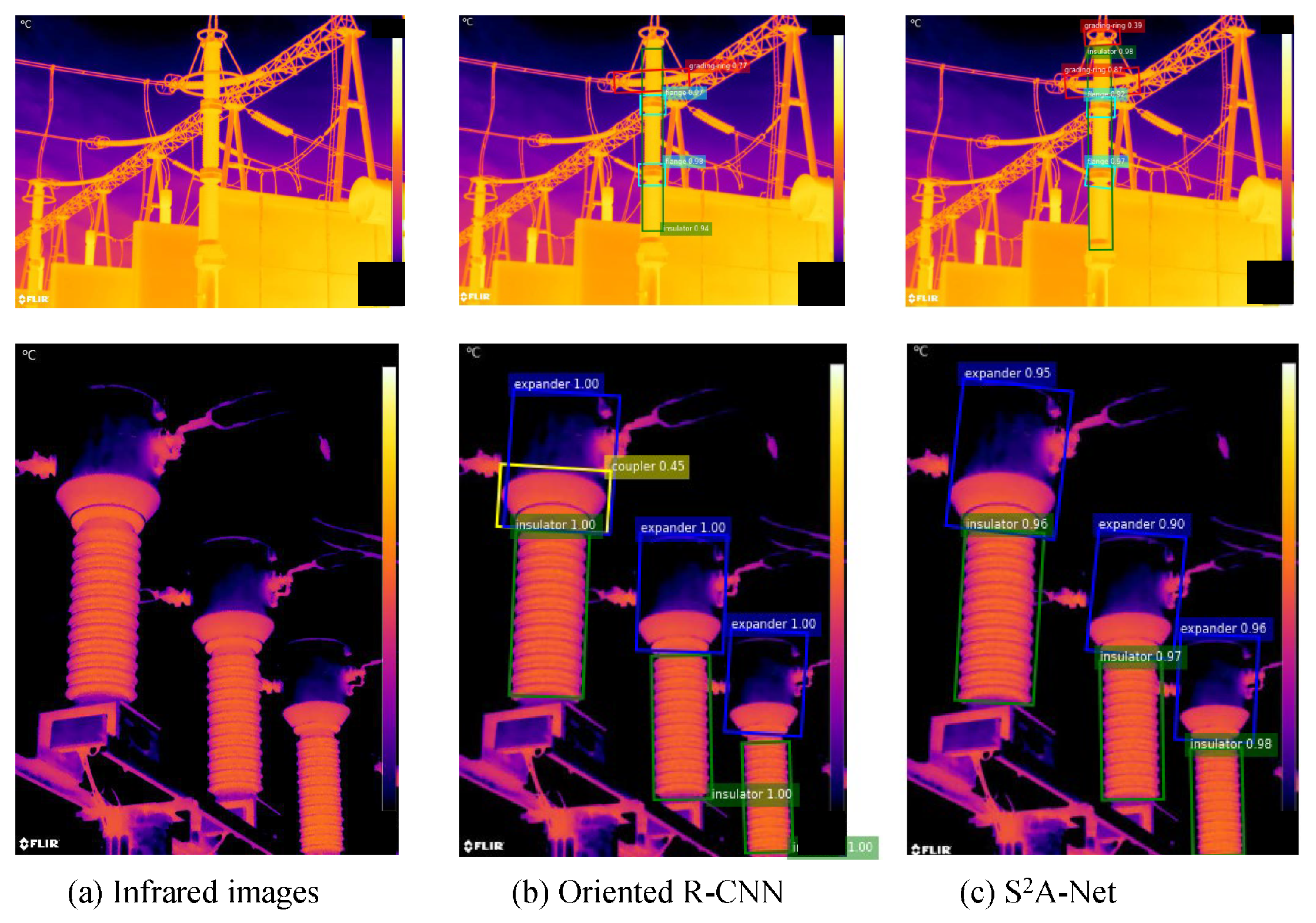

3.1. The Base Oriented Object Detection Models

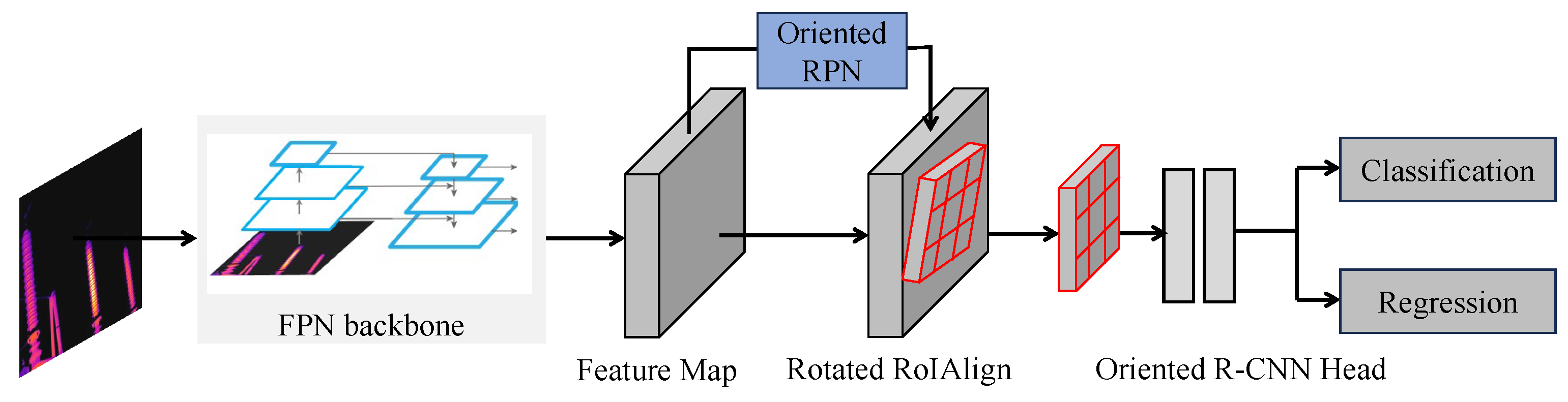

3.1.1. Oriented R-CNN

3.1.2. S2A-Net

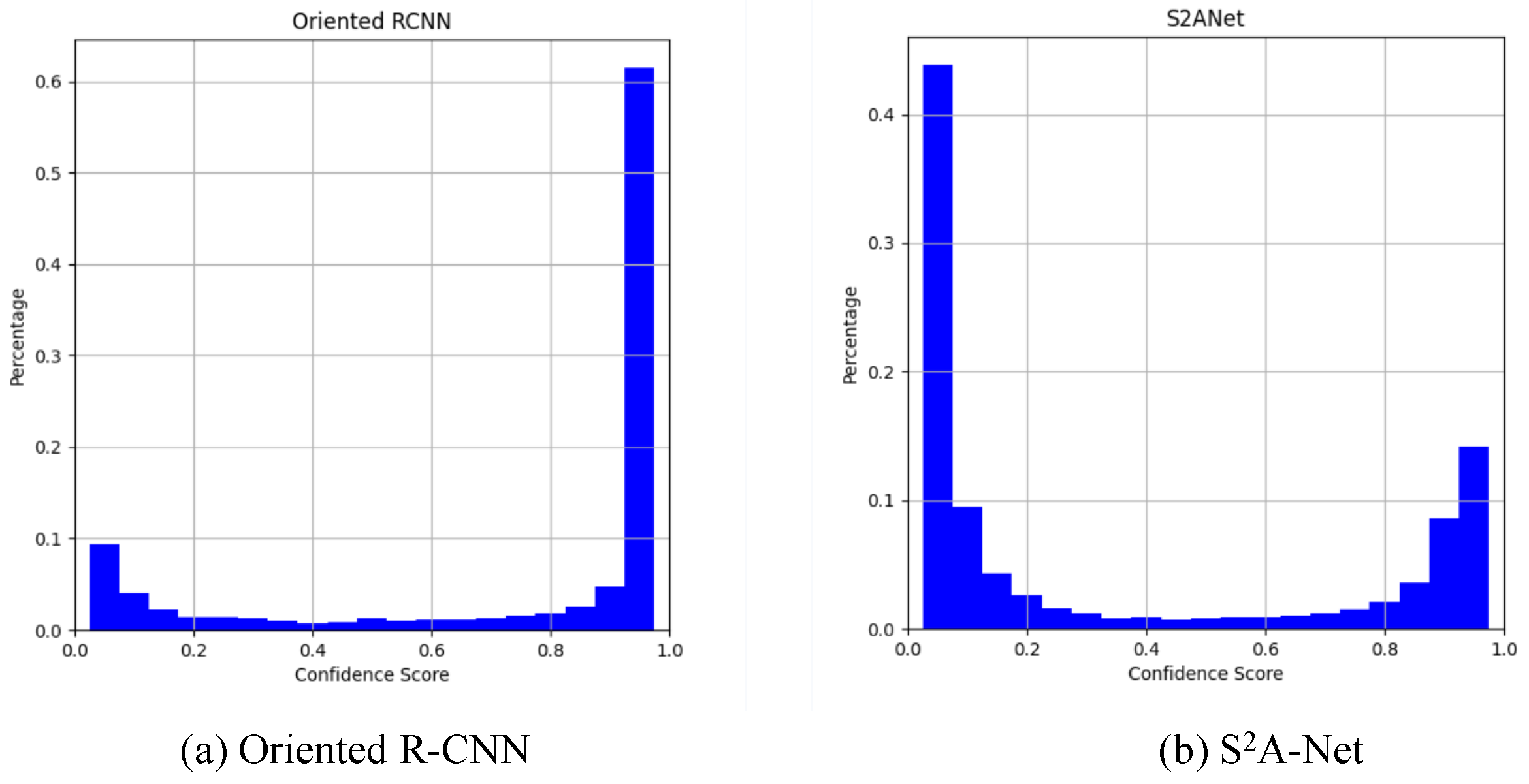

3.2. Confidence Calibration

3.2.1. Calibration Error

3.2.2. Model Calibration

3.3. Model Ensemble

4. Experiments

4.1. Dataset and Evaluation Metric

4.2. Experimental Setup

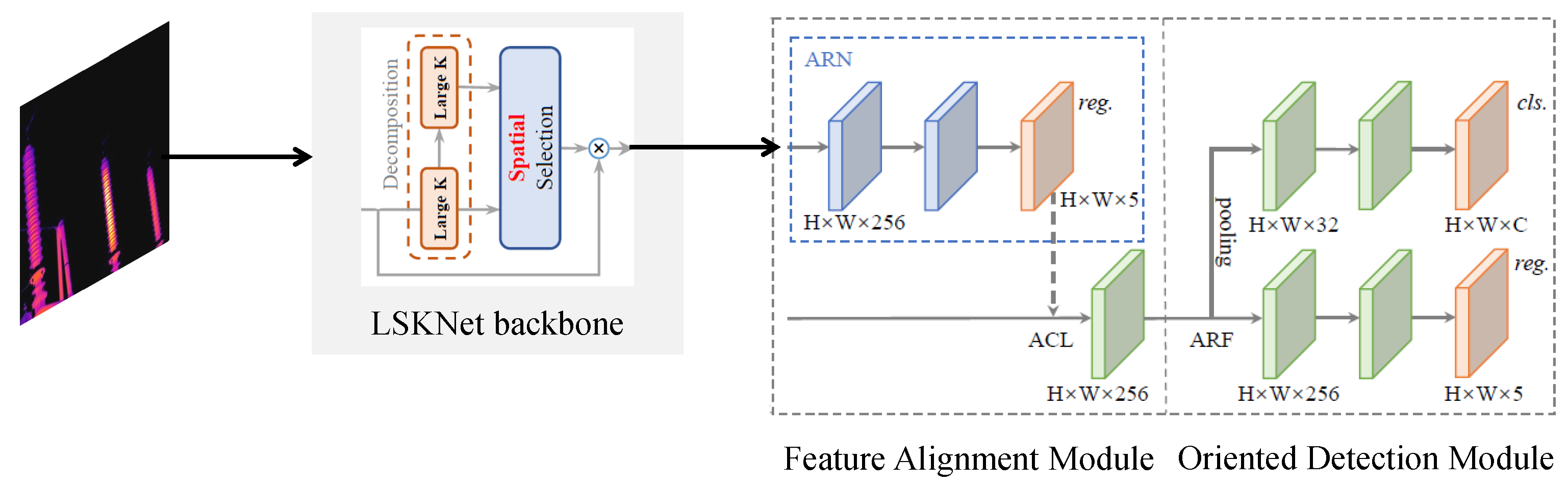

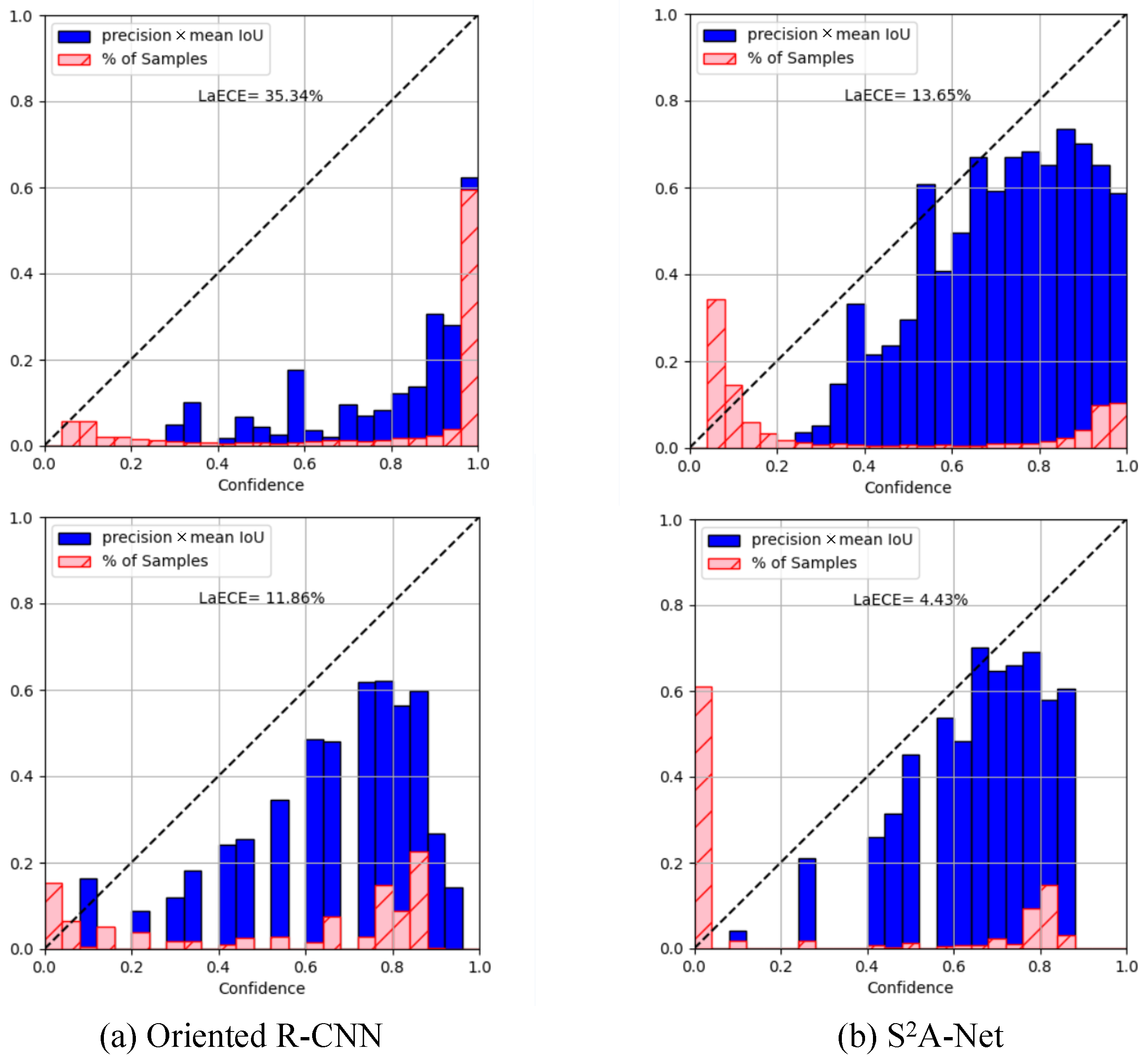

4.3. The Results of Confidence Calibration

4.4. Quantitative Evaluation

4.5. Qualitative Visualization

4.6. Complexity Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

The per-class columns report AP for the 6 classes in the EPED dataset.

| Model | mAP | Insulator | Expander | Grading-Ring | Flange | Interrupter | Coupler |
|---|---|---|---|---|---|---|---|
| RoI Transformer | 89.35 | 90.48 | 90.78 | 88.94 | 89.16 | 98.75 | 77.98 |
| Gliding Vertex | 88.97 | 89.85 | 89.56 | 89.11 | 88.41 | 97.39 | 79.48 |
| YOLOv11OOD | 91.76 | 90.25 | 90.56 | 92.21 | 89.41 | 98.30 | 89.82 |
| Oriented R-CNN | 89.45 | 90.26 | 90.38 | 88.67 | 89.25 | 98.77 | 79.39 |
| S2A-Net | 90.95 | 89.70 | 89.75 | 90.35 | 89.18 | 98.04 | 88.70 |
| Ensemble | 90.87 | 90.03 | 90.35 | 95.29 | 89.21 | 98.96 | 81.41 |
| Cal-Ensemble | 92.85 | 90.58 | 90.62 | 96.50 | 89.80 | 99.11 | 90.48 |
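The mAP column can be sanity-checked against the per-class AP columns. The following is a minimal sketch, assuming that mAP here is the unweighted mean of the six per-class AP values (the usual definition, but an assumption as far as this table is concerned). The names `reported`, `mean_ap`, and `check_table` are illustrative and not taken from the paper.

```python
# Values copied from the results table.
# Per-class order: Insulator, Expander, Grading-Ring, Flange, Interrupter, Coupler.
reported = {
    "RoI Transformer": (89.35, [90.48, 90.78, 88.94, 89.16, 98.75, 77.98]),
    "Gliding Vertex":  (88.97, [89.85, 89.56, 89.11, 88.41, 97.39, 79.48]),
    "YOLOv11OOD":      (91.76, [90.25, 90.56, 92.21, 89.41, 98.30, 89.82]),
    "Oriented R-CNN":  (89.45, [90.26, 90.38, 88.67, 89.25, 98.77, 79.39]),
    "S2A-Net":         (90.95, [89.70, 89.75, 90.35, 89.18, 98.04, 88.70]),
    "Ensemble":        (90.87, [90.03, 90.35, 95.29, 89.21, 98.96, 81.41]),
    "Cal-Ensemble":    (92.85, [90.58, 90.62, 96.50, 89.80, 99.11, 90.48]),
}


def mean_ap(per_class_ap):
    """Unweighted mean of per-class AP (assumed definition of mAP)."""
    return sum(per_class_ap) / len(per_class_ap)


def check_table(rows, tol=0.01):
    """Map each model to (recomputed mAP, reported mAP, agrees within tol)."""
    result = {}
    for model, (m_ap, aps) in rows.items():
        recomputed = mean_ap(aps)
        # Small epsilon guards against floating-point noise at the boundary.
        agrees = abs(recomputed - m_ap) <= tol + 1e-9
        result[model] = (recomputed, m_ap, agrees)
    return result


if __name__ == "__main__":
    for model, (rec, rep, ok) in check_table(reported).items():
        print(f"{model:16s} recomputed={rec:.3f} reported={rep:.2f} agrees={ok}")
```

Under that assumption, every reported mAP matches the mean of its row to within 0.01. Read directly from the table, Cal-Ensemble's mAP of 92.85 is 1.09 points above the best single model (YOLOv11OOD, 91.76) and 1.98 points above the uncalibrated Ensemble (90.87).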

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, Y.; Li, Z.; Song, B.; Ge, N.; Sun, Y.; Gong, X. A Confidence Calibration Based Ensemble Method for Oriented Electrical Equipment Detection in Thermal Images. Energies 2025, 18, 3191. https://doi.org/10.3390/en18123191
