Reinforcement Learning-Based Energy Management for Fuel Cell Electrical Vehicles Considering Fuel Cell Degradation

Abstract

1. Introduction

- Development of a DRL-based energy management system: A deep reinforcement learning (DRL)-based energy management system is developed to reduce hydrogen consumption while keeping the battery state of charge (SOC) stable. The learned policy adapts to changing driving conditions and vehicle states.

- Double Deep Q-learning (DDQL) implementation: DDQL is used to manage the energy of fuel cell electric vehicles (FCEVs). The approach estimates Q-values with two distinct neural networks, one selecting the action and the other evaluating it, which mitigates the overestimation problem associated with a single network. This dual-network technique improves the accuracy and reliability of the learning process.

- Incorporation of FCS degradation into energy management: Including Fuel Cell System (FCS) degradation factors in the energy management strategy is a novel component of this study. The DDQL framework balances fuel economy against FCS longevity.

- Validation on standard drive cycles: The proposed strategy is evaluated on several standard driving cycles. The results indicate that it is robust across a variety of driving conditions, including cycles not seen during training.
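The overestimation problem mentioned in the DDQL contribution can be illustrated with a small numerical experiment. The sketch below is not taken from the paper: it assumes five actions whose true value is zero and unit Gaussian estimation noise, and compares a single estimator (select and evaluate with the same values) against a double estimator (select with one set of values, evaluate with an independent one).

```python
import numpy as np

rng = np.random.default_rng(0)
n_trials, n_actions = 10_000, 5

# Assumption: every action has true value 0; each estimate carries unit Gaussian noise.
est_a = rng.normal(0.0, 1.0, size=(n_trials, n_actions))  # used for action selection
est_b = rng.normal(0.0, 1.0, size=(n_trials, n_actions))  # independent, used for evaluation

# Single estimator: the max over noisy values is biased upward.
single_bias = est_a.max(axis=1).mean()

# Double estimator: choose the action with A, read its value from B.
chosen = est_a.argmax(axis=1)
double_bias = est_b[np.arange(n_trials), chosen].mean()

print(f"single-estimator bias: {single_bias:.3f}")
print(f"double-estimator bias: {double_bias:.3f}")
```

Because the noise in the evaluating estimator is independent of the selection, the double estimator's average stays close to the true value, while the single estimator's average is clearly positive.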

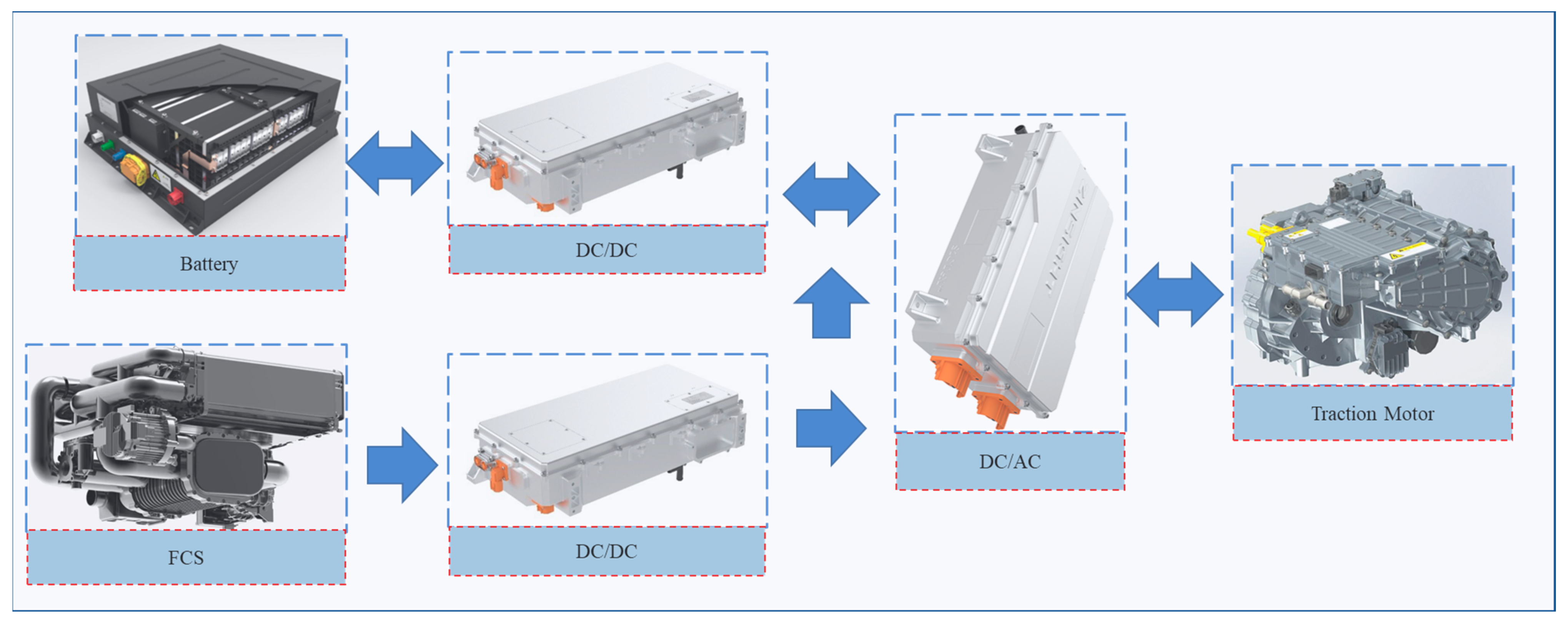

2. The Powertrain of the FCEV Model

2.1. System Configuration

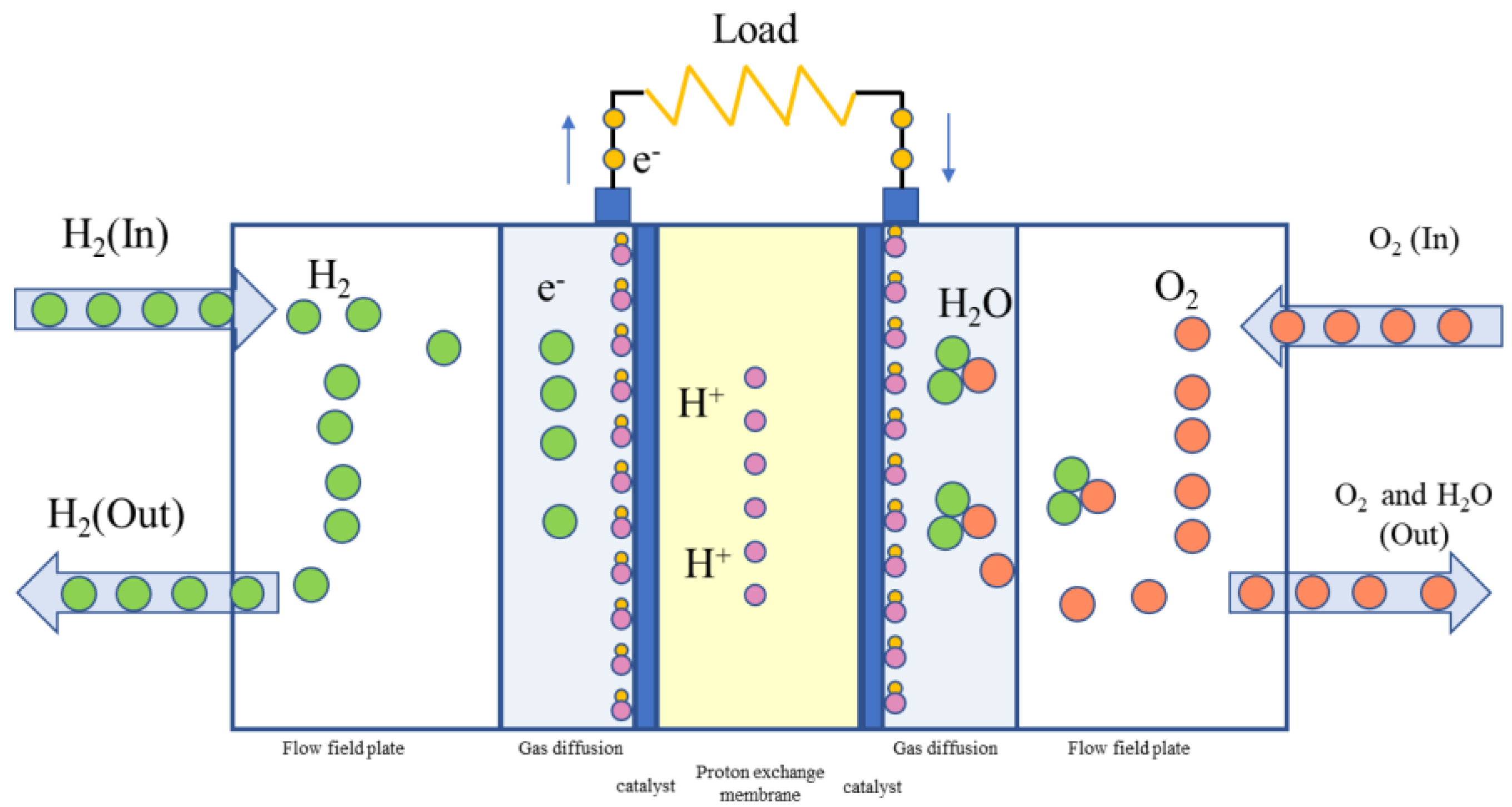

2.2. Fuel Cell System

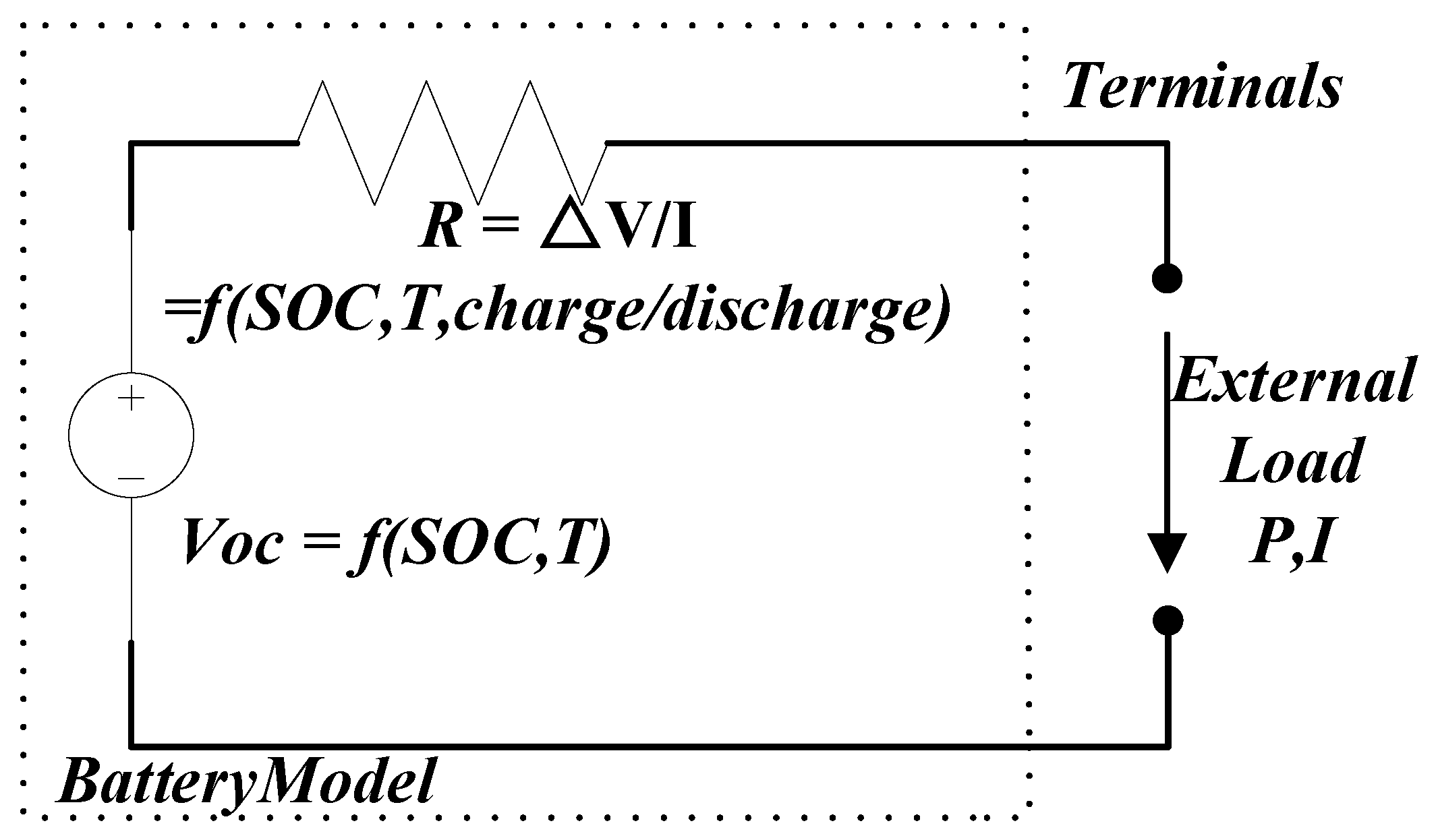

2.3. Li-Ion Battery

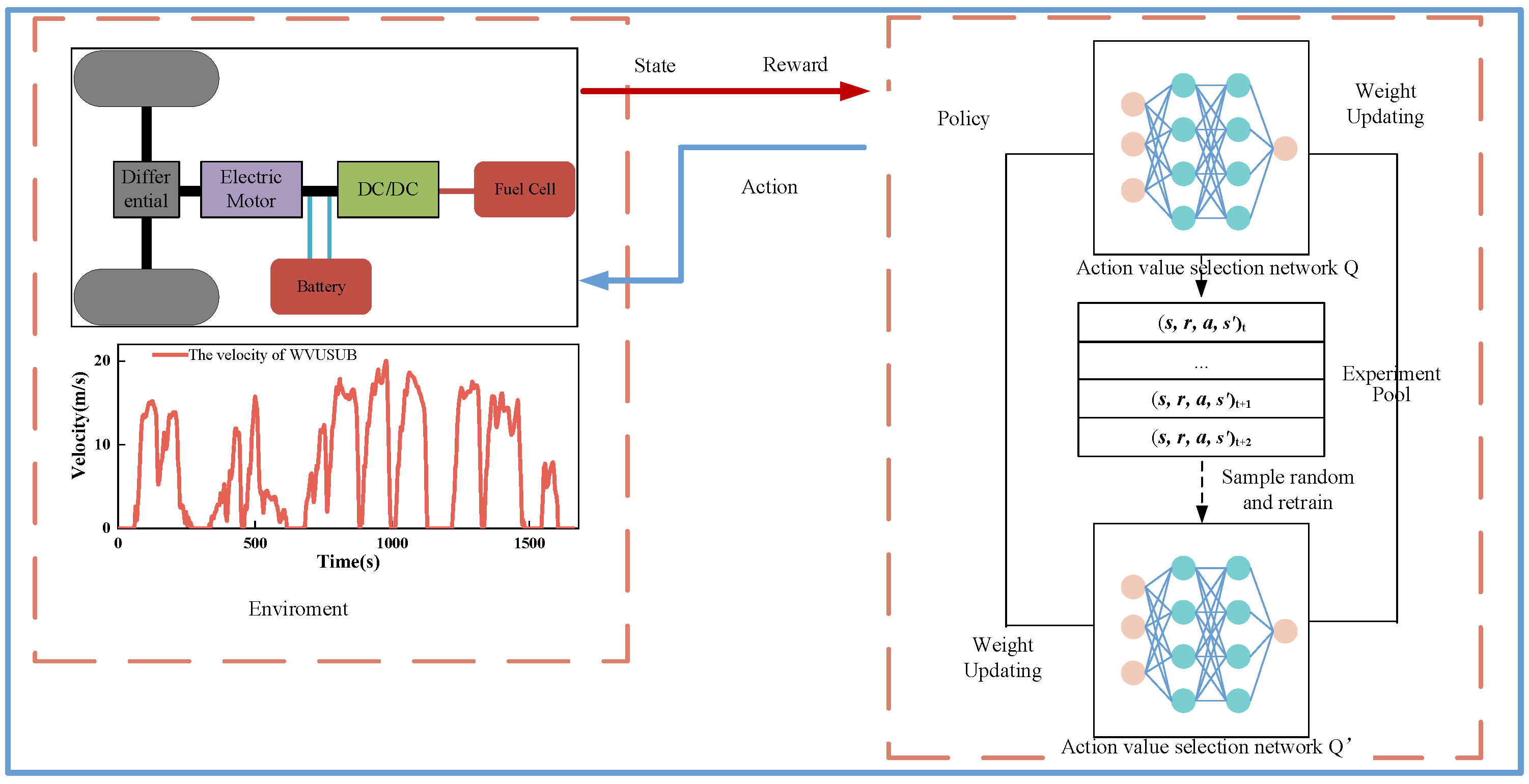

3. Design of DDQL-Based EMS Considering the Performance Degradation of FCS

3.1. The Algorithm of DQL

3.2. The Energy Management Strategy Design of Double-DQL

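Algorithm 1: Double Deep Q-learning.

- Parameters: A.
- Initialize replay memory D with capacity N.
- Initialize the action-value evaluating function Q with random weights $\theta$.
- Initialize the target action-value function $\hat{Q}$ with weights $\theta^{-} = \theta$.
- for episode = 1 : max(episode) do
  - for t = 1 : max(time) do
    - With probability ε select a random action $a_t$; otherwise, select $a_t = \arg\max_a Q(s_t, a; \theta)$.
    - Execute action $a_t$ in the environment and observe reward $R_t$ and next state $s_{t+1}$.
    - Store transition $(s_t, a_t, R_t, s_{t+1})$ in D.
    - Sample a random minibatch of transitions $(s_j, a_j, R_j, s_{j+1})$ from D.
    - For j = 1, …, n, set $y_j = R_j + \gamma \hat{Q}\left(s_{j+1}, \arg\max_a Q(s_{j+1}, a; \theta); \theta^{-}\right)$.
    - Perform a gradient descent step on $\left(y_j - Q(s_j, a_j; \theta)\right)^2$ with respect to $\theta$ to update the selection network.
    - Every τ steps reset $\hat{Q} = Q$ to update the target network.
  - end for
- end for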
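The two core steps of Algorithm 1, ε-greedy action selection and the Double Q-learning target, can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function names, the `dones` terminal flag, and the toy Q-value arrays are assumptions introduced here, and the networks are replaced by precomputed Q-value tables.

```python
import numpy as np

def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))
    return int(np.argmax(q_values))

def double_dqn_targets(rewards, q_online_next, q_target_next, dones, gamma=0.99):
    """Targets y_j = R_j + gamma * Q_target(s', argmax_a Q_online(s', a)).

    q_online_next / q_target_next: arrays of shape (batch, n_actions) holding the
    selection-network and target-network Q-values for the next states.
    dones: 1.0 where the next state is terminal (hypothetical flag, not in Algorithm 1).
    """
    best_actions = np.argmax(q_online_next, axis=1)    # selection network picks the action
    rows = np.arange(len(rewards))
    next_values = q_target_next[rows, best_actions]    # target network evaluates it
    return rewards + gamma * (1.0 - dones) * next_values

# Toy minibatch of two transitions (made-up numbers for illustration only).
rewards = np.array([1.0, 0.0])
q_online_next = np.array([[0.2, 0.9], [0.5, 0.1]])
q_target_next = np.array([[0.4, 0.3], [0.6, 0.8]])
dones = np.array([0.0, 1.0])

targets = double_dqn_targets(rewards, q_online_next, q_target_next, dones)
action = epsilon_greedy(q_online_next[0], epsilon=0.0, rng=np.random.default_rng(0))
print(targets, action)
```

In the first transition the selection network prefers action 1, so the target uses the target network's value for action 1 (0.3) rather than the target network's own maximum (0.4). That decoupling is what distinguishes the Double variant from plain deep Q-learning.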

4. Results and Discussion

4.1. Training Setting

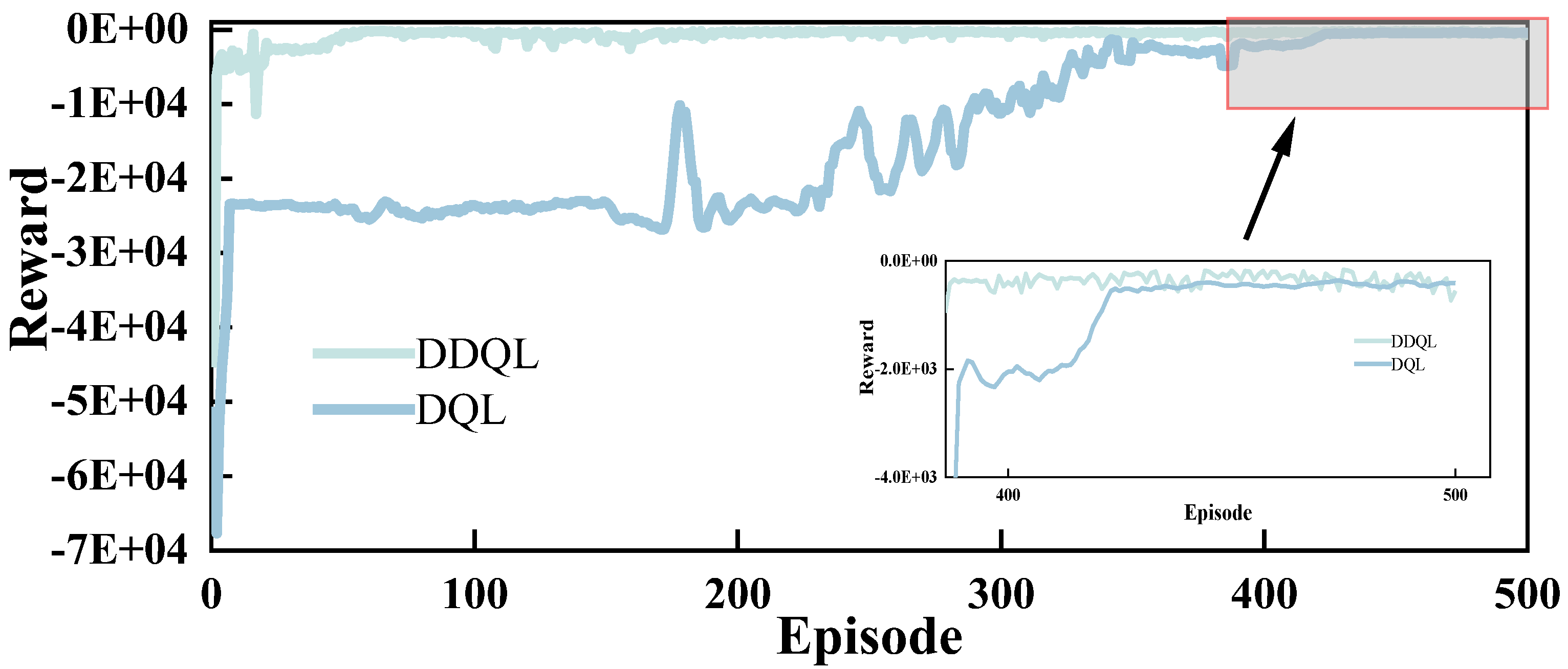

4.2. Training Performance Comparison

4.3. Optimality of DDQL-Based EMS

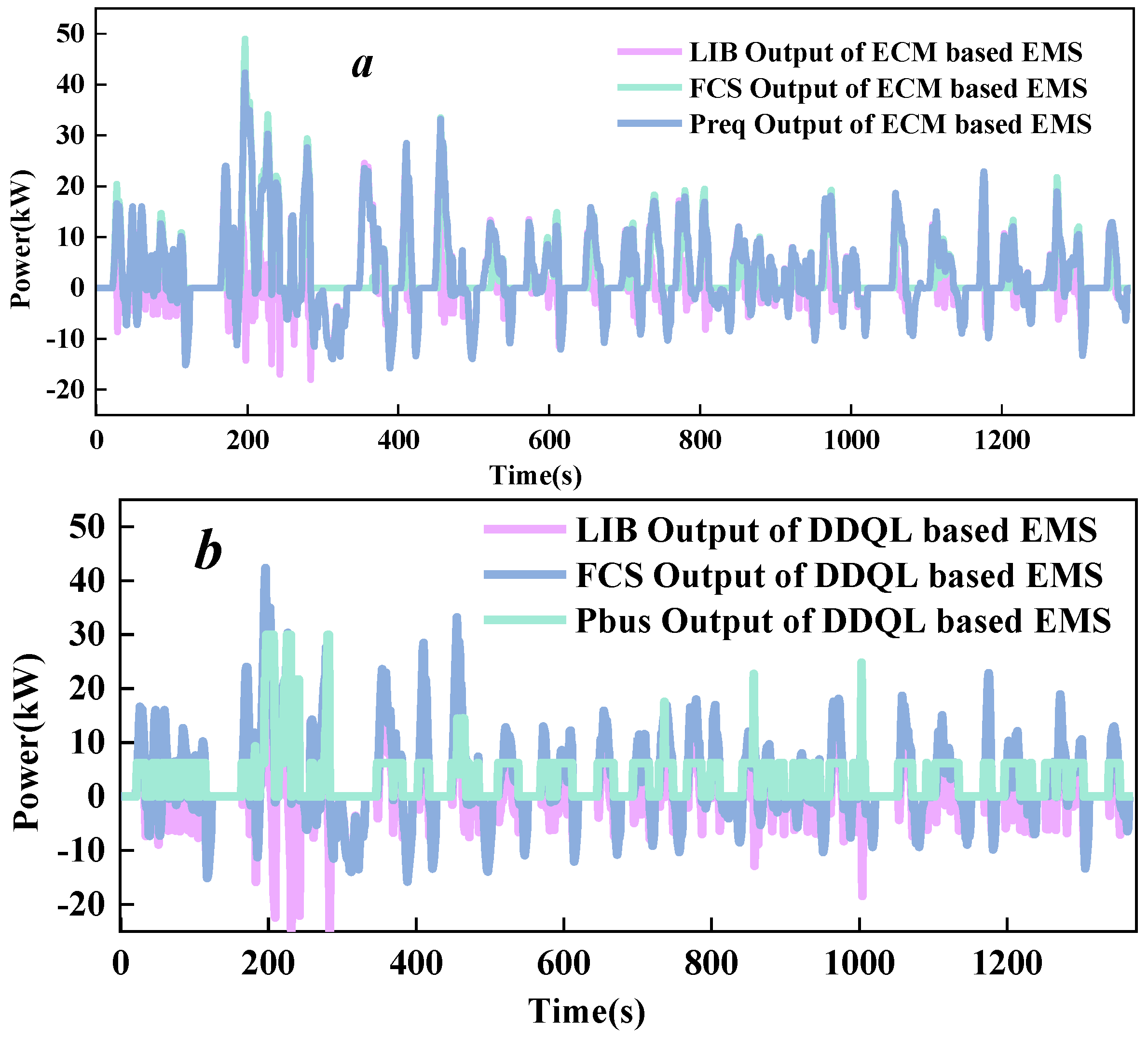

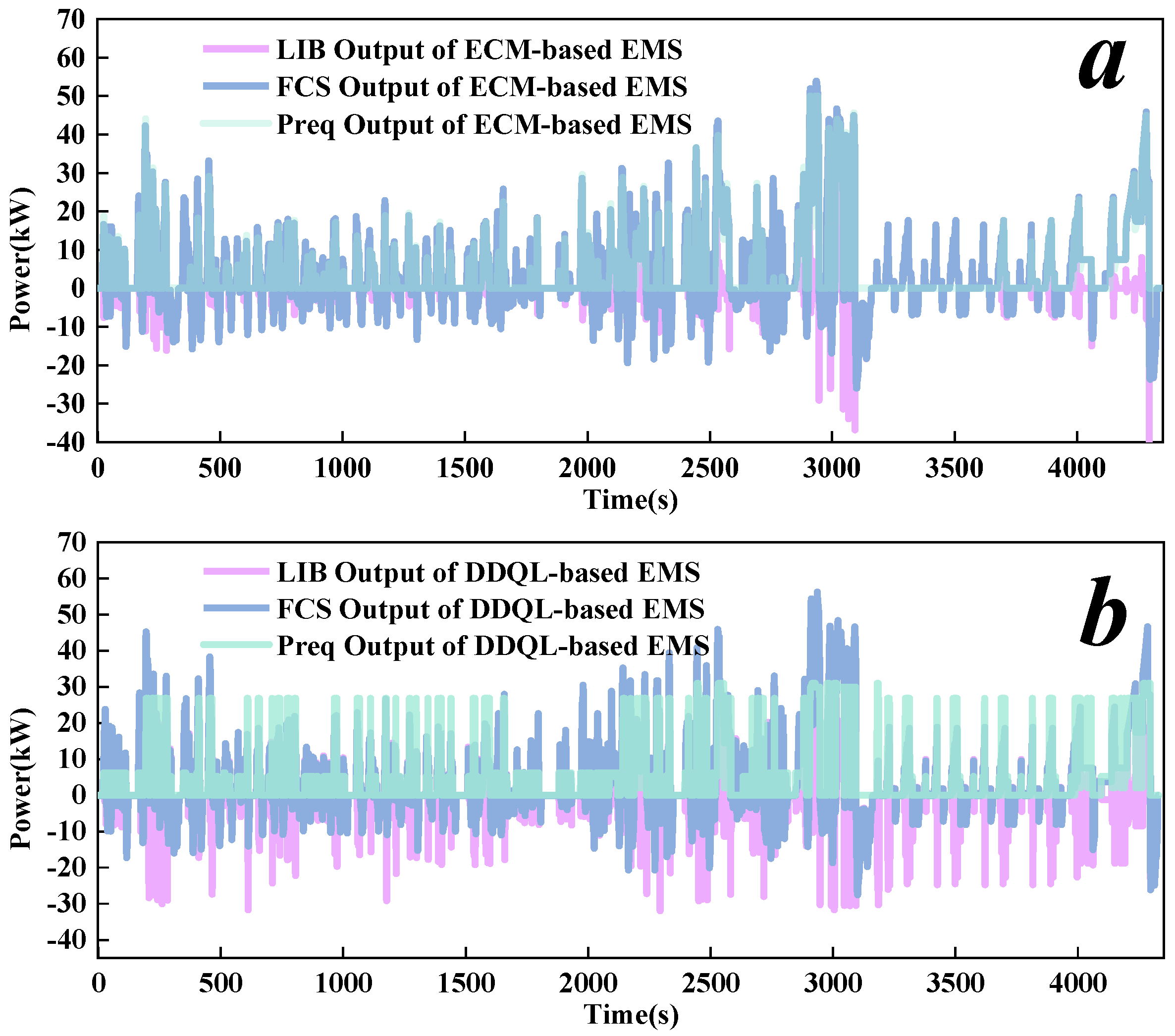

4.3.1. Power Distribution

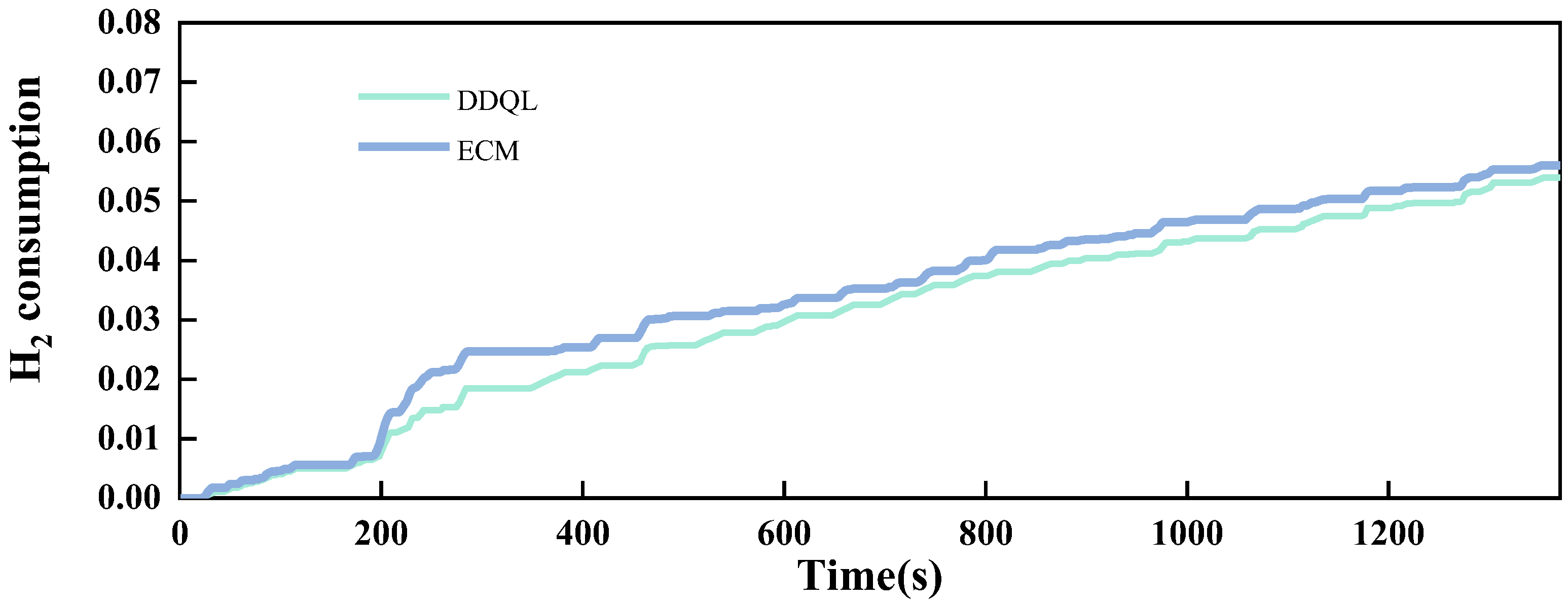

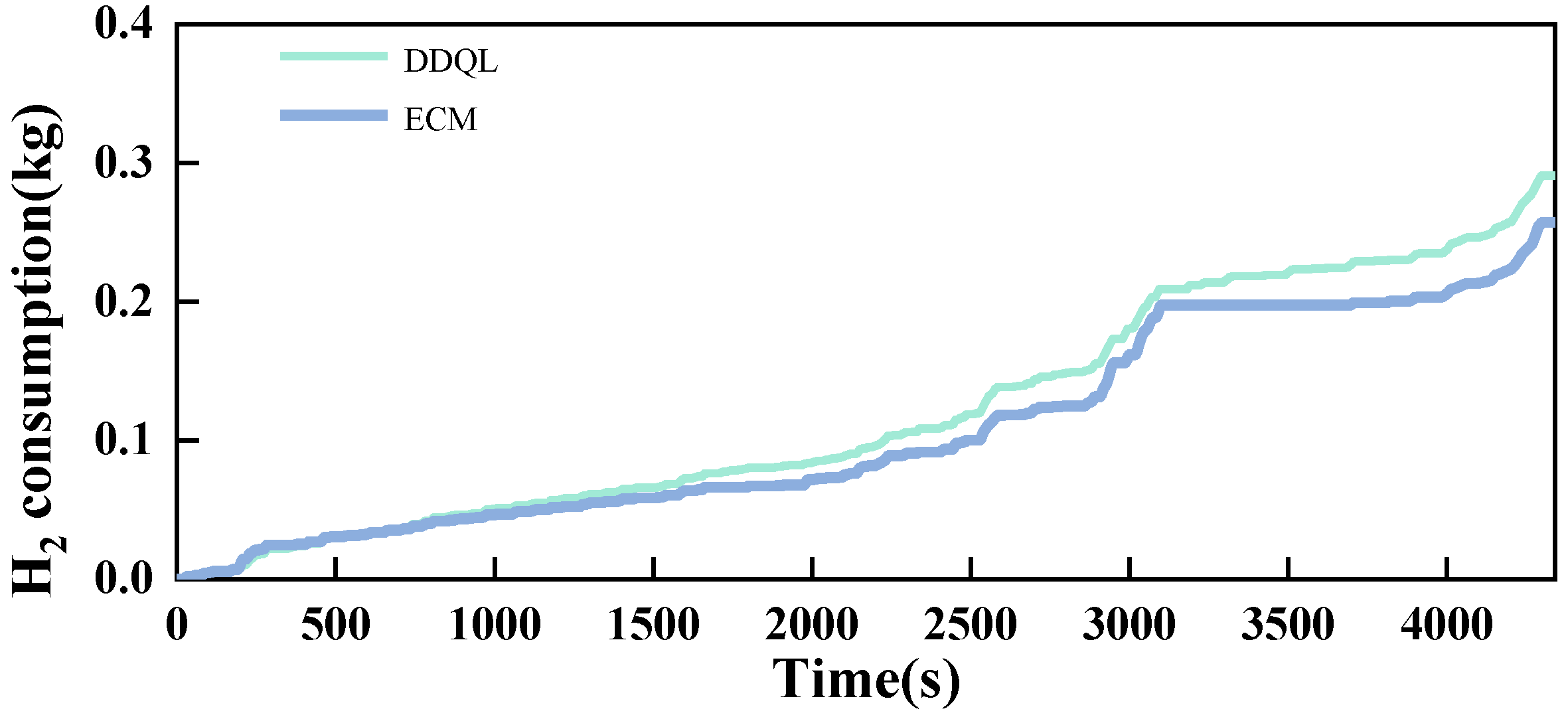

4.3.2. Fuel Economy

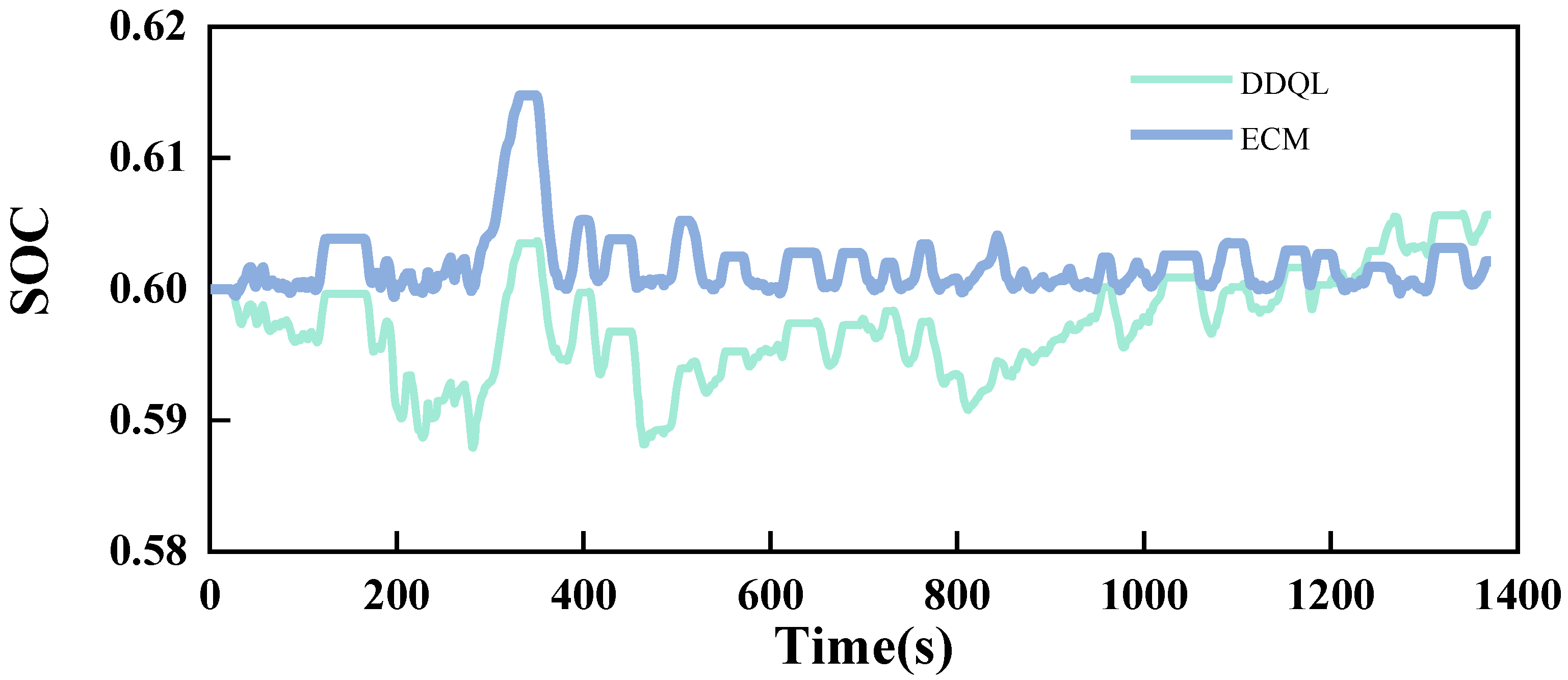

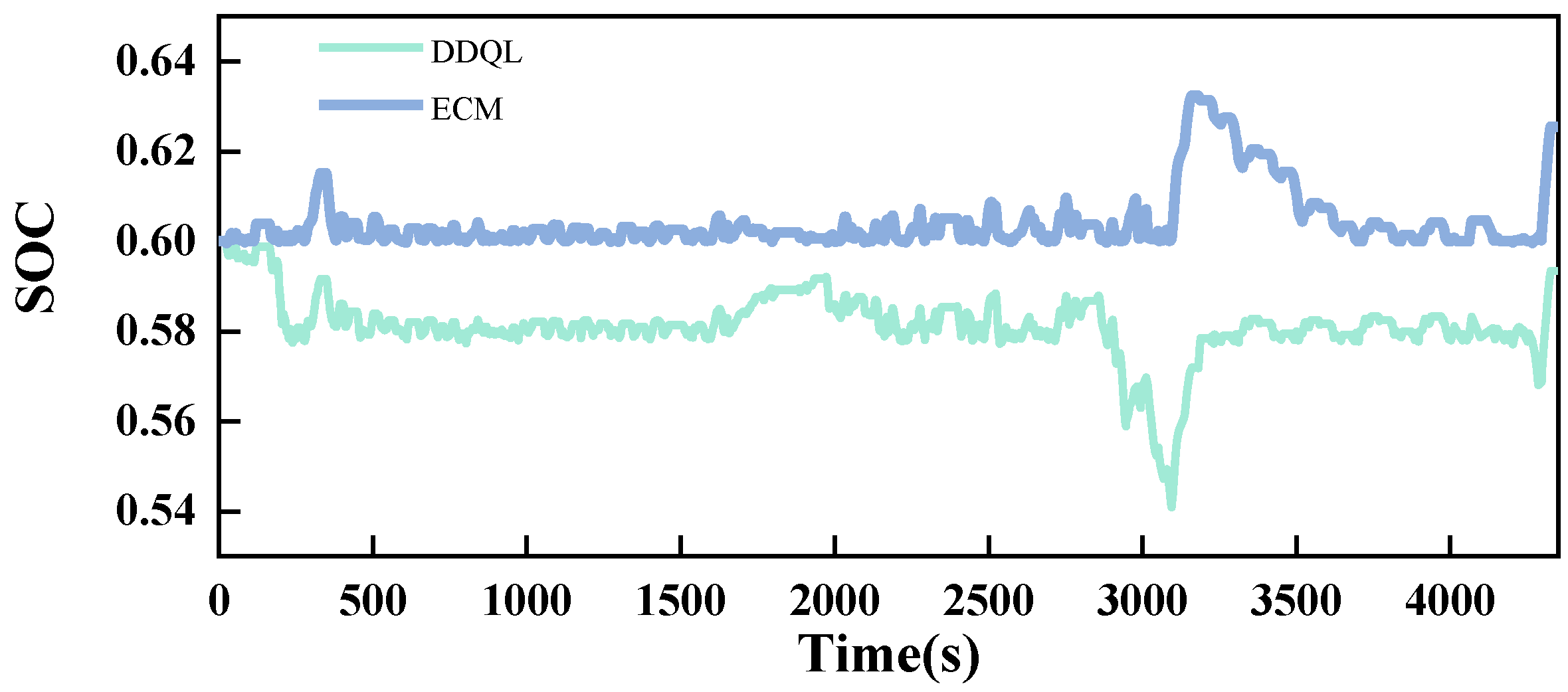

4.3.3. SOC Consistency

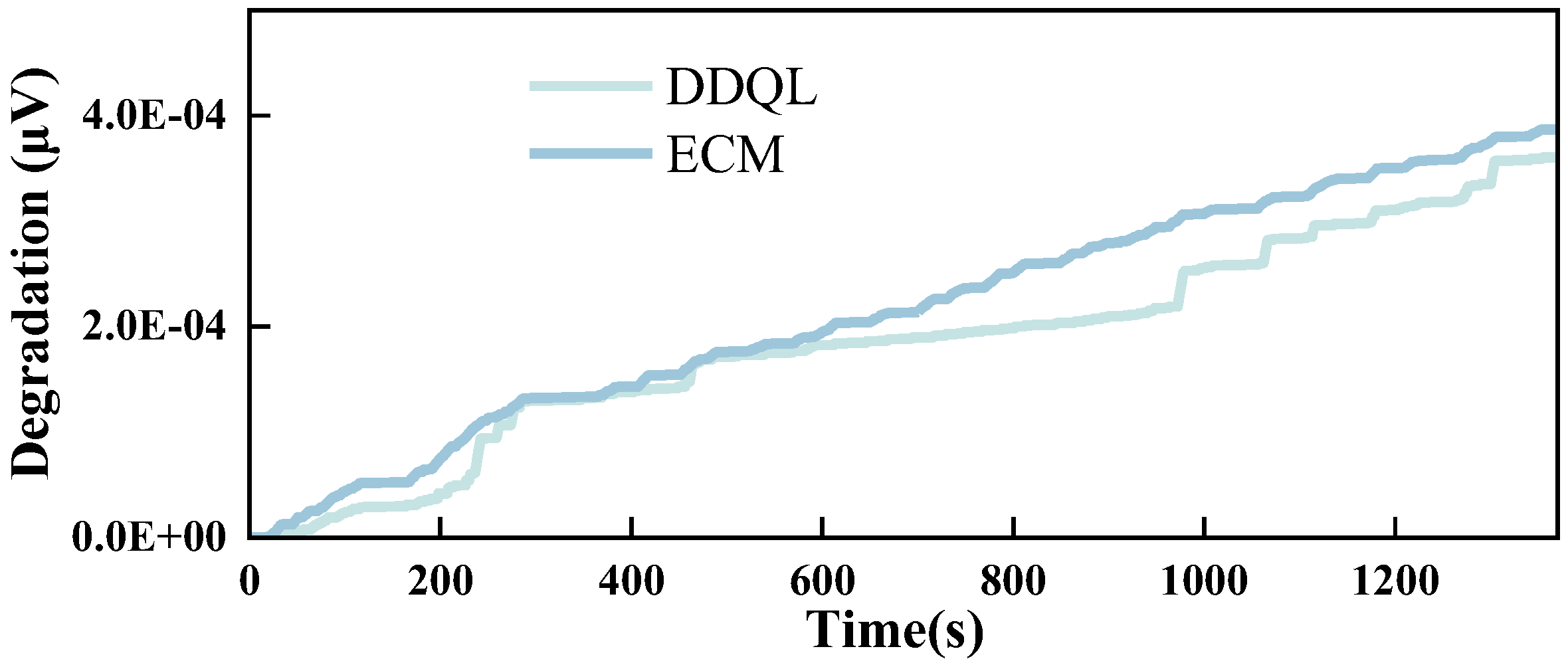

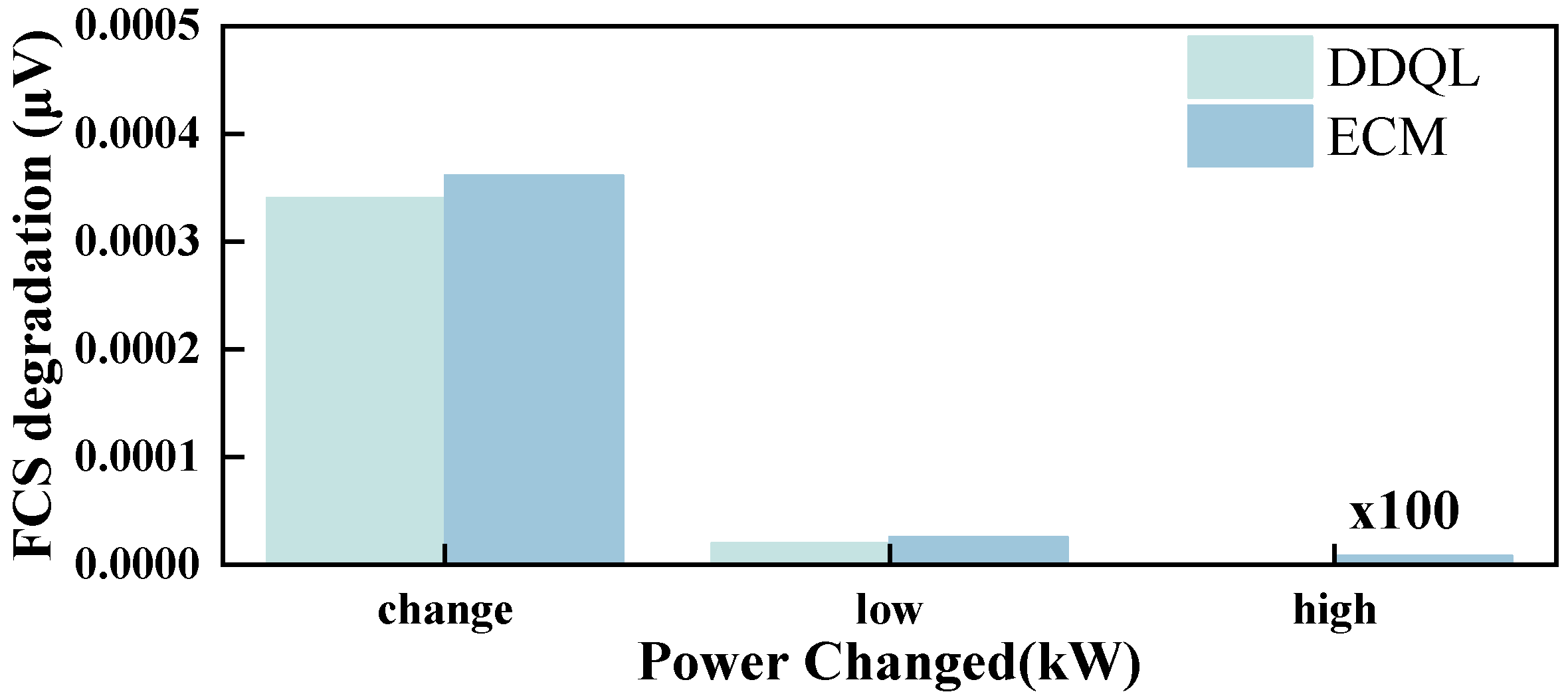

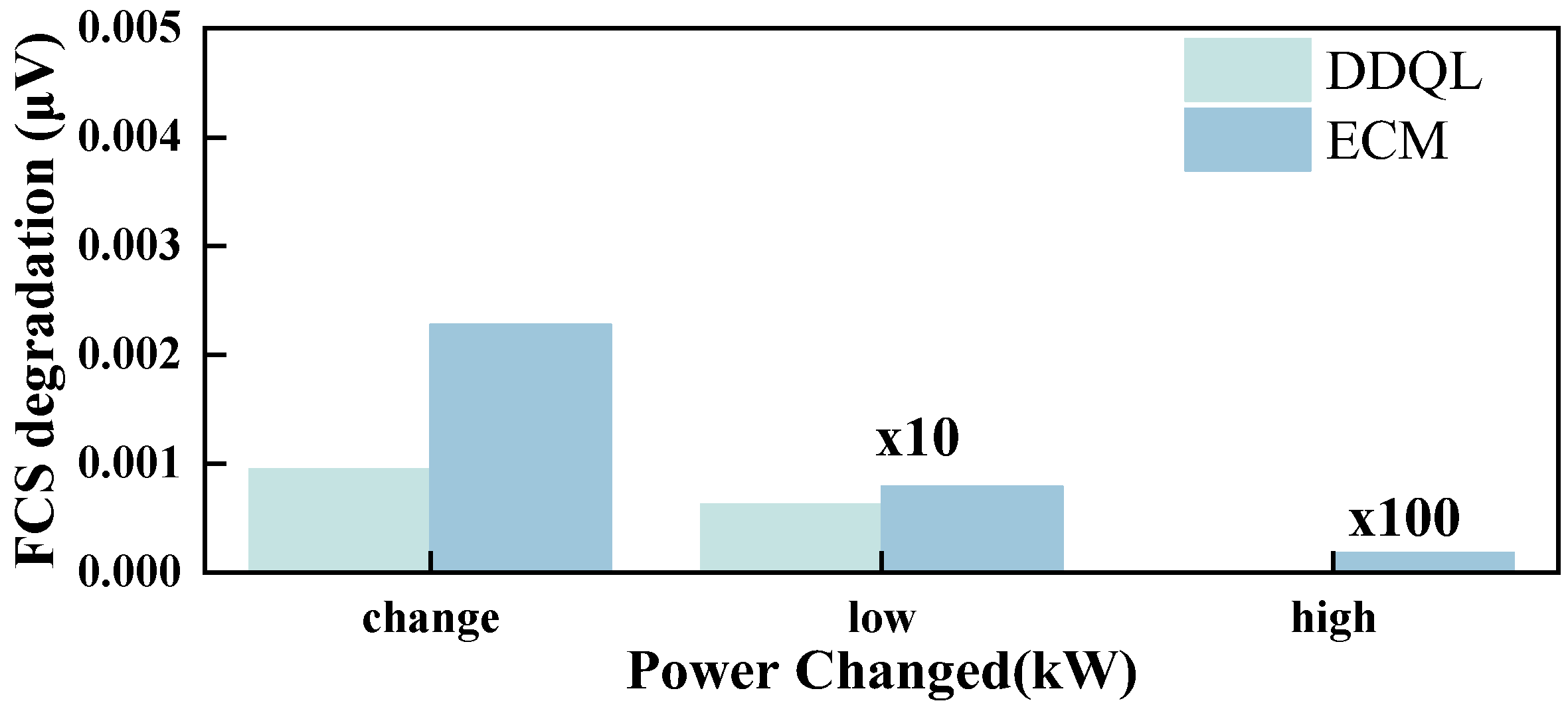

4.3.4. FCS Degradation

4.4. The Feasibility of Both Strategies in Different Environments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Component | Parameter | Value |
|---|---|---|
| FCEV | Mass | 1070 kg |
| | Wheel rolling radius | 0.466 m |
| | Coefficient of rolling resistance | 0.00863 |
| | Air drag coefficient | 0.335 |
| | Equivalent windward area | 2.06 m² |
| FCS | Rated power | 50 kW |
| Lithium-ion Battery | Capacity | 20.6 Ah |
| DC/DC | Fixed efficiency | 0.98 |
| DC/AC | Fixed efficiency | 0.95 |
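The vehicle parameters in the table above are the inputs a standard longitudinal dynamics model needs to turn a speed trace into a power demand. The sketch below is one plausible use of them, not the paper's model: it assumes a flat road, an air density of 1.2 kg/m³, g = 9.81 m/s², and that the DC/AC efficiency applies between the DC bus and the wheels. All function and class names are hypothetical.

```python
from dataclasses import dataclass

G = 9.81        # gravitational acceleration in m/s^2 (assumed)
RHO_AIR = 1.2   # air density in kg/m^3 (assumed)

@dataclass(frozen=True)
class Vehicle:
    # Values taken from the parameter table.
    mass_kg: float = 1070.0
    rolling_coeff: float = 0.00863
    drag_coeff: float = 0.335
    frontal_area_m2: float = 2.06
    dcac_efficiency: float = 0.95

def wheel_power_w(speed_ms, accel_ms2, veh=Vehicle()):
    """Power at the wheels on a flat road: (rolling + aerodynamic + inertial force) * speed."""
    f_roll = veh.mass_kg * G * veh.rolling_coeff
    f_aero = 0.5 * RHO_AIR * veh.drag_coeff * veh.frontal_area_m2 * speed_ms ** 2
    f_inertia = veh.mass_kg * accel_ms2
    return (f_roll + f_aero + f_inertia) * speed_ms

def bus_power_w(speed_ms, accel_ms2, veh=Vehicle()):
    """Power on the DC bus: divide by the efficiency when driving, multiply when braking."""
    p = wheel_power_w(speed_ms, accel_ms2, veh)
    return p / veh.dcac_efficiency if p >= 0 else p * veh.dcac_efficiency

p_cruise = wheel_power_w(20.0, 0.0)   # steady 72 km/h
p_bus = bus_power_w(20.0, 0.0)
print(f"wheel: {p_cruise:.0f} W, DC bus: {p_bus:.0f} W")
```

The bus power is the quantity an energy management strategy has to split between the fuel cell system and the battery at each time step.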

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| E₀ | 1.229 V | kₚ | 1.47 |
| ξ₁ | −0.995 | k₁ | 0.00126 %/h |
| ξ₂ | 2.1228 × 10⁻³ | k₂ | 0.0000593 %/h |
| ξ₃ | 2.1264 × 10⁻⁵ | k₃ | 0.00147 %/h |
| ξ₄ | −1.1337 × 10⁻⁴ | LHV of H₂ | 242 kJ/mol |
| B | 0.497 | | |
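The lower heating value in the table links fuel cell power to hydrogen mass flow through the energy balance: mass flow = P / (η · LHV) · M, where M is the molar mass of H₂. The sketch below applies that relation. The system efficiency η is an input assumed here, not a value from the table, and the function name is hypothetical.

```python
LHV_H2_J_PER_MOL = 242_000.0   # lower heating value of H2, from the table (242 kJ/mol)
M_H2_G_PER_MOL = 2.016         # molar mass of H2 in g/mol

def hydrogen_flow_g_per_s(p_fcs_w, efficiency):
    """Hydrogen mass flow needed to deliver p_fcs_w at the given system efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    mol_per_s = p_fcs_w / (efficiency * LHV_H2_J_PER_MOL)
    return mol_per_s * M_H2_G_PER_MOL

# Example: 20 kW net output at an assumed 50 % system efficiency.
flow = hydrogen_flow_g_per_s(20_000.0, 0.5)
print(f"{flow:.3f} g/s")
```

Integrating this flow over a drive cycle and dividing by the distance driven gives a consumption figure in g/100 km.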

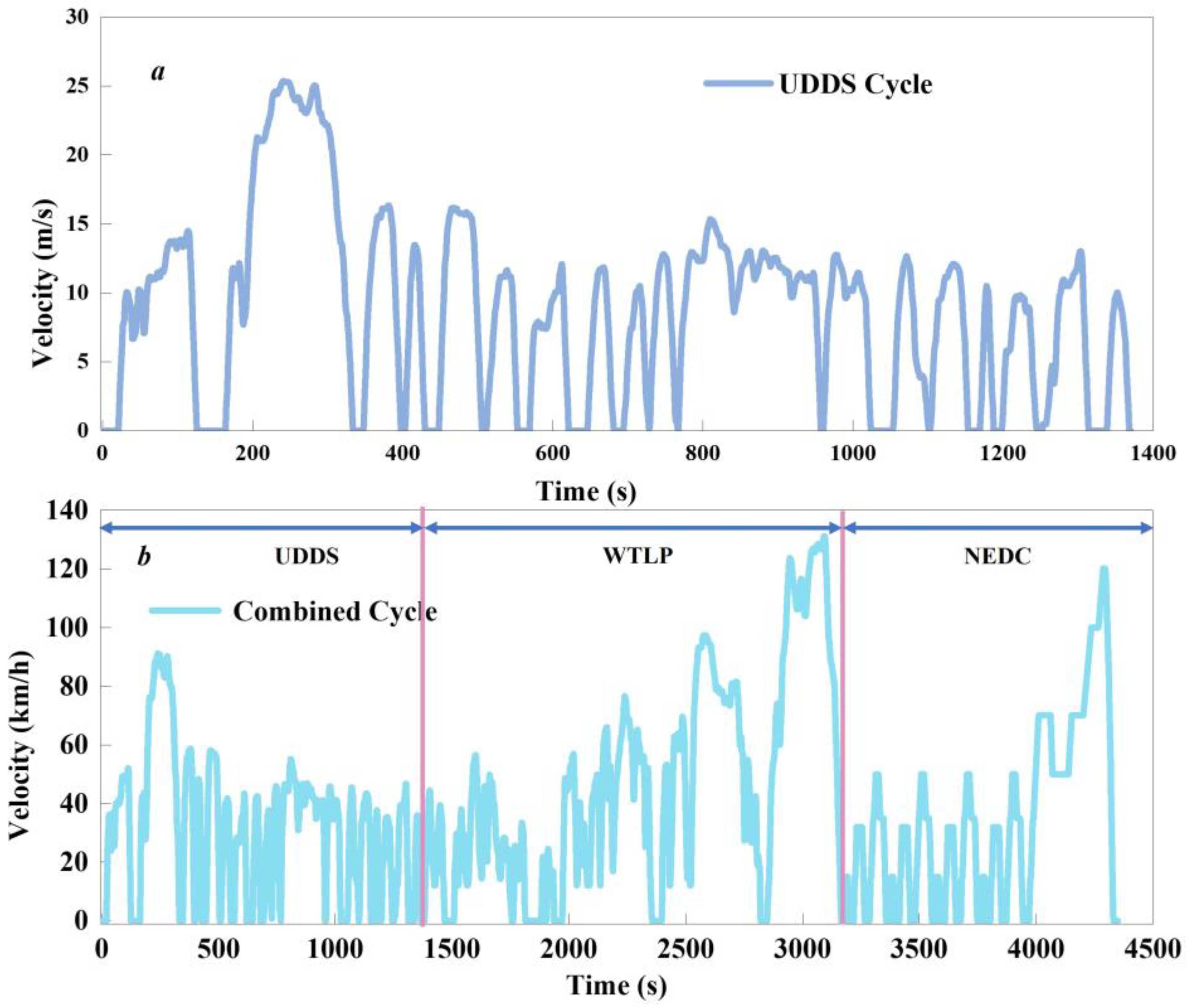

| Cycle | Max Speed (km/h) | Average Speed (km/h) | Duration (s) |
|---|---|---|---|
| UDDS | 91.25 | 31.5 | 1370 |
| WLTP | 131.3 | 46.5 | 1800 |
| NEDC | 120 | 24.71 | 1180 |
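The average speed and duration in the table imply a distance for each cycle, which is what converts a total hydrogen mass into a g/100 km figure. The helper names below are hypothetical, and the distances are derived only from the tabulated values.

```python
# (average speed in km/h, duration in s), copied from the drive cycle table.
CYCLES = {"UDDS": (31.5, 1370), "WLTP": (46.5, 1800), "NEDC": (24.71, 1180)}

def distance_km(avg_speed_kmh, duration_s):
    """Distance covered at the given average speed over the given duration."""
    return avg_speed_kmh * duration_s / 3600.0

def grams_per_100km(h2_mass_g, dist_km):
    """Normalize a hydrogen mass consumed over dist_km to g/100 km."""
    return h2_mass_g / dist_km * 100.0

for name, (avg, dur) in CYCLES.items():
    print(f"{name}: {distance_km(avg, dur):.2f} km")
```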

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Learning rate | 0.001 | Mini-batch size | 64 |
| Neural network layers | 3 | α | 4.4 |
| Discount factor | 0.99 | β | 2000 |
| Experience pool capacity | 10,000 | λ | 5000 |
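For reproducibility, the training settings in the table can be collected in a single immutable configuration object. The sketch below is one way to do that. The field names are chosen here for illustration, and α, β, and λ are stored as opaque values because their roles are not defined in this part of the text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DDQLConfig:
    # Values copied from the hyperparameter table; field names are hypothetical.
    learning_rate: float = 0.001
    n_layers: int = 3
    discount_factor: float = 0.99
    replay_capacity: int = 10_000
    batch_size: int = 64
    alpha: float = 4.4      # role not stated in this excerpt
    beta: float = 2000.0    # role not stated in this excerpt
    lam: float = 5000.0     # role not stated in this excerpt

    def __post_init__(self):
        # Basic sanity checks on the settings.
        if not 0.0 < self.discount_factor < 1.0:
            raise ValueError("discount_factor must be in (0, 1)")
        if self.batch_size > self.replay_capacity:
            raise ValueError("batch_size cannot exceed replay_capacity")

    @property
    def effective_horizon(self):
        """Rule-of-thumb horizon 1 / (1 - gamma), in time steps."""
        return 1.0 / (1.0 - self.discount_factor)

cfg = DDQLConfig()
print(cfg.batch_size, round(cfg.effective_horizon))
```

With a discount factor of 0.99 the rule-of-thumb horizon is about 100 time steps, so rewards far beyond that contribute little to the learned Q-values.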

| Cycle | DDQL H₂ Consumption (g/100 km) | DDQL SOC | DDQL FCS Degradation (μV) | ECM H₂ Consumption (g/100 km) | ECM SOC | ECM FCS Degradation (μV) |
|---|---|---|---|---|---|---|
| UDDS | 449.1 | 0.605 | 0.00036 | 466.7 | 0.602 | 0.0003872 |
| Combined | 627.3 | 0.594 | 0.001031 | 555.5 | 0.624 | 0.002339 |
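The comparison in the table is easier to read as relative differences between the two strategies. The short script below computes them from the tabulated values only, with ECM as the reference. It assumes the Combined DDQL hydrogen entry is 627.3 g/100 km, like the other values in that column.

```python
# (H2 consumption in g/100 km, FCS degradation in uV), copied from the results table.
RESULTS = {
    "UDDS": {"DDQL": (449.1, 0.00036), "ECM": (466.7, 0.0003872)},
    "Combined": {"DDQL": (627.3, 0.001031), "ECM": (555.5, 0.002339)},
}

def relative_change_pct(new, ref):
    """Signed change of `new` relative to `ref`, in percent (negative means lower)."""
    return (new - ref) / ref * 100.0

for cycle, row in RESULTS.items():
    h2 = relative_change_pct(row["DDQL"][0], row["ECM"][0])
    deg = relative_change_pct(row["DDQL"][1], row["ECM"][1])
    print(f"{cycle}: H2 {h2:+.1f} %, FCS degradation {deg:+.1f} %")
```

A negative percentage means the DDQL value is lower than the ECM value for that metric.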


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shuai, Q.; Wang, Y.; Jiang, Z.; Hua, Q. Reinforcement Learning-Based Energy Management for Fuel Cell Electrical Vehicles Considering Fuel Cell Degradation. Energies 2024, 17, 1586. https://doi.org/10.3390/en17071586
