Lithologic Identification of Complex Reservoir Based on PSO-LSTM-FCN Algorithm

Abstract

1. Introduction

2. Proposed Method

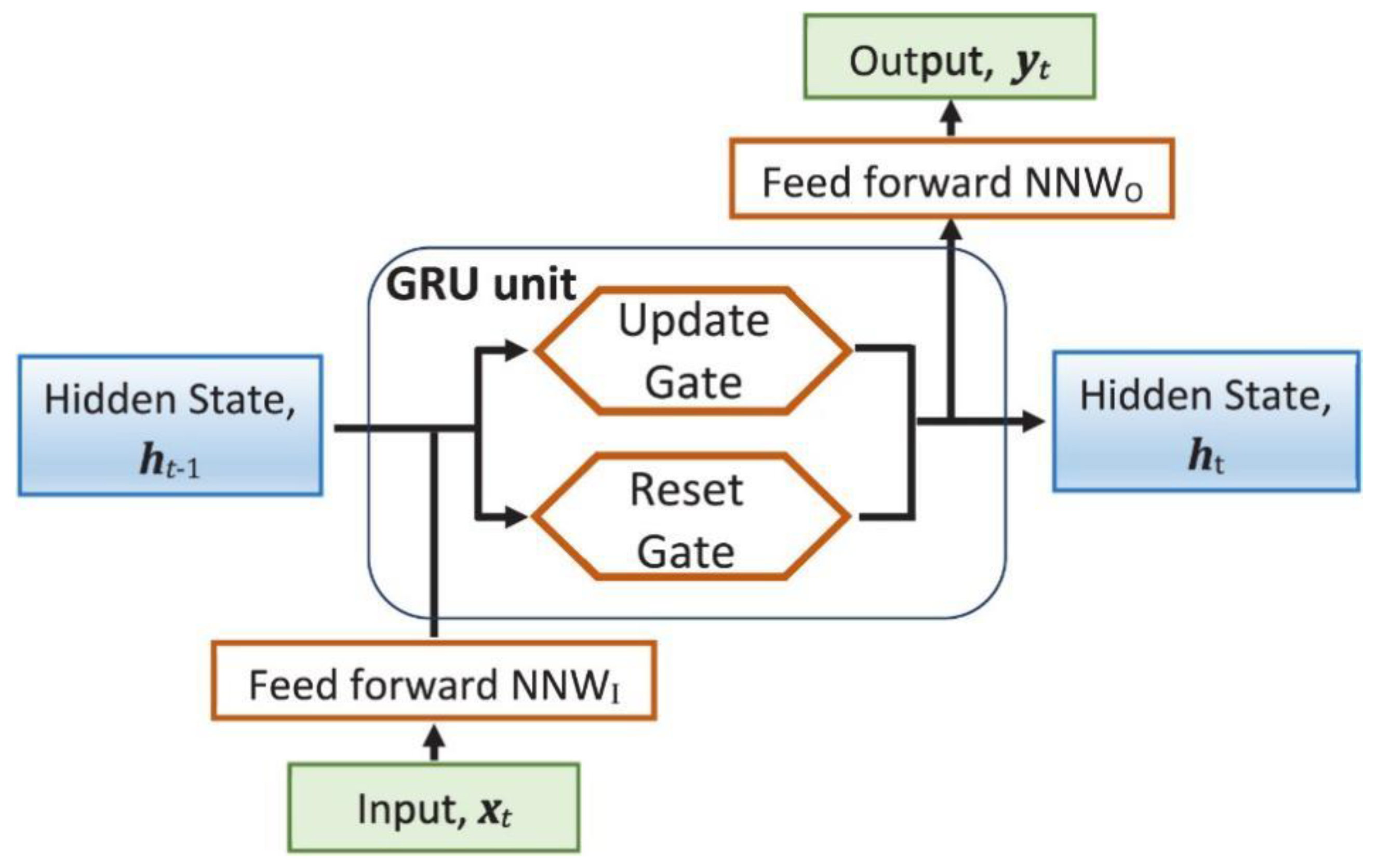

2.1. Recurrent Neural Networks

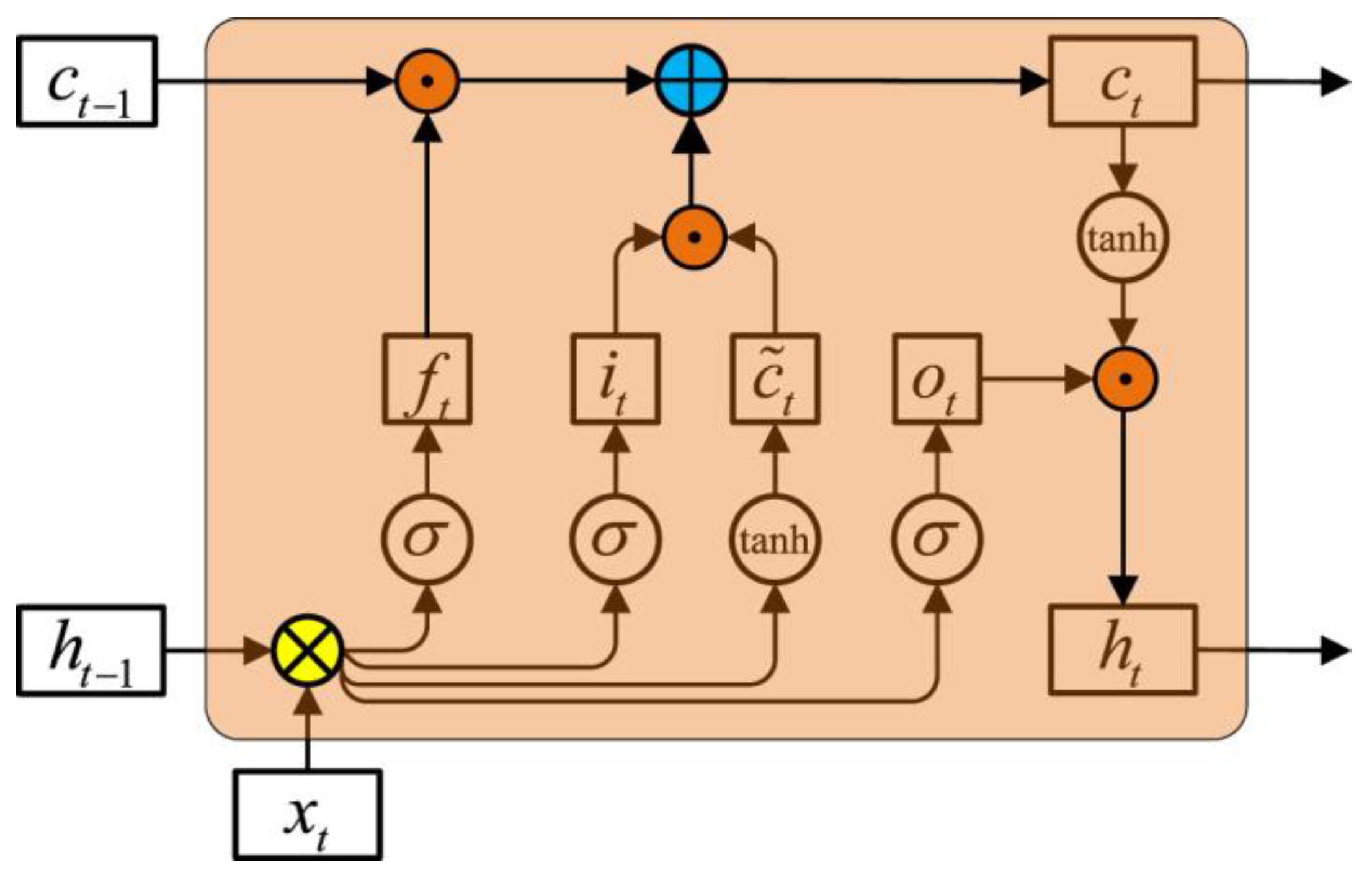

2.2. Long Short-Term Memory

2.3. Evolutionary Optimization

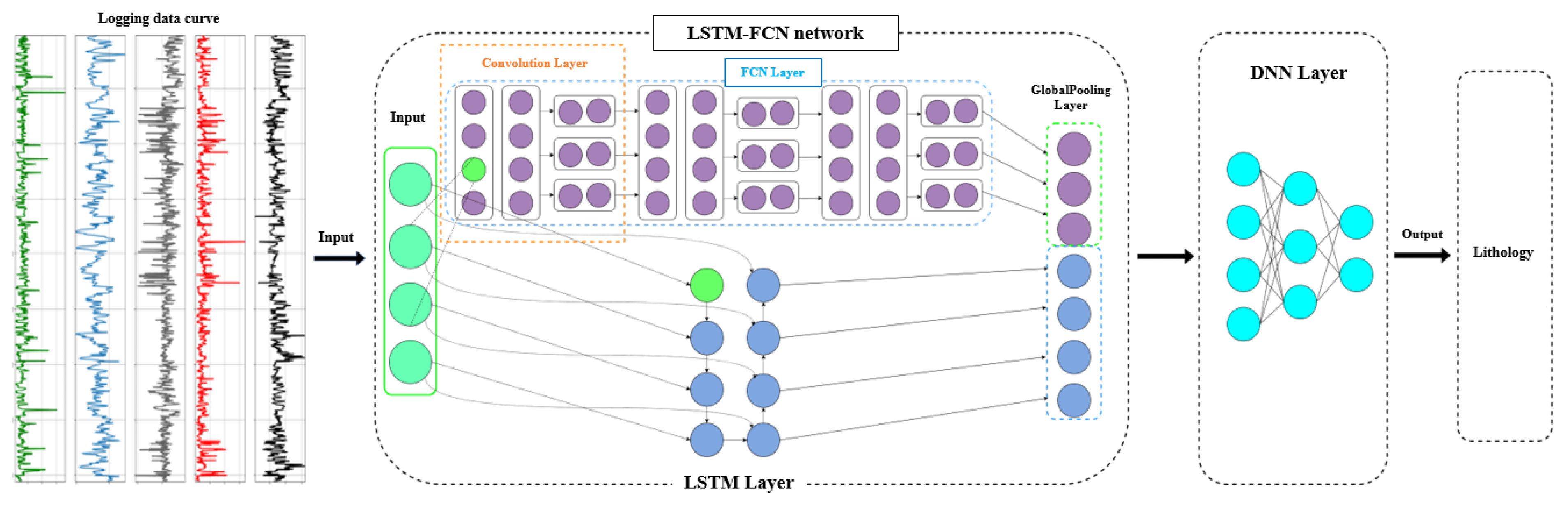

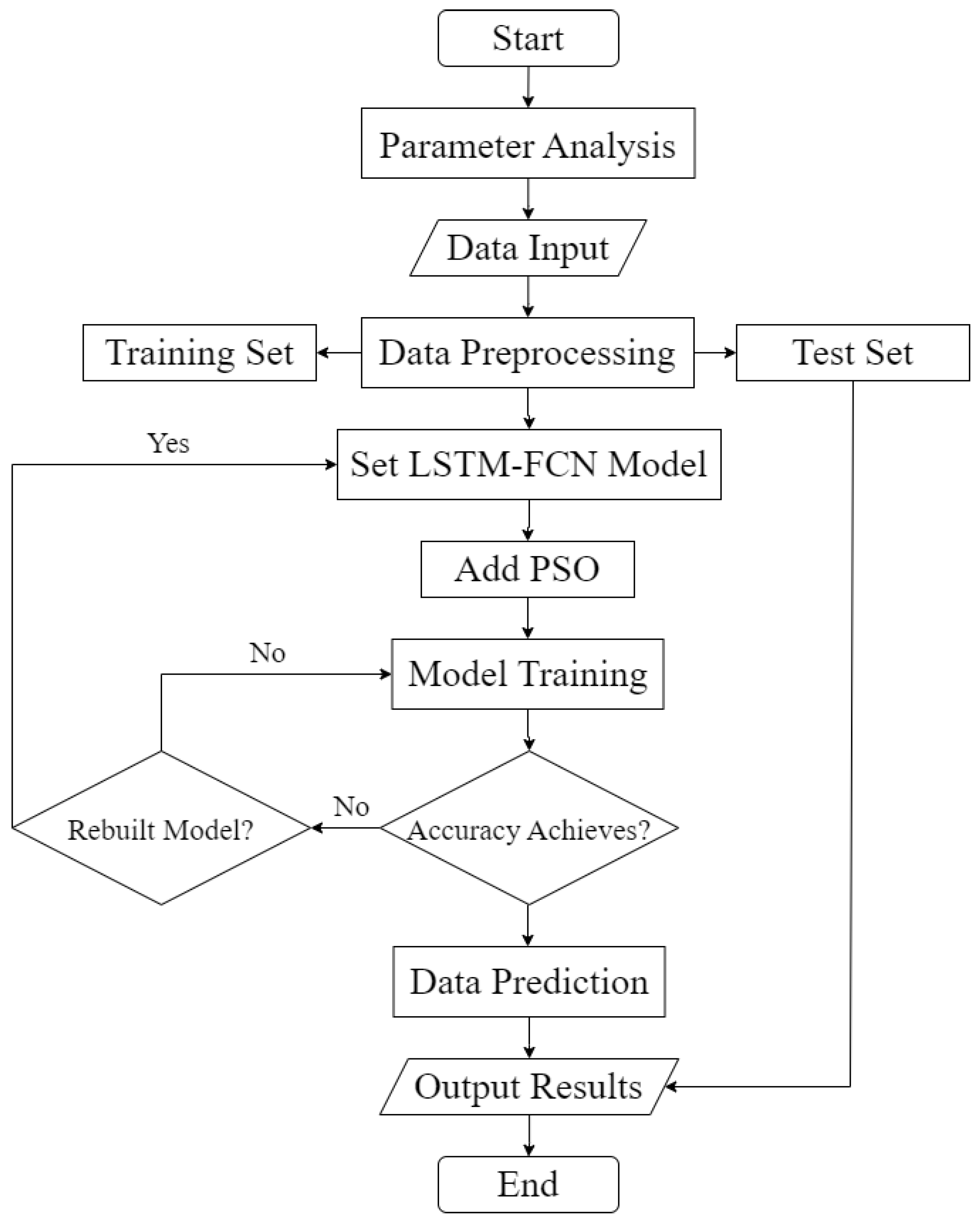

2.4. The Proposed Model: LSTM-FCN

- (1) prepare, analyze, and preprocess the data;

- (2) set up the LSTM-FCN model and input the processed parameter data;

- (3) optimize the LSTM-FCN model using the PSO algorithm and output the prediction results.
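Step (3) can be illustrated with a minimal global-best particle swarm optimizer. This is only a sketch under stated assumptions: the inertia and acceleration coefficients, the swarm size, and the toy quadratic objective are illustrative choices, not values taken from the paper. In the actual workflow the objective would presumably be the validation error of an LSTM-FCN trained with the hyperparameters encoded in each particle.

```python
import random


def pso_minimize(objective, bounds, n_particles=20, n_iters=60,
                 inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimise `objective` over a box with a basic global-best PSO.

    All default coefficients are illustrative assumptions.
    """
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial positions inside the box, zero initial velocities.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Velocity update: inertia + cognitive pull + social pull.
                vel[i][d] = (inertia * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Position update, clamped to the search box.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def toy_objective(x):
    """Hypothetical stand-in for the model's validation error."""
    return sum((xi - 3.0) ** 2 for xi in x)


best_x, best_val = pso_minimize(toy_objective, [(-10.0, 10.0)] * 2)
print(best_x, best_val)
```

Replacing `toy_objective` with a function that trains and scores the network turns the same loop into a hyperparameter search, at the cost of one training run per particle per iteration.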

2.5. Model Evaluation Metrics

3. Results

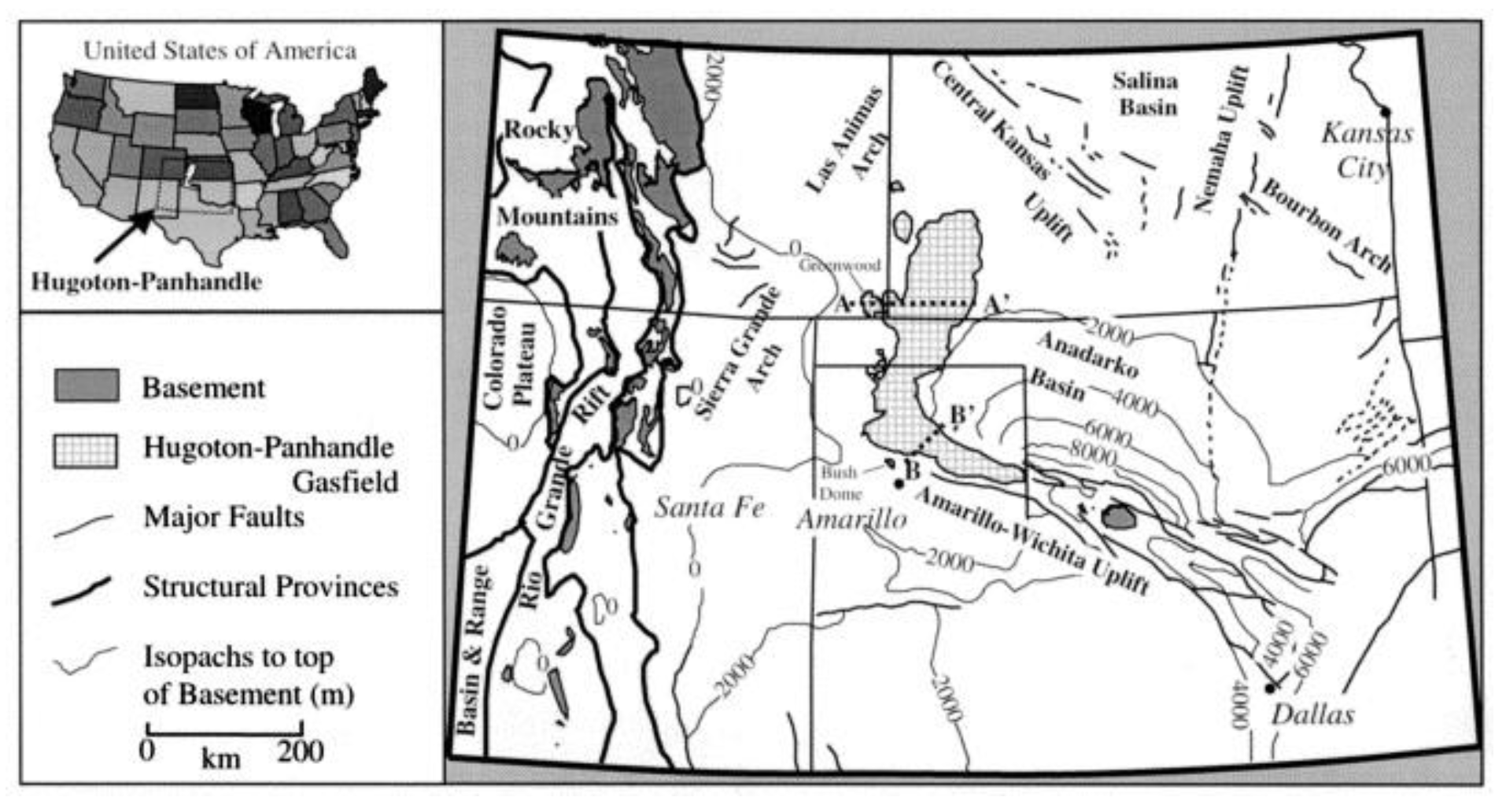

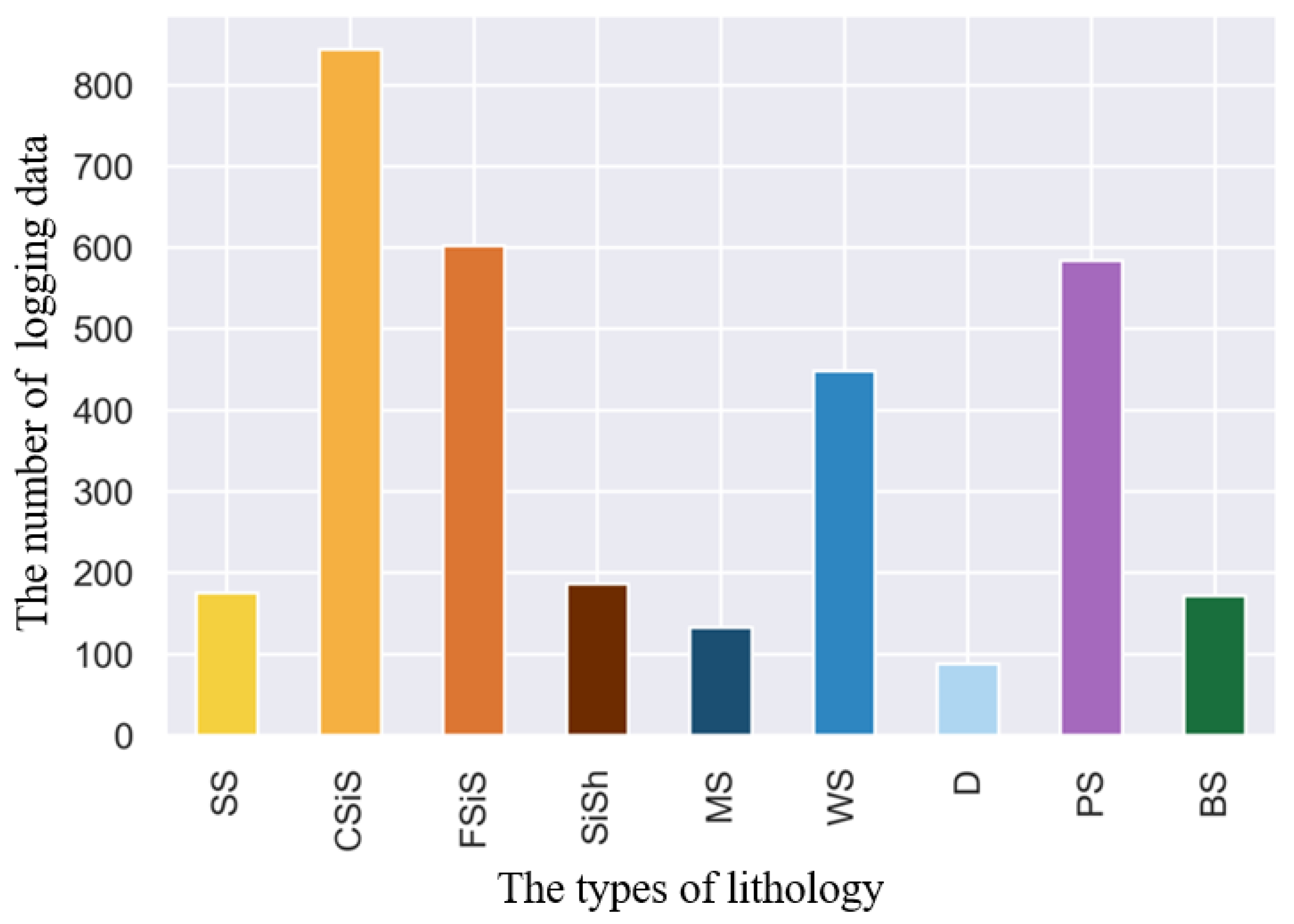

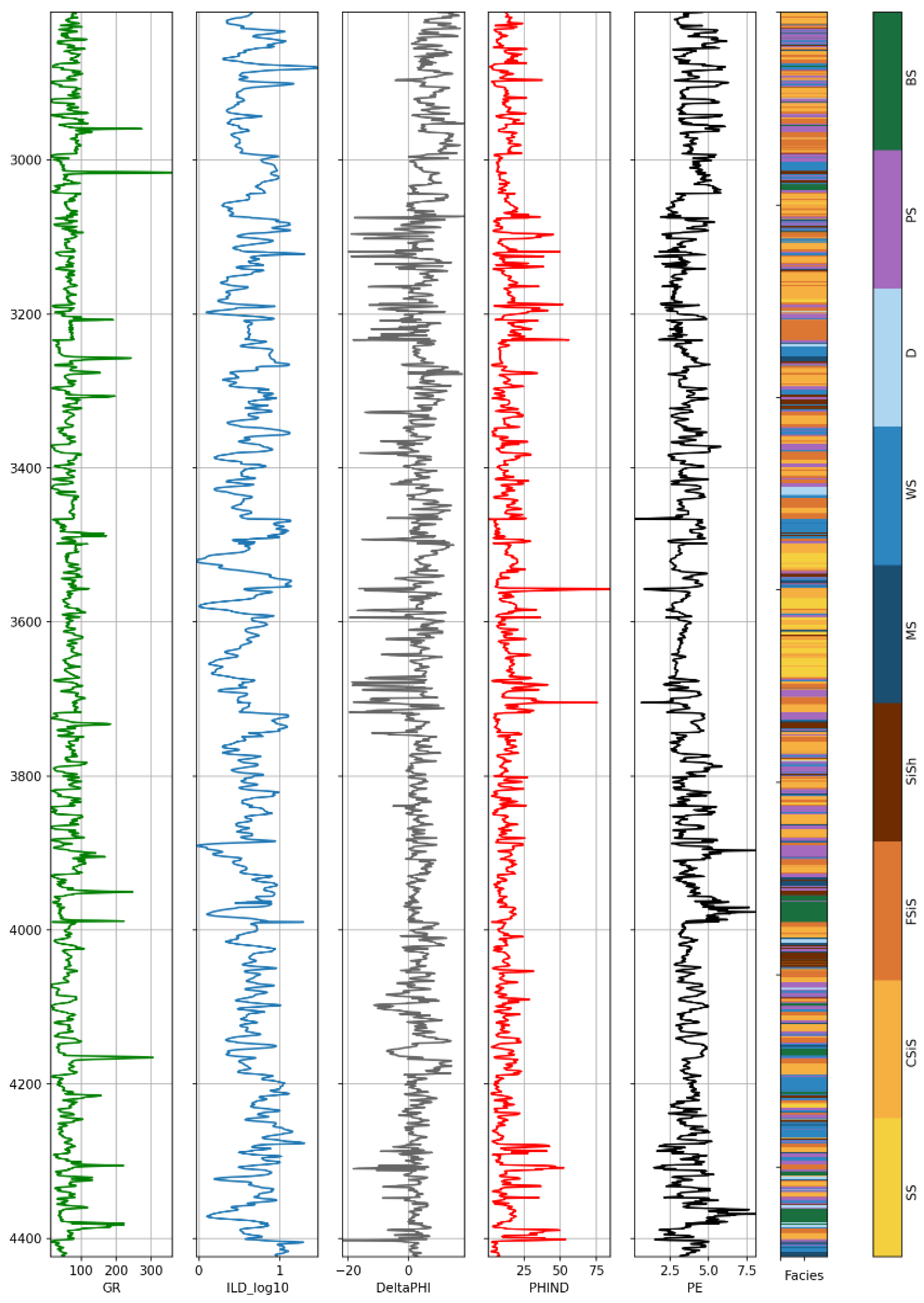

3.1. Data Preparation

3.2. Correlation Analysis

3.3. Data Normalization

3.4. Classifiers Comparison

- (1) Logistic regression (LR)

- (2) Gaussian process classifier (GPC)

- (3) Support vector machines (SVM)

- (4) Multi-layer perceptron classifier (MLP)

- (5) Convolutional neural network classifier (CNN)

- (6) Long short-term memory (LSTM)

- (7) LSTM-CNN

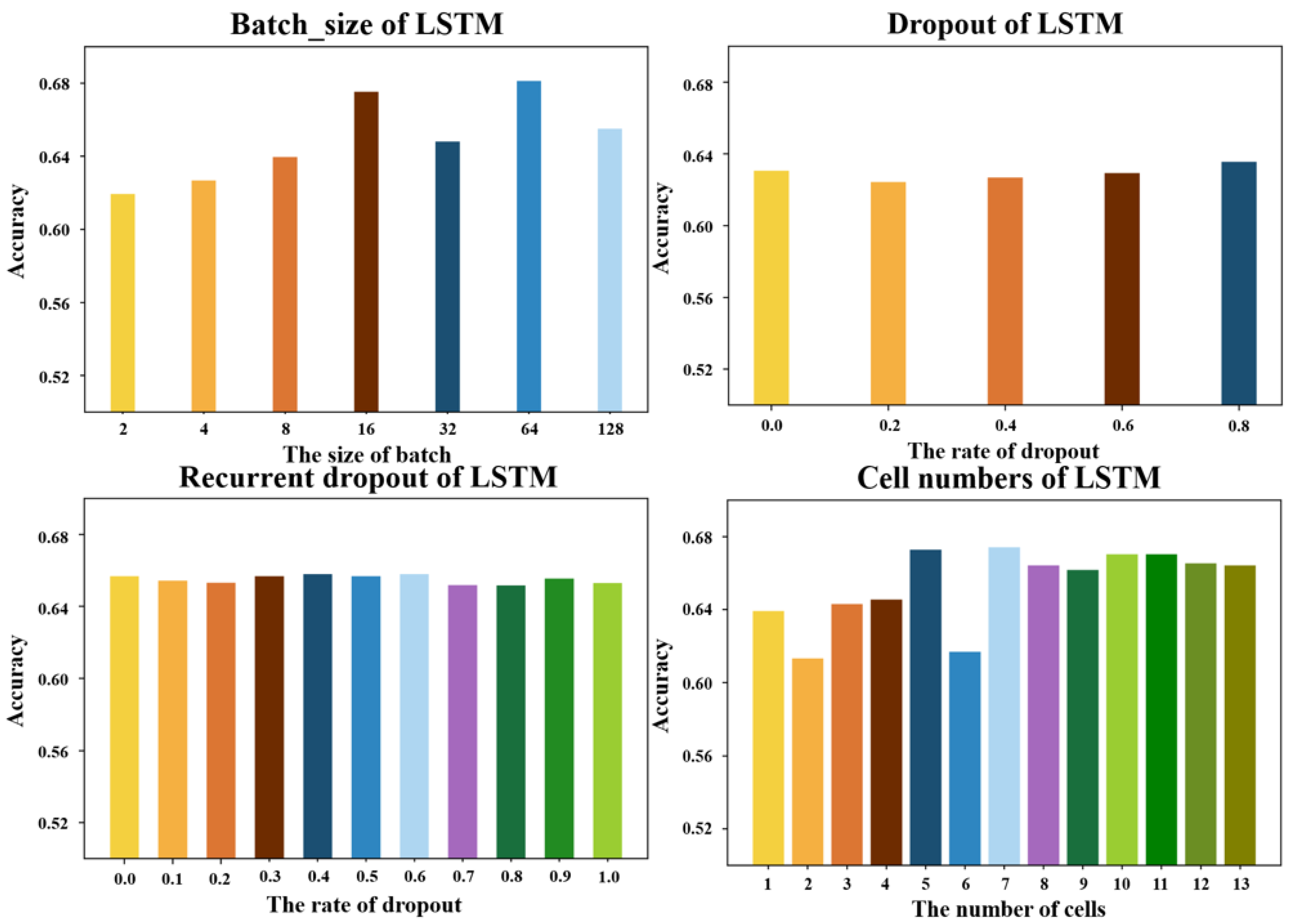

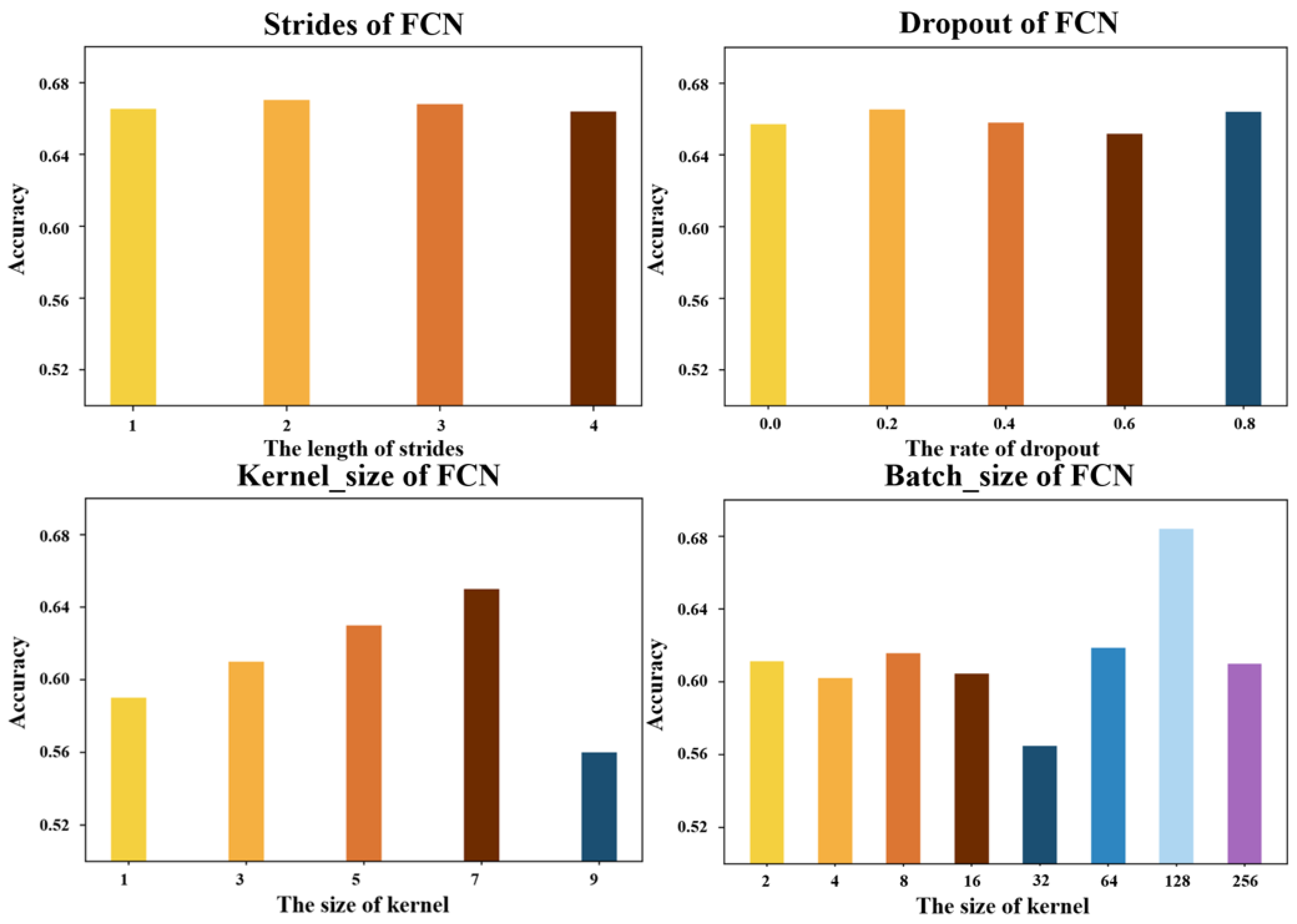

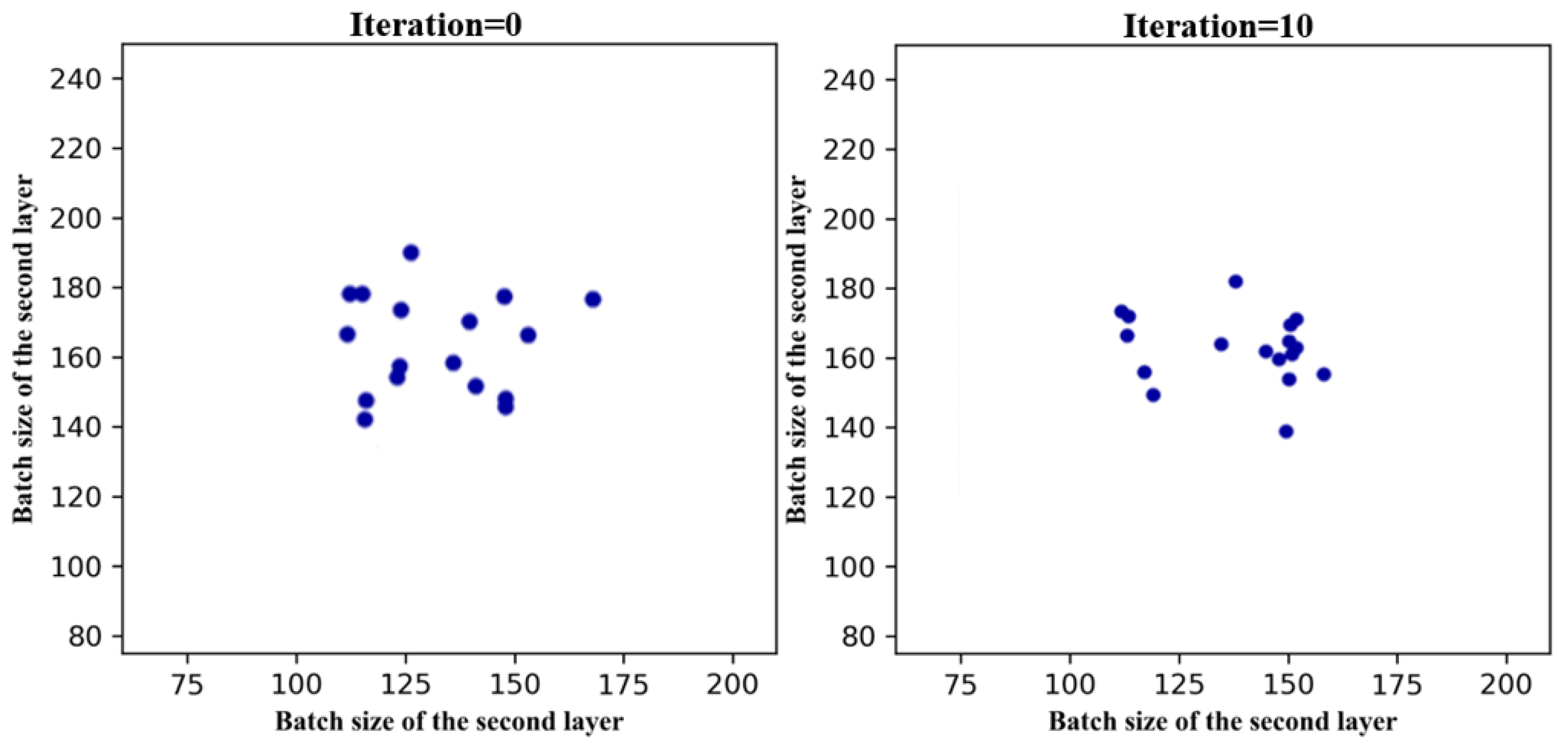

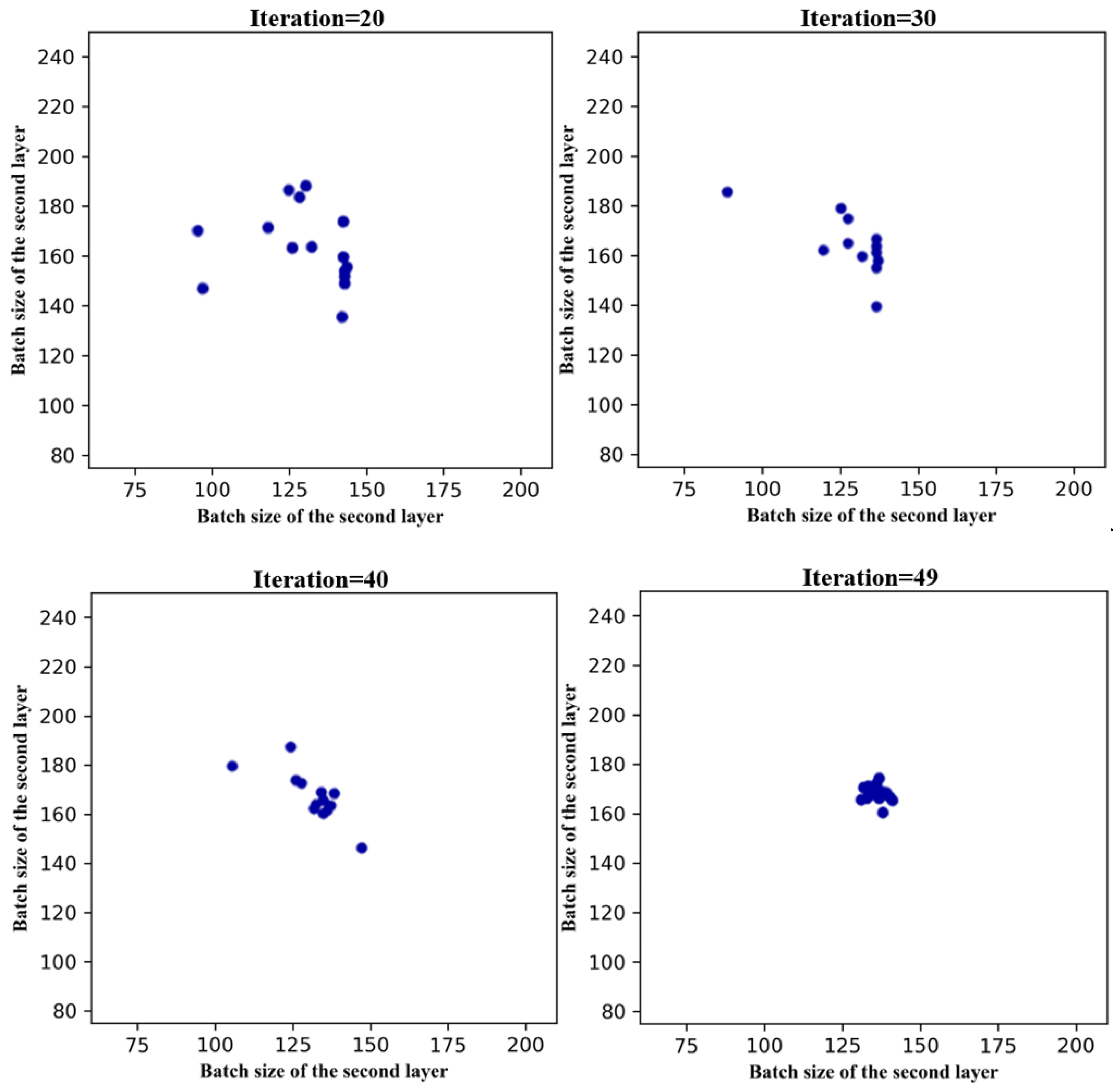

3.5. The PSO-LSTM-FCN Model

4. Discussion

5. Conclusions

- The paper reviewed the current state of lithology identification and identified the shortcomings of existing techniques. On this basis, it proposed the PSO-LSTM-FCN model for lithology identification, which is suited to nonlinear, discrete data.

- The experiment compared the LSTM-FCN model with seven classifiers. The F1-score and the Jaccard index of the proposed model reached 0.575 and 0.725, higher than those of all seven baseline classifiers. The LSTM-FCN model was therefore selected for optimization and used to identify lithology.

- The parameters to be optimized were selected through sensitivity analysis. For the LSTM layer, the analysis showed that batch_size had the greater influence on accuracy; for the FCN layers, kernel_size and batch_size were selected. After PSO optimization, the accuracy of the model reached 85%, a marked improvement in the accuracy of machine learning models for lithology identification.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Layer (Type) | Output Shape | Parameters | Connected to |
|---|---|---|---|
| input_1 (InputLayer) | (None, 1, 5) | 0 | |
| permute (Permute) | (None, 5, 1) | 0 | input_1[0][0] |
| conv1D (Conv1D) | (None, 5, 128) | 1152 | permute[0][0] |
| batch_normalization (BatchNor) | (None, 5, 128) | 512 | conv1D[0][0] |
| activation (Activation) | (None, 5, 128) | 0 | batch_normalization[0][0] |
| conv1D_1 (Conv1D) | (None, 5, 256) | 164,096 | activation[0][0] |
| batch_normalization_1 (BatchNor) | (None, 5, 256) | 1024 | conv1D_1[0][0] |
| activation_1 (Activation) | (None, 5, 256) | 0 | batch_normalization_1[0][0] |
| conv1D_2 (Conv1D) | (None, 5, 128) | 98,432 | activation_1[0][0] |
| batch_normalization_2 (BatchNor) | (None, 5, 128) | 512 | conv1D_2[0][0] |
| lstm (LSTM) | (None, 8) | 448 | input_1[0][0] |
| activation_2 (Activation) | (None, 5, 128) | 0 | batch_normalization_2[0][0] |
| dropout (Dropout) | (None, 8) | 0 | lstm[0][0] |
| global_average_pooling1d (GlobalPooling) | (None, 128) | 0 | activation_2[0][0] |
| concatenate (Concatenate) | (None, 136) | 0 | dropout[0][0], global_average_pooling1d[0][0] |
| dense (Dense) | (None, 9) | 1233 | concatenate[0][0] |
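The parameter counts in the layer summary above can be cross-checked with the standard formulas for each layer type. The sketch below assumes convolution kernel sizes of 8, 5, and 3; these are not stated in the table but are the values that reproduce the listed counts. The LSTM count of 448 corresponds to 8 units reading 5 input features, and batch normalization is counted at four parameters per channel, as Keras-style summaries do.

```python
def conv1d_params(kernel_size, in_channels, filters):
    """Weights plus one bias per filter."""
    return kernel_size * in_channels * filters + filters


def batchnorm_params(channels):
    """Gamma, beta, moving mean, and moving variance per channel."""
    return 4 * channels


def lstm_params(input_dim, units):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    return 4 * (units * (input_dim + units) + units)


def dense_params(in_features, out_features):
    """Weights plus one bias per output unit."""
    return in_features * out_features + out_features


# Kernel sizes 8, 5, 3 are assumptions inferred from the listed counts.
counts = {
    "conv1D": conv1d_params(8, 1, 128),
    "batch_normalization": batchnorm_params(128),
    "conv1D_1": conv1d_params(5, 128, 256),
    "batch_normalization_1": batchnorm_params(256),
    "conv1D_2": conv1d_params(3, 256, 128),
    "batch_normalization_2": batchnorm_params(128),
    "lstm": lstm_params(5, 8),
    "dense": dense_params(128 + 8, 9),
}

for name, n in counts.items():
    print(f"{name:24s}{n:>9,d}")
print(f"{'total':24s}{sum(counts.values()):>9,d}")
```

The dense layer takes 136 inputs because the 128 pooled FCN features are concatenated with the 8 LSTM outputs before the nine-class output.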

| Statistic | Facies | GR | ILD_log10 | DeltaPHI | PHIND | PE | Depth |
|---|---|---|---|---|---|---|---|
| count | 3232 | 3232 | 3232 | 3232 | 3232 | 3232 | 3232 |
| mean | 4.52 | 66.14 | 0.64 | 3.56 | 13.48 | 3.73 | 3615.75 |
| std | 2.55 | 30.85 | 0.24 | 5.23 | 7.70 | 0.90 | 466.57 |
| min | 1.00 | 13.25 | −0.03 | −21.83 | 0.55 | 0.20 | 2808.00 |
| 25% | 2.00 | 46.92 | 0.49 | 1.16 | 8.35 | 3.10 | 3211.88 |
| 50% | 3.00 | 65.72 | 0.62 | 3.50 | 12.15 | 3.55 | 3615.75 |
| 75% | 7.00 | 79.63 | 0.81 | 6.43 | 16.45 | 4.30 | 4019.63 |
| max | 9.00 | 361.15 | 1.48 | 18.60 | 84.40 | 8.09 | 4423.50 |
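The spread of scales in the statistics above (GR in the tens to hundreds, ILD_log10 below 2) is the usual motivation for normalizing the logs before training. The sketch below applies z-score standardization using the tabulated means and standard deviations. The choice of z-score scaling is an assumption for illustration, since the normalization formula itself is not given here, and `STATS` and `standardize` are hypothetical names.

```python
# (mean, std) per log curve, copied from the statistics table.
STATS = {
    "GR": (66.14, 30.85),
    "ILD_log10": (0.64, 0.24),
    "DeltaPHI": (3.56, 5.23),
    "PHIND": (13.48, 7.70),
    "PE": (3.73, 0.90),
}


def standardize(sample):
    """Return z-scores for one depth sample given as {curve: value}."""
    return {name: (value - STATS[name][0]) / STATS[name][1]
            for name, value in sample.items()}


# The column maxima from the table, treated as one hypothetical sample.
extreme = standardize({"GR": 361.15, "ILD_log10": 1.48,
                       "DeltaPHI": 18.60, "PHIND": 84.40, "PE": 8.09})
for name, z in extreme.items():
    print(f"{name:10s} z = {z:6.2f}")
```

Under this scaling the GR and PHIND maxima sit more than nine standard deviations above their means, which suggests that outlier handling deserves attention alongside normalization.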

| Parameter | Physical Significance | Range |
|---|---|---|
| The batch size of the first layer of FCN | The number of samples taken for one training in the first convolutional layer of FCN | 64–256 |
| The batch size of the second layer of FCN | The number of samples taken for one training in the second convolutional layer of FCN | 64–256 |
| The batch size of the third layer of FCN | The number of samples taken for one training in the third convolutional layer of FCN | 64–256 |
| The kernel size of the first layer of FCN | The number of steps in the first convolutional layer of FCN | 1–10 |
| The kernel size of the second layer of FCN | The number of steps in the second convolutional layer of FCN | 1–10 |
| The kernel size of the third layer of FCN | The number of steps in the third convolutional layer of FCN | 1–10 |
| The batch size of LSTM | The number of samples taken for one training of LSTM | 32–128 |
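The ranges above define a seven-dimensional search box. Because PSO moves particles through continuous space while these hyperparameters are integers, each particle position has to be rounded and clipped before a model can be built from it. The following sketch shows one way to do that; the names `SEARCH_SPACE` and `decode` and the round-then-clip rule are assumptions made for illustration.

```python
# (name, lower bound, upper bound), following the table rows in order.
SEARCH_SPACE = [
    ("fcn_batch_size_1", 64, 256),
    ("fcn_batch_size_2", 64, 256),
    ("fcn_batch_size_3", 64, 256),
    ("fcn_kernel_size_1", 1, 10),
    ("fcn_kernel_size_2", 1, 10),
    ("fcn_kernel_size_3", 1, 10),
    ("lstm_batch_size", 32, 128),
]


def decode(position):
    """Map a continuous particle position to in-range integer settings."""
    if len(position) != len(SEARCH_SPACE):
        raise ValueError("position must have one value per parameter")
    params = {}
    for value, (name, lo, hi) in zip(position, SEARCH_SPACE):
        # Round to the nearest integer, then clip into [lo, hi].
        params[name] = int(min(max(round(value), lo), hi))
    return params


example = decode([300.2, 64.0, 100.4, 3.6, -2.0, 10.0, 31.9])
print(example)
```

Values that drift outside the box, such as 300.2 or -2.0 in the example, are pulled back to the nearest bound rather than rejected, so every particle always maps to a valid configuration.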

| Pred True | SS | CSiS | FSiS | SiSh | MS | WS | D | PS | BS | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| SS | 22 | 3 | 1 | | | | | | | 26 |
| CSiS | 8 | 107 | 48 | 1 | 1 | 2 | | | | 167 |
| FSiS | 8 | 109 | 1 | 3 | 1 | | | | | 122 |
| SiSh | 4 | 3 | 20 | 7 | 1 | | | | | 35 |
| MS | 1 | 3 | 1 | 5 | 11 | 3 | | | | 24 |
| WS | 1 | 3 | 67 | 1 | 16 | 1 | | | | 89 |
| D | 1 | 14 | 2 | | | | | | | 17 |
| PS | 1 | 3 | 3 | 1 | 1 | 9 | 3 | 111 | 7 | 139 |
| BS | 2 | 1 | 2 | 23 | | | | | | 28 |
| Precision | 0.81 | 0.94 | 0.76 | 0.87 | 0.72 | 0.77 | 0.80 | 0.91 | 0.84 | 0.85 |
| Recall | 0.95 | 0.74 | 0.99 | 0.67 | 0.61 | 0.85 | 0.82 | 0.90 | 0.92 | 0.84 |
| F1-score | 0.87 | 0.83 | 0.86 | 0.76 | 0.71 | 0.86 | 0.80 | 0.90 | 0.88 | 0.83 |
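Precision, recall, and F1-score rows like those above, as well as the Jaccard index used elsewhere in the paper, can all be derived from a confusion matrix. The sketch below shows the per-class computation on a small hypothetical three-class matrix; the matrix values are invented for illustration, and the convention that rows are true classes and columns are predicted classes is an assumption.

```python
def per_class_metrics(cm):
    """Per-class precision, recall, F1, and Jaccard index.

    Assumes cm[t][p] counts samples of true class t predicted as class p.
    """
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # missed members of class k
        fp = sum(cm[r][k] for r in range(n)) - tp   # wrongly assigned to k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        jaccard = tp / (tp + fp + fn) if tp + fp + fn else 0.0
        results.append({"precision": precision, "recall": recall,
                        "f1": f1, "jaccard": jaccard})
    return results


# Hypothetical three-class confusion matrix, not data from the paper.
cm = [
    [8, 2, 0],
    [1, 7, 2],
    [0, 1, 9],
]
metrics = per_class_metrics(cm)
accuracy = sum(cm[k][k] for k in range(len(cm))) / sum(map(sum, cm))
for k, m in enumerate(metrics):
    print(k, {name: round(v, 3) for name, v in m.items()})
print("accuracy", round(accuracy, 3))
```

For a single class the two summary scores are linked by J = F1 / (2 − F1), so the per-class Jaccard index never exceeds the per-class F1-score.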

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, Y.; Li, W.; Dong, Z.; Zhang, T.; Shi, Q.; Wang, L.; Wu, L.; Qian, S.; Wang, Z.; Liu, Z.; Lei, G. Lithologic Identification of Complex Reservoir Based on PSO-LSTM-FCN Algorithm. Energies 2023, 16, 2135. https://doi.org/10.3390/en16052135
