Detection of Outliers in Time Series Power Data Based on Prediction Errors

Abstract

1. Introduction

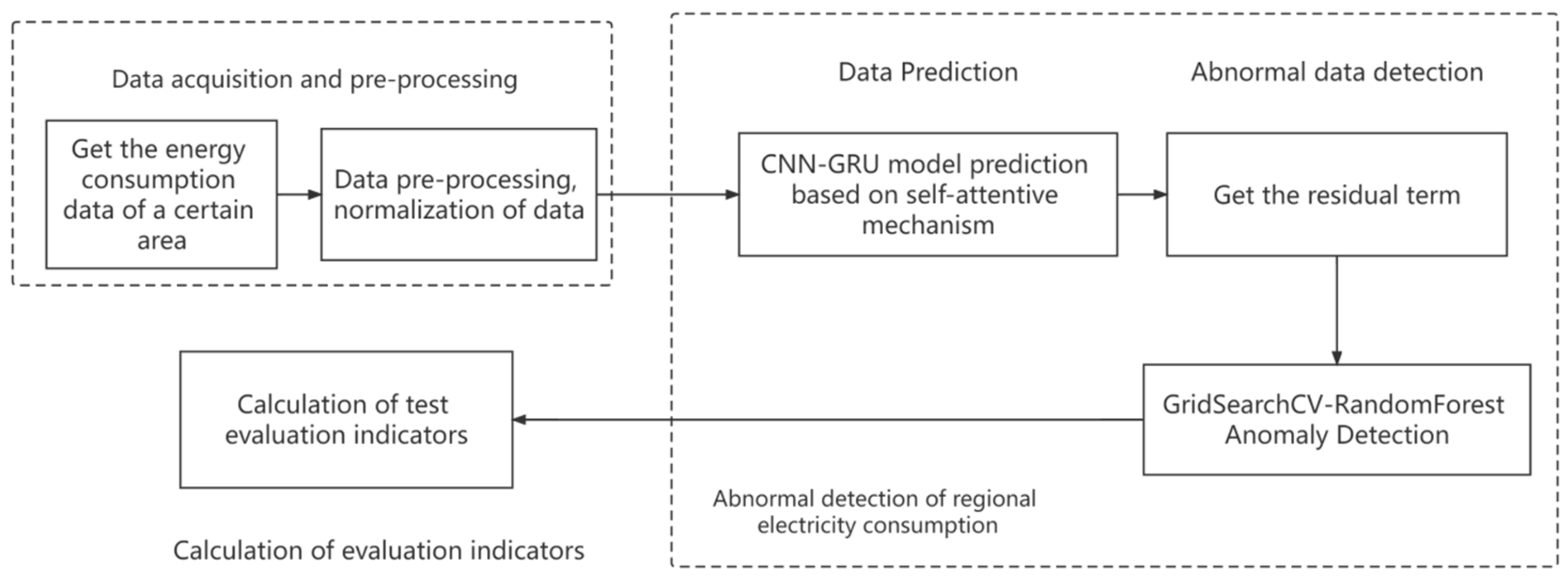

2. Anomaly Detection Method Based on Prediction Error

2.1. Methodology Overview
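
The method detects outliers from prediction errors: a forecasting model produces a predicted value for each time step, and the gap between the actual and predicted values is the signal the detection model works on. The sketch below shows one way to turn a forecast into per-point features. The feature set (signed error, absolute error, relative error) and the function name `error_features` are assumptions made for illustration. They are not the authors' specification.

```python
from typing import List, Tuple

def error_features(actual: List[float], predicted: List[float],
                   eps: float = 1e-9) -> List[Tuple[float, float, float]]:
    """Build per-point features from forecast errors (hypothetical feature set).

    For each time step t, returns (e_t, |e_t|, |e_t| / max(|y_t|, eps)),
    where e_t = y_t - y_hat_t is the prediction error.
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    features = []
    for y, y_hat in zip(actual, predicted):
        err = y - y_hat                       # signed prediction error
        rel = abs(err) / max(abs(y), eps)     # relative error, guarded against y == 0
        features.append((err, abs(err), rel))
    return features

# A spike at t = 2 that the forecaster did not anticipate yields a large error.
feats = error_features([100.0, 102.0, 180.0, 101.0], [99.0, 101.5, 103.0, 100.5])
```

Feature vectors like these, paired with labels that mark known outliers, are the kind of input a supervised detector such as the random forest of Section 2.3 could be trained on.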

2.2. Predictive Models

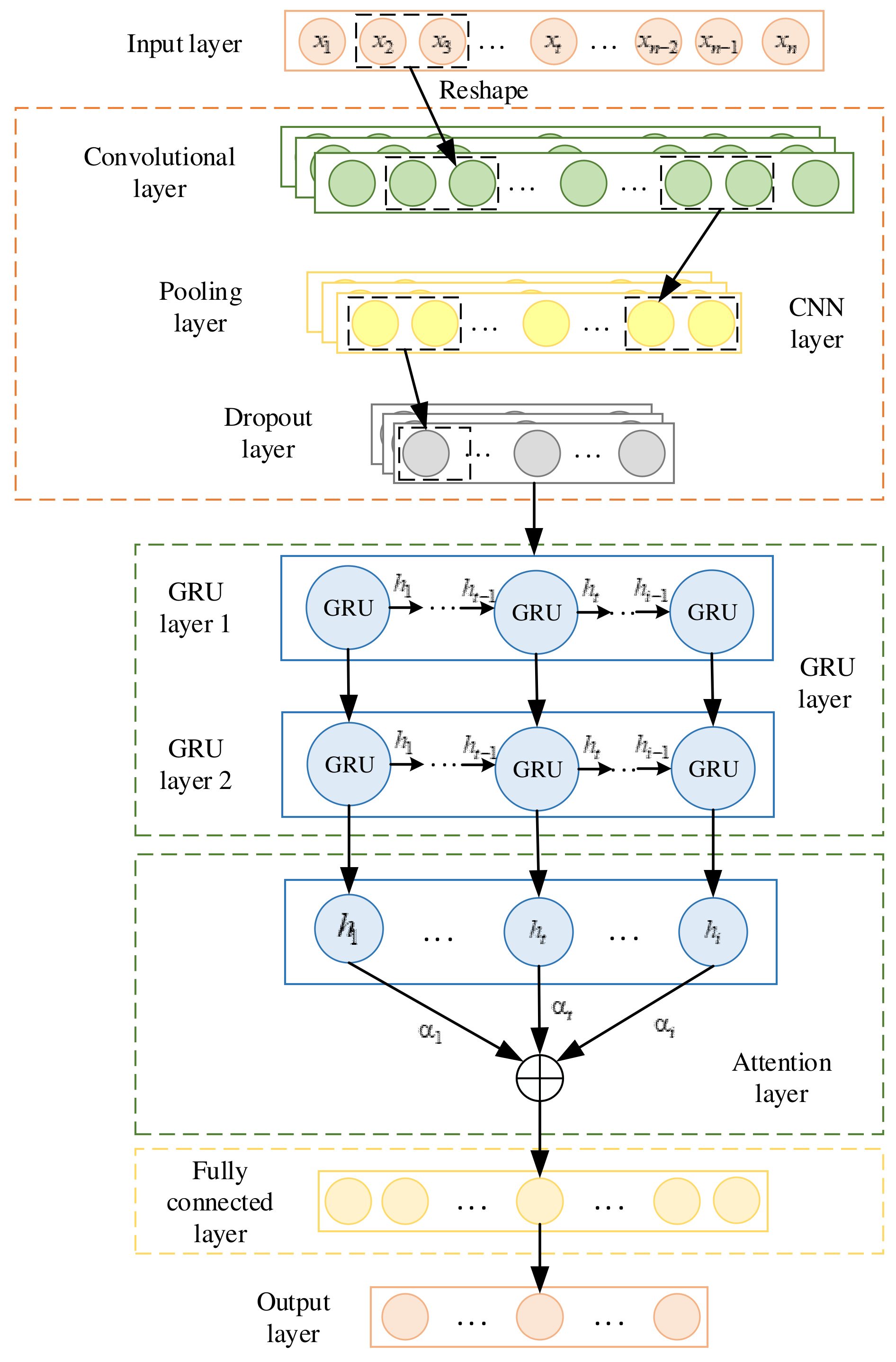

2.2.1. CNN-GRU Prediction Model Based on the Attention Mechanism
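
The attention formula is not reproduced here, so the sketch below assumes a common dot-product form. Each GRU hidden state h_i is scored against a query vector q, the scores are normalized with softmax, and the context vector is the weighted sum of the hidden states. The names `softmax` and `attention_pool` and the fixed query vector are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]

def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax (subtracts the max score before exponentiating)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(hidden: List[Vector], query: Vector) -> Tuple[Vector, Vector]:
    """Dot-product attention over a sequence of hidden states (assumed form).

    Returns (weights, context), where weights[i] = softmax_i(h_i . q)
    and context = sum_i weights[i] * h_i.
    """
    scores = [sum(h_j * q_j for h_j, q_j in zip(h, query)) for h in hidden]
    weights = softmax(scores)
    dim = len(hidden[0])
    context = [sum(w * h[d] for w, h in zip(weights, hidden)) for d in range(dim)]
    return weights, context

# Three time steps of 2-dimensional hidden states; the last aligns best with the query.
w, ctx = attention_pool([[0.1, 0.0], [0.2, 0.1], [1.0, 0.9]], query=[1.0, 1.0])
```

In a trained network the query, or a small scoring layer, is learned. It is fixed here only to make the weighting visible.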

2.2.2. Model Structure

2.3. Detection Models

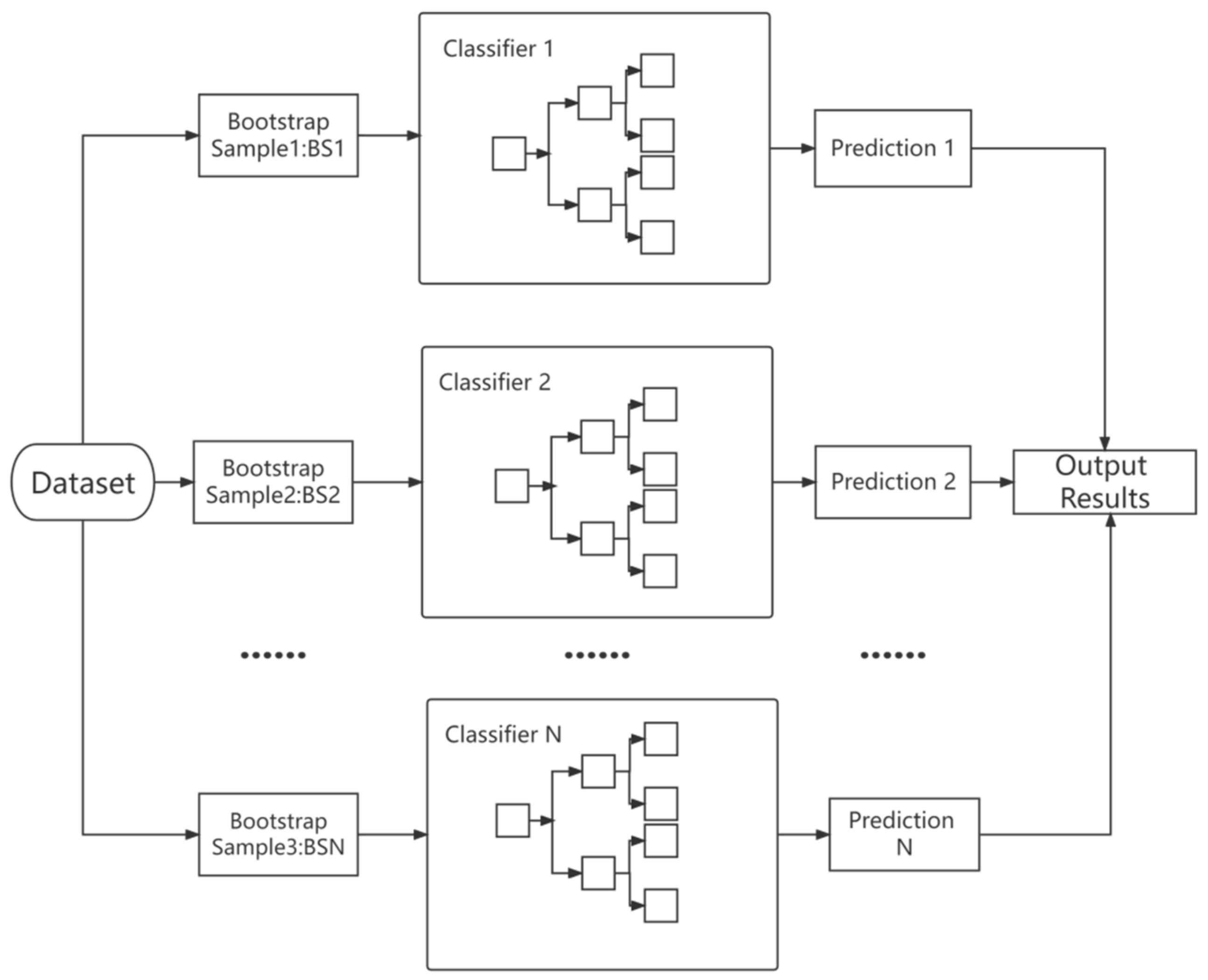

2.3.1. Random Forest Classification Detection Model
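
A random forest classifier trains many decision trees on bootstrap resamples of the training set and predicts by majority vote over the trees. The sketch below shows only these two ensemble ingredients, bootstrap sampling and voting. The individual trees are stand-in callables. This is a simplified illustration rather than a full random forest, and it omits per-split feature subsampling in particular. The threshold "trees" in the example are hypothetical.

```python
import random
from collections import Counter
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
Label = int

def bootstrap_indices(n: int, rng: random.Random) -> List[int]:
    """Draw n training indices with replacement (one tree's bootstrap sample)."""
    return [rng.randrange(n) for _ in range(n)]

def majority_vote(trees: Sequence[Callable[[T], Label]], x: T) -> Label:
    """Return the label most trees predict; ties go to the label seen first."""
    votes = Counter(tree(x) for tree in trees)
    return votes.most_common(1)[0][0]

# Three hypothetical "trees" that threshold an absolute prediction error (1 = outlier).
trees = [lambda e: int(abs(e) > 50), lambda e: int(abs(e) > 80), lambda e: int(abs(e) > 30)]
print(majority_vote(trees, 77.0))  # two of the three trees vote 1
```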

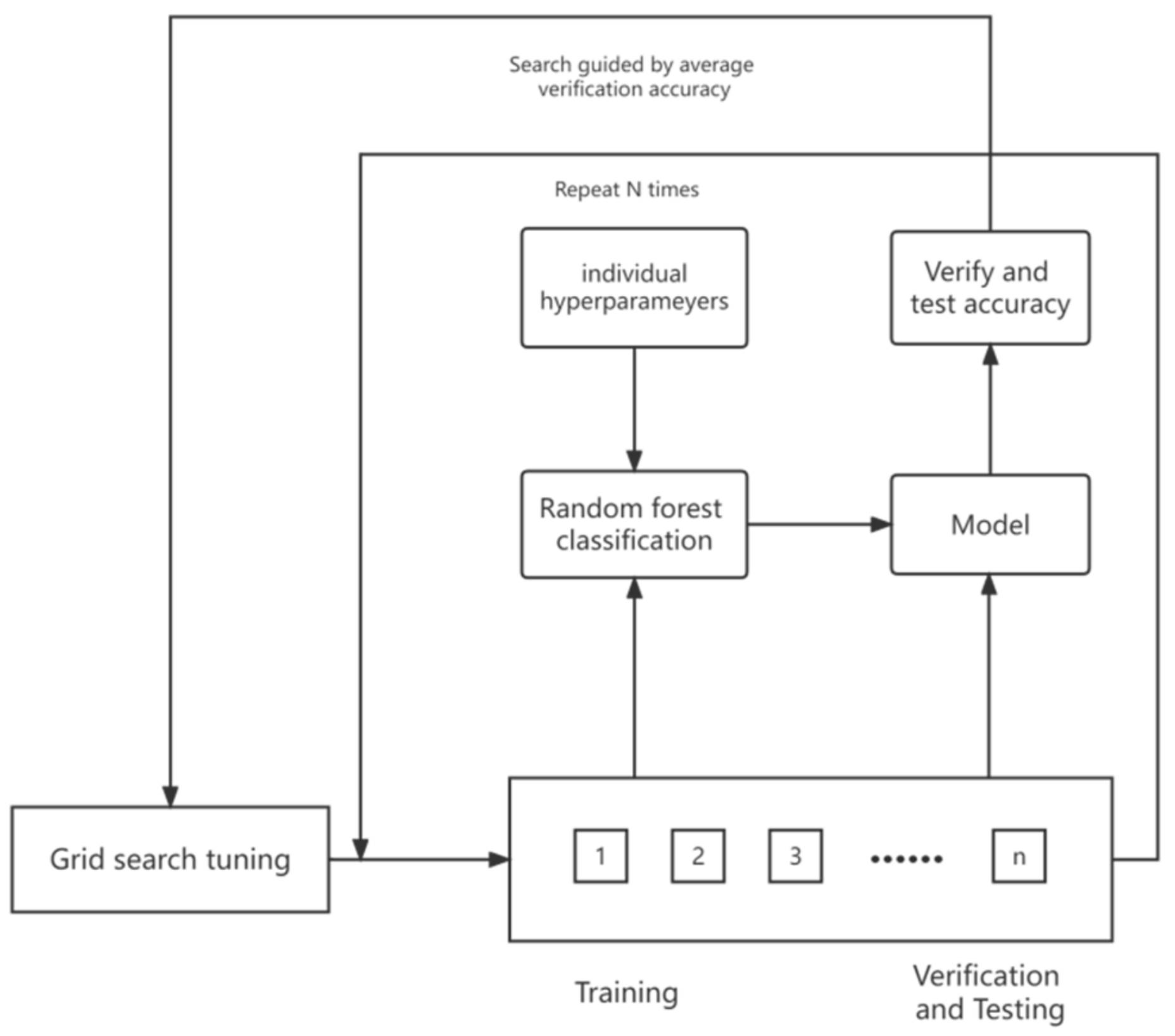

2.3.2. Parameter Optimization by Grid Search
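
Grid search evaluates every combination of candidate hyperparameter values and keeps the combination with the best validation score. The `GridSearchCV-RandomForest` rows in the detection tables presumably denote a random forest tuned in this way. The sketch below is a generic exhaustive search. The parameter names in the example grid and the toy scoring function are hypothetical stand-ins for a cross-validated score such as F1.

```python
from itertools import product
from typing import Any, Callable, Dict, List, Tuple

Params = Dict[str, Any]

def grid_search(grid: Dict[str, List[Any]],
                score: Callable[[Params], float]) -> Tuple[Params, float]:
    """Evaluate every combination in `grid` and return (best_params, best_score).

    `score` should return a validation metric where higher is better.
    """
    names = list(grid)
    best_params: Params = {}
    best_score = float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        s = score(params)
        if s > best_score:  # strict '>' keeps the first of any tied combinations
            best_params, best_score = params, s
    return best_params, best_score

def toy_score(p: Params) -> float:
    """Hypothetical score that peaks at n_estimators=200, max_depth=10."""
    return -abs(p["n_estimators"] - 200) - abs(p["max_depth"] - 10)

grid = {"n_estimators": [50, 100, 200], "max_depth": [5, 10, 20]}
best, best_s = grid_search(grid, toy_score)
```

This grid evaluates 3 × 3 = 9 combinations. The cost multiplies with every added parameter, so grids are usually kept small. scikit-learn's `GridSearchCV` follows the same idea and scores each combination by cross-validation.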

2.4. Evaluation Metrics of Prediction and Detection Models

2.4.1. Evaluation Indices of Prediction Models
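
The prediction tables report RMSE, MSE, MAPE, MAE and R². The function below implements the standard definitions of these metrics. It assumes MAPE is expressed in percent, and the function name is hypothetical.

```python
import math
from typing import Dict, Sequence

def regression_metrics(y: Sequence[float], y_hat: Sequence[float]) -> Dict[str, float]:
    """Standard point-forecast error metrics.

    MSE  = mean((y - y_hat)^2),  RMSE = sqrt(MSE),  MAE = mean(|y - y_hat|)
    MAPE = 100 * mean(|y - y_hat| / |y|)   (NaN if any actual value is zero)
    R2   = 1 - SS_res / SS_tot
    """
    n = len(y)
    if n == 0 or n != len(y_hat):
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [a - p for a, p in zip(y, y_hat)]
    ss_res = sum(e * e for e in errors)
    mse = ss_res / n
    mae = sum(abs(e) for e in errors) / n
    if any(a == 0 for a in y):
        mape = float("nan")  # MAPE is undefined when an actual value is zero
    else:
        mape = 100.0 * sum(abs(e) / abs(a) for e, a in zip(errors, y)) / n
    mean_y = sum(y) / n
    ss_tot = sum((a - mean_y) ** 2 for a in y)
    if ss_tot == 0:
        raise ValueError("R2 is undefined for a constant series")
    r2 = 1.0 - ss_res / ss_tot
    return {"RMSE": math.sqrt(mse), "MSE": mse, "MAPE": mape, "MAE": mae, "R2": r2}

m = regression_metrics([100.0, 200.0, 300.0, 400.0], [110.0, 190.0, 310.0, 390.0])
```

Because RMSE = √MSE, every row of the prediction tables should satisfy MSE ≈ RMSE². For example, 1135.941² ≈ 1,290,362, which matches the tabulated 1,290,361.632 up to rounding.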

2.4.2. Evaluation Indices of the Detection Model
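
The detection tables list true positives, false negatives and false positives along with accuracy, precision, recall and F1. The sketch below computes the positive-class (outlier) versions of these metrics from confusion counts. Accuracy additionally requires the true negatives, which the tables do not report. The function name is hypothetical. The tables do not state whether precision and recall refer to the outlier class alone or are averaged over classes, so this positive-class version may not reproduce every row.

```python
from typing import Dict, Optional

def detection_metrics(tp: int, fp: int, fn: int, tn: Optional[int] = None) -> Dict[str, float]:
    """Positive-class metrics from confusion-matrix counts.

    Precision = TP / (TP + FP), Recall = TP / (TP + FN),
    F1 = 2 * Precision * Recall / (Precision + Recall) = 2TP / (2TP + FP + FN).
    Accuracy = (TP + TN) / (TP + TN + FP + FN), computed only when TN is given.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    out = {"Precision": precision, "Recall": recall, "F1": f1}
    if tn is not None:
        out["Accuracy"] = (tp + tn) / (tp + tn + fp + fn)
    return out

# Counts from the DecisionTree row of the first detection table (TN not reported).
dt = detection_metrics(tp=1261, fp=157, fn=164)
```

Rounded to three decimals, these counts give precision 0.889, recall 0.885 and F1 0.887.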

3. Results and Analysis

3.1. Performance Analysis and Comparison of Load Consumption Data Prediction Models

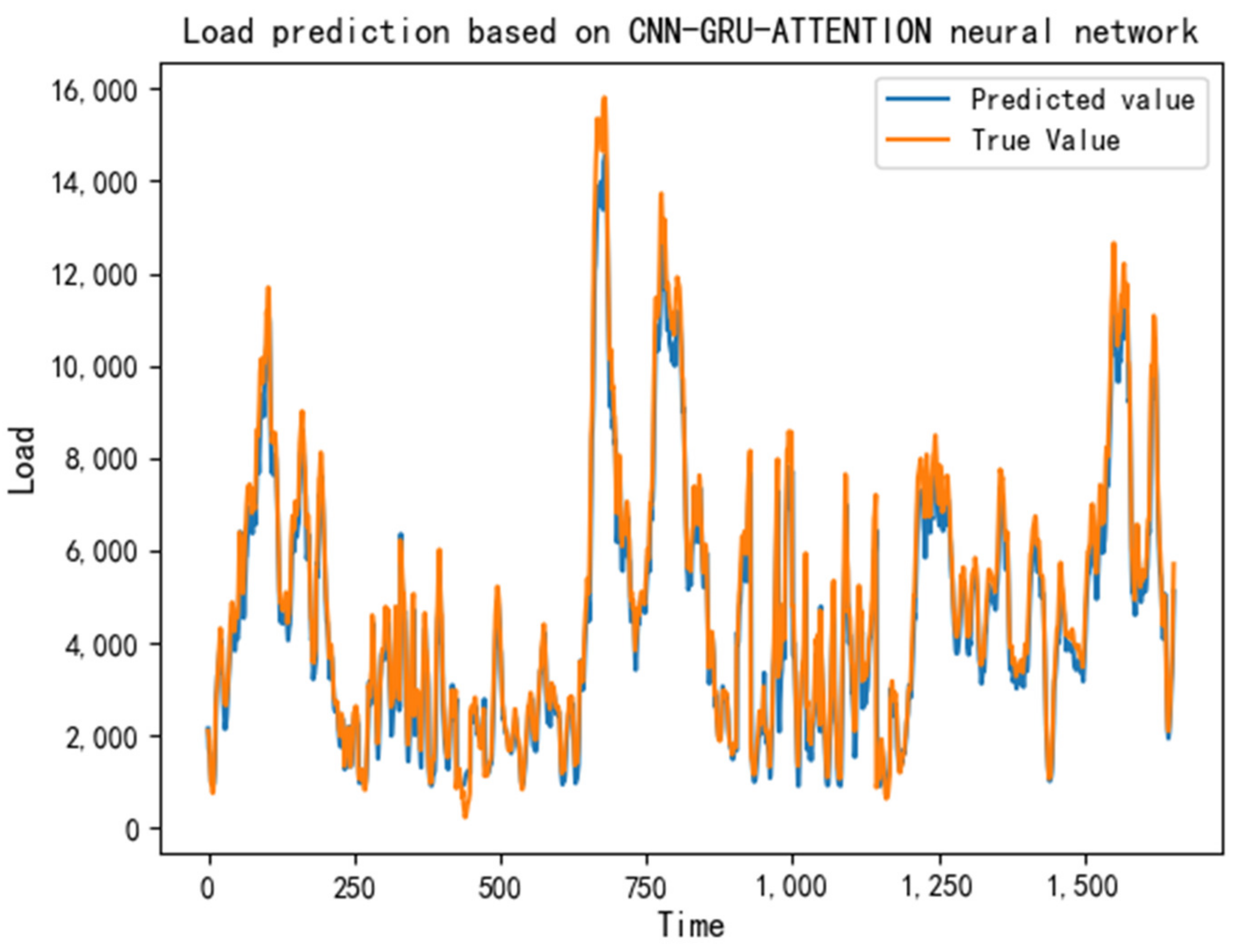

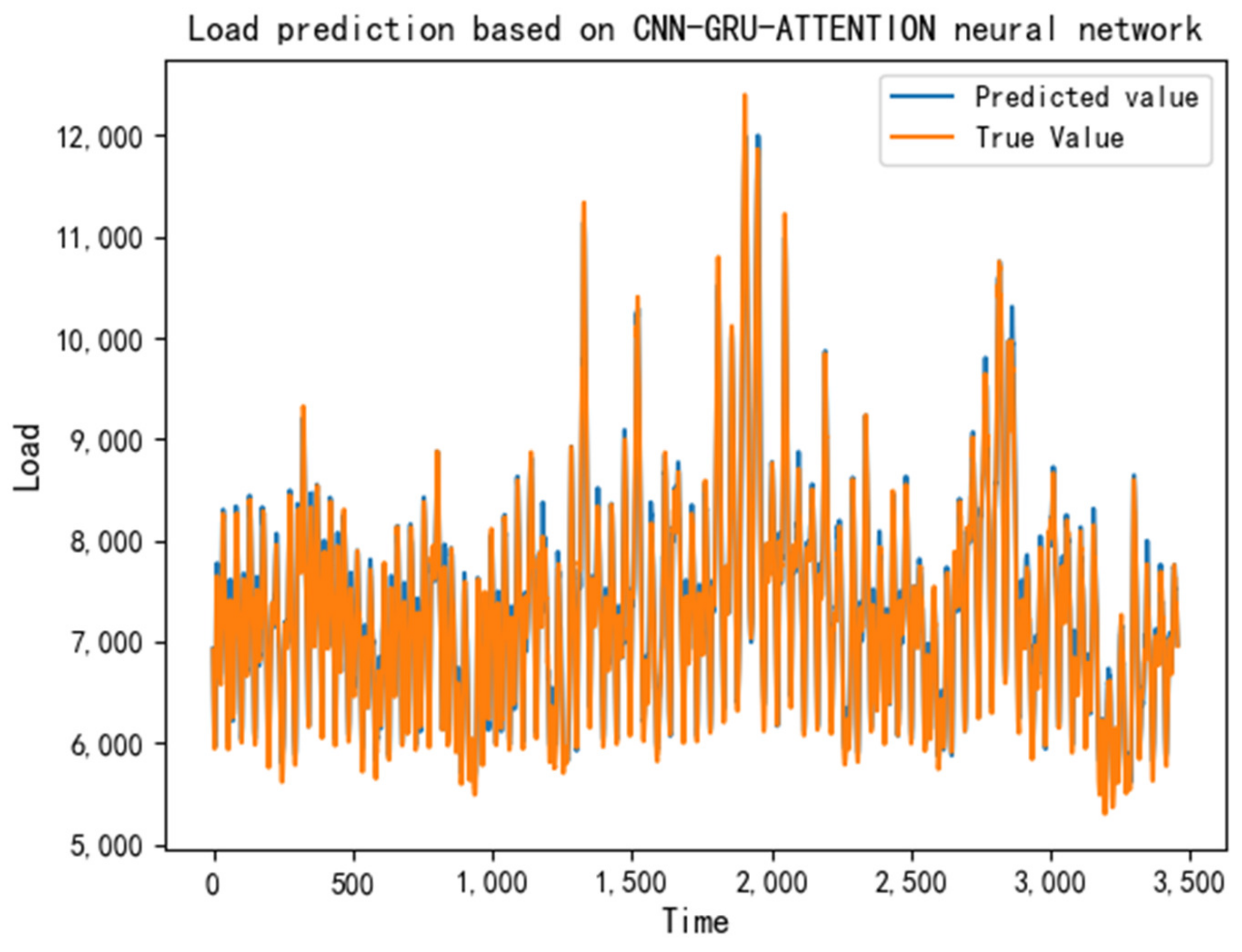

3.1.1. Comparison of Prediction Results for the Spanish Wind Power Dataset

3.1.2. Comparison of Forecast Results for the Australian Electricity Price Dataset

3.2. Performance Analysis and Comparison of Outlier Point Detection Models

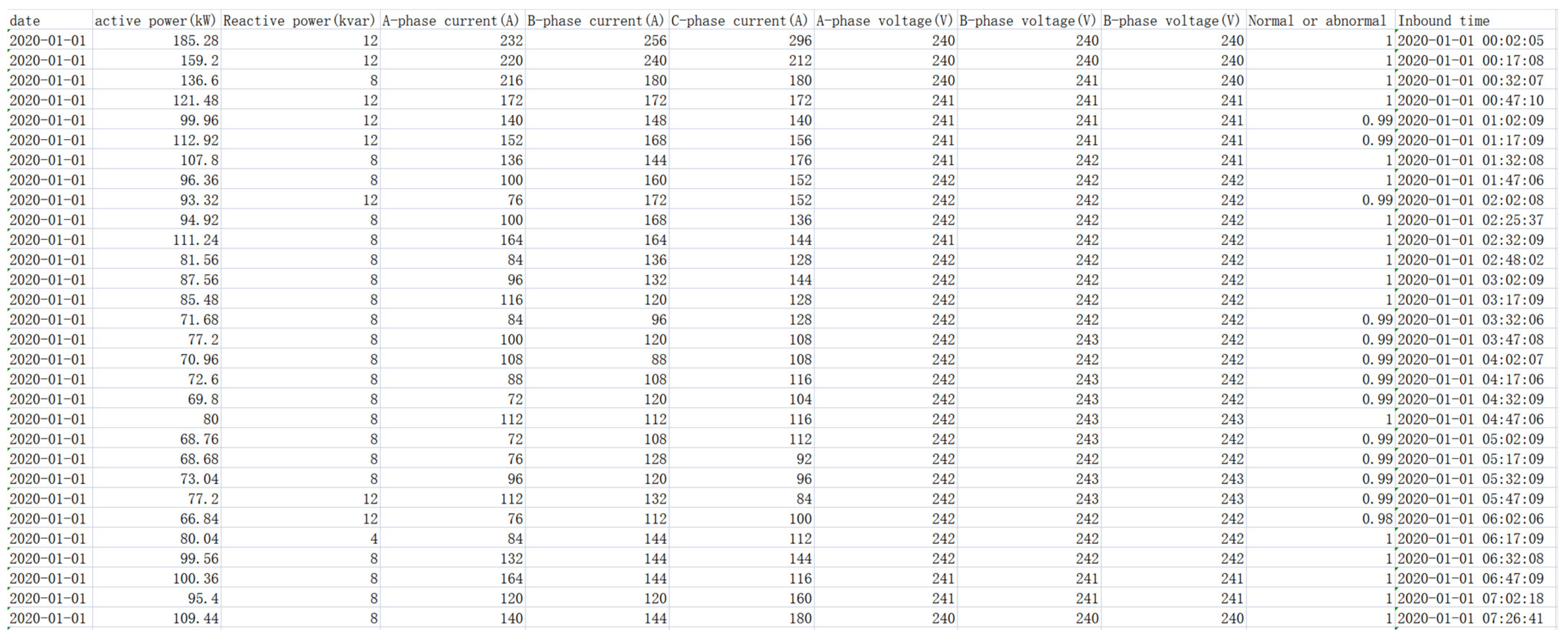

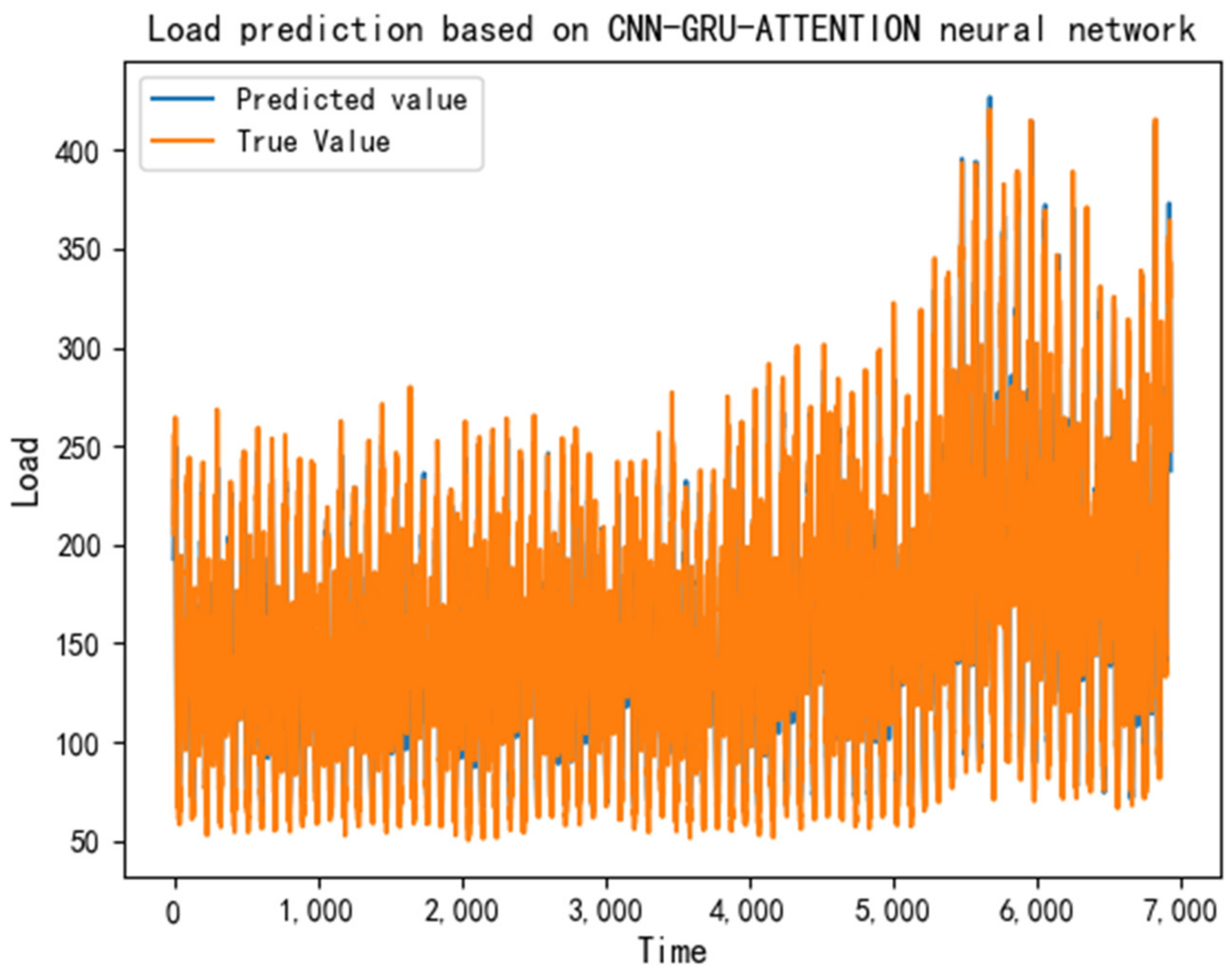

3.3. Validation of Outlier Detection Algorithm for Energy Consumption Data Based on the Prediction Error of Real Data Sets

3.3.1. Real Data Prediction

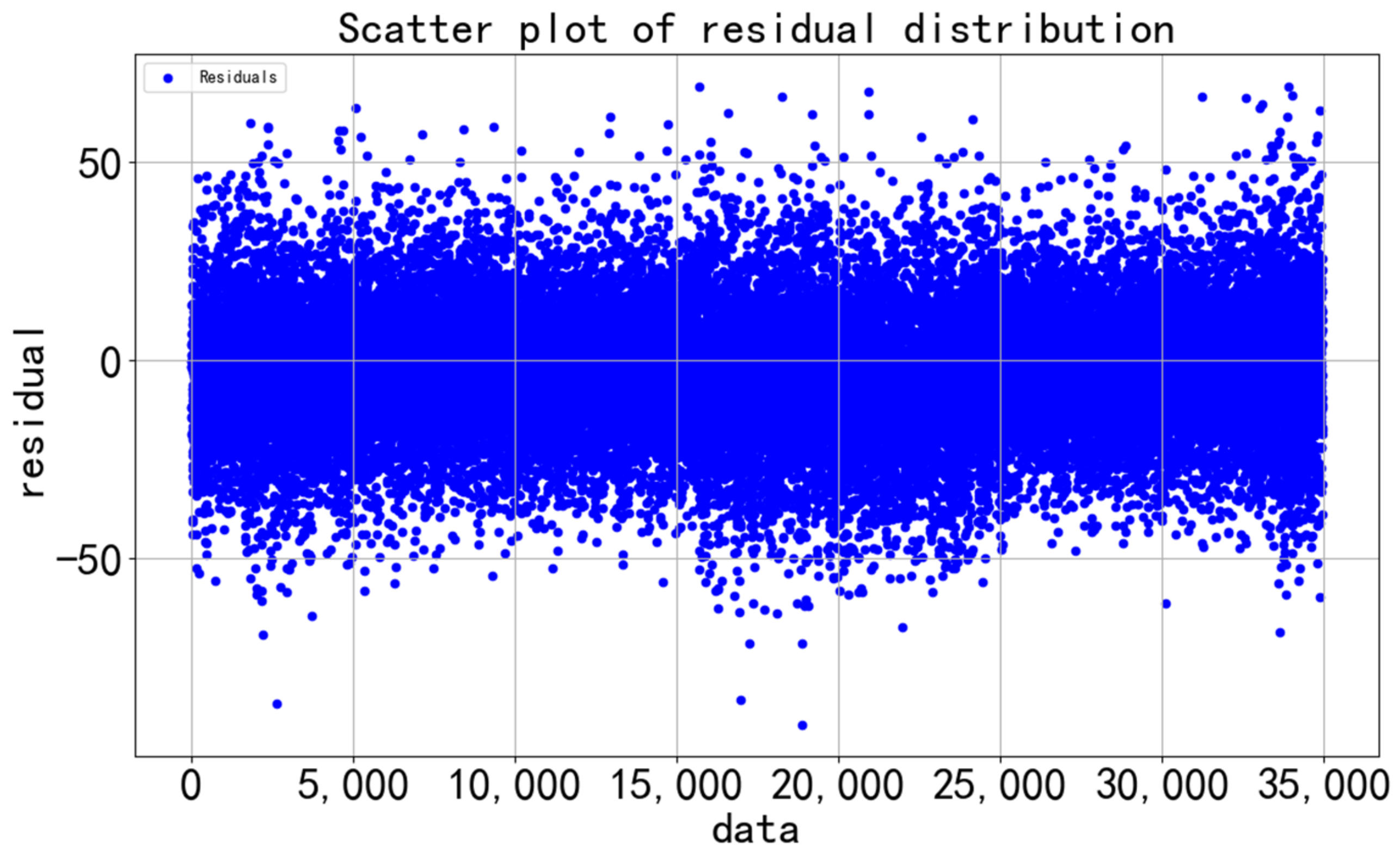

3.3.2. Outlier Detection

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameters of the Model | Value |
|---|---|
| CNN layer | 1 |
| Pooling layer | 1 |
| Activation function | ReLU |
| Dropout | 0.1 |
| GRU layer | 2 |
| Learning Rate | 0.01 |
| ATTENTION | 20 |
| Dense | 1 |
| Activation function | ReLU |
| Epoch | 30 |

| Date | Model | RMSE | MSE | MAPE | MAE | R² |
|---|---|---|---|---|---|---|
| 7.7–7.13 | CNN | 1135.941 | 1,290,361.632 | 12.214 | 585.238 | 0.778 |
| | GRU | 1255.148 | 1,575,397.086 | 19.12 | 806.572 | 0.729 |
| | CNN-GRU | 1021.171 | 1,042,789.913 | 12.725 | 550.938 | 0.821 |
| | CNN-GRU-ATTENTION | 954.175 | 910,450.656 | 8.769 | 421.474 | 0.843 |
| 8.9–8.15 | CNN | 850.32 | 723,044.915 | 19.473 | 670.68 | 0.861 |
| | GRU | 1046.284 | 1,094,710.078 | 25.731 | 827.541 | 0.789 |
| | CNN-GRU | 528.219 | 279,015.322 | 12.111 | 385.355 | 0.946 |
| | CNN-GRU-ATTENTION | 381.397 | 145,463.416 | 6.923 | 225.526 | 0.972 |
| 9.3–9.10 | CNN | 899.017 | 808,231.025 | 18.751 | 582.62 | 0.81 |
| | GRU | 910.147 | 828,368.466 | 18.7 | 579.017 | 0.806 |
| | CNN-GRU | 813.783 | 662,242.698 | 17.11 | 462.629 | 0.845 |
| | CNN-GRU-ATTENTION | 715.628 | 512,124.114 | 11.532 | 343.945 | 0.88 |
| 10.10–10.6 | CNN | 1136.644 | 1,291,958.965 | 21.276 | 953.556 | 0.814 |
| | GRU | 1367.021 | 1,868,745.27 | 27.603 | 1211.353 | 0.731 |
| | CNN-GRU | 733.993 | 538,745.206 | 15.761 | 590.726 | 0.922 |
| | CNN-GRU-ATTENTION | 271.683 | 73,811.518 | 4.718 | 210.27 | 0.989 |
| 11.11–11.17 | CNN | 552.078 | 304,790.535 | 17.406 | 381.762 | 0.83 |
| | GRU | 723.403 | 523,311.337 | 26.181 | 586.918 | 0.708 |
| | CNN-GRU | 568.095 | 322,731.953 | 17.555 | 391.809 | 0.82 |
| | CNN-GRU-ATTENTION | 444.987 | 198,013.861 | 12.606 | 261.476 | 0.89 |
| 12.20–12.26 | CNN | 704.854 | 496,819.766 | 15.71 | 565.275 | 0.896 |
| | GRU | 939.185 | 882,067.55 | 19.323 | 825.093 | 0.816 |
| | CNN-GRU | 545.412 | 297,474.436 | 13.008 | 447.483 | 0.938 |
| | CNN-GRU-ATTENTION | 299.295 | 89,577.487 | 6.884 | 239.814 | 0.981 |
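
The gain from the attention mechanism can be quantified as a relative error reduction, 100 × (baseline − improved) / baseline. The sketch below applies this formula to the RMSE values for the 12.20–12.26 period, copied from the first prediction-results table. The function name is hypothetical.

```python
from typing import Dict

def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` lowers an error metric relative to `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (baseline - improved) / baseline

# RMSE for the 12.20–12.26 period, taken from the table.
rmse = {"CNN": 704.854, "GRU": 939.185, "CNN-GRU": 545.412, "CNN-GRU-ATTENTION": 299.295}
reductions: Dict[str, float] = {
    name: relative_reduction(value, rmse["CNN-GRU-ATTENTION"])
    for name, value in rmse.items() if name != "CNN-GRU-ATTENTION"
}
for name, pct in reductions.items():
    print(f"CNN-GRU-ATTENTION RMSE is {pct:.1f}% lower than {name}")
```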

| Parameters of the Model | Value |
|---|---|
| CNN layer | 1 |
| Pooling layer | 1 |
| Activation function | ReLU |
| Dropout | 0.2 |
| GRU layer | 2 |
| Learning Rate | 0.01 |
| ATTENTION | 50 |
| Dense | 1 |
| Activation function | ReLU |
| Epoch | 30 |

| Date | Model | RMSE | MSE | MAPE | MAE | R² |
|---|---|---|---|---|---|---|
| 11.5 | CNN | 242.513 | 58,812.314 | 3.032 | 197.926 | 0.868 |
| | GRU | 249.603 | 62,301.758 | 3.12 | 204.326 | 0.861 |
| | CNN-GRU | 161.28 | 26,011.357 | 1.987 | 128.545 | 0.942 |
| | CNN-GRU-ATTENTION | 87.472 | 7651.373 | 1.048 | 69.202 | 0.983 |
| 11.6 | CNN | 224.038 | 50,192.963 | 2.661 | 171.889 | 0.9 |
| | GRU | 238.851 | 57,049.804 | 2.924 | 192.601 | 0.887 |
| | CNN-GRU | 191.55 | 36,691.222 | 2.194 | 142.646 | 0.927 |
| | CNN-GRU-ATTENTION | 87.584 | 7670.973 | 1.072 | 71.059 | 0.985 |
| 11.7 | CNN | 204.26 | 41,722.352 | 2.371 | 161.488 | 0.912 |
| | GRU | 217.894 | 47,477.897 | 2.547 | 174.975 | 0.899 |
| | CNN-GRU | 163.287 | 26,662.551 | 1.946 | 133.39 | 0.944 |
| | CNN-GRU-ATTENTION | 135.814 | 18,445.367 | 1.761 | 118.073 | 0.961 |
| 11.8 | CNN | 226.874 | 51,472.017 | 2.718 | 194.502 | 0.913 |
| | GRU | 184.299 | 33,966.042 | 2.098 | 147.527 | 0.943 |
| | CNN-GRU | 154.429 | 23,848.364 | 1.668 | 115.811 | 0.96 |
| | CNN-GRU-ATTENTION | 114.103 | 13,019.567 | 1.398 | 96.397 | 0.978 |
| 11.9 | CNN | 252.1 | 63,554.307 | 2.836 | 196.709 | 0.902 |
| | GRU | 254.582 | 64,811.78 | 3.18 | 224.129 | 0.9 |
| | CNN-GRU | 191.682 | 36,741.817 | 2.092 | 145.075 | 0.943 |
| | CNN-GRU-ATTENTION | 144.512 | 20,883.604 | 1.528 | 108.124 | 0.968 |
| 11.10 | CNN | 307.486 | 94,547.492 | 3.843 | 260.032 | 0.871 |
| | GRU | 220.718 | 48,716.269 | 2.607 | 181.417 | 0.934 |
| | CNN-GRU | 200.243 | 40,097.387 | 2.239 | 152.155 | 0.945 |
| | CNN-GRU-ATTENTION | 110 | 12,099.979 | 1.246 | 86.342 | 0.983 |
| 11.11 | CNN | 274.768 | 75,497.405 | 3.285 | 227.867 | 0.9 |
| | GRU | 383.623 | 147,166.845 | 4.283 | 300.485 | 0.804 |
| | CNN-GRU | 311.187 | 96,837.065 | 3.637 | 251.12 | 0.871 |
| | CNN-GRU-ATTENTION | 127.859 | 16,348.029 | 1.377 | 96.911 | 0.978 |

| Model | True Positives | False Negatives | False Positives | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|---|---|
| DecisionTree | 1261 | 164 | 157 | 0.887 | 0.889 | 0.884 | 0.887 |
| AdaBoost | 1370 | 55 | 99 | 0.947 | 0.932 | 0.961 | 0.946 |
| RandomForest | 1402 | 23 | 117 | 0.841 | 0.952 | 0.950 | 0.950 |
| GridSearchCV-RandomForest | 1402 | 23 | 120 | 0.949 | 0.951 | 0.949 | 0.949 |

| Model | True Positives | False Negatives | False Positives | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|---|---|
| DecisionTree | 90 | 0 | 2 | 0.988 | 0.978 | 1.0 | 0.989 |
| AdaBoost | 90 | 0 | 0 | 1.0 | 1.0 | 1.0 | 1.0 |
| RandomForest | 90 | 0 | 0 | 1.0 | 1.0 | 1.0 | 1.0 |
| GridSearchCV-RandomForest | 90 | 0 | 0 | 1.0 | 1.0 | 1.0 | 1.0 |

| Model | True Positives | False Negatives | False Positives | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|---|---|
| DecisionTree | 397 | 268 | 121 | 0.936 | 0.766 | 0.596 | 0.671 |
| AdaBoost | 605 | 60 | 60 | 0.980 | 0.909 | 0.909 | 0.909 |
| RandomForest | 503 | 162 | 8 | 0.972 | 0.984 | 0.756 | 0.855 |
| GridSearchCV-RandomForest | 620 | 45 | 12 | 0.992 | 0.981 | 0.932 | 0.956 |

| Model | True Positives | False Negatives | False Positives | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|---|---|
| DecisionTree | 489 | 24 | 15 | 0.962 | 0.970 | 0.953 | 0.961 |
| AdaBoost | 465 | 48 | 18 | 0.935 | 0.962 | 0.906 | 0.933 |
| RandomForest | 497 | 16 | 16 | 0.968 | 0.968 | 0.968 | 0.968 |
| GridSearchCV-RandomForest | 498 | 15 | 15 | 0.934 | 0.970 | 0.970 | 0.970 |

| Model | True Positives | False Negatives | False Positives | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|---|---|
| DecisionTree | 552 | 123 | 144 | 0.786 | 0.793 | 0.817 | 0.805 |
| AdaBoost | 570 | 105 | 147 | 0.798 | 0.794 | 0.844 | 0.818 |
| RandomForest | 603 | 72 | 71 | 0.835 | 0.894 | 0.893 | 0.893 |
| GridSearchCV-RandomForest | 612 | 63 | 88 | 0.879 | 0.874 | 0.906 | 0.890 |

| Parameters of the Model | Value |
|---|---|
| CNN layer | 1 |
| Pooling layer | 1 |
| Activation function | ReLU |
| Dropout | 0.2 |
| GRU layer | 2 |
| Learning Rate | 0.01 |
| ATTENTION | 20 |
| Dense | 1 |
| Activation function | ReLU |
| Epoch | 30 |

| Model | RMSE | MSE | MAPE | MAE | R² |
|---|---|---|---|---|---|
| CNN | 22.959 | 527.094 | 13.090 | 18.354 | 0.879 |
| GRU | 46.992 | 2208.262 | 22.894 | 38.616 | 0.773 |
| CNN-GRU | 21.439 | 459.613 | 10.759 | 16.165 | 0.895 |
| CNN-GRU-ATTENTION | 16.903 | 17.030 | 8.545 | 12.804 | 0.935 |

| Model | True Positives | False Negatives | False Positives | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|---|---|---|
| DecisionTree | 1254 | 562 | 0 | 0.900 | 0.936 | 0.845 | 0.874 |
| AdaBoost | 1801 | 15 | 3813 | 0.324 | 0.513 | 0.500 | 0.251 |
| RandomForest | 1254 | 562 | 25 | 0.896 | 0.926 | 0.842 | 0.869 |
| GridSearchCV-RandomForest | 1623 | 193 | 39 | 0.959 | 0.964 | 0.893 | 0.933 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, C.; Liu, D.; Wang, M.; Wang, H.; Xu, S. Detection of Outliers in Time Series Power Data Based on Prediction Errors. Energies 2023, 16, 582. https://doi.org/10.3390/en16020582
