1. Introduction

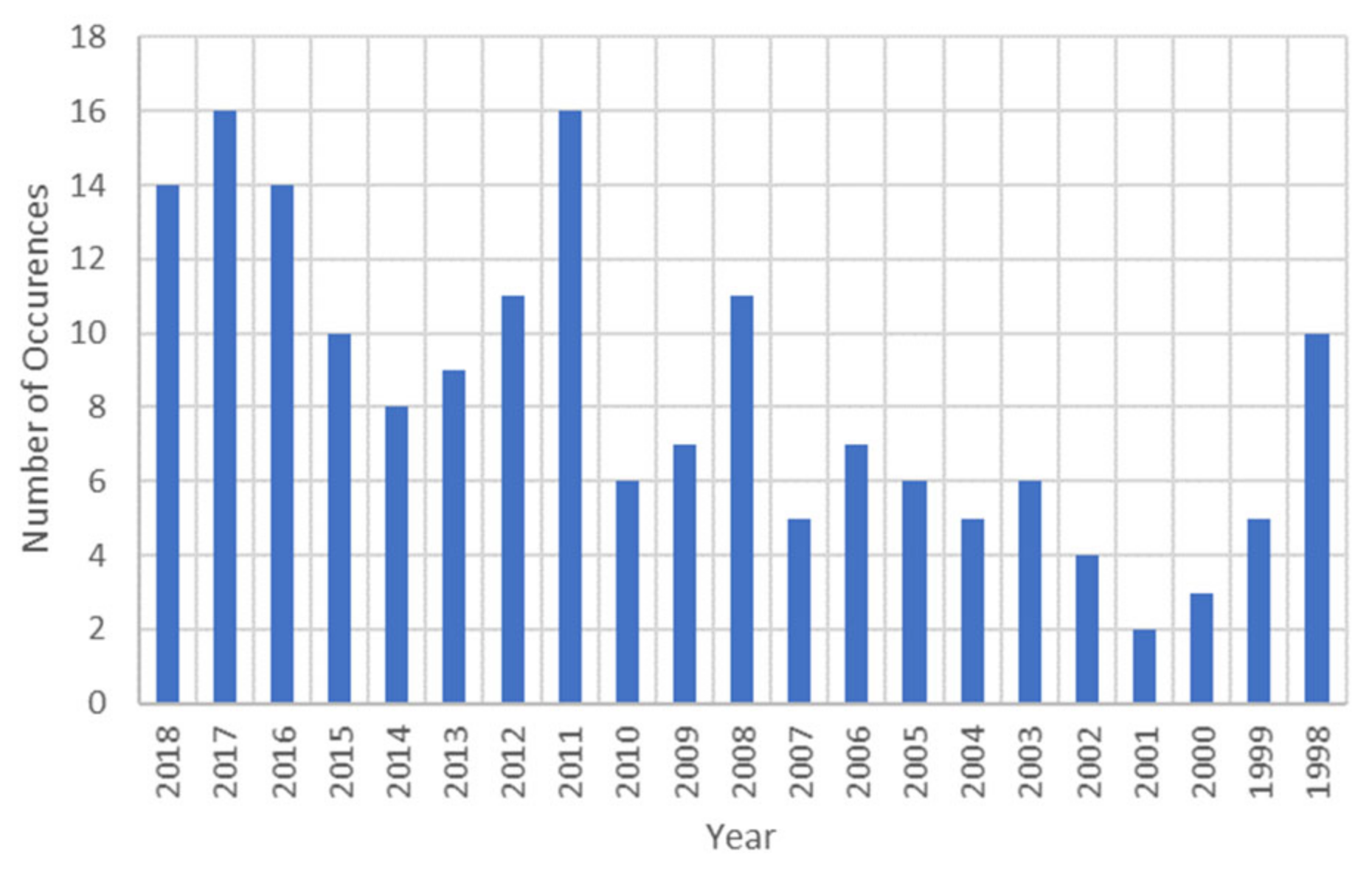

As modern lifestyles become more reliant on electricity, the conventional power distribution system faces challenges due to the rapid penetration of distributed energy resources (DERs) and the increasing frequency of natural disasters [1]. The number of major natural disasters per year that resulted in losses of over $1 billion is shown in Figure 1. This figure clearly indicates the growing frequency of massive calamities [1]. Moreover, with a higher degree of electricity dependency, electric outages not only cause financial damage but also result in the loss of lives. Hurricane Irma provides a strong example, where 29 of the 75 total deaths (39%) were due to power-related causes [2] (see Table 1). Hence, modernizing the power grid to address such critical issues requires urgent attention. The deployment of microgrids (MGs) to improve grid reliability has been conceptualized as one of the solutions [3].

Recent developments in microgrid technology have added functions such as reliability improvement, supply-demand balancing, and economic dispatch, creating what Cheng et al. call advanced microgrid (AMG) systems [3]. Systems such as the AMG introduce a three-tier hierarchical control structure. Primary controls include protection, converter output control, voltage and frequency regulation, and power sharing, and they operate in the millisecond range [4,5,6]. The secondary controls consist of the energy management systems (EMSs), which can be viewed as multi-objective optimization tools that take several inputs, such as load profiles, generation forecasts, and market information, and use them for specified objectives, such as cost mitigation, demand response management, and power quality maintenance [3]. The operating time for secondary controls can range from seconds to hours [3]. The proposed machine learning (ML)-based EMS replaces the traditional optimization methods at this level, with the objective of improving power availability to critical loads during disasters and prolonged outages. Lastly, tertiary controls are the highest-level controls, are often enforced by the distribution system operator (DSO), and have the slowest time range, from minutes to days [3]. Tertiary controls are outside the scope of the current study, as the proposed solution is targeted for implementation in residential environments independent of the energy providers.

AMGs have focused on several objectives, such as cost optimization, supply-demand management, DER integration, power quality maintenance, and improving the reliability and resilience of the entire grid. However, limited attention has been dedicated to improving power delivery to critical loads during disasters and prolonged outages. The load curtailment concept presented in [3] requires either communication with the DSO during contingencies or additional predictive algorithms to estimate the time to recover (TTR). Furthermore, that model requires continuous updating, which is computationally expensive. A microgrid formation concept, which shares the closest objective with this study, is presented in [7]. Its authors used mixed integer linear programming (MILP) to optimally form microgrids within the existing grid infrastructure using existing connection points. That study presents an effective solution, but it addresses the problem at the system level rather than focusing on the functionality of the microgrid and its EMS/optimization techniques. Furthermore, the initial capacity of each DER in that model can cause significant changes in the formation patterns.

Conventional EMSs use model predictive control (MPC), which relies on deterministic models [3]. However, the occurrence and consequences of natural disasters cannot be captured by deterministic models, and, at the same time, a high level of DER penetration at the end-consumer level can cause discrepancies between the model and the real system. Therefore, probabilistic models provide better solutions. Machine learning algorithms (MLAs) use samples of trajectories gathered from interaction with a real system or a simulation rather than depending on a deterministic model [8]. In [8], the authors show that reinforcement learning (RL) can offer performance comparable to that of MPC even when good deterministic models are available.

More recent interest in using machine intelligence for optimization and load scheduling is reflected in [9,10,11,12]. In [9], the authors present a dueling deep Q-learning approach targeted toward emergency load shedding. Its different priority (reward function) leads to a more complicated algorithm, and the work is limited to simulations, omitting implementation details. In [10], the authors use Q-learning to optimize the scheduling of electric vehicle charging. In [11,12], the authors propose different variations of Q-learning to achieve objectives such as improving the profit margin and microgrid interconnection. While these studies establish that Q-learning can be an effective algorithm for various objectives within power distribution, the proposed study focuses on the objective of improving critical reliability and provides an integrated real-time study with experimentation from both the power and machine learning perspectives.

A solution for local microgrids with DER integration capabilities and machine intelligence has been suggested in [13]. This article is a continuation of the work presented in [13]. The objective of this study is to demonstrate the feasibility of using the Q-learning algorithm as the core of an EMS targeted toward improving critical reliability. The study includes theoretical analysis, an integrated simulation model, reliability analysis, and supporting experimental results. In [13], a support vector machine (SVM) was used for the EMS, whereas this study uses Q-learning because of its inherent advantages: it requires no labeled training data, it learns continuously, and it adapts to changing conditions. Furthermore, [13] does not include any experimental verification, which is provided in this manuscript.

This paper is structured as follows:

Section 2 describes the power electronics (PE) model of the multi-port power electronics interface (MPEI) with its state space equations and discusses how the model changes with converter functionality.

Section 3 describes the Q-learning-based EMS, including Q-value generation, reward calculation, and the implementation flow chart.

Section 4 describes the effect of the EMS output on the functionality and system-level model of the MPEI.

Section 5 and Section 6 provide simulation and experimental results, respectively.

Section 7 discusses the significance of the proposed method with experimental case studies.

Section 8 presents the conclusion.

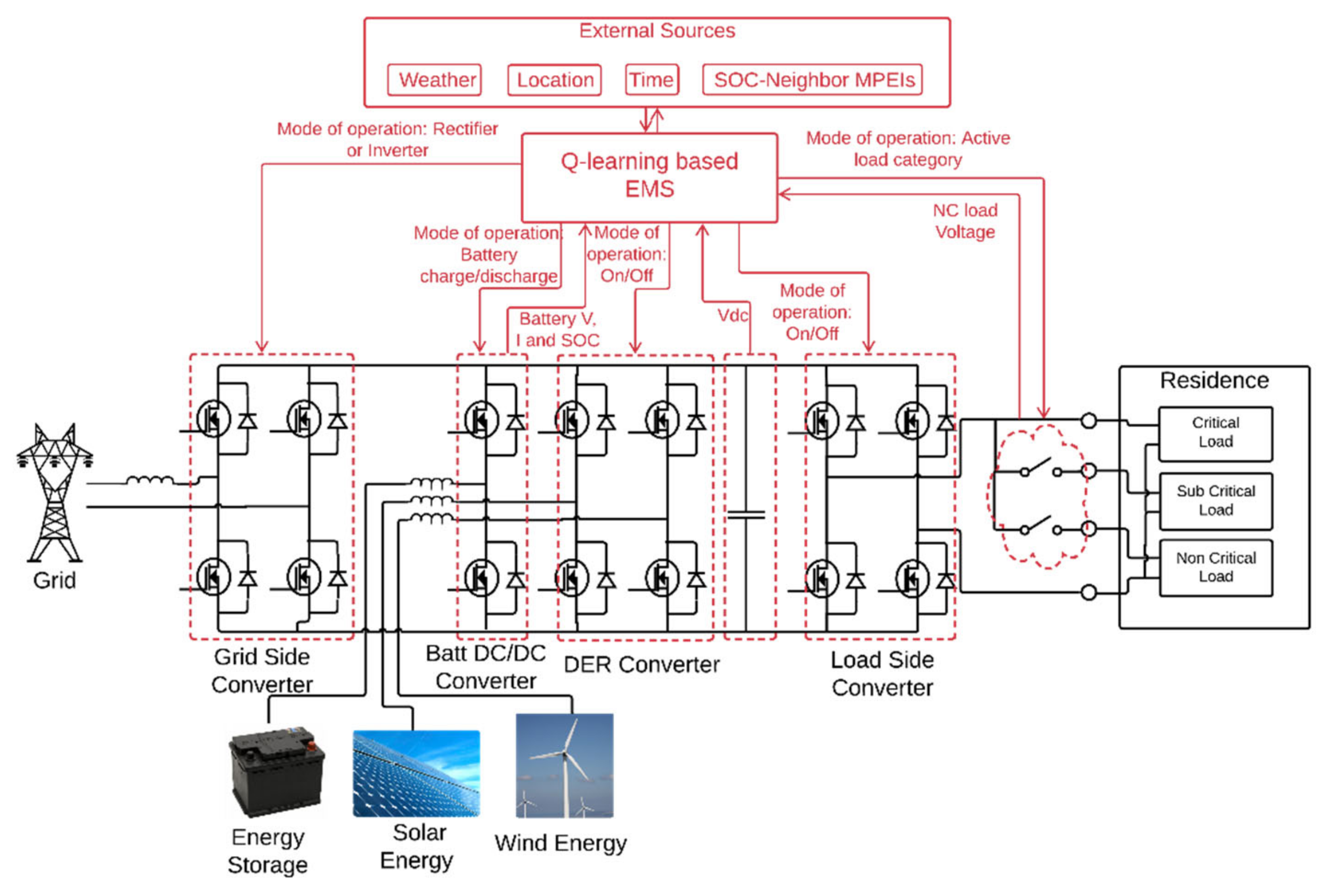

2. Power Electronics Model

The presented power electronics interface is based on the multi-port power electronics interface (MPEI) described in [14,15]. The version of the MPEI considered and developed for this study is a single-phase system that includes four individual converters with load categorization capabilities, as described in [13]. Furthermore, the MPEI can incorporate the MLA outputs. The details and schematic are presented in Figure 2.

The four converters considered are the grid side converter (GSC), the battery interface (BI), the DER converter (DERC), and the load side converter (LSC). This section details the derivation of the state space equations for the GSC and lists the state space models for the remaining converters, which are derived using a similar procedure. The subscripts x and conv used in the naming convention are listed in Table 2.

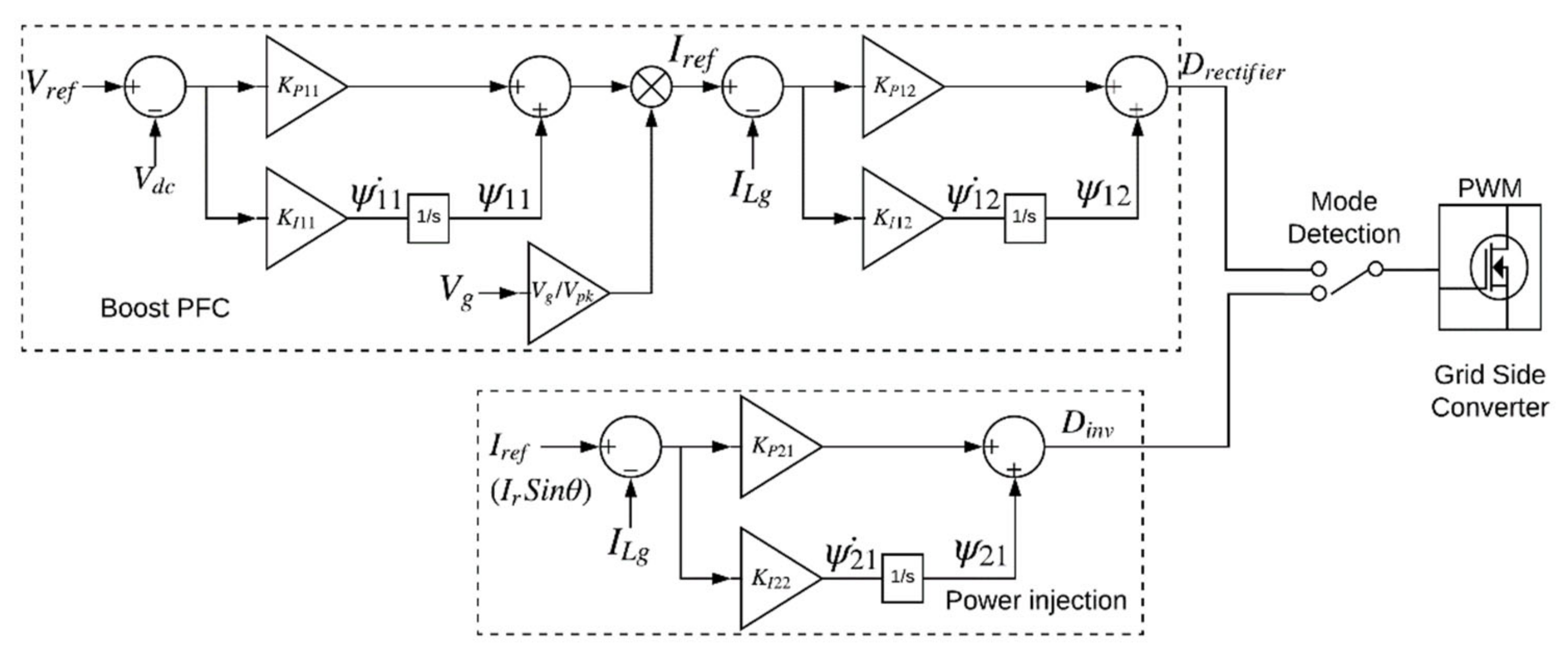

The GSC is a bidirectional converter that behaves as a rectifier with power factor correction (PFC) when power flows from the grid to the DC bus and as an inverter when power flows from the DC bus to the grid. The equivalent circuits of the GSC in the rectifier and inverter modes are included in [13]. The control schemes implemented for both modes are shown in Figure 3. Duty-cycle-weighted averaged state space equations can be derived for each mode; the parameters from the control schemes are then substituted into the averaged equations, and linearization is performed to generate the complete state space models presented in Equations (1) and (2) for the rectifier and inverter modes, respectively [13].

The battery interface is also a bidirectional converter, which behaves as a boost converter when the battery supplies power to the DC bus and as a buck converter when power flows in the reverse direction. The procedure described for the GSC can be used to generate the state space equations for the BI, presented in Equations (4) and (5) for the discharge and charge modes, respectively. The details of the BI are documented in [13].

Similarly, the DERC is a unidirectional converter that resembles the boost mode of the BI, and the LSC is a unidirectional voltage mode inverter [13]. The state space equations for the DERC and the LSC are given as Equations (3) and (6), respectively.

The controller gains for each converter are chosen independently, and the stability of each converter is ensured individually by placing the poles of the resulting transfer function in the left half of the s-plane. The controller gains and the respective poles for the converters are listed in Table 3. The converters are then integrated through a shared DC bus to create the MPEI. For this application, the behavior of the MPEI is the combined behavior of the four converters discussed above. Hence, the characteristics of the MPEI vary with the operational mode of each converter, and the state space equation of the MPEI depends on the converter modes at the instant of consideration. There are 36 possible combinations of modes for the four converters, as depicted in Table 4. Each combination results in a different characteristic equation for the MPEI.
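The count of 36 follows directly from the number of modes per converter. The sketch below enumerates the combinations, assuming (from the surrounding text) that the GSC and BI each have three modes and that the DERC and LSC are either on or off; the dictionary layout and function name are illustrative and not part of the original model.

```python
from itertools import product

# Operating modes per converter as implied by the text: the GSC and BI each have
# three modes, while the DERC and LSC are either on or off.
MODES = {
    "GSC": ("rectifier", "inverter", "off"),
    "BI": ("discharge", "charge", "off"),
    "DERC": ("on", "off"),
    "LSC": ("on", "off"),
}


def mode_combinations(modes):
    """Return every MPEI operating state as a dict mapping converter -> mode."""
    names = list(modes)
    return [dict(zip(names, combo)) for combo in product(*(modes[n] for n in names))]


combos = mode_combinations(MODES)
print(len(combos))  # 3 * 3 * 2 * 2 = 36
```

Each entry of `combos` corresponds to one row of Table 4 and hence to one characteristic equation of the MPEI.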

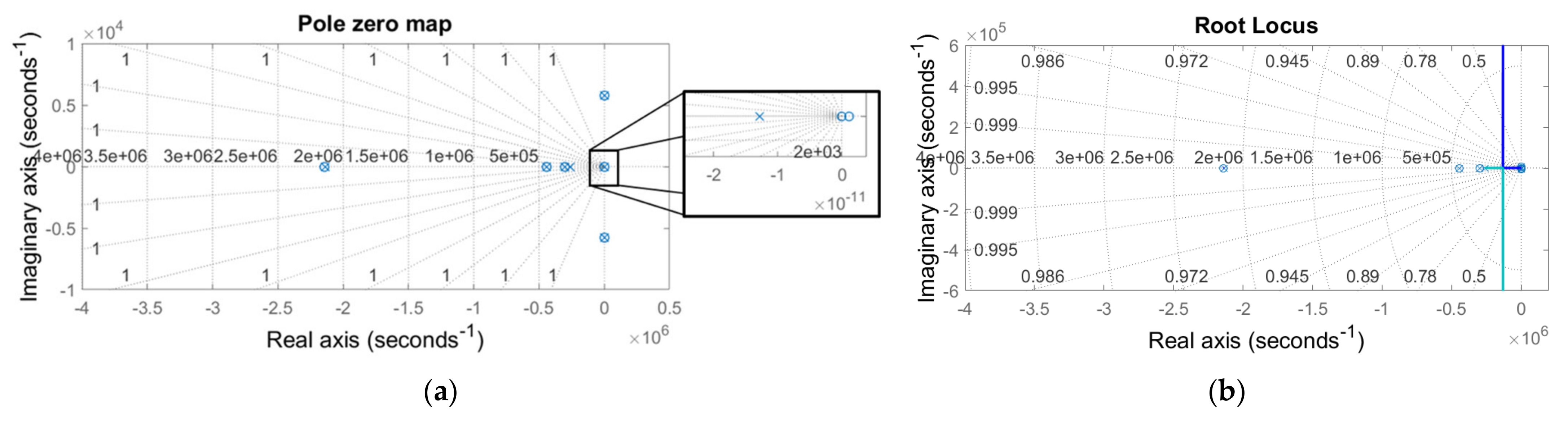

Consider the operational state of the MPEI in which the converter modes are as highlighted in Table 5. The state space model of the MPEI in this state is given by Equation (7), whose component matrices are given in Equations (8)–(11). The pole-zero diagram and the root locus for this state of the MPEI are shown in Figure 4a and Figure 4b, respectively. Notably, there is a pair of complex conjugate poles stemming from the LSC. These poles can potentially cause an underdamped oscillatory response, so the controller gains should be selected such that the closed-loop poles of the system are real and stable. As the operational state changes, the mode of operation of each converter changes, leading to a different set of state space equations. Once the characteristic equation of the MPEI is determined, stability analysis can be performed on the complete power electronics system. More details are documented in [15,16].
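As a minimal illustration of the pole criterion, the sketch below classifies the roots of a generic second-order closed-loop characteristic polynomial $s^2 + a_1 s + a_0$. The coefficients are hypothetical and are not the gains of Table 3; for the full MPEI, one would instead compute the eigenvalues of the state matrix in Equation (7).

```python
import cmath


def second_order_poles(a1, a0):
    """Roots of the characteristic polynomial s^2 + a1*s + a0 = 0."""
    disc = cmath.sqrt(a1 * a1 - 4 * a0)
    return ((-a1 + disc) / 2, (-a1 - disc) / 2)


def assess(poles, tol=1e-9):
    """Return (stable, real): stable if all poles lie in the left half-plane,
    real if no pole has an imaginary part (no underdamped ringing)."""
    stable = all(p.real < 0 for p in poles)
    real = all(abs(p.imag) < tol for p in poles)
    return stable, real


print(assess(second_order_poles(100.0, 1e6)))   # (True, False): stable but underdamped
print(assess(second_order_poles(3000.0, 1e6)))  # (True, True): two real, stable poles
```

Raising the damping coefficient `a1` (in practice, retuning the controller gains) moves the complex conjugate pair onto the real axis, which is the design goal stated above.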

3. Energy Management System

As mentioned in the introduction, the Q-learning-based EMS (QEMS) implemented in this study is an MLA-based EMS, and the MLA explored is the Q-learning algorithm. Q-learning is a model-free reinforcement learning method based on the Markov decision process. The Q-learning algorithm views the MPEI as an agent that implements a controlled Markov process, with the goal of selecting an optimal action for every possible state [17].

Q-learning operates in three simple steps: reward calculation, Q-value update, and locating the maximum Q value. The reward calculation applies user-set criteria that assign either a positive reward for desirable results or a negative penalty for undesirable results. Many parameters can be chosen for reward calculation, depending on the application; this is discussed in more detail in the following paragraphs. The Q value is the value stored in the Q-table and is generated using Equation (12) [17], which relates the current state, the action performed, the subsequent state, the immediate reward, the new Q value, the previous Q value, and the learning factor, which is most often chosen to be very close to 1. Lastly, the maximum Q value for a particular state is chosen using the argmax function, as shown in Equation (13). This article does not include an extensive discussion of Q-learning algorithms, as more details are provided by Watkins in [17].
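The sketch below shows one Q-value update and the argmax selection on a 32 × 24 table. Because Equation (12) is not reproduced in this text, it assumes the standard one-step Q-learning update from [17], $Q(s,a) \leftarrow Q(s,a) + \eta\,[r + \gamma \max_{a'} Q(s',a') - Q(s,a)]$, with a learning rate `eta` and a discount factor `gamma` whose names and values are hypothetical. `argmax_first` returns the first column holding the maximum, matching the tie-breaking described later in this section.

```python
def argmax_first(row):
    """Index of the largest Q value; ties resolve to the first occurrence."""
    return row.index(max(row))


def q_update(Q, s, a, r, s_next, eta=0.5, gamma=0.9):
    """Standard one-step Q-learning update (Watkins form), applied in place."""
    target = r + gamma * max(Q[s_next])
    Q[s][a] += eta * (target - Q[s][a])
    return Q[s][a]


Q = [[0.0] * 24 for _ in range(32)]       # 32 states x 24 actions, all zeros
print(argmax_first(Q[5]))                 # 0: every entry ties, first column wins
q_update(Q, s=5, a=0, r=-40.0, s_next=5)  # a -40 penalty for action 0 in state 5
print(Q[5][0])                            # -20.0
print(argmax_first(Q[5]))                 # 1: the penalized column is now avoided
```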

The Q-table considered for this application has all possible states of the input features as rows and all possible actions as columns. The input features considered are weather, time, grid voltage, the state of charge (SoC) of the battery, and the SoC of neighboring battery storage systems. For simplicity, binary states of “good” or “bad” have been chosen for each of these features. Hence, the total number of possible states with the given features is $2^5 = 32$. The actions are the modes of the MPEI, that is, the combined modes of the four converters. As discussed in Section 2, the total number of possible actions is 36. Restricting the BI to the discharge and charge modes, eliminating its turn-off mode, reduces the number of actions to 24. Therefore, the Q-table for this application is a 32 × 24 matrix.
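To index the Q-table, each observation of the five binary features has to be mapped to one of the 32 rows. A minimal sketch, assuming a bit-per-feature encoding (“good” = 0, “bad” = 1); the feature keys and bit ordering are arbitrary choices for illustration, not taken from the paper:

```python
FEATURES = ("weather", "time", "grid_voltage", "battery_soc", "neighbor_soc")


def state_index(obs):
    """Map five binary features ("good" -> 0, "bad" -> 1) to a row index 0..31."""
    idx = 0
    for bit, name in enumerate(FEATURES):
        if obs[name] == "bad":
            idx |= 1 << bit
    return idx


N_STATES = 2 ** len(FEATURES)  # 32
N_ACTIONS = 3 * 2 * 2 * 2      # GSC (3) x BI charge/discharge (2) x DERC (2) x LSC (2) = 24
Q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]

obs = {"weather": "good", "time": "good", "grid_voltage": "bad",
       "battery_soc": "good", "neighbor_soc": "good"}
print(state_index(obs))  # 4: only the grid-voltage bit is set
```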

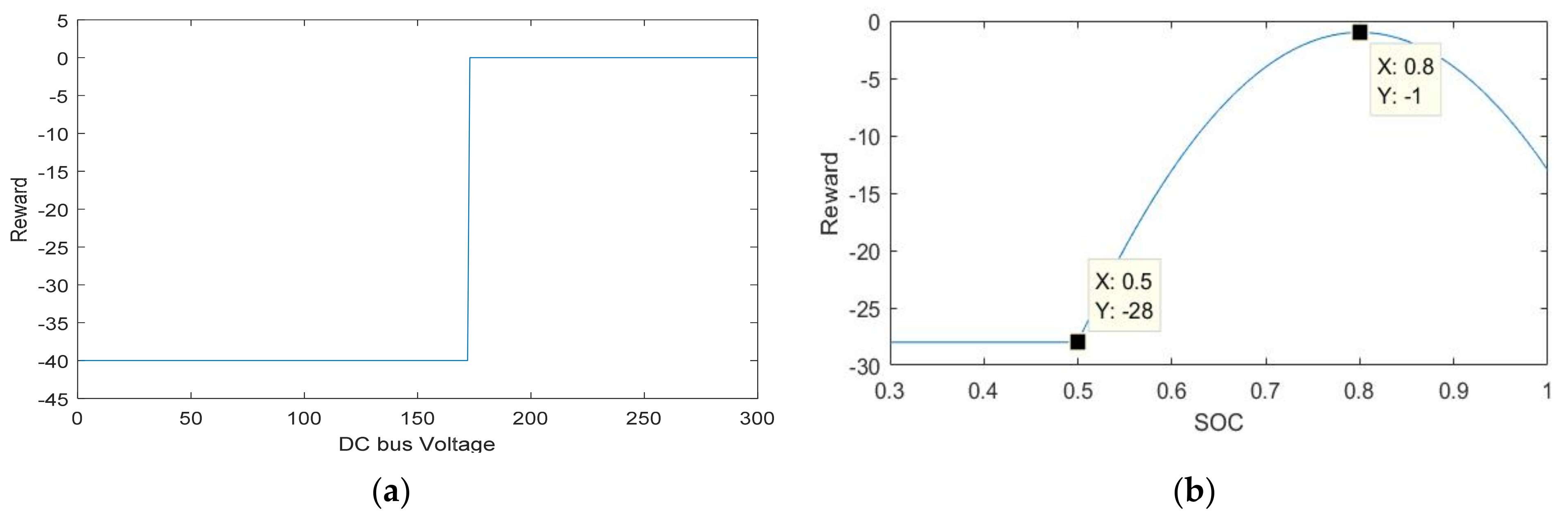

Reward calculation is an important step: it defines the parameters of interest and their priorities and, most importantly, shapes the behavior of the Q-learning algorithm to fit the application at hand. The objective of this study is to explore the feasibility of Q-learning algorithms when used as EMSs. EMSs can have complex multi-objective goals, but for proof-of-concept purposes, this study focuses on the single objective of reliability improvement. Since the LSC is directly connected to the DC bus, reliability translates to maintaining the DC bus at the desired value in all possible states. Hence, the main reward parameter is the DC bus voltage. The bus voltage reward is generated using a threshold detection method, as shown in Figure 5a: the Q-learning algorithm penalizes an action with −40 points if the DC bus voltage drops below 170 V. The second parameter of interest is the SoC of the battery. The SoC reward is generated using a continuous function, a bell-shaped curve with its maximum at 80% SoC and a lower limit of −28 at 50% SoC and below, as shown in Figure 5b. The total reward is the sum of both rewards.
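The two reward terms can be sketched as follows. The threshold values (−40 below 170 V, −28 at or below 50% SoC) come from the text; the exact curve between 50% and 100% SoC is an assumption: an inverted parabola with its peak of 0 at 80% SoC, scaled so that it reaches −28 at 50% and clamped there.

```python
def bus_voltage_reward(v_bus_min, threshold=170.0, penalty=-40.0):
    """Threshold detection: penalize the action if the DC bus dips below 170 V."""
    return penalty if v_bus_min < threshold else 0.0


def soc_reward(soc_final, peak=80.0, floor_soc=50.0, floor=-28.0):
    """Assumed inverted parabola: 0 at 80% SoC, clamped at -28 for SoC <= 50%."""
    if soc_final <= floor_soc:
        return floor
    k = floor / (peak - floor_soc) ** 2  # curvature chosen to hit -28 at 50% SoC
    return max(floor, k * (soc_final - peak) ** 2)


def total_reward(v_bus_min, soc_final):
    """Total reward is the sum of the bus-voltage and SoC terms."""
    return bus_voltage_reward(v_bus_min) + soc_reward(soc_final)


print(total_reward(150.0, 40.0))  # -68.0: the worst case quoted in Section 5
print(total_reward(175.0, 80.0))  # 0.0: bus held and SoC at the optimum
```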

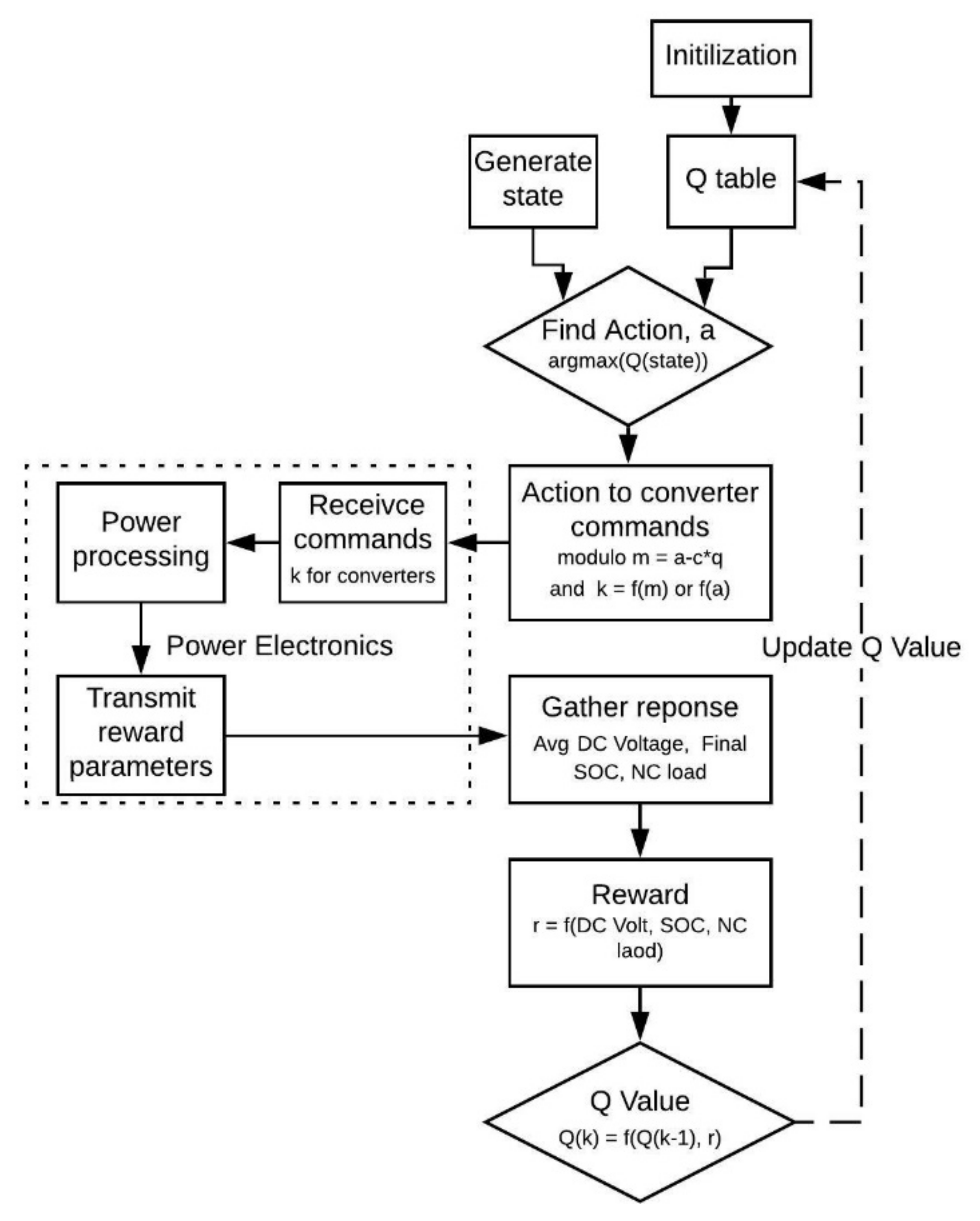

The flow diagram for the operation of the implemented Q-learning-based EMS is shown in Figure 6. The Q-learning algorithm first detects the input features to generate the state. Once the input state is detected, the corresponding row of the Q-table is scanned using Equation (13) to locate the column with the maximum Q value. The resulting column number is called the action value, $a$, in this article. The $a$ value is then communicated to the MPEI, where it is translated into specific operational functions for each of the converters. Once the corresponding power conversion occurs, the response is recorded, and the reward parameters are communicated back to the EMS. The reward is then calculated, and the Q value for the corresponding element of the Q-table is updated using Equation (12). The process is repeated continually. The Q-table is initialized with all zeros, and if the maximum Q value occurs more than once in a row, argmax picks the column where it first encounters that value.
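The loop in Figure 6 can be sketched end to end against a toy stand-in for the MPEI. Everything environment-related here (`GOOD_ACTION`, `mpei_response`, the two states, and the four actions) is hypothetical, and the update again assumes the standard Q-learning form with hypothetical `eta` and `gamma`. Because the table starts at zero and every reward is non-positive, greedy selection with first-occurrence tie-breaking tries untried actions on its own, so no separate exploration term is used in this sketch.

```python
import random

# Hypothetical stand-in for the MPEI: in each input state only one action keeps
# the DC bus above the 170 V threshold.
GOOD_ACTION = {0: 2, 1: 3}
STATES = list(GOOD_ACTION)


def mpei_response(state, a):
    """Toy model: minimum DC bus voltage observed after applying action a."""
    return 180.0 if a == GOOD_ACTION[state] else 150.0


def reward(v_bus_min):
    """Threshold penalty on the DC bus voltage (non-positive rewards only)."""
    return -40.0 if v_bus_min < 170.0 else 0.0


def train(n_iter=50, n_actions=4, eta=0.5, gamma=0.9, seed=1):
    rng = random.Random(seed)
    Q = [[0.0] * n_actions for _ in STATES]  # Q-table initialized with zeros
    state = rng.choice(STATES)               # detect input features -> state
    for _ in range(n_iter):
        row = Q[state]
        a = row.index(max(row))              # first column holding the max Q value
        r = reward(mpei_response(state, a))  # apply a, record response, score it
        next_state = rng.choice(STATES)      # next input state drawn at random
        row[a] += eta * (r + gamma * max(Q[next_state]) - row[a])
        state = next_state
    return Q


Q = train()
print([row.index(max(row)) for row in Q])  # [2, 3]
```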

4. Theoretical Integration

As discussed in Section 2, the MPEI can have different state space equations depending on the modes of operation of its converters. The converter modes depend on the output of the Q-learning algorithm, the $a$ value. The theoretical integration of the MPEI and Q-learning therefore depends on how the $a$ value is translated into commands for each converter, which allows the characteristic equations of the MPEI to be determined directly from the Q-learning output. This simplifies stability analysis, because only the active converter modes need to be considered.

The command for each converter is denoted by $k_x$, where $x$ is the subscript corresponding to the converter ($g$ represents the GSC, $b$ the BI, and so on). For instance, $k_g = 1, 2, 3$ refer to the GSC operating in rectifier mode, operating in inverter mode, and turned off, respectively. The $k_x$ values for each converter are described in Table 5. Furthermore, each $k_x$ value is associated with a converter state space model, as shown in Table 5 and Equation (14). For example, the state space model of the GSC in rectifier mode can be obtained by expanding the corresponding term of Equation (14) into Equation (1). The state space equations for the remaining converters in their different modes can be obtained similarly.

The $k_x$ values for each converter can be obtained using Heaviside step functions together with modular arithmetic. The Heaviside function is defined by Equation (15), where $h(x) = 0$ for $x \le 0$ and $h(x) = 1$ for $x > 0$. The value of $k_g$ for the GSC is determined directly using Equation (16), where $k_g = 1$ for $a < 8$, $k_g = 2$ for $8 \le a < 16$, and $k_g = 3$ for $a \ge 16$. This value of $k_g$ determines the operation of the GSC, as shown in Table 4. For the remaining converters, modular arithmetic is used in addition to the Heaviside function, as shown in Equations (17) and (18), where $m$, $c$, and $q$ are all positive integers and $q$ is the maximum possible value of $a$. Each converter is assigned a different value of $c$; the parameter $m$ is then calculated using $c$, and $m$ is finally used to calculate $k_x$. For instance, when $a = 7$: for the GSC, $k_g = 1$; for the BI, $m = 3$ (since $c = 4$) and $k_b = 2$; for the DERC, $m = 7$ and $k_d = 2$; and for the LSC, $m = 1$ and $k_l = 2$. The output of the Q-learning algorithm can hence be translated into commands for each converter in the MPEI.
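A sketch of this translation is given below. It is a reconstruction, not a transcription of Equations (16)–(18): the GSC rule reproduces the stated thresholds, while for the other converters it assumes $m = a \bmod c$ and a switch to $k_x = 2$ once $m$ reaches $c/2$. The value $c = 4$ for the BI is stated in the text; $c = 8$ (DERC) and $c = 2$ (LSC) are inferred from the worked example, and actions are assumed to be indexed $a = 0, \dots, 23$.

```python
def h(x):
    """Heaviside step as defined in Eq. (15): 0 for x <= 0, 1 for x > 0."""
    return 1 if x > 0 else 0


def k_gsc(a):
    """GSC command: 1 for a < 8, 2 for 8 <= a < 16, 3 for a >= 16."""
    return 1 + h(a - 7) + h(a - 15)


def k_two_mode(a, c):
    """Assumed two-mode rule: m = a mod c, then k = 1 if m < c/2, else 2."""
    m = a % c
    return 1 + h(m - (c // 2 - 1))


# c = 4 for the BI is stated in the text; c = 8 (DERC) and c = 2 (LSC) are inferred
# from the worked example (m = 7 and m = 1 when a = 7).
C = {"BI": 4, "DERC": 8, "LSC": 2}


def commands(a):
    """Translate an action value a (assumed 0..23) into per-converter commands k_x."""
    return {"GSC": k_gsc(a), **{name: k_two_mode(a, c) for name, c in C.items()}}


print(commands(7))  # {'GSC': 1, 'BI': 2, 'DERC': 2, 'LSC': 2}
```

Under these assumptions, all 24 action values map to distinct command sets, so each $a$ selects a unique MPEI mode.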

Furthermore, each $k_x$ value is associated with a converter equation, and the combination of the individual converter equations determines the total system equation of the MPEI. This can be represented as Equation (19), where the components of the leading matrices, $g_1$, $g_2$, $g_3$, $b_1$, $b_2$, $b_3$, $d_1$, $d_2$, $l_1$, and $l_2$, take values of 1 or 0 depending on the output of the Q-learning algorithm. The leading matrices are all initialized with zeros. Once the $k_x$ values are determined, the $n$th component of each leading matrix is set to 1, where $n = k_x$, as shown in Equation (18). For the scenario in which $a = 7$, Equation (19) simplifies to Equation (20), which can be further expanded using Equations (1)–(6), resulting in Equations (8)–(11). The stability and control analysis can then be performed for the MPEI in this particular mode without considering all possible combinations. Similarly, if the scenario changes to $a = 20$, the system equations of the MPEI change to a scenario in which the GSC is off, the BI is discharging, and the DERC and LSC are on; these equations are presented in [16]. Hence, the system characteristics of the MPEI vary with the output (action value) of the Q-learning algorithm.
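The selection performed by the leading matrices can be sketched as one-hot vectors. In this minimal sketch, the labels such as `"g1"` are hypothetical stand-ins for the converter models of Equations (1)–(6); only the labels whose leading component is 1 survive in the combined MPEI equation.

```python
# Number of modes per converter, matching the leading-matrix components
# g1..g3, b1..b3, d1..d2, l1..l2 named in the text.
N_MODES = {"g": 3, "b": 3, "d": 2, "l": 2}


def leading_vectors(k):
    """One-hot leading vectors: component n is 1 where n equals k_x (1-based)."""
    vecs = {}
    for x, n_modes in N_MODES.items():
        v = [0] * n_modes  # initialized with zeros
        v[k[x] - 1] = 1    # set the n-th component, n = k_x
        vecs[x] = v
    return vecs


def active_models(k):
    """Labels of the per-converter models that remain after the weighted sum."""
    vecs = leading_vectors(k)
    return [f"{x}{n + 1}" for x, v in vecs.items() for n, bit in enumerate(v) if bit]


k_a7 = {"g": 1, "b": 2, "d": 2, "l": 2}  # commands for a = 7 from the text
print(leading_vectors(k_a7)["g"])        # [1, 0, 0]
print(active_models(k_a7))               # ['g1', 'b2', 'd2', 'l2']
```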

5. Simulation

The system with the Q-learning algorithm and the MPEI has been simulated using Simulink. The MPEI model consists of the four converters described in Section 2; the complete MPEI model is described in detail in [13], and the same Simulink model is used in this study. However, in [13], the simulation resides entirely within the Simulink platform, where separate blocks for power electronics, communication, and machine learning are included in the model, and the SVM was implemented using the MATLAB Classification Learner app. In this study, the Q-learning algorithm is scripted as an m-file, which generates the input features, executes the Q-learning algorithm, initializes and runs the Simulink-based MPEI model, and calculates and updates the rewards.

The Q-table is initialized with all zeros. Before the Q-learning algorithm can be successfully implemented, the Q-table must be filled with appropriate reward values; this process is called training. Training is most often conducted in a controlled lab environment prior to deployment. For training purposes, the input features, and thus the input states of the iterations, are randomly generated. The simulated system has 32 states with 24 actions each; hence, each state has to occur at least 24 times before training is complete, so the minimum number of iterations required is 768 (32 × 24). A total of 2550 iterations were performed to ensure that the Q-table is appropriately populated. A workstation with 16 cores was used, with 15 cores operated in parallel to reduce computation time.
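The minimum-iteration argument assumes every draw lands where it is needed. Using the 32 × 24 Q-table of Section 3 and assuming uniformly random input states (an assumption about how the features were generated), the sketch below counts how many draws are needed until every state has occurred at least 24 times, which illustrates why a margin above the lower bound is sensible.

```python
import random


def iterations_to_cover(n_states=32, visits_needed=24, seed=0):
    """Count uniform random state draws until every state occurs visits_needed times."""
    rng = random.Random(seed)
    counts = [0] * n_states
    short = n_states  # states that still need more visits
    iters = 0
    while short:
        s = rng.randrange(n_states)
        counts[s] += 1
        if counts[s] == visits_needed:
            short -= 1
        iters += 1
    return iters


print(32 * 24)                # 768: lower bound if every draw were useful
print(iterations_to_cover())  # at least 768, since random draws revisit states
```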

Since the MPEI model used in this study is identical to that in [13], the stability and dynamics of the MPEI in different modes can be confirmed using the results presented in [13]. The more important outcomes of the Q-learning-based simulations are the results at the end of the iterations, which confirm the learning capability and performance of the Q-learning algorithm.

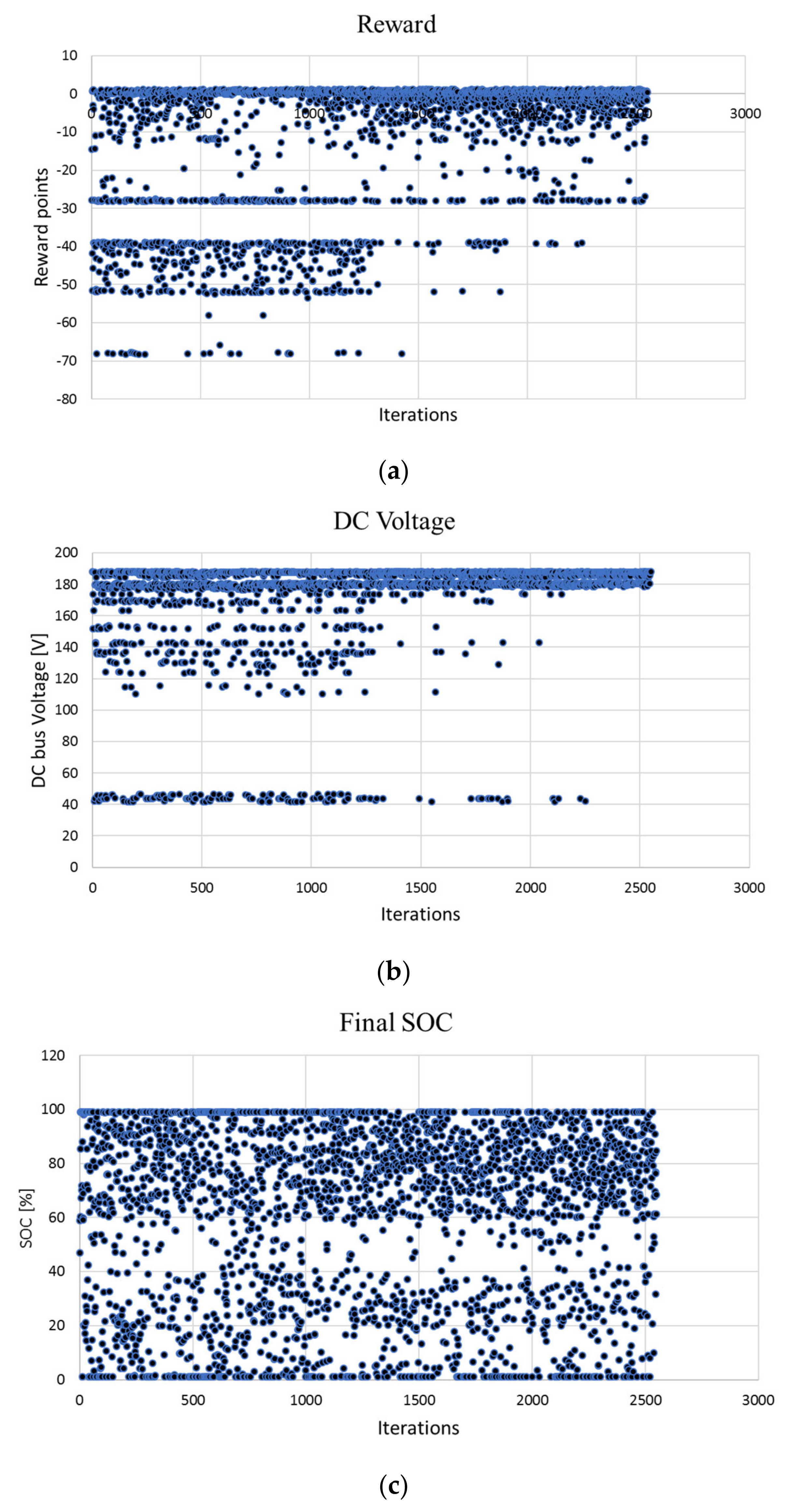

Figure 7a–c shows the total reward, the minimum DC bus voltage, and the SoC of the battery at the end of each iteration. The rewards were calculated as discussed in Section 3, using the reward functions shown in Figure 5. The Q-table is initialized with all zeros, and the input features are randomly chosen. Therefore, as the number of iterations increases, the reward values are expected to increase (become less negative), the DC bus voltage is expected to be maintained at its nominal value, and the final SoC of the battery is expected to follow the preference encoded in Figure 5b. These trends can be observed in the results presented in Figure 7a–c.

The total reward is the sum of the DC bus voltage reward and the final-SoC-based reward shown in Figure 5. Hence, the maximum possible penalty in this simulation is −68. If the system maintains the DC bus voltage but the final SoC falls below 50%, the reward is −28, and if the final SoC is 80% but the DC bus voltage drops below 170 V, the resulting penalty is −40. These values can be clearly seen in Figure 7a. The stepwise nature of the DC bus reward and the lower limit of the SoC reward result in the steps in the total reward distribution, while the continuous SoC reward (inverse parabola) for final SoC values above 50% contributes the values between the steps. More importantly, as seen in Figure 7a, highly negative penalties start disappearing as the number of iterations increases, indicating that the Q-learning algorithm is learning and making corrective decisions.

Similar trends can be observed in the iterative distribution of the DC bus voltage. During the initial iterations, the DC bus voltage frequently drops below the 170 V threshold. The bus voltage becomes more stable as the number of iterations increases; after about 2000 iterations, the DC bus voltage drops in only six out of 550 iterations. Hence, the probability of obtaining a stable DC bus voltage with this system, considering all possible scenarios, is about 0.989.

Lastly, Figure 7c presents the final SoC recorded at the end of each iteration. The power electronics simulation runs for 0.3 s, but time has been scaled such that 0.3 s of charging or discharging changes the total SoC of the battery by 6%. The initial SoC of the battery was chosen randomly between 0% and 100%; this greatly enlarged the set of situations the algorithm had to learn, resulting in the need for a large number of iterations (2550). This is also why learning cannot easily be discerned from the final SoC distribution; however, tracking the final SoC for one particular state reveals the improvement. The final SoC reward has a lower limit starting at 50% SoC, which implies that the penalty for keeping the battery SoC at 50% is the same as that for depleting it further. Hence, at a 50% initial SoC, the Q-learning algorithm commands the non-critical (NC) load to stay on even though the battery SoC is low. In practical applications, discharging is stopped when the SoC drops below a certain level; such protection was implemented during experimentation.
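The time scaling can be expressed as a simple linear SoC update. A minimal sketch, assuming a constant charge or discharge rate so that one 0.3 s simulation step moves the SoC by exactly 6%; the optional `cutoff` argument is a hypothetical stand-in for the discharge protection mentioned above.

```python
SOC_STEP_PCT = 6.0  # SoC change produced by one full simulation run
SIM_TIME_S = 0.3    # simulated time of one run


def final_soc(soc_init, direction, t=SIM_TIME_S, cutoff=None):
    """Scaled SoC after t seconds; direction = +1 (charge), -1 (discharge), 0 (idle).

    If cutoff is given, discharging stops at that level (or never starts below it).
    """
    soc = soc_init + direction * SOC_STEP_PCT * (t / SIM_TIME_S)
    if direction < 0 and cutoff is not None:
        soc = max(soc, min(soc_init, cutoff))
    return min(100.0, max(0.0, soc))


print(final_soc(65.0, +1))               # 71.0
print(final_soc(32.0, -1, cutoff=30.0))  # 30.0: discharge halted by protection
```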

Hence, the simulation results verify the successful training of the Q-learning algorithm and confirm its effective implementation. Furthermore, since the LSC is connected to the DC bus and isolated from fluctuations in the grid or any other source, the lower penalties and stable bus voltage toward the end of training (>2000 iterations) verify that this Q-learning-based EMS can ensure a stable critical load voltage. As shown in Figure 7b, the proposed Q-learning-based EMS allowed the load voltage to drop only 7 times in the last 550 iterations. Assuming that the model is fully trained after 2000 iterations and that the grid voltage is bad (below 170 V$_{pk}$) for half of the iterations, the suggested EMS ensures the stability of the load voltage with an overall probability of 0.987, and the probability of obtaining a stable load voltage when the grid is unavailable is 0.974. Therefore, it can be concluded that the critical reliability of the system can be improved by using the proposed EMS compared with existing systems without an intelligent EMS. A thorough comparison with other AMG studies is not included in this article, since its current objective is to prove the feasibility of a Q-learning-based EMS and to demonstrate the initial improvement in critical reliability; such comparisons will be performed as part of future work.
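The quoted probabilities follow from simple counting. The sketch below reproduces them, assuming (as an inference, not a statement from the text) that every voltage drop occurred while the grid was unavailable, so that the grid-off probability is computed over half of the 550-iteration window.

```python
def stability_probabilities(drops, window, grid_bad_fraction=0.5):
    """Empirical probabilities of a stable load voltage from a count of drops.

    Assumes every drop happened while the grid was bad (an inference).
    """
    overall = 1 - drops / window
    grid_off = 1 - drops / (window * grid_bad_fraction)
    return overall, grid_off


overall, grid_off = stability_probabilities(drops=7, window=550)
print(f"{overall:.4f} {grid_off:.4f}")  # 0.9873 0.9745
```

This matches the quoted 0.987 and, to within rounding, 0.974; with the six drops quoted earlier in this section, the same overall calculation gives 0.989.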

The algorithm developed here is used with the MPEI test bed for experimental verification in the following section.

6. Experimental Verification

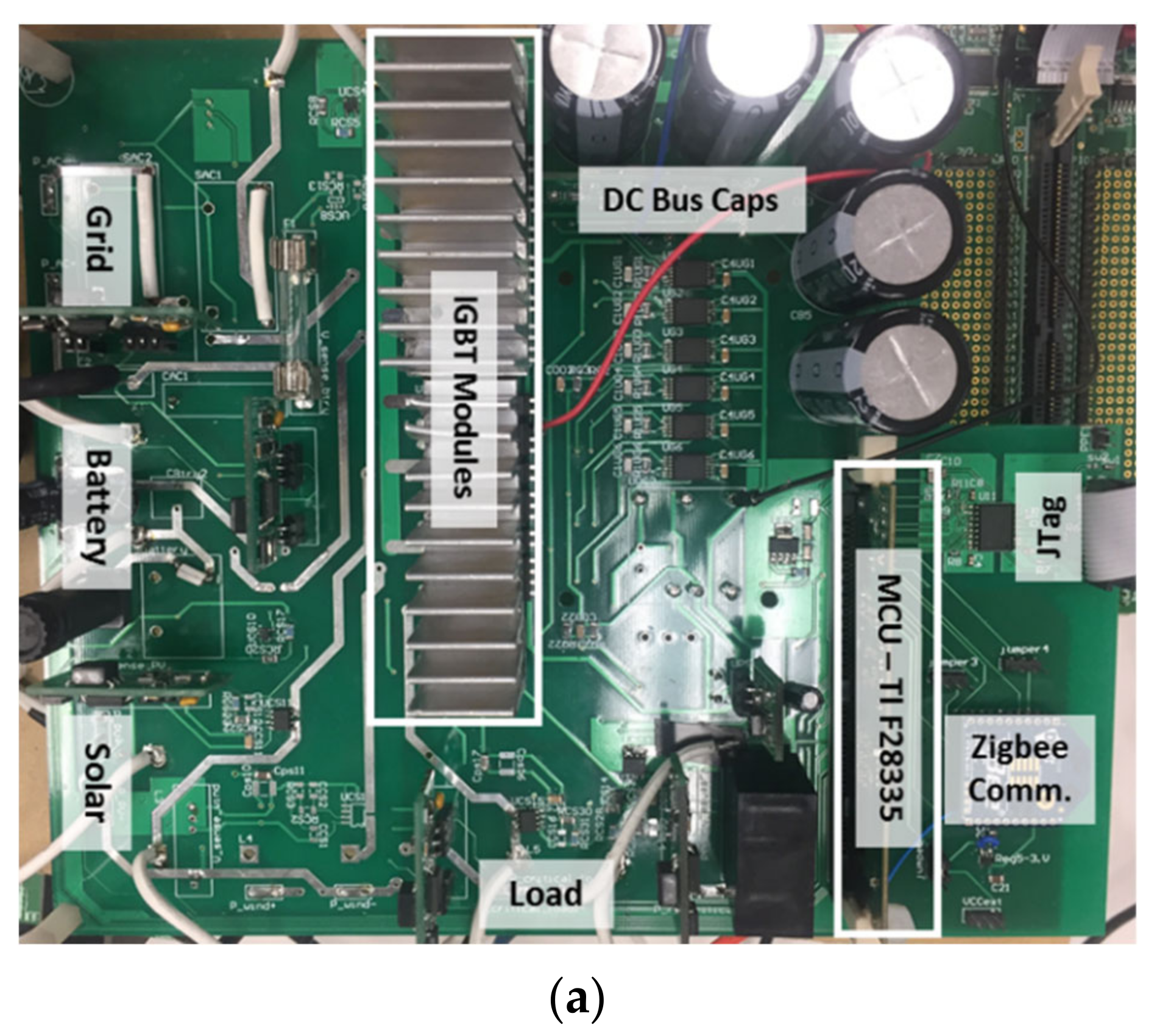

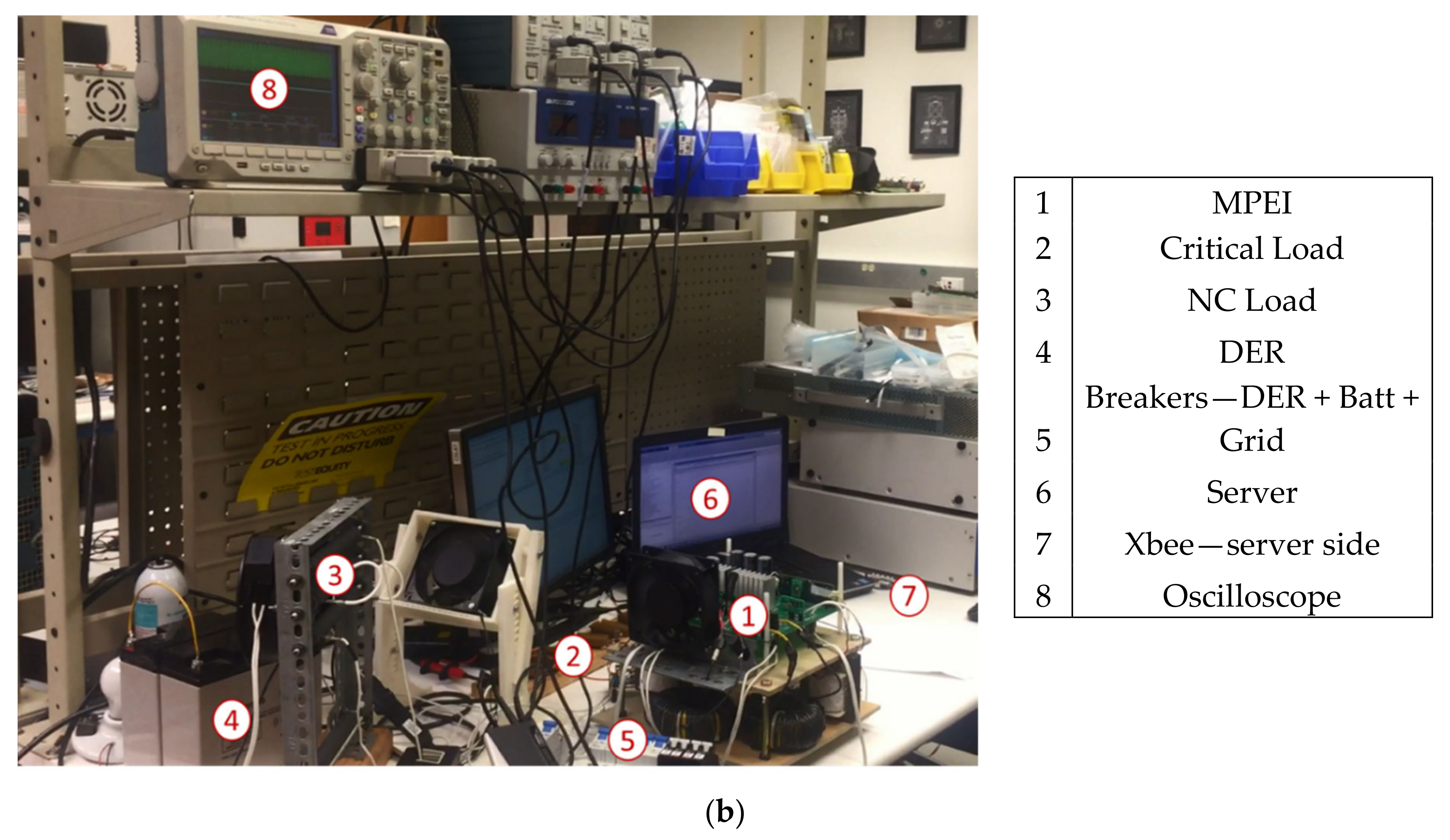

The experimental setup includes an MPEI unit developed as discussed in Section 2; the Q-learning-based EMS with the corresponding feature generation, scaling, and reward calculation techniques (written in MATLAB); and wireless communication between the EMS and the MPEI.

The 2 kW MPEI unit, designed as part of this study, uses two STGIPS30C60 IGBT modules with three bridge legs each and a TMS320F28335 microcontroller unit (MCU). The details of the MPEI board are shown in Figure 8a. The MPEI has three input sources, labeled Grid, Battery, and Solar, and an output for the non-critical load. For this experiment, the critical load is directly connected to the DC bus; therefore, the goal of ensuring stable power to the critical load translates to maintaining the DC bus voltage at the desired value. Communication between the MLA server and the MPEI is established wirelessly using XBee S2C modules based on the Zigbee protocol. The complete experimental setup is shown in Figure 8b. The DER was emulated using a smaller battery bank, shown as #4 in Figure 8b.

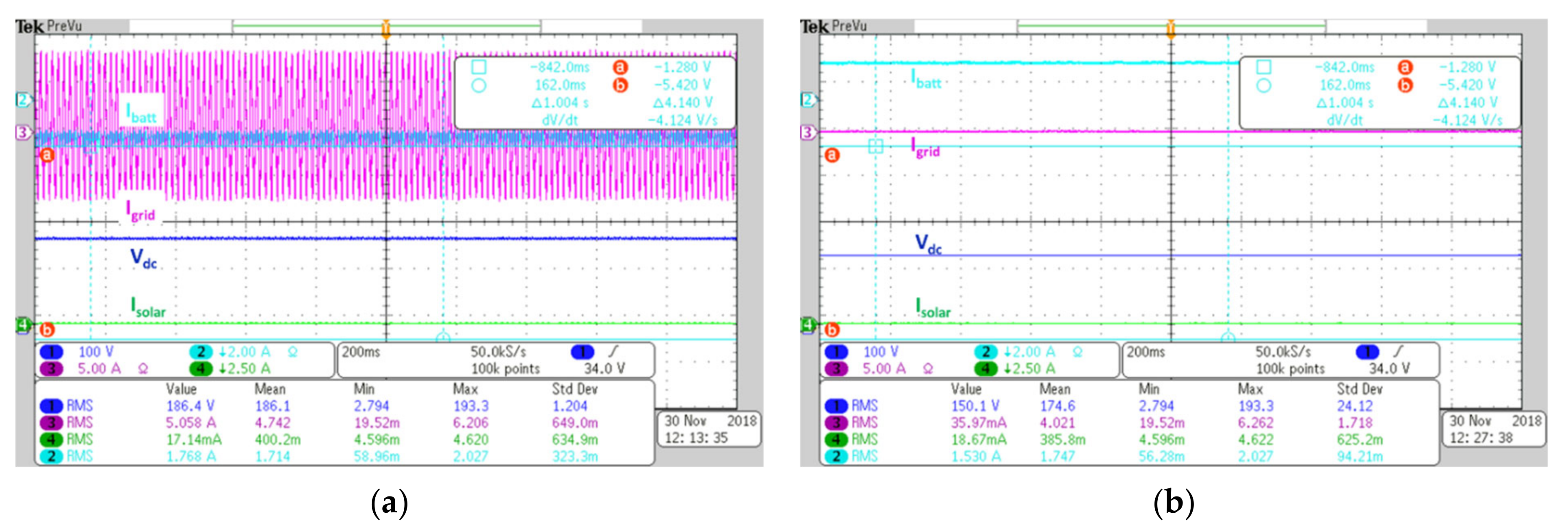

The MPEI was first designed, developed, and tested without the EMS; communication with the server was then established; the Q-learning-based EMS was developed and implemented; and finally, the stability of the entire system was verified by manually varying the input states of the Q-learning algorithm. The results for one such instance are shown in Figure 9a,b, where the grid, initially on, turns off and then turns back on. Figure 9b shows the transient response when grid power returns. The stable performance of the MPEI is apparent in Figure 9; a similar process was repeated for all possible input states and output actions.

The experiments were conducted to verify the simulation results. In simulation, grid faults and DER availability (such as day and night for solar) could be created in software; for the experiments, these scenarios had to be created physically. The GSC and DERC were used to create such disruptions. This is equivalent to real operating scenarios, in which the GSC is on when the grid is available and the DERC is on when enough solar energy is available. The functionality of the GSC is reduced to the off mode or rectification, since power injection to the grid has been disabled. This simplifies the Q-table to a 4 × 6 table: the possible states are determined by the feasible combinations of GSC and DERC modes, and the actions are the different combinations of BI and LSC modes. Hence, the Q-table resembles Table 6, where the function of each converter is outlined. Furthermore, Section 5 reveals a limitation of the SoC reward that was used. Therefore, the SoC reward was modified to extend the lower limit to a final SoC of 25% rather than 50%, and the lower-limit penalty was changed to −150 points. The parabolic function was changed to assign a penalty of −45 for a final SoC of 50% and a maximum reward close to 0 at 80%. However, the BI stops discharging if the SoC falls below 30% as a protection measure; hence, a final SoC of 25% should never occur. The initial SoC was fixed at 65% to reduce the number of iterations required to train the algorithm. The DC bus voltage penalty was increased to −100 and applies when the bus voltage falls below 150 V. Lastly, a reward category related to the non-critical load has been introduced: if the non-critical load voltage is below the threshold (or the non-critical load is turned off), a penalty of −30 is assigned. This was added to prevent unnecessarily turning off the non-critical load.

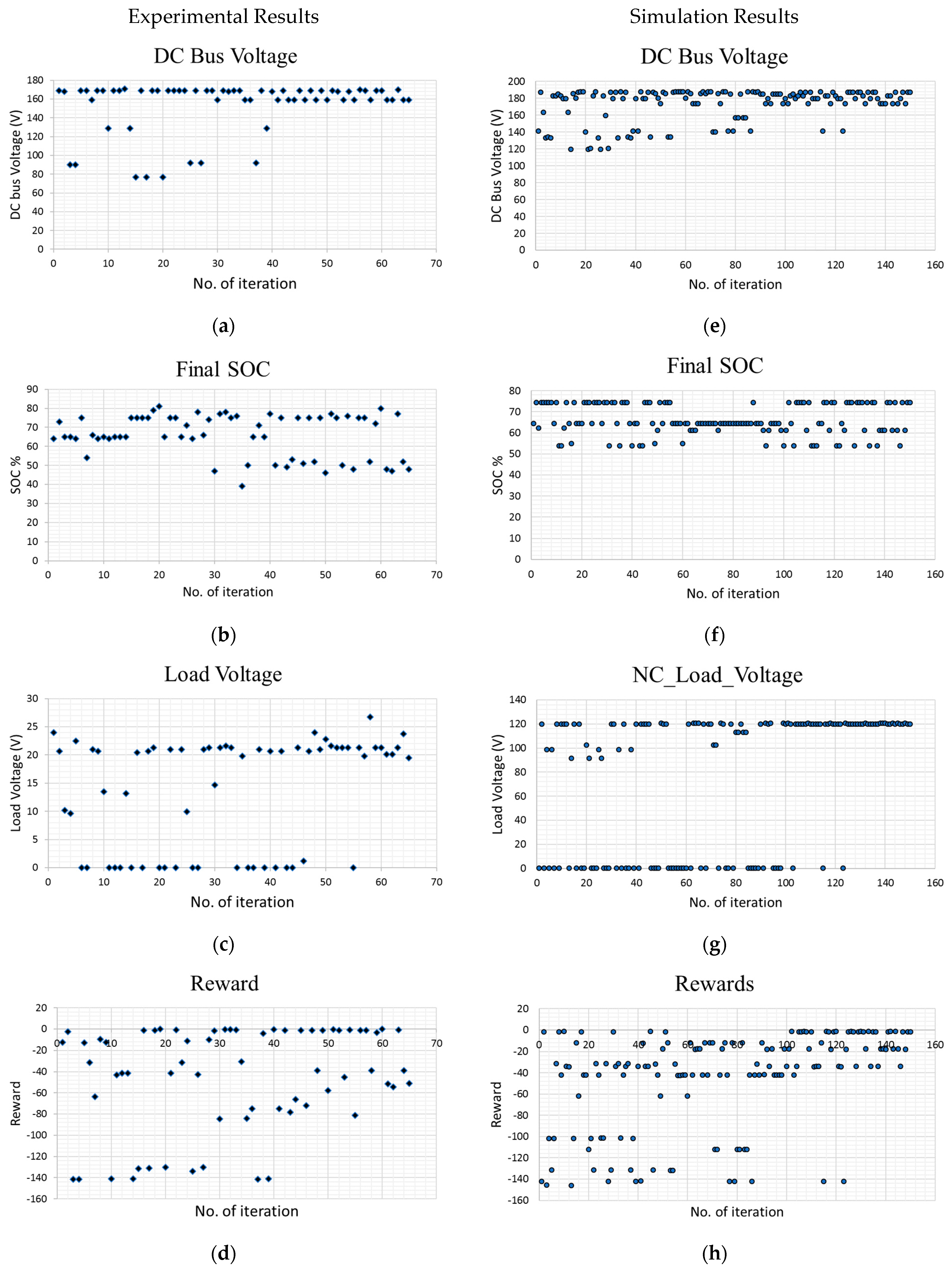

The experimental results are presented in Figure 10a–d and in Table 5. The first and most important observation is that the DC bus voltage never falls below the threshold after the 40th iteration. Since the critical load is connected directly to the DC bus, it experiences no instability after the 40th iteration. The DC bus voltage has two levels because different references are assigned to the GSC and BI (170 V and 160 V, respectively) so that the actions can be distinguished in the results. The final SoC has three distinct bands above and below the initial SoC of 65%, indicating whether the battery charged or discharged. As seen in Figure 10c, the non-critical load is kept on in most of the iterations toward the end. Finally, Figure 10d clearly shows that highly negative reward values disappear as the number of iterations increases, indicating that the Q-learning algorithm is functioning and the training process is successful. The responses of the system with a trained EMS for states 1 and 3 are shown in Figure 11a,b. In state 1, grid power is available, so the GSC is on, and solar power is not available, so the DERC is off. As a result, the BI charges the battery, as indicated by the negative current, and the LSC is on. This agrees with the response dictated by action 1 of the Q-table (gray cell). In state 3, both the GSC and DERC are off; as a result, the BI discharges the battery (positive current) to maintain the DC voltage, and the LSC is on, as dictated by action 3 of the Q-table. The DC bus voltage does not drop below 150 V when the grid and DER power are cut off, indicating that the EMS is performing effectively. Furthermore, once the Q-learning algorithm is trained, the DC bus voltage never drops except during brief transients at mode changes.

The simulation presented in Section 5 has been modified to replicate the experimental scenario. The results are presented in Figure 10e–h. There are two notable differences between the simulation and experimental models. The first is that the simulation model includes an exponentially decaying randomness factor (α), which was later deemed unnecessary when using strictly negative rewards; hence, it was removed from the experimental MLA. This results in a higher number of iterations being required to train a model. The second is the amount of energy provided by the DER. In the simulation, a realistic model was created in which the DER could provide most of the required energy (83.33%) when the grid was off. However, since a smaller secondary battery bank was used in the laboratory experiment, the DER could only provide 24.91% of the required energy in that scenario. This affects the final SoC of the battery, as discussed in the following paragraphs.
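Why a randomness factor can become unnecessary with strictly negative rewards can be illustrated with optimistic initialization. This is our interpretation, not the authors' stated reasoning: if the Q-table starts at zero and every reward is negative, any untried action looks better than every tried one, so purely greedy selection still tries each action at least once. The single-state sketch below is hypothetical, with made-up rewards and a simplified update whose learning rate `lr` is unrelated to the paper's α.

```python
# Hypothetical single-state illustration; the reward values are made up.
REWARDS = [-40.0, -10.0, -70.0, -25.0]  # strictly negative reward per action


def run_greedy(rewards, steps=20, lr=0.5):
    """Pure greedy action selection (no randomness factor) from a zero Q-table."""
    q = [0.0] * len(rewards)     # 0 lies above every achievable (negative) reward
    visits = [0] * len(rewards)
    for _ in range(steps):
        # max() returns the first maximal index, so ties go to the lowest index.
        action = max(range(len(q)), key=q.__getitem__)
        visits[action] += 1
        # Single-state update: move the estimate toward the observed reward.
        q[action] += lr * (rewards[action] - q[action])
    return q, visits


q, visits = run_greedy(REWARDS)
print(visits)  # [1, 17, 1, 1]
```

Each action is tried once, after which the least-penalized action (index 1) is selected for the remaining steps. The trade-off is that a greedy learner never revisits an action after one poor sample, which is harmless here only because the sketch's rewards are deterministic.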

The most significant validation of the simulation results can be obtained by comparing the Q-tables of the simulated and experimental models. The comparison is provided in Table 6. Table 6 lists the possible states as rows and the actions as columns. For each state, determined by the combination of the GSC and DERC modes, the optimal action is the one with the lowest penalty in the corresponding row; these cells are highlighted with a gray background in Table 6. As can be seen from Table 6, the actions determined for all four modes through the simulation and the experiment are identical. This indicates that the proposed Q-learning algorithm performs consistently in the experiment and in the simulation. The reward values in the Q-tables are similar when the battery charges, but they show a significant mismatch when the battery discharges; this is due to the difference in the final SoC caused by the discrepancy in DER capacity and load size. However, the difference in reward values has no effect on the performance of the algorithm as long as the action (column) with the maximum reward for each state is the same. Furthermore, the penalties assigned to the second most favorable action in each state are significantly higher than those assigned to the chosen actions, indicating strong selections in both cases.
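The comparison applied to Table 6 (select the lowest-penalty action in each state row, check that both tables agree, and check that the runner-up is clearly worse) can be sketched as follows. The Q-values are invented placeholders, not the entries of Table 6, and the 4-by-4 shape and 0-based indices are assumptions made only for illustration.

```python
# Placeholder Q-tables (rows: states, columns: actions). The numbers are
# invented; they only mimic tables whose values differ while the policy agrees.
Q_SIM = [
    [-12.0, -58.0, -96.0, -140.0],
    [-61.0, -14.0, -88.0, -133.0],
    [-97.0, -90.0, -31.0, -150.0],
    [-142.0, -135.0, -149.0, -42.0],
]
Q_EXP = [
    [-13.0, -60.0, -99.0, -138.0],
    [-63.0, -15.0, -86.0, -131.0],
    [-120.0, -112.0, -55.0, -170.0],
    [-160.0, -155.0, -171.0, -70.0],
]


def greedy_actions(q_table):
    """Index of the least-negative entry (lowest penalty) in each state row."""
    return [max(range(len(row)), key=row.__getitem__) for row in q_table]


def selection_margins(q_table):
    """Gap between the best and second-best action value in each row."""
    margins = []
    for row in q_table:
        best, second = sorted(row, reverse=True)[:2]
        margins.append(best - second)
    return margins


same_policy = greedy_actions(Q_SIM) == greedy_actions(Q_EXP)
print(same_policy, selection_margins(Q_SIM), selection_margins(Q_EXP))
```

The last two placeholder rows differ noticeably between the tables, yet the selected actions coincide, which is the situation the text describes: differing reward values do not change the policy as long as the row-wise best action is the same, and large margins indicate a strong selection.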

The DC bus voltages obtained through the experiment and simulation are shown in Figure 10a and Figure 10e, respectively. The distributions of the DC bus voltage values match closely, except for the number of iterations. The slight differences in the maximum and minimum voltage values are due to the different references set in the simulation and experiment, which have no effect on the performance of the EMS. More significantly, in both models the DC bus voltage is maintained above the threshold voltage as the number of iterations increases, confirming the successful implementation of the Q-learning-based EMS.

The final SoC values obtained from the experiment and simulation are shown in Figure 10b and Figure 10f, respectively. The final SoC values of the trained experimental model are represented by the samples for iterations greater than 40; they take two distinct values of around 50% and 75%, while the simulation results show three values, at approximately 52%, 62%, and 75%. This can be explained by the difference in energy provided by the DER when the GSC is off, as mentioned earlier. In the simulation, when the GSC is off, the battery provides 16.67% of the total energy required, resulting in an SoC drop of around 2.2% and forming the third distinct cluster at an SoC value of 62%. In the experiment, on the other hand, the battery still supplies 75.09% of the total energy, resulting in an SoC drop of around 11%, which is very close to the 15% SoC drop that occurs when the DER is not available. The closeness of the resulting final SoC values (54% and 50%), along with communication noise, makes them appear as a single cluster with a larger margin of error, although they result from optimal actions in two different modes. Fluctuations of more than 4% can be noticed in Figure 10b.
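The SoC-drop argument above is a proportionality estimate. A minimal sketch, assuming the SoC drop scales linearly with the share of energy supplied by the battery (constant load and efficiency over the interval), reproduces the experimental figures; the function and constant names are hypothetical.

```python
def soc_drop(der_share: float, full_drop_pct: float) -> float:
    """SoC drop (percentage points) when the DER covers `der_share` of the energy.

    Assumes the drop scales linearly with the battery's share of the energy.
    """
    battery_share = 1.0 - der_share
    return battery_share * full_drop_pct


INITIAL_SOC = 65.0     # % fixed initial SoC in the experiment
FULL_DROP_EXP = 15.0   # % drop when the DER is unavailable (experiment)

drop = soc_drop(0.2491, FULL_DROP_EXP)   # DER supplies 24.91 % of the energy
print(round(drop, 2), round(INITIAL_SOC - drop, 1), INITIAL_SOC - FULL_DROP_EXP)
```

This gives a drop of about 11.26 percentage points and a final SoC of about 53.7%, consistent with the roughly 54% value quoted above and only about 4 points away from the 50% obtained when the DER is unavailable.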

The distributions of the simulated and experimental NC load voltages, shown in Figure 10g and Figure 10c, respectively, match closely. The NC load voltage reference values and LSC types differ between the simulation and the experiment; however, this has no effect on the objective of the study. Communication disturbances are apparent in the experimental results, as the NC load voltages fluctuate when the LSC is on. In both cases, the EMS keeps the LSC on more frequently as the number of iterations increases. This results in higher rewards for most of the modes when the initial battery SoC is 65%.

The total rewards for the simulation and experiment can be compared in Figure 10d,h. The reward values are in the same range, and their distributions are similar. The reward points obtained from the experiment are more scattered than those from the simulation; this results from the difference in SoC discussed above, communication disturbances, and other anomalies. More importantly, in both the experimental and simulated results, the total reward decreases in magnitude as the number of iterations increases. This verifies the accuracy of the simulation model and, hence, validates the simulation-based analysis provided in Section 5.

7. Discussion

This section discusses the impact of the proposed technology on the reliability of the power distribution system. Distribution system reliability can be measured using metrics such as the system average interruption frequency index (SAIFI), the system average interruption duration index (SAIDI), and the expected energy not served (EENS). Since frequency-related metrics do not apply to the study at this stage, system reliability and its improvement have been measured in terms of the supply duration and energy available to the critical load, analogously to SAIDI and EENS.
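For reference, the usual customer-oriented definitions of these indices are given below (SAIFI and SAIDI are standardized in IEEE Std 1366). The notation is ours: $\lambda_i$ is the interruption rate at load point $i$, $U_i$ its annual outage duration, $N_i$ the number of customers at that point, $N_T$ the total number of customers served, and $L_i$ the average load at point $i$. Treating the critical load as a single load point ($N_i = N_T = 1$), SAIDI reduces to the critical load's interruption duration and EENS to its average power multiplied by that duration, which is the sense in which duration and energy are used here.

$$
\mathrm{SAIFI} = \frac{\sum_i \lambda_i N_i}{N_T}, \qquad
\mathrm{SAIDI} = \frac{\sum_i U_i N_i}{N_T}, \qquad
\mathrm{EENS} = \sum_i L_i U_i
$$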

For the reliability analysis, the EMS of the setup described in Section 6 has been retrained, with the following changes made to simplify the implementation. The EMS has been trained in island mode to simplify training and reduce the training time. The initial SoC, which was fixed in Section 6, has been changed to four possible values: 35%, 55%, 75%, and 95%. Limiting the initial SoC to four discrete values rather than a continuous range reduces the size of the Q-table and allows faster training while providing enough data points for a valid justification. The combined SoC of the two batteries has been used as the system SoC, as they share a common DC bus. The two battery converters operate identically unless the SoC of one battery falls below 31%, in which case that battery's converter turns off. The DER (solar) is used to charge the battery and provide a supporting current; however, the DER alone cannot meet the energy demand needed to maintain the DC bus voltage. The system starts load curtailing when the SoC falls to 75% and disconnects all NC loads when the SoC falls to 55%.
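The curtailment behavior described above, and observed later in Figure 12a, can be summarized as a threshold rule. This is a rule-based summary for illustration only, not the Q-learning EMS, which learns this behavior from rewards. The strict-inequality boundaries, the capacity-weighted combination of the two batteries' SoC, and all names are hypothetical, and the per-battery converter cutoff is not modeled.

```python
# Thresholds taken from the text; the boundary handling is an assumption.
CURTAIL_SOC = 75.0   # % : NC load 1 (the larger NC load) is shed at or below this
SHED_ALL_SOC = 55.0  # % : all NC loads are shed at or below this
CUTOFF_SOC = 31.0    # % : the critical load is disconnected at or below this


def combined_soc(socs, capacities):
    """Capacity-weighted system SoC (the weighting is a hypothetical choice)."""
    return sum(s * c for s, c in zip(socs, capacities)) / sum(capacities)


def load_states(system_soc):
    """Return (critical_on, nc1_on, nc2_on) for a combined SoC in %."""
    critical_on = system_soc > CUTOFF_SOC
    nc1_on = system_soc > CURTAIL_SOC
    nc2_on = system_soc > SHED_ALL_SOC
    return critical_on, nc1_on, nc2_on


for soc in (95.0, 75.0, 55.0, 40.0, 31.0):
    print(soc, load_states(soc))
```

The four initial SoC values used for training (35%, 55%, 75%, and 95%) fall at or around these thresholds, which is one plausible reason they give enough coverage of the curtailment stages.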

The Q-table is provided as Table A1 in Appendix A. The optimal reward for each operational mode is highlighted in red; each mode corresponds to the combination of variable states given by the grayed cells at the ends of the rows and columns of the table. The results of implementing this Q-table are shown in Figure 12.

Figure 12a presents the results for the experimental setup described in Section 6, where the total power consumption of the system is 206.3 W: the critical load consumes 128 W, and NC loads 1 and 2 consume 53.3 W and 25 W, respectively. The gray dotted line represents the grid without any microgrids, the dashed orange line represents a conventional microgrid with no load curtailing, and the solid blue line represents the microgrid with the proposed EMS. Grid power is cut off at $t = t_0$; for a traditional grid with no microgrids, the critical load loses power immediately. The conventional microgrid with no load curtailing supplies all the loads until the battery SoC is depleted at $t = t_3$, at which point the critical load loses power as well. The proposed EMS keeps all the loads on until $t = t_1$, when the battery SoC falls below 75%. At $t_1$, the EMS starts load curtailing and disconnects NC load 1, the larger NC load. At $t_2$, the SoC drops to 55%, and all the NC loads are disconnected. The total SoC drops to 31% at $t_4$, and the critical load is disconnected. Compared to the case with no microgrids, the proposed QEMS reduces the average interruption duration of the critical load from 18 h to 10 h. Compared to a microgrid with no load curtailing, QEMS reduces the average interruption duration of the critical load from 12 h to 10 h, which corresponds to a SAIDI improvement of 16.67%.

However, the critical-to-non-critical load ratio considered in the experiment above (128 W:78.3 W) is exaggerated. Since the application targets residential locations, the NC loads are expected to constitute the majority of the total load. Hence, the Q-table and experimental results in Figure 12a have been extended to represent a more realistic scenario in which critical loads account for 20% of the total load, with the total load kept the same as in the previous case. The results are presented in Figure 12b. The average interruption duration for QEMS ($t_5 - t_4$) is now 3 h, which indicates a SAIDI improvement of 75%.
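The SAIDI improvements quoted above are relative reductions in the critical load's interruption duration. The short check below assumes that the conventional-microgrid baseline remains 12 h in the Figure 12b scenario (the total load is unchanged and that microgrid does not curtail); the 20% critical-load power follows from the 206.3 W total. Function and variable names are hypothetical.

```python
def relative_reduction(baseline_h: float, proposed_h: float) -> float:
    """Percentage reduction of the critical-load interruption duration."""
    return 100.0 * (baseline_h - proposed_h) / baseline_h


TOTAL_LOAD_W = 206.3   # total load, kept constant in both scenarios

cases = {
    "QEMS vs no microgrid (Figure 12a)": (18.0, 10.0),
    "QEMS vs conventional microgrid (Figure 12a)": (12.0, 10.0),
    "QEMS vs conventional microgrid, 20% critical (Figure 12b)": (12.0, 3.0),
}
for name, (baseline, proposed) in cases.items():
    print(f"{name}: {relative_reduction(baseline, proposed):.2f} %")

print(f"critical load at 20% of total: {0.2 * TOTAL_LOAD_W:.2f} W")
```

Relative to having no microgrid, the 18 h to 10 h reduction in Figure 12a corresponds to about 44.4%, alongside the 16.67% and 75% improvements relative to the conventional microgrid.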