Abstract

Complex mechanical systems used in the mining industry for efficient raw material extraction require proper maintenance. Especially in deep underground mines, regular inspection of machines operating in extremely harsh conditions is challenging; thus, monitoring systems and autonomous inspection robots are becoming increasingly popular. This paper proposes using a mobile unmanned ground vehicle (UGV) platform equipped with various data acquisition systems to support inspection procedures. Although experienced maintenance staff are able to identify problems almost immediately, their presence in dangerous areas is limited by the harsh conditions mentioned above, such as temperature, humidity, and the risk of poisonous gas. Therefore, it is recommended to use inspection robots that collect data, together with appropriate algorithms to process it. In this paper, the authors propose red-green-blue (RGB) and infrared (IR) image fusion to detect overheated idlers. An original image processing procedure is proposed that exploits characteristic features of conveyors to pre-process the RGB image and minimize non-informative components in the pictures collected by the robot. The authors then use this result in IR image processing to improve the signal-to-noise ratio (SNR) and, finally, to detect hot spots in the IR image. The experiments were performed on real conveyors operating in industrial conditions.

1. Introduction

Belt conveyors are used to transport bulk materials over long distances. Due to harsh environmental conditions, conveyor components are subject to accelerated degradation and must be monitored. One of the key problems is monitoring the degradation of the thousands of idlers that support the belt. Dust, high humidity, impulsive loads (when oversized pieces of material on the belt pass over an idler), temporary overloading, etc., may significantly accelerate the degradation of the idler coating and of the rolling element bearings installed inside the idler to allow it to rotate. The most popular ways to evaluate the condition of an idler are visual inspection, temperature measurement, and acoustic analysis. In this paper, we propose to use both RGB images and IR images from infrared cameras.

Even though the conveyor seems to be a simple mechanical system, other hot objects may appear around an idler. Thus, we propose an original image processing procedure that exploits characteristic features of conveyors to pre-process the RGB image and minimize non-informative components in the pictures collected by robots. Then, we use this result in IR image processing to improve the signal-to-noise ratio (SNR), and finally, we detect hot spots in the IR images.

In our previous paper [1], we proposed a fusion of information from idler detection in RGB and IR images.

However, we found that, to be applicable in real conditions, the method must be significantly extended because of the number of potential hot spots originating from other sources. Thus, we propose a combination of RGB image pre-processing, IR image processing based on the pre-processing results, and, finally, IR image analysis to detect hot spots related to the idlers.

The method proposed in this paper operates on two different, time-synchronized datasets: RGB and IR images. The first part consists of searching for a reduced region of interest (ROI) to minimize the number of potential artifacts, while the second part focuses solely on the thermal detection of damaged idlers. Many techniques are available to filter an image (e.g., image smoothing or edge filtering). The probabilistic Hough transform is popular in image analysis applications; here, it is used to crop out of the image the exact area occupied by the belt conveyor. This area is detected by taking advantage of the fact that the outer edges of the belt can be approximated by straight lines.

The Hough transform is a commonly used algorithm, applicable both to simple shapes (lines, circles, etc.) that are easily visible in images and to more complicated cases where contours are obstructed or take less regular forms. The most popular example in recent years, due to extensive research on autonomous cars, is road lane tracking. It was presented, among others, in [2], where the progressive probabilistic Hough transform helped detect the boundaries that keep the vehicle on the road, and in [3], where its practical use and existing problems were discussed. Fruit detection in a natural environment, presented in [4], is an example of a complex problem solved by combining the Hough transform with other shape detection methods. A solution similar to the one presented in this paper can be found in [5], where the Hough transform and color-based detection were used for intelligent welding flame recognition. In [6], a problem directly related to the topic of this paper was presented. The authors used deep learning methods both to crop the belt conveyor and to detect idlers. Only after this initial ROI reduction was the Hough transform applied to detect the lines describing the left and right edges of the belt.

Hot spot detection in the reduced ROI was performed by a color-based blob detection algorithm. Blobs can be understood as groups of connected pixels that share one or more properties. There are many ways in which blobs can be defined and localized [7], the main difference being the pixel group properties used in the detection process. In recent years, many improvements to the method have been proposed, either in terms of color detection performance (as in [8]) or by adapting modern CNN solutions to blob detection, as in agricultural applications [9]. The problem of hot spot detection for belt conveyors was also addressed in [10], a natural extension of the previously cited article [6]. The method implemented there takes advantage of the shape of the idler's outer edge and the temperature difference between a heated idler and its background.

Blob detection is widely used in the biomedical field (for example, feature detection in medical images, discussed in [11], and narrower applications such as breast tumor detection in [12]) and in industrial applications, such as automated defect detection in material structures [13].

Nowadays, it is hard to imagine modern industries without some kind of assistance from systems operating on visual data. Ongoing research in quality control [14,15,16], medicine [17,18,19], and agriculture [20,21,22] provides just a few examples outside the mining industry.

Further development of possible applications requires simultaneous development of the tools used, as in the case of this paper, which merges information from connected datasets: RGB and infrared images. Infrared image data have been studied as a source of additional information alongside standard visual data, since their analysis can usually benefit from the same methods, albeit with complications. Solutions that improve the quality of processed infrared data are still being researched, such as edge detection supported by spiking neural networks [23] or contrast enhancement based on memristive mapping [24].

In [25], the problem of infrared image segmentation was discussed, and a solution was proposed that combines infrared images with a depth map computed from two stereo images taken from different angles. In [26], thermal infrared image analysis was proposed as a method for detecting breathing disorders, while in [27], thermal inspection for animal welfare was presented with examples based on farm animal footage.

The fusion of infrared and RGB data is another topic of intensive research. The goal of this operation can be stated as obtaining complementary data: abundant, detailed information from visual images and effective, small areas in IR images that allow the desired detection to be performed [28]. Road safety solutions can be implemented as in [29], where pedestrian detection was divided into day and night cycles, using a visual camera for the former and an infrared camera for the latter. An example of image fusion based on a convolutional neural network, and its possibilities, was presented in [30].

The identification of damage in mine infrastructure via image analysis (RGB, IR) was presented in [31]. The authors analyzed existing idler inspection techniques and proposed their own solution based on an unmanned aerial vehicle (UAV) with an RGB and IR camera sensory system. In that case, object detection was based on the ACF method, a shallow learning technique, which gave good results, although there is still room to increase its effectiveness. Pattern recognition, segmentation, feature extraction, and classification algorithms for the automatic identification of typical elements in infrared images were used in [32]. There, the sensory system was mounted on a mobile platform that moves along the conveyor, and the measuring system was integrated into a system for forecasting damage to the drive system and transmission of the belt conveyor, based on temperature measurements and recorded operating data, including failures.

Another solution combining deep learning algorithms and image processing technology was proposed in [6]. In addition, audio data were used to locate defects [33]. Algorithms based on deep convolutional networks for belt edge detection were presented in [34]. IR sensors are also used to detect leaks by analyzing disturbances in the temperature profile of the observed infrastructure [35].

IR cameras have already been discussed in many papers. Szurgacz et al. [36] used IR cameras to evaluate the condition of a conveyor drive unit. A similar problem was discussed in [37], where gearboxes used in the drive system of a belt conveyor in an underground mine were tested using thermal imaging. The temperature characteristics of the object were recorded in stationary operation as a reference for later tests. In [38], the authors used IR cameras to correlate the temperature of the conveyor belt (in a pipe conveyor) with the value of the tensioning force. Skoczylas [39] used acoustic data collected by a legged robot to support the maintenance of belt conveyors in a mineral processing plant.

The authors in [40] proposed a system which combines a thermal-infrared camera with a range sensor to obtain three-dimensional (3D) models of environments with both appearance and temperature information. The proposed system creates a map by integrating RGB and IR images through timestamp-based interpolation and information obtained at the system calibration stage. The authors emphasize that the system is able to accurately reproduce the surroundings, even in complete darkness.

Collecting images with a mobile robot may be a significant factor influencing image analysis. It should be mentioned that the idea of replacing human inspection with inspection robots is not new. Inspection robots are also used in other applications, for example, for the inspection of airports [41]. Raviola [42] discussed using collaborative robots to enhance electro-hydraulic servo-actuators used in aircraft. A legged robot was proposed for inspection in [43], a wheeled robot equipped with a manipulator was discussed in [44], and finally, a specially designed robot with a self-leveling system and hybrid wheel-legged locomotion was discussed in [45]. Solutions based on robots suspended on a rope above the conveyor [46] or on UAVs [31] are also of interest.

To summarize, there is a strong need for robot-based (instead of human-based) inspection, and automatic data analysis procedures are required. This is a very important issue from a practical point of view, especially in very harsh environments such as the mining industry. Thus, in this paper, we propose a UGV-based solution for belt conveyor inspection. After acquisition, the data should be processed automatically; therefore, a hot spot detection procedure is proposed. The originality of the method lies in the combination of RGB and IR image pre-processing to minimize the ROI and the number of potential artifacts.

The structure of the paper is as follows. First, we describe the experiment in the mine and the mobile inspection platform used to acquire the data. Then, we propose an original methodology for hot spot detection. Finally, we apply the method to real data captured during an inspection mission.

2. Problem Formulation

The purpose of the paper is to develop an automatic procedure for detecting overheated idlers in belt conveyors based on infrared and RGB image fusion. The images were acquired by a UGV inspection robot during field missions. The diagnostic information is contained in the IR image; however, there are many other objects with potentially similar or higher temperatures. Thus, the idea applied here is to combine RGB and IR images. We use the RGB images to filter out irrelevant data (i.e., to segment the image) before the actual hot spot detection. Then, we transfer the extracted ROI to the IR image and detect hot spots within this limited area of the IR image.

3. Experiment Description

The experiments were carried out on a real belt conveyor in an opencast minerals mine, close to the bunker where the transported material is stored. A mobile robot equipped with sensor systems traveled along the conveyor, recording images from the RGB and IR cameras. The cameras were directed towards the conveyor to better cover the area of interest. The data were then analyzed to detect damaged (overheated) idlers.

3.1. The Belt Conveyor

The belt conveyor analyzed in this paper is a mechanical system used for the continuous horizontal transport of raw material from the mine pit to the bunker. In this case, the material was low-alumina clay used for aluminosilicate refractory materials. The investigated conveyor was several hundred meters long and operates in a noisy environment. Its design is classical. The key problem was to identify overheated idlers as potential sources of fire.

3.2. The UGV Inspection Robot

The platform has four wheels and uses skid steering. It is driven by two direct current (DC) motors with integrated gears. Power is transmitted by toothed belts, one on each side. During the inspection, the platform was remotely controlled from the user’s panel, and the measurement system recording the data series was also started remotely. However, a fully autonomous version is the ultimate goal for inspection. The robot is presented in Figure 1.

Figure 1.

View of the robot during inspection.

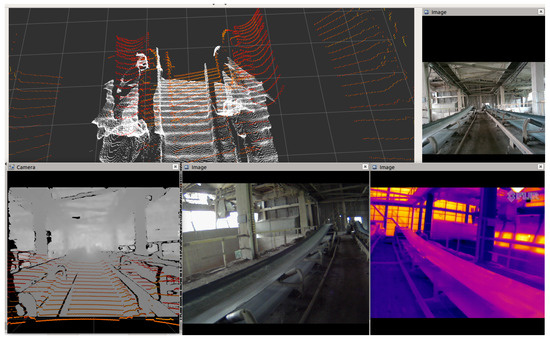

Visualization of the data recorded by the measurement system is presented in Figure 2. During the inspection, various data were recorded, including spatial data, but in this case, we focus on damage detection using only the RGB and IR images from the cameras.

Figure 2.

View in Rviz of recorded data during inspection. (Top left): depth camera data (gray) and lidar scan lines (red); (top right): front overview; (bottom left): depth camera data and lidar scan lines mapped onto 2D frontal view; (bottom center): RGB camera frame; (bottom right): IR camera frame (flipped horizontally).

Basic parameters of the cameras used during the experiment are listed in Table 1. It is worth noting that the cameras’ frame rates varied in the range of 24–26 frames per second (fps) because the robot computer was under a heavy load of data coming from the many sensors mounted on the platform. No frame shifts between the two cameras were detected. The value stated in the table is the average.

Table 1.

Camera parameters.

3.3. The Inspection Mission

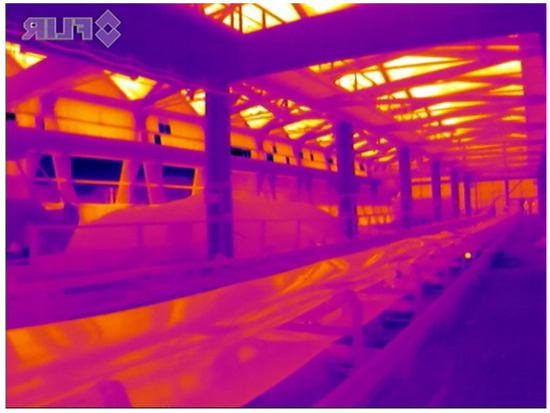

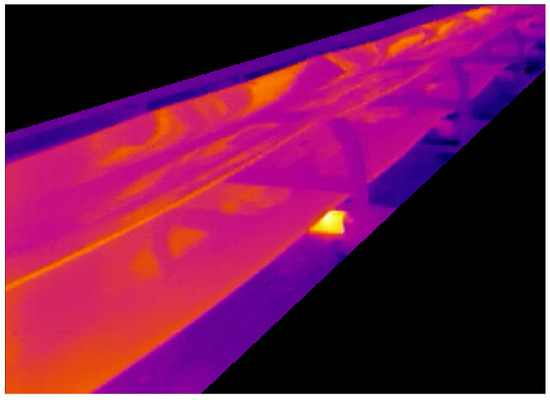

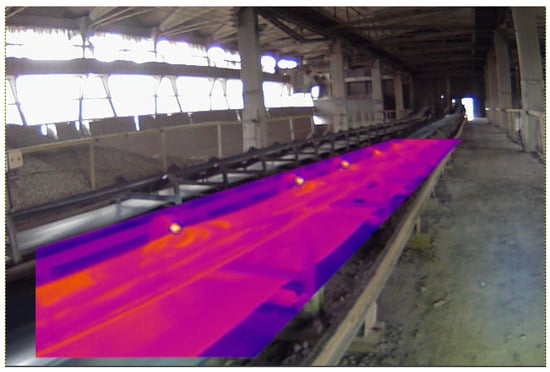

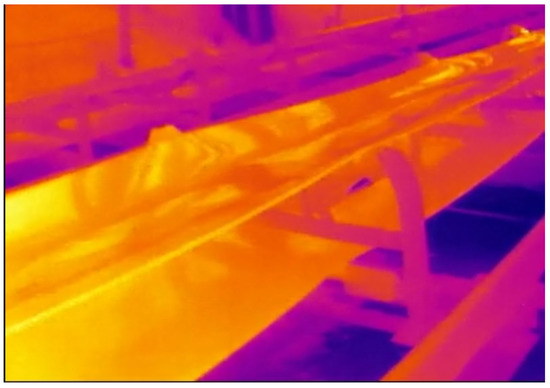

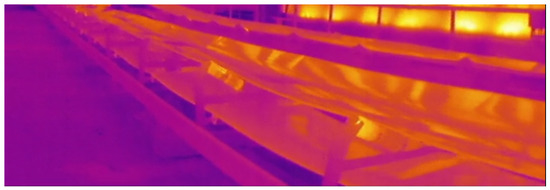

The experiment carried out in the mine resulted in the collection of diagnostic data acquired by the UGV inspection robot developed under the Autonomous Monitoring and Control System for Mining Plants (AMICOS) project [47]. Various types of data were recorded (RGB images, infrared thermography images, noise, lidar data, etc.) for different diagnostic tasks. Figure 3 shows a frame from the captured video of a conveyor in the hall, and Figure 4 shows an analogous view recorded with an infrared camera.

Figure 3.

Example of RGB scene.

Figure 4.

Example of IR scene.

The conditions under which the experiment was conducted were quite difficult from the point of view of image analysis. In the raw IR images, one may notice many potential hot spots, or, in general, regions that could disturb the analysis, such as the warm ceiling of the hall, which carries no diagnostic information. Thus, pre-processing is clearly needed to clean up the image before the actual hot spot detection procedure.

Basic parameters of the belt conveyor are listed in Table 2. It is worth noting that the inspection did not start at the very beginning of the belt conveyor.

Table 2.

Belt conveyor parameters.

4. Methodology for Hot Spot Detection

To achieve an efficient diagnostic procedure for overheated idler detection, a particular approach to RGB and IR image collection, pre-processing, and analysis is proposed. The approach consists of several consecutive steps, beginning with reducing the image area by cutting off the upper half of the image. This decision is based on the camera angle used during the robot ride and serves to remove an area that is repeatedly empty of useful information (in this example, containing windowed walls and ceilings). Of course, for other missions with other objects and different angles, this segmentation step needs to be adjusted accordingly. In this case, the cropping parameter for the upper segment of the image was set to half of the image height. Reducing the size of the ROI improves the effectiveness of the procedure (by eliminating false positives in implausible areas) as well as its computational efficiency (a smaller region to search). However, in the current implementation, computational efficiency is not critical because the procedure is executed offline.

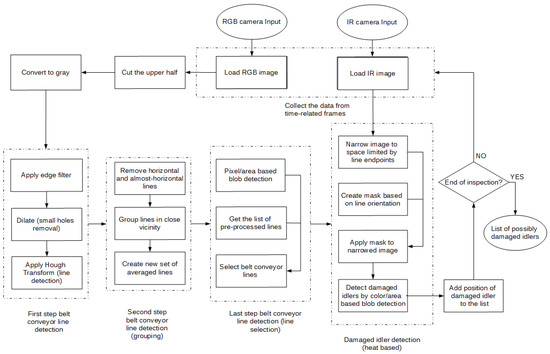

The initial pre-processing step focuses on extracting the contour of the belt conveyor area from the RGB image. It can be divided into the following substeps (as shown in the Figure 5 flowchart):

Figure 5.

Simplified flowchart of algorithm.

- general straight line detection;

- incorrect line removal and line grouping;

- belt conveyor line selection;

- mask generation (based on the selected lines).

The final product of this process is a mask that filters out the background of the image, leaving only the conveyor belt for further analysis. Then, a standard color-based heat detection algorithm can be applied to the second half of the dataset, the infrared images, to detect overheated idlers. Thanks to time synchronization and only a slight difference in angle between the cameras, the mask extracted in the previous part of the method can be transferred directly onto the IR image.

4.1. General Line Detection

For detection purposes, conveyor belt edges can be treated as straight lines. Two line detection methods were considered: the Hough transform (HT) and the probabilistic Hough transform, also referred to as the randomized Hough transform (RHT).

HT can be explained as a voting process in which each point belonging to the pattern votes for all possible patterns passing through that point. The votes are accumulated in accumulator arrays called bins, and the pattern receiving the most votes is recognized as the desired pattern [48]. In the Hough transform, lines are described by the polar form of the line equation, Equation (1), where ρ is the perpendicular distance of the line from the origin, measured in pixels, θ is the angle between the line's normal and the horizontal axis, measured in radians, and (x, y) are the coordinates of a point through which the line passes:

$$\rho = x\cos\theta + y\sin\theta \tag{1}$$

Candidate lines are found by locating local maxima in the accumulated parameter space, whose size depends on the image dimensions. Since non-randomized Hough transform algorithms must process every pixel separately, the method becomes memory- and time-consuming. In RHT, this problem is solved by introducing random pixel sampling. This significantly increases computation speed at the cost of some accuracy, depending on the complexity of the required lines and the provided data.
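The voting scheme described above can be illustrated with a short, dependency-free Python sketch. For every edge pixel, it evaluates ρ = x cos θ + y sin θ over a grid of θ values, quantizes ρ into bins, and returns the bins ordered by vote count. Setting the hypothetical `sample_fraction` parameter below 1 lets only a random subset of edge pixels vote; this loosely mimics the random-sampling idea but is not a full implementation of either the randomized or the progressive probabilistic Hough transform. All names and default values are illustrative assumptions, not the authors' code.

```python
import math
import random
from collections import Counter


def hough_lines(edge_points, n_theta=180, rho_step=1.0, sample_fraction=1.0,
                min_votes=2, seed=None):
    """Vote in (rho, theta) space using rho = x*cos(theta) + y*sin(theta).

    edge_points: iterable of (x, y) coordinates of edge pixels.
    sample_fraction: 1.0 = every edge pixel votes (standard HT);
        < 1.0 = only a random subset votes (illustrates random sampling).
    Returns a list of ((rho, theta), votes), strongest first.
    """
    points = list(edge_points)
    if sample_fraction < 1.0:
        rng = random.Random(seed)
        k = max(1, int(len(points) * sample_fraction))
        points = rng.sample(points, k)

    thetas = [math.pi * i / n_theta for i in range(n_theta)]  # theta in [0, pi)
    accumulator = Counter()
    for x, y in points:
        for t_idx, theta in enumerate(thetas):
            rho = x * math.cos(theta) + y * math.sin(theta)
            accumulator[(round(rho / rho_step), t_idx)] += 1  # quantize into a bin

    peaks = [((r_bin * rho_step, thetas[t_idx]), votes)
             for (r_bin, t_idx), votes in accumulator.items() if votes >= min_votes]
    peaks.sort(key=lambda p: p[1], reverse=True)  # stable: ties keep insertion order
    return peaks
```

In practice, a library routine would normally be used instead; for example, OpenCV offers `cv2.HoughLinesP(edges, rho, theta, threshold, minLineLength=..., maxLineGap=...)`, whose `maxLineGap` argument controls how long a gap may be bridged within one detected line.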

For the data used in this paper, the results obtained from HT and RHT did not differ significantly in terms of accuracy; therefore, the RHT algorithm was chosen, as lower computation time is extremely important from the viewpoint of the mobile platform and its hardware capabilities.

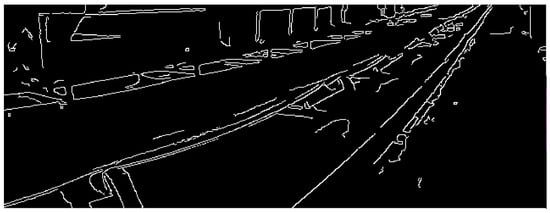

For the RHT algorithm to work correctly, several steps had to be taken beforehand. First, the images were converted to grayscale and smoothed with a Gaussian filter to remove part of the noise related to the equipment used and the quality of the recorded data. Then, a Canny filter was applied, as it gave better results than other filters with regard to line continuity and noise influence. Similar conclusions on filter performance were drawn in [49,50]. An example of the applied Canny filter is shown in Figure 6. The image can then be dilated, as increased line continuity yields better results from the Hough transform.
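The pre-processing chain described in this section (cropping off the upper half of the image, grayscale conversion, Gaussian smoothing, edge detection, and dilation) can be sketched in dependency-free Python as follows. For brevity, the Canny filter is replaced here by a plain Sobel gradient-magnitude threshold, which lacks Canny's non-maximum suppression and hysteresis. The 3x3 kernels, the BT.601 grayscale weights, and the threshold value are assumptions, not parameters reported in the paper. With OpenCV, the same chain would typically use `cv2.cvtColor`, `cv2.GaussianBlur`, `cv2.Canny`, and `cv2.dilate`.

```python
import math

# 3x3 Gaussian (binomial) kernel and Sobel kernels (assumed sizes).
GAUSS_3X3 = [[1 / 16, 2 / 16, 1 / 16],
             [2 / 16, 4 / 16, 2 / 16],
             [1 / 16, 2 / 16, 1 / 16]]
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def crop_upper_half(img):
    """Keep only the lower half of the rows (crop ratio 0.5, as in the text)."""
    return img[len(img) // 2:]


def to_gray(rgb):
    """Convert rows of (R, G, B) tuples to luminance using ITU-R BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def filter3x3(img, kernel):
    """Apply a 3x3 kernel (correlation), replicating border pixels."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += kernel[dy + 1][dx + 1] * img[yy][xx]
            out[y][x] = acc
    return out


def edge_map(gray, threshold):
    """Binary edges from Sobel gradient magnitude (a simplified stand-in for Canny)."""
    gx, gy = filter3x3(gray, SOBEL_X), filter3x3(gray, SOBEL_Y)
    return [[1 if math.hypot(a, b) >= threshold else 0 for a, b in zip(rx, ry)]
            for rx, ry in zip(gx, gy)]


def dilate(binary):
    """3x3 binary dilation: a pixel is set if any pixel in its neighbourhood is set."""
    h, w = len(binary), len(binary[0])
    return [[1 if any(binary[yy][xx]
                      for yy in range(max(y - 1, 0), min(y + 2, h))
                      for xx in range(max(x - 1, 0), min(x + 2, w))) else 0
             for x in range(w)] for y in range(h)]


def preprocess(rgb, edge_threshold=300.0):
    """Crop, grayscale, Gaussian blur, edge map, dilation."""
    gray = to_gray(crop_upper_half(rgb))
    blurred = filter3x3(gray, GAUSS_3X3)
    return dilate(edge_map(blurred, edge_threshold))
```

For a synthetic 8x8 frame that is black on the left and white on the right, `preprocess` keeps the lower four rows and returns a dilated vertical edge band around the brightness step, which is the kind of input the line detector expects.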

Figure 6.

Exemplary effect of applied Canny filter.

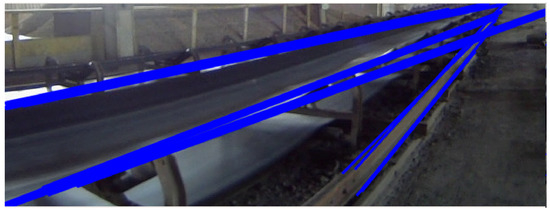

The detection parameters of this transform are kept in a wide range, as this allows smoother positioning and grouping in later steps. In particular, long gaps are accepted during line detection, and the focus is shifted to searching for the longest possible lines. Results of the implemented RHT are presented in Figure 7.

Figure 7.

Exemplary effect of general line detection (Hough transform).

4.2. Detected Lines Preliminary Selection and Grouping

During the inspection, the robot moved along the belt conveyor, and the camera observation angle was assumed to be constant. The edge detection approach may yield many lines in the picture. Some of them are artifacts, and others are not important for the next steps. Each group of lines obtained from the Hough transform can therefore be represented by a single line averaged over the group of lines with similar slope coefficients and positions.

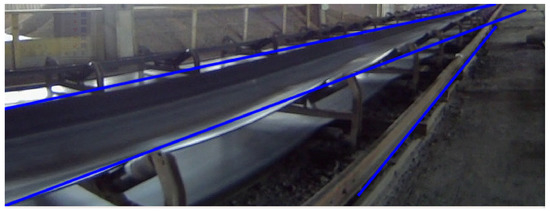

For the collected data, horizontal and nearly horizontal lines were removed, as they could not describe the belt conveyor given the camera angle. Vertical lines, if detected, should also be removed. The next step consisted of grouping and merging lines in close vicinity, based on the slope m and intercept b of the line equation y = mx + b. Results of the preliminary selection and grouping can be seen in Figure 8.
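A minimal sketch of the selection and grouping step, assuming that the detected segments are given as endpoint tuples (x1, y1, x2, y2) and that lines are grouped by their slope m and intercept b in y = mx + b. The angle limits (10 and 85 degrees) and the grouping tolerances are hypothetical values chosen for illustration; the paper does not report them.

```python
import math


def slope_intercept(seg):
    """Return (m, b) of y = m*x + b for a non-vertical segment."""
    x1, y1, x2, y2 = seg
    m = (y2 - y1) / (x2 - x1)
    return m, y1 - m * x1


def filter_segments(segments, min_deg=10.0, max_deg=85.0):
    """Drop near-horizontal (< min_deg) and near-vertical (> max_deg) segments."""
    kept = []
    for x1, y1, x2, y2 in segments:
        # 0 degrees = horizontal, 90 degrees = vertical.
        angle = math.degrees(math.atan2(abs(y2 - y1), abs(x2 - x1)))
        if min_deg <= angle <= max_deg:
            kept.append((x1, y1, x2, y2))
    return kept


def group_lines(segments, m_tol=0.1, b_tol=20.0):
    """Greedily merge lines with similar (m, b) and return one averaged line per group."""
    groups = []  # each group is a list of (m, b)
    for seg in filter_segments(segments):
        m, b = slope_intercept(seg)
        for g in groups:
            gm = sum(p[0] for p in g) / len(g)
            gb = sum(p[1] for p in g) / len(g)
            if abs(m - gm) <= m_tol and abs(b - gb) <= b_tol:
                g.append((m, b))
                break
        else:  # no similar group found: start a new one
            groups.append([(m, b)])
    return [(sum(p[0] for p in g) / len(g), sum(p[1] for p in g) / len(g))
            for g in groups]
```

Because vertical segments are filtered out before `slope_intercept` is called, the slope is always finite. A known limitation of grouping by intercept is that b varies strongly for steep lines, so a (ρ, θ) based distance could be preferable in some camera setups.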

Figure 8.

Exemplary effect after line grouping.

4.3. Line Selection Supported by Blob Detection Algorithm

As can be seen in Figure 9, the blob detection algorithm may produce incorrect detections, so the final idler detection should be supported by additional criteria.

Figure 9.

Example of incorrect idler blob detection.

The belt conveyor design contains several longitudinal elements (such as the edge of the conveyor belt frame). Along these elements, we expect to find hot spots (overheated idlers). The relation between the belt edge and the location of idlers can be used in two ways. First, the line pre-selected in Section 4.2 that has the highest number of detected blobs close to it can be taken to be the belt edge. Second, the distance between the identified belt edge and the center of a particular blob can serve as a criterion for removing incorrect blob detections. Therefore, combining blob detection with the distance between the blob center and the border line associated with the belt can improve hot spot detection efficiency.
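The two uses of the belt edge and idler relation can be sketched as follows, assuming that the pre-selected lines are given as (m, b) pairs of y = mx + b and that the blob detector returns blob center coordinates. The distance limit `max_dist` is a hypothetical parameter, not a value from the paper.

```python
import math


def point_line_distance(point, line):
    """Perpendicular distance from (x, y) to the line y = m*x + b, given as (m, b)."""
    (x, y), (m, b) = point, line
    return abs(m * x - y + b) / math.sqrt(m * m + 1)


def select_belt_line(lines, blob_centers, max_dist=5.0):
    """Pick the line with the most blob centres within max_dist of it,
    then keep only the blobs close to that line (others are treated as false detections)."""
    best_idx, best_count = None, -1
    for idx, line in enumerate(lines):
        count = sum(1 for c in blob_centers if point_line_distance(c, line) <= max_dist)
        if count > best_count:
            best_idx, best_count = idx, count
    if best_idx is None:  # no candidate lines at all
        return None, []
    kept = [c for c in blob_centers if point_line_distance(c, lines[best_idx]) <= max_dist]
    return best_idx, kept
```

Returning both the selected line index and the filtered blobs reflects the two roles described above: choosing the belt edge and rejecting blobs that lie too far from it.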

4.4. Mask Creation and Implementation

The first step of ROI reduction crops out of the IR image the area defined by two corners, which are based on the coordinates of the selected line endpoints. Since the IR and RGB datasets were recorded at similar angles and synchronized in time, the line endpoint positions can be transferred directly between images, with a possibility of a small but acceptable mismatch.
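If the RGB and IR cameras have different resolutions, the endpoint transfer can be sketched as a simple rescaling, followed by an axis-aligned crop between two corners. This assumes that the two views differ only in resolution and ignores the small viewpoint mismatch mentioned above; a calibrated offset or homography could be added if needed. The image sizes in the usage example are hypothetical; the actual camera parameters are those listed in Table 1.

```python
def rgb_to_ir_point(point, rgb_size, ir_size):
    """Scale an (x, y) point from RGB to IR pixel coordinates.

    Assumes both views have the same framing and differ only in resolution;
    any residual offset between the cameras is ignored.
    """
    (x, y), (rgb_w, rgb_h), (ir_w, ir_h) = point, rgb_size, ir_size
    return (x * ir_w / rgb_w, y * ir_h / rgb_h)


def crop_by_corners(img, corner_a, corner_b):
    """Crop the axis-aligned rectangle spanned by two (x, y) corners, clamped to the image."""
    h, w = len(img), len(img[0])
    xs = sorted(int(round(c[0])) for c in (corner_a, corner_b))
    ys = sorted(int(round(c[1])) for c in (corner_a, corner_b))
    x0, x1 = max(xs[0], 0), min(xs[1], w - 1)
    y0, y1 = max(ys[0], 0), min(ys[1], h - 1)
    return [row[x0:x1 + 1] for row in img[y0:y1 + 1]]
```

For example, with a hypothetical 640x480 RGB frame and a 320x240 IR frame, `rgb_to_ir_point((100, 200), (640, 480), (320, 240))` returns `(50.0, 100.0)`.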

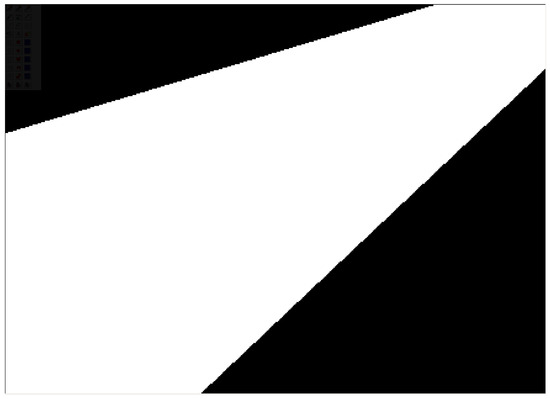

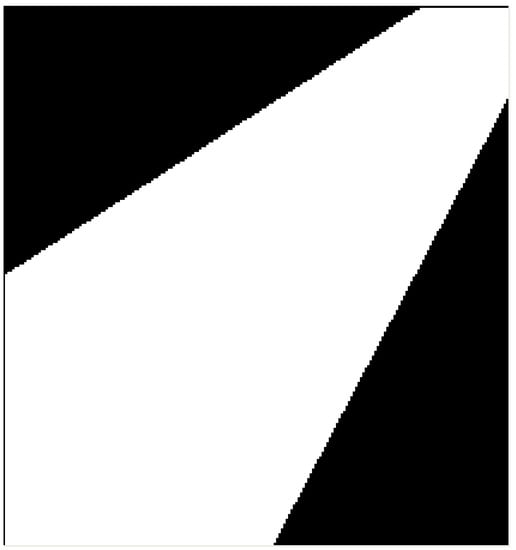

After that, a mask is created, with the initial ROI based on the previously cropped area. The borders of the mask are defined either by the edge lines detected in the previous step (as shown in Figure 8) or by a predetermined shape. The predetermined shape takes the central diagonal (corner-to-corner) line as a base and creates fixed borders that follow the regular shape of the belt, which becomes narrower the farther it is from the robot. An example of the created mask can be seen in Figure 10.
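A minimal sketch of mask creation and application, assuming that each border line is given by its two endpoints (far end first) and that the mask is the quadrilateral enclosed by the two lines. Pixels are tested at their centers with even-odd ray casting. The function names are hypothetical; a predetermined fallback shape could be passed to `polygon_mask` as a fixed polygon in the same way.

```python
def quad_from_lines(left, right):
    """Build a polygon from two border lines, each ((x_far, y_far), (x_near, y_near))."""
    return [left[0], left[1], right[1], right[0]]


def point_in_polygon(x, y, poly):
    """Even-odd ray casting: count crossings of a ray going to the left of (x, y)."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygon_mask(width, height, poly):
    """Binary mask (list of rows) with 1 where the pixel centre lies inside poly."""
    return [[1 if point_in_polygon(x + 0.5, y + 0.5, poly) else 0 for x in range(width)]
            for y in range(height)]


def apply_mask(img, mask, fill=0):
    """Keep pixel values inside the mask and replace the rest with fill."""
    return [[v if m else fill for v, m in zip(row, mrow)] for row, mrow in zip(img, mask)]
```

With border lines that converge towards the top of the frame, the resulting mask is a trapezoid that is narrow far from the robot and wide near it, matching the belt geometry described above.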

Figure 10.

Exemplary mask created in the process of ROI reduction.

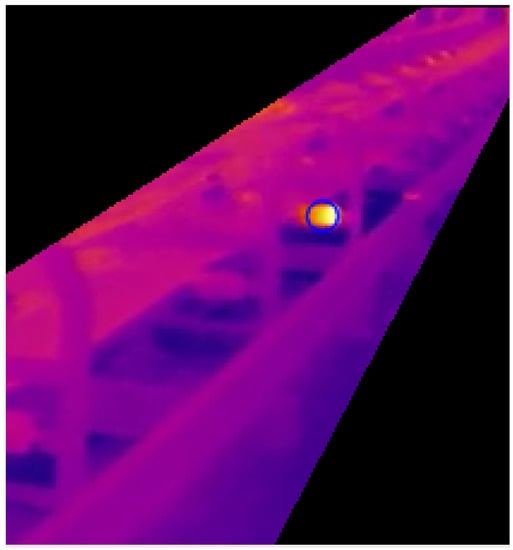

Then, the mask is applied to the IR image, creating an even smaller ROI. This limits the risk of false hot spot detections coming from the background, such as working machines or materials heated by other sources (heat transfer or exposure to sunlight). The result of a narrowed frame with the applied mask is presented in Figure 11.

Figure 11.

Frame narrowed to the main line with applied mask.

4.5. Heat-Based Damage Detection

The last step of the method focuses directly on hot spot detection. Thanks to ROI reduction, the possibility of incorrect classification is reduced, and simple methods can be implemented with higher rates of successful detection. This step uses the blob detection technique. In contrast to the variant used previously (in blob-supported line selection), this time it is based on area and color parameters.

The main idler problems that manifest as generated heat observable during inspection come from the following:

- heat generated during high friction contact between idler and belt;

- damaged idler bearing that causes the idler to stop;

- partially damaged bearing of the idler that causes the core of the idler to become hot without stopping the idler rotation.

The solution for finding potentially damaged idlers is based on a combination of:

- focus on restricted color range;

- a small, circular area (limited pixel count);

- assumption that no other source of heat than the idler/belt friction can be found in the previously processed ROI.

To distinguish areas that can be recognized as damaged idlers, a color-based threshold was used. This process takes place on the image converted to hue-saturation-value (HSV) space. Hue, the parameter responsible for color differentiation, was restricted to the range from bright orange to bright yellow, with the remaining parameters set to as wide a range of intensities as possible.

The thresholded image was then passed to the blob detection algorithm, with an area range wide enough to detect idlers passing at mid to close range, but narrow enough to ignore the larger blobs created by the heated belt.
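The color threshold and area-based blob detection can be sketched with the Python standard library: `colorsys.rgb_to_hsv` converts each pixel, a hue window keeps orange-to-yellow pixels, and a 4-connected flood fill groups them into blobs that are accepted only within an area range. The hue window (about 20 to 65 degrees), the saturation and value minima, and the area limits are assumptions for illustration, and the circularity criterion from the list above is omitted. With OpenCV, `cv2.inRange` on an HSV image (note that OpenCV stores hue as 0 to 179) and `cv2.SimpleBlobDetector` with its area and circularity filters would be the usual tools.

```python
import colorsys
from collections import deque


def hot_pixel_mask(rgb, hue_range=(20 / 360, 65 / 360), s_min=0.2, v_min=0.2):
    """1 where the pixel hue falls in the (assumed) orange-to-yellow window."""
    mask = []
    for row in rgb:
        out_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            in_range = hue_range[0] <= h <= hue_range[1] and s >= s_min and v >= v_min
            out_row.append(1 if in_range else 0)
        mask.append(out_row)
    return mask


def detect_blobs(mask, min_area=3, max_area=50):
    """Centroids of 4-connected blobs whose pixel count lies in [min_area, max_area]."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    centroids = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            queue, pixels = deque([(x0, y0)]), []
            seen[y0][x0] = True
            while queue:  # breadth-first flood fill of one blob
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if min_area <= len(pixels) <= max_area:  # too small: noise; too large: heated belt
                centroids.append((sum(p[0] for p in pixels) / len(pixels),
                                  sum(p[1] for p in pixels) / len(pixels)))
    return centroids
```

The upper area limit plays the role described above: a long, warm stretch of belt forms one large blob and is rejected, while a compact hot idler passes.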

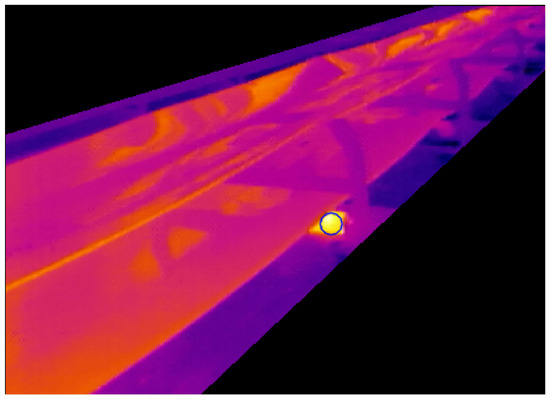

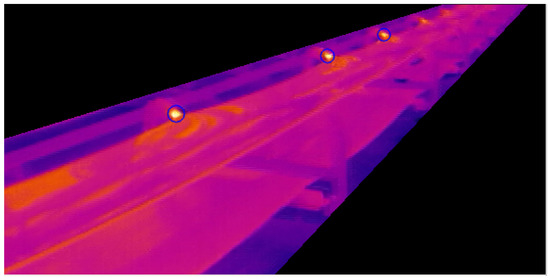

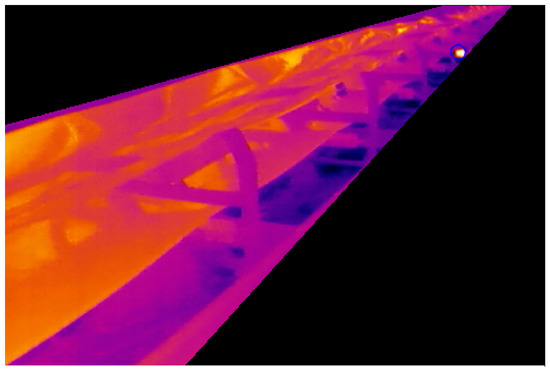

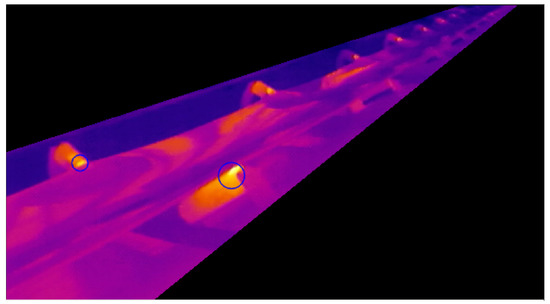

Examples of final results in the form of detected idlers can be seen in Figure 12, Figure 13, Figure 14 and Figure 15.

Figure 12.

Result of damaged idler detection, example 1.

Figure 13.

Result of damaged idler detection, example 2.

Figure 14.

Result of damaged idler detection, example 3.

Figure 15.

Result of damaged idler detection, example 4.

As can be seen in Figure 13 and Figure 15, some potentially damaged idlers are not detected in a single frame. This is due to their distance: hot spots that are too small cannot be considered in the detection process, since noise (such as the heated belt) can create spots with similar properties. This can be solved by reducing the ROI to lower x-axis values.

For better visualization of the method's results, the cropped ROI with detected idlers was overlaid on the original RGB frame in Figure 16. The differently colored part of the image represents the actual ROI cropped out by the proposed algorithm.

Figure 16.

Algorithm result visualisation on starting data.

As can be seen, the angle difference between the RGB and IR cameras resulted in a slightly shifted perspective that had to be taken into account when creating the boundaries. When the belt conveyor edges were not detected or were detected incorrectly, the predetermined mask shape was used as a substitute. The later steps do not deviate from the described method. The predetermined mask shape can be seen in Figure 17 and an example result in Figure 18.

Figure 17.

Predetermined mask shape.

Figure 18.

Possible effect of applied predetermined mask shape.

The ratio between frames with correctly detected lines and all computed frames is summarized in Table 3. Correct line detections represent the overall number of instances where line detection worked properly and no predetermined shape had to be applied. Analogously, predetermined shape cases represent the total number of instances where the line detection part of the algorithm failed to obtain lines that could correctly describe the belt conveyor.

Table 3.

Line detection results.

5. Results

The method presented in this paper was tested on a dataset consisting of several videos. Each detection case consisted of more than 1500 frames with at least 20 different idlers appearing during the robot ride, and each included both faulty and healthy idlers. Some sections of the belt conveyor had no faulty idlers, meaning that some frames contained only healthy idlers; therefore, susceptibility to false detections in these cases was also included in the study. All frames were taken during the simulated robot inspection, in which the robot was constantly moving along the belt conveyor.

The results described in this section focus on the video that posed the most difficulties for the algorithm. The detection delay of at least 10 frames that occurred in all tested datasets can be explained by the parameters used in the hot spot detection algorithm. These parameters were set to detect only objects of a defined size, to prevent false detections caused by noise passing through the process.

The main problems in hot spot detection performance resulted from the increased influence of belt temperature. The algorithm was especially ineffective at correct classification when high temperatures persisted over long fragments of the belt conveyor. In those cases, the number of invalid hits and missed idlers rose significantly.

To measure the quality of faulty idler detection, we use classical measures from pattern recognition theory: sensitivity, specificity, and accuracy.

Sensitivity (TPR, true positive rate) was defined in Equation (2), where TP is the number of true positive cases and FN is the number of false negative cases:

$$\mathrm{TPR} = \frac{TP}{TP + FN} \tag{2}$$

In terms of this paper, TP can be described as the number of frames where damaged idlers were detected correctly, whereas FN can be described as the number of frames where overestimation has occurred (spot on the image without damaged idler classified as one that contains it). Therefore, sensitivity relates to the algorithm’s ability to correctly detect the damaged idlers. The sensitivity of detection performed on the selected part of the dataset totaled at 0.83 after 1800 computed frames.

Specificity (TNR, true negative rate) was defined in (3), where TN is the number of true negative cases and FP is the number of false positive cases:

$$\mathrm{TNR} = \frac{TN}{TN + FP} \tag{3}$$

In this paper, TN is the number of frames in which damaged idlers were neither present nor detected, whereas FP is the number of frames in which underestimation occurred (a damaged idler was not detected by the algorithm). Therefore, specificity relates to the algorithm’s ability to correctly reject spots in the computed images that do not contain damaged idlers. The specificity obtained on the selected dataset was 0.74 after 1800 computed frames.

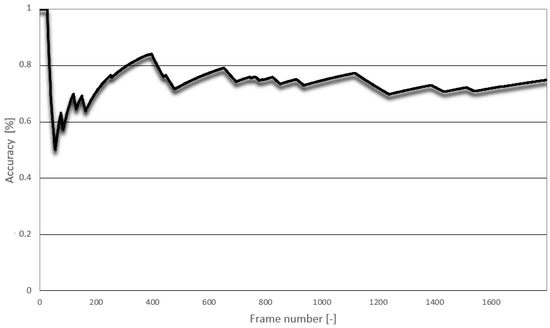

Accuracy (ACC) was defined in (4), where TP and TN have the same meaning as in the definitions of sensitivity and specificity, and N is the total number of computed frames:

$$\mathrm{ACC} = \frac{TP + TN}{N} \tag{4}$$

Accuracy can therefore be described as the ratio of the number of correct assessments to the number of computed frames. The accuracy obtained on the selected dataset was 0.78 after 1800 computed frames.
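The three measures in (2)–(4) can be computed directly from the frame counts. The sketch below assumes that every computed frame falls into exactly one of the four categories, so that N = TP + TN + FP + FN; the function name and the counts in the example are hypothetical and are not the values behind Table 4.

```python
def detection_metrics(tp, tn, fp, fn):
    """Sensitivity (2), specificity (3) and accuracy (4) from frame counts.

    Assumes every computed frame falls into exactly one of the four
    categories, so N = TP + TN + FP + FN.
    """
    n = tp + tn + fp + fn
    tpr = tp / (tp + fn)   # sensitivity, TPR
    tnr = tn / (tn + fp)   # specificity, TNR
    acc = (tp + tn) / n    # accuracy, ACC
    return tpr, tnr, acc


# Hypothetical counts for illustration only (not the values from Table 4).
tpr, tnr, acc = detection_metrics(tp=40, tn=30, fp=10, fn=20)
print(f"TPR={tpr:.3f} TNR={tnr:.3f} ACC={acc:.3f}")  # TPR=0.667 TNR=0.750 ACC=0.700
```

Under this assumption, ACC = (TPR·(TP + FN) + TNR·(TN + FP))/N, i.e., accuracy is a weighted mean of sensitivity and specificity and always lies between them; the reported 0.78 lying between 0.74 and 0.83 is consistent with that.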

The summarized results are presented in Figure 19 as accuracy plotted against the number of computed frames. For a clearer picture, the absolute numbers of detection cases are given in Table 4.

Figure 19.

Detection accuracy timeline during inspection.

Table 4.

Accuracy parameters.
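The paper does not state exactly how the timeline in Figure 19 is computed; one natural reading is a cumulative accuracy, i.e., Equation (4) evaluated over the first k frames for every k. A minimal sketch under that assumption (the function name and the example sequence are hypothetical):

```python
from itertools import accumulate


def accuracy_timeline(frame_correct):
    """Running accuracy after each computed frame.

    frame_correct: sequence of booleans, True when a frame was assessed
    correctly (TP or TN) and False otherwise (FP or FN).
    """
    correct_so_far = accumulate(int(ok) for ok in frame_correct)
    return [c / i for i, c in enumerate(correct_so_far, start=1)]


# Hypothetical sequence: errors at the start, then correct frames.
timeline = accuracy_timeline([False, False, True, True, True, True])
print([round(a, 2) for a in timeline])  # [0.0, 0.0, 0.33, 0.5, 0.6, 0.67]
```

With a cumulative measure, a cluster of errors early in the video pulls the curve down, and it then recovers only gradually as correct frames accumulate.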

As can be seen in Figure 19, detection efficiency dropped mainly in the first part of the analyzed video. As noted earlier in this section, this part contained a long stretch of high belt temperature. An example frame is shown in Figure 20.

Figure 20.

Exemplary frame with a problematic case.

What distinguishes this situation from similar instances in the rest of the video is that the idler temperature remained indistinguishable from the belt surface temperature. This resulted in an increased number of both false detections and potentially missed damaged idlers. An example in which detection works properly despite the increased belt temperature is shown in Figure 21.

Figure 21.

Exemplary frame with a problem solvable by the algorithm.

6. Conclusions

This paper proposed a procedure for image processing and information fusion based on datasets acquired during belt conveyor inspection. The information was collected by sensors mounted on a mobile robot, including RGB and IR cameras. The reasons for replacing human inspectors with autonomous robots have been highlighted in many papers: a dangerous environment, a noisy and dusty atmosphere, long distances, the number of elements to assess, etc. Robots can perform the inspection automatically, applying established criteria and updating the database instantly. The methodology combines specific, distinctive features detectable on belt conveyors with techniques widely used in image processing, adapted to the task of detecting overheated spots.

The measurements were conducted under real mine conditions during robot rides along the belt conveyor, and the analyzed data were selected from several rides taken at different times. The first part of the proposed algorithm, which analyzes the RGB data, focused on cropping the belt conveyor out of the image and gave positive results in terms of ROI reduction. The second part focused on direct hot spot detection: the result of RGB image cropping was used to identify the ROI in the IR images, and the blob-based hot spot detection algorithm was then applied. The hot spot detection results proved sufficient for correct classification of damaged idlers in most of the tested cases. The results presented in this paper are representative of the worst-case scenario selected from the available dataset; with this approach, it is easier to evaluate how difficult environmental conditions affect the performance of the presented method.
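The step in which the RGB result defines the ROI for the IR image can be sketched as a coordinate mapping followed by masking. This is a simplified illustration, not the authors' procedure: it assumes a rectangular ROI and that both cameras share the same field of view, so RGB pixel coordinates map to IR pixel coordinates by pure scaling (a real setup would need a calibrated mapping). The function name `restrict_ir_to_roi` and its parameters are hypothetical.

```python
import math


def restrict_ir_to_roi(ir_frame, roi_rgb, rgb_size, fill_value):
    """Keep only the ROI found in the RGB image; overwrite the rest of the IR frame.

    ir_frame: 2-D list of IR temperatures (rows x columns).
    roi_rgb: hypothetical (x0, y0, x1, y1) box in RGB pixels, x1/y1 exclusive.
    rgb_size: (width, height) of the RGB image.
    fill_value: value written outside the ROI, e.g. a background temperature.

    Assumes both cameras see the same field of view, so RGB coordinates map to
    IR coordinates by pure scaling.
    """
    ir_h, ir_w = len(ir_frame), len(ir_frame[0])
    rgb_w, rgb_h = rgb_size
    sx, sy = ir_w / rgb_w, ir_h / rgb_h
    x0, y0, x1, y1 = roi_rgb
    # Scale the box to IR resolution (rounding outwards) and clamp it to the frame.
    ix0, iy0 = max(0, math.floor(x0 * sx)), max(0, math.floor(y0 * sy))
    ix1, iy1 = min(ir_w, math.ceil(x1 * sx)), min(ir_h, math.ceil(y1 * sy))
    # Start from a frame filled with fill_value and copy back only the ROI pixels.
    masked = [[fill_value] * ir_w for _ in range(ir_h)]
    for y in range(iy0, iy1):
        for x in range(ix0, ix1):
            masked[y][x] = ir_frame[y][x]
    return masked


ir = [[10, 50, 60, 10] for _ in range(4)]  # 4x4 IR frame
out = restrict_ir_to_roi(ir, roi_rgb=(2, 0, 6, 8), rgb_size=(8, 8), fill_value=0)
print(out[0])  # [0, 50, 60, 0]
```

Because everything outside the ROI is overwritten before blob detection, warm regions outside it can no longer produce hot spot candidates.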

Future work will focus on improving the algorithm’s adaptability to larger changes in environmental temperature. Since all measurements were conducted in the same season, the described algorithm can be expected to give different results in, for example, colder parts of the year. A possible solution is adaptive recognition of the temperature level, based on the detected difference between the temperature of working elements and that of background elements. In this paper, the hot spot detection part of the algorithm relies solely on a two-step recognition that considers the pixel color (without taking the background color into account) and the number of such pixels in close proximity.
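The adaptive recognition of the temperature level mentioned above could, for instance, set the hot spot threshold relative to an estimate of the background rather than at a fixed value. The sketch below is only one possible realization and is not part of the presented method: estimating the background as the median of all pixels, the margin `delta`, and the function name `adaptive_hot_mask` are all assumptions.

```python
from statistics import median


def adaptive_hot_mask(ir_frame, delta):
    """Flag pixels that are at least `delta` warmer than the estimated background.

    The background level is estimated as the median of all pixels, assuming
    hot spots cover only a small part of the frame. delta is a hypothetical
    margin in the frame's temperature units.
    Returns (threshold, mask) where mask is a 2-D list of booleans.
    """
    background = median(v for row in ir_frame for v in row)
    threshold = background + delta
    mask = [[v >= threshold for v in row] for row in ir_frame]
    return threshold, mask


# The same scene at two ambient temperatures (hypothetical values).
cool = [[20, 21, 22], [20, 80, 21], [22, 20, 21]]
warm = [[v + 20 for v in row] for row in cool]
for frame in (cool, warm):
    threshold, mask = adaptive_hot_mask(frame, delta=15)
    print(threshold, sum(map(sum, mask)))  # one hot pixel flagged in both cases
```

A fixed threshold of 36 would flag only the hot pixel in the cool frame but every pixel in the warm one, whereas the background-relative threshold shifts with the ambient level.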

Author Contributions

Conceptualization, P.D., J.S. and R.Z.; methodology, P.D. and J.S.; software, P.D.; validation, J.S., P.D., J.W. and R.Z.; formal analysis, J.S.; investigation, P.D. and J.S.; resources, R.Z.; data curation, J.S.; writing—original draft preparation, J.S., J.W. and P.D.; writing—review and editing, J.W. and R.Z.; visualization, J.S.; supervision, R.Z.; project administration, R.Z.; funding acquisition, R.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This activity has received funding from the European Institute of Innovation and Technology (EIT), a body of the European Union, under Horizon 2020, the EU Framework Programme for Research and Innovation. This work is supported by EIT RawMaterials GmbH under Framework Partnership Agreement No. 19018 (AMICOS: Autonomous Monitoring and Control System for Mining Plants).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Archived datasets cannot be made publicly available under the NDA signed by the authors.

Acknowledgments

Supported by the Foundation for Polish Science (FNP).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Szrek, J.; Wodecki, J.; Błazej, R.; Zimroz, R. An inspection robot for belt conveyor maintenance in underground mine-infrared thermography for overheated idlers detection. Appl. Sci. 2020, 10, 4984.

- Marzougui, M.; Alasiry, A.; Kortli, Y.; Baili, J. A Lane Tracking Method Based on Progressive Probabilistic Hough Transform. IEEE Access 2020, 8, 84893–84905.

- Huang, Q.; Liu, J. Practical limitations of lane detection algorithm based on Hough transform in challenging scenarios. Int. J. Adv. Robot. Syst. 2021, 18, 17298814211008752.

- Lin, G.; Tang, Y.; Zou, X.; Cheng, J.; Xiong, J. Fruit detection in natural environment using partial shape matching and probabilistic Hough transform. Precis. Agric. 2020, 21, 160–177.

- Chen, W.; Chen, S.; Guo, H.; Ni, X. Welding flame detection based on color recognition and progressive probabilistic Hough transform. Concurr. Comput. Pract. Exp. 2020, 32, e5815.

- Liu, Y.; Miao, C.; Li, X.; Xu, G. Research on Deviation Detection of Belt Conveyor Based on Inspection Robot and Deep Learning. Complexity 2021, 2021, 3734560.

- Kaspers, A. Blob Detection. Master’s Thesis, Utrecht University, Utrecht, The Netherlands, 2011.

- Khanina, N.; Semeikina, E.; Yurin, D. Scale-space color blob and ridge detection. Pattern Recognit. Image Anal. 2012, 22, 221–227.

- Chen, S.W.; Shivakumar, S.S.; Dcunha, S.; Das, J.; Okon, E.; Qu, C.; Taylor, C.J.; Kumar, V. Counting Apples and Oranges with Deep Learning: A Data-Driven Approach. IEEE Robot. Autom. Lett. 2017, 2, 781–788.

- Liu, Y.; Miao, C.; Li, X.; Ji, J.; Meng, D. Research on the fault analysis method of belt conveyor idlers based on sound and thermal infrared image features. Measurement 2021, 186, 110177.

- Han, K.T.M.; Uyyanonvara, B. A Survey of Blob Detection Algorithms for Biomedical Images. In Proceedings of the 2016 7th International Conference of Information and Communication Technology for Embedded Systems (IC-ICTES), Bangkok, Thailand, 20–22 March 2016; pp. 57–60.

- Moon, W.K.; Shen, Y.W.; Bae, M.S.; Huang, C.S.; Chen, J.H.; Chang, R.F. Computer-aided tumor detection based on multi-scale blob detection algorithm in automated breast ultrasound images. IEEE Trans. Med. Imaging 2012, 32, 1191–1200.

- Czimmermann, T.; Ciuti, G.; Milazzo, M.; Chiurazzi, M.; Roccella, S.; Oddo, C.M.; Dario, P. Visual-based defect detection and classification approaches for industrial applications—A survey. Sensors 2020, 20, 1459.

- Muniategui, A.; de la Yedra, A.G.; del Barrio, J.A.; Masenlle, M.; Angulo, X.; Moreno, R. Mass production quality control of welds based on image processing and deep learning in safety components industry. In Proceedings of the Fourteenth International Conference on Quality Control by Artificial Vision, Mulhouse, France, 15–17 May 2019; Cudel, C., Bazeille, S., Verrier, N., Eds.; International Society for Optics and Photonics: Bellingham, WA, USA, 2019; Volume 11172, pp. 148–155.

- Liu, C.; Law, A.C.C.; Roberson, D.; Kong, Z.J. Image analysis-based closed loop quality control for additive manufacturing with fused filament fabrication. J. Manuf. Syst. 2019, 51, 75–86.

- Wojnar, L. Image Analysis: Applications in Materials Engineering; CRC Press: Boca Raton, FL, USA, 2019; pp. 213–216.

- Wang, F.; Casalino, L.P.; Khullar, D. Deep learning in medicine—Promise, progress, and challenges. JAMA Intern. Med. 2019, 179, 293–294.

- Poostchi, M.; Silamut, K.; Maude, R.J.; Jaeger, S.; Thoma, G. Image analysis and machine learning for detecting malaria. Transl. Res. 2018, 194, 36–55.

- Choi, H. Deep learning in nuclear medicine and molecular imaging: Current perspectives and future directions. Nucl. Med. Mol. Imaging 2018, 52, 109–118.

- Chiu, M.T.; Xu, X.; Wei, Y.; Huang, Z.; Schwing, A.G.; Brunner, R.; Khachatrian, H.; Karapetyan, H.; Dozier, I.; Rose, G.; et al. Agriculture-vision: A large aerial image database for agricultural pattern analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2828–2838.

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for precision agriculture. Information 2019, 10, 349.

- Sharif, M.; Khan, M.A.; Iqbal, Z.; Azam, M.F.; Lali, M.I.U.; Javed, M.Y. Detection and classification of citrus diseases in agriculture based on optimized weighted segmentation and feature selection. Comput. Electron. Agric. 2018, 150, 220–234.

- Wang, B.; Chen, L.; Zhang, Z. A novel method on the edge detection of infrared image. Optik 2019, 180, 610–614.

- Wang, B.; Chen, L.; Liu, Y. New results on contrast enhancement for infrared images. Optik 2019, 178, 1264–1269.

- Kluwe, B.; Christian, D.; Miknis, M.; Plassmann, P.; Jones, C. Segmentation of Infrared Images Using Stereophotogrammetry. In VipIMAGE 2017; Tavares, J.M.R., Natal Jorge, R., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 1025–1034.

- Procházka, A.; Charvátová, H.; Vyšata, O.; Kopal, J.; Chambers, J. Breathing analysis using thermal and depth imaging camera video records. Sensors 2017, 17, 1408.

- Nääs, I.A.; Garcia, R.G.; Caldara, F.R. Infrared thermal image for assessing animal health and welfare. J. Anim. Behav. Biometeorol. 2020, 2, 66–72.

- Zhao, C.; Huang, Y.; Qiu, S. Infrared and visible image fusion algorithm based on saliency detection and adaptive double-channel spiking cortical model. Infrared Phys. Technol. 2019, 102, 102976.

- González, A.; Fang, Z.; Socarras, Y.; Serrat, J.; Vázquez, D.; Xu, J.; López, A.M. Pedestrian detection at day/night time with visible and FIR cameras: A comparison. Sensors 2016, 16, 820.

- Liu, Y.; Miao, C.; Ji, J.; Li, X. MMF: A Multi-scale MobileNet based fusion method for infrared and visible image. Infrared Phys. Technol. 2021, 119, 103894.

- Carvalho, R.; Nascimento, R.; D’Angelo, T.; Delabrida, S.; Bianchi, A.G.C.; Oliveira, R.A.R.; Azpúrua, H.; Uzeda Garcia, L.G. A UAV-Based Framework for Semi-Automated Thermographic Inspection of Belt Conveyors in the Mining Industry. Sensors 2020, 20, 2243.

- Yang, W.; Zhang, X.; Ma, H. An inspection robot using infrared thermography for belt conveyor. In Proceedings of the 2016 13th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Xian, China, 19–22 August 2016; pp. 400–404.

- Kim, S.; An, D.; Choi, J.H. Diagnostics 101: A Tutorial for Fault Diagnostics of Rolling Element Bearing Using Envelope Analysis in MATLAB. Appl. Sci. 2020, 10, 7302.

- Liu, Y.; Wang, Y.; Zeng, C.; Zhang, W.; Li, J. Edge Detection for Conveyor Belt Based on the Deep Convolutional Network; Jia, Y., Du, J., Zhang, W., Eds.; Springer: Singapore, 2019; pp. 275–283.

- Kroll, A.; Baetz, W.; Peretzki, D. On autonomous detection of pressured air and gas leaks using passive IR-thermography for mobile robot application. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 921–926.

- Szurgacz, D.; Zhironkin, S.; Vöth, S.; Pokorný, J.; Sam Spearing, A.; Cehlár, M.; Stempniak, M.; Sobik, L. Thermal imaging study to determine the operational condition of a conveyor belt drive system structure. Energies 2021, 14, 3258.

- Błazej, R.; Sawicki, M.; Kirjanów, A.; Kozłowski, T.; Konieczna, M. Automatic analysis of thermograms as a means for estimating technical of a gear system. Diagnostyka 2016, 17, 43–48.

- Michalik, P.; Zajac, J. Use of thermovision for monitoring temperature conveyor belt of pipe conveyor. Appl. Mech. Mater. 2014, 683, 238–242.

- Skoczylas, A.; Stefaniak, P.; Anufriiev, S.; Jachnik, B. Belt conveyors rollers diagnostics based on acoustic signal collected using autonomous legged inspection robot. Appl. Sci. 2021, 11, 2299.

- Vidas, S.; Moghadam, P.; Bosse, M. 3D Thermal Mapping of Building Interiors using an RGB-D and Thermal Camera. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 2303–2310.

- Gui, Z.; Zhong, X.; Wang, Y.; Xiao, T.; Deng, Y.; Yang, H.; Yang, R. A cloud-edge-terminal-based robotic system for airport runway inspection. Ind. Robot. 2021, 48, 846–855.

- Raviola, A.; Antonacci, M.; Marino, F.; Jacazio, G.; Sorli, M.; Wende, G. Collaborative robotics: Enhance maintenance procedures on primary flight control servo-actuators. Appl. Sci. 2021, 11, 4929.

- Ramezani, M.; Brandao, M.; Casseau, B.; Havoutis, I.; Fallon, M. Legged Robots for Autonomous Inspection and Monitoring of Offshore Assets. In Proceedings of the OTC Offshore Technology Conference, Houston, TX, USA, 4–7 May 2020.

- Rocha, F.; Garcia, G.; Pereira, R.F.S.; Faria, H.D.; Silva, T.H.; Andrade, R.H.R.; Barbosa, E.S.; Almeida, A.; Cruz, E.; Andrade, W.; et al. ROSI: A Robotic System for Harsh Outdoor Industrial Inspection—System Design and Applications. J. Intell. Robot. Syst. 2021, 103, 30.

- Bałchanowski, J. Modelling and simulation studies on the mobile robot with self-leveling chassis. J. Theor. Appl. Mech. 2016, 54, 149–161.

- Cao, X.; Zhang, X.; Zhou, Z.; Fei, J.; Zhang, G.; Jiang, W. Research on the Monitoring System of Belt Conveyor Based on Suspension Inspection Robot. In Proceedings of the 2018 IEEE International Conference on Real-Time Computing and Robotics (RCAR), Kandima, Maldives, 1–5 August 2018; pp. 657–661.

- Szrek, J.; Zimroz, R.; Wodecki, J.; Michalak, A.; Góralczyk, M.; Worsa-Kozak, M. Application of the infrared thermography and unmanned ground vehicle for rescue action support in underground mine—The amicos project. Remote Sens. 2021, 13, 69.

- Duan, D.; Xie, M.; Mo, Q.; Han, Z.; Wan, Y. An improved Hough transform for line detection. In Proceedings of the 2010 International Conference on Computer Application and System Modeling (ICCASM 2010), Taiyuan, China, 22–24 October 2010; Volume 2, pp. 354–357.

- Shrivakshan, G.; Chandrasekar, C. A comparison of various edge detection techniques used in image processing. Int. J. Comput. Sci. Issues (IJCSI) 2012, 9, 269.

- Roushdy, M. Comparative study of edge detection algorithms applying on the grayscale noisy image using morphological filter. GVIP J. 2006, 6, 17–23.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).