Abstract

In general, studies on short-term hourly electricity load modeling and forecasting do not investigate in detail the sources of uncertainty in forecasting. This study evaluates the impact and benefits of applying bootstrap aggregation to address uncertainty in time series forecasting and thereby increase the accuracy of multistep-ahead point forecasts. We implemented existing and proposed clustering-based bootstrapping methods to generate new electricity load time series. In the proposed method, we use singular spectrum analysis to decompose the series into signal and noise in order to reduce the variance of the bootstrapped series. The noise is then bootstrapped by K-means clustering-based generation from a Gaussian normal distribution (KM.N) before being added back to the signal, resulting in the bootstrapped series. We apply the benchmark models for electricity load forecasting, SARIMA, NNAR, TBATS, and DSHW, to all new bootstrapped series and determine the multistep-ahead point forecasts. The forecast values obtained from the original series are compared with the mean and median across all forecasts calculated from the bootstrapped series, using the Malaysian, Polish, and Indonesian hourly load series for 12, 24, and 36 steps ahead. We conclude that, in this case, the proposed bootstrapping method improves the accuracy of multistep-ahead forecast values, especially for the SARIMA and NNAR models.

1. Introduction

Electricity load forecasting plays a critical role in controlling the balance between power demand and supply. Sometimes, the energy demand exceeds the energy supply and vice versa, which results in financial losses. An important aspect of a smart grid system is determining an accurate load forecasting model. Electricity load forecasting provides information that will simplify the work of planning consumption, generation, distribution, and other essential tasks of the smart grid system [1,2].

Much work has been performed to develop models and strategies to improve the electricity load forecasting accuracy. Generally, an hourly load series shows three relationships, i.e., between the observations for consecutive hours on a particular day, between the observations for the same hour on consecutive days, and between the observations for the same hour on the same day in successive weeks. In certain countries, the hourly load series may become more complex due to calendar variations [3]. The effect of calendar variation is usually considered by including a dummy variable in the model [4,5,6,7]. In countries with four seasons, the temperature is often included in the load forecasting model [8,9]. For countries with two seasons, such as Malaysia, it is also possible to include temperature information to improve the forecasts’ accuracy [10]. Many models, from simple to complex, have been proposed and developed by researchers and practitioners around the world to improve the accuracy of electricity load forecasting, e.g., regression and seasonal autoregressive integrated moving average (SARIMA) models [4,11,12], exponential smoothing [3,6,13,14,15], neural network (NN) [16,17,18,19], singular spectrum analysis (SSA) [20,21,22], wavelets [23,24], fuzzy systems [10,25,26], support vector machine [1,21,27,28], among others. However, the most suitable model for electricity load forecasting in a given country may not be the best to model the data in another country because of different consumption and behavioral characteristics.

In this study, we discuss the implementation of the bootstrap aggregating method to improve the accuracy of multistep-ahead load forecasts. Bootstrap aggregation, known by the acronym “bagging”, was proposed by [29] to reduce the variance of a predictor. It works by generating replicated bootstrap samples of the training data and using them to obtain an aggregated predictor. Bagging aims to improve the point forecast by accounting for three sources of uncertainty, namely, the parameter estimates, the determination of the appropriate model, and the noise. In 2016, [30] successfully extended this method to time series forecasting by using the moving block bootstrap (MBB). Further, [31] explored how bagging improves point forecasts and showed that addressing model uncertainty through model selection contributed most to the success of bagging in time series. As described in [30], the MBB bagging method first applies the Box–Cox transform to the original series and then decomposes it into trend, seasonal, and noise components using STL (Seasonal and Trend decomposition using Loess), a decomposition method developed by [32]. In MBB, the noise is bootstrapped and added back to the trend and seasonal components. The new transformed bootstrapped series are then back-transformed and modeled. However, MBB is more appropriate for bootstrapping stationary time series. When the original data are not stationary, the bootstrapped series may be very noisy and may not fluctuate like the original series [30].

Recently, [33] proposed three clustering-based bootstrap aggregating methods, i.e., smoothed MBB (S.MBB), K-means clustering-based (KM), and K-means clustering-based generation from a Gaussian normal distribution (KM.N), which perform better on noisy and fluctuating data. To adapt to fluctuating data, the S.MBB method smooths the noise using simple exponential smoothing before applying MBB. Meanwhile, the KM and KM.N methods adapt to noisy series by first applying K-means clustering. The original series is clustered into K groups, and new time series are then created based on the clusters. The difference between KM and KM.N lies in how they generate the bootstrap series. In KM, a new time series is created directly by sampling values from the clusters, while in KM.N, it is created by generating values from Gaussian normal distributions whose parameters are estimated from the clusters. Both the KM and KM.N methods succeeded in producing bootstrapped time series with low variance among them. Based on the experimental study of electricity load series with multiple seasonal and calendar effects, KM.N performed better than the KM method [33]. However, this method creates bootstrapped series from the original data without separating signal and noise. Thus, in more complex series where the calendar effect may not be clearly visible in the original data, it will produce noisier bootstrapped series at specific points, especially at times affected by calendar variation.

Inspired by [30,33], this study proposes an SSA–clustering-based method named SSA.KM.N as a modification of KM.N. Our proposed method uses singular spectrum analysis (SSA) as an alternative to the STL step in MBB and applies KM.N to generate new series from the remainder of the SSA decomposition. The literature shows that SSA is powerful in decomposing time series with complex seasonal patterns [5,20]. SSA breaks down time series that have trends and multiple seasonal components and are affected by calendar variation into signal and noise; the noise generally contains the extreme values that represent calendar effects in more detail. By taking advantage of the respective strengths of SSA and KM.N, our methodology can better adapt to fluctuating time series related to the effects of calendar variation. Bootstrapping the noise using KM.N and adding it to the signal is expected to produce bootstrapped time series with low variance and values close to the original series.

In this work, the proposed method is compared with KM.N by bootstrapping four load time series: two Malaysian series with different sample sizes and time periods, one from Poland, and one from Indonesia. We evaluate the impact and benefits of applying SSA.KM.N and KM.N in addressing the sources of uncertainty in time series forecasting and their success in increasing the accuracy of multistep-ahead point forecasts obtained by standard models such as SARIMA, NNAR, TBATS, and DSHW.

The rest of the paper is organized as follows. Section 2 describes the methods used in this paper, starting from forecasting methods, decomposition, bagging, and ensemble methods. We also present the procedure of our proposed approach in this section. Section 3 reports the application of KM.N and SSA.KM.N to the four electricity load time series and shows the error evaluation for 12, 24, and 36 steps-ahead point forecasts obtained from SARIMA, NNAR, DSHW, and TBATS for further investigation and assessment. Conclusions are found in Section 4.

2. Materials and Methods

This section contains a brief overview of the methods used for time series modeling and forecasting, the decomposition method, the ensemble learning, and the proposed approach.

2.1. Forecasting Methods

SARIMA and exponential smoothing methods (i.e., TBATS and DSHW) are popular approaches for forecasting time series with trend and seasonality. On the other hand, NNAR is a powerful method for capturing nonlinear relationships in time series data. These four methods are frequently used in modeling load series, and their forecast accuracy is used as a benchmark for other proposed methods [4,10,22]. For example, the Spanish Transmission System Operator uses autoregressive (AR) and NN models [7].

The seasonal ARIMA model, denoted SARIMA$(p,d,q)(P,D,Q)_s$, is an extension of the ARIMA model that accommodates the seasonal component of the time series [34,35], and can be written as follows:

$$\phi_p(B)\,\Phi_P(B^s)\,(1-B)^d\,(1-B^s)^D\, y_t = \theta_q(B)\,\Theta_Q(B^s)\,\varepsilon_t, \tag{1}$$

where $y_t$ is the observation at time $t$, $s$ is the seasonal period, and $p$, $P$, $q$, and $Q$ are the orders of the autoregressive, seasonal autoregressive, moving average, and seasonal moving average terms, respectively. The superscripts $d$ and $D$ denote the orders of regular and seasonal differencing, while $B$ is the backshift operator, and $\phi_p(B)$ and $\theta_q(B)$ are polynomials in $B$ of degrees $p$ and $q$, respectively. The notations $\Phi_P(B^s)$ and $\Theta_Q(B^s)$ are polynomials in $B^s$ of degrees $P$ and $Q$, and $\varepsilon_t$ is white noise. The orders $p$, $P$, $q$, and $Q$ can be determined from the correlogram and partial correlogram. Oftentimes, the identification of these orders is not an easy task and requires user experience [36]. The automatic algorithm discussed in [37], available through the “auto.arima” function in R, can be used to help handle this problem [31]. However, other researchers may prefer to estimate the parameters manually instead of using automated packages [38]. In this study, we use the “Arima” function included in the R package “forecast” [39].

NNAR is a feedforward neural network that consists of lagged input neurons, one hidden layer with a nonlinear activation function, and one output neuron [40,41]. The NNAR model can be represented as in Equation (2),

$$\hat{y}_t = \beta_0 + \sum_{j=1}^{k} \beta_j\, f_j(x_j), \tag{2}$$

where $\beta_0$ and $\alpha_{0j}$ are biases, $k$ is the number of neurons in the hidden layer, $p$ is the order of the non-seasonal component, while $P$ is the order of the seasonal component. The sigmoid function at the $j$th neuron in the hidden layer, $f_j$, is defined in Equation (3),

$$f_j(x_j) = \frac{1}{1 + e^{-x_j}}, \tag{3}$$

where $x_j = \alpha_{0j} + \sum_{i=1}^{p} \alpha_{ij}\, y_{t-i} + \sum_{i=1}^{P} \alpha_{(p+i)j}\, y_{t-is}$ and $s$ is the seasonal period. In its implementation, we use the “nnetar” function in the R package “forecast” [39]. Later in the experimental study, $P$ is set to 1, $p$ is the optimal number of lags for the linear model fitted to the seasonally adjusted data, and $k$ is determined by the rounded value of $(p + P + 1)/2$. The final forecast values are obtained by averaging 20 networks with different random starting weights.
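As a rough illustration of the network structure described above, the following sketch builds the lagged inputs and evaluates one forward pass of a single-hidden-layer network with sigmoid activations. It is not the “nnetar” implementation: the function names, the argument `s` for the seasonal period, and the weight layout are hypothetical, and the hidden-layer size rule simply mirrors the rounded value of (p + P + 1)/2 mentioned in the text.

```python
import math


def nnar_inputs(series, p, P, s):
    """Return the lagged inputs y[t-1..t-p] and y[t-s..t-P*s] for the next step."""
    lags = sorted(set(range(1, p + 1)) | {s * i for i in range(1, P + 1)})
    return [series[-lag] for lag in lags]


def hidden_size(p, P):
    """Hidden-layer size: rounded value of (p + P + 1) / 2.

    Note: Python's round() rounds exact halves to the nearest even integer.
    """
    return round((p + P + 1) / 2)


def nnar_forward(x, hidden_weights, hidden_biases, output_weights, output_bias):
    """One forward pass: sigmoid hidden layer followed by a linear output neuron."""
    hidden = []
    for weights, bias in zip(hidden_weights, hidden_biases):
        activation = bias + sum(w * xi for w, xi in zip(weights, x))
        hidden.append(1.0 / (1.0 + math.exp(-activation)))
    return output_bias + sum(w * h for w, h in zip(output_weights, hidden))
```

In practice the weights would be estimated from the training data, and the forecasts from several randomly initialized networks would be averaged, as described above.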

TBATS and DSHW are modifications of exponential smoothing that handle trends and multiple seasonal patterns in time series forecasting [42]. DSHW, proposed by [13], accommodates two seasonal patterns where one cycle may be nested within another. Meanwhile, TBATS, proposed by [3], can handle more complex seasonal patterns. The term “complex” means that the time series has a trend and multiple seasonal patterns with integer or non-integer periods, which may include dual-calendar seasonal effects. Successful applications of DSHW and TBATS in modeling electricity load time series can be found in [3,13,43]. Details of these models can be found in [3,13,42,44]. In this paper, TBATS and DSHW are fitted by using the “tbats” and “dshw” functions included in the R package “forecast” [39].

2.2. SSA Decomposition Method

SSA is a technique in the field of time series analysis that has a vast range of applicability in decomposition [20], missing value imputation [45], and forecasting [46]. In this study, we focus on the use of the SSA algorithm to decompose time series into the following two components: signal and unstructured noise. SSA consists of four steps, namely, embedding, singular value decomposition (SVD), grouping, and diagonal averaging [47,48].

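In embedding, we transform a time series $y_1, \dots, y_N$ into an $L \times K$ trajectory matrix, where $L$ is the window length and $K = N - L + 1$, as in (4) as follows:

$$\mathbf{Z} = \begin{pmatrix} y_1 & y_2 & \cdots & y_K \\ y_2 & y_3 & \cdots & y_{K+1} \\ \vdots & \vdots & \ddots & \vdots \\ y_L & y_{L+1} & \cdots & y_N \end{pmatrix}. \tag{4}$$

The matrix $\mathbf{Z}$ is then decomposed by SVD and expressed as follows:

$$\mathbf{Z} = \sum_{i=1}^{d} \sqrt{\lambda_i}\, U_i V_i^{T}, \tag{5}$$

where $\lambda_1 \ge \dots \ge \lambda_d > 0$ are the nonzero eigenvalues of matrix $\mathbf{Z}\mathbf{Z}^{T}$, and $U_i$ and $V_i$ are the left and right singular vectors of the matrix $\mathbf{Z}$ corresponding to the eigenvalues $\lambda_i$, respectively. We need to determine $r$, the number of signal components used for reconstruction in the grouping stage. Finally, we obtain the signal and noise by the diagonal averaging procedure, i.e., averaging over the anti-diagonals, which maps the matrices of the signal and noise components back to time series. The original time series can then be expressed as follows:

$$y_t = s_t + n_t, \tag{6}$$

where $s_t$ is the signal and $n_t$ is the noise.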
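The four SSA steps can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function names, the window length `L`, and the number of signal components `r` are hypothetical inputs, and the SVD is replaced by power iteration with deflation on the lag-covariance matrix so that no external library is needed. For real hourly data, a linear algebra library's SVD would be the practical choice.

```python
import math
import random


def embed(series, L):
    """Embedding: L x K trajectory (Hankel) matrix with K = N - L + 1."""
    K = len(series) - L + 1
    return [[series[i + j] for j in range(K)] for i in range(L)]


def diagonal_average(X):
    """Diagonal averaging: average each anti-diagonal to get a series back."""
    L, K = len(X), len(X[0])
    sums = [0.0] * (L + K - 1)
    counts = [0] * (L + K - 1)
    for i in range(L):
        for j in range(K):
            sums[i + j] += X[i][j]
            counts[i + j] += 1
    return [s / c for s, c in zip(sums, counts)]


def leading_eigenvector(S, rng, iters=500):
    """Power iteration for the dominant eigenvector of a symmetric PSD matrix."""
    n = len(S)
    v = [rng.uniform(0.5, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iters):
        w = [sum(S[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm < 1e-12:
            break
        v = [x / norm for x in w]
    return v


def ssa_decompose(series, L, r, seed=0):
    """Split a series into signal (first r eigentriples) and noise (remainder)."""
    rng = random.Random(seed)
    Z = embed(series, L)
    K = len(Z[0])
    # S = Z Z^T; its eigenvectors are the left singular vectors of Z.
    S = [[sum(Z[a][k] * Z[b][k] for k in range(K)) for b in range(L)]
         for a in range(L)]
    signal_matrix = [[0.0] * K for _ in range(L)]
    for _ in range(r):
        u = leading_eigenvector(S, rng)
        lam = sum(u[a] * S[a][b] * u[b] for a in range(L) for b in range(L))
        proj = [sum(u[a] * Z[a][k] for a in range(L)) for k in range(K)]  # u^T Z
        for a in range(L):
            for k in range(K):
                signal_matrix[a][k] += u[a] * proj[k]  # rank-one term u u^T Z
            for b in range(L):
                S[a][b] -= lam * u[a] * u[b]  # deflate to expose the next component
    signal = diagonal_average(signal_matrix)
    noise = [y - s for y, s in zip(series, signal)]
    return signal, noise
```

By construction, the returned signal and noise add up to the original series, which is the additive decomposition that the bootstrapping step relies on.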

2.3. Bagging and Ensemble Methods

The K-means clustering-based bagging method, proposed by [33], clusters a univariate time series into K groups by the K-means method. Each cluster has its mean and standard deviation as parameters. A Gaussian normal distribution with these parameters is then used to generate a random number as a new value of the bootstrap time series. For example, suppose $y_t$ is the value of the original time series at time $t$ and belongs to the $i$th cluster, which has mean $\mu_i$ and standard deviation $\sigma_i$. In that case, we obtain the new value of the bootstrap time series at time $t$ by generating a random value from the Gaussian normal distribution $N(\mu_i, \sigma_i^2)$ of that cluster. The code for bootstrapping time series by K-means clustering-based generation from a Gaussian normal distribution (KM.N) can be found in [49].
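The idea can be sketched as follows. This is an illustrative reading of the description above rather than the code referenced in [49]: the function names, the quantile-based initialization of the cluster centers, and the use of the population standard deviation are assumptions of this sketch.

```python
import random
import statistics


def kmeans_1d(values, k, iters=100):
    """Lloyd's algorithm on scalar values; returns one cluster label per value."""
    ordered = sorted(values)
    # Assumption of this sketch: start the centers at evenly spaced quantiles.
    centers = [ordered[int((i + 0.5) * len(ordered) / k)] for i in range(k)]
    labels = []
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: abs(v - centers[c])) for v in values]
        new_centers = list(centers)
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            if members:
                new_centers[c] = statistics.fmean(members)
        if new_centers == centers:
            break
        centers = new_centers
    return labels


def kmn_bootstrap(series, k, n_boot, seed=0):
    """Generate n_boot series; each point is drawn from its cluster's Gaussian."""
    rng = random.Random(seed)
    labels = kmeans_1d(series, k)
    params = {}
    for c in set(labels):
        members = [v for v, lab in zip(series, labels) if lab == c]
        # Assumption: population standard deviation of the cluster members.
        params[c] = (statistics.fmean(members), statistics.pstdev(members))
    return [[rng.gauss(*params[lab]) for lab in labels] for _ in range(n_boot)]
```

Because every new value is drawn from the distribution of the cluster that the original value belongs to, each bootstrapped series keeps the level structure of the original while varying within clusters.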

2.4. Proposed Approach

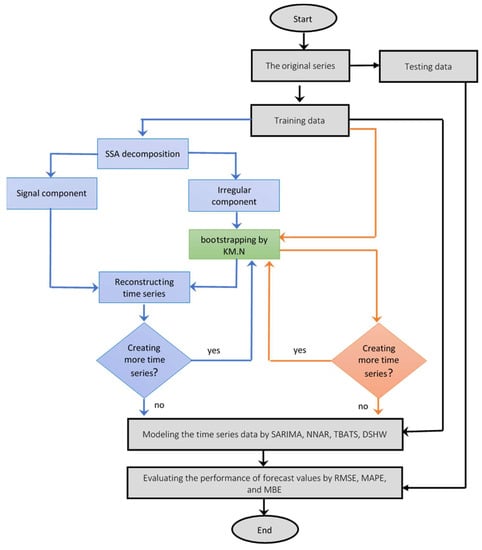

The proposed SSA.KM.N bagging method is a modification of KM.N where the first stage decomposes the original time series using SSA (Figure 1; blue cells). As shown in [20,50], SSA can be used to decompose complex time series into several simple pattern components.

Figure 1.

Procedure for generating bootstrapped time series by the SSA.KM.N method.

- Step 1. Divide the series into the following two parts: training and testing datasets;
- Step 2. Generate new series from the original training data by the KM.N method;
- Step 3. Generate new series from the original training data by the SSA.KM.N method;
  - a. Apply SSA to the original training data to define the signal and the irregular component;
  - b. Generate new series from the irregular component obtained in Step 3a by the KM.N method;
  - c. Sum each new irregular component obtained from Step 3b with the signal component so that we obtain bootstrapped series of the original training data;
- Step 4. Model the original training data and each bootstrapped series by SARIMA, NNAR, TBATS, and DSHW;
- Step 5. Calculate up to M-steps-ahead forecast values by each model obtained in Step 4;
  - a. Define the M-steps-ahead forecast values from the SARIMA, NNAR, TBATS, and DSHW models obtained from the original training data series;
  - b. Apply the mean and median to calculate the final forecast across the first $B$ bootstrapped series determined in Step 2 and Step 3;
- Step 6. Evaluate the forecast accuracy based on root mean square error (RMSE) and mean absolute percentage error (MAPE).
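Steps 1 and 3a to 3c of the procedure above can be sketched as a small composition of functions. The decomposition and noise-generation routines are passed in as arguments; the two stand-ins defined here (a moving average and a single Gaussian) are hypothetical placeholders chosen only so that the sketch runs on its own, and they are not SSA or KM.N. The toy series is likewise made up for illustration.

```python
import random
import statistics


def ssa_kmn_bootstrap(train, decompose, bootstrap_noise, n_boot):
    """Steps 3a-3c: decompose, resample the noise, add it back to the signal."""
    signal, noise = decompose(train)                     # Step 3a
    new_noises = bootstrap_noise(noise, n_boot)          # Step 3b
    return [[s + e for s, e in zip(signal, new_noise)]   # Step 3c
            for new_noise in new_noises]


# Hypothetical stand-ins so the sketch is runnable; they are NOT SSA or KM.N.
def moving_average_decompose(series, window=3):
    half = window // 2
    signal = []
    for t in range(len(series)):
        lo, hi = max(0, t - half), min(len(series), t + half + 1)
        signal.append(statistics.fmean(series[lo:hi]))
    noise = [y - s for y, s in zip(series, signal)]
    return signal, noise


def gaussian_noise(noise, n_boot, seed=0):
    rng = random.Random(seed)
    mu, sigma = statistics.fmean(noise), statistics.pstdev(noise)
    return [[rng.gauss(mu, sigma) for _ in noise] for _ in range(n_boot)]


series = [10, 12, 11, 13, 12, 14, 13, 15, 14, 16, 15, 17]  # made-up data
train, test = series[:9], series[9:]                       # Step 1
boots = ssa_kmn_bootstrap(train, moving_average_decompose, gaussian_noise, n_boot=5)
```

Passing the two routines as arguments keeps the composition independent of how the signal is extracted or how the noise is resampled, so an SSA decomposition and a KM.N generator can be substituted without changing the surrounding code.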

The two accuracy measures considered in this study can be defined as follows. MAPE, calculated by Equation (7), is frequently used in evaluating load forecasting accuracy since it is a scale-independent error that can be used to compare forecast performance between different data sets [44,51]. Meanwhile, RMSE, calculated using Equation (8), is a scale-dependent error that can be used to compare the accuracy of several models on the same data set [51].

$$\text{MAPE} = \frac{100\%}{H} \sum_{h=1}^{H} \left| \frac{y_{n+h} - \hat{y}_{n+h}}{y_{n+h}} \right|, \tag{7}$$

$$\text{RMSE} = \sqrt{\frac{1}{H} \sum_{h=1}^{H} \left( y_{n+h} - \hat{y}_{n+h} \right)^2}. \tag{8}$$

In addition, we also evaluate the model using the mean bias error (MBE), as defined in Equation (9). It indicates whether there is a positive or negative bias [52]. We can calculate MBE by the following:

$$\text{MBE} = \frac{1}{H} \sum_{h=1}^{H} \left( \hat{y}_{n+h} - y_{n+h} \right), \tag{9}$$

where $\hat{y}_{n+h}$ and $y_{n+h}$ are the predicted value and the actual value at time $n+h$, respectively, $H$ is the number of observations included in the calculation, and $n$ is the size of the training data.
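The three measures are straightforward to compute. The sketch below uses the common textbook definitions; the function names are hypothetical, and the sign convention for MBE (predicted minus actual) is an assumption of this sketch. The toy numbers are made up to show how positive and negative errors can cancel in MBE.

```python
import math


def rmse(actual, predicted):
    """Root mean square error (scale-dependent)."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent (scale-independent)."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def mbe(actual, predicted):
    """Mean bias error; assumed convention: predicted minus actual."""
    return sum(p - a for a, p in zip(actual, predicted)) / len(actual)


actual = [100.0, 200.0, 400.0]      # made-up values
predicted = [110.0, 190.0, 400.0]   # one over-forecast, one under-forecast

print(rmse(actual, predicted), mape(actual, predicted), mbe(actual, predicted))
```

In this toy case the MAPE is about 5% and the RMSE is clearly positive, while the MBE is zero because the two errors of opposite sign offset each other.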

In this study, each bootstrapped series is modeled separately, and the final forecast for time t is obtained by the following two ensemble methods: the mean and the median across all forecast values at time t, calculated from the bootstrap series. We obtain the mean and the median of the predicted values generated from the first $B$ bootstrapped series, with $B$ between 10 and 100, to investigate whether the number of generated time series affects the accuracy of the forecast results.
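The two ensemble rules amount to taking, at each forecast horizon, the mean or the median across the forecast paths of the first few bootstrapped series. A minimal sketch, with a hypothetical function name and made-up forecast values:

```python
import statistics


def ensemble_forecasts(forecasts, n_series):
    """Mean and median at each horizon across the first n_series forecast paths."""
    subset = forecasts[:n_series]
    horizons = list(zip(*subset))  # regroup values by forecast horizon
    means = [statistics.fmean(h) for h in horizons]
    medians = [statistics.median(h) for h in horizons]
    return means, medians


# Four made-up two-step-ahead forecast paths, one per bootstrapped series.
forecasts = [[10.0, 20.0], [12.0, 22.0], [20.0, 30.0], [11.0, 21.0]]
means, medians = ensemble_forecasts(forecasts, 3)
```

With the first three paths, the third path pulls the mean upward while the median stays at the middle path, which illustrates why the two rules can give different final forecasts.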

3. Results and Discussion

In this section, we discuss two hourly electricity load series from Johor, Malaysia, and two other electricity load datasets from Poland and Indonesia. We use these four data sets to show the generality of our work for electricity load forecasting.

3.1. Application to the Hourly Electricity Load of Johor, Malaysia

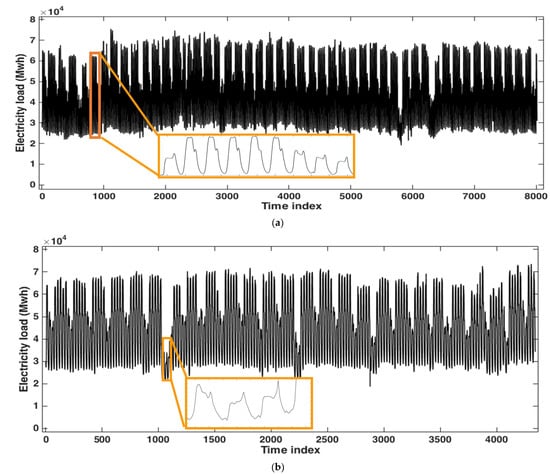

This subsection focuses on short-term forecasting of hourly electricity load with application to Malaysian data. We consider two datasets with different sizes that can be accessed in [53]. The first is the hourly load series from 1 January to 31 December 2009, and the second is the hourly load series from 1 January to 31 July 2010, which are depicted in Figure 2 (see Figure 2a,b, respectively).

Figure 2.

Hourly load series of Johor, Malaysia: (a) 1 January time 00:00, to 30 November 2009, time 23:00; (b) 1 January, time 00:00, to 30 June 2010, time 23:00.

The period from 1 January, time 00:00, to 30 November 2009, time 23:00, and the period from 1 January, time 00:00, to 30 June 2010, time 23:00, were used for estimation purposes as the training data. The remainder was used to evaluate the forecast performance of the models. These periods are summarized in Table 1.

Table 1.

Training and testing datasets of hourly load series used in the experimental study.

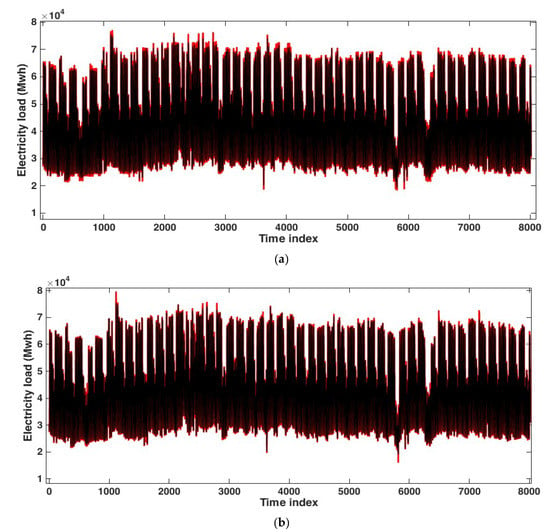

Our analysis generated 100 bootstrap time series using the KM.N and the SSA.KM.N methods. Note that the original series is included in those 100 bootstrap series. Figure 3 shows the original time series and a realization of the bootstrap series by each of the two methods.

Figure 3.

The original series (in black) and the bootstrap time series (in red) obtained by the (a) KM.N method; (b) SSA.KM.N method.

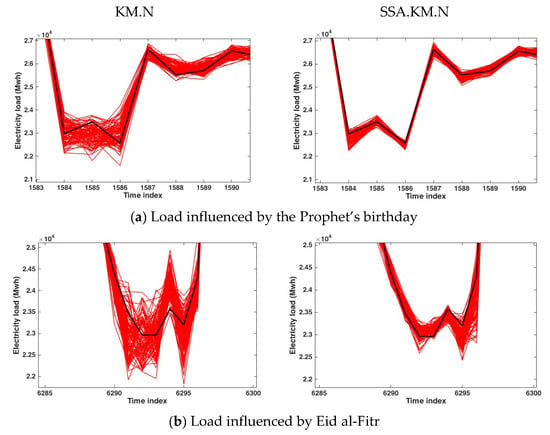

From Figure 3, we can see that both the KM.N and SSA.KM.N methods produce bootstrap series (in red) with almost the same pattern as the original series (in black). In certain parts, where the data have lower or higher values than at other times as a result of calendar variation, the SSA.KM.N series are visually closer to the original time series than the KM.N series. As illustrations, we zoom in on the load during the time periods influenced by the Prophet’s birthday (Figure 4a) and Eid al-Fitr (Figure 4b). Figure 4 shows that the variance of the bootstrapped series obtained by KM.N (left) is larger than that of the series obtained by SSA.KM.N (right).

Figure 4.

The original series (in black) and the bootstrap time series (in red) obtained by the KM.N method (left) and SSA.KM.N (right) (a) for the time period influenced by the Prophet’s birthday; (b) for the time period influenced by Eid al-Fitr.

The one-step-ahead forecast accuracy of SARIMA, NNAR, TBATS, and DSHW is shown in Table 2. All these calculations were performed in the R software. From the analysis of the correlogram and the partial correlogram, the model SARIMA(2,0,3)(2,1,2)$_{24}$ was chosen for the first data set and SARIMA(2,0,0)(3,1,0)$_{24}$ for the second data set. The most appropriate NNAR, TBATS, and DSHW models were fitted and chosen automatically by the “nnetar”, “tbats”, and “dshw” functions in R. Based on Table 2, we can see that for both the first and second data sets, NNAR and DSHW produce smaller RMSE and MAPE than SARIMA and TBATS in the case of one-step-ahead forecasting.
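For reference, the two selected SARIMA specifications can be written out in backshift-operator form. The notation here is an assumption of this note: $y_t$ is the hourly load, $B$ is the backshift operator, $\varepsilon_t$ is white noise, and the moving average polynomials follow the Box–Jenkins sign convention (R's “Arima” function reports moving average coefficients with the opposite sign). For the first data set, SARIMA(2,0,3)(2,1,2) with seasonal period 24:

$$(1 - \phi_1 B - \phi_2 B^2)(1 - \Phi_1 B^{24} - \Phi_2 B^{48})(1 - B^{24})\, y_t = (1 - \theta_1 B - \theta_2 B^2 - \theta_3 B^3)(1 - \Theta_1 B^{24} - \Theta_2 B^{48})\, \varepsilon_t$$

For the second data set, SARIMA(2,0,0)(3,1,0) with seasonal period 24:

$$(1 - \phi_1 B - \phi_2 B^2)(1 - \Phi_1 B^{24} - \Phi_2 B^{48} - \Phi_3 B^{72})(1 - B^{24})\, y_t = \varepsilon_t$$

Both models apply one seasonal difference at lag 24 and no regular differencing, so they describe the hour-by-hour change relative to the same hour on the previous day.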

Table 2.

RMSE and MAPE for one-step-ahead forecasts for the testing data of the two data sets of hourly electricity load in Malaysia, obtained by SARIMA, NNAR, TBATS, and DSHW.

Furthermore, we investigate how these four models perform for multistep-ahead load forecasting with and without bagging. The comparative values of RMSE and MAPE for 12, 24, and 36 steps ahead for SARIMA, NNAR, TBATS, and DSHW are presented in Table 3, Table 4, Table 5 and Table 6, respectively. These tables also present the RMSE and MAPE obtained from the forecast values of each model with four different numbers of bootstrap time series, to assess whether the number of bootstrap samples affects the accuracy of the forecasts.

Table 3.

RMSE and MAPE of $M$-step-ahead forecasts obtained by the SARIMA model from the original series and the KM.N and SSA.KM.N bootstrap series.

Table 4.

RMSE and MAPE of $M$-step-ahead forecasts obtained by the NNAR model from the original series and the KM.N and SSA.KM.N bootstrap series.

Table 5.

RMSE and MAPE of $M$-step-ahead forecasts obtained by the TBATS model from the original series and the KM.N and SSA.KM.N bootstrap series.

Table 6.

RMSE and MAPE of $M$-step-ahead forecasts obtained by the DSHW model from the original series and the KM.N and SSA.KM.N bootstrap series.

Based on Table 3, we can see that the SSA.KM.N performed better than the KM.N in reducing the RMSE and MAPE of the 24- and 36-steps-ahead forecasts obtained by the SARIMA model. The green cells in Table 3 represent the RMSE and MAPE values of the SARIMA model obtained from the bootstrap series that are lower than those obtained from the original series. Bold values represent the lowest value in a column of each bagging method with green cells.

Moreover, in Table 3, it can be seen that for the first dataset, SARIMA provided highly accurate forecasts one day ahead (next 24 h), indicated by MAPE values of less than 2%. For the second dataset, the MAPE values were between 2% and 3%. For each bagging method, there is no notable difference between the forecast results obtained by the mean and the median ensemble.

Based on the analysis for multistep-ahead forecasting by the SARIMA model with the bagging methods, it cannot be concluded that the more bootstrapped series used in the calculation, the more accurate the forecasting results will be. As we can see, the values in bold (see Table 3) do not all fall in the row for $B = 100$ bootstrapped series; some fall in the row for $B = 25$.

The comparative forecast results obtained by the NNAR model reconstructed from the original and bootstrap series are presented in Table 4. Based on the analysis of this table, the NNAR tends to produce larger RMSE than SARIMA. This is not in line with the results for predicting one step ahead (see Table 2). However, both the KM.N and SSA.KM.N methods can improve the accuracy performance of the forecasts. The 36-steps-ahead forecast values obtained from the bootstrap series using SSA.KM.N produced a larger RMSE than those obtained from the original time series, but the MAPE value showed the opposite direction. Similar to the results shown in Table 3, in this case, a greater number of bagging samples does not necessarily result in a better performance in terms of forecasting accuracy.

Table 5 shows that, for the first dataset, bagging did not improve the forecast accuracy obtained by the TBATS model. For the second dataset, the SSA.KM.N enhanced the performance of forecasting accuracy, but this did not apply to the KM.N. Based on the RMSE, the forecast values calculated from the TBATS model were more accurate than those obtained from the NNAR model.

Contrary to the results shown in Table 5, bagging implementation improved the forecasting accuracy of the DSHW model for the first dataset but not for the second dataset (see Table 6). In this case, the KM.N bagging performed better than the SSA.KM.N in reducing the forecasting error. Table 6 shows that the MAPE values obtained by the DSHW model for the first dataset are on average 2–3 times higher than those obtained by the DSHW model for the second dataset. However, in this case, the application of KM.N was able to reduce the MAPE value for 12-step ahead by approximately 36%.

By implementing the SSA.KM.N in the hourly load forecasting of Malaysia up to 36 steps ahead, the RMSE was reduced by 4.97% and 40% when using SARIMA and NNAR, respectively. Meanwhile, KM.N reduced the RMSE value by up to 3.8% for SARIMA and up to 35.43% for NNAR. Furthermore, although in one case the SSA.KM.N bagging implementation for predicting up to 36 steps ahead using TBATS and DSHW decreased the RMSE by more than 10%, in another case it behaved differently. Similar conclusions were obtained when analyzing the MAPE.
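The percentage reductions quoted here can be read as relative changes with respect to the error of the model fitted to the original series. The exact formula is not stated in the text, so the following is an assumed but conventional definition:

$$\text{Reduction}\ (\%) = 100 \times \frac{\text{RMSE}_{\text{original}} - \text{RMSE}_{\text{bagged}}}{\text{RMSE}_{\text{original}}}$$

As a purely hypothetical example, an RMSE that falls from 500 to 300 after bagging corresponds to a reduction of $100 \times (500 - 300)/500 = 40\%$. The same expression applies to MAPE by replacing RMSE with MAPE.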

For further evaluation, we consider MBE to see the direction of the bias of the models and present the results in Table 7 and Table 8. The MBE is supposed to provide information on the long-term performance of the model. Based on Table 7 and Table 8, the interpretation of the model performance is consistent with that based on RMSE and MAPE. The directions of the bias generated by the models with and without bagging are the same, except for the forecasting of 12 steps ahead by SARIMA (see Table 7). This may be related to a weakness of MBE, where positive and negative errors can cancel each other, so that high individual errors can result in low MBE values. However, we can see that the bagging methods, both KM.N and SSA.KM.N, reduce the MBE values obtained from the NNAR model compared with those obtained from the original time series. In the case of modeling the second data set by TBATS, SSA.KM.N yields lower MBEs than the KM.N bagging method.

Table 7.

MBEs of $M$-step-ahead forecasts for the first data set obtained by SARIMA, NNAR, TBATS, and DSHW models.

Table 8.

MBEs of $M$-step-ahead forecasts for the second data set obtained by SARIMA, NNAR, TBATS, and DSHW models.

3.2. Application to the Hourly Electricity Load of Poland

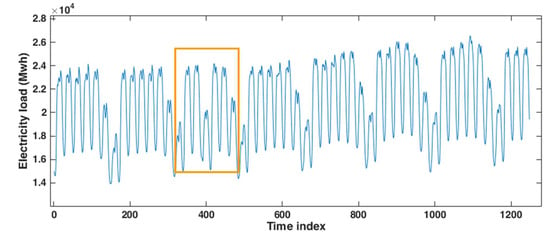

Figure 5 shows the hourly electricity load of Poland, in Megawatts (Mwh), from 26 October, at 01:00 to 16 December 2020 at 00:00. The data were accessed from https://www.pse.pl/obszary-dzialalnosci/krajowy-system-elektroenergetyczny/zapotrzebowanie-kse (accessed on 21 January 2021). This data set contains the linear trend and multiple seasonal patterns with daily and weekly periods. There was a slight pattern change around time index 400 (11 November 2020) due to the influence of the National Independence Day holiday (shown by the orange rectangle in Figure 5). We fit the model using the first 1212 observations and evaluated the forecasting accuracy performance using the last 36 observations.

Figure 5.

The hourly electricity load of Poland between 26 October and 16 December 2020.

In this experimental study, we generate 50 bootstrapped series from the original electricity load of Poland using KM.N and SSA.KM.N. We then model each generated time series by SARIMA, NNAR, TBATS, and DSHW, in the same way as for the Malaysian data. The accuracy evaluation was based on RMSE, MAPE, and MBE for 12, 24, and 36 steps ahead, and is summarized in Table 9, Table 10 and Table 11.

Table 9.

RMSEs of $M$-step-ahead forecasts for the hourly electricity load of Poland obtained by SARIMA, NNAR, TBATS, and DSHW models.

Table 10.

MAPEs of $M$-step-ahead forecasts for the hourly electricity load of Poland obtained by SARIMA, NNAR, TBATS, and DSHW models.

Table 11.

MBEs of $M$-step-ahead forecasts for the hourly electricity load of Poland obtained by SARIMA, NNAR, TBATS, and DSHW models.

Based on Table 9 and Table 10, we can see that SSA.KM.N can improve the accuracy of the 24- and 36-steps-ahead electricity load forecasts for the Polish data using the NNAR model, while KM.N fails to improve forecasting accuracy for this model. On the other hand, the KM.N bagging method works well on the DSHW model, while SSA.KM.N does not perform as well. However, both of them succeeded in increasing the forecasting accuracy of the SARIMA model.

We can see from Table 10 that implementing the bagging method on Polish data does not reduce the MAPE values in the case of the TBATS model. Still, it makes the MBEs smaller (in absolute values) than those obtained from the original data (Table 11). Furthermore, although the RMSE and MAPE values of the DSHW model decreased with bagging, the results were the opposite when analyzing the MBE values. SSA.KM.N gives better outcomes for the NNAR model, while KM.N is better for the DSHW model.

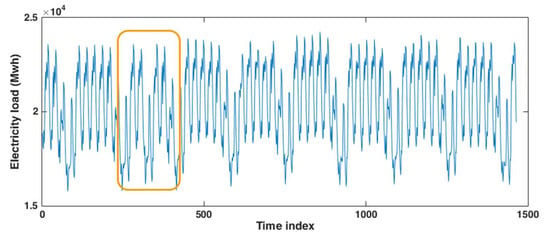

3.3. Application to the Hourly Electricity Load of Java-Bali, Indonesia

To show the generality of the implementation of bagging methods in electricity load forecasting, we also discuss the hourly electricity load of Java-Bali, Indonesia. The data consists of 1464 observations, from 1 October to 30 November 2015. Figure 6 shows that the data has no trend but has double seasonal patterns. It is relatively stable except at time points around 312–336 (14 October 2015) due to the influence of the Hijriyah New Year holiday (shown by the orange rectangle in Figure 6). Moreover, this data set was also discussed in [54].

Figure 6.

The hourly electricity load of Indonesia between 1 October and 30 November 2015.

We generate 50 bootstrapped time series for this case based on 1428 observations (1 October at 01:00 to 29 November at 12:00). The error evaluations in terms of RMSE, MAPE, and MBE obtained from the SARIMA, NNAR, TBATS, and DSHW models are summarized in Table 12, Table 13 and Table 14, respectively. The overall results shown in Table 12, Table 13 and Table 14 for this application are similar to those of the previous applications for Malaysian and Polish electricity load data.

Table 12.

RMSE of $M$-step-ahead forecasts for the hourly electricity load of Indonesia obtained by SARIMA, NNAR, TBATS, and DSHW models.

Table 13.

MAPE of $M$-step-ahead forecasts for the hourly electricity load of Indonesia obtained by SARIMA, NNAR, TBATS, and DSHW models.

Table 14.

MBE of $M$-step-ahead forecasts for the hourly electricity load of Indonesia obtained by SARIMA, NNAR, TBATS, and DSHW models.

Table 13 shows that SSA.KM.N produces lower MAPE than KM.N for the NNAR model. Compared with that obtained from the original data, the MAPE of the NNAR model was reduced by up to 31.38%, 24.27%, and 17% for the 12-, 24-, and 36-steps-ahead forecast values, respectively. Meanwhile, KM.N failed to lower the MAPE value for 12 steps ahead, and lowered it by only approximately 8.74% and 11% for the 24- and 36-steps-ahead forecast values, respectively.

In addition, for the NNAR model, the MBE presented in Table 14 shows that applying the SSA.KM.N bagging method yields less bias for the 12- and 24-steps-ahead forecast values than forecasting without bagging. However, this does not apply to KM.N.

Based on the experimental findings of the four data sets, bagging implementation can work well to improve the forecasting accuracy of the SARIMA and NNAR models. However, the TBATS and DSHW did not yield the same behavior. The success of this implementation is thought to be influenced by the uncertainty of the models. In this experimental study, we found that some bootstrapped series failed to be modeled by TBATS and DSHW, affecting the final forecast results calculated based on the mean and median across all the forecast values.

The number of bootstrap series does not seem to affect the forecasting accuracy calculated by the mean and median ensemble. In some cases, the SSA.KM.N was able to improve the multistep-ahead forecasting accuracy, but in other cases, the KM.N provided better results.

In this case, the selection of the model is an important step. Applying bagging with a suitable forecasting model can increase the accuracy of multistep-ahead forecasts. Further development of hybrid models, e.g., FFORMA [55] and the exponential smoothing-neural network [56], or other combinations depending on the pattern of the data, can be considered to help overcome the uncertainty of the models [30].

4. Conclusions

In this study, we evaluated the impact and benefit of applying the existing KM.N method and our proposed clustering-based bootstrap method, SSA.KM.N, in overcoming the uncertainty in multistep-ahead point forecasts of time series. We focused on time series that exhibit trend and seasonality and are affected by calendar variation, and considered two Malaysian, one Polish, and one Indonesian electricity load time series as illustrative examples.

KM.N is considered an appropriate method for bootstrapping data with complex seasonal patterns, such as electricity load data. In the proposed method, we combined SSA and KM.N with the aim of producing bootstrap values that are more similar to the original data. We used SSA to decompose the load series into signal and noise. With SSA, the observed values influenced by calendar variation appear more clearly in the noise component than in the original data. Bootstrapping this noise (residual) component and adding it back to the signal yields bootstrapped series whose values lie around the original data.
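The idea of decomposing the series, bootstrapping only the noise, and adding it back to the signal can be sketched in a few lines. This is a simplified illustration, not the implementation used in the study: the window length, the number of SSA components kept as signal, the fixed number of clusters, the quantile-based initialisation of the one-dimensional K-means, and the synthetic series are all assumptions, and every function name is hypothetical.

```python
import numpy as np

def ssa_signal(x, window, n_components):
    """Basic SSA: embed, SVD, keep the leading components, diagonal averaging."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    k = n - window + 1
    # Trajectory (Hankel) matrix: column j holds x[j], ..., x[j + window - 1].
    traj = np.column_stack([x[j:j + window] for j in range(k)])
    u, s, vt = np.linalg.svd(traj, full_matrices=False)
    approx = (u[:, :n_components] * s[:n_components]) @ vt[:n_components]
    # Diagonal averaging: entry (i, j) of the matrix belongs to time i + j.
    total = np.zeros(n)
    counts = np.zeros(n)
    for j in range(k):
        total[j:j + window] += approx[:, j]
        counts[j:j + window] += 1
    return total / counts

def kmeans_1d(values, n_clusters, n_iter=50):
    """Plain Lloyd iterations on scalar values; returns a cluster label per value."""
    centres = np.quantile(values, np.linspace(0, 1, n_clusters + 2)[1:-1])
    for _ in range(n_iter):
        labels = np.argmin(np.abs(values[:, None] - centres[None, :]), axis=1)
        for c in range(n_clusters):
            if np.any(labels == c):
                centres[c] = values[labels == c].mean()
    return np.argmin(np.abs(values[:, None] - centres[None, :]), axis=1)

def kmn_bootstrap(values, n_clusters, rng):
    """KM.N-style resampling: replace each value by a Gaussian draw whose mean
    and standard deviation are those of the cluster the value belongs to."""
    labels = kmeans_1d(values, n_clusters)
    boot = np.empty_like(values)
    for c in np.unique(labels):
        mask = labels == c
        boot[mask] = rng.normal(values[mask].mean(), values[mask].std(), size=mask.sum())
    return boot

def ssa_kmn(x, window, n_components, n_clusters, n_boot, seed=0):
    """Bootstrap only the SSA noise and add it back to the SSA signal."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    signal = ssa_signal(x, window, n_components)
    noise = x - signal
    return np.array([signal + kmn_bootstrap(noise, n_clusters, rng) for _ in range(n_boot)])

# Synthetic hourly series with a daily cycle (illustrative, not load data).
t = np.arange(24 * 14)
load = 100 + 10 * np.sin(2 * np.pi * t / 24) + np.random.default_rng(1).normal(0, 1, t.size)
boot = ssa_kmn(load, window=48, n_components=3, n_clusters=5, n_boot=50)
print(boot.shape)  # (50, 336)
```

Because the Gaussian draws are applied to the noise only, the spread of each bootstrapped series around the original is governed by the within-cluster variability of the noise rather than by the much larger variability of the load itself.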

Furthermore, we applied four models that are commonly used as benchmarks in forecasting electricity load time series: SARIMA, NNAR, TBATS, and DSHW. These four models were applied to all bootstrapped series to obtain forecasts of up to 36 steps ahead. The final forecast at time t is obtained by two ensemble methods: the mean and the median across all forecast values at time t. Based on the experimental results, we note that the number of bootstrapped series does not seem to affect the forecasting accuracy of the mean and the median ensembles. We also found that a model suitable for the original series is not necessarily good for all bootstrapped series. The accuracy of multistep-ahead forecasts can be improved when the model, with different parameters, is appropriate for both the original and the bootstrapped data. Thus, combining several models and ensemble learning methods can be a direction for future research.

Author Contributions

Conceptualization, methodology, analysis, writing-original draft preparation, W.S.; investigation, software, visualization, and project administration, W.S. and Y.Y.; writing-review and editing, supervision, validation, W.S. and P.C.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Education, Culture, Research, and Technology Indonesia with source from the DIPA DIKTI RISTEK (Direktorat Riset, Teknologi, dan Pengabdian Kepada Masyarakat, Direktorat Jenderal Pendidikan Tinggi, Riset, dan Teknologi) 2022, Number SP DIPA-023.17.1.690523/2022 (second revision on 22 April 2022), in the scheme of National Competitive Basic Research (Penelitian Dasar Kompetitif Nasional) with Contract Number 096/E5/PG.02.00.PT/2022.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data were obtained from [53] and are available at https://data.mendeley.com/datasets/f4fcrh4tn9/1 (accessed on 5 March 2021).

Acknowledgments

We thank the three anonymous reviewers for their valuable comments and suggestions to improve the quality of this paper. W. Sulandari and Y. Yudhanto acknowledge support from LPPM (Lembaga Penelitian dan Pengabdian kepada Masyarakat) Universitas Sebelas Maret and thank Subanar for his guidance. P.C. Rodrigues acknowledges financial support from the Brazilian National Council for Scientific and Technological Development (CNPq) grant “bolsa de produtividade PQ-2” 305852/2019-1.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| DSHW | Double Seasonal Holt-Winters |
| KM | K-means Clustering-Based |
| KM.N | K-means Clustering-Based Generated from Gaussian Normal Distribution |
| MAPE | Mean Absolute Percentage Error |
| MBE | Mean Bias Error |
| MBB | Moving Block Bootstrap |
| NN | Neural Network |
| NNAR | Neural Network Autoregressive |
| RMSE | Root Mean Square Error |
| SARIMA | Seasonal Autoregressive Integrated Moving Average |
| S.MBB | Smoothed Moving Block Bootstrap |
| SSA | Singular Spectrum Analysis |
| SSA.KM.N | Singular Spectrum Analysis, K-means Clustering-Based Generated from Gaussian Normal Distribution |
| STL | Seasonal and Trend decomposition using Loess |
| SVD | Singular Value Decomposition |
| TBATS | Trigonometric, Box–Cox transform, ARMA errors, Trend, and Seasonal Components |

References

- Ahmad, W.; Ayub, N.; Ali, T.; Irfan, M.; Awais, M.; Shiraz, M.; Glowacz, A. Towards Short Term Electricity Load Forecasting Using Improved Support Vector Machine and Extreme Learning Machine. Energies 2020, 13, 2907.

- Ayub, N.; Irfan, M.; Awais, M.; Ali, U.; Ali, T.; Hamdi, M.; Alghamdi, A.; Muhammad, F. Big Data Analytics for Short and Medium-Term Electricity Load Forecasting Using an AI Techniques Ensembler. Energies 2020, 13, 5193.

- De Livera, A.M.; Hyndman, R.J.; Snyder, R.D. Forecasting Time Series with Complex Seasonal Patterns Using Exponential Smoothing. J. Am. Stat. Assoc. 2011, 106, 1513–1527.

- Soares, L.J.; Medeiros, M.C. Modeling and Forecasting Short-Term Electricity Load: A Comparison of Methods with an Application to Brazilian Data. Int. J. Forecast. 2008, 24, 630–644.

- Sulandari, W.; Subanar, S.; Suhartono, S.; Utami, H.; Lee, M.H.; Rodrigues, P.C. SSA-Based Hybrid Forecasting Models and Applications. Bull. Electr. Eng. Inform. 2020, 9, 2178–2188.

- Bernardi, M.; Petrella, L. Multiple Seasonal Cycles Forecasting Model: The Italian Electricity Demand. Stat. Methods Appl. 2015, 24, 671–695.

- López, M.; Valero, S.; Sans, C.; Senabre, C. Use of Available Daylight to Improve Short-Term Load Forecasting Accuracy. Energies 2021, 14, 95.

- Hong, T.; Wang, P.; White, L. Weather Station Selection for Electric Load Forecasting. Int. J. Forecast. 2015, 31, 286–295.

- Luo, J.; Hong, T.; Fang, S.-C. Benchmarking Robustness of Load Forecasting Models under Data Integrity Attacks. Int. J. Forecast. 2018, 34, 89–104.

- Sadaei, H.J.; de Lima e Silva, P.C.; Guimarães, F.G.; Lee, M.H. Short-Term Load Forecasting by Using a Combined Method of Convolutional Neural Networks and Fuzzy Time Series. Energy 2019, 175, 365–377.

- Cabrera, N.G.; Gutiérrez-Alcaraz, G.; Gil, E. Load Forecasting Assessment Using SARIMA Model and Fuzzy Inductive Reasoning. In Proceedings of the 2013 IEEE International Conference on Industrial Engineering and Engineering Management, Bangkok, Thailand, 10–13 December 2013; pp. 561–565.

- Chikobvu, D.; Sigauke, C. Regression-SARIMA Modelling of Daily Peak Electricity Demand in South Africa. J. Energy South. Afr. 2012, 23, 23–30.

- Taylor, J.W. Short-Term Electricity Demand Forecasting Using Double Seasonal Exponential Smoothing. J. Oper. Res. Soc. 2003, 54, 799–805.

- Taylor, J.W. Triple Seasonal Methods for Short-Term Electricity Demand Forecasting. Eur. J. Oper. Res. 2010, 204, 139–152.

- Arora, S.; Taylor, J.W. Short-Term Forecasting of Anomalous Load Using Rule-Based Triple Seasonal Methods. IEEE Trans. Power Syst. 2013, 28, 3235–3242.

- Bakirtzis, A.G.; Petridis, V.; Kiartzis, S.J.; Alexiadis, M.C.; Maissis, A.H. A Neural Network Short Term Load Forecasting Model for the Greek Power System. IEEE Trans. Power Syst. 1996, 11, 858–863.

- Charytoniuk, W.; Chen, M.-S. Very Short-Term Load Forecasting Using Artificial Neural Networks. IEEE Trans. Power Syst. 2000, 15, 263–268.

- Mordjaoui, M.; Haddad, S.; Medoued, A.; Laouafi, A. Electric Load Forecasting by Using Dynamic Neural Network. Int. J. Hydrogen Energy 2017, 42, 17655–17663.

- Leite Coelho da Silva, F.; da Costa, K.; Canas Rodrigues, P.; Salas, R.; López-Gonzales, J.L. Statistical and Artificial Neural Networks Models for Electricity Consumption Forecasting in the Brazilian Industrial Sector. Energies 2022, 15, 588.

- Sulandari, W.; Subanar, S.; Suhartono, S.; Utami, H. Forecasting Time Series with Trend and Seasonal Patterns Based on SSA. In Proceedings of the 2017 3rd International Conference on Science in Information Technology (ICSITech) Theory and Application of IT for Education, Industry and Society in Big Data Era, Bandung, Indonesia, 25–26 October 2017; pp. 694–699.

- Zhang, X.; Wang, J.; Zhang, K. Short-Term Electric Load Forecasting Based on Singular Spectrum Analysis and Support Vector Machine Optimized by Cuckoo Search Algorithm. Electr. Power Syst. Res. 2017, 146, 270–285.

- Sulandari, W.; Subanar, S.; Lee, M.H.; Rodrigues, P.C. Time Series Forecasting Using Singular Spectrum Analysis, Fuzzy Systems and Neural Networks. MethodsX 2020, 7, 101015.

- Tao, D.; Xiuli, W.; Xifan, W. A Combined Model of Wavelet and Neural Network for Short Term Load Forecasting. In Proceedings of the International Conference on Power System Technology, Kunming, China, 13–17 October 2002; Volume 4, pp. 2331–2335.

- Moazzami, M.; Khodabakhshian, A.; Hooshmand, R. A New Hybrid Day-Ahead Peak Load Forecasting Method for Iran’s National Grid. Appl. Energy 2013, 101, 489–501.

- Pandian, S.C.; Duraiswamy, K.; Rajan, C.C.A.; Kanagaraj, N. Fuzzy Approach for Short Term Load Forecasting. Electr. Power Syst. Res. 2006, 76, 541–548.

- Al-Kandari, A.M.; Soliman, S.A.; El-Hawary, M.E. Fuzzy Short-Term Electric Load Forecasting. Int. J. Electr. Power Energy Syst. 2004, 26, 111–122.

- Chen, Y.; Yang, Y.; Liu, C.; Li, C.; Li, L. A Hybrid Application Algorithm Based on the Support Vector Machine and Artificial Intelligence: An Example of Electric Load Forecasting. Appl. Math. Model. 2015, 39, 2617–2632.

- Li, Y.; Fang, T.; Yu, E. Study of Support Vector Machines for Short-Term Load Forecasting. Proc. CSEE 2003, 23, 55–59.

- Breiman, L. Bagging Predictors. Mach. Learn. 1996, 24, 123–140.

- Bergmeir, C.; Hyndman, R.J.; Benítez, J.M. Bagging Exponential Smoothing Methods Using STL Decomposition and Box–Cox Transformation. Int. J. Forecast. 2016, 32, 303–312.

- Petropoulos, F.; Hyndman, R.J.; Bergmeir, C. Exploring the Sources of Uncertainty: Why Does Bagging for Time Series Forecasting Work? Eur. J. Oper. Res. 2018, 268, 545–554.

- Laurinec, P.; Lóderer, M.; Lucká, M.; Rozinajová, V. Density-Based Unsupervised Ensemble Learning Methods for Time Series Forecasting of Aggregated or Clustered Electricity Consumption. J. Intell. Inf. Syst. 2019, 53, 219–239.

- Cleveland, R.B.; Cleveland, W.S.; Terpenning, I. STL: A Seasonal-Trend Decomposition Procedure Based on Loess. J. Off. Stat. 1990, 6, 3.

- Box, G.E.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control; John Wiley & Sons: Hoboken, NJ, USA, 2015.

- Wei, W.W.-S. Time Series Analysis: Univariate and Multivariate Methods, 2nd ed.; Pearson Addison-Wesley: Boston, MA, USA, 2006.

- Huang, S.-J.; Shih, K.-R. Short-Term Load Forecasting via ARMA Model Identification Including Non-Gaussian Process Considerations. IEEE Trans. Power Syst. 2003, 18, 673–679.

- Hyndman, R.J.; Khandakar, Y. Automatic Time Series Forecasting: The Forecast Package for R. J. Stat. Softw. 2008, 27, 1–23.

- Mohamed, N.; Ahmad, M.H.; Ismail, Z. Improving Short Term Load Forecasting Using Double Seasonal Arima Model. World Appl. Sci. J. 2011, 15, 223–231.

- Hyndman, R.J.; Athanasopoulos, G.; Bergmeir, C.; Caceres, G.; Chhay, L.; O’Hara-Wild, M.; Petropoulos, F.; Razbash, S.; Wang, E.; Yasmeen, F. Forecast: Forecasting Functions for Time Series and Linear Models. R Package Version 8.15. 2021. Available online: https://pkg.robjhyndman.com/forecast/ (accessed on 18 December 2021).

- Hyndman, R.J.; Athanasopoulos, G. Forecasting: Principles and Practice, 2nd ed.; OTexts: Melbourne, Australia, 2018.

- Zhang, G.; Patuwo, B.E.; Hu, M.Y. Forecasting with Artificial Neural Networks: The State of the Art. Int. J. Forecast. 1998, 14, 35–62.

- Sulandari, W.; Suhartono; Subanar; Rodrigues, P.C. Exponential Smoothing on Modeling and Forecasting Multiple Seasonal Time Series: An Overview. Fluct. Noise Lett. 2021, 20, 2130003.

- Sulandari, W.; Subanar, S.; Suhartono, S.; Utami, H. Forecasting Electricity Load Demand Using Hybrid Exponential Smoothing-Artificial Neural Network Model. Int. J. Adv. Intell. Inform. 2016, 2, 131–139.

- Hyndman, R.; Koehler, A.B.; Ord, J.K.; Snyder, R.D. Forecasting with Exponential Smoothing: The State Space Approach; Springer Science & Business Media: Berlin, Germany, 2008.

- Golyandina, N.; Osipov, E. The “Caterpillar”-SSA Method for Analysis of Time Series with Missing Values. J. Stat. Plan. Inference 2007, 137, 2642–2653.

- Golyandina, N.; Korobeynikov, A. Basic Singular Spectrum Analysis and Forecasting with R. Comput. Stat. Data Anal. 2014, 71, 934–954.

- Golyandina, N.; Zhigljavsky, A. Singular Spectrum Analysis for Time Series, 2nd ed.; Springer Briefs in Statistics; Springer: Berlin/Heidelberg, Germany, 2020.

- Golyandina, N.; Korobeynikov, A.; Zhigljavsky, A. Singular Spectrum Analysis with R; Springer: Berlin, Germany, 2018.

- PetoLau/petolau.github.io. GitHub. Available online: https://github.com/PetoLau/petolau.github.io (accessed on 19 April 2021).

- Hassani, H. Singular Spectrum Analysis: Methodology and Comparison. J. Data Sci. 2007, 5, 239–257.

- Hyndman, R.J.; Koehler, A.B. Another Look at Measures of Forecast Accuracy. Int. J. Forecast. 2006, 22, 679–688.

- Stone, R.J. Improved Statistical Procedure for the Evaluation of Solar Radiation Estimation Models. Sol. Energy 1993, 51, 289–291.

- Guimaraes, F.; Javedani Sadaei, H. Data for: Short-Term Load Forecasting by Using a Combined Method of Convolutional Neural Networks and Fuzzy Time Series. Mendeley Data 2019, Version 1. Available online: https://data.mendeley.com/datasets/f4fcrh4tn9/1 (accessed on 5 March 2021).

- Sulandari, W.; Subanar; Lee, M.H.; Rodrigues, P.C. Indonesian Electricity Load Forecasting Using Singular Spectrum Analysis, Fuzzy Systems and Neural Networks. Energy 2020, 190, 116408.

- Montero-Manso, P.; Athanasopoulos, G.; Hyndman, R.J.; Talagala, T.S. FFORMA: Feature-Based Forecast Model Averaging. Int. J. Forecast. 2020, 36, 86–92.

- Smyl, S. A Hybrid Method of Exponential Smoothing and Recurrent Neural Networks for Time Series Forecasting. Int. J. Forecast. 2020, 36, 75–85.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).