Reducing WCET Overestimations in Multi-Thread Loops with Critical Section Usage

Abstract

1. Introduction

2. Related Research

3. WCET Estimation in Parallel Threads Executing Loop Actions with Critical Section Usage

3.1. Theoretical Background for WCET Calculation in Parallel Threads Executing Loop Actions with Critical Sections

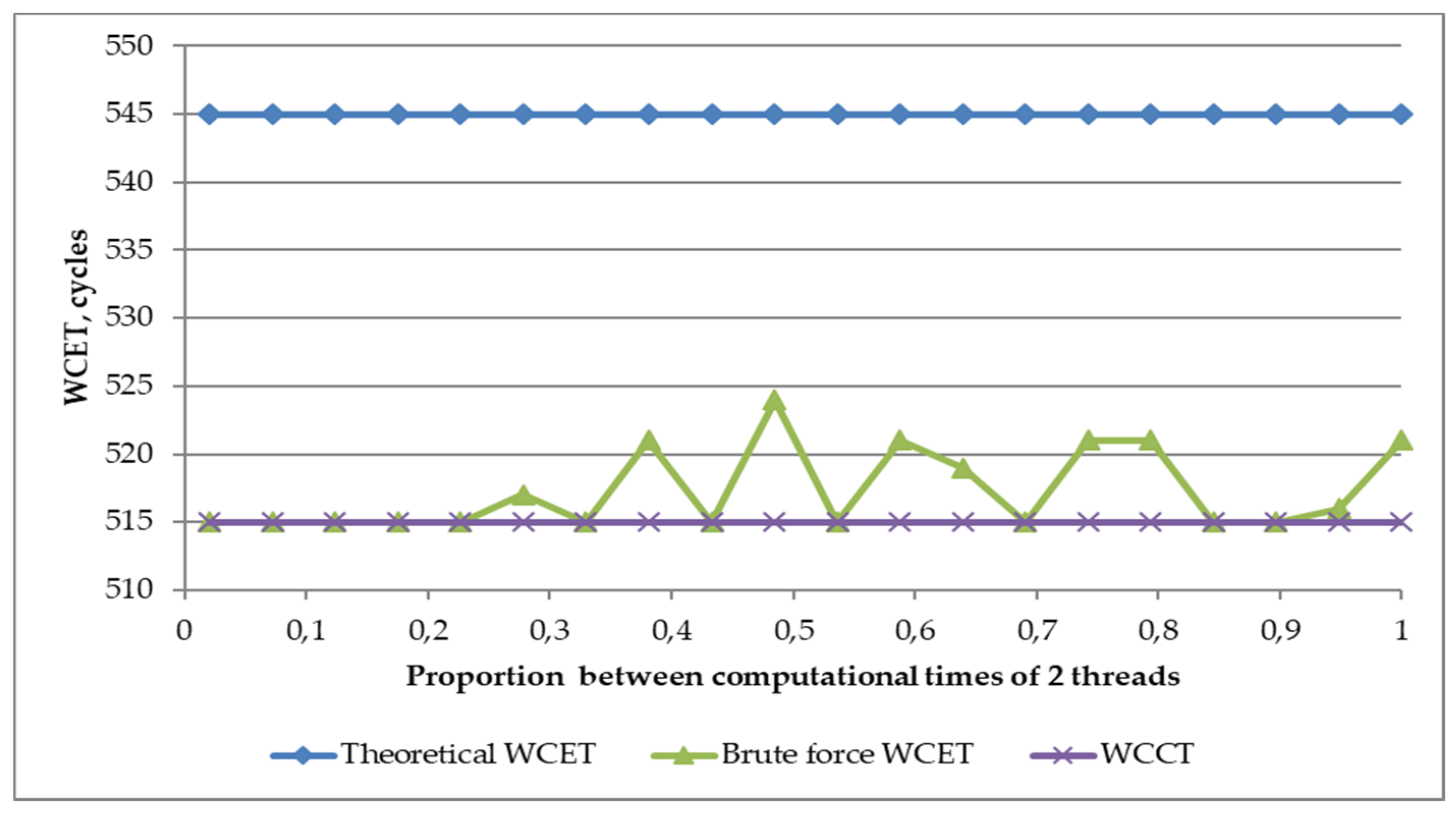

3.2. Experiments for WCET Overestimation Estimation

3.3. Proposed Model for WCET Overestimation Reduction

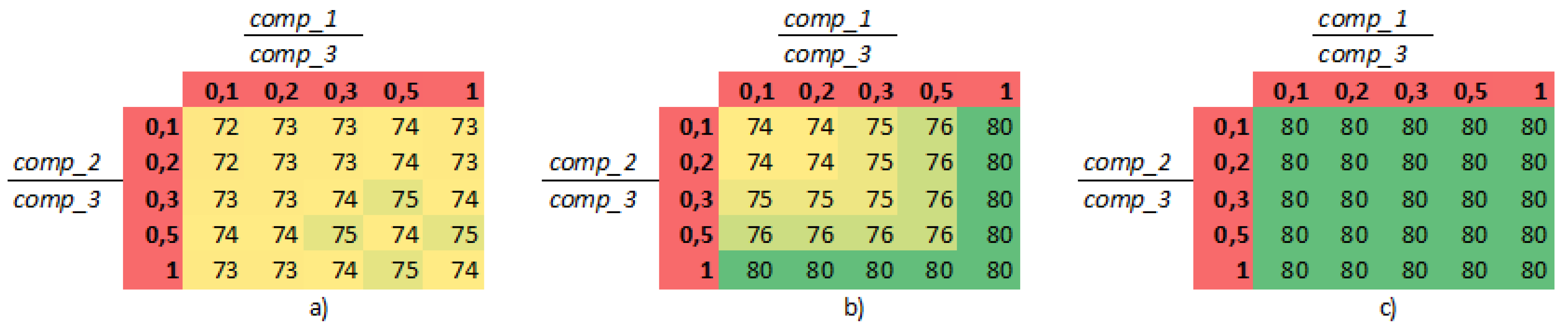

4. Validation of the Proposed Model

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. El Sayed, M.A.; El Sayed, M.S.; Aly, R.F.; Habashy, S.M. Energy-Efficient Task Partitioning for Real-Time Scheduling on Multi-Core Platforms. Computers 2021, 10, 10.

2. Reder, S.; Masing, L.; Bucher, H.; ter Braak, T.; Stripf, T.; Becker, J. A WCET-Aware Parallel Programming Model for Predictability Enhanced Multi-core Architectures. In Proceedings of the 2018 Design, Automation & Test in Europe Conference & Exhibition, Dresden, Germany, 19–23 March 2018.

3. Meng, F.; Su, X. Reducing WCET Overestimations by Correcting Errors in Loop Bound Constraints. Energies 2017, 10, 2113.

4. Shiraz, M.; Gani, A.; Shamim, A.; Khan, S.; Ahmad, R.W. Energy Efficient Computational Offloading Framework for Mobile Cloud Computing. J. Grid Comput. 2015, 13, 1–18.

5. Shaheen, Q.; Shiraz, M.; Khan, S.; Majeed, R.; Guizani, M.; Khan, N.; Aseere, A.M. Towards Energy Saving in Computational Clouds: Taxonomy, Review, and Open Challenges. IEEE Access 2019, 6, 29407–29418.

6. Khan, M.K.; Shiraz, M.; Ghafoor, K.Z.; Khan, S.; Sadiq, A.S.; Ahmed, G. EE-MRP: Energy-efficient multistage routing protocol for wireless sensor networks. Wirel. Commun. Mob. Comput. 2018, 2018, 1–13.

7. Wägemann, P.; Dietrich, C.; Distler, T.; Ulbrich, P.; Schröder-Preikschat, W. Whole-system worst-case energy-consumption analysis for energy-constrained real-time systems. In Proceedings of the 30th Euromicro Conference on Real-Time Systems (ECRTS 2018), Barcelona, Spain, 3–6 July 2018.

8. Wang, Z.; Gu, Z.; Shao, Z. WCET-aware energy-efficient data allocation on scratchpad memory for real-time embedded systems. IEEE Trans. 2014, 23, 2700–2704.

9. Zaparanuks, D. Accuracy of performance counter measurements. In Performance Analysis of Systems and Software; IEEE: New York, NY, USA, 2009.

10. Zoubek, C.; Trommler, P. Overview of worst case execution time analysis in single- and multicore environments. In Proceedings of the ARCS 2017—30th International Conference on Architecture of Computing Systems, Vienna, Austria, 3–6 April 2017.

11. Lokuciejewski, P.; Marwedel, P. Worst-Case Execution Time Aware Compilation Techniques for Real-Time Systems—Summary and Future Work; Springer: Berlin/Heidelberg, Germany, 2011; pp. 229–234.

12. Mushtaq, H.; Al-Ars, Z.; Bertels, K. Accurate and efficient identification of worst-case execution time for multi-core processors: A survey. In Proceedings of the 8th IEEE Design and Test Symposium, Marrakesh, Morocco, 16–18 December 2013.

13. Bygde, S.; Ermedahl, A.; Lisper, B. An efficient algorithm for parametric WCET calculation. J. Syst. Archit. 2011, 57, 614–624.

14. Cassé, H.; Ozaktas, H.; Rochange, C. A Framework to Quantify the Overestimations of Static WCET Analysis. In 15th International Workshop on Worst-Case Execution Time Analysis; Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik: Dagstuhl, Germany, 2015.

15. Gustavsson, A.; Gustafsson, J.; Lisper, B. Toward static timing analysis of parallel software. In 12th International Workshop on Worst-Case Execution Time Analysis; Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik: Dagstuhl, Germany, 2012; Volume 23, pp. 38–47.

16. Rochange, C.; Bonenfant, A.; Sainrat, P.; Gerdes, M.; Wolf, J.; Ungerer, T.; Petrov, Z.; Mikulu, F. WCET analysis of a parallel 3D multigrid solver executed on the MERASA multi-core. In International Workshop on Worst-Case Execution Time Analysis; Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik: Dagstuhl, Germany, 2010; Volume 15.

17. Gerdes, M.; Wolf, J.; Guliashvili, I.; Ungerer, T.; Houston, M.; Bernat, G.; Schnitzler, S.; Regler, H. Large drilling machine control code—parallelization and WCET speedup. In Proceedings of the 2011 6th IEEE International Symposium on Industrial Embedded Systems (SIES), Västerås, Sweden, 15–17 June 2011.

18. Gustavsson, A.; Ermedahl, A.; Lisper, B.; Pettersson, P. Towards WCET analysis of multi core architectures using UPPAAL. In 10th International Workshop on Worst-Case Execution Time Analysis; Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik: Dagstuhl, Germany, 2010.

19. Pellizzoni, R.; Betti, E.; Bak, S.; Yao, G.; Criswell, J.; Caccamo, M.; Kegley, R. A predictable execution model for COTS-based embedded systems. In Proceedings of the 2011 17th IEEE Real-Time and Embedded Technology and Applications Symposium, Chicago, IL, USA, 11–14 April 2011; pp. 269–279.

20. Sun, J.; Guan, J.; Wang, W.; He, Q.; Yi, W. Real-Time Scheduling and Analysis of OpenMP Task Systems with Tied Tasks. In Proceedings of the 38th IEEE Real-Time Systems Symposium, Paris, France, 5–8 December 2017.

21. Segarra, J.; Cortadella, J.; Tejero, R.G.; Vinals-Yufera, V. Automatic Safe Data Reuse Detection for the WCET Analysis of Systems With Data Caches. IEEE Access 2020, 8, 192379–192392.

22. Casini, D.; Biondi, A.; Buttazzo, G. Analyzing Parallel Real-Time Tasks Implemented with Thread Pools. In Proceedings of the 56th ACM/ESDA/IEEE Design Automation Conference (DAC 2019), Las Vegas, NV, USA, 2–6 June 2019.

23. Ozaktas, H.; Rochange, C.; Sainrat, P. Automatic WCET Analysis of Real-Time Parallel. In 13th International Workshop on Worst-Case Execution Time Analysis; Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik: Dagstuhl, Germany, 2013.

24. Alhammad, A.; Pellizzoni, R. Time-predictable execution of multithreaded applications on multicore systems. In Proceedings of the 2014 Design, Automation and Test in Europe Conference and Exhibition (DATE), Dresden, Germany, 24–28 March 2014; pp. 1–6.

25. Schlatow, J.; Ernst, R. Response-Time Analysis for Task Chains in Communicating Threads. In Proceedings of the 2016 IEEE Real-Time and Embedded Technology and Applications Symposium (RTAS), Vienna, Austria, 11–14 April 2016.

26. Rouxel, B.; Derrien, S.; Puaut, I. Tightening Contention Delays While Scheduling Parallel Applications on Multi-core Architectures. ACM Trans. Embed. Comput. Syst. 2017, 16, 1–20.

27. Zhang, Q.; Huangfu, Y.; Zhang, W. Statistical regression models for WCET estimation. Qual. Technol. Quant. Manag. 2019, 16, 318–332.

28. Rouxel, B.; Skalistis, S.; Derrien, S.; Puaut, I. Hiding Communication Delays in Contention-Free Execution for SPM-Based Multi-Core Architectures. In Proceedings of the 31st Euromicro Conference on Real-Time Systems (ECRTS’19), Stuttgart, Germany, 9–12 July 2019.

| Authors | Research Background | Research Factors | Research Results or Proposal |
|---|---|---|---|
| Ozaktas et al., 2013 [23] | Parallel applications composed of synchronizing threads | Two different parallel implementations of a kernel (from 2 up to 64 threads) | The proposed method obtains higher speed-ups than the iterative method. Stall times are significant, contributing 4% to 8% of the WCET. |
| Alhammad and Pellizzoni, 2014 [24] | Scheduling of memory accesses performed by application threads | Model for synchronization among parallel threads | The proposed execution scheme yields an aggregated improvement of 21% in contention execution compared with application threads that access main memory without control. |
| Schlatow and Ernst, 2016 [25] | Timing analysis of communicating threads | Worst-case response time | Chaining tasks with arbitrary priorities causes priority inversion, which leads to deferred load that challenges the busy-window mechanism. |
| Rouxel et al., 2017 [26] | Mapping and scheduling strategies | Contention delays | The proposed mapping and scheduling scenario improves the schedule makespan by 19% on average. |
| Meng and Su, 2017 [3] | Overestimation of the worst-case execution time | WCET overestimation caused by non-orthogonal nested loops | The proposed approach reduces this specific WCET overestimation by more than 82% on average, and 100% of the corrected WCET values are no less than the actual WCET. |
| Casini et al., 2019 [22] | Scheduling strategy | Schedulability ratio (with thread pools and with blocking synchronization) | Experimental results show that schedulability decreases as the number of tasks grows (up to 16 threads). |
| Zhang et al., 2019 [27] | Dependency of execution time on ‘Load’ and ‘Miss Rate’ | Safe and accurate load and cache miss rate | The miss rate is much more sensitive to extreme execution time values than the load. |
| Rouxel et al., 2019 [28] | Scheduling technique | Communication latency | The proposed approach improves the schedule makespan by 4% on average for streaming applications (8% on synthetic task graphs). |
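Meng and Su [3] address WCET overestimation caused by non-orthogonal nested loops, where the inner loop bound depends on the outer loop index. The sketch below is not their correction method. It is a minimal Python illustration, assuming a hypothetical triangular loop `for i in range(n): for j in range(i)` and hypothetical helper names (`naive_inner_iterations`, `actual_inner_iterations`, `overestimation_ratio`), of why a rectangular loop-bound constraint (outer trip count times the maximum inner trip count) overestimates the number of inner iterations.

```python
"""Illustrative sketch (not Meng and Su's method): a rectangular loop-bound
constraint overestimates the inner-iteration count of a non-orthogonal
(triangular) nested loop. All names here are hypothetical."""


def naive_inner_iterations(n: int) -> int:
    """Bound used when the analysis only knows the inner loop's maximum trip
    count: outer trip count n times the largest inner trip count (n - 1)."""
    return n * max(n - 1, 0)


def actual_inner_iterations(n: int) -> int:
    """Count the inner iterations of the hypothetical triangular loop
    for i in range(n): for j in range(i): body()."""
    count = 0
    for i in range(n):
        for _ in range(i):  # inner trip count depends on the outer index i
            count += 1
    return count


def overestimation_ratio(n: int) -> float:
    """Naive bound divided by the actual count (defined for n >= 2)."""
    if n < 2:
        raise ValueError("n must be at least 2 for the inner loop to execute")
    return naive_inner_iterations(n) / actual_inner_iterations(n)


if __name__ == "__main__":
    for n in (10, 100):
        naive, actual = naive_inner_iterations(n), actual_inner_iterations(n)
        # The naive bound is safe (never below the actual count) but pessimistic.
        print(n, naive, actual, overestimation_ratio(n))
```

Running the script prints `10 90 45 2.0` and `100 9900 4950 2.0`: for this loop shape the rectangular bound n(n-1) is exactly twice the real count n(n-1)/2, so the resulting WCET estimate stays safe but is twice as pessimistic for the loop body's contribution.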


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ramanauskaite, S.; Slotkiene, A.; Tunaityte, K.; Suzdalev, I.; Stankevicius, A.; Valentinavicius, S. Reducing WCET Overestimations in Multi-Thread Loops with Critical Section Usage. Energies 2021, 14, 1747. https://doi.org/10.3390/en14061747
