A Modified Niching Crow Search Approach to Well Placement Optimization

Abstract

1. Introduction

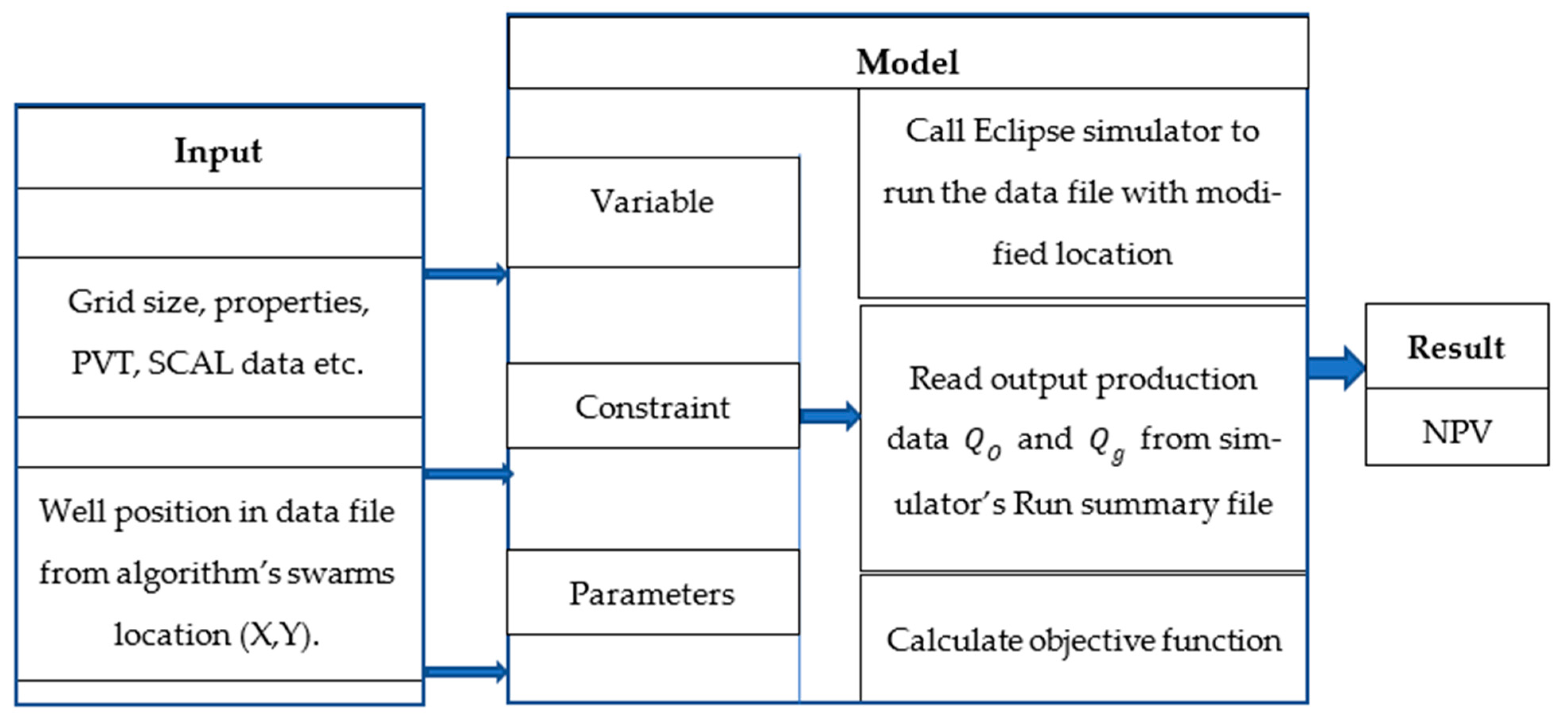

2. Problem Formulation

3. Methodology

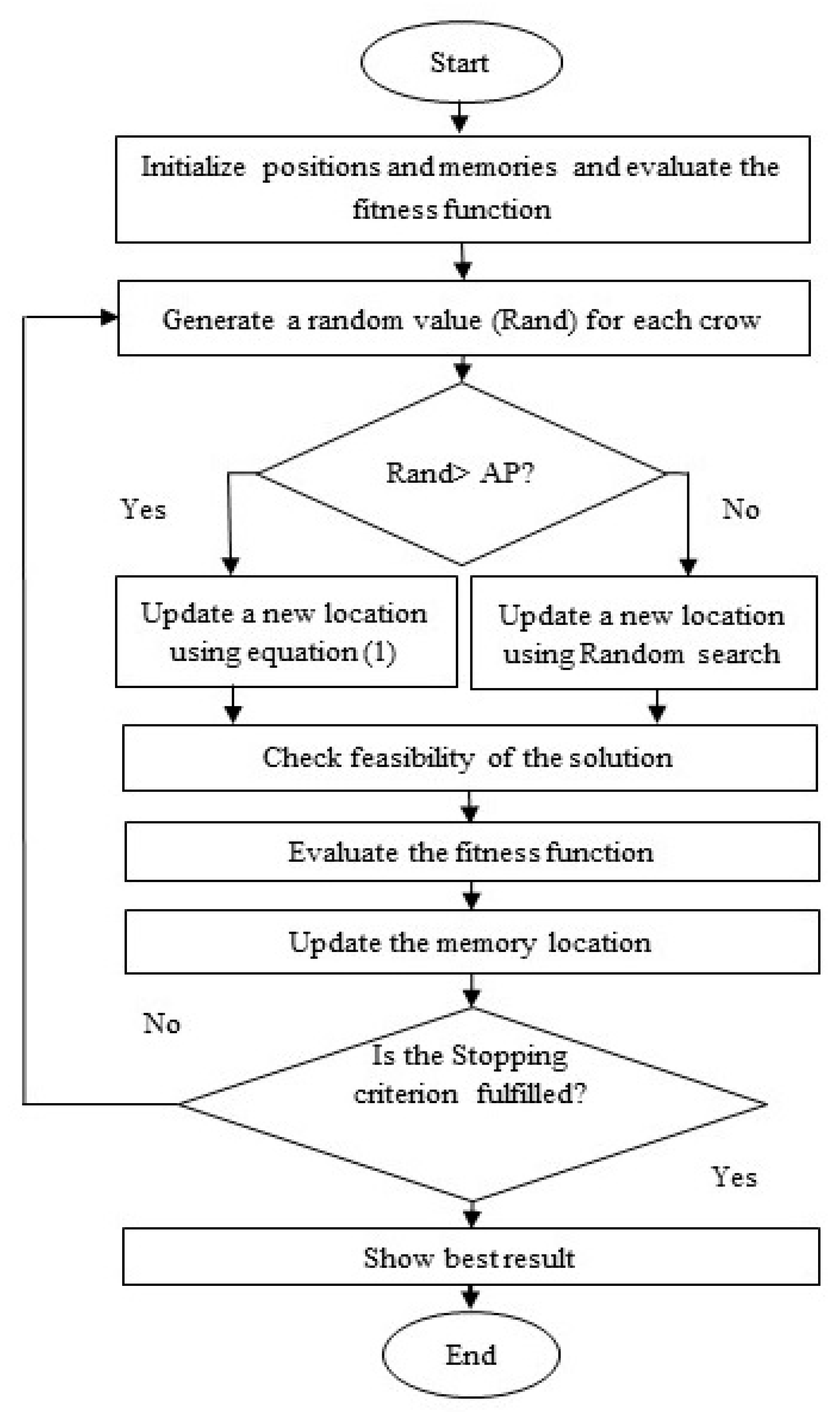

3.1. Crow Search Algorithm (CSA)

- Crows live in flocks;

- Crows remember the locations where they have concealed food;

- Crows follow other crows in order to steal their food;

- Crows protect their caches from being robbed.
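The four principles above are usually turned into a single position-update rule. The following is a minimal sketch of one iteration of a basic crow search for a minimization problem, assuming the commonly published form of the update (follow a randomly chosen crow's memory unless that crow is "aware", in which case move to a random position). The function name `csa_step`, its arguments, and the default values `fl=2.0` and `ap=0.3` are illustrative assumptions, not the authors' implementation.

```python
import random


def csa_step(positions, memory, fitness, bounds, fl=2.0, ap=0.3, rng=random):
    """One iteration of a basic crow search (minimization), as a sketch.

    positions: current position of each crow (list of lists)
    memory:    best position each crow remembers (its hidden food)
    bounds:    (lower, upper) applied to every dimension (an assumption)
    """
    lo, hi = bounds
    n, dim = len(positions), len(positions[0])
    for i in range(n):
        j = rng.randrange(n)  # crow i picks a crow j to follow
        if rng.random() >= ap:
            # Crow j is unaware: crow i moves toward j's remembered cache.
            r = rng.random()
            candidate = [x + r * fl * (m - x)
                         for x, m in zip(positions[i], memory[j])]
        else:
            # Crow j is aware: crow i is fooled into a random position.
            candidate = [rng.uniform(lo, hi) for _ in range(dim)]
        # Accept the move only if it stays inside the search space.
        if all(lo <= c <= hi for c in candidate):
            positions[i] = candidate
            # Update the memory when the new position is better.
            if fitness(candidate) < fitness(memory[i]):
                memory[i] = list(candidate)
    return positions, memory
```

Because each crow's memory is only overwritten by a better position, the best remembered fitness never gets worse from one iteration to the next.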

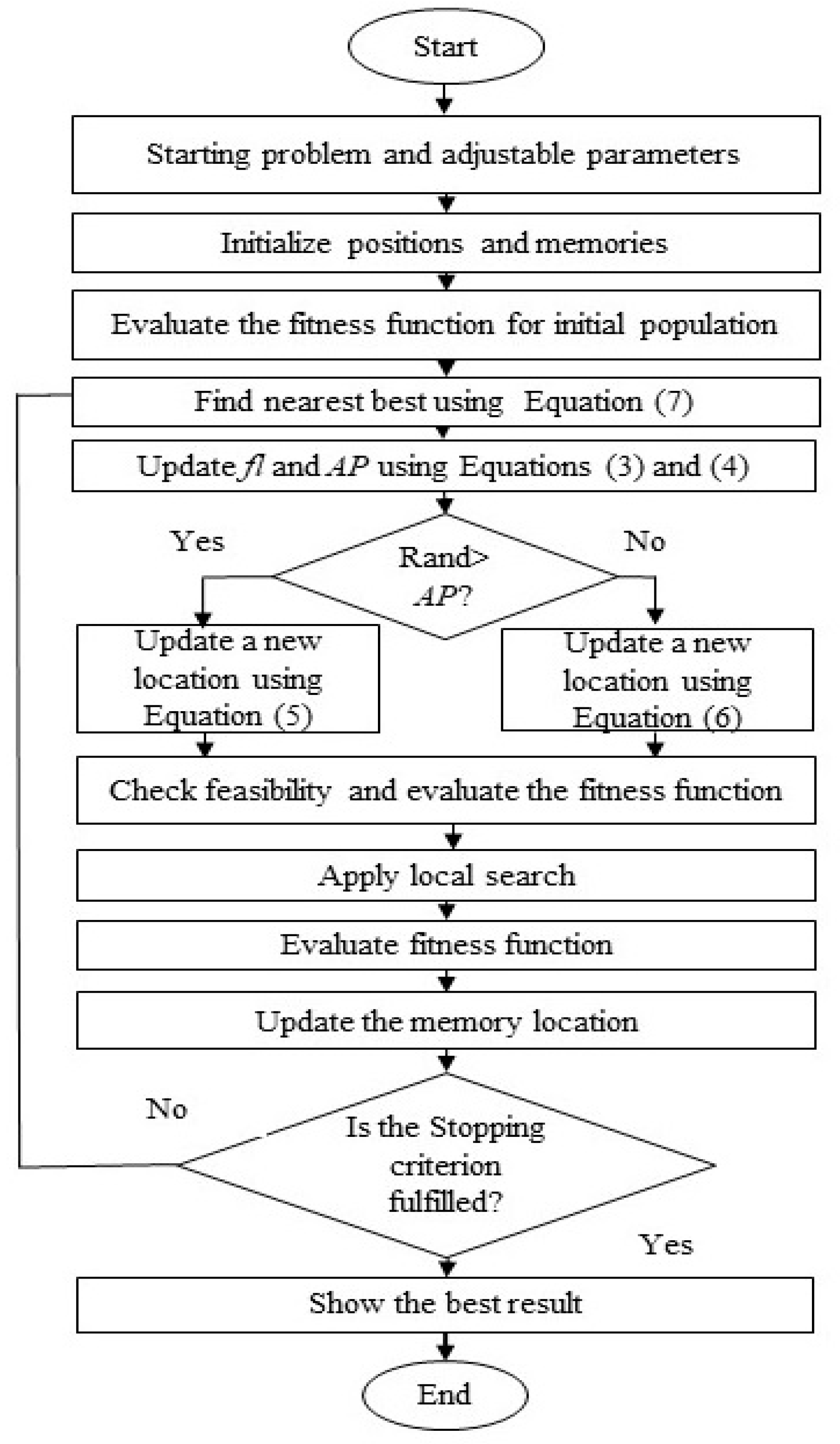

3.2. Niching Crow Search Algorithm (NCSA)

| Algorithm 1 Pseudo Code: Proposed NCSA |

| Begin<br>initialize positions and memory positions randomly, and set crows size (D), swarm size of all crows (k), and maximum number of iterations (itermax);<br>set iter = 0;<br>while iter < itermax do<br>set iter = iter + 1;<br>for j = 1 to swarm size do<br>find the Euclidean distance among the crows’ best positions;<br>calculate the fitness-Euclidean distance ratio using (14);<br>find the nearest best for the j-th crow;<br>end for<br>for i = 1 to swarm size do<br>calculate […] and AP using (10) and (11);<br>if rand > AP<br>calculate […] using (12);<br>else<br>calculate […] using (13);<br>end if<br>evaluate the cost function;<br>update the memory position;<br>do a local search;<br>end for<br>end while |
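The niching step of Algorithm 1 (the first inner loop) can be illustrated in isolation. Equation (14) is not reproduced in this section, so the sketch below assumes the fitness-Euclidean distance ratio commonly used in FER-based niching swarms for a maximization problem: the fitness gain divided by the distance, scaled by the search-space diagonal over the fitness spread. The function name `nearest_best_by_fer` and its arguments are hypothetical.

```python
import math


def nearest_best_by_fer(memory, fit, lower, upper):
    """Return, for each crow j, the index of its 'nearest best' memory.

    Assumed ratio (maximization):
        FER(j, i) = alpha * (fit[i] - fit[j]) / ||memory[j] - memory[i]||
        alpha     = ||upper - lower|| / (max(fit) - min(fit))
    A crow with no fitter neighbour keeps its own index.
    """
    n = len(memory)
    space = math.sqrt(sum((u - l) ** 2 for l, u in zip(lower, upper)))
    spread = max(fit) - min(fit)
    alpha = space / spread if spread > 0 else 1.0
    guides = []
    for j in range(n):
        best_i, best_fer = j, 0.0
        for i in range(n):
            if i == j:
                continue
            dist = math.dist(memory[j], memory[i])
            if dist == 0:
                continue  # identical positions carry no direction
            fer = alpha * (fit[i] - fit[j]) / dist
            if fer > best_fer:
                best_i, best_fer = i, fer
        guides.append(best_i)
    return guides
```

With memories at `[0, 0]`, `[1, 0]`, `[10, 0]` and fitness values `1, 2, 5`, the first crow is guided by its close, slightly fitter neighbour rather than by the distant global best. This is what lets the population split into subgroups.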

| Algorithm 2 Pseudo Code: Local Search |

| for i = 1 to swarm size<br>find the nearest memory location to memory location(i);<br>if fitness value of nearest memory location ≥ fitness value of memory location(i)<br>Local = memory location(i) + 0.5 rand(1,D) .* (nearest memory location − memory location(i))<br>else<br>Local = memory location(i) + rand(1,D) .* (memory location(i) − nearest memory location)<br>end if<br>evaluate the fitness value for Local;<br>if fitness value of Local > fitness value of memory location(i)<br>memory location(i) = Local<br>end if<br>end for |
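Algorithm 2 translates almost line by line into code. The sketch below follows the pseudocode as written for a maximization problem; the function name `local_search`, the use of plain Python lists, and the absence of bound handling are assumptions made for illustration.

```python
import math
import random


def local_search(memory, fitness, rng=random):
    """Local search over memory locations (maximization), per Algorithm 2."""
    n = len(memory)
    if n < 2:
        return memory  # no neighbour to learn from
    for i in range(n):
        # Nearest other memory location by Euclidean distance.
        k = min((j for j in range(n) if j != i),
                key=lambda j: math.dist(memory[i], memory[j]))
        mi, mk = memory[i], memory[k]
        if fitness(mk) >= fitness(mi):
            # Neighbour is at least as good: step toward it.
            local = [a + 0.5 * rng.random() * (b - a) for a, b in zip(mi, mk)]
        else:
            # Neighbour is worse: step away from it.
            local = [a + rng.random() * (a - b) for a, b in zip(mi, mk)]
        # Greedy acceptance: keep the trial point only if it improves.
        if fitness(local) > fitness(mi):
            memory[i] = local
    return memory
```

The greedy acceptance at the end means a memory location can only improve or stay the same, so the local search never degrades the stored solutions.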

3.3. Computational Complexity

4. Results and Discussion

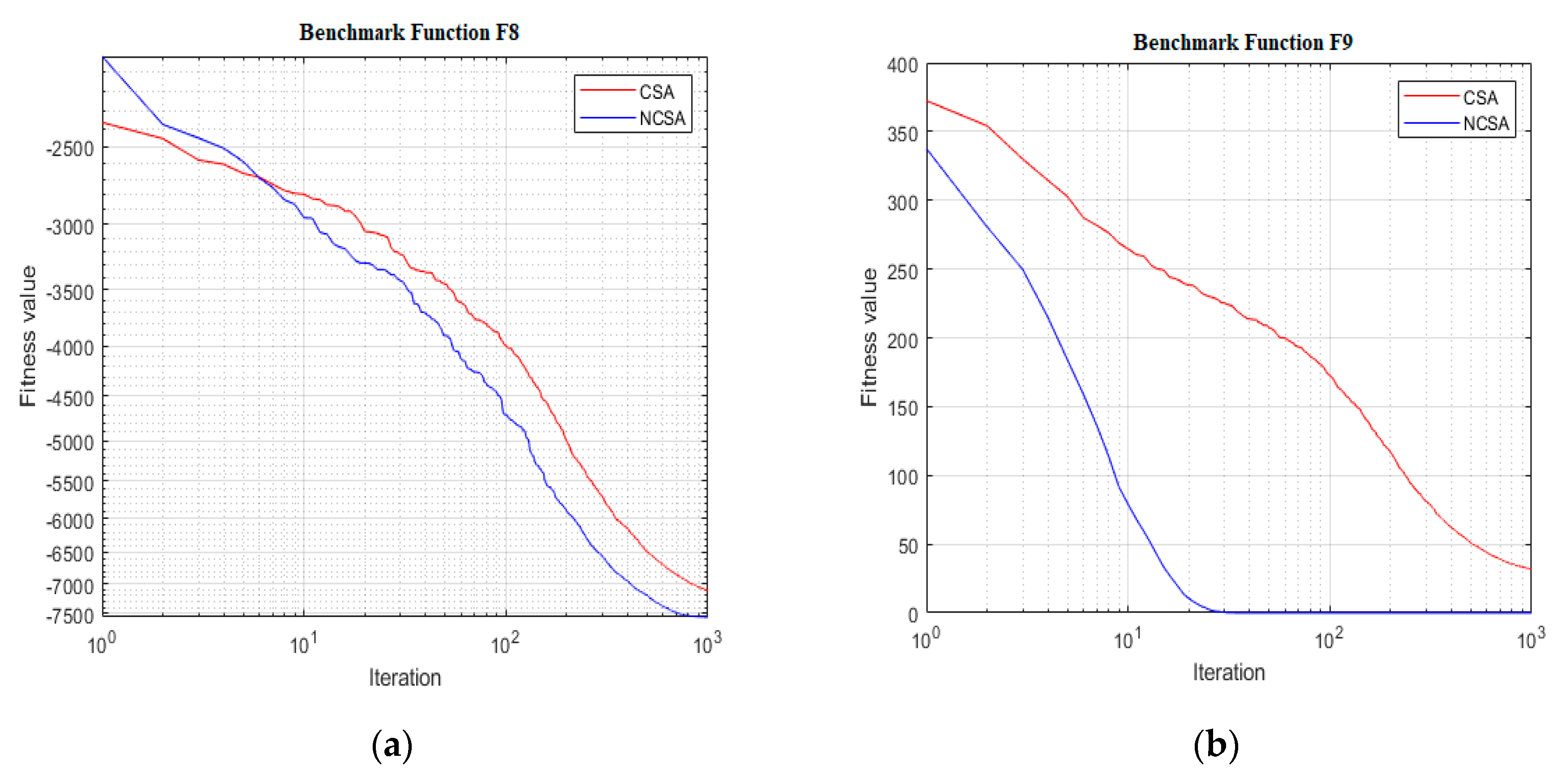

4.1. Benchmark Functions

4.1.1. Exploitation Analysis

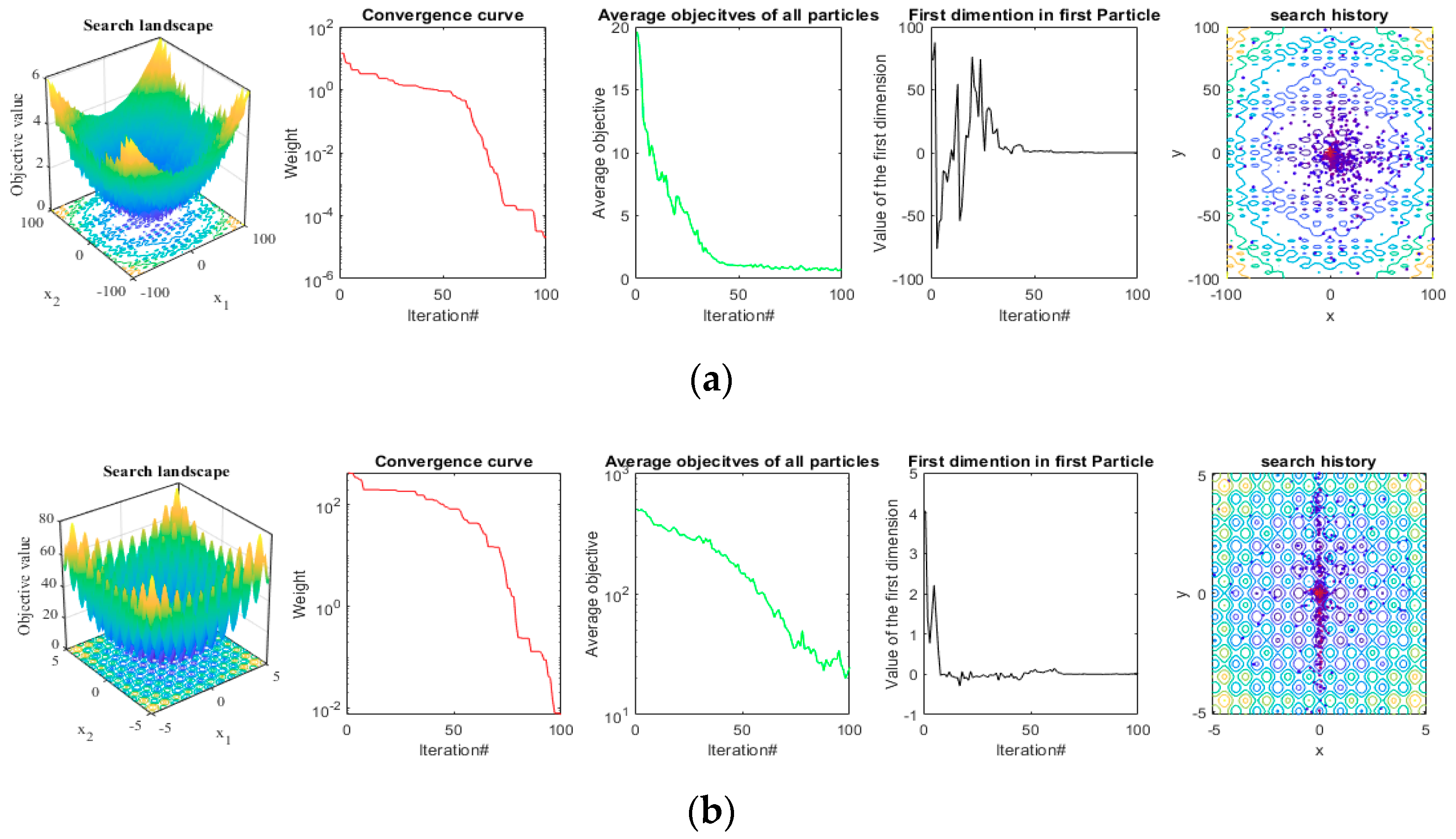

4.1.2. Exploration Analysis

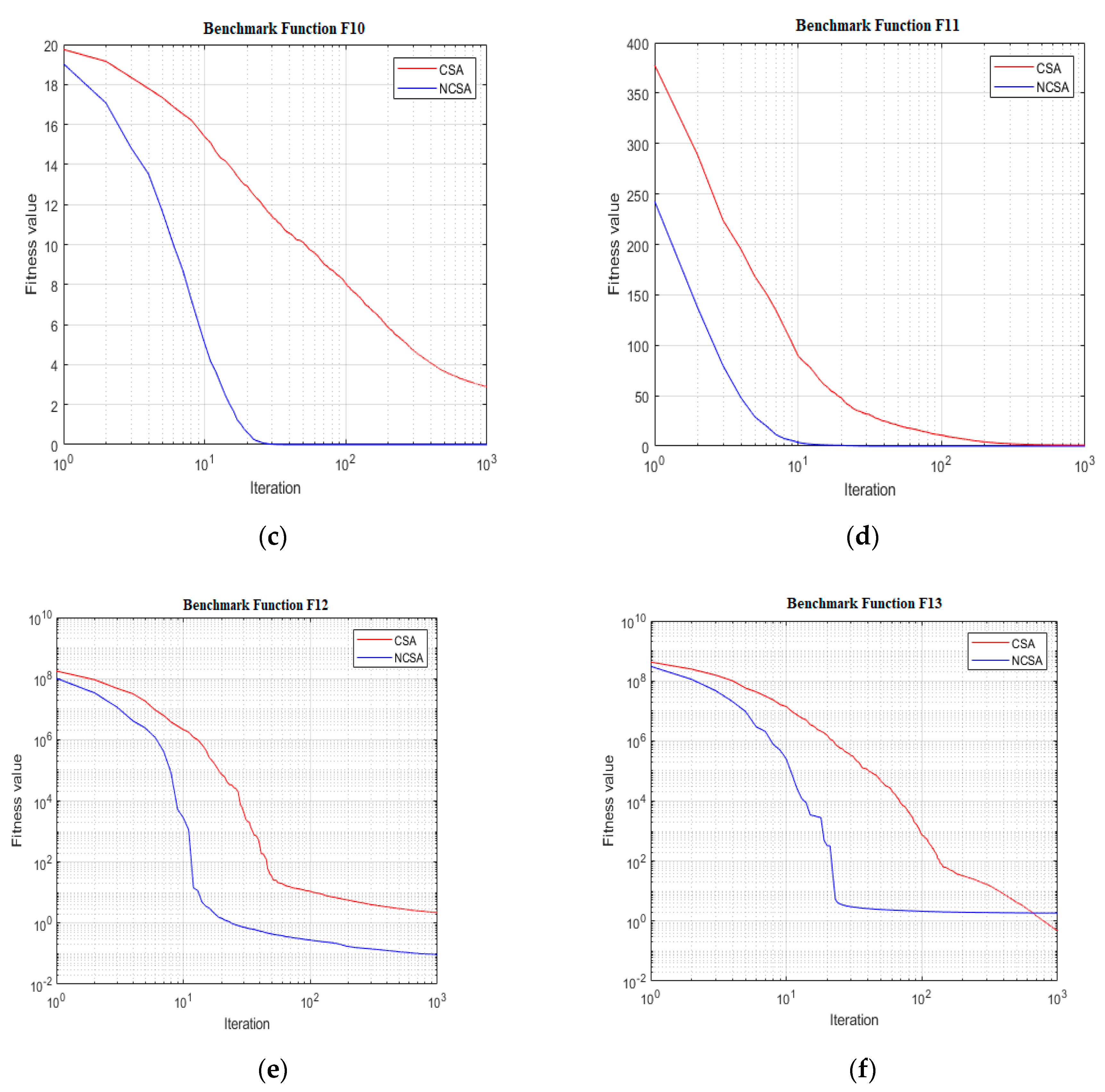

4.1.3. Convergence Analysis

4.2. Well Placement Optimization

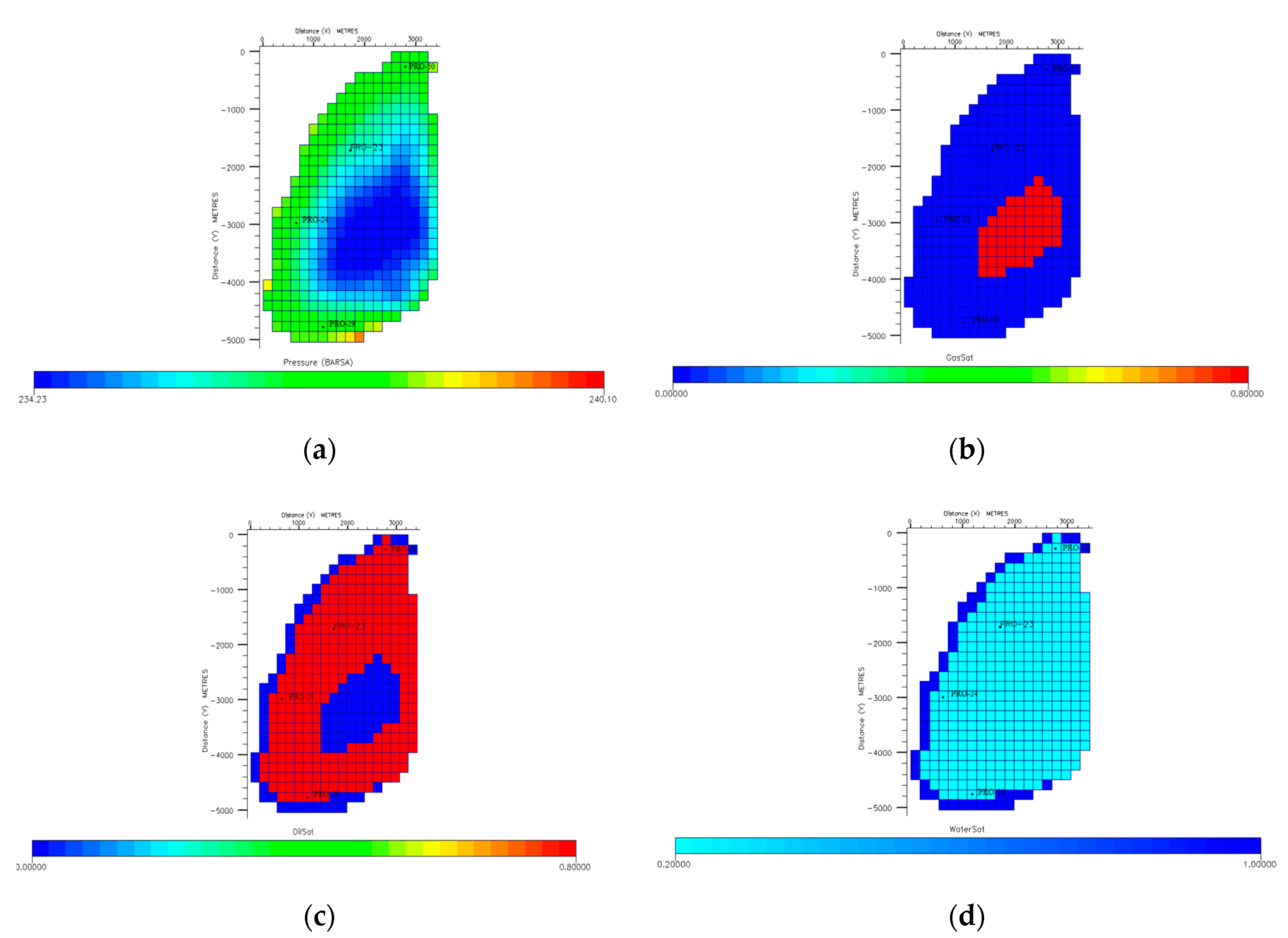

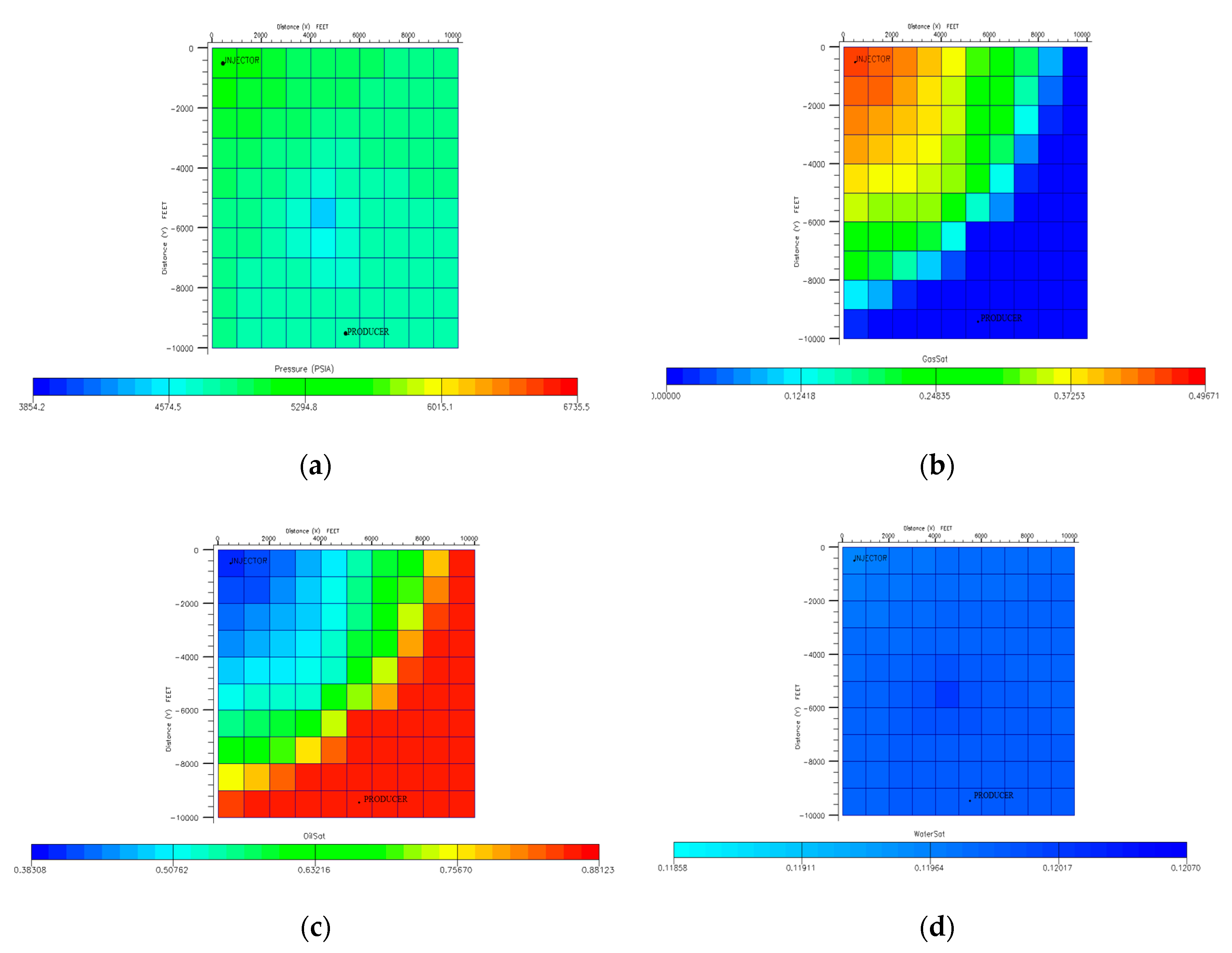

4.2.1. Description of Case Studies

4.2.2. Input Data

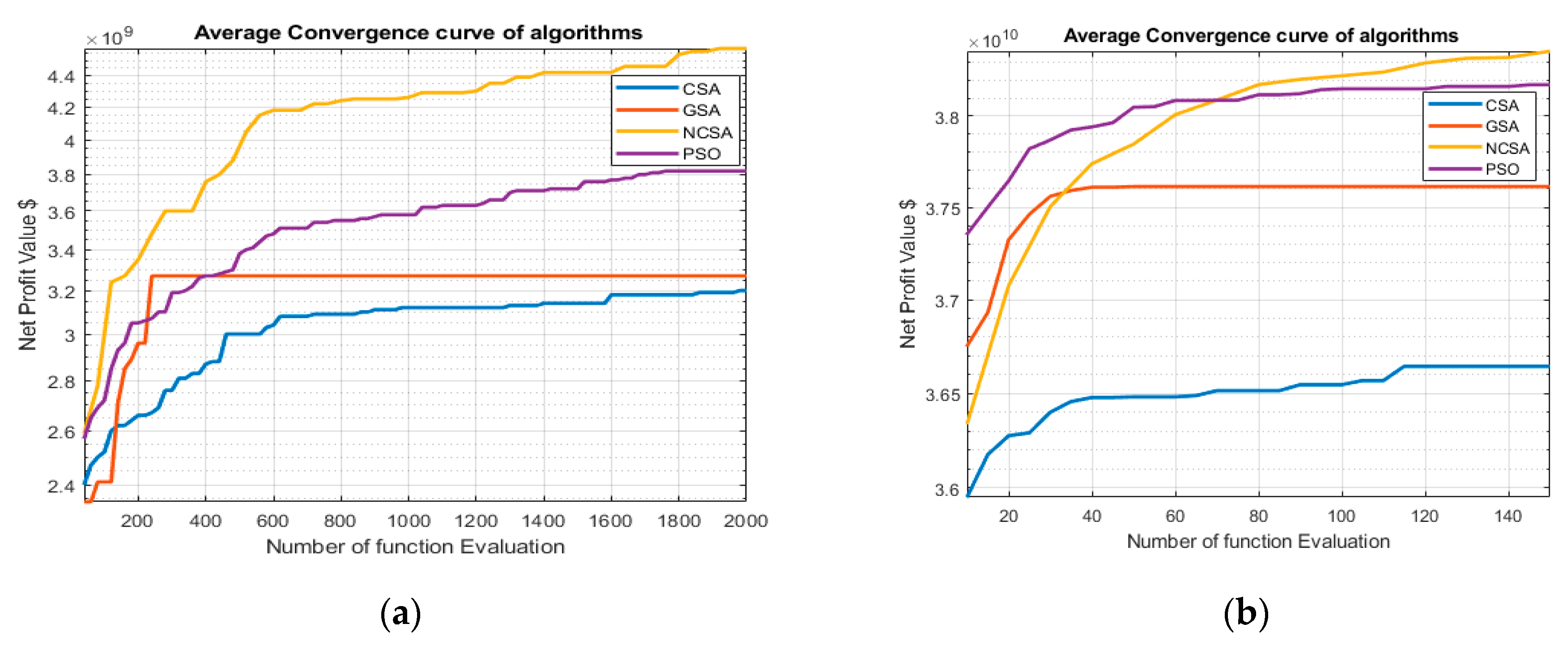

4.2.3. Convergence Analysis

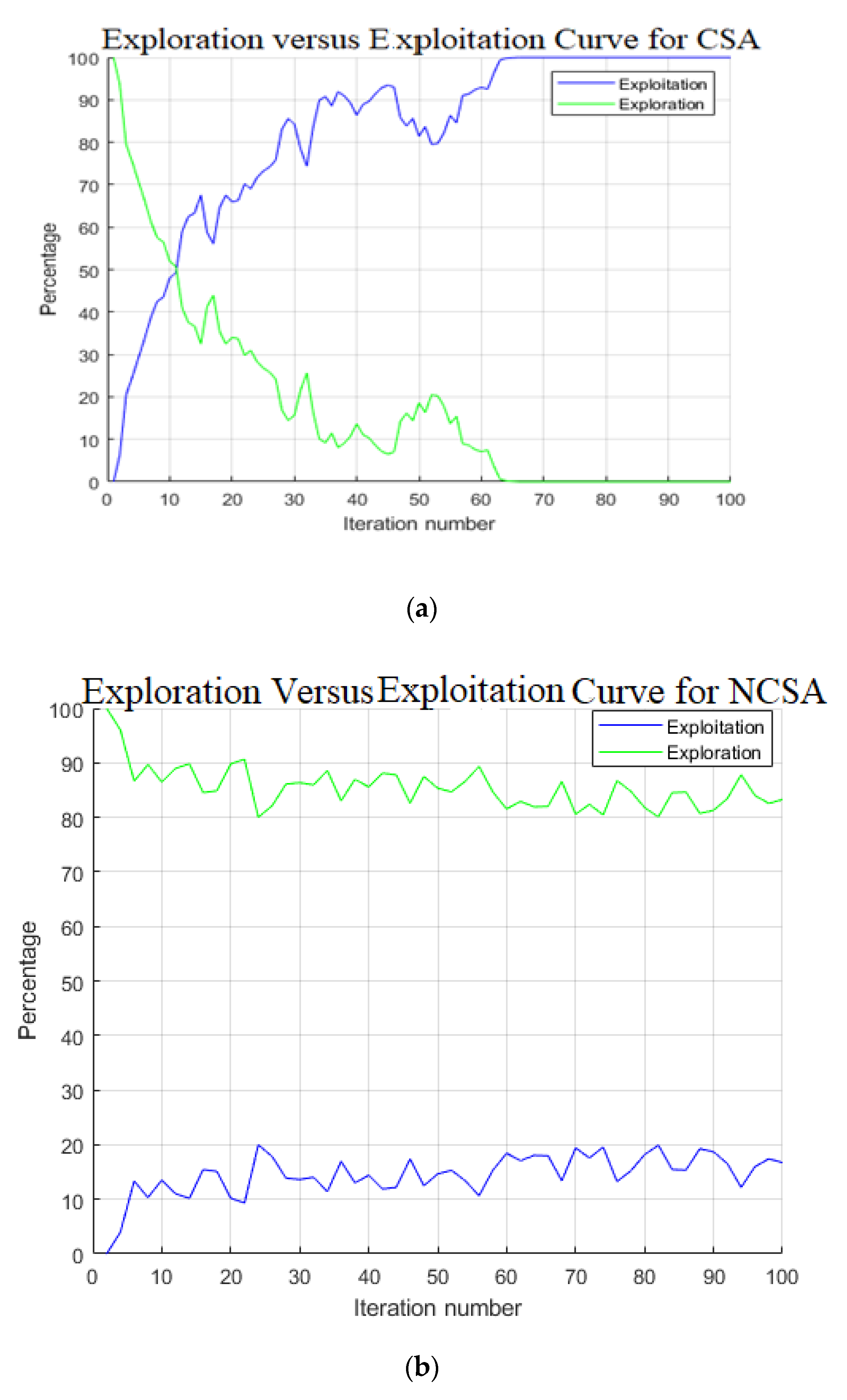

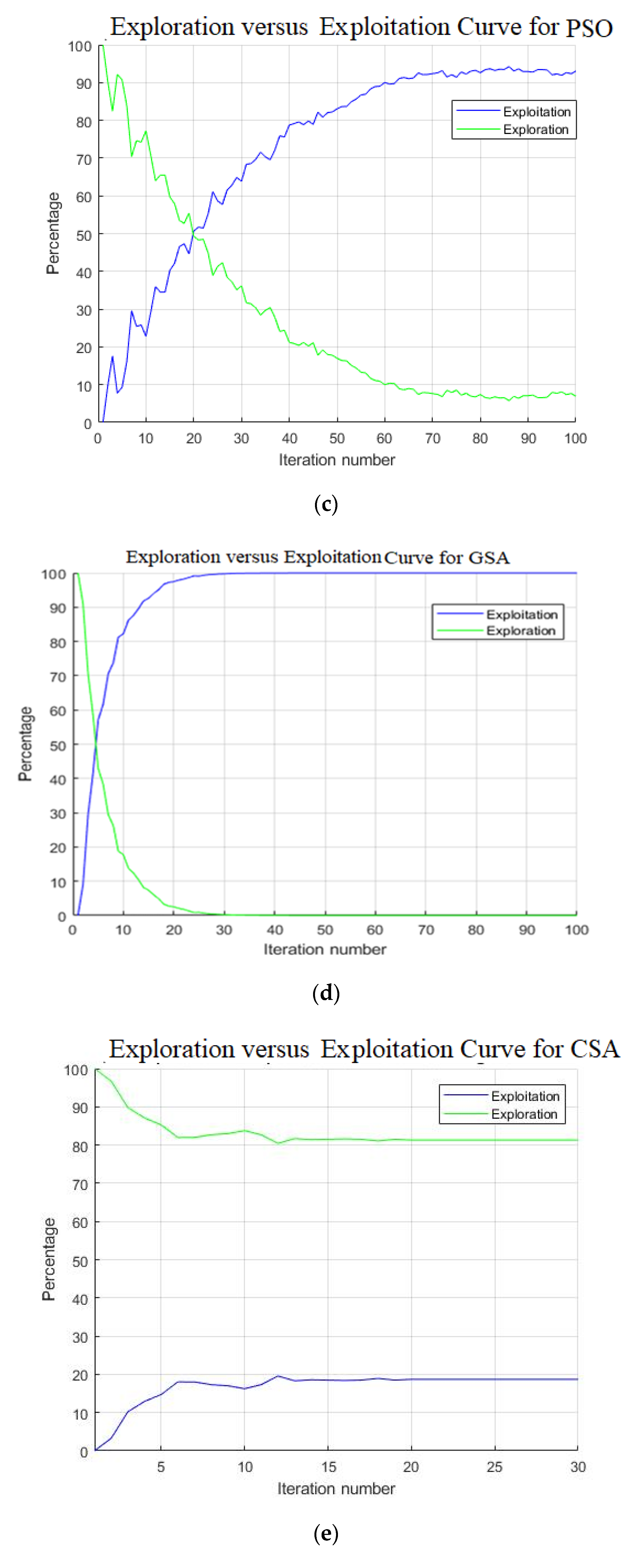

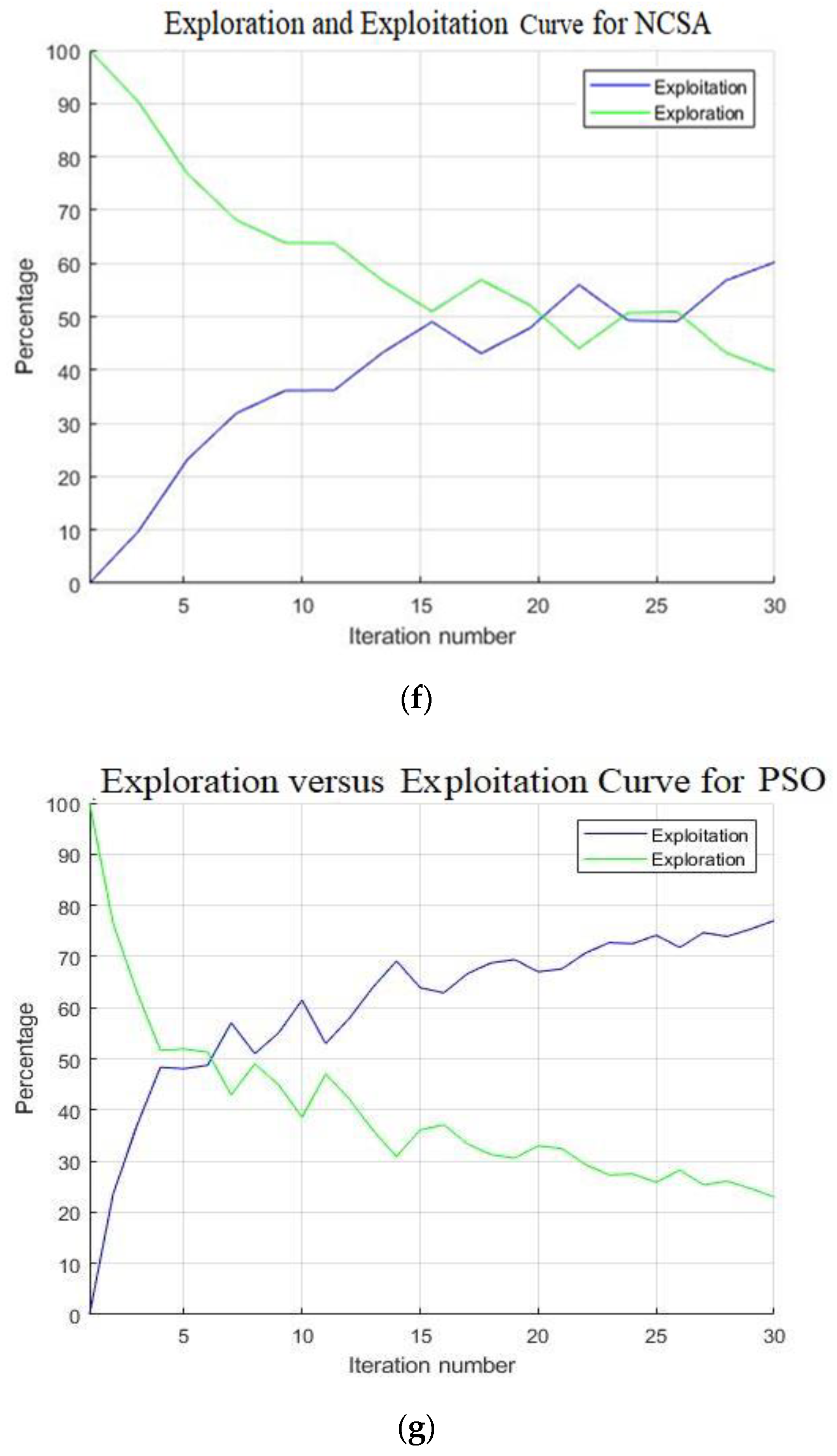

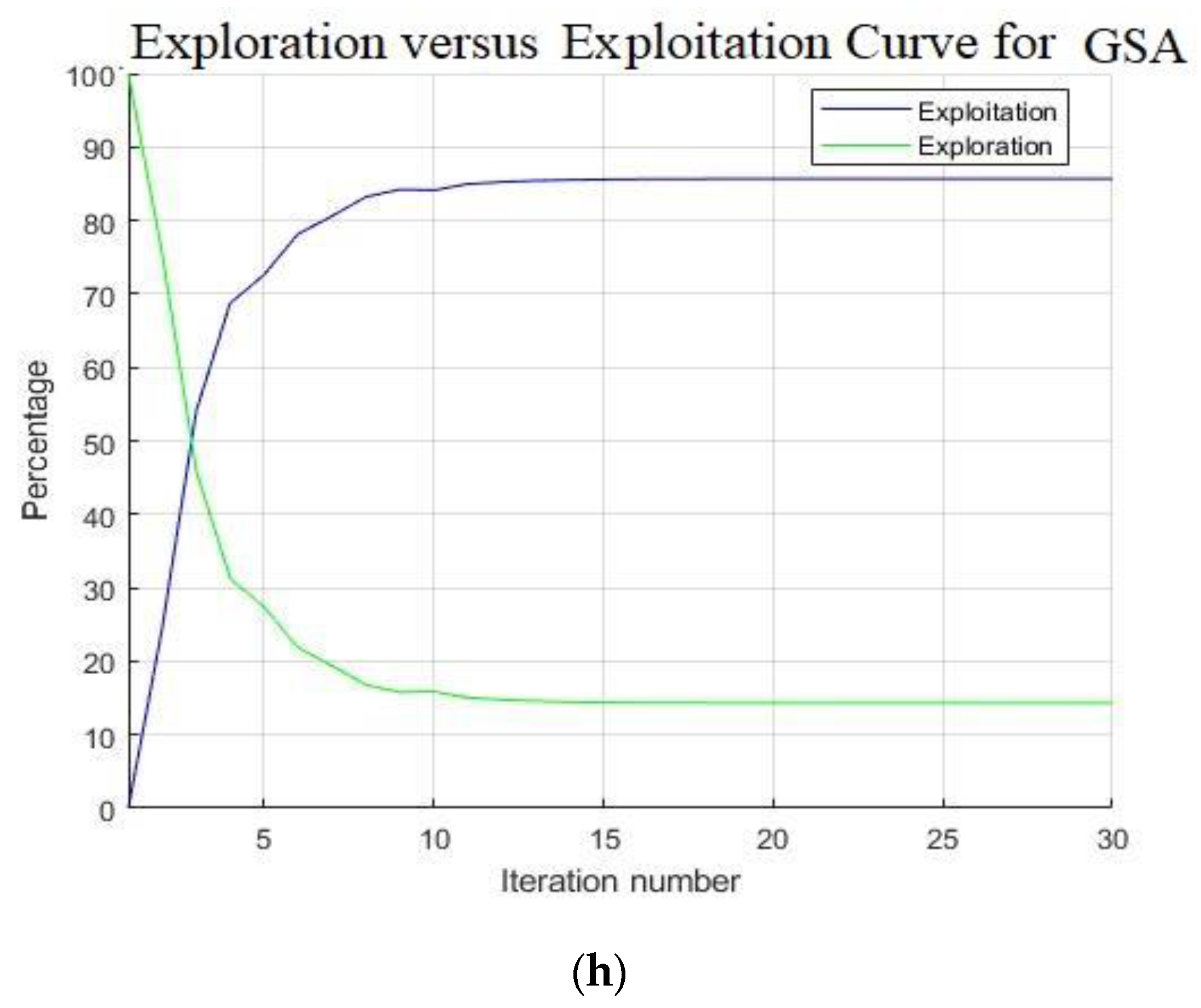

4.2.4. Exploration and Exploitation Analysis

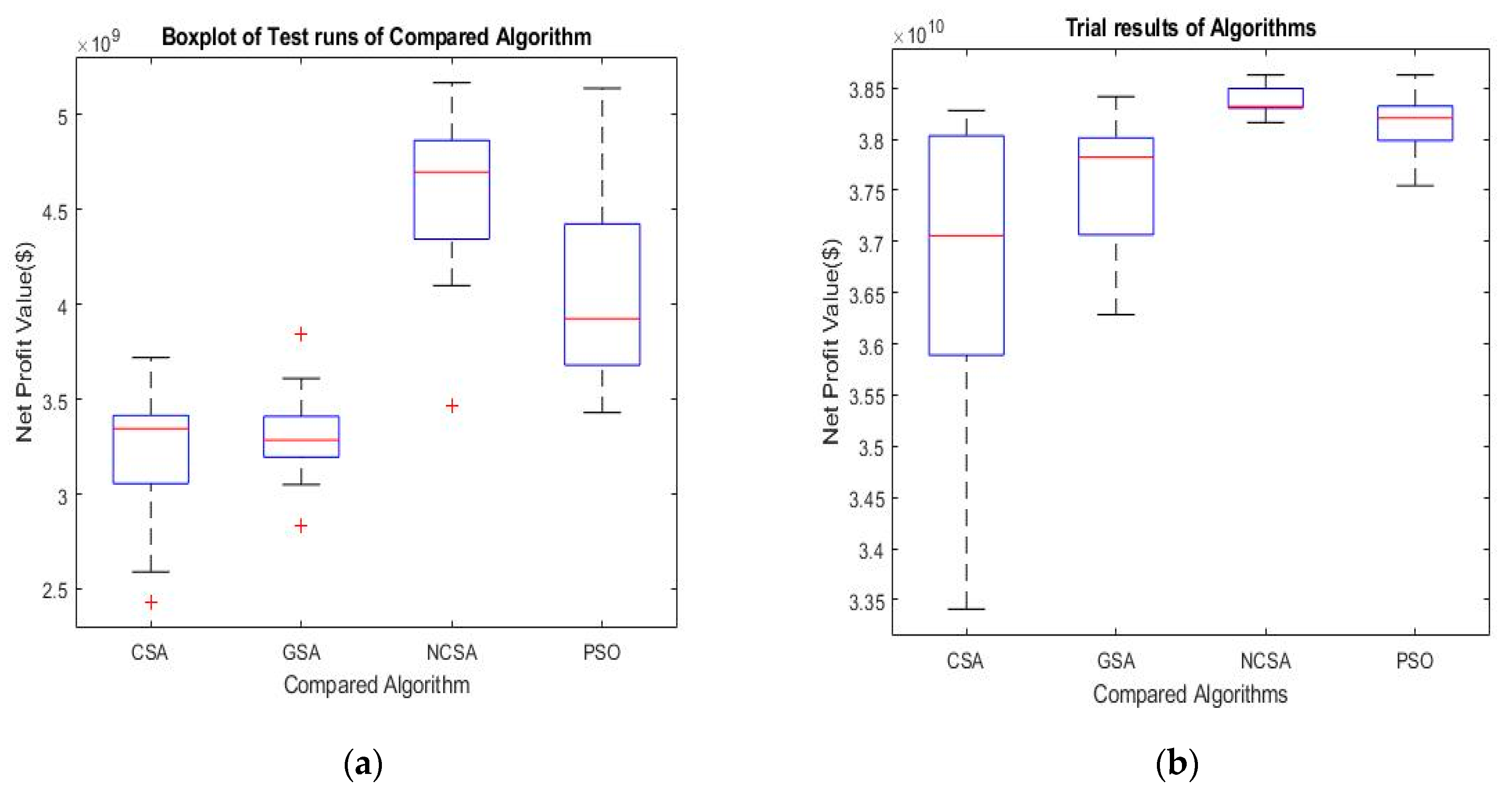

4.2.5. Performance Measurement and Statistical Analysis

4.2.6. Wilcoxon’s Test

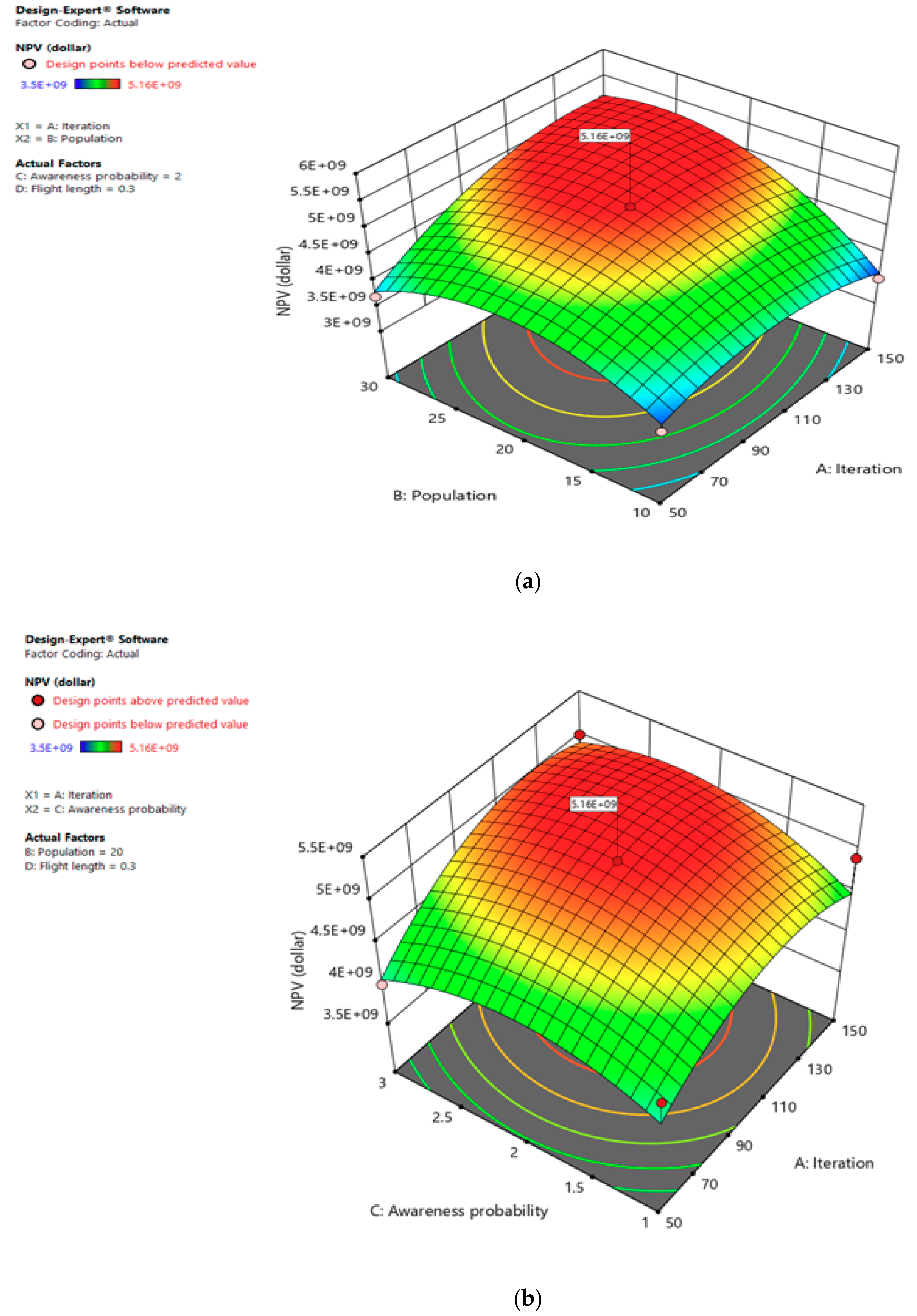

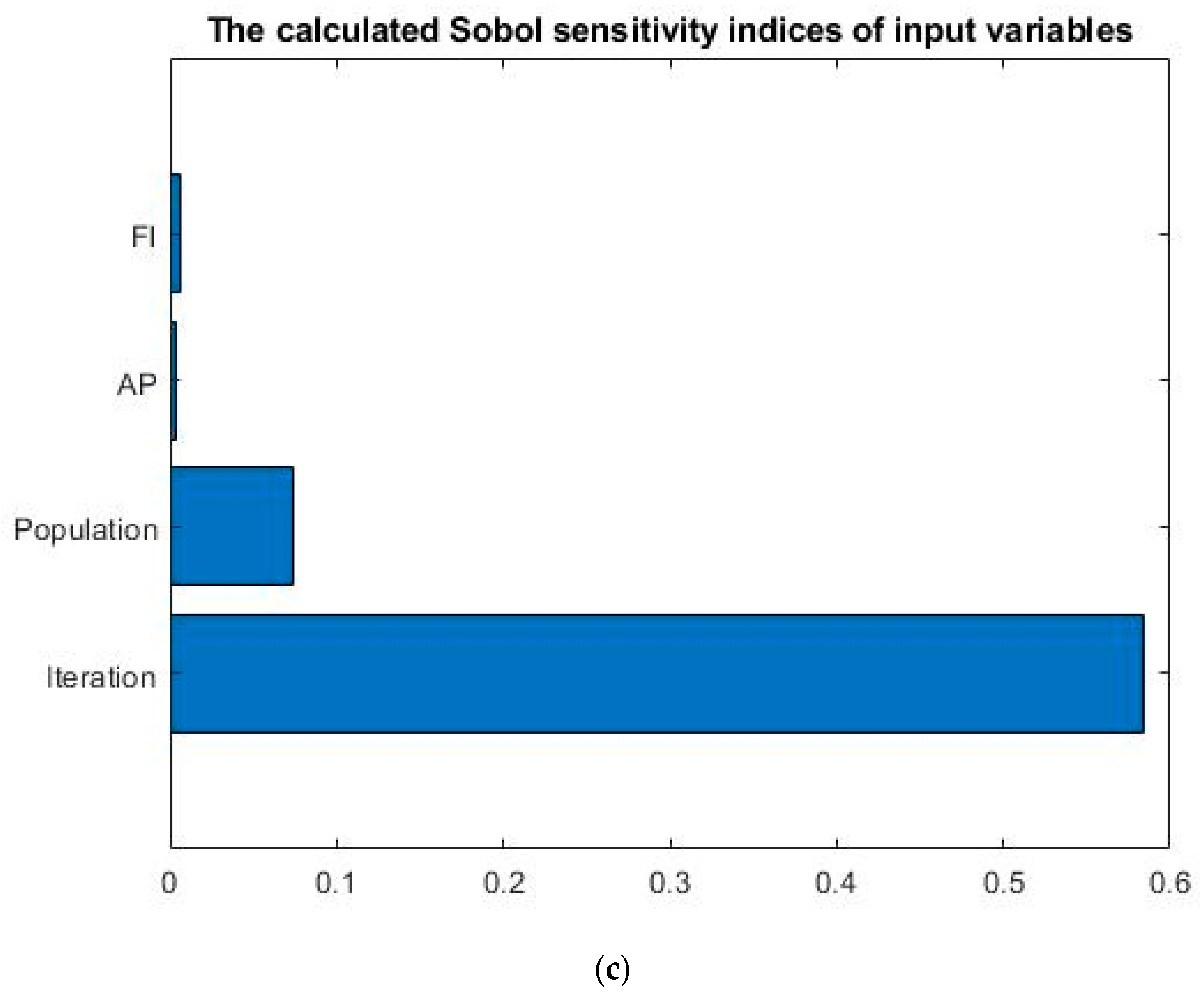

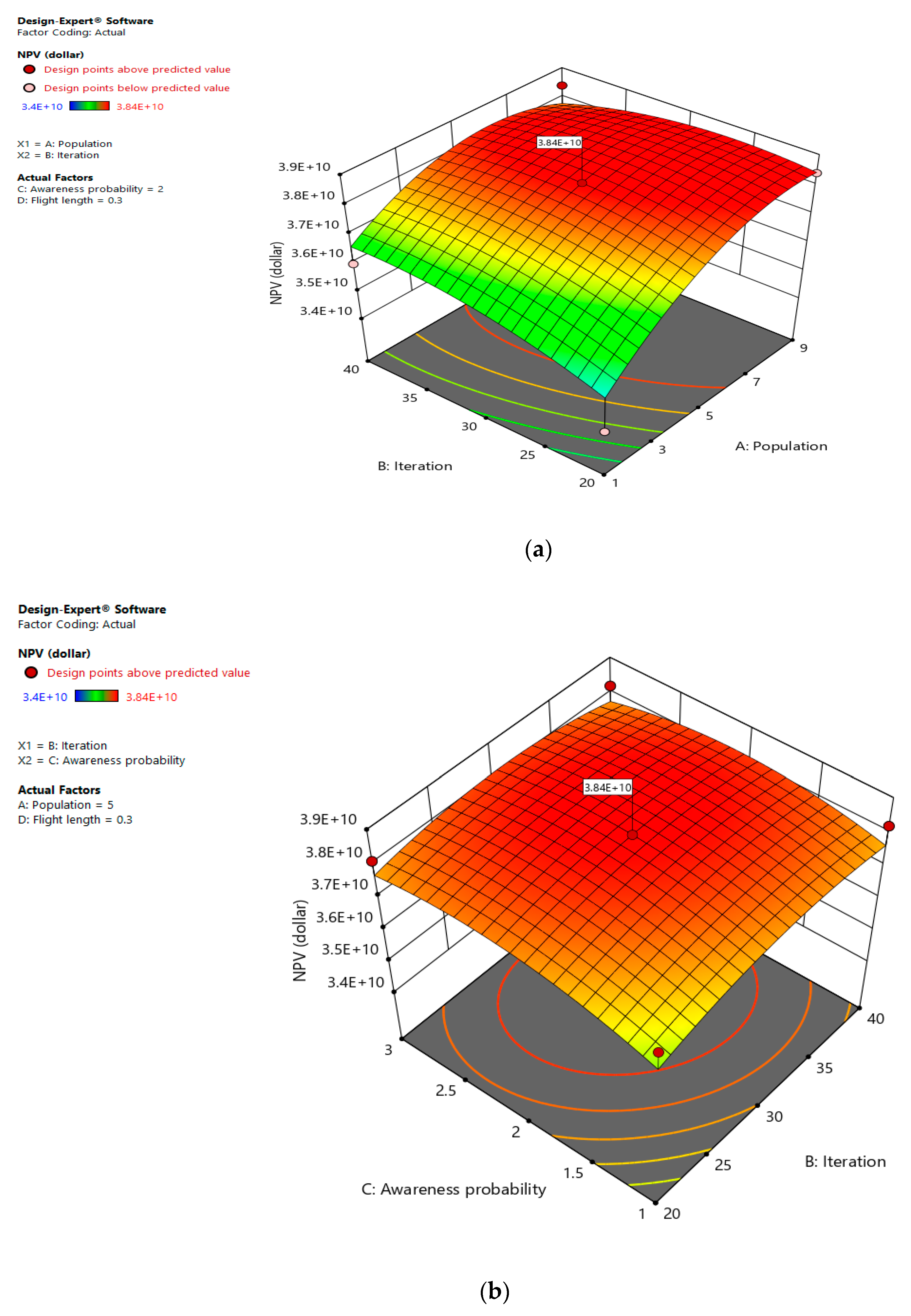

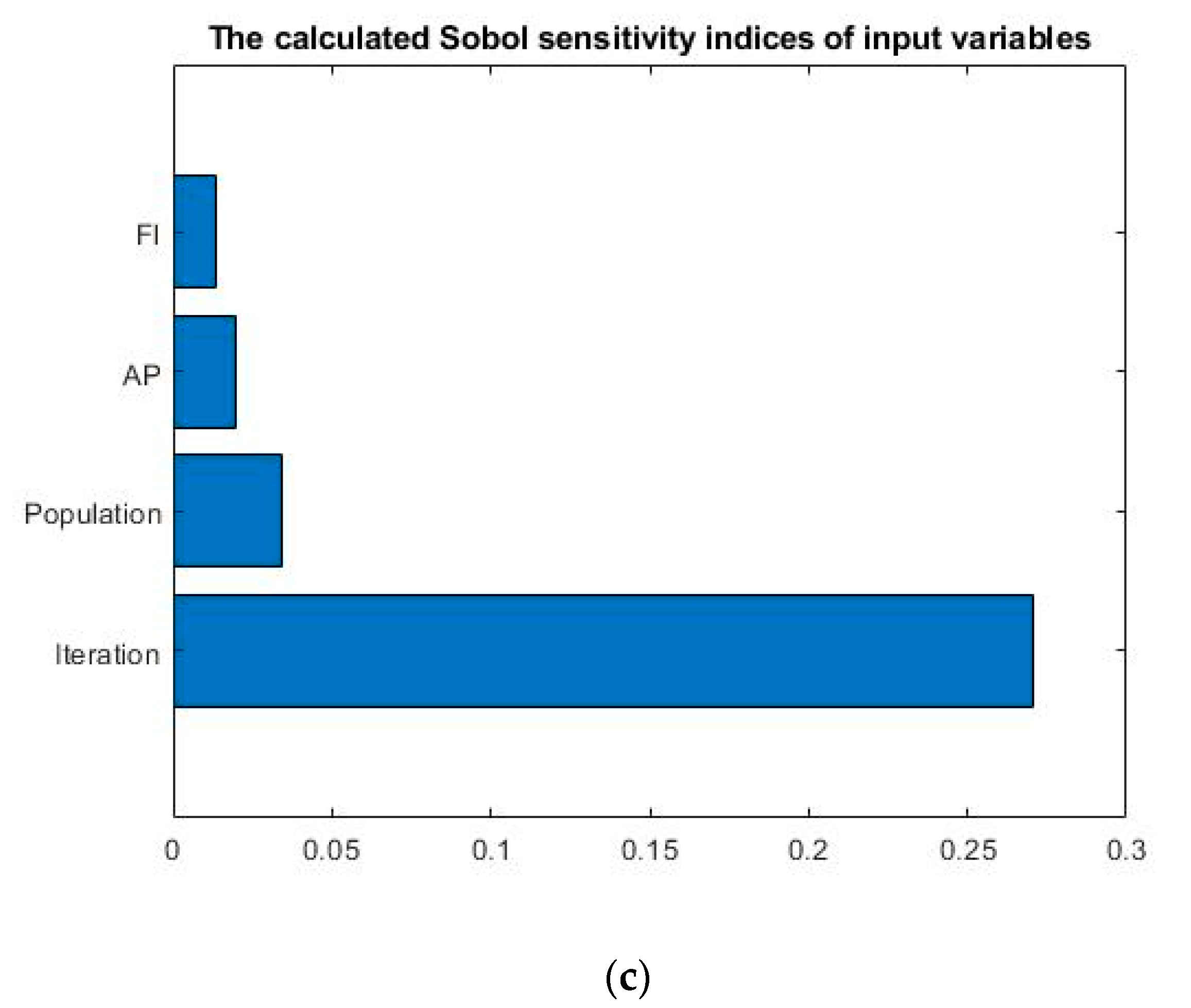

4.3. Sensitivity Analysis

4.3.1. Case Study 1

4.3.2. Case Study 2

4.4. Advantages and Disadvantages

4.5. Discussion

- NCSA can outperform PSO, GSA, and CSA on highly nonlinear, multimodal optimization problems because its niching technique automatically subdivides the population into subgroups.

- To avoid the premature convergence observed in PSO and GSA, the awareness probability parameter keeps NCSA switching between the two update equations, one based on personal-best information and the other on the explicit global best.

4.6. Limitations of the Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Nomenclature

| ABC | Artificial Bee Colony |

| Cw | Cost per unit volume of produced water ($/STB) |

| CSA | Crow Search Algorithm |

| CAPEX | Capital expenditure ($) |

| D | Discount rate (fraction) |

| Std | Standard deviation |

| Avg | Average |

| GA | Genetic Algorithm |

| ICA | Imperialist Competitive Algorithm |

| MA | Metaheuristic algorithms |

| NFL | No Free Lunch theorem |

| NPV | Net present value ($) |

| NCSA | Niching Crow Search Algorithm |

| OPEX | Operational expenditure ($) |

| O-CSMADS | Meta-optimized hybrid cat swarm MADS |

| PUNQ-S3 | A synthetic reservoir |

| PSO | Particle Swarm Optimization |

| Po | Oil price ($/STB) |

| Qo | Cumulative oil production (STB) |

| Qw | Cumulative water production (STB) |

| QPSO | Quantum Particle Swarm Optimization |

| SPE-1 | A synthetic reservoir |

| T | Number of years |

| WPO | Well Placement Optimization |

Appendix A

| Function | Range | Dim | fmin |

|---|---|---|---|

| 1. | [−100, 100] | 30 | 0 |

| 2. | [−10, 10] | 30 | 0 |

| 3. | [−100, 100] | 30 | 0 |

| 4. $f_4(x) = \max_i \{ \lvert x_i \rvert,\ 1 \le i \le n \}$ | [−100, 100] | 30 | 0 |

| 5. $f_5(x) = \sum_{i=1}^{n-1} \left[ 100 (x_{i+1} - x_i^2)^2 + (x_i - 1)^2 \right]$ | [−30, 30] | 30 | 0 |

| 6. | [−100, 100] | 30 | 0 |

| 7. | [−1.28, 1.28] | 30 | 0 |

| 8. | [−500, 500] | 30 | −418.9829 × 5 |

| 9. | [−5.12, 5.12] | 30 | 0 |

| 10. | [−32, 32] | 30 | 0 |

| 11. | [−600, 600] | 30 | 0 |

| 12. | [−50, 50] | 30 | 0 |

| 13. | [−50, 50] | 30 | −4.687 |

| 14. | [−65.536, 65.536] | 2 | 1 |

| 15. | [−5, 5] | 4 | 0.00030 |

| 16. | [−5, 5] | 2 | −1.0316 |

| 17. | [−5, 5] | 2 | 0.398 |

| 18. | [−2, 2] | 2 | 3 |

| 19. | [1, 3] | 3 | −3.86 |

| 20. | [0, 1] | 6 | −3.32 |

| 21. | [0, 10] | 4 | −10.1532 |

| 22. | [0, 10] | 4 | −10.4028 |

| 23. | [0, 10] | 4 | −10.5363 |
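The function definitions did not survive in the table above. Assuming the table follows the widely used 23-function benchmark suite (the ranges, dimensions, and minima match it), three of the entries can be sketched as follows: the sphere function (row 1), the Rastrigin function (row 9), and the Ackley function (row 10). The mapping of rows to these functions is an assumption based on the listed ranges.

```python
import math


def sphere(x):
    """Unimodal sphere function; minimum 0 at the origin."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Multimodal Rastrigin function; minimum 0 at the origin."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


def ackley(x):
    """Multimodal Ackley function; minimum 0 at the origin."""
    n = len(x)
    mean_sq = sum(v * v for v in x) / n
    mean_cos = sum(math.cos(2.0 * math.pi * v) for v in x) / n
    return (-20.0 * math.exp(-0.2 * math.sqrt(mean_sq))
            - math.exp(mean_cos) + 20.0 + math.e)
```

Unimodal functions such as the sphere probe exploitation, while multimodal functions such as Rastrigin and Ackley, with their many local minima, probe exploration.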

References

- Shahri, A.A.; Moud, F.M. Landslide susceptibility mapping using hybridized block modular intelligence model. Bull. Eng. Geol. Environ. 2020, 1–18.

- Bindiya, T.; Elias, E. Modified metaheuristic algorithms for the optimal design of multiplier-less non-uniform channel filters. Circuits Syst. Signal. Proc. 2014, 33, 815–837.

- Lee, H.M.; Jung, D.; Sadollah, A.; Kim, J.H. Performance comparison of metaheuristic algorithms using a modified Gaussian fitness landscape generator. Soft Comput. 2019, 24, 1–11.

- Naik, A.; Satapathy, S.C.; Abraham, A. Modified Social Group Optimization—A meta-heuristic algorithm to solve short-term hydrothermal scheduling. Appl. Soft Comput. 2020, 95, 106524.

- Shahri, A.A.; Moud, F.M. Liquefaction potential analysis using hybrid multi-objective intelligence model. Environ. Earth Sci. 2020, 79, 1–17.

- Mao, K.; Pan, Q.-K.; Pang, X.; Chai, T. A novel Lagrangian relaxation approach for a hybrid flowshop scheduling problem in the steelmaking-continuous casting process. Eur. J. Oper. Res. 2014, 236, 51–60.

- Fattahi, P.; Hosseini, S.M.H.; Jolai, F.; Tavakkoli-Moghaddam, R. A branch and bound algorithm for hybrid flow shop scheduling problem with setup time and assembly operations. Appl. Math. Model. 2014, 38, 119–134.

- Floudas, C.A.; Gounaris, C.E. A review of recent advances in global optimization. J. Glob. Opt. 2009, 45, 3–38.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Gao, Z.; Li, Y.; Yang, Y.; Wang, X.; Dong, N.; Chiang, H.-D. A GPSO-optimized convolutional neural networks for EEG-based emotion recognition. Neurocomputing 2020, 380, 225–235.

- Bacanin, N.; Bezdan, T.; Tuba, E.; Strumberger, I.; Tuba, M. Monarch Butterfly Optimization Based Convolutional Neural Network Design. Mathematics 2020, 8, 936.

- Islam, J.; Vasant, P.M.; Negash, B.M.; Laruccia, M.B.; Myint, M.; Watada, J. A holistic review on artificial intelligence techniques for well placement optimization problem. Adv. Eng. Softw. 2020, 141, 102767.

- Onwunalu, J.E.; Durlofsky, L.J. Application of a particle swarm optimization algorithm for determining optimum well location and type. Comput. Geosci. 2010, 14, 183–198.

- Ma, X.; Plaksina, T.; Gildin, E. Integrated horizontal well placement and hydraulic fracture stages design optimization in unconventional gas reservoirs. In Proceedings of the SPE Unconventional Resources Conference, Society of Petroleum Engineers, Calgary, AB, Canada, 5–7 November 2013.

- Rosenwald, G.W.; Green, D.W. A method for determining the optimum location of wells in a reservoir using mixed-integer programming. Soc. Petrol. Eng. J. 1974, 14, 44–54.

- Pan, Y.; Horne, R.N. Improved methods for multivariate optimization of field development scheduling and well placement design. In Proceedings of the SPE Annual Technical Conference and Exhibition, Society of Petroleum Engineers, New Orleans, LA, USA, 27–30 September 1998.

- Zhang, L.M. Smart Well Pattern Optimization Using Gradient Algorithm. J. Energy Resour. Technol. Trans. ASME 2016, 138, 012901.

- Li, L.L.; Jafarpour, B. A variable-control well placement optimization for improved reservoir development. Comput. Geosci. 2012, 16, 871–889.

- Bangerth, W.; Klie, H.; Wheeler, M.F.; Stoffa, P.L.; Sen, M.K. On optimization algorithms for the reservoir oil well placement problem. Comput. Geosci. 2006, 10, 303–319.

- Isebor, O.J.; Durlofsky, L.J.; Ciaurri, D.E. A derivative-free methodology with local and global search for the constrained joint optimization of well locations and controls. Comput. Geosci. 2014, 18, 463–482.

- Giuliani, C.M.; Camponogara, E. Derivative-free methods applied to daily production optimization of gas-lifted oil fields. Comput. Chem. Eng. 2015, 75, 60–64.

- Forouzanfar, F.; Reynolds, A.C. Well-placement optimization using a derivative-free method. J. Petrol. Sci. Eng. 2013, 109, 96–116.

- Ma, J. An Intelligent Method for Deep-Water Injection-Production Well Pattern Design. In Proceedings of the 28th International Ocean and Polar Engineering Conference, Sapporo, Japan, 10–15 June 2018.

- Afshari, S.; Aminshahidy, B.; Pishvaie, M.R. Application of an improved harmony search algorithm in well placement optimization using streamline simulation. J. Petrol. Sci. Eng. 2011, 78, 664–678.

- Awotunde, A.A. Inclusion of Well Schedule and Project Life in Well Placement Optimization. In Proceedings of the SPE Nigeria Annual International Conference and Exhibition, Society of Petroleum Engineers, Lagos, Nigeria, 5–7 August 2014.

- Das, K.N.; Parouha, R.P. Optimization of Engineering Design Problems via an Efficient Hybrid Meta-heuristic Algorithm. IFAC Proc. Vol. 2014, 47, 692–699.

- Alghareeb, Z.M.; Walton, S.P.; Williams, J.R. Well placement optimization under constraints using modified cuckoo search. In Proceedings of the SPE Saudi Arabia Section Technical Symposium and Exhibition, Society of Petroleum Engineers, Al-Khobar, Saudi Arabia, 21–24 April 2014.

- Al Dossary, M.A.; Nasrabadi, H. Well placement optimization using imperialist competitive algorithm. J. Petrol. Sci. Eng. 2016, 147, 237–248.

- Shahri, A.A.; Asheghi, R.; Zak, M.K. A hybridized intelligence model to improve the predictability level of strength index parameters of rocks. Neural Comput. Appl. 2020, 1–14.

- Asheghi, R.; Shahri, A.A.; Zak, M.K. Prediction of uniaxial compressive strength of different quarried rocks using metaheuristic algorithm. Arab. J. Sci. Eng. 2019, 44, 8645–8659.

- Shahri, A.A.; Moud, F.M.; Lialestani, S.P.M. A hybrid computing model to predict rock strength index properties using support vector regression. Eng. Comput. 2020, 1–16.

- Sattar, D.; Salim, R. A smart metaheuristic algorithm for solving engineering problems. Eng. Comput. 2020, 1–29.

- Bozorg-Haddad, O.; Solgi, M.; Loáiciga, H.A. Meta-Heuristic and Evolutionary Algorithms for Engineering Optimization; John Wiley & Sons: New York, NY, USA, 2017.

- Onwunalu, J. Optimization of Field Development Using Particle Swarm Optimization and New Well Pattern Descriptions; Stanford University: Stanford, CA, USA, 2010.

- Siavashi, M.; Tehrani, M.R.; Nakhaee, A. Efficient Particle Swarm Optimization of Well Placement to Enhance Oil Recovery Using a Novel Streamline-Based Objective Function. J. Energy Resour. Technol. Trans. ASME 2016, 138, 052903.

- Feng, Q.H. Well control optimization considering formation damage caused by suspended particles in injected water. J. Natl. Gas. Sci. Eng. 2016, 35, 21–32.

- Greiner, D.; Periaux, J.; Quagliarella, D.; Magalhaes-Mendes, J.; Galván, B. Evolutionary Algorithms and Metaheuristics: Applications in Engineering Design and Optimization; Hindawi: London, UK, 2018.

- Khoshneshin, R.; Sadeghnejad, S. Integrated Well Placement and Completion Optimization using Heuristic Algorithms: A Case Study of an Iranian Carbonate Formation. J. Chem. Petrol. Eng. 2018, 52, 35–47.

- Naderi, M.; Khamehchi, E. Application of DOE and metaheuristic bat algorithm for well placement and individual well controls optimization. J. Natl. Gas. Sci. Eng. 2017, 46, 47–58.

- Islam, J.; Vasant, P.M.; Hoqe, A.; Akand, T.; Negash, B.M. Well Location Optimization Using Novel Bat Optimization Algorithm for PUNQ-S3 Reservoir. Solid State Technol. 2020, 63, 4040–4045.

- Túpac, Y.J.; Vellasco, M.M.R.; Pacheco, M.A.C. Planejamento e Otimização do Desenvolvimento de um Campo de Petróleo por Algoritmos Genéticos. In Proceedings of the VIII International Conference on Industrial Engineering and Operations Management, Bandung, Indonesia, 6–8 March 2002.

- Montes, G.; Bartolome, P.; Udias, A.L. The use of genetic algorithms in well placement optimization. In Proceedings of the SPE Latin American and Caribbean petroleum engineering conference, Society of Petroleum Engineers, Buenos Aires, Argentina, 25–28 March 2001.

- Yeten, B.; Durlofsky, L.J.; Aziz, K.J.S.J. Optimization of nonconventional well type, location, and trajectory. SPE J. 2003, 8, 200–210.

- Guyaguler, B.; Horne, R.N. Uncertainty assessment of well placement optimization. In SPE Annual Technical Conference and Exhibition; Society of Petroleum Engineers: New Orleans, LA, USA, 2001; Volume 30.

- Lyons, J.; Nasrabadi, H. Well placement optimization under time-dependent uncertainty using an ensemble Kalman filter and a genetic algorithm. J. Petrol. Sci. Eng. 2013, 109, 70–79.

- Humphries, T.D.; Haynes, R.D.; James, L.A. Simultaneous and sequential approaches to joint optimization of well placement and control. Comput. Geosci. 2014, 18, 433–448.

- Aliyev, E. Use of Hybrid Approaches and Metaoptimization for Well Placement Problems. Ph.D. Thesis, Stanford University, Stanford, CA, USA, 2011.

- Emerick, A.A. Well placement optimization using a genetic algorithm with nonlinear constraints. In Proceedings of the SPE reservoir simulation symposium, Society of Petroleum Engineers, Woodlands, TX, USA, 2–4 February 2009.

- Negash, B.M.; Yaw, A.D. Artificial neural network based production forecasting for a hydrocarbon reservoir under water injection. Petrol. Explor. Dev. 2020, 47, 383–392.

- Hamida, Z.; Azizi, F.; Saad, G. An efficient geometry-based optimization approach for well placement in oil fields. J. Petrol. Sci. Eng. 2017, 149, 383–392.

- Irtija, N.; Sangoleye, F.; Tsiropoulou, E.E. Contract-Theoretic Demand Response Management in Smart Grid Systems. IEEE Access 2020, 8, 184976–184987.

- Huang, X.-L.; Ma, X.; Hu, F. Machine learning and intelligent communications. Mob. Netw. Appl. 2018, 23, 68–70.

- Nwankwor, E.; Nagar, A.K.; Reid, D.C. Hybrid differential evolution and particle swarm optimization for optimal well placement. Comput. Geosci. 2013, 17, 249–268.

- Negash, B.; Ayoub, M.A.; Jufar, S.R.; Robert, A.J. History matching using proxy modeling and multiobjective optimizations. In ICIPEG 2016; Springer: Cham, Switzerland, 2017; pp. 3–16.

- Negash, B.M.; Him, P.C. Reconstruction of Missing Gas, Oil, and Water Flow-Rate Data: A Unified Physics and Data-Based Approach. SPE Res. Eval. Eng. 2020, 23.

- Islam, J.; Negash, B.M.; Vasant, P.M.; Hossain, N.I.; Watada, J. Quantum-Based Analytical Techniques on the Tackling of Well Placement Optimization. Appl. Sci. 2020, 10, 7000.

- Islam, J.; Vasant, P.; Negash, B.M.; Gupta, A.; Watada, J.; Banik, A. Well Placement Optimization Using Firefly Algorithm and Crow Search Algorithm. J. Adv. Eng. Comput. 2020, 4, 181–195.

- Oliva, D.; Hinojosa, S.; Cuevas, E.; Pajares, G.; Avalos, O.; Gálvez, J. Cross entropy based thresholding for magnetic resonance brain images using Crow Search Algorithm. Exp. Syst. Appl. 2017, 79, 164–180.

- Askarzadeh, A. Capacitor placement in distribution systems for power loss reduction and voltage improvement: A new methodology. IET Gene. Transm. Distrib. 2016, 10, 3631–3638.

- Aleem, S.H.A.; Zobaa, A.F.; Balci, M.E. Optimal resonance-free third-order high-pass filters based on minimization of the total cost of the filters using Crow Search Algorithm. Electr. Power Syst. Res. 2017, 151, 381–394.

- Jain, M.; Rani, A.; Singh, V. An improved Crow Search Algorithm for high-dimensional problems. J. Intell. Fuzzy Syst. 2017, 33, 3597–3614.

- Sayed, G.I.; Hassanien, A.E.; Azar, A.T. Feature selection via a novel chaotic crow search algorithm. Neural Comput. Appl. 2019, 31, 171–188.

- Díaz, P.; Pérez-Cisneros, M.; Cuevas, E.; Avalos, O.; Gálvez, J.; Hinojosa, S.; Zaldivar, D. An improved crow search algorithm applied to energy problems. Energies 2018, 11, 571.

- Abdi, F.M.H. A modified crow search algorithm (MCSA) for solving economic load dispatch problem. Appl. Soft Comput. 2018, 71, 51–65.

- Rizk-Allah, R.M.; Hassanien, A.E.; Bhattacharyya, S. Chaotic crow search algorithm for fractional optimization problems. Appl. Soft Comput. 2018, 71, 1161–1175.

- Gupta, D.; Sundaram, S.; Khanna, A.; Hassanien, A.E.; de Albuquerque, V.H.C. Improved diagnosis of Parkinson’s disease using optimized crow search algorithm. Comput. Electr. Eng. 2018, 68, 412–424.

- Islam, J.; Vasant, P.M.; Negash, B.M.; Laruccia, M.B.; Myint, M. A Survey of Nature-Inspired Algorithms With Application to Well Placement Optimization. In Deep Learning Techniques and Optimization Strategies in Big Data Analytics; IGI Global: Hershey, PA, USA, 2020; pp. 32–45.

- Kennedy, J.; Eberhart, R. Particle swarm optimization (PSO). In Proceedings of the IEEE International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; pp. 1942–1948.

- Islam, J.; Vasant, P.M.; Negash, B.M.; Watada, J. A modified crow search algorithm with niching technique for numerical optimization. In Proceedings of the 2019 IEEE Student Conference on Research and Development (SCOReD), Perak, Malaysia, 15–17 October 2019; pp. 170–175.

- Veeramachaneni, K.; Peram, T.; Mohan, C.; Osadciw, L.A. Optimization using particle swarms with near neighbor interactions. In Genetic and Evolutionary Computation Conference; Springer: Cham, Switzerland, 2003; pp. 110–121.

- Qu, B.-Y.; Liang, J.J.; Suganthan, P.N. Niching particle swarm optimization with local search for multi-modal optimization. Inform. Sci. 2012, 197, 131–143.

- Yao, X.; Liu, Y.; Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Khan, R.A.; Awotunde, A.A. Determination of vertical/horizontal well type from generalized field development optimization. J. Petrol. Sci. Eng. 2018, 162, 652–665.

- Floris, F.; Bush, M.; Cuypers, M.; Roggero, F.; Syversveen, A.R. Methods for quantifying the uncertainty of production forecasts: A comparative study. Petrol. Geosci. 2001, 7, S87–S96.

- Minton, J.J. A comparison of common methods for optimal well placement. Univ. Auckl. Res. Rep. 2012, 7.

- Boah, E.A.; Kondo, O.K.S.; Borsah, A.A.; Brantson, E.T. Critical evaluation of infill well placement and optimization of well spacing using the particle swarm algorithm. J. Petrol. Exp. Prod. Technol. 2019, 9, 3113–3133.

- Morales-Castañeda, B.; Zaldívar, D.; Cuevas, E.; Fausto, F.; Rodríguez, A. A better balance in metaheuristic algorithms: Does it exist? Swarm Evol. Comput. 2020, 54, 100671.

- Hussain, K.; Salleh, M.N.M.; Cheng, S.; Shi, Y.; Naseem, R. Artificial bee colony algorithm: A component-wise analysis using diversity measurement. J. King Saud. Univ. Comput. Inform. Sci. 2018, 32, 794–808.

- Clerc, M. From theory to practice in particle swarm optimization. In Handbook of Swarm Intelligence, (Adaptation, Learning, and Optimization); Panigrahi, B.K., Lim, M.H., Eds.; Springer: Cham, Switzerland, 2011; pp. 3–36.

- Wilcoxon, F. Individual comparisons by ranking methods. In Breakthroughs in Statistics; Springer: Cham, Switzerland, 1992; pp. 196–202.

- García, S.; Molina, D.; Lozano, M.; Herrera, F. A study on the use of non-parametric tests for analyzing the evolutionary algorithms’ behaviour: A case study on the CEC’2005 special session on real parameter optimization. J. Heurist. 2009, 15, 617.

- Lundstedt, T. Experimental design and optimization. Chem. Intell. Lab. Syst. 1998, 42, 3–40.

- Fisher, R.A. The design of experiments. Br. Med. J. 1936, 1, 554.

- Montgomery, D.C. Design and Analysis of Experiments; John Wiley & Sons: Hoboken, NJ, USA, 1997.

- Myers, R.H.; Montgomery, D.C.; Vining, G.G.; Borror, C.M.; Kowalski, S.M. Response surface methodology: A retrospective and literature survey. J. Q. Technol. 2004, 36, 53–77.

- Asheghi, R.; Hosseini, S.A.; Saneie, M.; Shahri, A.A. Updating the neural network sediment load models using different sensitivity analysis methods: A regional application. J. Hydroinform. 2020, 22, 562–577.

- Cannavó, F. Sensitivity analysis for volcanic source modeling quality assessment and model selection. Comput. Geosci. 2012, 44, 52–59.

| F | NCSA Ave | NCSA Std | GSA [71] Ave | GSA [71] Std | PSO [71] Ave | PSO [71] Std | CSA Ave | CSA Std |

|---|---|---|---|---|---|---|---|---|

| f1 | 3.48 | 1.05 | 2.53 | 9.67 | 1.36 | 2.02 | 4.19 | 1.16 |

| f2 | 6.64 | 1.71 | 5.57 | 1.94 | 4.21 | 4.54 | 2.72 | 9.03 |

| f3 | 1.60 | 5.97 | 8.97 | 3.19 | 7.01 | 2.21 | 2.35 | 7.16 |

| f4 | 1.67 | 7.64 | 7.35 | 1.74 | 1.09 | 3.17 | 5.11 | 1.22 |

| f5 | 2.68 | 4.18 | 6.75 | 6.22 | 9.67 | 6.01 | 2.24 | 1.15 |

| f6 | 3.16 | 2.39 | 2.50 | 1.74 | 1.02 | 8.28 | 3.88 | 1.45 |

| f7 | 8.06 | 4.80 | 8.94 | 4.34 | 1.23 | 4.50 | 3.30 | 1.17 |

| F | NCSA Ave | NCSA Std | GSA [71] Ave | GSA [71] Std | PSO [71] Ave | PSO [71] Std | CSA Ave | CSA Std |

|---|---|---|---|---|---|---|---|---|

| f8 | −7.25 | 9.86 | −2.82 | 4.93 | −4.84 | 1.15 | −7.01 | 7.89 |

| f9 | 0.00 | 0.00 | 2.60 | 7.47 | 4.67 | 1.16 | 2.99 | 1.13 |

| f10 | 4.44 | 0.00 | 6.21 | 2.36 | 2.76 | 5 | 2.80 | 6.27 |

| f11 | 0.00 | 0.00 | 2.77 | 5.04 | 9.22 | 7.72 | 1.02 | 4.07 |

| f12 | 1.66 | 8.75 | 1.80 | 9.51 | 6.92 | 2.63 | 2.56 | 1.38 |

| f13 | 4.08 | 2.27 | 8.90 | 7.13 | 6.68 | 8.91 | 5.54 | 2.19 |

| f14 | 1.13 | 5.03 | 5.86 | 3.83 | 3.63 | 2.56 | 9.98 | 9.44 |

| f15 | 3.75 | 7.73 | 3.67 | 1.65 | 5.77 | 2.22 | 4.05 | 7.73 |

| f16 | −1.03 | 2.39 | −1.03 | 4.88 | −1.03 | 6.25 | −1.03 | 2.39 |

| F | NCSA Ave | NCSA Std | GSA [71] Ave | GSA [71] Std | PSO [71] Ave | PSO [71] Std | CSA Ave | CSA Std |

|---|---|---|---|---|---|---|---|---|

| f17 | 3.98 | 1.09 | 3.98 | 0.00 | 3.98 | 0.00 | 3.98 | 8.48 |

| f18 | 3.00 | 9.98 | 3.00 | 4.17 | 3.00 | 1.33 | 3.00 | 1.32 |

| f19 | −3.86 | 2.67 | −3.86 | 2.29 | −3.86 | 2.58 | −3.86 | 2.19 |

| f20 | −3.29 | 4.45 | −3.32 | 2.31 | −3.27 | 6.05 | −3.31 | 3.11 |

| f21 | −7.73 | 2.19 | −5.96 | 3.74 | −6.87 | 3.02 | −1.02 | 4.65 |

| f22 | −8.82 | 1.90 | −9.68 | 2.01 | −8.46 | 3.09 | −1.04 | 4.54 |

| f23 | −9.16 | 1.86 | −1.05 | 2.60 | 9.95 | 1.78 | −1.05 | 2.19 |

| No. | Ref. | Year | Technique | Parameter Configuration |

|---|---|---|---|---|

| 1. | [43] | 2018 | GA | Crossover = 60%; Mutation = 5% |

| 2. | [72] | 2018 | PSO | Inertia factor = 0.729; c1 and c2 = 1.494 (where c1 and c2 represent the acceleration coefficients) |

| 3. | Proposed | - | NCSA | Flight length, fl = 2; Awareness probability, Ap = 0.3 |

| 4. | Proposed | 2010 | CSA | Flight length, fl = 2; Awareness probability, Ap = 0.3 |

| Economic Parameter | Value | Unit |

|---|---|---|

| Gas price | 0.126 | $/MScf |

| Oil price | 290.572 | $/STB |

| Discount rate | 10% | - |

| Capital expenditure (CAPEX) | 6.4 × 10^7 | $ |

| Water production cost | 31.447 | $/STB |

| Oil production cost | 72.327 | $/STB |
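To show how the economic parameters above enter the objective, the following is a hedged sketch of a discounted-cash-flow NPV calculation. The paper's exact NPV equation is not reproduced in this section, so the cash-flow form used here (oil and gas revenue minus oil and water production costs, discounted yearly, minus CAPEX) is an assumption, as are the function name `npv` and the yearly production lists a reservoir simulator would supply.

```python
def npv(oil, gas, water, *, oil_price, gas_price,
        oil_cost, water_cost, discount_rate, capex):
    """Net present value from yearly production volumes (assumed form).

    oil, water: yearly volumes in STB; gas: yearly volumes in MScf.
    Year t cash flow is discounted by (1 + discount_rate) ** t.
    """
    total = 0.0
    for t, (qo, qg, qw) in enumerate(zip(oil, gas, water), start=1):
        cash = qo * (oil_price - oil_cost) + qg * gas_price - qw * water_cost
        total += cash / (1.0 + discount_rate) ** t
    return total - capex


# Example call with the table's values and purely hypothetical volumes.
example = npv(
    oil=[2.0e5, 1.5e5], gas=[1.0e5, 8.0e4], water=[5.0e4, 9.0e4],
    oil_price=290.572, gas_price=0.126,
    oil_cost=72.327, water_cost=31.447,
    discount_rate=0.10, capex=6.4e7,  # CAPEX read as 6.4 x 10^7
)
```

In a well placement run, the optimizer proposes well coordinates, the simulator returns the production profiles, and a function of this kind converts them into the fitness value to maximize.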

| Criteria | GSA | PSO | CSA | NCSA |

|---|---|---|---|---|

| Max | 3.84 | 5.14 | 3.72 | 5.17 |

| Min | 2.83 | 3.43 | 2.43 | 4.06 |

| Average | 3.33 | 4.07 | 3.24 | 4.37 |

| Standard deviation | 2.62 | 5.72 | 3.73 | 1.10 |

| Effectiveness | 6.44 | 7.87 | 6.27 | 8.46 |

| Efficiency | 1.39 | 5.53 | 5.09 | 5.04 |

| Criteria | GSA | PSO | CSA | NCSA |

|---|---|---|---|---|

| Maximum | 3.84 | 3.86 | 3.83 | 3.86 |

| Minimum | 3.63 | 3.75 | 3.34 | 3.82 |

| Average | 3.76 | 3.82 | 3.66 | 3.84 |

| Standard deviation | 6.21 | 3.09 | 1.63 | 1.50 |

| Effectiveness | 9.74 | 9.88 | 9.49 | 9.94 |

| Efficiency | 9.79 | 1.52 | 1.54 | 3.05 |

| Comparison | Case Study 1: Z Value | Case Study 1: p Value (One Tail) | Case Study 1: p Value (Two Tails) | Case Study 2: Z Value | Case Study 2: p Value (One Tail) | Case Study 2: p Value (Two Tails) |

|---|---|---|---|---|---|---|

| NCSA Versus GSA | 2.68 | 3.73 | 7.45 | 3.40 | 3.37 | 6.74 |

| NCSA Versus PSO | 1.90 | 2.87 | 5.74 | 3.45 | 2.79 | 5.57 |

| NCSA Versus CSA | 2.68 | 3.73 | 7.45 | 3.50 | 2.30 | 4.60 |
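The Z values and one- and two-tailed p values in the table above are the usual outputs of a Wilcoxon test. The section does not state which variant was used, so the sketch below assumes the rank-sum form with a normal approximation and no tie correction; the function names `average_ranks` and `rank_sum_test` are hypothetical, and the inputs would be the final NPV values from repeated runs of two algorithms.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while (end + 1 < len(order)
               and values[order[end + 1]] == values[order[pos]]):
            end += 1
        avg = (pos + end) / 2 + 1  # average of the 1-based ranks in the tie
        for k in order[pos:end + 1]:
            ranks[k] = avg
        pos = end + 1
    return ranks


def rank_sum_test(x, y):
    """Return (z, p_one_tail, p_two_tails) for samples x and y."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])  # rank sum of the first sample
    mean = n1 * (n1 + n2 + 1) / 2
    var = n1 * n2 * (n1 + n2 + 1) / 12
    z = (w - mean) / math.sqrt(var)
    p_one = 0.5 * math.erfc(abs(z) / math.sqrt(2))  # upper normal tail
    return z, p_one, min(1.0, 2 * p_one)
```

A positive z with a small p value indicates that the first sample tends to contain larger values than the second, which is the pattern one looks for when comparing NCSA runs against a baseline.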

| Parameter | Upper Bound | Lower Bound |

|---|---|---|

| Iteration | 150 | 50 |

| Population | 30 | 10 |

| Awareness probability | 0.3 | 0.1 |

| Flight length | 3 | 1 |

| Parameter | Upper Bound | Lower Bound |

|---|---|---|

| Iteration | 40 | 20 |

| Population | 9 | 1 |

| Awareness probability | 0.3 | 0.1 |

| Flight length | 3 | 1 |

| Techniques | Advantages | Disadvantages |

|---|---|---|

| NCSA | Higher effectiveness. High exploration rate. Superior net present value. | A high standard deviation and low efficiency are observed. |

| PSO | Fewer parameters to tune. Simple structure and less dependent on initial points. | Trapped in local optima due to weak local search. A high standard deviation and low efficiency are observed. |

| GSA | Low standard deviation. | High exploitation yields a lower net present value. |

| CSA | Fewer parameters to tune. Faster convergence. Easy implementation. | Less effective in nonlinear optimization. Trapped in local optima. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Islam, J.; Rahaman, M.S.A.; Vasant, P.M.; Negash, B.M.; Hoqe, A.; Khalifa Alhitmi, H.; Watada, J. A Modified Niching Crow Search Approach to Well Placement Optimization. Energies 2021, 14, 857. https://doi.org/10.3390/en14040857