Equipping Seasonal Exponential Smoothing Models with Particle Swarm Optimization Algorithm for Electricity Consumption Forecasting

Abstract

1. Introduction

2. Methodologies

2.1. Seasonal Exponential Smoothing Models

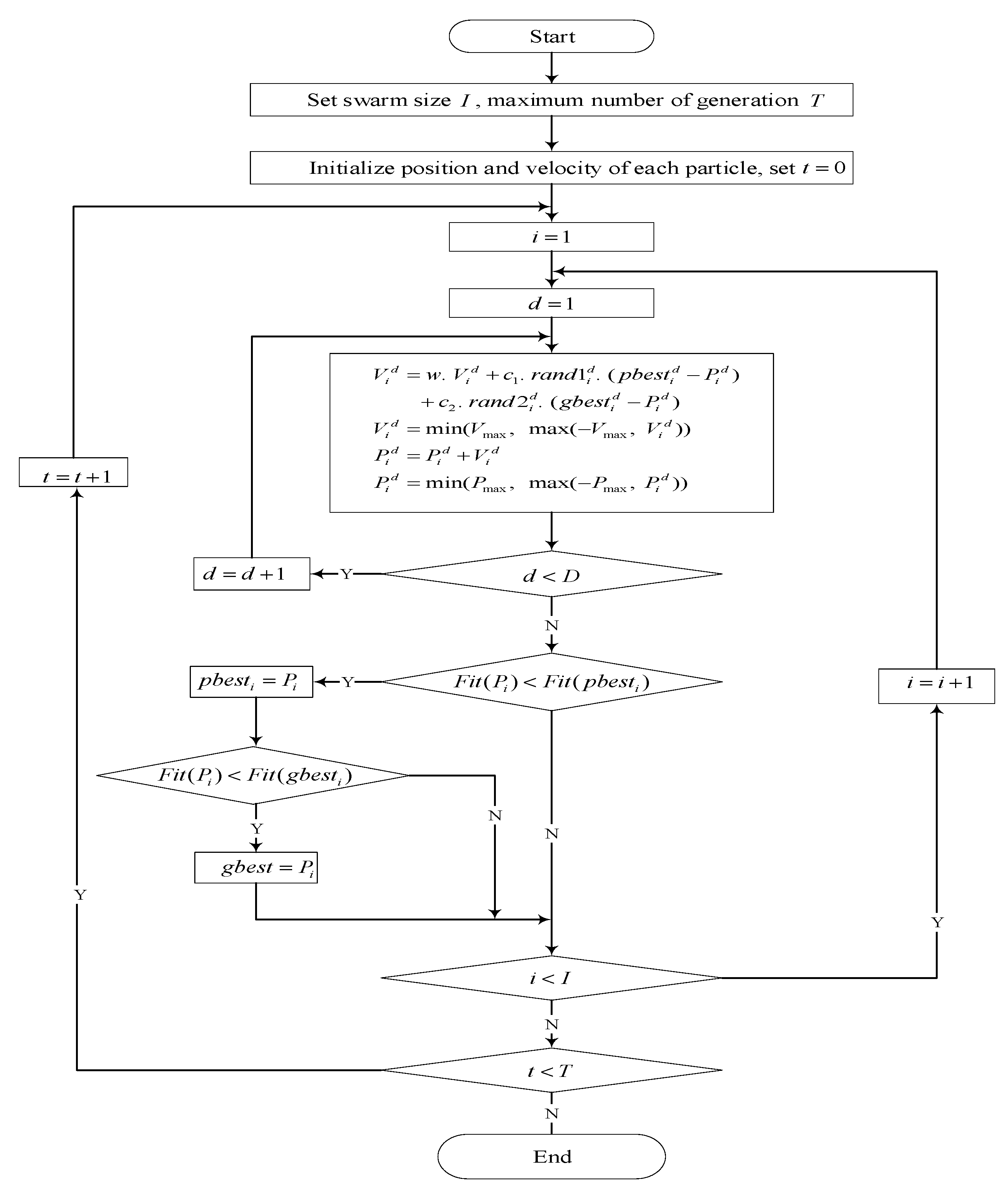
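As a minimal sketch of the kind of recursion a seasonal exponential smoothing model uses, the following implements an additive-trend, additive-seasonality (Holt-Winters type) variant. The function name `holt_winters_additive`, the parameter names `alpha`, `beta`, `gamma`, and the simple initialization of the level, trend, and seasonal states are illustrative assumptions, not necessarily the exact formulation used in this paper.

```python
def holt_winters_additive(y, m, alpha, beta, gamma, horizon):
    """Additive-trend, additive-seasonality exponential smoothing (sketch).

    y: observed series, m: season length, alpha/beta/gamma: smoothing
    parameters in [0, 1], horizon: number of steps to forecast.
    """
    if len(y) < 2 * m:
        raise ValueError("need at least two full seasons to initialize")

    # Assumed initialization: first-season mean, average season-to-season
    # change per period, and first-season deviations from the mean.
    level = sum(y[:m]) / m
    trend = (sum(y[m:2 * m]) - sum(y[:m])) / (m * m)
    season = [y[i] - level for i in range(m)]

    for t in range(m, len(y)):
        s_old = season[t % m]
        prev_level = level
        # Level: deseasonalized observation blended with the previous projection.
        level = alpha * (y[t] - s_old) + (1 - alpha) * (prev_level + trend)
        # Trend: latest level change blended with the previous trend.
        trend = beta * (level - prev_level) + (1 - beta) * trend
        # Seasonal index: latest detrended deviation blended with the old index.
        season[t % m] = gamma * (y[t] - level) + (1 - gamma) * s_old

    n = len(y)
    # h-step-ahead forecast: extrapolated level plus the matching seasonal index.
    return [level + h * trend + season[(n + h - 1) % m]
            for h in range(1, horizon + 1)]


# Usage on a toy quarterly series with a fixed seasonal pattern and no trend.
toy = [10 + s for _ in range(3) for s in (1, -1, 2, -2)]
toy_forecast = holt_winters_additive(toy, m=4, alpha=0.3, beta=0.1, gamma=0.2, horizon=4)
```

Setting the trend update aside (or replacing it with a multiplicative one) gives the other trend forms; the smoothing parameters are exactly the quantities a search procedure such as PSO would tune.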

2.2. PSO Algorithm

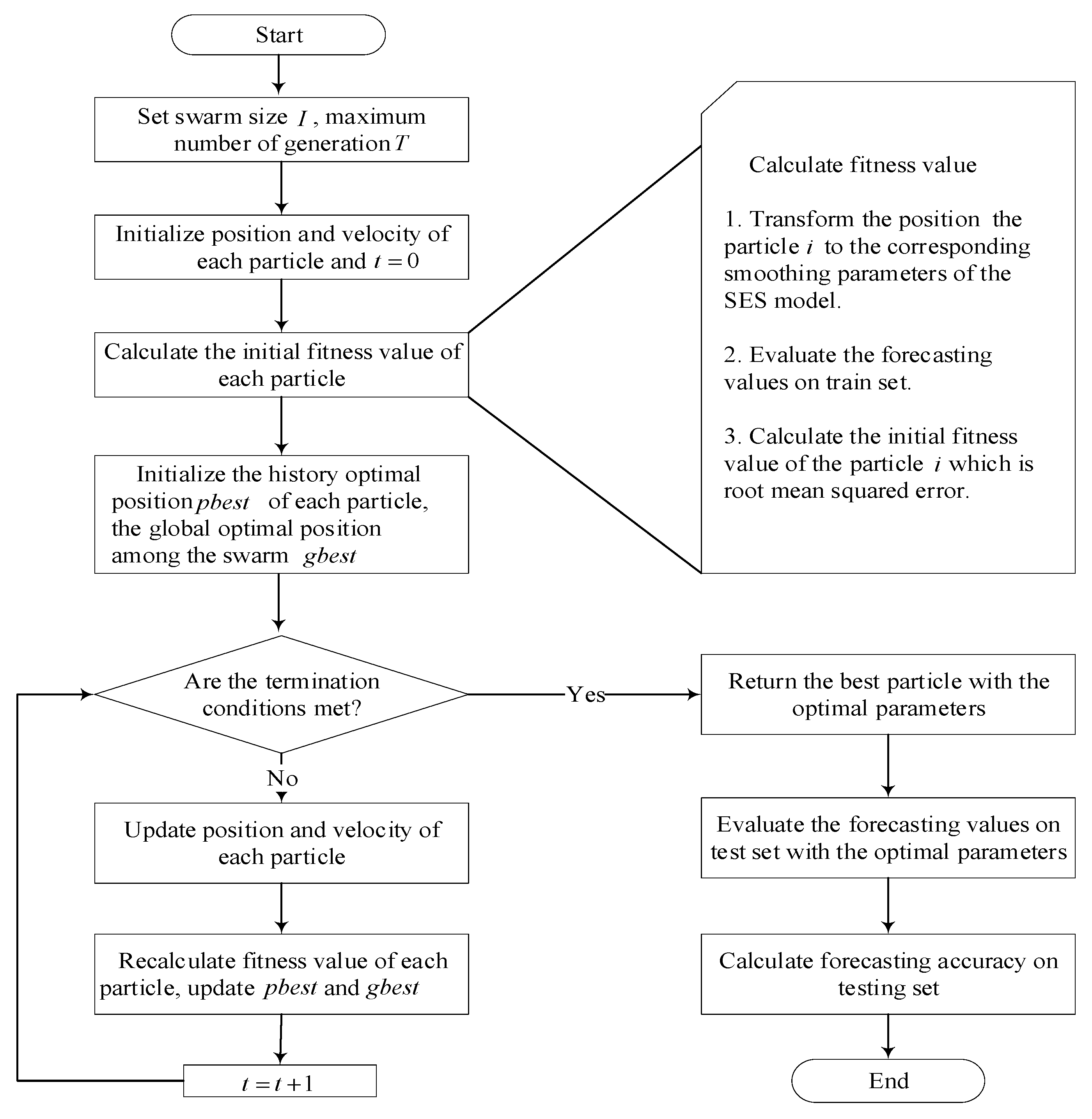

3. Proposed PSO-Based SES Modeling Strategy

- Step 1: Initialization

- Step 2: Evaluation

- Step 3: Update

- Step 4: Prediction
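The four steps above can be sketched as follows. This is a hedged illustration, not the authors' implementation: it assumes a no-trend, additive-seasonality smoothing model with two parameters, an in-sample one-step-ahead MAPE as the fitness, a linearly decreasing inertia weight, and velocity clamping with a hypothetical `vmax`. The default swarm settings mirror the parameter table in this article (population size 30, 200 iterations, c1 = c2 = 2.0, inertia weight from 0.9 to 0.4); all function names are made up for the example.

```python
import random


def ses_na(y, m, alpha, gamma, horizon):
    """No-trend, additive-seasonality smoothing; returns (fitted, forecasts)."""
    level = sum(y[:m]) / m
    season = [y[i] - level for i in range(m)]
    fitted = []
    for t in range(m, len(y)):
        s_old = season[t % m]
        fitted.append(level + s_old)  # one-step-ahead prediction of y[t]
        new_level = alpha * (y[t] - s_old) + (1 - alpha) * level
        season[t % m] = gamma * (y[t] - new_level) + (1 - gamma) * s_old
        level = new_level
    n = len(y)
    forecasts = [level + season[(n + h - 1) % m] for h in range(1, horizon + 1)]
    return fitted, forecasts


def mape(actual, predicted):
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def fitness(params, y, m):
    """Assumed fitness: in-sample one-step-ahead MAPE."""
    fitted, _ = ses_na(y, m, params[0], params[1], 1)
    return mape(y[m:], fitted)


def pso_fit(y, m, ps=30, iters=200, c1=2.0, c2=2.0, w_start=0.9, w_end=0.4, seed=0):
    rng = random.Random(seed)
    dim, vmax = 2, 0.2  # vmax is an assumed velocity limit
    # Step 1, initialization: random positions in [0, 1] and small velocities.
    pos = [[rng.random() for _ in range(dim)] for _ in range(ps)]
    vel = [[rng.uniform(-vmax, vmax) for _ in range(dim)] for _ in range(ps)]
    # Step 2, evaluation: fitness of each particle, personal and global bests.
    pbest = [p[:] for p in pos]
    pbest_val = [fitness(p, y, m) for p in pos]
    g = min(range(ps), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for k in range(iters):
        # Assumed linear decrease of the inertia weight from w_start to w_end.
        w = w_start - (w_start - w_end) * k / max(iters - 1, 1)
        for i in range(ps):
            # Step 3, update: velocity, then position, both clamped.
            for d in range(dim):
                v = (w * vel[i][d]
                     + c1 * rng.random() * (pbest[i][d] - pos[i][d])
                     + c2 * rng.random() * (gbest[d] - pos[i][d]))
                vel[i][d] = max(-vmax, min(vmax, v))
                pos[i][d] = max(0.0, min(1.0, pos[i][d] + vel[i][d]))
            val = fitness(pos[i], y, m)
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


# Synthetic quarterly series, used only to exercise the sketch.
y = [100 + 5 * (t % 4) + 0.3 * ((t * 7) % 5) for t in range(24)]
params, err = pso_fit(y, m=4, iters=50)
# Step 4, prediction: forecast with the best parameters found.
_, forecasts = ses_na(y, 4, params[0], params[1], horizon=4)
```

Clamping positions to [0, 1] keeps the smoothing parameters in their usual admissible range; other boundary-handling rules would work equally well in this sketch.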

4. Experimental Setup

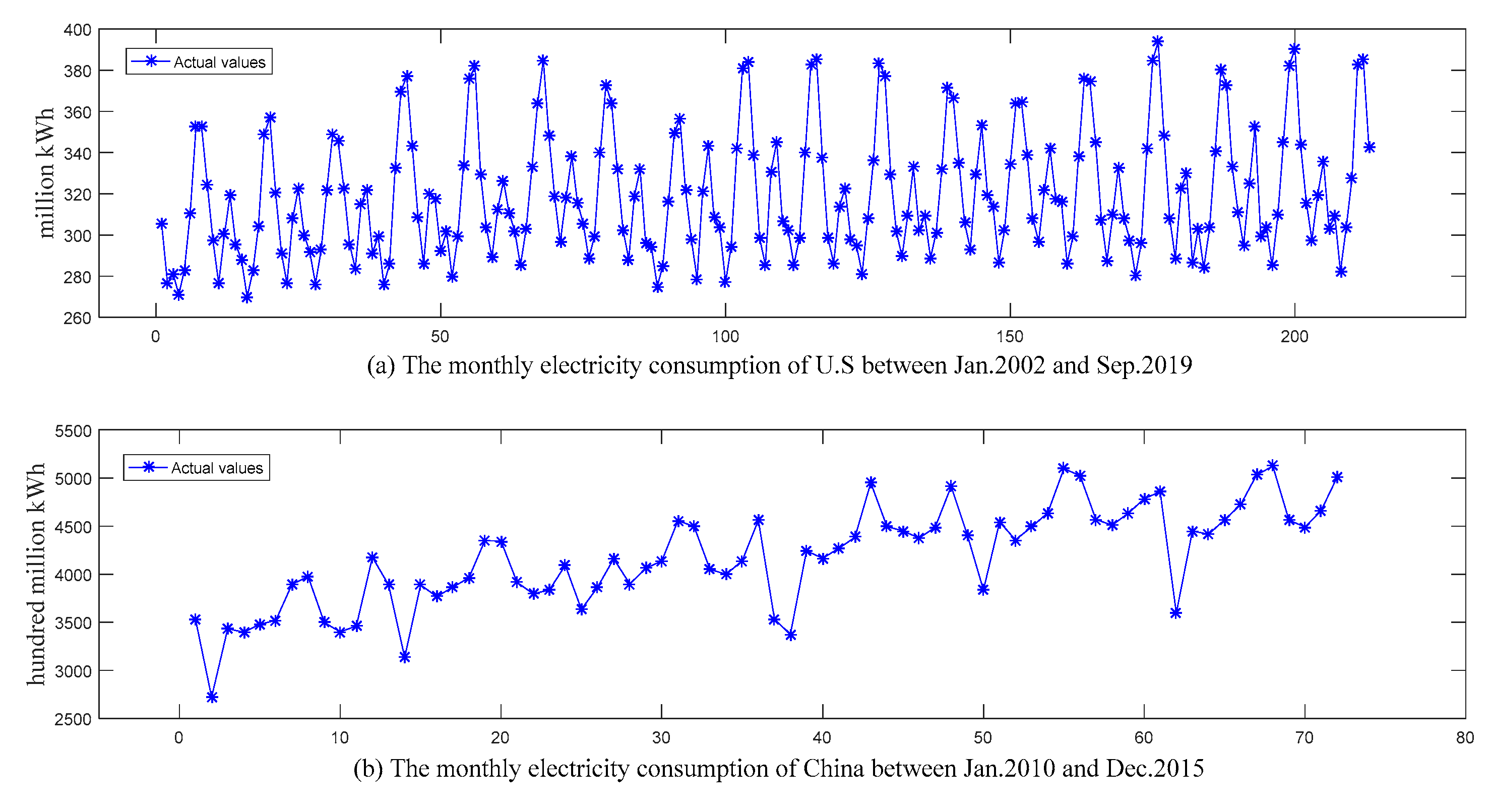

4.1. Data Description

4.2. Experimental Design

4.3. Performance Measure
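The accuracy measures reported in the results tables (MAPE, RMSE, and NRMSE) can be sketched as below. MAPE and RMSE follow their standard definitions. The normalization used for NRMSE is an assumption here: the sketch divides the RMSE by the mean of the actual values, which is one common convention and may differ from the one used in this paper.

```python
import math


def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean square error, in the units of the series."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def nrmse(actual, predicted):
    """RMSE divided by the mean of the actual values (assumed normalization)."""
    return rmse(actual, predicted) / (sum(actual) / len(actual))


# Tiny worked example with two observations.
actual = [100.0, 200.0]
predicted = [110.0, 180.0]
scores = (mape(actual, predicted), rmse(actual, predicted), nrmse(actual, predicted))
```

Because MAPE and NRMSE are scale-free, they allow comparison across the U.S. and China datasets, whereas RMSE is only comparable within one dataset.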

5. Results and Discussion

5.1. Study 1: Examination of the PSO Algorithm for Parameter Optimization in SES Models

5.2. Study 2: Comparison with Well-Known Forecasting Models

- Stationarity test: one seasonal difference and one nonseasonal difference were applied to the original series. The augmented Dickey–Fuller test showed that the differenced series was stationary, so d = 1 and D = 1;

- Model identification: the correlogram of the differenced series was analyzed, and the value ranges of p, q, P, and Q were then determined;

- Model selection: a trial-and-error search based on the BIC was used to determine the order of the model. In this paper, the models established for the U.S. and China electricity consumption datasets were and , respectively, and all the estimated parameters in these two models passed the significance test;

- Residual error test: the serial correlation LM test was performed on the fitted residual sequences of the above two models. The results showed that neither residual sequence was serially correlated, which indicates that the established models were credible;

- Prediction: EViews 8 provides a dynamic forecast function for SARIMA's multi-step-ahead prediction. We obtained the predicted values for each sample in the test set, from one step ahead to twelve steps ahead, by changing the range of the prediction sample. We then rearranged these predictions by forecast horizon and calculated the prediction accuracy of SARIMA at each horizon.
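The rearrangement by forecast horizon described in the last step can be sketched as follows. This is an illustration under stated assumptions: a seasonal-naive rule stands in for the SARIMA dynamic forecast (the paper used EViews 8, which is not reproduced here), and the function names `seasonal_naive` and `horizon_mape` are hypothetical.

```python
def seasonal_naive(history, m, steps):
    """Stand-in forecaster: repeat the last observed season."""
    return [history[len(history) - m + (h - 1) % m] for h in range(1, steps + 1)]


def horizon_mape(series, n_train, max_h, forecaster):
    """Forecast from every origin in the test period, then group errors by horizon."""
    errors = {h: [] for h in range(1, max_h + 1)}
    for origin in range(n_train, len(series)):
        history = series[:origin]          # data available at this origin
        preds = forecaster(history, max_h)  # 1- to max_h-step-ahead forecasts
        for h, p in enumerate(preds, start=1):
            target = origin + h - 1         # index of the value being predicted
            if target < len(series):
                actual = series[target]
                errors[h].append(abs((actual - p) / actual))
    # One MAPE (in percent) per forecast horizon.
    return {h: 100.0 * sum(e) / len(e) for h, e in errors.items() if e}


# A perfectly periodic monthly series: the stand-in forecaster is exact here.
series = [100 + (t % 12) for t in range(48)]
result = horizon_mape(series, n_train=24, max_h=12,
                      forecaster=lambda hist, steps: seasonal_naive(hist, 12, steps))
```

Grouping by horizon rather than by forecast origin is what allows the accuracy at h = 1, 2, ..., 12 to be reported separately.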

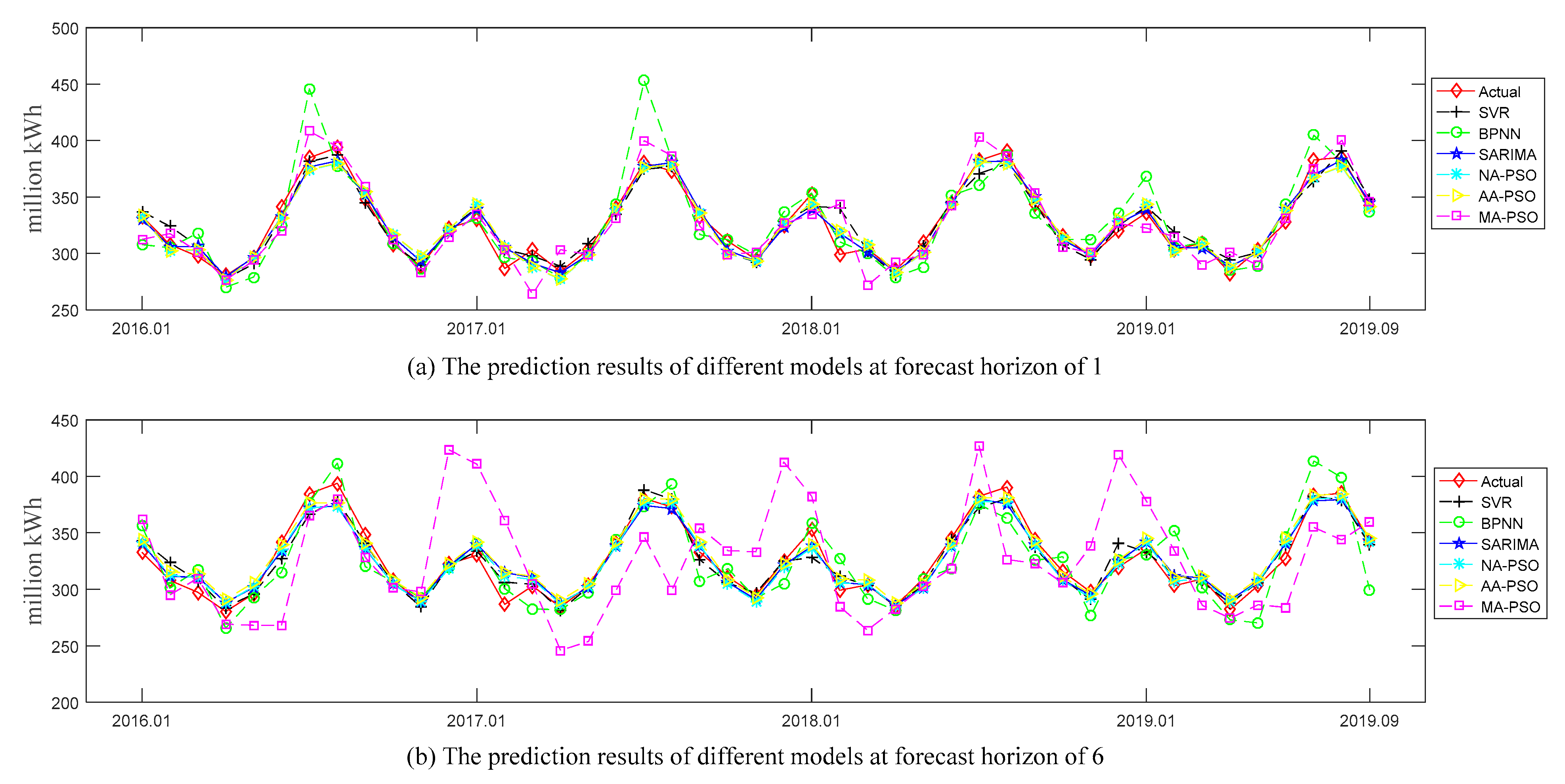

- Among all the examined models, the two with the worst prediction results were MA-PSO and BPNN, as is evident in Figure 4b.

- According to the average rank in terms of MAPE measure, the top three models turned out to be NA-PSO, then AA-PSO, and then SARIMA. The rankings with respect to NRMSE measure were SARIMA, then NA-PSO, and then AA-PSO. However, the average NRMSE measure of NA-PSO over all forecast horizons was less than that of SARIMA, which indicates that NA-PSO outperformed SARIMA to a certain extent.

- The proposed NA-PSO and AA-PSO consistently achieved more accurate forecasts than MA-PSO regardless of the accuracy measure and forecast horizon considered. A possible reason is that the multiplicative form was not suitable for fitting the trend term of the current electricity consumption data.

- Comparing NA-PSO, AA-PSO, and SARIMA, the results were mixed across the horizons examined. Interestingly, SARIMA won for one-step-ahead and two-step-ahead predictions whichever accuracy measure was considered, but not at longer horizons. A likely reason is that SARIMA produces multi-step-ahead predictions by recursive forecasting, which causes errors to accumulate as the forecast horizon increases.
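The average ranks quoted above can be recomputed from the reported values. The sketch below assumes that each model is ranked within every reported column (the six horizons plus the two averages, eight columns in total) and that the ranks are then averaged; the tie-handling rule (tied models share the mean rank) is also an assumption. The numbers are the U.S. MAPE values from the comparison table in this article.

```python
def average_ranks(scores):
    """scores: {model: [value per column]}, lower is better."""
    models = list(scores)
    n_cols = len(next(iter(scores.values())))
    totals = {m: 0.0 for m in models}
    for c in range(n_cols):
        col = {m: scores[m][c] for m in models}
        for m in models:
            less = sum(1 for v in col.values() if v < col[m])
            equal = sum(1 for v in col.values() if v == col[m])  # includes m itself
            totals[m] += less + (equal + 1) / 2  # mean rank among ties
    return {m: totals[m] / n_cols for m in models}


# Columns: h = 1, 2, 3, 6, 9, 12, average 1-6, average 1-12 (U.S., MAPE).
us_mape = {
    "NA-PSO": [2.005, 2.047, 2.057, 1.921, 1.679, 1.712, 1.988, 1.866],
    "AA-PSO": [1.992, 2.065, 2.061, 1.854, 1.790, 1.901, 1.973, 1.922],
    "MA-PSO": [3.226, 5.692, 7.362, 10.17, 5.235, 2.309, 7.585, 6.200],
    "SARIMA": [1.767, 2.010, 2.149, 2.035, 1.912, 1.843, 2.010, 1.975],
    "SVR":    [2.236, 2.912, 2.751, 2.116, 1.930, 1.989, 2.359, 2.167],
    "BPNN":   [4.116, 4.184, 4.213, 5.205, 3.901, 4.568, 4.487, 4.530],
}
ranks = average_ranks(us_mape)
```

Under these assumptions the sketch gives 1.625 for NA-PSO, 2.000 for AA-PSO, and 2.375 for SARIMA, matching the Average Rank column for the U.S. MAPE rows.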

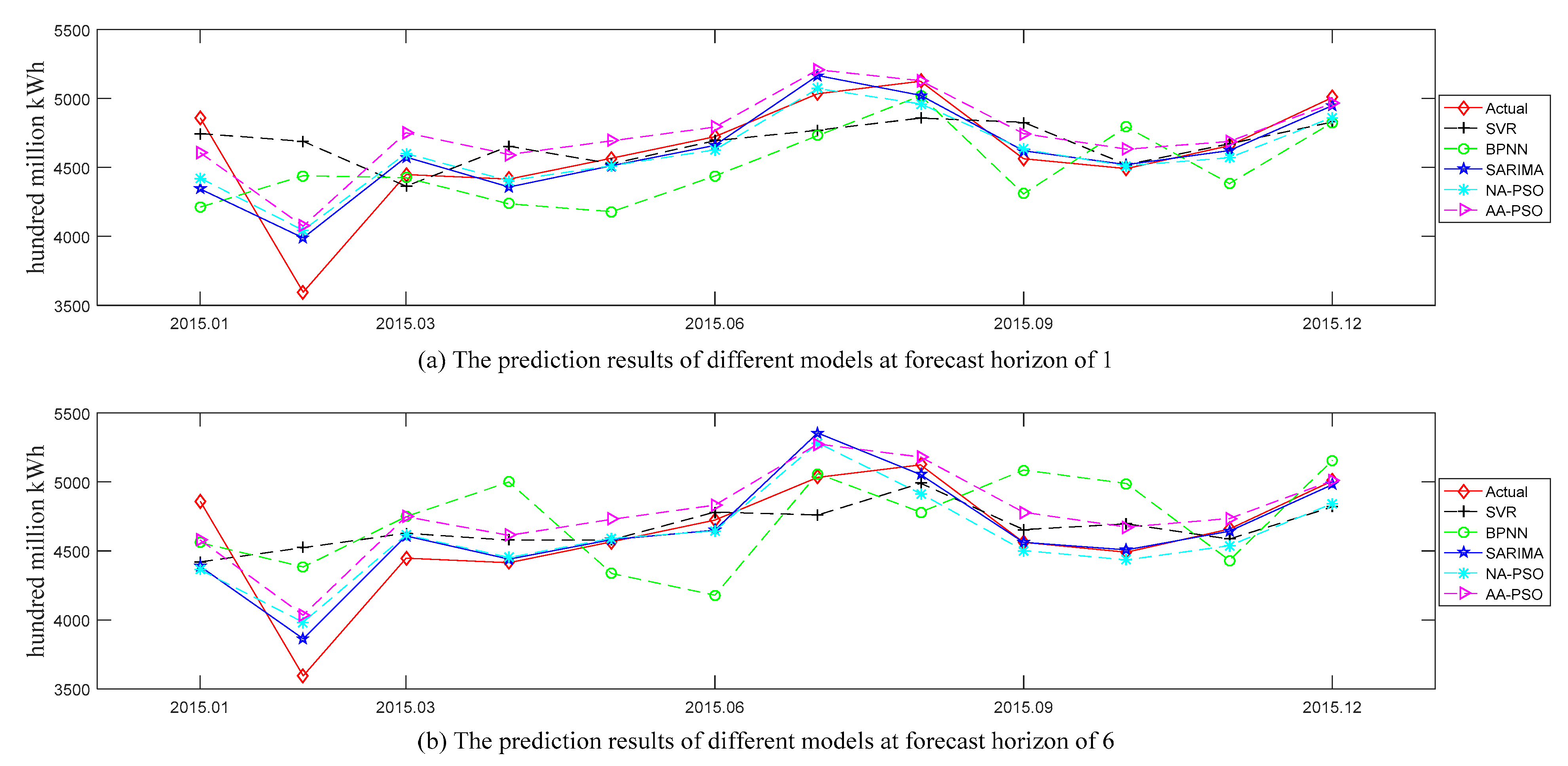

- Among all the examined models, the two with the worst prediction results were SVR and BPNN, as is evident in Figure 5a,b. A possible reason is that machine learning methods need ample training samples, whereas the China dataset contained only 72 samples.

- According to the average rank in terms of MAPE, the top three models were SARIMA, then NA-PSO, and then AA-PSO. With respect to NRMSE, NA-PSO ranked first, and AA-PSO and SARIMA tied for second. Note that MA-PSO is not presented in Table 3 and Figure 5 for the China dataset because it performed the worst regardless of the accuracy measure and forecast horizon considered.

- Comparing NA-PSO, AA-PSO, and SARIMA, the results were mixed across the horizons examined, but SARIMA won for one-step-ahead prediction regardless of the accuracy measure considered, which indicates that SARIMA is suitable for short-term forecasting.

- Overall, it is clear that the proposed NA-PSO held one of the top positions. The main reason could be that the electricity consumption associated with people’s daily activities and various economic activities has basically reached a saturated level, which is more intuitively reflected in Figure 3. It is not difficult to see that growth trends of electricity consumption in the U.S. and China were no longer obvious after 2005 and 2012, respectively.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameters | Values | Parameters | Values |
|---|---|---|---|
| Population size (ps) | 30 | Interaction coefficient (c2) | 2.0 |
| Number of iterations (T) | 200 | Initial inertial weight (w1) | 0.9 |
| Cognitive coefficient (c1) | 2.0 | Final inertial weight (w2) | 0.4 |

| Dataset | Measure | Strategy | h = 1 | h = 2 | h = 3 | h = 6 | h = 9 | h = 12 | Average 1–6 | Average 1–12 | Average Rank |
|---|---|---|---|---|---|---|---|---|---|---|---|
| U.S. | MAPE | GS(0.05) | 1.993 | 2.019 | 2.077 | 1.835 | 1.799 | 1.799 | 1.954 | 1.915 | 1.625 |
| U.S. | MAPE | PSO | 1.992 | 2.065 | 2.061 | 1.854 | 1.790 | 1.901 | 1.967 | 1.918 | 1.875 |
| U.S. | MAPE | GA | 2.001 | 2.075 | 2.078 | 1.769 | 1.787 | 2.008 | 1.973 | 1.962 | 2.500 |
| U.S. | RMSE | GS(0.05) | 8.257 | 8.507 | 8.763 | 8.270 | 7.720 | 8.599 | 8.432 | 8.333 | 1.750 |
| U.S. | RMSE | PSO | 8.256 | 8.595 | 8.741 | 8.269 | 7.766 | 8.667 | 8.431 | 8.339 | 1.750 |
| U.S. | RMSE | GA | 8.307 | 8.726 | 8.724 | 8.203 | 7.813 | 9.157 | 8.536 | 8.577 | 2.500 |
| U.S. | NRMSE | GS(0.05) | 0.02530 | 0.02608 | 0.02683 | 0.02511 | 0.02475 | 0.02641 | 0.02592 | 0.02567 | 2.250 |
| U.S. | NRMSE | PSO | 0.02529 | 0.02561 | 0.02653 | 0.02502 | 0.02462 | 0.02672 | 0.02573 | 0.02552 | 1.375 |
| U.S. | NRMSE | GA | 0.02545 | 0.02675 | 0.02671 | 0.02491 | 0.02399 | 0.02803 | 0.02607 | 0.02622 | 2.375 |
| U.S. | Elapsed Time | GS(0.05) | 0.596 | 0.481 | 0.452 | 0.428 | 0.453 | 0.47 | 0.478 | 0.468 | 2.000 |
| U.S. | Elapsed Time | PSO | 0.187 | 0.188 | 0.181 | 0.182 | 0.179 | 0.182 | 0.184 | 0.183 | 1.000 |
| U.S. | Elapsed Time | GA | 293.13 | 540.22 | 663.69 | 1041.09 | 361.03 | 723.59 | 707.96 | 591.15 | 3.000 |
| China | MAPE | GS(0.05) | 4.030 | 4.416 | 3.504 | 3.442 | 4.678 | 2.813 | 3.660 | 3.802 | 3.750 |
| China | MAPE | GS(0.01) | 3.802 | 3.918 | 3.326 | 2.690 | 3.694 | 2.075 | 3.192 | 3.090 | 1.375 |
| China | MAPE | PSO | 3.819 | 3.961 | 3.337 | 2.671 | 3.693 | 2.108 | 3.225 | 3.100 | 1.875 |
| China | MAPE | GA | 3.817 | 4.067 | 3.317 | 5.266 | 4.449 | 2.513 | 3.896 | 3.811 | 3.000 |
| China | RMSE | GS(0.05) | 215.229 | 215.850 | 184.854 | 185.216 | 237.467 | 140.873 | 191.187 | 195.588 | 3.500 |
| China | RMSE | GS(0.01) | 209.073 | 208.990 | 176.490 | 150.861 | 190.631 | 105.548 | 173.594 | 164.099 | 1.875 |
| China | RMSE | PSO | 209.082 | 207.852 | 175.738 | 151.670 | 191.163 | 103.904 | 172.216 | 163.795 | 1.625 |
| China | RMSE | GA | 208.587 | 215.862 | 175.248 | 262.722 | 224.159 | 125.866 | 203.947 | 199.078 | 3.000 |
| China | NRMSE | GS(0.05) | 0.04655 | 0.04690 | 0.03930 | 0.03858 | 0.05074 | 0.02813 | 0.04069 | 0.04124 | 3.500 |
| China | NRMSE | GS(0.01) | 0.04522 | 0.04541 | 0.03754 | 0.03142 | 0.04076 | 0.02114 | 0.03697 | 0.03578 | 2.000 |
| China | NRMSE | PSO | 0.04521 | 0.04516 | 0.03736 | 0.03159 | 0.04084 | 0.02075 | 0.03669 | 0.03458 | 1.500 |
| China | NRMSE | GA | 0.04511 | 0.04690 | 0.03726 | 0.05473 | 0.04790 | 0.02513 | 0.04334 | 0.04150 | 2.875 |
| China | Elapsed Time | GS(0.05) | 0.257 | 0.278 | 0.253 | 0.297 | 0.278 | 0.333 | 0.265 | 0.274 | 2.000 |
| China | Elapsed Time | GS(0.01) | 12.45 | 12.397 | 12.585 | 12.451 | 12.293 | 12.327 | 12.466 | 12.414 | 3.000 |
| China | Elapsed Time | PSO | 0.0938 | 0.1007 | 0.093 | 0.0976 | 0.0951 | 0.1001 | 0.0970 | 0.0980 | 1.000 |
| China | Elapsed Time | GA | 446.89 | 677.99 | 894.85 | 561.53 | 410.7 | 645.47 | 690.710 | 577.91 | 4.000 |

| Dataset | Measure | Strategy | h = 1 | h = 2 | h = 3 | h = 6 | h = 9 | h = 12 | Average 1–6 | Average 1–12 | Average Rank |
|---|---|---|---|---|---|---|---|---|---|---|---|
| U.S. | MAPE | NA-PSO | 2.005 | 2.047 | 2.057 | 1.921 | 1.679 | 1.712 | 1.988 | 1.866 | 1.625 |
| U.S. | MAPE | AA-PSO | 1.992 | 2.065 | 2.061 | 1.854 | 1.790 | 1.901 | 1.973 | 1.922 | 2.000 |
| U.S. | MAPE | MA-PSO | 3.226 | 5.692 | 7.362 | 10.17 | 5.235 | 2.309 | 7.585 | 6.200 | 5.750 |
| U.S. | MAPE | SARIMA | 1.767 | 2.010 | 2.149 | 2.035 | 1.912 | 1.843 | 2.010 | 1.975 | 2.375 |
| U.S. | MAPE | SVR | 2.236 | 2.912 | 2.751 | 2.116 | 1.930 | 1.989 | 2.359 | 2.167 | 4.000 |
| U.S. | MAPE | BPNN | 4.116 | 4.184 | 4.213 | 5.205 | 3.901 | 4.568 | 4.487 | 4.530 | 5.250 |
| U.S. | NRMSE | NA-PSO | 0.02534 | 0.02639 | 0.02639 | 0.02530 | 0.02260 | 0.02292 | 0.02579 | 0.02459 | 1.875 |
| U.S. | NRMSE | AA-PSO | 0.02529 | 0.02561 | 0.02653 | 0.02502 | 0.02462 | 0.02672 | 0.02573 | 0.02552 | 2.500 |
| U.S. | NRMSE | MA-PSO | 0.04189 | 0.06890 | 0.09325 | 0.12613 | 0.06467 | 0.03068 | 0.09471 | 0.08150 | 5.750 |
| U.S. | NRMSE | SARIMA | 0.02261 | 0.02513 | 0.02645 | 0.02647 | 0.02420 | 0.02414 | 0.02571 | 0.02546 | 1.750 |
| U.S. | NRMSE | SVR | 0.03055 | 0.03754 | 0.03439 | 0.02845 | 0.02733 | 0.02632 | 0.03117 | 0.02922 | 3.875 |
| U.S. | NRMSE | BPNN | 0.05385 | 0.05430 | 0.05360 | 0.07244 | 0.06309 | 0.05897 | 0.06060 | 0.05991 | 5.250 |
| China | MAPE | NA-PSO | 3.255 | 2.635 | 2.085 | 2.726 | 3.519 | 3.933 | 2.374 | 2.540 | 1.875 |
| China | MAPE | AA-PSO | 3.819 | 3.961 | 3.337 | 2.671 | 3.693 | 2.108 | 3.225 | 3.100 | 2.625 |
| China | MAPE | SARIMA | 3.054 | 2.761 | 2.726 | 2.684 | 2.772 | 2.622 | 2.786 | 2.747 | 1.750 |
| China | MAPE | SVR | 5.189 | 5.080 | 4.856 | 5.336 | 3.644 | 3.790 | 5.158 | 4.518 | 3.875 |
| China | MAPE | BPNN | 5.026 | 7.835 | 5.821 | 6.865 | 8.882 | 7.468 | 5.997 | 6.390 | 4.875 |
| China | NRMSE | NA-PSO | 0.04373 | 0.03255 | 0.02527 | 0.03146 | 0.04040 | 0.03933 | 0.03001 | 0.03034 | 1.500 |
| China | NRMSE | AA-PSO | 0.04521 | 0.04516 | 0.03736 | 0.03159 | 0.04084 | 0.02075 | 0.03669 | 0.03458 | 2.250 |
| China | NRMSE | SARIMA | 0.04338 | 0.03928 | 0.03785 | 0.04116 | 0.03956 | 0.03781 | 0.03963 | 0.03906 | 2.250 |
| China | NRMSE | SVR | 0.07696 | 0.07060 | 0.06424 | 0.07119 | 0.05189 | 0.04827 | 0.06891 | 0.06039 | 4.000 |
| China | NRMSE | BPNN | 0.07712 | 0.10210 | 0.07600 | 0.08085 | 0.09905 | 0.09239 | 0.07996 | 0.08254 | 5.000 |

| Dataset | Measure | Prediction Horizon (h) | Rank of Strategies (1 to 5) |
|---|---|---|---|
| U.S. | MAPE | 1 | SARIMA <* AA-PSO < NA-PSO <* SVR <* BPNN |
| U.S. | MAPE | 2 | SARIMA < NA-PSO < AA-PSO <* SVR <* BPNN |
| U.S. | MAPE | 3 | NA-PSO < AA-PSO <* SARIMA <* SVR <* BPNN |
| U.S. | MAPE | 6 | AA-PSO <* NA-PSO <* SARIMA <* SVR <* BPNN |
| U.S. | MAPE | 9 | NA-PSO <* AA-PSO <* SARIMA < SVR <* BPNN |
| U.S. | MAPE | 12 | NA-PSO <* SARIMA < AA-PSO < SVR <* BPNN |
| U.S. | NRMSE | 1,2 | SARIMA <* AA-PSO < NA-PSO <* SVR <* BPNN |
| U.S. | NRMSE | 3 | NA-PSO < SARIMA < AA-PSO <* SVR <* BPNN |
| U.S. | NRMSE | 6 | AA-PSO < NA-PSO <* SARIMA <* SVR <* BPNN |
| U.S. | NRMSE | 9 | NA-PSO <* AA-PSO < SARIMA <* SVR <* BPNN |
| U.S. | NRMSE | 12 | NA-PSO <* SARIMA <* SVR < AA-PSO <* BPNN |
| China | MAPE | 1 | SARIMA <* NA-PSO <* AA-PSO < BPNN < SVR |
| China | MAPE | 2,3 | NA-PSO <* SARIMA <* AA-PSO <* SVR <* BPNN |
| China | MAPE | 6 | AA-PSO < SARIMA < NA-PSO <* SVR <* BPNN |
| China | MAPE | 9 | SARIMA <* NA-PSO <* SVR < AA-PSO <* BPNN |
| China | MAPE | 12 | AA-PSO <* SARIMA <* SVR < NA-PSO <* BPNN |
| China | NRMSE | 1 | SARIMA < NA-PSO <* AA-PSO <* SVR < BPNN |
| China | NRMSE | 2 | NA-PSO <* SARIMA <* AA-PSO <* SVR <* BPNN |
| China | NRMSE | 3 | NA-PSO <* SARIMA < AA-PSO <* SVR <* BPNN |
| China | NRMSE | 6 | AA-PSO < NA-PSO <* SARIMA <* SVR <* BPNN |
| China | NRMSE | 9 | SARIMA <* NA-PSO < AA-PSO <* SVR <* BPNN |
| China | NRMSE | 12 | AA-PSO <* SARIMA <* NA-PSO <* SVR <* BPNN |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Deng, C.; Zhang, X.; Huang, Y.; Bao, Y. Equipping Seasonal Exponential Smoothing Models with Particle Swarm Optimization Algorithm for Electricity Consumption Forecasting. Energies 2021, 14, 4036. https://doi.org/10.3390/en14134036
