Bi-Level Poisoning Attack Model and Countermeasure for Appliance Consumption Data of Smart Homes

Abstract

1. Introduction

- We assess a bi-level data poisoning strategy, based on a sparse attack vector and an optimization-based attack, which successfully corrupts the energy prediction model of home appliances (see Section 3);

- We also implement an effective countermeasure for the poisoned energy prediction model. The proposed defense strategy is evaluated on several benchmark regression models (see Section 4);

- To the best of our knowledge, this is one of the earliest works on poisoning attacks against household energy prediction models and on defenses for them. The proposed methods are tested on a benchmark dataset from the UCI data repository (see Section 5).
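To make the idea of a sparse attack vector concrete, the following is a minimal sketch and not the attack algorithm of Section 3. It assumes toy, noise-free data with a single feature, a closed-form ordinary least squares (OLS) fit, and a hypothetical attack vector that is nonzero only on the last 5% of the training targets; the names `fit_ols` and `sparse_attack_vector` and the magnitude of 500 are illustrative choices.

```python
def fit_ols(xs, ys):
    """Closed-form least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def sparse_attack_vector(n, rate, magnitude):
    """Hypothetical attack vector: zero everywhere except the last k entries."""
    k = max(1, round(n * rate))
    return [0.0] * (n - k) + [magnitude] * k


# Toy, noise-free "consumption" data (an assumption, not the UCI dataset).
xs = [float(i) for i in range(100)]
clean_ys = [2.0 * x + 1.0 for x in xs]

# Poison 5% of the training targets by adding the sparse vector.
attack = sparse_attack_vector(len(xs), rate=0.05, magnitude=500.0)
poisoned_ys = [y + a for y, a in zip(clean_ys, attack)]

clean_slope, clean_icpt = fit_ols(xs, clean_ys)
pois_slope, pois_icpt = fit_ols(xs, poisoned_ys)

# Compare the two models' predictions at one query point.
x_query = 99.0
clean_pred = clean_slope * x_query + clean_icpt
pois_pred = pois_slope * x_query + pois_icpt
change_pct = 100.0 * abs(pois_pred - clean_pred) / abs(clean_pred)
print(f"clean={clean_pred:.2f} poisoned={pois_pred:.2f} change={change_pct:.1f}%")
```

Even though only a handful of targets are modified, the refitted model's prediction moves noticeably, which is the qualitative effect the first contribution describes. An optimization-based attack would choose the positions and values of the nonzero entries to maximize this shift rather than fixing them by hand.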

2. Related Work

2.1. Related Work on the Household Prediction Models

2.2. Related Work on the Security Vulnerabilities of the Predictive Models

3. Proposed Bi-Level-Poisoning-Based Adversarial Model on Energy Data

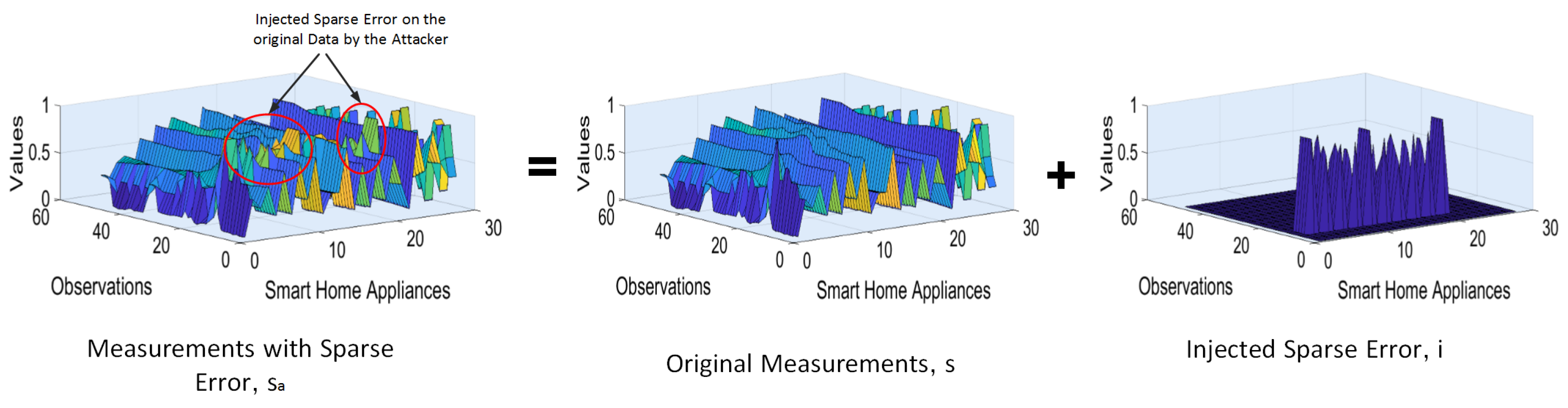

3.1. Poisoning Attack during Communication

3.2. Poisoning Attack on the Predictive ML Module

Algorithm 1: Poisoning Attack on the Predictive ML Module.

4. Proposed Defense Mechanism against Bi-Level-Poisoning-Based Adversarial Model

4.1. Defense Mechanism against Poisoning Attack during Communication

Algorithm 2: Defense Mechanism Using Accelerated Proximal Gradient Algorithm in First-Level Attack.
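The steps of Algorithm 2 are not reproduced here, so the following is only a generic illustration of the accelerated proximal gradient technique that the caption names, not the authors' procedure. It assumes a simplified problem, minimizing 0.5·||A s − b||² + λ·||s||₁ over a sparse vector s, with soft-thresholding as the proximal step and the usual momentum update; `apg_sparse_recovery`, the identity matrix `A`, and the value of `lam` are hypothetical choices made so the result can be checked by hand.

```python
import math


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1: shrink each entry toward zero."""
    return [math.copysign(max(abs(x) - tau, 0.0), x) for x in v]


def matvec(A, x):
    """Compute A x for a list-of-rows matrix."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def rmatvec(A, r):
    """Compute A^T r for a list-of-rows matrix."""
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]


def apg_sparse_recovery(A, b, lam, iters=500):
    """Accelerated proximal gradient for 0.5*||A s - b||^2 + lam*||s||_1."""
    # The squared Frobenius norm upper-bounds the gradient's Lipschitz constant.
    lipschitz = sum(a * a for row in A for a in row)
    s = [0.0] * len(A[0])
    z = s[:]          # extrapolated point
    t = 1.0           # momentum parameter
    for _ in range(iters):
        residual = [p - q for p, q in zip(matvec(A, z), b)]
        grad = rmatvec(A, residual)
        step = [zi - gi / lipschitz for zi, gi in zip(z, grad)]
        s_new = soft_threshold(step, lam / lipschitz)
        t_new = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        z = [sn + ((t - 1.0) / t_new) * (sn - so) for sn, so in zip(s_new, s)]
        s, t = s_new, t_new
    return s


# Toy case: identity A, one large corrupted entry and one small noise entry.
A = [[1.0 if i == j else 0.0 for j in range(5)] for i in range(5)]
b = [0.0, 0.0, 10.0, 0.0, 0.05]
recovered = apg_sparse_recovery(A, b, lam=0.1)
print([round(v, 4) for v in recovered])
```

With an identity `A` the minimizer is simply `b` soft-thresholded by `lam`, so the large entry survives (shrunk by `lam`) and the small one is set to zero. Recovering a sparse corruption from a low-rank data matrix, as in the robust PCA literature, additionally requires a singular-value thresholding step that this sketch omits.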

4.2. Defense Mechanism against Poisoning Attack on the Predictive ML Module

Algorithm 3: Defense Mechanism Against Poisoning Attack on the Predictive ML Module.

5. Results and Discussion

5.1. Datasets

5.2. Effects of Poisoning Attacks on Energy Consumption Data

5.3. Defense Algorithms

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Poisoning Rate | Appliance’s Energy Use (in Wh) | Prediction without Poisoning | Change in Predicted Value (OLS) | Change in Predicted Value (Ridge) | Change in Predicted Value (Lasso) |
|---|---|---|---|---|---|
| 1% | 580 | 579.96 | 44.07% | 23.25% | 27.46% |
| 2% | 580 | 579.96 | 91.10% | 53.11% | 51.62% |
| 3% | 580 | 579.96 | 70.61% | 70.64% | 103.65% |
| 4% | 580 | 579.96 | 95.17% | 105.28% | 118.17% |
| 5% | 580 | 579.96 | 139.25% | 128.53% | 145.64% |
| 6% | 580 | 579.96 | 186.28% | 158.39% | 169.79% |
| 7% | 580 | 579.96 | 165.79% | 175.92% | 221.83% |
| 8% | 580 | 579.96 | 190.35% | 210.56% | 236.34% |
| 9% | 580 | 579.96 | 234.43% | 233.82% | 263.81% |
| 10% | 580 | 579.96 | 281.46% | 263.67% | 287.96% |
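The table does not state how the change in predicted value is computed. As a worked example under the assumption that it is the absolute difference between the poisoned and clean predictions, expressed as a percentage of the clean prediction, the sketch below converts a reported percentage back into an absolute shift. The function names are hypothetical, and the direction of the shift (over- or under-prediction) is not recoverable from the table.

```python
def percent_change(clean_pred, poisoned_pred):
    """Relative change of a prediction, in percent of the clean prediction."""
    return 100.0 * abs(poisoned_pred - clean_pred) / abs(clean_pred)


def implied_shift(clean_pred, change_pct):
    """Absolute shift implied by a reported percent change (direction unknown)."""
    return clean_pred * change_pct / 100.0


# First table row: clean prediction 579.96, OLS change 44.07% at 1% poisoning.
shift = implied_shift(579.96, 44.07)
print(round(shift, 2))
```

Under this assumed definition, the 1% poisoning row for OLS would correspond to a prediction that is off by roughly 256 Wh from the clean value of 579.96.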

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Billah, M.; Anwar, A.; Rahman, Z.; Galib, S.M. Bi-Level Poisoning Attack Model and Countermeasure for Appliance Consumption Data of Smart Homes. Energies 2021, 14, 3887. https://doi.org/10.3390/en14133887
