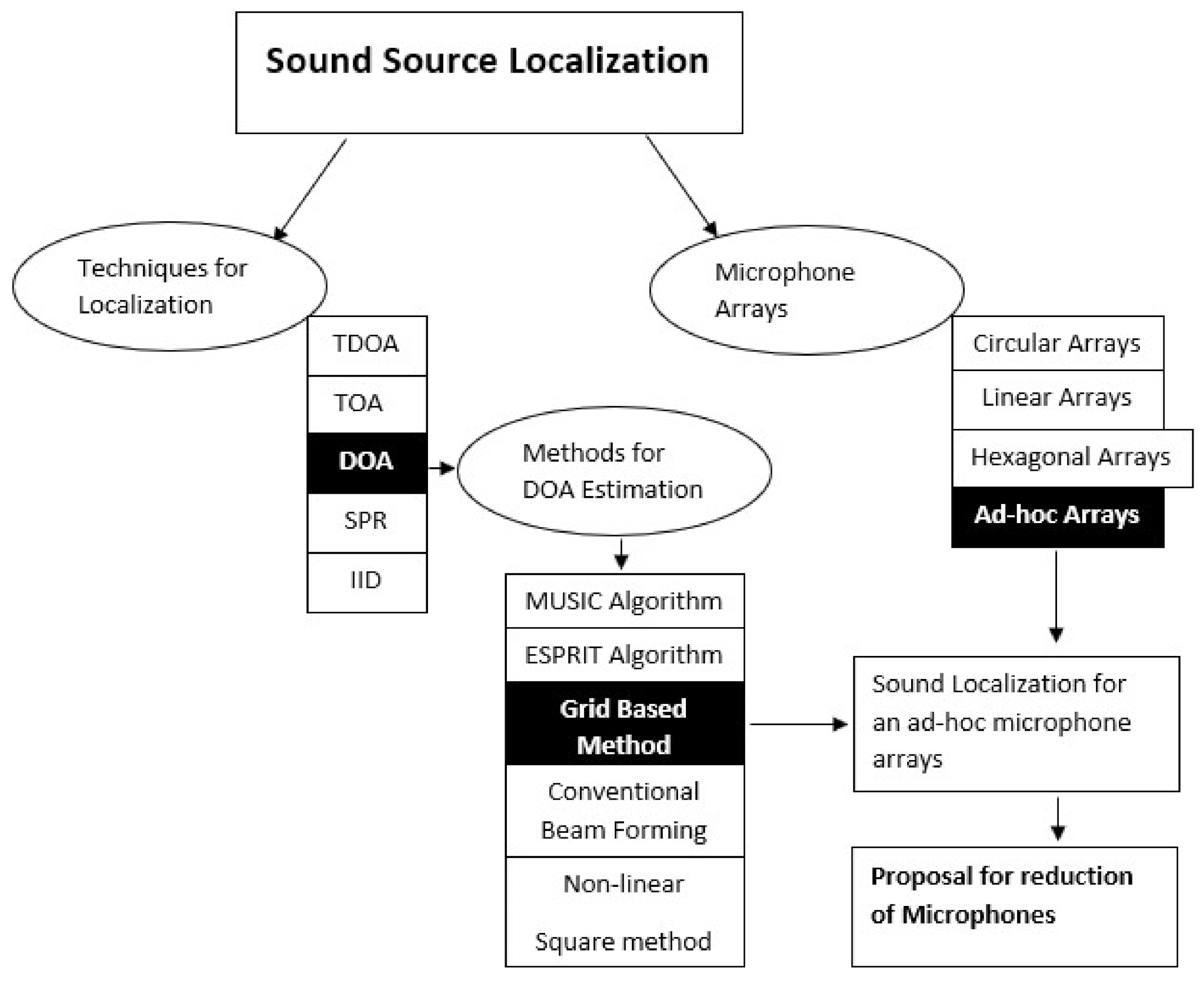

Sound Localization for Ad-Hoc Microphone Arrays

Abstract

1. Introduction

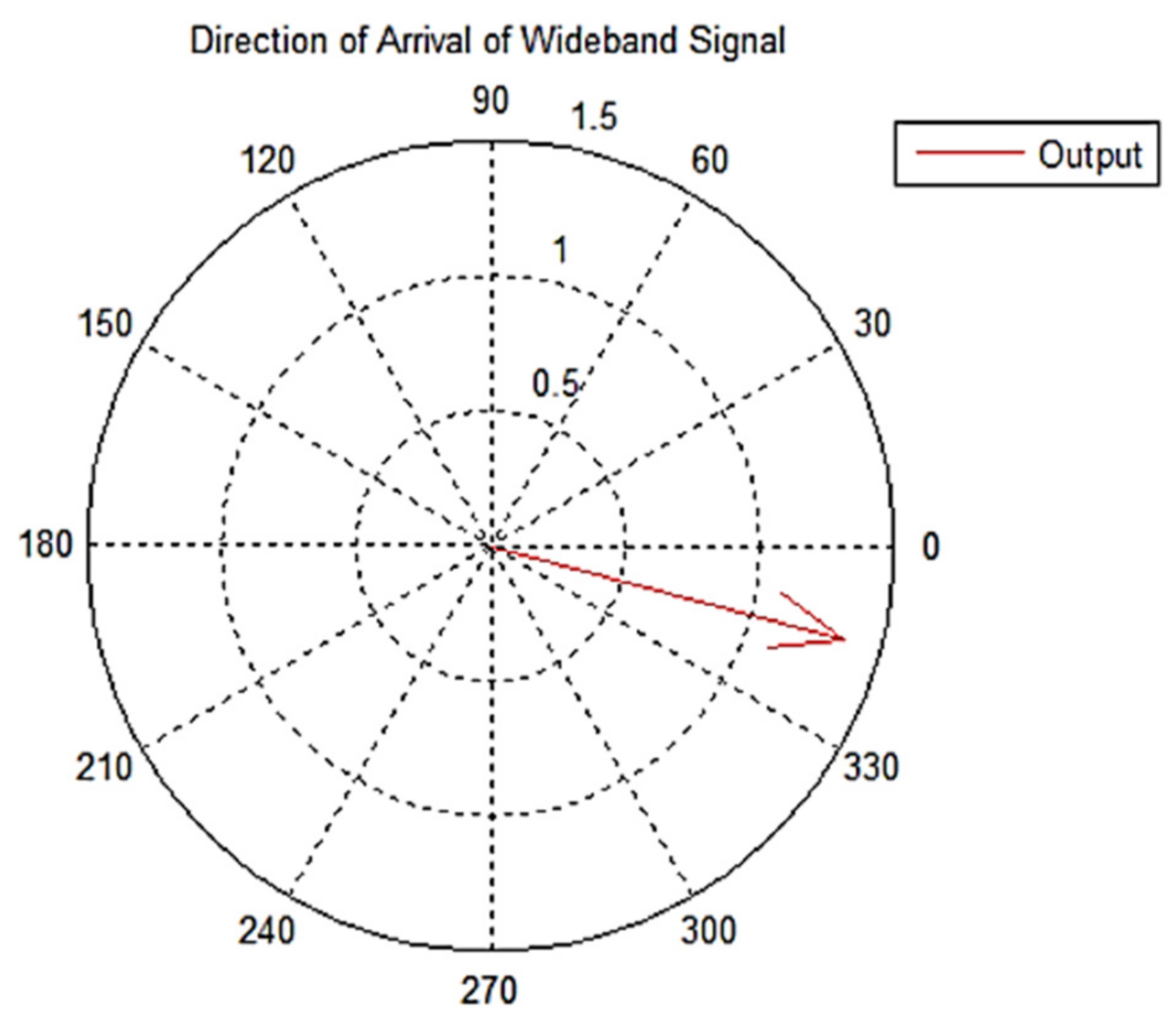

- (i) Direction of arrival estimates;

- (ii) Distance estimates.

- To test a sensor configuration that localizes a source with a minimum number of microphones, in order to reduce the complexity of the system and its resource consumption. Previously, this task was accomplished using six microphones [16]. This study reduces the number of microphones to four.

- To localize a source accurately by estimating both its DOA and its 3D position. The study proposes novel methods for calculating both.

- To develop a sound localization system with only two nodes, so that it is compact and ready to be integrated into devices such as hearing aids.

- To develop a system that works in reverberant environments with high noise content. This is achieved by adding noise to the input signals and calculating the error factor.
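The noise-injection step mentioned in the last objective can be sketched as follows. This is a minimal illustration only, assuming additive white Gaussian noise at a chosen signal-to-noise ratio; the function names `add_white_noise` and `measured_snr_db`, the 440 Hz test tone, and the 10 dB SNR are hypothetical choices, and the paper's own error-factor formula is not reproduced here.

```python
import math
import random


def add_white_noise(signal, snr_db, seed=None):
    """Return a copy of `signal` with white Gaussian noise at `snr_db` dB SNR.

    Assumption: the noise is additive, zero-mean, and Gaussian.
    """
    rng = random.Random(seed)
    signal_power = sum(s * s for s in signal) / len(signal)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in signal]


def measured_snr_db(clean, noisy):
    """Estimate the SNR in dB from a clean signal and its noisy version."""
    signal_power = sum(c * c for c in clean)
    noise_power = sum((n - c) ** 2 for c, n in zip(clean, noisy))
    return 10.0 * math.log10(signal_power / noise_power)


# Hypothetical test input: one second of a 440 Hz tone sampled at 8 kHz.
sample_rate = 8000
clean = [math.sin(2.0 * math.pi * 440.0 * k / sample_rate)
         for k in range(sample_rate)]
noisy = add_white_noise(clean, snr_db=10.0, seed=0)
print(f"measured SNR: {measured_snr_db(clean, noisy):.2f} dB")
```

Running the localization on `noisy` rather than `clean` and comparing the two estimates is one way to quantify how much the added noise degrades the result.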

2. Related Work

3. Materials and Methods

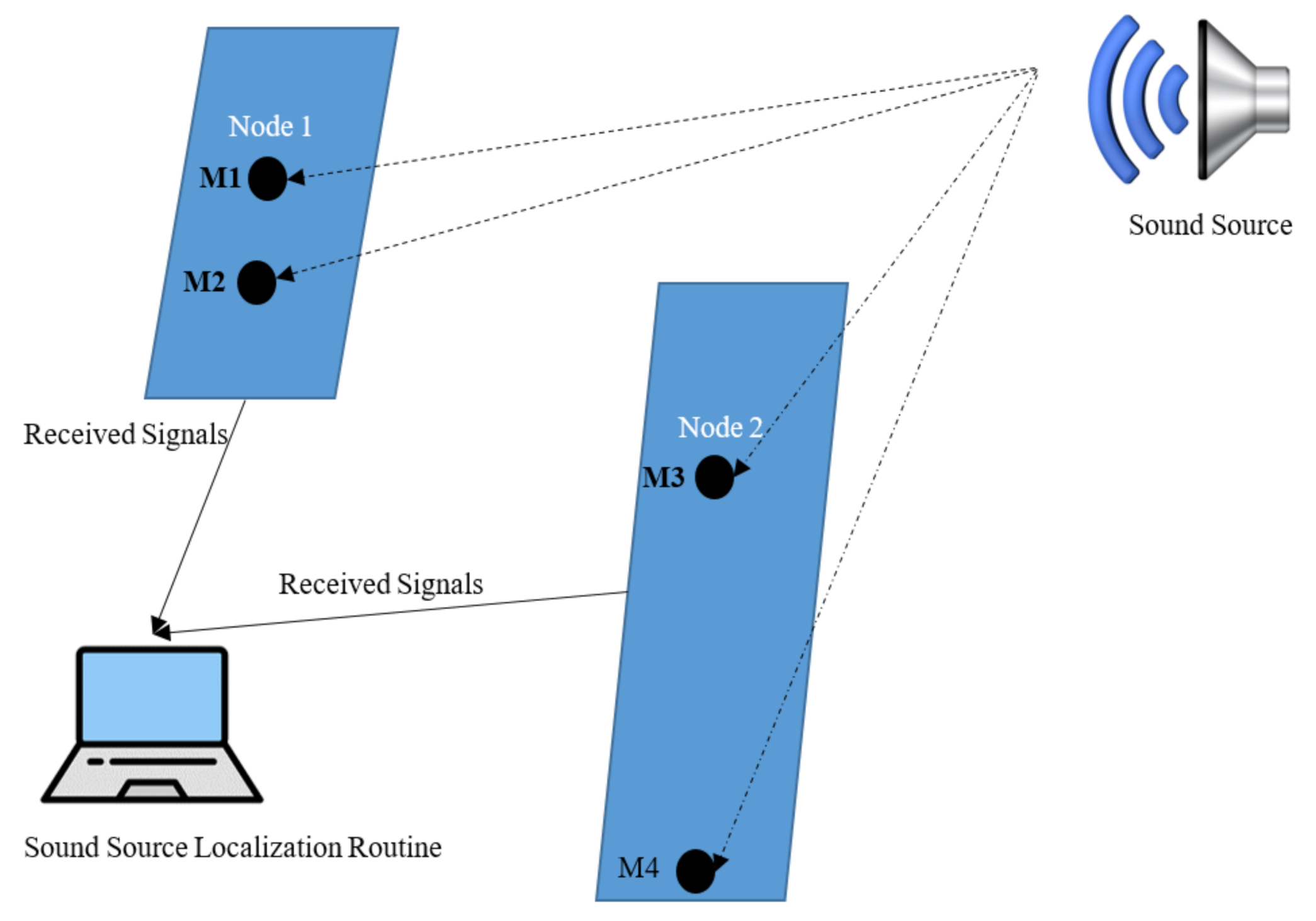

3.1. Materials

3.2. Assumptions

- Only one small, omnidirectional sound source is present.

- Reflection of sound waves takes place between the plane where the source and the neighboring items are located.

- There are no additional noise sources in the environment; the sound signal contains only the level of noise deemed acceptable for the experiment.

- All microphones have matching phase and amplitude responses.

- None of the microphones generates self-noise.

- The locations of the audio sensing devices are predetermined.

- Differences in the velocity of sound caused by fluctuations in physical characteristics such as pressure and temperature are ignored.

- The velocity of sound in air is taken to be 330 m/s.

- The sound waves arriving at the sensors are treated as planar rather than spherical when the distance between the source and the sensors exceeds the spacing between the microphones.
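To illustrate how the planar-wavefront and fixed-velocity assumptions are typically used, the sketch below applies the standard far-field relation for a two-microphone pair, τ = d cos(θ) / c, where θ is measured from the axis joining the microphones. This is the general textbook relation, not necessarily the exact formulation used in this paper; the function names and the 0.2 m spacing are hypothetical.

```python
import math

SPEED_OF_SOUND = 330.0  # m/s, the value assumed in the list above


def delay_from_doa(angle_deg, mic_spacing_m, c=SPEED_OF_SOUND):
    """Inter-microphone delay (s) for a plane wave arriving at `angle_deg`.

    The angle is measured from the line joining the two microphones.
    """
    return mic_spacing_m * math.cos(math.radians(angle_deg)) / c


def doa_from_delay(delay_s, mic_spacing_m, c=SPEED_OF_SOUND):
    """Direction of arrival (degrees) recovered from a measured delay."""
    ratio = c * delay_s / mic_spacing_m
    # Clamp to [-1, 1] so measurement noise cannot push acos out of range.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.acos(ratio))


# Hypothetical example: microphones 0.2 m apart, source at 60 degrees.
spacing = 0.2
delay = delay_from_doa(60.0, spacing)
print(f"delay = {delay * 1e6:.1f} microseconds")
print(f"recovered angle = {doa_from_delay(delay, spacing):.2f} degrees")
```

A single pair resolves the angle only up to a cone around the microphone axis, which is one reason additional microphones are needed for 3D localization.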

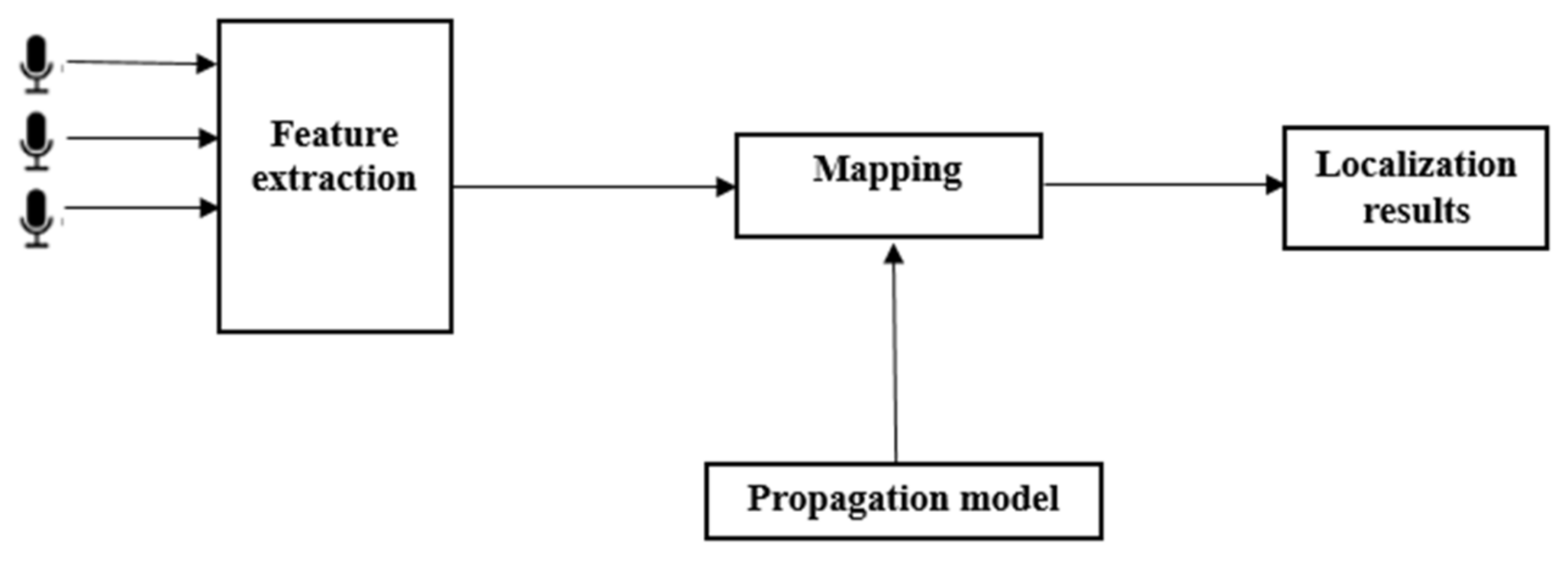

3.3. Background

3.3.1. Direction of Arrival Estimation

3.3.2. Model of a Signal

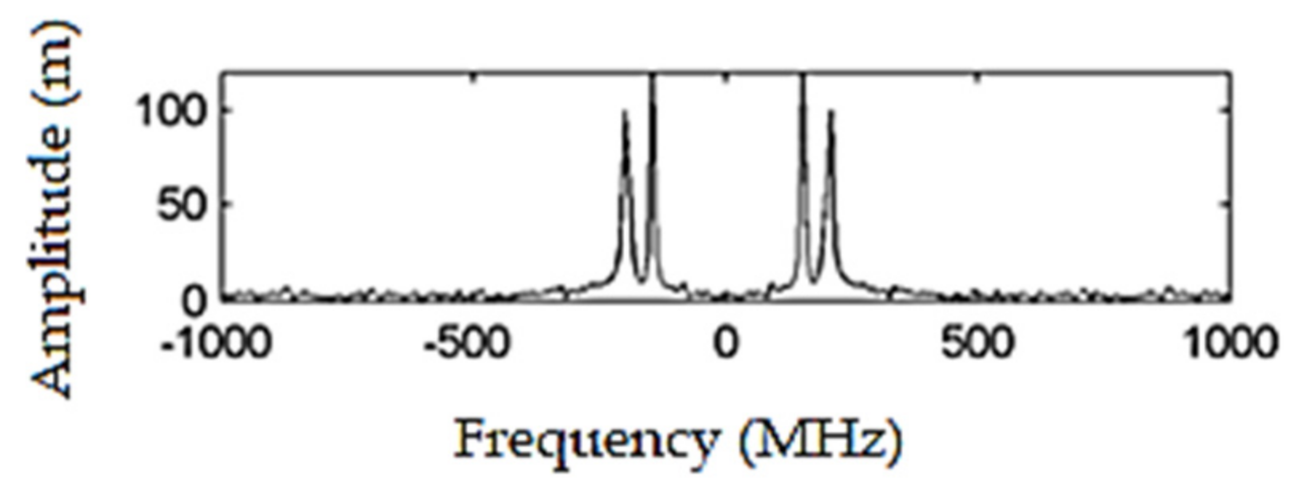

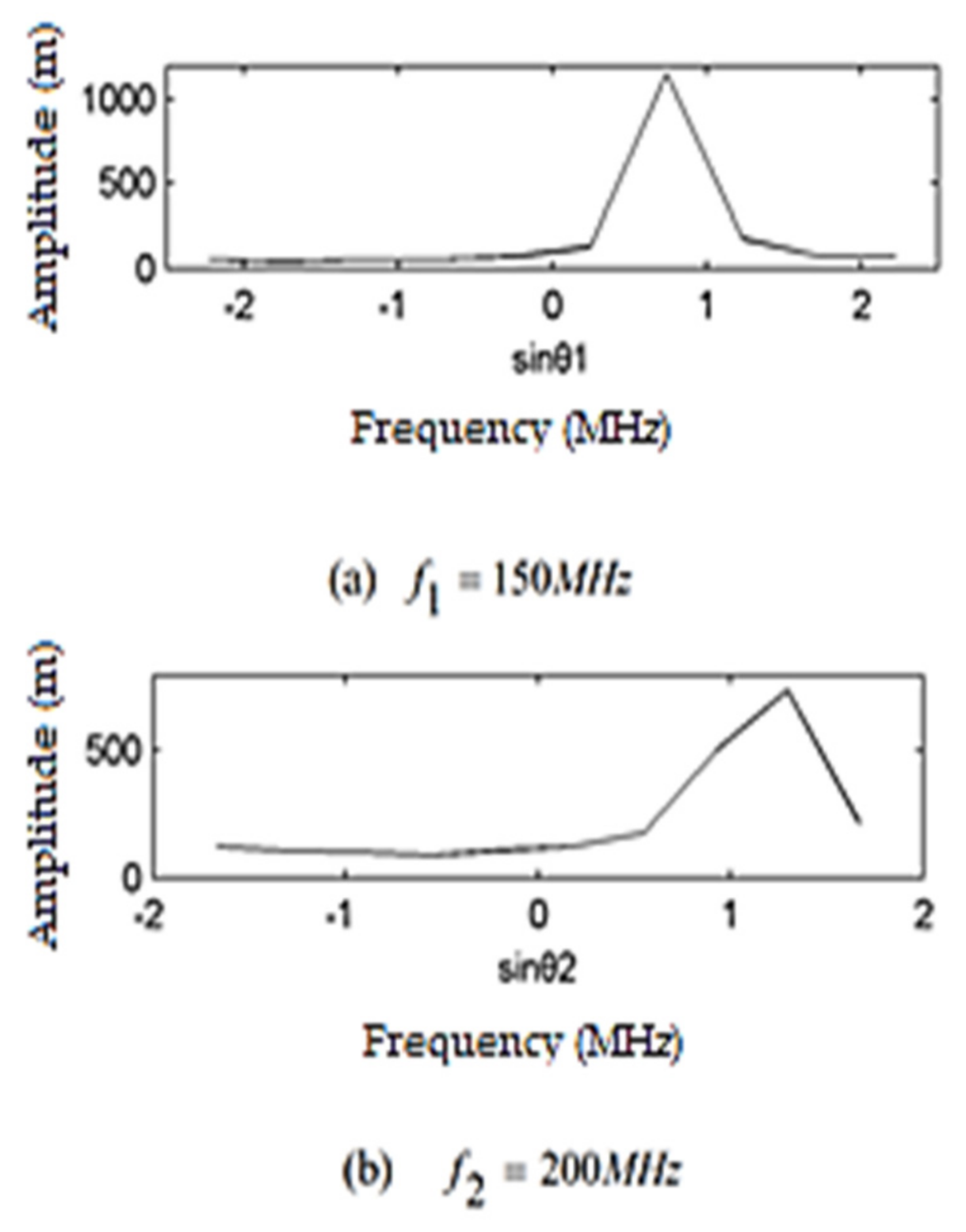

3.3.3. Spatial Frequency Distribution for the Estimation of DOA

3.3.4. DOA Calculation Using Phase Differences

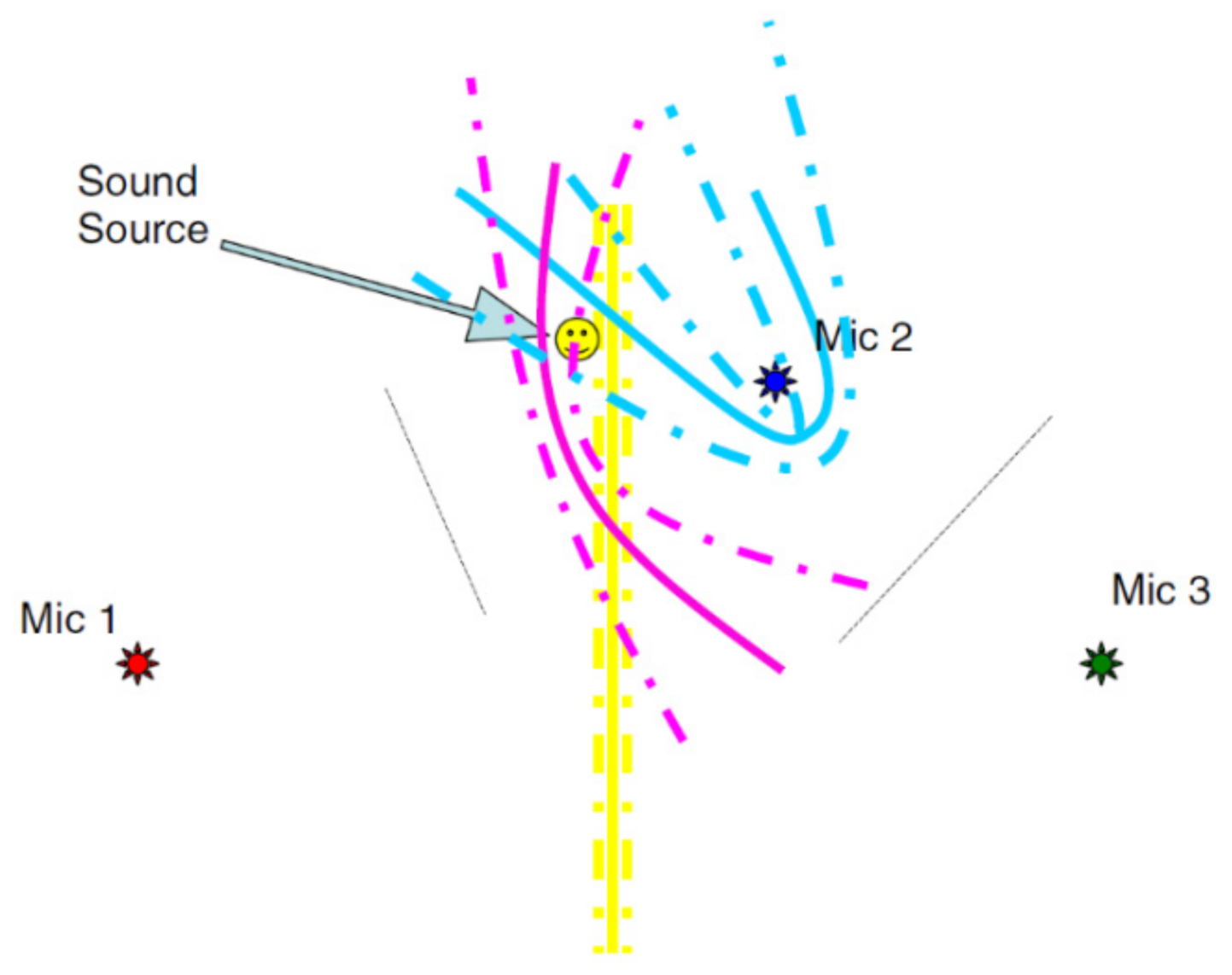

3.3.5. Source Localization in 3D Space

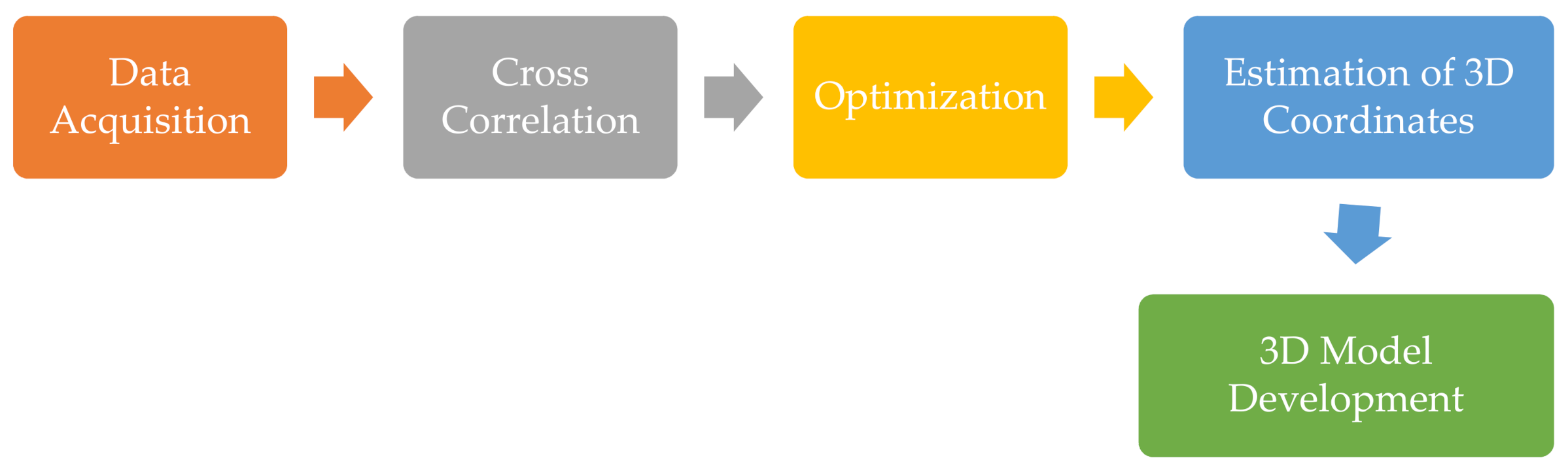

3.4. Proposed Method

3.4.1. Geometry of the Problem

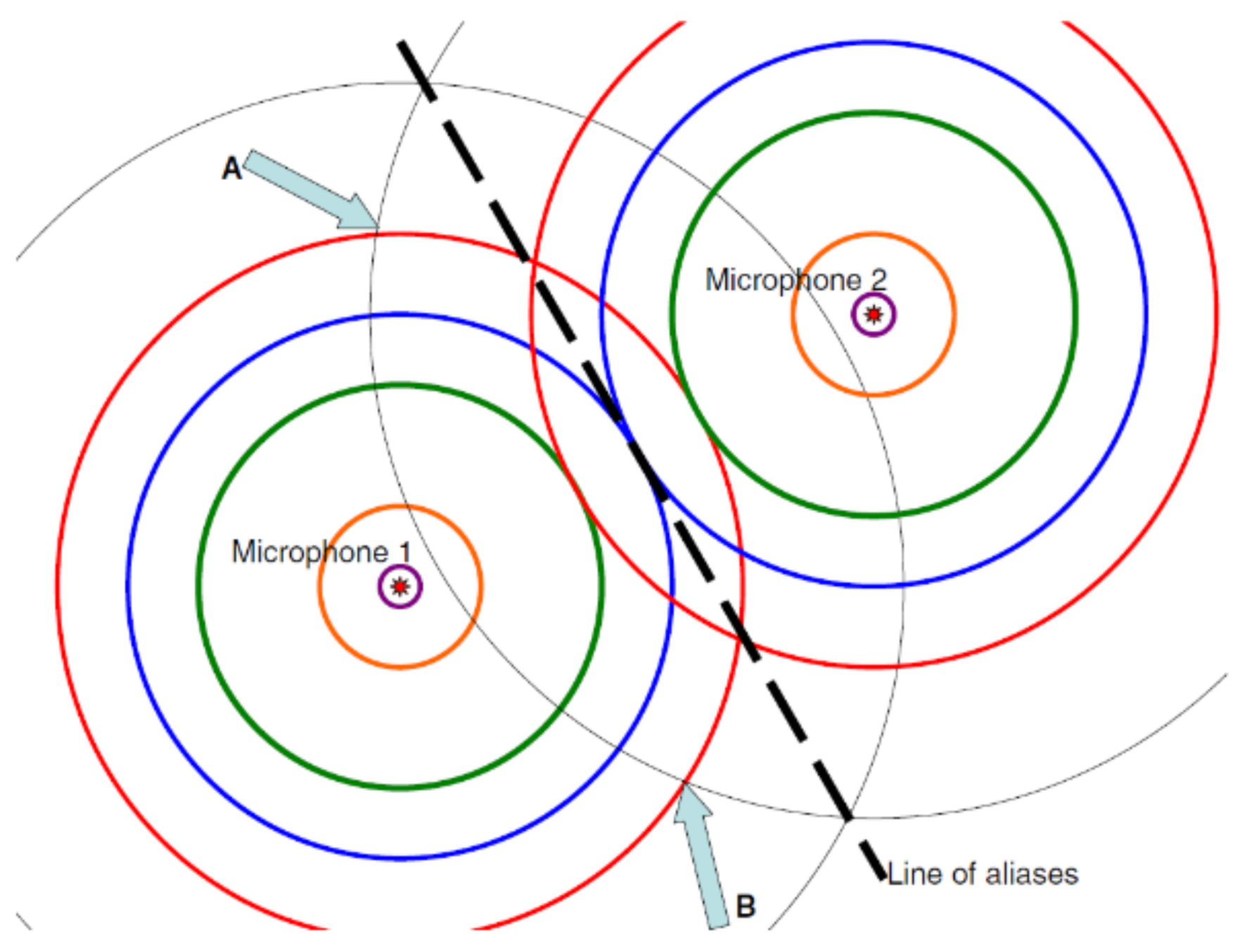

3.4.2. Cross Correlation for Detection of Delays

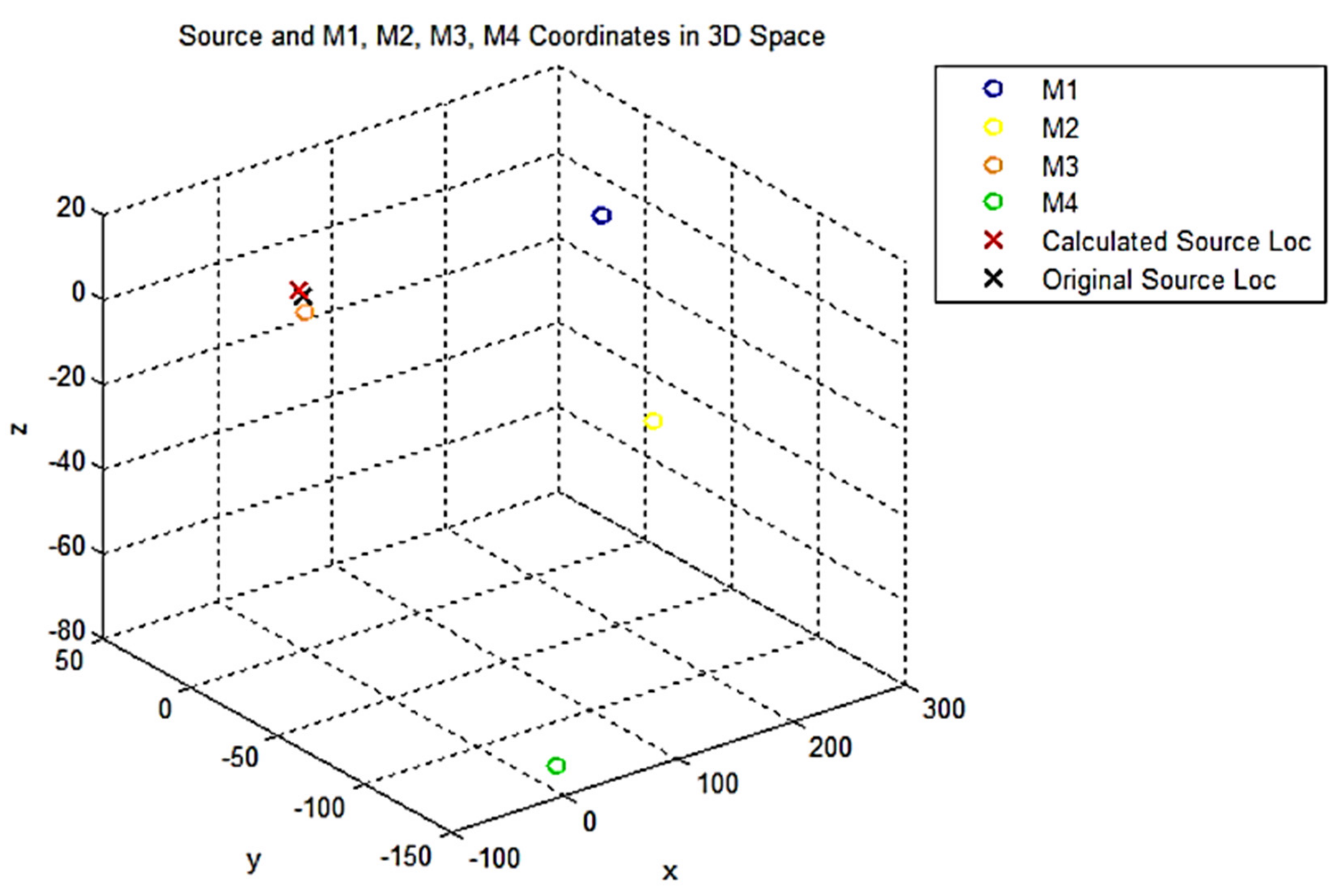

3.4.3. Sound Localization

4. Results

4.1. Experimental Set Up

4.2. Implementation

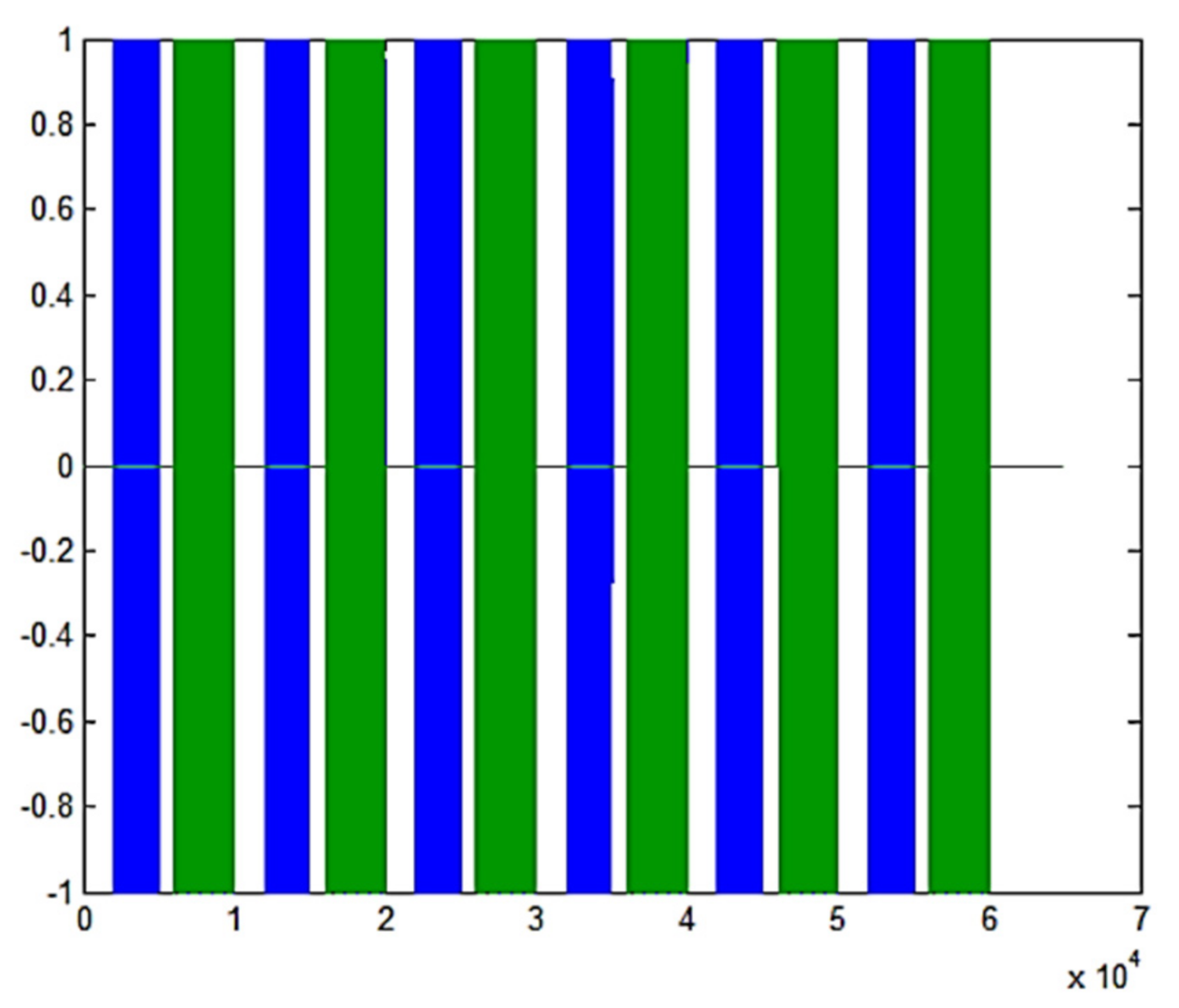

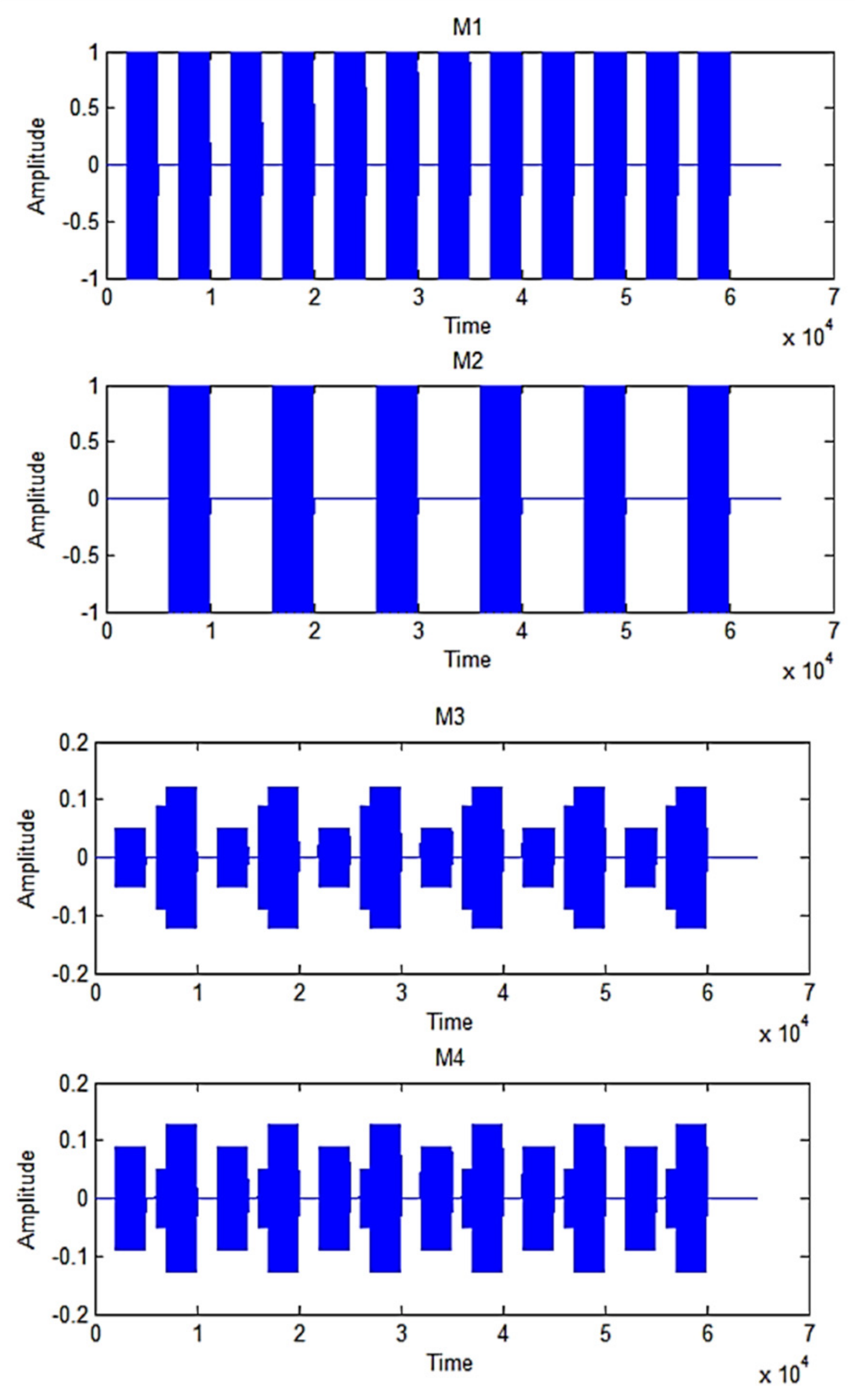

4.2.1. Developing Source Signal

4.2.2. Error Factor

4.3. Results

4.4. Comparison of Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Wu, K.; Khong, A.W. Sound Source Localization and Tracking. In Context Aware Human-Robot and Human-Agent Interaction; Springer: Cham, Switzerland, 2016; pp. 55–78. [Google Scholar]

- Alameda-Pineda, X.; Horaud, R. A Geometric Approach to Sound Source Localization from Time-Delay Estimates. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1082–1095. [Google Scholar] [CrossRef] [Green Version]

- Burgess, S.; Kuang, Y.; Åström, K. TOA sensor network self-calibration for receiver and transmitter spaces with difference in dimensions. Signal Process. 2015, 107, 33–42. [Google Scholar] [CrossRef] [Green Version]

- Chen, M.; Liu, Z.; He, L.-W.; Chou, P.; Zhang, Z. Energy-Based Position Estimation of Microphones and Speakers for Ad Hoc Microphone Arrays. In 2007 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics; Institute of Electrical and Electronics Engineers (IEEE): New York, NY, USA, 2007; pp. 22–25. [Google Scholar]

- Sheng, X.; Hu, Y.H. Energy Based Acoustic Source Localization. In Information Processing in Sensor Networks; Lecture Notes in Computer Science; Springer: Berlin, Germany, 2003; Volume 2634, pp. 285–300. [Google Scholar]

- Kundu, T. Acoustic source localization. Ultrasonics 2014, 54, 25–38. [Google Scholar] [CrossRef]

- Aston, J. Sound Localization and New Applications of Its Research; Stephen, F., Ed.; Applied Perception Projects and Service-Learning Project; Austin State University: Nacogdoches, TX, USA, 2003. [Google Scholar]

- Choi, H.; Park, J.; Lim, W.; Yang, Y.-M.; Choi, H.; et al. Active-beacon-based driver sound separation system for autonomous vehicle applications. Appl. Acoust. 2021, 171, 107549. [Google Scholar] [CrossRef]

- Sakanashi, R.; Ono, N.; Miyabe, S.; Yamada, T.; Makino, S. Speech enhancement with ad-hoc microphone array using single source activity. In Proceedings of the 2013 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, Kaohsiung, Taiwan, 29 October–1 November 2013; pp. 1–6. [Google Scholar]

- Hennecke, M.H.; Fink, G.A. Towards acoustic self-localization of ad hoc smartphone arrays. In Proceedings of the 2011 Joint Workshop on Hands-Free Speech Communication and Microphone Arrays, Edinburgh, Scotland, 30 May–1 June 2011. [Google Scholar]

- Lienhart, R.; Kozintsev, I.; Wehr, S.; Yeung, M. On the importance of exact synchronization for distributed audio processing. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, Hong Kong, China, 6–10 April 2003. [Google Scholar]

- Hassani, A.; Bertrand, A.; Moonen, M. Cooperative integrated noise reduction and node-specific direction-of-arrival estimation in a fully connected wireless acoustic sensor network. Signal Process. 2015, 107, 68–81. [Google Scholar] [CrossRef] [Green Version]

- Munawar, H.S.; Khan, S.I.; Anum, N.; Qadir, Z.; Kouzani, A.Z.; Parvez Mahmud, M.A. Post-Flood Risk Management and Resilience Building Practices: A Case Study. Appl. Sci. 2021, 11, 4823. [Google Scholar] [CrossRef]

- Yost, W.A.; Zhong, X. Sound source localization identification accuracy: Bandwidth dependencies. J. Acoust. Soc. Am. 2014, 136, 2737–2746. [Google Scholar] [CrossRef]

- Rascon, C.; Meza, I. Localization of sound sources in robotics: A review. Robot. Auton. Syst. 2017, 96, 184–210. [Google Scholar] [CrossRef]

- Song, T.; Chen, J.; Zhang, D.B.; Qu, T.S.; Wu, X.H. Sound source localization algorithm using microphone array with rigid body. In Proceedings of the 22nd International Congress on Acoustics, Buenos Aires, Argentina, 5–9 September 2016. [Google Scholar]

- Seco, F.; Jiménez, A.R.; Prieto, C.; Roa, J.; Koutsou, K. A survey of mathematical methods for indoor localization. In Proceedings of the 2009 IEEE International Symposium on Intelligent Signal Processing, Budapest, Hungary, 26–28 August 2009; pp. 9–14. [Google Scholar]

- Pertilä, P. Acoustic Source Localization in a Room Environment and at Moderate Distances; Tampere University of Technology: Tampere, Finland, 2009. [Google Scholar]

- Chen, J.; Benesty, J.; Huang, Y. Time delay estimation in room acoustic environments: An overview. EURASIP J. Adv. Signal Process. 2006. [Google Scholar] [CrossRef] [Green Version]

- Liu, R.; Wang, Y. Azimuthal source localization using interaural coherence in a robotic dog: Modeling and application. Robotica 2010, 28, 1013–1020. [Google Scholar] [CrossRef]

- Mandel, M.I.; Ellis, D.P.; Jebara, T. An EM algorithm for localizing multiple sound sources in reverberant environments. In Proceedings of the Twentieth Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 4–7 December 2006. [Google Scholar]

- Viste, H.; Evangelista, G. On the use of spatial cues to improve binaural source separation. In Proceedings of the 6th International Conference on Digital Audio Effects (DAFx-03), London, UK, 8–11 September 2003; pp. 209–213. [Google Scholar]

- Woodruff, J.; Wang, D. Binaural Localization of Multiple Sources in Reverberant and Noisy Environments. IEEE Trans. Audio Speech Lang. Process. 2012, 20, 1503–1512. [Google Scholar] [CrossRef]

- Kulaib, A.R.; Al-Mualla, M.; Vernon, D. 2D Binaural Sound Localization: For Urban Search and Rescue Robotics. In Mobile Robotics; World Scientific: Singapore, 2009; pp. 423–435. [Google Scholar]

- Deleforge, A.; Horaud, R. 2D sound-source localization on the binaural manifold. In Proceedings of the 2012 IEEE International Workshop on Machine Learning for Signal Processing, Santander, Spain, 23–26 September 2012; pp. 1–6. [Google Scholar]

- Keyrouz, F.; Diepold, K. An enhanced binaural 3D sound localization algorithm. In Proceedings of the 2006 IEEE International Symposium on Signal Processing and Information Technology, Vancouver, BC, Canada, 27–30 August 2006; pp. 662–665. [Google Scholar]

- Keyrouz, F.; Diepold, K.; Keyrouz, S. Humanoid binaural sound tracking using Kalman filtering and HRTFs. In Robot Motion and Control; Springer: London, UK, 2007; pp. 329–340. [Google Scholar]

- Shaukat, M.A.; Shaukat, H.R.; Qadir, Z.; Munawar, H.S.; Kouzani, A.Z.; Mahmud, M.A.P. Cluster Analysis and Model Comparison Using Smart Meter Data. Sensors 2021, 21, 3157. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.C.; Yao, K.; Hudson, R.E. Acoustic Source Localization and Beamforming: Theory and Practice. EURASIP J. Adv. Signal Process. 2003, 2003, 926837. [Google Scholar] [CrossRef] [Green Version]

- Sheng, X.; Hu, Y.-H. Maximum likelihood multiple-source localization using acoustic energy measurements with wireless sensor networks. IEEE Trans. Signal Process. 2005, 53, 44–53. [Google Scholar] [CrossRef] [Green Version]

- So, H.C.; Chan, Y.T.; Chan, F.K.W. Closed-Form Formulae for Time-Difference-of-Arrival Estimation. IEEE Trans. Signal Process. 2008, 56, 2614–2620. [Google Scholar] [CrossRef] [Green Version]

- Urruela, A.; Riba, J. Novel closed-form ML position estimator for hyperbolic location. In Proceedings of the 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing, Montreal, QC, Canada, 17–21 May 2004; Volume 2, p. ii-149. [Google Scholar]

- Strobel, N.; Rabenstein, R. Classification of time delay estimates for robust speaker localization. In Proceedings of the 1999 IEEE International Conference on Acoustics, Speech, and Signal Processing, Phoenix, AZ, USA, 15–19 March 1999; Volume 6, pp. 3081–3084. [Google Scholar]

- Zhang, C.; Zhang, Z.; Florêncio, D. Maximum likelihood sound source localization for multiple directional microphones. In Proceedings of the 2007 IEEE International Conference on Acoustics, Speech and Signal Processing-ICASSP’07, Honolulu, HI, USA, 15–20 April 2007; Volume 1, p. I-125. [Google Scholar]

- Zhang, C.; Florencio, D.; Ba, D.E.; Zhang, Z. Maximum Likelihood Sound Source Localization and Beamforming for Directional Microphone Arrays in Distributed Meetings. IEEE Trans. Multimed. 2008, 10, 538–548. [Google Scholar] [CrossRef]

- Smith, J.; Abel, J. Closed-form least-squares source location estimation from range-difference measurements. IEEE Trans. Acoust. Speech Signal Process. 1987, 35, 1661–1669. [Google Scholar] [CrossRef]

- Brandstein, M.; Adcock, J.; Silverman, H. A closed-form location estimator for use with room environment microphone arrays. IEEE Trans. Speech Audio Process. 1997, 5, 45–50. [Google Scholar] [CrossRef]

- Brandstein, M.S.; Silverman, H.F. A practical methodology for speech source localization with microphone arrays. Comput. Speech Lang. 1997, 11, 91–126. [Google Scholar] [CrossRef] [Green Version]

- Friedlander, B. A passive localization algorithm and its accuracy analysis. IEEE J. Ocean. Eng. 1987, 12, 234–245. [Google Scholar] [CrossRef]

- Huang, Y.; Benesty, J.; Elko, G.W.; Mersereau, R.M. Real-time passive source localization: A practical linear-correction least-squares approach. IEEE Trans. Speech Audio Process. 2001, 9, 943–956. [Google Scholar] [CrossRef]

- Canclini, A.; Antonacci, F.; Sarti, A.; Tubaro, S. Acoustic source localization with distributed asynchronous microphone networks. IEEE Trans. Audio Speech Lang. Process. 2012, 21, 439–443. [Google Scholar] [CrossRef]

- Omologo, M.; Svaizer, P.; Brutti, A.; Cristoforetti, L. Speaker localization in CHIL lectures: Evaluation criteria and results. In International Workshop on Machine Learning for Multimodal Interaction; Springer: Berlin, Germany, 2015; pp. 476–487. [Google Scholar]

- Mori, R.D. Spoken Dialogues with Computers; Academic Press, Inc.: Cambridge, MA, USA, 1997. [Google Scholar]

- Brutti, A.; Omologo, M.; Svaizer, P. Oriented global coherence field for the estimation of the head orientation in smart rooms equipped with distributed microphone arrays. In Proceedings of the Ninth European Conference on Speech Communication and Technology, Lisboa, Portugal, 4–8 September 2005. [Google Scholar]

- Brutti, A.; Omologo, M.; Svaizer, P. Speaker localization based on oriented global coherence field. In Proceedings of the Ninth International Conference on Spoken Language Processing, Pittsburgh, PA, USA, 17–21 September 2006. [Google Scholar]

- Padois, T.; Berry, A. Two and three-dimensional sound source localization with beamforming and several deconvolution techniques. Acta Acust. United Acust. 2017, 103, 392–400. [Google Scholar] [CrossRef]

- Lehmann, E.A.; Johansson, A.M. Prediction of energy decay in room impulse responses simulated with an image-source model. J. Acoust. Soc. Am. 2008, 124, 269–277. [Google Scholar] [CrossRef] [Green Version]

- Leyffer, S. Integrating SQP and branch-and-bound for mixed integer nonlinear programming. Comput. Optim. Appl. 2001, 18, 295–309. [Google Scholar] [CrossRef]

- Li, Z.; Duraiswami, R. A robust and self-reconfigurable design of spherical microphone array for multi-resolution beamforming. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, Philadelphia, PA, USA, 18–23 March 2005; Volume 4, p. iv-1137. [Google Scholar]

- Lim, J.S.; Pang, H.S. Time delay estimation method based on canonical correlation analysis. Circuits Syst. Signal Process. 2013, 32, 2527–2538. [Google Scholar] [CrossRef]

- Qadir, Z.; Ever, E.; Batunlu, C. Use of Neural Network Based Prediction Algorithms for Powering Up Smart Portable Accessories. Neural Process. Lett. 2021, 53, 721–756. [Google Scholar] [CrossRef]

- Ferguson, E.L.; Williams, S.B.; Jin, C.T. Sound source localization in a multipath environment using convolutional neural networks. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2386–2390. [Google Scholar]

- An, I.; Son, M.; Manocha, D.; Yoon, S.E. Reflection-aware sound source localization. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–26 May 2018; pp. 66–73. [Google Scholar]

- Grondin, F.; Michaud, F. Lightweight and optimized sound source localization and tracking methods for open and closed microphone array configurations. Robot. Auton. Syst. 2019, 113, 63–80. [Google Scholar] [CrossRef] [Green Version]

- Opochinsky, R.; Laufer-Goldshtein, B.; Gannot, S.; Chechik, G. Deep ranking-based sound source localization. In Proceedings of the 2019 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 20–23 October 2019; pp. 283–287. [Google Scholar]

- Evers, C.; Löllmann, H.W.; Mellmann, H.; Schmidt, A.; Barfuss, H.; Naylor, P.A.; Kellermann, W. The LOCATA challenge: Acoustic source localization and tracking. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 1620–1643. [Google Scholar] [CrossRef]

- Johnson, D.H.; Dudgeon, D.E. Array Signal Processing, Concepts and Techniques; Prentice Hall: Englewood Cliffs, NJ, USA, 1993; ISBN 978-0130485137. [Google Scholar]

- Leclère, Q.; Pereira, A.; Bailly, C.; Antoni, J.; Picard, C. A unified formalism for acoustic imaging based on microphone array measurements. Int. J. Aeroacoust. 2017, 16, 431–456. [Google Scholar] [CrossRef] [Green Version]

- Van Veen, B.; Buckley, K. Beamforming: A versatile approach to spatial filtering. IEEE ASSP Mag. 1988, 5, 4–24. [Google Scholar] [CrossRef]

- Merino-Martínez, R.; Sijtsma, P.; Snellen, M.; Ahlefeldt, T.; Antoni, J.; Bahr, C.J.; Blacodon, D.; Ernst, D.; Finez, A.; Funke, S.; et al. A review of acoustic imaging methods using phased microphone arrays. CEAS Aeronaut. J. 2019, 10, 197–230. [Google Scholar] [CrossRef] [Green Version]

- Cobos, M.; Antonacci, F.; Alexandridis, A.; Mouchtaris, A.; Lee, B. A Survey of Sound Source Localization Methods in Wireless Acoustic Sensor Networks. Wirel. Commun. Mob. Comput. 2017, 2017, 1–24. [Google Scholar] [CrossRef]

- Stoica, P.; Sharman, K.C. Maximum likelihood methods for direction-of-arrival estimation. IEEE Trans. Acoust. Speech Signal Process. 1990, 38, 1132–1143. [Google Scholar] [CrossRef]

- Xiong, B.; Li, G.; Lu, C. DOA Estimation Based on Phase-difference. In Proceedings of the 2006 8th International Conference on Signal Processing, ICSP, Guilin, China, 16–20 November 2006. [Google Scholar]

- Biniyam, T.T. Sound Source Localization and Separation; Macalester College: St. Paul, MN, USA, 2006. [Google Scholar]

- Jin, J.; Jin, S.; Lee, S.; Kim, H.; Choi, J.; Kim, M.; Jeon, J. Real-time Sound Localization Using Generalized Cross Correlation Based on 0.13 μm CMOS Process. JSTS J. Semicond. Technol. Sci. 2014, 14, 175–183. [Google Scholar] [CrossRef]

- Jeffrey, T. LabVIEW for Everyone: Graphical Programming Made Easy and Fun, 3rd ed.; Kring, J., Ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2006; ISBN 0131856723. [Google Scholar]

- Comon, P.; Jutten, C. Handbook of Blind Source Separation, Independent Component Analysis and Applications; Academic Press: Oxford, UK, 2010; ISBN 978-0-12-374726-6. [Google Scholar]

- Risoud, M.; Hanson, J.-N.; Gauvrit, F.; Renard, C.; Lemesre, P.-E.; Bonne, N.-X.; Vincent, C. Sound source localization. Eur. Ann. Otorhinolaryngol. Head Neck Dis. 2018, 135, 259–264. [Google Scholar] [CrossRef]

- Pavlidi, D.; Griffin, A.; Puigt, M.; Mouchtaris, A. Real-Time Multiple Sound Source Localization and Counting Using a Circular Microphone Array. IEEE Trans. Audio Speech Lang. Process. 2013, 21, 2193–2206. [Google Scholar] [CrossRef] [Green Version]

- Yalta, N.; Nakadai, K.; Ogata, T. Sound Source Localization Using Deep Learning Models. J. Robot. Mechatron. 2017, 29, 37–48. [Google Scholar] [CrossRef]

- Tuma, J.; Janecka, P.; Vala, M.; Richter, L. Sound source localization. In Proceedings of the 13th International Carpathian Control Conference (ICCC), High Tatras, Slovakia, 28–31 May 2012; pp. 740–743. [Google Scholar]

- Cobos, M.; Marti, A.; Lopez, J.J. A Modified SRP-PHAT Functional for Robust Real-Time Sound Source Localization with Scalable Spatial Sampling. IEEE Signal Process. Lett. 2010, 18, 71–74. [Google Scholar] [CrossRef]

- Zhao, S.; Ahmed, S.; Liang, Y.; Rupnow, K.; Chen, D.; Jones, D.L. A real-time 3D sound localization system with miniature microphone array for virtual reality. In Proceedings of the 2012 7th IEEE Conference on Industrial Electronics and Applications (ICIEA), Singapore, 18–20 July 2012; pp. 1853–1857. [Google Scholar] [CrossRef]

- Khan, S.I.; Qadir, Z.; Munawar, H.S.; Nayak, S.R.; Budati, A.K.; Verma, K.D.; Prakash, D. UAVs path planning architecture for effective medical emergency response in future networks. Phys. Commun. 2021, 47, 101337. [Google Scholar] [CrossRef]

- Munawar, H.S. Concepts, Methodologies and Applications. Flood Disaster Management: Risks, Technologies, and Future Directions. In Machine Vision Inspection Systems: Image Processing, Concepts, Methodologies and Applications; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2020; Volume 1, pp. 115–146. [Google Scholar]

- Munawar, H.S. Concepts, Methodologies and Applications. Image and Video Processing for Defect Detection in Key Infrastructure. In Machine Vision Inspection Systems: Image Processing, Concepts, Methodologies and Applications; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2020; Volume 1, pp. 159–177. [Google Scholar]

- Munawar, H.S.; Awan, A.A.; Khalid, U.; Munawar, S.; Maqsood, A. Revolutionizing Telemedicine by Instilling H.265. Int. J. Image Graph. Signal Process. 2017, 9, 20–27. [Google Scholar] [CrossRef] [Green Version]

- Munawar, H.S.; Hammad, A.; Ullah, F.; Ali, T.H. After the flood: A novel application of image processing and machine learning for post-flood disaster management. In Proceedings of the 2nd International Conference on Sustainable Development in Civil Engineering (ICSDC 2019), Jamshoro, Pakistan, 5–7 December 2019; pp. 5–7. [Google Scholar]

- Munawar, H.S. An Overview of Reconfigurable Antennas for Wireless Body Area Networks and Possible Future Prospects. Int. J. Wirel. Microw. Technol. 2020, 10, 1–8. [Google Scholar] [CrossRef] [Green Version]

- Munawar, H.S. Reconfigurable Origami Antennas: A Review of the Existing Technology and its Future Prospects. Int. J. Wirel. Microw. Technol. 2020, 10, 34–38. [Google Scholar] [CrossRef]

- Munawar, H.S.; Maqsood, A. Isotropic Surround Suppression based Linear Target Detection using Hough Transform. Int. J. Adv. Appl. Sci. 2017. [Google Scholar] [CrossRef]

- Munawar, H.S.; Khalid, U.; Jilani, R.; Maqsood, A. Version Management by Time Based Approach in Modern Era. Int. J. Educ. Manag. Eng. 2017, 7, 13–22. [Google Scholar] [CrossRef] [Green Version]

- Munawar, H.S.; Qayyum, S.; Ullah, F.; Sepasgozar, S. Big Data and Its Applications in Smart Real Estate and the Disaster Management Life Cycle: A Systematic Analysis. Big Data Cogn. Comput. 2020, 4, 4. [Google Scholar] [CrossRef] [Green Version]

- Munawar, H.S.; Zhang, J.; Li, H.; Mo, D.; Chang, L. Mining multispectral aerial images for automatic detection of strategic bridge locations for disaster relief missions. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Macau, China, 14–17 April 2019; Springer: Cham, Switzerland, 2019; pp. 189–200. [Google Scholar]

- Physics@UNSW. Chapter 6: Quantifying Sound. Physclips, 2021. Available online: https://www.animations.physics.unsw.edu.au/waves-sound/quantifying/ (accessed on 18 January 2021).

- Sijtsma, P.; Stoker, R. Determination of absolute contributions of aircraft noise components using fly-over array measurements. In Proceedings of the 10th AIAA/CEAS Aeroacoustics Conference, Manchester, UK, 10–12 May 2004; p. 2958. [Google Scholar]

- Sijtsma, P. Phased array beamforming applied to wind tunnel and fly-over tests. In Proceedings of the SAE Brasil International Noise and Vibration Congress, Florianópolis, Brazil, 17–19 October 2010. [Google Scholar]

- Merino-Martínez, R.; Sijtsma, P.; Carpio, A.R.; Zamponi, R.; Luesutthiviboon, S.; Malgoezar, A.M.; Snellen, M.; Schram, C.; Simons, D.G. Integration methods for distributed sound sources. Int. J. Aeroacoustics 2019, 18, 444–469. [Google Scholar] [CrossRef]

- Qadir, Z.; Al-Turjman, F.; Khan, M.A.; Nesimoglu, T. ZIGBEE Based Time and Energy Efficient Smart Parking System Using IOT. In Proceedings of the 2018 18th Mediterranean Microwave Symposium (MMS), Istanbul, Turkey, 31 October–2 November 2018; pp. 295–298. [Google Scholar] [CrossRef]

- Qadir, Z.; Tafadzwa, V.; Rashid, H.; Batunlu, C. Smart Solar Micro-Grid Using ZigBee and Related Security Challenges. In Proceedings of the 2018 18th Mediterranean Microwave Symposium (MMS), Istanbul, Turkey, 31 October–2 November 2018; pp. 299–302. [Google Scholar] [CrossRef]

- Qadir, Z.; Ullah, F.; Munawar, H.S.; Al-Turjman, F. Addressing disasters in smart cities through UAVs path planning and 5G communications: A systematic review. Comput. Commun. 2021, 168, 114–135. [Google Scholar] [CrossRef]

- Qadir, Z.; Khan, S.I.; Khalaji, E.; Munawar, H.S.; Al-Turjman, F.; Mahmud, M.P.; Kouzani, A.Z.; Le, K. Predicting the energy output of hybrid PV–wind renewable energy system using feature selection technique for smart grids. Energy Rep. 2021. [Google Scholar] [CrossRef]

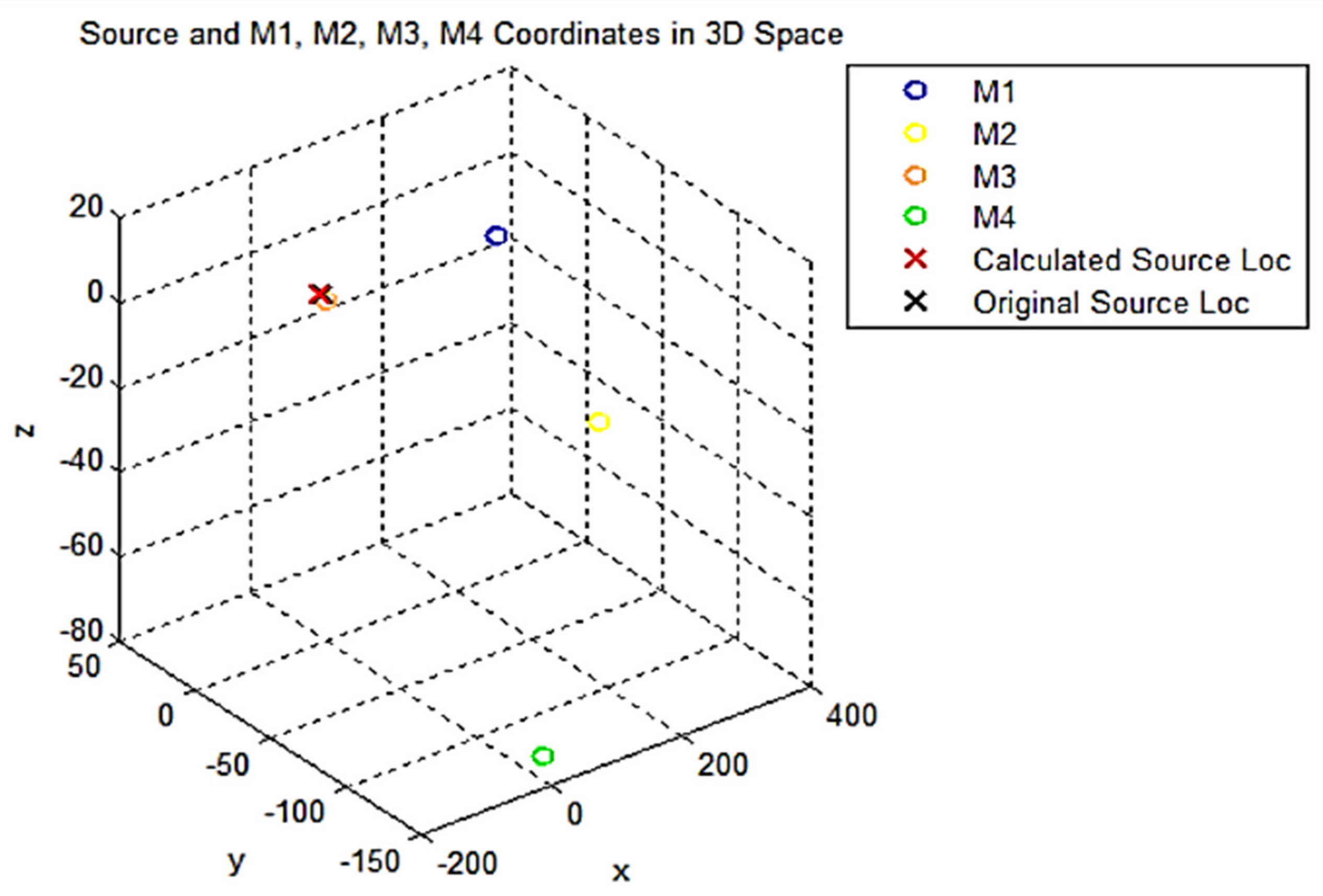

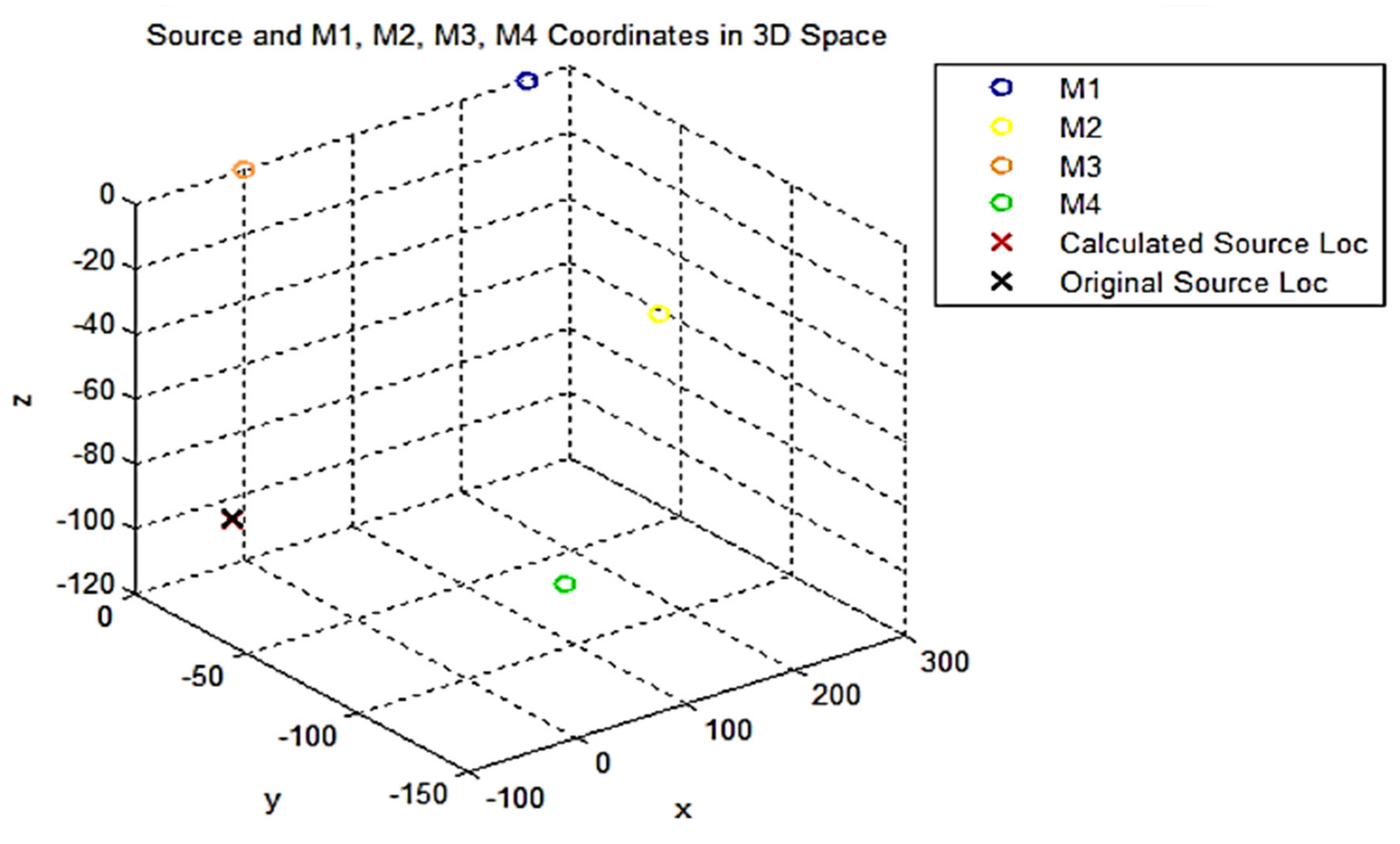

| No. | Sampling Rate | Original Source Location | Calculated Source Location | DOA (Degrees) | Condition Number |
|---|---|---|---|---|---|
| 1. | 100 | (30, 100, 10) | (30.0132, 100.4015, 10.0345) | 75.4 | 1.2557 |
| 2. | 5000 | (−2, 3, 1) | (−2.1378, 3.6586, 1.1087) | 50 | 0.999 |
| 3. | 10,000 | (−30, −10, −100) | (−29.7702, −9.3940, −100.8523) | 45.6 | 0.3350 |
| 4. | 50,000 | (0, 1, 2) | (0.0010, 1.2150, 2.0050) | 26.1 | 1.24 |
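As a quick way to read the table above, the following sketch computes the Euclidean distance between each original and calculated source location. The coordinates are copied from the table; the distance is an illustrative metric chosen here and is not the paper's error factor, and it is expressed in whatever length unit the table coordinates use.

```python
import math

# (original, calculated) source locations copied from the table above.
rows = [
    ((30.0, 100.0, 10.0), (30.0132, 100.4015, 10.0345)),
    ((-2.0, 3.0, 1.0), (-2.1378, 3.6586, 1.1087)),
    ((-30.0, -10.0, -100.0), (-29.7702, -9.3940, -100.8523)),
    ((0.0, 1.0, 2.0), (0.0010, 1.2150, 2.0050)),
]


def position_error(original, calculated):
    """Euclidean distance between the true and estimated 3D positions."""
    return math.dist(original, calculated)


errors = [position_error(original, calculated) for original, calculated in rows]
for number, error in enumerate(errors, start=1):
    print(f"row {number}: position error = {error:.4f}")
```

In every row the largest coordinate deviation is in the second or third component, which the per-row distance summarizes in a single number.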

| No. | Method | Measured Factors | No. of Microphones | Results | Ref. |
|---|---|---|---|---|---|
| 1. | Interaural time and level difference, head-related transfer function | Azimuth, elevation, distance | 0 | Uncertainty = 3–4 degrees | [69] |
| 2. | Time frequency | DOA | 3 | Success score = 61.62% | [70] |
| 3. | Deep learning | DOA | 7 | Accuracy = 71.46% | [71] |
| 4. | Beamforming | TDOA, distance | 3 | Error = 0.11% | [72] |
| 5. | GCC SRP-PHAT | DOA | 4 | RMSE = 0.31 | [73] |
| 6. | Proposed | DOA, 3D coordinates | 4 | Accuracy = 96.77%, error factor = 3.8% | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liaquat, M.U.; Munawar, H.S.; Rahman, A.; Qadir, Z.; Kouzani, A.Z.; Mahmud, M.A.P. Sound Localization for Ad-Hoc Microphone Arrays. Energies 2021, 14, 3446. https://doi.org/10.3390/en14123446
