Increasing the Energy-Efficiency in Vacuum-Based Package Handling Using Deep Q-Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Use Case Definition for Vacuum-Based Package Handling and Experimental Setup

2.2. Deep Q-Learning Implementation and Process Control Architecture

2.3. Design and Conduction of Experiments

3. Results

4. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


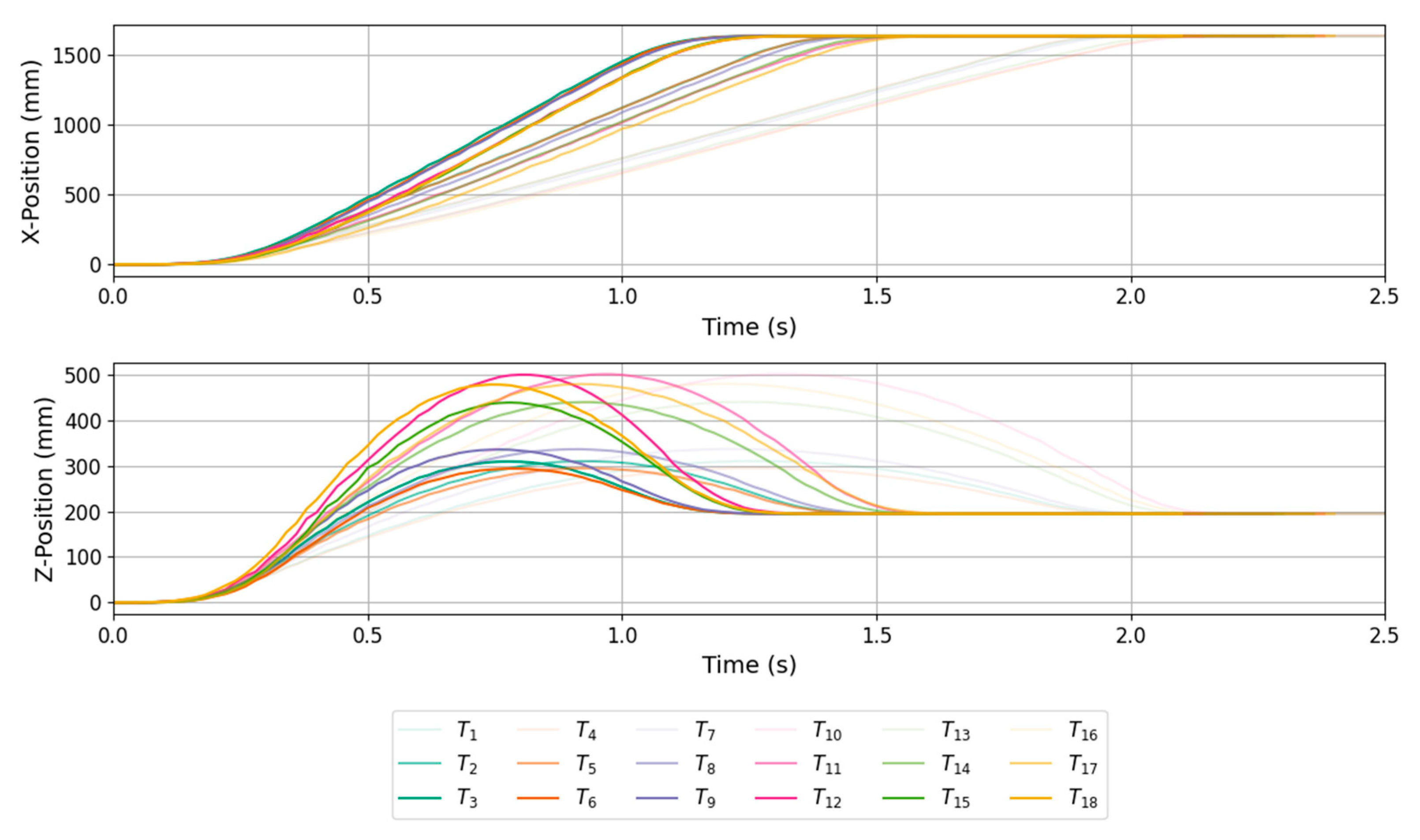

| Trajectory No. | States [a_x,max, a_+z,max, a_−z,max] (m/s²) | Reference Air Consumption (L) |
|---|---|---|
| 1 | [5.3, 2.5, 0.0], [0.3, 0.0, 0.6], [5.8, 1.6, 0.0] | 3.262 |
| 2 | [7.7, 3.6, 0.0], [0.6, 0.0, 1.5], [8.0, 2.1, 0.9] | 2.507 |
| 3 | [9.2, 4.3, 0.0], [0.8, 0.0, 2.6], [9.9, 2.3, 0.0] | 2.114 |
| 4 | [5.3, 2.4, 0.0], [0.3, 0.0, 0.6], [5.8, 1.4, 0.0] | 3.262 |
| 5 | [7.4, 3.7, 0.0], [0.6, 0.0, 1.4], [8.0, 1.9, 0.0] | 2.507 |
| 6 | [9.1, 4.0, 0.0], [0.6, 0.0, 2.3], [10.0, 2.0, 0.0] | 2.144 |
| 7 | [5.0, 2.9, 0.0], [0.4, 0.0, 0.7], [5.6, 1.8, 0.0] | 3.292 |
| 8 | [7.0, 4.1, 0.0], [0.7, 0.0, 1.6], [8.0, 2.2, 0.0] | 2.537 |
| 9 | [8.9, 5.0, 0.0], [0.4, 0.0, 2.8], [9.9, 2.6, 0.0] | 2.144 |
| 10 | [4.4, 3.5, 0.0], [0.4, 0.0, 1.3], [4.6, 3.5, 0.0] | 3.533 |
| 11 | [6.0, 5.0, 0.0], [1.4, 0.0, 2.9], [6.6, 4.6, 0.0] | 2.688 |
| 12 | [7.4, 5.8, 0.0], [1.4, 0.0, 5.2], [8.4, 5.5, 0.0] | 2.265 |
| 13 | [4.3, 3.7, 0.0], [0.5, 0.0, 1.0], [5.2, 2.6, 0.0] | 3.443 |
| 14 | [5.9, 4.8, 0.0], [0.9, 0.0, 0.9], [7.1, 3.5, 0.0] | 2.597 |
| 15 | [7.7, 5.8, 0.0], [0.6, 0.0, 3.9], [9.7, 4.4, 0.0] | 2.235 |
| 16 | [3.8, 3.9, 0.0], [1.2, 0.0, 1.2], [5.1, 2.8, 0.0] | 3.473 |
| 17 | [5.4, 5.4, 0.0], [1.2, 0.0, 2.5], [7.2, 3.6, 0.0] | 2.688 |
| 18 | [6.6, 6.3, 0.0], [2.4, 0.0, 4.5], [9.4, 4.4, 0.0] | 2.265 |

| Category | Trajectory Indices |
|---|---|
| slow | 1, 4, 7, 10, 13, 16 |
| medium | 2, 5, 8, 11, 14, 17 |
| fast | 3, 6, 9, 12, 15, 18 |
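As a quick sanity check on the two tables above, the following minimal sketch averages the reference air consumption within each speed category. The numbers are copied from the tables; the names `REFERENCE_AIR_L`, `CATEGORIES`, and `mean_reference_by_category` are illustrative and do not come from the article.

```python
from statistics import mean

# Reference air consumption (L) per trajectory number, copied from the trajectory table.
REFERENCE_AIR_L = {
    1: 3.262, 2: 2.507, 3: 2.114,
    4: 3.262, 5: 2.507, 6: 2.144,
    7: 3.292, 8: 2.537, 9: 2.144,
    10: 3.533, 11: 2.688, 12: 2.265,
    13: 3.443, 14: 2.597, 15: 2.235,
    16: 3.473, 17: 2.688, 18: 2.265,
}

# Speed categories, copied from the category table.
CATEGORIES = {
    "slow": [1, 4, 7, 10, 13, 16],
    "medium": [2, 5, 8, 11, 14, 17],
    "fast": [3, 6, 9, 12, 15, 18],
}


def mean_reference_by_category(air, categories):
    """Return the mean reference air consumption (L) for each category."""
    return {
        name: mean(air[i] for i in indices)
        for name, indices in categories.items()
    }


if __name__ == "__main__":
    means = mean_reference_by_category(REFERENCE_AIR_L, CATEGORIES)
    for name, value in means.items():
        print(f"{name}: {value:.3f} L")
```

With the tabulated values, the slow trajectories have the highest mean reference consumption and the fast trajectories the lowest.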

| Fold No. | Training Indices | Test Indices |
|---|---|---|
| 1 | 1, 2, 3, 4, 5, 6, 7, 9, 11, 12, 13, 14, 15, 16, 17 | 8, 10, 18 |
| 2 | 1, 2, 3, 4, 5, 7, 8, 9, 10, 11, 12, 14, 15, 16, 18 | 6, 13, 17 |
| 3 | 1, 3, 4, 5, 6, 8, 9, 10, 11, 13, 14, 15, 16, 17, 18 | 2, 7, 12 |
| 4 | 2, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 15, 16, 17, 18 | 1, 3, 14 |
| 5 | 1, 2, 3, 4, 6, 7, 8, 9, 10, 11, 12, 13, 14, 17, 18 | 5, 15, 16 |
| 6 | 1, 2, 3, 5, 6, 7, 8, 10, 12, 13, 14, 15, 16, 17, 18 | 4, 9, 11 |
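The fold table above appears consistent with a stratified 6-fold split: each test set seems to hold one slow, one medium, and one fast trajectory, and the six test sets together cover all 18 trajectories. The sketch below checks this reading against the tabulated indices. The helper names (`training_indices`, `is_stratified`, `folds_partition`) are hypothetical, and "stratified" is an interpretation of the tables rather than a term taken from the article.

```python
# Speed categories and test folds, copied from the tables above.
CATEGORIES = {
    "slow": [1, 4, 7, 10, 13, 16],
    "medium": [2, 5, 8, 11, 14, 17],
    "fast": [3, 6, 9, 12, 15, 18],
}

TEST_FOLDS = {
    1: [8, 10, 18],
    2: [6, 13, 17],
    3: [2, 7, 12],
    4: [1, 3, 14],
    5: [5, 15, 16],
    6: [4, 9, 11],
}

ALL_TRAJECTORIES = set(range(1, 19))


def training_indices(test_indices):
    """Training set of a fold: every trajectory that is not in its test set."""
    return sorted(ALL_TRAJECTORIES - set(test_indices))


def is_stratified(test_indices):
    """True if the test set holds exactly one trajectory from each category."""
    return all(
        len(set(test_indices) & set(members)) == 1
        for members in CATEGORIES.values()
    )


def folds_partition(folds):
    """True if the test sets are disjoint and together cover all trajectories."""
    flat = [i for test in folds.values() for i in test]
    return len(flat) == len(ALL_TRAJECTORIES) and set(flat) == ALL_TRAJECTORIES


if __name__ == "__main__":
    for fold, test in TEST_FOLDS.items():
        print(fold, training_indices(test), test, is_stratified(test))
    print("partition:", folds_partition(TEST_FOLDS))
```

Deriving the training set as the complement of the test set reproduces the training column of the table for every fold.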

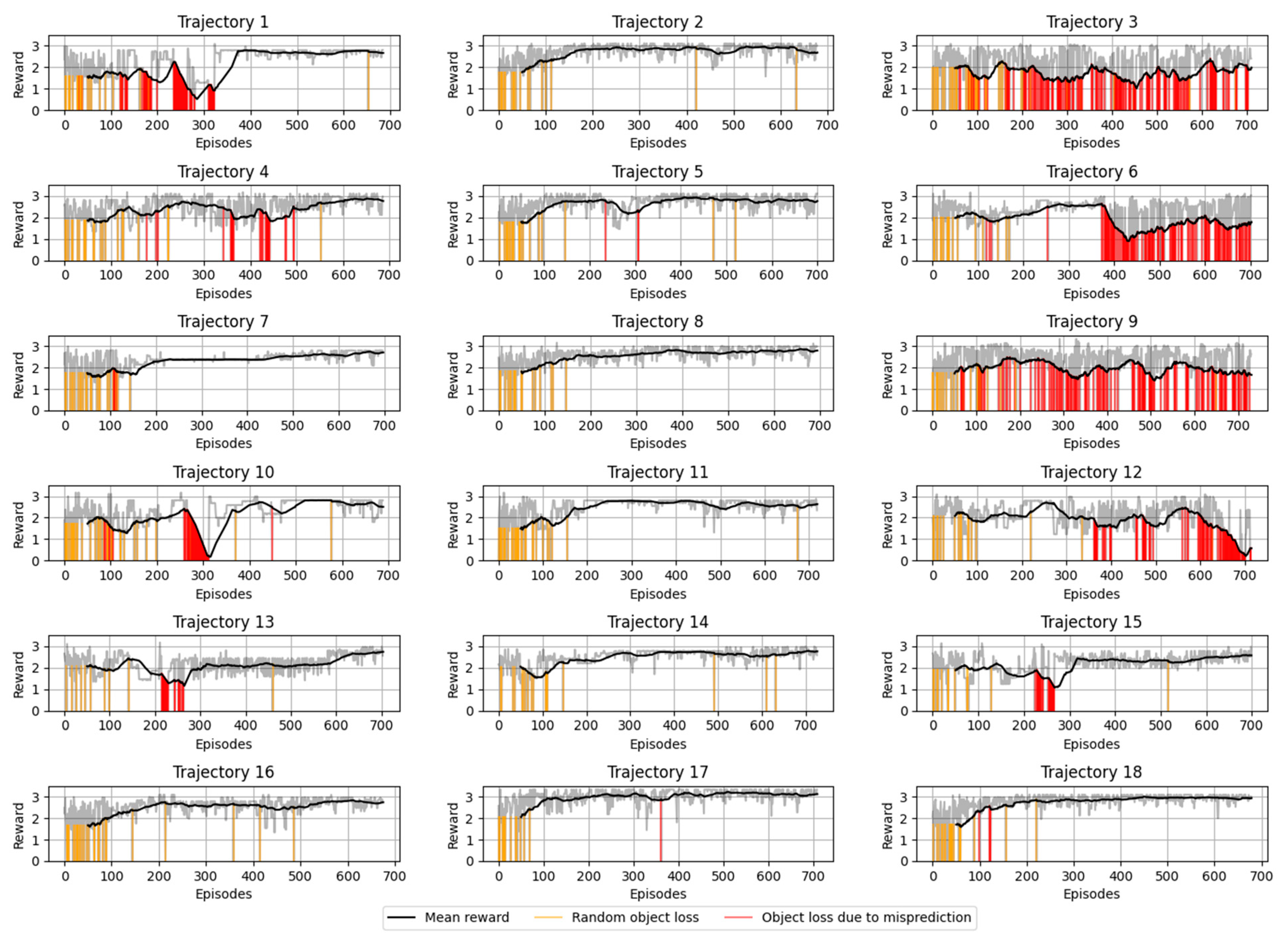

| Reward (Efficiency Reward + Cycle Reward) | Compressed Air Saving |
|---|---|
| 1.75 (0.20 + 0.25 + 0.30 + 1) | 25% |
| 2.50 (0.60 + 0.40 + 0.50 + 1) | 50% |
| 3.25 (0.75 + 0.75 + 0.75 + 1) | 75% |
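The arithmetic in the reward table above can be reproduced with a short sketch. It assumes, based only on the column header, that the first three terms in each row are efficiency rewards and the final 1 is the cycle reward. That the mean of the three efficiency terms matches the listed saving is an observation on these three rows, not a formula quoted from the article. The names `total_reward` and `ROWS` are illustrative.

```python
from math import isclose


def total_reward(efficiency_rewards, cycle_reward=1.0):
    """Sum of the efficiency rewards plus the cycle reward (assumed structure)."""
    return sum(efficiency_rewards) + cycle_reward


# (efficiency terms, tabulated total reward, tabulated compressed air saving)
ROWS = [
    ([0.20, 0.25, 0.30], 1.75, 0.25),
    ([0.60, 0.40, 0.50], 2.50, 0.50),
    ([0.75, 0.75, 0.75], 3.25, 0.75),
]

if __name__ == "__main__":
    for terms, tabulated_total, saving in ROWS:
        total = total_reward(terms)
        mean_efficiency = sum(terms) / len(terms)
        print(
            f"total={total:.2f} (table: {tabulated_total:.2f}), "
            f"mean efficiency={mean_efficiency:.2f} (saving: {saving:.0%}), "
            f"match={isclose(total, tabulated_total)}"
        )
```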


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gabriel, F.; Bergers, J.; Aschersleben, F.; Dröder, K. Increasing the Energy-Efficiency in Vacuum-Based Package Handling Using Deep Q-Learning. Energies 2021, 14, 3185. https://doi.org/10.3390/en14113185