1D Barcode Detection: Novel Benchmark Datasets and Comprehensive Comparison of Deep Convolutional Neural Network Approaches

Abstract

1. Introduction

- Benchmarking recent state-of-the-art and underexplored D-CNN frameworks against well-known prior solutions on the novel barcode datasets InventBar and ParcelBar, as well as on existing public, realistic datasets.

- Analyzing significant characteristics of the publicly available barcode datasets in relation to the application effects of well-known D-CNNs on 1D barcode detection.

- Collecting and maintaining the recent barcode datasets with complete annotations, partitioned into ready-to-use training, validation, and test sets.

- Evaluating both the performance and efficiency of all implemented D-CNN solutions.

2. Related Works

2.1. Previous Barcode Datasets

2.2. Deep Learning (DL) and Convolutional Neural Networks (CNNs) for Barcode Recognition

3. Materials and Methods

3.1. Experimental Settings

3.2. Dataset Description

3.3. Data Annotations

3.4. Transfer Learning

3.5. Model Training and Testing

3.6. Evaluation Methodologies

4. Results and Discussion

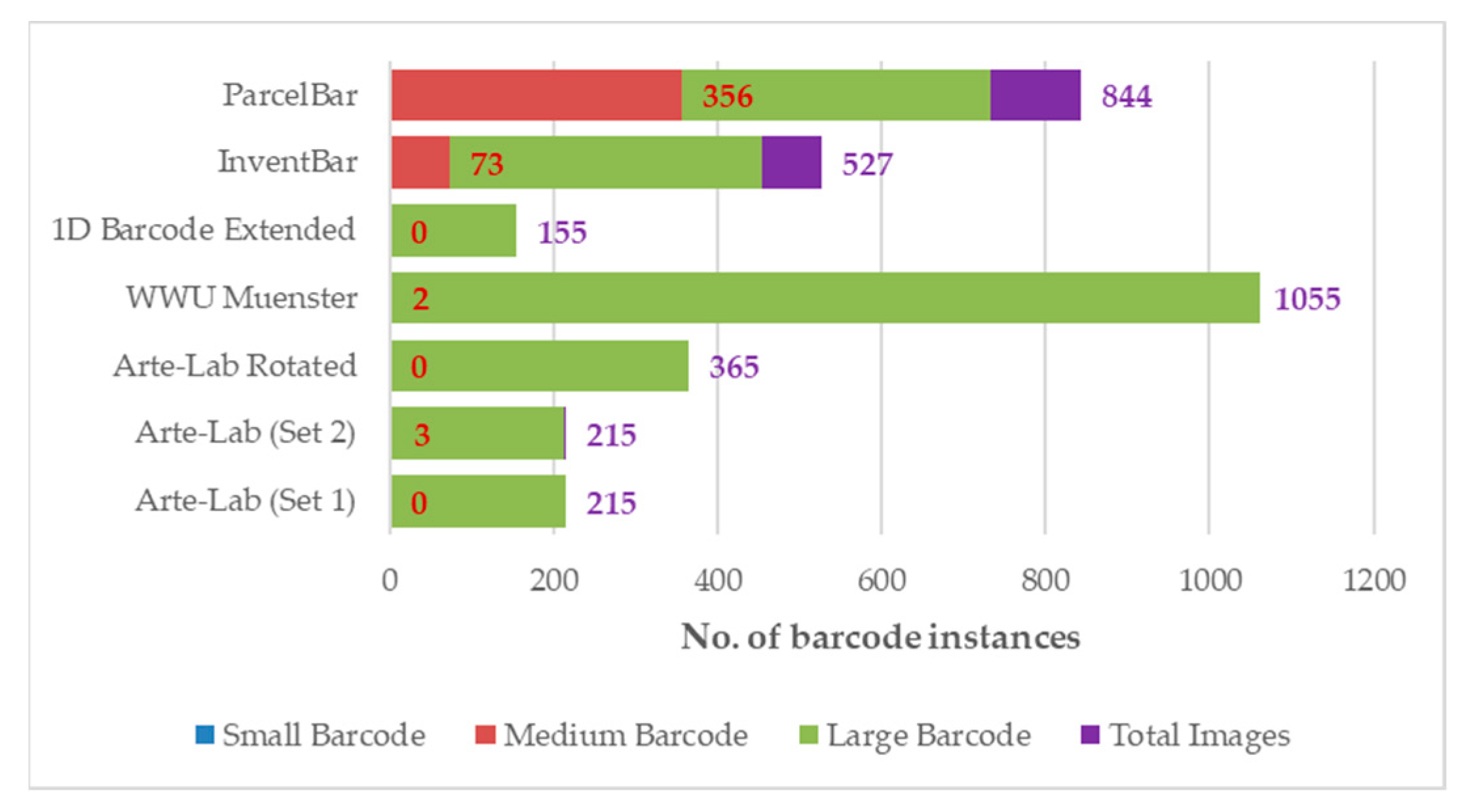

4.1. Dataset Statistics

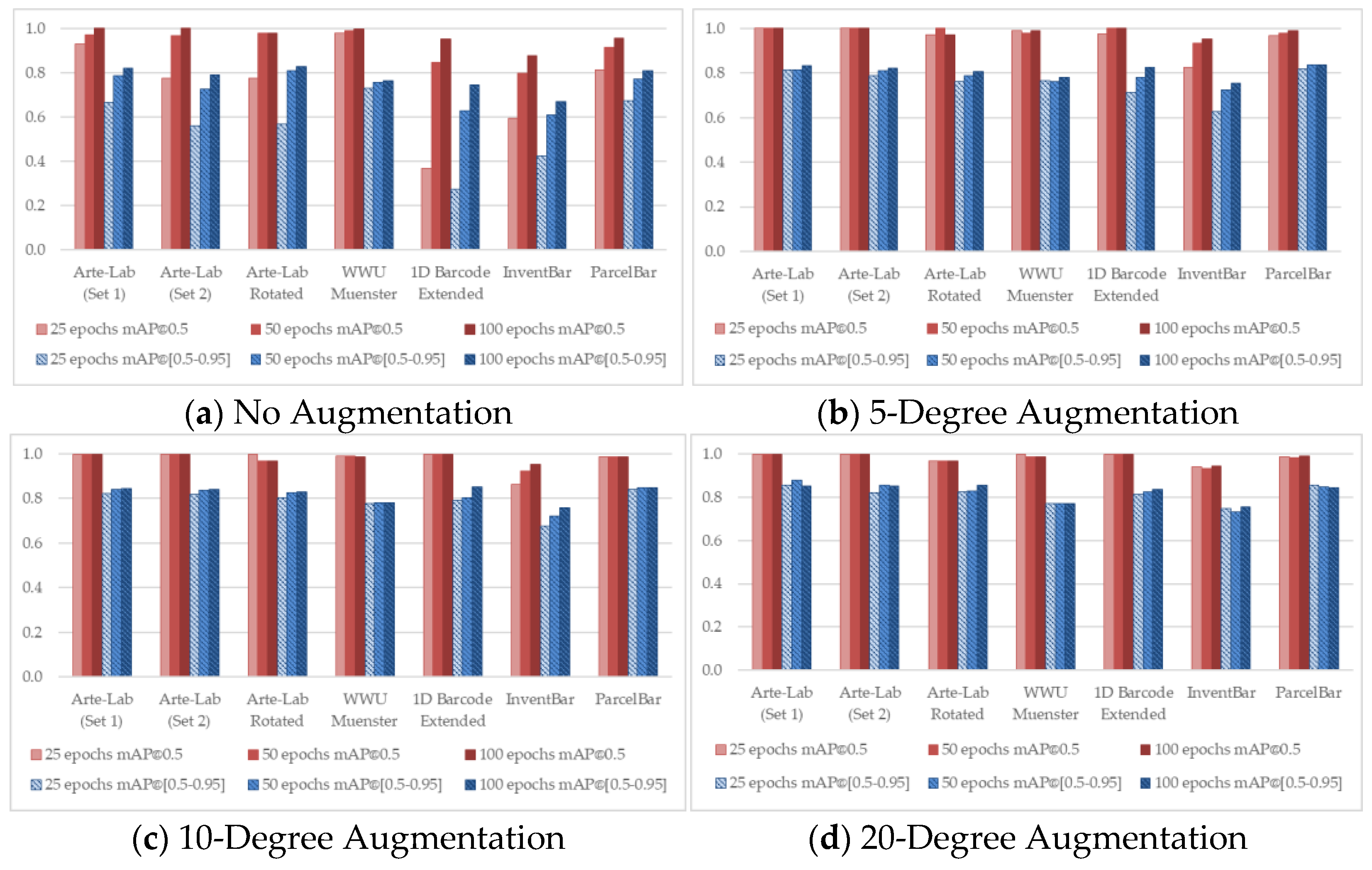

4.2. Barcode Recognition Accuracy

4.3. Runtime Performance

4.4. Application Effects of D-CNNs on 1D Barcode Recognition

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of Deep Learning: Concepts, CNN Architectures, Challenges, Applications, Future Directions. J. Big Data 2021, 8, 53. [Google Scholar] [CrossRef] [PubMed]

- Zhang, H.; Shao, S.; Tao, M.; Bi, X.; Letaief, K.B. Deep Learning-Enabled Semantic Communication Systems with Task-Unaware Transmitter and Dynamic Data. 2022. Available online: https://arxiv.org/abs/2205.00271 (accessed on 4 October 2022).

- Panzer, M.; Bender, B. Deep Reinforcement Learning in Production Systems: A Systematic Literature Review. Int. J. Prod. Res. 2022, 60, 4316–4341. [Google Scholar] [CrossRef]

- Chen, M.-Y.; Sangaiah, A.K.; Chen, T.-H.; Lughofer, E.D.; Egrioglu, E. Deep Learning for Financial Engineering. Comput. Econ. 2022, 59, 1277–1281. [Google Scholar] [CrossRef]

- Cepeda-Pacheco, J.C.; Domingo, M.C. Deep Learning and Internet of Things for Tourist Attraction Recommendations in Smart Cities. Neural Comput. Appl. 2022, 34, 7691–7709. [Google Scholar] [CrossRef]

- Bhattacharya, S.; Reddy Maddikunta, P.K.; Pham, Q.-V.; Gadekallu, T.R.; Krishnan S, S.R.; Chowdhary, C.L.; Alazab, M.; Jalil, P. Deep Learning and Medical Image Processing for Coronavirus (COVID-19) Pandemic: A Survey. Sustain. Cities Soc. 2021, 65, 102589. [Google Scholar] [CrossRef] [PubMed]

- Chaudhary, V.; Sharma, M.; Sharma, P.; Agarwal, D. Deep Learning in Gaming and Animations: Principles and Applications; CRC Press: Boca Raton, FL, USA, 2021; ISBN 978-1-00-323153-0. [Google Scholar]

- Borgman, J.; Stark, K.; Carson, J.; Hauser, L. Deep Learning Encoding for Rapid Sequence Identification on Microbiome Data. Front. Bioinform. 2022, 2, 871256. [Google Scholar] [CrossRef] [PubMed]

- Duan, H.; Wang, P.; Huang, Y.; Xu, G.; Wei, W.; Shen, X. Robotics Dexterous Grasping: The Methods Based on Point Cloud and Deep Learning. Front. Neurorobot. 2021, 15, 658280. [Google Scholar] [CrossRef]

- Li, J.; Zhang, D.; Zhou, M.; Cao, Z. A Motion Blur QR Code Identification Algorithm Based on Feature Extracting and Improved Adaptive Thresholding. Neurocomputing 2022, 493, 351–361. [Google Scholar] [CrossRef]

- Pu, H.; Fan, M.; Yang, J.; Lian, J. Quick Response Barcode Deblurring via Doubly Convolutional Neural Network. Multimedia Tools Appl. 2019, 78, 897–912. [Google Scholar] [CrossRef]

- Chen, R.; Zheng, Z.; Yu, Y.; Zhao, H.; Ren, J.; Tan, H.-Z. Fast Restoration for Out-of-Focus Blurred Images of QR Code with Edge Prior Information via Image Sensing. IEEE Sens. J. 2021, 21, 18222–18236. [Google Scholar] [CrossRef]

- Do, T.; Tolcha, Y.; Jun, T.J.; Kim, D. Smart Inference for Multidigit Convolutional Neural Network Based Barcode Decoding. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milano, Italy, 10–15 January 2021; pp. 3019–3026. [Google Scholar]

- Brylka, R.; Schwanecke, U.; Bierwirth, B. Camera Based Barcode Localization and Decoding in Real-World Applications. In Proceedings of the 2020 International Conference on Omni-layer Intelligent Systems (COINS), Barcelona, Spain, 31 August 2020–2 September 2020; pp. 1–8. [Google Scholar]

- Zhang, L.; Sui, Y.; Zhu, F.; Zhu, M.; He, B.; Deng, Z. Fast Barcode Detection Method Based on ThinYOLOv4. In Cognitive Systems and Signal Processing, Proceedings of the ICCSIP 2020: Cognitive Systems and Signal Processing, Zhuhai, China, 25–27 December 2020; Sun, F., Liu, H., Fang, B., Eds.; Springer: Singapore, 2021; pp. 41–55. [Google Scholar]

- Elgendy, M. Deep Learning for Vision Systems; Simon and Schuster: New York, NY, USA, 2020. [Google Scholar]

- Majidifard, H.; Jin, P.; Adu-Gyamfi, Y.; Buttlar, W.G. Pavement Image Datasets: A New Benchmark Dataset to Classify and Densify Pavement Distresses. Transp. Res. Rec. 2020, 2674, 328–339. [Google Scholar] [CrossRef] [Green Version]

- Wudhikarn, R.; Charoenkwan, P.; Malang, K. Deep Learning in Barcode Recognition: A Systematic Literature Review. IEEE Access 2022, 10, 8049–8072. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Adibhatla, V.A.; Chih, H.-C.; Hsu, C.-C.; Cheng, J.; Abbod, M.F.; Shieh, J.-S. Applying Deep Learning to Defect Detection in Printed Circuit Boards via a Newest Model of You-Only-Look-Once. Math. Biosci. Eng. 2021, 18, 4411–4428. [Google Scholar] [CrossRef] [PubMed]

- Nepal, U.; Eslamiat, H. Comparing YOLOv3, YOLOv4 and YOLOv5 for Autonomous Landing Spot Detection in Faulty UAVs. Sensors 2022, 22, 464. [Google Scholar] [CrossRef]

- Jocher, G.; Stoken, A.; Borovec, J.; NanoCode012; ChristopherSTAN; Liu, C.; Laughing; tkianai; yxNONG; Hogan, A.; et al. Ultralytics/Yolov5: V4.0-Nn.SiLU() Activations, Weights & Biases Logging, PyTorch Hub Integration. 2021. Available online: https://zenodo.org/record/4418161#.Y3B33OxBw1I (accessed on 14 April 2022).

- Ultralytics/Yolov5. 2022. Available online: https://github.com/ultralytics/yolov5 (accessed on 14 April 2022).

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar]

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. arXiv 2020, arXiv:1911.09070. [Google Scholar]

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2018, arXiv:1708.02002. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497. [Google Scholar] [CrossRef] [Green Version]

- Katuk, N.; Mahamud, K.-R.K.; Zakaria, N.H. A review of the current trends and future directions of camera barcode reading. J. Theor. Appl. Inf. Technol. 2019, 97, 22. [Google Scholar]

- Sörös, G.; Flörkemeier, C. Blur-Resistant Joint 1D and 2D Barcode Localization for Smartphones. In Proceedings of the 12th International Conference on Mobile and Ubiquitous Multimedia-MUM’13, Luleå, Sweden, 2–5 December 2013; ACM Press: Luleå, Sweden, 2013; pp. 1–8. [Google Scholar]

- Bodnár, P.; Grósz, T.; Tóth, L.; Nyúl, L.G. Efficient Visual Code Localization with Neural Networks. Pattern Anal. Appl. 2018, 21, 249–260. [Google Scholar] [CrossRef]

- Wei, Y.; Tran, S.; Xu, S.; Kang, B.; Springer, M. Deep Learning for Retail Product Recognition: Challenges and Techniques. Comput. Intell. Neurosci. 2020, 2020, 8875910. [Google Scholar] [CrossRef]

- Kalinov, I.; Petrovsky, A.; Ilin, V.; Pristanskiy, E.; Kurenkov, M.; Ramzhaev, V.; Idrisov, I.; Tsetserukou, D. WareVision: CNN Barcode Detection-Based UAV Trajectory Optimization for Autonomous Warehouse Stocktaking. IEEE Robot. Autom. Lett. 2020, 5, 6647–6653. [Google Scholar] [CrossRef]

- Hansen, D.K.; Nasrollahi, K.; Rasmusen, C.B.; Moeslund, T.B. Real-Time Barcode Detection and Classification Using Deep Learning. In Proceedings of the 9th International Joint Conference on Computational Intelligence, Madeira, Portugal, 1–3 November 2017; pp. 321–327. [Google Scholar]

- Grzeszick, R.; Feldhorst, S.; Mosblech, C.; Fink, G.A.; Ten Hompel, M. Camera-Assisted Pick-by-Feel. Logist. J. 2016, 2016, 10. [Google Scholar] [CrossRef]

- Suh, S.; Lee, H.; Lee, Y.O.; Lukowicz, P.; Hwang, J. Robust Shipping Label Recognition and Validation for Logistics by Using Deep Neural Networks. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 4509–4513. [Google Scholar]

- Tan, H. Line Inspection Logistics Robot Delivery System Based on Machine Vision and Wireless Communication. In Proceedings of the 2020 International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery (CyberC), Chongqing, China, 29–30 October 2020; pp. 366–374. [Google Scholar]

- Suh, S.; Lukowicz, P.; Lee, Y.O. Fusion of Global-Local Features for Image Quality Inspection of Shipping Label. arXiv 2020, arXiv:2008.11440. [Google Scholar]

- Do, H.-T.; Pham, V.-C. Deep Learning Based Goods Management in Supermarkets. J. Adv. Inf. Technol. 2021, 12, 164–168. [Google Scholar] [CrossRef]

- Zamberletti, A.; Gallo, I.; Carullo, M.; Binaghi, E. Neural image restoration for decoding 1-d barcodes using common camera phones. In Proceedings of the International Conference on Computer Vision Theory and Applications, Angers, France, 17–21 May 2010; SciTePress: Pavia, Italy, 2010; pp. 5–11. [Google Scholar]

- ArteLab. Available online: http://artelab.dista.uninsubria.it/downloads/datasets/barcode/hough_barcode_1d/hough_barcode_1d.html (accessed on 4 October 2022).

- University of Münster WWU Muenster Pattern Recognition and Image Analysis. Available online: https://www.uni-muenster.de/PRIA/en/forschung/index.shtml (accessed on 4 October 2022).

- Zamberletti, A.; Gallo, I.; Albertini, S. Robust Angle Invariant 1D Barcode Detection. In Proceedings of the 2013 2nd IAPR Asian Conference on Pattern Recognition, Okinawa, Japan, 5–8 November 2013; pp. 160–164. [Google Scholar]

- Szentandrási, I.; Herout, A.; Dubská, M. Fast Detection and Recognition of QR Codes in High-Resolution Images. Available online: http://www.fit.vutbr.cz/research/groups/graph/pclines/pub_page.php?id=2012-SCCG-QRtiles (accessed on 4 October 2022).

- ArteLab. Available online: http://artelab.dista.uninsubria.it/downloads/datasets/barcode/medium_barcode_1d/medium_barcode_1d.html (accessed on 4 October 2022).

- Althnian, A.; AlSaeed, D.; Al-Baity, H.; Samha, A.; Dris, A.B.; Alzakari, N.; Abou Elwafa, A.; Kurdi, H. Impact of Dataset Size on Classification Performance: An Empirical Evaluation in the Medical Domain. Appl. Sci. 2021, 11, 796. [Google Scholar] [CrossRef]

- Brownlee, J. Impact of Dataset Size on Deep Learning Model Skill and Performance Estimates. Machine Learning Mastery. 2019. Available online: https://machinelearningmastery.com/impact-of-dataset-size-on-deep-learning-model-skill-and-performance-estimates/ (accessed on 4 October 2022).

- Do, T.; Kim, D. Quick Browser: A Unified Model to Detect and Read Simple Object in Real-Time. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8. [Google Scholar]

- Svarnovics, V. DataMatrix Barcode Read Rate Improvement Using Image Enhancement. 2021. Available online: http://essay.utwente.nl/88947/1/Svarnovics_MA_EEMCS.pdf (accessed on 4 October 2022).

- Dodge, S.; Karam, L. Understanding How Image Quality Affects Deep Neural Networks. In Proceedings of the 2016 Eighth International Conference on Quality of Multimedia Experience (QoMEX), Lisbon, Portugal, 6–8 June 2016; pp. 1–6. [Google Scholar]

- Sabottke, C.F.; Spieler, B.M. The Effect of Image Resolution on Deep Learning in Radiography. Radiol. Artif. Intell. 2020, 2, e190015. [Google Scholar] [CrossRef]

- Jia, J.; Zhai, G.; Ren, P.; Zhang, J.; Gao, Z.; Min, X.; Yang, X. Tiny-BDN: An Efficient and Compact Barcode Detection Network. IEEE J. Sel. Top. Signal Process. 2020, 14, 688–699. [Google Scholar] [CrossRef]

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. arXiv 2015, arXiv:1412.6572. [Google Scholar]

- Sharma, N.; Sharma, R.; Jindal, N. Machine Learning and Deep Learning Applications—A Vision. Glob. Transit. Proc. 2021, 2, 24–28. [Google Scholar] [CrossRef]

- O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep Learning vs. Traditional Computer Vision. In Advances in Computer Vision; Arai, K., Kapoor, S., Eds.; Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2020; Volume 943, pp. 128–144. ISBN 978-3-030-17794-2. [Google Scholar]

- Flores, M.; Liu, Z.; Zhang, T.; Hasib, M.; Chiu, Y.-C.; Ye, Z.; Paniagua, K.; Jo, S.; Zhang, J.; Gao, S.-J.; et al. Deep Learning Tackles Single-Cell Analysis—A Survey of Deep Learning for ScRNA-Seq Analysis. Brief Bioinform 2022, 23, bbab531.74. [Google Scholar] [CrossRef] [PubMed]

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the Game of Go with Deep Neural Networks and Tree Search. Nature 2016, 529, 484–489. [Google Scholar] [CrossRef] [PubMed]

- O’Shea, T.J.; Hoydis, J. An Introduction to Deep Learning for the Physical Layer. arXiv 2017, arXiv:1702.00832. [Google Scholar] [CrossRef] [Green Version]

- Aceto, G.; Ciuonzo, D.; Montieri, A.; Pescape, A. Mobile Encrypted Traffic Classification Using Deep Learning. In Proceedings of the 2018 Network Traffic Measurement and Analysis Conference (TMA), Vienna, Austria, 26–29 June 2018; pp. 1–8. [Google Scholar]

- Fraga-Lamas, P.; Ramos, L.; Mondéjar-Guerra, V.; Fernández-Caramés, T.M. A Review on IoT Deep Learning UAV Systems for Autonomous Obstacle Detection and Collision Avoidance. Remote Sens. 2019, 11, 2144. [Google Scholar] [CrossRef] [Green Version]

- Li, J.; Sun, A.; Han, J.; Li, C. A Survey on Deep Learning for Named Entity Recognition. arXiv 2020, arXiv:1812.09449. [Google Scholar] [CrossRef] [Green Version]

- Chou, T.-H.; Ho, C.-S.; Kuo, Y.-F. QR Code Detection Using Convolutional Neural Networks. In Proceedings of the 2015 International Conference on Advanced Robotics and Intelligent Systems (ARIS), Taipei, Taiwan, 29–31 May 2015; pp. 1–5. [Google Scholar]

- Li, J.; Zhao, Q.; Tan, X.; Luo, Z.; Tang, Z. Using Deep ConvNet for Robust 1D Barcode Detection. In Advances in Intelligent Systems and Interactive Applications; Xhafa, F., Patnaik, S., Zomaya, A.Y., Eds.; Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2018; Volume 686, pp. 261–267. ISBN 978-3-319-69095-7. [Google Scholar]

- Zhang, H.; Shi, G.; Liu, L.; Zhao, M.; Liang, Z. Detection and Identification Method of Medical Label Barcode Based on Deep Learning. In Proceedings of the 2018 Eighth International Conference on Image Processing Theory, Tools and Applications (IPTA), Xi’an, China, 7–10 November 2018; pp. 1–6. [Google Scholar]

- Tian, Y.; Che, Z.; Zhai, G.; Gao, Z. BAN, A Barcode Accurate Detection Network. In Proceedings of the 2018 IEEE Visual Communications and Image Processing (VCIP), Taichung, Taiwan, 9–12 December 2018; pp. 1–5. [Google Scholar]

- Ventsov, N.N.; Podkolzina, L.A. Localization of Barcodes Using Artificial Neural Network. In Proceedings of the 2018 IEEE East-West Design & Test Symposium (EWDTS), Kazan, Russia, 14–17 September 2018; pp. 1–6. [Google Scholar]

- Zhao, Q.; Ni, F.; Song, Y.; Wang, Y.; Tang, Z. Deep Dual Pyramid Network for Barcode Segmentation Using Barcode-30k Database. arXiv 2018, arXiv:1807.11886. [Google Scholar]

- Ren, Y.; Liu, Z. Barcode Detection and Decoding Method Based on Deep Learning. In Proceedings of the 2019 2nd International Conference on Information Systems and Computer Aided Education (ICISCAE), Dalian, China, 28–30 September 2019; pp. 393–396. [Google Scholar]

- Yang, Q.; Golwala, G.; Sundaram, S.; Lee, P.; Allebach, J. Barcode Detection and Decoding in On-Line Fashion Images. Electron. Imaging 2019, 2019, 413-1–413-7. [Google Scholar] [CrossRef]

- Xiao, Y.; Ming, Z. 1D Barcode Detection via Integrated Deep-Learning and Geometric Approach. Appl. Sci. 2019, 9, 3268. [Google Scholar] [CrossRef] [Green Version]

- Zhang, J.; Jia, J.; Zhu, Z.; Min, X.; Zhai, G.; Zhang, X.-P. Fine Detection and Classification of Multi-Class Barcode in Complex Environments. In Proceedings of the 2019 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shanghai, China, 8–12 July 2019; pp. 306–311. [Google Scholar]

- Blanger, L.; Hirata, N.S.T. An Evaluation of Deep Learning Techniques for QR Code Detection. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1625–1629. [Google Scholar]

- Yuan, Q.; Li, Y.; Jiang, J.-T.; Xu, L.; Guo, Y.; Xing, Z.; Zhang, D.; Guo, J.; Shen, K. MU R-CNN: A Two-Dimensional Code Instance Segmentation Network Based on Deep Learning. Future Internet 2019, 11, 197. [Google Scholar] [CrossRef] [Green Version]

- Li, Y.; Tian, Y.; Tian, J.; Zhou, F. An Efficient Method for DPM Code Localization Based on Depthwise Separable Convolution. IEEE Access 2019, 7, 42014–42023. [Google Scholar] [CrossRef]

- Zhang, J.; Min, X.; Jia, J.; Zhu, Z.; Wang, J.; Zhai, G. Fine Localization and Distortion Resistant Detection of Multi-Class Barcode in Complex Environments. Multimedia Tools Appl. 2021, 80, 16153–16172. [Google Scholar] [CrossRef]

- Zharkov, A.; Vavilin, A.; Zagaynov, I. New Benchmarks for Barcode Detection Using Both Synthetic and Real Data. In International Workshop on Document Analysis Systems; Bai, X., Karatzas, D., Lopresti, D., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 481–493. [Google Scholar]

- Lohia, A.; Kadam, K.D.; Joshi, R.R.; Bongale, D.A.M. Bibliometric Analysis of One-Stage and Two-Stage Object Detection. Libr. Philos. Pract. 2021, 4910, 34. [Google Scholar]

- Wu, D.; Lv, S.; Jiang, M.; Song, H. Using Channel Pruning-Based YOLO v4 Deep Learning Algorithm for the Real-Time and Accurate Detection of Apple Flowers in Natural Environments. Comput. Electron. Agric. 2020, 178, 105742. [Google Scholar] [CrossRef]

- Saeed, F.; Ahmed, M.J.; Gul, M.J.; Hong, K.J.; Paul, A.; Kavitha, M.S. A Robust Approach for Industrial Small-Object Detection Using an Improved Faster Regional Convolutional Neural Network. Sci. Rep. 2021, 11, 23390. [Google Scholar] [CrossRef] [PubMed]

- Yilmaz, F.F.; Heckel, R. Image Recognition from Raw Labels Collected without Annotators. arXiv 2020, arXiv:1910.09055. [Google Scholar]

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision–ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755. [Google Scholar]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; Illustrated edition; The MIT Press: Cambridge, MA, USA, 2016; ISBN 978-0-262-03561-3. [Google Scholar]

- Pragati, B. A Newbie-Friendly Guide to Transfer Learning. 2022. Available online: https://www.v7labs.com/blog/transfer-learning-guide (accessed on 5 October 2022).

- Seldon. Transfer Learning for Machine Learning. 2021. Available online: https://www.seldon.io/transfer-learning (accessed on 5 October 2022).

- Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep Learning for Real-Time Fruit Detection and Orchard Fruit Load Estimation: Benchmarking of ‘MangoYOLO’. Precis. Agric 2019, 20, 1107–1135. [Google Scholar] [CrossRef]

- Yohanandan, S. MAP (Mean Average Precision) Might Confuse You! 2020. Available online: https://towardsdatascience.com/map-mean-average-precision-might-confuse-you-5956f1bfa9e2 (accessed on 5 October 2022).

- Chen, Z.; Chen, D.; Zhang, Y.; Cheng, X.; Zhang, M.; Wu, C. Deep Learning for Autonomous Ship-Oriented Small Ship Detection. Saf. Sci. 2020, 130, 104812. [Google Scholar] [CrossRef]

- YOLO: You Only Look Once-Real Time Object Detection-GeeksforGeeks. Available online: https://www.geeksforgeeks.org/yolo-you-only-look-once-real-time-object-detection/ (accessed on 5 October 2022).

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object Detection Using YOLO: Challenges, Architectural Successors, Datasets and Applications. Multimedia Tools Appl. 2022, 1, 33. [Google Scholar] [CrossRef]

- Gillani, I.S.; Munawar, M.R.; Talha, M.; Azhar, S.; Mashkoor, Y.; uddin, M.S.; Zafar, U. Yolov5, Yolo-x, Yolo-r, Yolov7 Performance Comparison: A Survey. In Proceedings of the Artificial Intelligence and Fuzzy Logic System, Toronto, ON, Canada, 24–25 September 2022; pp. 17–28. [Google Scholar]

- Sharma, A. Introduction to the YOLO Family. 2022. Available online: https://pyimagesearch.com/2022/04/04/introduction-to-the-yolo-family/ (accessed on 6 October 2022).

| Name | Size | Resolution (Pixel) | Instance per Image | Image Feature | Barcode Annotation | |||

|---|---|---|---|---|---|---|---|---|

| Single | Multiple | Synthetic | Real-Life | Provided | No. of Annotations | |||

| Arte-Lab Medium Barcode (Set 1) | 215 | 640 × 480 | ✓ | ✓ | ||||

| Arte-Lab Medium Barcode (Set 2) | 215 | 640 × 480 | ✓ | ✓ | ||||

| Arte-Lab Rotated Barcode | 365 | 640 × 480 | ✓ | ✓ | ✓ | |||

| 1D Barcode Extended | 155 | 648 × 488 | ✓ | ✓ | ✓ | ✓ | 155 | |

| WWU Muenster | 1055 | 640 × 480 | ✓ | ✓ | ✓ | |||

| Dubská M. | 400 | 604 × 402 | ✓ | ✓ | ✓ | 400 | ||

| Sörös G. | 320 | 720 × 1280 | ✓ | ✓ | ✓ | 328 | ||

| Bodnár-Synthetic | 10,000 | 512 × 512 | ✓ | ✓ | ||||

| Bodnár-Huawei | 98 | 1600 × 1200 | ✓ | ✓ | ✓ | |||

| Percentage | 100% | 33.33% | 33.33% | 77.78% | 33.33% | |||

| Authors | Year | D-CNN | Public Dataset | Private Dataset | Accuracy |

|---|---|---|---|---|---|

| Chou et al. [61] | 2015 | CNN | CipherLab | 0.952 | |

| Grzeszick et al. [34] | 2016 | CNN | Product on the racks | 0.470 | |

| Li et al. [62] | 2017 | Faster R-CNN | ArteLab WWU Muenster | 0.989 0.994 | |

| Hansen et al. [33] | 2017 | YOLO v2 | ArteLab Rotated WWU Muenster Dubská M. Sörös G. | 0.914 (all) | |

| Zhang et al. [63] | 2018 | SSD | Medical Label | 0.945 | |

| Tian et al. [64] | 2018 | R-CNN | ArteLab WWU Muenster | Mixed Barcode | 0.963 (ArteLab and Muenster) 0.925 |

| Ventsov and Podkolzina [65] | 2018 | CNN | Ventsov N.N | 0.974 | |

| Zhao et al. [66] | 2018 | CNN | Barcode-30k | 0.942 | |

| Ren and Liu [67] | 2019 | SSD | ArteLab WWU Muenster CipherLab | 0.885 0.884 0.992 | |

| Yang et al. [68] | 2019 | CNN | Fashion Label | 0.967 | |

| Xiao and Ming [69] | 2019 | YOLO v2 | ArteLab WWU Muenster | 0.912 0.939 | |

| Pu et al. [11] | 2019 | CNN | Production line | 0.991 | |

| Zhang et al. [70] | 2019 | Fast R-CNN | ArteLab WWU Muenster Dubská M. Sörös G. | 0.871 (all) | |

| Blanger and Hirata [71] | 2019 | SSD | Blanger L. | 0.770 | |

| Yuan et al. [72] | 2019 | R-CNN | CipherLab COCO Val2017 | UAV123 Yuan, B. | 0.999 (all) |

| Li et al. [73] | 2019 | DSC | DPM Code QR Code Images | 0.999 (all) | |

| Suh et al. [35] | 2019 | YOLO v2 | ArteLab Rotated WWU Muenster | 15 Carriers Shipping Labels | 0.980 (all) |

| Kalinov et al. [32] | 2020 | CNN | UAV barcode | 0.961 | |

| Brylka et al. [14] | 2020 | YOLO v3 | ArteLab ArteLab Rotated WWU Muenster | 0.870 (both ArteLabs) 0.860 | |

| Jia et al. [51] | 2020 | Faster R-CNN | ArteLab WWU Muenster Dubská M. Sörös G. Bodnár-Synthetic | Jia, J. | 0.834 (all) |

| Zhang et al. [74] | 2020 | Fast R-CNN | ArteLab WWU Muenster Dubská M. Sörös G. | Zhang, J. | 0.879 (all) |

| Tan [36] | 2020 | CNN | Logistic Robot Barcode | 0.988 | |

| Zharkov et al. [75] | 2020 | CNN | ZVZ-Synth ZVZ-Real | 0.967 (all) | |

| Suh et al. [37] | 2021 | CNN | Shipping Labels | 0.997 | |

| Do and Pham [38] | 2021 | YOLO v3 | COCO Val2017 | Supermarket Products | 0.900 (all) |

| Zhang et al. [15] | 2021 | YOLO v4 | Liwei Z. | 0.906 |

| Dataset | No. of Images | Small Barcode Regions | Medium Barcode Regions | Large Barcode Regions | No. of Annotations |
|---|---|---|---|---|---|
| InventBar | 527 | 0 | 73 | 454 | 527 |
| ParcelBar | 844 | 0 | 356 | 732 | 1088 |

| Authors | D-CNN Methods | Backbone |
|---|---|---|
| Tan et al., 2020 [25] | EfficientDet | tf_lite0 |
| Ren et al., 2016 [27] | Faster R-CNN | resnet50_fpn_1x |
| Lin et al., 2018 [26] | RetinaNet | resnet50_fpn_1x |
| ultralytics/yolov5, 2022 [23] | YOLO v5 | small |
| Ge et al., 2021 [24] | YOLO x | yolox_s_8x8 |

| No. | Dataset | Training Set | Validation Set | Test Set | Total |
|---|---|---|---|---|---|
| 1 | Arte-Lab Medium Barcode (Set 1) | 86 | 86 | 43 | 215 |
| 2 | Arte-Lab Medium Barcode (Set 2) | 86 | 86 | 43 | 215 |
| 3 | Arte-Lab Rotated Barcode | 146 | 146 | 73 | 365 |
| 4 | WWU Muenster | 422 | 422 | 211 | 1055 |
| 5 | 1D Barcode Extended | 62 | 62 | 31 | 155 |
| 6 | InventBar | 210 | 211 | 106 | 527 |
| 7 | ParcelBar | 337 | 338 | 169 | 844 |
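
The partition sizes in the table above are consistent with a 40/40/20 training/validation/test split. The following is a minimal sketch of such a partition. The ratio and the rounding rule (training rounded down, test rounded up, validation taking the remainder) are assumptions inferred from the counts, not something this section states, and `split_counts` and `split_dataset` are hypothetical helper names.

```python
import random


def split_counts(n_images):
    """Return (train, val, test) sizes for an assumed 40/40/20 split.

    Integer arithmetic is used to avoid floating-point rounding surprises:
    training is rounded down, test is rounded up, validation takes the rest.
    """
    train = (2 * n_images) // 5      # floor(0.4 * n)
    test = -(-n_images // 5)         # ceil(0.2 * n)
    val = n_images - train - test    # remainder
    return train, val, test


def split_dataset(items, seed=0):
    """Shuffle items reproducibly, then cut them into train/val/test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)  # the seed value is an arbitrary choice
    n_train, n_val, _ = split_counts(len(items))
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    # Dataset totals taken from the "Total" column of the table
    for total in (215, 365, 1055, 155, 844, 527):
        print(total, split_counts(total))
```

Under these assumptions, the sizes for totals of 215, 365, 1055, 155, 844, and 527 images match the training, validation, and test counts listed in the table.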

| D-CNN-Based Methods | Arte-Lab (Set 2) mAP 0.5 | Arte-Lab (Set 2) mAP 0.5–0.95 | Arte-Lab (Set 1) mAP 0.5 | Arte-Lab (Set 1) mAP 0.5–0.95 | Arte-Lab Rotated mAP 0.5 | Arte-Lab Rotated mAP 0.5–0.95 | WWU Muenster mAP 0.5 | WWU Muenster mAP 0.5–0.95 | 1D Barcode Extended mAP 0.5 | 1D Barcode Extended mAP 0.5–0.95 | InventBar mAP 0.5 | InventBar mAP 0.5–0.95 | ParcelBar mAP 0.5 | ParcelBar mAP 0.5–0.95 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EfficientDet | 1.000 | 0.881 | 1.000 | 0.857 | 1.000 | 0.855 | 0.999 | 0.782 | 1.000 | 0.854 | 0.954 | 0.758 | 0.991 | 0.855 |
| Faster R-CNN | 1.000 | 0.882 | 1.000 | 0.861 | 1.000 | 0.859 | 1.000 | 0.792 | 1.000 | 0.880 | 0.997 | 0.827 | 0.985 | 0.854 |
| RetinaNet | 1.000 | 0.884 | 1.000 | 0.840 | 1.000 | 0.876 | 1.000 | 0.809 | 1.000 | 0.869 | 0.994 | 0.812 | 0.994 | 0.851 |
| YOLO v5 | 0.998 | 0.936 | 0.998 | 0.904 | 0.996 | 0.935 | 0.998 | 0.896 | 0.998 | 0.930 | 0.996 | 0.873 | 0.994 | 0.918 |
| YOLO x | 1.000 | 0.833 | 1.000 | 0.827 | 0.970 | 0.848 | 1.000 | 0.813 | 0.996 | 0.726 | 0.998 | 0.810 | 0.981 | 0.856 |
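
The gap between the mAP 0.5 and mAP 0.5–0.95 columns above comes from how strictly a predicted box must overlap the ground-truth box. The sketch below illustrates intersection over union (IoU) and a threshold sweep. It assumes axis-aligned boxes given as `(x1, y1, x2, y2)` and the COCO-style convention of ten thresholds from 0.50 to 0.95 in steps of 0.05. This section does not confirm that the authors used exactly this protocol, and the function names and example boxes are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region, clamped at zero when disjoint
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# Assumed COCO-style thresholds: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A detection counts as correct when its IoU reaches the threshold."""
    return iou(pred_box, gt_box) >= threshold


if __name__ == "__main__":
    # Hypothetical barcode boxes: the prediction is shifted 10 px to the left
    pred, gt = (10, 10, 110, 60), (20, 10, 120, 60)
    print(f"IoU = {iou(pred, gt):.3f}")
    for t in IOU_THRESHOLDS:
        print(t, is_true_positive(pred, gt, t))
```

In this example the detection is accepted at the looser thresholds and rejected at the stricter ones, which is why a detector can score near 1.000 at mAP 0.5 while scoring noticeably lower when averaged over the full threshold range.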

| Datasets | EfficientDet | Faster R-CNN | RetinaNet | YOLO v5 | YOLO x |
|---|---|---|---|---|---|
| Arte-Lab (Set 1) | 0:45:28 | 1:12:20 | 1:43:49 | 0:32:17 | 1:20:11 |
| Arte-Lab (Set 2) | 0:51:24 | 1:21:05 | 1:09:27 | 0:29:30 | 1:34:02 |
| Arte-Lab Rotated | 2:24:35 | 2:16:44 | 0:44:22 | 0:12:20 | 2:11:01 |
| WWU Muenster | 2:57:38 | 3:23:15 | 9:36:58 | 4:22:45 | 7:34:19 |
| 1D Barcode Extended | 0:39:08 | 0:14:06 | 1:07:39 | 1:17:35 | 0:54:28 |
| InventBar | 1:10:40 | 1:50:38 | 4:57:20 | 4:27:19 | 3:51:31 |
| ParcelBar | 1:35:02 | 2:25:25 | 3:38:58 | 1:48:22 | 5:44:34 |
| Total runtime 1 | 10:23:55 | 12:43:33 | 22:58:33 | 13:10:08 | 23:10:06 |

| Datasets | EfficientDet | Faster R-CNN | RetinaNet | YOLO v5 | YOLO x |
|---|---|---|---|---|---|
| Arte-Lab (Set 1) | 0:24:25 | 0:35:05 | 0:26:20 | 0:26:41 | 0:20:40 |
| Arte-Lab (Set 2) | 0:25:54 | 0:40:14 | 0:31:13 | 0:27:32 | 0:22:52 |
| Arte-Lab Rotated | 0:36:39 | 0:56:25 | 0:41:57 | 0:41:27 | 0:31:53 |
| WWU Muenster | 2:05:51 | 2:21:52 | 2:17:29 | 1:57:52 | 1:49:48 |
| 1D Barcode Extended | 0:15:36 | 0:22:51 | 0:17:25 | 0:19:23 | 0:13:52 |
| InventBar | 1:03:21 | 1:32:39 | 1:10:38 | 0:59:40 | 0:53:27 |
| ParcelBar | 1:30:38 | 2:19:18 | 1:43:05 | 1:39:07 | 1:19:54 |
| Total runtime 2 | 6:22:24 | 8:48:24 | 7:08:07 | 6:31:42 | 5:32:26 |
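
The total-runtime rows in the two runtime tables are column sums of the per-dataset H:MM:SS values. The sketch below shows that arithmetic for the EfficientDet and YOLO x columns of the second table. The helper names are hypothetical, and the sketch only checks the sums; it makes no claim about how the authors measured the runtimes.

```python
def to_seconds(hms):
    """Convert an 'H:MM:SS' string to a number of seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def to_hms(total_seconds):
    """Convert a number of seconds back to 'H:MM:SS'."""
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours}:{minutes:02d}:{seconds:02d}"


def total_runtime(durations):
    """Sum a list of 'H:MM:SS' strings and return the total as 'H:MM:SS'."""
    return to_hms(sum(to_seconds(d) for d in durations))


# Per-dataset values copied from the second runtime table
EFFICIENTDET = ["0:24:25", "0:25:54", "0:36:39", "2:05:51",
                "0:15:36", "1:03:21", "1:30:38"]
YOLOX = ["0:20:40", "0:22:52", "0:31:53", "1:49:48",
         "0:13:52", "0:53:27", "1:19:54"]

if __name__ == "__main__":
    print("EfficientDet:", total_runtime(EFFICIENTDET))
    print("YOLO x:", total_runtime(YOLOX))
```

Both sums agree with the totals reported in the table (6:22:24 for EfficientDet and 5:32:26 for YOLO x).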

| D-CNN Methods | Model Type | Effects on 1D Barcode Recognition |
|---|---|---|
| EfficientDet | Single-stage | |
| Faster R-CNN | Two-stage | |
| RetinaNet | Single-stage | |
| YOLO v5 | Single-stage | |
| YOLO x | Single-stage | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kamnardsiri, T.; Charoenkwan, P.; Malang, C.; Wudhikarn, R. 1D Barcode Detection: Novel Benchmark Datasets and Comprehensive Comparison of Deep Convolutional Neural Network Approaches. Sensors 2022, 22, 8788. https://doi.org/10.3390/s22228788
